Abstract

Purpose

This study reports REACH (the extent to which an intervention or program was delivered to the identified target population) of interventions integrating primary care and behavioral health implemented by real-world practices.

Methods

Eleven practices implementing integrated care interventions provided data to calculate REACH as follows: 1) Screening REACH, defined as the proportion of target patients assessed for integrated care, and 2) Integrated care services REACH, defined as the proportion of patients receiving integrated services among those who met specific criteria. Differences in mean REACH between practices were evaluated using the t test.

Results

Overall, 26.2% of target patients (n = 24,906) were assessed for integrated care and 41% (n = 836) of eligible patients received integration services. Practices that implemented systematic protocols to identify patients needing integrated care had a significantly higher screening REACH (mean, 70%; 95% CI [confidence interval], 46.6–93.4%) compared with practices that used clinicians’ discretion (mean, 7.9%; 95% CI, 0.6–15.1; P = .0014). Integrated care services REACH was higher among practices that used clinicians’ discretion compared with those that assessed patients systematically (mean, 95.8 vs 53.8%; P = .03).

Conclusion

REACH of integrated care interventions differed by practices’ method of assessing patients. Measuring REACH is important to evaluate the extent to which integration efforts affect patient care and can help demonstrate the impact of integrated care to payers and policy makers.

Keywords: Delivery of Health Care, Integrated, Evaluation Studies, Health Plan Implementation, Primary Health Care

Effective models of integrating behavioral health care and primary care now exist,1,2 and a central tenet of the success of these models in real-world practices is their ability to consistently deliver care for patients who may benefit from integration.3,4 However, practices experience challenges in identifying patients who may benefit from integrated care and then tracking them to ensure patients are engaged in services.5,6

REACH (the extent to which an intervention or program was delivered to the identified target population) is a key component of the Reach Effectiveness Adoption Implementation Maintenance (RE-AIM) framework, a planning and evaluation framework that focuses on identifying factors critical for translating research into practice in real-world settings. REACH is a measure of participation, referring to the percentage and characteristics of persons who receive or are affected by an intervention, program, or policy.7 Calculating the REACH of innovations into a practice’s target population is critical for research, for evaluation, and for practices aspiring to integrate behavioral health and primary care.

Assessing REACH of innovations to integrate primary care and behavioral health can help practices 1) measure success in serving patients, 2) plan and modify the use of in-practice and external resources (eg, referral to specialists) that patients may need, and 3) improve their integration strategy. We undertook this study of 11 practices that implemented innovations to integrate behavioral health and primary care as part of the Advancing Care Together (ACT) program. The purpose was to 1) describe how practices integrating care measured REACH of their innovations, and 2) report REACH of ACT innovations in terms of the percentage of target patients assessed for integrated care and the percentage of patients meeting specific criteria who received integrated care services.

Methods

ACT was a demonstration program funded by The Colorado Health Foundation with the aim to discover practical models to integrate mental health, substance use, and primary care services for people whose health care needs span physical, emotional, and behavioral domains (www.advancingcaretogether.org). From 2011 to 2014, 9 primary care practices and 2 mental health organizations in Colorado participating in the ACT program implemented practice-level strategies to integrate primary and behavioral health care. For primary care practices this was the addition of behavioral health services. For community mental health centers, strategies included integration of primary care and substance use services. With the addition of these services, both types of practices were integrating care for patients in new ways, and we refer to this as integration or integrated care. In both primary care and community mental health centers, changes included identifying and engaging patients who would benefit from these new services.

A transdisciplinary research team, with expertise in qualitative research methods, epidemiology, biostatistics, practice-based research, health care policy, health economics, anthropology, and integrated care, conducted a cross-practice process and outcome evaluation, including determining REACH of integrated care innovations. The University of Texas Health Science Center at Houston and the Oregon Health & Science University approved the study protocol.

Data Collection

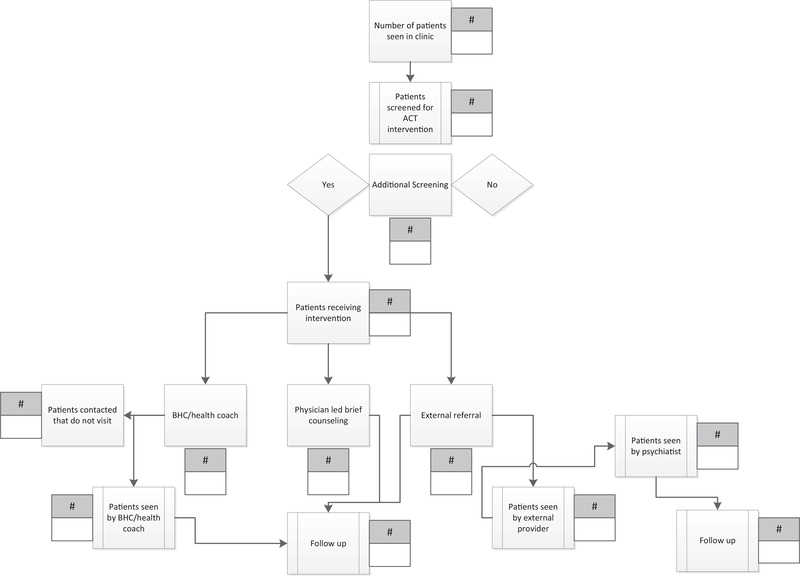

At the start of the ACT program, practices worked with the evaluation team to graphically depict their intervention workflow. Appendix Figure 1 shows an example of 1 practice’s intervention figure. This process enabled the evaluation team to characterize practices’ interventions, patient populations targeted for integration, the methods practices planned to use to assess patients for medical and/or behavioral health conditions, the measures they planned to use, and the subsequent pathways to provide integration services to patients who needed them.

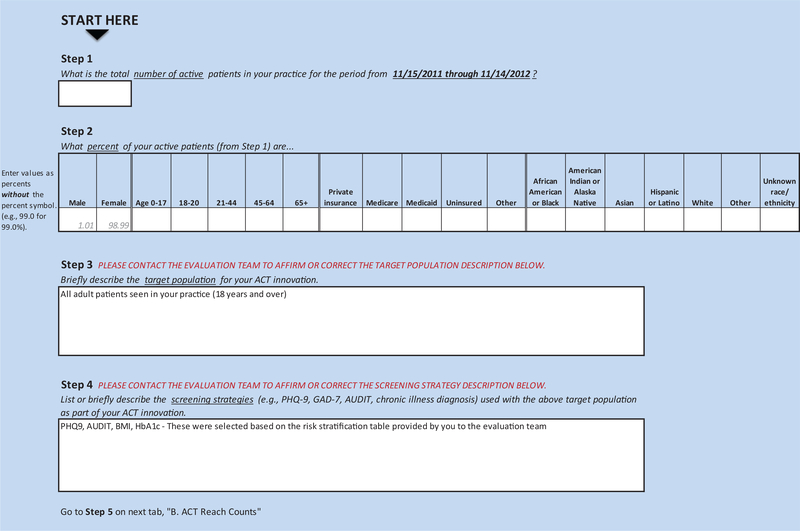

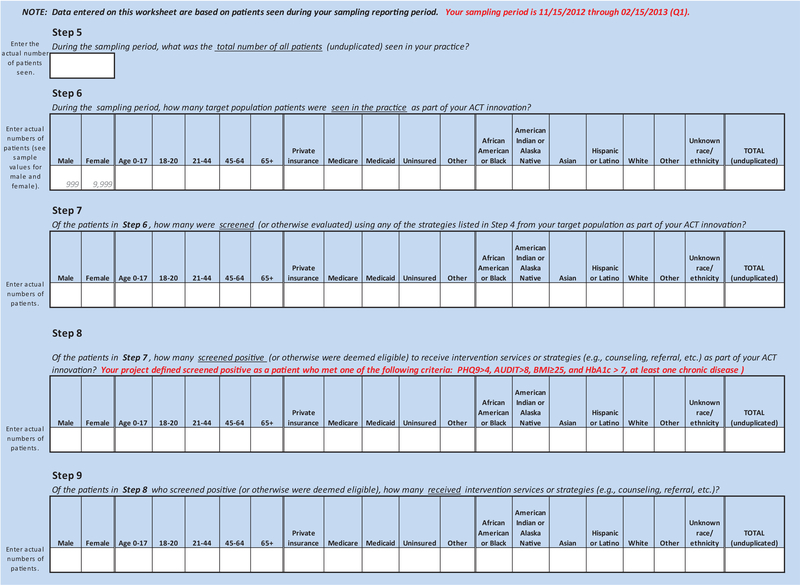

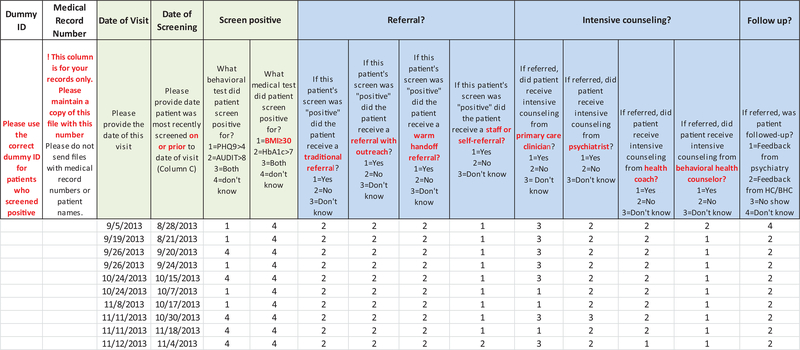

The intervention figure was the basis for tailoring 2 tools: 1) REACH Reporter, and 2) Patient Tracking Sheet (Appendix Figures 2 and 3). The evaluation team provided these tools to practices to assist in collecting data required for calculating REACH of their innovations. The REACH Reporter was a Microsoft Excel spreadsheet, modeled after a REACH reporting tool developed for Colorado’s Department of Public Health and Environment.8 The Patient Tracking Sheet, also a Microsoft Excel worksheet, helped practices collect detailed data on receipt and type of services (eg, referral for behavioral health counseling, warm handoff) provided to patients whose health assessments suggested need for additional services or follow-up.

REACH Assessment

We used a pragmatic application of the RE-AIM framework to assess REACH.9 REACH was assessed at 2 levels as follows: 1) Screening REACH, defined as the proportion of target patients who were assessed using measurement tools selected by practices (eg, Patient Health Questionnaire 2 and 9 [PHQ2, PHQ9],10,11 body mass index [BMI], Generalized Anxiety Disorder 7 [GAD7],12 and Alcohol Use Disorders Identification Test [AUDIT]13); and 2) Integrated care services REACH, defined as the proportion of patients receiving integrated services (eg, counseling by behavioral health clinicians in the practice, referral to behavioral health services outside the practice, health coaching, care coordination) out of those who screened positive or met specific criteria. To the extent possible, practices extracted these data from electronic health record (EHR) systems but when not available in discrete data fields, practices collected data manually using the Patient Tracking Sheet. Practices completed the REACH Reporter every 3 months over 1 year of implementing their integrated care innovations. The evaluation team met quarterly with practices to review their data and discuss experiences implementing their interventions that might be shaping these numbers.
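Stated as formulas, the 2 measures defined above are simple proportions. The notation is introduced here only for illustration: N_target is the number of patients in a practice’s target population, N_assessed is the number of those patients who were assessed, N_eligible is the number who screened positive or met specific criteria, and N_served is the number of eligible patients who received integrated services.

```latex
\[
\text{Screening REACH} = \frac{N_{\text{assessed}}}{N_{\text{target}}} \times 100\%
\qquad
\text{Integrated care services REACH} = \frac{N_{\text{served}}}{N_{\text{eligible}}} \times 100\%
\]
```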

We report REACH of interventions for the last quarter of data collected at each practice because practices’ interventions were fully implemented by this time and represented optimal REACH of their respective interventions. Practices reported the number and demographic (age, race/ethnicity, and insurance) distribution of patients who 1) comprised the target population for their innovation, 2) were assessed for specific health problems, 3) had assessment results suggesting a need for further services, and 4) received counseling or referral for additional services.

Qualitative Data Collection

Qualitative process data evaluating the ACT program are described elsewhere.5,14 Briefly, we collected observation and interview data from each practice. This was complemented by data collected via an online diary in which practice members wrote regular journal entries about their implementation experiences. Together, these data exposed how integrated programs were implemented, and allowed us to examine practice members’ experiences during the implementation process. In addition, quarterly meetings with practices were audio recorded and provided contextual information to understand REACH of ACT innovations.

Data Management

Detailed field notes were prepared from observation visits and quarterly debriefing meetings with ACT innovators. Interviews were audio recorded and professionally transcribed, then reviewed for accuracy and deidentified. Qualitative data were entered into Atlas.ti (Version 7.0, Atlas.ti Scientific Software Development, GmbH), a program for qualitative data management and analysis. We used Microsoft Excel and SAS version 9.3 (SAS Institute) for all quantitative analyses.

Data Analysis

Qualitative data were analyzed in real time using a grounded theory approach15,16 to identify practices’ strategies for identifying patients needing integrated care and engaging patients in these services. We used descriptive statistics (mean, standard deviation, range, and percentages) to describe the practices. We calculated REACH for each practice and for the overall ACT program. We calculated differences in REACH stratified by the method practices used to identify patients needing integrated care (ie, systematic vs clinical discretion) and to deliver integrated care services. We used the t test and simple linear regression to evaluate differences in REACH.

Results

ACT included 9 primary care practices and 2 mental health centers. Practice characteristics are described in Table 1. Primary care practices included 3 group practices with more than 10 full-time equivalent (FTE) primary care clinicians; 2 of these practices (a clinician-owned practice and an integrated delivery system) had large proportions of privately insured and white patients and 1 community health center had a high proportion of Latino patients on Medicaid or uninsured. Six practices were small-to-medium-size primary care practices (< 10 FTE primary care clinicians). Of these, 2 practices were hospital-system owned (1 serving mostly underserved minorities and the other mostly seniors on Medicare), 3 practices were clinician owned, serving mostly white, insured patients of which 1 was a solo practice, and 1 practice was a federally qualified health center. The 2 community mental health centers served predominantly white patients who were on Medicaid or were uninsured.

Table 1.

ACT Practice Characteristics

| Site ID | Type | Ownership | Primary care provider FTEs | Behavioral health provider FTEs | Women patients, % | Latino patients, % | Black patients, % | White patients, % | Medicare, % | Medicaid, % | Uninsured, % | Private insurance, % |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 14 | Multi-specialty group | Hospital system, HMO, and not for profit | 18.7 | 0.6 | 58 | 7 | 12 | 55 | 32 | 0.4 | 0 | 67 |

| 13 | Multi-specialty group | Clinician | 13.6 | 1.0 | 59 | 7 | 0.6 | 85 | 15 | 8 | 6 | 69 |

| 4 | Community health center | Private not for profit | 11 | 2 | 61 | 61 | 1 | 34 | 5 | 36 | 37 | 15 |

| 16 | Single specialty group | Hospital system | 9 | 1.4 | 62 | 22 | 37 | 20 | 9 | 67 | 19 | 3 |

| 12 | Multi-specialty group | Hospital system | 3.15 | 0.9 | 67 | 21 | 10 | 58 | 57 | 10 | 15 | 3 |

| 7 | Multi-specialty group | Clinician | 10 | 0.5 | 43 | 6 | 0.04 | 94 | 11 | 20 | 7 | 62 |

| 10 | Single specialty group | Clinician | 4.8 | 0.5 | 56 | 11 | 7 | 56 | 10 | 0 | 5 | 85 |

| 9 | Solo | Clinician | 2 | 0.5 | 55 | 40 | 0 | 60 | 6 | 5 | 69 | 20 |

| 17 | Single specialty group | Federally qualified health center | 6 | 2 | 53 | 34 | 0.9 | 63 | 12 | 20 | 36 | 29 |

| 18<sup>a</sup> | Single specialty group | Not for profit | 0.4 | 22.8 | 64 | 17 | 2 | 76 | 12 | 36 | 43 | 6 |

| 19<sup>a</sup> | Solo | Not for profit | 2.2 | 7.9 | 61 | 11 | 0.2 | 82 | 23 | 37 | 19 | 13 |

<sup>a</sup> Mental health clinics.

Table 2 describes the integration strategies implemented by practices, their target population, method of assessment, and measures used to assess patients. Practices implemented a range of integration strategies; all practices colocated primary care and behavioral health clinicians. Most practices targeted patients 18 years of age or older except for 1 practice, which focused on an elderly population, and another that targeted pregnant patients. Additional details about types and characteristics of integration strategies implemented by ACT practices are described elsewhere.14

Table 2.

Integration Strategy Implemented by ACT practices

| Site ID | Integration Strategy | Target Population | Method of Assessment | Assessment Measures Used |

|---|---|---|---|---|

| 4 | This practice embedded psychology doctoral trainees in prenatal clinic | Pregnant patients seen at clinic | In waiting room by BHCs | Completion of PHQ9, GAD7, AUDIT, HbA1c |

| 7 | This primary care practice automated screening for behavioral health needs using a tablet. A psychologist with a private, colocated practice became an employee who provided traditional mental health services in the practice. | Patients ≥18 y seen at clinic | In waiting room using tablet devices | Completion of PHQ2 followed by PHQ9, if PHQ2 positive |

| 10 | This private primary care practice partnered with a CMHC to hire a BHC. The practice also expanded health coaching services. | Patients ≥18 y seen at clinic | In waiting room using paper-based survey, then transition to tablet devices | Completion of PHQ9, GAD7, AUDIT |

| 9 | A small primary care practice added a traditional mental health therapist from a private mental health agency to provide colocated care and brief interventions. | Patients ≥18 y seen at clinic | In waiting room using paper-based survey, then transition to tablet devices | Completion of PHQ9, GAD7, AUDIT, and to assess tobacco use. |

| 17 | An FQHC with a colocated mental health therapist added a colocated substance use counselor from a collaborating CMHC. | Patients ≥18 y seen at clinic | In waiting or exam room by medical assistants | Completion of PHQ9 and SBIRT |

| 19 | This practice screened patients using a tablet device that was programmed to directly transfer entered data to an EHR-linked interface accessible to providers. Further, a PC clinician/MA team, and a BHC were embedded in the practice to provide primary care and BH services. | Patients ≥18 y seen at clinic | In waiting room using handheld tablet devices | Completion of PHQ9, GAD7, AUDIT, BMI, HbA1c |

| 16 | This primary care practice expanded their existing integrated care model by working with a research team to develop and implement a screening form for patients to self identify behavioral health needs. | English and Spanish-speaking patients ≥18 y seen at clinic | In waiting room using paper-based survey | Completion of a newly developed “Improve your Health” survey |

| 12 | A postdoctoral training program provides colocated mental health services in an FQHC serving seniors. A computerized cognitive and psychological screening program was developed and implemented. | Patients ≥50 y seen for an annual wellness or medically necessary visit | Clinical discretion by primary care providers | Completion of a newly developed cognitive screening tool called CaPS |

| 13 | This private primary care practice expanded their partnership with a private mental health agency to provide integrated care. First, an urgent consult schedule was created for BHC services. Over time, services expanded to enable full-time BHC coverage within the practice setting. | Patients ≥18 y seen at clinic for an annual, diabetes, or hypertensive exam<sup>a</sup> | Clinical discretion by primary care providers, then transitioned to systematic in waiting room using paper-based survey | Completion of PHQ2 |

| 14 | A BHC was embedded in a primary care setting with multiple clinics (e.g., family medicine, pediatrics). BHC provides brief counseling and helps connect patients to specialty MH services within the large, integrated health system or to external resources. | Patients ≥18 y seen at clinic | Clinical discretion by primary care providers | Referral to a behavioral health counselor |

| 18 | A primary care team (including PA, MA, care coordinator, and substance use counselor) was embedded in a CMHC. | Clients without primary care physician on record | Clinical discretion by mental health providers | Referral to the primary care team |

Abbreviations: BH, behavioral health; BHC, behavioral health clinician; CaPS, Cognitive and Psychological Screen; CMHC, community mental health center; FQHC, federally qualified health center; PC, primary care; AUDIT, Alcohol Use Disorders Identification Test; PHQ, patient health questionnaire; GAD, generalized anxiety disorder; HbA1c, glycosylated hemoglobin; BMI, body mass index; SBIRT, screening, brief intervention, and referral to treatment; PA, physician assistant; MA, medical assistant.

PHQ2 was used to screen for depression; PHQ9 was used to screen or monitor for depression; GAD7 was used to screen or monitor for anxiety disorder; AUDIT was used to screen or monitor for alcohol use; DAST was used to screen or monitor for substance use; BMI was used to screen or monitor for obesity; and HbA1c was measured to monitor for diabetes.

<sup>a</sup> Target population changed midstream.

Six practices (No. 4, 7, 10, 9, 17, and 19) used a systematic approach to identifying patients needing integrated care. To do so, they established workflows that incorporated screening into the patient check-in process and administered screening tools routinely in practice waiting rooms using paper-based surveys, tablet computers, and/or practice staff. Five practices (No. 16, 12, 13, 14, and 18) relied on clinician discretion to identify patients who might benefit from integrated care.

Practices used evidence-based measures such as PHQ2, PHQ9, GAD7, AUDIT, BMI, and glycosylated hemoglobin (HbA1c) to identify patients needing integrated care. Two practices (No. 16 and 12) developed new measurement tools to identify this need, with 1 practice developing a tool to assess patients’ readiness to change specific health behaviors and the other practice developing a tool to screen for cognitive decline among elderly patients.

Screening REACH

Across all practices, 6529 of 24,906 target patients (26.2%) were assessed for integrated care (Table 3). Screening REACH varied widely across practices, ranging from 1.1% to 91%. Practices that implemented systematic protocols to assess patients for integrated care reached, on average, 70% of their target patients (95% CI, 46.6–93.4). This was significantly higher (P = .0014) than the screening REACH of practices that did not have practice-level systems in place to assess patient need but relied on clinicians’ discretion (mean, 7.9%; 95% CI, 0.6–15.1).

Table 3.

REACH of Interventions Over a 3-Month Period Among ACT Practices

| Site ID | Measure of REACH | No. Target Patients | No. Patients Assessed | Screening REACH<sup>a</sup> (%) |

|---|---|---|---|---|

| 4 | Completion of PHQ9, GAD7, and AUDIT | 65 | 51 | 78.5 |

| 7 | Completion of PHQ9, GAD7, AUDIT, and to assess tobacco use | 1876 | 287 | 15.3 |

| 10 | Completion of PHQ9, GAD7, AUDIT, and HbA1c | 1868 | 1609 | 86.0 |

| 9 | Completion of PHQ2 followed by PHQ9 if PHQ2 is positive | 546 | 498 | 91.0 |

| 17 | Completion of PHQ9 and SBIRT | 2519 | 1491 | 59.2 |

| 19 | Completion of PHQ9, GAD7, AUDIT, BMI, and HbA1c | 440 | 396 | 90.0 |

| 16 | Completion of a newly developed “Improve your Health” survey | 887 | 182 | 20.5 |

| 12 | Completion of a newly developed cognitive screening tool called CaPS | 942 | 34 | 3.6 |

| 13 | Referral to behavioral health provider | 1700 | 206 | 12.1 |

| 14 | Referral to a behavioral health counselor | 14,879 | 169 | 1.1 |

| 18 | Referral to the primary care team | 773 | 17 | 2.2 |

| Total | | 24,906 | 6529 | 26.2 |

CaPS, Cognitive and Psychological Screen; AUDIT, Alcohol Use Disorders Identification Test; PHQ, patient health questionnaire; GAD, generalized anxiety disorder; BMI, body mass index; SBIRT, screening, brief intervention, and referral to treatment.

<sup>a</sup> Screening REACH is defined as the percentage of target patients who were assessed for integrated care.
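The group means and confidence intervals reported above can be approximately reproduced from the practice-level percentages in Table 3. The following Python sketch is illustrative only and is not the SAS code used for the analysis. It assumes a normal-approximation interval (mean ± 1.96 standard errors) and a two-sample t test with pooled variance; the text does not state which variants were used. Practices are grouped by the identification method listed in Table 4, and the function names are hypothetical.

```python
from math import sqrt
from statistics import mean, variance

# Screening REACH (%) per practice, copied from Table 3 and grouped by the
# identification method listed in Table 4.
systematic = [78.5, 15.3, 86.0, 91.0, 59.2, 90.0]  # sites 4, 7, 10, 9, 17, 19
discretion = [20.5, 3.6, 12.1, 1.1, 2.2]           # sites 16, 12, 13, 14, 18


def mean_with_ci(values, z=1.96):
    """Return (mean, lower, upper) for an assumed normal-approximation 95% CI."""
    m = mean(values)
    half_width = z * sqrt(variance(values) / len(values))
    return m, m - half_width, m + half_width


def pooled_t(a, b):
    """Return (t, df) for a two-sample t test with pooled (equal) variance."""
    na, nb = len(a), len(b)
    df = na + nb - 2
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / sqrt(pooled_var * (1 / na + 1 / nb))
    return t, df


sys_mean, sys_low, sys_high = mean_with_ci(systematic)
dis_mean, dis_low, dis_high = mean_with_ci(discretion)
t_stat, df = pooled_t(systematic, discretion)

print(f"systematic: mean {sys_mean:.1f}%, 95% CI {sys_low:.1f} to {sys_high:.1f}")
print(f"discretion: mean {dis_mean:.1f}%, 95% CI {dis_low:.1f} to {dis_high:.1f}")
print(f"pooled t = {t_stat:.2f} on {df} degrees of freedom")
```

Because the inputs are the rounded percentages from Table 3, results can differ from the published values in the last decimal place. Converting the t statistic to a P value requires the t distribution, which the Python standard library does not provide.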

Qualitative data show that practices with higher screening REACH a priori defined their target patient population, screening tools (eg, PHQ2, PHQ9, GAD7), methods to survey patients (tablet computers and/or by practice staff), and frequency of assessment (eg, every visit, annually). Then, they systematically deployed practice-wide protocols to identify target patients and administer screening tools. Qualitative data show that practices with lower screening REACH either relied solely on clinician discretion in the course of usual care to identify patient need or did not proactively identify target patients and methods to administer assessment tools. This resulted in challenges establishing a standard workflow. One practice that assessed patients systematically using tablet computers achieved low REACH (15.3%), primarily because data from the tablets had to be entered manually by practice members into the EHR system and this did not occur routinely.

Integrated Care Services REACH

Table 4 shows practices’ method of tracking patients who were identified as needing integrated care services, such as counseling, within the practice or referral to outside resources, and the percentage of patients who received such services, that is, integrated care services REACH. Most practices (9 of 11 practices) did not have established methods to track patients’ care before implementing their integrated care interventions. As part of their ACT interventions, 2 practices (No. 10 and 19) created new EHR templates to track receipt of services. Both practices created protocols and trained practice staff in consistent use of these templates. However, even in the last quarter of data collection, these 2 practices were experiencing challenges with consistent documentation. Seven practices agreed to manually track receipt of integrated care services as part of our evaluation and recorded this information in the Patient Tracking Sheet (Appendix Figure 3).

Table 4.

Receipt of Integrated Services for Patients Assessed Over a 3-Month Period Among ACT practices

| Site ID | Method of Identifying Patients | Method to Track Patients | No. Patients Screened Positive or Meeting Specific Criteria | No. Patients Received Services | Integrated Care Services REACH<sup>a</sup> (%) |

|---|---|---|---|---|---|

| 4 | Systematic | Manual | 23 | 21 | 91.3 |

| 7 | Systematic | EHR | 69 | 62 | 89.9 |

| 10 | Systematic | Created new EHR template | 689 | 176 | 25.5 |

| 9 | Systematic | Manual | 261 | Data not available | — |

| 17 | Systematic | Manual | 289 | 44 | 15.2 |

| 19 | Systematic | Created new EHR template | 267 | 126 | 47.2 |

| 16 | Clinical discretion | Manual | 180 | 148 | 82.2 |

| 12 | Clinical discretion | Manual | 33 | 32 | 97.0 |

| 13 | Clinical discretion | Manual | 41 | 41 | 100.0 |

| 14 | Clinical discretion | EHR | 169 | 169 | 100.0 |

| 18 | Clinical discretion | Manual | 17 | 17 | 100.0 |

| Total | | | 2038 | 836 | 41.0 |

ACT, Advancing Care Together; EHR, electronic health record.

<sup>a</sup> Integrated care services REACH is defined as the percentage of patients who received integrated services out of those who screened positive or met specific criteria.

Regardless of the method employed to track patients, there was variability in REACH of integrated care services across practices. On average, practices that used clinicians’ discretion to identify patients needing integrated care had significantly higher (P = .03) integrated care services REACH (mean, 95.8%; range, 82.2% to 100%) compared with practices that identified patients systematically (mean, 53.8%; range, 15.2% to 91.3%). This is because patients identified by clinicians’ discretion had already been judged by clinicians to have an immediate need for integrated services. Those identified through a system-wide protocol using screening tools such as PHQ9 typically were “flagged” for clinicians, who discussed the positive score with patients, which could result in the decision that additional treatment was not needed.
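As a check on the arithmetic, the per-practice and group-level integrated care services REACH values can be recomputed from the counts in Table 4. The Python sketch below is illustrative; the function and variable names are hypothetical. It assumes that the practice with unavailable service data (No. 9) is excluded from the group mean but kept in the overall denominator, which is consistent with the Table 4 totals.

```python
from statistics import mean

# (site, identification method, n eligible, n received services), from Table 4.
# "Eligible" means screened positive or meeting specific criteria.
# None marks the practice whose service data were not available.
table4 = [
    ("4", "systematic", 23, 21),
    ("7", "systematic", 69, 62),
    ("10", "systematic", 689, 176),
    ("9", "systematic", 261, None),
    ("17", "systematic", 289, 44),
    ("19", "systematic", 267, 126),
    ("16", "discretion", 180, 148),
    ("12", "discretion", 33, 32),
    ("13", "discretion", 41, 41),
    ("14", "discretion", 169, 169),
    ("18", "discretion", 17, 17),
]


def services_reach(eligible, received):
    """Integrated care services REACH as a percentage, or None if unknown."""
    if received is None or eligible == 0:
        return None
    return 100.0 * received / eligible


def group_mean(method):
    """Mean REACH across practices using one method, skipping missing data."""
    values = [services_reach(e, r) for _, m, e, r in table4 if m == method]
    return mean(v for v in values if v is not None)


total_eligible = sum(e for _, _, e, _ in table4)
total_received = sum(r for _, _, _, r in table4 if r is not None)
overall = 100.0 * total_received / total_eligible

print(f"systematic mean: {group_mean('systematic'):.1f}%")
print(f"discretion mean: {group_mean('discretion'):.1f}%")
print(f"overall: {total_received} of {total_eligible} ({overall:.1f}%)")
```

Keeping the missing practice in the overall denominator makes the overall figure a conservative estimate, since any services that practice delivered are counted as zero.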

Discussion

We assessed REACH in 11 practices at 2 levels: screening REACH and integrated care services REACH. Practices that developed systematic strategies for identifying patient need using evidence-based assessment tools reached, on average, 70% of targeted patients. Practices that did not assess patients systematically had lower screening REACH (mean, 7.9%). Further, we observed variation in receipt of integrated care services among patients who screened positive. Not surprisingly, practices that relied on clinical discretion to identify patient need engaged a higher percentage of patients in subsequent services.

ACT practices that assessed patients systematically ran the risk of identifying more patients who needed integrated care than they had the capacity to address. This common challenge has led the United States Preventive Services Task Force (USPSTF) to recommend against universally screening for depression unless there are “staff-assisted supports” such as integrated behavioral health in place.17 Yet practices that did screen systematically gained information about patient need for integrated care in the practice population, and were well positioned to work on identifying ways to meet this need either in the practice, or by connecting patients to external resources and treatments. In contrast, practices that chose to identify patients through clinical discretion and then refer patients to behavioral health and/or other services reached relatively small numbers of patients even though their target populations were large. These practices were concerned about exceeding their capacity to provide treatment to a large number of patients who could potentially be identified through universal screening. Although there is still significant disagreement in clinical and research communities about whether to screen universally for behavioral health conditions,18 practices embarking on integrated care interventions need to balance the tension between reaching a large segment of their patient panel for assessment and the capacity to provide integrated care services to patients who need them. It is likely more beneficial to identify target patients at high risk and then systematically assess their need for integrated care using evidence-based tools rather than rely on clinical discretion alone.

The role of screening in identifying “cases” (eg, of depression) is established. Many articles, including several systematic reviews, have been used to develop guidelines and recommendations for screening for behavioral health needs in primary care (eg, USPSTF).6,17,19–22 This manuscript departs from previously published research on the role of screening in that it goes beyond quantifying the number of cases identified through screening to assessing REACH of these interventions, showing how well practices screen and treat the population of patients they serve. Through qualitative findings, we identified implementation strategies that may result in “touching” a higher proportion of eligible patients with integrated care services. To our knowledge, findings on REACH of integrated care in real-world practices have not been previously reported.

REACH is an important evaluation metric for programs and demonstration projects implementing practice change initiatives.9,23,24 This study shows that REACH also served as a key implementation measure.7 When measured in conjunction with qualitative assessments of the implementation process, it generated important quality improvement lessons for practices and cross-practice, transportable findings for the field in general.23 Measuring REACH helped ACT practices improve their integration efforts because doing so 1) provided a measurable way to assess whether interventions were maximally reaching the target populations, 2) helped them plan and prepare for use of in-practice and external resources (eg, referral to specialists) that patients needed, 3) served as an evaluation measure of the approaches used to engage patients in need of integrated care, and 4) informed further refinements to the integration strategy to more effectively reach the target populations. Our findings showed that measuring REACH of integrated care innovations was an essential skill for practices aiming to integrate primary care and behavioral health.

To effectively use REACH as a quality improvement measure, practices need to invest in building capacity to track care processes related to integration through their EHRs as well as invest in robust quality improvement resources. Most practices’ EHR systems were not equipped to systematically track the types of care patients received, so most practices had to do this manually. This is an important technological gap: monitoring patients’ engagement in services is essential for practices and should be a basic function of EHRs.

Conclusion

In this study of practices deploying a variety of strategies to integrate primary care and behavioral health, REACH varied widely. Practices’ approach to identifying patients in need of integrated care influenced REACH and subsequent patient care. Tracking patients to assess receipt of integrated care services was a challenge for practices, and better methods, in particular basic EHR functionality, are urgently needed to guide interventions and improve care. EHR vendors and practice facilitation/learning collaboratives would do well to assist practices with measuring REACH.

Acknowledgments

Funding: This work is funded by grants from The Colorado Health Foundation. MD’s time is supported by an Agency for Healthcare Research & Quality-funded PCOR K12 award (Award No. 1 K12 HS022981 01).

The authors are grateful to the participating practices and their patients.

Appendix Figure 1.

Example of an intervention process diagram. Adapted from an open access article published by Balasubramanian et al. in Implementation Science http://www.implementationscience.com/content/10/1/31. ACT, Advancing Care Together; BHC, behavioral health clinician.

Appendix Figure 2.

Advancing Care Together (ACT) REACH reporter.

Appendix Figure 3.

Example of a patient tracking sheet.

Footnotes

Conflict of interest: none declared.

Contributor Information

Bijal A. Balasubramanian, Department of Epidemiology, Human Genetics, and Environmental Sciences, University of Texas School of Public Health, Dallas Regional Campus, Dallas.

Douglas Fernald, Department of Family Medicine, University of Colorado School of Medicine, Aurora.

L. Miriam Dickinson, Department of Family Medicine, University of Colorado School of Medicine, Aurora.

Melinda Davis, Oregon Rural Practice-Based Research Network, Portland.

Rose Gunn, Department of Family Medicine, Oregon Health & Science University, Portland.

Benjamin F. Crabtree, Department of Family Medicine and Community Health, Rutgers–Robert Wood Johnson Medical School, Somerset, NJ and Department of Epidemiology, Rutgers School of Public Health, Piscataway, NJ.

Benjamin F. Miller, Department of Family Medicine, University of Colorado School of Medicine, Aurora.

Deborah J. Cohen, Department of Family Medicine and the Department of Medical Informatics and Clinical Epidemiology, Oregon Health & Science University, Portland.

References

- 1. Miller BF, Petterson S, Brown Levey SM, Payne-Murphy JC, Moore M, Bazemore A. Primary care, behavioral health, provider colocation, and rurality. J Am Board Fam Med 2014;27:367–74.

- 2. Miller BF, Petterson S, Burke BT, Phillips RL Jr, Green LA. Proximity of providers: Colocating behavioral health and primary care and the prospects for an integrated workforce. Am Psychol 2014;69:443–51.

- 3. Levey SM, Miller BF, deGruy FV 3rd. Behavioral health integration: An essential element of population-based healthcare redesign. Transl Behav Med 2012;2(3):364–71.

- 4. Burke B, Miller B, Proser M, et al. A needs-based method for estimating the behavioral health staff needs of community health centers. BMC Health Serv Res 2013;13:245.

- 5. Davis M, Balasubramanian BA, Waller E, Miller BF, Green LA, Cohen DJ. Integrating behavioral and physical health care in the real world: Early lessons from Advancing Care Together. J Am Board Fam Med 2013;26:588–602.

- 6. Phillips RL, Miller BF, Petterson SM, Teevan B. Better integration of mental health care improves depression screening and treatment in primary care. Am Fam Physician 2011;84:980.

- 7. Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: The RE-AIM framework. Am J Public Health 1999;89:1322–7.

- 8. Fernald D, Harris A, Deaton EA, et al. A standardized reporting system for assessment of diverse public health programs. Prev Chronic Dis 2012;9:E147.

- 9. Reach Effectiveness Adoption Implementation Maintenance (RE-AIM). 2012. Available from: http://www.re-aim.org. Accessed August 2012.

- 10. Kroenke K, Spitzer RL, Williams JB. The PHQ-9: Validity of a brief depression severity measure. J Gen Intern Med 2001;16:606–13.

- 11. Kroenke K, Spitzer RL, Williams JB. The Patient Health Questionnaire-2: Validity of a two-item depression screener. Med Care 2003;41:1284–92.

- 12. Spitzer RL, Kroenke K, Williams JB, Löwe B. A brief measure for assessing generalized anxiety disorder: the GAD-7. Arch Intern Med 2006;166:1092–7.

- 13. Saunders JB, Aasland OG, Babor TF, de la Fuente JR, Grant M. Development of the Alcohol Use Disorders Identification Test (AUDIT): WHO Collaborative Project on Early Detection of Persons with Harmful Alcohol Consumption–II. Addiction 1993;88:791–804.

- 14. Cohen DJ, Balasubramanian BA, Davis M, et al. Understanding care integration from the ground up: five organizing constructs that shape integrated practices. J Am Board Fam Med 2015;28:S7–S20.

- 15. Miller WL, Crabtree BF. The dance of interpretation. In: Crabtree BF, Miller WL, eds. Doing Qualitative Research. 2nd ed. Thousand Oaks, CA: Sage Publications; 1999:127–43.

- 16. Borkan J. Immersion/Crystallization. 2nd ed. Thousand Oaks, CA: Sage Publications; 1999.

- 17. U.S. Preventive Services Task Force. Screening for Depression in Adults. 2009. Available from: http://www.uspreventiveservicestaskforce.org/uspstf/uspsaddepr.htm. Accessed January 15, 2011.

- 18. Moyer VA. Screening for cognitive impairment in older adults: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med 2014;160:791–7.

- 19. Gilbody S, Sheldon T, House A. Screening and case-finding instruments for depression: a meta-analysis. CMAJ 2008;178:997–1003.

- 20. Kessler R. Identifying and screening for psychological and comorbid medical and psychological disorders in medical settings. J Clin Psychol 2009;65:253–67.

- 21. Nimalasuriya K, Compton MT, Guillory VJ. Screening adults for depression in primary care: A position statement of the American College of Preventive Medicine. J Fam Pract 2009;58:535–8.

- 22. O’Connor EA, Whitlock EP, Beil TL, Gaynes BN. Screening for depression in adult patients in primary care settings: A systematic evidence review. Ann Intern Med 2009;151:793–803.

- 23. Balasubramanian BA, Cohen DJ, Davis M, et al. Learning evaluation: Blending quality improvement and implementation research methods to study healthcare innovations. Implement Sci 2015;10:31.

- 24. Cohen DJ, Balasubramanian BA, Isaacson NF, Clark EC, Etz RS, Crabtree BF. Coordination of health behavior counseling in primary care. Ann Fam Med 2011;9:406–15.