Abstract

In this study, we demonstrate a novel self-navigated motion correction method that suppresses eye motion and blinking artifacts on wide-field optical coherence tomographic angiography (OCTA) without requiring any hardware modification. Highly efficient GPU-based, real-time OCTA image acquisition and processing software was developed to detect eye motion artifacts. The algorithm includes an instantaneous motion index that evaluates the strength of motion artifact on en face OCTA images. Areas with suprathreshold motion and eye blinking artifacts are automatically rescanned in real-time. Both healthy eyes and eyes with diabetic retinopathy were imaged, and the self-navigated motion correction performance was demonstrated.

1. Introduction

Optical coherence tomography (OCT) is a non-invasive modality capable of detailed imaging of retinal and choroidal structure [1]. OCT can also produce angiographic data (OCTA) by measuring motion contrast between successive OCT images [2–5]. Compared to conventional angiographic imaging techniques such as fluorescein angiography (FA), OCTA provides superior resolution as well as volumetric data [6]. As a non-invasive imaging method, OCTA also avoids the potential side effects and discomfort associated with dye injection [6].

Wide-field OCTA imaging has recently attracted significant research interest. Some diseases, like diabetic retinopathy (DR), can manifest with predominantly peripheral vascular changes not visible in typical OCTA macular scans [7]. Although FA has a wider field-of-view (FOV) than OCTA, its potential side effects make it unsuitable for routine imaging [8]. In contrast, wide-field OCT, which is faster, safer, and more comfortable for the patient, could be used in routine settings. Wide-field OCT has been implemented in studies since 2010, but it has been limited by lower resolution [9]. With improvements in laser sweep rate and image processing techniques, several groups have explored high-resolution wide-field OCTA [10–12]. To maintain comparable image resolution with a larger FOV, the total number of sampling points needs to be greatly increased along both the fast and slow axes. Typically, the sampling density should meet the Nyquist criterion. This results in longer inter-frame and total imaging times, which in turn exacerbate artifacts due to microsaccadic motion, blinking, and tear film evaporation [13]. Hardware improvements can yield high-resolution, large-FOV OCTA simply by increasing scan rate [11,14], and many groups have developed such fast-scanning systems [14–17]. Still, even in state-of-the-art systems, data acquisition requires some trade-off between image resolution and FOV size [11].

Successful wide-field systems, therefore, require some means of artifact minimization. One widely used approach, especially on laboratory devices, is postprocessing [18–23]. Postprocessing algorithms can be used to suppress certain artifacts [24]. However, postprocessing corrections involve some degree of information loss [18] and may require multiple volume acquisitions, thereby increasing the total imaging time [18]. Multi-image registration and montage can further enlarge the FOV of OCTA images [25,26]. However, image stitching can introduce new artifacts while simultaneously increasing acquisition time and difficulty [27].

An alternative approach to artifact minimization is to leverage a real-time tracking system [28]. Some prototypes [28–31] and most commercial OCT systems, such as RTVue (Optovue Inc., USA), CIRRUS (Carl Zeiss AG, Germany) and Spectralis (Heidelberg Engineering, Germany), have already incorporated motion tracking. They require additional imaging hardware in the OCT optical axis to simultaneously acquire images. Infrared fundus photography and scanning laser ophthalmoscopy (SLO) [28–30] are two common allied methods used to perform tracking. Such schemes have several drawbacks. Both fundus camera and SLO images need to be acquired and processed separately from the OCT image, increasing the processing complexity of the system [28]. In addition, SLO and fundus photography can only provide indirect information about the OCT image quality. Furthermore, additional hardware can increase the cost of the OCT system and make maintenance and repair more difficult.

A method that does not require additional hardware can obviate these problems [32]. One possible solution is a blink and motion artifact correction method based directly on OCTA, which often already calculates decorrelation [3] that can be used to detect motion. Here, we introduce a novel OCTA-based self-navigated motion correction method that can efficiently remove blink and motion artifacts without additional hardware support. We use the term “self-navigated motion correction” to emphasize that our system automatically detects motion, but relies on the imaging subject to fixate on a target in order to correct this motion when it is detected. This is unlike conventional active tracking methods used in prototypes and commercial systems, since the self-navigated motion correction reported here is a passive technology that does not actively track eye movements. As we will show, this self-navigated motion correction technique enables rapid acquisition of wide-field OCTA data while minimizing motion artifacts.

2. Method

2.1. 400-kHz swept-source OCT (SS-OCT) system

This system uses a customized 400-kHz swept-source laser (Axsun Technologies, USA), which is 4–6 times faster than the lasers used in commercially available devices. The laser has a center wavelength of 1060 nm with a 100 nm sweep range, operating at 100% duty cycle. The maximum theoretical axial resolution is 4 µm in tissue [33]. With an appropriate optical design of the sample arm, which we recently reported [11], a 75-degree maximum FOV can be achieved. Unlike in our previous study, the spot size on the retina was reduced to 10 µm, which is equivalent to the maximum lateral resolution that this system can achieve.

2.2. GPU-based real-time OCT/OCTA

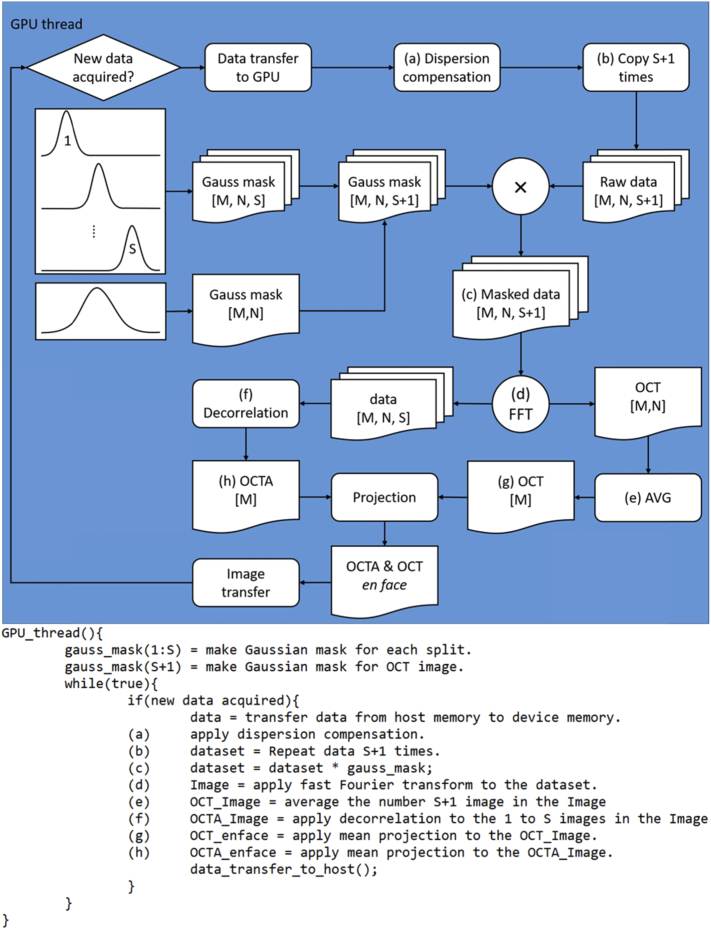

Graphics processing unit (GPU)-based parallel computing techniques can significantly improve OCT and OCTA data processing speed. Many groups have previously developed GPU-based data processing techniques [34–36]; with the help of GPU parallel processing, a megahertz A-line rate data processing speed can be achieved [37]. Real-time OCTA image processing has also been realized previously [38–40]. In this study, we developed GPU-based real-time OCT/OCTA data acquisition and processing software for a swept-source OCT system, modified from our previous work [40]. The split-spectrum amplitude-decorrelation angiography (SSADA) algorithm was applied to compute the OCTA flow signal [3]. SSADA increases the flow signal-to-noise ratio by combining flow information from each split spectrum. Unlike in our previous study, the GPU-based parallel data processing here further improves real-time efficiency by processing the OCT and OCTA images together in a single GPU thread (Fig. 1).

Fig. 1.

GPU-based real-time OCT/OCTA image processing thread. Once the raw spectrum batch is acquired and transferred from the host memory to the device memory, the thread begins to process the new data. The dataset contains spectra from M locations with N repeated scans at each location. A GPU-based dispersion compensation algorithm is applied to the raw spectrum (a). The raw spectrum is then copied to form a dataset with S+1 copies, where S indicates the number of spectrum splits, and the one additional copy is used to generate the OCT image (b). Then a set of Gaussian masks is applied to the dataset (c). A fast Fourier transform (FFT) is then applied to the combined dataset, which generates a split-spectrum image set and an OCT image (d). The OCT data is then averaged across N repeats to generate an averaged OCT image, which reduces noise (e). The split-spectrum image set is then processed using a decorrelation algorithm to generate the OCTA image (f). Then the OCTA and OCT images are projected using mean projection to generate en face images (g, h). The following pseudo code is used to demonstrate the process.

Instead of processing each B-scan separately, a batch of B-scans is processed together. First, the raw spectra are processed to compensate for dispersion. Then, a pre-generated Gaussian mask matrix is applied. This matrix contains 11 narrow Gaussian masks for OCTA image processing and one additional broad Gaussian mask for structural OCT data processing. For each processing batch, the fast Fourier transform is applied only once to minimize the processing time. After the fast Fourier transform, the OCT image is generated by averaging the repeated scans. The remaining 11 OCT images from the spectrum splits are further processed using the amplitude decorrelation algorithm. The speed bottleneck of real-time processing is not the data computation, but the data transfer. To keep processing from lagging behind data acquisition, 12 B-scans at four locations were batched for each processing unit. The total data computation and transfer time for each batch is less than 30 ms, lower than the acquisition time of 42 ms for 12 B-scans. The mean values of OCT and OCTA cross-sectional images are projected to generate OCT and OCTA en face images. Both cross-sectional and en face images are displayed on a custom graphical user interface (GUI) in real-time.
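The batch processing steps described above can be sketched on the CPU with NumPy as a stand-in for the GPU implementation. This is a minimal illustration under stated assumptions, not the software used in this study: the function names `gaussian_masks` and `process_batch`, the mask widths, and the precomputed `dispersion_phase` vector are all hypothetical, and the decorrelation follows the published SSADA formula [3].

```python
import numpy as np

def gaussian_masks(n_k, n_splits, narrow_frac=0.12, broad_frac=0.5):
    """Build (n_splits + 1, n_k) masks: n_splits narrow bands plus one broad mask (last row).
    The widths are illustrative assumptions, not values from the paper."""
    k = np.arange(n_k)
    centers = np.linspace(0, n_k - 1, n_splits + 2)[1:-1]
    narrow = np.exp(-0.5 * ((k[None, :] - centers[:, None]) / (narrow_frac * n_k)) ** 2)
    broad = np.exp(-0.5 * ((k - (n_k - 1) / 2) / (broad_frac * n_k)) ** 2)[None, :]
    return np.concatenate([narrow, broad], axis=0)

def process_batch(raw, dispersion_phase, masks, eps=1e-12):
    """raw: (M, N, A, K) spectra for M locations, N repeats, A A-lines, K spectral samples.
    Returns cross-sectional OCT and OCTA images and their en face mean projections."""
    # (a) Dispersion compensation, simplified to a phase multiplication; a real
    #     system would typically build the analytic signal first.
    spec = raw * np.exp(-1j * dispersion_phase)
    # (b, c) S+1 masked copies of the batch: S split spectra plus one broad-band copy.
    stacked = spec[None] * masks[:, None, None, None, :]
    # (d) A single FFT call over the whole stacked batch; keep the positive depths.
    amp = np.abs(np.fft.fft(stacked, axis=-1))[..., : raw.shape[-1] // 2]
    # (e) Structural OCT: average the broad-band copy over the N repeats.
    oct_img = amp[-1].mean(axis=1)
    # (f) SSADA decorrelation between adjacent repeats, averaged over splits and pairs.
    a0, a1 = amp[:-1, :, :-1], amp[:-1, :, 1:]
    ratio = (a0 * a1) / (0.5 * a0**2 + 0.5 * a1**2 + eps)
    octa_img = 1.0 - ratio.mean(axis=(0, 2))
    # (g, h) Mean projection along depth gives one en face line per location.
    return oct_img, octa_img, oct_img.mean(axis=-1), octa_img.mean(axis=-1)
```

With the batch layout described in the text (four locations, three repeats, 11 narrow masks plus one broad mask), `raw` would have shape `(4, 3, A, K)` and `masks` would have 12 rows. Stacking all masked copies before the transform is what allows a single FFT call per batch.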

2.3. Self-navigated motion correction OCT/OCTA

Conventional real-time motion detection algorithms are based on the correlation between two sequentially acquired en face fundus images from either an infrared camera or SLO. The information used to calculate the correlation comes mainly from the major retinal vasculature. This procedure requires additional hardware and software support. OCTA generates the vasculature and motion signals itself; therefore, its cross-sectional frames (B-frames) are inherently co-registered with the location of eye blinks and motion. To represent the motion strength quantitatively, we defined an instantaneous motion strength index (IMSI) using the normalized standard deviation of the en face OCTA data:

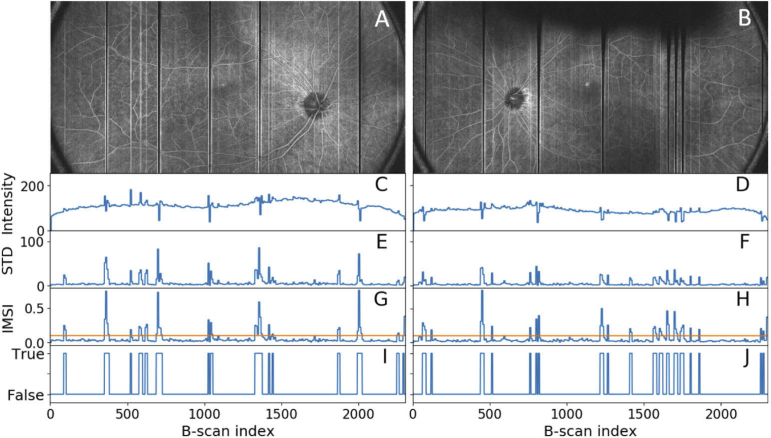

The IMSI was calculated in a single CPU thread after the OCTA en face image was generated in the GPU. The quantity entering the equation is the mean projection of OCTA values from a single batch (4 OCTA B-frames generated from 12 OCT B-scans). A fixed threshold is set to flag all involuntary motion that is strong enough to affect image quality. The performance of this motion index was evaluated using wide-field en face OCTA images generated in real-time from a healthy human volunteer (Fig. 2).
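As an illustration of a normalized standard deviation metric of this kind, the sketch below computes the standard deviation between the B-frames of one en face batch divided by the batch mean. This exact form is an assumption made for illustration, since the equation itself is not reproduced in the text here; the function names are hypothetical, and the default threshold of 0.25 is the value reported in the Results section.

```python
import numpy as np

IMSI_THRESHOLD = 0.25  # threshold value reported in the Results section

def imsi(enface_batch, eps=1e-12):
    """Assumed form of the index: standard deviation between the B-frames of a
    batch divided by the batch mean. enface_batch has shape (B-frames, A-lines)."""
    frame_means = np.asarray(enface_batch, dtype=float).mean(axis=1)
    return float(frame_means.std() / (frame_means.mean() + eps))

def motion_trigger(enface_batch, threshold=IMSI_THRESHOLD):
    """Return True when the batch should be flagged as affected by motion."""
    return imsi(enface_batch) > threshold

# A steady batch of four B-frames versus a batch with one decorrelated frame.
steady = np.full((4, 100), 0.1)
moved = steady.copy()
moved[2] = 0.6
print(motion_trigger(steady), motion_trigger(moved))
```

Because the standard deviation is divided by the mean, scaling the whole batch by a constant leaves the index unchanged, which is the property that allows a single fixed threshold.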

Fig. 2.

(A, B) En face OCTA images acquired from a healthy volunteer without the motion correction system engaged; (C, D) mean values from each OCTA batch; (E, F) standard deviation (STD) between B-frames in each batch; (G, H) IMSI calculated using the en face OCTA image, with yellow line indicating the threshold; (I, J) motion trigger signal generated after the threshold applied to the IMSI value.

Besides the detection of microsaccades, blink detection is also required to achieve artifact-free OCTA imaging. During high-resolution wide-field OCTA image acquisition with our system, the imaging subject can freely blink several times in order to keep the tear film intact. During a blink, the signal strength is significantly reduced. One straightforward approach to blink detection is to set a secondary threshold on the signal strength of the OCT structural image. When the signal strength is subthreshold, a blink may be detected. However, this approach may cause many false detections during wide-field imaging. A major artifact source in wide-field OCT images is shadowing caused by vignetting and vitreous opacity, which frequently occurs in wide-FOV imaging and significantly reduces signal strength. With a simple signal-strength threshold, the decrease caused by shadow artifacts can be mistaken for a blink. In contrast, at the initiation of a blink, the eye usually has a large axial movement, which can be detected by the IMSI. When the eye is closed, the OCTA signal has low variation, and the IMSI value is low across an entire batch. The IMSI calculated by combining the batches at blink initiation and eye closure will yield a high value, indicating blinking artifacts. In our motion and blink detection mechanism, the IMSI is a normalized metric, independent of variation in OCTA signal strength. A single fixed IMSI threshold can therefore be used to handle blinks and various types of motion across different imaging subjects and different systems.

After accurately detecting motion or blink artifacts, the system automatically rescans the affected area to restore image quality (Fig. 3). When eye blinks or microsaccades are detected, the system records the B-scan number at which the motion begins, and then continues scanning and acquiring the following positions. The IMSI of the following batches is also calculated. When the IMSI values return to the normal (subthreshold) level, the system resets the scanner and calculates the IMSI from the new batch acquired after the scanning reset. By doing so, the image can be re-evaluated. If additional artifacts are detected at the same position, the scanner resets again to rescan the affected area until the blink or motion is completed. As can be seen (Fig. 4), this procedure is capable of correctly removing blink artifacts.
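The rescan logic described in this paragraph can be simulated with a short control loop. This is a sketch under stated assumptions rather than the acquisition software itself: the variable names n, m and f follow the Fig. 3 caption, while the function name `self_navigated_scan`, the callback `acquire_imsi`, and the `max_acquisitions` guard are hypothetical.

```python
def self_navigated_scan(n_batches, acquire_imsi, threshold=0.25, max_acquisitions=100_000):
    """Simulate the rescan loop and return the scanner positions in acquisition order.

    acquire_imsi(position, acquisition_index) is a hypothetical callback that
    returns the IMSI of the batch acquired at the given scanner position.
    """
    n = 0        # global batch position counter
    m = None     # first batch position affected by the current artifact
    f = False    # motion flag
    log = []
    while len(log) < max_acquisitions:
        if n >= n_batches:
            if not f:
                break                # volume finished with no pending artifact
            n, f = m, False          # volume end reached during motion: go back
            continue
        value = acquire_imsi(n, len(log))
        log.append(n)
        if value > threshold:
            if not f:
                m, f = n, True       # remember where the artifact started
            n += 1                   # keep scanning while the motion lasts
        elif f:
            n, f = m, False          # motion ended: reset scanner to position m
        else:
            n += 1                   # clean batch, move on
    return log

# Example: motion corrupts the 4th and 5th acquisitions of an 8-batch volume.
order = self_navigated_scan(8, lambda pos, t: 0.9 if t in (3, 4) else 0.05)
print(order)  # [0, 1, 2, 3, 4, 5, 3, 4, 5, 6, 7]
```

In this simulation the first clean batch after the motion (position 5) serves only to confirm that the eye is steady again; the scanner then returns to position 3 and reacquires the affected region.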

Fig. 3.

Flow chart of the GPU-based real-time self-navigated motion correction thread. Variable n indicates the global batch number counter, variable m indicates a batch that contains artifacts, and f is the motion flag. After the GPU thread generates a new en face image, the image is then processed to calculate IMSI to determine if motion occurred. If motion occurred, the thread will wait until no additional motion events occur to reset the galvo scanner. The following pseudo code is used to better demonstrate the process.

Fig. 4.

High-density wide-field OCTA images. (A) Wide-field OCTA image acquired without self-navigated motion correction engaged; (B) wide-field OCTA image acquired with self-navigated motion correction engaged. The shadowing artifact is caused by eyelashes; (C) high-resolution wide-field OCTA image acquired with self-navigated motion correction engaged; (D, F) 3×3-mm inner retinal angiograms cropped from C; (E, G) 3×3-mm inner retinal angiograms acquired using a commercial system for comparison.

3. Results

3.1. Healthy retina

To evaluate the performance of the self-navigated motion correction system, we applied our algorithm with two different scanning patterns on a healthy volunteer. First, we acquired two wide-field high-resolution OCTA images, once without (Fig. 4(A)) and once with (Fig. 4(B)) the self-navigated motion correction system. Each volume contained 2560 A-lines per B-scan and 1920 B-scans, with two repeats. A bidirectional scan pattern [41] was applied here. The sampling step size was 9 µm in the fast axis and 12 µm in the slow axis. The total data acquisition time was 25 seconds without self-navigated motion correction. In this work, the total scanning time with self-navigated motion correction was always less than 1 minute. With real-time motion correction engaged, the system successfully detected each blink of the scanned eye and rescanned the affected area right after the eye re-opened. Large artifacts caused by microsaccadic movements were also successfully detected, and the affected areas were rescanned right after the motion was completed. At the edge of the rescan waveform, there are fly-back artifacts, but these are limited to 50 A-scans, which is only 1/20 of the total A-scans in each B-scan. In order to complete the scan within a reasonable time (<1 min), very mild motion artifacts that were lower than the IMSI threshold were deliberately disregarded. After the inner retinal layer was segmented [42], its angiogram was generated by projecting the maximum OCTA value within this slab [43]. A Gabor filter, histogram equalization, and a custom color map were applied to enhance the visualization.

A high-density retinal OCTA scan with a horizontal 75-degree and vertical 38-degree FOV (23 × 12 mm) was acquired on the same healthy eye. A raster scan pattern was applied here. The scan contained 1208 A-lines per B-scan and 2304 positions per volume with 3 repeated B-scans at each position, which achieves a sampling density of 10 µm/line on both the horizontal and vertical axes. Our results demonstrated that the en face OCTA (Fig. 4(C)) is free of blink and large motion artifacts, although a few minor artifacts still remain; the high sampling density enabled acquisition of high-resolution angiograms with fine vascular details. To further validate the image quality, the same healthy human subject was also scanned using a commercial OCT system (RTVue-XR Avanti; Optovue, Inc., Fremont, CA), with 3 × 3-mm (304 × 304 lines) retinal images acquired in both the central macular and the peripheral temporal regions. For a fair comparison, only a single OCTA scan volume from the RTVue-XR was used to generate the inner retinal angiogram. The cropped images from the high-resolution wide-field prototype OCTA at the same positions were used for side-by-side comparison (Fig. 4(C)). On qualitative inspection, the images from the prototype system and the commercial system show similar image quality and capillary visibility (Fig. 4(D), (E), (F), (G)).
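The scan parameters of the two patterns above can be cross-checked with simple arithmetic. The sketch below assumes that the 1920 B-scans of the first pattern are each repeated twice, that the 2304 positions of the second pattern span the 23-mm dimension while the 1208 A-lines span the 12-mm dimension, and that galvo fly-back and rescans are ignored, so the times are lower bounds. The function names are hypothetical.

```python
A_LINE_RATE_HZ = 400_000  # sweep rate of the swept-source laser

def ideal_acquisition_time_s(a_lines_per_bscan, positions, repeats, rate_hz=A_LINE_RATE_HZ):
    """Lower bound on acquisition time: total A-lines divided by the A-line rate."""
    return a_lines_per_bscan * positions * repeats / rate_hz

def step_um(extent_mm, n_samples):
    """Average sampling step in micrometers for n_samples spread over extent_mm."""
    return extent_mm * 1000.0 / n_samples

t_first = ideal_acquisition_time_s(2560, 1920, 2)   # first (bidirectional) pattern
t_dense = ideal_acquisition_time_s(1208, 2304, 3)   # high-density raster pattern
step_long = step_um(23, 2304)                       # step along the 23-mm dimension
step_short = step_um(12, 1208)                      # step along the 12-mm dimension

print(f"{t_first:.1f} s, {t_dense:.1f} s, {step_long:.2f} um, {step_short:.2f} um")
```

Under these assumptions the first pattern takes about 24.6 s, in line with the reported 25 seconds, and both steps of the high-density pattern come out close to the stated 10 µm/line.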

We also evaluated the performance of the self-navigated motion correction system quantitatively. We scanned 14 eyes from 7 healthy human subjects with and without motion correction and examined the real-time OCTA en face images generated along with the raw OCT spectral data. Without the motion correction system, 104 blinks were present across the images. With the self-navigated motion correction system, no blink artifacts were present in the images. Motion was measured automatically by calculating the IMSI and detected by setting a threshold at 0.25. Without motion correction, 1,976 significant movements were detected in total. The number of artifacts dropped to 168 when self-navigated motion correction was engaged; the blink and motion artifacts were thus suppressed by 100% and 91.5%, respectively.
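The suppression percentages follow directly from the artifact counts reported above. A short check, using a hypothetical helper name:

```python
def suppression_percent(before, after):
    """Percentage of artifacts removed, given counts without and with correction."""
    return 100.0 * (1.0 - after / before)

blink = suppression_percent(104, 0)      # blink artifacts: 104 -> 0
motion = suppression_percent(1976, 168)  # motion artifacts: 1,976 -> 168
print(f"blink: {blink:.1f}%, motion: {motion:.1f}%")  # blink: 100.0%, motion: 91.5%
```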

3.2. Retina with diabetic retinopathy

A 23 × 12-mm OCTA using the same scan pattern described above was acquired from a 57-year-old female diagnosed with proliferative DR and early-stage cataract. Our prototype system does not require dilation, but to minimize the difficulty of alignment during imaging, this participant's pupil was dilated. The resulting OCTA demonstrated capillary dropout, microaneurysms, and intraretinal microvascular abnormalities in high resolution with a FOV that exceeds that of current commercial OCT systems (Fig. 5).

Fig. 5.

Inner retinal angiogram acquired by our prototype high-resolution wide-field OCTA from a participant with proliferative diabetic retinopathy.

4. Discussion

In this paper, we presented a novel OCTA-based microsaccade and blink detection and correction method that does not require additional hardware or modification to an OCT/OCTA device. We applied this method to image both healthy and DR eyes, acquiring 75-degree wide-field, capillary-level resolution OCTA with capillary visibility comparable to 3 × 3-mm commercial OCTA images. To our knowledge, this is the first application of a real-time self-navigated motion correction system on an OCTA device that relies exclusively on OCTA data for motion artifact correction. Previously, OCT systems used either infrared fundus photography or infrared SLO as the reference for eye motion and blink detection. Our system has several advantages over such conventional tracking and motion correction methods. First, since no additional hardware is required, it reduces the complexity and cost of OCT systems; second, OCTA provides richer vasculature information to evaluate motion amplitude; third, because of their relatively low resolution, fundus images are not adequately sensitive to small changes in vessel location to precisely align OCTA images. OCTA is intrinsically sensitive to movements (such as microsaccades), which makes the detection of motion much easier than with third-party tracking methods.

GPU-based data processing technology has accelerated the development of real-time OCT/OCTA. Our self-navigated motion correction method relies on high-speed, real-time OCTA image processing to respond to microsaccade and blink artifacts. The current bottleneck in real-time OCTA image processing is data transfer speed. For real-time applications, the computation and transfer time needs to be less than the data acquisition time. For our 400-kHz OCT system with 1204 A-lines per B-scan, a minimum of 12 B-scans should be processed together in batches on an Nvidia RTX 2080 Ti GPU to maintain real-time processing. A larger batch can increase data computation and transfer efficiency at the cost of increased data transfer time. The motion correction system must balance processing efficiency against correction response time. A large batch will have a longer acquisition and processing time, which will result in a long response time. A slow correction response will waste many “good” B-frames and directly extend the data acquisition time.

In our self-navigated motion correction system, one of the most important features is the IMSI metric. As the key motion indicator, the reliability of the IMSI directly determines the reliability of the motion correction. Here, the IMSI is a normalized value across several different B-scans. The normalization process removes the dependency on signal strength, so that the index reflects motion alone. Thus, in our self-navigated motion correction algorithm, the IMSI is independent of variation between different types of motion, different imaging subjects, different SNRs, and different types of systems. However, the IMSI is still affected by the number of B-frames used in each batch. In our experience, a processing batch that is too small yields an unreliable IMSI. In our system, we used a high-speed swept-source laser, which requires at least 4 OCTA B-frames in each batch.

Wide-field OCTA imaging is challenging, and ocular pathology and involuntary motion can reduce image quality. Increasing the speed and sensitivity of the system can potentially overcome some of these difficulties. Many groups have applied high-speed swept-source lasers [14,44] to prototype OCT systems. One of the fastest swept-wavelength laser sources is the Fourier domain mode-locked (FDML) laser. It can achieve megahertz sweep rates. At such a high speed, video-rate OCT imaging has been realized [45]. In our system, we employed a 400-kHz rather than a megahertz swept-source laser. There are several considerations when selecting a laser source. One is that increasing the sweep rate decreases the SNR of the OCTA system. Another is the scanning speed. High scanning speeds require a resonant scanner, which frequently causes image distortion problems. Furthermore, high B-scan rates reduce the flow SNR and OCTA image quality due to diminished vascular flow contrast. Finally, for wide-field imaging, a sufficient imaging range is required. For a 3-megahertz OCT system, suppose we need 1536 pixels per A-line to achieve an imaging depth similar to that of our system. To acquire those pixels, a 5 GHz balanced detector would be needed. However, designing such a balanced detector is currently a challenge. We opted for the 400-kHz source for these reasons.
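The detector bandwidth figure can be motivated with a back-of-the-envelope estimate. The sketch below assumes a k-linear sweep at 100% duty cycle, for which a reflector at depth pixel z produces z fringe cycles per sweep, so the highest fringe frequency is roughly the number of depth pixels times the sweep rate. This interpretation of "1536 pixels per A-line" as depth pixels, and the function name, are assumptions made for illustration.

```python
def max_fringe_frequency_hz(sweep_rate_hz, depth_pixels, duty_cycle=1.0):
    """Highest fringe frequency for a k-linear sweep: depth pixel z gives z fringe
    cycles per sweep, and each sweep lasts duty_cycle / sweep_rate_hz seconds."""
    return depth_pixels * sweep_rate_hz / duty_cycle

f_3mhz = max_fringe_frequency_hz(3e6, 1536)      # hypothetical 3-MHz system
f_400khz = max_fringe_frequency_hz(400e3, 1536)  # sweep rate of the system described here
print(f"{f_3mhz / 1e9:.2f} GHz vs {f_400khz / 1e6:.0f} MHz")  # 4.61 GHz vs 614 MHz
```

Under these assumptions the 3-MHz case needs about 4.6 GHz of detection bandwidth, consistent with the roughly 5 GHz quoted above, while the same depth range at 400 kHz needs only about 0.6 GHz.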

Another challenge for wide-field OCTA imaging is shadows. In our previous study, a special optical system was designed to eliminate the shadow caused by pupil misalignment [11]. This system was also employed in this work. The cross-scanning pattern applied in the preview step can also increase alignment efficiency. Compared to the conventional multi-position method, the method presented here has a higher refresh rate that can show shadows in real-time, which helps the operator control scan quality.

Our current motion correction system still has some limitations. The system can only indicate that microsaccades and blinks occurred, and does not provide any quantitative lateral or axial motion trajectory information. Without such information, the system is reliant on the fixation target and the cooperation of the patient to realign the eye after movement, which may introduce artifacts like vessel interruption. The lack of lateral motion information can also cause low sensitivity to slow motion (like ocular drift). Even with a small fixation target, the change of gaze after each motion or blink can also introduce vessel interruptions and some information loss. Evaporation of the tear film during the imaging session will also reduce image quality, as this leads to loss of focus. Several blinks are required to recover the tear film, which increases the chance of more vessel interruptions and information loss. Because our approach requires the imaging subject’s cooperation, this system cannot work on sedated patients, non-human primates, or neonates. As a passive system, the image quality also relies on patient stability and the ability to concentrate on the fixation target. Image quality will decrease for an imaging subject who has difficulty focusing on the fixation target and keeping steady during the imaging process.

Finally, we took advantage of GPU and SSADA parallel processing efficiency. Together, these software and hardware improvements enabled the high-quality images presented in this work.

5. Conclusion

We have successfully developed a novel real-time OCTA-based microsaccade and blink detection and correction system for high-resolution wide-field OCT/OCTA imaging. This motion correction system is integrated in a high-speed swept-source OCT system capable of acquiring a 75-degree field-of-view high-density OCTA image in healthy and DR patients. By calculating an instantaneous motion index and rescanning in real time, the system detects and eliminates artifacts due to eye blinking and large movements. This system represents a significant improvement in field-of-view and resolution compared to a conventional OCT system.

Funding

National Institutes of Health (P30 EY010572, R01 EY024544, R01 EY027833); Research to Prevent Blindness (unrestricted departmental funding grant, William & Mary Greve Special Scholar Award).

Disclosures

Oregon Health & Science University (OHSU) and Yali Jia have a significant financial interest in Optovue, Inc. These potential conflicts of interest have been reviewed and managed by OHSU.

References

- 1.Huang D., Swanson E. A., Lin C. P., Schuman J. S., Stinson W. G., Chang W., Hee M. R., Flotte T., Gregory K., Puliafito C. A., Fujimoto J. G., “Optical coherence tomography,” Science 254(5035), 1178–1181 (1991). 10.1126/science.1957169 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Makita S., Hong Y., Yamanari M., Yatagai T., Yasuno Y., “Optical coherence angiography,” Opt. Express 14(17), 7821–7840 (2006). 10.1364/OE.14.007821 [DOI] [PubMed] [Google Scholar]

- 3.Jia Y., Tan O., Tokayer J., Potsaid B., Wang Y., Liu J. J., Kraus M. F., Subhash H., Fujimoto J. G., Hornegger J., Huang D., “Split-spectrum amplitude-decorrelation angiography with optical coherence tomography,” Opt. Express 20(4), 4710–4725 (2012). 10.1364/OE.20.004710 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.An L., Wang R. K., “In vivo volumetric imaging of vascular perfusion within human retina and choroids with optical micro-angiography,” Opt. Express 16(15), 11438–11452 (2008). 10.1364/OE.16.011438 [DOI] [PubMed] [Google Scholar]

- 5.Mariampillai A., Standish B. A., Moriyama E. H., Khurana M., Munce N. R., Leung M. K., Jiang J., Cable A., Wilson B. C., Vitkin I. A., Yang V. X. D., “Speckle variance detection of microvasculature using swept-source optical coherence tomography,” Opt. Lett. 33(13), 1530–1532 (2008). 10.1364/OL.33.001530 [DOI] [PubMed] [Google Scholar]

- 6.Yannuzzi L. A., Rohrer K. T., Tindel L. J., Sobel R. S., Costanza M. A., Shields W., Zang E., “Fluorescein angiography complication survey,” Ophthalmology 93(5), 611–617 (1986). 10.1016/S0161-6420(86)33697-2 [DOI] [PubMed] [Google Scholar]

- 7.You Q. S., Guo Y., Wang J., Wei X., Camino A., Zang P., Flaxel C. J., Bailey S. T., Huang D., Jia Y., “Detection of clinically unsuspected retinal neovascularization with wide-field optical coherence tomography angiography,” Retina 40(5), 891–897 (2020). 10.1097/IAE.0000000000002487 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Small R. G., Hildebrand P. L., “Preferred practice patterns,” Ophthalmology 103(12), 1987–1988 (1996). 10.1016/S0161-6420(96)30396-5 [DOI] [PubMed] [Google Scholar]

- 9.Klein T., Wieser W., Eigenwillig C. M., Biedermann B. R., Huber R., “Megahertz OCT for ultrawide-field retinal imaging with a 1050 nm Fourier domain mode-locked laser,” Opt. Express 19(4), 3044–3062 (2011). 10.1364/OE.19.003044 [DOI] [PubMed] [Google Scholar]

- 10.De Pretto L. R., Moult E. M., Alibhai A. Y., Carrasco-Zevallos O. M., Chen S., Lee B., Witkin A. J., Baumal C. R., Reichel E., de Freitas A. Z., Duker J. S., Waheed N. K., Fujimoto J. G., “Controlling for Artifacts in Widefield Optical Coherence Tomography Angiography Measurements of Non-Perfusion Area,” Sci. Rep. 9(1), 9096 (2019). 10.1038/s41598-019-43958-1 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Wei X., Hormel T. T., Guo Y., Jia Y., “75-degree non-mydriatic single-volume optical coherence tomographic angiography,” Biomed. Opt. Express 10(12), 6286–6295 (2019). 10.1364/BOE.10.006286 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Zhang Q., Lee C. S., Chao J., Chen C.-L., Zhang T., Sharma U., Zhang A., Liu J., Rezaei K., Pepple K. L., Munsen R., Kinyoun J., Johnstone M., Van Gelder R. N., Wang R. K., “Wide-field optical coherence tomography based microangiography for retinal imaging,” Sci. Rep. 6(1), 22017 (2016). 10.1038/srep22017 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Spaide R. F., Fujimoto J. G., Waheed N. K., “Image artifacts in optical coherence angiography,” Retina 35(11), 2163–2180 (2015). 10.1097/IAE.0000000000000765 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Wieser W., Biedermann B. R., Klein T., Eigenwillig C. M., Huber R., “Multi-megahertz OCT: High quality 3D imaging at 20 million A-scans and 4.5 GVoxels per second,” Opt. Express 18(14), 14685–14704 (2010). 10.1364/OE.18.014685 [DOI] [PubMed] [Google Scholar]

- 15.Zhi Z., Qin W., Wang J., Wei W., Wang R. K., “4D optical coherence tomography-based micro-angiography achieved by 1.6-MHz FDML swept source,” Opt. Lett. 40(8), 1779–1782 (2015). 10.1364/OL.40.001779 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Xu J., Zhang C., Xu J., Wong K., Tsia K., “Megahertz all-optical swept-source optical coherence tomography based on broadband amplified optical time-stretch,” Opt. Lett. 39(3), 622–625 (2014). 10.1364/OL.39.000622 [DOI] [PubMed] [Google Scholar]

- 17.Kang J., Feng P., Wei X., Lam E. Y., Tsia K. K., Wong K. K., “102-nm, 44.5-MHz inertial-free swept source by mode-locked fiber laser and time stretch technique for optical coherence tomography,” Opt. Express 26(4), 4370–4381 (2018). 10.1364/OE.26.004370 [DOI] [PubMed] [Google Scholar]

- 18.Zang P., Liu G., Zhang M., Dongye C., Wang J., Pechauer A. D., Hwang T. S., Wilson D. J., Huang D., Li D., Jia Y., “Automated motion correction using parallel-strip registration for wide-field en face OCT angiogram,” Biomed. Opt. Express 7(7), 2823–2836 (2016). 10.1364/BOE.7.002823 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Kraus M. F., Potsaid B., Mayer M. A., Bock R., Baumann B., Liu J. J., Hornegger J., Fujimoto J. G., “Motion correction in optical coherence tomography volumes on a per A-scan basis using orthogonal scan patterns,” Biomed. Opt. Express 3(6), 1182–1199 (2012). 10.1364/BOE.3.001182

- 20.Swanson E. A., Izatt J. A., Hee M. R., Huang D., Lin C., Schuman J., Puliafito C., Fujimoto J. G., “In vivo retinal imaging by optical coherence tomography,” Opt. Lett. 18(21), 1864–1866 (1993). 10.1364/OL.18.001864

- 21.Zawadzki R. J., Fuller A. R., Choi S. S., Wiley D. F., Hamann B., Werner J. S., “Correction of motion artifacts and scanning beam distortions in 3D ophthalmic optical coherence tomography imaging,” in Ophthalmic Technologies XVII, (International Society for Optics and Photonics, 2007), 642607.

- 22.Ricco S., Chen M., Ishikawa H., Wollstein G., Schuman J., “Correcting motion artifacts in retinal spectral domain optical coherence tomography via image registration,” in International Conference on Medical Image Computing and Computer-Assisted Intervention, (Springer, 2009), 100–107.

- 23.Hendargo H. C., Estrada R., Chiu S. J., Tomasi C., Farsiu S., Izatt J. A., “Automated non-rigid registration and mosaicing for robust imaging of distinct retinal capillary beds using speckle variance optical coherence tomography,” Biomed. Opt. Express 4(6), 803–821 (2013). 10.1364/BOE.4.000803

- 24.Camino A., Jia Y., Liu G., Wang J., Huang D., “Regression-based algorithm for bulk motion subtraction in optical coherence tomography angiography,” Biomed. Opt. Express 8(6), 3053–3066 (2017). 10.1364/BOE.8.003053

- 25.Wang J., Camino A., Hua X., Liu L., Huang D., Hwang T. S., Jia Y., “Invariant features-based automated registration and montage for wide-field OCT angiography,” Biomed. Opt. Express 10(1), 120–136 (2019). 10.1364/BOE.10.000120

- 26.Li Y., Gregori G., Lam B. L., Rosenfeld P. J., “Automatic montage of SD-OCT data sets,” Opt. Express 19(27), 26239–26248 (2011). 10.1364/OE.19.026239

- 27.Cui Y., Zhu Y., Wang J. C., Lu Y., Zeng R., Katz R., Wu D. M., Vavvas D. G., Husain D., Miller J. W., “Imaging Artifacts and Segmentation Errors With Wide-Field Swept-Source Optical Coherence Tomography Angiography in Diabetic Retinopathy,” Trans. Vis. Sci. Tech. 8(6), 18 (2019). 10.1167/tvst.8.6.18

- 28.Vienola K. V., Braaf B., Sheehy C. K., Yang Q., Tiruveedhula P., Arathorn D. W., de Boer J. F., Roorda A., “Real-time eye motion compensation for OCT imaging with tracking SLO,” Biomed. Opt. Express 3(11), 2950–2963 (2012). 10.1364/BOE.3.002950

- 29.Ferguson R. D., Hammer D. X., Paunescu L. A., Beaton S., Schuman J. S., “Tracking optical coherence tomography,” Opt. Lett. 29(18), 2139–2141 (2004). 10.1364/OL.29.002139

- 30.Hammer D. X., Ferguson R. D., Iftimia N. V., Ustun T., Wollstein G., Ishikawa H., Gabriele M. L., Dilworth W. D., Kagemann L., Schuman J. S., “Advanced scanning methods with tracking optical coherence tomography,” Opt. Express 13(20), 7937–7947 (2005). 10.1364/OPEX.13.007937

- 31.Pircher M., Götzinger E., Sattmann H., Leitgeb R. A., Hitzenberger C. K., “In vivo investigation of human cone photoreceptors with SLO/OCT in combination with 3D motion correction on a cellular level,” Opt. Express 18(13), 13935–13944 (2010). 10.1364/OE.18.013935

- 32.Zhang Y., Wörn H., “Optical coherence tomography as highly accurate optical tracking system,” in 2014 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (IEEE, 2014), 1145–1150.

- 33.Fercher A. F., Drexler W., Hitzenberger C. K., Lasser T., “Optical coherence tomography-principles and applications,” Rep. Prog. Phys. 66(2), 239–303 (2003). 10.1088/0034-4885/66/2/204

- 34.Li J., Bloch P., Xu J., Sarunic M. V., Shannon L., “Performance and scalability of Fourier domain optical coherence tomography acceleration using graphics processing units,” Appl. Opt. 50(13), 1832–1838 (2011). 10.1364/AO.50.001832

- 35.Rasakanthan J., Sugden K., Tomlins P. H., “Processing and rendering of Fourier domain optical coherence tomography images at a line rate over 524 kHz using a graphics processing unit,” J. Biomed. Opt. 16(2), 020505 (2011). 10.1117/1.3548153

- 36.Sylwestrzak M., Szlag D., Szkulmowski M., Gorczynska I. M., Bukowska D., Wojtkowski M., Targowski P., “Four-dimensional structural and Doppler optical coherence tomography imaging on graphics processing units,” J. Biomed. Opt. 17(10), 100502 (2012). 10.1117/1.JBO.17.10.100502

- 37.Jian Y., Wong K., Sarunic M. V., “Graphics processing unit accelerated optical coherence tomography processing at megahertz axial scan rate and high resolution video rate volumetric rendering,” J. Biomed. Opt. 18(2), 026002 (2013). 10.1117/1.JBO.18.2.026002

- 38.Xu J., Wong K., Jian Y., Sarunic M. V., “Real-time acquisition and display of flow contrast using speckle variance optical coherence tomography in a graphics processing unit,” J. Biomed. Opt. 19(2), 026001 (2014). 10.1117/1.JBO.19.2.026001

- 39.Lee K. K., Mariampillai A., Joe X., Cadotte D. W., Wilson B. C., Standish B. A., Yang V. X., “Real-time speckle variance swept-source optical coherence tomography using a graphics processing unit,” Biomed. Opt. Express 3(7), 1557–1564 (2012). 10.1364/BOE.3.001557

- 40.Wei X., Camino A., Pi S., Hormel T. T., Cepurna W., Huang D., Morrison J. C., Jia Y., “Real-time cross-sectional and en face OCT angiography guiding high-quality scan acquisition,” Opt. Lett. 44(6), 1431–1434 (2019). 10.1364/OL.44.001431

- 41.Ju M. J., Heisler M., Athwal A., Sarunic M. V., Jian Y., “Effective bidirectional scanning pattern for optical coherence tomography angiography,” Biomed. Opt. Express 9(5), 2336–2350 (2018). 10.1364/BOE.9.002336

- 42.Guo Y., Camino A., Zhang M., Wang J., Huang D., Hwang T., Jia Y., “Automated segmentation of retinal layer boundaries and capillary plexuses in wide-field optical coherence tomographic angiography,” Biomed. Opt. Express 9(9), 4429–4442 (2018). 10.1364/BOE.9.004429

- 43.Hormel T. T., Wang J., Bailey S. T., Hwang T. S., Huang D., Jia Y., “Maximum value projection produces better en face OCT angiograms than mean value projection,” Biomed. Opt. Express 9(12), 6412–6424 (2018). 10.1364/BOE.9.006412

- 44.Xu J., Wei X., Yu L., Zhang C., Xu J., Wong K., Tsia K. K., “High-performance multi-megahertz optical coherence tomography based on amplified optical time-stretch,” Biomed. Opt. Express 6(4), 1340–1350 (2015). 10.1364/BOE.6.001340

- 45.Kolb J. P., Draxinger W., Klee J., Pfeiffer T., Eibl M., Klein T., Wieser W., Huber R., “Live video rate volumetric OCT imaging of the retina with multi-MHz A-scan rates,” PLoS One 14, e0213144 (2019). 10.1371/journal.pone.0213144