Abstract

Goal: The purpose of this study was to identify clinically relevant patterns of glaucomatous vision loss through convex representation to predict glaucoma several years prior to disease onset. Methods: We developed a deep archetypal analysis to identify patterns of glaucomatous vision loss, and then projected visual fields over the identified patterns. Projections provided a representation that was more accurate in detecting glaucomatous vision loss, thus, more appropriate for recognizing preclinical signs of glaucoma prior to disease development. To overcome the class imbalance in prediction, we implemented a class-balanced bagging with neural networks. Results: Using original visual field as features of the class-balanced bagging classification provided an area under the receiver-operating characteristic curve (AUC) of 0.55 for predicting glaucoma approximately four years prior to disease development. Using convex representation of the visual fields as input features provided an AUC of 0.61 while using deep convex representation as input features improved the AUC to 0.71. Relevance vector machine (RVM) achieved an AUC of 0.64. Conclusion: Deep archetypal analysis representation of visual functional features with balanced bagging classification could serve as an automated tool for predicting glaucoma. Significance: Glaucoma is the second leading cause of worldwide blindness. Most people with glaucoma have no early symptoms or pain, delaying diagnosis in many patients until they reach late irreversible vision loss stages. In fact, about 50% of people with glaucoma are unaware they have the disease. Deep archetypal analysis models may impact clinical practice in effectively identifying at-risk glaucoma patients well prior to disease development.

Keywords: Glaucoma prediction, archetypal analysis, deep archetypal analysis, artificial intelligence, machine learning

Glaucoma is the second leading cause of worldwide blindness. Most people with glaucoma have no early symptoms or pain, delaying diagnosis in many patients until they have irreversible vision loss. In fact, about 50% of people with glaucoma are unaware they have the disease. Deep archetypal analysis models may impact clinical practice in effectively identifying at-risk glaucoma patients well prior to disease development.

I. Introduction

Archetypal analysis (AA) was first proposed by Cutler and Breiman [1], and several variations of AA have since been proposed in the literature [2]–[4]. Deep AA (DAA), a non-linear extension of the initial AA, was recently proposed to address several limitations of AA [5]–[7]. Deep AA does not rely on expert knowledge to combine relevant dimensions, learns appropriate transformations when combining features of different types, and is able to incorporate additional information into the learning process.

As a proof of concept, we will deploy a particular type of DAA and show that it provides an appropriate framework for predicting ocular conditions such as glaucoma several years prior to the onset of the disease from visual field data.

Glaucoma is, in fact, a heterogeneous group of eye diseases and the second leading cause of blindness worldwide [8]. It has multiple known risk factors including older age, African-American ethnicity, elevated intraocular pressure (IOP; fluid pressure inside the eye), and thinner central corneal thickness [9], [10]. However, subjects with these risk factors may or may not develop glaucoma, as multiple other factors interact in a complex manner, making prediction of the disease in advance a non-trivial task. Moreover, glaucoma is asymptomatic and the patient is often not aware of the disease in its early stages, before vision loss becomes significant [11]. Hence, any progress in predicting glaucoma early is clinically important and economically impactful.

Archetypal analysis has been used to identify patterns of visual field (VF) loss in patients with glaucoma [12] and to detect glaucoma progression from AA-identified patterns of VF loss [13]. Recent advances in data-driven models may also aid in uncovering hidden visual functional patterns that may lead to glaucoma and hence improve our understanding of the mechanisms underlying glaucoma. These hidden patterns (of structural or functional defect) may also be helpful in developing frameworks that can predict glaucoma prior to its onset.

Currently, glaucoma-induced VF losses are mainly assessed using well-established standard automated perimetry (SAP) [14]. The Humphrey 30-2 testing system generates a map of 76 local retinal sensitivities to light. The VF map is typically used subjectively by clinicians to determine the severity of glaucoma-induced functional loss and remains an important component of glaucoma assessment.

In this study, the authors propose a framework based on DAA of VFs for predicting glaucoma several years prior to clinical manifestation of the disease. The proposed framework provides convex representations of VFs, which are more specific and sensitive for predicting glaucoma in advance. The experimental results on a real-world glaucoma dataset demonstrate the effectiveness of the proposed framework.

The rest of the paper is organized as follows: Section II provides a literature review, Section III discusses AA and deep AA, Section IV explains the proposed framework, and Section V is devoted to experimentation. Results and discussion are provided in Section VI, and finally, the conclusion is provided in Section VII.

II. Literature Review

Most previous studies on identifying glaucoma have focused on utilizing machine learning, or its subclass deep learning, to diagnose glaucoma when clinical signs are already obvious [15]–[18]. In fact, a significant majority of deep learning models have been centered on diagnosis for two major reasons. First, diagnosis requires cross-sectional data, which is easier to access. Second, models typically perform better for diagnosis because disease signs are already present and thus easier to identify. However, predicting the future development of the disease from baseline parameters is a challenging task because 1) access to longitudinal data prior to disease development is more challenging and 2) identifying preclinical signs of the disease (that are not obvious yet) is more involved.

A few studies have attempted to predict glaucoma prior to disease onset [10], [19], [20]. However, most of those studies have only utilized statistical analysis to determine risk factors that may lead to the disease. Sehi et al. [19] used structural features such as optic nerve head topography and retinal nerve fiber layer thickness and applied a Cox hazard model to predict glaucoma. Salvetat et al. [10] used multivariate Cox hazard models and identified several structural and functional features that can serve as risk factors for glaucoma. However, the risk factors identified through statistical analysis were imprecise for prediction of glaucoma in advance of disease onset. To address this challenge, Bowd et al. [20] developed a machine learning classifier using a combination of structural and functional features to predict glaucoma prior to onset. They utilized relevance vector machines (RVM) as the classification model. To the best of our knowledge, that is the only study that has developed a machine learning-based framework for predicting glaucoma onset from baseline parameters. However, in their study, the VF input features were fed directly to the RVM classifier, whereas more clinically relevant representations could improve the recognition of subtle (preclinical) signs of the disease. Moreover, the datasets used in all the aforementioned studies are relatively small, and the results of these studies may not generalize fully.

The major highlights of our study are:

A. Clinically Relevant Unsupervised Convex Representation

Since we are analyzing VF data from eyes with normal VF and normal-appearing optic nerve (according to clinical standards) at the baseline (when the participants entered the study, after which they were followed for years to see whether they developed the disease), using VFs directly may not provide sufficient information for predicting glaucoma. We hypothesize that there are subtle hidden patterns of VF defect that are either unknown to clinicians or challenging to detect, but that may be characteristic patterns of future glaucoma development. To overcome this issue, deep archetypal analysis (DAA) and simplex projection are employed to transform VFs into unsupervised convex representations (see Sections III and IV) prior to being used in machine learning classifiers. DAA models generate clinically relevant patterns of VF defect (Figs. 1 and 4). These patterns have been verified by a glaucoma expert in our team (M.G.) and further used in a machine learning approach to investigate their effectiveness objectively (Figs. 2 and 5). The proposed unsupervised convex representations were obtained by decomposing VFs onto the DAA patterns. We argue that VFs of eyes that progress to glaucoma likely have particular DAA patterns of vision loss that can be used to distinguish them from eyes that did not develop glaucoma. We will show that the unsupervised convex representation provides clues about the forthcoming onset of the disease and is thus effective in improving glaucoma prediction.

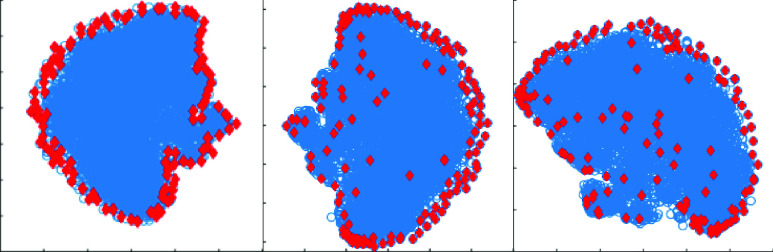

FIGURE 1.

Modelling properties of 128 DAA archetypes (atoms) obtained from (left) the first layer, (middle) the third layer, and (right) the fifth layer of the DAA framework by decomposing VFs. Archetypes (red) and VFs (blue) were projected onto a 2-D space using t-SNE for visualization.

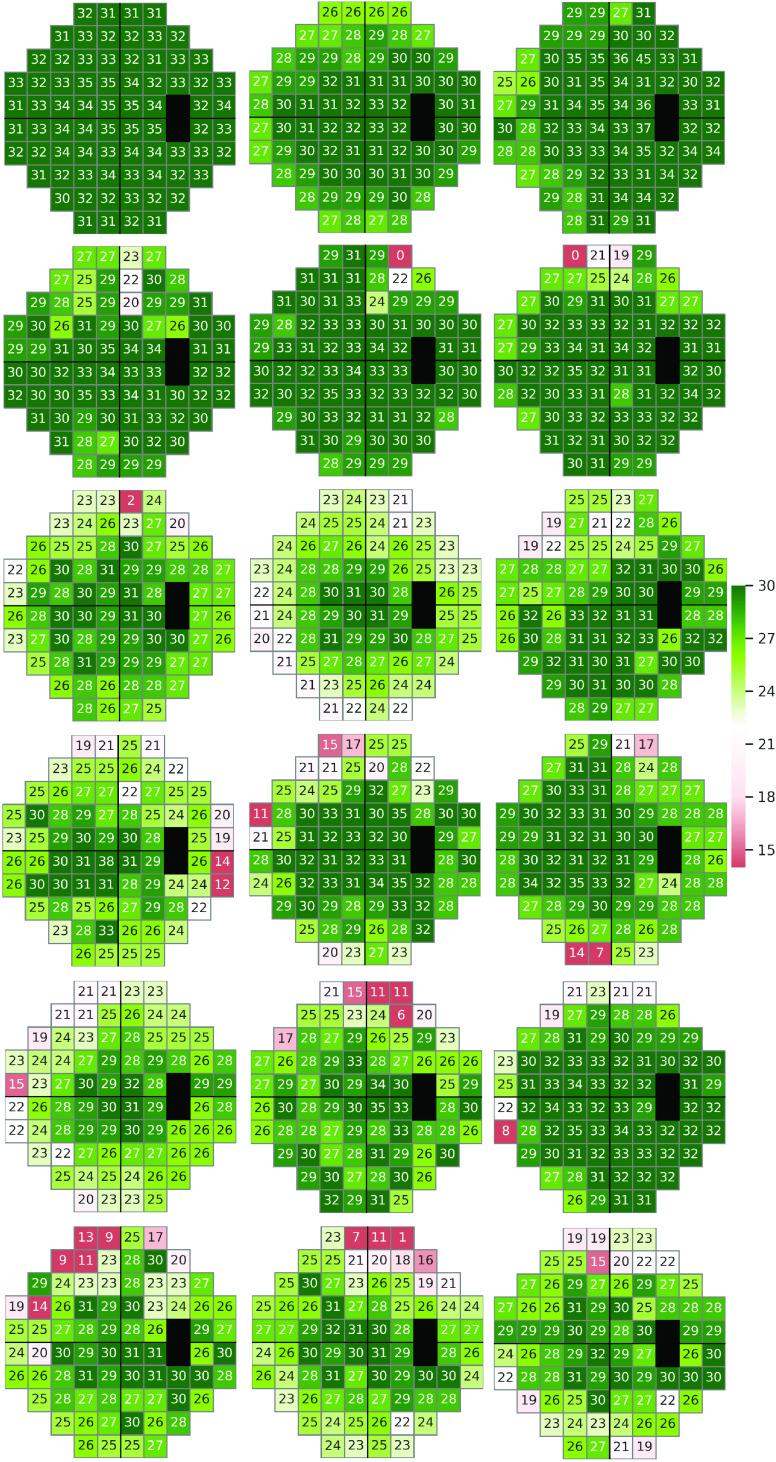

FIGURE 4.

Visual field defect patterns identified by deep archetypal analysis (DAA) of VFs collected at the baseline from the Ocular Hypertension Treatment Study (OHTS) participants.

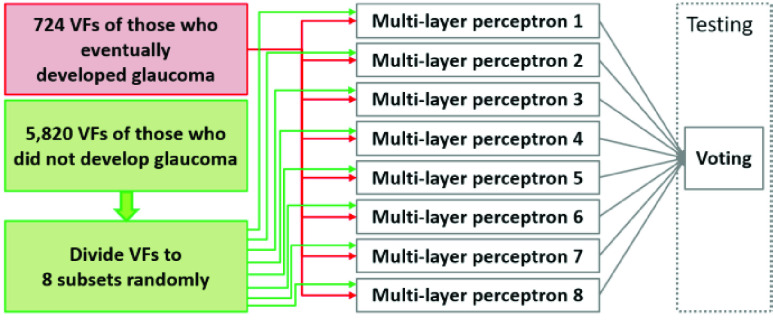

FIGURE 2.

Class-balanced bagging approach used for training the proposed framework.

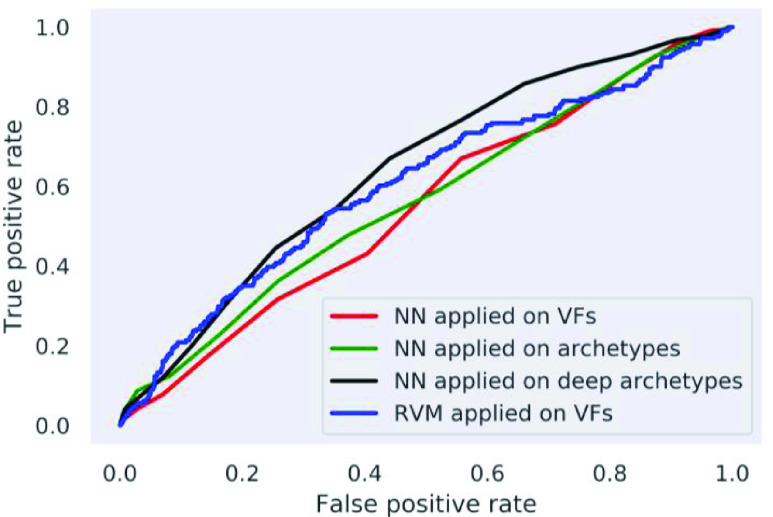

FIGURE 5.

ROC curves obtained using neural networks (NN) applied on original visual fields and on convex representations of visual fields obtained by AA or DAA, and using a relevance vector machine (RVM).

B. Class-Balanced Bagging

To overcome the inherent class imbalance in our dataset, a class-balanced bagging approach is proposed. This approach utilizes dense neural networks as base classifiers, and class-balanced examples are fed to each neural network in the training step. This approach improves performance by increasing sensitivity while maintaining specificity, and avoids over-fitting due to class imbalance.

III. Archetypal and Deep Archetypal Analysis

Archetypal analysis and deep archetypal analysis both perform matrix factorization and provide appropriate means for dictionary-based learning.

A. Archetypal Analysis

Archetypal analysis [1] is basically a matrix factorization method where a matrix $X$ ($X \in \mathbb{R}^{d \times n}$), whose columns contain $d$-dimensional data points, is decomposed as $X \approx DA$. $D$ contains $k$ archetypes that lie on the extremal points or convex hull of the data, and $A$ ($A \in \mathbb{R}^{k \times n}$) is a convex representation matrix. This implies that data points can be represented as a convex combination of archetypes. Similarly, archetypes can also be represented as a convex combination of the individual data points, that is $D = XB$, where $B$ ($B \in \mathbb{R}^{n \times k}$) is a convex representation matrix. Both these conditions restrict archetypes to lie only on the convex hull. Thus, archetypes present a convenient method to capture extremal properties of the input data points.

Appropriate optimization frameworks could be used to obtain archetypes $D$ from the input data points $X$ [21]:

$$\min_{\substack{\alpha_i \in \Delta_k,\; i = 1, \dots, n \\ \beta_j \in \Delta_n,\; j = 1, \dots, k}} \; \lVert X - XBA \rVert_F^2 \tag{1}$$

Here $\alpha_i$ and $\beta_j$ are columns of $A$ and $B$, respectively, and $\Delta_k$ denotes the simplex of non-negative $k$-dimensional vectors whose entries sum to one. Equation 1 is non-convex as both $A$ and $B$ are unknown. Hence, the block-coordinate descent method [21] can be employed to solve this optimization problem.
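To make the factorization concrete, the following is a minimal NumPy sketch of archetypal analysis. It is not the solver of [21]: plain alternating projected-gradient steps are swapped in purely for illustration, and the function names, step sizes, iteration count, and random initialization are all assumptions. The data matrix is written `X`, the archetypes `D = X @ B`, and the two convex weight matrices `A` and `B`.

```python
import numpy as np

def project_columns_to_simplex(V):
    """Euclidean projection of every column of V onto the probability simplex."""
    k, n = V.shape
    U = np.sort(V, axis=0)[::-1]                  # each column sorted in descending order
    css = np.cumsum(U, axis=0) - 1.0
    ranks = np.arange(1, k + 1)[:, None]
    cond = U - css / ranks > 0
    rho = k - 1 - np.argmax(cond[::-1], axis=0)   # last row index where cond holds
    theta = css[rho, np.arange(n)] / (rho + 1)
    return np.maximum(V - theta, 0.0)

def archetypal_analysis(X, k, n_iter=200, seed=0):
    """Approximately minimise ||X - X B A||_F^2 with columns of A and B on the simplex."""
    rng = np.random.default_rng(seed)
    n = X.shape[1]
    B = project_columns_to_simplex(rng.random((n, k)))
    A = project_columns_to_simplex(rng.random((k, n)))
    for _ in range(n_iter):
        D = X @ B
        # A-step: gradient of 0.5 * ||X - D A||_F^2 with respect to A, step 1 / Lipschitz bound
        lip_a = np.linalg.norm(D, 2) ** 2 + 1e-12
        A = project_columns_to_simplex(A - D.T @ (D @ A - X) / lip_a)
        # B-step: gradient of 0.5 * ||X - X B A||_F^2 with respect to B
        residual = X @ B @ A - X
        lip_b = (np.linalg.norm(X, 2) * np.linalg.norm(A, 2)) ** 2 + 1e-12
        B = project_columns_to_simplex(B - X.T @ residual @ A.T / lip_b)
    return X @ B, A, B
```

Because each block update is a projected-gradient step with a step size of one over a Lipschitz bound, the objective does not increase from one iteration to the next, although convergence is typically slower than with a dedicated solver.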

Drawback: AA effectively models the convex hull of the data; however, it is limited in modeling either the average or local characteristics of the data. To overcome these limitations, deep archetypal analysis has recently been proposed by our team and others [5]–[7].

B. Deep Archetypal Analysis

Deep AA is a layered framework that performs multiple AA based factorizations on the input matrix and its subsequent factors. At the first layer of DAA, the input matrix $X$ is decomposed into an archetypal dictionary $D_1$ and convex-sparse representation matrix $A_1$ using equation 1. $A_1$ is given as input to the second layer, and is again factorized using AA to obtain dictionary $D_2$ and convex-sparse representations $A_2$. The overall factorization is: $X \approx D_1 D_2 A_2$, where $D_1 D_2$ is the DAA dictionary computed at the second layer of DAA framework. This process is repeatedly performed until reaching a user-defined depth of factorization. Hence, DAA decomposes $X$ into $L + 1$ factors where $L$ is the number of layers: $X \approx D_1 D_2 \cdots D_L A_L$. The factorization at each layer of DAA framework can be represented as:

$$A_{l-1} \approx D_l A_l, \quad D_l = A_{l-1} B_l, \quad l = 1, \dots, L, \quad A_0 = X \tag{2}$$

Figure 1 exhibits the data modelling capabilities of archetypes (AA atoms) and deep archetypes (DAA atoms). This figure illustrates the 2-d t-distributed stochastic neighbor embedding (t-SNE) [22] representation of 76-d VFs and AA/DAA atoms. As desired, the DAA archetypes (atoms) are modelling extremal as well as average characteristics of the data. This characteristic of DAA atoms can be attributed to the fact that dictionaries obtained at deeper layers ($l > 1$) are different convex combinations of archetypes obtained at the first layer. Since the combination of convex representations is also a convex representation [5], at any $l$'th layer ($l > 1$), a new convex representation matrix is obtained by factorizing the representation matrix of the previous layer, $A_{l-1}$, using AA. Hence, atoms of these deeper dictionaries can lie anywhere on the data-spread including the boundary. A DAA dictionary atom lies near the boundary if an archetype is unilaterally defining this atom in the convex combination. Similarly, if multiple archetypes have significant contribution in defining a deeper dictionary atom, it is bound to lie inside the data-spread. As a result, the DAA atoms systematically divide the data-spread into small groups, thus capturing both local and global characteristics of data.
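The closure property invoked above can be checked numerically. In the sketch below, random column-stochastic matrices stand in for the learned convex factors; the sizes, variable names, and random data are assumptions, not values from this study. With a first-layer dictionary of the form `X @ B1` and a second-layer dictionary of the form `A1 @ B2`, the composed dictionary equals `X @ (B1 @ A1 @ B2)`, and the combined weights stay non-negative with columns summing to one, so every deeper atom is still a convex combination of data points.

```python
import numpy as np

def random_column_stochastic(rows, cols, rng):
    """Random non-negative matrix whose columns each sum to one."""
    M = rng.random((rows, cols))
    return M / M.sum(axis=0, keepdims=True)

rng = np.random.default_rng(0)
n, k1, k2 = 50, 8, 4                 # hypothetical numbers of samples and atoms
X = rng.normal(size=(76, n))         # stand-in for 76-d visual fields

B1 = random_column_stochastic(n, k1, rng)   # layer 1: archetypes as mixtures of data
A1 = random_column_stochastic(k1, n, rng)   # layer 1: data as mixtures of archetypes
B2 = random_column_stochastic(n, k2, rng)   # layer 2: atoms as mixtures of columns of A1

D1 = X @ B1                          # first-layer dictionary
D2 = A1 @ B2                         # second-layer dictionary (k1 x k2)
deep_dictionary = D1 @ D2            # composed dictionary at layer 2
W = B1 @ D2                          # weights of each deep atom over the data points
```

An atom whose column of `D2` is dominated by a single first-layer archetype stays close to that archetype, while a column with several large weights yields an atom in the interior of the data.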

C. Visual Field Representation Using Archetypal and Deep Archetypal Analysis

AA/DAA is appropriate for VF data analysis because of two major reasons: 1) most of the clinically known glaucomatous patterns of VF loss lie on or near the boundary of the cloud of VF data in the initial 76-dimensional space. The convex hull modelling properties of AA/DAA can identify these patterns, and hence, provide a representation that is consistent with clinical knowledge, 2) unlike many other data-dependent dictionary learning methods, AA/DAA does not project the data to any latent space. Therefore, convex representations obtained by AA/DAA are interpretable and could be clinically explained (Fig. 4).

IV. Proposed Framework

In this section, the proposed framework for early or baseline prediction of glaucoma is described. This framework consists of two modules: feature extraction and classification as follows:

A. Feature Extraction Using Archetypal and Deep Archetypal Analysis

This study explores how convex representation of VFs is used to provide a reliable glaucoma prediction system. The features used in the proposed framework were raw VFs, convex representation of VFs through AA, and convex representation of VFs through DAA. Deep AA is applied on training VFs to obtain the DAA dictionary ($D = D_1 D_2 \cdots D_L$), where $D_l$ represents the dictionary obtained at the $l$th layer of the DAA framework. Each atom of this dictionary is considered as a vertex of a high-dimensional simplex, and the remaining training and test VFs are projected on this simplex to obtain convex representations as:

$$\min_{\alpha} \; \lVert x - D\alpha \rVert_2^2 \tag{3}$$

such that $\alpha \ge 0$ and $\sum_{j=1}^{k} \alpha_j = 1$. Here $x$ represents an input VF, $\alpha$ represents its corresponding convex representation and $k$ is the number of atoms in $D$. These convex representations are inherently sparse. We will show that the convex representations highlight the early or slight vision loss (typically due to glaucoma) in a VF that may not be captured by summary parameters of VF such as mean deviation (MD) or pattern standard deviation (PSD) that are generated by clinical instruments and widely used by clinicians.

Since no class-specific information is used to obtain the DAA dictionary (unlike other AA-based classification frameworks), obtaining the convex representations by simplex projection is an unsupervised procedure.
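The simplex projection of a single VF can be sketched as a small constrained least-squares problem. The example below is a minimal illustration that assumes a projected-gradient solver; the solver choice, the function names, and the iteration count are assumptions and not necessarily what was used in this study. Here `D` holds the dictionary atoms as columns and `x` is one VF vector.

```python
import numpy as np

def project_to_simplex(v):
    """Euclidean projection of a vector onto the probability simplex."""
    u = np.sort(v)[::-1]                          # entries in descending order
    css = np.cumsum(u) - 1.0
    ranks = np.arange(1, v.size + 1)
    rho = np.nonzero(u - css / ranks > 0)[0][-1]  # last index satisfying the condition
    return np.maximum(v - css[rho] / (rho + 1), 0.0)

def convex_representation(x, D, n_iter=500):
    """Minimise ||x - D @ alpha||^2 subject to alpha >= 0 and sum(alpha) == 1."""
    k = D.shape[1]
    alpha = np.full(k, 1.0 / k)                   # start at the centre of the simplex
    step = 1.0 / (np.linalg.norm(D, 2) ** 2 + 1e-12)
    for _ in range(n_iter):
        gradient = D.T @ (D @ alpha - x)
        alpha = project_to_simplex(alpha - step * gradient)
    return alpha
```

The simplex constraint tends to drive many weights exactly to zero, which is consistent with the sparsity of the convex representations noted above. No class labels enter the computation.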

B. Class-Balanced Bagging for Classification

Like most datasets in real-world healthcare settings, our dataset was imbalanced, as the number of subjects who developed glaucoma by the end of the study was significantly lower than the number of subjects who did not develop the disease. Most strong classifiers, such as support vector machines and neural networks, can be highly affected by class imbalance, becoming biased towards the class with the higher number of samples and producing a high missed-detection rate. To overcome this issue, we developed a bagging-based approach where each individual classifier, a feed-forward neural network, was fed with class-balanced training examples, as illustrated in Figure 2.

In the training step, the framework divides the samples in the class with the larger number of samples (those who did not develop glaucoma; called the negative group) into smaller non-overlapping subsets such that the number of samples in each subset is almost equal to the number of samples in the positive class (those who eventually developed glaucoma). Each subset of negative examples, together with all the positive examples, was fed to a neural network (a multi-layer perceptron with similar parameters) to learn the discrimination between the two classes. Therefore, each neural network was trained on the same positive examples but a different subset of negative examples. It is worth noting that this framework differs from the traditional bagging approach, where each example has a similar likelihood of being selected for training in any of the eight classifiers. During testing, each neural network was considered as an independent classifier, and a majority voting rule was applied on individual predictions to obtain the final prediction.
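The training and voting scheme described above can be sketched with scikit-learn's `MLPClassifier`. This is a minimal illustration rather than the authors' code: the function names, the rule for deriving the number of subsets from the class ratio, the random shuffling of negatives, and the handling of tied votes are assumptions. The network settings (one hidden layer of 200 units, Adam, learning rate 0.0001) mirror the values reported in Section V-C.

```python
import numpy as np
from sklearn.neural_network import MLPClassifier

def fit_balanced_bagging(X, y, n_subsets=None, seed=0, max_iter=200):
    """Train one MLP per disjoint negative subset, each paired with all positives."""
    rng = np.random.default_rng(seed)
    pos = np.flatnonzero(y == 1)
    neg = rng.permutation(np.flatnonzero(y == 0))
    if n_subsets is None:
        # Assumed rule: make each negative subset roughly as large as the positive class.
        n_subsets = max(1, round(len(neg) / len(pos)))
    models = []
    for chunk in np.array_split(neg, n_subsets):   # non-overlapping negative subsets
        idx = np.concatenate([pos, chunk])
        clf = MLPClassifier(hidden_layer_sizes=(200,), solver="adam",
                            learning_rate_init=1e-4, max_iter=max_iter,
                            random_state=seed)
        models.append(clf.fit(X[idx], y[idx]))
    return models

def predict_majority(models, X):
    """Majority vote over the individual classifiers (ties go to the negative class)."""
    votes = np.stack([m.predict(X) for m in models])   # shape: (n_models, n_samples)
    return (votes.mean(axis=0) > 0.5).astype(int)
```

With an even number of classifiers a tie is possible; resolving it towards the negative class is a choice made here for simplicity, and averaging predicted probabilities would be an alternative that also yields scores for ROC analysis.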

V. Experimentation

A. Datasets

The ocular hypertension treatment study (OHTS) was a prospective, multi-center (across 22 centers in the US) investigation that sought to prevent or delay the onset of VF loss in patients at moderate risk of developing glaucoma [9]. Unlike most other datasets, which collected glaucoma risk factors retrospectively, in the OHTS risk factors were measured at the baseline, prior to the onset of disease, and routinely afterwards. Hence, the OHTS dataset allows the development and testing of a robust glaucoma prediction framework such as the framework that we developed.

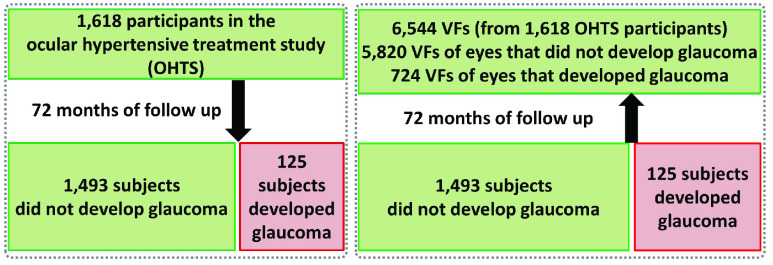

A total of 1,618 subjects with elevated IOP but normal-appearing optic disc (structure) and normal VF (function) were followed for about six years. The demographic parameters, clinical information and VFs were collected every six months. After about 72 months, 125 participants developed glaucoma (187 eyes) while 1,493 did not develop the disease based on VF assessments (Fig. 3). For each participant, at least two or three reliable VF tests (< 33% false positives and false negatives, and < 33% fixation losses, according to the initial OHTS study criteria) were collected by Humphrey (Carl Zeiss Meditec, Dublin, California) full threshold using the SITA Standard 30-2 procedure (covering the central 30 degrees of the VF) at the baseline (the start date of each participant in the clinical trial). Two OHTS-certified readers carefully examined follow-up VFs and, when they identified a VF abnormality, recalled the subject for re-testing to confirm the abnormality. Glaucoma onset was further confirmed by an independent endpoint committee (see [9] for more details).

FIGURE 3.

Subjects and collected visual fields (VFs) in the ocular hypertension treatment study (OHTS). Left: Participants who were selected for the study and the proportion who eventually developed glaucoma. Right: VFs of participants at the baseline (start of the study). The question was whether we can recognize hidden patterns of visual functional loss from baseline VFs that may distinguish eyes that will eventually develop glaucoma from those that will not develop the disease.

In this paper, 7,248 VF tests collected only at the baseline were included for the downstream analysis (Fig. 3). We used full threshold values at each VF test location. We analyzed only VFs that were identified as normal by OHTS-certified VF readers. We then hypothesized that there may be subtle VF defect patterns in the VFs of those eyes that developed glaucoma several years later that were either missed by clinicians or unknown to clinicians. Our aim was thus to identify those subtle VF defect patterns and show their effectiveness in predicting glaucoma well ahead of time.

B. Training and Testing Classifiers and Comparison

Ten-fold stratified cross-validation was utilized for computing the accuracy in terms of the area under the receiver operating characteristic curve (AUC) for neural networks applied on raw VFs, convex representation through AA, and convex representation through DAA, and for a relevance vector machine (RVM) applied on raw VFs. As discussed in Section II, the only machine learning-based method for glaucoma prediction known to the authors was RVM [20], which was compared against the proposed frameworks. Since the dataset used in that method is not publicly available and some of the features utilized in that method are unavailable for all the subjects in the OHTS dataset, the authors have used only the classification method proposed in [20] (the RVM classifier) to provide a fair comparison.

C. Parameter Setting and Performance Metric

All the parameters, such as the number of dictionary atoms (archetypes), the number of layers in DAA, and the number of nodes and layers in the neural network, were selected such that the model provides an optimal average performance on the cross-validation data. More specifically, these parameters were selected based on an extensive grid search to provide the maximum area under the ROC curve (AUC) and the lowest missed-detection rate. The proposed framework utilized 128 dictionary atoms and seven factorization levels in the DAA framework. Each neural network consisted of a single hidden layer with 200 neurons. The Adam optimizer with a fixed learning rate of 0.0001 was used to train each neural network. For class-balanced bagging, the negative class was divided into eight subsets, and hence, the proposed framework was an ensemble of eight different neural networks. A Gaussian kernel with a width of 0.9 was used in the baseline method for training the RVM. Similar to the proposed framework, the parameters of the RVM were also selected using a grid search on the cross-validation data. The parameters of the neural networks were kept the same in all the experiments. The DAA, MLP and AUC performance metrics were implemented in Python using the scikit-learn library. RVM was implemented in Matlab because there was no implementation in Python. Statistical analyses were performed in R.

D. Visual Field Defect Pattern Recognition

We applied DAA on all baseline VFs to identify hidden patterns of (glaucomatous) VF loss. We first identified 128 deep archetypes that were prevalent in the data. We then excluded the archetypes that had significant correlation with other archetypes. Figure 4 shows 18 DAA VF defect patterns that were identified from baseline VF data of the OHTS participants. These patterns were assessed subjectively by a glaucoma expert (M.G.) to identify their clinical relevance. The top-left pattern was identified as a normal pattern while the other patterns were identified as patterns of (early) VF loss. To further assess the effectiveness of the models, the DAA archetypes were fed to an NN classifier and compared against raw VFs and classical AA archetypes (Fig. 5). Although glaucoma experts had not identified any suspicious glaucomatous patterns of VF loss in subjective evaluation of the baseline VF data of the OHTS participants, we suggest these subtle VF defect patterns as possible risk factors (signs) of glaucoma development several years in advance of clinical manifestation of disease signs (Fig. 5).
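The exclusion of highly correlated archetypes can be illustrated with a simple greedy filter. The criterion actually used is not specified in the text, so the Pearson correlation measure, the 0.9 threshold, the keep-first ordering, and the function name below are all assumptions made for illustration.

```python
import numpy as np

def prune_correlated_atoms(D, threshold=0.9):
    """Return indices of atoms (columns of D) kept by a greedy correlation filter."""
    corr = np.corrcoef(D.T)          # pairwise Pearson correlation between atoms
    kept = []
    for j in range(D.shape[1]):
        # Keep atom j only if it is not strongly correlated with any atom kept so far.
        if all(abs(corr[j, i]) < threshold for i in kept):
            kept.append(j)
    return kept

# Tiny usage example: the second atom is an affine copy of the first and is dropped.
a = np.array([1.0, 2.0, 3.0, 4.0])
b = np.array([1.0, -1.0, 1.0, -1.0])
atoms = np.column_stack([a, 2.0 * a + 1.0, b])
selected = prune_correlated_atoms(atoms)
```

The result depends on the order in which atoms are visited; sorting atoms beforehand, for example by how often they carry large weights in the convex representations, would be one way to make the selection less arbitrary.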

VI. Results and Discussion

Out of 7,248 VF tests collected at the baseline, 6,544 VFs were labeled as both “reliable” and “normal”, in which 724 VFs corresponded to eyes that eventually developed glaucoma (over approximately six years of follow up) and 5,820 corresponded to eyes that did not develop glaucoma over the course of OHTS study (Fig. 3). The mean age (standard deviation; SD) of subjects in the normal and (converted to) glaucoma groups were 55.7 (9.6) and 58.8 (9.0) years, respectively (p < 0.001). Approximately 42% of subjects in the normal group were male while 56% of the subjects in the (converted to) glaucoma group were male (p < 0.001). Mean IOP of eyes in the normal and (converted to) glaucoma groups were 24.8 (2.9) and 26.1 (3.3), respectively (p < 0.001).

Visual field testing through standard automated perimetry (SAP) remains a gold standard for glaucoma assessment. Therefore, recognition of (glaucomatous) VF defect patterns is critical for diagnosis, severity identification, and therapy adjustments based on the type of defect [23]. However, manual classification of glaucoma through VFs requires significant clinical training and more importantly is labor intensive and highly subjective with limited agreement even among glaucoma specialists [24], [25]. Thus, any approach that can automatically identify early patterns of VF loss can impact glaucoma management.

Several researchers, including us, have used unsupervised learning to discover (glaucomatous) patterns of VF loss [12], [13], [26]–[29]. We have extensively used Gaussian mixture modeling (GMM) to discover patterns of VF loss and to identify glaucoma progression along those GMM-identified patterns [26]–[29]. Other teams have used classical AA for such goals [12], [13]. However, here we introduced a deep archetypal approach that identifies patterns of VF loss that are clinically more relevant (subjective evaluation) and patterns that may serve as signs of early glaucoma development (Fig. 4). We used 128 DAA patterns as input features to the classifier for predicting glaucoma. In fact, we investigated other numbers of patterns and obtained the optimum accuracy with 128 patterns. While these DAA patterns maximized the accuracy of glaucoma prediction, overlap among these patterns was significant when assessed visually. Therefore, a follow-up study is warranted to provide a set of mutually exclusive DAA patterns for glaucoma assessment. To provide a fair comparison, we used 128 classical AA patterns, the same number as used in the DAA assessment. We used the implementation of Chen et al. for AA [21] and used the implementations in [5], [7] for DAA analysis.

While these patterns were not obvious to glaucoma experts before, DAA analysis identified these patterns as (possible) signs of future glaucoma development, which was confirmed further by objective analysis (Fig. 5, black curve with AUC of 0.71).

To avoid any bias due to multiple VF tests from same eyes of subjects, we accounted for correlation between tests and eyes of same subjects using a nested structure in generalized estimating equation (GEE) [30]. To account for multiple VFs from same eyes in training and testing of machine learning models, we selected the training and testing folds based on subjects rather than eyes or VFs.

Figure 5 illustrates the ROC curves of the proposed framework compared against RVM [20], the classical AA approach, and raw VFs. The AUC of applying NN on the DAA representation of VFs was 0.71, while the AUCs of applying NN on the classical AA representation of VFs and on raw VFs were 0.61 and 0.55, respectively. The AUC of RVM [20] was 0.64. In fact, DAA provided a representation of VFs with significantly higher AUC than classical AA and original VFs in predicting the future development of glaucoma on both cross-validation and held-out datasets (p < 0.001). This highlights that the deep convex representation, obtained by simplex projection, is more discriminative than the original input VFs as well as AA and classical RVM.

At first, it may seem that an AUC of 0.71 is low compared to several approaches that identify glaucoma with higher accuracy. While this may seem a valid argument from a statistical perspective, from a clinical perspective the story is different. Predicting glaucoma from baseline VFs approximately five years prior to the disease development is a very challenging task. In fact, the relatively easier task is automatic diagnosis, in which clinicians have already observed clinical signs of the disease; in prediction, however, there are no clinical signs and one needs to identify hidden preclinical patterns of the disease.

This study was conducted on VF tests with the Humphrey 30-2 pattern. Studies using VFs with the Humphrey 24-2 or central 10-2 patterns may shed further light on the effectiveness of DAA in predicting glaucoma. Moreover, VF testing is subjective and time-consuming and shows a significant degree of variability. Future studies could therefore investigate the role of structural data such as fundus photographs or optical coherence tomography (OCT) data in predicting glaucoma prior to disease onset.

VII. Conclusion

In this paper, a deep archetypal analysis framework was developed to predict glaucoma several years prior to disease manifestation. The framework uses simplex projections to obtain unsupervised convex representations of VFs. We showed that these convex representations are clinically meaningful and more discriminative than raw VFs or other classical VF analysis approaches. To overcome the class imbalance, the proposed framework uses a class-balanced bagging approach. As a proof of concept, the OHTS glaucoma clinical trial dataset was used to assess the effectiveness of the approach for early glaucoma prediction.
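The idea behind class-balanced bagging can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in this work: the sampling scheme (bootstrapping the minority class and drawing an equal number of majority-class samples with replacement), the function names, and the toy labels are all hypothetical, and the base learner (a neural network in the proposed framework) is represented only as a callable that returns a score.

```python
import random


def balanced_bags(labels, n_bags=10, seed=0):
    """Return index lists, each holding equal numbers of both classes."""
    rng = random.Random(seed)
    pos = [i for i, y in enumerate(labels) if y == 1]
    neg = [i for i, y in enumerate(labels) if y == 0]
    minority, majority = (pos, neg) if len(pos) <= len(neg) else (neg, pos)
    bags = []
    for _ in range(n_bags):
        # Bootstrap the minority class, then draw the same number of
        # majority-class samples so that every bag is balanced.
        minority_draw = [rng.choice(minority) for _ in minority]
        majority_draw = [rng.choice(majority) for _ in minority]
        bags.append(minority_draw + majority_draw)
    return bags


def bagged_score(models, x):
    """Average the scores of the base learners trained on the bags."""
    return sum(model(x) for model in models) / len(models)


# Hypothetical imbalanced labels: 3 converters and 12 non-converters.
labels = [1] * 3 + [0] * 12
bags = balanced_bags(labels, n_bags=5)
# Every bag contains 3 positive and 3 negative samples.
for bag in bags:
    assert len(bag) == 6 and sum(labels[i] for i in bag) == 3
```

One base learner would be trained on each bag, and `bagged_score` would then combine their outputs; averaging over many balanced bags lets all majority-class samples contribute without any single learner being dominated by that class.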

Experimental results indicated that combining deep archetypal representation with class-balanced bagging improved the prediction of glaucoma from baseline measurements several years prior to disease development. Future work with independent datasets is required to verify the findings of this study.

Acknowledgment

The authors thank Dianna Johnson for her assistance. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Funding Statement

This work was supported in part by the National Institutes of Health (NIH) under Grant EY030142.

References

- [1]. Cutler A. and Breiman L., “Archetypal analysis,” Technometrics, vol. 36, no. 4, pp. 338–347, Nov. 1994.

- [2]. Bauckhage C. and Manshaei K., “Kernel archetypal analysis for clustering web search frequency time series,” in Proc. 22nd Int. Conf. Pattern Recognit., Aug. 2014, pp. 1544–1549.

- [3]. Bauckhage C. and Thurau C., “Making archetypal analysis practical,” in Pattern Recognition. DAGM (Lecture Notes in Computer Science), vol. 5748, Denzler J., Notni G., and Süße H., Eds. Berlin, Germany: Springer, 2009.

- [4]. Seth S. and Eugster M. J., “Archetypal analysis for nominal observations,” IEEE Trans. Pattern. Anal. Mach. Intell., vol. 38, pp. 849–861, May 2016.

- [5]. Keller S. M., Samarin M., Wieser M., and Roth V., “Deep archetypal analysis,” in Pattern Recognition. DAGM GCPR (Lecture Notes in Computer Science), vol. 11824, Fink G., Frintrop S., and Jiang X., Eds. Cham, Switzerland: Springer, 2019.

- [6]. Thakur A. and Rajan P., “Deep archetypal analysis based intermediate matching kernel for bioacoustic classification,” IEEE J. Sel. Topics Signal Process., vol. 13, no. 2, pp. 298–309, May 2019, doi: 10.1109/JSTSP.2019.2906465.

- [7]. Thakur A. and Rajan P., “Deep archetypal analysis based intermediate matching kernel for bioacoustic classification,” IEEE J. Sel. Topics Signal Process., vol. 13, no. 2, pp. 298–309, May 2019.

- [8]. Quigley H. A., “Glaucoma,” Lancet, vol. 377, pp. 1367–1377, Apr. 2011.

- [9]. Gordon M. O. et al., “The ocular hypertension treatment study: Baseline factors that predict the onset of primary open-angle glaucoma,” Arch. Ophthalmol., vol. 120, pp. 714–720, Jun. 2002.

- [10]. Salvetat M. L., Zeppieri M., Tosoni C., Brusini P., and Medscape, “Baseline factors predicting the risk of conversion from ocular hypertension to primary open-angle glaucoma during a 10-year follow-up,” Eye, vol. 30, pp. 784–795, Jun. 2016.

- [11]. Weinreb R. N., Aung T., and Medeiros F. A., “The pathophysiology and treatment of glaucoma: A review,” J. Amer. Med. Assoc., vol. 311, pp. 1901–1911, May 2014.

- [12]. Elze T., Pasquale L. R., Shen L. Q., Chen T. C., Wiggs J. L., and Bex P. J., “Patterns of functional vision loss in glaucoma determined with archetypal analysis,” J. Roy. Soc. Interface, vol. 12, no. 103, Feb. 2015, Art. no. 20141118.

- [13]. Wang M. et al., “An artificial intelligence approach to detect visual field progression in glaucoma based on spatial pattern analysis,” Investigative Ophthalmol. Vis. Sci., vol. 60, pp. 365–375, Jan. 2019.

- [14]. Johnson C. A., Sample P. A., Cioffi G. A., Liebmann J. R., and Weinreb R. N., “Structure and function evaluation (SAFE): I. Criteria for glaucomatous visual field loss using standard automated perimetry (SAP) and short wavelength automated perimetry (SWAP),” Amer. J. Ophthalmol., vol. 134, pp. 177–185, Aug. 2002.

- [15]. Xiangyu C., Yanwu X., Kee W. D. W., Yin W. T., and Jiang L., “Glaucoma detection based on deep convolutional neural network,” in Proc. Conf. IEEE Eng. Med. Biol. Soc., Aug. 2015, pp. 715–718.

- [16]. Liao W., Zou B., Zhao R., Chen Y., He Z., and Zhou M., “Clinical interpretable deep learning model for glaucoma diagnosis,” IEEE J. Biomed. Health Informat., vol. 24, no. 5, pp. 1405–1412, May 2020.

- [17]. Bojikian K. D., Lee C. S., and Lee A. Y., “Finding glaucoma in color fundus photographs using deep learning,” JAMA Ophthalmol., vol. 137, no. 12, p. 1361, Dec. 2019.

- [18]. Ahn J. M., Kim S., Ahn K.-S., Cho S.-H., Lee K. B., and Kim U. S., “A deep learning model for the detection of both advanced and early glaucoma using fundus photography,” PLoS ONE, vol. 13, no. 11, Nov. 2018, Art. no. e0207982.

- [19]. Sehi M., Bhardwaj N., Chung Y. S., Greenfield D. S., and Advanced Imaging for Glaucoma Study Group, “Evaluation of baseline structural factors for predicting glaucomatous visual-field progression using optical coherence tomography, scanning laser polarimetry and confocal scanning laser ophthalmoscopy,” Eye, vol. 26, pp. 1527–1535, Dec. 2012.

- [20]. Bowd C. et al., “Predicting glaucomatous progression in glaucoma suspect eyes using relevance vector machine classifiers for combined structural and functional measurements,” Investigative Ophthalmol. Vis. Sci., vol. 53, pp. 2382–2389, Apr. 2012.

- [21]. Chen Y., Mairal J., and Harchaoui Z., “Fast and robust archetypal analysis for representation learning,” presented at the IEEE Conf. Comput. Vis. Pattern Recognit., 2014.

- [22]. van der Maaten L. and Hinton G., “Visualizing data using t-SNE,” J. Mach. Learn. Res., vol. 9, pp. 2579–2605, Nov. 2008.

- [23]. Brusini P. and Johnson C. A., “Staging functional damage in glaucoma: Review of different classification methods,” Surv. Ophthalmol., vol. 52, pp. 156–179, Mar-Apr 2007.

- [24]. Lichter P. R., “Variability of expert observers in evaluating the optic disc,” Trans. Amer. Ophthalmol. Soc., vol. 74, pp. 532–572, Jan. 1976.

- [25]. Jampel H. D. et al., “Agreement among glaucoma specialists in assessing progressive disc changes from photographs in open-angle glaucoma patients,” Amer. J. Ophthalmol., vol. 147, pp. 39–44.e1, Jan. 2009.

- [26]. Bowd C. et al., “Glaucomatous patterns in frequency doubling technology (FDT) perimetry data identified by unsupervised machine learning classifiers,” PLoS ONE, vol. 9, no. 1, Jan. 2014, Art. no. e85941.

- [27]. Yousefi S. et al., “Learning from data: Recognizing glaucomatous defect patterns and detecting progression from visual field measurements,” IEEE Trans. Biomed. Eng., vol. 61, pp. 2112–2124, Jul. 2014.

- [28]. Yousefi S., Goldbaum M. H., Zangwill L. M., Medeiros F. A., and Bowd C., “Recognizing patterns of visual field loss using unsupervised machine learning,” Proc. SPIE, vol. 9034, Mar. 2014, Art. no. 90342M.

- [29]. Yousefi S. et al., “Unsupervised Gaussian mixture-model with expectation maximization for detecting glaucomatous progression in standard automated perimetry visual fields,” Transl. Vis. Sci. Technol., vol. 5, no. 3, p. 2, May 2016.

- [30]. Zeger S. L., Liang K. Y., and Albert P. S., “Models for longitudinal data: A generalized estimating equation approach,” Biometrics, vol. 44, pp. 1049–1060, Dec. 1988.