Abstract

As early as infancy, caregivers’ facial expressions shape children’s behaviors, help them regulate their emotions, and encourage or dissuade their interpersonal agency. In childhood and adolescence, proficiencies in producing and decoding facial expressions promote social competence, whereas deficiencies characterize several forms of psychopathology. To date, however, studying facial expressions has been hampered by the labor-intensive, time-consuming nature of human coding. We describe a partial solution: automated facial expression coding (AFEC), which combines computer vision and machine learning to code facial expressions in real time. Although AFEC cannot capture the full complexity of human emotion, it codes positive affect, negative affect, and arousal—core Research Domain Criteria constructs—as accurately as humans, and it characterizes emotion dysregulation with greater specificity than other objective measures such as autonomic responding. We provide an example in which we use AFEC to evaluate emotion dynamics in mother–daughter dyads engaged in conflict. Among other findings, AFEC (a) shows convergent validity with a validated human coding scheme, (b) distinguishes among risk groups, and (c) detects developmental increases in positive dyadic affect correspondence as teen daughters age. Although more research is needed to realize the full potential of AFEC, findings demonstrate its current utility in research on emotion dysregulation.

Keywords: arousal, emotion dysregulation, facial expression, negative valence system, positive valence system

According to functionalist accounts, human emotions motivate evolutionarily adaptive behaviors, help us shape our environments to achieve desired ends, and serve important communicative purposes in social settings (see Beauchaine, 2015; Beauchaine & Haines, in press; Campos, Mumme, Kermoian, & Campos, 1994; Thompson, 1990). Displays of emotion, including facial expressions, gestures, and vocalizations, facilitate adaptive group cohesion by conveying our intentions, motivations, and subjective feeling states to others, which allows us to better understand and predict one another’s behaviors (Darwin, 1872). Within social groups, expressions of emotion exhibit contagion, whereby individuals shift their attitudes, behaviors, and affective valence toward conformity with other group members and group norms (Smith & Mackie, 2015). Negative emotional contagion results in less cooperation and more conflict, whereas positive emotional contagion has the opposite effects (e.g., Barsade, 2002).

Given their importance to adaptive human function, it is of little surprise that facial expressions of emotion are used extensively to study socioemotional development and social interactions. Abnormalities in both production and decoding of facial expressions hinder socioemotional competence (Izard et al., 2001; Troster & Brambring, 1992). Moreover, environmental adversities including abuse and maltreatment confer biases in decoding facial expressions of anger and sadness, with adverse effects on social cognition and socioemotional function across development (Pollak, Cicchetti, Hornung, & Reed, 2000; Pollak & Sinha, 2002). Of note, treatments that improve children’s facial expression recognition yield corresponding increases in social competence (Domitrovich, Cortes, & Greenberg, 2007; Golan et al., 2009).

Despite the important roles that production and decoding of facial expressions play in healthy socioemotional development, coding facial expressions is a time-consuming, labor-intensive process. Training a coding team takes months of regular meetings, between which hours are spent coding practice sets for reliability. Multiple coders must be trained in case one or more does not achieve reliability. After training, comprehensive coding of facial actions requires up to an hour to annotate a single minute of video, and a meaningful percentage of videos must be coded by two people to compute reliability (Bartlett, Hager, Ekman, & Sejnowski, 1999; Ekman & Friesen, 1978). In attempts to circumvent manual coding, researchers often rely on less labor-intensive markers of emotional experience and expression, such as autonomic psychophysiology, but these methods provide information at a much narrower bandwidth and are open to multiple interpretations (see, e.g., Beauchaine & Webb, 2017; Zisner & Beauchaine, 2016).

In efforts to address the labor intensity of manual coding, psychologists and computer scientists have devised automated methods of capturing facial expressions of emotion (e.g., Dys & Malti, 2016; Gadea, Aliño, Espert, & Salvador, 2015; Haines, Southward, Cheavens, Beauchaine, & Ahn, 2019; Sikka et al., 2015). In this paper, we describe innovations in computer vision and machine learning that offer a partial solution to the impediments of manual coding. Automated facial expression coding (AFEC) involves two steps: (a) using computer vision to extract facial expression information from pictures or video streams, and (b) applying machine learning to map extracted facial expression information to prototype emotion ratings made by expert human judges (Cohn, 2010; Cohn & De la Torre, 2014). In their current state of development, AFEC models cannot capture the full complexity of human emotion, and therefore cannot be used to replace human coders in all studies. In other contexts, however, they code facial expressions with accuracy similar to that of highly skilled human coders. Although rarely used by developmental psychopathologists to date, AFEC models can evaluate and map development of specific emotional processes, including core Research Domain Criteria constructs of positive valence, negative valence, and arousal/regulation. Our objectives in writing this paper are to (a) describe AFEC models, including their historical validation vis-à-vis gold-standard human coding; (b) provide a critical analysis of what AFEC models can and cannot do in contemporary developmental psychopathology and emotion dysregulation research; and (c) present example analyses in which we compare AFEC with human ratings using a different coding scheme to evaluate emotion expression and emotion dysregulation in dyadic conflict discussions between mothers and their depressed, self-injuring, and typically developing daughters.

When AFEC models can be applied, they offer several advantages over human coding. Because they are mathematical, they always assign the same code(s) to an image or video, which eliminates an important source of error: intercoder disagreement. AFEC models can also leverage computer processing speed to code facial expressions in real time. Before describing AFEC models, we first review historical perspectives on emotion, including how facial expressions relate to socioemotional development.

Facial Expressions of Emotion: Historical Perspectives

In The Expression of the Emotions in Man and Animals, Darwin (1872) noted the importance of facial expressions for development and communication of emotions. In this foundational text, Darwin expanded Duchenne’s (1990/1862) taxonomy of emotional expression by suggesting that facial expressions (a) map onto specific emotional states, which motivate adaptive behaviors; and (b) serve to regulate social interactions by allowing us to influence and understand others’ behaviors and intentions. From this functionalist view, abnormalities in production of facial expressions result in ineffective conveyance of emotional information, whereas abnormalities in recognition of facial expressions result in misattribution of others’ motivations, intentions, and emotional states. The abilities to accurately produce and recognize facial expressions of emotion are therefore tightly linked to healthy socioemotional development (Ekman & Oster, 1979).

Since Darwin’s work, multiple theories of emotion have guided facial expression research. Broadly speaking, these can be divided into discrete versus dimensional views.1 Discrete emotion theories postulate that distinct patterns of facial expressions represent separable emotional states that motivate adaptive responses to environmental cues. In support of this perspective, people across cultures display similar facial expressions in specific contexts and motivational states (Chevalier-Skolnikoff, 1973). Such findings and others led discrete emotion theorists to develop the concept of basic emotions. Cross-cultural studies consistently yield six universal (basic) facial expressions of emotion, including happiness, sadness, anger, fear, disgust, and surprise (Ekman, 1992, 1993; Ekman et al., 1987). Of note, these expressions are also observed in many nonhuman primate species (Preuschoft & van Hooff, 1995).

Although the existence of basic, universally recognized facial expressions is still a topic of debate (Jack, Garrod, Yu, Caldara, & Schyns, 2012), the idea that facial expressions represent discrete emotional states remains strong (Ekman & Cordaro, 2011). Many foundational studies on development of both facial expression recognition (e.g., Camras, Grow, & Ribordy, 1983; Pollak et al., 2000; Widen & Russell, 2003) and production (e.g., Buck, 1975; Field, Woodson, Greenberg, & Cohen, 1982; Odom & Lemond, 1972; Yarczower, Kilbride, & Hill, 1979) assume that emotions are discrete, and emphasis on conceptualizing emotions as discrete continues in the literature today.

In contrast to discrete emotion theory, dimensional perspectives assume that emotions, as well as facial expressions, are best represented as points along multiple latent continua, including dimensions such as valence and arousal. Dimensional theories gained traction in the mid-20th century, when Schlosberg (1941) showed that people categorize facial expressions along continua, analogous to the way we sort hues on a color wheel despite a continuous gradient of color in nature. Schlosberg (1952) showed that a large portion of variance in how people categorize facial expressions is captured by a circular rating space comprising two bipolar dimensions: pleasantness–unpleasantness and attention–rejection. Later, Schlosberg (1954) added a third dimension to capture arousal. Many other dimensional models of emotion have emerged since, including Russell’s circumplex model of valence and arousal (Russell, 1980; Russell & Bullock, 1985) and Watson and Tellegen’s (1985) variation of the circumplex, which orthogonalizes positive and negative affect intensity. Across studies, facial expressions map most reliably onto valence and arousal dimensions (Smith & Ellsworth, 1985). Compared with research grounded in discrete emotion theory, far fewer studies take a dimensional approach to investigate development of emotion (e.g., Luterek, Orsillo, & Marx, 2005; Yoshimura, Sato, Uono, & Toichi, 2014). In the next section, we summarize development of facial expressions and their recognition.

Facial Expressions of Emotion and Development of Social Communication

Production of facial expressions

Infants exhibit facial expressions of emotion at birth. Immediately thereafter, their facial expressions are modified through social learning and other reinforcement mechanisms. By age 1 month, infants produce emotionally congruent facial expressions in response to a variety of social and nonsocial incentives, such as smiling at others who smile at them, and smiling when presented with primary reinforcers. These expressions are readily and accurately interpreted by adults (Izard, Huebner, Risser, McGinnes, & Dougherty, 1980). Children who are blind at birth exhibit the same facial expressions as others, but become less expressive than typically developing peers as they age, indicating that development of emotion expression is determined in part by social mechanisms (Goodenough, 1932; Thompson, 1941). In very early infancy, infants do not have the capacity to regulate their spontaneous facial expressions (Izard & Malatesta, 1987).

By age 3–5 years, children can voluntarily produce facial expressions more accurately than they can recognize them, and the degree to which they accurately produce facial expressions predicts how willing their peers are to play with them (Field et al., 1982). Environmental stressors such as physical abuse during this age period influence how accurately children produce certain facial expressions (Camras et al., 1988). As children are exposed to increasingly complex social situations during the transition from preschool to elementary school, they learn to downregulate spontaneous facial expressions (Buck, 1977). Children who show more downregulation (better volitional control) enjoy higher social status than their peers (Zivin, 1977). Voluntary control over facial expressions continues to improve into early adolescence, when accuracy reaches a natural peak that can further improve with training (Ekman, Roper, & Hager, 1980). Adolescents who do not exhibit age-appropriate regulation of facial expressions have more internalizing and externalizing symptoms than their peers (Keltner, Moffitt, & Stouthamer-Loeber, 1995). Furthermore, male externalizing children and adolescents show poor correspondence between facial expressions of emotion and physiological reactivity to others’ emotions (Marsh, Beauchaine, & Williams, 2008).

As this discussion suggests, the ability to volitionally up- and downregulate facial expressions in response to contextual demands (termed expressive flexibility) is associated with a number of functional and social outcomes (Coifman & Almahmoud, 2017). Young adults who flexibly regulate their facial expressions show better adjustment to environmental stressors such as traumatic life events and sexual abuse, and experience fewer symptoms of psychopathology (Bonanno et al., 2007; Bonanno, Papa, Lalande, Westphal, & Coifman, 2004; Chen, Chen, & Bonanno, 2018; Westphal, Seivert, & Bonanno, 2010). In addition, college freshmen who show more positive facial expressions in response to peer rejection report less psychological distress than their peers (Coifman, Flynn, & Pinto, 2016). Given shifts from spontaneous to volitional control of facial expressions from infancy to adolescence, expressive flexibility may be a useful indicator of emotion regulation capacities, socioemotional competence, and resilience (Beckes & Edwards, 2018; Bonanno & Burton, 2013).

Recognition of facial expressions

The ability to accurately recognize facial expressions of emotion develops at a slower pace than the ability to accurately produce them (Field & Walden, 1982). Nevertheless, infants as young as 12 days of age can imitate adults’ facial expressions (Meltzoff & Moore, 1977). By age 5–7 months, infants begin to discriminate between happy, sad, fearful, and surprised expressions, yet they do so based on facial features (e.g., actions involving the eyes) rather than holistic facial expressions (Nelson, 1987). By age 1 year, infants extract meaning from facial expressions and modify their behaviors accordingly. For example, studies using the visual cliff paradigm show that infants reference their mothers’ facial expressions before crossing what appears to be a deep cliff. When mothers exhibit happy or fearful facial expressions, infants either cross or decide against crossing the apparent cliff, respectively (Sorce, Emde, Campos, & Klinnert, 1985).

As infants enter more complex social environments, they learn to associate specific facial expressions with specific emotions. For example, during intense emotional experiences such as crying and laughing, the orbicularis oculi (the muscles surrounding the eyes) spontaneously contract to protect the eyes from increased pressure as blood rushes to the face; this contraction is known as the Duchenne marker (Duchenne, 1990/1862). Because volitional control over these muscles is difficult even for adults, the Duchenne marker can be an involuntary indicator of positive or negative affect intensity (Messinger, Mattson, Mahoor, & Cohn, 2012). Young children learn to associate the Duchenne marker with more intense emotion by age 3–4 years (Song, Over, & Carpenter, 2016). This suggests that meaning attributed to the Duchenne marker is learned. In addition, preschoolers from low-socioeconomic-status, high-crime neighborhoods can recognize facial expressions of fear more accurately than their nondisadvantaged peers (Smith & Walden, 1998). Thus, environments affect the rate at which children learn to associate specific facial expressions with discrete emotions.

Despite being able to categorize facial expressions of emotion, preschoolers often fail to account for context when interpreting facial expressions (Hoffner & Badzinski, 1989). However, as children begin to regulate their facial expressions between kindergarten and middle school, they learn to integrate facial expressions with contextual cues and prior experience, generating more accurate inferences about others’ emotional states (Hoffner & Badzinski, 1989). Some research suggests that children who fail to learn accurate representations of facial expressions during this period show lower academic performance (Izard et al., 2001), consistent with accounts of shared neural representation of “cognition” and “emotion” in the brain (see Pessoa, 2008).

Of note, associations children learn between facial expressions and emotion are sensitive to environmental moderation. Children with histories of neglect or abuse are less accurate in recognizing basic emotions from facial expressions than their nonabused peers, and the degree of inaccuracy is related to their social competence (Camras et al., 1983, 1988; Pollak et al., 2000). Furthermore, physically abused children are biased toward interpreting ambiguous facial expressions as angry (Pollak et al., 2000), and require less perceptual information to accurately recognize anger from facial expressions (Pollak & Sinha, 2002).

During the transition from late childhood to adolescence, facial expression recognition abilities solidify and are slowly refined into adulthood (Thomas, De Bellis, Graham, & LaBar, 2007). Because there is little qualitative change in recognition abilities from childhood to adolescence, a history of impaired facial expression recognition can strongly affect adolescents’ social and behavioral function. For example, adolescent boys with early onset conduct disorder show deficits in recognizing facial expressions of happiness, anger, and disgust, and boys with psychopathic traits show more impairment in recognizing fear, sadness, and surprise (Blair & Coles, 2000; Fairchild, Van Goozen, Calder, Stollery, & Goodyer, 2009). Adolescent girls with borderline personality disorder require more perceptual information than their typically developing peers to accurately judge facial expressions of happiness and anger (Robin et al., 2012). Among typically developing children, facial expressions are used to reinforce and punish social behaviors; inaccurate facial expression recognition during the transition from childhood to adolescence can therefore lead to repetition of socially maladaptive behaviors that are not corrected over time (Blair, 1995).

As this abbreviated review shows, accurate decoding of and effective volitional control over facial expressions are integral to healthy socioemotional development. Studying facial expressions, including their dynamics in social interactions, has therefore long been of interest to developmental psychopathologists. In the next section, we discuss historical approaches to facial coding, before turning to advantages and disadvantages of AFEC models.

Historical Approaches to Facial Expression Coding

Manual coding

Traditional methods of facial coding fall into two broad categories (Cohn & Ekman, 2005): (a) message judgment approaches (message based), and (b) measure of sign vehicles approaches (sign based). Message-based approaches ascribe meaning to facial expressions (e.g., “is this an angry facial expression?”), whereas sign-based approaches describe observable facial actions that comprise an expression (e.g., “are they furrowing their brow?”). Because we make inferences about the meaning of facial expressions based on facial actions, the distinction between message- and sign-based approaches is blurry rather than sharp. Nevertheless, the approaches differ in what they require observers to do (Cohn & Ekman, 2005). Whereas message-based coding requires observers to make holistic inferences about emotional states from observable facial expressions, sign-based coding involves only description, not interpretation.

Message-based approaches are used most often in psychology. They are more flexible and easier to implement than sign-based approaches and allow researchers to make inferences about specific emotional states. Furthermore, most research on development of facial expressions leverages message-based protocols to link facial expressions to emotion, so message-based protocols play a crucial role in our understanding of emotional development (see the preceding section for details). Nevertheless, message-based protocols have a major drawback. They are often created and implemented by individual labs, so there are no widely agreed-upon methods to objectively assign emotion ratings to facial expressions. For example, some labs code judgments of expressed valence intensity on Likert scales (e.g., Bonanno et al., 2004), whereas others code discrete emotions (e.g., Gross & Levenson, 1993). Without a standard approach, it is difficult to compare findings across sites. As noted by the National Advisory Mental Health Council Workgroup on Tasks and Measures for Research Domain Criteria (2016), this is a major impediment to better understanding emotional processes. Even within labs, message-based protocols require human coders to make subjective judgments on specific emotions that facial expressions may represent, so there is no guarantee that different coding teams who are trained on the same protocol will use the same rules to make judgments.

In contrast to the lab-specific nature of message-based coding, there are a small number of highly standardized sign-based protocols (Cohn & Ekman, 2005; Ekman & Rosenberg, 2005). Among these, the Facial Action Coding System (FACS; Ekman & Friesen, 1978) is most widely used. FACS comprises approximately 33 anatomically based action units (AUs), which together can describe virtually any facial expression. For example, AU 12 indicates an oblique rotation of the lip corners (e.g., during a smile), and AU 6 denotes the Duchenne marker (i.e., crow’s feet, contracting muscles around eyes). FACS was developed from a basic emotion perspective, so relations between AUs and basic facial expressions of emotion are well understood. For example, facial expressions of happiness typically involve AUs 6, 12, and sometimes 25 (parting of the lips), and people perceive smiles as more genuine when these AUs are activated simultaneously (Korb, With, Niedenthal, Kaiser, & Grandjean, 2014). Although FACS is standardized and therefore more generalizable than message-based protocols, FACS training takes upward of 100 hr, and just minutes of video require multiple hours to code (Cohn & Ekman, 2005). For these reasons, FACS coding can be cost-prohibitive, and is difficult to apply to dynamic systems, such as fast-moving dyadic exchanges during which participants influence one another’s emotional expressions (cf. Crowell et al., 2017).
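The AU combinations just described can be illustrated with a small rule-based sketch. It is a hypothetical, deliberately simplified example rather than a validated coding rule: it assumes each video frame has already been coded as a set of active FACS AUs, it uses only the AUs named above (6, 12, and 25), and the labels "Duchenne smile" and "non-Duchenne smile" are illustrative names chosen here.

```python
from typing import Iterable, List, Set


def classify_smile(active_aus: Set[int]) -> str:
    """Label one frame from its set of active FACS AUs (hypothetical rule).

    AU 12 = lip-corner pull (smile); AU 6 = Duchenne marker (crow's feet).
    AU 25 (parted lips) may co-occur but does not change the label.
    """
    if 12 not in active_aus:
        return "no smile"
    if 6 in active_aus:
        return "Duchenne smile"      # AUs 6 and 12 active together
    return "non-Duchenne smile"      # AU 12 without AU 6


def label_frames(frames: Iterable[Set[int]]) -> List[str]:
    """Apply the rule to every frame in a coded video."""
    return [classify_smile(aus) for aus in frames]


frames = [{12}, {6, 12}, {6, 12, 25}, {4}, set()]
print(label_frames(frames))
```

Real FACS coding also records AU intensity and timing, so a rule this coarse should be read only as an illustration of how AU combinations map onto an expression category.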

Automated coding

Automated facial expression coding (AFEC) offers a partial solution to the labor intensity of human coding (Cohn, 2010; Fasel & Luettin, 2003). AFEC combines image recognition with machine learning—an approach with solid foundations in other disciplines. Journals are now dedicated to human face and gesture recognition, and worldwide competitions to develop more accurate AFEC models are held each year (e.g., Valstar et al., 2016). Furthermore, there are many standardized data sets that AFEC researchers use to develop and test new emotion-detection models. This is consistent with broader efforts in the field to standardize tasks and measures used to assess emotion across labs (National Advisory Mental Health Council Workgroup on Tasks and Measures for Research Domain Criteria, 2016), and has accelerated the pace of AFEC model development over the past few years (see Krumhuber, Skora, Küster, & Fou, 2016). Because the literature on AFEC is large and technically complex, we provide a conceptual overview of AFEC model development, and we selectively cite research to emphasize progress made on discrete and dimensional AFEC models over the past two decades.

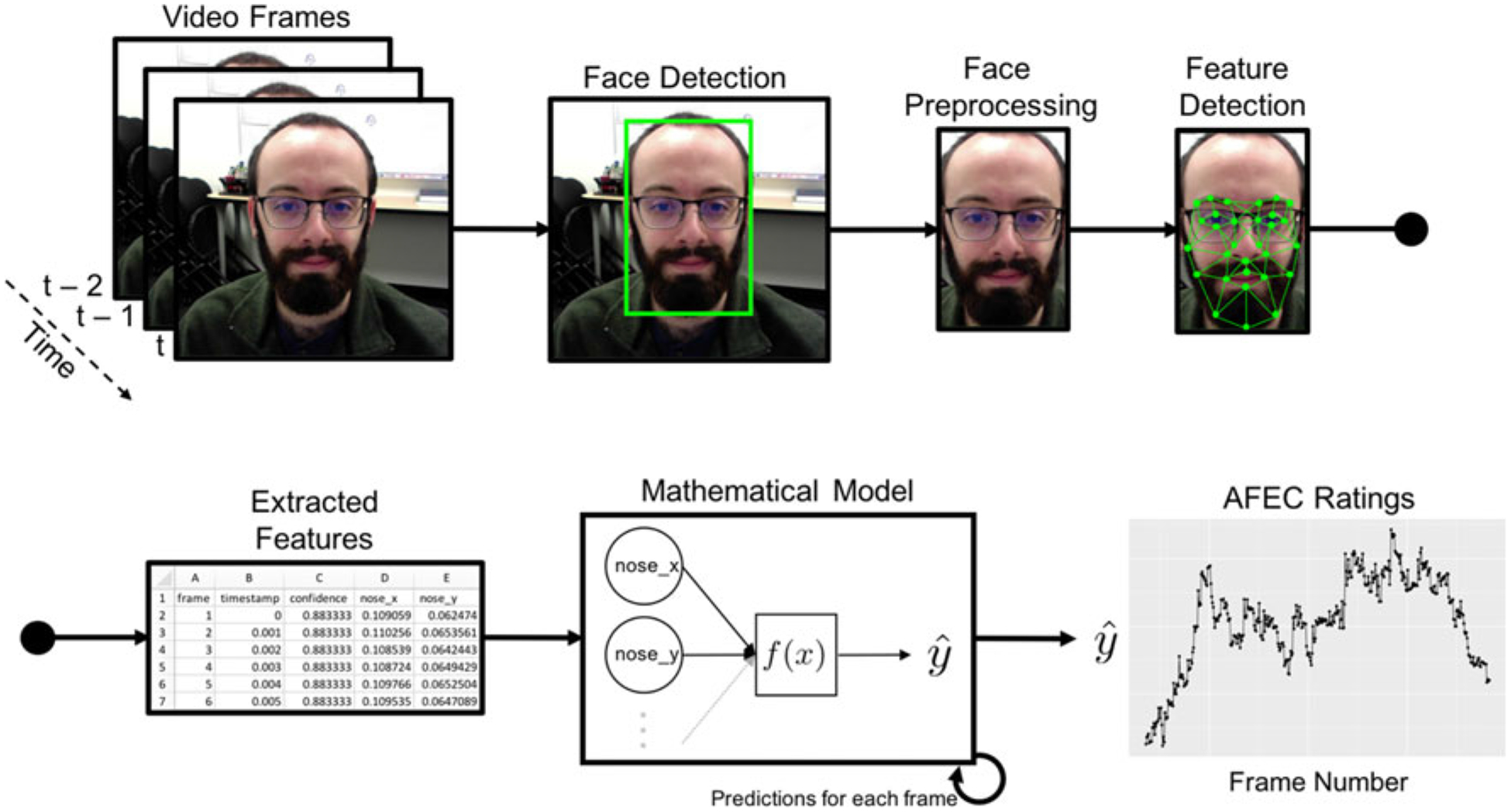

To extract facial expression information from images and videos (i.e., time series of images), AFEC models typically proceed by (a) detecting a face, (b) extracting pixel values from the face and translating them into features using a variety of different methods, and (c) determining, based on detected features, whether the face is making a particular movement or displaying a specific expression. It is important to note, however, that processes used to detect facial features vary widely across applications. For example, some studies use Gabor filtering methods, whereas others use deep learning, which learns relevant features automatically after extensive training. In this article, we use the latter approach. Full description of these processes, which are illustrated in Figure 1, is beyond the scope of this paper. Interested readers are referred to recent reviews (Cohn, 2010; Zhao & Zhang, 2016).

Figure 1.

Steps in AFEC modeling. For each video frame (or image), AFEC uses computer vision technology to detect a face, which is then preprocessed (cropped). Computer vision then identifies a set of features on the preprocessed face (here represented by an Active Appearance Model that matches a statistical representation of a face to the detected face), which are tabulated and entered into a mathematical model. This model is developed to detect some aspect of emotion from a previously coded set of data (e.g., presence of a discrete emotion, valence intensity, FACS AUs, etc.), and outputs these emotion ratings based on extracted facial features of the new data. Ratings are output for each frame, resulting in a rich time series of emotional expressions. Of note, AFEC models can perform these steps in real time. In some cases, there can be a two-step mathematical model (e.g., FACS detection and then emotion detection given FACS AUs).
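The per-frame steps in Figure 1 can be sketched as a toy pipeline. This is not the model used in this article: `detect_face` is a hypothetical stand-in that assumes the face fills the frame, the "features" are simply mean pixel intensities in a coarse grid (a placeholder for Gabor, Active Appearance Model, or deep learning features), and the linear weights are assumed to have come from training on human-coded data.

```python
from typing import List, Sequence, Tuple

Frame = List[List[float]]          # grayscale pixel values, rows x columns
Box = Tuple[int, int, int, int]    # (top, left, height, width)


def detect_face(frame: Frame) -> Box:
    """Hypothetical stand-in for a face detector: assumes the face fills the frame."""
    return (0, 0, len(frame), len(frame[0]))


def crop(frame: Frame, box: Box) -> Frame:
    """Preprocessing step: keep only the pixels inside the face box."""
    top, left, h, w = box
    return [row[left:left + w] for row in frame[top:top + h]]


def extract_features(face: Frame, grid: int = 2) -> List[float]:
    """Toy features: mean intensity of each cell in a grid x grid partition."""
    h, w = len(face), len(face[0])
    feats = []
    for gi in range(grid):
        for gj in range(grid):
            cell = [face[r][c]
                    for r in range(gi * h // grid, (gi + 1) * h // grid)
                    for c in range(gj * w // grid, (gj + 1) * w // grid)]
            feats.append(sum(cell) / len(cell))
    return feats


def rate(features: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Linear model mapping features to one emotion rating (weights assumed pre-trained)."""
    return bias + sum(wt * f for wt, f in zip(weights, features))


def code_video(frames: Sequence[Frame], weights: Sequence[float], bias: float) -> List[float]:
    """Run detect -> crop -> extract -> rate on every frame: one rating per frame."""
    return [rate(extract_features(crop(f, detect_face(f))), weights, bias)
            for f in frames]


# Two hypothetical 4 x 4 frames: a blank one, and one with a bright lower half.
blank = [[0.0] * 4 for _ in range(4)]
lower = [[0.0] * 4 for _ in range(2)] + [[1.0] * 4 for _ in range(2)]
print(code_video([blank, lower], weights=[0.0, 0.0, 0.5, 0.5], bias=0.0))
```

The output is a list with one rating per frame, which is the time series the caption describes; swapping in a real detector, feature extractor, or model would leave this frame-by-frame structure unchanged.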

To generate valid ratings of emotion using machine learning applications of AFEC, researchers either (a) first have human coders annotate a data set using either message- or sign-based approaches, or (b) use a standardized reference data set. Next, a machine learning algorithm builds a model that predicts human coders’ ratings given features extracted using the steps listed above. As with all machine learning approaches, AFEC is validated by checking how accurately it codes facial expressions on data that the model was not fit to. Therefore, the training data used to develop AFEC models must be considered when evaluating how well the models generalize. For example, a model trained only on Caucasian participants may not generalize to all racial groups.
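The train-then-validate logic can be sketched with a deliberately simple classifier. We swap in a nearest-centroid classifier (not the deep learning model used in this article) and use hypothetical two-dimensional features (e.g., a lip-corner feature and a brow feature) paired with human-coded labels; the point is only that accuracy is computed on frames the model was never fit to.

```python
from math import dist
from typing import Dict, List, Sequence, Tuple

Example = Tuple[List[float], str]  # (feature vector, human-coded label)


def train_centroids(examples: Sequence[Example]) -> Dict[str, List[float]]:
    """Fit a nearest-centroid classifier: the mean feature vector for each label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for feats, label in examples:
        acc = sums.setdefault(label, [0.0] * len(feats))
        for i, f in enumerate(feats):
            acc[i] += f
        counts[label] = counts.get(label, 0) + 1
    return {lab: [s / counts[lab] for s in acc] for lab, acc in sums.items()}


def predict(centroids: Dict[str, List[float]], feats: List[float]) -> str:
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda lab: dist(centroids[lab], feats))


def held_out_accuracy(centroids: Dict[str, List[float]], test: Sequence[Example]) -> float:
    """Proportion of held-out frames whose predicted label matches the human code."""
    hits = sum(predict(centroids, feats) == label for feats, label in test)
    return hits / len(test)


# Hypothetical human-coded frames: [lip-corner feature, brow feature].
train = [([0.9, 0.1], "happy"), ([0.8, 0.2], "happy"),
         ([0.1, 0.9], "angry"), ([0.2, 0.8], "angry")]
test = [([0.7, 0.3], "happy"), ([0.3, 0.6], "angry"), ([0.55, 0.5], "angry")]

centroids = train_centroids(train)
print(held_out_accuracy(centroids, test))  # 2 of 3 held-out frames correct
```

In practice, held-out data are usually separated by participant rather than by frame, because adjacent frames from the same person are nearly identical and would otherwise inflate validation accuracy; the same logic applies to the demographic composition of training data noted above.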

After validation, AFEC models can be shared across sites to code new data sets in real time. By detecting objective facial movements (AUs or other features), which are translated to affect ratings by mathematical models, AFEC combines characteristics of both message-based and sign-based approaches to facial coding. Next, we review progress made on AFEC models that detect discrete and dimensional features of facial expressions, and we describe commercial and open-source software that may be useful to interested researchers.

Discrete perspective

Most early research on AFEC focused on classifying facial expressions using six basic emotions, including happiness, sadness, surprise, anger, fear, and disgust (neutral was sometimes added). Given adequate image quality, AFEC models have long been able to classify basic emotions with up to 90% accuracy (see Fasel & Luettin, 2003).2 More contemporary models classify basic emotions with up to 97% accuracy (Lopes, de Aguiar, De Souza, & Oliveira-Santos, 2017). With six basic emotions, such accuracy rates far exceed chance, and often exceed accuracy levels of human coders (Isaacowitz et al., 2007).

Although less work addresses AFEC coding of complex emotions (e.g., shame, boredom, interest, pride, etc.), available research indicates far lower accuracy. Humans can interpret an inordinately large number of facial expressions (Parrott, 2000). Some validated data sets used in emotion research contain over 400 distinct facial expressions and related mental states (e.g., doubt, hunger, reasoning, etc.; see Golan, Baron-Cohen, & Hill, 2006). However, in their current state of development, AFEC models cannot accommodate this level of expressive complexity (Adams & Robinson, 2015). AFEC models show at best modest success in concurrently classifying up to 18 distinct emotions from different combinations of facial expressions (47% accuracy; chance = 6%; Adams & Robinson, 2015).
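The chance levels cited above follow from simple arithmetic: with k equally likely categories, random guessing is correct 1/k of the time. The sketch below computes those values and, as an added illustration, the majority-class baseline that applies when categories are not equally frequent; the unbalanced label counts are hypothetical.

```python
from collections import Counter
from typing import Sequence


def uniform_chance(n_classes: int) -> float:
    """Expected accuracy of random guessing when all classes are equally likely."""
    return 1.0 / n_classes


def majority_baseline(labels: Sequence[str]) -> float:
    """Accuracy of always guessing the most frequent class; with unequal
    base rates this can be far above 1 / n_classes."""
    counts = Counter(labels)
    return counts.most_common(1)[0][1] / len(labels)


print(f"{uniform_chance(6):.1%}")    # six basic emotions -> 16.7%
print(f"{uniform_chance(18):.1%}")   # 18 emotions, as in Adams & Robinson (2015) -> 5.6%

# Hypothetical, unbalanced set of 100 coded frames.
labels = (["happy"] * 70 + ["sad"] * 10 + ["angry"] * 10
          + ["fear"] * 4 + ["disgust"] * 3 + ["surprise"] * 3)
print(f"{majority_baseline(labels):.1%}")  # -> 70.0%
```

The last line shows why raw accuracy can mislead when one expression dominates a data set, a problem taken up in the discussion of F1 scores that follows.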

Many evaluations of AFEC models compare their performance to human codings of FACS AUs (Ekman & Friesen, 1978). Because basic emotion coding relies in part on detection of specific combinations of FACS AUs, AFEC models have progressed in conjunction with FACS studies of basic emotions. Early AFEC models could detect 16–17 AUs with high accuracy (93%–95%; Kotsia & Pitas, 2007; Tian, Kanade, & Cohn, 2001), but data sets used to create and test these models were relatively small and therefore of limited generalizability.

In more recent literature, AFEC developers have stopped reporting simple accuracy rates, which do not correct for different base rates of emotions, and instead report true positives, false positives, true negatives, false negatives, and combinations of these metrics (see Footnote 1). The most widely used metric for comparing FACS AU detection across different AFEC models is the F1 score, which is a single number that takes into account precision and recall.3 Like Cohen’s kappa, F1 is useful when certain AUs are expressed more often than others. For example, a person might respond to a particular video stimulus with AU 12 (associated with happiness) 90% of the time and AU 9 (associated with disgust) 5% of the time. If an AFEC model correctly classifies 95% of AU 12 expressions but only 50% of AU 9 expressions, overall accuracy will be high, even though the model misses half of all AU 9 expressions, because the base rate of AU 12 is higher than the base rate of AU 9.
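The gap between accuracy and F1 in this example can be made concrete with hypothetical frame counts for a single AU 9 detector. Assume 1,000 frames, AU 9 present in 50 of them (5%), the detector catching 25 of those (50% recall), and 10 false alarms; these counts are invented for illustration. The sketch uses the standard definitions of precision, recall, F1, and Cohen’s kappa.

```python
from typing import Dict


def detection_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Frame-level agreement between one binary AU detector and a human coder.

    Assumes at least one human-coded positive and one detected positive,
    so that precision and recall are defined.
    """
    n = tp + fp + fn + tn
    accuracy = (tp + tn) / n
    precision = tp / (tp + fp)   # of frames flagged, proportion truly showing the AU
    recall = tp / (tp + fn)      # of frames showing the AU, proportion flagged
    f1 = 2 * precision * recall / (precision + recall)
    # Cohen's kappa: observed agreement corrected for agreement expected
    # from the coder's and the detector's base rates.
    p_both_yes = ((tp + fn) / n) * ((tp + fp) / n)
    p_both_no = ((fp + tn) / n) * ((fn + tn) / n)
    expected = p_both_yes + p_both_no
    kappa = (accuracy - expected) / (1 - expected)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1, "kappa": kappa}


# Hypothetical AU 9 counts: 50 positive frames out of 1,000.
metrics = detection_metrics(tp=25, fp=10, fn=25, tn=940)
print({name: round(value, 3) for name, value in metrics.items()})
```

Here accuracy is about .97 because most frames contain no AU 9, whereas F1 (about .59) and kappa (about .57) reveal that half of the AU 9 expressions were missed.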

This change in accuracy reporting makes it challenging to quantify progress in automated FACS coding. Nevertheless, clear advances have been made. Validation studies of AFEC software (details below) indicate accuracy rates for contemporary models ranging from 71% to 97%, with F1 scores ranging from .27 to .90, depending on specific AUs (Lewinski, den Uyl, & Butler, 2014). Large ranges in F1 scores for AU detection within and across studies suggest that researchers should exercise caution when interpreting single AFEC-detected AUs, and should always report either F1 or kappa. As might be surmised from our previous discussion, (a) higher F1 values are obtained when fewer AUs are used to detect basic as opposed to complex emotions, and (b) much more research is needed before AFEC models can replace human FACS coders.

Dimensional perspective

Dimensional AFEC models, an example of which we provide below, have also gained attention over the past decade (Gunes, Schuller, Pantic, & Cowie, 2011). Dimensional coding requires AFEC models to estimate intensities of facial expression dimensions (e.g., positive affect intensity on a scale of 0 to 10). Because dimensional AFEC models output continuous ratings, their accuracy is measured by correlations between their intensity ratings and those averaged across multiple human coders who manually annotate valence, arousal, and other affective dimensions. Early dimensional AFEC models showed reasonable correspondence with human coders, yielding correlations ranging from .57 to .58 for valence and .58 to .62 for arousal (Kanluan, Grimm, & Kroschel, 2008). More recent models yield correlations ranging from .60 to .71 for valence, .89 for positive affect intensity, and .76 for negative affect intensity (Haines et al., 2018; Mollahosseini, Hasani, & Mahoor, 2017; Nicolaou, Gunes, & Pantic, 2011).
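The validation procedure for dimensional models, averaging ratings across human coders and then correlating the consensus with AFEC output, can be sketched in a few lines. All ratings below are hypothetical positive-affect intensities on the 0-to-10 scale mentioned above; the sketch computes a frame-level Pearson correlation, which is one common choice rather than necessarily the statistic used in every cited study.

```python
from math import sqrt
from statistics import mean
from typing import List, Sequence


def average_coders(ratings_by_coder: Sequence[Sequence[float]]) -> List[float]:
    """Per-frame consensus rating: the mean across coders (one list per coder)."""
    return [mean(frame) for frame in zip(*ratings_by_coder)]


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equal-length rating series."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Hypothetical positive-affect ratings (0-10) for the same five frames.
coders = [
    [2.0, 4.0, 6.0, 8.0, 3.0],   # coder 1
    [3.0, 5.0, 6.0, 7.0, 2.0],   # coder 2
    [1.0, 4.0, 7.0, 9.0, 4.0],   # coder 3
]
afec = [2.5, 4.0, 6.5, 7.5, 3.5]  # hypothetical AFEC output

consensus = average_coders(coders)
print(round(pearson_r(afec, consensus), 2))
```

Averaging across coders before correlating reduces idiosyncratic coder noise, so the resulting correlation reflects agreement with the coders’ shared judgment rather than with any single coder.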

Of note, some models are validated on video recordings of seated laboratory participants who react to emotionally evocative images (Haines et al., 2018), whereas others are validated on images collected “in the wild” (e.g., video found on the internet; Mollahosseini et al., 2017). In-the-wild recordings present challenges given limited quality control over facial position, image resolution, frame rates, and so on. A related issue concerns the quality of images collected during social interactions, in which participants are free to move about. We are currently developing the capacity to code facial expressions in real time using head-mounted cameras in the lab. Although slightly intrusive, these cameras can collect precise, high-definition video in contexts that are much more ecologically valid than those permitted by many other popular methods, such as EEG and magnetic resonance imaging.

The upper bounds of correlations listed above may represent ceilings for AFEC models of arousal based on facial expressions (e.g., Mollahosseini et al., 2017; Nicolaou et al., 2011). However, further increments in accuracy may be possible by including vocal features (e.g., pitch or tone) in future AFEC models (Gunes & Pantic, 2010). Such findings have prompted AFEC researchers to include a variety of “response channels” in dimensional models of emotion, including vocalizations and various psychophysiological metrics (Gunes & Pantic, 2010; Ringeval et al., 2015). At present, more research is needed before models leveraging multiple response channels are used routinely by developmentalists.

Applications of AFEC in Psychology

The literature reviewed in preceding sections indicates that AFEC models cannot, at least in their current state of development, capture the full complexity of human emotion as evaluated by highly trained FACS coders. In contrast, AFEC models are reasonably proficient at coding basic emotions, affective valence, and affect intensity. Accordingly, studies that evaluate developmental processes and socioemotional correlates of basic emotions, including valence and intensity, might benefit considerably from using AFEC, in terms of both time saved and the ability to model dynamic social interactions in real time.

To date, however, AFEC has enjoyed limited use in developmental research, although this has begun to change. Its first application in developmental psychology was a study showing that subtle changes in affective synchrony (simultaneously expressed emotions) among mother–infant dyads can be captured by AFEC (Messinger, Mahoor, Chow, & Cohn, 2009). Follow-up studies used AFEC to detect limited numbers of FACS AUs among infants. In one such study, AU 6 (the Duchenne marker) was associated with increases in both positive and negative affect intensity (Mattson, Cohn, Mahoor, Gangi, & Messinger, 2013; Messinger et al., 2012). AFEC has also been used to determine how infants as young as 13 months communicate emotion through facial expressions (Hammal, Cohn, Heike, & Speltz, 2015). Other applications include detecting changes in children’s emotional expressions while lying (Gadea et al., 2015), while engaging in moral transgressions (Dys & Malti, 2016), and while experiencing pain (Sikka et al., 2015). Researchers have also used AFEC to develop interactive emotion-learning environments for children with autism (e.g., Gordon, Pierce, Bartlett, & Tanaka, 2014). Among adults, AFEC can differentiate between active versus remitted depression (Dibeklioğlu, Hammal, Yang, & Cohn, 2015; Girard & Cohn, 2015), genuine versus feigned pain (Bartlett, Littlewort, Frank, & Lee, 2014), and facial expression dynamics between those with versus without schizophrenia (Hamm, Kohler, Gur, & Verma, 2011).

AFEC software available to psychologists

In the past decade, several commercial and open-source AFEC software products have emerged. However, not all are validated, so we limit discussion to those described in published validation studies. Both FACET and AFFDEX are modules of the iMotions platform (iMotions, 2018), which accommodates data from multiple modalities (facial expressions, EMG, ECG, eye tracking). FACET is a commercial successor to the Computer Expression Recognition Toolbox (Littlewort, Whitehill, Wu, & Fasel, 2011). AFFDEX is based on the AFFDEX algorithm developed by Affectiva Inc. (https://www.affectiva.com/; e.g., McDuff, El Kaliouby, Kassam, & Picard, 2010). FaceReader is offered by Noldus (Noldus, 2018), which, like iMotions, provides tools for integrating data across modalities. Automated coding tools including FACET and FaceReader have been used in prior studies to automate AU coding in children as young as age 5 years (Dys & Malti, 2016; Gadea et al., 2015; Sikka et al., 2015).

FACET, AFFDEX, and FaceReader are commercial software systems designed for easy use with minimal technical expertise. All can process facial expressions in real time from a webcam or from stored video data, and all can detect basic emotions as well as 20 FACS AUs. Of these, FaceReader is the only commercial product that can detect nonbasic emotions and AU intensity, as opposed to AU presence alone. OpenFace is the only free, open-source AFEC software among those with published validation studies (Baltrusaitis, Robinson, & Morency, 2016). Unlike the commercial products, OpenFace detects only AUs, and its graphical interface cannot be used to create experiments or to integrate facial expression analysis with other data modalities. All of these products allow users to export data in standard file formats (e.g., .csv), which can then be loaded into any statistical program. We refer interested readers to validation studies for further details (Baltrusaitis et al., 2016; Lewinski et al., 2014; Stöckli, Schulte-Mecklenbeck, Borer, & Samson, 2017). In the next section, we present a purposefully straightforward application of AFEC that shows its promise in research on emotion dysregulation. More complex analyses and technical descriptions can be found in recent articles (e.g., Haines et al., 2019).
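Because exported files are plain tables, a few lines of code suffice to summarize them. The Python sketch below averages AU intensity columns in a CSV export. The column names (`frame`, `timestamp`, `AU06_r`, `AU12_r`), the regular expression used to find AU columns, and the function name are assumptions loosely modeled on OpenFace-style output; headers differ across products and versions, so the pattern should be checked against an actual export.

```python
import csv
import io
import re
from statistics import fmean

# Assumed naming for AU intensity columns (e.g., "AU12_r"); adjust the pattern
# to whatever headers your software actually exports.
AU_INTENSITY = re.compile(r"^AU\d+_r$")


def mean_au_intensities(csv_text: str) -> dict:
    """Average each AU intensity column over all frames in an exported CSV."""
    reader = csv.DictReader(io.StringIO(csv_text))
    # Some exporters pad headers with spaces, so strip keys before matching.
    rows = [{key.strip(): value for key, value in row.items()} for row in reader]
    if not rows:
        return {}
    au_columns = [col for col in rows[0] if AU_INTENSITY.match(col)]
    # float() tolerates the leading spaces that padded exports leave in values.
    return {col: fmean(float(row[col]) for row in rows) for col in au_columns}


# Hypothetical two-frame export.
export = """frame, timestamp, AU06_r, AU12_r
1, 0.000, 0.5, 1.0
2, 0.033, 1.5, 2.0
"""
print(mean_au_intensities(export))  # {'AU06_r': 1.0, 'AU12_r': 1.5}
```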

An AFEC Example: Emotion Dysregulation During Mother–Daughter Conflict

To demonstrate the potential utility of AFEC for emotion dysregulation research, we apply an AFEC model to data collected as part of a previous study (Crowell et al., 2012, 2014, 2017). This study compared emotional valence and intensity in mother–daughter dyads during conflict discussions. Dyads included mothers with typically developing adolescent girls, mothers with self-injuring adolescent girls, and mothers with depressed but non-self-injuring adolescent girls. These data are well suited for AFEC analysis given (a) previous annotation with a different coding scheme focused on basic rather than complex emotional processes (to assess convergent validity), (b) a broad developmental age range (to assess maturational effects), (c) dynamic dyadic expressions of emotion (to evaluate social-emotional dynamics), (d) the ability to compare such dynamics and their development across typically developing and clinical groups, and (e) a laboratory setting in which facial expressions were recorded with little occlusion. Before presenting AFEC results, we describe the sample and summarize the original coding scheme and previously reported results. Of note, these data have not previously been coded with AFEC.

In total, 76 adolescent girls, ages 13–18 years, participated with their mothers. Among adolescents, 26 were recruited based on significant self-injury, 24 were recruited for depression without self-injury, and 24 were typically developing controls. Given space constraints, we refer readers to previous publications for more specific details about the sample (Crowell et al., 2012, 2014, 2017). For purposes of the current analyses, videos of conflict discussions between adolescents and their mothers were available for 20 self-injuring, 15 depressed, and 13 control participants (N = 48). Videos were unavailable for a sizable subset of participants because several DVDs became corrupted over time and because some recordings were of insufficient quality for AFEC analysis. Given the current state of AFEC, we coded the intensity of both positive and negative affect, as well as correspondence between mother and daughter expressions of affect. To evaluate convergent validity, we compared AFEC ratings to expressions of positive and negative affect previously coded using a well-validated coding scheme: the Family and Peer Process Code (Stubbs, Crosby, Forgatch, & Capaldi, 1998).

Of note, it is well known that emotional lability characterizes dyadic interactions in families of girls who self-harm (e.g., Crowell et al., 2013, 2014, 2017; Di Pierro, Sarno, Perego, Gallucci, & Madeddu, 2012; Hipwell et al., 2008; Muehlenkamp, Kerr, Bradley, & Adams Larsen, 2010), and that facial expressions play an important role in regulating dyadic social interactions (see extended discussion above).

Procedure

Mothers and adolescent girls engaged in a 10-min conflict discussion while being monitored with audio and video equipment. Before the discussion, mothers and daughters completed the Issues Checklist (Prinz, Foster, Kent, & O’Leary, 1979), a 44-item questionnaire covering a variety of common conflict topics (e.g., privacy). Each topic is rated for frequency (1 = never to 5 = very often) and intensity (0 = calm to 40 = intense). A trained research assistant chose the topic that best represented disagreement between mother and daughter, based on both dyad members’ frequency and intensity ratings, provided that the topic did not exceed an intensity rating of 20. After a discussion topic was selected, the research assistant read the following script:

For the next 10 minutes, I would like you to discuss a topic that you both rated as a frequent area of disagreement. I will tell you the topic and then I will exit the room to restart the recording equipment. I will knock on the wall when it is time for you to start the discussion, and I will knock again in 10 minutes when the discussion is over. It is important for you to keep the conversation going for the full 10 minutes. Your discussion topic is [e.g., keeping the bedroom clean].

Coding

Initially, videos were coded manually by highly trained research assistants. For the present analyses, we applied an AFEC model. Both are described below. Of note, our objective is not to compare the coding systems directly, but rather to evaluate convergent validity. Some description of each is necessary to illustrate efficiency advantages of AFEC models.

Manual coding

Expressions of emotion for both dyad members were coded manually using the Family and Peer Process Code (FPPC; Stubbs et al., 1998). Full procedures used for FPPC coding are described in detail elsewhere (Crowell et al., 2012, 2013, 2017). Briefly, FPPC is a microanalytic coding scheme in which ratings are assigned to all verbal utterances made by dyad members. FPPC codes capture content (e.g., negative interpersonal), affect (e.g., distress and neutral), and direction (mother to daughter vs. daughter to mother) of utterances throughout the conversation. New codes are assigned whenever there is a transition in speaker, listener, verbal content, or affect. FPPC comprises 25 content codes for verbal behavior and 6 affect codes (3 negative, 1 neutral, and 2 positive), resulting in 75 combinations. Facial expressions of emotion are integral to the affect codes.

Painstaking and meticulous training is required for FPPC coders. Two research assistants coded the videos. Both received 15 hr of training per week for 3 months (180 hr total). Training included (a) learning the manual and codes, (b) coding and discussing practice tapes, and (c) coding criterion tapes without discussion to assess reliability. Coders ultimately achieved good reliability for both content (κ = .76) and affect (κ = .69). In addition, coders were required to achieve 10-key typing speed of 8,000 keystrokes per hour at 95% accuracy.

Following annotation, FPPC codes across the entire discussion were reduced to a single number for each dyad member from 0 (highly positive utterances) to 9 (coercive or attacking utterances). These scores were subcategorized as follows: high-level aversives (ratings 7–9), intermediate-level aversives (ratings 5–6), and low-level aversives (ratings 3–5). Next, transition probabilities were computed between mother and daughter aversive escalations as described by Crowell et al. (2013). These reflect probabilities of a dyad member escalating aversive behaviors (e.g., mother intermediate-level aversives followed by daughter high-level aversives = daughter escalation).
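The exact transition-probability procedure is described by Crowell et al. (2013); the Python sketch below illustrates only the general idea under a simplified, hypothetical definition. It assumes each aversive turn has already been assigned to a low, intermediate, or high category (non-aversive turns are omitted), and it counts an escalation whenever one dyad member’s turn is immediately followed by the other member’s turn at a higher aversive level. The category ranks and function name are our own.

```python
# Hypothetical ordinal ranks for the aversive categories named in the text.
LEVEL_RANK = {"low": 1, "intermediate": 2, "high": 3}


def escalation_probability(turns, responder):
    """Share of the responder's replies that exceed the preceding aversive level.

    turns: (speaker, level) tuples in temporal order, aversive turns only.
    An "opportunity" is any turn by the other dyad member that is immediately
    followed by a responder turn; an escalation is an opportunity in which the
    responder's level outranks the preceding level.
    """
    opportunities = escalations = 0
    for (prev_speaker, prev_level), (speaker, level) in zip(turns, turns[1:]):
        if prev_speaker != responder and speaker == responder:
            opportunities += 1
            if LEVEL_RANK[level] > LEVEL_RANK[prev_level]:
                escalations += 1
    return escalations / opportunities if opportunities else float("nan")


turns = [
    ("mother", "intermediate"), ("daughter", "high"),
    ("mother", "low"), ("daughter", "low"),
    ("mother", "intermediate"), ("daughter", "intermediate"),
]
print(escalation_probability(turns, "daughter"))  # 1 escalation in 3 opportunities
print(escalation_probability(turns, "mother"))    # 1 escalation in 2 opportunities
```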

Automated coding

We applied an AFEC model developed in our previous work to code positive and negative affect intensity on a scale from 1 (no affect) to 7 (extreme affect; Haines et al., 2018). This model rates positive and negative affect intensity separately rather than on a single valence continuum. It therefore accommodates seemingly paradoxical facial expressions that are both positive and negative in valence (e.g., Du, Tao, & Martinez, 2014; Watson & Tellegen, 1985), which are sometimes interpreted as disingenuous (e.g., Porter & ten Brinke, 2010). The participants used to train the model ranged from late adolescence to early adulthood, overlapping partly with the ages of participants in the current study. However, FACET reliably codes facial expressions of emotional valence and intensity in children as young as age 5 years (e.g., Sikka et al., 2015). The model was trained on videos collected in a controlled laboratory setting quite similar to the one in which mother–daughter dyads were recorded for the current study. Of note, our AFEC model does not use baseline or individual-subject corrections to achieve accurate emotion ratings, which avoids human error associated with identifying each individual’s neutral expression.

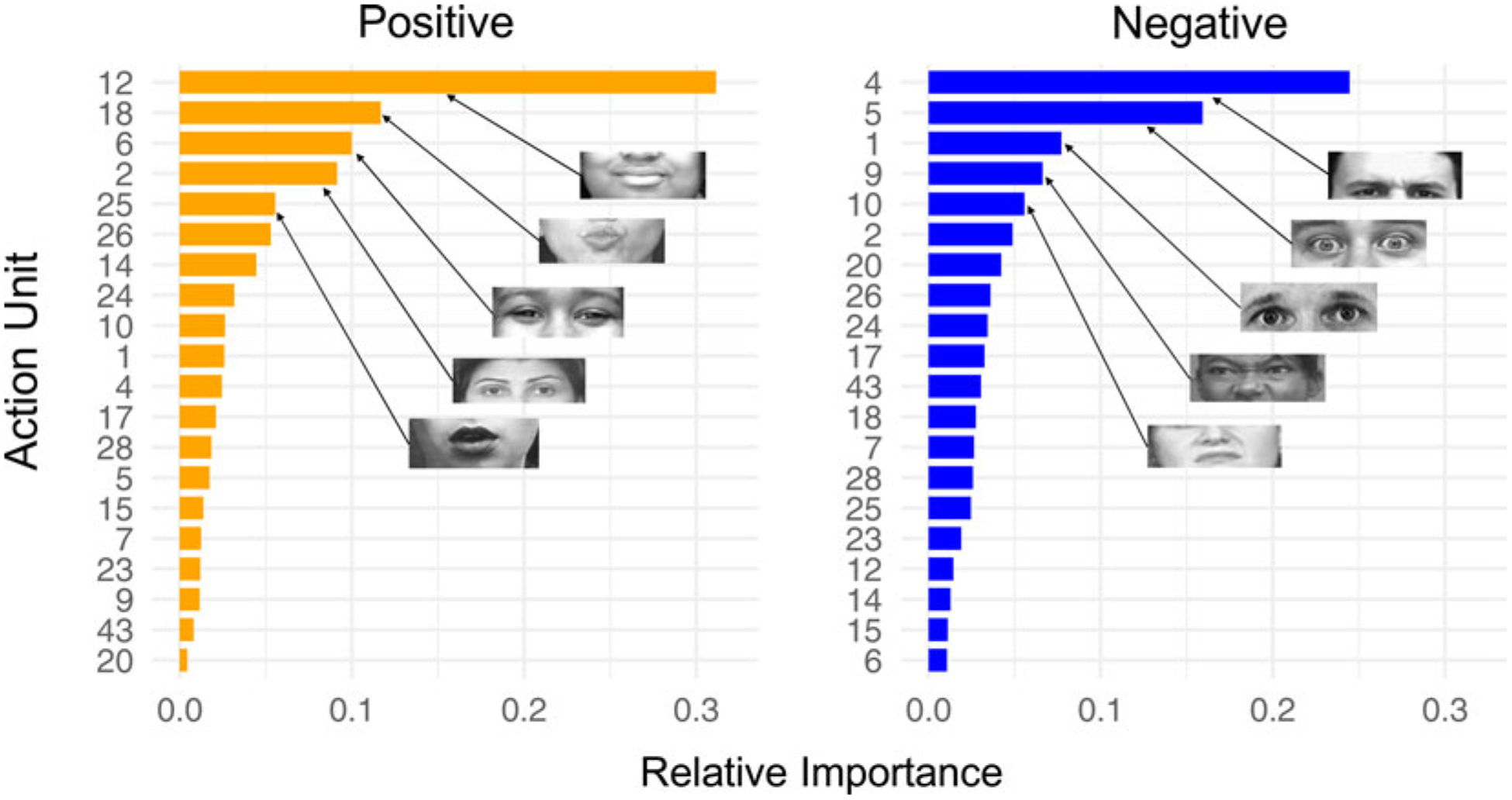

Our AFEC application uses FACET to detect 20 different AUs.4 In assigning positive and negative affect intensity ratings, these AUs are not weighted equally. Rather, they are weighted empirically by a Random Forest machine learning model that was validated on an independent sample (Haines et al., 2018). This empirically derived weighting is depicted in Figure 2, which shows the AUs that contribute more (and less) to positive and negative facial expressions. Videos were imported into iMotions (www.imotions.com) and analyzed using the FACET post hoc video processing feature. FACET outputs a time series of “evidence” ratings for the presence of each of the 20 AUs at a rate of 30 Hz. Evidence ratings reflect the level of confidence that a given AU is expressed and range from roughly −16 to 16, with higher ratings indicating a higher probability that the AU is present. To transform AU evidence ratings into positive and negative affect ratings, we split each individual’s 10-min video into nonoverlapping 10-s segments and computed the normalized area under the curve (AUC) separately for each AU within each segment. AUCs were normalized by dividing by the total time the participant’s face was detected within each 10-s segment. This ensures that resulting AUC values are not biased by differences in face detection between segments (e.g., segments in which the face is detected 90% of the time are not assigned a higher AUC than those in which it is detected 70% of the time). Normalized AUC scores for each AU were then entered as predictors into two pretrained Random Forest models, which generated positive and negative affect intensity ratings. This process was repeated for each 10-s segment, yielding a time series of 60 positive and 60 negative affect intensity ratings for each dyad member across the 10-min discussion.
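The segmentation and normalization steps can be sketched in Python as follows. The sketch assumes a rectangle-rule integral over frames in which the face was detected (the integration rule is not specified in the text); under that assumption, the normalized AUC for a segment equals the mean evidence value across detected frames. The function name is hypothetical, and the Random Forest step is not shown because it depends on the pretrained models from Haines et al. (2018).

```python
import math

FPS = 30        # FACET evidence sampling rate reported in the text (30 Hz)
SEGMENT_S = 10  # nonoverlapping segment length in seconds


def segment_normalized_auc(evidence, detected, fps=FPS, segment_s=SEGMENT_S):
    """Normalized AUC of one AU's evidence series in each nonoverlapping segment.

    evidence: per-frame evidence values for a single AU.
    detected: per-frame booleans, True when the face was detected in that frame.
    Returns one value per segment; NaN when no face was detected in a segment.
    """
    if len(evidence) != len(detected):
        raise ValueError("evidence and detected must be the same length")
    dt = 1.0 / fps
    frames_per_segment = fps * segment_s
    values = []
    for start in range(0, len(evidence), frames_per_segment):
        seg = zip(evidence[start:start + frames_per_segment],
                  detected[start:start + frames_per_segment])
        # Rectangle-rule area, counting only frames in which the face was found.
        area = 0.0
        detected_time = 0.0
        for value, found in seg:
            if found:
                area += value * dt
                detected_time += dt
        # Dividing by detected time keeps a segment's score from depending on
        # how many of its frames happened to have a detected face.
        values.append(area / detected_time if detected_time > 0 else math.nan)
    return values
```

A 10-min video sampled at 30 Hz contains 18,000 frames, so calling the function on one AU’s full series yields 60 values, one per 10-s segment; repeating this for all 20 AUs gives 20 predictors for each of the 60 segments.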

Figure 2.

Relative importance weights for the 20 AUs used by the Random Forest model to generate ratings of positive and negative affect intensity. Relative importance is computed based on the change in standard deviation attributable to each given AU while integrating over all other AUs, which indicates the AUs that are most predictive of changes in positive/negative affect intensity. Images representing the five most important AUs are superimposed on each subplot. Image adapted with permission from Haines et al. (2019).

In our validation study with an independent sample, the model’s ratings correlated with human ratings, on average, at .89 for positive affect intensity and .76 for negative affect intensity. Coding the facial expressions of both members of all 48 dyads (16 hr of raw video) for 20 FACS AUs, and deriving positive and negative affect ratings from them, was completed overnight on a standard desktop PC.

Mother–daughter correspondence analyses

We assessed positive and negative affect correspondence with multilevel models (MLMs) fit in R using the lme4 package (Bates, Maechler, Bolker, & Walker, 2015). MLMs accommodate nested data structures (in this case, ratings nested within dyads). Because previous studies show that mothers play a primary role in “driving” adolescent emotion during conflict (Crowell et al., 2017), we specified the MLMs as follows, which enabled us to evaluate between-groups differences in both (1) mothers’ positive and negative affect and (2) mother–daughter affect correspondence:

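$$\text{Level 1:}\quad \text{mother affect}_{ij} = \pi_{0j} + \pi_{1j}\,(\text{daughter affect}_{ij}) + e_{ij}$$

$$\text{Level 2:}\quad \pi_{0j} = \beta_{00} + \beta_{01}\,(\mathrm{C1}) + \beta_{02}\,(\mathrm{C2}) + r_{0j}$$

$$\pi_{1j} = \beta_{10} + \beta_{11}\,(\mathrm{C1}) + \beta_{12}\,(\mathrm{C2}) + r_{1j}$$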
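The Level 2 equations state that each dyad’s intercept and slope are shifted by the two group contrasts. The Python sketch below shows one common way to code nested orthogonal contrasts (C1: control vs. depressed and self-harm; C2: self-harm vs. depressed) and how the β coefficients combine into a group-specific slope. The contrast weights, β values, and function name are assumptions for illustration, not the coding or estimates from our analysis; fitting the model itself (done in R with lme4) is not reproduced here.

```python
from fractions import Fraction as F

GROUPS = ("control", "depressed", "self-harm")

# One common scaling for the two nested contrasts (an assumption, not
# necessarily the coding used in the original analysis).
C1 = {"control": F(2, 3), "depressed": F(-1, 3), "self-harm": F(-1, 3)}
C2 = {"control": F(0), "depressed": F(-1, 2), "self-harm": F(1, 2)}


def group_coefficient(b0, b1, b2, group):
    """Level 2 composition, e.g., pi_1j = beta_10 + beta_11*C1 + beta_12*C2."""
    return b0 + b1 * C1[group] + b2 * C2[group]


# Each contrast sums to zero across the three groups, and the two are orthogonal.
assert sum(C1[g] for g in GROUPS) == 0
assert sum(C2[g] for g in GROUPS) == 0
assert sum(C1[g] * C2[g] for g in GROUPS) == 0

# Hypothetical slope coefficients, chosen only to illustrate the arithmetic.
b10, b11, b12 = F(1, 2), F(3, 10), F(1, 5)
for g in GROUPS:
    print(g, float(group_coefficient(b10, b11, b12, g)))
```

With these weights, β10 is the unweighted mean of the three group slopes, β11 is the control slope minus the average of the two clinical slopes, and β12 is the self-harm slope minus the depressed slope; here the group slopes are 0.7, 0.3, and 0.5, so 0.7 − (0.3 + 0.5)/2 = 0.3 and 0.5 − 0.3 = 0.2.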

We included nested orthogonal contrasts comparing control dyads with both depressed and self-harm dyads (C1) and self-harm dyads with depressed dyads (C2). Mother affect and daughter affect denote AFEC ratings, and i and j index time points and dyads, respectively. We fit separate models for positive and negative affect. Before running multilevel correspondence analyses, we smoothed the AFEC-coded positive and negative affect ratings using a simple moving average with a window size of 10. Moving averages act as low-pass filters (e.g., Smith, 2003) that dampen high-frequency noise (e.g., moment-to-moment variations in AFEC codes attributable to speaking). Sensitivity analyses using moving windows of varying widths (8–16) yielded consistent results.
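The smoothing and standardization steps (ratings were also z-scored before modeling; see the note to Table 2) are simple to sketch in Python. The text does not specify whether the window was trailing or centered; the sketch below assumes a trailing window in “valid” mode, which shortens each series by `window - 1` points (a centered or padded average would preserve all 60). The rating series and function names are hypothetical.

```python
from statistics import fmean, stdev


def moving_average(series, window=10):
    """Trailing simple moving average ("valid" mode).

    Returns len(series) - window + 1 values; each is the mean of `window`
    consecutive ratings, which damps segment-to-segment fluctuations.
    """
    if not 1 <= window <= len(series):
        raise ValueError("window must be between 1 and len(series)")
    return [fmean(series[i:i + window]) for i in range(len(series) - window + 1)]


def zscore(series):
    """Standardize to mean 0 and sample SD 1 before model fitting."""
    m, s = fmean(series), stdev(series)
    if s == 0:
        raise ValueError("cannot z-score a constant series")
    return [(x - m) / s for x in series]


# Hypothetical 60-segment rating series; the loop mirrors a sensitivity check
# across window widths of 8 to 16.
ratings = [1 + i // 9 for i in range(60)]
for width in range(8, 17):
    smoothed = zscore(moving_average(ratings, width))
    print(width, len(smoothed))
```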

Results

Associations between FPPC and AFEC

Table 1 shows correlations between AFEC-coded positive and negative affect for each dyad member and the FPPC-derived values for (a) total aversiveness; (b) high, intermediate, and low aversives; and (c) probabilities of escalating. Among other findings, in dyads where mothers expressed more positive affect, daughters showed fewer escalations. Moreover, daughters who expressed more positive affect exhibited fewer high-level aversives and escalations and less overall aversiveness. Many of these correlations fall in the medium effect size range according to Cohen’s (1992) standards. They are nevertheless notable given that (a) different coding schemes were used (FPPC vs. AFEC), (b) different “informants” coded behaviors (humans vs. computers), and (c) the algorithm used by AFEC was derived from an independent data set. Our findings therefore suggest at least some degree of convergence across methods. Specific relations between FPPC and AFEC codes also support validity of the AFEC model (e.g., mother positive affect was associated positively with daughter positive affect and negatively with daughter escalations).

Table 1.

Correlations between AFEC and FPPC ratings

| Variable | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. Mother (+) | — | | | | | | | | | | | | |
| 2. Mother (−) | −.16 | — | | | | | | | | | | | |
| 3. Daughter (+) | .44** | −.12 | — | | | | | | | | | | |
| 4. Daughter (−) | −.11 | −.14 | .05 | — | | | | | | | | | |
| 5. Mother HI | −.02 | .08 | −.03 | .02 | — | | | | | | | | |
| 6. Mother IN | .38** | −.25† | .27† | .03 | −.16 | — | | | | | | | |
| 7. Mother LW | −.25† | .11 | −.16 | −.04 | −.73** | −.56** | — | | | | | | |
| 8. Mother Tot. Ave. | .01 | .05 | −.01 | .15 | .93** | −.06 | −.74** | — | | | | | |
| 9. Mother Esc. | .15 | .13 | .25† | .01 | −.15 | −.24 | .29* | −.26† | — | | | | |
| 10. Daughter HI | −.17 | −.02 | −.35* | −.04 | .52** | .00 | −.44** | .57** | −.82** | — | | | |
| 11. Daughter IN | −.15 | −.13 | .20 | .12 | −.19 | .03 | .14 | −.17 | .20 | −.48** | — | | |
| 12. Daughter LW | .22 | .06 | .33* | .01 | −.52** | −.01 | .45** | −.58** | .85** | −.97** | .26† | — | |
| 13. Daughter Tot. Ave. | −.21 | −.05 | −.32* | .02 | .53** | .00 | −.44** | .59** | −.84** | .98** | −.35* | −.99** | — |
| 14. Daughter Esc. | −.35* | .26† | −.31* | .03 | −.22 | −.65** | .64** | −.21 | .08 | .09 | −.07 | −.08 | .09 |

Note: (+) represents AFEC positive affect intensity. (−) represents AFEC negative affect intensity. HI, high-level aversives. IN, intermediate-level aversives. LW, low-level aversives. Tot. Ave., total aversives. Esc., escalation. † p ≤ .1. * p ≤ .05. ** p ≤ .01.

Average affect intensity

Next, we tested for pairwise differences in average positive and negative affect within and between mother–daughter dyads. Sample-wide, average positive and negative affect intensities for mothers over the 10-min discussion were 3.16 (SD = 0.65) and 2.56 (SD = 0.73), respectively. The difference between positive and negative affect was significant, t(47) = 3.92, p < .001, indicating that mothers expressed more intense positive than negative affect. Daughters also displayed higher positive affect (M = 2.99, SD = 0.94) than negative affect (M = 1.73, SD = 0.51), t(47) = 8.35, p < .001. Within dyads, mothers and daughters showed similar levels of positive affect intensity, t(47) = −1.29, p = .20, but daughters showed less negative affect than mothers, t(47) = −6.06, p < .001. Differences in average positive and negative affect between groups are reported in the correspondence analyses section below.
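Each comparison above is a paired-samples t test on per-dyad averages, with degrees of freedom equal to the number of dyads minus one (hence t(47) for 48 dyads). A minimal Python sketch of the statistic follows; the input values and function name are hypothetical, and converting t to a p value requires the t distribution (e.g., from a statistics package), which is omitted here.

```python
from math import sqrt
from statistics import fmean, stdev


def paired_t(x, y):
    """Paired-samples t statistic and degrees of freedom for the differences x - y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(x, y)]
    se = stdev(diffs) / sqrt(len(diffs))  # standard error of the mean difference
    return fmean(diffs) / se, len(diffs) - 1


# Hypothetical per-dyad averages (one value per dyad in each list).
t, df = paired_t([3, 5, 7], [2, 3, 4])
print(f"t({df}) = {t:.2f}")  # prints t(2) = 3.46
```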

Dyadic correspondences of facial expressions

Before conducting correspondence analyses, we removed data from dyads for whom AFEC could code fewer than 15 co-occurring 10-s segments. This was necessary to estimate reliable correlations and resulted in exclusion of one dyad from the depressed group with only 3 segments of usable data.

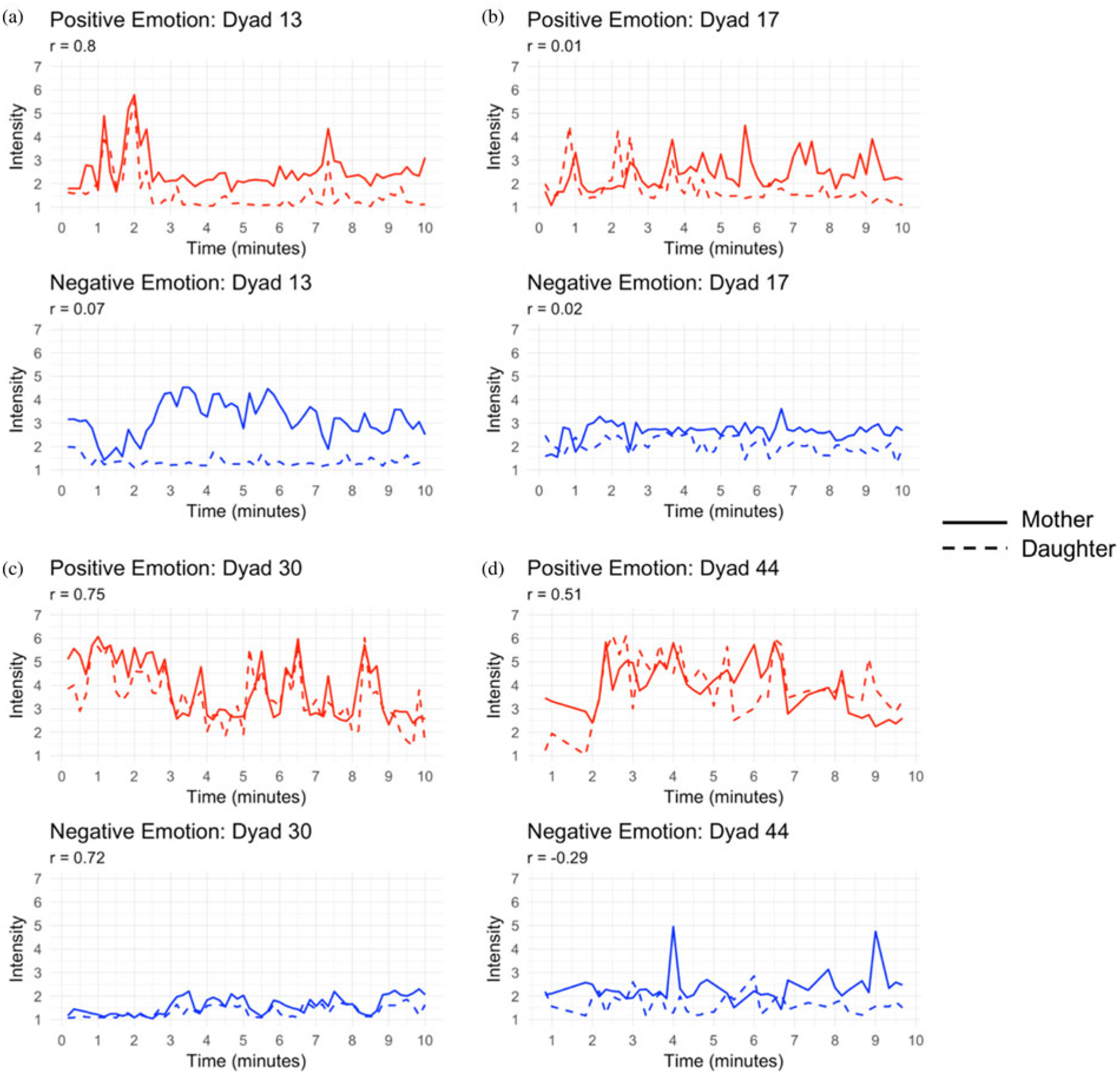

Dyads exhibited considerable heterogeneity in raw facial expression dynamics across the discussion (see Figure 3). Some dyads showed high correspondence of positive affect but low correspondence of negative affect (Figure 3a), some showed low correspondence of both positive and negative affect (Figure 3b), some showed high correspondence of both (Figure 3c), and others showed positive correspondence for positive affect but negative correspondence for negative affect (Figure 3d). Such heterogeneity across dyads supports the use of MLM to account for dyad-level variation.

Figure 3.

Heterogeneity of raw facial expression correspondence (correlations) among dyads. (a) Some dyads showed high correspondence of only positive affect, (b) some showed no correspondence of either positive or negative affect, (c) some showed high correspondence of both positive and negative affect, and (d) some showed positive correspondence of positive affect but negative correspondence of negative affect. Intensity ratings range from 1 (no emotion) to 7 (extreme emotion) within each 10-s interval across the 10-min discussion.

Table 2 shows results from MLMs for both positive and negative affect correspondence. All three groups showed strong mother–daughter correspondence for positive affect intensity, yet mothers in control dyads showed higher average positive affect than mothers in both the depressed and self-harm dyads. No group differences in positive affect correspondence were observed. In contrast, only self-harm dyads showed negative affect correspondence, despite no group differences in average levels of mother negative affect.

Table 2.

Summary of fixed slope and centered intercept effects from multilevel models

| Variable | Coefficient | Standard error | t | p |
|---|---|---|---|---|
| Predicting mother positive affect | | | | |
| For intercept | | | | |
| Intercept | 0.081 | 0.139 | 0.578 | .566 |
| C1 | 0.682 | 0.308 | 2.215 | .032 |
| C2 | −0.019 | 0.327 | −0.058 | .954 |
| For slope | | | | |
| Intercept | 0.609 | 0.086 | 7.037 | <.001 |
| C1 | 0.346 | 0.191 | 1.812 | .077 |
| C2 | 0.197 | 0.203 | 0.970 | .338 |
| Predicting mother negative affect | | | | |
| For intercept | | | | |
| Intercept | −0.042 | 0.127 | −0.332 | .742 |
| C1 | −0.165 | 0.278 | −0.592 | .557 |
| C2 | −0.370 | 0.299 | −1.237 | .223 |
| For slope | | | | |
| Intercept | −0.076 | 0.094 | −0.813 | .422 |
| C1 | −0.181 | 0.206 | −0.879 | .385 |
| C2 | 0.505 | 0.222 | 2.274 | .029 |

Note: “For intercept” coefficients indicate differences in average mother affect, where the intercept is averaged across groups (mean-centered). “For slope” coefficients indicate differences in mother–daughter affect correspondence, where the intercept is likewise averaged across groups. C1 and C2 indicate contrasts of (a) control versus depressed and self-harm groups and (b) self-harm versus depressed groups, respectively. Mother and daughter affect intensity ratings were smoothed (see Mother–Daughter Correspondence Analyses section) and z-scored before modeling analyses.

Daughter age effects on correspondence

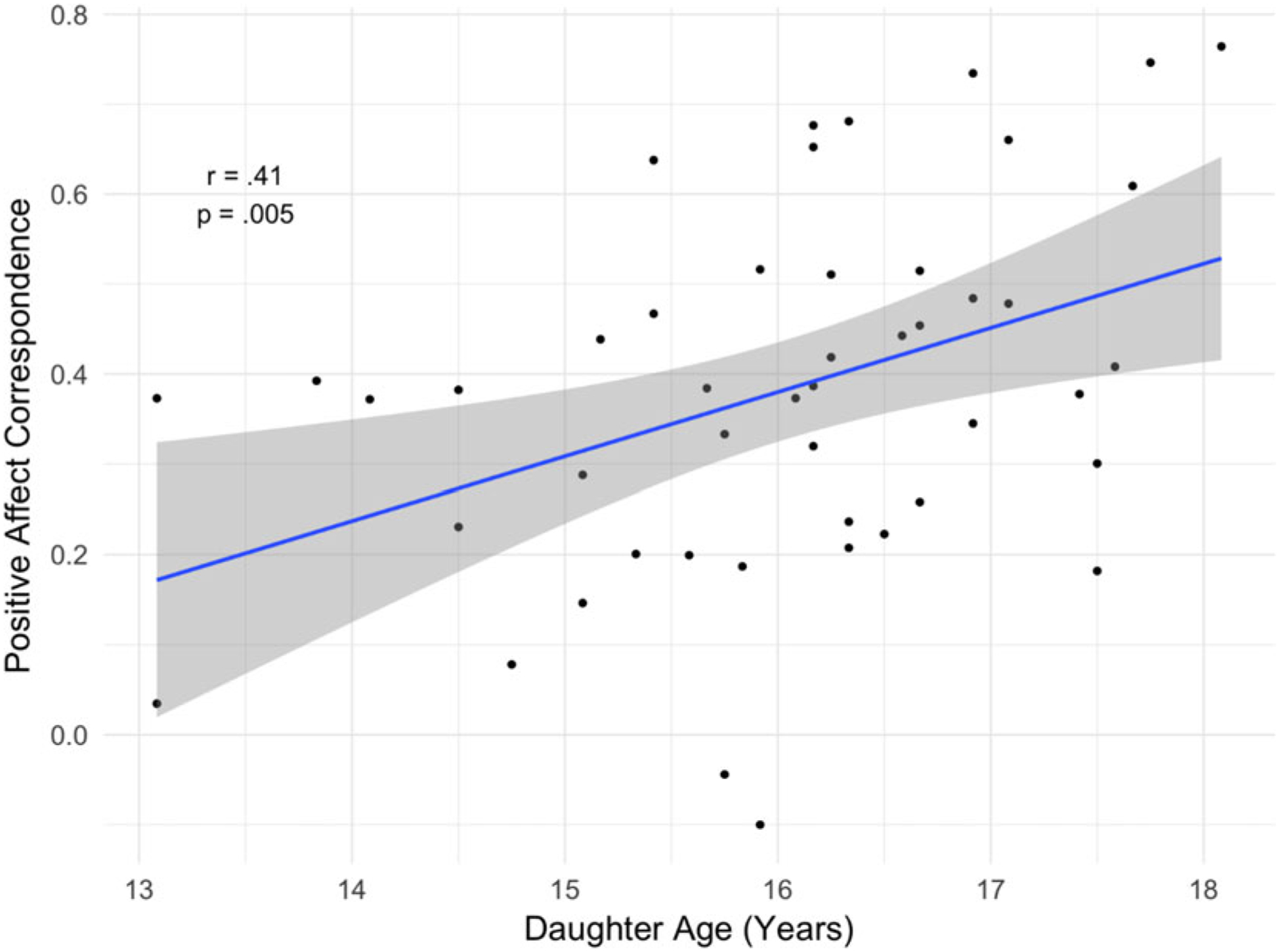

Finally, although the sample was not longitudinal, we examined daughter age effects on mother–daughter correspondence for both positive and negative affect. To do so, we separately regressed within-dyad positive and negative affect correspondence on daughters’ ages. Although there was no age effect for negative affect correspondence, r = .03, p = .83, positive affect correspondence increased with daughters’ age, r = .41, p = .004 (see Figure 4). Thus, for older girls, mother–daughter dyads showed greater synchrony of positive affect. Follow-up analyses indicated that this age effect was not attributable to group status, although power to detect any group difference was limited.
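As a consistency check on the reported age effect, a correlation across dyads can be converted to a t statistic with n − 2 degrees of freedom. Assuming n = 47 (the dyads retained after the exclusion described above; this n is our inference rather than a reported value):

$$
t = \frac{r\sqrt{n-2}}{\sqrt{1-r^{2}}} = \frac{0.41\sqrt{45}}{\sqrt{1-0.41^{2}}} \approx \frac{2.750}{0.912} \approx 3.02, \qquad df = 45
$$

This corresponds to a two-tailed p of about .004, in line with the reported value; the same conversion for negative affect (r = .03) gives t ≈ 0.20.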

Figure 4.

Sample-wide increase in dyadic correspondence of facial expressions of positive affect from ages 13 to 18 years (daughters).

Interim discussion of findings

AFEC allowed us to code 16 hr of continuous facial expression data for positive and negative affect overnight. Even for a straightforward AFEC application, this is a small fraction of the hundreds of hours required to train a human coding team, achieve reliability, and code even modest segments of data. Furthermore, AFEC detected differences in the temporal dynamics of emotion expression within and between dyads at a fine-grained level of analysis (10-s intervals; see Figure 3) and showed modest convergent validity with a different coding scheme. AFEC was also sensitive to dyadic correspondences in expressions of affect and, despite restricted power, to group differences in correspondence (see Table 2). Finally, AFEC detected age-related changes in positive affect correspondence. Mothers from control dyads exhibited more positive facial expressions overall throughout the conflict discussion than mothers from depressed and self-harm dyads, consistent with previous research. Nevertheless, mother–daughter positive affect correspondence was strong across all groups.

In contrast to positive affect findings, mothers showed no group difference in overall negative facial expressions throughout the conflict discussion, yet correspondence of negative affect was greater for self-harm dyads than both typically developing and depressed dyads. Our finding of strong correspondence of negative facial expressions among self-harm dyads is consistent with previous analyses of these data using human coders (Crowell et al., 2013). Taken together, these findings indicate that AFEC is sensitive to emotion dynamics in mother–daughter interactions, and can be used to objectively code facial expressions in settings where human coding might be cost prohibitive. It is worth reemphasizing, however, that our findings are specific to coding of emotional valence and intensity, and do not suggest that AFEC can replace more complex coding schemes.

General Discussion and Implications for Emotion Dysregulation Research

Producing and accurately interpreting facial expressions are critical for effective social communication and healthy socioemotional development. From infancy through adolescence, these skills improve as individuals attain increasing levels of social competence and self-regulation. Accordingly, development of facial expressions, correspondences between facial expressions and physiological reactivity, and dyadic concordance in facial expressions have been core interests of developmental psychopathologists for years. Nevertheless, our understanding of developing facial expressions and their associations with both typical and atypical emotional development has been hampered by the labor, cost, and time intensity of human coding. Although AFEC cannot yet capture the full complexity of human emotion, it can be used in its current state to code affective valence and intensity with greater efficiency than human coders. Moreover, some AFEC models (including the application illustrated here) are validated and embedded in a strong empirical research base, which enables researchers to standardize coding across studies and sites. Progress in affective science depends on establishing shared methods of assessment, data collection, and data reduction (National Advisory Mental Health Council Workgroup on Tasks and Measures for Research Domain Criteria, 2016).

As our demonstration shows, existing applications of AFEC are sensitive to changes in affective valence and intensity dynamics, including individual differences, dyad-level differences, and group-level differences. One direction for future research concerns how emotion dynamics, including emotion dysregulation, develop in families, beginning at younger ages than we assessed. As noted above, AFEC accurately captures valence and intensity information from facial expressions of emotion in children as young as age 5 years, but how facial expressions shape emotional development within families is not fully understood. Future research can apply AFEC to assess maturation of parent–child emotional dynamics for typically developing children and children with various forms of psychopathology for whom extreme and lingering expressions of negative emotion are debilitating (see Beauchaine, 2015; Ramsook, Cole, & Fields-Olivieri, 2019). Of note, AFEC can code at higher temporal resolution than many human coding schemes, which often average ratings across epochs to reduce complexity. Moreover, computational advantages of AFEC hold potential to make more complicated analyses, such as dynamic evaluation of triadic interaction quality (cf. Bodner, Kuppens, Allen, Sheeber, & Ceulemans, 2018), far less cumbersome.

Given the labor intensity and cost of human coding, most studies of affective dynamics within families have been cross-sectional. These studies reveal important group differences between families of typically developing children and adolescents versus families of adolescents with conduct problems, depression, and self-injury, among other conditions for which emotion dysregulation is a prominent feature (e.g., Bodner et al., 2018; Crowell et al., 2013, 2014; Snyder, Schrepferman, & St Peter, 1997). Evaluating family dynamics of emotion expression longitudinally is much more difficult because doing so requires research groups to train new coders for different time points in follow-up studies. Thus, labor intensity and cost are magnified over time. AFEC can circumvent this problem by applying a well-validated model to all time points. This opens avenues for a wide range of applications, including cross-lagged panel designs that evaluate directions of association between affect dynamics and various adjustment outcomes, both healthy and adverse.

Longitudinal assessment can also be applied in studies of within-individual and within-dyad correspondences between expressed emotion and physiological reactivity. For example, according to operant perspectives, parental negative reinforcement of emotional lability and physiological reactivity promotes development of persistent emotion dysregulation among children, whereas validation of affective experiences and expression promotes emotional competence (e.g., Beauchaine & Zalewski, 2016; Crowell et al., 2013; Snyder et al., 1997). To date, however, evidence for this perspective derives primarily from cross-sectional studies. Future research that evaluates time-linked correspondences between emotional and physiological reactivity both within individuals and within dyads across successive longitudinal assessments could be invaluable in identifying (a) key developmental periods during which such patterns emerge, (b) shapes of trajectories in responding over time, and (c) intervention response, among other questions. Furthermore, AFEC holds promise in allowing researchers to explore concurrent and longitudinal relations between facial expressions and peripheral nervous system responding (e.g., cardiac and electrodermal), central nervous system responding (e.g., EEG and functional magnetic resonance imaging), and trajectories in neurohormonal responding (e.g., cortisol and salivary alpha amylase).
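One simple way to examine time-linked correspondence between expressed emotion and physiology within an individual is a lagged cross-correlation. The sketch below assumes, hypothetically, that AFEC arousal scores and skin conductance have already been resampled to a common 1-Hz series; both series are simulated, and the names are placeholders. In real data, strong autocorrelation can inflate such correlations, so prewhitening or model-based approaches are often preferable.

```python
import numpy as np


def lagged_correlation(x, y, max_lag):
    """Pearson r between x[t] and y[t + lag] for each lag in [-max_lag, max_lag].

    A peak at a positive lag means changes in x tend to precede changes in y.
    """
    results = {}
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = x[: len(x) - lag], y[lag:]
        else:
            a, b = x[-lag:], y[: len(y) + lag]
        results[lag] = np.corrcoef(a, b)[0, 1]
    return results


rng = np.random.default_rng(1)
n = 600  # hypothetical: 600 one-second samples
facial_arousal = rng.normal(size=n)
# Simulated physiology that follows facial arousal by 3 samples, plus noise
skin_conductance = np.roll(facial_arousal, 3) + 0.5 * rng.normal(size=n)

r_by_lag = lagged_correlation(facial_arousal, skin_conductance, max_lag=5)
best_lag = max(r_by_lag, key=r_by_lag.get)
print(best_lag)  # 3
```

The peak at lag 3 recovers the built-in delay: in this simulation, changes in facial arousal precede electrodermal changes by three samples.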

By combining AFEC with other technologies such as ecological momentary assessment (EMA), more naturalistic, outside-the-lab applications may also be possible. To date, smartphone-based EMA has been used to assess parent–child interactions in children as young as 6 years (Li & Lansford, 2018), and computer games have been developed that use AFEC to train facial expression production abilities in children of the same age (Gordon et al., 2014). One possibility is to combine EMA and computer-based AFEC games to measure children’s interpretation and use of facial expressions across different contexts over time. Such data-driven approaches may facilitate our search for prospective predictors of emotion dysregulation, a key target for prevention efforts in developmental psychopathology (Beauchaine, 2015).

A particularly appealing aspect of AFEC is its ability to code in real time from live streams of facial expressions. Real-time coding offers advantages that human coding cannot, most notably immediate feedback. In one application, Gordon et al. (2014) used real-time AFEC feedback to teach children with autism spectrum disorder to produce facial expressions more accurately and to recognize emotion from others’ facial expressions. In the future, therapeutic applications might include using AFEC to evaluate changes in interpretations of and reactions to others’ facial expressions among individuals with abuse histories. Attributional biases among victims of abuse persist across development, with adverse implications for long-term functioning (Pollak et al., 2000; Pollak & Sinha, 2002). Other potential applications include real-time AFEC coding during cooperative social exchange games in which performance is heavily influenced by others’ facial expressions (Reed, DeScioli, & Pinker, 2014). Skills required to play such games have clear implications for development of social competence (Gallup, O’Brien, & Wilson, 2010).
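Real-time applications process each frame as it arrives rather than after a session ends. The sketch below shows one way such a loop might smooth incoming frame-level scores and flag when feedback should be given. It does not reproduce Gordon et al.'s (2014) system; the class name `RealTimeSmoother`, the window length, and the threshold are hypothetical, and the incoming scores stand in for whatever per-frame output a given AFEC package provides.

```python
from collections import deque


class RealTimeSmoother:
    """Running mean of the most recent `window` frame-level scores (hypothetical helper).

    Each new frame's score (e.g., a joy probability from an AFEC model) is
    pushed as it arrives, so feedback can be given during the session.
    """

    def __init__(self, window=15, threshold=0.6):
        self.buffer = deque(maxlen=window)  # oldest frames drop out automatically
        self.threshold = threshold

    def update(self, score):
        """Add one frame's score; return (smoothed score, whether to give feedback)."""
        self.buffer.append(score)
        smoothed = sum(self.buffer) / len(self.buffer)
        return smoothed, smoothed >= self.threshold


smoother = RealTimeSmoother(window=3, threshold=0.6)
history = [smoother.update(s) for s in [0.1, 0.2, 0.9, 0.9, 0.9]]
flags = [flag for _, flag in history]
print(flags)  # [False, False, False, True, True]
```

Smoothing over a short window keeps a single noisy frame from triggering feedback, at the cost of a brief delay before a sustained expression is recognized.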

Because AFEC is relatively new, at least to psychologists, there are currently no “best practices” for analyzing facial expression data after they are collected. However, psychologists have analyzed data from dyadic interactions for decades, and many of the same techniques apply to analyzing raw AFEC data (see, e.g., Bodner et al., 2018). We expect the field will develop best practices as AFEC becomes more popular. We are currently collaborating with another lab to develop a toolbox for analyzing facial expression data collected with the various software products mentioned above, analogous to toolboxes currently available for analyzing neuroimaging data. We hope this endeavor makes AFEC models more accessible to developmental and other behavioral scientists.
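As one example of a dyadic technique that transfers directly to raw AFEC output, the sketch below computes a rolling-window correlation between a mother's and a daughter's frame-level positive affect as a simple, time-varying index of affect correspondence. It is an illustration on simulated data, not the analysis used in our demonstration; the window length, the function name, and the series names are assumptions.

```python
import numpy as np


def rolling_correlation(a, b, window):
    """Pearson correlation of a and b within each sliding window of `window` samples."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    out = []
    for start in range(len(a) - window + 1):
        wa, wb = a[start : start + window], b[start : start + window]
        if wa.std() == 0 or wb.std() == 0:
            out.append(np.nan)  # correlation is undefined for a flat segment
        else:
            out.append(np.corrcoef(wa, wb)[0, 1])
    return np.array(out)


rng = np.random.default_rng(2)
t = np.arange(600)
shared = np.sin(t / 10.0)  # hypothetical shared affective rhythm
mother_pos = shared + 0.3 * rng.normal(size=t.size)
# Simulated daughter tracks her mother during the first half only
daughter_pos = np.where(t < 300, shared, 0.0) + 0.3 * rng.normal(size=t.size)

r = rolling_correlation(mother_pos, daughter_pos, window=30)
early, late = np.nanmean(r[:200]), np.nanmean(r[-200:])
print(early, late)  # high early, near zero late
```

Because the simulated daughter follows her mother's affect only during the first half of the interaction, windowed correlations are high early and near zero late; window length trades temporal precision against the stability of each estimate.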

Finally, it is worth noting that not all AFEC models perform equally, and choices of software can lead to different conclusions from seemingly equivalent analyses. However, we do not think such differences should discourage researchers from leveraging potentially valuable features of AFEC. Common software packages for analyzing neuroimaging data (e.g., SPM and FSL) yield varying results when analyzing the same data, and even within a single software package one’s choice of preprocessing steps affects estimates of blood-oxygen-level-dependent (BOLD) responding (Carp, 2012). Despite these issues, functional magnetic resonance imaging studies continue to provide critical insights into human behavior and its development. Moving forward, it is important that researchers interested in AFEC technologies use validated models and report the preprocessing steps they used.

Financial support.

Work on this article was supported by Grants DE025980 (to T. P. B.) and MH074196 (to S. C.) from the National Institutes of Health and by the National Institutes of Health Science of Behavior Change (SoBC) Common Fund.

Footnotes

Constructionist theories (e.g., Barrett, 2009) offer a third perspective on facial expressions of emotion. However, because dimensional models capture key components of constructionist models (e.g., core affective processes such as valence), we do not consider them given space constraints.

Accuracy is defined as $\frac{TP + TN}{N}$, where TP is the number of true positives, TN is the number of true negatives, and N is the total number of images or videos coded. Note that this collapses across all emotions. AFEC researchers typically do not report kappa (κ) statistics, which are commonly used to assess agreement for categorical coding in developmental psychopathology research. We refer interested readers to confusion matrices within cited papers for complete information on human–computer agreement.

An F1 score of 1.0 indicates perfect precision and recall, whereas a score of 0 indicates no true positives.

FACET detects the following FACS AUs: 1, 2, 4, 5, 6, 7, 9, 10, 12, 14, 15, 17, 18, 20, 23, 24, 25, 26, 28, and 43.
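The accuracy and F1 definitions in the footnotes above can be computed directly from paired human and automated codes. The sketch below is a minimal version on hypothetical labels; we read accuracy as the overall proportion of agreement across all emotion categories, and F1 is computed for one target category at a time. Established implementations, such as `accuracy_score` and `f1_score` in scikit-learn's `sklearn.metrics` module, are preferable in practice.

```python
def accuracy(true_labels, predicted_labels):
    """Proportion of items on which the automated label matches the human label.

    For a binary code this equals (TP + TN) / N; with several emotion
    categories it collapses across all of them.
    """
    if len(true_labels) != len(predicted_labels) or not true_labels:
        raise ValueError("label lists must be non-empty and of equal length")
    hits = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return hits / len(true_labels)


def f1_score(true_labels, predicted_labels, positive):
    """F1 = 2 * precision * recall / (precision + recall) for one target label."""
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0  # no true positives, so F1 is 0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical human (reference) vs. automated codes for 8 video frames
human = ["joy", "joy", "anger", "neutral", "joy", "anger", "neutral", "joy"]
afec = ["joy", "neutral", "anger", "neutral", "joy", "joy", "neutral", "joy"]
print(accuracy(human, afec))         # 0.75
print(f1_score(human, afec, "joy"))  # 0.75
```

Here 6 of 8 frames agree, and for "joy" there are 3 true positives, 1 false positive, and 1 false negative, so precision, recall, and F1 all equal 0.75.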

References

- Adams A, & Robinson P (2015). Automated recognition of complex categorical emotions from facial expressions and head motions. Paper presented at the 2015 International Conference on Affective Computing and Intelligent Interaction (ACII), Xi’an, China.

- Baltrusaitis T, Robinson P, & Morency L-P (2016). OpenFace: An open source facial behavior analysis toolkit. Paper presented at the 2016 IEEE Winter Conference on Applications of Computer Vision, Lake Placid, NY.

- Barrett LF (2009). The future of psychology: Connecting mind to brain. Perspectives on Psychological Science, 4, 326–339. doi: 10.1111/j.1745-6924.2009.01134.x

- Barsade SG (2002). The ripple effect: Emotional contagion and its influence on group behavior. Administrative Science Quarterly, 47, 644–675. doi: 10.2307/3094912

- Bartlett MS, Hager JC, Ekman P, & Sejnowski TJ (1999). Measuring facial expressions by computer image analysis. Psychophysiology, 36, 253–263. doi: 10.1017/S0048577299971664

- Bartlett MS, Littlewort GC, Frank MG, & Lee K (2014). Automatic decoding of facial movements reveals deceptive pain expressions. Current Biology, 24, 738–743. doi: 10.1016/j.cub.2014.02.009

- Bates D, Maechler M, Bolker B, & Walker S (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67, 1–48. doi: 10.18637/jss.v067.i01

- Beauchaine TP (2015). Future directions in emotion dysregulation and youth psychopathology. Journal of Clinical Child and Adolescent Psychology, 44, 875–896. doi: 10.1080/15374416.2015.1038827

- Beauchaine TP, & Haines N (in press). Functionalist and constructionist perspectives on emotion. In Beauchaine TP & Crowell SE (Eds.), The Oxford handbook of emotion dysregulation. New York: Oxford University Press.

- Beauchaine TP, & Webb SJ (2017). Developmental processes and psychophysiology. In Cacioppo JT, Tassinary LG, & Berntson GG (Eds.), Handbook of psychophysiology (4th ed., pp. 495–510). New York: Cambridge University Press.

- Beauchaine TP, & Zalewski M (2016). Physiological and developmental mechanisms of emotional lability in coercive relationships. In Dishion TJ & Snyder JJ (Eds.), The Oxford handbook of coercive relationship dynamics (pp. 39–52). New York: Oxford University Press.

- Beckes L, & Edwards WL (2018). Emotions as regulators of social behavior. In Beauchaine TP & Crowell SE (Eds.), The Oxford handbook of emotion dysregulation. New York: Oxford University Press.

- Blair R (1995). A cognitive developmental approach to morality: Investigating the psychopath. Cognition, 57, 1–29. doi: 10.1016/0010-0277(95)00676-P

- Blair RJR, & Coles M (2000). Expression recognition and behavioural problems in early adolescence. Cognitive Development, 15, 421–434. doi: 10.1016/S0885-2014(01)00039-9

- Bodner N, Kuppens P, Allen NB, Sheeber LB, & Ceulemans E (2018). Affective family interactions and their associations with adolescent depression: A dynamic network approach. Development and Psychopathology, 30, 1459–1473. doi: 10.1017/S0954579417001699

- Bonanno GA, & Burton CL (2013). Regulatory flexibility: An individual differences perspective on coping and emotion regulation. Perspectives on Psychological Science, 8, 591–612. doi: 10.1177/1745691613504116

- Bonanno GA, Colak DM, Keltner D, Shiota MN, Papa A, Noll J, … Trickett PK (2007). Context matters: The benefits and costs of expressing positive emotion among survivors of childhood sexual abuse. Emotion, 7, 824–837. doi: 10.1037/1528-3542.7.4.824

- Bonanno GA, Papa A, Lalande K, Westphal M, & Coifman K (2004). The importance of being flexible: The ability to both enhance and suppress emotional expression predicts long-term adjustment. Psychological Science, 15, 482–487. doi: 10.1111/j.0956-7976.2004.00705.x

- Buck R (1975). Nonverbal communication of affect in children. Journal of Personality and Social Psychology, 31, 644–653. doi: 10.1037/h0077071

- Buck R (1977). Nonverbal communication of affect in preschool children: Relationships with personality and skin conductance. Journal of Personality and Social Psychology, 35, 225–236. doi: 10.1037/0022-3514.35.4.225

- Campos JJ, Mumme DL, Kermoian R, & Campos RG (1994). A functionalist perspective on the nature of emotion. Monographs of the Society for Research in Child Development, 59, 284–303. doi: 10.1111/j.1540-5834.1994.tb01289.x

- Camras LA, Grow JG, & Ribordy SC (1983). Recognition of emotional expression by abused children. Journal of Clinical Child Psychology, 12, 325–328. doi: 10.1207/s15374424jccp1203_16

- Camras LA, Ribordy S, Hill J, Martino S, Spaccarelli S, & Stefani R (1988). Recognition and posing of emotional expressions by abused children and their mothers. Developmental Psychology, 24, 776–781. doi: 10.1037//0012-1649.24.6.776

- Carp J (2012). On the plurality of (methodological) worlds: Estimating the analytic flexibility of fMRI experiments. Frontiers in Neuroscience, 6, 149. doi: 10.3389/fnins.2012.00149

- Chen S, Chen T, & Bonanno GA (2018). Expressive flexibility: Enhancement and suppression abilities differentially predict life satisfaction and psychopathology symptoms. Personality and Individual Differences, 126, 78–84. doi: 10.1016/j.paid.2018.01.010

- Chevalier-Skolnikoff S (1973). Facial expression of emotion in nonhuman primates. In Ekman P (Ed.), Darwin and facial expression (pp. 11–83). New York: Oxford University Press.

- Cohen J (1992). A power primer. Psychological Bulletin, 112, 155–159. doi: 10.1037/0033-2909.112.1.155

- Cohn JF (2010). Advances in behavioral science using automated facial image analysis and synthesis [social sciences]. IEEE Signal Processing Magazine, 27, 128–133. doi: 10.1109/MSP.2010.938102

- Cohn JF, & De la Torre F (2014). Automated face analysis for affective computing. In Calvo RA, D’Mello S, Gratch J, & Kappas A (Eds.), The Oxford handbook of affective computing (pp. 131–150). New York: Oxford University Press.

- Cohn JF, & Ekman P (2005). Measuring facial action. In Harrigan JA, Rosenthal R, & Scherer KR (Eds.), The new handbook of nonverbal behavior research methods in the affective sciences (pp. 9–64). New York: Oxford University Press.

- Coifman K, & Almahmoud S (2017). Emotion flexibility and psychological risk and resilience. In Kumar U (Ed.), The Routledge international handbook of psychosocial resilience (pp. 1–14). London: Routledge.

- Coifman KG, Flynn JJ, & Pinto LA (2016). When context matters: Negative emotions predict psychological health and adjustment. Motivation and Emotion, 40, 602–624. doi: 10.1007/s11031-016-9553-y

- Crowell SE, Baucom BR, McCauley E, Potapova NV, Fitelson M, Barth H, … Beauchaine TP (2013). Mechanisms of contextual risk for adolescent self-injury: Invalidation and conflict escalation in mother–child interactions. Journal of Clinical Child and Adolescent Psychology, 42, 467–480. doi: 10.1080/15374416.2013.785360

- Crowell SE, Baucom BR, Yaptangco M, Bride D, Hsiao R, McCauley E, & Beauchaine TP (2014). Emotion dysregulation and dyadic conflict in depressed and typical adolescents: Evaluating concordance across psychophysiological and observational measures. Biological Psychology, 98, 50–58. doi: 10.1016/j.biopsycho.2014.02.009