Abstract

Background and Objectives

Technology has the potential to provide assistance and enrichment to older people; however, the desired outcomes are dependent on users’ acceptance and usage. The senior technology acceptance model (STAM) was developed as a multidimensional measure assessing older people’s acceptance of general technology. It contained 11 constructs measured by 38 items and had shown satisfactory psychometric properties. However, the length of the questionnaire increased respondent burden and limited its utilization. The study aimed to develop a brief, reliable, and valid version of the scale to measure older people’s technology acceptance by shortening the full, 38-item STAM questionnaire.

Research Design and Methods

The research method included (1) a sequential item-reduction strategy maximizing internal consistency, (2) convergent and discriminant validity analysis based on confirmative factor analysis, and (3) an expert review of resultant items. Data previously collected for developing the original STAM questionnaire were used to create the brief version. The data were collected from 1,012 community-dwelling individuals aged 55 and older in Hong Kong. Internal consistency and construct validity of the shortened questionnaire were examined. Two experts were invited to review content validity.

Results

The final 14-item, brief version of the STAM questionnaire consisted of a 4-factor structure, representing classical technology acceptance constructs and age-related health characteristics. Theoretical relationships in the brief version showed similar patterns to the original STAM. The 14-item STAM demonstrated robustness in psychometrics by preserving the reliability and validity of the original STAM questionnaire.

Discussion and Implications

As a reliable and valid assessment tool, the short STAM can help researchers and practitioners to measure older adults’ acceptance of technology and its effective usage. The short STAM could save administration time, reduce the burden on respondents, and be included in large-scale surveys.

Keywords: Confirmative factor analysis, Psychometrics, Validation, Sequential item reduction

Translational Significance: A novel 14-item questionnaire can be used to accurately assess older adults’ propensity to try new technology.

Gerontechnology has the potential to support older people in living longer and healthier lives as fully engaged members of society (Pruchno, 2019). For instance, smart home and health monitoring technology enable older individuals to live safely and independently at home, facilitating aging in place as well as active and healthy aging (Kim, Gollamudi, & Steinhubl, 2017; Vaziri et al., 2019). In addition, socially assistive robotics and communication technology can reduce depression and social loneliness for older people living alone (Pu, Jones, Todorovic, & Moyle, 2018).

The potential utility for technology to provide assistance and enrichment to older people is large, but digital inequality has been observed with older people less likely to use technology than younger people (Mitchell, Chebli, Ruggiero, & Muramatsu, 2019). The realization of desired outcomes from gerontechnology depends on its acceptance and continued usage by end-users. Due to the proliferation and diffusion of gerontechnology, a substantial effort has been made to understand the factors predicting technology acceptance and usage among older people (Berkowsky, Sharit, & Czaja, 2018; Pu et al., 2018). However, the current understanding of the measurements necessary for examining technology acceptance by older people is still limited. A measure of the initial level of acceptance of technology can be used to inform product/system improvement, as well as to predict future adoption. The purpose of the current study was to develop a technology acceptance measure specific to older people that is accessible, easy to administer, and less time-consuming for developers and researchers.

Technology acceptance is the attitudinal perception and behavioral intention to use technology, and it is one of the most important predictors of adoption and usage of technology. Several competing models have been used widely to predict technology acceptance. The most dominant one is the technology acceptance model (TAM), introduced by Davis, Bagozzi, and Warshaw (1989), and derived from the theory of reasoned action (Fishbein & Ajzen, 1975) and the theory of planned behavior (Taylor & Todd, 1995). The TAM presumed the mediating role of two attitudinal beliefs, perceived usefulness (PU) and perceived ease of use (PEOU), on attitude and intention to use technology. Subsequently, the TAM has been extended by integrating with other theories such as the social cognitive theory (Bandura, 2001) and the motivational model (Vallerand, 1997). Venkatesh, Morris, Davis, and Davis (2003) synthesized these models into the unified theory of acceptance and use of technology, which has become another widely used model. The unified theory of acceptance and use of technology identifies four key factors: performance expectancy, effort expectancy, social influence, and facilitating conditions. These factors serve as direct predictors of intention to use and actual usage of technology.

Using the TAM and the unified theory as basic models, Chen and Chan (2014) developed the first theoretical model to predict older people’s acceptance of everyday technology [i.e., the senior technology acceptance model (STAM)]. The STAM extends previous TAMs and theories by integrating age-related health and ability characteristics of older people. The STAM can explain 68% of the variance in gerontechnology usage. The original STAM questionnaire was developed as a multidimensional measure assessing older people’s acceptance of general technology. The STAM consisted of 11 factors measured by 38 items and has shown satisfactory psychometric properties (Chen & Chan, 2014; Özsungur & Hazer, 2018). This model suggested that gerontechnology self-efficacy, gerontechnology anxiety, health and ability characteristics, and facilitating conditions are better and stronger predictors of gerontechnology usage, compared with conventional attitudinal factors such as PU and PEOU. The conceptual structure of STAM was confirmed by another qualitative study conducted by the same authors (Chen & Chan, 2013).

The STAM has been used in an increasing number of studies examining older people’s acceptance of various types of gerontechnology and/or product usability evaluation, including smartphones (Petrovčič, Peek, & Dolničar, 2019), near-field communication light systems (Lim et al., 2015), social media (Parida, Mostaghel, & Oghazi, 2016), communication technologies (Özsungur & Hazer, 2018), telehealth technology (van Houwelingen, Ettema, Antonietti, & Kort, 2018), smart wearable health monitoring devices (Li, Ma, Chan, & Man, 2019), and technology-enabled financial services (Investor and Financial Education Council, 2019). However, we find that these studies did not directly adopt the STAM measure; instead, they modified the STAM questionnaire by deleting constructs (Lim et al., 2015; Parida et al., 2016), reducing items (Li et al., 2019; Ma, Chan, & Chen, 2016), or both (Petrovčič et al., 2019). This may be due to a lack of parsimony in the STAM and the length of the original measure. These empirical studies also imply that the STAM has the potential to be simplified by combining or eliminating some items.

We have found that among healthy older adults, filling out the STAM questionnaire takes approximately 15 min. However, for older adults with lower educational levels or those who were unable to fill in the questionnaire by themselves, administering the STAM via interview may take more than 30 min. Systematic review studies have reported that the length of the questionnaire significantly influences response rate: short questionnaires were associated with higher response rates (Edwards et al., 2002; Rolstad, Adler, & Rydén, 2011). Developing a brief version of the STAM questionnaire is important because a brief scale takes less time to complete, tends to have less missing data, minimizes the burden on older respondents, has lower refusal rates and higher data quality, and is easier to administer in large self-report research (Beaton, Wright, & Katz, 2005; Hagtvet & Sipos, 2016).

With increasing interest in measuring senior people’s technology acceptance, calls have been made for scales with theoretical foundations that are concise, focused, and psychometrically sound. A shortened version of the STAM questionnaire would be a valuable tool for researchers measuring gerontechnology acceptance or rapidly evaluating the usability of a product. The purpose of the study was to develop and validate a brief version of the senior technology acceptance questionnaire using a robust item-reduction method. We aimed to create a brief measure by eliminating approximately half of the items in the original STAM questionnaire while respecting its theoretical model. The psychometric properties of the short STAM and its conceptual relationships were evaluated.

Materials and Methods

Data and Sample

The data set previously collected to develop the original STAM was used for creating a brief version scale and examining item performance. Using the data collected previously during validation of the original questionnaire made the full version and brief version more comparable in terms of psychometric properties and conceptual relationships because it avoids sampling bias. Also, it aided in selecting items for retention in the brief version without presuming their properties for the future short version.

The original STAM was empirically tested using a cross-sectional questionnaire survey with a sample of 1,012 seniors aged 55 and older in Hong Kong. Participants were recruited from 17 community centers for older people during the period 2012–2013. The mean age of the participants was 67.47 years (SD = 7.96), while 74.7% were female, 51.4% said they were married, 72.7% were living with household members, 35.5% had primary education, and 81.1% were of middle economic status. The detailed demographics of the participants can be found in the study of Chen and Chan (2014).

Materials

The STAM was developed in 2014, both theoretically and empirically, and it provided the theoretic framework for the current study. The STAM had 38 items in total and consisted of 11 conceptual subscales: attitude toward using, defined as an individual’s positive or negative feelings or appraisals about using gerontechnology; PU, defined as the degree to which a person believes that using the particular technology would improve his/her quality of life; PEOU, defined as the extent to which a person believes that using technology is free of effort; gerontechnology self-efficacy, defined as a sense of being able to use gerontechnology successfully; gerontechnology anxiety, defined as an individual’s apprehension when he or she is faced with the possibility of using gerontechnology; facilitating conditions, defined as conditions associated with the perception of objective factors in the environment that support usage of gerontechnology; self-reported health conditions, defined as the general health conditions and the ability to hear, see, and move around; cognitive ability, defined as capabilities related to memory, learning, concentration, and thinking; social relationships, defined as satisfaction with personal relationships and support from friends and family, as well as participation in social activities; attitude to aging and life satisfaction, defined as the attitude of individuals toward their own aging and overall life satisfaction; and physical function, defined as the level at which the person independently performs instrumental activities in daily living.

The STAM scales were adapted from prior technology acceptance research, and those scales have been confirmed to be reliable and valid in several replications in both Western and Chinese populations. The STAM measure was created in English, translated into Chinese, and then back-translated to English. The process was completed by a PhD student and a research assistant and reviewed by an academic professor to reach consensus. The questionnaire was pilot tested among a group of local older adults by the first author to fine-tune the wording and response choices. The questionnaire was administered in Chinese—the language used predominantly by local residents. Seven-point response scales were used in many previous technology acceptance studies. However, the STAM used a 10-point scale, because it was identified as the preferred format by older people and the Chinese population (Castle & Engberg, 2004; Wee et al., 2008). Reasons for the preference of the 10-point scale included more response variability, ease of comprehension, and reduction of response bias.

The psychometric properties of the original STAM measure have been proven to be satisfactory, with good construct reliability, good convergent validity, and satisfactory measurement model fit (Chen & Chan, 2014; Petrovčič et al., 2019).

A Sequential Item-Reduction Strategy

Shortening the original STAM questionnaire consisted of reducing the number of items while trying to preserve the psychometric properties. Although a rigorous methodology for shortening multidimensional measurement scales has been lacking, studies have proposed a series of principles and/or recommendations to improve the quality of scale reduction (Goetz et al., 2013; Smith, McCarthy, & Anderson, 2000; Stanton, Sinar, Balzer, & Smith, 2002). Those recommendations highlighted the importance of ensuring sufficient psychometric properties of the original, full-length scale in terms of reliability and validity; respecting the conceptual model when reducing the number of dimensions; performing appropriate analysis to ensure the shortening process did not alter measurement properties; preserving content coverage; and validating the short version in an independent sample.

Following the questionnaire-shortening principles suggested by Goetz and colleagues (2013) and Raubenheimer (2004), we adopted a sequential item-reduction strategy that starts with internal consistency analysis followed by confirmative factor analysis, producing a shortened scale while preserving the conceptual coverage and established relations of the original version. This sequential strategy was used because the original STAM has already been formed and validated through a process of scale development.

The sequential item-reduction strategy consisted of four steps (Sexton, King-Kallimanis, Morgan, & McGee, 2014). In Step 1, the original STAM was tested using confirmative factor analysis. Step 2 involved removing items whose absence would lead to an increase in reliability. Step 3 was to combine subscales to reduce the number of factors in the conceptual model and to test models with different combinations of subscales. Finally, in Step 4, models were revised step by step by discarding less relevant subdimensions or items with poor loadings, using confirmative factor analysis to maximize construct validity. The model revision was done sequentially, seeking to ensure adequate overall conceptual coverage and coherence as well as to meet the psychometric standards.

SPSS 25.0 was used to generate Cronbach’s alpha and corrected item-total correlations. Confirmatory factor analysis with maximum likelihood estimation was performed using AMOS 25.0 software to test the measurement model fit. The model fit indices included chi-square (χ2), root mean square error of approximation (RMSEA), comparative fit index (CFI), non-normed fit index (NNFI), and standardized root mean square residual (SRMR), which cover both incremental and absolute indices (Hair, 2010; Hooper, Coughlan, & Mullen, 2008). The more closely the CFI and NNFI values approach 1, the better the model fit; therefore, CFI and NNFI values greater than 0.90 are needed (Hair, 2010; Hooper et al., 2008). An RMSEA value lower than 0.06 and an SRMR less than 0.08 are needed for adequate model fit (Hu & Bentler, 1999). The chi-square value was used for model comparison: the smaller and less significant the value, the better the model fit (Cheng et al., 2019; Hair, 2010).
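The cutoffs above can be expressed as a simple check. The following Python sketch is illustrative only and is not the authors’ AMOS workflow: the function names `rmsea` and `meets_cutoffs` are hypothetical, and the RMSEA point estimate uses the common (N − 1) form, which is an assumption about how the reported values were computed. The example values are those reported for Model 2.1 in Table 1.

```python
import math

# Cutoffs as quoted in the text: CFI and NNFI > 0.90, RMSEA < 0.06, SRMR < 0.08.
CUTOFFS = {"cfi": 0.90, "nnfi": 0.90, "rmsea": 0.06, "srmr": 0.08}


def rmsea(chi_square, df, n):
    """Point estimate of RMSEA from chi-square, degrees of freedom, and sample size.

    Assumption: the common (N - 1) form; some software uses N instead.
    """
    return math.sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))


def meets_cutoffs(cfi, nnfi, rmsea_value, srmr):
    """Return True when all four indices satisfy the cutoffs quoted above."""
    return (
        cfi > CUTOFFS["cfi"]
        and nnfi > CUTOFFS["nnfi"]
        and rmsea_value < CUTOFFS["rmsea"]
        and srmr < CUTOFFS["srmr"]
    )


# Model 2.1 in Table 1: chi-square = 485.6, df = 154, N = 1,012.
model_2_1_rmsea = rmsea(485.6, 154, 1012)
print(round(model_2_1_rmsea, 3))
print(meets_cutoffs(cfi=0.978, nnfi=0.968, rmsea_value=model_2_1_rmsea, srmr=0.028))
```

Under these assumptions, the sketch gives an RMSEA of about 0.046 for Model 2.1, in line with the value reported in Table 1.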

Step 1: Test the original STAM

Many studies suggested that internal consistency and theoretic coherency of the scale should be demonstrated before item reduction (Dekker et al., 2011; Goetz et al., 2013). Hence, the existing STAM was examined using confirmative factor analysis to provide a baseline measurement model for subsequent comparison with any revised models. Reliability analysis was also performed to assess internal consistency. The original STAM contains 11 factors/subscales measured by 38 items.

Step 2: Remove items to maximize internal consistency

Raubenheimer (2004) proposed a sequential item selection strategy in multidimensional Likert-type measures, which starts off with internal consistency analyses to maximize internal consistency followed by convergent and discriminant validity analysis. This strategy was most applicable when subscales have already been formed through the scale development process. The approach of maximizing Cronbach’s alpha first has been used previously for instrument shortening (Erhart et al., 2010; Garnefski & Kraaij, 2006; Raubenheimer, 2004; Sexton et al., 2014).

To maximize internal consistency, Cronbach’s alpha coefficient and corrected item-total correlation were used as criteria for item reduction in Step 2. The 11 constructs in the original STAM were left intact, while the number of items in each factor was reduced to 2. Items with the highest “alpha if item deleted” were discarded, one by one, based on the result of reliability analysis. The reliability analysis was then repeated by removing the least reliable item until there were only two items in the subscale. In this step, the original 38-item STAM would be reduced to 22 items.
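The Step 2 procedure can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the SPSS procedure used in the study: the helper names `cronbach_alpha` and `reduce_subscale`, the item labels X1 to X4, and the toy scores are all hypothetical. At each pass, the item with the highest “alpha if item deleted” is dropped until two items remain.

```python
from statistics import variance


def cronbach_alpha(columns):
    """Cronbach's alpha for a list of item columns (one list of scores per item)."""
    k = len(columns)
    totals = [sum(scores) for scores in zip(*columns)]
    item_variance_sum = sum(variance(col) for col in columns)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


def reduce_subscale(items, keep=2):
    """Drop, one at a time, the item whose removal yields the highest alpha."""
    remaining = dict(items)  # item name -> column of respondent scores
    while len(remaining) > keep:
        alpha_if_deleted = {
            name: cronbach_alpha(
                [col for other, col in remaining.items() if other != name]
            )
            for name in remaining
        }
        least_reliable = max(alpha_if_deleted, key=alpha_if_deleted.get)
        del remaining[least_reliable]
    return list(remaining), cronbach_alpha(list(remaining.values()))


# Hypothetical four-item subscale answered by six respondents (10-point scale).
toy_subscale = {
    "X1": [2, 4, 6, 8, 9, 3],
    "X2": [3, 4, 7, 8, 10, 2],
    "X3": [2, 5, 6, 9, 9, 3],
    "X4": [7, 2, 9, 1, 5, 6],  # deliberately inconsistent with the others
}
kept, alpha = reduce_subscale(toy_subscale, keep=2)
print(kept, round(alpha, 3))
```

In the toy data, the inconsistent item X4 is discarded first because removing it raises alpha the most.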

The current study used a statistical approach for scale reduction by first retaining two items per factor through reliability analysis. Given that there are 11 factors identified by the original STAM, the decision to include two items per factor was made to ensure a structurally balanced measurement tool that preserves the original conceptual structure (Garnefski & Kraaij, 2006). Although some research suggested that there should be at least three items under each factor for it to be properly identified, we adopted a more parsimonious approach, with two items in each factor. The “two-indicator rule” specifies that a model with only two congeneric indicators per factor is sufficiently identified given that each factor has a significant relationship with at least one other factor (Bollen & Davis, 2009; Hair, 2010). This method has been adopted by previous studies (Boot et al., 2013; Garnefski & Kraaij, 2006; Linton, Nicholas, & MacDonald, 2011).
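A necessary (though not sufficient) condition for identification is that the model has non-negative degrees of freedom. The Python sketch below counts degrees of freedom for a two-indicator design under assumptions that are ours rather than the authors’: each item loads on exactly one factor, one loading per factor is fixed to 1, all factors covary freely, and no error covariances are estimated. The function name `cfa_degrees_of_freedom` is hypothetical.

```python
def cfa_degrees_of_freedom(n_items, n_factors):
    """Degrees of freedom for a simple-structure CFA with freely correlated factors.

    Assumptions: one marker loading per factor fixed to 1, no cross-loadings,
    and no correlated error terms.
    """
    observed_moments = n_items * (n_items + 1) // 2
    free_loadings = n_items - n_factors
    factor_variances = n_factors
    factor_covariances = n_factors * (n_factors - 1) // 2
    error_variances = n_items
    free_parameters = (
        free_loadings + factor_variances + factor_covariances + error_variances
    )
    return observed_moments - free_parameters


# 11 factors with two items each, as in the 22-item model.
print(cfa_degrees_of_freedom(n_items=22, n_factors=11))
```

Under these assumptions, the 11-factor, 22-item model has 154 degrees of freedom, which agrees with the value reported for Model 2.1 in Table 1. Models that free additional parameters would have fewer.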

Step 3: Combine subdimensions

The original STAM has 11 constructs categorized into two dimensions: one was the classical TAM constructs and the other was health and capability characteristics. To eliminate a sufficient number of items, we simplified the conceptual model by combining highly correlated constructs while ensuring optimal conceptual coverage.

Step 4: Remove subdimensions and items

Through the previous steps, the length of the scale had been reduced from 38 to 22 items, but this had not shortened the questionnaire sufficiently (that is, by removing half the items). Therefore, a construct was removed if it had previously been shown not to predict technology use by older people directly and significantly. Removing such nonsignificant constructs was intended to preserve the predictive power of the model. In addition, items with the lowest or nonsignificant loadings on any factor were removed using confirmative factor analysis, to maximize both convergent and discriminant validity (as indicated by high loadings on own factors and low loadings on other factors) (Raubenheimer, 2004).

Psychometric properties of the short STAM

Internal consistency and construct validity were used to evaluate the psychometric properties of the newly shortened scale. Reliability was considered adequate if the Cronbach’s alpha and/or composite reliability value was greater than 0.70 (Hair, 2010).

Construct validity was initially assessed by comparing correlations between constructs. Convergent validity, which reflects the correspondence between similar constructs, was assessed by factor loading estimates and average variance extracted (AVE). The rule of thumb for construct validity was that the standardized factor loading should be at least 0.50 and ideally 0.70 or higher (Bagozzi & Yi, 1988; Hair, 2010). An AVE of 0.50 or higher indicated good convergence (Fornell & Larcker, 1981; Hair, 2010).
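For readers unfamiliar with these indices, the Python sketch below shows the commonly used formulas for AVE and composite reliability computed from standardized loadings. It assumes uncorrelated error terms, so that each error variance equals 1 minus the squared loading. The function names and the three example loadings are hypothetical and are not values from this study.

```python
def average_variance_extracted(loadings):
    """Mean of the squared standardized loadings of one construct."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def composite_reliability(loadings):
    """Composite reliability from standardized loadings.

    Assumption: uncorrelated errors, so each error variance is 1 - loading**2.
    """
    squared_sum = sum(loadings) ** 2
    error_sum = sum(1 - lam ** 2 for lam in loadings)
    return squared_sum / (squared_sum + error_sum)


# Hypothetical standardized loadings for a three-item construct.
example_loadings = [0.70, 0.80, 0.90]
print(round(average_variance_extracted(example_loadings), 3))
print(round(composite_reliability(example_loadings), 3))
```

With these hypothetical loadings, both indices clear the thresholds quoted above (AVE of 0.50 or higher, composite reliability above 0.70).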

Structural equation modeling with the AMOS software was performed to examine the relationships among relevant theoretical constructs and their predictive power toward actual usage. The final dependent variable, that is, actual usage, was measured in terms of the self-reported degree of use and domains of use over the 12 months preceding the interview (Chen & Chan, 2014). A higher score indicated that the respondent was more likely to use gerontechnology.

Expert Review

Both full and brief versions of the STAM measure were sent to two experts (one academic staff working on technology acceptance and one engineer in gerontechnology) for their comments on content validity, the importance of each item for retention, and clarity of the wording.

Results

Step 1: Test the Original STAM

The corrected item-total correlations of the original 38 items and the Cronbach’s alpha of each subscale were assessed (Supplementary Table S1). Except for the constructs of gerontechnology self-efficacy and attitude to aging and life satisfaction, the Cronbach’s alpha of the other nine constructs was above 0.70. The fit indices of the measurement model of the original STAM (Model 1.1, Table 1) were within the acceptable range (RMSEA < 0.06, CFI > 0.90, NNFI > 0.90, and SRMR < 0.08). However, the chi-square value did not indicate a good fit (χ2 = 2305.4, df = 603, p < .001), which could be due to the large sample size (>1,000) (Bagozzi & Yi, 1988; Hair, 2010).

Table 1.

Model Fit Statistics at Each Step of Model Revision

| Model | Description | Chi-square | df | RMSEA | CFI | NNFI | SRMR |
|---|---|---|---|---|---|---|---|
| Step 1: test established model | | | | | | | |
| 1.1 | 11 factors, 38 items | 2,305.4 | 603 | 0.054 | 0.940 | 0.930 | 0.075 |
| Step 2: remove items | | | | | | | |
| 2.1 | 11 factors, 22 items | 485.6 | 154 | 0.046 | 0.978 | 0.968 | 0.028 |
| Step 3: combine subdimensions | | | | | | | |
| ATT + PU | | | | | | | |
| 3.1 | 10 factors, 22 items | 472.8 | 162 | 0.044 | 0.980 | 0.971 | 0.029 |
| ATT + PU, PEOU + SE | | | | | | | |
| 3.2 | 9 factors, 22 items | 554.1 | 170 | 0.047 | 0.975 | 0.966 | 0.033 |
| ATT + PU, PEOU + SE + FC | | | | | | | |
| 3.3 | 8 factors, 22 items | 591.4 | 177 | 0.048 | 0.973 | 0.965 | 0.035 |
| ATT + PU, PEOU + SE + FC, A + S + C + H | | | | | | | |
| 3.4 | 5 factors, 22 items | 708.4 | 192 | 0.052 | 0.966 | 0.960 | 0.044 |
| Step 4: remove subdimensions/items | | | | | | | |
| Model 3.4 with dimension P removed | | | | | | | |
| 3.5 | 4 factors, 20 items | 599.9 | 157 | 0.057 | 0.968 | 0.961 | 0.043 |
| Model 3.5 with items H2, A1, C5, PEOU1, ATT1, SE2 removed sequentially | | | | | | | |
| 3.6 | 4 factors, 14 items | 263.0 | 68 | 0.053 | 0.977 | 0.969 | 0.038 |

Note: df = degrees of freedom; RMSEA = root mean square error of approximation; CFI = comparative fit index; NNFI = non-normed fit index; SRMR = standardized root mean square residual; ATT = attitude toward using; PU = perceived usefulness; FC = facilitating conditions; SE = gerontechnology self-efficacy; PEOU = perceived ease of use; A = attitude towards aging; S = social relationships; C = cognitive ability; P = physical function; H = self-reported health conditions.

Step 2: Remove Items to Maximize Internal Reliability

In Step 2, we removed 16 items from six constructs, leaving each subscale with two items. Reliability analyses were performed on the original 38-item and the 22-item STAM for comparison. The Cronbach’s alpha increased in the subscales of facilitating conditions, health, social relationships, and physical health. The corrected item-total correlations were all greater than 0.40, indicating that each of the items contributed to the scale. The removal of those items with the lowest item-total correlations was associated with improved measurement model fit (Model 2.1, Table 1). All the model fit indices were within the boundaries of a good fit, and the chi-square value of Model 2.1 was greatly reduced compared with the original STAM (Model 1.1, Table 1).

Step 3: Combine Subdimensions

In Model 2.1, it was found that the correlations between several constructs were higher than 0.50 (Supplementary Table S2). Therefore, highly correlated constructs were combined so that the conceptual model could be simplified, and redundant items could be further removed. The combination of attitude toward using and PU resulted in a model with good fit (Model 3.1, Table 1) and a smaller chi-square value (χ2 = 472.8, df = 162, p < .001). Furthermore, the constructs of PEOU, self-efficacy, and then facilitating conditions were combined (Model 3.2 and Model 3.3, Table 1), which increased chi-square values, although other fit indices were within the acceptable range. With further combinations of health-relevant constructs (attitude toward aging, social relationships, cognitive ability, and general health conditions), Model 3.4 contained five factors with 22 items (χ2 = 708.4, df = 192, p < .001).

Step 4: Remove Subdimensions and Items

As the conceptual model was simplified from 11 to five factors, it was possible to remove additional items or subscales to improve model fit. The construct of physical function was removed due to its weak association with usage. The removal of the items measuring physical function resulted in four factors with 20 items and a better degree of model fit (Model 3.5). Items with the lowest factor loadings were removed further; six items (H2, A1, C5, PEOU1, ATT1, and SE2) were removed sequentially (Supplementary Table S1). The final model (Model 3.6, Table 1) displayed excellent fit to the data (χ2 = 263.0, df = 68, p < .01, RMSEA = 0.053, CFI = 0.977, NNFI = 0.969, SRMR = 0.038).

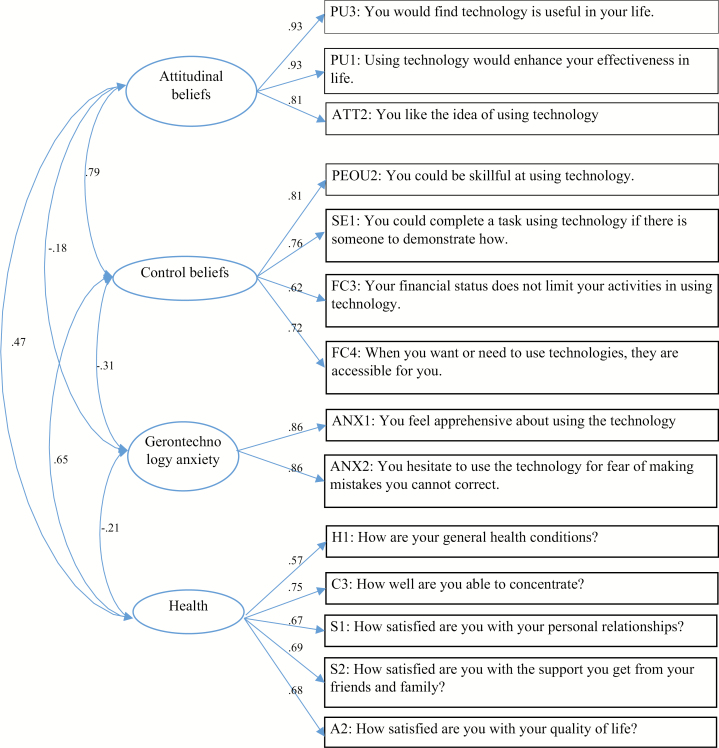

Retained items with standardized parameter estimates in the final model are shown in Figure 1. The standardized factor loadings ranged from 0.57 to 0.93, and all values exceeded 0.50 and were significant. The correlations between constructs ranged from −0.18 to 0.79.

Figure 1.

Standardized parameters in the measurement model (Model 3.6).

Psychometric Properties of the Short STAM

The final model contained 14 items and four constructs: attitudinal beliefs, control beliefs, gerontechnology anxiety, and health characteristics. Except for gerontechnology anxiety, which was left intact from the original STAM, the other three constructs were modified from the original version. The revised attitudinal beliefs construct contained two items from the previous PU and one item from the attitude toward using. Control beliefs were the combination of PEOU, self-efficacy, and two items from the original facilitating conditions. The health characteristics subscale now contained one item asking about the general health condition, one item measuring cognitive ability, two items assessing satisfaction on social relationships, and one item on satisfaction with the quality of life.

The psychometric properties of the brief version were examined using confirmative factor analysis and are presented in Table 2. Cronbach’s alpha and composite reliability for all subscales were above 0.80 in the brief version, indicating that the internal consistency was excellent. Additionally, the factor loadings of the 14-item STAM ranged from 0.57 to 0.93 (shown in Figure 1). The construct validity of the brief version of the STAM questionnaire was satisfactory, except that the AVE for Health was 0.455, below the suggested cutoff value of 0.50. However, AVE is a relatively strict and conservative measure; as suggested by Fornell and Larcker (1981), convergent validity can be considered adequate as long as composite reliability is higher than 0.60. Some researchers have also suggested that an AVE greater than 0.40 is acceptable (Huang, Wang, Wu, & Wang, 2013). Therefore, we consider that the construct validity of the short STAM was established.

Table 2.

Cronbach’s Alpha, Composite Reliability, and Average Variance Extracted of Short STAM

| Short STAM subscales | No. of items | Cronbach’s α | Composite reliability | Average variance extracted |
|---|---|---|---|---|
| Attitudinal beliefs | 3 | 0.915 | 0.921 | 0.795 |
| Control beliefs | 4 | 0.846 | 0.820 | 0.534 |
| Gerontechnology anxiety | 2 | 0.847 | 0.850 | 0.793 |
| Health | 5 | 0.817 | 0.805 | 0.455 |

Note: STAM = senior technology acceptance model.

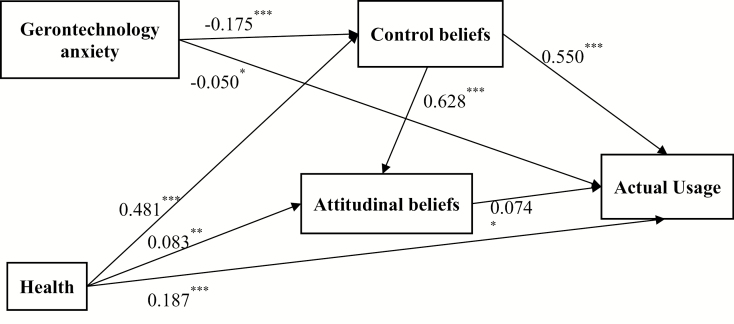

Structural equation modeling was used to test the model fit. As shown in Figure 2, the conceptual relationships in the brief version of the STAM questionnaire were also preserved. Control beliefs (path coefficient = 0.550, p < .001) and health characteristics (path coefficient = 0.187, p < .001) had stronger impacts on actual usage compared with attitudinal beliefs (path coefficient = 0.074, p < .05) and gerontechnology anxiety (path coefficient = −0.050, p < .05). The control and attitudinal beliefs also partially mediated the effects of gerontechnology anxiety and health on actual technology usage. The brief version of the STAM displayed patterns of association between constructs similar to those of the original version. The short STAM explained 81.5% of the variance in actual usage of gerontechnology.

Figure 2.

Standardized path coefficients of structural equation modeling of the short senior technology acceptance model. Significant at *p < .05; **p < .01; ***p < .001 (two-tailed).

Expert Review

The feedback from two experts supported the face validity and conceptual coverage of the brief version. Suggestions to improve the retained items included rewording (i.e., changing “life” to “daily activities”), adding statements to clarify whether the items should be answered in relation to specific or general technologies, and specifying the target older adults. The brief STAM measure is given in Supplementary Table S3.

Discussion and Implications

Given the considerable interest in the development of technology for older adults and the limited measures for assessing older people’s technology acceptance, the present study was conducted to develop and evaluate a shortened measure of gerontechnology acceptance. We created a brief version of the STAM measure with 14 items, using a sequential four-step item-reduction strategy. The brief version of the STAM questionnaire is psychometrically satisfactory with an improved model fit compared with the original version. Results of confirmative factor analysis provided strong support for the internal consistency and construct validity of the 14-item STAM. The original STAM contains 11 constructs and 38 items. The brief STAM simplified the original conceptual model and shortened the questionnaire by more than 60% (from 38 to 14 items), resulting in four constructs and 14 items. This proportion of item reduction is in line with previous studies summarized in a meta-analysis, which reported a median proportion of 57% (Goetz et al., 2013).

The four constructs identified by the current study are consistent with those reported by previous studies examining predictors of technology acceptance by older people (Berkowsky et al., 2018; Cimperman, Makovec Brenčič, & Trkman, 2016; Vaziri et al., 2019). The proposed constructs of attitudinal beliefs, control beliefs, and anxiety are largely in line with those of traditional TAMs (Cimperman et al., 2016; Compeau & Higgins, 1995); and health conditions were strong predictors of technology acceptance in the population of older adults (Berkowsky et al., 2018; Vaziri et al., 2019). At least one item from each original STAM construct, except physical function (which was removed in Step 4), has been retained in the brief version. The two experts agreed that the items showed face validity and were relevant to technology acceptance by older adults.

The short STAM measure has several desirable properties compared with previous technology acceptance measures. First, it is relatively short and easy to administer on paper or online, which reduces respondent burden. Second, the parsimonious theoretical model can explain up to 81.5% of the variance in actual usage, which is higher than the original STAM and other classical TAMs. Third, it has good conceptual coverage, including the traditional technology acceptance constructs (attitudinal beliefs, control beliefs, and gerontechnology anxiety) as well as items related to the physical, social, and psychological health of older adults. We have renamed two constructs (attitudinal beliefs and control beliefs) to be consistent with prior research and to reflect what we believe is the nature of each construct.

Finally, the current study used a sequential item-reduction strategy that considered both the original theoretical model and psychometric properties during item selection. The theoretical importance of factor combination and item elimination is indicated by several lines of research (Davis & Venkatesh, 1996; Goetz et al., 2013; Smith et al., 2000). In the current study, we found that attitude toward using and PU could be combined into an overall attitudinal beliefs construct, which contained two items on cognitively instrumental appraisal and one item on affective response. For older adults, both cognitive and affective beliefs are believed to be important for technology usage. This combination appears to parallel previous theoretical foundations and empirical findings. PU and attitude toward using are highly correlated, as indicated by meta-analyses (Ma & Liu, 2004; Schepers & Wetzels, 2007; Zhao, Ni, & Zhou, 2018). Items measuring these two constructs were previously found to cross-load in factor analyses (Puri et al., 2017; Wixom & Todd, 2005). Although some theories [such as the theory of reasoned action developed by Fishbein and Ajzen (1975)] distinguish these two constructs and argue that PU influences usage behavior only indirectly, through its influence on attitude toward using, others (like the TAM2 and the unified theory of acceptance and use of technology) posit a direct effect of PU on behavior over and above attitude toward using (Davis, 1989; Magsamen-Conrad, Upadhyaya, Joa, & Dowd, 2015). The effect of attitudinal beliefs on usage also supports the general motivation theory as an explanation for behavior (Vallerand, 1997).

Control beliefs in the 14-item STAM combine PEOU, self-efficacy, and facilitating conditions from the original STAM. In terms of content, the four items of control beliefs examine internal abilities (skillfulness and self-efficacy) and external controls in the environment (financial resources and accessibility). There are similarities in conceptual definition and measurement items between control beliefs (in the short STAM) and perceived behavioral control as identified by two other theories commonly used in technology acceptance studies: the decomposed theory of planned behavior developed by Taylor and Todd (1995) and the model of adoption of technology in households proposed by Brown and Venkatesh (2005). Perceived behavioral control in the two theories was conceptualized as an individual’s perception of internal and external constraints and encompasses ease of use, self-efficacy, and resource-facilitating conditions, which provided the conceptual basis for combining constructs in the current study. The effect of control beliefs on actual usage was mediated by attitudinal beliefs, which was also consistent with existing studies (Compeau & Higgins, 1995; Davis & Wiedenbeck, 2001).

The original STAM extended previous theoretical models by integrating aging-related psychosocial and physical characteristics measured by 22 items and encompassing five constructs: self-reported health conditions, cognitive abilities, social relationships, attitude to aging and life satisfaction, and physical functioning. To reduce the model by half and achieve parsimony, the short STAM excluded the construct of physical function and combined the other four constructs into one health construct. Physical function was removed based on complementary findings that it does not predict usage behavior significantly more than other dimensions such as cognition and social relationships (Chou, Chang, Lee, Chou, & Mills, 2013; Petrovčič et al., 2019). The final health construct contains the five items with the highest loadings. An examination of the five items suggested that they represent the underpinning of the constructs (at least one item was kept from each of the four domains of health) and established preliminary content validity. The composite reliability of the subscale is above 0.60, indicating adequate convergent validity of the subscale. This approach of retaining the highest loading items is consistent with recommendations in the psychometric literature; however, it might threaten content validity by narrowing domain coverage and eliminating facets of the constructs (Venkatesh et al., 2003). The AVE of the scale was below the Fornell–Larcker criterion of 0.50, indicating that the five items share insufficient variance to converge on a single construct; that is, there is more error variance than explained variance. Although we could improve the AVE by simply removing the items with the lowest factor loadings, we retained all five items to preserve the underlying conceptual coverage. This decision respects the conceptual model as well as empirical evidence suggesting a significant effect of self-reported cognition, physical health, and quality of life on actual technology usage among older people (Best, Souders, Charness, Mitzner, & Rogers, 2015; Hedman, Kottorp, & Nygård, 2018; Holthe, Halvorsrud, Karterud, Hoel, & Lund, 2018; Mitzner, Sanford, & Rogers, 2018).
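
How a subscale can pass the composite reliability threshold of 0.60 while falling below the AVE criterion of 0.50 can be seen in a small worked example. The sketch below uses the standard formulas for standardized loadings (composite reliability as the squared sum of loadings over that quantity plus the summed error variances, and AVE as the mean squared loading). The function names and the five loadings are hypothetical illustrations, not the estimates reported in this study.

```python
from typing import Sequence


def composite_reliability(loadings: Sequence[float]) -> float:
    """Composite reliability from standardized loadings.

    CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances),
    where each error variance is 1 - loading^2.
    """
    total = sum(loadings)
    error = sum(1.0 - l * l for l in loadings)
    return total * total / (total * total + error)


def average_variance_extracted(loadings: Sequence[float]) -> float:
    """AVE is the mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


# Hypothetical loadings for a five-item subscale (assumed values, not study data).
hypothetical_loadings = [0.70, 0.65, 0.60, 0.55, 0.50]

cr = composite_reliability(hypothetical_loadings)
ave = average_variance_extracted(hypothetical_loadings)

print(f"CR  = {cr:.3f} (threshold 0.60)")
print(f"AVE = {ave:.3f} (threshold 0.50)")
```

With these assumed loadings, composite reliability clears 0.60 even though AVE stays under 0.50, because composite reliability grows with the number of items while AVE depends only on the average squared loading.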

The theoretical relationships among the short STAM constructs are similar to those described by the authors of the original model. Through the “maximized reliability then validity strategy,” the theoretical model is largely maintained after the removal of several items. The shortened version of the STAM proves to be structurally balanced, reliable, and valid in the evaluation of technology acceptance by older people. The 14-item version of the STAM allows technology acceptance to be measured in a short amount of time. It can be used as a tool for gerontechnology usability evaluation or prototype testing to assess older users’ acceptance of technology and its effective usage.
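
The reliability-first part of such a strategy can be illustrated with a generic sketch. The code below is not the procedure used in this study, which also weighed the theoretical model and validity evidence; it shows only one simple variant, a greedy loop that repeatedly drops the item whose removal yields the highest Cronbach’s alpha. The function names, item labels, and response data are all hypothetical.

```python
from statistics import variance
from typing import Dict, List


def cronbach_alpha(items: Dict[str, List[float]]) -> float:
    """Cronbach's alpha for a mapping of item name -> respondent scores."""
    k = len(items)
    columns = list(items.values())
    # Total score per respondent across all items.
    totals = [sum(scores) for scores in zip(*columns)]
    item_variance_sum = sum(variance(col) for col in columns)
    return k / (k - 1) * (1.0 - item_variance_sum / variance(totals))


def reduce_items(items: Dict[str, List[float]], min_items: int) -> Dict[str, List[float]]:
    """Greedily drop items until only min_items remain.

    At each step, remove the item whose deletion gives the highest alpha.
    """
    kept = dict(items)
    while len(kept) > min_items:
        alpha_without = {
            name: cronbach_alpha({n: s for n, s in kept.items() if n != name})
            for name in kept
        }
        drop = max(alpha_without, key=alpha_without.get)
        del kept[drop]
    return kept


# Hypothetical responses from six people to four items; q4 is mostly noise.
responses = {
    "q1": [1, 2, 3, 4, 5, 6],
    "q2": [2, 2, 3, 4, 5, 5],
    "q3": [1, 3, 3, 4, 4, 6],
    "q4": [4, 1, 5, 2, 6, 3],
}

kept = reduce_items(responses, min_items=3)
print("Retained items:", list(kept))
print(f"Alpha before: {cronbach_alpha(responses):.3f}")
print(f"Alpha after:  {cronbach_alpha(kept):.3f}")
```

A purely statistical loop like this can discard items that matter conceptually, which is why a reduction strategy would normally pair it with validity checks and expert review.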

There are several limitations to the current study that should be noted. First, the brief version of the STAM was extracted from the full questionnaire, and its psychometric properties were tested using only the original data set. Although the brief version fits the original data set, it must be cross-validated with completely independent samples of older people. An additional data set needs to be collected and used to validate the psychometric properties of the short STAM. Specifically, the brief 14-item scale should be administered independently, without making use of the full version. Second, alternative measures or questionnaires may be useful for establishing discriminant and convergent validity. Although few measures are available for studying the external validity of the STAM, the computer proficiency questionnaire (Boot et al., 2013) and the technology experience questionnaire (Czaja et al., 2006) could be used. Third, the STAM measure was validated using an older Hong Kong population that was cognitively intact. The variance explained by the model differed when the STAM was used in different cultural settings (Lim et al., 2015; Parida et al., 2016; Petrovčič et al., 2019). This suggests that the model may perform differently across populations, such that some factors may be more important in one culture than in others. New validation data also need to be collected and contrasted across other countries and/or cultural settings to see whether the findings generalize to new settings. Further study may also need to explore whether cognitively impaired older people are capable of answering the questions. Lastly, the STAM is based on self-reported measures. Self-reported scales are cost-effective because their collection takes less time than performance-based measures. However, they are prone to reporting bias. Although there is a moderate correlation between self-reported and performance-based cognitive ability (Freund & Kasten, 2012), evidence also shows that misreporting of cognitive health increases with age (Spitzer & Weber, 2019) and decreases with prior administration of a cognitive assessment battery (Freund & Kasten, 2012). Other performance-based assessments could be used to investigate their relationships with self-reported measures.

It is important to recognize that, generally speaking, overall validity may suffer from a reduction of items, and a scale containing a larger number of items might provide more information (Linton et al., 2011). However, findings from the current study provide strong support for the reliability and validity of both the original STAM and the 14-item STAM as measures of gerontechnology acceptance. The full version of the STAM questionnaire should be administered if time allows and the response burden for participants is not excessive. We recommend that the 14-item STAM questionnaire be used in self-report research when time is limited or when older respondents show impatience. It could also be included in large-scale surveys of gerontechnology acceptance or used as a tool to rapidly assess product usability.

Funding

None reported.

Conflict of Interest

The authors declare no conflicts of interest.

Supplementary Material

References

- Bagozzi R. P., & Yi Y (1988). On the evaluation of structural equation models. Journal of the Academy of Marketing Science, 16, 74–94. doi: 10.1007/bf02723327

- Bandura A. (2001). Social cognitive theory of mass communication. Media Psychology, 3, 265–299. doi: 10.1207/S1532785XMEP0303_03

- Beaton D. E., Wright J. G., & Katz J. N (2005). Development of the quickdash: Comparison of three item-reduction approaches. The Journal of Bone and Joint Surgery, 87, 1038–1046. doi: 10.2106/jbjs.d.02060

- Berkowsky R. W., Sharit J., & Czaja S. J (2018). Factors predicting decisions about technology adoption among older adults. Innovation in Aging, 1(3), 1–12. doi: 10.1093/geroni/igy002

- Best R., Souders D. J., Charness N., Mitzner T. L., & Rogers W. A (2015). The role of health status in older adults’ perceptions of the usefulness of Ehealth technology. Human Aspects of IT for the Aged Population: Design for Aging: First International Conference, ITAP 2015, held as part of HCI International 2015, Los Angeles, CA, USA, August 2–7, 2015. Proceedings. Part I. ITAP (Conference) (1st: 20... 9194), 3–14. doi: 10.1007/978-3-319-20913-5_1

- Bollen K. A., & Davis W. R (2009). Two rules of identification for structural equation models. Structural Equation Modeling, 16, 523–536. doi: 10.1080/10705510903008261

- Boot W. R., Charness N., Czaja S. J., Sharit J., Rogers W. A., Fisk A. D.,...Nair S (2013). Computer proficiency questionnaire: Assessing low and high computer proficient seniors. The Gerontologist, 55, 404–411. doi: 10.1093/geront/gnt117

- Brown S. A., & Venkatesh V (2005). Model of adoption of technology in households: A baseline model test and extension incorporating household life cycle. MIS Quarterly, 29(3), 399–426. doi: 10.2307/25148690

- Castle N. G., & Engberg J (2004). Response formats and satisfaction surveys for elders. The Gerontologist, 44, 358–367. doi: 10.1093/geront/44.3.358

- Chen K., & Chan A. H (2013). Use or non-use of gerontechnology—A qualitative study. International Journal of Environmental Research and Public Health, 10, 4645–4666. doi: 10.3390/ijerph10104645

- Chen K., & Chan A. H. S (2014). Gerontechnology acceptance by elderly Hong Kong Chinese: A senior technology acceptance model (STAM). Ergonomics, 57, 635–652. doi: 10.1080/00140139.2014.895855

- Cheng S. T., Chen P. P., Chow Y. F., Chung J. W. Y., Law A. C. B., Lee J. S. W.,...Tam C. W. C (2019). Developing a short multidimensional measure of pain self-efficacy: The chronic pain self-efficacy scale-short form. The Gerontologist, 31, 335–342. doi: 10.1093/geront/gnz041

- Chou C. C., Chang C. P., Lee T. T., Chou H., & Mills M. E (2013). Technology acceptance and quality of life of the elderly in a telecare program. CIN: Computers, Informatics, Nursing, 31, 335–342. doi: 10.1097/NXN.0b013e318295e5ce

- Cimperman M., Makovec Brenčič M., & Trkman P (2016). Analyzing older users’ home telehealth services acceptance behavior—Applying an extended UTAUT model. International Journal of Medical Informatics, 90, 22–31. doi: 10.1016/j.ijmedinf.2016.03.002

- Compeau D. R., & Higgins C. A (1995). Computer self-efficacy: Development of a measure and initial test. MIS Quarterly, 19, 189–211. doi: 10.2307/249688

- Czaja S. J., Charness N., Dijkstra K., Fisk A. D., Rogers W. A., & Sharit J (2006). Computer and Technology Experience Questionnaire, Create Technical Report Create-2006-03. Retrieved from http://create-center.gatech.edu/publications_db/report%203%20ver1.3.pdf

- Davis F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319–339. doi: 10.2307/249008

- Davis F. D., Bagozzi R. P., & Warshaw P. R (1989). User acceptance of computer technology: A comparison of two theoretical models. Management Science, 35, 982–1003. doi: 10.1287/mnsc.35.8.982

- Davis F. D., & Venkatesh V (1996). A critical assessment of potential measurement biases in the technology acceptance model: Three experiments. International Journal of Human–Computer Studies, 45(1), 19–45. doi: 10.1006/ijhc.1996.0040

- Davis S., & Wiedenbeck S (2001). The mediating effects of intrinsic motivation, ease of use and usefulness perceptions on performance in first-time and subsequent computer users. Interacting With Computers, 13, 549–580. doi: 10.1016/S0953-5438(01)00034-0

- Dekker R. L., Lennie T. A., Hall L. A., Peden A. R., Chung M. L., & Moser D. K (2011). Developing a shortened measure of negative thinking for use in patients with heart failure. Heart & Lung, 40(3), e60–e69. doi: 10.1016/j.hrtlng.2010.11.005

- Edwards P., Roberts I., Clarke M., DiGuiseppi C., Pratap S., Wentz R., & Kwan I (2002). Increasing response rates to postal questionnaires: Systematic review. BMJ, 324(7347), 1183. doi: 10.1136/bmj.324.7347.1183

- Erhart M., Hagquist C., Auquier P., Rajmil L., Power M., & Ravens-Sieberer U.; European KIDSCREEN Group (2010). A comparison of Rasch item-fit and Cronbach’s alpha item reduction analysis for the development of a Quality of Life scale for children and adolescents. Child: Care, Health and Development, 36, 473–484. doi: 10.1111/j.1365-2214.2009.00998.x

- Fishbein M., & Ajzen I (1975). Belief, attitude, intention, and behavior: An introduction to theory and research. Reading, MA: Addison-Wesley.

- Fornell C., & Larcker D. F (1981). Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research, 18, 39–50. doi: 10.2307/3151312

- Freund P. A., & Kasten N (2012). How smart do you think you are? A meta-analysis on the validity of self-estimates of cognitive ability. Psychological Bulletin, 138, 296–321. doi: 10.1037/a0026556

- Garnefski N., & Kraaij V (2006). Cognitive emotion regulation questionnaire—Development of a short 18-item version (Cerq-Short). Personality and Individual Differences, 41, 1045–1053. doi: 10.1016/j.paid.2006.04.010

- Goetz C., Coste J., Lemetayer F., Rat A-C., Montel S., Recchia S...Guillemin F (2013). Item reduction based on rigorous methodological guidelines is necessary to maintain validity when shortening composite measurement scales. Journal of Clinical Epidemiology, 66, 710–718. doi: 10.1016/j.jclinepi.2012.12.015

- Hagtvet K. A., & Sipos K (2016). Creating short forms for construct measures: The role of exchangeable forms. Pedagogika, 66, 689–713. doi: 10.14712/23362189.2016.346

- Hair J. F. (2010). Multivariate data analysis (Vol. 7). Upper Saddle River, NJ: Prentice-Hall.

- Hedman A., Kottorp A., & Nygård L (2018). Patterns of everyday technology use and activity involvement in mild cognitive impairment: A five-year follow-up study. Aging & Mental Health, 22, 603–610. doi: 10.1080/13607863.2017.1297361

- Holthe T., Halvorsrud L., Karterud D., Hoel K., & Lund A (2018). Usability and acceptability of technology for community-dwelling older adults with mild cognitive impairment and dementia: A systematic literature review. Clinical Interventions in Aging, 13, 863–886. doi: 10.2147/CIA.S154717

- Hooper D., Coughlan J., & Mullen M (2008). Structural equation modelling: Guidelines for determining model fit. Electronic Journal of Business Research Methods, 6, 53–60. doi: 10.21427/D7CF7R

- Hu L. T., & Bentler P. M (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling, 6, 1–55. doi: 10.1080/10705519909540118

- Huang C. C., Wang Y. M., Wu T. W., & Wang P. A (2013). An empirical analysis of the antecedents and performance consequences of using the moodle platform. International Journal of Information and Education Technology, 3, 217. doi: 10.7763/IJIET.2013.V3.267

- Investor and Financial Education Council (2019). Study on money management in mature adulthood. Retrieved from https://www.ifec.org.hk/common/pdf/about_iec/ifec-mature-adults-study.pdf

- Kim K., Gollamudi S. S., & Steinhubl S (2017). Digital technology to enable aging in place. Experimental Gerontology, 88, 25–31. doi: 10.1016/j.exger.2016.11.013

- Li J., Ma Q., Chan A. H. S., & Man S. S (2019). Health monitoring through wearable technologies for older adults: Smart wearables acceptance model. Applied Ergonomics, 75, 162–169. doi: 10.1016/j.apergo.2018.10.006

- Lim W. M., Teh P. L., Ahmed P. K., Chan A. H-S., Cheong S. N., & Yap W. J. (2015). Are older adults really that different? Some insights from gerontechnology. In Chen S., & Xie M. (Eds.), 2015 IEEE International Conference on Industrial Engineering and Engineering Management (IEEM 2015) (pp. 1561–1565). IEEE, Institute of Electrical and Electronics Engineers. doi: 10.1109/IEEM.2015.7385909

- Linton S. J., Nicholas M., & MacDonald S (2011). Development of a short form of the Örebro Musculoskeletal Pain Screening Questionnaire. Spine, 36, 1891–1895. doi: 10.1097/BRS.0b013e3181f8f775

- Ma Q., Chan A. H. S., & Chen K (2016). Personal and other factors affecting acceptance of smartphone technology by older Chinese adults. Applied Ergonomics, 54, 62–71. doi: 10.1016/j.apergo.2015.11.015

- Ma Q., & Liu L (2004). The technology acceptance model: A meta-analysis of empirical findings. Journal of Organizational and End User Computing, 16, 59–72. doi: 10.4018/joeuc.2004010104

- Magsamen-Conrad K., Upadhyaya S., Joa C. Y., & Dowd J (2015). Bridging the divide: Using UTAUT to predict multigenerational tablet adoption practices. Computers in Human Behavior, 50, 186–196. doi: 10.1016/j.chb.2015.03.032

- Mitchell U. A., Chebli P. G., Ruggiero L., & Muramatsu N (2019). The digital divide in health-related technology use: The significance of race/ethnicity. The Gerontologist, 59, 6–14. doi: 10.1093/geront/gny138

- Mitzner T. L., Sanford J. A., & Rogers W. A (2018). Closing the capacity-ability gap: Using technology to support aging with disability. Innovation in Aging, 2(1), 1–8. doi: 10.1093/geroni/igy008

- Özsungur F., & Hazer O (2018). Analysis of the acceptance of communication technologies by acceptance model of the elderly: Example of Adana province. International Journal of Eurasia Social Sciences, 9, 238–275.

- Parida V., Mostaghel R., & Oghazi P (2016). Factors for elderly use of social media for health-related activities. Psychology & Marketing, 33, 1134–1141. doi: 10.1002/mar.20949

- Petrovčič A., Peek S., & Dolničar V (2019). Predictors of seniors’ interest in assistive applications on smartphones: Evidence from a population-based survey in Slovenia. International Journal of Environmental Research and Public Health, 16, 1623. doi: 10.3390/ijerph16091623

- Pruchno R. (2019). Technology and aging: An evolving partnership. The Gerontologist, 59, 1–5. doi: 10.1093/geront/gny153

- Pu L., Jones C., Todorovic M., & Moyle W (2018). The effectiveness of social robots for older adults: A systematic review and meta-analysis of randomized controlled studies. The Gerontologist, 59, e37–e51. doi: 10.1093/geront/gny046

- Puri A., Kim B., Nguyen O., Stolee P., Tung J., & Lee J (2017). User acceptance of wrist-worn activity trackers among community-dwelling older adults: Mixed method study. JMIR mHealth and uHealth, 5(11), e173–e173. doi: 10.2196/mhealth.8211

- Raubenheimer J. (2004). An item selection procedure to maximise scale reliability and validity. SA Journal of Industrial Psychology, 30(4), 59–64. doi: 10.4102/sajip.v30i4.168

- Rolstad S., Adler J., & Rydén A (2011). Response burden and questionnaire length: Is shorter better? A review and meta-analysis. Value in Health, 14, 1101–1108. doi: 10.1016/j.jval.2011.06.003

- Schepers J., & Wetzels M (2007). A meta-analysis of the technology acceptance model: Investigating subjective norm and moderation effects. Information and Management, 44, 90–103. doi: 10.1016/j.im.2006.10.007

- Sexton E., King-Kallimanis B. L., Morgan K., & McGee H (2014). Development of the brief ageing perceptions questionnaire (B-APQ): A confirmatory factor analysis approach to item reduction. BMC Geriatrics, 14, 44. doi: 10.1186/1471-2318-14-44

- Smith G. T., McCarthy D. M., & Anderson K. G (2000). On the sins of short-form development. Psychological Assessment, 12, 102–111. doi: 10.1037/1040-3590.12.1.102

- Spitzer S., & Weber D (2019). Reporting biases in self-assessed physical and cognitive health status of older Europeans. PLoS One, 14(10), e0223526. doi: 10.1371/journal.pone.0223526

- Stanton J. M., Sinar E. F., Balzer W. K., & Smith P. C (2002). Issues and strategies for reducing the length of self‐report scales. Personnel Psychology, 55, 167–194. doi: 10.1111/j.1744-6570.2002.tb00108.x

- Taylor S., & Todd P (1995). Decomposition and crossover effects in the theory of planned behavior: A study of consumer adoption intentions. International Journal of Research in Marketing, 12, 137–155. doi: 10.1016/0167-8116(94)00019-K

- Vallerand R. J. (1997). Toward a hierarchical model of intrinsic and extrinsic motivation. In Zanna M. P. (Ed.), Advances in experimental social psychology (Vol. 29, pp. 271–360). San Diego, CA: Academic Press.

- van Houwelingen C. T. M., Ettema R. G. A., Antonietti M. G. E. F., & Kort H. S. M (2018). Understanding older people’s readiness for receiving telehealth: Mixed-method study. Journal of Medical Internet Research, 20(4), e123. doi: 10.2196/jmir.8407

- Vaziri D. D., Giannouli E., Frisiello A., Kaartinen N., Wieching R., Schreiber D., & Wulf V (2019). Exploring influencing factors of technology use for active and healthy ageing support in older adults. Behaviour & Information Technology, 1–11. doi: 10.1080/0144929X.2019.1637457

- Venkatesh V., Morris M. G., Davis G. B., & Davis F. D (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27, 425–478. doi: 10.2307/30036540

- Wee H. L., Fong K. Y., Tse C., Machin D., Cheung Y. B., Luo N., & Thumboo J (2008). Optimizing the design of visual analogue scales for assessing quality of life: A semi-qualitative study among Chinese-speaking Singaporeans. Journal of Evaluation in Clinical Practice, 14, 121–125. doi: 10.1111/j.1365-2753.2007.00814.x

- Wixom B. H., & Todd P. A (2005). A theoretical integration of user satisfaction and technology acceptance. Information Systems Research, 16, 85–102. doi: 10.1287/isre.1050.0042

- Zhao Y., Ni Q., & Zhou R (2018). What factors influence the mobile health service adoption? A meta-analysis and the moderating role of age. International Journal of Information Management, 43, 342–350. doi: 10.1016/j.ijinfomgt.2017.08.006
