Abstract

Generations of researchers have tested and used attachment theory to understand children’s development. To bring coherence to the expansive set of findings from small-sample studies, the field adopted meta-analysis early on. Nevertheless, gaps in understanding the intergenerational transmission of individual differences in attachment persist. We discuss how attachment researchers have been addressing these gaps by collaboratively formulating questions and pooling data and resources for individual-participant-data meta-analyses. In this collaborative model, sharing hard-won and valuable data goes hand in hand with interacting directly and intensively with a large community of researchers in the initiation phase of research, deliberating on and critically reviewing new hypotheses, and providing access to a large, carefully curated pool of data for testing these hypotheses. Challenges in pooling data are also discussed.

Keywords: attachment, individual-participant data, meta-analysis

Attachment is seen both in a child’s protest and proximity-seeking behavior when he or she is distressed or involuntarily separated from a primary caregiver and in the child’s confident exploration of novelty when he or she feels safe in the caregiver’s presence (Tottenham, Shapiro, Flannery, Caldera, & Sullivan, 2019). Theoretically, secure attachment relationships develop when caregivers are sensitively responsive to the signals and needs of their child, whereas insecure attachment relationships may develop when caregivers ignore their child’s signals or respond to them only intermittently. Accordingly, research on attachment has examined predictors and outcomes of both secure and insecure attachment relationships. To synthesize the empirical evidence on these associations, attachment researchers were early adopters of meta-analytic methodology (e.g., Goldsmith & Alansky, 1987; van IJzendoorn & Kroonenberg, 1988).

In 1985, Main, Kaplan, and Cassidy proposed that caregivers’ own mental representations regarding attachment, classified as autonomous (secure), dismissing, preoccupied, or unresolved on the basis of their responses to the Adult Attachment Interview, predict the quality of children’s attachment relationships via the sensitivity of caregivers’ responses to their children. Intergenerational transmission matters for developmental and clinical psychology, as well as for developmental psychopathology, because of what it can tell us about caregivers’ contributions to their children’s social functioning and mental health and about the factors that interrupt this contribution. In 1995, van IJzendoorn published a meta-analysis of 18 studies examining the intergenerational transmission of attachment and found an effect size of a strength rarely observed in psychological science (r = .47, d = 1.06; N = 854), which increased the research community’s confidence in Main et al.’s Adult Attachment Interview as a strong predictor of individual differences in infant–caregiver attachment. However, van IJzendoorn’s meta-analysis also showed that Main et al.’s model, in which caregiver sensitivity carries the transmission, could only partly account for how transmission came about. This finding became known as the “transmission gap” and led to numerous theoretical and empirical efforts to understand and bridge it (van IJzendoorn & Bakermans-Kranenburg, 2019). Over the years, however, some studies, including ones with relatively large samples, reported null findings for intergenerational transmission (e.g., Chin, 2013; Dickstein, Seifer, & Albus, 2009). These findings cast doubt on the replicability of intergenerational transmission and prompted a new meta-analysis more than two decades later. With more than four times as many samples available (i.e., 83 samples), Verhage et al. (2016) reported a considerably lower, though still moderately large, effect size (r = .31, d = 0.65; N = 4,102). Effect sizes also showed significant and unexplained heterogeneity across studies, which prompted an effort to conduct an individual-participant-data (IPD) meta-analysis (Riley, Lambert, & Abo-Zaid, 2010) for testing more complex models.
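The two pairs of effect sizes reported above are linked by the conventional conversion between a correlation and a standardized mean difference, d = 2r / √(1 − r²). The short Python sketch below assumes this equal-groups formula (the original meta-analyses may have converted their effect sizes differently) and checks that the reported values are mutually consistent.

```python
import math


def r_to_d(r: float) -> float:
    """Convert a correlation r to Cohen's d with d = 2r / sqrt(1 - r^2).

    Assumes the standard equal-group-size conversion.
    """
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    return 2.0 * r / math.sqrt(1.0 - r * r)


def d_to_r(d: float) -> float:
    """Inverse conversion, r = d / sqrt(d^2 + 4), same equal-groups assumption."""
    return d / math.sqrt(d * d + 4.0)


# Correlations reported for the 1995 and 2016 meta-analyses.
for label, r in [("van IJzendoorn (1995)", 0.47), ("Verhage et al. (2016)", 0.31)]:
    print(f"{label}: r = {r:.2f} -> d = {r_to_d(r):.2f}")
```

Run as is, it prints d = 1.06 and d = 0.65, matching the values given in the text.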

The purpose of the current article is to discuss how attachment researchers have turned to IPD to overcome the limitations of single studies with small sample sizes and traditional aggregate-data meta-analysis. Some hurdles on the road to creating IPD data sets, and their potential solutions, will also be discussed.

The Promise of Data Pooling

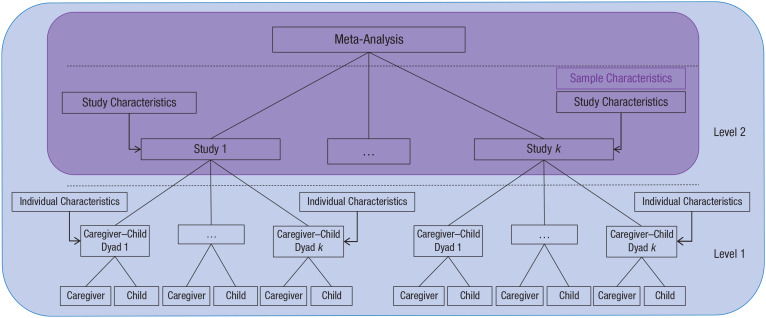

IPD meta-analysis has long been a gold-standard method of meta-analysis in the biomedical sciences (Tierney, Stewart, & Clarke, 2019), but it has only recently found its way into psychology (Roisman & van IJzendoorn, 2018). IPD meta-analysis involves obtaining, harmonizing, and synthesizing the raw data of individual participants in studies addressing common research questions (Riley et al., 2010). Whereas traditional meta-analysis combines study-level aggregate data, IPD meta-analysis thus adds participant-level data to the analyses (see Fig. 1).

Fig. 1.

Schematic overview of traditional meta-analysis (purple) and individual-participant-data meta-analysis (blue).
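To make the contrast in Fig. 1 concrete, the Python sketch below illustrates one common way of analyzing IPD, the two-stage approach described by Riley et al. (2010). The transmission correlation is first computed from the raw dyad-level scores within each study and then pooled across studies with inverse-variance weights on Fisher’s z scale. The tuple layout, the function names, and the fixed-effect pooling are assumptions made for illustration; this is not the CATS analysis pipeline.

```python
import math
from collections import defaultdict


def pearson_r(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def two_stage_pooled_r(dyads):
    """dyads: iterable of (study_id, parent_score, child_score) tuples (hypothetical layout).

    Stage 1: compute r within each study from its raw dyads.
    Stage 2: pool the Fisher-z values with fixed-effect weights n - 3.
    Returns (pooled_r, per_study), where per_study maps study_id -> (r, n).
    """
    by_study = defaultdict(lambda: ([], []))
    for study, parent, child in dyads:
        by_study[study][0].append(parent)
        by_study[study][1].append(child)

    per_study = {}
    num = den = 0.0
    for study, (x, y) in by_study.items():
        n = len(x)
        if n < 4:  # weight n - 3 must be positive
            continue
        r = pearson_r(x, y)
        per_study[study] = (r, n)
        num += (n - 3) * math.atanh(r)
        den += n - 3
    if den == 0:
        raise ValueError("no study has at least 4 dyads")
    return math.tanh(num / den), per_study
```

With real data showing the kind of heterogeneity described above, a random-effects model at the second stage would usually be more defensible than the fixed-effect weights used here.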

The method of IPD meta-analysis is precisely what was needed in attachment research, as the field had hit the saturation stage foreseen by van IJzendoorn and Tavecchio in 1987. In this stage, many of the major questions seemed to have been settled and, despite countless but fragmented efforts, progress was slow in resolving the remaining gaps. Combining data from primary studies, whether large or small, capitalized on the benefits of this saturation stage and offered exciting prospects for the renewal of the attachment-research paradigm (Duschinsky, 2020).

We started the Collaboration on Attachment Transmission Synthesis (CATS) both to overcome stagnation in understanding the intergenerational transmission of attachment and to test the feasibility of IPD meta-analysis for our field. On the basis of discussions, the participating investigators drafted a protocol (see https://osf.io/9p3n4/) aimed at advancing insight into the mechanisms underlying intergenerational transmission of attachment. All authors of the studies identified in the Verhage et al. (2016) meta-analysis were invited to participate in this project. This led to a data set of 59 samples with 4,498 parent–child dyads, and new samples continue to be added.

Advantages of and Approaches to IPD Meta-Analyses for Attachment Research

The main advantage of a pooled set of raw data over a meta-analysis of aggregate data is the increase in power and degrees of freedom (Riley et al., 2010), which allows increasingly complex models and auxiliary hypotheses to be tested. In attachment research, labor-intensive data-collection methods constrain sample sizes, so few studies are adequately powered (Stanley, Carter, & Doucouliagos, 2018). In the 2016 meta-analysis, only 18% (15/83) of the studies reached the 82 parent–child dyads required for .80 power to detect secure–insecure attachment transmission (Verhage et al., 2016). Testing more complex models, such as those addressing moderators or mediators of attachment transmission, requires much larger samples to yield replicable conclusions. The CATS data set makes this venture possible.
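The sample-size threshold mentioned above comes from a power analysis. The Python sketch below shows the logic with the Fisher-z approximation for a two-tailed test of a correlation (α = .05). The approximation and the example effect sizes are our assumptions, so its output need not reproduce the 82 dyads reported by Verhage et al. (2016), which may rest on a different effect size or an exact test.

```python
import math
from statistics import NormalDist


def required_n(r: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate N needed to detect correlation r with a two-tailed test.

    Uses Fisher's z approximation: N = ((z_{1-alpha/2} + z_{power}) / atanh(r))^2 + 3.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(((z_alpha + z_power) / math.atanh(r)) ** 2 + 3)


def approx_power(r: float, n: int, alpha: float = 0.05) -> float:
    """Approximate power to detect correlation r with n dyads.

    Ignores the negligible chance of rejecting in the wrong direction.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    return z.cdf(math.atanh(r) * math.sqrt(n - 3) - z_alpha)


if __name__ == "__main__":
    for r in (0.31, 0.20, 0.10):
        print(f"r = {r:.2f}: about {required_n(r)} dyads for .80 power")
```

Because the required N scales with 1 / atanh(r)², halving the targeted effect roughly quadruples the sample needed. This is why moderation and mediation effects, which are typically smaller than the main transmission effect, call for pooled data.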

Our first study on ecological factors that might affect intergenerational transmission of attachment is an example of moderator testing that would not have been possible without IPD (Verhage et al., 2018). In this study, we examined, for example, whether attachment transmission differed by age of the child. The preceding meta-analysis of aggregate data had looked into this issue as well, but given that attachment was measured with different instruments in studies with younger children versus older children, there was no way of separating the effects of age from the effects of using a different instrument (Verhage et al., 2016). In the IPD meta-analysis, we controlled for the type of instrument and found that the transmission effect was stronger for older children than for younger children (Verhage et al., 2018). This finding provides support for the theoretical notion that cumulative experiences with parents lead to more stable, ingrained attachment patterns and helps to recalibrate expected intergenerational transmission effect sizes. Several other manuscripts are currently under way. These report, for example, on patterns of nontransmission (e.g., from secure to insecure classifications or between different types of insecure classifications) using pooled data, a procedure that is necessary because of low base rates for these transmission patterns (Madigan et al., 2020), and on a moderated mediation model of attachment transmission explaining why the transmission gap could not be solved with additional mediators (Verhage et al., 2019).
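The logic of separating child age from the measurement instrument can be illustrated with a deliberately simplified Python sketch. Transmission correlations are computed separately within each instrument for younger and older children, so age is compared only among children assessed with the same instrument. The record fields (`instrument`, `older`, `parent`, `child`) and the stratified comparison are hypothetical simplifications; the published analysis (Verhage et al., 2018) used its own statistical models, which this sketch does not reproduce.

```python
import math
from collections import defaultdict


def pearson_r(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def age_difference_within_instrument(records):
    """records: iterable of dicts with hypothetical keys 'instrument',
    'older' (bool), 'parent' and 'child' (numeric attachment scores).

    Returns {instrument: r_older - r_younger} for every instrument that has
    data in both age groups, so age is never confounded with instrument.
    """
    cells = defaultdict(lambda: ([], []))
    for rec in records:
        key = (rec["instrument"], rec["older"])
        cells[key][0].append(rec["parent"])
        cells[key][1].append(rec["child"])

    result = {}
    for instrument in {inst for inst, _ in cells}:
        # Membership tests on a defaultdict do not create new entries.
        if (instrument, True) in cells and (instrument, False) in cells:
            r_old = pearson_r(*cells[(instrument, True)])
            r_young = pearson_r(*cells[(instrument, False)])
            result[instrument] = r_old - r_young
    return result
```

A positive difference for an instrument means stronger transmission among older children assessed with that same instrument. This is the kind of comparison that study-level aggregates could not make when age and instrument coincided across studies.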

Empirical findings may also be made more robust as a guide for theory development by controlling “researcher degrees of freedom,” that is, the many choices a researcher makes during the research process (Simmons, Nelson, & Simonsohn, 2011, p. 1359). All choices made during study design, data collection, data analysis, and reporting may affect study outcomes and hence theory development on a given topic. In attachment research, historical reasons explain why attachment variables can be parsed in a variety of ways. Originally, only three categories of parent–child attachment were identified (secure, avoidant, and resistant; Ainsworth, Blehar, Waters, & Wall, 1978), but later, Main and Solomon (1990) identified insecure disorganized attachment. From then on, researchers could parse their attachment variables as a secure/insecure dichotomy, an organized/disorganized dichotomy, three- or four-way categorical variables, or combinations of categories and a rating scale, a set of options that was also mirrored in the variables for adult attachment representations as assessed with the Adult Attachment Interview (Main et al., 1985). In all, 38 different ways of examining the intergenerational transmission of attachment have been described in the literature (Schuengel et al., 2019). The absence of substantive or statistical reasons for choosing one variant over another may indicate underspecification in the theoretical model, which makes it harder to design tests that could expose the theory’s flaws and thus undermines the theory’s credibility. The meta-analytic finding that effect sizes for unpublished data were lower than those for published data, even within the same studies, also hints at selective reporting of significant findings (Verhage et al., 2016).

Secondary analyses are just as vulnerable to p hacking as primary studies (Weston, Ritchie, Rohrer, & Przybylski, 2019), and because their seemingly high power is not corrected for the many exploratory analyses run on the same data sets, their results may be even more misleading. Paradoxically, controlling access to a data source that can be used to answer a plethora of questions may increase transparency compared with unrestricted open access. Registering hypotheses and analysis plans for IPD meta-analyses of pooled data helps to control researcher degrees of freedom and false-discovery rates. Transparency of the analytic process is vital to ensure replicability of the results, and it is facilitated by a consortium of researchers monitoring the workflow from research question, theorizing, and hypothesizing to data collection and analysis planning. In CATS, study hypotheses and exploratory questions are included in the protocol that accompanies the invitation to the study authors and are posted on our OSF page (see https://osf.io/9p3n4/). Furthermore, manuscript proposals are circulated across the entire CATS group as an internal registration when new studies are started, which helps to prevent cherry-picking results for publication. Finally, for each manuscript, we conduct sensitivity analyses (Verhage et al., 2018) or multiverse analyses (Steegen, Tuerlinckx, Gelman, & Vanpaemel, 2016) to account for alternative ways of examining the data. The recently published registration format for secondary analysis (see https://osf.io/x4gzt/) will facilitate public registration of future IPD meta-analyses.
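A multiverse analysis (Steegen et al., 2016) makes parsing choices explicit by running the same analysis under every defensible specification and reporting all results. The minimal Python sketch below crosses two hypothetical dichotomous codings of the Adult Attachment Interview classifications (F = autonomous, Ds = dismissing, E = preoccupied, U = unresolved) with two codings of the Strange Situation classifications (B = secure, A = avoidant, C = resistant, D = disorganized) and computes a phi coefficient for each combination. The specification menu is illustrative only and covers a small fraction of the 38 variants found in the literature.

```python
import math
from itertools import product

# Hypothetical specification menu: each coding maps a classification to 0/1.
PARENT_CODINGS = {
    "autonomous": lambda aai: int(aai == "F"),
    "unresolved": lambda aai: int(aai == "U"),
}
CHILD_CODINGS = {
    "secure": lambda ssp: int(ssp == "B"),
    "disorganized": lambda ssp: int(ssp == "D"),
}


def phi(x, y):
    """Phi coefficient, i.e., the Pearson correlation of two 0/1 vectors."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")  # the coding is constant in this data set
    return sxy / math.sqrt(sxx * syy)


def multiverse(dyads):
    """dyads: list of (aai_class, ssp_class) pairs.

    Returns {(parent_coding, child_coding): phi} for every specification.
    """
    results = {}
    for (p_name, p_code), (c_name, c_code) in product(
        PARENT_CODINGS.items(), CHILD_CODINGS.items()
    ):
        x = [p_code(aai) for aai, _ in dyads]
        y = [c_code(ssp) for _, ssp in dyads]
        results[(p_name, c_name)] = phi(x, y)
    return results
```

In practice, the whole distribution of estimates across specifications is what gets reported, rather than the single specification that happens to reach significance.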

In attachment research, a categorical model of attachment was adopted early on as the most likely representation of reality, perhaps under the influence of emerging diagnostic classification systems (Duschinsky, 2020). The categorical assumption was put to the test only much later (Fraley & Spieker, 2003; Roisman, Fraley, & Belsky, 2007). Because latent structure analyses and taxometric analyses require large data sets, the smaller studies supporting dimensional measurement models have so far had limited impact on research practice. With the pooled data on parental attachment representations in the CATS data set, we were able to show that individual differences in adult attachment representations may also be consistent with a latent dimensional rather than a categorical model (Raby et al., 2019). The incremental validity of such dimensional scores is still an open question, however, and one for which the IPD approach might be perfectly suited.

Researchers could go one step further and pool raw materials such as interview transcripts or video-recorded observations, which could also benefit methodological refinement. Main and Solomon (1990) showed this very early on, when they made a case for the existence of disorganized attachment on the basis of videotapes, shared with them by researchers working with high-risk samples, that were impossible to code with the regular rating system. This project had an enormous impact on attachment research and on the use of attachment constructs in clinical practice. Thirty years later, we aim to refine attachment measurement again by sharing raw materials. A first project, on the structure of the scale for unresolved loss or trauma in the Adult Attachment Interview, has been registered (see https://osf.io/bu5cx).

The Challenges of IPD Meta-Analysis

Like any method, IPD meta-analysis comes with challenges and limitations. In this section, we describe three broad challenges for this type of research; for more practical challenges and concrete tips, see Table 1.

Table 1.

Practical Challenges to Data Pooling and How the Collaboration on Attachment Transmission Synthesis (CATS) Dealt With Them

| Challenge and recommendation | Tip |
|---|---|
| Obtaining the data | |
| Invest time and effort to validate and share (archived) data. | • Plan enough time for this stage. For CATS, it took 18 months. • Establish the meta-analysis as a collaborative group effort by researchers who contribute data. Define roles within the collaboration for which members may volunteer (group coauthor, named coauthor, lead author) and that have academic value. Establish a transparent procedure for assigning roles and for involving members in study plans and ideas (e.g., collaborator meetings, circulating paper proposals). • Make contributing as easy as possible: Inform potential collaborators of exactly which variables are needed, provide a template for the data, be flexible in data format, and offer assistance. • Provide a secure shared folder or server to share the data; data sharing through e-mail is not compliant with privacy laws. |
| Determine whether data sharing is ethically or legally allowed. | • Include institutional privacy officers from the outset in making a data-protection impact assessment and in determining the infrastructure needs and the minimal set of joint agreements for the collaboration members. • Provide information on where data are located, who controls access, and who has access and under which conditions. • Provide clear directions to collaboration members for checking the local or study-specific ethical and legal conditions under which they are allowed to share their data and for anonymizing their data. |
| Creating the overall data set | |
| Get insight into the quality of the received data. | • Check for inconsistencies with the published article, anomalies (e.g., out-of-range scores on questionnaires), and missing data. Try to resolve any issues that arise with the study authors. • Ask for data-quality indicators, such as interrater reliability and internal consistency. • Exclude data that do not meet a priori standards. |
| Securing access to and analysis of the data | |
| Set up a secure and accessible storage facility. | • Determine whether the data need to be accessible to researchers (data analysts) outside the organization. If so, consider building a data commons with secure remote access. If not, store the data in the university’s secure storage facility. • Place only the final files that need to be accessed by researchers outside the organization on the remotely accessible server. Anonymize the origin of the data sets. Store data files received from primary study authors or files used for data cleaning on a separate secure server. • Partition the remotely accessible server so that researchers have access only to the parts they need. • Install the necessary analysis and processing software on the remotely accessible server so that researchers do not have to copy data to their own computers. • Have researchers with access to the data sign a data-sharing agreement. |
| Defining authorship | • Clearly define contributor roles for the project and provide transparent information about who fulfills these roles and how (e.g., using the Contributor Roles Taxonomy; www.casrai.org/credit.html). • For manuscript preparation, consider tools that allow simultaneous work on a manuscript so that authors can see and respond to each other’s feedback, comments are given in the same document, and a record of writing contributions is maintained. • Set reasonable deadlines for responding and keep them. • Map contributor roles onto authorship roles. In CATS, we have three layers of involvement with articles: Participation in drafting the manuscript leads to named authorship, sharing data and reading and approving the draft before submission leads to group authorship, and sharing data without manuscript involvement leads to a mention as a nonauthor collaborator. These types of involvement were based on the guidelines of the International Committee of Medical Journal Editors (2020). |
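The data-quality checks listed in Table 1 (out-of-range scores and missing data) lend themselves to automation. The Python sketch below flags both problems against a codebook of allowed ranges. The variable names and the 1–9 ranges are assumptions for illustration, and any flagged value would still need to be resolved with the study authors rather than silently corrected.

```python
import math

# Hypothetical codebook: variable name -> (minimum, maximum) allowed value.
CODEBOOK = {
    "sensitivity": (1, 9),
    "aai_unresolved": (1, 9),
}


def is_missing(value):
    """Treat None and float NaN as missing."""
    return value is None or (isinstance(value, float) and math.isnan(value))


def check_records(records, codebook=CODEBOOK):
    """Return (row_index, variable, problem) tuples, where problem is
    'missing' or 'out_of_range'. Rows are dicts; absent keys count as missing."""
    issues = []
    for i, row in enumerate(records):
        for var, (lo, hi) in codebook.items():
            value = row.get(var)
            if is_missing(value):
                issues.append((i, var, "missing"))
            elif not lo <= value <= hi:
                issues.append((i, var, "out_of_range"))
    return issues
```

Running such a check on every incoming file produces a list of queries that can be sent back to each study team before the file is added to the pooled data set.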

First, pooling data is useful only when the same underlying constructs are measured in enough studies, whether or not they are measured with the same instruments. Before requesting data from study authors, it is necessary to review the instruments used to measure the constructs of interest and to assess the feasibility of harmonizing different operationalizations of a construct. The attachment field proved eminently suited for IPD meta-analysis because it has honed a limited and well-calibrated set of standard instruments, such as the Strange Situation procedure and the Adult Attachment Interview. Even so, harmonizing the measures of parental sensitivity required making multiple assumptions.
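One common, though assumption-laden, way to place sensitivity scores from different instruments on a shared metric is to standardize them within each study. The Python sketch below does this. It is a hypothetical illustration rather than the harmonization procedure used in CATS, and it assumes that each study’s sample spans a comparable range of the construct, which is exactly the kind of assumption the text cautions about.

```python
from collections import defaultdict
from statistics import mean, stdev


def standardize_within_study(rows):
    """rows: list of (study_id, score) pairs (hypothetical layout).

    Returns z-scores in the same order, computed with each study's own mean
    and sample SD. Each study needs at least two distinct scores.
    """
    by_study = defaultdict(list)
    for study, score in rows:
        by_study[study].append(score)
    stats = {s: (mean(v), stdev(v)) for s, v in by_study.items()}
    return [(score - stats[s][0]) / stats[s][1] for s, score in rows]
```

The cost of this choice is that real between-study differences disappear. If one study sampled a high-risk population with lower average sensitivity, its participants would still be centered at zero after standardization.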

Second, sharing participant data is increasingly regulated under privacy-protection laws, which vary across countries. Consulting with institutional privacy officers is key when setting up a data-pooling project, both to discuss the ethical and legal basis for data sharing and to draw up data-sharing agreements. Furthermore, to ensure the privacy of participants, it is important to establish secure ways to transfer, store, and analyze anonymized data. In CATS, we have built a data commons for storage and analysis: a secured, remote-access information-technology infrastructure holding the pooled data set, syntax files, and various analysis software packages (Grossman, 2019).
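Table 1 also recommends anonymizing the origin of the data sets on the remotely accessible server. The minimal Python sketch below replaces study names with stable codes. It assumes a keyed hash (HMAC-SHA256) whose secret key is kept off the analysis server, and the `study` field name is hypothetical. This pseudonymizes study labels only. By itself it does not make participant data anonymous in a legal sense.

```python
import hashlib
import hmac
import secrets


def make_key() -> bytes:
    """Generate a random secret key; store it separately from the pooled data."""
    return secrets.token_bytes(32)


def study_code(study_name: str, key: bytes, length: int = 8) -> str:
    """Map a study name to a stable pseudonymous code via HMAC-SHA256."""
    digest = hmac.new(key, study_name.encode("utf-8"), hashlib.sha256).hexdigest()
    return "S" + digest[:length]


def pseudonymize(rows, key):
    """rows: list of dicts with a hypothetical 'study' field.

    Returns copies in which the study name is replaced by its code.
    """
    return [{**row, "study": study_code(row["study"], key)} for row in rows]
```

A keyed hash is used instead of a plain hash so that someone who knows the list of published studies cannot recompute the codes without the key.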

A final challenge is that data from all eligible studies are unlikely to be obtainable. This can occur for several reasons: authors cannot be traced, data have been destroyed, or authors claim priority to publish their own research on their arduously collected data sets before sharing the data. Fortunately, in CATS, the authors of 67% of the original studies contributed their raw data, but sharing rates are often lower (Jaspers & DeGraeuwe, 2014). It is therefore important to decide in advance what percentage of the data would be enough to proceed, to compare the aggregate data of the missing studies with the IPD of the included studies, and, whenever possible, to include those aggregate data in the analyses if they differ (Stewart et al., 2015).
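Comparing studies that did and did not contribute raw data can be sketched as a subgroup contrast on published aggregate correlations. The Python example below assumes each study is summarized by its reported r and sample size. It pools each subgroup with fixed-effect inverse-variance weights on Fisher’s z scale and tests the difference between the two pooled values. The fixed-effect model and the z test are simplifying assumptions; with heterogeneous studies, a random-effects comparison would be more defensible.

```python
import math
from statistics import NormalDist


def pool_fisher_z(studies):
    """studies: list of (r, n) pairs.

    Returns (pooled Fisher z, its variance) using weights n - 3,
    the inverse of the sampling variance of Fisher's z.
    """
    weights = [n - 3 for _, n in studies]
    z_bar = sum(w * math.atanh(r) for w, (r, _) in zip(weights, studies)) / sum(weights)
    return z_bar, 1.0 / sum(weights)


def compare_subgroups(with_ipd, without_ipd):
    """Return (r_with, r_without, two-tailed p) for the difference between the
    pooled effects of studies with and without shared raw data."""
    z1, v1 = pool_fisher_z(with_ipd)
    z2, v2 = pool_fisher_z(without_ipd)
    stat = (z1 - z2) / math.sqrt(v1 + v2)
    p = 2 * (1 - NormalDist().cdf(abs(stat)))
    return math.tanh(z1), math.tanh(z2), p
```

A small p value would suggest that the contributing studies are not representative of the full set. In that case, the aggregate data of the missing studies deserve a place in the analysis.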

Conclusion

Wide-scale collaboration among attachment researchers in CATS has brought rigorous testing of complex attachment-theoretical propositions within reach while enabling exploration of the boundaries of these propositions. Capitalizing on the saturation stage of attachment research opens new and exciting horizons and pulls the field back into the stage of theory construction. Data pooling holds the same promise for other fields in psychology: addressing theoretical challenges, increasing methodological rigor and transparency, and strengthening the capacity to inform applied research. Together with other efforts to make psychological science more robust (Nelson, Simmons, & Simonsohn, 2018), IPD meta-analyses show both the value and the viability of moving to new levels of collaboration.

Recommended Reading

Riley, R. D., Lambert, P. C., & Abo-Zaid, G. (2010). (See References). An accessible description of what individual-participant-data (IPD) meta-analysis is, how it differs from meta-analysis of aggregate data, when it is the preferred method of meta-analysis, and how to conduct an IPD meta-analysis.

Stewart, L. A., Clarke, M., Rovers, M., Riley, R. D., Simmonds, M., Stewart, G., & Tierney, J. F. (2015). (See References). Contains the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines for reporting on IPD meta-analyses, which originate in the medical field but can be applied to psychological research.

van IJzendoorn, M. H., & Bakermans-Kranenburg, M. J. (2019). (See References). Reviews previous research on the intergenerational transmission of attachment and the difficulty in explaining the “transmission gap”; also provides a novel theoretical framework including contextual factors and differential susceptibility to fill in the gap.

Verhage, M. L., Fearon, R. M. P., Schuengel, C., van IJzendoorn, M. H., Bakermans-Kranenburg, M. J., Madigan, S., . . . the Collaboration on Attachment Transmission Synthesis (2018). (See References). Describes the first IPD meta-analysis on the intergenerational transmission of attachment by the Collaboration on Attachment Transmission Synthesis.

Weston, S. J., Ritchie, S. J., Rohrer, J. M., & Przybylski, A. K. (2019). (See References). Explains the various uses of secondary data analysis as a tool for the generation of hypotheses, confirmatory work, methodological innovations, and analytical methods, with caveats for using secondary data.

Acknowledgments

For a full list of collaborators in the Collaboration on Attachment Transmission Synthesis (CATS) and their affiliations, see the CATS Open Science Framework project at https://osf.io/56ugw/.

Footnotes

ORCID iDs: Carlo Schuengel  https://orcid.org/0000-0001-5501-3341


Marinus H. van IJzendoorn  https://orcid.org/0000-0003-1144-454X


Transparency

Action Editor: Randall W. Engle

Editor: Randall W. Engle

Declaration of Conflicting Interests: The author(s) declared that there were no conflicts of interest with respect to the authorship or the publication of this article.

Funding: This work was supported by a grant from Stichting tot Steun Nederland to M. Oosterman and C. Schuengel, a grant from the Social Sciences and Humanities Research Council Canada (No. 430-2015-00989) to S. Madigan, a grant from the Wellcome Trust (WT103343MA) to R. Duschinsky, and a Veni grant by the Dutch Research Council (No. 451-17-010) to M. L. Verhage.

References

- Ainsworth M. D., Blehar M. C., Waters E., Wall S. (1978). Patterns of attachment. Hillsdale, NJ: Erlbaum.

- Chin F. (2013). Cognitive and socio-emotional developmental competence in premature infants at 12 and 24 months: Predictors and developmental sequelae. ProQuest (No. 3573727).

- Dickstein S., Seifer R., Albus K. E. (2009). Maternal adult attachment representations across relationship domains and infant outcomes: The importance of family and couple functioning. Attachment & Human Development, 11, 5–27. doi: 10.1080/14616730802500164

- Duschinsky R. (2020). Cornerstones of attachment research. Oxford, England: Oxford University Press.

- Fraley R. C., Spieker S. J. (2003). Are infant attachment patterns continuously or categorically distributed? A taxometric analysis of Strange Situation behavior. Developmental Psychology, 39, 387–404. doi: 10.1037/0012-1649.39.3.387

- Goldsmith H. H., Alansky J. A. (1987). Maternal and infant temperamental predictors of attachment: A meta-analytic review. Journal of Consulting and Clinical Psychology, 55, 805–816. doi: 10.1037//0022-006x.55.6.805

- Grossman R. L. (2019). Data lakes, clouds, and commons: A review of platforms for analyzing and sharing genomic data. Trends in Genetics, 35, 223–234. doi: 10.1016/j.tig.2018.12.006

- International Committee of Medical Journal Editors. (2020). Defining the role of authors and contributors. Retrieved from http://www.icmje.org/recommendations/browse/roles-and-responsibilities/defining-the-role-of-authors-and-contributors.html

- Jaspers G. J., DeGraeuwe P. L. J. (2014). A failed attempt to conduct an individual patient data meta-analysis. Systematic Reviews, 3, Article 97. doi: 10.1186/2046-4053-3-97

- Madigan S., Verhage M. L., Schuengel C., Fearon R. M. P., Roisman G. I., Bakermans-Kranenburg M. J., . . . the Collaboration on Attachment Transmission Synthesis. (2020). An examination of the cross-transmission of parent-child attachment using an individual participant data meta-analysis. Manuscript in preparation.

- Main M., Kaplan N., Cassidy J. (1985). Security in infancy, childhood, and adulthood: A move to the level of representation. Monographs of the Society for Research in Child Development, 50(1–2), 66–104. doi: 10.2307/3333827

- Main M., Solomon J. (1990). Procedures for identifying infants as disorganized/disoriented during the Ainsworth Strange Situation. In Greenberg M. T., Cicchetti D., Cummings E. M. (Eds.), Attachment in the preschool years: Theory, research, and intervention (pp. 121–160). Chicago, IL: University of Chicago Press.

- Nelson L. D., Simmons J., Simonsohn U. (2018). Psychology’s renaissance. Annual Review of Psychology, 69, 511–534. doi: 10.1146/annurev-psych-122216-011836

- Raby K. L., Verhage M. L., Fearon R. M. P., Fraley R. C., Roisman G. I., van IJzendoorn M. H., . . . the Collaboration on Attachment Transmission Synthesis. (2019). The latent structure of the Adult Attachment Interview: Large sample evidence from the Collaboration on Attachment Transmission Synthesis. Manuscript submitted for publication.

- Riley R. D., Lambert P. C., Abo-Zaid G. (2010). Meta-analysis of individual participant data: Rationale, conduct, and reporting. British Medical Journal, 340, Article c221. doi: 10.1136/bmj.c221

- Roisman G. I., Fraley R. C., Belsky J. (2007). A taxometric study of the Adult Attachment Interview. Developmental Psychology, 43, 675–686. doi: 10.1037/0012-1649.43.3.675

- Roisman G. I., van IJzendoorn M. H. (2018). Meta-analysis and individual participant data synthesis in child development: Introduction to the special section. Child Development, 89, 1939–1942. doi: 10.1111/cdev.13127

- Schuengel C., Verhage M. L., van IJzendoorn M. H., Roisman G. I., Fearon R. M. P., Madigan S., . . . the Collaboration on Attachment Transmission Synthesis. (2019). Tilling the garden of forking paths: Transmission of parents’ unresolved loss and/or trauma to attachment disorganization not moderated in the multiverse. Manuscript in preparation.

- Simmons J. P., Nelson L. D., Simonsohn U. (2011). False-positive psychology: Undisclosed flexibility in data collection and analysis allows presenting anything as significant. Psychological Science, 22, 1359–1366. doi: 10.1177/0956797611417632

- Stanley T. D., Carter E. C., Doucouliagos H. (2018). What meta-analyses reveal about the replicability of psychological research. Psychological Bulletin, 144, 1325–1346. doi: 10.1037/bul0000169

- Steegen S., Tuerlinckx F., Gelman A., Vanpaemel W. (2016). Increasing transparency through a multiverse analysis. Perspectives on Psychological Science, 11, 702–712. doi: 10.1177/1745691616658637

- Stewart L. A., Clarke M., Rovers M., Riley R. D., Simmonds M., Stewart G., Tierney J. F. (2015). Preferred reporting items for systematic review and meta-analysis of individual participant data: The PRISMA-IPD statement. JAMA, 313, 1657–1665. doi: 10.1001/jama.2015.3656

- Tierney J. F., Stewart L. A., Clarke M. (2019). Individual participant data. In Higgins J. P. T., Thomas J., Chandler J., Cumpston M., Li T., Page M. J., Welch V. A. (Eds.), Cochrane handbook for systematic reviews of interventions (2nd ed., pp. 643–658). Hoboken, NJ: John Wiley & Sons.

- Tottenham N., Shapiro M., Flannery J., Caldera C., Sullivan R. M. (2019). Parental presence switches avoidance to attraction learning in children. Nature Human Behaviour, 3, 1070–1077. doi: 10.1038/s41562-019-0656-9

- van IJzendoorn M. H. (1995). Adult attachment representations, parental responsiveness, and infant attachment: A meta-analysis on the predictive validity of the Adult Attachment Interview. Psychological Bulletin, 117, 387–403. doi: 10.1037/0033-2909.117.3.387

- van IJzendoorn M. H., Bakermans-Kranenburg M. J. (2019). Bridges across the intergenerational transmission of attachment gap. Current Opinion in Psychology, 25, 31–36. doi: 10.1016/j.copsyc.2018.02.014

- van IJzendoorn M. H., Kroonenberg P. M. (1988). Cross-cultural patterns of attachment: A meta-analysis of the Strange Situation. Child Development, 59, 147–156. doi: 10.2307/1130396

- van IJzendoorn M. H., Tavecchio L. W. C. (1987). The development of attachment theory as a Lakatosian research program. In Tavecchio L. W. C., van IJzendoorn M. H. (Eds.), Attachment in social networks: Contributions to the Bowlby-Ainsworth attachment theory (pp. 3–31). New York, NY: Elsevier.

- Verhage M. L., Fearon R. M. P., Schuengel C., van IJzendoorn M. H., Bakermans-Kranenburg M. J., Madigan S., . . . the Collaboration on Attachment Transmission Synthesis. (2018). Examining ecological constraints on the intergenerational transmission of attachment via individual participant data meta-analysis. Child Development, 89, 2023–2037. doi: 10.1111/cdev.13085

- Verhage M. L., Fearon R. M. P., Schuengel C., van IJzendoorn M. H., Bakermans-Kranenburg M. J., Madigan S., . . . the Collaboration on Attachment Transmission Synthesis. (2019, March). Does risk background affect intergenerational transmission of attachment? Testing a moderated mediation model with IPD. Paper presented at the Biennial Meeting of the Society for Research in Child Development, Baltimore, MD.

- Verhage M. L., Schuengel C., Madigan S., Fearon R. M. P., Oosterman M., Cassibba R., . . . van IJzendoorn M. H. (2016). Narrowing the transmission gap: A synthesis of three decades of research on intergenerational transmission of attachment. Psychological Bulletin, 142, 337–366. doi: 10.1037/bul0000038

- Weston S. J., Ritchie S. J., Rohrer J. M., Przybylski A. K. (2019). Recommendations for increasing the transparency of analysis of preexisting data sets. Advances in Methods and Practices in Psychological Science, 2, 214–227. doi: 10.1177/2515245919848684