Abstract

Background

Machine learning approaches have become increasingly popular modeling techniques, relying on data-driven heuristics to arrive at its solutions. Recent comparisons between these algorithms and traditional statistical modeling techniques have largely ignored the superiority gained by the former approaches due to involvement of model-building search algorithms. This has led to alignment of statistical and machine learning approaches with different types of problems and the under-development of procedures that combine their attributes. In this context, we hoped to understand the domains of applicability for each approach and to identify areas where a marriage between the two approaches is warranted. We then sought to develop a hybrid statistical-machine learning procedure with the best attributes of each.

Methods

We present three simple examples to illustrate when to use each modeling approach and posit a general framework for combining them into an enhanced logistic regression model building procedure that aids interpretation. We study 556 benchmark machine learning datasets to uncover when machine learning techniques outperformed rudimentary logistic regression models and so are potentially well-equipped to enhance them. We illustrate a software package, InteractionTransformer, which embeds logistic regression with advanced model building capacity by using machine learning algorithms to extract candidate interaction features from a random forest model for inclusion in the model. Finally, we apply our enhanced logistic regression analysis to two real-word biomedical examples, one where predictors vary linearly with the outcome and another with extensive second-order interactions.

Results

Preliminary statistical analysis demonstrated that across 556 benchmark datasets, the random forest approach significantly outperformed the logistic regression approach. We found a statistically significant increase in predictive performance when using hybrid procedures and greater clarity in the association with the outcome of terms acquired compared to directly interpreting the random forest output.

Conclusions

When a random forest model is closer to the true model, hybrid statistical-machine learning procedures can substantially enhance the performance of statistical procedures in an automated manner while preserving easy interpretation of the results. Such hybrid methods may help facilitate widespread adoption of machine learning techniques in the biomedical setting.

Keywords: Interactions, Random Forest, Logistic regression, Model Explanations, Machine learning

Background

In the era of Big Data, models with highly complex specifications are employed to study nontrivial biomedical phenomena, including genetic or epigenetic interactions, high-resolution modalities such as Computed Tomography (CT) scans and histopathology slide images [1–6]. Many statistical approaches to modeling these complex data rely on expert consultation and annotation to help determine which variables and targets to study. Oddly, despite the advances in computing power, these procedures have not been augmented with sophisticated model-building algorithms. At best commercial software still only supports simple and restrictive search strategies such as forward, backward and stepwise selection. In contrast, machine learning techniques employ a data-driven set of heuristics to simultaneously build and estimate models that may include an extensive array of nonlinear interactions in the data. While machine learning methods are particularly helpful in exploratory or predictive settings, not much may be revealed about how the set of covariates vary with each other and the dependent variable, which can be detrimental towards building public trust and acceptance in these modeling approaches. Unlike statistical models, which are able to make predictions when the data has a low number of features as compared to the number of training samples, machine learning algorithms are much more suitable for the high dimensional domain, especially if the data is subject to multicollinearity, and to the low dimensional domain when it is not clear how the covariates vary with the outcome. For instance, machine learning technologies have demonstrated the ability to make impressive predictions on medical images, genomic data and Electronic Health Record (EHR) modalities in the presence of many training instances [7–9]. In recent years, these approaches have gained much traction in the biomedical space and will continue to do so in the years to come.

However, with researchers and practitioners flocking to adopt these new technologies, there are concerns that traditional statistical methods are being passed over too quickly. Many published papers that show that machine learning techniques outperform traditional statistical models. Yet, many of these papers mislead the reader by presenting unfair comparisons between the methodologies, for instance selecting datasets that are difficult to learn given insufficient model-building strategies or by comparing a statistical procedure with no embedded learning component to machine learning procedures [10]. Meanwhile, these featured datasets run counter to more clinically focused sets on which one prior study has demonstrated no performance improvement for machine learning techniques [11]. Given this ambiguity and the clear need for models that are inherently interpretable for widespread adoption in the biomedical space, we believe there is much to be gained by entwining or marrying these methodologies rather than thinking of them as competitors.

We address a gap among traditional statistical procedures by proposing that sophisticated search algorithms be paired with them to suggest terms that can be added to the base specification to improve model fit and the predictive performance of traditional statistical models. These search algorithms would consider sets of candidate predictors from sets as expansive as those available to machine learning algorithms. Whereas the implementations of traditional statistical procedures such as linear and logistic regression in commercial packages often include basic search algorithms (e.g., forward, backward and stepwise regression), these search algorithms would span all forms of higher-order interactions and nonlinear transformations. Crucially, they would not be limited to only the models that can be formed by the predictors in the model specification but rather would include a myriad of additional predictors formed from functions of one or more of them and nonlinear transformations of their sum. Therefore, they would substantially enhance model-building capacity, potentially bringing the performance of traditional statistical methods on par with machine learning methods while retaining the easy and direct interpretability of the results of statistical procedures. In addition, such hybrid procedures would enjoy the automatic implementation and scalability enjoyed by the machine learning methods.

In this paper, we aim to illustrate the domains from which machine learning models can be sensibly applied to assist traditional statistical models from which to generate models that can be widely adopted in clinical applications.

First, we describe the machine learning and logistic regression models and their differences, including scenarios under which each outperforms the other. Then, we demonstrate that extracting interactions via the machine learning can enhance logistic regression (hybrid approach) as well as the ability of logistic regression to “protect the null hypothesis” by inhibiting the additional of unwarranted interaction terms to the model. Finally, we show consistencies between the logistic regression model and the machine learning model when the logistic regression model is closer to the true model. This study shows that machine learning can be deployed to assist with the development of statistical modeling approaches to obtain machine-learning level performance with statistical model interpretability.

Review of modeling approaches

We now introduce hallmark models from machine learning and traditional statistics to better highlight domains from which either technique may excel.

Statistical Analysis System (SAS) is perhaps the most widely used commercial statistical software package. Its regression procedures are well established [12]. Some procedures, such as Proc reg and Proc logistic, include simple search algorithms that allow progress to be made towards a best fitting model. For example, forwards, backwards and stepwise regression can be implemented with the mere inclusion of an optional term in the specification of the procedure. Although these procedures have high utility and a long history (stepwise regression first made its debut in 1960), their model building search capacity has to our knowledge not been significantly enhanced since 1992 [13–15]. During this time, computational power has exponentiated. Remarkably, more expansive and otherwise advanced search algorithms have not been incorporated. Why not? Perhaps a lack of focus on, or accommodation of, predictive problems involving large data sets with many predictors. Whatever the reason, the computational power has for some time been available to enable these procedures to be enhanced. Therefore, we view this omission or gap as an historical anomaly that we seek to correct herein.

In the literature, comparisons are made between statistical models devoid of model-building and machine learning approaches enhanced by very sophisticated and powerful algorithms [10, 11]. This is an unfair comparison that risks making traditional statistical methods be dismissed too rapidly from consideration when choosing an analytic approach to a problem.

Logistic regression

Logistic regression is the most widely used modeling approach for binary outcomes in epidemiology and medicine [16]. The model is a part of the family of generalized linear models that explicitly models the relationship between the explanatory variable X and response variable Y. Conditional on the predictors, a binary outcome Y is assumed to follow a binomial distribution for which p = P(Y = 1 | X) represents the probability of the binary response given the predictors:

The approach above assumes a linear relationship between logarithm of the odds of the outcome and the predictors as equivalently depicted below:

In applications involving a large number of predictors, LASSO or Ridge regression techniques serve to penalize the model’s complexity to make it more generalizable to unseen data by reducing the magnitude of the model coefficients such that high magnitude features become less tailored to the data used to tune the model parameters. Penalization also aids model estimation by repelling parameters from boundary values in much the same way that prior distributions with continuous support nudge the posterior distribution away from the extremities of the values a model parameter can attain.

Classification and regression trees

Classification and Regression Trees (CART), otherwise known as Decision Trees [17], are a series of computational heuristics that arrive at a solution for a problem by forming binary splitting rules on the features of the data based on the criterion of maximizing the information gained about the outcome from making the split. This can be formulated mathematically as:

where S is an indicator variable indicating which side of the split contains a given observation. The above equation states that the information acquired about a set of labels given the split is the entropy of the original set of labels minus the entropy of the labels given the split, where entropy is defined as:

The entropy conditioned on the split is the weighted average of the entropy of the labels partitioned to each child, weighted by the number of samples sent to each child:

An alternative criterion that can be used in this framework, and the one used in this work, is Gini Impurity, which measures the probability of incorrect identification of a randomly chosen element and is given by:

Decreases in Gini Impurity are expressed similarly to information gain, and both represent key criterion to decide which features and values should be used to split a branch. The values that the branches split on can be viewed as non-linear transforms of the data, while splits on multiple variables introduce interactions between those variables. A collection of hyperparameters effectively prune and limit the depth of each tree to generalize the model to unseen data.

Random forest

Random forest is an extension of the base CART algorithm by considering the construction of multiple CART models to arrive at a prediction [18, 19]. While decision trees are formed by utilizing all of the features, each CART model considers the best splitting feature out of n < N randomly selected features at a time, and fits subsequent branches by selecting out of another random set of n features, all while bootstrapping the training samples. A collection of these estimators is formed, and results are aggregated to form predictions. This is done because each of the CART models may provide high variance estimates by over-fitting their training observations. When aggregating results the variance is typically reduced by de-correlating the individual CART models by bootstrapping both the observations and the predictors. The number of trees and various aspects of each estimator’s construction can be limited in order to reduce overfitting the training data.

Use cases for each modeling technique

Here, through a series of simple examples, we illustrate when either the traditional statistics or machine learning modeling approaches more closely resembles the true model and should be employed. We have provided a glossary of terms and summary of comparisons between these modeling approaches in the Additional file 1 for quick reference while reading the remainder of the text (Additional file 1 Tables 1–2).

In Fig. 1a, we have a use case where we have one linear continuous predictors and a binary endpoint. The distribution of the response is defined implicitly by the following data generating process:

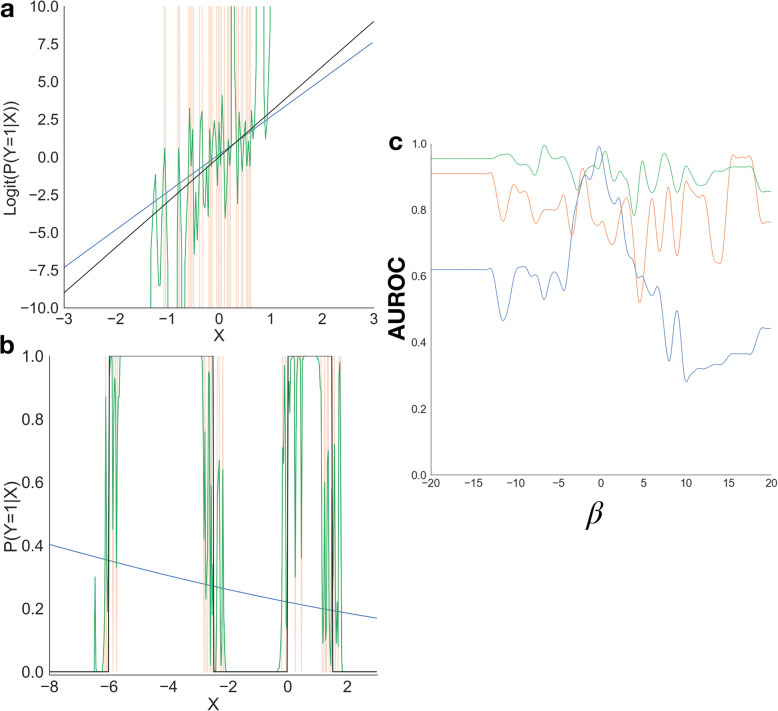

Fig. 1.

Characteristics of generalized linear modeling approach (blue), decision trees (orange), random forest (green), true model (black); (a-b) Predictor X1 versus binary response Y for the a) linear continuous predictor, b) non-linear transformed predictor; c) binary prediction performance as a function of interaction strength, smoothed using Savitzky-Golay filter to best illustrate trends

The above simulation implies the log of the probability of a positive prediction divided by the probability of a negative prediction varies linearly with the predictor. As such, we fit a logistic regression model to the data with the aim that it would capture the true decision boundary and found that the logistic regression model was able to accurately capture the binary end points with 90.7% accuracy on a held-out validation set. This example depicts the case when the true model is a logistic regression model. To the same data, we fit two CART-models, a decision tree and the random forest model, and find that the true linear decision boundary between the positive and negative classes was approximated by these machine learning approaches using a staircase type fit. Under this model, the two machine learning models demonstrated lower binary classification accuracy than the linear approach (accuracy of the decision tree was 74.6% and of the random forest was also 74.6%). This illustration demonstrates that generally any model of the generalized linear model family are, in cases when a predictor varies continuously with the outcome, not made inadmissible by the random forest and other ML approaches. This means that we should be cognizant of cases when the true relationship is continuous because simpler models produced via this assumption may lay closer to the true model than a heuristic that only forms a coarse approximation.

Conversely, we designed two simple examples to illustrate when the machine learning approaches are closer to the true model. In one example, Additional file 1 Figure 1b, we transform a continuous predictor X1 into the binary dependent outcome variable Y via the model:

These transformations are difficult to be approximated by models that suppose the dependence of the outcomes on the predictors conforms to a highly parsimonious smooth, continuous functional form. We see that the fit of the generalized linear model cuts through the middle of the entire dataset and as such has a worse goodness of fit (binary classification accuracy of 74.8%) than the machine learning approaches (94.8 and 94.8% for decision tree and random forest respectively). This is because CART techniques are able to do quite well with sharp and variable decision curves and are able to handle discontinuities in the data. In this case, the machine learning model better captures transformations to the data than the logistic regression modeling approach.

To further illustrate this point, we have included an additional discussion in the Additional file 1 for analogous situations from which continuous dependent variables are predicted from continuous predictors (Additional file 1 Figure 1), or binary prediction from multiple predictors (Additional file 1 Figure 2).

As the last use case, Fig. 1c, we now consider a binary response model with four continuous predictors. The predictors vary linearly with the logit-transformed outcome; however, in the true model, the final two predictors interact:

where:

For this experiment, we vary the strength of the interaction β, and test the logistic regression, decision tree and random forest models, hoping to find that the logistic regression model performs worse as the magnitude of the interaction increases. This would mean that without adding interaction terms to the logistic model, the approach would be unable to detect the presence of two interacting predictors. In Fig. 1c, we see that the accuracy of the generalized linear model diminishes in the domain of high interaction strength, while outperforming the machine learning approaches when the true model discards interactions, the case when the estimated model conforms to the true model. It can be noted, that the CART models’ accuracy does not significantly differ across varying interaction strengths. This motivates the use of CART, or other machine learning approaches, to alert an analyst to the likely presence of an interaction term that can then be added to the logistic regression model to improve its performance.

In some cases, we can make reasonable guesses at which interactions would be the most appropriate to include such that the logistic model can better approximate the relationship of the predictors to the outcome. While we had demonstrated the superior performance of statistical models when a generalized linear solution space exists, when this solution space becomes more complex, the machine learning based approaches are better able to efficiently search over numerous combinations and specifications of the predictors separately and in combination. Traditional statistical models are not embedded with these machine learning model building features. These two points lead naturally to consideration of procedures that use machine learning methods to enhance traditional models by bolstering their model building capacity.

Proposed new method

Our proposed method utilizes machine learning to suggest terms to add to traditional statistical models; we refer to this as our hybrid statistical inference approach. We consider this approach a candidate to move on from the stepwise regression approaches currently enabled in SAS Proc Reg and SAS Proc logistic. We propose to augment the current SAS procedures with the output from a sophisticated and comprehensive search algorithm in the form of an ordered list of candidate second-order interactions. Logistic regression procedures can then subsequently be estimated with the most promising of these terms included as predictors. While we illustrate our idea using this particular case, we also advocate for the development of far more expansive algorithms and thus types of inputs to further enhance traditional statistical methods. Such procedures may be characterized by a need to iterate between traditional statistical methods and machine learning methods such that each informs the improvement made to the other in the next iteration.

The hybrid approach stems from principles of explainable machine learning. Notably, many machine learning approaches struggle to gain traction and widespread adoption because they are unable to explain why they made their prediction. Existing techniques to explain the output from random forest algorithms attempt to quantify the marginal association of a variable’s capacity to reduce the amount of mislabeling in its decision splits. In contrast, the coefficients of the logistic regression model can be exponentiated to form an odds-ratio. However, these methods find global importance for the importance of each predictor and not the importance of the predictor for each test instance. The ideal interpretation technique for a machine learning model should project the random forest fit onto a linear subspace to determine the overall extent to which a specific factor contributes to the fit. Our hybrid approach utilizes such a projection to efficiently derive important model additions.

Methods

Here, we discuss sensible approaches to enhance statistical modeling. Fundamentally, the essence of our approach is to build an intuitive understanding of when the “statistical” model (e.g. linear regression, logistic regression) is more representative of the true model that describes the data compared to the machine learning approach and what to do in cases where there is extensive incongruence. When the machine learning model is closer to the true approach, we posit that its output contains keys in the form of interactions between predictors and nonlinear transformations of predictors that can be exploited to substantially improve the fit of the corresponding statistical model. That is, machine learning models can be used as an efficient way of traversing the space of higher-order and nonlinear predictors to identify the best candidates to improve the fit of statistical models beyond that possible with main variable predictors, and stepwise search algorithms applied to them, alone. We conjecture that the addition of a relatively small number of these more complex forms of predictors will almost always lead to an improvement in fit of the statistical model to such an extent that its performance is similar to that of the best machine learning models. The greater ease of interpretability of statistical models, and potential to provide superior fits in the case of continuous relationships of the predictors to the outcome, then makes them highly competitive alternatives to machine learning alone. Inspired by a work that used these concepts to derive meaningful variable transformations [20], and as a special case of our proposed new methodology, we extend these ideas to automate the extraction of meaningful interactions via machine learning that are then added to the statistical modeling technique to enhance its performance. We also provide user-friendly software to allow users to apply this methodology to their own studies and have included a flowchart diagram in the Additional file 1 to demonstrate when to apply a hybrid approach and the steps of the proposed algorithm (Additional file 1 Figure 3).

SHAP interaction terms extend generalized linear model building capabilities

A nice feature of ML is that a number of algorithms have been developed that are amenable to hybridization with traditional statistical procedures or that provide the ingredients for making such a hybrid procedure. For example, SHAP (SHapley Additive ExPlanations) [21] is an algorithm designed to help interpret why any model has made its prediction for an individual testing instance. These approaches explain “black-box” models by fitting linear additive surrogate models to each testing sample. If machine learning models can be characterized by complex curves over multiple variables, these models can be thought of as local tangent lines near each combination of variables present in the dataset (locally accurate). The coefficients of these models, dubbed shapley values, represent the importance of each predictor to the outcome prediction; these coefficients directly sum to the model prediction (additive), and features that are important to the prediction over a number of samples are consistent. SHAP sums the contributions of each feature to the model’s prediction over all possible permutations of other features.

Interaction extraction and autonomous application

If the logistic regression model is correct, whether or not it contains interactions, then it will outperform any other specification. If it is not the correct model, then an approach with powerful search capability over the predictor space is likely to find ways to outperform logistic regression. If quantified in terms of functions of predictors and fed into logistic regression, these identified ways of improving model fit have the potential to enhance the fit of the logistic regression model such that its performance is more in-line with that of a search algorithm. For example, a subsequent logistic regression model augmented with complex predictors recommended by the output of a machine learning algorithm might then be sufficiently close to the truth that it outperforms all competitors. If not, one can continue iterating between the procedures until superior performance (and convergence) is obtained.

While the Surrogate Assisted Feature Extraction (SAFE) Transformer [20] has demonstrated the ability to accurately characterize variable transformations by estimating how a nonlinear predictor varies with the outcome, recent advances in SHAP methodology have allowed for the accurate detection of salient interactions of tree-based models [22, 23]. Simply put, these methodologies expand the aforementioned SHAP method to include interaction terms for individual samples. A measure of the global importance of these interactions can be characterized by summing the interaction effects over all of the samples to find the salient interactions for the model’s prediction. These important interactions can be extracted and added as predictors to the logistic regression model. A related operation is the use of machine learning algorithms to identify nonlinear transformations of variables that can be used to form other additional predictors. This is outside the scope of the current study, which is solely focused on the identification the candidate interaction effects and to provide advice of when they should be included. While our work seeks to illustrate the potential for collaboration between traditional statistical approaches and interaction-heavy machine learning models such as Random Forest, we have also included a description and discussion of other non-linear and kernel-based modeling approaches (e.g. Support Vector Machines, Kernel Logistic Regression and Neural Network approaches) in the Additional file 1 (see Additional file 1 “Description of Kernel-Based Methods”) [24–28]. These methods can similarly be married with logistic regression to obtain highly predictive, but interpretable results.

Although interaction extraction has been studied previously, very few studies have attempted to characterize the importance of extracting these interactions over many datasets. Those that have are limited in that they focused on selecting a small number of predictors from which to test for interactions [4, 29]. Our novel contribution is the development of an automated means from which to extract these interactions and use them to enhance statistical model-based analyses.

Software implementation and availability

We have bundled and wrapped our multi-component methodology into a publicly available open source software package, InteractionTransformer, (GitHub: https://github.com/jlevy44/InteractionTransformer) that can be run using both R (GitHub via r-devtools: jlevy44/interactiontransformer) and Python (PyPI: interactiontransformer) to make these methods easily deployable for the biomedical researcher. InteractionTransformer takes as input the design matrices and outcome variable and fits a specified machine learning model to the data. Then, it extracts the most important SHAP-derived interaction terms from the machine learning model and augments the design matrices of the training and test data to include these interaction terms. It also provides additional capabilities to plot the salient SHAP features of any model and extract the variable importance assigned to all of the interaction terms for additional inspection. A wiki page detailing installation and usage can be found here: https://github.com/jlevy44/InteractionTransformer/wiki .

Evaluation of hybrid approach: dataset acquisition and experimental description

We seek to estimate the general utility of extracting these salient interactions and when they should be sensibly employed by characterizing their activity over a large dataset.

We first acquired a dataset that would allow us to establish the necessary domains for the application of these modeling techniques. Inspired by a meta-analysis of random forest versus logistic regression, we utilized data from the OpenML database [30] to extract 556 datasets purposed for binary classification problems. Each dataset was cleaned and preprocessed using a data pipeline implemented in python.

We ran five-fold cross validation of naive implementations of L2-regularized logistic regression and random forest models using the scikit-learn and imbalanced-learn packages [31, 32] to compare the overall accuracy of the random forest models versus logistic regression models. Then, we characterize the extent to which extracting these interactions improves the fit of the logistic regression model. Since the primary domain of research pertinent to this study involves fully identified models, we excluded high dimensional genomics datasets and retained 277 datasets in the p < n domain; i.e., the number of observations always outnumbers the number of predictors. Then, we extracted the pertinent interactions from the training data of each of these datasets using InteractionTransformer. After applying these interaction terms to the design matrices of the training and test sets, we scored a naive logistic regression model using five-fold cross-validation to compare with the original two models. This will characterize the overall extent to which these interactions enhance the logistic regression model.

We also compared results and interpretations obtained by our hybrid approach to that obtained using naïve implementations of LASSO-penalization on all of the pairwise interactions of predictors and all of the aforementioned kernel-based methods. Finally, we extracted characteristics of each dataset and trained a model to predict when the hybrid approach should be deployed, the results of which are presented in the Additional file 1.

Results

Random forest outperforms logistic regression

A total of 556 OpenML datasets were fitted and tested on both the naive logistic regression and random forest models. Five-fold cross validation scores of the C-statistics (Area Under the Receiver Operating Curve, AUROC) of the models demonstrated that random forest models clearly outperform the logistic regression models by 0.061 AUROC on average (Fig. 2a) (t = 13.6, p = 2.3e-36), where the random forest models scored 0.87 and logistic regression models scored an average cross-validated C-statistic of 0.81.

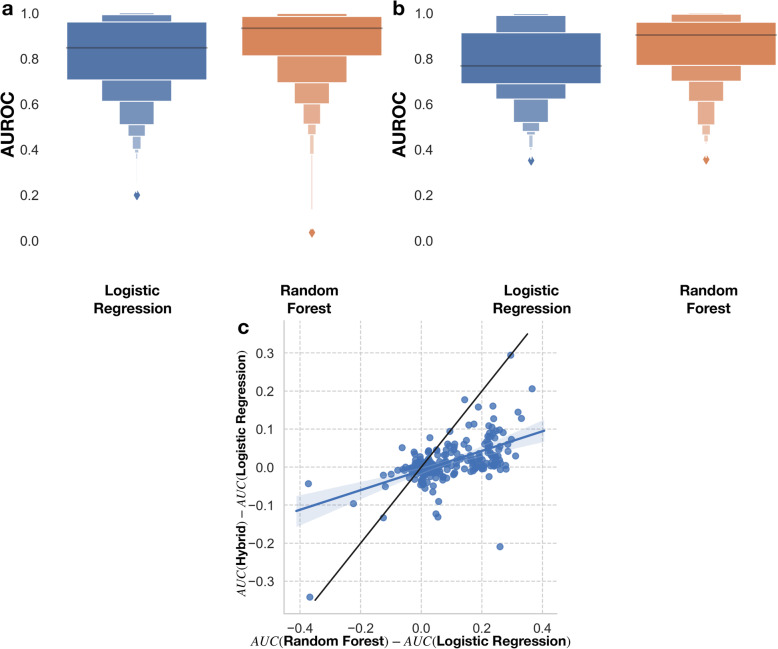

Fig. 2.

a-b Boxenplots of prediction performance of Logistic Regression versus Random Forest for a) original 556 datasets, b) subset 277 datasets, c) linear plot of the performance gains of hybrid approach versus the random forest approach

We then restricted the range of the number of variables and samples from 5 to 110 and 50 to 2500 respectively to focus on low dimensional domains where it is more sensible to employ standard statistical modeling techniques in the biomedical space. Here, out of the remaining 277 datasets studied, the random forest model further outperformed the logistic regression models by an average 5-fold cross validated C-statistic difference of 0.077, 0.86 AUROC to 0.78 AUROC respectively (Fig. 2b) (t = 11.5, p = 3.8e-25). At this point, we felt that it would be pertinent to study the extent to which this difference is merely driven by the absence of interaction terms in the logistic regression model using our interaction extraction approach.

Application of interaction terms

Out of the remaining 277 datasets, we used SHAP to screen for pertinent interaction terms to add to the design matrix of the logistic regression model. We performed this procedure, ran a logistic regression model on the resulting design matrix, and calculated the C-statistic on a validation set for each of 5 cross validation folds, as noted in the previous section. The resulting measures of model fit were compared to the fit of the original logistic regression models (those not enhanced by the ML identified interactions) and plotted against the improvement of the random forest model over the logistic regression approach. Note that improvement of the logistic regression models may be bounded by a maximum score dictated by the maximum score attainable by any model, 100%. As seen in Fig. 2c, the domain in which the random forest model is closer to the true model is when the differences in the cross-validation scores on the x-axis is greater than 0. In this case, extracting interactions proved to be beneficial when the differences in cross validation scores between the interaction and logistic regression model in the y-axis was greater than 0.

We also sought to determine how a logistic regression model augmented by interactions extracted from the machine learning model would respond when the machine learning model was closer to the true model. We observed a positive relationship between the improvement of random forest versus improvement of the interaction extraction model over the logistic regression model (m = 0.26, intercept = − 0.01, r = 0.56, p = 6.8e-24). Furthermore, using a sensitivity analysis, we uncovered 37 datasets, where taken together, the hybrid approach achieved similar performance to the Random Forest approach (p = 0.07, Mann Whitney U-Test) where previously the Random Forest model had outperformed the Logistic Regression approach (Additional file 1 Figure 4). Taking into account all 277 datasets, the Hybrid Approach outperformed the Random Forest model in 61 datasets. These results demonstrate the potential for utilizing the output of the machine learning model to enhance statistical models such that their predictive accuracy makes a substantial gain on that of the ML procedure, even when the random forest is (closer to) the true model. Conversely, when the random forest underperformed versus the initial logistic regression model, traditional statistical methods tended to reject the inclusion of the interactions (if the ML output even suggested them in the first place).

Comparison to LASSO-penalized interaction models and kernel methods

We wanted to contextualize the performance and inferential abilities gained when utilizing our hybrid approach to that achieved by LASSO selection of interactions and kernel methods across the 277 binary outcome datasets. Our results indicated that the random forest modeling approach outperformed all kernel and LASSO-based approaches, but our hybrid model was able to achieve performance on-par with the less interpretable kernel/LASSO methods. This is not to mention the many datasets from which the Hybrid approach was able to achieve similar or better performance versus the Random Forest Approach, as aforementioned in the previous section. For instances from which the Random Forest modeling approach was able to significantly outperform the Logistic Regression modeling approach, the hybrid model statistically outperformed the traditional Logistic Regression modeling approach and achieved similar performance to the kernel and LASSO approaches. Further description and results for this experimental design can be found in the Additional file 1 (Additional file 1 Figure 5; Additional file 1 Tables 3-5).

Selected biomedical case studies

Finally, we explore two test use cases using pertinent biomedical datasets. In the first, epistasis is studied using a model rich with interactions where the random forest model is closer to the true model and a diabetes dataset where the logistic regression model is closer to the true model to understand the nature of the extracted interactions.

Case study 1: interaction extraction helps model epistasis

Epistasis is defined as the interaction between multiple genes, where the complex interplay between the presence or absence of two genes in the form of alleles serves as an effect modifier to elicit the development of a complex trait or disease. Many researchers conceptualize the components of these interactions to be binary explanatory variables. The presence of these interactions can hamper the ability to uncover the true effect of the target gene in question. There are over 21,000 distinct protein-coding genes in the human genome, which can make studying all of the possible interactions between the genes computationally intractable, especially given interactions with more than two genes. Machine learning approaches such as multi-factor dimensionality reduction seek to characterize all possible pairwise interactions, but still suffer from the computational time needed to process all such interactions [33, 34].

There exist a few interacting genes that we are unable to detect, so we expect the random forest model to outperform the logistic regression model. When we train both models and test on the test set, random forest outperforms logistic regression 0.8 to 0.47, a monstrous performance gain of 0.33 (Table 1). This makes for a perfect use case from which to evaluate the ability to extract interaction terms that enhance the performance of logistic regression models.

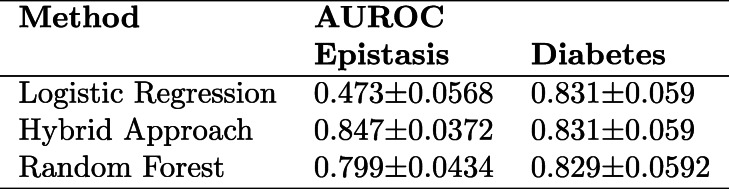

Table 1.

95% confidence intervals for predictive performance (C-statistic) of Logistic Regression, Hybrid Approach, and Random Forest on held-out test set for the tasks of Epistasis and Diabetes prediction; confidence intervals obtained using 1000 sample non-parametric bootstrap.

Inspection of SHAP summary plots reveals the salient features for each training data set for each model. The SHAP plots for the random forest model upweight genes that appear to have interactions with other genes but does not clearly describe which genes it is interacting with. After we extract the interactions, we augmented the design matrix for the logistic regression model and estimated the corresponding logistic regression model. The results reveal that the added interactions substantially enhances the logistic regression model (AUROC 0.85) while enabling the familiar interaction-effect regression model interpretation. Inspection of the SHAP plots and exponentiated model coefficients identifies which genes were explicitly and significantly interacting with one another, and which were overall most explanatory for the complex trait being studied (Fig 3a-d). Furthermore, a study of the SHAP ranking of all of the interactions of the model yields four interactions as far stronger performers than any others (Fig. 3e).

Fig. 3.

a-c Epistasis SHAP summary plots for: a) Logistic Regression, b) Random Forest, and c) Hybrid Approach; d) Odds-ratios for coefficients of predictors derived from hybrid logistic regression augmented by RF suggested interactions; e) SHAP scores (measures of predictor variable importance) for top 10 ranked interactions

We also constructed a design matrix that contained all possible pairwise epistasis interactions in addition to the main effect terms and fit a LASSO model to this dataset to select terms with non-zero coefficients. The resulting terms were used to fit a standard Logistic Regression model to see whether a LASSO-selected generalized linear interaction model could both outperform our hybrid approach and confer additional interpretability. Not only did the LASSO-selected interaction model (AUROC 0.771 ± 0.0474) fail to outperform the hybrid approach (AUROC 0.85), but the model also obfuscated the main effects in favor of the remaining highly colinear interactions (See Additional file 1 Table 6).

Here, we have demonstrated the power to meaningfully extract interactions important to a machine learning model and add them to an underperforming logistic regression model to boost its performance to match that of the machine learning model. Thus, in this case, the hybrid approach can be viewed as outperforming either the logistic regression or Random Forest approaches alone! In our next example, we will study a case where adding interaction terms did not improve model performance.

Case study 2: random forest and logistic regression features coherent for diabetes prediction

In the early 1900s, the diversion of irrigation systems that had once made the Pima Indians of Arizona and Mexico a prosperous agricultural community disrupted their lifestyle from one that had subsided on high carbohydrate, low fat diet to one based on a high fat diet and a more sedentary lifestyle. The Pima Indians have been willing participants in a myriad of epidemiological, clinical and genetic studies that have ultimately contributed to a better understanding of the pathogenesis and diagnosis of type II diabetes and obesity. Here, we study a dataset acquired from the National Institute of Diabetes and Digestive and Kidney Diseases and fit logistic regression and random forest models to the data to predict diabetes as a binary outcome as defined by the World Health Organization [35, 36].

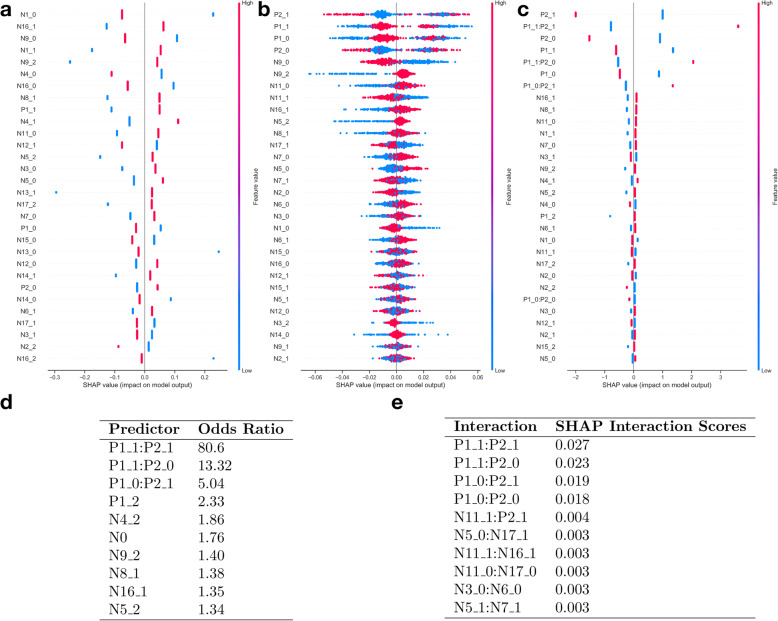

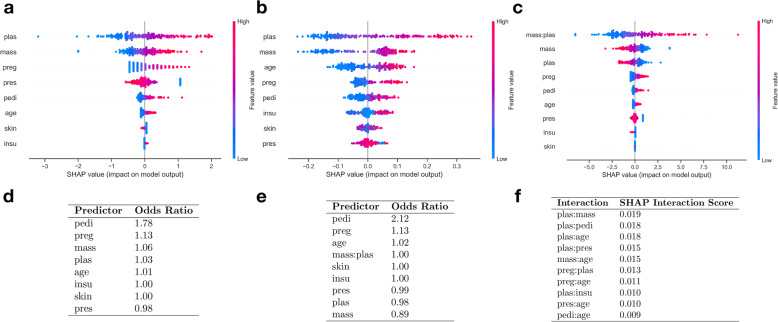

The logistic regression and random forest model both exhibit similar performance (0.83 C-statistic; Table 1) while generally agreeing on which variables are the most important for the model’s decisions (Fig. 4). After adding interactions to the logistic regression model, the performance does not change. The interaction between BMI and plasma measurements was the most significant interaction detected using SHAP (Fig. 4c) and appears to be the most important variable when running it in the logistic modeling framework. This is a typical use case where extracting the interactions does not improve model performance; logistic regression with no interactions appears closer to the correct model specification and it is able to strongly hint the fact to us by the non-significant effects for the interaction terms once added to the model (guiding us to stay with the no interaction specification).

Fig. 4.

a-c Diabetes SHAP summary plots for: a) Logistic Regression, b) Random Forest, and c) Hybrid Approach; d) Odds-ratios of predictors derived using logistic regression prior to application of hybrid procedure; e) Odds-ratios of predictors derived from hybrid approach logistic regression coefficients; f) SHAP interaction scores for top 10 ranked interactions

Discussion

We have described and illustrated some of the key differences between traditional statistical modeling and machine-learning approaches, focusing the latter on a comparison to random forests. The former approach models the explanatory variables as varying linearly on the natural scale of the outcome, while the latter incorporates unguided interaction and transformation modeling. These modeling approaches are sometimes compared unfairly due to model building being inherent to ML but not to logistic regression. Yet there are many use-cases when logistic regression models outperform machine learning approaches, particularly when equipped with an equivalent capability for model building. For future biomedical applications, the researcher should be privy to which model specification best captures the relationship between the predictor and outcome variables. In the following, we summarize the main discussion points of our work with sensible modeling recommendations:

In the domain where the number of variables is similar to or less than the number of observations, testing logistic regression and random forest models on the data should give an indication of which approach is more suitable.

The potential for the random forest model accuracy to exceed that of the logistic regression approach can be directly evaluated by applying both techniques and subtracting their goodness-of-fit measures, or via a meta-estimator that can predict this potential accuracy based on derived characteristics of the dataset. For example, see the approach featured in the Additional file 1 (Additional file 1 Figure 6) and for examples in other frameworks, see PennAI [37, 38].

If logistic regression outperforms or is on par with the random forest model, then the true model is likely to have a systematic component that is linear on the log-odds scale, and as such the results of the logistic model should be presented. There should be consistency between which features both models found to be important.

If random forest outperforms logistic regression, the true model likely involves interactions and nonlinear variable transforms. One could apply the interaction extractions as presented in this work on the random forest model to construct an interpretable logistic regression model enhanced by the inclusion of interactions.

If the number of variables is too high, it may be computationally inefficient to extract the interaction term, nor may it improve the logistic regression model performance. In this case, the variables should be modeled using the machine learning model and explained through SHAP or alternatively the aforementioned dimensionality reduction technique should be applied.

If interaction extraction demonstrates limited improvement, combining this approach with the variable transformer such as SAFE could potentially recover most of the performance difference.

Machine learning approaches may be more suitable when the number of variables far outnumbers the number of features -- the high dimensionality and multi-collinearity domain. In this domain, you could use machine learning for dimensional reduction of the analysis data set [39, 40] prior to estimating logistic regression model on only the remaining terms.

Since variable transformations and interactions are a staple of machine learning, we envision future model building approaches to iteratively transform and form important interactions between the predictors in a model. In addition to this, we can incorporate feature grouping or feature selection processes to further eliminate issues associated with multi-collinearity and make sure that we are capturing valuable interactions. Finally, we envision that future modeling approaches may be able to hybridize machine learning methods such as Classification and Regression Trees (CART) and traditional statistical models through the development of mechanisms that can automatically add interaction terms to generalized linear models [41]. We call for derivations of more higher-level interactions and variable transformations to further enhance traditional statistical procedures beyond the addition of interaction terms alone. In addition, we encourage the development of methods for rigorous mathematical characterization of hybrid procedures and the conditions and specifications under which they work best.

We acknowledge that the datasets used in this study were from a machine learning benchmark repository, which means that there may be some selection bias as to the suitability of each dataset for machine learning versus traditional statistical modeling approaches. For instance, other databases such as PMLB [42] have also been developed to benchmark machine learning algorithms. Also, we remain unconvinced that such an approach would scale to thousands of predictors on the basis of computational complexity associated with such an analysis and remaining issues with fitting a generalized linear model in the presence of excessive multicollinearity. We also assume that the stochastic estimation of feature importance using SHAP converges to an optimal solution; improperly run permutation importance methods may incorrectly specify the ideal set of interactions. In addition, because the acquired datasets were based on binary prediction tasks, we did not attempt to study approaches with continuous or multinomial outcomes. We neither explored nor discussed the impact of class imbalance on shapley value estimation, the accuracy of the Hybrid approach, and the explainability of the regression coefficients of extracted interactions (see Additional file 1 section “Discussion of the Rare Events Issue”) [43–45]. While this paper sought to motivate and demonstrate the capabilities of a hybrid approach on a binary outcome, our hybrid approach can also be used to build interpretable models with multinomial and continuous outcomes. Such capabilities can be readily utilized in practice from our software distribution. Finally, our approach focused on random forest as a representative of the ML approaches. Deep learning methodologies [46] can also be used for interaction extraction, and future work could adapt the output of deep learning models to suggest which interactions or other types of predictors to add just as considered herein with the case of random forests.

Conclusion

We demonstrated that machine learning techniques may not always include the optimal model for a biomedical problem. In cases where they are, there may be means from which to learn important interactions from the machine learning models and apply them to enhance statistical models. Biomedical analyses may benefit from entwining the algorithms acquired from machine learning with the simplicity and interpretability of statistical procedures. Such work may be a step in the right direction towards communicating helpful science to clinical and lay audiences alike, which can further build trust and acceptance for the incorporation of machine learning into biomedical clinical settings. Our procedure and its illustration are just scratching the surface of what can be achieved by hybrid procedures. We encourage readers to consider developing or adopting more hybrid statistical - ML procedures, especially in applications for which transparent interpretation of the results is needed.

Supplementary information

Additional file 1 Appendix Table 1. Glossary of Terms. Appendix Table 2. Pros and Cons of Logistic Regression, Decision Tree, and Random Forest Approaches. Appendix Figure 2. Characteristics of linear modeling approach (blue), decision trees (orange), random forest (green), original generated points (black); (a-b) Predictor X versus response Y for the a) linear continuous predictor, b) non-linear transformed predictor. Appendix Figure 2. Characteristics of generalized linear modeling approach (green), decision trees (purple), random forest (red); (a-b) Predictors X1 and X2 versus binary response Y for the a) linear continuous predictors, b) non-linear transformed predictors. Appendix Figure 3. Decision Diagram for Application of Hybrid Approach. Appendix Figure 4. Performance of Hybrid Approach for select datasets is on par with Random Forest for domain where Random Forest outperforms Logistic Regression by at least 2%; (a) 37 datasets selected with highest positive residual from model regressed on performance gains for Hybrid and Random Forest approaches in the aforementioned domain; (b) Boxenplots of predictive performance of the Logistic Regression, Random Forest and Hybrid methods for these 37 datasets; other comparison methods enforce stricter thresholds/cutoffs for performance gain. Appendix Figure 5. Comparison of Additional Machine Learning Modeling Approaches Across: (a) 277 benchmark datasets featured in the main text; (b) the 77 datasets that remain after selection of datasets where Random Forest significantly outperforms the traditional modeling approaches; the threshold for outperformance for Random Forest was greater than that utilized in previous comparison approaches for effect. Appendix Table 3. Comparison of median AUROC for each machine learning and statistical modeling approach across 277 benchmark datasets and a subset of these datasets where Random Forest significantly outperforms the traditional modeling approaches (n = 77); 95% confidence intervals were derived using a 1000-sample non-parametric bootstrap; the threshold for outperformance for Random Forest was greater than that utilized in previous comparison approaches for effect. Appendix Table 4. Pairwise comparison of median AUROC for each of the modeling approaches for the 277 benchmark datasets; 2-tailed non-parametric Mann-Whitney U tests were corrected using family-wise error rate Bonferroni correction to find median AUROCs that were different between the modeling approaches on the 0.05 alpha significance level; we note here that there still exists 61 datasets from which the Hybrid approach is still able to outperform the Random Forest Approach. Appendix Table 5. Pairwise comparison of median AUROC for each of the modeling approaches for a subset 277 benchmark datasets from which the Random Forest outperforms the traditional modeling approaches (n = 77); 2-tailed non-parametric Mann-Whitney U tests were corrected using family-wise error rate Bonferroni correction to find median AUROCs that were different between the modeling approaches on the 0.05 alpha significance level. Appendix Table 6. Top 10 largest odds-ratios of the Epistasis Logistic Regression Model coefficients after using LASSO to select predictors from a logistic regression model which included all pairwise predictors; note how all main effects have been excluded from this list. Appendix Figure 6. Cluster Heatmap of dataset characteristics of the 277 datasets; rows of matrix indicate datasets; columns of matrix indicate different properties of the dataset; columns were standardized to illustrate trends; left-hand yellow and blue labels were applied to indicate whether Random Forrest model outperformed Logistic Regression model (yellow for gain in performance; blue for no gain in performance, prediction to left of true difference in performance) for a given dataset; column colors indicate relative importance of dataset characteristics as identified by the Gini index.

Acknowledgements

Not Applicable.

Abbreviations

- AUROC

Area under the receiver operating curve

- CART

Classification and Regression Trees

- C-statistic

Concordance statistic

- CT

Computed Tomography

- EHR

Electronic Health Record

- LASSO

Least Absolute Shrinkage and Selection Operator

- MAR

Mean Absolute Error/Residual

- ML

Machine Learning

- PMLB

Penn Machine Learning Benchmarks

- SAFE

Surrogate Assisted Feature Extraction

- SAS

Statistical Analysis System

- SHAP

Shapley Additive Explanations

Authors’ contributions

JL and AJO designed the analytic plan, JL executed the analytic plan, and all authors edited and approved the final manuscript.

Funding

JL is supported through the Burroughs Wellcome Fund Big Data in the Life Sciences at Dartmouth.

Availability of data and materials

Project Name: InteractionTransformer.

Project home page:https://github.com/jlevy44/InteractionTransformer

Operating system(s): Platform independent.

Programming language: Python, R.

License: MIT.

The data used for evaluating the machine learning benchmark datasets are openly available for download at https://www.openml.org/ . We have listed the dataset IDs from which to download these datasets in our GitHub repository, here: https://github.com/jlevy44/InteractionTransformer.

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

Not applicable.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary information accompanies this paper at 10.1186/s12874-020-01046-3.

References

- 1.Travers C, Himmelstein Daniel S, Beaulieu-Jones Brett K, Kalinin Alexandr A, Do Brian T, Way Gregory P, et al. Opportunities and obstacles for deep learning in biology and medicine. J R Soc Interface. 2018;15:20170387. doi: 10.1098/rsif.2017.0387. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Levy JJ, Titus AJ, Petersen CL, Chen Y, Salas LA, Christensen BC. MethylNet: an automated and modular deep learning approach for DNA methylation analysis. BMC Bioinformatics. 2020;21:108. [DOI] [PMC free article] [PubMed]

- 3.Chen X, Ishwaran H. Random forests for genomic data analysis. Genomics. 2012;99:323–329. doi: 10.1016/j.ygeno.2012.04.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Jiang R, Tang W, Wu X, Fu W. A random forest approach to the detection of epistatic interactions in case-control studies. BMC Bioinformatics. 2009;10(Suppl 1):S65. doi: 10.1186/1471-2105-10-S1-S65. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Cheng J-Z, Ni D, Chou Y-H, Qin J, Tiu C-M, Chang Y-C, et al. Computer-aided diagnosis with deep learning architecture: applications to breast lesions in US images and pulmonary nodules in CT scans. Sci Rep. 2016;6:1–13. doi: 10.1038/s41598-016-0001-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Coudray N, Ocampo PS, Sakellaropoulos T, Narula N, Snuderl M, Fenyö D, et al. Classification and mutation prediction from non–small cell lung cancer histopathology images using deep learning. Nat Med. 2018;24:1559–1567. doi: 10.1038/s41591-018-0177-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Tang B, Li A, Li B, Wang M. CapSurv: capsule network for survival analysis with whole slide pathological images. IEEE Access. 2019;7:26022–26030. doi: 10.1109/ACCESS.2019.2901049. [DOI] [Google Scholar]

- 8.Ishwaran H, Kogalur UB, Blackstone EH, Lauer MS. Random survival forests. Ann Appl Stat. 2008;2:841–860. doi: 10.1214/08-AOAS169. [DOI] [Google Scholar]

- 9.Shickel B, Tighe P, Bihorac A, Rashidi P. Deep EHR: A survey of recent advances in deep learning techniques for electronic health record (EHR) analysis. IEEE J Biomed Health Inform. 2018;22:1589–1604. doi: 10.1109/JBHI.2017.2767063. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Couronné R, Probst P, Boulesteix A-L. Random forest versus logistic regression: a large-scale benchmark experiment. BMC Bioinformatics. 2018;19:270. doi: 10.1186/s12859-018-2264-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Christodoulou E, Ma J, Collins GS, Steyerberg EW, Verbakel JY, Van Calster B. A systematic review shows no performance benefit of machine learning over logistic regression for clinical prediction models. J Clin Epidemiol. 2019;110:12–22. doi: 10.1016/j.jclinepi.2019.02.004. [DOI] [PubMed] [Google Scholar]

- 12.Khattree R, Naik DN. Applied multivariate statistics with SAS software, second edition. 2. Cary: SAS Institute Inc.; 2018. [Google Scholar]

- 13.Johnsson T. A procedure for stepwise regression analysis. Stat Pap. 1992;33:21–29. doi: 10.1007/BF02925308. [DOI] [Google Scholar]

- 14.Hocking RR. A biometrics invited paper. The analysis and selection of variables in linear regression. Biometrics. 1976;32:1–49. doi: 10.2307/2529336. [DOI] [Google Scholar]

- 15.Efroymson MA. Multiple regression analysis. Mathematical Methods for Digital Computers. 1960. pp. 191–203. [Google Scholar]

- 16.Kleinbaum DG, Klein M. Introduction to logistic regression. In: Kleinbaum DG, Klein M, editors. Logistic regression: a self-learning text. New York: Springer; 2010. pp. 1–39. [Google Scholar]

- 17.Quinlan JR. Induction of decision trees. Mach Learn. 1986;1:81–106. [Google Scholar]

- 18.Breiman L. Random Forests. Mach Learn. 2001;45:5–32. doi: 10.1023/A:1010933404324. [DOI] [Google Scholar]

- 19.Ho TK. Random Decision Forests. In: Proceedings of the Third International Conference on Document Analysis and Recognition (Volume 1). Washington, DC: IEEE Computer Society; 1995.Volume 1 p. 278–. http://dl.acm.org/citation.cfm?id=844379.844681. Accessed 11 Apr 2019.

- 20.Gosiewska A, Gacek A, Lubon P, Biecek P. SAFE ML: Surrogate Assisted Feature Extraction for Model Learning. arXiv. 2019; 190211035 [cs, stat]. http://arxiv.org/abs/1902.11035. Accessed 5 Nov 2019.

- 21.Lundberg SM, Lee S-I. A unified approach to interpreting model predictions. In: Guyon I, Luxburg UV, Bengio S, Wallach H, Fergus R, Vishwanathan S, et al, editors. Advances in neural information processing systems 30. Red Hook: Curran Associates, Inc; 2017. p. 4765–74. http://papers.nips.cc/paper/7062-a-unified-approach-to-interpreting-model-predictions.pdf. Accessed 9 June 2019.

- 22.Lundberg SM, Erion GG, Lee S-I. Consistent Individualized Feature Attribution for Tree Ensembles. arXiv. 2019; 180203888 [cs, stat]. . http://arxiv.org/abs/1802.03888. Accessed 5 Nov 2019.

- 23.Lundberg SM, Erion G, Chen H, DeGrave A, Prutkin JM, Nair B, et al. Explainable AI for Trees: From Local Explanations to Global Understanding. arXiv. 2019; 190504610 [cs, stat]. http://arxiv.org/abs/1905.04610. Accessed 5 Nov 2019. [DOI] [PMC free article] [PubMed]

- 24.Hinton GE. Connectionist learning procedures. Artif Intell. 1989;40:185–234. doi: 10.1016/0004-3702(89)90049-0. [DOI] [Google Scholar]

- 25.Williams CKI, Seeger M. Using the Nyström Method to Speed Up Kernel Machines. In: Leen TK, Dietterich TG, Tresp V, editors. Advances in Neural Information Processing Systems 13. Cambridge: MIT Press; 2001. p. 682–8. http://papers.nips.cc/paper/1866-using-the-nystrom-method-to-speed-up-kernel-machines.pdf. Accessed 2 Apr 2020.

- 26.Maalouf M, Homouz D. Kernel ridge regression using truncated newton method. Knowl-Based Syst. 2014;71:339–344. doi: 10.1016/j.knosys.2014.08.012. [DOI] [Google Scholar]

- 27.Durrande N, Ginsbourger D, Roustant O, Carraro L. ANOVA kernels and RKHS of zero mean functions for model-based sensitivity analysis. arXiv. 2012; 11063571 [stat]. . http://arxiv.org/abs/1106.3571. Accessed 2 Apr 2020.

- 28.Platt JC. Probabilistic outputs for support vector machines and comparisons to regularized likelihood methods. In: Advances in Large Margin Classifiers. Cambridge: MIT Press; 1999. p. 61–74.

- 29.Lunetta KL, Hayward LB, Segal J, Van Eerdewegh P. Screening large-scale association study data: exploiting interactions using random forests. BMC Genet. 2004;5:32. doi: 10.1186/1471-2156-5-32. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Bischl B, Casalicchio G, Feurer M, Hutter F, Lang M, Mantovani RG, et al. OpenML Benchmarking Suites. arXiv. 2019; 170803731 [cs, stat]. . http://arxiv.org/abs/1708.03731. Accessed 5 Nov 2019.

- 31.Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, et al. Scikit-learn: Machine Learning in Python. J Mach Learn Res. 2011;12:85:2825−30.

- 32.Lemaître G, Nogueira F, Aridas CK. Imbalanced-learn: a Python toolbox to tackle the curse of imbalanced datasets in machine learning. J Mach Learn Res. 2017;18:1–5. [Google Scholar]

- 33.Cordell HJ. Epistasis: what it means, what it doesn’t mean, and statistical methods to detect it in humans. Hum Mol Genet. 2002;11:2463–2468. doi: 10.1093/hmg/11.20.2463. [DOI] [PubMed] [Google Scholar]

- 34.Sailer ZR, Harms MJ. Detecting high-order epistasis in nonlinear genotype-phenotype maps. Genetics. 2017;205:1079–1088. doi: 10.1534/genetics.116.195214. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Schulz LO, Chaudhari LS. High-risk populations: the Pimas of Arizona and Mexico. Curr Obes Rep. 2015;4:92–98. doi: 10.1007/s13679-014-0132-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Acton KJ, Ríos Burrows N, Moore K, Querec L, Geiss LS, Engelgau MM. Trends in diabetes prevalence among American Indian and Alaska native children, adolescents, and young adults. Am J Public Health. 2002;92:1485–1490. doi: 10.2105/AJPH.92.9.1485. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Olson RS, Sipper M, Cava WL, Tartarone S, Vitale S, Fu W, et al. A system for accessible artificial intelligence. In: Banzhaf W, Olson RS, Tozier W, Riolo R, et al., editors. Genetic programming theory and practice XV. Cham: Springer International Publishing; 2018. pp. 121–134. [Google Scholar]

- 38.Azur MJ, Stuart EA, Frangakis C, Leaf PJ. Multiple imputation by chained equations: what is it and how does it work? Int J Methods Psychiatr Res. 2011;20:40–49. doi: 10.1002/mpr.329. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Schölkopf B, Smola A, Müller K-R. Kernel principal component analysis. In: Gerstner W, Germond A, Hasler M, Nicoud J-D, editors. Artificial neural networks — ICANN’97. Berlin: Springer; 1997. pp. 583–588. [Google Scholar]

- 40.McInnes L, Healy J, Melville J. UMAP: Uniform Manifold Approximation and Projection for Dimension Reduction. arXiv. 2018; 180203426 [cs, stat]. . http://arxiv.org/abs/1802.03426. Accessed 5 Mar 2019.

- 41.Olson RS, Moore JH. TPOT: a tree-based pipeline optimization tool for automating machine learning. In: Hutter F, Kotthoff L, Vanschoren J, editors. Automated machine learning: methods, systems, challenges. Cham: Springer International Publishing; 2019. pp. 151–160. [Google Scholar]

- 42.Olson RS, La Cava W, Orzechowski P, Urbanowicz RJ, Moore JH. PMLB: a large benchmark suite for machine learning evaluation and comparison. BioData Mining. 2017;10:36. doi: 10.1186/s13040-017-0154-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Maalouf M, Trafalis T. Robust weighted kernel logistic regression in imbalanced and rare events data. Comput Stat Data Anal. 2011;55:168–183. doi: 10.1016/j.csda.2010.06.014. [DOI] [Google Scholar]

- 44.Komarek P, Moore AW. Proceedings of the fifth IEEE international conference on data mining. USA: IEEE Computer Society; 2005. Making logistic regression a Core data mining tool with TR-IRLS; pp. 685–688. [Google Scholar]

- 45.King G, Zeng L. Logistic regression in rare events data. Polit Anal. 2001;9:137–163. doi: 10.1093/oxfordjournals.pan.a004868. [DOI] [Google Scholar]

- 46.LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Additional file 1 Appendix Table 1. Glossary of Terms. Appendix Table 2. Pros and Cons of Logistic Regression, Decision Tree, and Random Forest Approaches. Appendix Figure 2. Characteristics of linear modeling approach (blue), decision trees (orange), random forest (green), original generated points (black); (a-b) Predictor X versus response Y for the a) linear continuous predictor, b) non-linear transformed predictor. Appendix Figure 2. Characteristics of generalized linear modeling approach (green), decision trees (purple), random forest (red); (a-b) Predictors X1 and X2 versus binary response Y for the a) linear continuous predictors, b) non-linear transformed predictors. Appendix Figure 3. Decision Diagram for Application of Hybrid Approach. Appendix Figure 4. Performance of Hybrid Approach for select datasets is on par with Random Forest for domain where Random Forest outperforms Logistic Regression by at least 2%; (a) 37 datasets selected with highest positive residual from model regressed on performance gains for Hybrid and Random Forest approaches in the aforementioned domain; (b) Boxenplots of predictive performance of the Logistic Regression, Random Forest and Hybrid methods for these 37 datasets; other comparison methods enforce stricter thresholds/cutoffs for performance gain. Appendix Figure 5. Comparison of Additional Machine Learning Modeling Approaches Across: (a) 277 benchmark datasets featured in the main text; (b) the 77 datasets that remain after selection of datasets where Random Forest significantly outperforms the traditional modeling approaches; the threshold for outperformance for Random Forest was greater than that utilized in previous comparison approaches for effect. Appendix Table 3. Comparison of median AUROC for each machine learning and statistical modeling approach across 277 benchmark datasets and a subset of these datasets where Random Forest significantly outperforms the traditional modeling approaches (n = 77); 95% confidence intervals were derived using a 1000-sample non-parametric bootstrap; the threshold for outperformance for Random Forest was greater than that utilized in previous comparison approaches for effect. Appendix Table 4. Pairwise comparison of median AUROC for each of the modeling approaches for the 277 benchmark datasets; 2-tailed non-parametric Mann-Whitney U tests were corrected using family-wise error rate Bonferroni correction to find median AUROCs that were different between the modeling approaches on the 0.05 alpha significance level; we note here that there still exists 61 datasets from which the Hybrid approach is still able to outperform the Random Forest Approach. Appendix Table 5. Pairwise comparison of median AUROC for each of the modeling approaches for a subset 277 benchmark datasets from which the Random Forest outperforms the traditional modeling approaches (n = 77); 2-tailed non-parametric Mann-Whitney U tests were corrected using family-wise error rate Bonferroni correction to find median AUROCs that were different between the modeling approaches on the 0.05 alpha significance level. Appendix Table 6. Top 10 largest odds-ratios of the Epistasis Logistic Regression Model coefficients after using LASSO to select predictors from a logistic regression model which included all pairwise predictors; note how all main effects have been excluded from this list. Appendix Figure 6. Cluster Heatmap of dataset characteristics of the 277 datasets; rows of matrix indicate datasets; columns of matrix indicate different properties of the dataset; columns were standardized to illustrate trends; left-hand yellow and blue labels were applied to indicate whether Random Forrest model outperformed Logistic Regression model (yellow for gain in performance; blue for no gain in performance, prediction to left of true difference in performance) for a given dataset; column colors indicate relative importance of dataset characteristics as identified by the Gini index.

Data Availability Statement

Project Name: InteractionTransformer.

Project home page:https://github.com/jlevy44/InteractionTransformer

Operating system(s): Platform independent.

Programming language: Python, R.

License: MIT.

The data used for evaluating the machine learning benchmark datasets are openly available for download at https://www.openml.org/ . We have listed the dataset IDs from which to download these datasets in our GitHub repository, here: https://github.com/jlevy44/InteractionTransformer.