Abstract

Big data and machine learning are having an impact on most aspects of modern life, from entertainment and commerce to healthcare. Netflix knows which films and series people prefer to watch, Amazon knows which items people like to buy, and when and where, and Google knows which symptoms and conditions people are searching for. All this data can be used for very detailed personal profiling, which may be of great value for behavioral understanding and targeting but also has potential for predicting healthcare trends. There is great optimism that the application of artificial intelligence (AI) can provide substantial improvements in all areas of healthcare, from diagnostics to treatment. It is generally believed that AI tools will facilitate and enhance human work and not replace the work of physicians and other healthcare staff as such. AI is ready to support healthcare personnel with a variety of tasks, from administrative workflow to clinical documentation and patient outreach, as well as specialized support such as image analysis, medical device automation, and patient monitoring. In this chapter, some of the major applications of AI in healthcare will be discussed, covering both the applications that are directly associated with healthcare and those in the healthcare value chain, such as drug development and ambient assisted living.

Keywords: Artificial intelligence, healthcare applications, machine learning, precision medicine, ambient assisted living, natural language processing, machine vision

2.1. The new age of healthcare

Big data and machine learning are having an impact on most aspects of modern life, from entertainment and commerce to healthcare. Netflix knows which films and series people prefer to watch, Amazon knows which items people like to buy, and when and where, and Google knows which symptoms and conditions people are searching for. All this data can be used for very detailed personal profiling, which may be of great value for behavioral understanding and targeting but also has potential for predicting healthcare trends. There is great optimism that the application of artificial intelligence (AI) can provide substantial improvements in all areas of healthcare, from diagnostics to treatment. There is already a large amount of evidence that AI algorithms perform on par with or better than humans in various tasks, for instance, in analyzing medical images or correlating symptoms and biomarkers from electronic medical records (EMRs) with the characterization and prognosis of the disease [1].

The demand for healthcare services is ever increasing, and many countries are experiencing a shortage of healthcare practitioners, especially physicians. Healthcare institutions are also struggling to keep up with all the new technological developments and with patients' high expectations regarding levels of service and outcomes, expectations shaped by consumer products such as those of Amazon and Apple [2]. The advances in wireless technology and smartphones have provided opportunities for on-demand healthcare services using health tracking apps and search platforms and have also enabled a new form of healthcare delivery, via remote interactions, available anywhere and anytime. Such services are relevant for underserved regions and places lacking specialists, and they help reduce costs and prevent unnecessary exposure to contagious illnesses at the clinic. Telehealth technology is also relevant in developing countries, where the healthcare system is expanding and where healthcare infrastructure can be designed to meet current needs [3]. While the concept is clear, these solutions still need substantial independent validation to prove patient safety and efficacy.

The healthcare ecosystem is realizing the importance of AI-powered tools in next-generation healthcare technology. It is believed that AI can bring improvements to any process within healthcare operation and delivery. For instance, the cost savings that AI can bring to the healthcare system are an important driver for implementing AI applications. It is estimated that AI applications could cut annual US healthcare costs by USD 150 billion in 2026. A large part of these cost reductions stems from changing the healthcare model from a reactive to a proactive approach, focusing on health management rather than disease treatment. This is expected to result in fewer hospitalizations, fewer doctor visits, and fewer treatments. AI-based technology will have an important role in helping people stay healthy via continuous monitoring and coaching and will ensure earlier diagnosis, tailored treatments, and more efficient follow-ups.

The AI-associated healthcare market is expected to grow rapidly and reach USD 6.6 billion by 2021, corresponding to a 40% compound annual growth rate [4].
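A compound annual growth rate r links a start value V_0 and an end value V_n after n years through V_n = V_0 × (1 + r)^n. The short Python sketch below applies this relation to the projection above. The seven-year horizon is an assumption made only for illustration, since the text gives the 2021 target and the rate but not the base year.

```python
def compound(start_value: float, rate: float, years: int) -> float:
    """Value after `years` of growth at a constant annual `rate` (0.40 means 40%)."""
    return start_value * (1.0 + rate) ** years


def implied_start(end_value: float, rate: float, years: int) -> float:
    """Starting value that grows to `end_value` after `years` at a constant `rate`."""
    return end_value / (1.0 + rate) ** years


def cagr(start_value: float, end_value: float, years: int) -> float:
    """Constant annual growth rate that turns `start_value` into `end_value`."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Hypothetical horizon: the base year is not stated in the text.
HORIZON_YEARS = 7
base = implied_start(6.6, 0.40, HORIZON_YEARS)  # USD billion
print(f"Implied market size {HORIZON_YEARS} years earlier: {base:.2f} bn USD")
print(f"Round trip: {compound(base, 0.40, HORIZON_YEARS):.1f} bn USD "
      f"at {cagr(base, 6.6, HORIZON_YEARS):.0%} CAGR")
```

Because growth compounds, a 40% annual rate multiplies the market roughly tenfold over seven years, which is why such projections are very sensitive to the assumed horizon.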

2.1.1. Technological advancements

There have been a great number of technological advances in the field of AI and data science in the past decade. Although research in AI for various applications has been ongoing for several decades, the current wave of AI hype is different from the previous ones. A perfect combination of increased computer processing speed, larger data collections and data libraries, and a large AI talent pool has enabled rapid development of AI tools and technology, including within healthcare [5]. This is expected to bring about a paradigm shift in the level of AI technology and in its adoption and impact on society.

In particular, the development of deep learning (DL) has changed the way we look at AI tools today and is the reason for much of the recent excitement surrounding AI applications. DL makes it possible to find correlations that were too complex to capture with previous machine learning algorithms. DL is largely based on artificial neural networks: whereas earlier neural networks had only 3–5 layers of connections, DL networks have more than 10 layers. This corresponds to the simulation of artificial neurons on the order of millions.
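To make "layers" and network size concrete, the Python sketch below counts the neurons and trainable parameters of a plain fully connected network: a layer with n_in inputs and n_out neurons has n_in × n_out weights plus n_out biases. The layer widths are hypothetical and chosen only to contrast a shallow network with a deeper one; real DL architectures (for example CNNs) share weights and are counted differently.

```python
def dense_network_size(widths):
    """Count neurons and trainable parameters of a fully connected network.

    widths[0] is the number of inputs; widths[1:] are the sizes of the
    successive layers. Each layer with n_in inputs and n_out neurons has
    n_in * n_out weights plus n_out biases.
    """
    neurons = sum(widths[1:])
    parameters = sum(n_in * n_out + n_out for n_in, n_out in zip(widths, widths[1:]))
    return neurons, parameters


# Hypothetical layer widths, chosen only to contrast depth.
shallow = [784, 128, 10]            # 2 layers of connections
deep = [784] + [4096] * 12 + [10]   # 13 layers of connections

for name, widths in [("shallow", shallow), ("deep", deep)]:
    neurons, params = dense_network_size(widths)
    print(f"{name}: {len(widths) - 1} layers, {neurons:,} neurons, {params:,} parameters")
```

The parameter count grows with the product of adjacent layer widths, so adding wide layers increases model size (and the amount of training data needed) much faster than the neuron count alone suggests.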

Numerous companies are frontrunners in this area, including IBM with Watson and Google's DeepMind. These companies have shown that their AI can beat humans in selected tasks and activities, including chess, Go, and other games. Both IBM Watson and DeepMind are currently being used for many healthcare-related applications. IBM Watson is being investigated for diabetes management, advanced cancer care and modeling, and drug discovery, but has yet to show clinical value to patients. DeepMind is also being explored for applications including a mobile medical assistant, diagnostics based on medical imaging, and prediction of patient deterioration [6], [7].

Many data- and computation-based technologies have followed exponential growth trajectories. The best-known example is Moore's law, which describes the exponential growth in the performance of computer chips. Many consumer-oriented apps have experienced similar exponential growth by offering affordable services. In healthcare and life science, the mapping of the human genome and the digitization of medical data could result in a similar growth pattern as genetic sequencing and profiling become cheaper and electronic health records and similar systems serve as platforms for data collection. Although these areas may seem small at first, exponential growth eventually takes over. Humans are generally poor at understanding exponential trends and tend to overestimate the impact of technology in the short term (e.g., 1 year) while underestimating its long-term (e.g., 10 years) effect.
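The short-term versus long-term misjudgment can be illustrated numerically. The Python sketch below compares a linear intuition with exponential growth; both the doubling period of 2 years and the linear expectation of +100% of the starting level per year are assumptions chosen for illustration, not figures from the text.

```python
def exponential_factor(years: float, doubling_period: float = 2.0) -> float:
    """Growth factor if capability doubles every `doubling_period` years."""
    return 2.0 ** (years / doubling_period)


def linear_factor(years: float, annual_gain: float = 1.0) -> float:
    """Growth factor if capability rises by `annual_gain` times the starting level each year."""
    return 1.0 + annual_gain * years


for horizon in (1, 10):
    lin, expo = linear_factor(horizon), exponential_factor(horizon)
    verdict = "overestimates" if lin > expo else "underestimates"
    print(f"{horizon:>2} years: linear guess x{lin:.1f}, "
          f"exponential x{expo:.1f} -> linear intuition {verdict}")
```

Under these assumptions the linear guess is too optimistic after 1 year (a factor of 2 versus about 1.4) but far too pessimistic after 10 years (11 versus 32).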

2.1.2. Artificial intelligence applications in healthcare

It is generally believed that AI tools will facilitate and enhance human work and not replace the work of physicians and other healthcare staff as such. AI is ready to support healthcare personnel with a variety of tasks from administrative workflow to clinical documentation and patient outreach as well as specialized support such as in image analysis, medical device automation, and patient monitoring.

There are different opinions on the most beneficial applications of AI for healthcare purposes. Forbes stated in 2018 that the most important areas would be administrative workflows, image analysis, robotic surgery, virtual assistants, and clinical decision support [8]. A 2018 report by Accenture mentioned the same areas and also included connected machines, dosage error reduction, and cybersecurity [9]. A 2019 report from McKinsey identifies connected and cognitive devices, targeted and personalized medicine, robotics-assisted surgery, and electroceuticals as important areas [10].

In the next sections, some of the major applications of AI in healthcare will be discussed covering both the applications that are directly associated with healthcare and other applications in the healthcare value chain such as drug development and ambient assisted living (AAL).

2.2. Precision medicine

Precision medicine provides the possibility of tailoring healthcare interventions to individuals or groups of patients based on their disease profile, diagnostic or prognostic information, or their treatment response. Tailor-made treatment will take into consideration genomic variations as well as contributing factors of medical treatment such as age, gender, geography, race, family history, immune profile, metabolic profile, microbiome, and environmental vulnerability. The objective of precision medicine is to use individual biology rather than population biology at all stages of a patient's medical journey. This means collecting data from individuals, such as genetic information, physiological monitoring data, or EMR data, and tailoring their treatment based on advanced models. Advantages of precision medicine include reduced healthcare costs, fewer adverse drug responses, and enhanced efficacy of drug action [11]. Innovation in precision medicine is expected to provide great benefits to patients and change the way health services are delivered and evaluated.

There are many types of precision medicine initiatives and overall, they can be divided into three types of clinical areas: complex algorithms, digital health applications, and “omics”-based tests.

Complex algorithms: Machine learning algorithms are used with large datasets such as genetic information, demographic data, or electronic health records to provide prediction of prognosis and optimal treatment strategy.

Digital health applications: Healthcare apps record and process data entered by patients, such as food intake, emotional state, or activity, as well as health monitoring data from wearables, mobile sensors, and the like. Some of these apps fall under precision medicine and use machine learning algorithms to find trends in the data, make better predictions, and give personalized treatment advice.

Omics-based tests: Genetic information from a population pool is used with machine learning algorithms to find correlations and predict treatment responses for the individual patient. In addition to genetic information, other biomarkers such as protein expression, gut microbiome, and metabolic profile are also employed with machine learning to enable personalized treatments [12].
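The idea shared by these three areas, predicting an individual's outcome from data pooled across a population, can be sketched with one of the simplest machine learning methods, k-nearest neighbours. In the Python sketch below, the features, their scaling, and all patient records are hypothetical; actual precision medicine models use far richer data and typically more sophisticated, clinically validated algorithms.

```python
import math
from collections import Counter

# Hypothetical population pool: (scaled features, observed treatment response).
# Features: [age / 100, biomarker level scaled to 0-1, carries a given variant (0/1)]
POPULATION = [
    ([0.45, 0.80, 1], "responder"),
    ([0.50, 0.75, 1], "responder"),
    ([0.62, 0.70, 1], "responder"),
    ([0.40, 0.20, 0], "non-responder"),
    ([0.70, 0.30, 0], "non-responder"),
    ([0.55, 0.25, 0], "non-responder"),
]


def predict_response(patient, population=POPULATION, k=3):
    """k-nearest-neighbour vote: predict the most common response among the
    k patients in the pool whose features are closest (Euclidean distance)."""
    nearest = sorted(population, key=lambda item: math.dist(patient, item[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


print(predict_response([0.48, 0.78, 1]))  # new patient resembling the responders
print(predict_response([0.60, 0.22, 0]))  # new patient resembling the non-responders
```

The choice of k and of how features are scaled strongly affects which patients count as "similar", which is one reason such models need large, representative population data.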

Here, we explore selected therapeutic applications of AI including genetics-based solutions and drug discovery.

2.2.1. Genetics-based solutions

It is believed that within the next decade a large part of the global population will be offered full genome sequencing, either at birth or in adult life. Such genome sequencing is estimated to take up 100–150 GB of data and will provide a powerful tool for precision medicine. Work on linking genomic and phenotypic information is still ongoing. The current clinical system would need to be redesigned to use such genomic data and realize its benefits [13].

Deep Genomics, a health-tech company, is looking at identifying patterns in vast genetic datasets as well as EMRs in order to link the two with regard to disease markers. The company uses these correlations to identify therapeutic targets, either existing therapeutic targets or new therapeutic candidates, with the purpose of developing individualized genetic medicines. It uses AI in every step of its drug discovery and development process, including target discovery, lead optimization, toxicity assessment, and innovative trial design.

Many inherited diseases result in symptoms without a specific diagnosis, and interpreting whole genome data is still challenging because of the many possible genetic profiles. Precision medicine, combining full genome sequencing with AI, can improve the identification of genetic mutations.

2.2.2. Drug discovery and development

Drug discovery and development is an immensely long, costly, and complex process that can often take more than 10 years from identification of molecular targets until a drug product is approved and marketed. Any failure during this process has a large financial impact, and in fact most drug candidates fail sometime during development and never make it onto the market. On top of that are the ever-increasing regulatory obstacles and the difficulties in continuously discovering drug molecules that are substantially better than what is currently marketed. This makes the drug innovation process both challenging and inefficient with a high price tag on any new drug products that make it onto the market [14].

There has been a substantial increase in the amount of available data on drug compound activity and in biomedical data in the past few years. This is due to increasing automation and the introduction of new experimental techniques, including high-throughput screening and parallel synthesis. However, the large-scale chemistry data must be mined to efficiently classify potential drug compounds, and machine learning techniques have shown great potential for this [15]. Methods such as support vector machines, neural networks, and random forests have all been used to develop models to aid drug discovery since the 1990s. More recently, DL has begun to be implemented owing to the increased amount of data and continuous improvements in computing power. There are various tasks in the drug discovery process that machine learning can streamline, including drug compound property and activity prediction, de novo design of drug compounds, drug–receptor interactions, and drug reaction prediction [16].

The drug molecules and the associated features used in the in silico models are transformed into vector format so they can be read by the learning systems. Generally, the data used here include molecular descriptors (e.g., physicochemical properties) and molecular fingerprints (molecular structure) as well as simplified molecular input line entry system (SMILES) strings and grids for convolutional neural networks (CNNs) [17].
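The transformation into vector format can be sketched for SMILES strings. The Python example below shows two simple encodings: a character-level one-hot matrix of the kind fed to sequence models, and a toy hashed bit vector. The alphabet, vector length, and n-gram scheme are assumptions for illustration; practical pipelines usually compute descriptors and circular (Morgan/ECFP-type) fingerprints with a cheminformatics toolkit such as RDKit rather than hashing raw characters.

```python
import zlib


def one_hot_smiles(smiles, alphabet, max_len):
    """Encode a SMILES string as a max_len x len(alphabet) one-hot matrix.

    Strings longer than max_len are truncated; shorter ones are padded with
    all-zero rows. Characters outside `alphabet` raise a KeyError.
    """
    index = {ch: i for i, ch in enumerate(alphabet)}
    matrix = []
    for ch in smiles[:max_len]:
        row = [0] * len(alphabet)
        row[index[ch]] = 1
        matrix.append(row)
    matrix.extend([0] * len(alphabet) for _ in range(max_len - len(matrix)))
    return matrix


def hashed_fingerprint(smiles, n_bits=64, max_n=3):
    """Toy fixed-length bit vector: hash every character n-gram (n = 1..max_n)
    of the SMILES string into one of n_bits positions using CRC32 (deterministic).
    This is NOT a chemically aware fingerprint such as ECFP/Morgan."""
    bits = [0] * n_bits
    for n in range(1, max_n + 1):
        for i in range(len(smiles) - n + 1):
            bits[zlib.crc32(smiles[i:i + n].encode("utf-8")) % n_bits] = 1
    return bits


ALPHABET = "CNOcn()=#1"  # hypothetical alphabet, just large enough for the example
aspirin = "CC(=O)OC1=CC=CC=C1C(=O)O"
print(len(one_hot_smiles(aspirin, ALPHABET, max_len=40)))  # 40 rows
print(sum(hashed_fingerprint(aspirin)), "of 64 bits set")
```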

2.2.2.1. Drug property and activity prediction

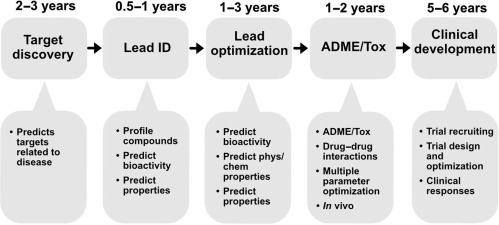

The properties and activity of a drug molecule are important to know in order to assess its behavior in the human body. Machine learning-based techniques have been used to assess the biological activity; absorption, distribution, metabolism, and excretion (ADME) characteristics; and physicochemical properties of drug molecules (Fig. 2.1). In recent years, several libraries of chemical and biological data, including ChEMBL and PubChem, have become available, storing information on millions of molecules for various disease targets. These libraries are machine-readable and are used to build machine learning models for drug discovery. For instance, CNNs have been used to generate molecular fingerprints from a large set of molecular graphs containing information about each atom in the molecule. These neural fingerprints are then used to predict the characteristics of a given molecule. In this way, molecular properties including octanol/water partitioning, solubility, melting point, and biological activity can be evaluated, as demonstrated by Coley et al. and others, and used to predict new features of drug molecules [18]. The predictions can also be combined with a scoring function to select molecules with desirable biological activity and physicochemical properties. Currently, most newly discovered drugs have a complex structure and/or undesirable properties, including poor solubility, low stability, or poor absorption.

Figure 2.1.

Machine learning opportunities within the small molecule drug discovery and development process.
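The principle of predicting a property of a new molecule from similar, already characterized molecules can be sketched without a neural network. The Python example below uses Tanimoto similarity between fingerprint bit sets and a similarity-weighted k-nearest-neighbour average. This is a deliberately simple stand-in for the CNN-based neural fingerprints described above, and the library entries and property values are hypothetical.

```python
def tanimoto(a, b):
    """Tanimoto (Jaccard) similarity between two sets of 'on' fingerprint bits."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


# Hypothetical training library: fingerprint bits -> measured property (e.g., log solubility).
LIBRARY = [
    ({1, 4, 7, 9}, -1.2),
    ({1, 4, 7, 12}, -1.5),
    ({2, 5, 8, 11}, -3.9),
    ({2, 5, 8, 13}, -4.1),
]


def predict_property(query, library=LIBRARY, k=2):
    """Similarity-weighted mean of the property values of the k most similar molecules."""
    ranked = sorted(library, key=lambda item: tanimoto(query, item[0]), reverse=True)[:k]
    weights = [tanimoto(query, fp) for fp, _ in ranked]
    if sum(weights) == 0:  # no shared bits: fall back to a plain average
        return sum(value for _, value in ranked) / len(ranked)
    return sum(w * value for w, (_, value) in zip(weights, ranked)) / sum(weights)


print(predict_property({1, 4, 7}))
```

A scoring function of the kind mentioned above could then rank candidate molecules by such predicted values.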

Machine learning has also been implemented to assess the toxicity of molecules, for instance, using DeepTox, a DL-based model for evaluating the toxic effects of compounds based on a dataset containing many drug molecules [19]. Another platform called MoleculeNet is also used to translate two-dimensional molecular structures into novel features/descriptors, which can then be used in predicting toxicity of the given molecule. The MoleculeNet platform is built on data from various public databases and more than 700,000 compounds have already been tested for toxicity or other properties [20].
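Toxicity prediction is at heart a classification problem: map a molecular representation to a probability of a toxic effect. DeepTox uses deep multitask networks; the Python sketch below swaps in the simplest related model, a logistic regression trained by gradient descent, to show the mechanics. The 3-bit fingerprints and the "toxicophore" bit are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(X, y, lr=0.5, epochs=2000):
    """Full-batch gradient descent on the mean log-loss of a logistic model."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def toxic_probability(x, w, b):
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Hypothetical 3-bit fingerprints; bit 0 marks a made-up "toxicophore" substructure.
X = [[1, 0, 1], [1, 1, 0], [0, 1, 1], [0, 0, 1]]
y = [1, 1, 0, 0]
w, b = train_logistic(X, y)
for x, label in zip(X, y):
    verdict = "toxic" if toxic_probability(x, w, b) > 0.5 else "non-toxic"
    print(x, verdict, "(label:", label, ")")
```

A multitask model such as DeepTox predicts many toxicity endpoints at once from shared hidden layers, but each output is still a probability produced in the same way by a sigmoid.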

2.2.2.2. De novo design through deep learning

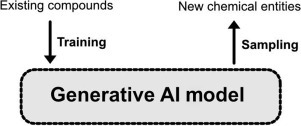

Another interesting application of DL in drug discovery is the generation of new chemical structures through neural networks (Fig. 2.2). Several DL-based techniques have been proposed for molecular de novo design. This also includes protein engineering, involving the molecular design of proteins with specific binding properties or functions.

Figure 2.2.

Illustration of the generative artificial intelligence concept for de novo design. Training data of molecular structures are used to emit new chemical entities by sampling.

Here, variational autoencoders and adversarial autoencoders are often used to design new molecules in an automated process by fitting the design model to large datasets of drug molecules. Autoencoders are a type of neural network for unsupervised learning; they are also used, for instance, to generate images of fictional human faces. The autoencoders are trained on many drug molecule structures, and the decoder, fed with variables sampled from the learned latent space, then serves as the generative model. As an example, the druGAN program used adversarial autoencoders to generate new molecular fingerprints and drug designs incorporating features such as solubility and absorption, based on predefined anticancer drug properties. These results suggest a substantial improvement in the efficiency of generating new drug designs with specific properties [21]. Blaschke et al. also applied adversarial autoencoders and Bayesian optimization to generate ligands specific to the dopamine type 2 receptor [22]. Merk et al. trained a recurrent neural network on a large number of bioactive compounds represented as SMILES strings. This model was then fine-tuned to recognize retinoid X receptor and peroxisome proliferator-activated receptor agonists. The identified compounds were synthesized and demonstrated potent receptor modulatory activity in in vitro assays [23].
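The "train on known structures, then emit new ones by sampling" loop of Fig. 2.2 can be illustrated with a much simpler generative model than an autoencoder or a recurrent neural network. The Python sketch below substitutes a character-level Markov chain over SMILES strings: it records which character follows each two-character context and then samples new strings. The training strings are hypothetical, and, unlike the DL methods above, nothing here guarantees chemically valid output; generated strings would have to be checked with a cheminformatics toolkit.

```python
import random
from collections import defaultdict

START, END = "^", "$"  # padding symbols assumed not to occur in the SMILES


def train_char_model(smiles_list, order=2):
    """Record which character follows each `order`-character context."""
    transitions = defaultdict(list)
    for smiles in smiles_list:
        padded = START * order + smiles + END
        for i in range(order, len(padded)):
            transitions[padded[i - order:i]].append(padded[i])
    return transitions


def sample_string(transitions, order=2, max_len=40, rng=None):
    """Emit one new string by repeatedly sampling the next character.
    Every context reached here also occurred in training, so the lookup never fails."""
    rng = rng or random.Random()
    context, out = START * order, []
    while len(out) < max_len:
        nxt = rng.choice(transitions[context])
        if nxt == END:
            break
        out.append(nxt)
        context = (context + nxt)[-order:]
    return "".join(out)


# Hypothetical miniature training set of SMILES strings
training = ["CCO", "CCN", "CCCO", "CC(=O)O", "CC(=O)N", "c1ccccc1O", "c1ccccc1N"]
model = train_char_model(training)
rng = random.Random(0)
samples = {sample_string(model, rng=rng) for _ in range(20)}
print(sorted(samples))
```

Recurrent networks such as the one used by Merk et al. play the same role as the transition table here but condition on much longer contexts, which helps them produce syntactically valid SMILES.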

2.2.2.3. Drug–target interactions

The assessment of drug–target interactions is an important part of the drug design process. The binding pose and the binding affinity between the drug molecule and the target strongly influence a candidate's chances of success as estimated by in silico prediction. Some of the more common approaches use molecular docking to identify drug candidates and to predict and preselect promising drug–target interactions.

Molecular docking is a molecular modeling approach used to study the binding and complex formation between two molecules. It can be used to find interactions between a drug compound and a target, for example a receptor, and predicts the conformation of the drug compound in the binding site of the target. The docking algorithm then ranks the interactions via scoring functions and estimates binding affinity. Popular molecular docking tools include AutoDock, DOCK, Glide, and FlexX. These are rather simple, and many data scientists are working on improving the prediction of drug–target interactions using various learning models [24]. CNNs have proved useful as scoring functions for docking applications and have demonstrated efficient pose/affinity prediction for drug–target complexes and assessment of activity/inactivity. For instance, Wallach and Dzamba built AtomNet, a deep CNN that predicts the bioactivity of small molecule drugs for drug discovery applications. The authors showed that AtomNet outperforms conventional docking models in accuracy, achieving an AUC (area under the curve) of 0.9 or more for 58% of the targets [25].
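The AUC used to compare such models can be computed directly from model scores: it equals the probability that a randomly chosen active compound is scored higher than a randomly chosen inactive (decoy) compound. The Python sketch below implements this pairwise definition and the "share of targets with AUC of at least 0.9" summary; the target names and scores are hypothetical. It assumes higher scores mean "more likely active", so docking energies, where lower is better, would need their sign flipped first.

```python
def roc_auc(active_scores, decoy_scores):
    """ROC AUC as the probability that a randomly chosen active compound scores
    higher than a randomly chosen decoy (ties count as 0.5)."""
    wins = 0.0
    for a in active_scores:
        for d in decoy_scores:
            if a > d:
                wins += 1.0
            elif a == d:
                wins += 0.5
    return wins / (len(active_scores) * len(decoy_scores))


def fraction_of_targets_above(aucs, threshold=0.9):
    """Share of targets whose AUC reaches `threshold`."""
    aucs = list(aucs)
    return sum(auc >= threshold for auc in aucs) / len(aucs)


# Hypothetical model scores for two targets: (actives, decoys)
targets = {
    "target_A": ([0.92, 0.85, 0.77], [0.40, 0.31, 0.55, 0.12]),
    "target_B": ([0.65, 0.35], [0.60, 0.50, 0.20]),
}
aucs = {name: roc_auc(act, dec) for name, (act, dec) in targets.items()}
print(aucs)
print("share of targets with AUC >= 0.9:", fraction_of_targets_above(aucs.values()))
```

An AUC of 0.5 corresponds to random ranking and 1.0 to perfect separation of actives from decoys.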

Current trends within AI applications for drug discovery and development point toward more and more models using DL approaches. Compared with more conventional machine learning approaches, DL models take a long time to train because of the large datasets and the often large number of parameters needed. This can be a major disadvantage when data is not readily available. There is therefore ongoing work on reducing the amount of data required as training sets for DL so it can learn with only small amounts of available data. This is similar to the learning process that takes place in the human brain and would be beneficial in applications where data collection is resource intensive and large datasets are not readily available, as is often the case with medicinal chemistry and novel drug targets. There are several novel methods being investigated, for instance, using a one-shot learning approach or a long short-term memory approach and also using memory augmented neural networks such as the differentiable neural computer [17].
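One-shot learning classifies a new example using a single labeled example per class, by comparing similarities rather than fitting a large model. The Python sketch below shows the inference step in the style of matching networks: a softmax over the similarity between a query compound and each class's single support example. As an assumption for illustration, it uses hand-crafted fingerprint bits and Tanimoto similarity where real one-shot methods compare learned embeddings; the support set, query, and temperature are hypothetical.

```python
import math


def tanimoto(a, b):
    """Tanimoto (Jaccard) similarity between two sets of 'on' fingerprint bits."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def one_shot_probabilities(query, support, temperature=0.1):
    """Matching-network-style prediction: softmax over the similarity between
    the query and the single labelled example of each class."""
    sims = {label: tanimoto(query, example) for label, example in support.items()}
    exps = {label: math.exp(s / temperature) for label, s in sims.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


# Hypothetical support set: exactly one known compound per class
support = {"active": {1, 4, 7, 9}, "inactive": {2, 5, 8, 11}}
query = {1, 4, 9, 13}
probs = one_shot_probabilities(query, support)
print(max(probs, key=probs.get), probs)
```

In a trained one-shot model, the network learns an embedding in which this kind of similarity comparison is meaningful, which is where the data efficiency comes from.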

2.3. Artificial intelligence and medical visualization

Interpreting data in the form of images or videos can be a challenging task. Experts in the field have to train for many years to attain the ability to discern medical phenomena and, on top of that, have to actively learn new content as more research and information become available. However, demand is ever increasing, and there is a significant shortage of experts in the field. A fresh approach is therefore needed, and AI promises to help fill this demand gap.

2.3.1. Machine vision for diagnosis and surgery

Computer vision involves the interpretation of images and videos by machines at or above human-level capabilities including object and scene recognition. Areas where computer vision is making an important impact include image-based diagnosis and image-guided surgery.

2.3.1.1. Computer vision for diagnosis and surgery

Computer vision has mainly been based on statistical signal processing but is now shifting toward artificial neural networks as the learning method of choice. Here, DL is used to engineer computer vision algorithms for classifying images of lesions in skin and other tissues. Video data are estimated to contain 25 times as much data as high-resolution diagnostic images such as CT and could thus provide more information through their resolution over time. Video analysis is still immature but has great potential for clinical decision support. As an example, real-time video analysis of a laparoscopic procedure identified all the steps of the procedure with 92.8% accuracy and, surprisingly, detected missing or unexpected steps [26].
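Once a video model has labeled each frame with a surgical step, two simple evaluations follow: agreement with expert annotation, and a comparison of the steps performed against the expected protocol. The Python sketch below assumes frame-level accuracy as the metric (one common choice; the cited study's exact metric is not specified here) and uses hypothetical step names, classifier output, and protocol.

```python
def frame_accuracy(predicted, annotated):
    """Fraction of video frames whose predicted step matches the expert annotation."""
    if len(predicted) != len(annotated):
        raise ValueError("sequences must cover the same frames")
    return sum(p == a for p, a in zip(predicted, annotated)) / len(annotated)


def to_steps(frame_labels):
    """Collapse per-frame labels into the ordered list of steps performed."""
    steps = []
    for label in frame_labels:
        if not steps or steps[-1] != label:
            steps.append(label)
    return steps


def compare_to_protocol(performed, expected):
    """Return (missing, unexpected) steps relative to the expected protocol."""
    missing = [s for s in expected if s not in performed]
    unexpected = [s for s in performed if s not in expected]
    return missing, unexpected


# Hypothetical per-frame output of a video classifier and a hypothetical protocol
predicted = ["prep", "prep", "dissect", "dissect", "irrigate", "retrieve"]
annotated = ["prep", "prep", "dissect", "dissect", "dissect", "retrieve"]
protocol = ["prep", "dissect", "clip", "retrieve"]

print(f"frame accuracy: {frame_accuracy(predicted, annotated):.1%}")
print(compare_to_protocol(to_steps(predicted), protocol))
```

Here the comparison flags "clip" as missing and "irrigate" as unexpected, the kind of deviation a clinical decision support tool could report in real time.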

A notable application of AI and computer vision in surgery technology is augmenting specific surgical skills such as suturing and knot-tying. The Smart Tissue Autonomous Robot (STAR) from Johns Hopkins University has demonstrated that it can outperform human surgeons in some surgical procedures, such as bowel anastomosis in animals. A fully autonomous robotic surgeon remains a concept for the not-so-near future, but augmenting different aspects of surgery using AI is of interest to researchers. An example of this is a group at the Institute of Information Technology at the Alpen-Adria Universität Klagenfurt that uses surgery videos as training material to identify specific interventions made by the surgeon. For example, when dissection or cutting is performed on the patient's tissues or organs, the algorithm recognizes the likelihood of the intervention as well as the specific region in the body [27]. Such algorithms rely on training with many videos and could prove very useful for complicated surgical procedures or in situations where an inexperienced surgeon must perform emergency surgery. It is important that surgeons are actively engaged in the development of such tools, ensuring clinical relevance and quality and facilitating the translation from the lab to the clinic.

2.3.2. Deep learning and medical image recognition

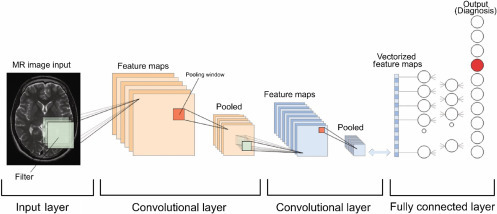

The word “deep” refers to the multilayered nature of these networks, and among all DL techniques, CNNs have been the most promising in the field of image recognition. Yann LeCun, a prominent French computer scientist, introduced the theoretical background to this approach in the 1980s by creating LeNet, an automated handwriting recognition algorithm designed to read cheques for financial systems. Since then, these networks have shown significant promise in the field of pattern recognition.

CNNs are inspired by the human visual cortex, where image recognition begins with the identification of the many features of an image. Much like radiologists, who during their training learn by constantly relating their interpretations of radiological images to the ground truth, CNNs require a significant amount of training data in the form of medical images along with labels stating what each image shows. During training, CNNs adjust the weights and filters (characteristics of regions in an image) at each hidden layer to improve performance on the given training data.
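The weight adjustment described here is typically done by gradient descent: each weight is nudged against the derivative of the error with respect to that weight. The Python sketch below shows this for the smallest possible case, a single linear neuron y = w·x + b trained on hypothetical labeled examples. In a CNN, backpropagation applies the same chain rule through every layer to obtain these derivatives for millions of weights; the learning rate and step count here are arbitrary choices that happen to converge for this data.

```python
def train_neuron(xs, ys, lr=0.05, steps=2000):
    """Fit y ~ w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Chain rule: dL/dw = (2/n) * sum(error * x), dL/db = (2/n) * sum(error)
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        w -= lr * grad_w  # move each parameter against its gradient
        b -= lr * grad_b
    return w, b


# Hypothetical labelled examples generated from y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
w, b = train_neuron(xs, ys)
print(f"learned w = {w:.3f}, b = {b:.3f}")
```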

Briefly and very simply (Fig. 2.3), convolving an image with various sets of weights (filters) produces a stack of filtered images; this operation is referred to as a convolutional layer. Pooling is then applied to all these filtered images, shrinking the stack into a smaller representation of itself, and a rectified linear unit (ReLU) sets all negative values to zero. These operations are stacked on top of one another to create layers, sometimes referred to as deep stacking. This process can be repeated multiple times, and each time the image is filtered further and becomes relatively smaller. The last layer is a fully connected layer, in which every value from the preceding layer contributes to the final result. If the system produces an error in this final answer, gradient descent is applied: the weights are adjusted up or down according to how each adjustment changes the error relative to the correct answer. The required gradients are computed by an algorithm called backpropagation, which embodies “learning from mistakes.” Once the network has learned a new capability from the existing data, it can be applied to new images and classify them into the right category (inference), similar to how a radiologist operates [28].

Figure 2.3.

The various stages of convolutional neural networks at work.

Adapted from Lundervold AS, Lundervold A. An overview of deep learning in medical imaging focusing on MRI. Z Med Phys. 2019;29:102–27.
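The convolution, ReLU, and pooling steps can be written out in a few lines of plain Python. In the sketch below, the 5×5 image and the 2×2 edge-detecting kernel are hypothetical and set by hand, whereas a CNN would learn its kernel weights during training. The code applies ReLU before max pooling, a common ordering; for max pooling the order does not change the result, because taking the maximum and then zeroing negatives equals zeroing negatives and then taking the maximum.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution as used in CNNs (strictly a cross-correlation:
    the kernel is slid over the image without being flipped)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu(feature_map):
    """Rectified linear unit: negative values become zero."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; leftover rows/columns are dropped."""
    return [[max(feature_map[i + di][j + dj]
                 for di in range(size) for dj in range(size))
             for j in range(0, len(feature_map[0]) - size + 1, size)]
            for i in range(0, len(feature_map) - size + 1, size)]


# Hypothetical 5x5 image with a vertical dark-to-bright edge
image = [[0, 0, 1, 1, 1] for _ in range(5)]
edge_kernel = [[-1, 1], [-1, 1]]  # hand-set here; a CNN would learn these weights

feature_map = conv2d(image, edge_kernel)  # 4x4 map, strongest where the edge is
print(max_pool(relu(feature_map)))        # [[2, 0], [2, 0]]
```

The pooled 2×2 output still records where the edge was, at a quarter of the size, which is how repeated layers compress an image into features a fully connected layer can classify.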

2.3.3. Augmented reality and virtual reality in the healthcare space

Augmented and virtual reality (AR and VR) can be incorporated at every stage of a healthcare system. These systems can be implemented from the early stages of medical education, through training for a specific specialty, to the work of experienced surgeons. For patients, these technologies can be beneficial but may also have some negative consequences.

In this section, we will attempt to cover each stage and finally comment on the usefulness of these technologies.

2.3.3.1. Education and exploration

Humans are visual beings, and play is one of the most important aspects of our lives. As children, the most important way for us to learn was through play. Interacting with our surroundings allowed us to gain a better understanding of the world and provided us with much-needed experience. The current educational system is limited, and for interactive disciplines such as medicine this can be a hindrance. Medicine can be viewed as an art form, with future clinicians as the artists. These individuals require certain skills to meet the needs of an ever-evolving profession. Early in medical school, various concepts are taught to students without them ever experiencing these concepts in real life. Game-like technologies such as VR and AR could therefore enhance and enrich the learning experience in medical and health-related disciplines [29]. Medical students could be taught novel and complicated surgical procedures, or learn anatomy through AR, without needing to involve real patients at an early stage or to dissect a real cadaver. These students will of course interact with real patients in their future careers, but the goal would be to start training earlier and to lower the cost of training at later stages.

The same concept can be applied to today's specialists in training. Human interaction should of course be encouraged in the medical field, but it is not always necessary or available when an individual is undergoing a particular training regimen. Other physical and digital cues, such as haptic feedback and photorealistic images and videos, can provide a realistic simulation in which learning can flourish while the consequences and costs of training remain limited (Fig. 2.4).

Figure 2.4.

Virtual reality can help current and future surgeons enhance their surgical abilities prior to an actual operation. (Image obtained from a video still, Osso VR).

In a recent study [30], two groups of surgical trainees were trained in mastoidectomy using different methods: one group (n = 18) followed the standard training path, and the other trained on a freeware VR simulator, the Visible Ear Simulator (VES). At the end of the training, a significant improvement in surgical dissection was observed for those who trained with VR. For precise execution in real-life settings, AR would be more advantageous in healthcare. By wearing lightweight headsets (e.g., Microsoft HoloLens or Google Glass) that project relevant images or video onto the regions of interest, users can focus on the task without being distracted by moving their visual field away from the region of interest.

2.3.3.2. Patient experience

Humans interact with their surroundings through audiovisual cues and use their limbs to engage with and move within this world. This seemingly ordinary ability can be extremely beneficial for those experiencing debilitating conditions that limit movement, or for individuals experiencing pain and discomfort, either from a chronic illness or as a side effect of a treatment. A recent study of the effect of immersive VR in chronic stroke patients found that the technology contributed positively to the patients' condition. During the VR experience, the patients were asked to grab a virtual ball and throw it back into the virtual space [31]. For these patients, this immersive experience could act as a personal rehabilitation physiotherapist that engages their upper limb movement multiple times a day, allowing for possible neuroplasticity and a gradual return of normal motor function to these regions.

For others, these immersive technologies could help them cope with the pain and discomfort of cancer or a mental health condition. A study has shown that late-stage adult cancer patients can use this technology with minimal physical discomfort and in return benefit from an enhanced relaxed state, entertainment, and a much-needed distraction [32]. These immersive worlds provide a form of escapism with their artificial characters and environments, allowing individuals to interact with and explore their surroundings while receiving audiovisual feedback from the environment, much as in the activities of daily living.

2.4. Intelligent personal health records

Personal health records have historically been physician-oriented and often have lacked patient-related functionalities. However, in order to promote self-management and improve the outcomes for patients, a patient-centric personal health record should be implemented. The goal is to allow ample freedom for patients to manage their conditions, while freeing up time for the clinicians to perform more crucial and urgent tasks.

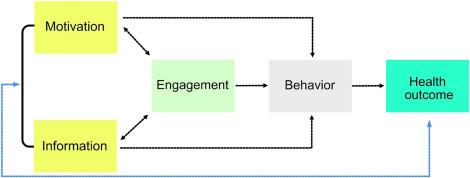

2.4.1. Health monitoring and wearables

For millennia, individuals have relied on physicians to inform them about their own bodies, and to some extent this is still the case today. However, the relatively new field of wearables is changing this. Wearable health devices (WHDs) are an emerging technology that allows continuous measurement of certain vital signs under various conditions. Key to their early adoption and success is their flexibility of application: users can track their activity while running, meditating, or even underwater. The goal is to give individuals a sense of control over their own health by allowing them to analyze their data and manage their own health. In short, WHDs empower individuals (Fig. 2.5).

Figure 2.5.

Health outcome of a patient depends on a simple yet interconnected set of criteria that are predominantly behavior dependent.

At first glance, a wearable device might look like an ordinary band or watch; however, these devices bridge multiple scientific disciplines, such as biomedical engineering, materials science, electronics, computer programming, and data science, among many others [33]. It would not be an exaggeration to call them ever-present digital health coaches, as users are increasingly encouraged to wear them at all times to get the most out of their data. Garmin wearables are a good example: with a focus on being active, they cover a wide variety of sports and provide a substantial amount of data in the Garmin Connect application, where users can analyze and review their daily activities. Such platforms increasingly incorporate gamification.

Gamification refers to the use of game design elements in nongame applications. These elements are used to motivate users and drive them to reach their goals [34]. On wearable platforms, data gathered from daily activities can serve as the basis for competition between users. Suppose, for example, that your average is around 50,000 steps per week. Based on its ranking algorithm, the platform places you on a leaderboard against individuals whose weekly averages are similar to or higher than yours, with the top-ranked member exceeding your current average. In this gamified scenario, users may push themselves to increase their daily activity in order to climb the leaderboard and, potentially, lead a healthier life. Although the gamification of wearables and their applications could bring benefits, evidence of efficacy is scarce and varies widely, with some arguing that the practice may do more harm than good.
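Wearable platforms do not publish their ranking algorithms, so the following is only a minimal Python sketch of the leaderboard placement described above. The grouping rule (peers no more than 10% below the user, or anywhere above, keeping the closest few) and the names `build_leaderboard`, `band`, and `size` are hypothetical assumptions for illustration.

```python
def build_leaderboard(user, weekly_averages, size=4, band=0.10):
    """Place `user` among peers with similar or higher average weekly steps.

    weekly_averages: dict mapping name -> average weekly steps.
    The selection rule below is a hypothetical illustration, not a vendor algorithm.
    """
    own = weekly_averages[user]
    # Candidate peers: at most `band` (10%) below the user, or anywhere above.
    peers = sorted(
        (steps, name)
        for name, steps in weekly_averages.items()
        if name != user and steps >= own * (1 - band)
    )
    # Keep the peers closest to the user's level (lowest qualifying averages first).
    chosen = peers[: size - 1]
    # Rank everyone on the board from highest to lowest average.
    board = sorted(chosen + [(own, user)], reverse=True)
    return [name for _, name in board]


averages = {"you": 50000, "a": 47000, "b": 52000, "c": 55000, "d": 70000, "e": 30000}
print(build_leaderboard("you", averages))
```

With this data the board is `c`, `b`, `you`, `a`: the much more active `d` and the much less active `e` are left out, and the top-ranked member exceeds the user's average, although this simple rule does not guarantee that in every case.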

Remote monitoring and early detection of signs of disease could be immensely beneficial for people with chronic conditions and for the elderly. By wearing a smart device or entering data manually over a prolonged period, individuals can communicate with their healthcare workers without disrupting their daily lives [35]. This is a good example of algorithms working alongside healthcare professionals to produce an outcome that benefits patients.
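One simple way to pick up early signs from remotely monitored data is to compare each new reading with the person's own baseline. The Python sketch below flags a reading whose z-score relative to the person's history exceeds a threshold; the function name `flag_deviation`, the threshold of 3, and the use of resting heart rate are illustrative assumptions, not a clinically validated rule.

```python
from statistics import mean, stdev


def flag_deviation(history, latest, z_threshold=3.0):
    """Return True if `latest` deviates strongly from the person's own baseline.

    history: past readings for one person (e.g. daily resting heart rate).
    The z-score threshold is a hypothetical choice for illustration.
    """
    if len(history) < 2:
        return False  # not enough data to estimate a baseline yet
    baseline, spread = mean(history), stdev(history)
    if spread == 0:
        return latest != baseline  # any change from a perfectly flat baseline
    return abs(latest - baseline) / spread > z_threshold


resting_hr = [62, 64, 63, 61, 65, 62, 63]
print(flag_deviation(resting_hr, 64))  # False: within the usual range
print(flag_deviation(resting_hr, 78))  # True: well above the personal baseline
```

Using a personal baseline rather than a population norm reflects the individual focus of wearables; in practice, a flag like this would only prompt a healthcare worker to review the data.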

2.4.2. Natural language processing

Natural language processing (NLP) concerns the interaction between computers and humans through natural language and often emphasizes the computer’s ability to understand human language. NLP is crucial for many applications of big data analysis in healthcare, particularly for EMRs and the translation of narratives written by clinicians. It is typically used for operations such as information extraction, conversion of unstructured data into structured data, and categorization of data and documents.

NLP uses various classification techniques to infer meaning from unstructured text and allows clinicians to write more freely, using language in a “natural” way rather than fitting text into predefined input options to suit the computer. NLP is being used, for example, to analyze EMR data and gather large-scale information on the late-stage complications of specific medical conditions [26].

There are many areas in healthcare in which NLP can provide substantial benefits. Some of the more immediate applications include the following [36]:

1. Efficient billing: extracting information from physician notes and assigning medical codes for the billing process.
2. Authorization approval: using information from physician notes to prevent delays and administrative errors.
3. Clinical decision support: facilitating decision-making for members of the healthcare team when needed (for instance, predicting patient prognosis and outcomes).
4. Medical policy assessment: compiling clinical guidance and formulating appropriate guidelines for care.

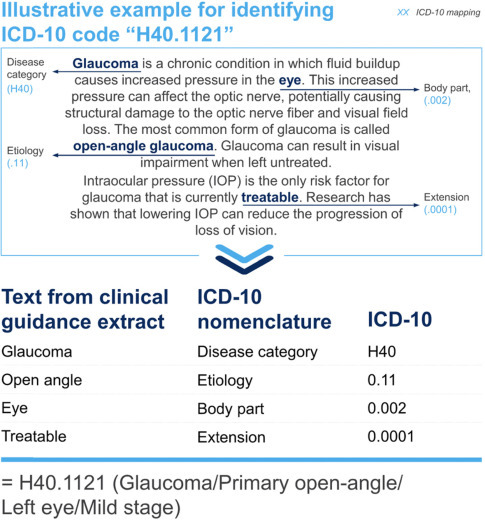

One application of NLP is disease classification based on medical notes and the standardized codes of the International Statistical Classification of Diseases and Related Health Problems (ICD). The ICD is managed and published by the WHO and contains codes for diseases and symptoms as well as various findings, circumstances, and causes of disease. Figure 2.6 illustrates how an NLP algorithm can be used to extract and identify ICD codes from a clinical guideline description. Unstructured text is organized into structured data by parsing it for relevant clauses, after which ICD-10 codes are classified based on their frequency of occurrence. The NLP algorithm is run at various thresholds to improve classification accuracy, and the results are aggregated to produce the final output.
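The pipeline in Fig. 2.6 can be illustrated with a deliberately simplified Python sketch: split the text into clauses, count how many clauses mention terms associated with each ICD-10 code, accept codes at several frequency thresholds, and aggregate by majority vote. The keyword lexicon, the threshold values, and the majority-vote aggregation are assumptions for illustration. The codes E11 (type 2 diabetes mellitus), I10 (essential hypertension), and J45 (asthma) are real ICD-10 categories, but production systems rely on curated terminologies and trained models rather than keyword lists.

```python
import re
from collections import Counter

# Hypothetical keyword lexicon mapping ICD-10 categories to trigger phrases.
ICD10_KEYWORDS = {
    "E11": ["type 2 diabetes", "hyperglycemia", "hyperglycaemia", "metformin"],
    "I10": ["hypertension", "high blood pressure"],
    "J45": ["asthma", "wheezing", "inhaler"],
}


def split_clauses(text):
    """Turn unstructured text into lower-case clauses split on . ; : and newlines."""
    return [c.strip().lower() for c in re.split(r"[.;:\n]+", text) if c.strip()]


def code_frequencies(clauses):
    """Count, for each ICD-10 code, the number of clauses mentioning one of its terms."""
    counts = Counter()
    for clause in clauses:
        for code, terms in ICD10_KEYWORDS.items():
            if any(term in clause for term in terms):
                counts[code] += 1
    return counts


def classify(text, thresholds=(1, 2, 3)):
    """Accept codes at each frequency threshold, then keep those accepted by a majority."""
    counts = code_frequencies(split_clauses(text))
    votes = Counter()
    for t in thresholds:
        for code, n in counts.items():
            if n >= t:
                votes[code] += 1
    needed = len(thresholds) // 2 + 1
    return sorted(code for code, v in votes.items() if v >= needed)


guideline = (
    "Adults with type 2 diabetes should be offered metformin. "
    "Monitor for hyperglycaemia; review at 3 months. "
    "Consider an inhaler if wheezing is reported."
)
print(classify(guideline))  # ['E11']
```

Here E11 is mentioned in two clauses and J45 in one, so only E11 survives the majority vote across the three thresholds; running with a single threshold of 1 would accept both.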

Figure 2.6.

Example of ICD-10 mapping from a clinical guidelines’ description [36].

2.4.3. Integration of personal records

Since the introduction of EMRs, large databases of information on each patient have accumulated, which collectively can be used to identify healthcare trends within different disease areas. EMR databases contain the history of hospital encounters, records of diagnoses and interventions, laboratory tests, medical images, and clinical narratives. All these datasets can be used to build predictive models that help clinicians with diagnostics and support various treatment decisions. As AI tools mature, it will become possible to extract many kinds of information, such as related disease effects and correlations between past and future medical events [37]. What is often missing is data from the periods between interventions and hospital visits, when the patient is well or not showing symptoms. Such data could help to construct an end-to-end model of both “health” and “disease” for studying long-term effects and refining disease classifications.

Although the applications of AI for EMRs are still quite limited, the potential for using these large databases to detect new trends and predict health outcomes is enormous. Current applications include data extraction from text narratives, predictive algorithms based on data from medical tests, and clinical decision support based on personal medical history. There is also great potential for AI to enable the integration of EMR data with various health applications. Current AI applications in healthcare are often standalone, used for example for diagnostics based on medical imaging and for disease prediction based on remote patient monitoring [38]. Integrating such standalone applications with EMR data could provide even greater value by adding personal medical data and history, as well as a large statistical reference library, to make classifications and predictions more accurate and powerful. EMR providers such as Cerner, Epic, and Athena are beginning to add AI functionality such as NLP to their systems, making it easier to access and extract the data held in their libraries [39]. This could facilitate the integration of, for instance, telehealth and remote monitoring applications with EMR data, and the data transfer could even go both ways, with remote monitoring data added to the EMR systems.

There are many EMR providers and systems globally, using various operating systems and approaches; more than a thousand EMR providers operate in the United States alone. Integrating EMR records alone therefore poses a great challenge, and interoperability between these systems is important to obtain the best value from the data. There are various international efforts to gather EMR data across countries, including Observational Health Data Science and Informatics (OHDSI), which has consolidated 1.26 billion patient records from 17 different countries [40]. Various AI methods have been used to extract, classify, and correlate data from EMRs, but most make use of NLP, DL, and neural networks.

DeepCare is an example of an AI-based platform for end-to-end processing of EMR data. It uses a deep dynamic memory neural network to read experiences and store them in memory cells. Its long short-term memory (LSTM) models the illness trajectory and healthcare processes of users as a time-stamped sequence of events, which allows it to capture long-term dependencies [41]. Using the stored data, the DeepCare framework can model disease progression, support intervention recommendation, and provide disease prognosis based on EMR databases. In a study of a cohort of diabetic and mental health patients, DeepCare was shown to predict disease progression and optimal interventions and to assess the likelihood of readmission [37].
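The core idea of modeling a time-stamped sequence of events with long short-term memory can be sketched in plain Python. This is not DeepCare itself: it is a single-unit LSTM with hypothetical fixed weights, and, as an assumption in the spirit of DeepCare's handling of irregularly spaced visits, the previous memory cell is discounted by a decay factor 1/log(e + Δt) that shrinks as the time since the last event grows. Real models learn vector-valued weights from data and include further components.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical (input weight, recurrent weight) pairs for each gate; normally learned.
W = {"input": (0.5, 0.4), "forget": (0.6, 0.3), "output": (0.7, 0.2), "cell": (0.9, 0.5)}


def time_decay(delta_days):
    """Assumed discount on old memory: 1.0 for no gap, smaller for longer gaps."""
    return 1.0 / math.log(math.e + delta_days)


def lstm_step(x, h, c, delta_days):
    """One LSTM update for event feature x, given previous hidden state h and memory c."""
    i = sigmoid(W["input"][0] * x + W["input"][1] * h)
    f = sigmoid(W["forget"][0] * x + W["forget"][1] * h)
    o = sigmoid(W["output"][0] * x + W["output"][1] * h)
    candidate = math.tanh(W["cell"][0] * x + W["cell"][1] * h)
    c = f * time_decay(delta_days) * c + i * candidate  # decayed old memory + new input
    h = o * math.tanh(c)
    return h, c


def encode_trajectory(events):
    """events: (day, feature) pairs sorted by day; returns the final (h, c)."""
    h, c, prev_day = 0.0, 0.0, None
    for day, x in events:
        gap = 0.0 if prev_day is None else day - prev_day
        h, c = lstm_step(x, h, c, gap)
        prev_day = day
    return h, c


# Two identical admissions, one day apart versus one year apart.
h_close, c_close = encode_trajectory([(0, 1.0), (1, 1.0)])
h_far, c_far = encode_trajectory([(0, 1.0), (365, 1.0)])
print(c_close > c_far)  # True: the older admission contributes less memory
```

The final hidden state `h` summarizes the trajectory and would, in a full model, feed a prediction layer for outcomes such as readmission.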

2.5. Robotics and artificial intelligence-powered devices

There are numerous areas in healthcare where robots are being used to replace human labor, augment human abilities, and assist healthcare professionals. These include robots for surgical procedures such as laparoscopic operations, robotic assistants for rehabilitation and patient assistance, robots integrated into implants and prosthetics, and robots that assist physicians and other healthcare staff with their tasks. Several companies are developing such devices specifically for interacting with patients and improving the connection between humans and machines from a care perspective. Most of the robots currently under development incorporate some level of AI for better performance in classification, language recognition, image processing, and more.

2.5.1. Minimally invasive surgery

Although surgical outcomes have improved considerably, surgery itself largely remains a relatively low-tech practice that uses hand tools and instruments for “cutting and sewing.” Conventional surgery relies heavily on the surgeon’s sense of touch to distinguish between tissues and organs and often requires open surgery. Surgical technology is undergoing an ongoing transformation, with particular focus on reducing the invasiveness of procedures by minimizing incisions, reducing open surgeries, and using flexible tools and cameras to assist the surgeon [42]. Such minimally invasive surgery is seen as the way forward, but it is still at an early stage, and many improvements are needed to make it “less of a big deal” for patients and to reduce time and cost. Minimally invasive surgery requires different motor skills from conventional surgery because relying on tools rather than direct touch provides less tactile feedback. Sensors that give the surgeon finer tactile stimuli are under development; they use tactile data processing to translate sensor input into data or stimuli that the surgeon can perceive. Such tactile data processing typically uses AI, more specifically artificial neural networks, to enhance this signal translation and the interpretation of the tactile information [43]. Artificial tactile sensing offers several advantages over physical touch, including a larger reference library against which to compare sensations, and standardization among surgeons with respect to quantitative features, continuous improvement, and level of training.

One application of artificial tactile sensing is breast cancer screening, where it can replace clinical breast examination as a complement to medical imaging techniques such as x-ray mammography and MRI. Here, the artificial tactile sensing system was built on reference data obtained by reconstructing mechanical tissue measurements from a pressure sensor. During training of the neural network, the weights applied to the input data are adjusted according to the desired output [44]. The tactile sensory system can detect mass calcifications inside the breast tissue by palpating different points of the tissue and comparing the readings with reference data, and can then determine whether there are any significant abnormalities. Artificial tactile sensing has also been used for other applications, including assessment of liver, brain, and submucosal tumors [45].
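The weight-adjustment step described above can be illustrated with the smallest possible neural network: a single sigmoid neuron trained by gradient descent. The sketch assumes, purely for illustration, that each palpation point is summarized by one hypothetical feature (measured stiffness relative to surrounding tissue) and labeled normal (0) or abnormal (1); the numbers are invented, and real systems use richer pressure-sensor maps and larger networks.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(samples, lr=0.2, epochs=3000):
    """Fit one sigmoid neuron; samples are (stiffness_ratio, label) with label 1 = abnormal."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in samples:
            error = sigmoid(w * x + b) - y  # network output minus desired output
            grad_w += error * x
            grad_b += error
        # Adjust the weights in the direction that reduces the error.
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Invented reference readings: softer (normal) tissue versus stiffer (abnormal) points.
reference = [(0.9, 0), (1.0, 0), (1.1, 0), (1.2, 0), (2.5, 1), (3.0, 1), (3.2, 1), (3.5, 1)]
w, b = train_neuron(reference)
for reading in (1.05, 2.8):
    p = sigmoid(w * reading + b)
    print(reading, "abnormal" if p > 0.5 else "normal")
```

Each pass compares the neuron's output with the desired label and nudges `w` and `b` to reduce the difference, which is the weight adjustment the text describes, in its simplest form.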

2.5.2. Neuroprosthetics

Our species has always longed for eternal life. In the ancient Vedic tradition, there is a medicinal drink, soma, that provides “immortality” to those who drink it. The Rig Veda, composed roughly 3000 to 3500 years ago, says: “We drank soma, we became immortal, we came to the light, we found gods.” Ancient Persian culture has a similar legendary drink, called Haoma in the Avesta, the sacred book of Zoroastrianism [46], [47]. This longing for “enhancement” and “augmentation” has always been with us, and in the 21st century we are gradually beginning to turn some past myths into reality. In this section, we cover some recent innovations that use AI to assist humans and allow them to function better. Most research in this area aims to assist individuals with preexisting conditions and has not been applied to healthy individuals for the sake of human augmentation; however, this may change in the coming years.

Neuroprosthetics are devices that support or augment the subject’s own nervous system, on both the input and the output side. This augmentation typically takes the form of electrical stimulation to overcome the neurological deficits that patients experience.

These debilitating conditions can impair hearing, vision, cognition, and sensory or motor skills, and can lead to comorbidities. Indeed, progressive neurological conditions such as multiple sclerosis or Parkinson’s disease can lead to a painful and gradual decline in these skills while the patient remains aware of every change. Recent advances in brain-machine interfaces (BMIs) have shown that the brain signals associated with a subject’s intended, voluntary, goal-directed actions (recorded by electroencephalography, EEG) can be stored and learned as the user “trains” an intelligent controller (an AI). This training period allows errors in tasks that the user deems incorrect to be identified: for example, a square on a computer screen is directed to move left but moves right instead, or, with a BMI connected to a fixed robotic hand, the subject directs the device to move up but the signals are interpreted as a downward movement. Correct actions are stored, and the error-related brain signals are registered by the AI to correct future actions. Because of this “reinforcement learning,” the system can potentially store one or several control “policies,” which allow for patient personalization [48]. This is similar to the goals of the company Neuralink, which aims to bring together materials science, robotics, electronics, and neuroscience to try to solve multifaceted health problems [49].
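The error-driven learning loop can be illustrated with a toy contextual bandit, a simple form of reinforcement learning. In this Python sketch, each decoded brain-signal pattern is a discrete state (the labels `pattern_A` and `pattern_B` are hypothetical), the decoder picks a movement, and a detected error-related potential acts as a negative reward. As an explicit assumption, error detection is simulated by comparing the chosen action with the intended one; a real BMI would instead classify error-related potentials from the EEG.

```python
import random

ACTIONS = ("left", "right")


def train_decoder(trials, alpha=0.1, epsilon=0.1, seed=0):
    """Learn a value for each (signal pattern, action) pair from error feedback.

    trials: (state, intended_action) pairs. The intended action is used only to
    simulate whether an error-related potential would be detected.
    """
    rng = random.Random(seed)
    values = {}
    for state, intended in trials:
        q = values.setdefault(state, {a: 0.0 for a in ACTIONS})
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)  # occasional exploration
        else:
            action = max(ACTIONS, key=lambda a: q[a])  # current best guess
        error_detected = action != intended  # stand-in for an EEG error-potential classifier
        reward = -1.0 if error_detected else 1.0
        q[action] += alpha * (reward - q[action])  # move value toward observed reward
    return values


def policy(values):
    """The learned control policy: the highest-valued action for each pattern."""
    return {state: max(ACTIONS, key=lambda a: q[a]) for state, q in values.items()}


trials = [("pattern_A", "left"), ("pattern_B", "right")] * 100
print(policy(train_decoder(trials)))  # {'pattern_A': 'left', 'pattern_B': 'right'}
```

The returned dictionary plays the role of a stored control policy; training separate value tables per user would be one way to obtain the personalized policies mentioned in the text.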

Although this field is still in its infancy and highly exploratory, it could be immensely helpful for patients with neurodegenerative diseases, who will increasingly rely on neuroprostheses throughout their lives.

2.6. Ambient assisted living

As societies age, more and more people live into old age with chronic disorders, and most manage to live independently well into later life. Data indicate that half of people above the age of 65 years have a disability of some sort, which amounts to over 35 million people in the United States alone. Most people want to preserve their autonomy, even in old age, and maintain control over their lives and decisions [50]. Assistive technologies increase patients’ self-reliance and encourage them to use information and communication technology (ICT) tools that provide remote care and share information with healthcare professionals. Assistive technologies are experiencing rapid growth, especially among people aged 65–74 years [51]. Governments, industries, and various organizations are promoting the concept of ambient assisted living (AAL), which enables people to live independently in their home environment. AAL has multiple objectives, including promoting a healthy lifestyle for individuals at risk, increasing the autonomy and mobility of elderly individuals, and enhancing security, support, and productivity so that people can live in their preferred environment, ultimately improving their quality of life. AAL applications typically collect data through sensors and cameras and apply various AI tools to build an intelligent system [52]. AAL can be implemented, for example, through smart homes or assistive robots.

2.6.1. Smart home

A smart home is a normal residential home that has been augmented with different sensors and monitoring tools to make it “smart” and make life easier for the residents in their living space. Other popular applications of AAL, which can be part of a smart home or used on their own, include remote monitoring, reminders, alarm generation, behavior analysis, and robotic assistance.

Smart homes can be useful for people with dementia, and several studies have investigated smart home applications that make life easier for dementia patients. Low-cost sensors in an Internet of Things (IoT) architecture are a useful way of detecting abnormal behavior in the home. For instance, sensors can be placed in different areas of the house, including the bedroom, kitchen, and bathroom, to ensure safety. A sensor placed on the oven can detect use of the cooker, so that the patient is reminded if it was not switched off after use. A rain sensor placed by the window can alert the patient if the window was left open during rain. A bath sensor and a lamp sensor in the bathroom can be used to ensure that the bath and the light are not left on [53].
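The reminders described above are essentially rules over sensor states. A minimal Python sketch, assuming a hypothetical snapshot of sensor readings (`HomeState`) and illustrative time limits, could look like this; an actual IoT deployment would receive these readings as events from the sensors rather than as a single object.

```python
from dataclasses import dataclass


@dataclass
class HomeState:
    """Hypothetical snapshot of the sensors described in the text."""
    oven_on: bool
    minutes_since_kitchen_motion: float
    window_open: bool
    raining: bool
    bath_running_minutes: float
    bathroom_lamp_on: bool
    bathroom_occupied: bool


def check_alerts(state, oven_idle_limit=20, bath_limit=30):
    """Return reminder messages for the patient; the limits are illustrative."""
    alerts = []
    if state.oven_on and state.minutes_since_kitchen_motion > oven_idle_limit:
        alerts.append("The oven is still on.")
    if state.window_open and state.raining:
        alerts.append("A window is open and it is raining.")
    if state.bath_running_minutes > bath_limit:
        alerts.append("The bath has been running for a long time.")
    if state.bathroom_lamp_on and not state.bathroom_occupied:
        alerts.append("The bathroom light is still on.")
    return alerts


evening = HomeState(oven_on=True, minutes_since_kitchen_motion=45, window_open=True,
                    raining=False, bath_running_minutes=0, bathroom_lamp_on=False,
                    bathroom_occupied=False)
print(check_alerts(evening))  # ['The oven is still on.']
```

Keeping the rules this explicit makes each reminder easy for caregivers to audit; the learned models discussed next complement such rules by detecting deviations that no fixed rule anticipates.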

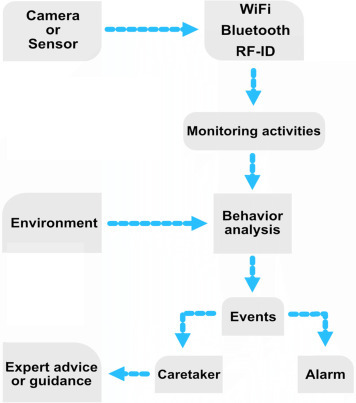

The sensors can transmit information to a nearby computing device that processes the data, or upload them to the cloud for further processing with various machine learning algorithms, and, if necessary, alert relatives or healthcare professionals (Fig. 2.7). By collecting patient data daily, the system learns the patient’s activities of daily living over time, and abnormalities can be detected as deviations from this routine. Machine learning algorithms used in smart home applications include probabilistic models such as the naive Bayes classifier and hidden Markov models, as well as discriminative methods such as support vector machines and artificial neural networks [54].
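Learning a routine and flagging deviations from it can be illustrated with a simple frequency model. This Python sketch is a simplification rather than one of the cited methods: it estimates, for each activity, how often it occurs in each hour of the day (with Laplace smoothing) and flags an observation whose estimated probability falls below a hypothetical threshold. The class name `RoutineModel` and the threshold are assumptions for illustration.

```python
from collections import Counter, defaultdict


class RoutineModel:
    """Estimates P(hour | activity) from logged (hour, activity) events."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha  # Laplace smoothing so unseen hours are not impossible
        self.hours_by_activity = defaultdict(Counter)

    def fit(self, log):
        for hour, activity in log:
            self.hours_by_activity[activity][hour] += 1
        return self

    def probability(self, activity, hour):
        counts = self.hours_by_activity.get(activity, Counter())
        return (counts[hour] + self.alpha) / (sum(counts.values()) + 24 * self.alpha)

    def is_unusual(self, activity, hour, threshold=0.02):
        return self.probability(activity, hour) < threshold


# Thirty days of a hypothetical routine: breakfast, lunch, and bedtime.
log = [(7, "kitchen"), (13, "kitchen"), (23, "bedroom")] * 30
model = RoutineModel().fit(log)
print(model.is_unusual("kitchen", 7))  # False: part of the usual routine
print(model.is_unusual("kitchen", 3))  # True: kitchen activity at 3 a.m.
```

Because the model is refitted as new days are logged, the notion of "routine" adapts to the individual over time, which matches the daily data collection described above.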

Figure 2.7.

Process diagram of a typical smart home or smart assistant setup.

In one example, a Markov logic network was used to design activity recognition that models both simple and composite activities and decides on appropriate alerts when a patient behaves abnormally. The Markov logic network handles both uncertainty modeling and domain knowledge modeling within a single framework, thus capturing the factors that influence patient abnormality [55]. Uncertainty modeling is important for monitoring patients with dementia because the activities they perform are often incomplete. Domain knowledge of the patient’s lifestyle is also important: combined with their medical history, it can improve the accuracy of activity recognition and facilitate decision-making. This machine learning-based activity recognition framework detected abnormalities together with contextual factors such as object, space, time, and duration, providing decision support on suitable actions to keep the patient safe in the given environment. Alerts of different levels of importance are typically used for such decision support: for instance, a low-level alarm when the patient has forgotten to complete a routine activity such as switching off the lights or closing the window, and a high-level alarm if the patient has fallen and requires intervention by a caretaker. One of the main aims of such activity monitoring approaches, as well as of other monitoring tools, is to support healthcare practitioners in identifying changes in cognitive functioning and in providing diagnosis and prognosis in a quantitative and objective manner using a smart home system [56]. Various other assistive technology devices exist for people with dementia, including motion detectors, electronic medication dispensers, and robotic devices for tracking.
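The two-level alerting logic can be sketched without the full probabilistic machinery. The Python example below is not a Markov logic network; it is a deterministic stand-in that assumes hypothetical definitions of composite activities as sets of steps, raises a low-level alarm when an activity has been started but not completed, and raises a high-level alarm when a fall is detected. A Markov logic network would instead attach learned weights to rules like these in order to reason under uncertainty.

```python
# Hypothetical composite activities, each defined by the steps that complete it.
COMPOSITE_ACTIVITIES = {
    "cooking": {"oven_on", "oven_off"},
    "bedtime": {"close_window", "lights_off", "lock_door"},
}


def alarm_level(observed_steps, fall_detected=False):
    """Return ('high', reason), ('low', activity), or (None, None) for one time window."""
    if fall_detected:
        return "high", "fall"  # needs intervention by a caretaker
    observed = set(observed_steps)
    for activity, steps in COMPOSITE_ACTIVITIES.items():
        started = bool(steps & observed)
        if started and not steps <= observed:
            return "low", activity  # routine activity left incomplete
    return None, None


print(alarm_level(["oven_on"]))  # ('low', 'cooking')
print(alarm_level(["oven_on", "oven_off"]))  # (None, None)
print(alarm_level([], fall_detected=True))  # ('high', 'fall')
```

Checking for a fall first ensures that the high-level alarm always takes precedence over reminders about incomplete routines.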

2.6.2. Assistive robots

Assistive robots are used to compensate for the physical limitations of elderly and disabled people, assisting them with daily activities and acting as an extra pair of hands or eyes. Such robots can help with various activities, such as mobility, housekeeping, medication management, eating, grooming, bathing, and social communication. An assistive robot named RIBA, which has human-type arms, was designed to help with lifting and moving heavy loads. It has been demonstrated that the robot can carry a patient from a bed to a wheelchair and vice versa. Instructions can be given to RIBA through its tactile sensors, using a method known as tactile guidance, in which the robot is taught by being shown [57].

The MARIO project (Managing active and healthy Aging with use of caring Service robots) is another assistive robotics initiative that has attracted a lot of attention. The project aims to address loneliness, isolation, and dementia, which are common among elderly people, through multifaceted interventions delivered by service robots. The MARIO Kompaï companion robot was developed to evoke real feelings and emotions in order to improve acceptance by dementia patients, to support physicians and caretakers in performing dementia assessment tests, and to promote interaction with end users. The Kompaï robot used in the MARIO project was developed by Robosoft and contains a camera, a Kinect motion sensor, and two LiDAR remote sensing systems for navigation and object identification [58]. It also includes a speech recognition system and other controller and interface technologies, with the intention of supporting and managing a wide range of robotic applications on a single robotic platform, similar to apps on a smartphone. The robotic apps include ones focused on cognitive stimulation, social interaction, and general health assessment. Many of these apps use AI-powered tools to process the data collected by the robots to perform tasks such as facial recognition, object identification, language processing, and various kinds of diagnostic support [59].

2.6.3. Cognitive assistants

Many elderly people experience a decline in their cognitive abilities and have difficulty with problem-solving, maintaining attention, and accessing their memory. Cognitive stimulation is a common rehabilitation approach after brain injury from stroke, multiple sclerosis, or trauma, and for various mild cognitive impairments. Cognitive stimulation has been shown to reduce cognitive impairment and can be delivered using assistive robots.

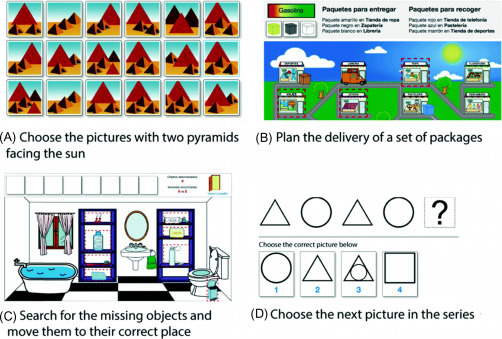

Virtrael is one such cognitive stimulation platform; it serves to assess, stimulate, and train various cognitive skills that are declining in the patient. The Virtrael program is based on visual memory training and is built around three key functionalities: configuration, communication, and games. The configuration mode allows an administrator to match the patient with a therapist and the therapist to configure the program for the patient. The communication tool allows communication between the patient and the therapist and among patients. The games are intended to train the patient’s cognitive skills, including memory, attention, and planning (Fig. 2.8) [60].

Figure 2.8.

Example of games used for training cognitive skills of patients [60].

2.6.4. Social and emotional stimulation

One of the first applications of assistive robots, and a commonly investigated technology, is companion robots for social and emotional stimulation. Such robots help elderly patients with stress or depression by connecting emotionally with them through enhanced social interaction and by assisting with various daily tasks. These robots range from pet-like to more peer-like designs; all are interactive and have psychological and social effects. The robotic pet PARO, a baby seal robot, is the most widely used robotic pet and carries various sensors to sense touch, sounds, and visual objects [61]. Another example is the MARIO Kompaï robot mentioned earlier, which focuses on assisting elderly patients with dementia, loneliness, and isolation. Yet another companion robot, Buddy by Blue Frog Robotics, assists elderly patients with daily activities such as reminders about medication and appointments, and uses motion sensors to detect falls and physical inactivity. Altogether, studies investigating cognitive stimulation seem to demonstrate a decrease in the rate of cognitive decline and progression of dementia.

2.7. The artificial intelligence can see you now

AI is increasingly becoming an integral part of all our lives, from smartphones to cars and, more importantly, our healthcare. This technology will continue to push boundaries, and certain norms that have long gone unquestioned and been accepted as the status quo for hundreds of years will now be directly challenged and significantly augmented.

2.7.1. Artificial intelligence in the near and the remote

We believe that AI has an important role to play in the healthcare offerings of the future. In the form of machine learning, it is the primary capability behind the development of precision medicine, widely agreed to be a sorely needed advance in care. Although early efforts at providing diagnosis and treatment recommendations have proven challenging, we expect that AI will ultimately master that domain as well. Given the rapid advances in AI for imaging analysis, it seems likely that most radiology and pathology images will be examined at some point by a machine. Speech and text recognition are already employed for tasks like patient communication and capture of clinical notes, and their usage will increase.

The greatest challenge to AI in these healthcare domains is not whether the technologies will be capable enough to be useful, but rather ensuring their adoption in daily clinical practice. For widespread adoption to take place, AI systems must be approved by regulators, integrated with EHR systems, standardized to a sufficient degree that similar products work in a similar fashion, taught to clinicians, paid for by public or private payer organizations, and updated over time in the field. These challenges will ultimately be overcome, but overcoming them will take much longer than it will take for the technologies themselves to mature. As a result, we expect to see limited use of AI in clinical practice within 5 years and more extensive use within 10 years.

It also seems increasingly clear that AI systems will not replace human clinicians on a large scale but rather will augment their efforts to care for patients. Over time, human clinicians may move toward tasks and job designs that draw on uniquely human skills like empathy, persuasion, and big-picture integration. Perhaps the only healthcare providers whose careers will be at risk over time are those who refuse to work alongside AI.

2.7.2. Success factors for artificial intelligence in healthcare

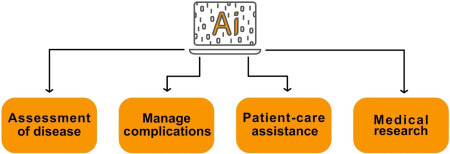

A review by Becker [62] suggests that AI can serve clinicians, patients, and other healthcare workers in four different ways. Here, we use these suggestions as inspiration and expand on how each contributes toward a successful implementation of AI in healthcare (Fig. 2.9):

1. Assessment of disease onset and treatment success.
2. Management or alleviation of complications.
3. Patient-care assistance during a treatment or procedure.
4. Research aimed at discovery or treatment of disease.

Figure 2.9.

The likely success factors depend largely on the satisfaction of the end users and the results that the AI-based systems produce.

2.7.2.1. Assessment of condition

Individuals will increasingly demand more control over the prediction and assessment of their conditions in the coming years. This increase in demand is partly due to a technology-reliant population that has come to expect technological innovation to help them lead healthy lives. Of course, while not all the answers lie in this arena, it is an extremely promising field.

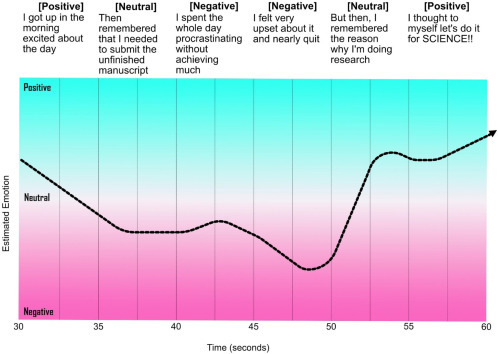

Mood and mental health-related conditions are an immensely important topic in today’s world, and for good reason. According to the WHO, one in four people around the world will be affected by such conditions at some point in their lives, which can accelerate their path toward ill health and comorbidities. Recently, machine learning algorithms have been developed to detect words and intonations in an individual’s speech that may indicate a mood disorder. Using neural networks, an MIT-based lab has researched the detection of early signs of depression from speech. According to the researchers, the “model sees sequences of words/speaking style” and decides whether these emerging patterns are more likely to be seen in individuals with or without depression [63]. The technique employed by the researchers is often referred to as sequence modeling: sequences of audio and text from patients with and without depression are fed to the model, and as these accumulate, various text patterns can be paired with audio signals. For example, words such as “low,” “blue,” and “sad” can be paired with more monotone and flat audio signals. Additionally, speaking rate and the length of pauses can play a major role in detecting individuals experiencing depression. An example of this can be seen in Fig. 2.10, where an estimated emotion is measured within a period of 60 seconds based on the tone and words used.
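The speaking-rate and pause cues mentioned above are straightforward to compute once a speech recognizer has produced word timings. The Python sketch below assumes a hypothetical input of (word, start, end) tuples in seconds and extracts a few such features; it only illustrates feature extraction and is not the MIT group's sequence model, which learns from raw sequences of audio and text. The one-second cut-off for a long pause is an assumption, and the word list simply reuses the examples from the text.

```python
from statistics import mean

LOW_MOOD_WORDS = {"low", "blue", "sad"}  # the example words from the text


def speech_features(words, long_pause_s=1.0):
    """words: (token, start_s, end_s) tuples in speaking order, at least two of them."""
    if len(words) < 2:
        raise ValueError("need at least two timed words")
    duration = words[-1][2] - words[0][1]
    pauses = [nxt[1] - cur[2] for cur, nxt in zip(words, words[1:])]
    return {
        "words_per_minute": 60.0 * len(words) / duration,
        "mean_pause_s": mean(pauses),
        "long_pauses": sum(p > long_pause_s for p in pauses),
        "low_mood_words": sum(tok.lower().strip(".,!?") in LOW_MOOD_WORDS
                              for tok, _, _ in words),
    }


utterance = [("I", 0.0, 0.2), ("feel", 0.3, 0.6), ("really", 1.8, 2.2), ("low", 2.3, 2.6)]
print(speech_features(utterance))
```

Features like these would serve as inputs to a trained classifier or sequence model rather than as a diagnosis on their own.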

Figure 2.10.

Certain mood conditions can be detected early by analyzing the trend, tone of voice, and speaking style of individuals.

2.7.2.2. Managing complications

Patients usually tolerate the general feeling of being unwell and the various complications that accompany mild illnesses. For certain conditions, however, it is essential to manage these symptoms so as to prevent further development and ultimately alleviate more complex symptoms. A good example can be seen in the field of infectious diseases. In a study published in the Journal of Trauma and Acute Care Surgery, researchers suggest that understanding the microbiological niches (biomarkers) of trauma patients could hold the key to predicting future wound infections, allowing healthcare workers to take the necessary measures to prevent the worst outcome [64]. Machine learning techniques can also contribute toward the prediction of serious complications, such as neuropathy in people with type 2 diabetes, or early cardiovascular irregularities. Furthermore, models that help clinicians detect postoperative complications such as infections will contribute toward a more efficient system [65].

2.7.2.3. Patient-care assistance

Patient-care assistance technologies can improve clinicians’ workflow and contribute to patients’ autonomy and well-being. If each patient is treated as an independent system, a bespoke approach can be built from the variety of data available for that patient. This is of utmost importance for the elderly and the vulnerable in our societies. One example is a virtual health assistant that reminds individuals to take their medications at set times or recommends exercise habits for an optimal outcome. The field of affective computing can contribute significantly here. Affective computing is the discipline concerned with enabling machines to process, interpret, simulate, and analyze human behavior and emotions. With it, patients can interact with a device remotely and access their biometric data, all the while feeling that they are interacting with a caring, empathetic system that truly wants the best outcome for them. This setup can be applied both at home and in hospitals to relieve work pressure on healthcare workers and improve service.
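The medication-reminder function of a virtual health assistant reduces, at its core, to computing upcoming dose times from a daily schedule. The sketch below is a minimal, hypothetical version using Python’s standard `datetime` module; the function name, the schedule format, and the medication names are illustrative assumptions, and no clinical or affective-computing logic is included.

```python
from datetime import datetime, time, timedelta
from typing import List, Tuple


def next_reminders(schedule: List[Tuple[str, time]], now: datetime,
                   count: int = 3) -> List[Tuple[datetime, str]]:
    """Return the next `count` (datetime, medication) reminders after `now`.

    `schedule` lists daily doses as (medication name, time of day).
    """
    if not schedule or count <= 0:
        return []
    # Process each day's doses in chronological order.
    ordered = sorted(schedule, key=lambda item: item[1])
    upcoming: List[Tuple[datetime, str]] = []
    day = now.date()
    while len(upcoming) < count:
        for name, time_of_day in ordered:
            when = datetime.combine(day, time_of_day)
            if when > now:  # skip doses whose time has already passed
                upcoming.append((when, name))
                if len(upcoming) == count:
                    break
        day += timedelta(days=1)
    return upcoming


if __name__ == "__main__":
    # Hypothetical schedule: one morning and one evening dose.
    schedule = [("medication_a", time(8, 0)), ("medication_b", time(20, 0))]
    for when, name in next_reminders(schedule, datetime(2020, 3, 1, 12, 0)):
        print(when.isoformat(), name)
```

A real assistant would add persistence, time-zone handling, and confirmation that a dose was taken; this sketch only shows the scheduling step.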

2.7.2.4. Medical research

AI can accelerate both the diagnostic process and medical research. In recent years, a growing number of partnerships have formed between biotech, MedTech, and pharmaceutical companies to accelerate the discovery of new drugs. These partnerships are often driven less by curiosity than by necessity and the needs of society. In a world where certain expertise is rare, research costs are high, and effective treatments for certain conditions have yet to be devised, collaboration between disciplines is key. A good example of this collaboration is a recent breakthrough in antibiotic discovery, in which researchers trained a neural network that “learned” the properties of a vast number of molecules in order to identify those that inhibit the growth of E. coli, a Gram-negative bacterial species that is notoriously hard to kill [66]. Another example is recent research on the COVID-19 pandemic. Predictive Oncology, a precision medicine company, has announced that it is launching an AI platform to accelerate the production of new diagnostics and vaccines by running more than 12,000 computer simulations per machine. This complements other efforts that employ DL to find molecules that can interact with the main protease (Mpro, also known as 3CLpro) of the virus, thereby disrupting the viral replication machinery inside the host [67], [68].
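The screening idea behind [66], scoring a large library of molecules and prioritizing those most likely to inhibit bacterial growth, can be sketched with a much simpler technique than a neural network: nearest-neighbour ranking by Tanimoto similarity to known inhibitors. In the sketch below, molecules are represented as sets of “on” fingerprint bits; the fingerprints and molecule names are toy placeholders (a real pipeline would compute fingerprints with a cheminformatics toolkit), and the function names are hypothetical.

```python
from typing import Dict, List, Set, Tuple


def tanimoto(a: Set[int], b: Set[int]) -> float:
    """Tanimoto (Jaccard) similarity between two sets of fingerprint bits."""
    if not a and not b:
        return 0.0
    inter = len(a & b)
    return inter / (len(a) + len(b) - inter)


def rank_candidates(known_actives: List[Set[int]], library: Dict[str, Set[int]],
                    top_k: int = 3) -> List[Tuple[str, float]]:
    """Score each library molecule by its best similarity to any known active
    and return the top_k (name, score) pairs, highest score first."""
    scored = []
    for name, fp in library.items():
        score = max(tanimoto(fp, active) for active in known_actives)
        scored.append((score, name))
    # Highest score first; ties broken alphabetically by name.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(name, round(score, 3)) for score, name in scored[:top_k]]


if __name__ == "__main__":
    actives = [{1, 2, 3, 4}, {10, 11, 12}]           # toy inhibitor fingerprints
    library = {"cand_x": {1, 2, 3, 5}, "cand_y": {20, 21}, "cand_z": {10, 11, 12}}
    print(rank_candidates(actives, library))
```

This baseline can only favor molecules that resemble known actives, whereas a trained model can in principle assign high scores to molecules that look unlike any known inhibitor, which is part of what makes the learned approach attractive.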

2.7.3. The digital primary physician

As you walk into the primary care physician’s room, the doctor greets you. There is initial eye-to-eye contact, followed by an exchange of pleasantries. She then asks about your health and how she can help. You, the patient, have multiple medical problems: a history of sciatica, snapping hip syndrome, high cholesterol, above-average blood pressure, and chronic sinusitis. However, because your time with the doctor is limited, priorities matter [69]. You rank your own conditions and focus on the one most important to you, the chronic sinusitis. The doctor asks you multiple questions about the condition, and as you explain your symptoms, she types everything into your electronic record, performs a quick examination, writes a prescription, and asks you to come back in 6 weeks for a follow-up examination. For your other conditions, you probably need to book a separate appointment unless you live in a country that allocates more than 20 minutes per patient.

The above scenario is the normal routine in most countries. Despite the physician’s helpfulness, it is not an ideal system, and if you were in this patient’s position, you would likely walk away dissatisfied with the care received. Frustration with such systems has placed immense pressure on health workers and needs to be addressed. Today, numerous health-related applications combine the power of AI with that of a remote physician to answer simple questions that might not warrant a physical visit to the doctor.

2.7.3.1. Artificial intelligence prequalification (triage)

Before a patient has access to an actual doctor, trained AI bots can assess whether certain symptoms warrant a conversation with a physician. The patient is asked a series of questions, and based on each response, the software encourages the user to take specific actions. These questions and answers are often rigorously reviewed by medical professionals at each stage to ensure accuracy. In serious cases, a general response of “You should see a doctor” is given, and the patient is directed to book an appointment with a primary care physician.
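The question-and-answer flow described above can be sketched as a small rule-based triage function in Python. The question identifiers, prompts, the non-urgent outcome label, and the escalation rules below are hypothetical placeholders, not clinical guidance; only the “You should see a doctor” response comes from the text.

```python
from typing import Dict, List, Tuple

SEE_DOCTOR = "You should see a doctor"
SELF_CARE = "Self-care guidance"  # hypothetical non-urgent outcome

# Hypothetical question set: (question id, prompt, answer that escalates).
# Real triage content must be written and reviewed by medical professionals.
QUESTIONS: List[Tuple[str, str, bool]] = [
    ("severe_pain", "Is your pain severe?", True),
    ("breathing", "Do you have difficulty breathing?", True),
    ("over_a_week", "Have your symptoms lasted more than a week?", True),
]


def triage(answers: Dict[str, bool]) -> str:
    """Walk through the questions in order and escalate on the first red flag.

    A missing answer is treated conservatively and also escalates.
    """
    for qid, _prompt, red_flag in QUESTIONS:
        if qid not in answers or answers[qid] == red_flag:
            return SEE_DOCTOR
    return SELF_CARE


if __name__ == "__main__":
    print(triage({"severe_pain": False, "breathing": False, "over_a_week": False}))
    print(triage({"severe_pain": False, "breathing": True, "over_a_week": False}))
```

Treating an unanswered question as a reason to escalate is a deliberately conservative design choice: when in doubt, the bot defers to a physician rather than offering self-care advice.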

2.7.3.2. Remote digital visits

The unique selling point of these recent innovations is that they allow remote video conversations between the patient and the physician. Typically, the patient books an appointment for a specific time, often on the same day. This gives the patient ample time to submit as much information as possible, which the responsible physician can review and carefully analyze before talking to the patient. The information can take the form of images, text, video, and audio. This is an encouraging and creative development, as many people around the world lack the time and resources to visit a physician, and it also allows physicians to work remotely.

2.7.3.3. The future of primary care

In a recent study, when asked about the future of AI in primary care, most practitioners acknowledged its potential benefits but were extremely skeptical about it playing a significant role in the future of the profession. One main concern is the lack of empathy and the ethical dilemmas that can arise between AI and patients [70]. While this may be true today, it is naive to assume that this technology will remain dormant and not progress any further. People favor streamlined, creative solutions that are effective and take less out of their daily lives. Combined with ever-increasing breakthroughs in smart healthcare materials [71] and AI, this makes it possible to envisage patients managing most of their own conditions at home and, when necessary, contacting a relevant healthcare worker who will refer them to more specialized physicians who can tend to their needs. It is also important to note that during an epidemic, an outbreak, a natural or manmade disaster, or simply when patients are away from home, technology that allows people to interact and solve problems remotely becomes a necessity. At the time of writing (early 2020), the threat of a SARS-CoV-2 epidemic looms over many countries, and the virus is spreading at an unprecedented rate. Experts worldwide expect the infection rate to be high and warn that the virus could persist within populations and cause many fatalities in the months to come. It is therefore essential to promote remote healthcare facilities and technologies and to put permanent solutions in place that save lives and reduce unnecessary burden and risk for healthcare workers and patients alike.

References

1. Miller D.D., Brown E.W. Artificial intelligence in medical practice: the question to the answer? Am J Med. 2018;131(2):129–133. doi: 10.1016/j.amjmed.2017.10.035.

2. Kirch D.G., Petelle K. Addressing the physician shortage: the peril of ignoring demography. JAMA. 2017;317(19):1947–1948. doi: 10.1001/jama.2017.2714.

3. Combi C., Pozzani G., Pozzi G. Telemedicine for developing countries. Appl Clin Inform. 2016;07(04):1025–1050. doi: 10.4338/ACI-2016-06-R-0089.

4. Bresnick J. Artificial intelligence in healthcare market to see 40% CAGR surge; 2017.

5. Lee K.-F. AI superpowers: China, Silicon Valley, and the new world order. 1st ed. Houghton Mifflin Harcourt; 2019.

6. King D. DeepMind’s health team joins Google Health.

7. Hoyt R.E., Snider D., Thompson C., Mantravadi S. IBM Watson Analytics: automating visualization, descriptive, and predictive statistics. JMIR Public Health Surveill. 2016;2(2):e157. doi: 10.2196/publichealth.5810.

8. Marr B. How is AI used in healthcare—5 powerful real-world examples that show the latest advances. Forbes; 2018.

9. Kalis B., Collier M., Fu R. 10 promising AI applications in health care. Harvard Business Review; 2018.

10. Singhal S., Carlton S. The era of exponential improvement in healthcare? McKinsey Co Rev.; 2019.

11. Konieczny L., Roterman I. Personalized precision medicine. Bio-Algorithms Med-Syst. 2019;15.

12. Love-Koh J. The future of precision medicine: potential impacts for health technology assessment. Pharmacoeconomics. 2018;36(12):1439–1451. doi: 10.1007/s40273-018-0686-6.

13. Kulski J.K. Next-generation sequencing—an overview of the history, tools, and ‘omic’ applications; 2020.

14. Hughes J.P., Rees S., Kalindjian S.B., Philpott K.L. Principles of early drug discovery. Br J Pharmacol. 2011;162(6):1239–1249. doi: 10.1111/j.1476-5381.2010.01127.x.

15. Ekins S. Exploiting machine learning for end-to-end drug discovery and development. Nat Mater. 2019;18(5):435–441. doi: 10.1038/s41563-019-0338-z.

16. Zhang L., Tan J., Han D., Zhu H. From machine learning to deep learning: progress in machine intelligence for rational drug discovery. Drug Discov Today. 2017;22(11):1680–1685. doi: 10.1016/j.drudis.2017.08.010.

17. Lavecchia A. Deep learning in drug discovery: opportunities, challenges and future prospects. Drug Discov Today. 2019;24(10):2017–2032. doi: 10.1016/j.drudis.2019.07.006.

18. Coley C.W., Barzilay R., Green W.H., Jaakkola T.S., Jensen K.F. Convolutional embedding of attributed molecular graphs for physical property prediction. J Chem Inf Model. 2017;57(8):1757–1772. doi: 10.1021/acs.jcim.6b00601.

19. Mayr A., Klambauer G., Unterthiner T., Hochreiter S. DeepTox: toxicity prediction using deep learning. Front Environ Sci. 2016;3:80.

20. Wu Z. MoleculeNet: a benchmark for molecular machine learning. Chem Sci. 2018;9. doi: 10.1039/c7sc02664a.

21. Kadurin A., Nikolenko S., Khrabrov K., Aliper A., Zhavoronkov A. druGAN: an advanced generative adversarial autoencoder model for de novo generation of new molecules with desired molecular properties in silico. Mol Pharm. 2017;14(9):3098–3104. doi: 10.1021/acs.molpharmaceut.7b00346.

22. Blaschke T., Olivecrona M., Engkvist O., Bajorath J., Chen H. Application of generative autoencoder in de novo molecular design. Mol Inform. 2018;37(1–2):1700123. doi: 10.1002/minf.201700123.

23. Merk D., Friedrich L., Grisoni F., Schneider G. De novo design of bioactive small molecules by artificial intelligence. Mol Inform. 2018;37. doi: 10.1002/minf.201700153.

24. Shi T. Molecular image-based convolutional neural network for the prediction of ADMET properties. Chemom Intell Lab Syst. 2019;194:103853.

25. Wallach H.A., Dzamba M.I. AtomNet: a deep convolutional neural network for bioactivity prediction in structure-based drug discovery. arXiv; 2015.

26. Hashimoto D.A., Rosman G., Rus D., Meireles O.R. Artificial intelligence in surgery: promises and perils. Ann Surg. 2018;268:70–76. doi: 10.1097/SLA.0000000000002693.

27. Petscharnig S., Schöffmann K. Learning laparoscopic video shot classification for gynecological surgery. Multimed Tools Appl. 2018;77:8061–8079.

28. Lundervold A.S., Lundervold A. An overview of deep learning in medical imaging focusing on MRI. Z Med Phys. 2019;29:102–127. doi: 10.1016/j.zemedi.2018.11.002.

29. Chien C.H., Chen C.H., Jeng T.S. An interactive augmented reality system for learning anatomy structure. In: Proceedings of the International MultiConference of Engineers and Computer Scientists 2010, IMECS 2010; 2010. http://www.iaeng.org/publication/IMECS2018/

30. Frendø M., Konge L., Cayé-Thomasen P., Sørensen M.S., Andersen S.A.W. Decentralized virtual reality training of mastoidectomy improves cadaver dissection performance: a prospective, controlled cohort study. Otol Neurotol. 2020;41(4). doi: 10.1097/MAO.0000000000002541.

31. Lee S.H., Jung H.Y., Yun S.J., Oh B.M., Seo H.G. Upper extremity rehabilitation using fully immersive virtual reality games with a head mount display: a feasibility study. PM R. 2020;12:257–262. doi: 10.1002/pmrj.12206.

32. Baños R.M. A positive psychological intervention using virtual reality for patients with advanced cancer in a hospital setting: a pilot study to assess feasibility. Support Care Cancer. 2013;21:263–270. doi: 10.1007/s00520-012-1520-x.

33. Dias D., Cunha J.P.S. Wearable health devices—vital sign monitoring, systems and technologies. Sensors (Basel). 2018;18(8):2414. doi: 10.3390/s18082414.