Abstract

One of the most important factors limiting the success of chemotherapy in cancer treatment is the phenomenon of drug resistance. We have recently introduced a framework for quantifying the effects of induced and non-induced resistance to cancer chemotherapy (Greene et al., 2018a, 2019). In this work, we expound on the details relating to an optimal control problem outlined in Greene et al. (2018a). The control structure is precisely characterized as a concatenation of bang-bang and path-constrained arcs via the Pontryagin Maximum Principle and differential Lie algebraic techniques. A structural identifiability analysis is also presented, demonstrating that patient-specific parameters may be measured and thus utilized in the design of optimal therapies prior to the commencement of therapy. For completeness, a detailed analysis of existence results is also included.

Keywords: drug resistance, chemotherapy, phenotype, optimal control theory, singular controls

1. Introduction

The ability of cancer chemotherapies to successfully eradicate cancer populations is limited by the presence of drug resistance. Cells may become resistant through a variety of cellular and micro-environmental mechanisms (Gottesman, 2002). These mechanisms are exceedingly complex and diverse, and remain to be completely understood. Equally complex is the manner in which cancer cells obtain the resistant phenotype. Classically resistance was understood to be conferred by random genetic mutations; more recently, the role of epigenetic phenotype switching was discovered as another mediator of resistance (Pisco et al., 2013). Importantly, both of these phenomena were seen as drug-independent, so that the generation of resistance is functionally separate from the selection mechanism (e.g., the drug). However, experimental studies from the past ten years suggest that drug resistance in cancer may actually be induced by the application of chemotherapy (Sharma et al., 2010; Pisco et al., 2013; Goldman et al., 2015; Doherty et al., 2016; Shaffer et al., 2017).

In view of the multitude of ways by which a cancer cell may become chemoresistant, we have previously introduced a mathematical framework to differentiate drug-independent from drug-dependent resistance (Greene et al., 2019). In that work, we demonstrated that induced resistance may play a crucial role in therapy outcome, and also discussed methods by which a treatment's induction potential may be identified via biological assays. An extension of the work was outlined in the conference paper (Greene et al., 2018a), where a formal optimal control problem was introduced and an initial mathematical analysis was performed. The aim of this work is to formalize the parameter identifiability properties of our theoretical model, to establish the existence of the optimal control introduced in Greene et al. (2018a), and to precisely classify the optimal control structure utilizing the Pontryagin Maximum Principle and differential-geometric techniques. A numerical investigation of both the control structure and corresponding objective is also undertaken as a function of patient-specific parameters, and clinical conclusions are emphasized.

The work is organized as follows. In section 2, we briefly review the mathematical model together with the underlying assumptions. Section 3 restates the optimal control problem, and the Maximum Principle is analyzed in section 4. A precise theoretical characterization of the optimal control structure is summarized in section 5. In section 6, we compare theoretical results with numerical computations, and investigate the variation in control structure and objective as a function of parameters. Conclusions are presented in section 8. We also include additional properties, including details on structural identifiability and existence of optimal controls, in Section 7.

2. Mathematical Modeling of Induced Drug Resistance

We briefly review the model presented in Greene et al. (2019) and analyzed further in Greene et al. (2018a). In that work, we have constructed a simple dynamical model which describes the evolution of drug resistance through both drug-independent (e.g., random point mutations, gene amplification, stochastic state switching) and drug-dependent (e.g., mutagenicity, epigenetic modifications) mechanisms. Drug-induced resistance, although experimentally observed, remains poorly understood. It is our hope that a mathematical analysis will provide mechanistic insight and produce a more complete understanding of this process by which cancer cells inhibit treatment efficacy.

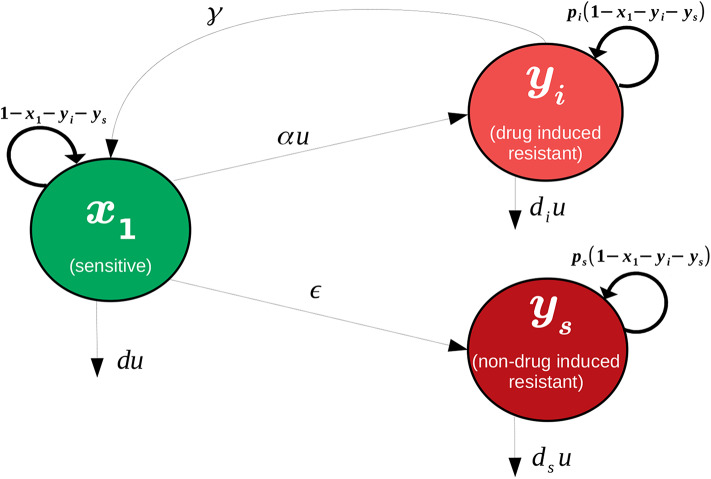

A network diagram of the model under consideration is provided in Figure 1. Specifically, we assume that the tumor being studied is composed of two types of cells: sensitive (x1) and resistant (x2). For simplicity, the drug is taken as completely ineffective against the resistant population, while the log-kill hypothesis (Traina and Norton, 2011) is assumed for the sensitive cells. Complete resistance is of course unrealistic, but can serve as a reasonable approximation, especially when toxicity constraints may limit the total amount of drug that may be administered. Furthermore, this assumption permits a natural metric on treatment efficacy that may not exist otherwise (see section 3). The effect of treatment is considered as a control agent u(t), which we assume is a locally bounded Lebesgue measurable function taking values in +. Here u(t) is directly related to the applied drug dosage D(t), and in the present work we assume that we have explicit control over u(t). Later, during the formulation of the optimal control problem (section 3), we will make precise specifications on the control set U. Even though an arbitrary dosage schedule is unrealistic as a treatment strategy, our objective in this work is to understand the fundamental mathematical questions associated with drug-induced resistance, so we believe the simplification is justified. Furthermore, our results in section 5 suggest that the applied optimal treatment should take a relatively simple form, which may be approximated with sufficient accuracy in a clinical setting. Sensitive and resistant cells are assumed to compete for resources in the tumor microenvironment; this is modeled via a joint carrying capacity, which we have scaled to one. Furthermore, cells are allowed to transition between the two phenotypes in both a drug-independent and drug-dependent manner. All random transitions to the resistant phenotype are modeled utilizing a common term, ϵx1, which accounts for both genetic mutations and epigenetic events occurring independently of the application of treatment. Drug-induced deaths are assumed of the form du(t)x1, where d is the drug cytotoxicity parameter relating to the log-kill hypothesis. Drug-induced transitions are assumed to be of the form αu(t)x1, which implies that the per-capita drug-induced transition rate is directly proportional to the dosage [as we assume full control on u(t), i.e. pharmacokinetics are ignored]. Of course, other functional relationships may exist, but since the problem is not well-studied, we consider it reasonable to begin our analysis in this simple framework. The above assumptions then yield the following system of ordinary differential equations (ODEs):

Figure 1.

Visualization of interactions considered in system (1).

| (1) |

All parameters are taken as non-negative, and 0 ≤ pr < 1. The restriction on pr emerges due to (1) already being non-dimensionalized, as pr represents the relative growth rate of the resistant population with respect to that of the sensitive cells. The condition pr < 1 thus assumes that the resistant cells divide more slowly than their sensitive counterparts, which is observed experimentally (Shackney et al., 1978; Lee, 1993; Brimacombe et al., 2009). As mentioned previously, many simplifying assumptions are made in system (1). Specifically, both types of resistance (random genetic and epigenetic) are modeled as dynamically equivalent; both possess the same division rate pr and spontaneous (i.e., drug-independent) transition rate ϵ. Thus, the resistant compartment x2 denotes the total resistant subpopulation.

The region

| (2) |

in the first quadrant is forward invariant for any locally bounded Lebesgue measurable treatment function u(t) taking values in +. Furthermore, if ϵ > 0, the population of (1) becomes asymptotically resistant:

| (3) |

For a proof, see Theorem 2 in SI A in Greene et al. (2019). Thus in our model, the phenomenon of drug resistance is inevitable. However, we may still implement control strategies which, for example, may increase patient survival time. Such aspects will inform the objective introduced in section 3. For more details on the formulation and dynamics of system (1), we refer the reader to Greene et al. (2019).

3. Optimal Control Formulation

As discussed in section 2, all treatment strategies u(t) result in an entirely resistant tumor: is globally asymptotically stable for all initial conditions in region Ω. Thus, any chemotherapeutic protocol will eventually fail, and a new drug must be introduced (not modeled in this work, but the subject of future study). Therefore, selecting an objective which minimizes tumor volume (x1 + x2) or resistant fraction [x2/(x1 + x2)] at a fixed time horizon would be specious for our modeling framework. However, one can still combine therapeutic efficacy and clonal competition to influence transient dynamics and possibly prolong patient life, as has been shown clinically utilizing real-time patient data (Gatenby et al., 2009).

Toxicity as well as pharmacokinetic constraints limit the amount of drug to be applied at any given instant. Thus, we assume that there exists some number M > 0 such that u(t) ≤ M for all t ≥ 0. Any Lebesgue measurable treatment regime u(t) is considered, so that the control set is U = [0, M] and the set of admissible controls is

| (4) |

Recall that all cellular populations have been normalized to remain in [0, 1]. We assume that there is a critical tumor volume Vc, at which treatment, by definition, has failed. Our interpretation is that a tumor volume larger than Vc interferes with normal biological function, while x1 + x2 ≤ Vc indicates a clinically acceptable state. Different diseases will have different Vc values. For technical reasons needed in section 5 we assume that Vc < 1 − ϵ. This is a mild assumption, since genetic mutation rates ϵ are generally small (Loeb et al., 1974), and our interest is on the impact of induced resistance. Thus

| (5) |

Define tc as the time at which the tumor increases above size Vc for the first time. To be precise,

| (6) |

Since all treatments approach the state (0, 1), tc(u) is well-defined for each treatment u(t). For simplicity, denote tc = tc(u) in the remainder of the work. The time tc is then a measure of treatment efficacy, and our goal is then to find those controls u* which maximize tc. Writing in standard form as a minimization problem, we have the following objective:

| (7) |

We are thus seeking a control which maximizes tc, i.e. solves the time-optimal minimization problem (7) restricted to the dynamic state equations given by the system described in (1) and the condition x1(t) + x2(t) ≤ Vc for all 0 ≤ t ≤ tc. Note that the above is formulated (using the negative sign) as a minimization problem to be consistent with previous literature and results related to the Pontryagin Maximum Principle (PMP) (Ledzewicz and Schättler, 2012). Note that maximization is still utilized in section 7.2 and section 4.1, and we believe that the objective will be clear from context. To be consistent with notation utilized later, we denote the system (1) as

| (8) |

where

| (9) |

| (10) |

and x(t) = (x1(t), x2(t)). By continuity of solutions, the time tc must satisfy the terminal condition (tc, x(tc)) ∈ N, where N is the line x1 + x2 = Vc in Ω, i.e.,

| (11) |

where

| (12) |

With this notation, the path-constraint

| (13) |

must also hold for 0 ≤ t ≤ tc. Equation (13) ensures that the tumor remains below critical volume Vc for the duration of treatment. Equivalently, the dynamics are restricted to lie in the set Ωc ⊆ Ω, where

| (14) |

for all times t such that t ∈ [0, tc]. The initial state

| (15) |

is also assumed to lie in Ωc. Except for section 7.1 where we restrict to the case , the remainder of the work allows for arbitrary .

4. Maximum Principle

We dedicate the present section to characterize the optimal control utilizing the Pontryagin Maximum Principle (PMP). The subsequent analysis is strongly influenced by the Lie-derivative techniques introduced by Sussmann (1982, 1987a,b,c). For an excellent source on both the general theory and applications to cancer biology, see the textbooks by Ledzewicz and Schättler (2012) and Schättler and Ledzewicz (2015).

Before starting our analysis of the behavior and response of system (1) to applied treatment strategies u(t) utilizing geometric methods, we would like to mention that we have not found a reference for existence of optimal controls for a problem such as this, due perhaps to the non-standard character of it (maximization of time, path constraints). For this reason, we have added a self-contained proof of regarding existence in section 7.2.

4.1. Elimination of Path Constraints

We begin our analysis by separating interior controls from those determined by the path-constraint (13) (equivalently, x ∈ N). The following theorem implies that outside of the one-dimensional manifold N, the optimal pair (x*, u*) solves the same local optimization problem without the path and terminal constraints. More precisely, the necessary conditions of the PMP (see section 4.2) at states not on N are exactly the conditions of the corresponding maximization problem with no path or terminal constraints.

THEOREM 1. Suppose that x* is an optimal trajectory. Let t1 be the first time such that x*(t) ∈ N. Fix δ > 0 such that t1 − δ > 0, and

| (16) |

Define z(t): = x*(t)|t ∈ [0,t1−δ]. Then the trajectory z is a local solution of the corresponding time maximization problem tc with boundary conditions x(0) = x0, x(tc) = ξ, and no additional path constraints. Hence at all times t, the path z (together with the corresponding control and adjoint) must satisfy the corresponding unconstrained Pontryagin Maximum Principle.

Proof. We first claim that z satisfies the path-constrained maximization problem with boundary conditions . This is a standard dynamic programming argument: if there exists a trajectory such that , τ > t1 − δ, concatenate with x*(t)|t ∈ [τ,tc] at t = τ to obtain a feasible trajectory satisfying all constraints. This trajectory then has total time τ + δ + tc − t1 > tc, contradicting the global optimality of x*.

Recall that t1 was the first time such that x*(t) ∈ N. Since z is compact, we can find a neighborhood of z that lies entirely in {x|x ∉ N}. As the Maximum Principle is a local condition with respect to the state, this completes the proof. □

Theorem 1 then tells us that for states x = (x1, x2) such that x1 + x2 < Vc, the corresponding unconstrained PMP must be satisfied by any extremal lift of the original problem. (Recall that an extremal lift of an optimal trajectory is obtained by adding the Lagrange multipliers, or adjoint variables, to the control and state; see details in Definition 2.2.4, page 95, Chapter 2 of Ledzewicz and Schättler, 2012). We have now demonstrated that the optimal control consists of concatenations of controls obtained from the unconstrained necessary conditions and controls of the form (18). In the next section, we analyze the Maximum Principle in the region x1 + x2 < Vc. Furthermore, the constraint (13) has generic order one. In other words,

| (17) |

Therefore, the feedback control (also known as the constrained control) can be found by differentiating the function (12) to insure that trajectories remain on the line N:

| (18) |

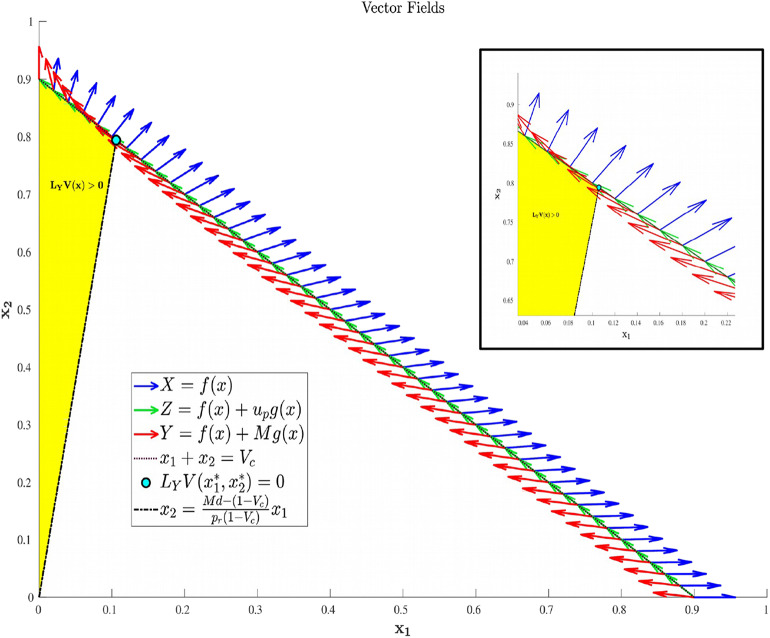

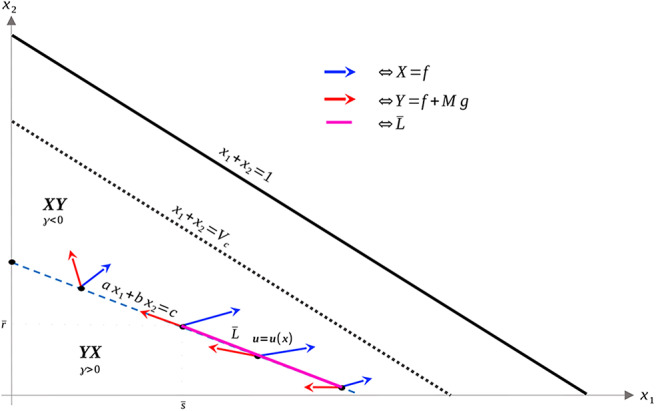

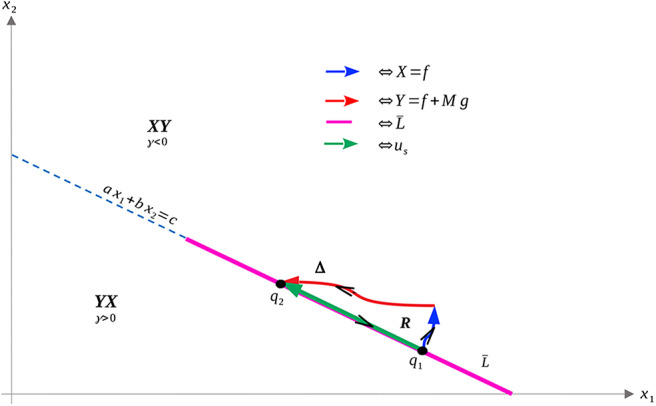

Its existence however does not imply its feasibility, which is discussed below. Specifically, up can be shown to be a decreasing function of x1 which is feasible on the portion of N satisfying , where is given in (20), and infeasible elsewhere. This is proven in Proposition 3, and the geometric structure is depicted in Figure 2. Propositions 4 and 5 provide characterizations of the volume dynamics in certain regions of phase space, and are included here for completeness.

Figure 2.

Region in Ωc where LYV(x) is guaranteed to be positive. That is, applying the maximal dosage M results in an increasing cancer population in the yellow-shaded region of phase-space.

Proposition 2. Suppose that the maximal dosage M satisfies

| (19) |

and the point with coordinates

| (20) |

Denote by Y(x) = f(x) + Mg(x) the vector field corresponding to the maximal allowed dosage M [here, f and g are the functions defined in (9), (10)]. The Lie derivative, for any x ∈ N, of the volume function V(x) = x1 + x2 with respect to Y is

positive if ,

zero at , and

negative if .

Proof. We verify the above claims with a direct calculation. Let LYV(x) denotes the Lie derivative of V(x) with respect to Y. Thus, for x ∈ N,

Assuming , the sign of LYV(x) is as in the statement of the proposition. □

Proposition 2 implies that if the allowable dosage is large enough (Equation 9), treatment can at least decrease the tumor in certain regions of phase space. If this condition was not met, then the applied drug would generally be ineffective in reducing the tumor volume V, and hence not be utilized in a clinical scenario.

Proposition 3. Let x be a point on the line N. The feedback control up is unfeasible if , and is feasible if

Proof. For x ∈ N we compute

It is straightforward to check that up > M if . In addition, the feedback control, when restricted to points in N, is a decreasing function with respect to x1. Thus, it is feasible for x ∈ N if . □

Proposition 4. For x = (x1, x2) ∈ Ωc with

| (21) |

the Lie derivative LYV(x) is positive.

Proof. As in Proposition 2, we compute LYV(x) directly:

where the first inequality utilizes V ≤ Vc, and the second relies on (21) □

Proposition 5. For

trajectories corresponding to the maximal dosage M have a decreasing sensitive cellular population.

Proof. For u(t) ≡ M, the corresponding sensitive trajectory is given by

Note that we are assuming here that the maximal dosage M satisfies . □

4.2. Maximum Principle and Necessary Conditions at Interior Points

Necessary conditions for the optimization problem discussed in section 3 without path or terminal constraints are derived from the Pontryagin Maximum Principle (Pontryagin, 1987; Ledzewicz and Schättler, 2012). The corresponding Hamiltonian function H is defined as

| (22) |

where λ0 ≥ 0 and λ ∈ 2. Here 〈·, ·〉 denotes the standard inner product on 2 and, since the dynamics are affine in the control u, Φ(x, λ) is the switching function:

| (23) |

The Maximum Principle then yields the following theorem:

THEOREM 6. If the extremal (x*, u*) is optimal, there exists λ0 ≥ 0 and a covector (adjoint) , such that the following hold:

(λ0, λ(t)) ≠ 0 for all t ∈ [0, tc].

- λ(t) = (λ1(t), λ2(t)) satisfies the second-order differential equation

(24) -

u*(t) minimizes H pointwise over the control set U:Thus, the control u*(t) must satisfy

(25) where(26) - The Hamiltonian H is identically zero along the extremal lift (x*(t), u*(t), λ(t)):

(27)

Proof. Most statements of Theorem 6 follow directly from the Maximum Principle, so proofs are omitted. In particular, items (1), (2), and the first part of (3) are immediate consequences (Ledzewicz and Schättler, 2012). Equation (25) follows directly since we minimize the function H, which is affine in u (see Equation 22). The Hamiltonian vanishes along (x*(t), u*(t), λ(t)) since it is independent of an explicit time t dependence and the final time tc is free, the latter being a consequence of the transversality condition. □

Proposition 7. For all t ∈ [0, tc], the adjoint λ(t) corresponding to the extremal lift (x*(t), u*(t), λ(t)) is nonzero.

Proof. This is a general result relating to free end time problems. We include a proof here for completeness. Suppose that there exists a time t ∈ [0, tc] such that λ(t) = 0. By (22), the corresponding value of the Hamiltonian is H(λ0, λ(t), x*(t), u*(t)) = −λ0. By item (4) in Theorem 6, H ≡ 0, which implies that λ0 = 0. This contradicts item (1) in Theorem 6. Hence, λ(t) ≠ 0 on [0, tc]. □

4.3. Geometric Properties and Existence of Singular Arcs

We now undertake a geometric analysis of the optimal control problem utilizing the affine structure of system (8) for interior states (i.e., controls which satisfy Theorem 6). We call such controls interior extremals, and all extremals in this section are assumed to be interior. The following results depend on the independence of the vector fields f and g, which we use to both classify the control structure for abnormal extremal lifts (extremal lifts with λ0 = 0), as well as characterize the switching function dynamics via the Lie bracket.

Proposition 8. For all x1 ∈ Ω, x1 > 0, the vector fields f(x) and g(x) are linearly independent.

Proof. Define A(x) = A(x1, x2) to be the matrix

| (28) |

The determinant of A can calculated as

| (29) |

where

| (30) |

As x1(t) + x2(t) ≤ 1 for all t ≥ 0, κ(x(t)) ≥ 0, and we see that detA(x) = 0 in Ω if and only if x1 = 0, completing the proof. □

The line x1 = 0 is invariant in Ω, and furthermore the dynamics in the set are independent of the control u(t). Conversely, implies that x1(t) > 0 for all t ≥ 0. We concern our analysis only in this latter case, and so without loss of generality, f(x) and g(x) are linearly independent in the region of interest Ωc.

We begin by showing that abnormal extremal lifts are easily characterized. We recall that an extremal lift is abnormal if λ0 = 0, i.e., if the Hamiltonian is independent of the objective.

THEOREM 9. Abnormal extremal lifts at interior points, i.e., extremal lifts corresponding to λ0 = 0, are constant and given by the maximal (M) or minimal (0) dosage.

Proof. Assume that u* switches values at some time t. From (25), we must have that Φ(t) = 0. Since λ0 = 0 and Φ(t) = 〈λ(t), g(x*(t))〉, Equation (22) reduces to

| (31) |

Thus, λ(t) is orthogonal to both f(x*(t)) and g(x*(t)). Since f and g are linearly independent (Proposition 8), this implies that λ(t) = 0. But this contradicts Proposition 7. Hence, no such time t exists, and u*(t) is constant. The constant sign of Φ thus corresponds to u = 0 or u = M (see Equation 25). □

The control structure for abnormal extremal lifts is then completely understood via Theorem 9. To analyze the corresponding behavior for normal extremal lifts, without loss of generality we assume that λ0 = 1. Indeed, λ(t) may be rescaled by λ0 > 0 to yield an equivalent version of Theorem 6. We thus assume that the Hamiltonian H(t) evaluated along (λ(t), x*(t), u*(t)) is of the form

| (32) |

We recall the Lie bracket as the first-order differential operator between two vector fields X1 and X2:

| (33) |

where, for example, DX2(z) denotes the Jacobian of X2 evaluated at z. As f and g are linearly independent in Ω, there exist γ, β ∈ C∞(Ω) such that

| (34) |

for all x ∈ Ω. Explicitly, we compute γ and β:

| (35) |

| (36) |

where

| (37) |

| (38) |

| (39) |

Clearly, for parameter values of interest (recall 0 < pr < 1), a, b, c > 0. The assumption (5) guarantees that β(x) > 0 on 0 < x1 + x2 < Vc.

From (25), the sign of the switching function Φ determines the value of the control u*. As λ and x* are solutions of differential equations, Φ is differentiable. The dynamics of Φ can be understood in terms of the Lie bracket [f, g]:

| (40) |

| (41) |

The last lines of the above follow from (34) as well as the linearity of the inner product. We are then able to derive an ODE system for x* and Φ. Equation (32) allows us to solve for 〈λ(t), f(x*(t))〉:

| (42) |

Substituting the above into (41) then yields the following ODE for Φ(t), which we view as coupled to system (8) via (25):

| (43) |

The structure of the optimal control at interior points may now be characterized as a combination of bang-bang and singular arcs. We recall that the control (or, more precisely, the extremal lift) u* is singular on an open interval I ⊂ [0, tc] if the switching function Φ(t) and all its derivatives are identically zero on I. On such intervals, Equation (25) does not determine the value of u*, and a more thorough analysis of the zero set of Φ(t) is necessary. Indeed, for a problem such as ours, aside from controls determined by the path constraint ψ(x1(t), x2(t)) ≤ 0, singular arcs are the only candidates for optimal controls that may take values outside of the set {0, M}. Conversely, times t where Φ(t) = 0 but Φ(n)(t) ≠ 0 for some n ≥ 1 denote candidate bang-bang junctions, where the control may switch between the vertices 0 and M of the control set U. Note that the parity of the smallest such n determines whether a switch actually occurs: n odd implies a switch, while for n even u* remains constant. Equation (43) allows us to completely characterize the regions in the (x1, x2) plane where singular arcs are attainable, as demonstrated in the following proposition.

Proposition 10. Singular arcs are only possible in regions of the (x1, x2) plane where γ(x) = 0. Furthermore, as x1(t) > 0 for all t ≥ 0, the region {x ∈ 2 | γ (x) = 0} ∩ Ω is the line

| (44) |

where a, b, c are defined in (37–39).

Proof. As discussed prior to the statement of Proposition 10, a singular arc must occur on a region where both Φ(t) and are identically zero (as well as all higher-order derivatives). Denoting by x*(t) the corresponding trajectory in the (x1, x2) phase plane, we may calculate from equation (43):

| (45) |

Note we have substituted the assumption Φ(t) = 0. Clearly we must also have that γ(x*(t)) = 0, thus implying that , as desired. The last statement of the proposition follows immediately from Equation (35). □

Proposition 10 implies that singular solutions can only occur along the line ax1 + bx2 − c = 0. Thus, define regions in the first quadrant as follows:

| (46) |

| (47) |

| (48) |

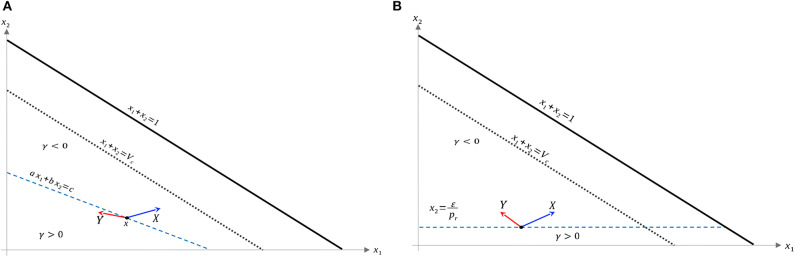

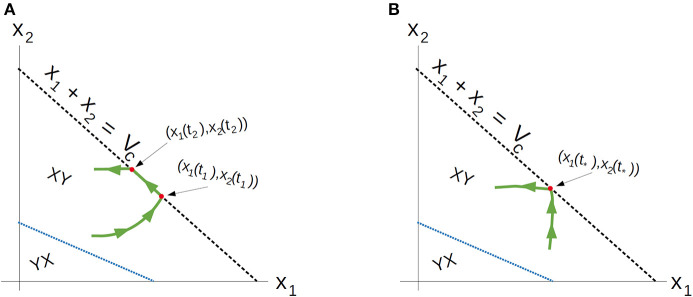

Recall that Ωc is simply the region in Ω prior to treatment failure, i.e., 0 ≤ V ≤ Vc, x1, x2 ≥ 0. From (35), Ωc is partitioned as in Figure 3B. From (35) and (37–39), is a line with negative slope −b/a. Furthermore, necessary and sufficient conditions for to lie interior to Ωc are . From (37)–(39), this occurs if and only if

Figure 3.

Domain in (x1, x2) plane. (A) Region where γ changes sign. We see that inside the triangular region x1 + x2 ≤ 1 of the first quadrant, γ changes sign only along the line ax1 + bx2 − c = 0. For this line to be interior to Ωc as depicted, we must be in the parameter regime indicated in (49). X and Y vector fields corresponding to vertices of control set U. For singular controls to lie in U, X and Y must point to opposite sides along . (B) Same as in (A), but with α = 0.

| (49) |

As we have assumed that ϵ is small, and that Vc ≈ 1, this inequality is not restrictive, and we assume it is satisfied for the remainder of the work. We note an important exception below: when α = 0 the inequality is never satisfied with ϵ > 0; for such parameter values, line is horizontal (Figure 3B). We note that this does not change the qualitative results presented below. Of course, other configurations of the line ax1 + bx2 = c and hence precise optimal syntheses may exist, but we believe the situation illustrated in Figure 3A is sufficiently generic for present purposes.

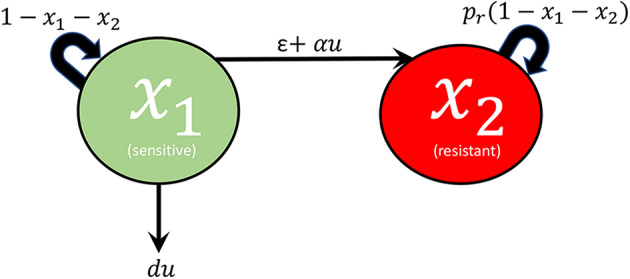

With the existence of singular arcs restricted to the line γ = 0 by Proposition 10, we now investigate the feasibility of such solutions. Recall that the treatment u(t) must lie in the control set U = [0, M], for some M > 0 corresponding to the maximally tolerated applied dosage. Defining the vector field X(x) and Y(x) as the vector fields corresponding to the vertices of U,

| (50) |

a singular control takes values in U at if and only if X(x) and Y(x) point in different directions along . More precisely, the corresponding Lie derivatives LXγ(x) and LYγ(x) must have opposite signs (see Figure 3A). The following proposition determines parameter values where this occurs.

Proposition 11. Suppose that α > 0, so that drug has the potential to induce resistance. Also, let the maximally tolerated dosage M satisfy

| (51) |

Then the following hold along :

LXγ < 0,

LYγ < 0 as in Ω,

LYγ > 0 at , and

LYγ is monotonically decreasing as a function of x1.

Thus, contains a segment which is a singular arc. Note that is precisely the region in where LYγ is positive.

Proof. The proof is purely computational. □

Note that if inequality (51) is not satisfied, then singular arcs are not in the domain Ωc.

The geometry of Proposition 11 is illustrated in Figure 4. Thus, assuming α > 0 and M as in (51), singular arcs exist along the segment . Furthermore, the corresponding control has a unique solution us, which may be computed explicitly. Indeed, as the solution must remain on the line , or equivalently, ax1 + bx2 = c, taking the time derivative of this equation yields aẋ1 + bẋ2 = 0, and substituting the expressions (1) we compute us as

Figure 4.

Geometry of vector fields X and Y with α > 0 and M satisfying (51). As in Proposition 11, this can be understood via the corresponding Lie derivatives of γ. Note that near x2 = 0, X, and Y point to opposite sides of L, while at , both X and Y point away from γ > 0. The line is the unique singular arc in Ωc.

| (52) |

where a, b, c are given by (37–39) and x2 and x1 satisfy ax1 + bx2 = c. As discussed previously, x1(t) > 0 for , so this formula is well-defined. Proposition 11 implies that it is possible to simplify Equation (52) as a function of x1 (i.e. as a feedback law) for , for some , but since its value will not be needed, we do not provide its explicit form. Note that the maximal dose M is achieved precisely at where vector field Y is parallel to . Thus, at this , the trajectory must leave the singular arc, and enter the region . As ẋ2 ≥ 0, trajectories must follow in the direction of decreasing x1 (see Figure 4). We summarize these results in the following theorem.

THEOREM 12. If α > 0, and M satisfies (51), a singular arc exists in the (x1, x2) plane as a segment of the line . Along this singular arc, the control is given by Equation (52), where ax1 + bx2 = c. Therefore, in this case the necessary minimum conditions on u* from (25) can be updated as follows:

| (53) |

where I is an open interval. Recall again that this is the optimal control at points interior to Ωc.

Proof. See the discussion immediately preceding Theorem 12. □

In the case α = 0, the line is horizontal, and as x2 is increasing, no segment is admissible in phase space. Thus, the interior controls in this case are bang-bang; for a visualization (see Figure 3B).

THEOREM 13. If α = 0, there are no singular arcs for the optimal time problem presented in section 3. Thus, the interior control structure is bang-bang.

Outside of the singular arc , the control structure is completely determined by (25) and (43). The precise result, utilized later for the optimal synthesis presented in section 5, is stated in the following theorem. We first introduce a convenient (and standard) notation. Let finite words on X and Y denote the concatenation of controls corresponding to vector fields X (u ≡ 0) and Y (u ≡ M), respectively. The order of application is read left-to-right, and an arc appearing in a word may not actually be applied (e.g. XY denotes an X arc followed by a Y arc or a Y arc alone).

THEOREM 14. Consider an extremal lift Γ = ((x, u), λ). Trajectories x remaining entirely in or can have at most one switch point. Furthermore, if , then the corresponding control is of the form YX. Similarly, implies that u = XY. Hence multiple switch points must occur across the singular arc .

Proof. If τ is a switching time, so that Φ(τ) = 0, Equation (43) allows us to calculate as

| (54) |

Thus, in where γ > 0, , and hence Φ must increase through τ. The expression for the control (25) then implies that a transition from a Y-arc to an X-arc occurs at τ (i.e., a YX arc). Furthermore, another switching time cannot occur unless x leaves , since otherwise there would exist a such that which is impossible in . Similarly, only XY-arcs are possible in . □

The structure implied by Theorem 14 is illustrated in Figure 4. Note that inside the sets , and , extremal lifts are precisely characterized. Furthermore, the results of section 4.1 (and particularly Equation 18) yield the characterization on the boundary N. What remains is then to determine the synthesis of these controls to the entire domain Ωc, as well as to determine the local optimality of the singular arc . The latter is addressed in the following section.

4.4. Optimality of Singular Arcs

We begin by proving that the singular arc is extremal, i.e. that it satisfies the necessary conditions presented in section 4.2 (note that it is interior by assumption). This is intuitively clear from Figure 4, since X and Y point to opposite sides along by the definition of .

THEOREM 15. The line segment is a singular arc.

Proof. We find an expression for u = u(x) such that the vector f(x) + u(x)g(x) is tangent to at x, i.e. we find the unique solution to

| (55) |

Note that we can invert (50):

| (56) |

so that . Thus,

Setting the above equal to zero, and solving for u = u(x) yields

| (57) |

As LXγ < 0 and LYγ > 0 on by Proposition 11, we see that 0 < u(x) < M. We must also verify that the associated controlled trajectory (57) is extremal by constructing a corresponding lift. Suppose that x(t) solves

for . Let ϕ ∈ (2)* such that

Let λ(t) solve the corresponding adjoint Equation (24) with initial condition λ(0) = ϕ. Then the extremal lift Γ = ((x, u), λ) is singular if Φ(t) = 〈λ(t), g(x(t))〉 ≡ 0. By construction of u(x), the trajectory remains on on some interval containing zero, and we can compute as [using (34)]

Note that we have used (43) and the fact that γ = 0 by our choice of u. Since Φ(0) = 0 by hypothesis, this implies that Φ(t) ≡ 0, as desired. □

The above then verifies that is a singular arc. Note that an explicit expression for u = u(x) was given in (52), which can be shown to be equivalent to (57).

Having shown that the singular arc is extremal, we now investigate whether it is locally optimal for our time-optimization problem. The singular arc is of intrinsic order k if the first 2k − 1 derivatives of the switching function are independent of u and vanish identically on an interval I, while the 2kth derivative has a linear factor of u. We can compute [this is standard for control-affine systems (8)] that

| (58) |

where adZ is the adjoint endomorphism for a fixed vector field Z:

| (59) |

and powers of this operator are defined as composition. Fix an extremal lift Γ = ((x, u), λ) of a singular arc of order k. The Generalized Legendre-Clebsch condition (also known as the Kelley condition) (Ledzewicz and Schättler, 2012) states that a necessary condition for Γ to satisfy a minimization problem with corresponding Hamiltonian H is that

| (60) |

along the arc. Note that , so that the above is simply the u coefficient of the 2k-th time derivative of the switching function (multiplied by (−1)k). The order of the arc, as well as the Legendre-Clebsch condition, are addressed in Theorem 16.

THEOREM 16. The singular control is of order one. Furthermore, for all times t such that , 〈λ(t), [g, [f, g]](x(t))〉 > 0. Thus, the Legendre-Clebsch condition is violated, and the singular arc is not optimal.

Proof. Along singular arcs we must have , and we can compute these derivatives using iterated Lie brackets as follows:

| (61) |

The final of the above in (61) can be simplified as

| (62) |

which is precisely (58) for k = 1. Order one is then equivalent to being able to solve this equation for u(t). Thus, 〈λ(t), [g, [f, g]](x(t))〉 > 0 will imply that the arc is singular of order one. We directly compute 〈λ(t), [g, [f, g]](x(t))〉 = 〈λ(t), [g, adf(g)](x(t))〉. Using Equation (34) and recalling properties of the singular arc [γ = 0 and the remaining relations in (61), as well as basic “product rule” properties of the Lie bracket], we can show that

| (63) |

Recall that for an extremal lift along the arc ,

| (64) |

The first two of the above follow from , and the third is a consequence of H ≡ 0 [see (22)]. Equations (63) and (64) together imply that

| (65) |

The last equality follows from the representation in (56). As LYγ > 0 and LXγ < 0 along (Proposition 11), 〈λ(t), [g, [f, g]](x(t))〉 > 0, as desired. Furthermore,

| (66) |

| (67) |

showing that (60) is violated (substituting k = 1). Thus, is not optimal. □

Theorem 16 then implies that the singular arc is suboptimal, i.e. that is “fast” with respect to the dynamics. In fact, we can compare times along trajectories using the “clock form,” a one-form on Ω. As one-forms correspond to linear functionals on the tangent space, and f and g are linearly independent, there exists a unique ω ∈ (TΩ)* such that

| (68) |

In fact, we compute it explicitly:

| (69) |

Then, along any controlled trajectory (x, u) defined on [t0, t1], the integral of ω computes the time t1 − t0:

| (70) |

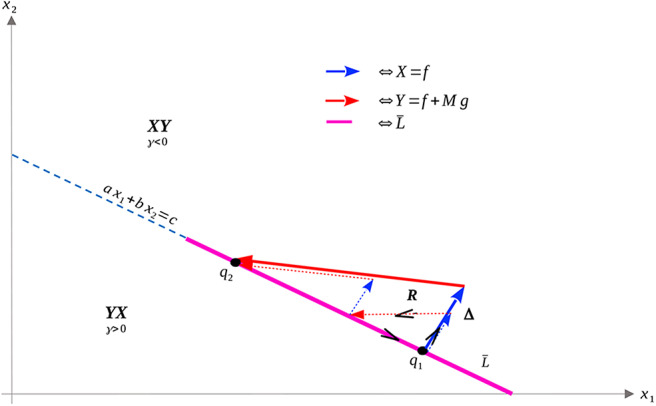

We can then use ω and Stokes' Theorem to compare bang-bang trajectories with those on the singular arc. See Figure 5 below for a visualization of a singular trajectory connecting and the corresponding unique XY trajectory connecting these points in (note that uniqueness is guaranteed as long as q1 and q2 are sufficiently close).

Figure 5.

Both XY and singular trajectories taking q1 to q2.

Let tS denote the time spent along the singular arc, tX the time spent along the X arc, and tY the time spent along the Y arc. Denote by Δ the closed curve traversing the X and Y arcs positively and the singular arc negatively, with R as its interior. As X and Y are positively oriented (they have the same orientation as f and g), Stokes' Theorem yields

| (71) |

Taking the exterior derivative yields the two-form dω see Chapter 2 of (Ledzewicz and Schättler, 2012):

| (72) |

As the determinant is everywhere positive (see the proof of Proposition 8), and R lies entirely in γ < 0, the integral on the right-hand side of (71) is positive, so that we have

| (73) |

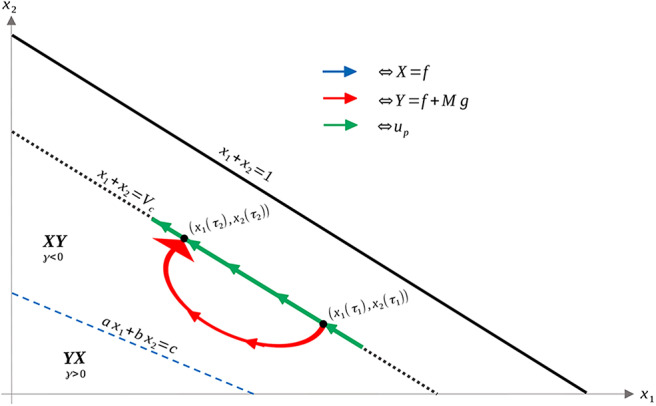

Thus, time taken along the singular arc is shorter than that along the XY trajectory, implying that the singular arc is locally suboptimal for our problem (recall that we want to maximize time). Since local optimality is necessary for global optimality, trajectories should never remain on the singular arc for a measurable set of time points. This reaffirms the results of Theorem 16. A completely analogous statement holds for YX trajectories in the region γ > 0. We can also demonstrate, utilizing the same techniques, that increasing the number of switchings at the singular arc speeds up the trajectory (see Figure 6). This again reinforces Theorem 16, and implies that trajectories should avoid the singular arc to maximize the time spent in Ωc.

Figure 6.

XY (solid) and XYXY (dashed) trajectories taking q1 to q2 in the region γ > 0. The time difference between the two trajectories can again be related to the surface integral in the region R, where γ < 0. The XY trajectory can then be seen to be slower in comparison.

5. Characterization of Optimal Control

The results of sections 4.1, 4.2, 4.3, and 4.4 may now be combined to synthesize the optimal control introduced in section 3.

THEOREM 17. For any α ≥ 0, the optimal control to maximize the time to reach a critical time is a concatenation of bang-bang and path-constraint controls. In fact, the general control structure takes the form

| (74) |

where (YX)n: = (YX)n−1YX for n ∈ , and the order should be interpreted as left to right. Here up is defined in (18).

Proof. Formula (74) is simply a combination of the results presented previously. Note that singular arcs are never (locally) optimal, and hence do not appear in the equation. We also observe that X arcs are not admissible once the boundary N has been obtained, as an X arc always increases V. A Y arc may bring the trajectory back into int(Ωc), but a YX trajectory is no longer admissible, as the switching structure in is XY (Theorem 14).

The only aspect that remains is to show that once N is reached, the only possible trajectories are either up given by (18) or Y, with at most one switching occurring between the two. That is, a local arc of the form upYup is either sub-optimal or non-feasible (equivalently, outside of the control set U). Suppose that such an arc is feasible, i.e., that for all such points in phase space, 0 ≤ up ≤ M [recall that up is defined via feedback in (18)]. Denote by τ1 and τ2 the times at which the switch onto and off of Y occurs, respectively. Since up decreases with S, feasibility implies that up(t) ≤ M for all t ∈ [τ1, τ2]. Thus, we can consider the alternate feasible trajectory which remains on N between the points (S(τ1), R(τ1)) and (S(τ2), R(τ2)); see Figure 7 for an illustration. Call τ the time for such a trajectory. Then, using the clock-form ω and the positively-oriented curve Δ which follows N first and Y (in the reverse direction) second, we obtain similarly to (71),

Figure 7.

Comparison of upYup arc and an arc that remains on N (hence u ≡ up) between the points [S(τ1), R(τ1)] and [S(τ2), R(τ2)], assuming that up remains feasible (that is, up ∈ [0, M]). Note that γ < 0 in the area of interest, and that a switching of a Y to an X arc is prohibited via the Maximum Principle. Thus, the only possibility is the curve illustrated, which leaves the boundary N for a Y arc before up becomes infeasible.

| (75) |

where R: = int(Δ). Recalling that γ < 0 in R (see Figure 4), the previous equation implies that

| (76) |

i.e., a longer amount of time is achieved by remaining on the boundary N. Hence the arc upYup is sub-optimal if it is feasible, as desired.

The previous argument has one subtle aspect, as we used results from the Maximum Principle on the boundary set N, where technically it does not apply. However, the above still remains true, since we may approximate the boundary line V = Vc with a curve interior to Ωc which remains feasible. By continuity, the time along such a curve can be made arbitrarily close to τ, and hence is still greater than τ2 − τ1, implying that upYup is sub-optimal. □

Note that in Theorem 17, the switchings must occur across the singular arc , if it exists (recall that it is not admissible if α = 0). The control up is determined along the boundary of Ωc, and provides the synthesis between interior and boundary controls.

We finally include a technical result, which eliminates the optimality of the constrained (boundary) control up in certain cases.

Proposition 18. Assume that the maximal dose M is as in Proposition 2:

| (77) |

If the optimal control becomes maximal in (i.e., u = M in this region), then the control cannot take the boundary value up (Equation 18) on an interval. Equivalently, an optimal control cannot end in the form Yup.

Proof. Note that if u* = Y and reaches N at the point x, then the Lie derivative LYV(x) must satisfy

| (78) |

as V must be increasing along the Y vector field, since it reaches N. But by Proposition 2, this implies that

Proposition 3 then implies that up is unfeasible in this region, completing the proof. □

6. Numerical Results

In this section, we provide numerical examples of the analytical results obtained in previous sections. All figures in this section were obtained using the GPOPS-II MATLAB software (Patterson and Rao, 2014). Parameters and initial values are given in Table 1 shown below, unless stated otherwise.

Table 1.

Parameter values and initial conditions used throughout section 6, unless stated otherwise.

| Parameters | Interpretation | Value |

|---|---|---|

| Initial sensitive population | 10−2 | |

| Initial resistant population | 0 | |

| α | Induced resistance rate due to the presence of the drug | 10−2 |

| d | Drug cytotoxicity parameter | 1 |

| ϵ | Drug-independent resistance rate | 10−6 |

| pr | Resistant growth fraction | 0.2 |

| t0 | Initial time | 0 |

| M | Maximum drug dosage | 5 |

| Vc | Tumor volume defining treatment failure | 0.9 |

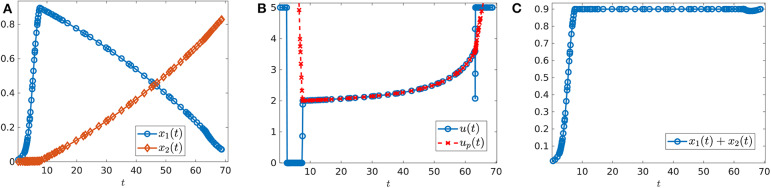

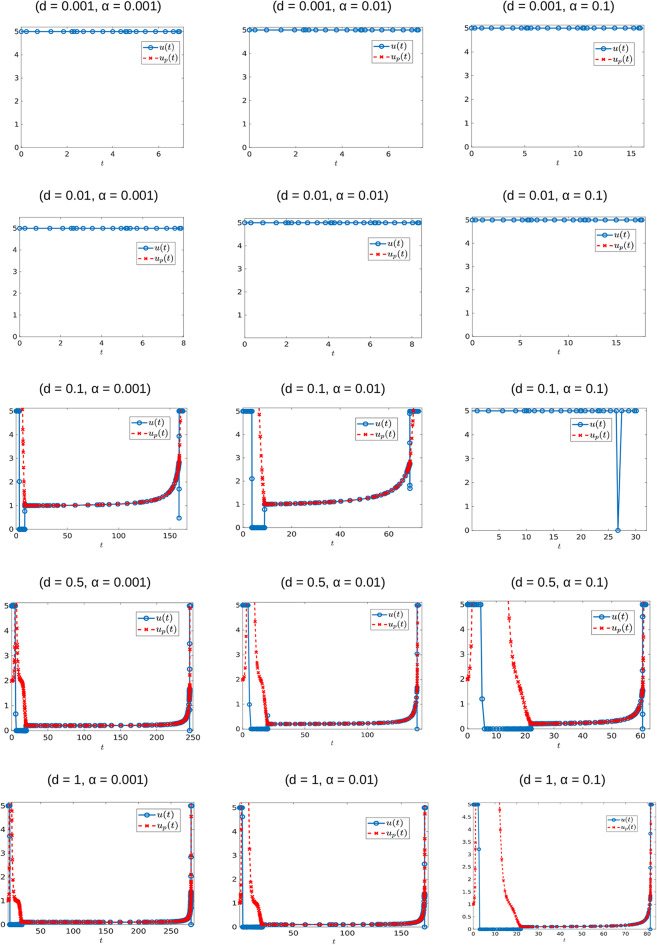

Theorem 17 characterizes the qualitative form of the optimal control:

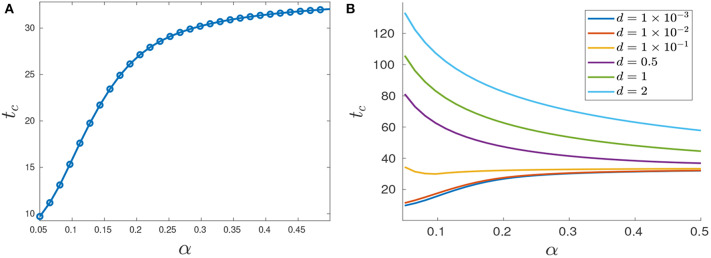

| (79) |

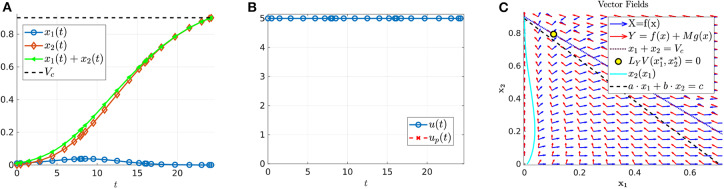

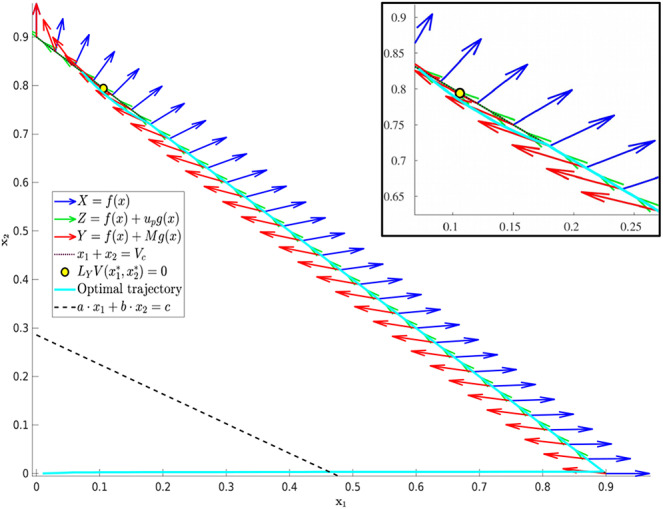

where n is the number of interior switches, up the sliding control (18), and X and Y denote the lower and upper corner controls u = 0 and u = M, respectively. We begin by computing sample controls (see Figures 8, 10). Note that the optimal control in Figure 8B takes the form YXupY, while that of Figure 10B is an upper corner control Y. The phase plane dynamics corresponding to Figure 8 are also provided in Figure 9. In both cases the cytotoxic parameter was fixed at d = 0.05, while the induced rate of resistance α varies between α = 0.005 in Figure 8 and α = 0.1 in Figure 10. Note that for the smaller value of α (Figure 8), a longer period of treatment success is observed, as the time to treatment failure is approximately 70 time units; compare this with tc = 24.2 in Figure 10. This result is intuitive, as the treatment less likely to induce resistance is able to be more effective when optimally applied.

Figure 8.

Numerical solution of the optimal control problem with d = 0.05, α = 0.005, and the remainder of parameters as in Table 1. (A) Sensitive (x1) and resistant (x2) temporal dynamics. (B) Control structure of form YXupY. (C) Volume dynamics. Note that the trajectory remains on the line V = Vc for most times, with corresponding control u = up.

Figure 10.

Numerical solution of optimal control problem with d = 0.05, α = 0.1, and the remainder of parameters as in Table 1. (A) Sensitive (x1), resistant (x2), and volume (x1 + x2) temporal dynamics. (B) Control structure of form Y, i.e., an entirely upper corner control. (C) Phase plane dynamics, plotted with relevant vector fields.

Figure 9.

Phase plane corresponding to Figure 8. Trajectory which optimal control is of the form YXupY with parameter values as in Table 1 except for α = 0.005 and d = 0.05. The yellow dot in the figure represents the point at which Y(x) is tangent to the sliding surface. Here, . As proven in Proposition 2, for points on the line N, the tumor volume will decrease along the Y(x) direction if x1 > 0.1059 and will increase for x1 < 0.1059.

The generality of the previous statement is investigated in Table 2 and Figures 11, 12. The computed optimal times tc suggest that when the cytotoxicity of the drug (d) is small, higher induction rates (α) actually increase treatment efficacy. For example, for d = 0.001 treatment response increases as α increases (Figure 12A). This could be explained from the fact that sensitive cells have a higher growth rate than resistant cells (assumption pr < 1). Thus, when the chemotherapeutic drug has a low effectiveness (small d) a larger α value actually helps to reduce the sensitive population size, and therefore extends the time tc at which the tumor volume exceeds its critical value Vc.

Table 2.

Optimal time tc for each of the computed controls appearing in Figure 11.

| d | α = 0.001 | α = 0.01 | α = 0.1 |

|---|---|---|---|

| 0.001 | 6.91 | 7.28 | 15.83 |

| 0.01 | 7.87 | 8.34 | 17.66 |

| 0.1 | 162.53 | 72.14 | 30.09 |

| 0.5 | 246.56 | 140.93 | 61.81 |

| 1 | 281.25 | 172.26 | 82.13 |

Figure 11.

Optimal control structures for different α and d values. The blue curve is the computed optimal control, while the red curve is the feedback control along on the boundary of N, which may or may not be optimal or even feasible.

Figure 12.

Variation in tc as a function of α. (A) Treatment success time tc for d = 0.001 with varying α values. (B) Functional dependence of tc on α for different d parameters. Note that for small d, tc increases as a function of α, but that this trend is reversed if d is further increased.

The situation is reversed when we consider larger values of d because in this case it would take more time for the tumor to grow to its critical volume Vc if the drug effectiveness is large enough; see for example the row d = 0.5 in Table 2, and the corresponding purple curve in Figure 12B. Figure 12B provides the critical time as a function of α for multiple cytotoxicities d; note the qualitative change in tc as d increases.

Examining Figure 11 and Table 2 also suggests that as d increases, the feedback control up becomes optimal on an interval [t1, t2] with 0 < t1 < t2 < tc. More numerical results are provided in section 7.3.

7. Additional Results

7.1. Structural Identifiability

For completeness, we discuss the identifiability of system (1). As our focus in this work has been on control structures based on the presence of drug-induced resistance, we rely on the ability to determine whether, and to what degree, the specific chemotherapeutic treatment is generating resistance.

Ideally, we envision a clinical scenario in which cancer cells from a patient are cultured in an ex vivo assay (for example, see Silva et al., 2017) prior to treatment. Parameter values are then calculated from treatment response dynamics in the assay, and an optimal therapy regime is implemented based on the theoretical work described below. Thus, identifying patient-specific model parameters, specially the induced-resistance rate α, is a necessary step in determining the control structures to apply. In this section, we address this issue, and prove that all parameters are structurally identifiable, as well as demonstrate a specific set of controls that may be utilized to determine α. A self-contained discussion is presented; for more details on theoretical aspects, see Sontag (2017) and the references therein. Other recent works related to identifiability in the biological sciences (as well as practical identifiability) can be found in Eisenberg and Jain (2017) and Villaverde et al. (2016).

We first formulate our dynamical system, and specify the input and output variables. The treatment u(t) is the sole input. Furthermore, we assume that the only clinically observable output is the net tumor volume V(t):

| (80) |

That is, we do not assume real-time measurements of the individual sensitive and resistant sub-populations. We note that in some instances, such measurements may be possible; however for a general chemotherapy, the precise resistance mechanism may be unknown a priori, and hence no biomarker with the ability to differentiate cell types may be available.

Treatment is initiated at time t = 0, at which we assume an entirely sensitive population:

| (81) |

Here , so that (x1(t), x2(t)) ∈ Ω for all t ≥ 0. We note that x2(0) = 0 is not restrictive, and similar results may derived under the more general assumption 0 ≤ x2(0) < 1. The condition x2(0) = 0 is utilized both for computational simplicity and since x2(0) is generally small (assuming a non-zero detection time, and small drug-independent resistance parameter ϵ; see Greene et al., 2019 for a discussion).

As formulated in section 7.2.1, the above then allows us to formulate our system (1) in input/output form, where the input u(t) appears affinely:

| (82) |

where (as defined on Equations (9) and (10)) f and g are

| (83) |

| (84) |

and x(t) = (x1(t), x2(t)). As is standard in control theory, the output is denoted by the variable y, which in this work corresponds to the total tumor volume:

| (85) |

Note that x1(t), x2(t) depend on both the input u(t) and parameters p. A system in form (82) is said to be uniquely structurally identifiable if the map (u(t), p) ↦ (u(t), y(t, p)) is injective almost everywhere (Meshkat and Seth, 2014; Eisenberg and Jain, 2017), where p is the vector of parameters to be identified. In this work,

| (86) |

Local identifiability and non-identifiability correspond to the map being finite-to-one and infinite-to-one, respectively. Our objective is then to demonstrate unique structural identifiability for model system (82) [or equivalently (1)], and hence recover all parameter values p from only measurements of the tumor volume y. We also note that the notion of identifiability is closely related to that of observability; for details Anguelova (2004); Sontag (1979) are good references.

To analyze identifiability, we utilize results appearing in, for example (Hermann and Krener, 1977; Wang and Sontag, 1989; Sontag and Wang, 1991), and hence frame the issue from a differential-geometric perspective. Our hypothesis is that perfect (hence noise-free) input-output data is available and in particular, for differentiable controls, that we can compute y and its derivatives. We thus, for example, make measurements of

| (87) |

for appropriately chosen inputs, and relate their values to the unknown parameter values p. If there exist inputs u(t) such that the above system of equations may be solved for p, the system is identifiable. The right-hand sides of (87) may be computed in terms of the Lie derivatives of the vector fields f and g in system (82). We recall the definition of Lie differentiation LXH of a Cω function H by a Cω (i.e. real-analytic) vector field X:

| (88) |

Here the domain of both X and H is the first-quadrant triangular region Ω, seen as a subset of the plane, and the vector fields and output function are Cω on an open set containing Ω (in fact, they are given by polynomials, so they extend as analytic functions to the entire plane). Iterated Lie derivatives are well-defined, and should be interpreted as function composition, so that for example LYLXH = LY(LXH), and .

More formally, let us introduce the observable quantities corresponding to the zero-time derivatives of the output y = h(x),

| (89) |

where U ∈ Rk is the value of the control u(t) (without loss of generality, a polynomial of degree k − 1) and its derivatives evaluated at t = 0: U = (u(0), u′(0), ..., u(k−1)(0)). Here k ≥ 0, indicating that the kth-order derivative Y may expressed as a polynomial in the components of U (Sontag and Wang, 1991). The initial conditions x0 appear due to evaluation at t = 0. The observation space is then defined as the span of the elements Y(x0, U):

| (90) |

Conversely, we also define span of iterated Lie derivatives with respect to the output h and vector fields f(x) and g(x):

| (91) |

Wang and Sontag (1989) proved that F1 = F2, so that the set of “elementary observables” may be considered as the set of all iterated Lie derivatives F2. Hence, identifiability may be formulated in terms of the reconstruction of parameters p from elements in F2. Parameters p are then identifiable if the map

| (92) |

is one-to-one. For the remainder of this section, we investigate the mapping defined in (92).

Computing the Lie derivatives and recalling that x0 = (S0, 0) we can recursively determine the parameters p:

| (93) |

Since F1 = F2, all of the above Lie derivatives are observable via appropriate treatment protocols. For an explicit set of controls and corresponding relations to measurable quantities [elements of the form (89)], see Greene et al. (2019). Thus, we conclude that all parameters in system (1) are identifiable, which allows us to investigate optimal therapies dependent upon a priori knowledge of the drug-induced resistance rate α.

7.2. Existence Results

For the problem presented in section 3, we are going to verify that the supremum of times tc(u) for [with tc(u) as defined in Equation (6)] is obtained by some , i.e., that an optimal control exists. This involves two distinct steps: (1) proving that the supremum is finite, and (2) that it is obtained by at least one admissible control. The following two subsections verify these claims.

7.2.1. Finiteness of the Supremum

We prove that

| (94) |

for the control system introduced in section 3. The result depends crucially on (3), and the fact that the globally asymptotically stable state (0, 1) is disjoint from the dynamic constraint x ∈ Ωc (see Equation (13)). That is, Vc < 1 is necessary for the following subsequent result to hold, and generally an optimal control will not exist if Vc = 1 or if the path constraint (13) is removed.

Our control system has the form

| (95) |

where x ∈ Ω, , and the vector fields f, g:Ω → 2 are continuously differentiable. Note that the above vector field is affine (and thus continuous) in the control u. Fix the initial condition

| (96) |

with x0 ∈ Ω. Recall that all solutions of (95) and (96) approach the fixed point . That is, for all ,

| (97) |

Note that we explicitly denote the dependence of the trajectory on the control u, and the above point is independent of the control u.

For any compact subset E of Ω such that , we associate to each control (and hence to its corresponding trajectory) a time tE(u) such that

| (98) |

The above is well-defined (as a maximum) for each control u, since by assumption x0 ∈ E and each trajectory asymptotically approaches , xu is continuous, and E is compact.

THEOREM 19. Define

| (99) |

With the above construction, T* is finite.

Proof. Consider the sets K, V ⊂ 2, with V being an open neighborhood of the steady state and K a compact set in 2 such that

By contradiction, suppose that T* is not finite, so we can find a sequence of controls in satisfying

| (100) |

where d∞ denotes the supremum metric and, for each k ∈ , x(t, vk) is the solution of the IVP:

| (101) |

Our aim is to find a control such that x(t, u), solution of system (101), does not enter K for any t > 0. Recall that by the Banach-Alaoglu theorem, the ball

| (102) |

is a compact set on the weak* topology and metrizable. Thus, the sequence must have a weak*−convergent subsequence which converges to a control u ∈ L∞([0, ∞)). In other words, for every ψ ∈ L1([0, ∞))

| (103) |

where μ is the usual Lebesgue measure. This means that the sequence converges to u with respect to the weak* topology on L∞([0, ∞)) as the dual of L1([0, ∞)).

We next prove that for all t ∈ [tk−1, tk] and all k ∈ . In order to do so define

for any tk−1 ∈ [0, ∞), where x solves the IVP

| (104) |

Notice that the fact that the equilibrium (0, 1) is globally asymptotically stable on implies that xk−1 is well-defined for any k ∈ .

The integral form of (104) is given by

| (105) |

With the help of the tk's from (100) and assuming (without loss of generality) that tk increases as k goes to infinity, we write the set [0, ∞) as the countable union of finite closed intervals:

Let wj, k and w denote the functions uj and u restricted to the interval [tk−1, tk], respectively. Thus, the sequence converges weakly* to w on [tk−1, tk]:

| (106) |

| (107) |

| (108) |

| (109) |

Since this result is independent of k, this implies that

| (110) |

The corresponding trajectory x(t, u) thus never enters K, contradicting the the global stability of . Hence, T* must be finite, as desired. □

For the system and control problem defined in sections 2 and 3, the above theorem implies that is finite by taking E = Ωc.

7.2.2. Supremum as a Maximum

Here we provide a general proof for the existence of optimal controls for systems of the form (95), assuming the set of maximal times is bounded above, which we have proven for our system in section 7.2.1. For convenience, we make the proof as self-contained as possible (one well-known result of Filippov will be cited), and state the results in generality which we later apply to the model of induced resistance. Arguments are adapted primarily from the textbook of Bressan and Piccoli (2007).

Consider again general control systems as in section 7.2.1. Solutions (or trajectories) of (95) will be defined as absolutely continuous functions for which a control exists such that (x(t), u(t)) satisfy (95) a.e., in their (common) domain [a, b].

It is easier and classical to formulate existence with respect to differential inclusions. That is, define the multi-function

| (111) |

Thus, the control system (95) is clearly related to the inclusion

| (112) |

The following theorem (see Filippov, 1967 for a proof) makes this relationship precise.

THEOREM 20. An absolutely continuous function x:[a, b] ↦ 2 is a solution of (95) if and only if it satisfies (112) almost everywhere.

We first prove a lemma demonstrating that the set of trajectories is closed with respect to the sup-norm ||·||∞ if all the sets of velocities F(x) are convex.

LEMMA 21. Let xk be a sequence of solutions of (95) converging to x uniformly on [0, T]. If the graph of (t, x(t)) is entirely contained in Ω, and all the sets F(x) are convex, then x is also a solution of (95).

Proof. By the assumptions on f, g, the sets F(x) are uniformly bounded as (t, x) range in a compact domain, so that xk are uniformly Lipschitz, and hence x is Lipschitz as the uniform limit. Thus x is differentiable a.e., and by Theorem 20, it is enough to show that

| (113) |

for all t such that the derivative exists.

Assume not, i.e., that the derivative exists at some τ, but ẋ(τ)∉F(x(τ)). Since F(x(τ)) is compact and convex, and ẋ(τ) is closed, the Hyperplane Separation Theorem implies that there exists a hyperplane separating F(x(τ)) and ẋ(τ). That is, there exists an ϵ > 0 and a (without loss of generality) unit-vector p ∈ 2 such that

| (114) |

for all y ∈ F(x(τ)). By continuity, there exists δ > 0 such that for |x′ − x(τ)| ≤ δ

| (115) |

for all y ∈ F(x′). Since x is differentiable at τ, we can choose τ′ > τ such that

| (116) |

for all t ∈ [τ, τ′]. Equation (116) and uniform convergence then implies that, as p is a unit vector,

| (117) |

On the other hand, since ẋ(t) ∈ F(x′) for t ∈ [τ, τ′], Equation (115) implies that for k sufficiently large,

| (118) |

Clearly, (117) and (118) contradict one another, so that (113) must be true, as desired. □

We now restate the optimal control problem associated to (95). Let S denotes the set of admissible terminal conditions, S ⊂ × 2, and ϕ: × 2 ↦ a cost function. We would like to maximize ϕ(T, x(T)) over admissible controls with initial and terminal constraints:

| (119) |

We now state sufficient conditions for such an optimal control to exist.

THEOREM 22. Consider the control system (95) and corresponding optimal control problem (119). Assume the following:

The objective ϕ is continuous.

The sets of velocities F(x) are convex.

The trajectories x remain uniformly bounded.

The target set S is closed.

A trajectory satisfying the constraints in (119) exists.

S is contained in some strip [0, T] × 2, i.e. the set of final times (for free-endpoint problems) can be uniformly bounded.

If the above items are all satisfied, an optimal control exists.

Proof. By assumption, there is at least one admissible trajectory reaching the target set S. Thus, we can construct a sequence of controls uk:[0, Tk] ↦ U whose corresponding trajectories xk satisfy

| (120) |

Since S ⊂ [0, T] × n, we know that Tk ≤ T for all k. Each function xk can then be extended to the entire interval [0, T] by setting xk(t) = xk(Tk) for t ∈ [Tk, T].

The sequence xk is uniformly Lipschitz continuous, as f is uniformly bounded on bounded sets. This then implies equicontinuity of . By the Arzela-Ascoli Theorem, there exists a subsequence xnk such that Tnk → T*, T* ≤ T, and xnk → x* uniformly on [0, T*].

Lemma 21 implies that x* is admissible, so that there exists a control u*:[0, T*] ↦ U such that

| (121) |

for almost all t ∈ [0, T*]. Equations (120) imply that

| (122) |

Note that the second of (122) relies on S being closed. Continuity of ϕ and (120) implies that

| (123) |

Thus, u* is optimal, as desired. □

For the model of drug-induced resistance, the control set U is the compact set U = [0, M], and for such control-affine systems, convexity of F(x) is implied by the convexity of U. Existence of a trajectory satisfying the constraints is clear; for example, take u(t) ≡ 0. Our objective is to maximize the time to not escape the set N. Note that N is a closed subset of 2, and that

| (124) |

is continuous. Lastly, we have seen that all solutions remain in the closure , so that |x(t)| ≤ 1 for all and hence solutions are uniformly bounded. Existence is then reduced to Item 6 in the previous theorem. Since the supremum of time t was shown to be finite, Theorem 22 together with Theorem 19 imply that the optimal control for the problem presented in section 3 exists.

7.3. Further Numerical Experiments

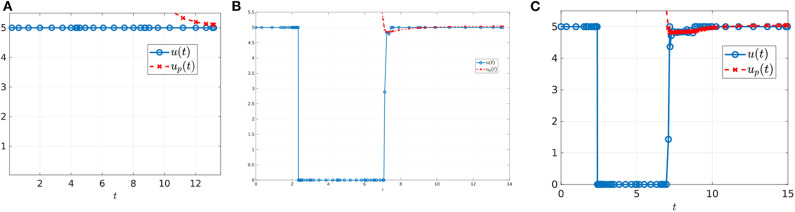

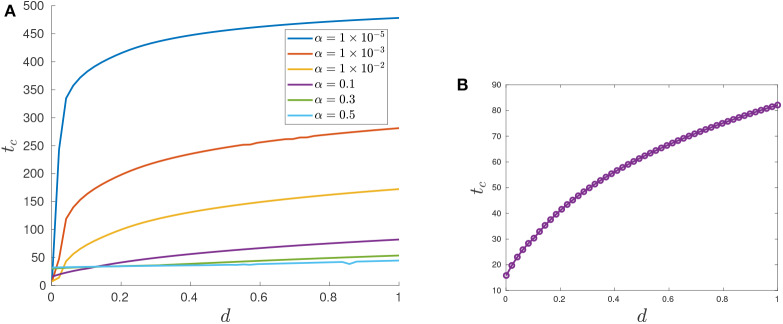

In this subsection, we present further numerical experiments (see section 6). Specifically, we study how the values of the relative resistant growth rate and critical volume influence the control structure. We also consider a regularized objective, which suggests that our numerical methods are converging to (at least local) solutions of the optimal control problem.

We first investigate the control structure and treatment outcome as a function of d for a fixed α; these results are presented in Figures 13, 14. Here α = 0.005 is fixed and d is varied on the interval [0.001, 0.1]. Figure 13 presents three of these controls; although none of the controls is of the form YXY, the figure suggests that there may exist a d* ∈ (0.02062, 0.0207959) where the solution trajectory may intersect the boundary line N only at one point and subsequently switches into a Y arc, thus providing the existence of a YXY control. Figure 14 suggests that increasing d for a fixed α increases the overall effectiveness of the treatment for all values of α, and that decreasing the induction rate α allows for longer tumor control. However, for small values of d, increasing α may provide a better treatment outcome (see, for example, the intersection of the yellow and purple curves in Figure 14).

Figure 13.

Computed optimal controls for α = 0.005 and (A) d = 0.0206, (B) d = 0.020624489795918, and (C) d = 0.207959. Note that the control in (A) takes the form Y, while that in (B,C) is of the form YXup.

Figure 14.

Variation in tc as a function of d. (A) tc response for varying d values. Note that treatment efficacy generally increases with increasing d. (B) α = 0.1.

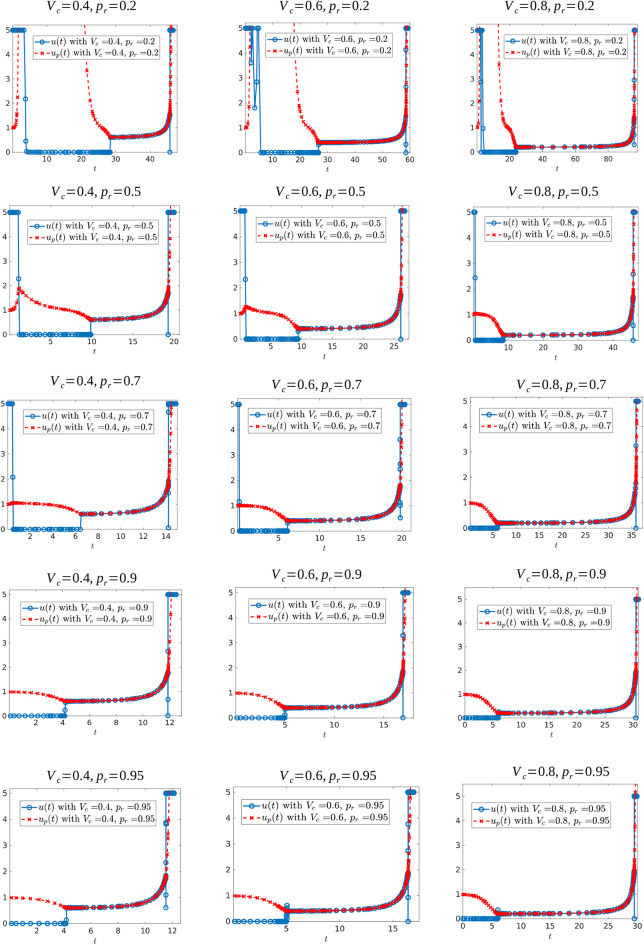

We also investigated how the shape of the optimal control changes for different values of the resistant growth fraction (pr) and/or the critical tumor volume (Vc). We run several simulations for Vc ∈ {0.2, 0.25, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.85, 0.9} and pr ∈ {0.2, 0.3, 0.5, 0.7, 0.85, 0.9, 0.95, 0.98, 0.99}. We found that when the reproduction rate of resistant cells is close to the reproduction rate of sensitive cells (pr near 1), the best strategy is to not give any drug at the beginning of treatment. This is perhaps to prolong the appearance of fast-growing resistance cells which cannot be eliminated with treatment. A representative set of the controls for these simulations are shown in Figure 15.

Figure 15.

Optimal control structures for different Vc and pr values. The blue curve is the computed optimal control, while the red curve is the feedback control along on the boundary of N, which may or may not be optimal or even feasible.

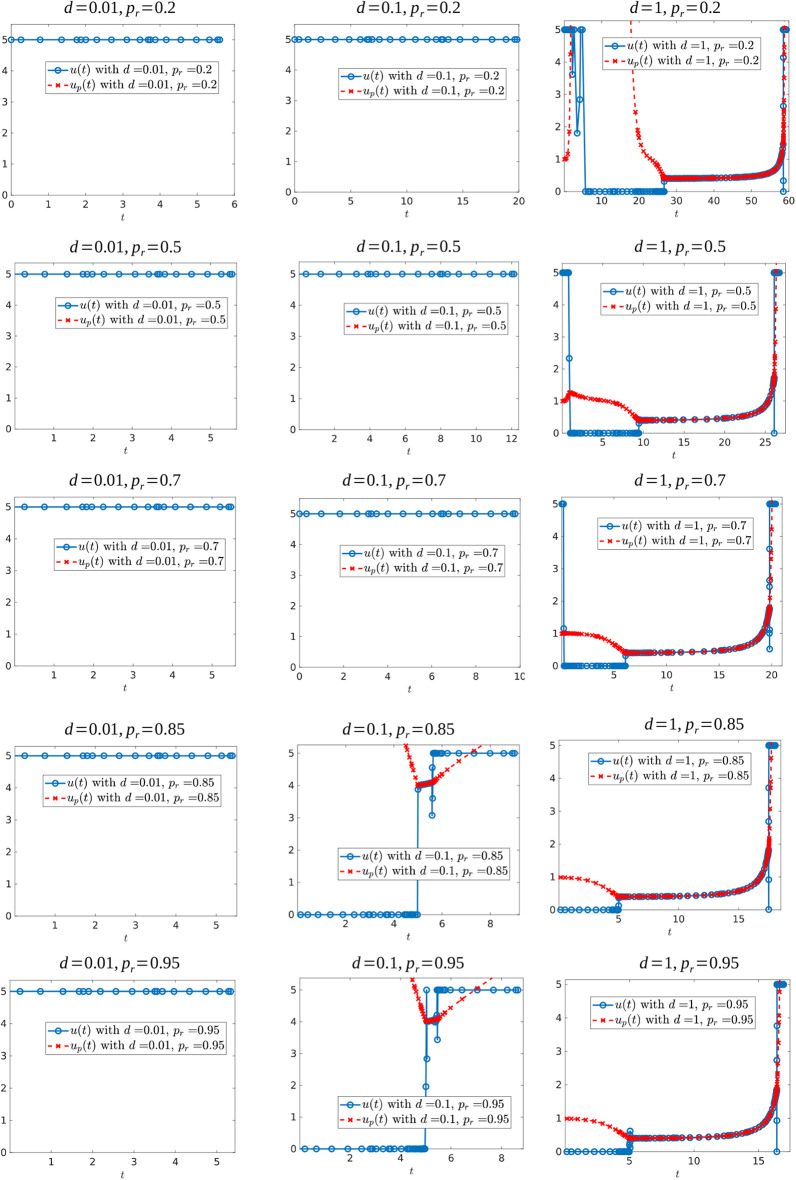

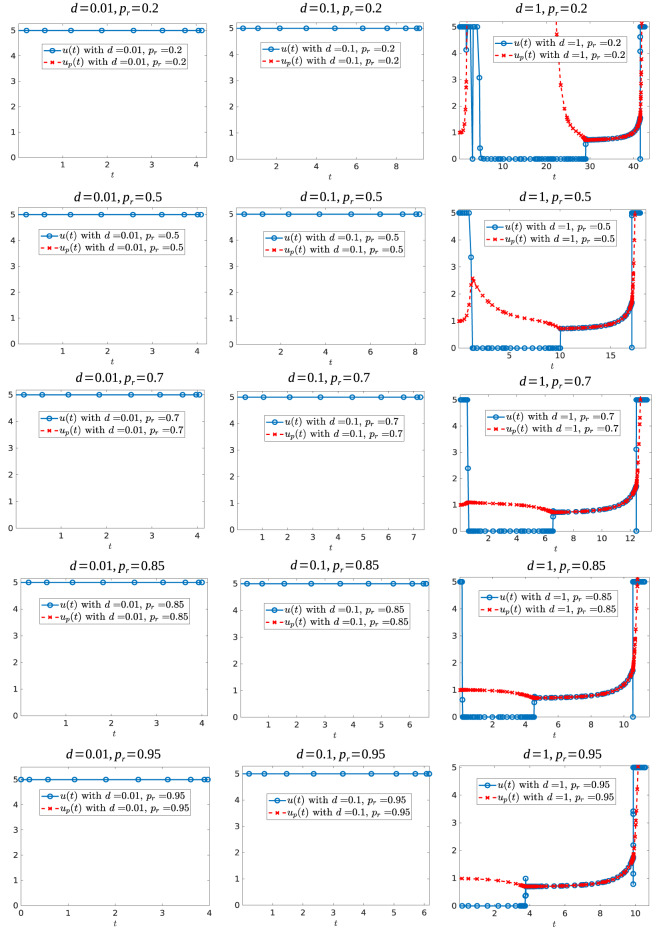

We further simulated the following parameter sets: Vc ∈ {0.3, 0.6}, d ∈ {0.01, 0.05, 0.1, 0.5, 0.75, 1} and pr ∈ {0.2, 0.3, 0.5, 0.7, 0.85, 0.9, 0.95, 0.98, 0.99}. Figure 16 shows some of the controls for these simulations for the case when Vc = 0.6, while Figure 17 shows some of the controls for the case Vc = 0.3. In both figures, we observe that independently of the value of resistant growth rate pr, if the chemotherapeutic drug has a low effectiveness (d small) then the best strategy is to give the maximum possible drug dosage during treatment. However, when d increases past d = 0.1, the control structure changes qualitatively. When Vc = 0.6 and the resistant reproduction rate is close to the reproduction rate of sensitive cells, the best strategy is to start with no drug treatment while for case Vc = 0.3 (independently of the value of pr) the best strategy is to give the maximum drug dosage from the start.

Figure 16.

Optimal control structures for Vc = 0.6, and different d and pr values. The blue curve is the computed optimal control, while the red curve is the feedback control along on the boundary of N, which may or may not be optimal or even feasible.

Figure 17.

Optimal control structures for Vc = 0.3, and different d and pr values. The blue curve is the computed optimal control, while the red curve is the feedback control along on the boundary of N, which may or may not be optimal or even feasible.

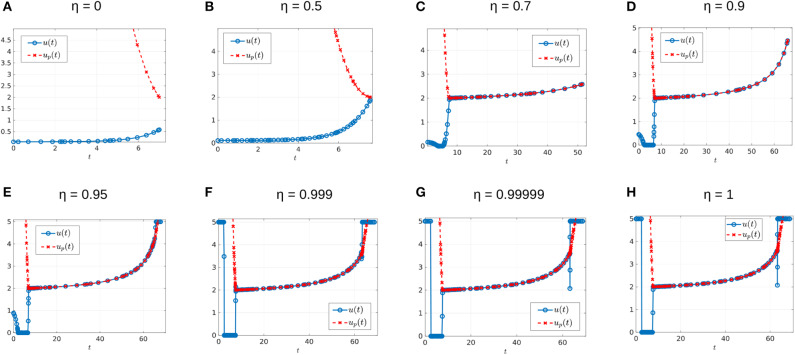

Before ending this section, we would like to mention that to verify the performance of the numerical software, we approached the original problem by a sequence of regularized problems, which is done by adding a quadratic term to the Lagrangian. More precisely, we considered the perturbed performance index:

| (125) |

Notice that Equation (125) represents a family of performance indexes parameterized by η. The original performance index corresponds to η = 1. Furthermore, for η ≠ 1 the optimal control problem is regular and solvers such as GPOPS-II (used here) or SNOPT should provide accurate solutions. Thus, to test the accuracy to the case η = 1, we investigated the corresponding control structure in the limit η → 1. An example of different controls, for η values 0, 0.5, 0.7, 0.9, 0.95, 0.999, 0.99999, and 1, are shown on Figure 18. For each case we obtained different relative errors: the largest relative error of 4.0338 × 10−7 occurs for η = 0, with the remaining values of η having smaller relative errors. From the values η = 0.95, η = 0.999 and η = 0.99999 in Figure 18 we can see that as η → 1 the computed control approaches the solution to the original problem (case η = 1).

Figure 18.

Different perturbed controls for α = 0.005 and d = 0.05. Here, from (A–H), the value of η is 0, 0.5, 0.7, 0.9, 0.95, 0.999, 0.99999, and 1, respectively. The maximum relative error is of 4.0338 × 10−7 for figure η = 0, the remaining figures have a maximum relative error of 5.5727 × 10−7 or smaller.

8. Conclusions

In this work, we have provided a rigorous analysis of the optimal control problem introduced in Greene et al. (2018a). That is, we have formally applied optimal control theory techniques to understand treatment strategies related to a model of induced drug resistance in cancer chemotherapy introduced in Greene et al. (2019). Although the model is relatively simple, it has recently been found to be highly successful in matching experimental data (Gevertz et al., 2019; Johnson et al., 2020), which we believe justifies the careful analysis presented here. An optimal control problem is then presented which maximizes a specific treatments therapy window. A formal analysis of the optimal control structure is performed utilizing the Pontryagin Maximum Principle and differential-geometric techniques. Optimal treatment strategies are realized as a combination of bang-bang and path-constrained arcs, and singular controls are proved to be sub-optimal. Numerical results are presented which verify our theoretical results, and demonstrate interesting and non-intuitive treatment strategies. We have also shown that a drug's level of resistance induction is identifiable, thus allowing for the possibility of designing therapies based on individual patient-drug interactions (see section 7.1).

Under the assumption that sensitive cells have a higher growth rate than resistant cells, our results (section 6) indicate that when using a chemotherapeutic drug with low cytotoxicity, the time at which the tumor volume exceeds its critical value tc would be larger when the transition rate of the drug is high (see for example Table 2, on cases d = 0.001 and d = 0.01, as α has larger values the end time tc becomes larger). The situation is reversed when we consider larger values of drug effectiveness because in this case it would take more time for the tumor to grow to its critical volume whenever the drug effectiveness is large enough. Also, our simulations indicate that it is optimal to apply the maximal dosage M subsequent to sliding along the boundary V = Vc (e.g., Figure 9), prior to treatment failure.

Clearly, further analysis is required in order to understand this phenomenon, and its implications for clinical scenarios. Although our model considers only an idealized scenario where resistance is unavoidable, we see that induced resistance dramatically alters therapy outcome, which underscores the importance of understanding its role in both cancer dynamics and designing chemotherapy regimes.

Other questions remain open for future work:

♢ Several studies indicate that drug-tolerance is a phenotypic property that appears transiently under the presence of the drug (Goldman et al., 2015). A next step to this research is to incorporate a reverse transition rate (from resistant to sensitive cells) that represents this phenotype-switching (see Figure 19).

♢ For controls where the trajectory remains on the boundary V = Vc (up), the feedback control is optimal during a time interval [t1, t2] with 0 ≤ t1 < t2 < tc. It remains to understand the point of entry [x1(t1), x2(t1)] and exit [x1(t2), x2(t2)] (Figure 20A). What is the significance of the times t1 and t2 with respect to parameter values?

♢ Do there exist conditions, once the trajectory reaches Vc, under which the optimal trajectory no longer slides? Is it possible that at the time t* the point [x1(t*), x2(t*)] is a contact point (Figure 20B)? Some numerical results suggest that such a contact point may exist and give rise to a YXY control structure (Figure 13).

♢ We have shown that an optimal control can switch at most once in each of the regions and . Numerically we did not observe any bang-bang controls of the form YXY, although its existence was strongly suggested. The existence of a bang-bang junction in is therefore of interest.

♢ For all examples plotted in Figure 11 with d ≥ 0.1, the entry time occurs approximately at the same value t1 = 20.03. Is this a coincidence? We would like to understand the dependence of the entry time t1 and on parameters α, d, pr, M, and/or ϵ.

♢ We would like to extend models to include multiple, possibly non-cross resistant, cytotoxic agents. Indeed, clinical practice generally includes multiple agents applied concurrently and sequentially, and we plan on investigating strategies when different types of drugs may be applied. For example, what control strategies arise when a targeted therapy exists which targets the resistant sub-population? What order should the agents be applied, and for how long? Are intermediate doses now optimal? Mathematically, all of these questions may be studied, and the results may be clinically relevant.

Figure 19.

Visualization of model that includes a reverse phenotype transition from resistant to sensitive. x1 denotes the sensitive cancerous cell population, yi the drug-induced resistant cancerous cell population, and ys the non-drug-induced resistant cell population.

Figure 20.

(A) Example of an arc with feedback control with entry point [x1(t1), x2(t1)] an exit point [x1(t2), x2(t2)] the exit point (B) Example of an arc that does not slides but reaches the boundary V = Vc at the contact point (x1(t*), x2(t*)).

Author Contributions

All authors listed have made a substantial, direct and intellectual contribution to the work, and approved it for publication.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Dr. Anil Rao for technical suggestions regarding the optimization formulation and the use of GPOPS-II. This manuscript has been released as a pre-print at bioRxiv (Greene et al., 2018b).

Footnotes

Funding. This research was supported in part by NSF grants 1716623 and 1849588.

References

- Anguelova M. (2004). Nonlinear Observability and Identifiability: General Theory and a Case Study of a Kinetic Model for S. cerevisiae. Chalmers University of Technology. [Google Scholar]

- Bressan A., Piccoli B. (2007). Introduction to mathematical control theory. AIMS Ser. Appl. Math. Philadelphia [Google Scholar]

- Brimacombe K. R., Hall M. D., Auld D. S., Inglese J., Austin C. P., Gottesman M. M., et al. (2009). A dual-fluorescence high-throughput cell line system for probing multidrug resistance. Assay Drug Dev. Technol. 7, 233–249. 10.1089/adt.2008.165 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Doherty M., Smigiel J., Junk D., Jackson M. (2016). Cancer stem cell plasticity drives therapeutic resistance. Cancers 8:8. 10.3390/cancers8010008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Eisenberg M. C., Jain H. V. (2017). A confidence building exercise in data and identifiability: Modeling cancer chemotherapy as a case study. J. Theor. Biol. 431, 63–78. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Filippov A. F. (1967). Classical solutions of differential equations with multi-valued right-hand side. SIAM J. Control. 5, 609–621. [Google Scholar]

- Gatenby R. A., Silva A. S., Gillies R. J., Frieden B. R. (2009). Adaptive therapy. Cancer Res. 69, 4894–4903. 10.1158/0008-5472.CAN-08-3658 [DOI] [PMC free article] [PubMed] [Google Scholar]