Abstract

It is not known how often lean tools and implementation science determinants frameworks or checklists are used concurrently in health care quality improvement (QI) activities. The authors systematically reviewed the literature published from January 1999 through August 2018 for studies that used a lean tool along with an implementation science determinants framework. Seven studies (8 publications) were identified, comprising 2 protocols and 6 research articles from multiple continents. All included studies used the Consolidated Framework for Implementation Research (CFIR) as their implementation science determinants framework. Lean tools included in more than 1 publication were process mapping (4 publications), process redesign (3 publications), and 5S standardization (2 publications). Only 1 study proposed using a lean tool concurrently with an implementation science determinants framework in the design and execution of the QI project. Few published studies use both an implementation science determinants framework or checklist and 1 or more lean tools in their study design.

Keywords: lean, implementation science, quality improvement

Health care providers and systems are continually striving to improve care delivery through quality improvement (QI). Frequently, frontline health care workers or their managers identify gaps in care delivery and use a variety of approaches to close those gaps and improve care. Sometimes these are small improvements, such as reorganizing the layout of a supply room. Other times, this involves a large-scale intervention, such as reorganizing the care coordination of antithrombotic medications before a surgical procedure. Too often, these large-scale QI interventions do not achieve their goal.1,2

Many health systems have invested in performance or QI coaches to facilitate the use of lean thinking and other health systems engineering methods to engage workers more successfully in QI efforts. Although many different definitions of lean in health care exist, they all involve engaging frontline staff to describe and optimize their work processes through the use of select tools and strategies (eg, process or value stream mapping, root cause analysis, countermeasures run in Plan-Do-Study-Act [PDSA] cycle experiments).3–5

At the same time, some large health systems and agencies, including the United States Veterans Health Administration (VA) and the National Institutes of Health, have invested in implementation science research as a means to improve the uptake of evidence-based care.6 Implementation science has been described as the “scientific study of methods to promote the systematic uptake of research findings and other evidence-based practice into routine practice, and hence to improve the quality and effectiveness in health services.”7 Although the field of implementation science is inherently broad as it relates to research activities, many frontline implementation practitioners use specific implementation tools to guide how they engage in care change activities. Specifically, QI teams use checklists of implementation determinants to assess expected implementation barriers and to identify strategies intended to overcome them. Commonly, these checklists are derived from the Consolidated Framework for Implementation Research (CFIR), the Theoretical Domains Framework, the Tailored Implementation for Chronic Diseases (TICD) checklist, and the Technology Acceptance Model.8–11

Although both lean and implementation science aim to improve quality of care, it is not clear how often tools from these 2 fields have been integrated in specific QI or clinical studies. This uncertainty is notable because the 2 fields have different traditions within health care. Lean approaches have largely been adapted from manufacturing as a method to improve reliability and reduce waste. In doing so, they emphasize a deep understanding of processes and of methods to improve reliability and efficiency, with less emphasis on individual- and organizational-level behavior theories. Implementation science, on the other hand, has its roots in health care, sociology, and organizational research. Its primary goal is to facilitate the use of evidence-based practices through targeted interventions at the individual and system levels, with less emphasis on reliability and efficiency measures.

From a lean perspective, using implementation science determinants checklists may facilitate a robust and thorough assessment of potential barriers and facilitators beyond what is typically done when using a “lean healthcare approach.” On the other hand, improvement staff using checklists from implementation science might benefit from incorporating specific lean tools and strategies to develop a more comprehensive understanding and documentation of processes wherein an evidence-based practice is meant to be performed.

The purpose of this scoping review12 is to summarize the literature on published QI studies that have combined the use of lean thinking and implementation science determinants checklists to guide QI efforts.

Methods

Search Strategy

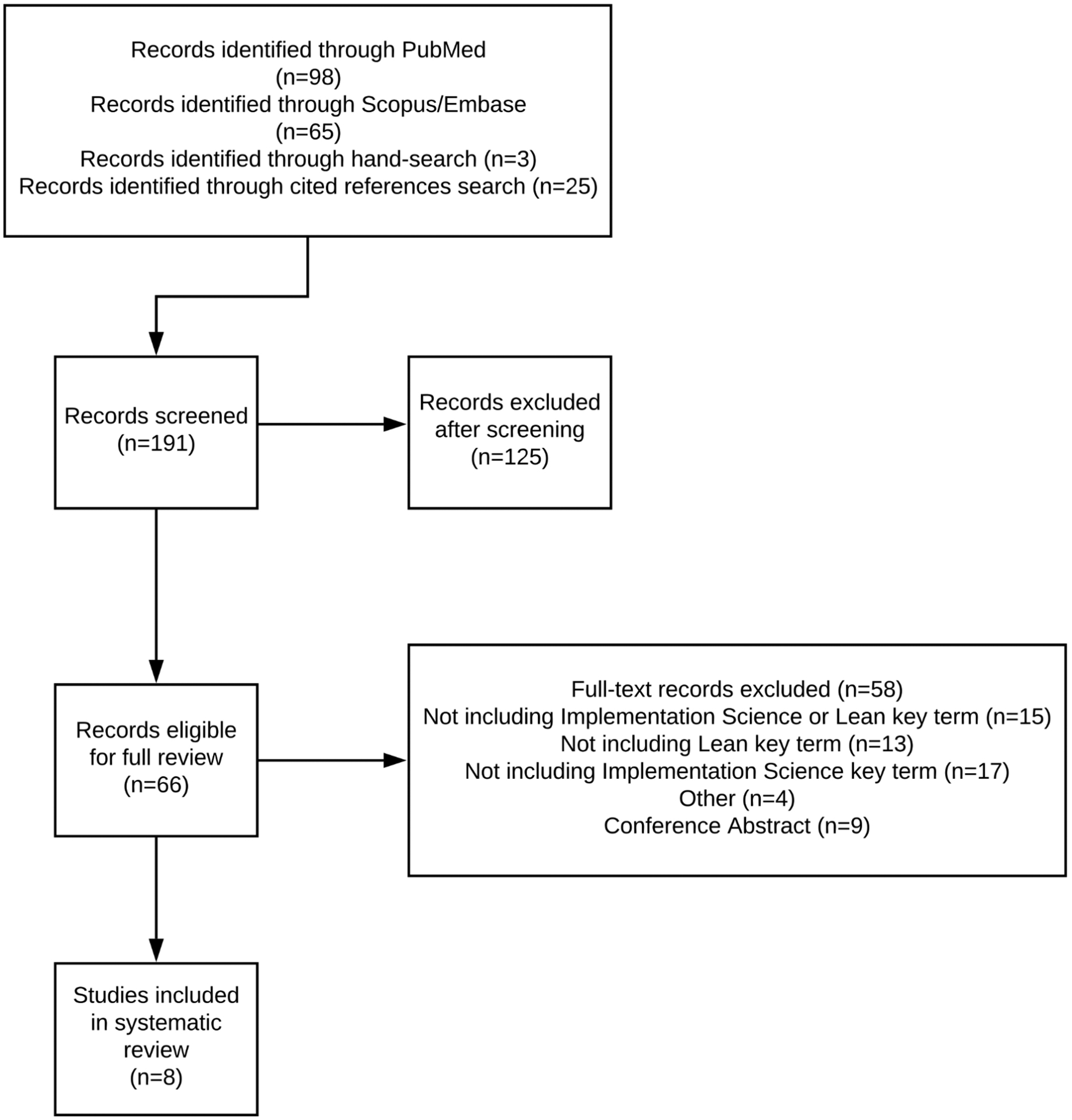

With the help of a research librarian (MLC), the research team conducted a systematic review of the relevant literature using the PubMed, EMBASE, and Scopus databases (Figure 1). To identify published literature that used lean methods, the team compiled a broad list of problem-solving tools and strategies often associated with lean thinking to represent lean activities. Similarly, 4 consolidated implementation science determinants checklists were selected to identify researchers and studies using robust implementation determinants tools. These 4 checklists were chosen because they consolidate many smaller frameworks, include comprehensive lists of determinants of implementation success, or both. Searches of each database combined keywords for a lean thinking process with keywords for an implementation science determinants checklist (see Supplementary Appendix). The lean thinking keywords were also used in an advanced search of the journals Implementation Science and BMJ Quality and Safety. In addition, before the database searches, a hand search was conducted through Google Scholar to identify sentinel articles used to validate the search. Finally, an updated search was conducted to capture any studies published during the analysis period, and a cited reference search was conducted on papers selected for inclusion. The search included both peer-reviewed and non–peer-reviewed literature.

Figure 1.

PRISMA flowchart for study inclusion.

Criteria for Selection

Studies eligible for inclusion were QI studies that combined lean tools for QI with implementation science determinants checklists to identify potential barriers to the success of the implementation intervention. All study designs published from January 1999 to August 2018 were eligible, whether qualitative, quantitative, or mixed methods, including randomized controlled trials, non-randomized trials, observational studies, cohort studies, case-control studies, cross-sectional studies, focus groups, and structured individual interview studies. Articles were excluded if they were not based on health care QI studies or did not include the specific key lean thinking search terms in conjunction with an implementation science determinants framework. Articles that discussed a lean thinking keyword together with an implementation process or implementation science in general were excluded if they did not explicitly discuss one of the implementation science determinants frameworks or theories included in the search.
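Taken together, these criteria work as a conjunction: a record is kept only if every condition holds, and failing any single one excludes it. The sketch below assumes each record has already been hand-coded into hypothetical fields (`is_health_care_qi`, `lean_terms`, `frameworks`); the keyword sets are illustrative placeholders, not the actual search strings in the Supplementary Appendix.

```python
from dataclasses import dataclass
from datetime import date

# Illustrative keyword sets only; the real search strings are in the Supplementary Appendix.
LEAN_TERMS = {"process mapping", "value stream mapping", "5s", "root cause analysis", "a3"}
DETERMINANT_FRAMEWORKS = {"cfir", "theoretical domains framework", "ticd", "technology acceptance model"}

WINDOW_START = date(1999, 1, 1)   # January 1999
WINDOW_END = date(2018, 8, 31)    # August 2018


@dataclass
class Record:
    """A screened article, coded with hypothetical fields."""
    title: str
    published: date
    is_health_care_qi: bool
    lean_terms: set[str]
    frameworks: set[str]


def eligible(rec: Record) -> bool:
    """Keep a record only if every inclusion criterion holds."""
    in_window = WINDOW_START <= rec.published <= WINDOW_END
    has_lean = bool(rec.lean_terms & LEAN_TERMS)
    # A generic mention of "implementation" is not enough: a named determinants framework is required.
    has_framework = bool(rec.frameworks & DETERMINANT_FRAMEWORKS)
    return rec.is_health_care_qi and in_window and has_lean and has_framework


print(eligible(Record("Example A", date(2016, 5, 1), True, {"process mapping"}, {"cfir"})))  # True
print(eligible(Record("Example B", date(2016, 5, 1), True, {"process mapping"}, set())))     # False
```

The second example mirrors the last exclusion rule: a lean keyword paired with implementation language but no named determinants framework is screened out.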

Titles and abstracts of papers were screened by 1 team member (TS) to decide if the full text should be reviewed. Any uncertainty regarding inclusion of a report was discussed between 2 team members (TS and GDB) and resolved through consensus.

Data Abstraction and Synthesis

Data from all studies were independently extracted by 2 team members (TS and GDB) to include the following:

Title

Publication year

Publication journal

Authors

Abstract

Specific elements from lean thinking that were utilized in the QI project

Specific elements from implementation science that were utilized in the QI project

Qualitative description of how the lean and implementation science methods were integrated

Location of study (country)

Funding source (federal, industry, unknown, other)

Identifiable study limitations

On completion of data extraction, researchers met in person to review any discrepancies between the independent extractions through discussion.
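Because the 2 extractions were made independently, the consensus meeting only needs to discuss the fields on which they differ. A minimal sketch of that comparison follows; the dictionary keys are hypothetical names mirroring the abstraction fields listed above, and the example values are invented for illustration.

```python
# Hypothetical keys mirroring the abstraction fields listed above.
ABSTRACTION_FIELDS = [
    "title", "year", "journal", "authors", "abstract",
    "lean_elements", "implementation_science_elements", "integration_description",
    "country", "funding_source", "limitations",
]


def discrepancies(first: dict, second: dict) -> list[str]:
    """Return fields on which two independent extractions disagree (a missing field counts as None)."""
    return [f for f in ABSTRACTION_FIELDS if first.get(f) != second.get(f)]


# Invented example data for 1 article.
extraction_a = {"title": "Example study", "country": "United States", "funding_source": "federal"}
extraction_b = {"title": "Example study", "country": "United States", "funding_source": "unknown"}
print(discrepancies(extraction_a, extraction_b))  # ['funding_source']
```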

The plan for the scoping review was registered in PROSPERO, an international prospective register of systematic reviews (CRD42018096595). Because of the relatively small number of papers, the research team amended the registered protocol, which had planned to evaluate the effectiveness of study designs integrating lean tools and implementation science determinants checklists compared with studies that used only 1 method. Instead, the team described how, and in what settings, the included studies used the 2 methodologies together.

Quality Assessment Tool

Given that most included studies were at least partially qualitative, the research team assessed study quality using the RATS (Relevance, Appropriateness, Transparency, and Soundness) grid for writing and reading qualitative studies.13 Each item was scored from 0 to 2 depending on the presence of the particular item in the article: 0 (absence of description), 1 (partial description), and 2 (complete description).14 The table used for scoring purposes can be found in the Supplementary Appendix. Two articles were research protocols that had not yet reported findings; therefore, some RATS items could not be applied to assess their quality.15,16 Additionally, 1 article17 is a conference proceeding and 1 article18 is an executive summary rather than a journal publication, and both therefore received lower RATS quality scores. Studies were assessed for quality independently by 2 researchers (TS and GDB), and any disagreements were resolved through discussion during an in-person meeting.

Results

Included Studies

A total of 191 articles were identified by searching PubMed, Scopus, and EMBASE for key search terms from both lean thinking and implementation science, supplemented by a hand search and a cited reference search. Following abstract and full-text review, 8 publications met the inclusion criteria. Of those 8 publications, 6 were reports of research study results17–22 and 2 were study protocols.15,16 Two publications were from the same study,19,20 leaving 7 distinct studies included.

All the included publications appeared after 2013; 5 reported work conducted in the United States,17–20,22 2 in Africa,15,21 and 1 in Asia.16

Specific Lean Tools and Implementation Science Determinants Checklists Included

Table 1 provides an overview of the 8 publications and the lean thinking tools and implementation science determinants frameworks or checklists each included. All included studies used CFIR, or a modified version,23 as the implementation science determinants checklist. Four of the papers (3 distinct studies) used process mapping as one of their key lean thinking elements;15,16,19,21 3 papers used some form of flow or process redesign;18–20 2 papers used 5S standardization;18,19 1 paper used continuous QI;21 and 1 paper used lean principles to guide the work.22 With regard to continuous QI, Gimbel et al21 used “planning, engagement, execution, and reflection phases” to allow the research teams to “consider their findings from systems analysis tools (e.g. flow mapping and cascade analysis), brainstorm solutions, and encourage continual use of the tools for benchmarking ongoing process improvement” (p. 112). Daaleman et al22 sought to “maintain fidelity to the following lean principles: (1) patients and clinical stakeholders define the standards of service provided; (2) the improvement processes seek to minimize waste; (3) the people who do the work know best how to improve the work; (4) leadership facilitates the development and measurement of outcome measures with staff and patients; and (5) management empowers frontline staff to take ownership of the improvement processes” (p. 112).22
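The per-tool counts reported in the Abstract follow directly from this coding. The sketch below tallies publications per lean tool, keyed by reference number and coded exactly as in the paragraph above; the category labels are shorthand chosen for illustration, and reference 17 (Lean Enterprise Transformation) is left empty because the text does not assign it to any of these categories.

```python
from collections import Counter

# Lean tool categories per publication (reference number), coded as in the text above.
LEAN_TOOLS_BY_PUBLICATION: dict[int, set[str]] = {
    15: {"process mapping"},
    16: {"process mapping"},
    17: set(),  # Lean Enterprise Transformation; not assigned to a category in the text
    18: {"process redesign", "5S"},
    19: {"process mapping", "process redesign", "5S"},
    20: {"process redesign"},
    21: {"process mapping", "continuous QI"},
    22: {"lean principles"},
}


def tools_in_multiple_publications(by_pub: dict[int, set[str]]) -> dict[str, int]:
    """Count publications per lean tool and keep only tools reported by more than 1 publication."""
    counts = Counter(tool for tools in by_pub.values() for tool in tools)
    return {tool: n for tool, n in counts.items() if n > 1}


print(tools_in_multiple_publications(LEAN_TOOLS_BY_PUBLICATION))
```

With this coding, the function returns 4 for process mapping, 3 for process redesign, and 2 for 5S (dictionary order may vary), which matches the counts given in the Abstract. Counting by publication rather than by study is deliberate: 2 of the process-mapping papers come from the same study, which is why the text reports 4 papers but 3 distinct studies.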

Table 1.

Scoping Review Summary Table.

| Study | Location of Study | Specific Elements from Lean | Implementation Science Determinants Checklist | Integration Summary | Quality Indicator |
|---|---|---|---|---|---|
| Sherr et al,15 2014 | Africa | Systems engineering, process mapping, continuous quality improvement | CFIR | Proposal for a longitudinal cluster randomized trial using systems engineering (value stream mapping) and continuous quality improvement to support an intervention to prevent mother-to-child HIV transmission; implementation of the intervention will then be analyzed using CFIR | 26 |
| Miech et al,17 2015 | United States | Lean Enterprise Transformation | CFIR | In a mixed methods study, researchers used CFIR to evaluate a Lean Healthcare Enterprise Deployment at 7 pilot Veterans Affairs medical centers | 11 |
| Gimbel et al,21 2016 | Africa | Systems engineering, process flow mapping, continuous quality improvement | CFIR | Conducted focus groups and interviews at high- and low-performing sites using CFIR-guided questions to assess which aspects of lean facilitated implementation; analyzed transcripts using CFIR codes | 27 |
| Hung,18 2016 | United States | Lean redesign, 5S standardization | CFIR | Used a modified version of CFIR to evaluate the sustainability of lean redesigns as an organization-wide initiative in an ambulatory care setting | 11 |
| Hung et al,19 2017 | United States | Redesign of clinical operations (flow redesign), 5S technique, process mapping | CFIR | After lean was used to redesign primary care delivery, CFIR constructs were used to analyze how well the implementation occurred, which aspects were most useful, and what barriers remained | 33 |
| Ashok et al,20 2018 | United States | Lean-based primary care redesigns | CFIR | Integrated CFIR into a new process redesign framework directed at quality improvement/lean work, then applied this framework to analyze a case study and 113 qualitative interviews | 17 |
| Daaleman et al,22 2018 | United States | Continuous quality improvement | CFIR | Team largely used lean principles to redesign a primary care clinic; during the process, they collected process data and survey data aligned with CFIR domains for analysis | 25 |
| Means et al,16 2018 | Asia | Process mapping | CFIR | Protocol paper proposing to use CFIR to understand barriers to implementation and, separately, process mapping to identify differences between planned and actualized activities | 31 |

Abbreviation: CFIR, Consolidated Framework for Implementation Research.27

Concurrent Use of Lean and Implementation Science in Study Design

Only 1 study (a protocol paper) proposed utilizing a lean tool concurrently with an implementation science determinants checklist in the design and execution of the QI project.16 Means et al16 published a research protocol for a series of randomized controlled trials investigating the feasibility of interrupting transmission of soil-transmitted helminths in Benin, Malawi, and India. In their study design, researchers proposed using CFIR to identify barriers to implementation, to help understand why the intervention may have varying levels of effectiveness across different settings, and to identify opportunities to optimize the intervention moving forward. In addition, they proposed utilizing process mapping to identify the flow of inputs that are required to create optimal outputs and identify key differences between planned and actualized activities.

Sequential Use of Implementation Science to Evaluate a Lean Intervention

By contrast, all the other studies used a lean tool to identify and guide QI activities and subsequently used CFIR to evaluate the relative success of that implementation and identify determinants of implementation success. In one study, Gimbel et al21 used CFIR to assess the implementation of a “Systems Analysis and Improvement Approach,” which was designed around systems engineering theory and comprised process mapping and continuous QI, across 6 health facilities throughout sub-Saharan Africa for prevention of mother-to-child transmission of the human immunodeficiency virus. Specifically, they conducted focus group interviews at high- and low-performing sites using CFIR-guided questions to assess which aspects of lean facilitated implementation. Two years earlier, Sherr et al15 had published a protocol for this same study, which detailed the study design, objectives, and aims.

Hung et al19 conducted a case study of 113 physicians using lean to redesign primary care delivery. The lean tools incorporated were 5S, flow redesign, and process mapping. The care redesign was rolled out in phases, starting with a pilot phase at 1 clinic and then scaling over 2 subsequent phases. Physicians at the pilot phase sites were more engaged in using lean tools to redesign care to meet their objectives, whereas physicians from the scaling phases reported less engagement with the redesign because of constraints on their ability to adapt it to their local clinic context. The study does not specify which physicians used which lean tools during each phase. The study then used a modified version of CFIR (CFIR-PR)23 to analyze how well the implementation occurred, acceptance of the redesign process, which aspects were most useful, and what barriers remained. Ashok et al20 expanded the CFIR-PR, creating a new process redesign framework to evaluate the same case study conducted by Hung et al. In a different study at an academic primary care facility, lean tools and strategies (continuous improvement) were implemented to redesign a primary care clinic; during this process, researchers collected process data and survey data and used CFIR as an evaluation tool to assess the success of the redesign.22

In a similar setting, Hung18 also reported findings regarding a lean redesign effort that took place in an ambulatory care system. This mixed methods executive summary utilized the modified CFIR-PR framework to analyze the contextual factors and processes affecting the scaling and sustainability of lean redesigns (using lean tools such as 5S standardization and redesign of care team and workflows).

Finally, in a conference contribution, Miech et al17 used CFIR to assess the effectiveness and contextual impact of the implementation of a Lean Enterprise Transformation across 7 VA Medical Centers.

Quality Assessment Results

Using the RATS quality assessment tool, the research team found wide variation in overall scores. As expected, certain RATS items could not be scored in the protocol papers because they did not report findings. Additionally, 1 paper was a conference proceeding and 1 was an executive summary rather than a journal article, which limited the usefulness of the RATS tool for assessing them. Results of the quality assessment analysis are displayed in Table 2.

Table 2.

Quality Assessment Tool of Included Studies.

| Study | Sherr et al,15 2014 | Miech et al,17 2015 | Gimbel et al,21 2016 | Hung,18 2016 | Hung et al,19 2017 | Ashok et al,20 2018 | Daaleman et al,22 2018 | Means et al,16 2018 |
|---|---|---|---|---|---|---|---|---|
| Relevance of Study Question | | | | | | | | |
| Research question explicitly stated | 2 | 1 | 2 | 1 | 2 | 2 | 1 | 2 |
| Research question justified and linked to the existing knowledge base (empirical research, theory, policy) | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 |
| Appropriateness of Qualitative Method | | | | | | | | |
| Study design described and justified: Why was a particular method chosen? | 2 | 1 | 2 | 1 | 1 | 2 | 1 | 2 |
| Transparency of Procedures | | | | | | | | |
| Sampling | | | | | | | | |
| Criteria for selecting the study sample justified and explained (eg, theoretical sampling, purposive sampling) | 2 | 0 | 2 | 1 | 2 | 0 | 1 | 2 |
| Recruitment | | | | | | | | |
| Details of how recruitment was conducted and by whom | 2 | 0 | 2 | 0 | 2 | 0 | 0 | 2 |
| Details of who chose not to participate and why | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |
| Data collection | | | | | | | | |
| Data collection method outlined and examples given | 2 | 1 | 2 | 1 | 1 | 1 | 1 | 2 |
| Study group and setting clearly described | 2 | 1 | 2 | 1 | 2 | 1 | 1 | 2 |
| End of data collection justified and described | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |
| Role of researchers | | | | | | | | |
| Do the researchers occupy dual roles (clinician and researcher)? | 1 | 0 | 2 | 0 | 2 | 0 | 0 | 2 |
| Are the ethics of this discussed? Do the researcher(s) critically examine their own influence on the formulation of the research question, data collection, and interpretation? | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 2 |
| Ethics | | | | | | | | |
| Informed consent process explicitly and clearly detailed | 2 | 0 | 0 | 0 | 2 | 0 | 0 | 2 |
| Anonymity and confidentiality discussed | 0 | 0 | 0 | 0 | 2 | 0 | 0 | 2 |
| Ethics approval cited | 2 | 0 | 0 | 0 | 2 | 0 | 2 | 2 |
| Soundness of Interpretive Approach | | | | | | | | |
| Analysis | | | | | | | | |
| Analytic approach described in depth and justified (thematic analysis, grounded theory, or framework approach) | 2 | 0 | 2 | 0 | 2 | 1 | 1 | 2 |
| Are the interpretations clearly presented and adequately supported by the evidence? | N/A | 1 | 2 | 1 | 2 | 0 | 1 | N/A |
| Are quotes used and are these appropriate and effective? Illumination of context and/or meaning, richly detailed | N/A | 0 | 0 | 0 | 2 | 0 | 0 | N/A |
| Method of reliability check described and justified (eg, was an audit trail, triangulation, or member checking employed? Did an independent analyst review data and contest themes? How were disagreements resolved?) | 0 | 1 | 2 | 0 | 1 | 0 | 0 | 2 |
| Discussion and presentation | | | | | | | | |
| Findings presented with reference to existing theoretical and empirical literature and how they contribute | N/A | 2 | 2 | 2 | 2 | 2 | 2 | N/A |
| Strengths and limitations explicitly described and discussed | N/A | 0 | 2 | 0 | 2 | 2 | 2 | N/A |
| Is the manuscript well written and accessible? | 2 | 1 | 2 | 1 | 2 | 2 | 2 | 2 |
| Total Score | 24 | 11 | 29 | 11 | 36 | 15 | 17 | 30 |
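Each Total Score in Table 2 equals the sum of that column's item scores with the N/A items left out. The sketch below reproduces that arithmetic for 2 columns, with item scores copied from the table in row order (21 scored items; section headings omitted). The helper `rats_max` is an addition for illustration, not part of the RATS guidance: because N/A items are dropped, the 2 protocols are effectively scored out of 34 rather than 42, which is worth keeping in mind when comparing totals across columns.

```python
from typing import Optional

# One RATS item score: 0 (absent), 1 (partial), 2 (complete), or None for N/A.
Score = Optional[int]


def rats_total(scores: list[Score]) -> int:
    """Sum the scored items, skipping N/A items (None)."""
    for s in scores:
        if s is not None and s not in (0, 1, 2):
            raise ValueError(f"RATS item scores must be 0, 1, or 2, got {s!r}")
    return sum(s for s in scores if s is not None)


def rats_max(scores: list[Score]) -> int:
    """Highest attainable total: 2 points for every item that could be scored."""
    return 2 * sum(1 for s in scores if s is not None)


# Item scores from Table 2, in row order; None marks N/A.
SHERR_2014 = [2, 2, 2, 2, 2, 0, 2, 2, 1, 1, 0, 2, 0, 2, 2, None, None, 0, None, None, 2]
HUNG_2017 = [2, 2, 1, 2, 2, 1, 1, 2, 0, 2, 2, 2, 2, 2, 2, 2, 2, 1, 2, 2, 2]

print(rats_total(SHERR_2014), rats_max(SHERR_2014))  # 24 34
print(rats_total(HUNG_2017), rats_max(HUNG_2017))    # 36 42
```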

Discussion

This scoping review identified 8 publications, including 2 protocols, across a diverse array of settings. Only 1 study, a protocol, proposed using lean tools and strategies concurrently with an implementation science determinants checklist to improve the success of its intervention implementation.16 The remaining studies used a lean tool in their study design and subsequently applied an implementation science determinants framework to evaluate their implementation. All the studies identified used CFIR as their implementation science determinants checklist. Overall, very few published studies use both lean tools or strategies and an implementation science determinants checklist, and only a single study proposed using the 2 methods simultaneously.

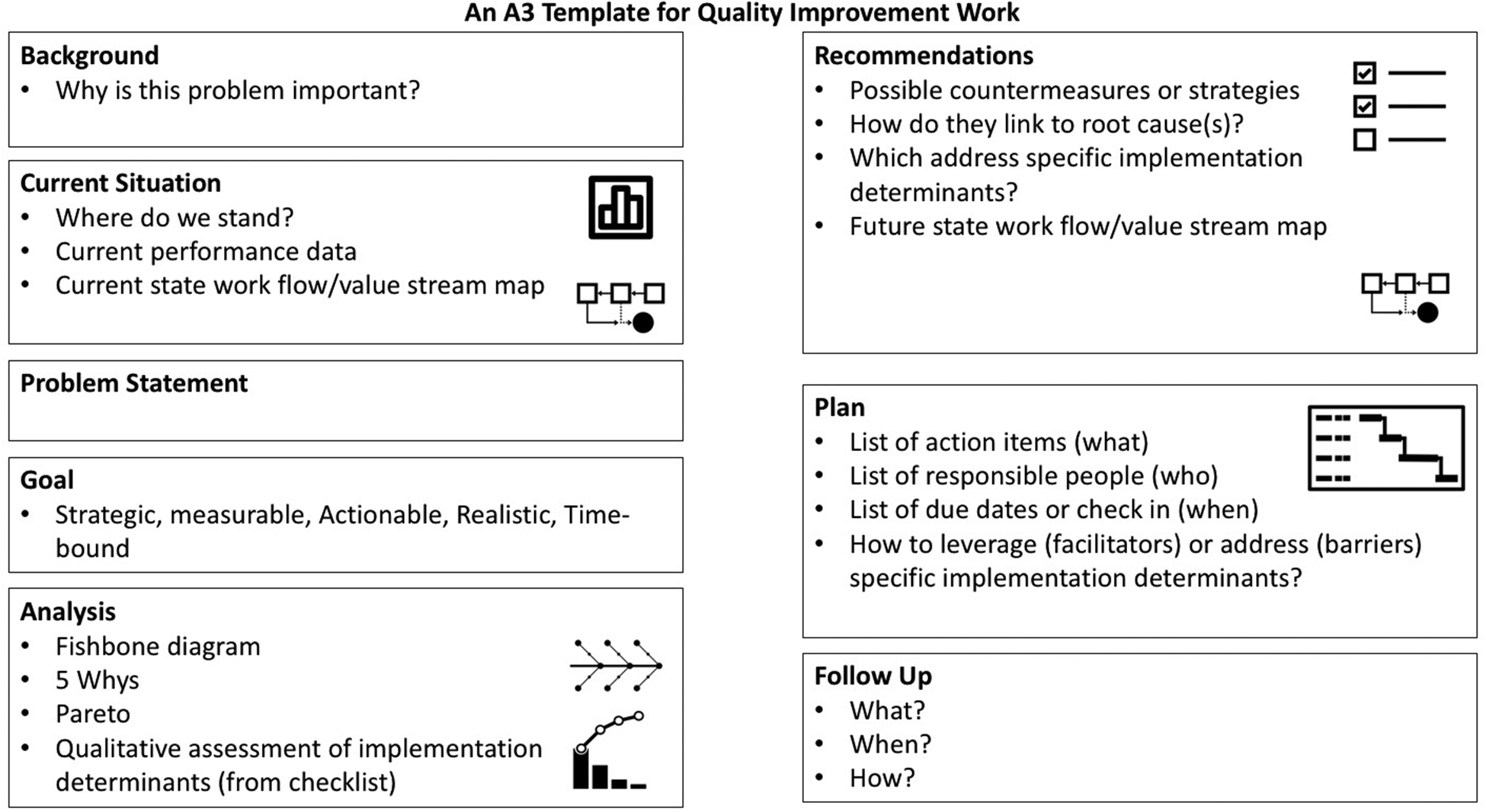

Despite a relative paucity of published literature on the integration of lean tools and strategies with implementation science checklists, the research team believes that concurrent integration may offer benefits for both fields of study. The A3 is a tool commonly used to describe and manage lean QI projects.4,24,25 One way to integrate implementation science determinants frameworks or checklists into existing lean implementation efforts is presented in Figure 2. Implementation science determinants frameworks or checklists (eg, CFIR, TICD) can be used during the “analysis” phase to better understand specific facilitators and barriers to high-quality, evidence-based care delivery.8,9 These can be assessed through stakeholder interviews and/or surveys. Once key determinants of implementation success are identified, they can be used during the “recommendations” phase to help select the best countermeasure(s) and strategies. They also should be linked to specific plan steps to help leverage existing facilitating factors and to address specific barriers to implementation success.
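One way to make the linkage between the A3 sections concrete is to check that every barrier identified in the "Analysis" section is addressed by a countermeasure in "Recommendations" that is actually carried out by a "Plan" step. The sketch below is a hypothetical data model for that check; the class names, fields, and example content are illustrative assumptions and are not part of the A3 template in Figure 2 or of CFIR itself.

```python
from dataclasses import dataclass, field


@dataclass
class Determinant:
    construct: str  # e.g., a CFIR construct name (illustrative)
    kind: str       # "barrier" or "facilitator"


@dataclass
class A3:
    analysis: list[Determinant]
    # Recommendations: countermeasure -> constructs it addresses or leverages.
    recommendations: dict[str, list[str]] = field(default_factory=dict)
    # Plan: plan step -> countermeasure that the step carries out.
    plan: dict[str, str] = field(default_factory=dict)


def unaddressed_barriers(a3: A3) -> list[str]:
    """Barriers found in Analysis that no planned countermeasure addresses."""
    planned = set(a3.plan.values())
    covered = {
        construct
        for countermeasure, constructs in a3.recommendations.items()
        if countermeasure in planned
        for construct in constructs
    }
    return [d.construct for d in a3.analysis if d.kind == "barrier" and d.construct not in covered]


# Hypothetical A3 content for illustration only.
a3 = A3(
    analysis=[
        Determinant("Available resources", "barrier"),
        Determinant("Leadership engagement", "facilitator"),
        Determinant("Access to knowledge and information", "barrier"),
    ],
    recommendations={"Countermeasure A": ["Access to knowledge and information", "Leadership engagement"]},
    plan={"Pilot countermeasure A in 1 clinic": "Countermeasure A"},
)
print(unaddressed_barriers(a3))  # ['Available resources']
```

This sketch flags only uncovered barriers; a fuller check might also confirm that each facilitator is leveraged by at least 1 plan step.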

Figure 2.

A3 template for quality improvement work: This figure integrates implementation science determinants frameworks and checklists into the “Analysis,” “Recommendations,” and “Plan” sections of a typical lean A3 template.

Other opportunities exist to integrate implementation science determinants frameworks with lean tools and strategies. First, implementation science determinants checklists can be used to guide horizontal spread or to “scale out” a lean initiative from its original setting to other settings by assessing unique contextual factors that will require adaptation.26 In lean thinking, spread is planned through collaborative experimentation, rather than copy–paste of “best practices” without regard to local circumstances. Second, an implementation science determinants checklist can be used to facilitate multisite studies that require local implementation and tailoring to unique contextual elements. In this case, spending time at key sites to create individual workflow maps will help identify common steps in a process across different health care settings or sites. Third, many lean tools can aid in understanding the specifics of a process and how context will influence implementation. Finally, a core characteristic of lean involves strong engagement by frontline staff, which can facilitate successful implementation activities. To that end, many implementation science training programs are beginning to incorporate some aspects of lean training, including process mapping and root cause analysis.

Despite a robust literature search, this scoping review is limited in many respects. Although the research team appreciates that lean is an overarching problem-solving paradigm, for the purposes of a scoping review it had to be distilled down to identifiable search terms. Therefore, papers that did not utilize one of the key search terms may not have been included. Also, it is likely that few of the lean thinking studies that have been completed in health care have ever been published. This has been noted before and has not improved significantly since 2009.5 Therefore, it is possible that there are QI initiatives incorporating both lean tools and implementation science determinants frameworks or checklists that were not included because they were not published and indexed in one of the literature databases that the team was able to search. This is particularly true for QI work based largely on the PDSA model. The team also did not include all types of implementation science frameworks (including frameworks for evaluation, such as RE-AIM, or process models, such as the Replicating Effective Programs or Knowledge to Action frameworks),27,28 so there may be published literature on lean tools that incorporate other types of implementation science tools in addition to determinants checklists.

As an example of this issue, the research team identified 2 papers utilizing a lean tool with implementation science frameworks distinct from the determinant frameworks that were incorporated in the search methodology. Harrison et al29 utilized an implementation science framework that was very similar to CFIR; however, the authors explicitly described how CFIR did not serve as a source for their model in evaluating factors that would affect lean implementation and outcomes. Therefore, they adapted and developed their own implementation determinants framework. In another study investigating implementation science methodology, researchers used the Knowledge to Action framework along with process mapping to guide implementation of their QI intervention.30 Finally, when looking at the specific lean tools and strategies or implementation science determinants frameworks that were used in each study, the team had limited ability to assess the quality or thoroughness of how these tools were being used. Nonetheless, the small number of published studies incorporating use of both approaches is noteworthy.

An issue that arises in this review is the degree to which people using various tools and strategies do so with a full understanding of how those tools were developed and what they were intended for. This is particularly salient when considering how to merge methods, strategies, and tools developed from different histories and backgrounds.31 In the implementation science literature, there is robust discussion around issues of fidelity. Fidelity is often seen as the obverse of adaptation, with the concern that the way a process or program is used may not capture the essence that makes it work.32 Fidelity includes not only preserving the core or essence that is the “active ingredient,” but also a deep understanding of tools as they are used. Training in both lean and implementation science is frequently not thorough or in-depth enough to permit a sufficiently deep understanding of how to use processes and tools optimally. The research team notes that both lean and implementation science have deep traditions, stemming from different places, which the team believes need to be understood in order to use them appropriately and jointly.

Conclusions

Few published studies have used both an implementation science determinants framework or checklist and selected lean tools or strategies in the study design. The research team believes that there is an opportunity for both fields to come together intentionally and symbiotically to improve care delivery and QI. This union could create a model to optimize the delivery and longevity of intervention implementations in QI.


Funding

The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was funded by a grant from the National Heart, Lung, and Blood Institute (NHLBI) to Dr Barnes (K01HL135392). All authors have no other disclosures relevant to this work. The opinions expressed herein are the views of the authors and do not necessarily reflect the official policy or position of NHLBI or any other part of the US Department of Health and Human Services.

Footnotes

Declaration of Conflicting Interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Supplemental Material

Supplemental material for this article is available online.

References

- 1. Schouten LM, Hulscher ME, van Everdingen JJ, Huijsman R, Grol RP. Evidence for the impact of quality improvement collaboratives: systematic review. BMJ. 2008;336:1491–1494.

- 2. Wells S, Tamir O, Gray J, Naidoo D, Bekhit M, Goldmann D. Are quality improvement collaboratives effective? A systematic review. BMJ Qual Saf. 2018;27:226–240.

- 3. Deblois S, Lepanto L. Lean and six sigma in acute care: a systematic review of reviews. Int J Health Care Qual Assur. 2016;29:192–208.

- 4. Graban M. Lean Hospitals: Improving Quality, Patient Safety, and Employee Engagement. 2nd ed. New York, NY: Productivity Press/Taylor & Francis; 2012.

- 5.Vest JR, Gamm LD. A critical review of the research literature on six sigma, lean and StuderGroup’s hardwiring excellence in the United States: the need to demonstrate and communicate the effectiveness of transformation strategies in healthcare. Implement Sci. 2009;4:35. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Chan WV, Pearson TA, Bennett GC, et al. ACC/AHA special report: clinical practice guideline implementation strategies: a summary of systematic reviews by the NHLBI Implementation Science Work Group: a report of the American College of Cardiology/American Heart Association Task Force on Clinical Practice Guidelines. Circulation. 2017;135:e122–e137. [DOI] [PubMed] [Google Scholar]

- 7. Eccles MP, Mittman BS. Welcome to implementation science. Implement Sci. 2006;1:1.

- 8. Flottorp SA, Oxman AD, Krause J, et al. A checklist for identifying determinants of practice: a systematic review and synthesis of frameworks and taxonomies of factors that prevent or enable improvements in healthcare professional practice. Implement Sci. 2013;8:35.

- 9. Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50.

- 10. Atkins L, Francis J, Islam R, et al. A guide to using the theoretical domains framework of behaviour change to investigate implementation problems. Implement Sci. 2017;12:77.

- 11. Holden RJ, Karsh B-T. The technology acceptance model: its past and its future in health care. J Biomed Inform. 2010;43:159.

- 12. Colquhoun HL, Levac D, O’Brien KK, et al. Scoping reviews: time for clarity in definition, methods, and reporting. J Clin Epidemiol. 2014;67:1291–1294.

- 13. The EQUATOR Network. Qualitative research review guidelines—RATS. https://www.equator-network.org/reporting-guidelines/qualitative-research-review-guidelines-rats/. Accessed September 17, 2019.

- 14. Cambon B, Vorilhon P, Michel L, et al. Quality of qualitative studies centred on patients in family practice: a systematic review. Fam Pract. 2016;33:580–587.

- 15. Sherr K, Gimbel S, Rustagi A, et al. Systems analysis and improvement to optimize pMTCT (SAIA): a cluster randomized trial. Implement Sci. 2014;9:55.

- 16. Means AR, Ajjampur SSR, Bailey R, et al. Evaluating the sustainability, scalability, and replicability of an STH transmission interruption intervention: the DeWorm3 implementation science protocol. PLoS Negl Trop Dis. 2018;12:e0005988.

- 17. Miech EJ, Bravata DM, Woodward-Hagg H. Evaluating lean implementation: challenges in developing a research agenda for lean enterprise transformation in healthcare. Paper presented at: IIE Annual Conference and Exposition; May 30 to June 2, 2015; Nashville, TN.

- 18. Hung DY. Spreading Lean: taking efficiency interventions in health services delivery to scale. https://www.ahrq.gov/sites/default/files/publications/files/execsumm-lean-rede-sign.pdf. Accessed September 30, 2018.

- 19. Hung D, Gray C, Martinez M, Schmittdiel J, Harrison MI. Acceptance of lean redesigns in primary care: a contextual analysis. Health Care Manage Rev. 2017;42:203–212.

- 20. Ashok M, Hung D, Rojas-Smith L, Halpern MT, Harrison M. Framework for research on implementation of process redesigns. Qual Manag Health Care. 2018;27:17–23.

- 21. Gimbel S, Rustagi AS, Robinson J, et al. Evaluation of a systems analysis and improvement approach to optimize prevention of mother-to-child transmission of HIV using the consolidated framework for implementation research. J Acquir Immune Defic Syndr. 2016;72(suppl 2):S108–S116.

- 22. Daaleman TP, Brock D, Gwynne M, et al. Implementing lean in academic primary care. Qual Manag Health Care. 2018;27:111–116.

- 23. Rojas Smith L, Ashok M, Morss Dy S, Wines RC, Teixeira-Poit S. Contextual Frameworks for Research on the Implementation of Complex System Interventions. Rockville, MD: Agency for Healthcare Research and Quality; 2014.

- 24. Jimmerson C, Weber D, Sobek DK II. Reducing waste and errors: piloting lean principles at Intermountain Healthcare. Jt Comm J Qual Patient Saf. 2005;31:249–257.

- 25. Delisle DR; American Society for Quality. Executing Lean Improvements: A Practical Guide with Real-World Healthcare Case Studies. Milwaukee, WI: ASQ Quality Press; 2015.

- 26. Aarons GA, Sklar M, Mustanski B, Benbow N, Brown CH. “Scaling-out” evidence-based interventions to new populations or new health care delivery systems. Implement Sci. 2017;12:111.

- 27. Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89:1322–1327.

- 28. Kilbourne AM, Neumann MS, Pincus HA, Bauer MS, Stall R. Implementing evidence-based interventions in health care: application of the replicating effective programs framework. Implement Sci. 2007;2:42.

- 29. Harrison MI, Paez K, Carman KL, et al. Effects of organizational context on Lean implementation in five hospital systems. Health Care Manage Rev. 2016;41:127–144.

- 30. Nielsen KR, Becerra R, Mallma G, Tantaleán da Fieno J. Successful deployment of high flow nasal cannula in a Peruvian pediatric intensive care unit using implementation science–lessons learned. Front Pediatr. 2018;6:85.

- 31. Sales AE. Quality improvement. In: Straus SE, Tetroe J, Graham ID, eds. Knowledge Translation in Health Care: Moving from Evidence to Practice. 2nd ed. Hoboken, NJ: Wiley/BMJ Books; 2013:xvii, 406.

- 32. Stirman SW, Miller CJ, Toder K, Calloway A. Development of a framework and coding system for modifications and adaptations of evidence-based interventions. Implement Sci. 2013;8:65.
