Abstract

Convolutional neural networks (CNNs), a particular type of deep learning architecture, are positioned to become one of the most transformative technologies for medical applications. The aim of the current study was to evaluate the efficacy of a deep CNN algorithm for the identification and classification of dental implant systems.

A total of 5390 panoramic and 5380 periapical radiographic images from 3 types of dental implant systems with similar shapes and internal conical connections were randomly divided into a training and validation dataset (80%) and a test dataset (20%). We applied image preprocessing and transfer learning techniques based on a pre-trained and fine-tuned deep CNN architecture (GoogLeNet Inception-v3). The test dataset was used to compare the deep CNN and a periodontal specialist in terms of accuracy, sensitivity, specificity, the receiver operating characteristic curve, the area under the receiver operating characteristic curve (AUC), and the confusion matrix.

We found that both the deep CNN architecture (AUC = 0.971, 95% confidence interval 0.963–0.978) and a board-certified periodontist (AUC = 0.925, 95% confidence interval 0.913–0.935) achieved reliable classification accuracy.

This study demonstrated that deep CNN architecture is useful for the identification and classification of dental implant systems using panoramic and periapical radiographic images.

Keywords: artificial intelligence, deep learning, dental implants, supervised machine learning

1. Introduction

Dental implants are used to replace or reconstruct missing teeth. Systematic reviews and meta-analyses published in recent decades report long-term success and survival rates of over 90% at more than 10 years.[1–3] However, although dental implants have become a widespread and rapidly growing treatment option, mechanical and biological complications occur frequently, and the possibility of failure is also steadily increasing.[4–6] In a systematic review, the cumulative 5-year incidence of mechanical complications was reported to be 12.7% for screw or abutment loosening and 0.35% for screw or abutment fracture.[7] A large multicenter study that monitored 19,087 implants over 9 years confirmed 70 fixture fractures (0.4%).[8] Another systematic review of biological complications reported a prevalence of peri-implant mucositis and peri-implantitis of up to 65% and 47%, respectively.[9]

Hundreds of manufacturers produce more than 4000 different types of dental implant systems worldwide.[10,11] A wide variety of fixture structures (straight, tapered, conical, ovoid, trapezoidal, internal, and external) with different surface treatments (machined, blasted, acid-etched, hydroxyapatite-coated, titanium plasma-sprayed, and oxidized) are continuously being developed and applied clinically.[12–14] Therefore, if dental practitioners cannot identify and classify the implant system when mechanical or biological complications occur, invasive treatment for repair or reimplantation becomes more likely.[15,16] Although panoramic and periapical radiographs are the primary means of identifying and classifying dental implant systems, distinguishing systems with similar shapes and features on radiographs is exceedingly difficult because of inherent weaknesses such as noise, haziness, and distortion.[17,18]

Computer-aided diagnostic systems have shown good efficiency and improved outcomes when applied in various medical and dental fields. In particular, among deep learning technologies, convolutional neural networks (CNNs) have developed rapidly in recent years and perform excellently in image analysis tasks such as detection, classification, and segmentation.[19,20] However, despite the performance and reliability of deep CNN algorithms, basic research and clinical application in the dental field remain limited. The purpose of this study was to evaluate the efficacy of a deep CNN algorithm for the identification and classification of dental implant systems using panoramic and periapical radiographs.

2. Materials and methods

2.1. Datasets

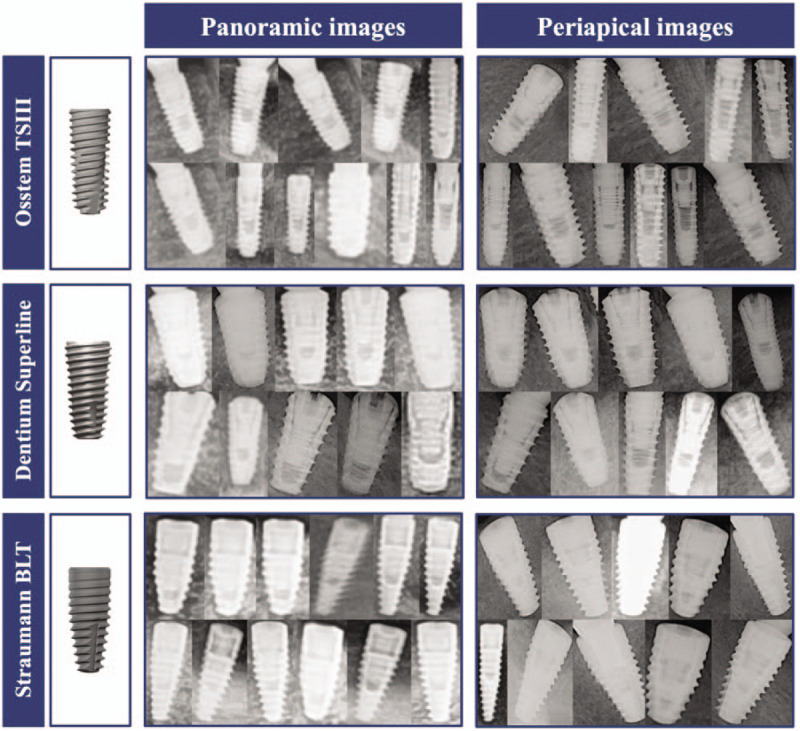

This study was conducted at the Department of Periodontology, Daejeon Dental Hospital, Wonkwang University, and all image datasets were anonymized and separated from any personal identifiers. The research protocol was approved by the Institutional Review Board of Daejeon Dental Hospital, Wonkwang University (approval no. W1809/001-001). Raw panoramic and periapical radiographic images (INFINITT PACS, Infinitt, Seoul, Korea) of patients who underwent dental implant treatment at the dental hospital were acquired between January 2010 and December 2019. Three types of dental implant systems (TSIII SA, Osstem Implant Co. Ltd., Seoul, Korea; Superline, Dentium Co. Ltd., Seoul, Korea; and SLActive BLT implant, Institut Straumann AG, Basel, Switzerland) were classified, and each image was labeled with its dental implant system based on electronic dental records. All 3 systems share a sandblasted, large-grit, acid-etched surface and an internal conical connection with a similar tapered structure (Fig. 1).

Figure 1.

Three types of dental implant systems have a sandblasted, large-grit, acid-etched surface, and an internal connection with similar tapered morphology in common.

Osstem TSIII implant system: fixtures with a diameter of 3.5 to 5.0 mm and length of 7 to 13 mm, designed with double and corkscrew threads, a helix cutting edge, and an apical taper angle of 1.5°.

Dentium Superline implant system: fixtures with a diameter of 3.6 to 5.0 mm and length of 8 to 12 mm, designed with double threads and a long cutting edge.

Straumann BLT implant system: fixtures with a diameter of 3.3 to 4.8 mm and length of 8 to 12 mm, designed with full-depth threads, 3 cutting notches, and an apical taper angle of 9°.

2.2. Preprocessing and image augmentation

The regions of interest, each displaying only 1 implant fixture, were manually cropped and labeled by 3 periodontology residents who were not otherwise involved in the study, using radiographic image analysis software (Osirix X 10.0 64-bit version; Pixmeo SARL). Images with severe noise, haziness, or distortion were excluded. The contrast and brightness of the remaining images were calibrated using global contrast normalization and zero-phase component analysis (ZCA) whitening.[21,22] For each image, the mean pixel value X̄ and the standard deviation σ were computed, and global contrast normalization was performed as X ← (X − X̄) / σ.
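The two calibration steps can be sketched in NumPy as follows. This is a minimal illustration under our own assumptions, not the authors' code: the function names are hypothetical, the `eps` regularizer values are arbitrary choices, and fitting the whitening matrix on flattened images (one image per row) is an assumption about how ZCA was applied. Global contrast normalization follows the formula above; ZCA whitening uses the symmetric matrix W = U diag(1/√(λ + ε)) Uᵀ built from the eigendecomposition of the pixel covariance.

```python
import numpy as np


def global_contrast_normalize(image, eps=1e-8):
    """Global contrast normalization of one image: X <- (X - mean(X)) / std(X)."""
    x = np.asarray(image, dtype=np.float64)
    # eps guards against division by zero for a perfectly flat image
    return (x - x.mean()) / (x.std() + eps)


def fit_zca(data, eps=1e-2):
    """Fit ZCA ("zero-phase") whitening on flattened images, one image per row.

    Returns the per-pixel mean and the symmetric whitening matrix
    W = U diag(1 / sqrt(lambda + eps)) U^T, where U and lambda are the
    eigenvectors and eigenvalues of the pixel covariance matrix.
    """
    x = np.asarray(data, dtype=np.float64)
    mean = x.mean(axis=0)
    centred = x - mean
    cov = centred.T @ centred / x.shape[0]
    eigvals, eigvecs = np.linalg.eigh(cov)   # eigh: cov is symmetric
    eigvals = np.clip(eigvals, 0.0, None)    # remove tiny negative round-off
    w = eigvecs @ np.diag(1.0 / np.sqrt(eigvals + eps)) @ eigvecs.T
    return mean, w


def apply_zca(data, mean, w):
    """Whiten the rows of `data` with a previously fitted mean and ZCA matrix."""
    return (np.asarray(data, dtype=np.float64) - mean) @ w


# Usage on stand-in data (random 16x16 "crops", not real radiographs)
rng = np.random.default_rng(0)
crops = rng.integers(0, 256, size=(100, 16, 16)).astype(np.float64)
gcn = np.stack([global_contrast_normalize(c) for c in crops])
flat = gcn.reshape(len(gcn), -1)
mean, w = fit_zca(flat)
whitened = apply_zca(flat, mean, w).reshape(gcn.shape)
```

In a typical pipeline the whitening matrix would be fitted on the training images only and then reused unchanged for validation and test images, so that no test statistics leak into preprocessing; the source does not state how this was handled.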

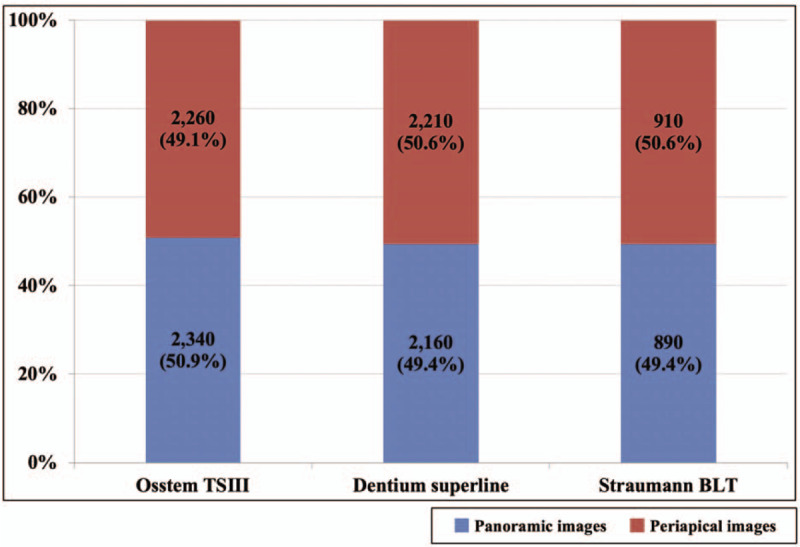

The final dataset consisted of 10,770 cropped radiographic images (extracted from 5390 panoramic and 5380 periapical radiographic images), including 4600 Osstem TSIII implant systems (extracted from 2340 [50.9%] panoramic and 2260 [49.1%] periapical radiographic images), 4370 Dentium Superline implant systems (extracted from 2160 [49.4%] panoramic and 2210 [50.6%] periapical radiographic images), and 1800 Straumann BLT implant systems (extracted from 890 [49.4%] panoramic and 910 [50.6%] periapical radiographic images), as shown in Figure 2. The dataset was randomly divided into 3 groups: a training dataset (n = 6,462 [60%]), a validation dataset (n = 2,154 [20%]), and a test dataset (n = 2,154 [20%]). The training dataset was randomly augmented 10-fold (n = 64,620) using horizontal and vertical flipping, rotation (range of 10°), width and height shifting (range of 0.1), and zooming (range of 0.8–1.2). The test dataset was allocated in equal numbers to each dental implant system.[23]
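The 60/20/20 split and the 10-fold augmentation described above can be sketched in NumPy as follows. The source does not say which library performed the augmentation, so this is a hypothetical, dependency-free stand-in: nearest-neighbour resampling, edge-replicating borders, a 50% probability for each flip, and a plain (non-stratified) shuffle are all our assumptions. The parameter ranges follow the text: rotation up to ±10°, shifts up to ±10% of the width and height, and zoom factors between 0.8 and 1.2.

```python
import numpy as np


def split_indices(n, fractions=(0.6, 0.2, 0.2), seed=0):
    """Shuffle range(n) and cut it into train / validation / test index arrays."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n)
    n_train = round(n * fractions[0])
    n_val = round(n * fractions[1])
    # the test set takes whatever remains, so no index is lost to rounding
    return order[:n_train], order[n_train:n_train + n_val], order[n_train + n_val:]


def random_augment(img, rng, rotation_deg=10.0, shift_frac=0.1, zoom_range=(0.8, 1.2)):
    """One random flip / rotation / shift / zoom of a 2-D grayscale image."""
    h, w = img.shape
    if rng.random() < 0.5:
        img = img[:, ::-1]                      # horizontal flip
    if rng.random() < 0.5:
        img = img[::-1, :]                      # vertical flip
    theta = np.deg2rad(rng.uniform(-rotation_deg, rotation_deg))
    zoom = rng.uniform(*zoom_range)             # zoom > 1 magnifies the content
    dy = rng.uniform(-shift_frac, shift_frac) * h
    dx = rng.uniform(-shift_frac, shift_frac) * w
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    rows, cols = np.indices((h, w), dtype=float)
    y, x = rows - cy - dy, cols - cx - dx       # output coords relative to shifted centre
    c, s = np.cos(theta), np.sin(theta)
    # inverse mapping: undo rotation and zoom to find each output pixel's source
    src_y = (c * y - s * x) / zoom + cy
    src_x = (s * y + c * x) / zoom + cx
    # nearest-neighbour sampling; clipping repeats edge pixels outside the image
    src_y = np.clip(np.rint(src_y), 0, h - 1).astype(int)
    src_x = np.clip(np.rint(src_x), 0, w - 1).astype(int)
    return img[src_y, src_x]


def augment(images, labels, factor=10, seed=0):
    """Return `factor` randomly augmented copies of every training image."""
    rng = np.random.default_rng(seed)
    out = [random_augment(im, rng) for im in images for _ in range(factor)]
    return np.stack(out), np.repeat(labels, factor)


train_idx, val_idx, test_idx = split_indices(10770)
print(len(train_idx), len(val_idx), len(test_idx))  # 6462 2154 2154
```

Applied to the 6,462 training images with `factor=10`, `augment` yields the 64,620 images reported; the validation and test images are left unaugmented.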

Figure 2.

The dataset consisted of a total of 10,770 cropped radiographic images (extracted from 5390 panoramic and 5380 periapical radiographic images), comprising 4600 Osstem TSIII, 4370 Dentium Superline, and 1800 Straumann BLT dental implant systems.

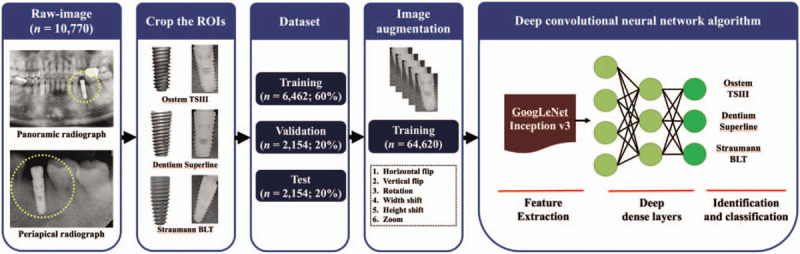

2.3. Architecture of the deep convolutional neural network

In this study, we used a representative deep CNN architecture, GoogLeNet Inception-v3, which achieves excellent performance in high-level feature abstraction.[24] This architecture was developed by the Google research team and consists of 9 inception modules, including an auxiliary classifier, 2 fully connected layers, softmax functions, and 22 dense layers.[24] A model pre-trained on ImageNet was used for preprocessing and transfer learning, so the architecture had already learned comprehensive natural-image features from approximately 1.28 million images in 1000 object categories.[23,25] Additional fine-tuning was performed by optimizing the weights, and the architecture was trained for 1000 epochs (Fig. 3).[26]

Figure 3.

Overall scheme of the GoogLeNet Inception-v3 architecture and implementation of the pre-trained convolutional neural network model using transfer learning. Cropped regions of interest from the implant fixture images were used as input. The final output layer performs softmax classification and provides the predictions.
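A transfer-learning setup of the kind described above might look like the following in `tf.keras`, the Keras framework the study reports using. This is a sketch under assumptions, not the authors' configuration: the helper names are hypothetical, a single dense softmax layer stands in for the classification head (the exact head is not described), the SGD optimizer and learning rate are placeholders, and replicating grayscale crops to 3 channels is our assumption about how radiographs were fed to an RGB network. Leaving every backbone layer trainable means that training fine-tunes the whole network, starting from the ImageNet weights.

```python
import numpy as np
import tensorflow as tf


def build_implant_classifier(num_classes=3, weights="imagenet", input_shape=(299, 299, 3)):
    """Inception-v3 backbone plus a new softmax layer for implant-system classes."""
    base = tf.keras.applications.InceptionV3(
        include_top=False,     # drop the original 1000-class ImageNet classifier
        weights=weights,       # "imagenet" downloads pre-trained weights; None = random init
        input_shape=input_shape,
        pooling="avg",         # global average pooling -> one 2048-d vector per image
    )
    # All backbone layers stay trainable, so fitting fine-tunes the whole network.
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(base.output)
    model = tf.keras.Model(inputs=base.input, outputs=outputs)
    model.compile(
        optimizer=tf.keras.optimizers.SGD(learning_rate=1e-4, momentum=0.9),  # assumed settings
        loss="sparse_categorical_crossentropy",  # integer labels 0, 1, 2
        metrics=["accuracy"],
    )
    return model


def to_three_channels(gray_batch):
    """Replicate (N, H, W) grayscale crops into the (N, H, W, 3) layout Inception-v3 expects."""
    return np.repeat(np.asarray(gray_batch, dtype=np.float32)[..., None], 3, axis=-1)
```

Training would then be a call such as `model.fit(to_three_channels(x_train), y_train, validation_data=(to_three_channels(x_val), y_val), epochs=...)`, where the crops have been resized to 299 × 299 pixels beforehand.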

2.4. Comparing the performance of the deep CNN architecture with that of a human expert

A total of 2154 radiographic images (718 for each dental implant system) were randomly selected from the test dataset using a computer-aided tool (Keras framework in Python; Python 3.6.1, Python Software Foundation, Wilmington, DE). The classification performance of the trained deep CNN architecture and of a board-certified periodontist (JHL) was then evaluated on this test dataset.
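Drawing an equal number of images per implant system, as described above, amounts to sampling without replacement within each class. The helper below is hypothetical: the source does not describe how its selection tool worked, and the class sizes in the test are invented stand-ins.

```python
import numpy as np


def sample_balanced(labels, per_class, seed=0):
    """Pick `per_class` indices of every label, without replacement (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    picked = [
        rng.choice(np.flatnonzero(labels == k), size=per_class, replace=False)
        for k in np.unique(labels)
    ]
    # sorted indices keep the original image order within the selection
    return np.sort(np.concatenate(picked))
```

With 3 classes and `per_class=718`, this selects the 2154 images reported above, provided every class has at least 718 candidates.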

2.5. Statistical analysis

All statistical analyses were performed using the Keras framework in Python (Python 3.6.1, Python Software Foundation, Wilmington, DE) and the MedCalc statistical package (version 12.7.0, Mariakerke, Belgium). Classification performance on the test dataset was evaluated using receiver operating characteristic curves, 95% confidence intervals (CIs), and confusion matrices.
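The AUC and its 95% CI can be illustrated as follows. Two assumptions apply. First, the source does not state how a single AUC was obtained for the 3-class problem, so this sketch macro-averages the one-vs-rest AUCs. Second, the CI is a percentile bootstrap, a swapped-in technique that is not necessarily the method MedCalc uses. The AUC itself is computed as the probability that a randomly chosen positive image scores higher than a randomly chosen negative one (the Mann-Whitney formulation), with ties counted as one half.

```python
import numpy as np


def binary_auc(is_positive, scores):
    """AUC = P(score of a random positive > score of a random negative); ties count 1/2.

    Pairwise comparison uses O(n_pos * n_neg) memory, which is fine for a few thousand images.
    """
    is_positive = np.asarray(is_positive, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[is_positive], scores[~is_positive]
    greater = np.sum(pos[:, None] > neg[None, :])
    ties = np.sum(pos[:, None] == neg[None, :])
    return (greater + 0.5 * ties) / (len(pos) * len(neg))


def macro_ovr_auc(y_true, probs):
    """Average of the one-vs-rest AUCs, one per implant system (column of `probs`)."""
    y_true = np.asarray(y_true)
    probs = np.asarray(probs, dtype=float)
    return float(np.mean([binary_auc(y_true == k, probs[:, k]) for k in range(probs.shape[1])]))


def bootstrap_auc_ci(y_true, probs, n_boot=1000, alpha=0.05, seed=0):
    """Percentile-bootstrap (1 - alpha) confidence interval for the macro AUC."""
    rng = np.random.default_rng(seed)
    y_true, probs = np.asarray(y_true), np.asarray(probs, dtype=float)
    n_classes = probs.shape[1]
    stats = []
    for _ in range(n_boot):
        idx = rng.integers(0, len(y_true), size=len(y_true))
        if len(np.unique(y_true[idx])) < n_classes:
            continue  # a class is missing, so its one-vs-rest AUC is undefined
        stats.append(macro_ovr_auc(y_true[idx], probs[idx]))
    lo, hi = np.percentile(stats, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    return float(lo), float(hi)
```

For the periodontist, whose answers are hard class labels rather than probabilities, `probs` would simply be the one-hot encoding of each answer.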

3. Results

3.1. Classification of dental implant systems

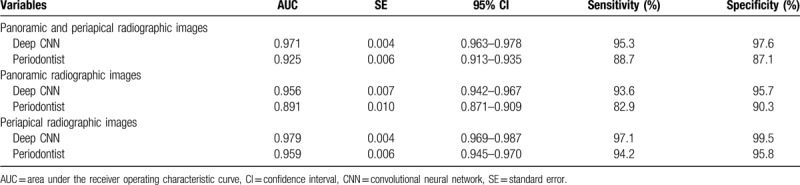

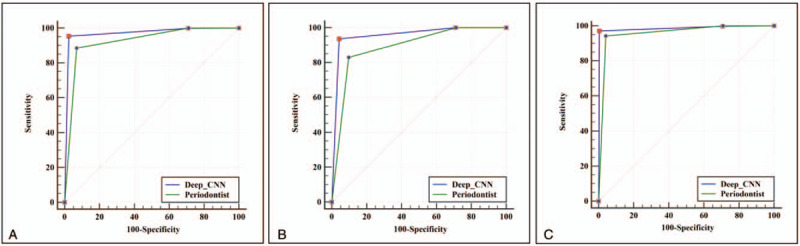

Table 1 and Figure 4 compare the receiver operating characteristic curves used to appraise the accuracy of the deep CNN architecture and the periodontist. Using combined panoramic and periapical radiographic images, the deep CNN architecture had an AUC of 0.971 (95% CI, 0.963–0.978), compared with 0.925 (95% CI, 0.913–0.935) for the periodontist. Using only panoramic radiographic images, the deep CNN architecture had an AUC of 0.956 (95% CI, 0.942–0.967), compared with 0.891 (95% CI, 0.871–0.909) for the periodontist. Using only periapical radiographic images, the deep CNN architecture had an AUC of 0.979 (95% CI, 0.969–0.987), compared with 0.959 for the periodontist (Table 1 and Fig. 4).

Table 1.

Comparison between the deep convolutional neural networks algorithm and periodontist for the identification and classification of 3 types of dental implant systems.

Figure 4.

Comparison of receiver operating characteristic curves of the deep convolutional neural network (CNN) architecture and the periodontist. (A) Dataset consisted of 2154 panoramic and periapical radiographic images, (B) Dataset consisted of 1078 panoramic radiographic images, and (C) Dataset consisted of 1076 periapical radiographic images.

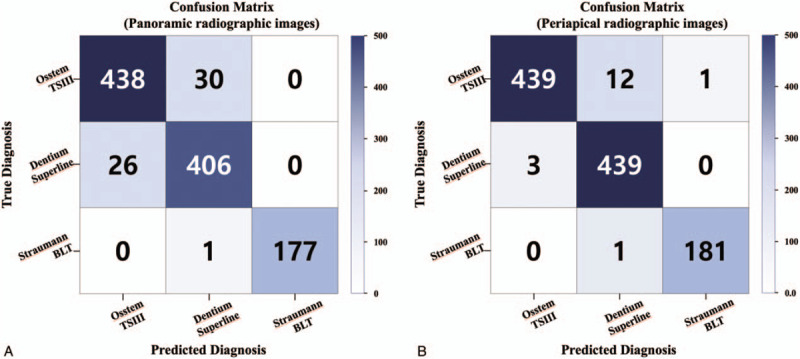

3.2. Confusion matrix

We also analyzed the confusion matrix for the multiclass classification of dental implant systems by the deep CNN architecture using the training dataset (Fig. 5). The Straumann BLT implant system had the highest accuracy of the 3 dental implant systems (99.4% on panoramic and 99.5% on periapical radiographic images).

Figure 5.

Multiclass classification confusion matrix using deep convolutional neural network architecture. (A) Panoramic radiographic images without normalization, (B) Periapical radiographic images without normalization.
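The confusion-matrix quantities above can be computed as follows. This sketch assumes that the per-system accuracy quoted in the text is the diagonal of the row-normalized matrix (the fraction of each true system classified correctly), which the source does not define explicitly. The class order and the labels used in the test are illustrative. Because Figure 5 shows raw counts ("without normalization"), the matrix is built from counts and normalized only on request. The one-vs-rest sensitivity and specificity named in the Abstract come from the same matrix.

```python
import numpy as np

CLASSES = ("Osstem TSIII", "Dentium Superline", "Straumann BLT")


def confusion_matrix(y_true, y_pred, n_classes=len(CLASSES)):
    """Raw (unnormalized) counts: rows = true system, columns = predicted system."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def row_normalize(cm):
    """Divide each row by its total, so the diagonal is the per-class accuracy (recall)."""
    cm = np.asarray(cm, dtype=float)
    return cm / cm.sum(axis=1, keepdims=True)


def sensitivity_specificity(cm):
    """One-vs-rest sensitivity and specificity for every class."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp   # true class k, predicted as something else
    fp = cm.sum(axis=0) - tp   # predicted k, true class is something else
    tn = cm.sum() - tp - fn - fp
    return tp / (tp + fn), tn / (tn + fp)
```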

4. Discussion

The use of dental implants for the successful rehabilitation of partially or fully edentulous patients is growing rapidly. However, the number of dental implants that cannot be identified because dental records are unavailable is also increasing. In addition, dental practitioners with relatively short clinical experience have more difficulty distinguishing between the various designs of dental implant systems because of their limited firsthand observations.

In the past, efforts have been made to identify dental implant systems from forensic and medical viewpoints. However, these studies used very small datasets or achieved accuracy too low for practical clinical application.[27,28] Sahiwal et al attempted to recognize dental implant systems from radiographic images; however, only 20 images per dental implant were used, and the dental implant system could be accurately recognized only within 10 degrees of vertical angulation.[29] Michelinakis et al also developed computer-aided recognition software to identify dental implant systems; however, it requires the characteristics of the dental implant (such as diameter, length, thread type, surface properties, and collar shape) to be recorded manually.[30]

Before conducting this study, we compared the classification performance of 3 major CNN architectures (VGG-19, Inception-v3, and ResNet-50), with and without transfer learning, to find the optimal model. Although all 3 architectures produced reliable results, the pre-trained Inception-v3 architecture performed best (AUC = 0.922, 95% CI 0.876–0.955); we therefore adopted the Inception-v3 architecture in this study.[31] The GoogLeNet Inception architecture, developed in 2014 and modified into Inception-v3 in 2016, showed excellent multiclass image classification and object detection performance in the annual ImageNet Large Scale Visual Recognition Challenge.[24,32] Consequently, this architecture has been widely adopted in various medical and dental fields for diagnostic and therapeutic applications. In particular, it has shown high performance in detecting and classifying diabetic retinopathy in retinal fundus photographs, pulmonary tuberculosis on chest radiographs, skin cancers in skin photographs, and cystic lesions on panoramic and cone-beam computed tomography images.[33–36]

Our goal was to learn discriminative features for contour-based identification and classification using a well-known and highly effective deep CNN architecture. To the best of our knowledge, this study is the first to evaluate the efficacy of a deep CNN architecture for classifying dental implant systems using panoramic and periapical radiographic images. The GoogLeNet Inception-v3 architecture provided reliable performance (AUC between 0.956 and 0.979) and outperformed the board-certified periodontist (AUC between 0.891 and 0.959). In particular, the Straumann BLT implant system had the highest accuracy on both panoramic and periapical radiographic images. We attribute this to the Straumann BLT implant system having the largest taper of the 3 systems, which makes it comparatively easy to distinguish; this is one of the major limitations of the dataset collected in this study.

We collected 10,770 cropped panoramic and periapical radiographic images from the 3 categories. This is a relatively small number of images for reliable training and testing of a deep CNN architecture. To overcome this limitation and to avoid overfitting, the training dataset was randomly augmented 10-fold, and a fine-tuning strategy with transfer learning was applied carefully.[25] Another limitation is that implants from the same system still differ in structure depending on their diameter and length, and our dataset did not account for these factors. Finally, as mentioned in the Introduction, although a large number of dental implant systems with different designs exist, only 3 systems were included in the dataset, which limits the practical use of the model.

In recent years, the efficacy of deep learning has been actively investigated on 3-dimensional datasets, and a variety of deep CNN architectures have already been specialized and optimized for 3-dimensional computed tomographic images. In contrast, 2-dimensional images (including dental panoramic and periapical radiographs) are more distorted than 3-dimensional images, which is another major limitation that impedes the clear identification and classification of dental implant systems.[37] Therefore, including additional information in the dataset (e.g., the exact diameter and length obtained from 3-dimensional implant images acquired with dental cone-beam computed tomography) may improve classification accuracy.

5. Conclusions

Deep learning is predicted to become one of the most transformative technologies for dental applications. We found that a deep CNN architecture was useful for identifying and classifying dental implant systems on panoramic and periapical radiographic images. Further studies should evaluate the effectiveness of deep CNN architectures using larger, higher-quality datasets obtained from clinical dental practice.

Acknowledgments

We would like to thank the periodontology residents (Dr Eun-Hee Jeong, Dr Bo-Ram Nam, and Dr Do-Hyung Kim) who helped prepare the dataset for this study.

Author contributions

Conceptualization: Jae-Hong Lee.

Data curation: Jae-Hong Lee, Seong-Nyum Jeong.

Formal analysis: Jae-Hong Lee, Seong-Nyum Jeong.

Funding acquisition: Jae-Hong Lee.

Investigation: Jae-Hong Lee, Seong-Nyum Jeong.

Methodology: Jae-Hong Lee, Seong-Nyum Jeong.

Project administration: Jae-Hong Lee, Seong-Nyum Jeong.

Resources: Jae-Hong Lee, Seong-Nyum Jeong.

Validation: Jae-Hong Lee, Seong-Nyum Jeong.

Writing – original draft: Jae-Hong Lee, Seong-Nyum Jeong.

Writing – review & editing: Jae-Hong Lee, Seong-Nyum Jeong.

Footnotes

Abbreviations: CI = confidence intervals, CNN = convolutional neural networks, ROC = receiver operating characteristic.

How to cite this article: Lee J-H, Jeong S-N. Efficacy of deep convolutional neural network algorithm for the identification and classification of dental implant systems, using panoramic and periapical radiographs: a pilot study. Medicine. 2020;99:26(e20787).

This work was supported by a National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIT) (No. 2019R1A2C1083978) and Wonkwang University in 2020.

The authors have no conflicts of interest to disclose.

The datasets generated during and/or analyzed during the current study are not publicly available, but are available from the corresponding author on reasonable request.

References

- [1]. Albrektsson T, Donos N; Working Group 1. Implant survival and complications. The third EAO consensus conference 2012. Clin Oral Implants Res 2012;23 Suppl 6:63–5.

- [2]. Srinivasan M, Vazquez L, Rieder P, et al. Survival rates of short (6 mm) micro-rough surface implants: a review of literature and meta-analysis. Clin Oral Implants Res 2014;25:539–45.

- [3]. Ramanauskaite A, Borges T, Almeida BL, et al. Dental implant outcomes in grafted sockets: a systematic review and meta-analysis. J Oral Maxillofac Res 2019;10:e8.1–13.

- [4]. Jung RE, Zembic A, Pjetursson BE, et al. Systematic review of the survival rate and the incidence of biological, technical, and aesthetic complications of single crowns on implants reported in longitudinal studies with a mean follow-up of 5 years. Clin Oral Implants Res 2012;23 Suppl 6:2–21.

- [5]. Lee JH, Lee JB, Kim MY, et al. Mechanical and biological complication rates of the modified lateral-screw-retained implant prosthesis in the posterior region: an alternative to the conventional Implant prosthetic system. J Adv Prosthodont 2016;8:150–7.

- [6]. Lee JH, Lee JB, Park JI, et al. Mechanical complication rates and optimal horizontal distance of the most distally positioned implant-supported single crowns in the posterior region: a study with a mean follow-up of 3 years. J Prosthodont 2015;24:517–24.

- [7]. Jung RE, Pjetursson BE, Glauser R, et al. A systematic review of the 5-year survival and complication rates of implant-supported single crowns. Clin Oral Implants Res 2008;19:119–30.

- [8]. Lee JH, Kim YT, Jeong SN, et al. Incidence and pattern of implant fractures: a long-term follow-up multicenter study. Clin Implant Dent Relat Res 2018;20:463–9.

- [9]. Derks J, Tomasi C. Peri-implant health and disease. A systematic review of current epidemiology. J Clin Periodontol 2015;42 Suppl 16:S158–71.

- [10]. Esposito M, Ardebili Y, Worthington HV. Interventions for replacing missing teeth: different types of dental implants. Cochrane Database Syst Rev 2014;7:CD003815.

- [11]. Jokstad A, Ganeles J. Systematic review of clinical and patient-reported outcomes following oral rehabilitation on dental implants with a tapered compared to a non-tapered implant design. Clin Oral Implants Res 2018;29 Suppl 16:41–54.

- [12]. Binon PP. Implants and components: entering the new millennium. Int J Oral Maxillofac Implants 2000;15:76–94.

- [13]. Jokstad A, Braegger U, Brunski JB, et al. Quality of dental implants. Int Dent J 2003;53:409–43.

- [14]. Millennium Research Group. European markets for dental implants and final abutments 2004: executive summary. Implant Dent 2004;13:193–6.

- [15]. Esposito M, Hirsch J, Lekholm U, et al. Differential diagnosis and treatment strategies for biologic complications and failing oral implants: a review of the literature. Int J Oral Maxillofac Implants 1999;14:473–90.

- [16]. Greenstein G, Cavallaro J. Failed dental implants: diagnosis, removal and survival of reimplantations. J Am Dent Assoc 2014;145:835–42.

- [17]. Dudhia R, Monsour PA, Savage NW, et al. Accuracy of angular measurements and assessment of distortion in the mandibular third molar region on panoramic radiographs. Oral Surg Oral Med Oral Pathol Oral Radiol Endod 2011;111:508–16.

- [18]. Kayal RA. Distortion of digital panoramic radiographs used for implant site assessment. J Orthod Sci 2016;5:117–20.

- [19]. Suzuki K. Overview of deep learning in medical imaging. Radiol Phys Technol 2017;10:257–73.

- [20]. Lee JG, Jun S, Cho YW, et al. Deep learning in medical imaging: general overview. Korean J Radiol 2017;18:570–84.

- [21]. Hyvarinen A, Oja E. Independent component analysis: algorithms and applications. Neural Netw 2000;13:411–30.

- [22]. Goodfellow IJ, Warde-Farley D, Mirza M, et al. Maxout networks. arXiv e-print 2013;arXiv:1302.4389.

- [23]. Shin HC, Roth HR, Gao M, et al. Deep convolutional neural networks for computer-aided detection: CNN architectures, dataset characteristics and transfer learning. IEEE Trans Med Imaging 2016;35:1285–98.

- [24]. Szegedy C, Vanhoucke V, Ioffe S, et al. Rethinking the inception architecture for computer vision. The IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2016;2818–26.

- [25]. Peng X, Sun B, Ali K, et al. Learning deep object detectors from 3D models. 2015 IEEE International Conference on Computer Vision (ICCV) 2015;1278–86.

- [26]. Chollet F. Keras. 2017.

- [27]. Berketa JW, Hirsch RS, Higgins D, et al. Radiographic recognition of dental implants as an aid to identifying the deceased. J Forensic Sci 2010;55:66–70.

- [28]. Nuzzolese E, Lusito S, Solarino B, et al. Radiographic dental implants recognition for geographic evaluation in human identification. J Forensic Odontostomatol 2008;26:8–11.

- [29]. Sahiwal IG, Woody RD, Benson BW, et al. Radiographic identification of nonthreaded endosseous dental implants. J Prosthet Dent 2002;87:552–62.

- [30]. Michelinakis G, Sharrock A, Barclay CW. Identification of dental implants through the use of Implant Recognition Software (IRS). Int Dent J 2006;56:203–8.

- [31]. Lee JH. Identification and classification of dental implant systems using various deep learning-based convolutional neural network architectures. Clin Oral Implants Res 2019;30:217.

- [32]. Szegedy C, Liu W, Jia Y, et al. Going deeper with convolutions. The IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2015;1–9.

- [33]. Gulshan V, Peng L, Coram M, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 2016;316:2402–10.

- [34]. Lakhani P, Sundaram B. Deep learning at chest radiography: automated classification of pulmonary tuberculosis by using convolutional neural networks. Radiology 2017;284:574–82.

- [35]. Esteva A, Kuprel B, Novoa RA, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017;542:115–8.

- [36]. Lee JH, Kim DH, Jeong SN. Diagnosis of cystic lesions using panoramic and cone beam computed tomographic images based on deep learning neural network. Oral Dis 2020;26:152–8.

- [37]. Riecke B, Friedrich RE, Schulze D, et al. Impact of malpositioning on panoramic radiography in implant dentistry. Clin Oral Investig 2015;19:781–90.