Quaternion methods for obtaining solutions to the problem of finding global rotations that optimally align pairs of corresponding lists of 3D spatial and/or orientation data are critically studied. The existence of multiple literatures and historical contexts is pointed out, and the algebraic solutions of the quaternion approach to the classic 3D spatial problem are emphasized. The treatment is extended to novel quaternion-based solutions to the alignment problems for 4D spatial and orientation data.

Keywords: data alignment, spatial-coordinate alignment, orientation-frame alignment, quaternions, quaternion frames, quaternion eigenvalue methods

Abstract

The general problem of finding a global rotation that transforms a given set of spatial coordinates and/or orientation frames (the ‘test’ data) into the best possible alignment with a corresponding set (the ‘reference’ data) is reviewed. For 3D point data, this ‘orthogonal Procrustes problem’ is often phrased in terms of minimizing a root-mean-square deviation (RMSD) corresponding to a Euclidean distance measure relating the two sets of matched coordinates. This article focuses on quaternion eigensystem methods that have been exploited to solve this problem for at least five decades in several different bodies of scientific literature, where they were discovered independently. While numerical methods for the eigenvalue solutions dominate much of this literature, it has long been realized that the quaternion-based RMSD optimization problem can also be solved using exact algebraic expressions based on the form of the quartic equation solution published by Cardano in 1545; focusing on these exact solutions exposes the structure of the entire eigensystem for the traditional 3D spatial-alignment problem. The structure of the less-studied orientation-data context is then explored, investigating how quaternion methods can be extended to solve the corresponding 3D quaternion orientation-frame alignment (QFA) problem, noting the interesting equivalence of this problem to the rotation-averaging problem, which also has been the subject of independent literature threads. The article concludes with a brief discussion of the combined 3D translation–orientation data alignment problem. Appendices are devoted to a tutorial on quaternion frames, a related quaternion technique for extracting quaternions from rotation matrices and a review of quaternion rotation-averaging methods relevant to the orientation-frame alignment problem. 
The supporting information covers novel extensions of quaternion methods to the 4D Euclidean spatial-coordinate alignment and 4D orientation-frame alignment problems, some miscellaneous topics, and additional details of the quartic algebraic eigenvalue problem.

1. Context

Aligning matched sets of spatial point data is a universal problem that occurs in a wide variety of applications. In addition, generic objects such as protein residues, parts of composite object models, satellites, cameras, or camera-calibrating reference objects are not only located at points in three-dimensional space, but may also need 3D orientation frames to describe them effectively for certain applications. We are therefore led to consider both the Euclidean spatial-coordinate alignment problem and the orientation-frame alignment problem on the same footing.

Our purpose in this article is to review, and in some cases to refine, clarify and extend, the possible quaternion-based approaches to the optimal alignment problem for matched sets of translated and/or rotated objects in 3D space, which in its most generic sense could be called the ‘generalized orthogonal Procrustes problem’ (Golub & van Loan, 1983 ▸). We also devote some attention to identifying the surprising breadth of domains and literature in which the various approaches, particularly quaternion-based methods, have appeared. The number of times that quaternion-related methods have been described independently, without cross-disciplinary references, is striking; it exposes the challenges that scientists, including the author, have faced in coping with the wide dispersion of both historical and modern scientific literature relevant to these subjects.

We present our study on two levels. The first level, the present main article, is devoted to a description of the 3D spatial and orientation alignment problems, emphasizing quaternion methods, with an historical perspective and a moderate level of technical detail that strives to be accessible. The second level, comprising the supporting information, treats novel extensions of the quaternion method to the 4D spatial and orientation alignment problems, along with many other technical topics, including analysis of algebraic quartic eigenvalue solutions and numerical studies of the applicability of certain common approximations and methods.

In the following, we first review the diverse bodies of literature regarding the extraction of 3D rotations that optimally align matched pairs of Euclidean point data sets. It is important for us to remark that we have repeatedly become aware of additional literature in the course of this work and it is entirely possible that other worthy references have been overlooked; if so, we apologize for any oversights and hope that the literature that we have found to review will provide an adequate context for the interested reader. We then introduce our own preferred version of the quaternion approach to the spatial-alignment problem, often described as the root-mean-square-deviation (RMSD) minimization problem, and we will adopt that terminology when convenient; our intent is to consolidate a range of distinct variants in the literature into one uniform treatment, and, given the wide variations in symbolic notation and terminology, here we will adopt terms and conventions that work well for us personally. Following a technical introduction to quaternions, we treat the quaternion-based 3D spatial-alignment problem itself. Next we introduce the quaternion approach to the 3D orientation-frame alignment (QFA) problem in a way that parallels the 3D spatial problem, and note its equivalence to quaternion-frame averaging methods. We conclude with a brief analysis of the 6-degree-of-freedom (6DOF) problem, combining the 3D spatial and 3D orientation-frame measures. Appendices include treatments of the basics of quaternion orientation frames, an elegant method that extracts a quaternion from a numerical 3D rotation matrix and the generalization of that method to compute averages of rotations.

2. Summary of spatial-alignment problems, known solutions and historical contexts

2.1. The problem, standard solutions and the quaternion method

The fundamental problem we will be concerned with arises when we are given a well behaved D × D matrix E and we wish to find the optimal D-dimensional proper orthogonal matrix $R_{\mathrm{opt}}$ that maximizes the measure $\operatorname{tr}(R \cdot E)$. This is equivalent to the RMSD problem, which seeks a global rotation R that rotates an ordered set of point test data X in such a way as to minimize the squared Euclidean differences relative to a matched reference set Y. We will find below that E corresponds to the cross-covariance matrix of the pair (X, Y) of N columns of D-dimensional vectors, namely $E = X \cdot Y^{\mathrm{T}}$, though we will look at cases where E could have almost any origin.
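The stated equivalence between the RMSD problem and the trace maximization can be made explicit with a short worked expansion. This is a sketch under the assumptions that the columns $x_k$ of X and $y_k$ of Y are matched and that R is orthogonal, so that $\|R\,x_k\| = \|x_k\|$; the index k and the symbol "const" are introduced here for illustration only.

```latex
\begin{aligned}
\sum_{k=1}^{N} \bigl\| R\,x_k - y_k \bigr\|^{2}
  &= \sum_{k=1}^{N} \bigl( \|x_k\|^{2} + \|y_k\|^{2} \bigr)
     \;-\; 2 \sum_{k=1}^{N} y_k \cdot (R\,x_k) \\
  &= \text{const} \;-\; 2\,\operatorname{tr}\!\Bigl( R \cdot \sum_{k=1}^{N} x_k\, y_k^{\mathrm{T}} \Bigr)
   \;=\; \text{const} \;-\; 2\,\operatorname{tr}( R \cdot E ),
  \qquad E = X \cdot Y^{\mathrm{T}} .
\end{aligned}
```

Since the first term does not depend on R, minimizing the summed squared distances is the same as maximizing $\operatorname{tr}(R \cdot E)$.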

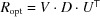

One solution to this problem in any dimension D uses the decomposition of the general matrix E into an orthogonal matrix U and a symmetric matrix S that takes the form $E = U \cdot S = U \cdot (E^{\mathrm{T}} \cdot E)^{1/2}$, giving $R_{\mathrm{opt}} = U^{-1} = (E^{\mathrm{T}} \cdot E)^{1/2} \cdot E^{-1}$; note that there exist several equivalent forms [see, e.g., Green (1952 ▸) and Horn et al. (1988 ▸)]. General solutions may also be found using singular-value-decomposition (SVD) methods, starting with the decomposition $E = U \cdot S \cdot V^{\mathrm{T}}$, where S is now diagonal and U and V are orthogonal matrices, to give the result $R_{\mathrm{opt}} = V \cdot D \cdot U^{\mathrm{T}}$, where D is the identity matrix up to a possible sign in one element [see, e.g., Kabsch (1976 ▸, 1978 ▸), Golub & van Loan (1983 ▸) and Markley (1988 ▸)].
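The SVD route can be illustrated with a minimal NumPy sketch. It assumes the data are stored as D × N arrays of matched, already centered points and that E = X · Yᵀ; the function name `optimal_rotation_svd` and the synthetic test data are hypothetical, chosen only for this illustration.

```python
import numpy as np

def optimal_rotation_svd(X, Y):
    """Return the proper rotation R maximizing tr(R @ E), with E = X @ Y.T.

    X, Y: arrays of shape (D, N) holding N matched D-dimensional points
    (test and reference data); both are assumed to be centered already.
    """
    E = X @ Y.T                      # D x D cross-covariance matrix
    U, s, Vt = np.linalg.svd(E)      # E = U @ diag(s) @ Vt
    V = Vt.T
    # D_sign is the identity except for a possible sign flip in the last
    # entry, which forces det(R) = +1 (a rotation, not a reflection).
    D_sign = np.eye(E.shape[0])
    D_sign[-1, -1] = np.sign(np.linalg.det(V @ U.T))
    return V @ D_sign @ U.T

# Synthetic, noise-free check: rotate random points by a known rotation.
rng = np.random.default_rng(0)
X = rng.standard_normal((3, 10))
R_true, _ = np.linalg.qr(rng.standard_normal((3, 3)))
if np.linalg.det(R_true) < 0:        # make the test matrix a proper rotation
    R_true[:, 0] *= -1
Y = R_true @ X

R = optimal_rotation_svd(X, Y)
print(np.allclose(R, R_true))
```

With noisy data the recovered matrix is no longer exactly the generating rotation, but it remains the maximizer of tr(R · E) over proper rotations.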

In addition to these general methods based on traditional linear algebra approaches, a significant literature exists for three dimensions that exploits the relationship between 3D rotation matrices and quaternions, and rephrases the task of finding $R_{\mathrm{opt}}$ as a quaternion eigensystem problem. This approach notes that, using the quadratic quaternion form R(q) for the rotation matrix, one can rewrite $\operatorname{tr}(R(q) \cdot E) = q \cdot M(E) \cdot q$, where the profile matrix M(E) is a traceless, symmetric 4 × 4 matrix consisting of linear combinations of the elements of the 3 × 3 matrix E. Finding the largest eigenvalue $\epsilon_{\mathrm{opt}}$ of M(E) determines the optimal quaternion eigenvector $q_{\mathrm{opt}}$ and thus the solution $R_{\mathrm{opt}} = R(q_{\mathrm{opt}})$. The quaternion framework will be our main topic here.
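The eigensystem formulation can be sketched numerically as follows. The explicit entries of the profile matrix are not given in this section; the ones used below are an assumption, obtained by expanding tr(R(q) · E) with the standard quadratic form of R(q) and E = X · Yᵀ. The function names are hypothetical.

```python
import numpy as np

def rotation_from_quaternion(q):
    """Standard quadratic form of the 3D rotation matrix R(q) for unit q."""
    q0, q1, q2, q3 = q
    return np.array([
        [q0*q0 + q1*q1 - q2*q2 - q3*q3, 2*(q1*q2 - q0*q3), 2*(q1*q3 + q0*q2)],
        [2*(q1*q2 + q0*q3), q0*q0 - q1*q1 + q2*q2 - q3*q3, 2*(q2*q3 - q0*q1)],
        [2*(q1*q3 - q0*q2), 2*(q2*q3 + q0*q1), q0*q0 - q1*q1 - q2*q2 + q3*q3],
    ])

def profile_matrix(E):
    """Traceless symmetric 4x4 matrix M(E) with q.M.q = tr(R(q) @ E).

    Assumed form: derived by collecting the coefficients of q_i q_j in
    tr(R(q) @ E); it is not quoted from the text above.
    """
    (Exx, Exy, Exz), (Eyx, Eyy, Eyz), (Ezx, Ezy, Ezz) = E
    return np.array([
        [Exx + Eyy + Ezz, Eyz - Ezy, Ezx - Exz, Exy - Eyx],
        [Eyz - Ezy, Exx - Eyy - Ezz, Exy + Eyx, Ezx + Exz],
        [Ezx - Exz, Exy + Eyx, -Exx + Eyy - Ezz, Eyz + Ezy],
        [Exy - Eyx, Ezx + Exz, Eyz + Ezy, -Exx - Eyy + Ezz],
    ])

def optimal_rotation_quaternion(X, Y):
    """Largest-eigenvalue solution for matched, centered 3 x N data."""
    M = profile_matrix(X @ Y.T)
    evals, evecs = np.linalg.eigh(M)     # eigenvalues in ascending order
    q_opt = evecs[:, -1]                 # unit eigenvector of the largest one
    return rotation_from_quaternion(q_opt), q_opt, evals[-1]

# Synthetic, noise-free check against a known rotation.
rng = np.random.default_rng(0)
X = rng.standard_normal((3, 12))
q_true = np.array([0.5, -0.5, 0.5, 0.5])          # already unit length
R_true = rotation_from_quaternion(q_true)
Y = R_true @ X
R_opt, q_opt, eps_opt = optimal_rotation_quaternion(X, Y)
print(np.allclose(R_opt, R_true))
```

The sign of the eigenvector returned by the solver is arbitrary, which is harmless here because q and −q give the same rotation matrix.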

2.2. Historical literature overview

Although our focus is the quaternion eigensystem context, we first note that one of the original approaches to the RMSD task exploited the singular-value decomposition directly to obtain an optimal rotation matrix. This solution appears to date at least from 1966 in Schönemann’s thesis (Schönemann, 1966 ▸) and possibly Cliff (1966 ▸) later in the same journal issue; Schönemann’s work is chosen for citation, for example, in the earliest editions of Golub & van Loan (1983 ▸). Applications of the SVD to alignment in the aerospace literature appear, for example, in the context of Wahba’s problem (Wikipedia, 2018b ▸; Wahba, 1965 ▸) and are used explicitly, e.g., in Markley (1988 ▸), while the introduction of the SVD for the alignment problem in molecular chemistry is generally attributed to Kabsch (Wikipedia, 2018a ▸; Kabsch, 1976 ▸), and in machine vision Arun et al. (1987 ▸) is often cited.

We believe that the quaternion eigenvalue approach itself was first noticed around 1968 by Davenport (Davenport, 1968 ▸) in the context of Wahba’s problem, rediscovered in 1983 by Hebert and Faugeras (Hebert, 1983 ▸; Faugeras & Hebert, 1983 ▸, 1986 ▸) in the context of machine vision, and then found independently a third time in 1986 by Horn (Horn, 1987 ▸).

An alternative quaternion-free approach by Horn et al. (1988 ▸) with the optimal rotation of the form $R_{\mathrm{opt}} = (E^{\mathrm{T}} \cdot E)^{1/2} \cdot E^{-1}$ appeared in 1988, but this basic form was apparently known elsewhere as early as 1952 (Green, 1952 ▸; Gibson, 1960 ▸).

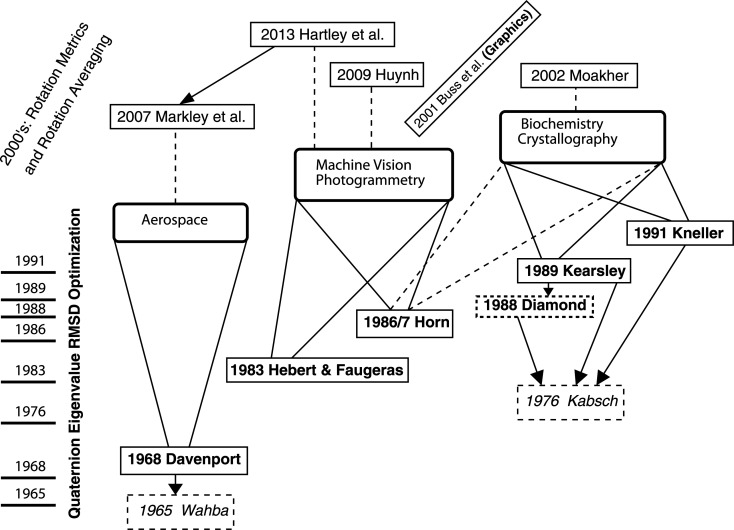

Much of the recent activity has occurred in the context of the molecular alignment problem, starting from a basic framework put forth by Kabsch (1976 ▸, 1978 ▸). So far as we can determine, the matrix eigenvalue approach to molecular alignment was introduced in 1988 without actually mentioning quaternions by name in Diamond (1988 ▸) and refined to specifically incorporate quaternion methods in 1989 by Kearsley (1989 ▸). In 1991 Kneller (Kneller, 1991 ▸) independently described a version of the quaternion-eigenvalue-based approach that is widely cited as well. A concise and useful review can be found in Flower (1999 ▸), in which the contributions of Schönemann, Faugeras and Hebert, Horn, Diamond, and Kearsley are acknowledged and all cited in the same place. A graphical summary of the discovery chronology in various domains is given in Fig. 1 ▸. Most of these treatments mention using numerical methods to find the optimal eigenvalue, though several references, starting with Horn (1987 ▸), point out that 16th-century algebraic methods for solving the quartic polynomial characteristic equation, discussed in the next section, could also be used to determine the eigenvalues. In our treatment we will study the explicit form of these algebraic solutions for the 3D problem (and also for 4D in the supporting information), taking advantage of several threads of the literature.

Figure 1.

The quaternion eigensystem method for computing the optimal rotation matching two spatial data sets was discovered independently and published without cross-references in at least three distinct literatures. Downward arrows point to the introduction of the abstract problem and upward rays indicate domains of publications specifically citing the quaternion method. Horn eventually appeared routinely in the crystallography citations, and reviews such as that by Flower (1999 ▸) introduced multiple cross-field citations. Several fields have included activity on quaternion-related rotation metrics and rotation averaging with varying degrees of cross-field awareness.

2.3. Historical notes on the quartic

The actual solution to the quartic equation, and thus the solution of the characteristic polynomial of the 4D eigensystem of interest to us, was first published in 1545 by Gerolamo Cardano (Wikipedia, 2019 ▸) in his book Ars Magna. The intellectual history of this fact is controversial and narrated with varying emphasis in diverse sources. It seems generally agreed upon that Cardano’s student Lodovico Ferrari was the first to discover the basic method for solving the quartic in 1540, but his technique was incomplete as it only reduced the problem to the cubic equation, for which no solution was publicly known at that time, and that apparently prevented him from publishing it. The complication appears to be that Cardano had actually learned of a method for solving the cubic already in 1539 from Niccolò Fontana Tartaglia (legendarily in the form of a poem), but had been sworn to secrecy, and so could not reveal the final explicit step needed to complete Ferrari’s implicit solution. Where it gets controversial is that at some point between 1539 and 1545, Cardano learned that Scipione del Ferro had found the same cubic solution as the one of Tartaglia that he had sworn not to reveal, and furthermore that del Ferro had discovered his solution before Tartaglia did. Cardano interpreted that fact as releasing him from his oath of secrecy (which Tartaglia did not appreciate), allowing him to publish the complete solution to the quartic, incorporating the cubic solution into Ferrari’s result. Sources claiming that Cardano ‘stole’ Ferrari’s solution may perhaps be exaggerated, since Ferrari did not have access to the cubic equations and Cardano did not conceal his sources; exactly who ‘solved’ the quartic is thus philosophically complicated, but Cardano does seem to be the one who combined the multiple threads needed to express the equations as a single complete formula.

Other interesting observations were made later, for example by Descartes in 1637 (Descartes, 1637 ▸) and in 1733 by Euler (Euler, 1733 ▸; Bell, 2008 ▸). For further descriptions, one may consult, e.g., Abramowitz & Stegun (1970 ▸) and Boyer & Merzbach (1991 ▸), as well as the narratives in Weisstein (2019a ▸,b ▸). Additional amusing pedagogical investigations of the historical solutions may be found in several expositions by Nickalls (1993 ▸, 2009 ▸).

2.4. Further literature

A very informative treatment of the features of the quaternion eigenvalue solutions was given by Coutsias, Seok and Dill in 2004, and expanded in 2019 (Coutsias et al., 2004 ▸; Coutsias & Wester, 2019 ▸). Coutsias et al. not only provide a thorough review of the quaternion RMSD method, but also derive the complete relationship between the linear algebra of the SVD method and the quaternion eigenvalue system; furthermore, they exhaustively enumerate the special cases involving mirror geometries and degenerate eigenvalues, which appear rarely but must occasionally be dealt with. Efficiency is also of potential interest: Theobald (2005 ▸) and Liu et al. (2010 ▸) argue that, among the many variants of numerical methods that have been used to compute the optimal quaternion eigenvalues, Horn’s original proposal to apply Newton’s method directly to the characteristic equations of the relevant eigenvalue systems may well be the best approach.

There is also a rich literature dealing with distance measures among representations of rotation frames themselves, some dealing directly with the properties of distances computed with rotation matrices or quaternions, e.g. Huynh (2009 ▸), and others combining discussion of the distance measures with associated applications such as rotation averaging or finding ‘rotational centers of mass’, e.g. Brown & Worsey (1992 ▸), Park & Ravani (1997 ▸), Buss & Fillmore (2001 ▸), Moakher (2002 ▸), Markley et al. (2007 ▸), and Hartley et al. (2013 ▸). The specific computations explored below in Section 7 on optimal alignment of matched pairs of orientation frames make extensive use of the quaternion-based and rotation-based measures discussed in these treatments. In the appendices, we review the details of some of these orientation-frame-based applications.

3. Introduction

We explore the problem of finding global rotations that optimally align pairs of corresponding lists of spatial and/or orientation data. This issue is significant in diverse application domains. Among these are aligning spacecraft (see, e.g., Wahba, 1965 ▸; Davenport, 1968 ▸; Markley, 1988 ▸; and Markley & Mortari, 2000 ▸), obtaining correspondence of registration points in 3D model matching (see, e.g., Faugeras & Hebert, 1983 ▸, 1986 ▸), matching structures in aerial imagery (see, e.g., Horn, 1987 ▸; Horn et al., 1988 ▸; Huang et al., 1986 ▸; Arun et al., 1987 ▸; Umeyama, 1991 ▸; and Zhang, 2000 ▸), and alignment of matched molecular and biochemical structures (see, e.g., Kabsch, 1976 ▸, 1978 ▸; McLachlan, 1982 ▸; Lesk, 1986 ▸; Diamond, 1988 ▸; Kearsley, 1989 ▸, 1990 ▸; Kneller, 1991 ▸; Coutsias et al., 2004 ▸; Theobald, 2005 ▸; Liu et al., 2010 ▸; and Coutsias & Wester, 2019 ▸). A closely related task is the alignment of multiple sets of 3D range data, for example in digital-heritage applications (Levoy et al., 2000 ▸); the widely used iterative closest point (ICP) algorithm [see, e.g., Chen & Medioni (1991 ▸), Besl & McKay (1992 ▸) and Bergevin et al. (1996 ▸), as well as Rusinkiewicz & Levoy (2001 ▸) and Nüchter et al. (2007 ▸)] explicitly incorporates standard alignment methods in individual steps with known correspondences.

We note in particular the several alternative approaches of Schönemann (1966 ▸), Faugeras & Hebert (1983 ▸), Horn (1987 ▸) and Horn et al. (1988 ▸) that in principle produce the same optimal global rotation to solve a given alignment problem. While the SVD and $(E^{\mathrm{T}} \cdot E)^{1/2} \cdot E^{-1}$ methods apply to any dimension, here we will critically examine the quaternion eigensystem decomposition approach that applies only to the 3D and 4D spatial-coordinate alignment problems, along with the extensions to the 3D and 4D orientation-frame alignment problems. Starting from the quartic algebraic equations for the quaternion eigensystem arising in our optimization problem, we direct attention to the elegant exact algebraic forms of the eigenvalue solutions appropriate for these applications. (For brevity, the more complicated 4D treatment is deferred to the supporting information.)

Our extension of the quaternion approach to orientation data exploits the fact that collections of 3D orientation frames can themselves be expressed as quaternions, e.g. amino-acid 3D orientation frames written as quaternions (see Hanson & Thakur, 2012 ▸), and we will refer to the corresponding ‘quaternion-frame alignment’ task as the QFA problem. Various proximity measures for such orientation data have been explored in the literature (see, e.g., Park & Ravani, 1997 ▸; Moakher, 2002 ▸; Huynh, 2009 ▸; and Huggins, 2014a ▸), and the general consensus is that the most rigorous measure minimizes the sums of squares of geodesic arc lengths between pairs of quaternions. This ideal QFA proximity measure is highly nonlinear compared to the analogous spatial RMSD measure, but fortunately there is an often-justifiable linearization, the chord angular distance measure. We present several alternative solutions exploiting this approximation that closely parallel our spatial RMSD formulation, and point out the relationship to the rotation-averaging problem.

In addition, we analyze the problem of optimally aligning combined 3D spatial and quaternion 3D-frame-triad data, a 6DOF task that is relevant to studying molecules with composite structure as well as to some gaming and robotics contexts. Such rotational–translational measures have appeared in both the computer vision and the molecular entropy literature [see, e.g., the dual-quaternion approach of Walker et al. (1991 ▸) as well as Huggins (2014b ▸) and Fogolari et al. (2016 ▸)]; after some confusion, it has been recognized that the spatial and rotational measures are dimensionally incompatible, and either they must be optimized independently, or an arbitrary context-dependent scaling parameter with the dimension of length must appear in any combined measure for the RMSD+QFA problem.

In the following, we organize our thoughts by first summarizing the fundamentals of quaternions, which will be our main computational tool. We next introduce the measures that underlie the general spatial alignment problem, then restrict our attention to the quaternion approach to the 3D problem, emphasizing a class of exact algebraic solutions that can be used as an alternative to the traditional numerical methods. Our quaternion approach to the 3D orientation-frame triad alignment problem is presented next, along with a discussion of the combined spatial–rotational problem. Appendices provide an alternative formulation of the 3D RMSD optimization equations, a tutorial on the quaternion orientation-frame methodology, and a summary of the method of Bar-Itzhack (2000 ▸) for obtaining the corresponding quaternion from a numerical 3D rotation matrix, along with a treatment of the closely related quaternion-based rotation averaging problem.

In the supporting information, we extend all of our 3D results to 4D space, exploiting quaternion pairs to formulate the 4D spatial-coordinate RMSD alignment and 4D orientation-based QFA methods. We expose the relationship between these quaternion methods and the singular-value decomposition, and extend the 3D Bar-Itzhack approach to 4D, showing how to find the pair of quaternions corresponding to any numerical 4D rotation matrix. Other sections of the supporting information explore properties of the RMSD problem for 2D data and evaluate the accuracy of our 3D orientation-frame alignment approximations, as well as studying and evaluating the properties of combined measures for aligning spatial-coordinate and orientation-frame data in 3D. An appendix is devoted to further details of the quartic equations and forms of the algebraic solutions related to our eigenvalue problems.

4. Foundations of quaternions

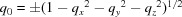

For the purposes of this paper, we take a quaternion to be a point q = (q

0, q

1, q

2, q

3) = (q

0, q) in 4D Euclidean space with unit norm, q · q = 1, and so geometrically it is a point on the unit 3-sphere S

3 [see, e.g., Hanson (2006 ▸) for further details about quaternions]. The first term, q

0, plays the role of a real number and the last three terms, denoted as a 3D vector q, play the role of a generalized imaginary number, and so are treated differently from the first: in particular the conjugation operation is taken to be  . Quaternions possess a multiplication operation denoted by

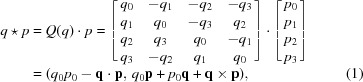

. Quaternions possess a multiplication operation denoted by  and defined as follows:

and defined as follows:

|

where the orthonormal matrix Q(q) expresses a form of quaternion multiplication that can be useful. Note that the orthonormality of Q(q) means that quaternion multiplication of p by q literally produces a rotation of p in 4D Euclidean space.
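As a concrete check of these statements, the following NumPy sketch implements the product in its scalar/vector form, assuming the standard Hamilton convention $(q_0 p_0 - \mathbf{q}\cdot\mathbf{p},\; q_0\mathbf{p} + p_0\mathbf{q} + \mathbf{q}\times\mathbf{p})$, together with the corresponding 4 × 4 matrix Q(q). The helper names `qmul` and `Q_matrix` are hypothetical.

```python
import numpy as np

def qmul(q, p):
    """Quaternion product in scalar/vector form (order matters)."""
    q0, qv = q[0], q[1:]
    p0, pv = p[0], p[1:]
    return np.concatenate(([q0 * p0 - qv @ pv],
                           q0 * pv + p0 * qv + np.cross(qv, pv)))

def Q_matrix(q):
    """4x4 matrix such that Q_matrix(q) @ p equals qmul(q, p)."""
    q0, q1, q2, q3 = q
    return np.array([
        [q0, -q1, -q2, -q3],
        [q1,  q0, -q3,  q2],
        [q2,  q3,  q0, -q1],
        [q3, -q2,  q1,  q0],
    ])

rng = np.random.default_rng(2)
q = rng.standard_normal(4)
q /= np.linalg.norm(q)               # unit quaternion
p = rng.standard_normal(4)           # arbitrary 4-vector

# The matrix form reproduces the product, and Q(q) is orthonormal for unit q,
# so multiplying by q rotates p in 4D without changing its length.
print(np.allclose(Q_matrix(q) @ p, qmul(q, p)))
print(np.allclose(Q_matrix(q).T @ Q_matrix(q), np.eye(4)))
print(np.isclose(np.linalg.norm(qmul(q, p)), np.linalg.norm(p)))
```

For example, multiplying the unit 'imaginary' quaternions (0, 1, 0, 0) and (0, 0, 1, 0) in that order gives (0, 0, 0, 1), while the reversed order gives (0, 0, 0, −1).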

Choosing exactly one of the three imaginary components in both q and p to be nonzero gives back the classic complex algebra $(q_0 + iq_1)(p_0 + ip_1) = (q_0 p_0 - q_1 p_1) + i(q_0 p_1 + p_0 q_1)$, so there are three copies of the complex numbers embedded in the quaternion algebra; the difference is that in general the final term $\mathbf{q} \times \mathbf{p}$ changes sign if one reverses the order, making the quaternion product order-dependent, unlike the complex product. Nevertheless, like complex numbers, the quaternion algebra satisfies the non-trivial ‘multiplicative norm’ relation

$$
\|q\|^2 \, \|p\|^2 = \|q \star p\|^2, \tag{2}
$$

where $\|q\|^2 = q \cdot q = \operatorname{Re}(q \star \bar{q})$, i.e. quaternions are one of the four possible Hurwitz algebras (real, complex, quaternion and octonion).

Quaternion triple products obey generalizations of the 3D vector identities A · (B × C) = B · (C × A) = C · (A × B), along with A × B = −B × A. The corresponding quaternion identities, which we will need in Section 7, are

$$
r \cdot (q \star p) = q \cdot (r \star \bar{p}) = \bar{r} \cdot (\bar{p} \star \bar{q}), \tag{3}
$$

where the complex-conjugate entries are the natural consequences of the sign changes occurring only in the 3D part.

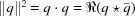

It can be shown that quadratically conjugating a vector $\mathbf{x} = (x, y, z)$, written as a purely ‘imaginary’ quaternion $(0, \mathbf{x})$ (with only a 3D part), by quaternion multiplication is isomorphic to the construction of a 3D Euclidean rotation R(q) generating all possible elements of the special orthogonal group SO(3). If we compute

$$
q \star (c, \mathbf{x}) \star \bar{q} = (c, R(q) \cdot \mathbf{x}), \tag{4}
$$

we see that only the purely imaginary part is affected, whether or not the arbitrary real constant c = 0. The result of collecting coefficients of the vector term is a proper orthonormal 3D rotation matrix quadratic in the quaternion elements that takes the form

$$
R(q) =
\begin{bmatrix}
q_0^2 + q_1^2 - q_2^2 - q_3^2 & 2 q_1 q_2 - 2 q_0 q_3 & 2 q_1 q_3 + 2 q_0 q_2 \\
2 q_1 q_2 + 2 q_0 q_3 & q_0^2 - q_1^2 + q_2^2 - q_3^2 & 2 q_2 q_3 - 2 q_0 q_1 \\
2 q_1 q_3 - 2 q_0 q_2 & 2 q_2 q_3 + 2 q_0 q_1 & q_0^2 - q_1^2 - q_2^2 + q_3^2
\end{bmatrix}
\tag{5}
$$

with determinant $\det R(q) = (q \cdot q)^3 = +1$. The formula for R(q) is technically a two-to-one mapping from quaternion space to the 3D rotation group because R(q) = R(−q); changing the sign of the quaternion preserves the rotation matrix. Note also that the identity quaternion $q_{\mathrm{ID}} = (1, 0, 0, 0) = q \star \bar{q}$ corresponds to the identity rotation matrix, as does $-q_{\mathrm{ID}} = (-1, 0, 0, 0)$. The 3 × 3 matrix R(q) is fundamental not only to the quaternion formulation of the spatial RMSD alignment problem, but will also be essential to the QFA orientation-frame problem because the columns of R(q) are exactly the needed quaternion representation of the frame triad describing the orientation of a body in 3D space, i.e. the columns are the vectors of the frame’s local x, y and z axes relative to an initial identity frame.
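The properties claimed for R(q) can be checked numerically. The sketch below assumes the standard quadratic form of the rotation matrix and the Hamilton product convention; `qmul`, `qconj` and `rotation_matrix` are hypothetical helper names, and the constant c = 0.7 is an arbitrary choice.

```python
import numpy as np

def qmul(q, p):
    """Quaternion product (q0*p0 - q.p, q0*p + p0*q + q x p)."""
    q0, qv, p0, pv = q[0], q[1:], p[0], p[1:]
    return np.concatenate(([q0 * p0 - qv @ pv],
                           q0 * pv + p0 * qv + np.cross(qv, pv)))

def qconj(q):
    """Conjugate: flip the sign of the 3D part only."""
    return np.concatenate(([q[0]], -q[1:]))

def rotation_matrix(q):
    """Quadratic form R(q) of the 3D rotation matrix for a unit quaternion."""
    q0, q1, q2, q3 = q
    return np.array([
        [q0*q0 + q1*q1 - q2*q2 - q3*q3, 2*(q1*q2 - q0*q3), 2*(q1*q3 + q0*q2)],
        [2*(q1*q2 + q0*q3), q0*q0 - q1*q1 + q2*q2 - q3*q3, 2*(q2*q3 - q0*q1)],
        [2*(q1*q3 - q0*q2), 2*(q2*q3 + q0*q1), q0*q0 - q1*q1 - q2*q2 + q3*q3],
    ])

rng = np.random.default_rng(1)
q = rng.standard_normal(4)
q /= np.linalg.norm(q)                     # unit quaternion
x = rng.standard_normal(3)                 # arbitrary 3D vector
c = 0.7                                    # arbitrary real part

R = rotation_matrix(q)
conjugated = qmul(qmul(q, np.concatenate(([c], x))), qconj(q))

print(np.allclose(conjugated, np.concatenate(([c], R @ x))))  # conjugation = rotation
print(np.allclose(R @ R.T, np.eye(3)), np.isclose(np.linalg.det(R), 1.0))
print(np.allclose(rotation_matrix(-q), R))                    # two-to-one mapping
```

The columns `R[:, 0]`, `R[:, 1]` and `R[:, 2]` are then the local x, y and z axes of the frame described by q.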

Multiplying a quaternion p by the quaternion q to get a new quaternion $p' = q \star p$ simply rotates the 3D frame corresponding to p by the matrix equation (5) written in terms of q. This has non-trivial implications for 3D rotation matrices, for which quaternion multiplication corresponds exactly to multiplication of two independent 3 × 3 orthogonal rotation matrices, and we find that

$$
R(q \star p) = R(q) \cdot R(p). \tag{6}
$$

This collapse of repeated rotation matrices to a single rotation matrix with multiplied quaternion arguments can be continued indefinitely.
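The composition property can be verified numerically in a few lines. This is a sketch assuming the same standard conventions for the quaternion product and for R(q); the helper names and the random test quaternions are hypothetical.

```python
import numpy as np

def qmul(q, p):
    """Quaternion product (q0*p0 - q.p, q0*p + p0*q + q x p)."""
    q0, qv, p0, pv = q[0], q[1:], p[0], p[1:]
    return np.concatenate(([q0 * p0 - qv @ pv],
                           q0 * pv + p0 * qv + np.cross(qv, pv)))

def rotation_matrix(q):
    """Quadratic form R(q) of the 3D rotation matrix for a unit quaternion."""
    q0, q1, q2, q3 = q
    return np.array([
        [q0*q0 + q1*q1 - q2*q2 - q3*q3, 2*(q1*q2 - q0*q3), 2*(q1*q3 + q0*q2)],
        [2*(q1*q2 + q0*q3), q0*q0 - q1*q1 + q2*q2 - q3*q3, 2*(q2*q3 - q0*q1)],
        [2*(q1*q3 - q0*q2), 2*(q2*q3 + q0*q1), q0*q0 - q1*q1 - q2*q2 + q3*q3],
    ])

def random_unit_quaternion(rng):
    q = rng.standard_normal(4)
    return q / np.linalg.norm(q)

rng = np.random.default_rng(3)
q, p, r = (random_unit_quaternion(rng) for _ in range(3))

# One product of quaternions corresponds to one product of rotation matrices,
# and the collapse extends to longer chains.
two_fold = np.allclose(rotation_matrix(qmul(q, p)),
                       rotation_matrix(q) @ rotation_matrix(p))
three_fold = np.allclose(rotation_matrix(qmul(qmul(q, p), r)),
                         rotation_matrix(q) @ rotation_matrix(p) @ rotation_matrix(r))
print(two_fold, three_fold)
```

In practice this means a long chain of rotations can be accumulated as a single quaternion and converted to a matrix only once at the end.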

If we choose the following specific 3-variable parameterization of the quaternion q preserving q · q = 1,

$$
q = \left( \cos(\theta/2),\; \hat{n}_1 \sin(\theta/2),\; \hat{n}_2 \sin(\theta/2),\; \hat{n}_3 \sin(\theta/2) \right) \tag{7}
$$

(with $\hat{\mathbf{n}} \cdot \hat{\mathbf{n}} = 1$), then $R(\theta, \hat{\mathbf{n}})$ is precisely the ‘axis-angle’ 3D spatial rotation by an angle θ leaving the direction $\hat{\mathbf{n}}$ fixed, so $\hat{\mathbf{n}}$ is the lone real eigenvector of R(q).
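A short numerical illustration of the axis-angle parameterization follows. It assumes the half-angle form q = (cos(θ/2), n̂ sin(θ/2)) and the standard quadratic form of R(q); the function names and the chosen test values are hypothetical.

```python
import numpy as np

def axis_angle_quaternion(theta, n):
    """Unit quaternion (cos(theta/2), n_hat * sin(theta/2)) for axis n."""
    n_hat = np.asarray(n, dtype=float)
    n_hat = n_hat / np.linalg.norm(n_hat)      # enforce n_hat . n_hat = 1
    return np.concatenate(([np.cos(theta / 2)], np.sin(theta / 2) * n_hat))

def rotation_matrix(q):
    """Quadratic form R(q) of the 3D rotation matrix for a unit quaternion."""
    q0, q1, q2, q3 = q
    return np.array([
        [q0*q0 + q1*q1 - q2*q2 - q3*q3, 2*(q1*q2 - q0*q3), 2*(q1*q3 + q0*q2)],
        [2*(q1*q2 + q0*q3), q0*q0 - q1*q1 + q2*q2 - q3*q3, 2*(q2*q3 - q0*q1)],
        [2*(q1*q3 - q0*q2), 2*(q2*q3 + q0*q1), q0*q0 - q1*q1 - q2*q2 + q3*q3],
    ])

theta = 0.8
n = np.array([1.0, 2.0, 2.0]) / 3.0            # unit axis
R = rotation_matrix(axis_angle_quaternion(theta, n))

print(np.allclose(R @ n, n))                               # axis is left fixed
print(np.isclose(np.trace(R), 1 + 2 * np.cos(theta)))      # rotation angle is theta

# A quarter turn about the z axis maps the x axis onto the y axis.
Rz = rotation_matrix(axis_angle_quaternion(np.pi / 2, [0, 0, 1]))
print(np.allclose(Rz @ [1, 0, 0], [0, 1, 0]))
```

The trace check uses the general fact that a 3D rotation by θ has eigenvalues 1, e^{iθ} and e^{−iθ}, so only the eigenvector along the axis is real for generic θ.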

4.1. The slerp

Relationships among quaternions can be studied using the slerp, or ‘spherical linear interpolation’ (Shoemake, 1985 ▸; Jupp & Kent, 1987 ▸), which smoothly parameterizes the points on the shortest geodesic quaternion path between two constant (unit) quaternions, $q_0$ and $q_1$, as

$$
q(s)[q_0, q_1] = q_0 \, \frac{\sin\left((1 - s)\phi\right)}{\sin\phi} + q_1 \, \frac{\sin(s\phi)}{\sin\phi}. \tag{8}
$$

Here $\cos\phi = q_0 \cdot q_1$ defines the angle ϕ between the two given quaternions, while $q(s = 0) = q_0$ and $q(s = 1) = q_1$. The ‘long’ geodesic can be obtained for 1 ≤ s ≤ 2π/ϕ. For small ϕ, this reduces to the standard linear interpolation $(1 - s)\,q_0 + s\,q_1$. The unit norm is preserved, q(s) · q(s) = 1 for all s, so q(s) is always a valid quaternion and R(q(s)) defined by equation (5) is always a valid 3D rotation matrix. We note that one can formally write equation (8) as an exponential of the form $q_0 \star (\bar{q}_0 \star q_1)^s$, but since this requires computing a logarithm and an exponential whose most efficient reduction to a practical computer program is equation (8), this is mostly of pedagogical interest.
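The slerp can be written directly from its sine-ratio form. The sketch below assumes the formula q(s) = q₀ sin((1 − s)ϕ)/sin ϕ + q₁ sin(sϕ)/sin ϕ with cos ϕ = q₀ · q₁; the small-angle fallback to linear interpolation and its threshold are implementation choices made here, not something prescribed by the text.

```python
import numpy as np

def slerp(q0, q1, s):
    """Spherical linear interpolation between unit quaternions q0 and q1."""
    cos_phi = np.clip(q0 @ q1, -1.0, 1.0)      # guard against round-off
    phi = np.arccos(cos_phi)
    if phi < 1e-8:
        # Nearly identical inputs: the formula degenerates to 0/0, so use
        # the small-angle limit (plain linear interpolation).
        return (1 - s) * q0 + s * q1
    # Note: phi close to pi (antipodal inputs) is ill-conditioned and is
    # not treated specially in this sketch.
    return (np.sin((1 - s) * phi) * q0 + np.sin(s * phi) * q1) / np.sin(phi)

q0 = np.array([1.0, 0.0, 0.0, 0.0])
q1 = np.array([0.0, 1.0, 0.0, 0.0])            # 90 degrees from q0 on the 3-sphere

mid = slerp(q0, q1, 0.5)
print(mid)                                      # halfway point on the geodesic
print(np.isclose(mid @ mid, 1.0))               # unit norm is preserved

# The angle from q0 grows linearly with s along the path.
phi = np.arccos(q0 @ q1)
s = 0.3
print(np.isclose(np.arccos(q0 @ slerp(q0, q1, s)), s * phi))
```

Each interpolated quaternion can be passed to the rotation-matrix formula to obtain a smoothly varying family of valid rotations.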

In the following we will make little further use of the quaternion’s algebraic properties, but we will extensively exploit equation (5) to formulate elegant approaches to RMSD problems, along with employing equation (8) to study the behavior of our data under smooth variations of rotation matrices.

4.2. Remark on 4D

Our fundamental formula equation (5) can be extended to four Euclidean dimensions by choosing two distinct quaternions in equation (4), producing a 4D Euclidean rotation matrix. Analogously to 3D, the columns of this matrix correspond to the axes of a 4D Euclidean orientation frame. The non-trivial details of the quaternion approach to aligning both 4D spatial-coordinate and 4D orientation-frame data are given in the supporting information.

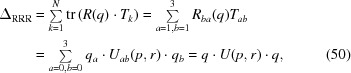

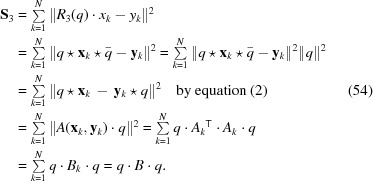

5. Reviewing the 3D spatial-alignment RMSD problem

We now review the basic ideas of spatial data alignment, and then specialize to 3D (see, e.g., Wahba, 1965 ▸; Davenport, 1968 ▸; Markley, 1988 ▸; Markley & Mortari, 2000 ▸; Kabsch, 1976 ▸, 1978 ▸; McLachlan, 1982 ▸; Lesk, 1986 ▸; Faugeras & Hebert, 1983 ▸; Horn, 1987 ▸; Huang et al., 1986 ▸; Arun et al., 1987 ▸; Diamond, 1988 ▸; Kearsley, 1989 ▸, 1990 ▸; Umeyama, 1991 ▸; Kneller, 1991 ▸; Coutsias et al., 2004 ▸; and Theobald, 2005 ▸). We will then employ quaternion methods to reduce the 3D spatial-alignment problem to the task of finding the optimal quaternion eigenvalue of a certain 4 × 4 matrix. This is the approach we have discussed in the introduction, and it can be solved using numerical or algebraic eigensystem methods. In Section 6 below, we will explore in particular the classical quartic equation solutions for the exact algebraic form of the entire four-part eigensystem, whose optimal eigenvalue and its quaternion eigenvector produce the optimal global rotation solving the 3D spatial-alignment problem.

5.1. Aligning matched data sets in Euclidean space

We begin with the general least-squares form of the RMSD problem, which is solved by minimizing an optimization measure over the space of rotations; we will later convert this to an optimization over the space of unit quaternions. We take as input one data array with N columns of D-dimensional points $\{y_k\}$ as the reference structure and a second array of N columns of matched points $\{x_k\}$ as the test structure. Our task is to rotate the latter in space by a global SO(D) rotation matrix $R_D$ to achieve the minimum value of the cumulative quadratic distance measure

$$ S_D = \sum_{k=1}^{N} \left\| R_D \cdot x_k - y_k \right\|^2 . \tag{9} $$

We assume, as is customary, that any overall translational components have been eliminated by displacing both data sets to their centers of mass (see, e.g., Faugeras & Hebert, 1983 ▸; Coutsias et al., 2004 ▸). When this measure is minimized with respect to the rotation $R_D$, the optimal $R_D$ will rotate the test set $\{x_k\}$ to be as close as possible to the reference set $\{y_k\}$. Here we will focus on 3D data sets (and, in the supporting information, 4D data sets) because those are the dimensions that are easily adaptable to our targeted quaternion approach. In 3D, our least-squares measure equation (9) can be converted directly into a quaternion optimization problem using the method of Hebert and Faugeras detailed in Appendix A.

Remark: Clifford algebras may support alternative methods as well as other approaches to higher dimensions [see, e.g., Havel & Najfeld (1994 ▸) and Buchholz & Sommer (2005 ▸)].

5.2. Converting from least-squares minimization to cross-term maximization

We choose from here onward to focus on an equivalent method based on expanding the measure given in equation (9), removing the constant terms, and recasting the RMSD least-squares minimization problem as the task of maximizing the surviving cross-term expression. This takes the general form

where

is the cross-covariance matrix of the data, [x k] denotes the kth column of X and the range of the indices (a, b) is the spatial dimension D.

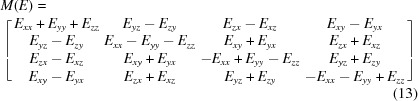
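The equivalence between minimizing the least-squares measure and maximizing the cross term can be checked numerically. The sketch below, using randomly generated data and a hypothetical helper `random_rotation` (both assumptions of this example, not part of the original text), verifies that the quadratic measure equals a rotation-independent constant minus twice the cross term tr(R · E).

```python
import numpy as np

rng = np.random.default_rng(0)
N = 20
X = rng.normal(size=(3, N))              # test points x_k stored as columns
Y = rng.normal(size=(3, N))              # reference points y_k stored as columns
X -= X.mean(axis=1, keepdims=True)       # remove centers of mass
Y -= Y.mean(axis=1, keepdims=True)

E = X @ Y.T                              # cross-covariance matrix, equation (11)

def random_rotation(rng):
    """Illustrative helper: a proper rotation from a QR factorization."""
    Q, _ = np.linalg.qr(rng.normal(size=(3, 3)))
    if np.linalg.det(Q) < 0:
        Q[:, 0] = -Q[:, 0]               # flip one column to make det = +1
    return Q

R = random_rotation(rng)
S = np.sum((R @ X - Y) ** 2)             # least-squares measure, equation (9)
Delta = np.trace(R @ E)                  # cross-term measure, equation (10)
const = np.sum(X ** 2) + np.sum(Y ** 2)  # independent of the rotation R
```

Because `const` does not depend on the rotation, the rotation that minimizes `S` is the one that maximizes `Delta`.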

5.3. Quaternion transformation of the 3D cross-term form

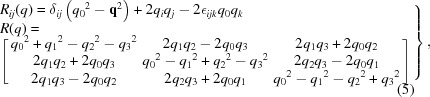

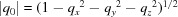

We now restrict our attention to the 3D cross-term form of equation (10) with pairs of 3D point data related by a proper rotation. The key step is to substitute equation (5) for R(q) into equation (10) and pull out the terms corresponding to pairs of components of the quaternions q. In this way the 3D expression is transformed into the 4 × 4 matrix M(E) sandwiched between two identical quaternions (not a conjugate pair) of the form

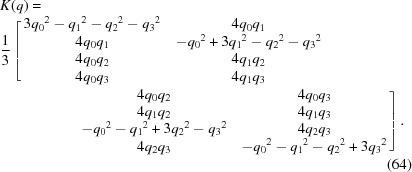

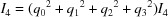

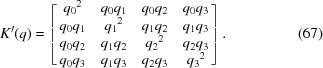

Here M(E) is the traceless, symmetric 4 × 4 matrix

|

built from our original 3 × 3 cross-covariance matrix E defined by equation (11). We will refer to M(E) from here on as the profile matrix, as it essentially reveals a different viewpoint of the optimization function and its relationship to the matrix E. Note that in some literature matrices related to the cross-covariance matrix E may be referred to as ‘attitude profile matrices’ and one also may see the term ‘key matrix’ referring to M(E).

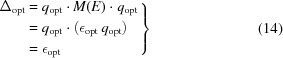

The bottom line is that if one decomposes equation (13) into its eigensystem, the measure equation (12) is maximized when the unit-length quaternion vector q is the eigenvector of M(E)’s largest eigenvalue (Davenport, 1968 ▸; Faugeras & Hebert, 1983 ▸; Horn, 1987 ▸; Diamond, 1988 ▸; Kearsley, 1989 ▸; Kneller, 1991 ▸). The RMSD optimal-rotation problem thus reduces to finding the maximal eigenvalue  of M(E) (which we emphasize depends only on the numerical data). Plugging the corresponding eigenvector

of M(E) (which we emphasize depends only on the numerical data). Plugging the corresponding eigenvector  into equation (5), we obtain the rotation matrix

into equation (5), we obtain the rotation matrix  that solves the problem. The resulting proximity measure relating {x

k} and {y

k} is simply

that solves the problem. The resulting proximity measure relating {x

k} and {y

k} is simply

|

and does not require us to actually compute  or

or  explicitly if all we want to do is compare various test data sets to a reference structure.

explicitly if all we want to do is compare various test data sets to a reference structure.

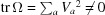
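The procedure of this section can be sketched numerically as follows. This is a minimal example under stated assumptions rather than a reference implementation: the helper names `profile_matrix` and `rotation_from_quaternion` are hypothetical, the rotation formula is the standard unit-quaternion form that equation (5) is assumed to denote, the synthetic data are noise-free, and a standard symmetric eigensolver (`numpy.linalg.eigh`) stands in for whichever numerical method one prefers.

```python
import numpy as np

def profile_matrix(E):
    """Traceless symmetric 4x4 profile matrix M(E), equation (13)."""
    (xx, xy, xz), (yx, yy, yz), (zx, zy, zz) = np.asarray(E, dtype=float)
    return np.array([
        [xx + yy + zz, yz - zy, zx - xz, xy - yx],
        [yz - zy, xx - yy - zz, xy + yx, zx + xz],
        [zx - xz, xy + yx, -xx + yy - zz, yz + zy],
        [xy - yx, zx + xz, yz + zy, -xx - yy + zz],
    ])

def rotation_from_quaternion(q):
    """Standard quadratic map from a unit quaternion to a 3D rotation matrix."""
    q0, q1, q2, q3 = q
    return np.array([
        [q0*q0 + q1*q1 - q2*q2 - q3*q3, 2*(q1*q2 - q0*q3), 2*(q1*q3 + q0*q2)],
        [2*(q1*q2 + q0*q3), q0*q0 - q1*q1 + q2*q2 - q3*q3, 2*(q2*q3 - q0*q1)],
        [2*(q1*q3 - q0*q2), 2*(q2*q3 + q0*q1), q0*q0 - q1*q1 - q2*q2 + q3*q3],
    ])

# Synthetic, noise-free data: the test set is the reference set rotated away.
rng = np.random.default_rng(1)
Y = rng.normal(size=(3, 12))
Y -= Y.mean(axis=1, keepdims=True)
q_true = rng.normal(size=4)
q_true /= np.linalg.norm(q_true)
X = rotation_from_quaternion(q_true).T @ Y   # so that R(q_true) @ X equals Y

E = X @ Y.T                                  # equation (11)
M = profile_matrix(E)
evals, evecs = np.linalg.eigh(M)             # eigenvalues in ascending order
eps_opt, q_opt = evals[-1], evecs[:, -1]     # maximal eigenvalue and eigenvector
R_opt = rotation_from_quaternion(q_opt)
```

For this noise-free example the recovered quaternion agrees with `q_true` up to the overall sign ambiguity R(q) = R(−q), and the maximal eigenvalue reproduces the cross-term value of equation (14).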

Note. In the interests of conceptual and notational simplicity, we have made a number of assumptions. For one thing, in declaring that equation (5) describes our sought-for rotation matrix, we have presumed that the optimal rotation matrix will always be a proper rotation, with $\det R = +1$. Also, as mentioned, we have omitted any general translation problems, assuming that there is a way to translate each data set to an appropriate center, e.g. by subtracting the center of mass. The global translation optimization process is treated in Faugeras & Hebert (1986 ▸) and Coutsias et al. (2004 ▸), and discussions of center-of-mass alignment, scaling and point weighting are given in much of the original literature, see, e.g., Horn (1987 ▸), Coutsias et al. (2004 ▸), and Theobald (2005 ▸). Finally, in real problems, structures such as molecules may appear in mirror-image or enantiomer form, and such issues were introduced early on by Kabsch (1976 ▸, 1978 ▸). There can also be particular symmetries, or very close approximations to symmetries, that can make some of our natural assumptions about the good behavior of the profile matrix invalid, and many of these issues, including ways to treat degenerate cases, have been carefully studied [see, e.g., Coutsias et al. (2004 ▸) and Coutsias & Wester (2019 ▸)]. The latter authors also point out that if a particular data set M(E) produces a negative smallest eigenvalue $\epsilon_4$ such that $|\epsilon_4| > \epsilon_{\rm opt}$, this can be a sign of a reflected match, and the negative rotation matrix $-R(q(\epsilon_4))$ may actually produce the best alignment. These considerations may be essential in some applications, and readers are referred to the original literature for details.

5.4. Illustrative example

We can visualize the transition from the initial data  to the optimal alignment

to the optimal alignment  by exploiting the geodesic interpolation equation (8) from the identity quaternion

by exploiting the geodesic interpolation equation (8) from the identity quaternion  to

to  given by

given by

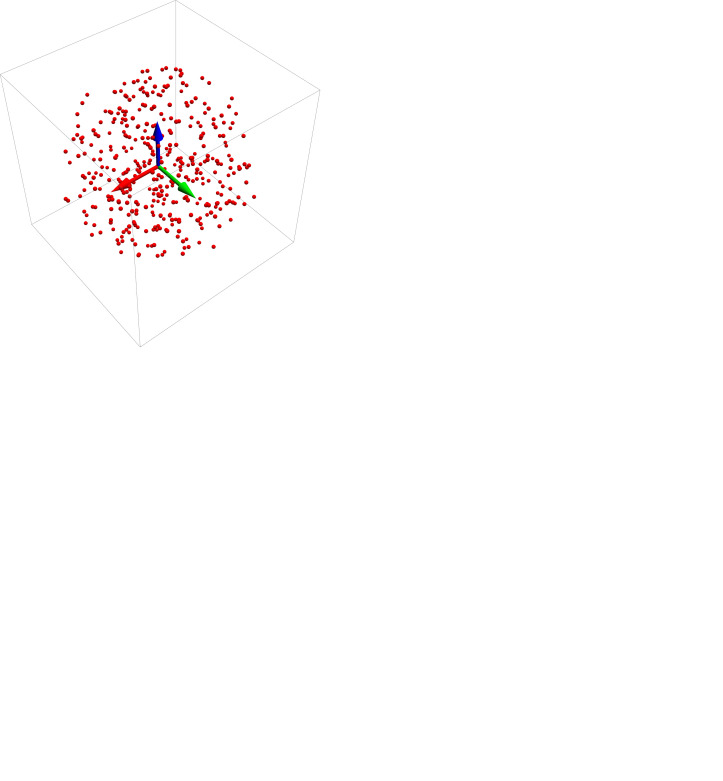

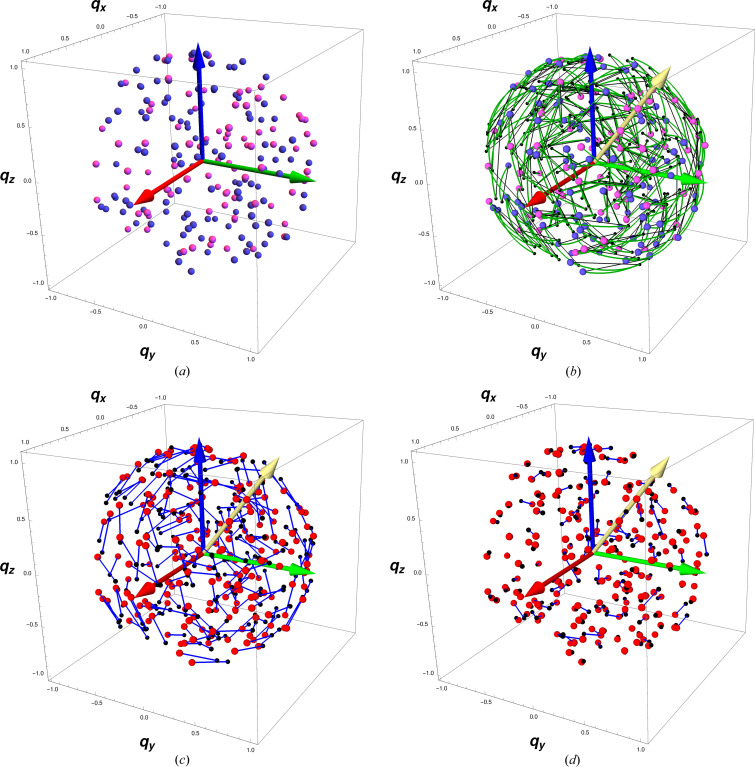

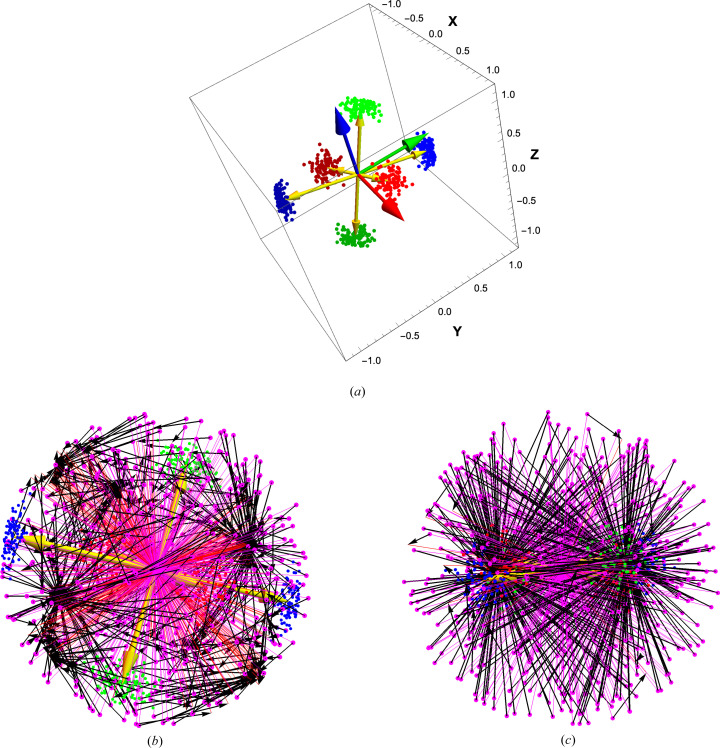

and applying the resulting rotation matrix R(q(s)) to the test data, ending with  showing the best alignment of the two data sets. In Fig. 2 ▸, we show a sample reference data set in red, a sample test data set in blue connected to the reference data set by blue lines, an intermediate partial alignment and finally the optimally aligned pair. The yellow arrow is the spatial part of the quaternion solution, proportional to the eigenvector

showing the best alignment of the two data sets. In Fig. 2 ▸, we show a sample reference data set in red, a sample test data set in blue connected to the reference data set by blue lines, an intermediate partial alignment and finally the optimally aligned pair. The yellow arrow is the spatial part of the quaternion solution, proportional to the eigenvector  (fixed axis) of the optimal 3D rotation matrix

(fixed axis) of the optimal 3D rotation matrix  , and whose length is

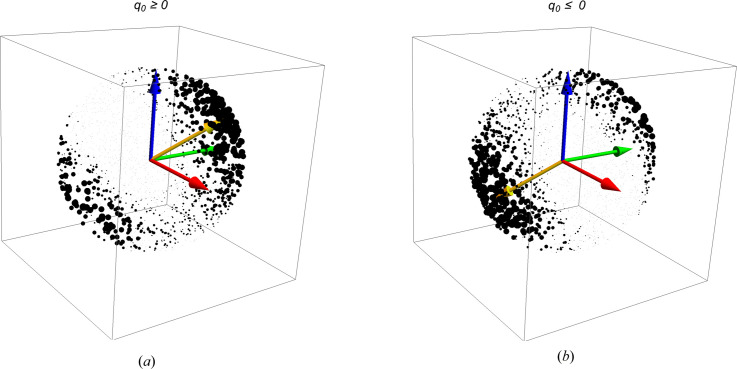

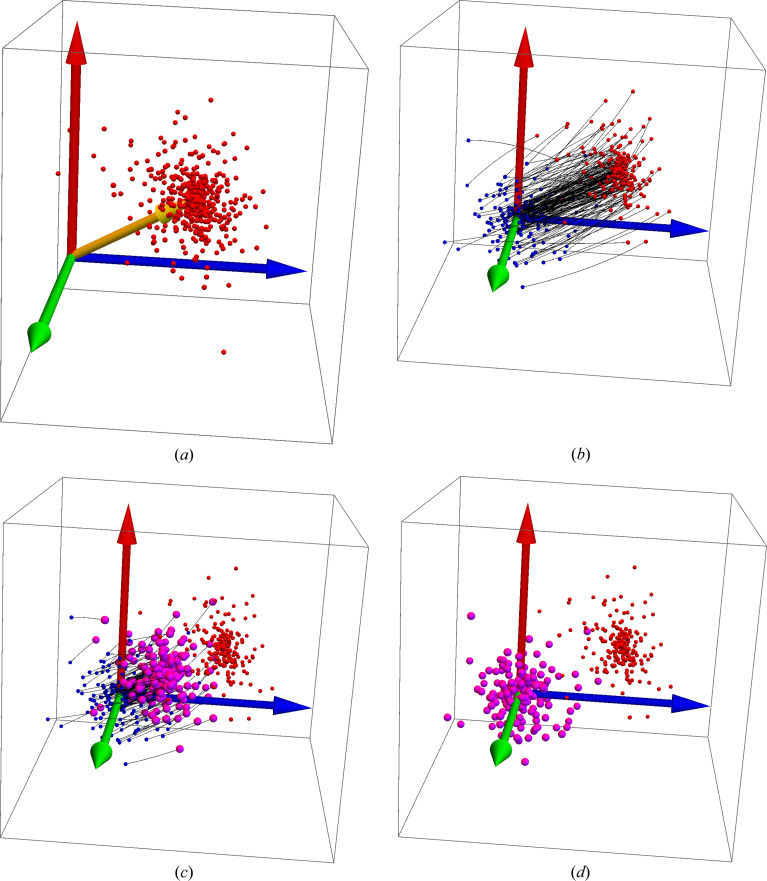

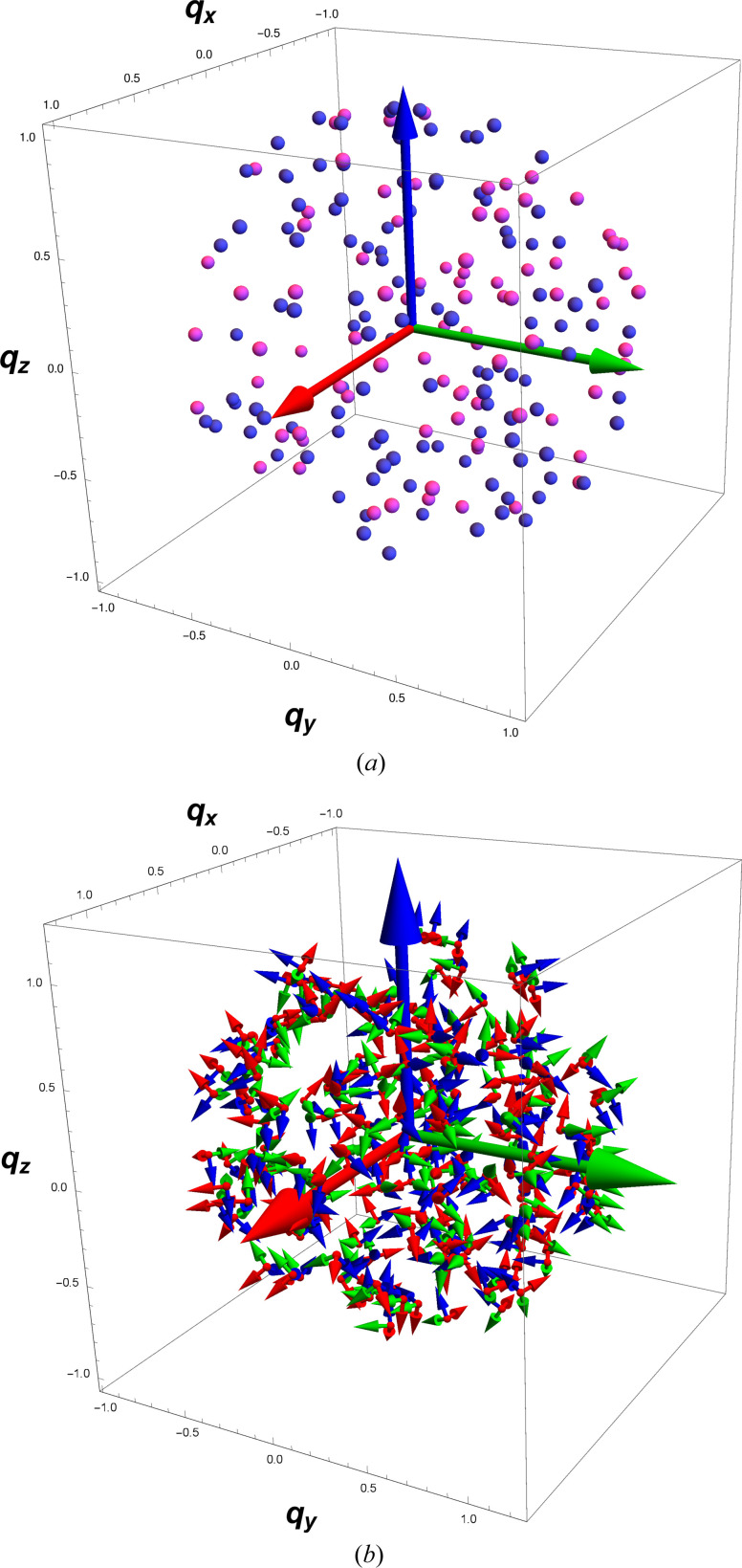

, and whose length is  , sine of half the rotation angle needed to perform the optimal alignment of the test data with the reference data. In Fig. 3 ▸, we visualize the optimization process in an alternative way, showing random samples of q = (q

0, q) in S

3, separated into the ‘northern hemisphere’ 3D unit-radius ball in (a) with q

0 ≥ 0, and the ‘southern hemisphere’ 3D unit-radius ball in (b) with q

0 ≤ 0. (This is like representing the Earth as two flattened discs, one showing everything above the equator and one showing everything below the equator; the distance from the equatorial plane is implied by the location in the disc, with the maximum at the centers, the north and south poles.) Either solid ball contains one unique quaternion for every possible choice of R(q), modulo the doubling of diametrically opposite points at q

0 = 0. The values of

, sine of half the rotation angle needed to perform the optimal alignment of the test data with the reference data. In Fig. 3 ▸, we visualize the optimization process in an alternative way, showing random samples of q = (q

0, q) in S

3, separated into the ‘northern hemisphere’ 3D unit-radius ball in (a) with q

0 ≥ 0, and the ‘southern hemisphere’ 3D unit-radius ball in (b) with q

0 ≤ 0. (This is like representing the Earth as two flattened discs, one showing everything above the equator and one showing everything below the equator; the distance from the equatorial plane is implied by the location in the disc, with the maximum at the centers, the north and south poles.) Either solid ball contains one unique quaternion for every possible choice of R(q), modulo the doubling of diametrically opposite points at q

0 = 0. The values of  are shown as scaled dots located at their corresponding spatial (‘imaginary’) quaternion points q in the solid balls. The yellow arrows, equivalent negatives of each other, show the spatial part

are shown as scaled dots located at their corresponding spatial (‘imaginary’) quaternion points q in the solid balls. The yellow arrows, equivalent negatives of each other, show the spatial part  of the optimal quaternion

of the optimal quaternion  , and the tips of the arrows clearly fall in the middle of the mirror pair of clusters of the largest values of Δ(q). Note that the lower-left dots in (a) continue smoothly into the larger lower-left dots in (b), which is the center of the optimal quaternion in (b). Further details of such methods of displaying quaternions are provided in Appendix B [see also Hanson (2006 ▸)].

, and the tips of the arrows clearly fall in the middle of the mirror pair of clusters of the largest values of Δ(q). Note that the lower-left dots in (a) continue smoothly into the larger lower-left dots in (b), which is the center of the optimal quaternion in (b). Further details of such methods of displaying quaternions are provided in Appendix B [see also Hanson (2006 ▸)].
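The interpolation from the identity to the optimal quaternion can be traced numerically. In the following sketch (the helper names, the random cross-covariance matrix and the eleven sample values of s are assumptions made only for illustration) the measure Δ(q(s)) = q(s) · M(E) · q(s) is evaluated along the geodesic; the sign of the eigenvector is chosen so that its first component is non-negative, which places it in the same hemisphere as the identity quaternion.

```python
import numpy as np

def profile_matrix(E):
    """Traceless symmetric 4x4 profile matrix M(E), equation (13)."""
    (xx, xy, xz), (yx, yy, yz), (zx, zy, zz) = np.asarray(E, dtype=float)
    return np.array([
        [xx + yy + zz, yz - zy, zx - xz, xy - yx],
        [yz - zy, xx - yy - zz, xy + yx, zx + xz],
        [zx - xz, xy + yx, -xx + yy - zz, yz + zy],
        [xy - yx, zx + xz, yz + zy, -xx - yy + zz],
    ])

def slerp(q0, q1, s):
    """Equation (8); assumes q0 and q1 are distinct and not antipodal."""
    phi = np.arccos(np.clip(np.dot(q0, q1), -1.0, 1.0))
    return (np.sin((1.0 - s) * phi) * q0 + np.sin(s * phi) * q1) / np.sin(phi)

rng = np.random.default_rng(2)
E = rng.normal(size=(3, 3))              # stand-in cross-covariance matrix
M = profile_matrix(E)
evals, evecs = np.linalg.eigh(M)         # ascending eigenvalues
q_opt = evecs[:, -1]
if q_opt[0] < 0:                         # pick the sign closest to the identity
    q_opt = -q_opt

q_id = np.array([1.0, 0.0, 0.0, 0.0])
s_values = np.linspace(0.0, 1.0, 11)
path = [slerp(q_id, q_opt, s) for s in s_values]      # equation (15)
deltas = np.array([q @ M @ q for q in path])          # Delta(q(s)), equation (12)
```

The sequence `deltas` starts at tr E, the value for the unrotated test data, and rises to the maximal eigenvalue at s = 1.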

Figure 2.

(a) A typical 3D spatial reference data set. (b) The reference data in red alongside the test data in blue, with blue lines representing the Euclidean distances connecting each test data point with its corresponding reference point. (c) The partial alignment at s = 0.75. (d) The optimal alignment for this data set at s = 1.0. The yellow arrow is the axis of rotation specified by the optimal quaternion’s spatial components.

Figure 3.

The values of $\Delta(q)$ represented by the sizes of the dots placed randomly in the ‘northern’ and ‘southern’ 3D solid balls spanning the entire hypersphere $S^3$ with (a) containing the $q_0 \ge 0$ sector and (b) containing the $q_0 \le 0$ sector. We display the data dots at the locations of their spatial quaternion components $\mathbf{q} = (q_1, q_2, q_3)$, and we know that $q_0 = \pm(1 - \mathbf{q} \cdot \mathbf{q})^{1/2}$ so the $\mathbf{q}$ data uniquely specify the full quaternion. Since R(q) = R(−q), the points in each ball actually represent all possible unique rotation matrices. The spatial component of the maximal eigenvector is shown by the yellow arrows, which clearly end in the middle of the maximum values of Δ(q). Note that, in the quaternion context, diametrically opposite points on the spherical surface are identical rotations, so the cluster of larger dots at the upper right of (a) is, in the entire sphere, representing the same data as the ‘diametrically opposite’ lower-left cluster in (b), both surrounding the tips of their own yellow arrows. The smaller dots at the upper right of (b) are contiguous with the upper-right region of (a), forming a single cloud centered on $q_{\rm opt}$, and similarly for the lower left of (a) and the lower left of (b). The whole figure contains two distinct clusters of dots (related by q → −q) centered around $\pm q_{\rm opt}$.

6. Algebraic solution of the eigensystem for 3D spatial alignment

At this point, one can simply use the traditional numerical methods to solve equation (12) for the maximal eigenvalue $\epsilon_{\rm opt}$ of M(E) and its eigenvector $q_{\rm opt}$, thus solving the 3D spatial-alignment problem of equation (10). Alternatively, we can also exploit symbolic methods to study the properties of the eigensystems of 4 × 4 matrices M algebraically to provide deeper insights into the structure of the problem, and that is the subject of this section.

Theoretically, the algebraic form of our eigensystem is a textbook problem following from the 16th-century-era solution of the quartic algebraic equation in, e.g., Abramowitz & Stegun (1970 ▸). Our objective here is to explore this textbook solution in the specific context of its application to eigensystems of 4 × 4 matrices and its behavior relative to the properties of such matrices. The real, symmetric, traceless profile matrix M(E) in equation (13) appearing in the 3D spatial RMSD optimization problem must necessarily possess only real eigenvalues, and the properties of M(E) permit some particular simplifications in the algebraic solutions that we will discuss. The quaternion RMSD literature varies widely in the details of its treatment of the algebraic solutions, ranging from no discussion at all, to Horn, who mentions the possibility but does not explore it, to the work of Coutsias et al. (Coutsias et al., 2004 ▸; Coutsias & Wester, 2019 ▸), who present an exhaustive treatment, in addition to working out the exact details of the correspondence between the SVD eigensystem and the quaternion eigensystem, both of which in principle embody the algebraic solution to the RMSD optimization problem. In addition to the treatment of Coutsias et al., other approaches similar to the one we will study are due to Euler (Euler, 1733 ▸; Bell, 2008 ▸), as well as a series of papers on the quartic by Nickalls (1993 ▸, 2009 ▸).

6.1. Eigenvalue expressions

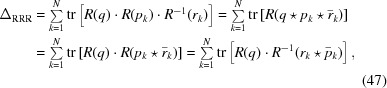

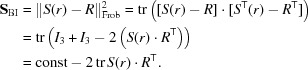

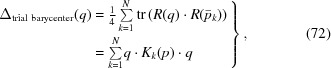

We begin by writing down the eigenvalue expansion of the profile matrix,

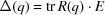

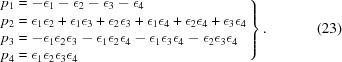

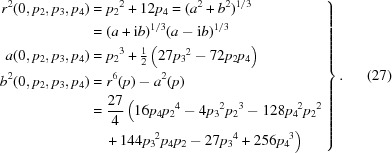

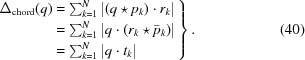

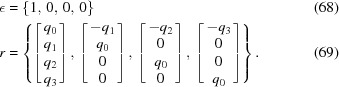

where e denotes a generic eigenvalue, I 4 is the 4D identity matrix and the p k are homogeneous polynomials of degree k in the elements of M. For the special case of a traceless, symmetric profile matrix M(E) defined by equation (13), the p k(E) coefficients simplify and can be expressed numerically as the following functions either of M or of E:

|

Interestingly, the characteristic polynomial of M(E) is arranged so that $-p_2(E)/2$ is the (squared) Frobenius norm of E, and $-p_3(E)/8$ is its determinant. Our task now is to express the four eigenvalues $e = \epsilon_k(p_1, p_2, p_3, p_4)$, k = 1, …, 4, usefully in terms of the matrix elements, and also to find their eigenvectors; we are of course particularly interested in the maximal eigenvalue $\epsilon_{\rm opt}$.
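The relations between the characteristic-polynomial coefficients and the cross-covariance matrix can be confirmed numerically. The sketch below is illustrative only: the helper `profile_matrix` and the random matrix E are assumptions, and `numpy.poly` is used to obtain the coefficients of det(e I − M), which coincides with det(M − e I) for a 4 × 4 matrix.

```python
import numpy as np

def profile_matrix(E):
    """Traceless symmetric 4x4 profile matrix M(E), equation (13)."""
    (xx, xy, xz), (yx, yy, yz), (zx, zy, zz) = np.asarray(E, dtype=float)
    return np.array([
        [xx + yy + zz, yz - zy, zx - xz, xy - yx],
        [yz - zy, xx - yy - zz, xy + yx, zx + xz],
        [zx - xz, xy + yx, -xx + yy - zz, yz + zy],
        [xy - yx, zx + xz, yz + zy, -xx - yy + zz],
    ])

rng = np.random.default_rng(4)
E = rng.normal(size=(3, 3))
M = profile_matrix(E)
EEt = E @ E.T

p1 = -np.trace(M)                                     # vanishes: M is traceless
p2 = -2.0 * np.trace(EEt)                             # -2 x squared Frobenius norm of E
p3 = -8.0 * np.linalg.det(E)                          # -8 x determinant of E
p4 = 2.0 * np.trace(EEt @ EEt) - np.trace(EEt) ** 2   # equals det M

coeffs = np.poly(M)   # [1, p1, p2, p3, p4] of the characteristic polynomial
```

The same coefficients follow from the traces of powers of M, so either M or E can serve as the starting point.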

6.2. Approaches to algebraic solutions

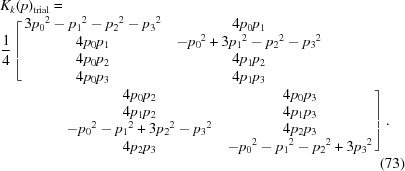

Equation (16) can be solved directly using the quartic equations published by Cardano in 1545 [see, e.g., Abramowitz & Stegun (1970 ▸), Weisstein (2019b ▸), and Wikipedia (2019 ▸)], which are incorporated into the Mathematica function

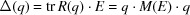

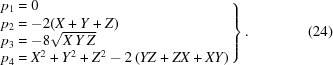

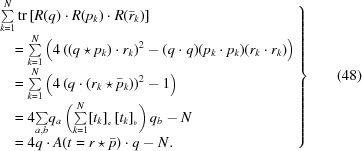

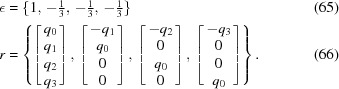

that immediately returns a suitable algebraic formula. At this point we defer detailed discussion of the textbook solution to the supporting information, and instead focus on a particularly symmetric version of the solution and the form it takes for the eigenvalue problem for traceless, symmetric 4 × 4 matrices such as our profile matrices M(E). For this purpose, we look for an alternative solution by considering the following traceless (p 1 = 0) ansatz:

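$$ \begin{aligned} \epsilon_1(p) &= \sqrt{X(p)} + \sqrt{Y(p)} + \sqrt{Z(p)}, \\ \epsilon_2(p) &= \sqrt{X(p)} - \sqrt{Y(p)} - \sqrt{Z(p)}, \\ \epsilon_3(p) &= -\sqrt{X(p)} + \sqrt{Y(p)} - \sqrt{Z(p)}, \\ \epsilon_4(p) &= -\sqrt{X(p)} - \sqrt{Y(p)} + \sqrt{Z(p)}. \end{aligned} \tag{22} $$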

This form emphasizes some additional explicit symmetry that we will see is connected to the role of cube roots in the quartic algebraic solutions (see, e.g., Coutsias & Wester, 2019 ▸). We can turn it into an equation for ∊k(p) to be solved in terms of the matrix parameters p k(E) as follows: First we eliminate e using (e − ∊1)(e − ∊2)(e − ∊3)(e − ∊4) = 0 to express the matrix data expressions p k directly in terms of totally symmetric polynomials of the eigenvalues in the form (Abramowitz & Stegun, 1970 ▸)

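$$ \begin{aligned} p_1 &= -\epsilon_1 - \epsilon_2 - \epsilon_3 - \epsilon_4, \\ p_2 &= \epsilon_1 \epsilon_2 + \epsilon_1 \epsilon_3 + \epsilon_2 \epsilon_3 + \epsilon_1 \epsilon_4 + \epsilon_2 \epsilon_4 + \epsilon_3 \epsilon_4, \\ p_3 &= -\epsilon_1 \epsilon_2 \epsilon_3 - \epsilon_1 \epsilon_2 \epsilon_4 - \epsilon_1 \epsilon_3 \epsilon_4 - \epsilon_2 \epsilon_3 \epsilon_4, \\ p_4 &= \epsilon_1 \epsilon_2 \epsilon_3 \epsilon_4. \end{aligned} \tag{23} $$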

Next we substitute our expression equation (22) for the ∊k in terms of the {X, Y, Z} functions into equation (23), yielding a completely different alternative to equation (16) that will also solve the 3D RMSD eigenvalue problem if we can invert it to express {X(p), Y(p), Z(p)} in terms of the data p k(E) as presented in equation (20):

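$$ \begin{aligned} p_1 &= 0, \\ p_2 &= -2\,(X + Y + Z), \\ p_3 &= -8\, \sqrt{X}\sqrt{Y}\sqrt{Z}, \\ p_4 &= X^2 + Y^2 + Z^2 - 2\,(YZ + ZX + XY). \end{aligned} \tag{24} $$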
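The step from the symmetric ansatz to the coefficients expressed through {X, Y, Z} can be checked with a few lines of arithmetic. In this sketch the sample values of X, Y and Z are arbitrary assumptions; `numpy.poly` expands the monic polynomial with the four ansatz roots, whose coefficients are the elementary symmetric polynomials with alternating signs.

```python
import numpy as np

X, Y, Z = 4.0, 1.0, 0.25                 # arbitrary non-negative sample values
sx, sy, sz = np.sqrt([X, Y, Z])

# Traceless ansatz for the four eigenvalues, with all square roots positive.
eps = np.array([
    sx + sy + sz,
    sx - sy - sz,
    -sx + sy - sz,
    -sx - sy + sz,
])

coeffs = np.poly(eps)                    # [1, p1, p2, p3, p4] built from the roots

expected = np.array([
    1.0,
    0.0,                                         # p1: the roots sum to zero
    -2.0 * (X + Y + Z),                          # p2
    -8.0 * sx * sy * sz,                         # p3
    X*X + Y*Y + Z*Z - 2.0 * (Y*Z + Z*X + X*Y),   # p4
])
```

For these sample values the roots are 3.5, 0.5, −1.5 and −2.5, and the coefficient of the linear term is negative because all three square roots were taken positive.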

We already see the critical property in p

3 that, while p

3 itself has a deterministic sign from the matrix data, the possibly variable signs of the square roots in equation (22) have to be constrained so their product  agrees with the sign of p

3. Manipulating the quartic equation solutions that we can obtain by applying the library function equation (21) to equation (24), and restricting our domain to real traceless, symmetric matrices (and hence real eigenvalues), we find solutions for X(p), Y(p) and Z(p) of the following form:

agrees with the sign of p

3. Manipulating the quartic equation solutions that we can obtain by applying the library function equation (21) to equation (24), and restricting our domain to real traceless, symmetric matrices (and hence real eigenvalues), we find solutions for X(p), Y(p) and Z(p) of the following form:

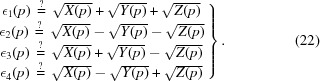

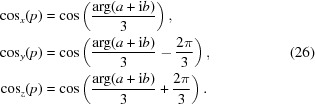

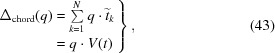

where the  terms differ only by a cube-root phase:

terms differ only by a cube-root phase:

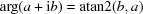

|

Here  in the C mathematics library, or ArcTan[a, b] in Mathematica, F

f(p) with f = (x, y, z) corresponds to X(p), Y(p) or Z(p), and the utility functions appearing in the equations for our traceless p

1 = 0 case are

in the C mathematics library, or ArcTan[a, b] in Mathematica, F

f(p) with f = (x, y, z) corresponds to X(p), Y(p) or Z(p), and the utility functions appearing in the equations for our traceless p

1 = 0 case are

|

The function b 2(p) has the essential property that, for real solutions to the cubic, which imply the required real solutions to our eigenvalue equations (Abramowitz & Stegun, 1970 ▸), we must have b 2(p) ≥ 0. That essential property allowed us to convert the bare solution into terms involving {(a + ib)1/3, (a − ib)1/3} whose sums form the manifestly real cube-root-related cosine terms in equation (26).

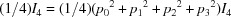

6.3. Final eigenvalue algorithm

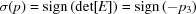

While equations (25) and (26) are well defined, square roots must be taken to finish the computation of the eigenvalues postulated in equation (22). In our special case of symmetric, traceless matrices such as M(E), we can always choose the signs of the first two square roots to be positive, but the sign of the  term is non-trivial, and in fact is the sign of

term is non-trivial, and in fact is the sign of  . The form of the solution in equations (22) and (25) that works specifically for all traceless symmetric matrices such as M(E) is given by our equations for p

k(E) in equations (17)–(22), along with equations (25), (26) and (27)

provided we modify equation (22) using

. The form of the solution in equations (22) and (25) that works specifically for all traceless symmetric matrices such as M(E) is given by our equations for p

k(E) in equations (17)–(22), along with equations (25), (26) and (27)

provided we modify equation (22) using  as follows:

as follows:

|

The particular order of the numerical eigenvalues in our chosen form of the solution equation (28) is found in regular cases to be uniformly non-increasing in numerical order for our M(E) matrices, so ∊1(p) is always the leading eigenvalue. This is our preferred symbolic version of the solution to the 3D RMSD problem defined by M(E).
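A numerical sketch of this closed-form route is given below, under several assumptions: the function names are hypothetical, the coefficients are taken directly from E using the trace and determinant relations of Section 6.1, the cube-root phases are evaluated with `arctan2`, and the sign of the third square root is taken as the sign of −p₃ (equivalently of det E). Clipping of slightly negative round-off values is an implementation choice of this example, not part of the original formulas.

```python
import numpy as np

def profile_matrix(E):
    """Traceless symmetric 4x4 profile matrix M(E), equation (13)."""
    (xx, xy, xz), (yx, yy, yz), (zx, zy, zz) = np.asarray(E, dtype=float)
    return np.array([
        [xx + yy + zz, yz - zy, zx - xz, xy - yx],
        [yz - zy, xx - yy - zz, xy + yx, zx + xz],
        [zx - xz, xy + yx, -xx + yy - zz, yz + zy],
        [xy - yx, zx + xz, yz + zy, -xx - yy + zz],
    ])

def algebraic_eigenvalues(E):
    """Closed-form eigenvalues of M(E), returned in non-increasing order."""
    EEt = E @ E.T
    p2 = -2.0 * np.trace(EEt)
    p3 = -8.0 * np.linalg.det(E)
    p4 = 2.0 * np.trace(EEt @ EEt) - np.trace(EEt) ** 2
    r = np.sqrt(max(p2 * p2 + 12.0 * p4, 0.0))
    a = p2 ** 3 + 4.5 * (3.0 * p3 * p3 - 8.0 * p2 * p4)
    b = np.sqrt(max(r ** 6 - a * a, 0.0))        # b^2 >= 0 for real eigenvalues
    theta = np.arctan2(b, a) / 3.0               # arg(a + i b) / 3
    phases = theta + np.array([0.0, -2.0 * np.pi / 3.0, 2.0 * np.pi / 3.0])
    XYZ = (r * np.cos(phases) - p2) / 6.0        # X, Y, Z
    sx, sy, sz = np.sqrt(np.clip(XYZ, 0.0, None))
    sigma = 1.0 if p3 <= 0.0 else -1.0           # sign(-p3), i.e. sign(det E)
    return np.array([
        sx + sy + sigma * sz,
        sx - sy - sigma * sz,
        -sx + sy - sigma * sz,
        -sx - sy + sigma * sz,
    ])

rng = np.random.default_rng(5)
E = rng.normal(size=(3, 3))
eps_algebraic = algebraic_eigenvalues(E)
eps_numeric = np.linalg.eigvalsh(profile_matrix(E))[::-1]   # descending order
```

For generic data the two sets of eigenvalues agree to within round-off; near-degenerate cases, where b²(p) is close to zero, can be expected to lose some accuracy in this naive form.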

Note: We have experimentally confirmed the numerical behavior of equation (25) in equation (28) with 1 000 000 randomly generated sets of 3D cross-covariance matrices E, along with the corresponding profile matrices M(E), producing numerical values of $p_k$ inserted into the equations for X(p), Y(p) and Z(p). We confirmed that the sign of σ(p) varied randomly, and found that the algebraically computed values of $\epsilon_k(p)$ corresponded to the standard numerical eigenvalues of the matrices M(E) in all cases, to within expected variations due to numerical evaluation behavior and expected occasional instabilities. In particular, we found a maximum per-eigenvalue discrepancy of about $10^{-13}$ for the algebraic methods relative to the standard numerical eigenvalue methods, and a median difference of $10^{-15}$, in the context of machine precision of about $10^{-16}$. (Why did we do this? Because we had earlier versions of the algebraic formulas that produced anomalies due to inconsistent phase choices in the roots, and felt it worthwhile to perform a practical check on the numerical behavior of our final version of the solutions.)

6.4. Eigenvectors for 3D data

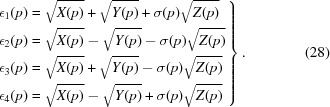

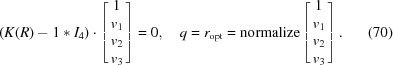

The eigenvector formulas corresponding to ∊k can be generically computed by solving any three rows of

for the elements of v, e.g. v = (1, v 1, v 2, v 3), as a function of some eigenvalue e (of course, one must account for special cases, e.g. if some subspace of M(E) is already diagonal). The desired unit quaternion for the optimization problem can then be obtained from the normalized eigenvector

Note that this can often have q

0 < 0, and that whenever the problem in question depends on the sign of q

0, such as a slerp starting at  , one should choose the sign of equation (30) appropriately; some applications may also require an element of statistical randomness, in which case one might randomly pick a sign for q

0.

, one should choose the sign of equation (30) appropriately; some applications may also require an element of statistical randomness, in which case one might randomly pick a sign for q

0.

As noted by Liu et al. (2010 ▸), a very clear way of computing the eigenvectors for a given eigenvalue is to exploit the fact that the determinant of equation (29) must vanish, that is  ; one simply exploits the fact that the columns of the adjugate matrix αij (the transpose of the matrix of cofactors of the matrix [A]) produce its inverse by means of creating multiple copies of the determinant. That is,

; one simply exploits the fact that the columns of the adjugate matrix αij (the transpose of the matrix of cofactors of the matrix [A]) produce its inverse by means of creating multiple copies of the determinant. That is,

so we can just compute any column of the adjugate via the appropriate set of subdeterminants and, in the absence of singularities, that will be an eigenvector (since any of the four columns can be eigenvectors, if one fails just try another).

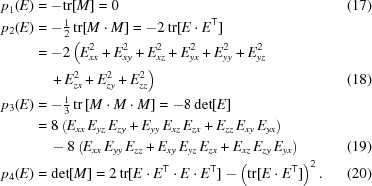

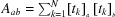

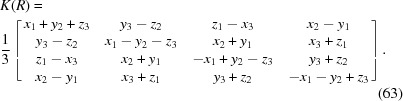

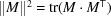

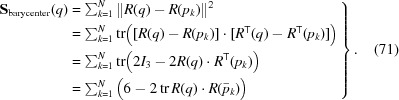

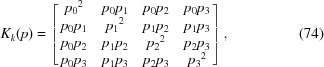
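The adjugate construction can be sketched as follows. The function name `adjugate`, the random traceless symmetric stand-in for M(E), and the rule of picking the adjugate column with the largest norm are assumptions of this example; the eigenvalue itself is taken from a standard numerical routine purely for convenience.

```python
import numpy as np

def adjugate(A):
    """Adjugate of a square matrix: the transpose of its matrix of cofactors."""
    n = A.shape[0]
    adj = np.empty((n, n))
    for i in range(n):
        for j in range(n):
            minor = np.delete(np.delete(A, i, axis=0), j, axis=1)
            # cofactor of element (i, j) is stored at (j, i), i.e. transposed
            adj[j, i] = (-1) ** (i + j) * np.linalg.det(minor)
    return adj

rng = np.random.default_rng(6)
B = rng.normal(size=(4, 4))
M = (B + B.T) / 2.0
M -= np.trace(M) / 4.0 * np.eye(4)        # traceless symmetric stand-in for M(E)

e = np.linalg.eigvalsh(M)[-1]             # maximal eigenvalue
A = M - e * np.eye(4)                     # singular matrix [A] of equation (29)
alpha = adjugate(A)

# Any non-vanishing column is an eigenvector; take the best-conditioned one.
col = np.argmax(np.linalg.norm(alpha, axis=0))
q = alpha[:, col] / np.linalg.norm(alpha[:, col])     # normalized, equation (30)
```

Choosing the largest column is one simple way of implementing the advice to try another column when one fails.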

In the general well behaved case, the form of v in the eigenvector solution for any eigenvalue e = ∊k may be explicitly computed to give the corresponding quaternion (among several equivalent alternative expressions) as

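$$ q(e, E) \propto \begin{bmatrix} A^2 e_x + B^2 e_y + C^2 e_z + 2ABC - e_x e_y e_z \\ a\,(A^2 - e_y e_z) - b\,(AB + C e_z) - c\,(AC + B e_y) \\ -a\,(AB + C e_z) + b\,(B^2 - e_z e_x) - c\,(BC + A e_x) \\ -a\,(AC + B e_y) - b\,(BC + A e_x) + c\,(C^2 - e_x e_y) \end{bmatrix} \tag{32} $$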

where for convenience we define $\{e_x = (e - x + y + z),\; e_y = (e + x - y + z),\; e_z = (e + x + y - z)\}$ with $x = E_{xx}$, cyclic, $a = E_{yz} - E_{zy}$, cyclic, and $A = E_{yz} + E_{zy}$, cyclic. We substitute the maximal eigenvector $q_{\rm opt} = q(\epsilon_{\rm opt}, E)$ into equation (5) to give the sought-for optimal 3D rotation matrix $R(q_{\rm opt})$ that solves the RMSD problem with $\Delta(q_{\rm opt}) = \epsilon_{\rm opt}$, as we noted in equation (14).

Remark: Yet another approach to computing eigenvectors that, surprisingly, almost entirely avoids any reference to the original matrix, but needs only its eigenvalues and minor eigenvalues, has recently been rescued from relative obscurity (Denton et al., 2019 ▸). (The authors uncovered a long list of non-cross-citing literature mentioning the result dating back at least to 1934.) If, for a real, symmetric 4 × 4 matrix M we label the set of four eigenvectors v i by the index i and the components of any single such four-vector by a, the squares of each of the sixteen corresponding components take the form

Here the μa are the 3 × 3 minors obtained by removing the ath row and column of M, and the λj(μa) comprise the list of three eigenvalues of each of these minors. Attempting to obtain the eigenvectors by taking square roots is of course hampered by the nondeterministic sign; however, since the eigenvalues λi(M) are known, and the overall sign of each eigenvector v i is arbitrary, one needs to check at most eight sign combinations to find the one for which M · v i = λi(M)v i, solving the problem. Note that the general formula extends to Hermitian matrices of any dimension.

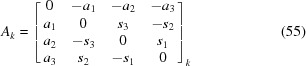

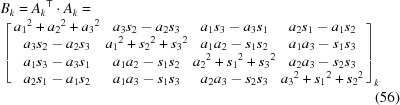
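This eigenvalue-only identity is easy to test numerically. The following sketch uses a random real symmetric 4 × 4 matrix (an assumption for illustration; any such matrix with distinct eigenvalues would serve) and compares the squared eigenvector components predicted from the eigenvalues of M and of its 3 × 3 principal minors with those returned by a standard eigensolver.

```python
import numpy as np

rng = np.random.default_rng(3)
B = rng.normal(size=(4, 4))
M = (B + B.T) / 2.0                       # random real symmetric 4x4 matrix

lam, V = np.linalg.eigh(M)                # V[:, i] is the unit eigenvector v_i

# predicted[i, a] holds the predicted square of component a of eigenvector i
predicted = np.empty((4, 4))
for a in range(4):
    # principal minor: remove the a-th row and the a-th column of M
    minor = np.delete(np.delete(M, a, axis=0), a, axis=1)
    mu = np.linalg.eigvalsh(minor)        # three eigenvalues of the minor
    for i in range(4):
        numerator = np.prod(lam[i] - mu)
        denominator = np.prod(lam[i] - np.delete(lam, i))
        predicted[i, a] = numerator / denominator

actual = V.T ** 2                         # actual[i, a] = ([v_i]_a)^2
```

Only the squares are determined in this way; the relative signs of the components still have to be fixed by testing the candidate vectors against M, as described above.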

7. The 3D orientation-frame alignment problem

We turn next to the orientation-frame problem, assuming that the data are like lists of orientations of rollercoaster cars, or lists of residue orientations in a protein, ordered pairwise in some way, but without specifically considering any spatial location or nearest-neighbor ordering information. In D-dimensional space, the columns of any SO(D) orthonormal D × D rotation matrix $R_D$ are what we mean by an orientation frame, since these columns are the directions pointed to by the axes of the identity matrix after rotating something from its defining identity frame to a new attitude; note that no spatial location information whatever is contained in $R_D$, though one may wish to choose a local center for each frame if the construction involves coordinates such as amino-acid atom locations (see, e.g., Hanson & Thakur, 2012 ▸).

In 2D, 3D and 4D, there exist two-to-one quadratic maps from the topological spaces $S^1$, $S^3$ and $S^3 \times S^3$ to the rotation matrices $R_2$, $R_3$ and $R_4$. These are the quaternion-related objects that we will use to obtain elegant representations of the frame data-alignment problem. In 2D, a frame data element can be expressed as a complex phase, while in 3D the frame is a unit quaternion [see Hanson (2006 ▸) and Hanson & Thakur (2012 ▸)]. In 4D (see the supporting information), the frame is described by a pair of unit quaternions.

Note. Readers unfamiliar with the use of complex numbers and quaternions to obtain elegant representations of 2D and 3D orientation frames are encouraged to review the tutorial in Appendix B.

7.1. Overview

We focus now on the problem of aligning corresponding sets of 3D orientation frames, just as we already studied the alignment of sets of 3D spatial coordinates by performing an optimal rotation. There will be more than one feasible method. We might assume we could just define the quaternion-frame alignment or ‘QFA’ problem by converting any list of frame orientation matrices to quaternions [see Hanson (2006 ▸), Hanson & Thakur (2012 ▸) and also Appendix C] and writing down the quaternion equivalents of the RMSD treatment in equation (9) and equation (10). However, unlike the linear Euclidean problem, the preferred quaternion optimization function technically requires a nonlinear minimization of the squared sums of geodesic arc lengths connecting the points on the quaternion hypersphere $S^3$. The task of formulating this ideal problem as well as studying alternative approximations is the subject of its own branch of the literature, often known as the quaternionic barycenter problem or the quaternion averaging problem (see, e.g., Brown & Worsey, 1992 ▸; Buss & Fillmore, 2001 ▸; Moakher, 2002 ▸; Markley et al., 2007 ▸; Huynh, 2009 ▸; Hartley et al., 2013 ▸; and also Appendix D). We will focus on $L_2$ norms (the aforementioned sums of squares of arc lengths), although alternative approaches to the rotation-averaging problem, such as employing $L_1$ norms and using the Weiszfeld algorithm to find the optimal rotation numerically, have been advocated, e.g., by Hartley et al. (2011 ▸). The computation of optimally aligning rotations, based on plausible exact or approximate measures relating collections of corresponding pairs of (quaternionic) orientation frames, is now our task.

Choices for the forms of the measures encoding the distance between orientation frames have been widely discussed; see, e.g., Park & Ravani (1997), Moakher (2002), Markley et al. (2007), Huynh (2009), Hartley et al. (2011, 2013), and Huggins (2014a). Since we are dealing primarily with quaternions, we will start with two measures dealing directly with the quaternion geometry, the geodesic arc length and the chord length, and later on examine some advantages of starting with quaternion-sign-independent rotation-matrix forms.

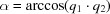

7.2. 3D geodesic arc-length distance

First, we recall that the matrix equation (5) has three orthonormal columns that define a quadratic map from the quaternion three-sphere $S^3$, a smooth connected Riemannian manifold, to a 3D orientation frame. The squared geodesic arc-length distance between two quaternions lying on the three-sphere $S^3$ is generally agreed upon as the measure of orientation-frame proximity whose properties are the closest in principle to the ordinary squared Euclidean distance measure equation (9) between points (Huynh, 2009), and we will adopt this measure as our starting point. We begin by writing down a frame–frame distance measure between two unit quaternions $q_1$ and $q_2$, corresponding precisely to two orientation frames defined by the columns of $R(q_1)$ and $R(q_2)$. We define the geodesic arc length as an angle $\alpha$ on the hypersphere $S^3$ computed geometrically from $q_1 \cdot q_2 = \cos\alpha$. As pointed out by Huynh (2009) and Hartley et al. (2013), the geodesic arc length between a test quaternion $q_1$ and a data-point quaternion $q_2$ of ambiguous sign [since $R(+q_2) = R(-q_2)$] can take two values, and we want the minimum value. Furthermore, to work on a spherical manifold instead of a plane, we need basically to cluster the ambiguous points in a deterministic way. Starting with the bare angle between two quaternions on $S^3$, $\alpha = \arccos(q_1 \cdot q_2)$, where we recall that $\alpha \ge 0$, we define a pseudometric (Huynh, 2009) for the geodesic arc-length distance as

$$d_{\mathrm{geodesic}}(q_1, q_2) = \min(\alpha,\ \pi - \alpha), \qquad 0 \le d_{\mathrm{geodesic}}(q_1, q_2) \le \frac{\pi}{2}, \tag{35}$$

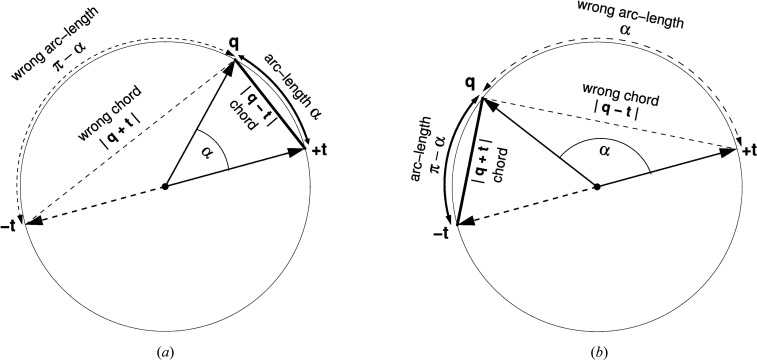

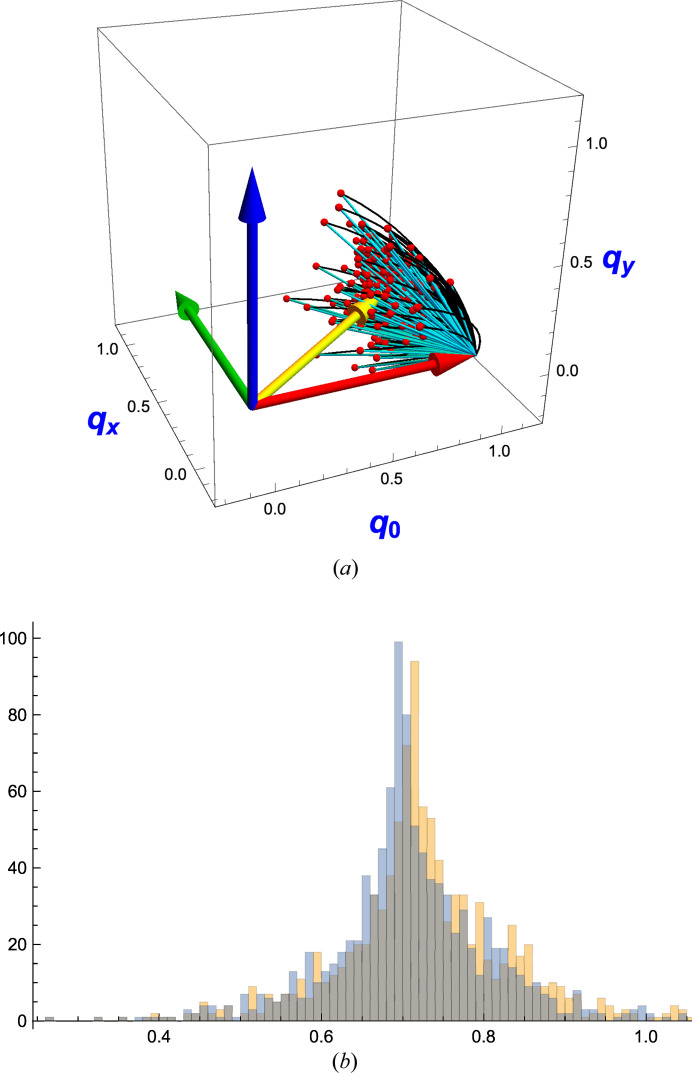

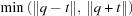

as illustrated in Fig. 4. An efficient implementation of this is to take

$$d_{\mathrm{geodesic}}(q_1, q_2) = \arccos\left(|q_1 \cdot q_2|\right).$$

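We now seek to define an ideal minimizing $L_2$ orientation-frame measure, comparable to our minimizing Euclidean RMSD measure, but constructed from geodesic arc lengths on the quaternion hypersphere instead of Euclidean distances in space. Thus to compare a test quaternion-frame data set $\{p_k\}$ to a reference data set $\{r_k\}$, we propose the geodesic-based least-squares measure

$$S_{\mathrm{geodesic}} = \sum_{k} \left(\arccos\left|(q \star p_k) \cdot r_k\right|\right)^2 = \sum_{k} \left(\arccos\left|q \cdot (r_k \star \bar{p}_k)\right|\right)^2, \tag{36}$$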

where we have used the identities of equation (3). When $q = q_{\mathrm{ID}}$ (the identity quaternion), the individual measures correspond to equation (35), and otherwise ‘$q \star p_k$’ is the exact analog of ‘$R(q) \cdot x_k$’ in equation (9), and denotes the quaternion rotation $q$ acting on the entire set $\{p_k\}$ to rotate it to a new orientation that we want to align optimally with the reference frames $\{r_k\}$. Analogously, for points on a sphere, the arccosine of an inner product is equivalent to a distance between points in Euclidean space.
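The sign-independent geodesic distance and the least-squares measure built from it can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: quaternions are taken to be unit 4-tuples ordered $(q_0, q_1, q_2, q_3)$, the product is the standard Hamilton product, and the helper names `dot4`, `qmul`, `d_geodesic` and `s_geodesic` are hypothetical.

```python
import math

def dot4(a, b):
    """Euclidean inner product of two quaternions stored as (q0, q1, q2, q3)."""
    return sum(x * y for x, y in zip(a, b))

def qmul(p, q):
    """Hamilton product p * q of two quaternions."""
    p0, p1, p2, p3 = p
    q0, q1, q2, q3 = q
    return (
        p0 * q0 - p1 * q1 - p2 * q2 - p3 * q3,
        p0 * q1 + p1 * q0 + p2 * q3 - p3 * q2,
        p0 * q2 - p1 * q3 + p2 * q0 + p3 * q1,
        p0 * q3 + p1 * q2 - p2 * q1 + p3 * q0,
    )

def d_geodesic(q1, q2):
    """Arc length min(alpha, pi - alpha), computed as arccos|q1 . q2|."""
    c = min(1.0, abs(dot4(q1, q2)))  # clamp round-off that pushes |cos| above 1
    return math.acos(c)

def s_geodesic(q, test, ref):
    """Sum over k of the squared geodesic distance between q * p_k and r_k."""
    return sum(d_geodesic(qmul(q, p), r) ** 2 for p, r in zip(test, ref))
```

Taking the absolute value of the inner product before the arccosine is what makes the result independent of the sign chosen for either quaternion, so `d_geodesic(q, q)` and `d_geodesic(q, -q)` both vanish.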

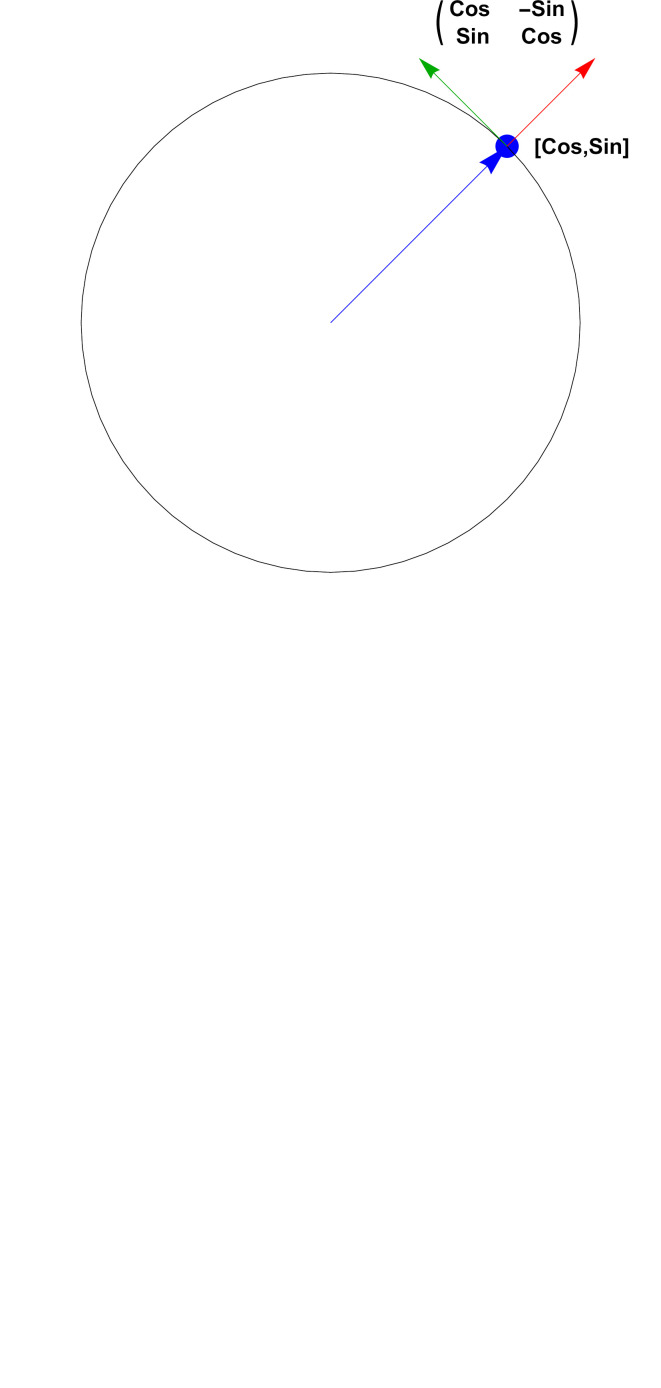

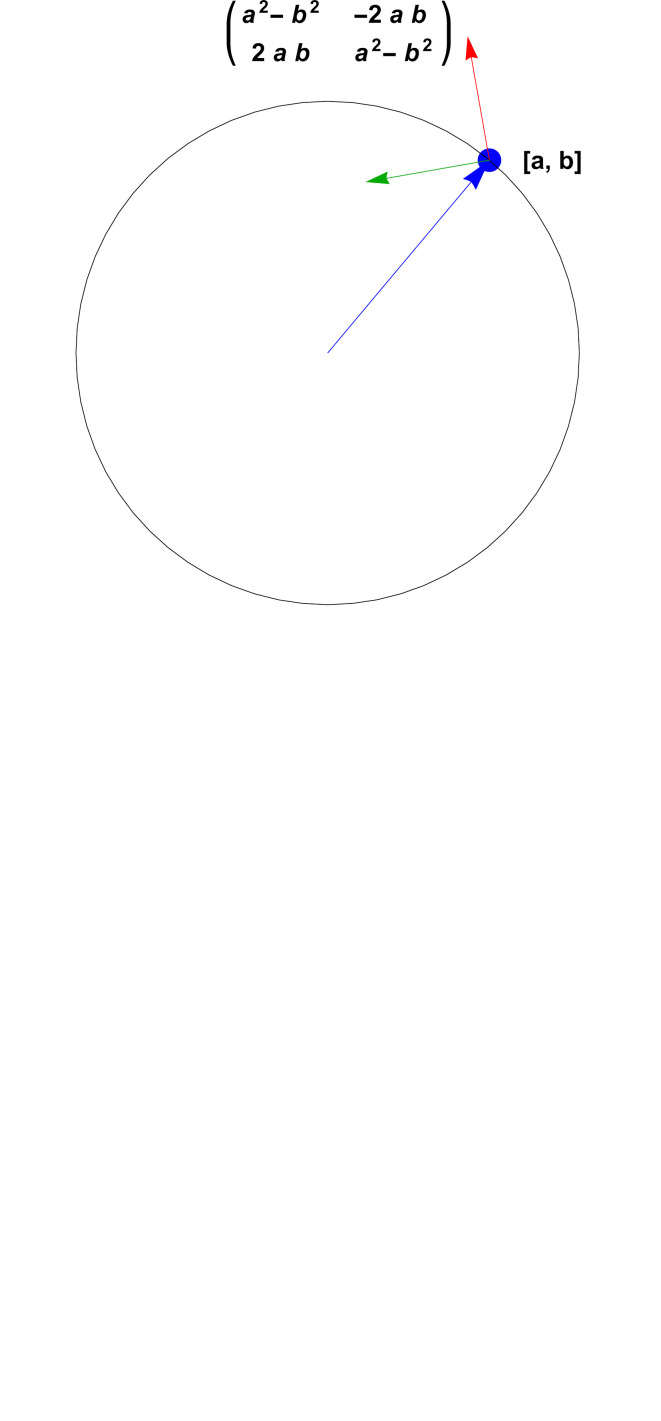

Figure 4.

Geometric context involved in choosing a quaternion distance that will result in the correct average rotation matrix when the quaternion measures are optimized. Because the quaternion vectors represented by $t$ and $-t$ give the same rotation matrix, one must choose $|\cos\alpha|$, or the minima, that is $\min(\alpha, \pi - \alpha)$ or $\min(\|q - t\|, \|q + t\|)$, of the alternative distance measures to get the correct items in the arc-length or chord measure summations. (a) and (b) represent the cases when the first or second choice should be made, respectively.

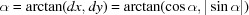

Remark: For improved numerical behavior in the computation of the quaternion inner-product angle between two quaternions, one may prefer to convert the arccosine to an arctangent form, $\alpha = \arctan(dx, dy)$ [remember the C math library uses the opposite argument order atan2(dy, dx)], with the parameters

which is somewhat more stable.
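A minimal Python sketch of the arctangent form follows. The parameter choice used here, $dx = |q_1 \cdot q_2|$ and $dy = \|q_1 - (q_1 \cdot q_2)\, q_2\|$ (the length of the part of $q_1$ perpendicular to $q_2$), is an assumption made for illustration: it is one standard way to obtain $|\cos\alpha|$ and $|\sin\alpha|$ for unit quaternions, and the function name `quat_angle_atan2` is hypothetical.

```python
import math

def quat_angle_atan2(q1, q2):
    """Sign-independent angle between unit quaternions via atan2(dy, dx).

    Assumed parameters: dx = |q1 . q2| and dy = ||q1 - (q1 . q2) q2||,
    which equal |cos(alpha)| and |sin(alpha)| when q1 and q2 have unit length.
    """
    dot = sum(a * b for a, b in zip(q1, q2))
    dx = abs(dot)
    perp = [a - dot * b for a, b in zip(q1, q2)]  # part of q1 orthogonal to q2
    dy = math.sqrt(sum(c * c for c in perp))
    return math.atan2(dy, dx)  # note the (dy, dx) argument order
```

The benefit shows up for nearly coincident frames: for a separation angle near $10^{-9}$ the cosine rounds to exactly 1 in double precision and the arccosine returns 0, whereas the sine component retains the angle.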

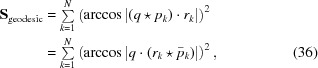

7.3. Adopting the solvable chord measure

Unfortunately, the geodesic arc-length measure does not fit into the linear algebra approach that we were able to use to obtain exact solutions for the Euclidean-data-alignment problem treated so far. Thus we are led to investigate instead a very close approximation to $d_{\mathrm{geodesic}}(q_1, q_2)$ that does correspond closely to the Euclidean data case and does, with some contingencies, admit exact solutions. This approximate measure is the chord distance, whose individual distance terms analogous to equation (35) take the form of a closely related pseudometric (Huynh, 2009; Hartley et al., 2013),

$$d_{\mathrm{chord}}(q_1, q_2) = \min\left(\|q_1 - q_2\|,\ \|q_1 + q_2\|\right), \qquad 0 \le d_{\mathrm{chord}}(q_1, q_2) \le \sqrt{2}. \tag{37}$$

We compare the geometric origins for equation (35) and equation (37) in Fig. 4. Note that the crossover point between the two expressions in equation (37) is at $\pi/2$, so the hypotenuse of the right isosceles triangle at that point has length $\sqrt{2}$.

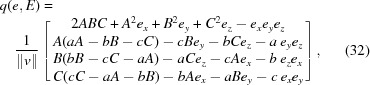

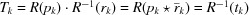

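The solvable approximate optimization function analogous to $\|R \cdot x - y\|^2$ that we will now explore for the quaternion-frame alignment problem will thus take the form that must be minimized as

$$S_{\mathrm{chord}} = \sum_{k} \left(\min\left(\|(q \star p_k) - r_k\|,\ \|(q \star p_k) + r_k\|\right)\right)^2. \tag{38}$$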

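We can convert the sign ambiguity in equation (38) to a deterministic form like equation (35) by observing, with the help of Fig. 4, that

$$\|q_1 - q_2\|^2 = 2 - 2\, q_1 \cdot q_2, \qquad \|q_1 + q_2\|^2 = 2 + 2\, q_1 \cdot q_2. \tag{39}$$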

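Clearly $(2 - 2|q_1 \cdot q_2|)$ is always the smaller of the two values. Thus minimizing equation (38) amounts to maximizing the now-familiar cross-term form, which we can write as

$$\Delta_{\mathrm{chord}}(q) = \sum_{k} \left|(q \star p_k) \cdot r_k\right| = \sum_{k} \left|q \cdot (r_k \star \bar{p}_k)\right| = \sum_{k} \left|q \cdot t_k\right|. \tag{40}$$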

Here we have used the identity $(q \star p) \cdot r = q \cdot (r \star \bar{p})$ from equation (3) and defined the quaternion displacement or ‘attitude error’ (Markley et al., 2007)

$$t_k = r_k \star \bar{p}_k. \tag{41}$$

Note that we could have derived the same result using equation (2) to show that $\|q \star p - r\| = \|q \star p - r\|\,\|\bar{p}\| = \|q - r \star \bar{p}\|$.
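Both facts used in this reduction are easy to confirm numerically: the inner-product identity $(q \star p) \cdot r = q \cdot (r \star \bar{p})$, and the statement that the squared chord pseudometric equals $2 - 2|q \cdot t|$ with $t = r \star \bar{p}$. The Python sketch below is only a spot check on arbitrarily chosen sample quaternions, assuming the Hamilton product and $(q_0, q_1, q_2, q_3)$ ordering; all helper names are hypothetical.

```python
import math

def dot4(a, b):
    return sum(x * y for x, y in zip(a, b))

def qmul(p, q):
    """Hamilton product p * q."""
    p0, p1, p2, p3 = p
    q0, q1, q2, q3 = q
    return (
        p0 * q0 - p1 * q1 - p2 * q2 - p3 * q3,
        p0 * q1 + p1 * q0 + p2 * q3 - p3 * q2,
        p0 * q2 - p1 * q3 + p2 * q0 + p3 * q1,
        p0 * q3 + p1 * q2 - p2 * q1 + p3 * q0,
    )

def qconj(q):
    """Quaternion conjugate (inverse for unit quaternions)."""
    return (q[0], -q[1], -q[2], -q[3])

def normalize(q):
    n = math.sqrt(dot4(q, q))
    return tuple(c / n for c in q)

def d_chord(q1, q2):
    """Chord pseudometric min(||q1 - q2||, ||q1 + q2||)."""
    minus = math.sqrt(sum((a - b) ** 2 for a, b in zip(q1, q2)))
    plus = math.sqrt(sum((a + b) ** 2 for a, b in zip(q1, q2)))
    return min(minus, plus)

# Arbitrary sample data (illustrative values only).
q = normalize((1.0, 2.0, 3.0, 4.0))
p = normalize((2.0, -1.0, 0.5, 3.0))
r = normalize((-1.0, 0.3, 2.0, 1.0))

t = qmul(r, qconj(p))                    # displacement t = r * conj(p)
lhs = dot4(qmul(q, p), r)                # (q * p) . r
rhs = dot4(q, t)                         # q . (r * conj(p))
chord_sq = d_chord(qmul(q, p), r) ** 2   # squared chord distance
cross = 2.0 - 2.0 * abs(rhs)             # 2 - 2 |q . t|
```

For these samples `lhs` agrees with `rhs` and `chord_sq` agrees with `cross` to round-off, which is why minimizing the chord measure is equivalent to maximizing the sum of $|q \cdot t_k|$.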

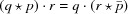

There are several ways to proceed to our final result at this point. The simplest is to pick a neighborhood in which we will choose the samples of $q$ that include our expected optimal quaternion, and adjust the sign of each data value $t_k$ to $\tilde{t}_k$ by the transformation

$$\tilde{t}_k = t_k \, \mathrm{sign}(q \cdot t_k) \quad \Longrightarrow \quad |q \cdot t_k| = q \cdot \tilde{t}_k. \tag{42}$$

The neighborhood of $q$ matters because, as argued by Hartley et al. (2013), even though the allowed range of 3D rotation angles is $\theta \in (-\pi, \pi)$ [or quaternion sphere angles $\alpha \in (-\pi/2, \pi/2)$], convexity of the optimization problem cannot be guaranteed for collections outside local regions centered on some $\theta_0$ of size $\theta_0 \in (-\pi/2, \pi/2)$ [or $\alpha_0 \in (-\pi/4, \pi/4)$]: beyond this range, local basins may exist that allow the mapping equation (42) to produce distinct local variations in the assignments of the $\{\tilde{t}_k\}$ and in the solutions for $q_{\mathrm{opt}}$. Within considerations of such constraints, equation (42) now allows us to take the summation outside the absolute value, and write the quaternion-frame optimization problem in terms of maximizing the cross-term expression

$$\Delta_{\mathrm{chord}}(q) = \sum_{k} q \cdot \tilde{t}_k = q \cdot V, \tag{43}$$

where $V = \sum_k \tilde{t}_k$ is proportional to the mean of the quaternion displacements $\{\tilde{t}_k\}$, defining their chord-distance quaternion average. $V$ also clearly plays a role analogous to the Euclidean RMSD profile matrix $M$. However, since equation (43) is linear in $q$, we have the remarkable result that, as noted in the treatment of Hartley et al. (2013) regarding the quaternion $L_2$ chordal-distance norm, the solution is immediate, being simply

$$q_{\mathrm{opt}} = \frac{V}{\|V\|}, \tag{44}$$

since that obviously maximizes the value of $q \cdot V$. This gives the maximal value of the measure as

$$\Delta_{\mathrm{chord}}(q_{\mathrm{opt}}) = \|V\|, \tag{45}$$

and thus $\|V\|$ is the exact orientation-frame analog of the spatial RMSD maximal eigenvalue $\epsilon_{\mathrm{opt}}$, except it is far easier to compute.
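The whole chord-measure procedure (form the displacements, fix their signs relative to a chosen neighborhood, sum, normalize) can be sketched as follows. This is an illustrative Python sketch under stated assumptions rather than a definitive implementation: quaternions are unit 4-tuples ordered $(q_0, q_1, q_2, q_3)$ with the Hamilton product, and the function name `align_frames_chord` and its parameter `q_guess` (a quaternion in the neighborhood of the expected optimum, defaulting to the identity) are hypothetical.

```python
import math

def dot4(a, b):
    return sum(x * y for x, y in zip(a, b))

def qmul(p, q):
    """Hamilton product p * q."""
    p0, p1, p2, p3 = p
    q0, q1, q2, q3 = q
    return (
        p0 * q0 - p1 * q1 - p2 * q2 - p3 * q3,
        p0 * q1 + p1 * q0 + p2 * q3 - p3 * q2,
        p0 * q2 - p1 * q3 + p2 * q0 + p3 * q1,
        p0 * q3 + p1 * q2 - p2 * q1 + p3 * q0,
    )

def qconj(q):
    return (q[0], -q[1], -q[2], -q[3])

def align_frames_chord(test, ref, q_guess=(1.0, 0.0, 0.0, 0.0)):
    """Return (q_opt, ||V||) for the chord-distance frame alignment.

    test, ref: matched lists of unit quaternions p_k and r_k.
    q_guess:   assumed neighborhood of the optimum, used only to fix signs.
    """
    v = [0.0, 0.0, 0.0, 0.0]
    for p, r in zip(test, ref):
        t = qmul(r, qconj(p))                            # t_k = r_k * conj(p_k)
        sign = 1.0 if dot4(q_guess, t) >= 0.0 else -1.0  # sign fix of eq. (42)
        v = [vi + sign * ti for vi, ti in zip(v, t)]     # V = sum of signed t_k
    norm_v = math.sqrt(sum(vi * vi for vi in v))
    if norm_v == 0.0:
        raise ValueError("V vanishes; the optimal quaternion is undefined")
    return tuple(vi / norm_v for vi in v), norm_v        # q_opt = V / ||V||
```

As a consistency check, if every reference frame is an exact rotation of its test frame, $r_k = \pm\, q_{\mathrm{true}} \star p_k$, then each sign-corrected displacement equals $q_{\mathrm{true}}$ (up to the overall sign fixed by `q_guess`), so the function returns $q_{\mathrm{true}}$ and $\|V\|$ equal to the number of frames. The sign step is only as reliable as `q_guess`; for widely scattered data the caveats about local basins discussed above still apply.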

7.4. Illustrative example

Using the quaternion display method described in Appendix B and illustrated in Fig. 12, we present in Fig. 5(a) a representative quaternion-frame reference data set, then in (b) we include a matching set of rotated noisy test data (small black dots), and draw the arc and chord distances (see also Fig. 4) connecting each test-reference point pair in the quaternion space. In Fig. 5(c,d), we show the results of the quaternion-frame alignment process using conceptually the same slerp of equation (15) to transition from the raw state at $q(s = 0) = q_{\mathrm{ID}}$ to $q(s = 0.5)$ for (c) and $q(s = 1.0) = q_{\mathrm{opt}}$ for (d). The yellow arrow is the axis of rotation specified by the spatial part of the optimal quaternion.

Figure 5.

3D components of a quaternion orientation data set. (a) A quaternion reference set, color-coded by the sign of $q_0$. (b) Exact quaternion arc-length distances (green arcs) versus chord distances (black lines) between the test points (black dots) and the reference points. (c) Part way from the starting state to the aligned state, at s = 0.5. (d) The final best alignment at s = 1.0. The yellow arrow is the direction of the quaternion eigenvector; when scaled, the length is the sine of half the optimal rotation angle.

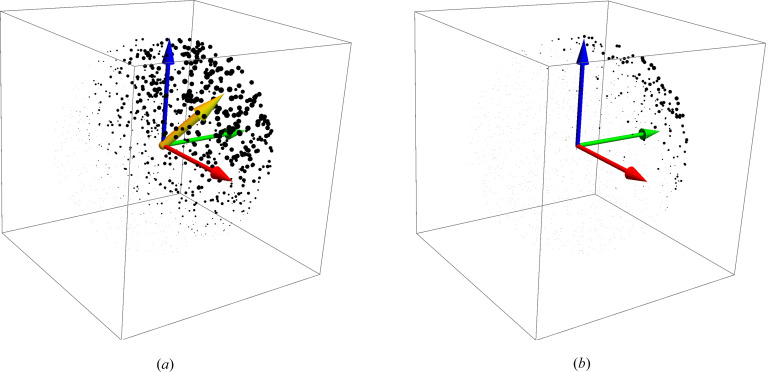

The rotation-averaging visualization of the optimization process, though it has exactly the same optimal quaternion, is quite different, since all the quaternion data collapse to a list of single small quaternions $t_k = r_k \star \bar{p}_k$. As illustrated in Fig. 6, with compatible sign choices, the $t_k$’s cluster around the optimal quaternion, which is clearly consistent with being the barycenter of the quaternion differences, intuitively the place to which all the quaternion frames need to be rotated to optimally coincide. As before, the yellow arrow is the axis of rotation specified by the spatial part of the optimal quaternion. Next, Fig. 7 addresses the question of how the rigorous arc-length measure is related to the chord-length measure that can be treated using the same methods as the spatial RMSD optimization. In parallel to Fig. 5(b), Fig. 7(a) shows essentially the same comparison for the $t_k$ quaternion-displacement version of the same data. In Fig. 7(b), we show the histograms of the chord distances to a sample point, the origin in this case, versus the arc-length or geodesic distances. They obviously differ, but in fact for plausible simulations, the arc-length numerical optimal quaternion barycenter differs from the chord-length counterpart by only a fraction of a degree. These issues are studied in more detail in the supporting information.

Figure 6.