Abstract

The increasing throughput of DNA sequencing technologies creates a need for faster algorithms. The fate of most reads is to be mapped to a reference sequence, typically a genome. Modern mappers rely on heuristics to gain speed at a reasonable cost for accuracy. In the seeding heuristic, short matches between the reads and the genome are used to narrow the search to a set of candidate locations. Several seeding variants used in modern mappers show good empirical performance but they are difficult to calibrate or to optimize for lack of theoretical results. Here we develop a theory to estimate the probability that the correct location of a read is filtered out during seeding, resulting in mapping errors. We describe the properties of simple exact seeds, skip seeds and MEM seeds (Maximal Exact Match seeds). The main innovation of this work is to use concepts from analytic combinatorics to represent reads as abstract sequences, and to specify their generating function to estimate the probabilities of interest. We provide several algorithms, which together give a workable solution for the problem of calibrating seeding heuristics for short reads. We also provide a C implementation of these algorithms in a library called Sesame. These results can improve current mapping algorithms and lay the foundation of a general strategy to tackle sequence alignment problems. The Sesame library is open source and available for download at https://github.com/gui11aume/sesame.

Keywords: C library, probability, analytic combinatorics, seeding accuracy, heuristic algorithms

1. Introduction

1.1. Mapping Reads to Genomes

Say we use an imperfect instrument to sequence a small fragment of DNA. If we know its genome of origin, how can we find the location of the fragment in this genome?

The advent of high-throughput sequencing has put this question at the center of countless applications in genetics, such as discovering disease-causing mutations, selecting breeds of interest in agriculture or tracing human migrations.

We will refer to this as the true location problem. The answer to the problem depends on the length of the read, on the error rate of the sequencer and on one key feature of the genome: its repeat structure. Most genomes contain repetitive sequences, i.e., relatively large subsequences that are present at multiple locations. If the sequenced DNA fragment comes from a repetitive sequence, it may be impossible to map the read with certainty.

In general, repetitive sequences are not identical but merely homologous—meaning that their similarity is very unlikely to occur by chance. So if the sequenced DNA fragment originates from a repetitive sequence, we can map the read only if we can rule out all the candidates except one. This, in turn, depends on the similarity between the duplicates and on the error rate of the sequencer.

Since repetitive sequences play a central role in the problem, we give the terms “targets” and “duplicates” a meaning that will facilitate the exposition of the theory.

Definition 1. The target is the DNA fragment that was actually sequenced. Duplicates are sequences of the genome that share homology with the target (in genetics they are often referred to as paralogs). In this article we will focus on short reads from complex eukaryotic genomes, so for concreteness the reader can assume that fragments are 30–300 bp long and that duplicates have above 75% identity with the target.

The difficulty of the true location problem is due to sequencing errors. Occasionally, the sequence of a DNA fragment can be closer to one of the duplicates than to the target. So the true location of a DNA fragment is not always the best (as measured by the identity between the sequence and the candidate location). This naturally leads to asking how we can identify the best location of the fragment in the genome.

We will refer to this as the best location problem. It amounts to finding the optimal alignment between two sequences, and for this reason has received substantial attention in bioinformatics. For the purpose of developing a theory rooted in statistics, our main concern is to address the true location problem, but for simplicity, we will assume that the true location is also the best. When applicable, we will clarify the implications of this hypothesis in the relevant sections, and we will see how it impacts the theory as a whole in section 8.1.

1.2. Seeding Heuristics

Exact alignment algorithms were designed to solve the best location problem (Needleman and Wunsch, 1970; Smith and Waterman, 1981), but they are too slow to process the large amount of data generated by modern sequencers. Instead, one uses heuristic methods, i.e., algorithms that run faster but may return an incorrect result (Waterman, 1984).

The most popular heuristic for mapping DNA sequences is a filtration method called “seed-and-extend.” The principle is to first identify seeds, defined as short regions of high similarity between the read and the genome, and then use an exact alignment algorithm at the seeded locations to evaluate the candidates and identify the optimum. Exact alignment algorithms, such as the Needleman-Wunsch (Needleman and Wunsch, 1970) or the Smith-Waterman (Smith and Waterman, 1981) algorithms return the distance or the similarity between two sequences according to some quantitative criterion. They are exact in the sense that they always return the correct answer, unlike heuristic algorithms. The seed-and-extend strategy was first proposed in FASTA (Lipman and Pearson, 1985) and BLAST (Altschul et al., 1990) to search for homology in sequence databases of proteins and DNA.

There are efficient methods to extract seeds, so it is possible to quickly home in on a small set of candidates and to reduce the search space of the alignment algorithm. The disadvantage is that the target may not be in the candidate set, in which case the read cannot be mapped correctly.

As a consequence, seeding methods induce a trade-off between speed and accuracy: If the filtration is set to produce a large candidate set, the target is likely to be discovered, with the downside that checking all the candidates with an exact alignment algorithm will take long. Conversely, if the filtration is set to produce a small candidate set, the process will run faster but the target is more likely to be missed.

Importantly, the trade-off depends on the seeding method. This means that mapping algorithms can run faster at no cost for accuracy if we can find better seeding strategies. Progress on this line of research has largely benefited from the improvement of computer hardware and from the development of optimized data structures. There already exists a large body of literature on the design of seeding algorithms; the interested reader can find examples of those in references (Ma et al., 2002; Brejová, 2003; Li et al., 2004; Kucherov et al., 2005; Sun and Buhler, 2005; Xu et al., 2006; Lin et al., 2008). Sun and Buhler (2006) and Li and Homer (2010) present high-level comparisons of different designs, and Navarro (2001) gives a global overview of filtration methods in pattern matching.

1.3. The Two Types of Seeding Failure

Filtering heuristics are considered to fail whenever the target is not in the candidate set, but here we must be more specific and distinguish two kinds of failure: In the first kind, the candidate set contains a duplicate of the target but does not contain the target itself; in the second kind, the candidate set contains neither the target nor any duplicate.

The distinction is important because the duplicates of the target are similar to the read (due to their similarity to the target), so a failure of the first kind often looks like a success. In contrast, a failure of the second kind is easier to flag because in this case the candidates are not similar to the read. We will simply assume that seeding failures of the second kind are always detected (as explained in section 8.3), so that we can focus on the more difficult case of seeding failures of the first kind.

Before going further, we introduce three terms that will simplify the exposition.

Definition 2. The output of the seeding step is the candidate set. The candidate set is the list of genomic locations where the read can be potentially mapped. The read is always mapped to one element of the candidate set. The seeding step is said to be

“on-target” if the candidate set contains the target,

“off-target” if the candidate set contains a duplicate but not the target,

“null” if the candidate set contains neither.

In this article, we will always consider that a genomic location is in the candidate set if and only if the read contains at least one seed with a perfect match for this genomic location.
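The three outcomes of Definition 2 can be written as a small decision function. The sketch below is only an illustration: the function name `classify_seeding` and the representation of locations as strings are assumptions made for the example, not part of the theory or of Sesame.

```python
def classify_seeding(candidate_set, target, duplicates):
    """Classify the outcome of the seeding step following Definition 2.

    candidate_set: set of genomic locations returned by the seeding step.
    target: the location that was actually sequenced.
    duplicates: locations that share homology with the target.
    """
    if target in candidate_set:
        # The target is present, whether or not duplicates are present too.
        return "on-target"
    if any(dup in candidate_set for dup in duplicates):
        # At least one duplicate is present but the target is missing.
        return "off-target"
    # Neither the target nor any duplicate is in the candidate set.
    return "null"


# Hypothetical locations, used only for illustration.
target = "chr1:1000"
duplicates = ["chr2:5000", "chr7:300"]
outcome = classify_seeding({"chr2:5000"}, target, duplicates)
```

Note that a candidate set containing both the target and a duplicate is on-target: only the absence of the target makes seeding off-target.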

With our assumptions, a read is mapped to the wrong location if and only if the candidate set is off-target. Indeed, if the candidate set is null, the read is not mapped, and if the candidate set is on-target, the correct location is discovered at the alignment step. The equivalence is granted by the assumption that the true location is also the best, reducing the mapping problem to a seeding problem. That being said, the true location is not always the best in practical applications. We will show in section 8.1 that off-target seeding can be responsible for most of the mapping errors even without the assumption above, but for now we maintain the strict equivalence between mapping errors and off-target seeding.

Focusing on popular heuristics to map short reads, our aim is to develop a method to estimate the probability that the candidate set is off-target. Previous work pioneered a method to compute seeding probabilities but it did not distinguish off-target from null seeding (Filion, 2017, 2018), and therefore did not provide a way to estimate the frequency of mapping errors. Other authors investigated the reliability of mapping algorithms (Menzel et al., 2013), but they focused on the probabilities of random hits, recognizing that addressing the problem of incorrect mapping requires taking into consideration the repeat structure of the genome.

The rest of the article is organized as follows: section 2 presents common seeding strategies used in bioinformatics, section 3 presents the basic concepts of analytic combinatorics that will be required to compute seeding probabilities, sections 4 to 6 treat the cases of exact seeds, skip seeds and MEM seeds, three common seeding heuristics used for mapping, section 7 presents Sesame, a C library implementing the main results of the theory, section 8 returns to the mapping problem and revisits the assumptions of the model, and finally section 9 provides some perspectives on the present work. Appendix A gathers for reference all the definitions encountered in the text, and Appendix B contains proofs and complements omitted from the main text.

2. Seeds

The term “seed” has different meanings in computational biology. It can designate a part of the read, a part of the genome, a particular sequence motif, or a structured pattern of matches. Also, a seed does not always refer to an exact match. For instance, the algorithm PatternHunter (Ma et al., 2002) uses spaced seeds that tolerate mismatches. To avoid any confusion, we will adopt the convention below.

Definition 3. A seed is a subsequence of the read that has size at least γ (defined by the context of the problem) and that has at least one perfect match in the reference genome. Every genomic match of every seed is in the candidate set.

When a seed matches a given location of the genome, we say that it is a seed for that location. This is particularly useful in expressions such as “seed for the target” or “seed for a duplicate.”

This definition presents a computational challenge: to know if a given subsequence of a read is a seed, we need to know if it exists somewhere in the genome. This is a nontrivial problem in itself, but fortunately there are practical methods to solve it, even when the reference genome is very large.

These algorithms are crucial for the present theory, but describing them in depth is outside the scope of this document. Let us just mention that all the methods belong to a family known as exact offline string matching algorithms, where “offline” means that sequences are looked up in an index instead of the genome itself. Online methods can be used when the reference genome is not indexed (Faro and Lecroq, 2013), but this case is of little relevance in the present context.

The index is usually a hash table or a variant of the so-called FM-index (Ferragina and Manzini, 2000, 2005). Hash tables are typically used to index k-mers, which makes them useful to search for seeds of fixed size k (see Manekar and Sathe, 2018 for a recent benchmark of k-mer hashing algorithms). In contrast, some text-indexing structures have no set size so they can be used to search for seeds of different lengths. This is the case of the FM-index, a compact data structure based on the suffix array (Manber and Myers, 1993) and on the Burrows-Wheeler transform (Burrows and Wheeler, 1994), emulating a suffix trie with a much smaller memory footprint (Ferragina and Manzini, 2000, 2005).
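To make the hash-table option concrete, here is a minimal Python sketch of a k-mer index. The function name `build_kmer_index` and the toy genome are assumptions made for the example; real indexes use far more compact encodings, and this is not how any particular mapper implements it.

```python
from collections import defaultdict


def build_kmer_index(genome, k):
    """Map every k-mer of the genome to the list of its (name, position) hits.

    genome: dict mapping a sequence name to its nucleotide string.
    """
    index = defaultdict(list)
    for name, seq in genome.items():
        for pos in range(len(seq) - k + 1):
            index[seq[pos:pos + k]].append((name, pos))
    # Return a plain dict so that lookups of absent k-mers do not create entries.
    return dict(index)


# Toy genome, made up for illustration.
index = build_kmer_index({"chr": "ACGTACGT"}, 4)
hits = index.get("ACGT", [])   # offline lookup: the genome itself is not scanned
```

Once the index is built, testing whether a subsequence of size k is a seed is a single dictionary lookup, which is what "offline" refers to above.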

Other methods can be efficient (e.g., running a bisection on the suffix array; Dobin et al., 2013) but the FM-index is currently the most popular choice for seeding methods. For MEM seeds defined below, it is even the only practical option (Khan et al., 2009; Vyverman et al., 2013; Fernandes and Freitas, 2014; Khiste and Ilie, 2015). Overall, the detail is of little interest for the theory. We simply assume that seeds are known at all times without ambiguity because this problem has several practical solutions.

2.1. Exact Seeds

Exact seeds are seeds of fixed size γ. In other words, when using exact seeds, the candidate set consists of all the genomic locations for which there is a perfect match of size γ in the read. This seeding heuristic was used in the first version of BLASTN (Altschul et al., 1990), but it has become unpopular for producing many short spurious hits.

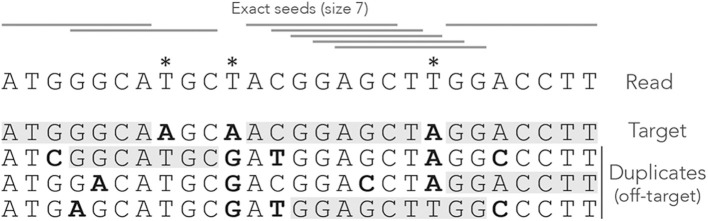

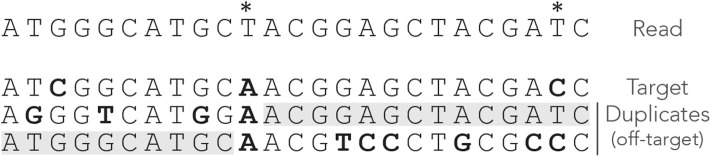

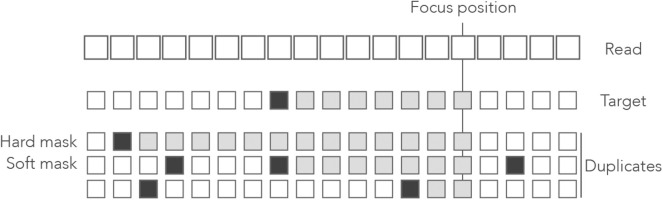

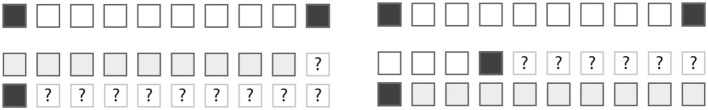

Figure 1 shows the exact seeds from an example read with three miscalled nucleotides. The sequenced DNA fragment has three duplicates so the seeds can match four possible locations.

Figure 1.

Exact seeding. A sequenced DNA fragment (Read) is shown above the actual molecule (Target), and above three paralogs of the target (Duplicates). Sequencing errors are marked on the read (all three are A misread as T). The mismatches between the genomic sequences and the read are indicated in bold. The exact seeds of size γ = 7 are indicated as horizontal gray lines above the read. Matching regions in the genomic sequences are shadowed in gray. Several overlapping seeds accumulate at the center of the read, which is typical of exact seeds.

Observe that erroneous nucleotides can belong to exact seeds because they sometimes match a duplicate. For instance, the first sequencing error matches all the duplicates and belongs to an off-target seed for the first duplicate. However, sequencing errors that are mismatches for all the sequences cannot belong to a seed. This is the case of the second sequencing error in the example, creating a local deficit of seeds.

Note the clutter in the middle of the read, where consecutive seeds match consecutive sequences at the same location. This is typical for exact seeds and is considered a nuisance for the implementation. Indeed, it is a waste of computer resources to discover matches in sequences that are already in the candidate set. In addition, this seeding method is not particularly sensitive compared to spaced seeds (Ma et al., 2002) so it is used only in a few specific applications. Nevertheless, it will be useful for the development of the present theory.
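The behavior of exact seeds can be reproduced on a toy example. The sketch below is an assumption-laden illustration: the sequences are made up (they are not those of Figure 1), the function name `exact_seeds` is hypothetical, and the genome is searched by plain substring lookup instead of an index.

```python
def exact_seeds(read, genome, gamma):
    """List the exact seeds of size gamma found in the read.

    genome: dict mapping a sequence name to its nucleotide string.
    Returns a list of (start, subsequence, matched_names) tuples.
    """
    seeds = []
    for start in range(len(read) - gamma + 1):
        kmer = read[start:start + gamma]
        matched = [name for name, seq in genome.items() if kmer in seq]
        if matched:
            # A subsequence is a seed only if it has a perfect match somewhere.
            seeds.append((start, kmer, matched))
    return seeds


# Toy sequences: the duplicate differs from the target at one position,
# and the read carries one sequencing error (G read as T at index 7).
genome = {"target": "GATTACAGGC", "duplicate": "GATTTCAGGC"}
read = "GATTACATGC"
seeds = exact_seeds(read, genome, gamma=4)
candidates = {name for _, _, names in seeds for name in names}
```

In this toy case four overlapping seeds are found at consecutive positions, all of them matching the target, which mirrors the clutter described above.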

2.2. Skip Seeds

Skip seeds have a fixed size γ, but unlike exact seeds they cannot start at every nucleotide. Instead, a fixed number of nucleotides is skipped between consecutive seeds. This is a way to reduce the overlapping matches at the same location, at the cost of sensitivity. This seeding heuristic is the core of Bowtie2 (Langmead and Salzberg, 2012), where seeds have size γ = 16 and are separated by 10 nucleotides (nine positions are skipped). We will refer to seeds where n nucleotides are skipped as “skip-n seeds.” For instance, Bowtie2 uses skip-9 seeds.

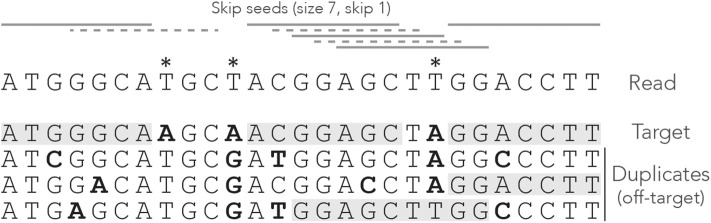

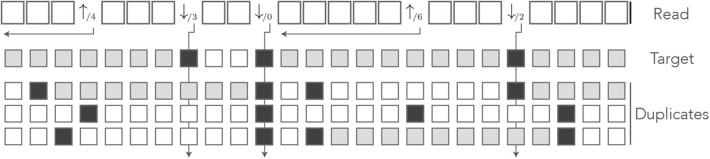

Figure 2 shows what happens when exact seeds are replaced by skip-1 seeds on the read of Figure 1. Here the size is still γ = 7 but 1 nucleotide is skipped between seeds. This amounts to removing every second seed. The consequence is that there are fewer overlapping matches at the center of the read, but the only seed for the second duplicate is lost. This is a rather positive outcome because there is one off-target location fewer in the candidate set, but the same might happen to the target.

Figure 2.

Example of skip seeds. The sequences and the annotations are the same as in Figure 1, but here we use skip-1 seeds. In other words, seeds can never start at nucleotides 2, 4, 6 etc. To highlight the difference with Figure 1, the missing seeds are represented by dotted lines.

It is clear that skipping nucleotides reduces the sensitivity of the seeding step, but to what extent? One could test this empirically, but the answer depends on the seed length, the number of nucleotides that are skipped, the error rate of the sequencer and the size of the reads. The present theory will allow us to make general statements about the performance of skip seeds against exact seeds in different contexts.
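The only difference between exact seeds and skip-n seeds is the set of allowed start positions, which the following sketch enumerates. The function name `skip_seed_starts` and the read length of 50 are assumptions chosen for the example.

```python
def skip_seed_starts(read_length, gamma, skip):
    """Return the 0-based positions where a skip-n seed of size gamma may start.

    skip is the number n of nucleotides skipped between consecutive seeds;
    skip = 0 recovers exact seeds, which may start at every position.
    """
    return list(range(0, read_length - gamma + 1, skip + 1))


# Settings quoted in the text for Bowtie2 (gamma = 16, skip-9), on a 50 bp read.
bowtie2_like = skip_seed_starts(50, 16, 9)
# Same read with exact seeds, for comparison.
exact = skip_seed_starts(50, 16, 0)
```

With these settings only 4 of the 35 possible start positions are queried, which shows how much of the lookup work is saved and, conversely, how many potential seeds are never examined.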

2.3. MEM Seeds

MEM seeds (where MEM stands for Maximal Exact Match) are somewhat harder to define. Unlike exact seeds and skip seeds, their size is variable. They are used in BWA-MEM (Li, 2013) where they give good empirical results. To describe MEM seeds, let us first clarify the meaning of “Maximal Exact Match.”

Definition 4. A Maximal Exact Match (MEM) is a subsequence of the read that is present in the reference genome and that cannot be extended—either because the read ends or because the extended subsequence is not in the genome.

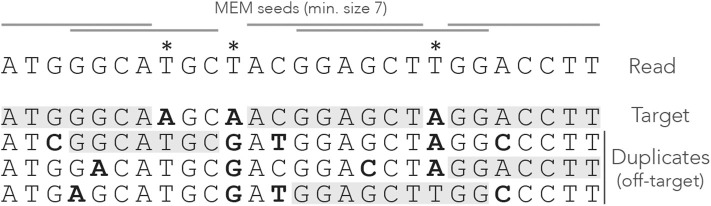

A MEM seed is simply a MEM of size γ or greater. Figure 3 shows what happens when using MEM seeds on the read of Figure 1. Observe that the clutter at the center of the read has disappeared because consecutive matches are fused into a few MEM seeds.

Figure 3.

Example of MEM seeds. The sequences and the annotations are the same as in Figure 1, but here we use MEM seeds of minimum size γ = 7. The clutter at the center of the read has disappeared and there is at least one seed for each sequence.

Two consecutive MEM seeds can overlap, in which case they always match distinct sequences of the genome (otherwise neither of them would be a MEM seed). There does not have to be any overlap though, because a nucleotide can be a mismatch against all the sequences, like the second read error for instance.

Note that a MEM does not always match a single subsequence of the genome. For instance, the rightmost MEM seed matches two distinct genomic subsequences. This case motivates the following definition, which will play an important role later.

Definition 5. A strict MEM seed has a single match in the genome. A shared MEM seed has several matches in the genome.
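Definitions 4 and 5 can be checked on small examples with a brute-force search. The sketch below is not how MEMs are found in practice (mappers use the FM-index): the names `count_matches` and `mem_seeds` and the toy sequences are assumptions made for the illustration.

```python
def count_matches(pattern, genome):
    """Count the occurrences (overlaps included) of pattern in all the sequences."""
    total = 0
    for seq in genome.values():
        pos = seq.find(pattern)
        while pos != -1:
            total += 1
            pos = seq.find(pattern, pos + 1)
    return total


def mem_seeds(read, genome, gamma):
    """Return the MEM seeds of the read as (start, subsequence, n_matches) tuples."""
    seeds = []
    prev_end = 0  # end of the longest match starting at the previous position
    for start in range(len(read)):
        end = start
        # Extend to the right for as long as the subsequence is in the genome.
        while end < len(read) and count_matches(read[start:end + 1], genome) > 0:
            end += 1
        # The match cannot be extended to the left only if it ends strictly
        # after the longest match that starts one position earlier.
        if end > prev_end and end - start >= gamma:
            seeds.append((start, read[start:end], count_matches(read[start:end], genome)))
        prev_end = max(prev_end, end)
    return seeds


genome = {"target": "GATTACAGGC", "duplicate": "GATTTCAGGC"}
read = "GATTACATGC"
long_seeds = mem_seeds(read, genome, gamma=4)
short_seeds = mem_seeds(read, genome, gamma=2)
```

A seed with `n_matches == 1` is strict and a seed with `n_matches > 1` is shared. In this toy case, lowering γ from 4 to 2 adds two short shared seeds to the single strict seed.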

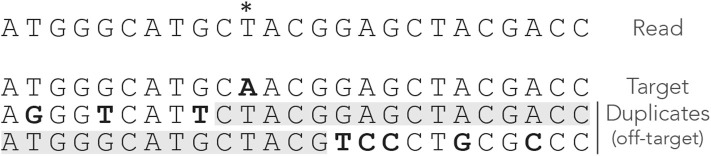

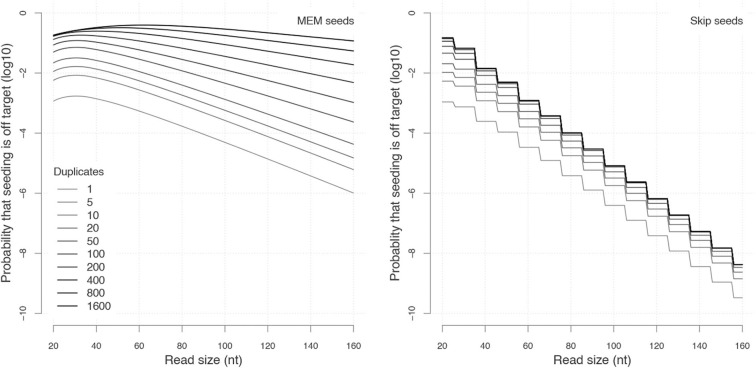

Compared to seeds of fixed size, MEM seeds have two counter-intuitive properties. The first is that there are cases where there cannot be any on-target seed, even when changing the minimum seed size γ. Figure 4 shows such an example. Even though there is a single sequencing error, the read has no MEM seed for the target. Lowering γ does not change this, so there is no way to discover the true location using MEM seeds (even though it is the best location).

Figure 4.

Issues with MEM seeds: inaccessible targets. The read, the MEM seeds and the sequences are represented as in Figure 3. The MEM seeds matching the two duplicates at the bottom effectively hide the target, so it cannot be discovered. This can occur even when the true location is the best candidate and when there is a single error on the read.

The second counter-intuitive property is that shortening a read can sometimes generate a MEM seed for the target. Figure 5 shows an example of this case. There is no MEM seed for the target, but there would be if the read were two nucleotides shorter on the right side. Indeed, in this case there would be a shared MEM seed matching the target and the first duplicate (provided γ ≤ 12).

Figure 5.

Issues with MEM seeds: too long reads. The read, the MEM seeds, and the sequences are represented as in Figure 3. There would be an on-target seed (shared) if the read were two nucleotides shorter. The true location is hidden by the last two nucleotides.

These examples show that MEM seeds can perform worse than seeds of fixed size. MEM seeds yield fewer candidates and therefore speed up the mapping process, but the question is at what cost? The theory developed here will allow us to compute the probability that a read has no MEM seed for the target and thus that the true location is missed at the seeding stage.

2.4. Spaced Seeds

Originally introduced by Califano and Rigoutsos (1993) and popularized by PatternHunter (Ma et al., 2002), spaced seeds feature “don't-care” positions allowing them to detect imperfect matches. Spaced seeds are represented by models such as “11*11,” here indicating that the seeds have length 5 and that the middle position is disregarded. At index time, the genome is scanned with the model so that nucleotides labeled “1” are concatenated and indexed. At search time, the query is scanned with the model and the concatenated nucleotides labeled “1” are looked up in the index.
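The index-time and search-time steps can be sketched in a few lines of Python. The function name `spaced_keys` and the toy sequences are assumptions made for the example; the model "11*11" is the one quoted in the text.

```python
def spaced_keys(sequence, model):
    """Scan a sequence with a spaced-seed model such as "11*11".

    Returns (position, key) pairs where the key concatenates the
    nucleotides that fall under the positions labeled "1".
    """
    kept = [i for i, symbol in enumerate(model) if symbol == "1"]
    return [
        (pos, "".join(sequence[pos + i] for i in kept))
        for pos in range(len(sequence) - len(model) + 1)
    ]


model = "11*11"

# Index time: store the keys of the (toy) genome.
index = {}
for pos, key in spaced_keys("ACGTACGT", model):
    index.setdefault(key, []).append(pos)

# Search time: the query differs from the genome at the don't-care position.
query = "ACCTA"
hits = [index.get(key, []) for _, key in spaced_keys(query, model)]
```

The query "ACCTA" differs from the genomic "ACGTA" at the middle nucleotide, yet both produce the key "ACTA", so the imperfect match is detected.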

Spaced seeds of weight w (the number of 1 in the model) have the same memory requirements as contiguous seeds of length w but they are more sensitive (Li et al., 2006), making them very attractive for homology search. They were used in the first generation of short-read mappers (Jocham et al., 1986; Lin et al., 2008; Chen et al., 2009; Rumble et al., 2009), but nowadays they find more applications in genome comparisons (Kiełbasa et al., 2011; Healy, 2016), metagenomics (Břinda et al., 2015; Ounit and Lonardi, 2016), genome assembly (Birol et al., 2015), and long-read mapping (Sovic et al., 2016).

The success of the mainstream mappers BWA-MEM (Li, 2013) and Bowtie2 (Langmead and Salzberg, 2012) is due in part to the FM-index, which only supports contiguous seeds. Some workarounds are available for spaced seeds (Horton et al., 2008; Gagie et al., 2017) but they increase the memory footprint, which explains why short reads are typically mapped using contiguous seeds. More generally, computing the sensitivity of spaced seeds is challenging (Kucherov et al., 2006; Li et al., 2006; Martin and Noé, 2017). It is possible to do this using the tools introduced below, as shown in section 3.5. However, to compute off-target probabilities, the main purpose of this article, the complexity rapidly becomes prohibitive. We will thus restrict our attention to contiguous seeds because they are relevant for mapping problems and fit tightly within the present theory.

3. Model and Strategy

3.1. Sequencing Errors and Divergence of the Duplicates

We now need to model the sequencing and duplication processes so that we can compute the probabilities of the events of interest. We assume that the sequencing instrument has a constant substitution rate p, and that insertions and deletions never occur. When a substitution occurs, we assume that the instrument is equally likely to output any one of the remaining three nucleotides. This corresponds more or less to the error spectrum of the Illumina sequencing technology (Nakamura et al., 2011).

Next, we assume that the target sequence has N ≥ 0 duplicates, so that there are N off-target sequences. We further assume that duplication happened instantaneously at some point in the past and that all N + 1 sequences diverge independently of each other at a constant rate. In other words, we ignore the complications due to the genealogy of the duplication events. Instead, we simply assume that at each nucleotide position, any given duplicate is identical to the target with probability 1 − μ. If it is not, we assume that the duplicate sequence can be any one of the three remaining nucleotides (i.e., each is found with probability μ/3).

Note that read errors are always mismatches against the target (because we assume that the target is the true sequence), and they match each duplicate with probability μ/3. Correct nucleotides are always matches for the target, and they match each duplicate with probability 1 − μ.
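The error and divergence model can be simulated directly, which is a convenient way to test predictions of the theory. The sketch below is an illustration under the stated assumptions (substitutions only, uniform choice among the three other nucleotides); the function names `substitute` and `simulate` and the parameter values are made up for the example.

```python
import random

NUCLEOTIDES = "ACGT"


def substitute(sequence, rate, rng):
    """Replace each nucleotide with probability `rate` by one of the three others."""
    out = []
    for nt in sequence:
        if rng.random() < rate:
            out.append(rng.choice([x for x in NUCLEOTIDES if x != nt]))
        else:
            out.append(nt)
    return "".join(out)


def simulate(length, n_duplicates, p, mu, seed=0):
    """Draw a target, its duplicates (divergence mu) and a read (error rate p)."""
    rng = random.Random(seed)
    target = "".join(rng.choice(NUCLEOTIDES) for _ in range(length))
    # Each duplicate differs from the target independently at each position.
    duplicates = [substitute(target, mu, rng) for _ in range(n_duplicates)]
    # The read is the target with sequencing errors; indels never occur.
    read = substitute(target, p, rng)
    return read, target, duplicates


read, target, duplicates = simulate(length=50, n_duplicates=3, p=0.01, mu=0.06)
```

Because the read and the duplicates are both derived from the target, an erroneous nucleotide of the read can only match a duplicate where that duplicate has itself diverged to the same nucleotide, which is the μ/3 case described above.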

Before going further, we also need to move a practical consideration out of the way. Seeds can match any sequence of the genome, not just the target or the duplicates. However, we will ignore matches in the rest of the genome because such random matches are unlikely to cause a mapping error when seeding is off-target, contrary to matches in duplicates. Neglecting those will greatly simplify the exposition of the theory without loss of generality. We will explain in section 8.3 how to deal with this practical case and how to identify those matches as off-target. Until then, we will consider that the target and the duplicates are the only sequences in the reference genome.

3.2. Weighted Generating Functions

The central object of analytic combinatorics is the generating function, and for our purpose we will use a special kind known as weighted generating function.

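Definition 6. Let $\mathcal{A}$ be a set of combinatorial objects such that every $a \in \mathcal{A}$ has a size $|a| \in \mathbb{N}$ and a weight $w(a) \in \mathbb{R}^+$. The weighted generating function of $\mathcal{A}$ is defined as

$$A(z) = \sum_{a \in \mathcal{A}} w(a) z^{|a|}. \tag{1}$$

Expression (1) also defines a sequence $(a_k)_{k \geq 0}$ such that

$$A(z) = \sum_{k=0}^{\infty} a_k z^k.$$

By definition $a_k = \sum_{a \in A_k} w(a)$, where $A_k$ is the class of objects of size $k$ in $\mathcal{A}$. The number $a_k$ is the total weight of objects of size $k$.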

To give an example, assume that a particular symbol, say ⇓, has a probability of occurrence equal to p. The weighted generating function of words containing only this symbol is pz. The weight of the word is its probability (here equal to p) and the size is its length (here 1).

In this document we will focus on the weighted generating function $A(z)$ of the set of reads that do not have on-target seeds (i.e., reads for which seeding is either null or off-target). The weight of a read is its probability of occurrence and the size $k$ is its number of nucleotides. The coefficient $a_k$ is thus the proportion of reads of size $k$ that do not have an on-target seed, which is the quantity of interest.

The motivation for introducing weighted generating functions is that operations on combinatorial objects translate into operations on their weighted generating functions. If $A(z)$ and $B(z)$ are the weighted generating functions of two mutually exclusive sets $\mathcal{A}$ and $\mathcal{B}$, the weighted generating function of $\mathcal{A} \cup \mathcal{B}$ is $A(z) + B(z)$, as evident from expression (1). Size and weight can be defined for pairs of objects in $\mathcal{A} \times \mathcal{B}$ as $|(a, b)| = |a| + |b|$ and $w(a, b) = w(a)w(b)$. In other words the sizes are added and the weights are multiplied. With this convention, the weighted generating function of the Cartesian product $\mathcal{A} \times \mathcal{B}$ is $A(z)B(z)$. This simply follows from expression (1) and from

$$A(z)B(z) = \sum_{a \in \mathcal{A}} w(a) z^{|a|} \sum_{b \in \mathcal{B}} w(b) z^{|b|} = \sum_{(a,b) \in \mathcal{A} \times \mathcal{B}} w(a)w(b) z^{|a|+|b|}.$$

These two operations are all we need in order to compute the weighted generating functions of the reads of interest. Addition corresponds to creating a new family by merging reads from families $\mathcal{A}$ and $\mathcal{B}$. Multiplication corresponds to creating a new family by concatenating reads from families $\mathcal{A}$ and $\mathcal{B}$.
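The two operations are easy to experiment with if a weighted generating function is stored as the list of its coefficients. The sketch below is a minimal illustration; the helper names `gf_add` and `gf_mul` and the value p = 1/10 are assumptions made for the example.

```python
from fractions import Fraction


def gf_add(a, b):
    """Sum of two generating functions: union of mutually exclusive sets."""
    n = max(len(a), len(b))
    a = a + [0] * (n - len(a))
    b = b + [0] * (n - len(b))
    return [x + y for x, y in zip(a, b)]


def gf_mul(a, b):
    """Product of two generating functions: concatenation of objects."""
    out = [0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y  # sizes are added, weights are multiplied
    return out


# The entry at index k is the coefficient of z^k.
p = Fraction(1, 10)            # assumed error rate, for illustration
q = 1 - p
error = [0, p]                 # pz: one erroneous nucleotide
correct = [0, q]               # qz: one correct nucleotide

nucleotide = gf_add(error, correct)            # any single nucleotide
correct_then_error = gf_mul(correct, error)    # a correct nucleotide, then an error
two_nucleotides = gf_mul(nucleotide, nucleotide)
```

Merging the two kinds of nucleotides gives total weight 1 at size 1, and concatenating a correct nucleotide with an error gives weight pq at size 2, as expected from the rules above.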

3.3. Analytic Representation

The analytic combinatorics framework relies on a strategy referred to as the symbolic method (Sedgewick and Flajolet, 2013). The idea is to combine simple objects into more complex objects. Each combinatorial operation on the objects corresponds to a mathematical operation on their weighted generating functions. One can thus obtain the weighted generating function of complex objects, whose coefficients $a_k$ ($k \geq 0$) are the probabilities of interest (Régnier, 2000; Nicodeme et al., 2002; Sedgewick and Flajolet, 2013).

As explained by Filion (2017, 2018), we recode the reads in alphabets of custom symbols and we specify a construction plan of the reads using a so-called transfer matrix M(z). The transfer matrix specifies which types of “segments” can follow each other in the reads of interest: the entry at coordinates (i, j) is the weighted generating function of segments of type j that can be appended to segments of type i.

$M(z)$ contains the weighted generating functions of all the reads that consist of a single segment. From the basic operations on weighted generating functions, $M(z)^s$ contains the weighted generating functions of all the reads that consist of $s$ segments. Therefore, the entry at coordinates $(i, j)$ of the matrix $M(z) + M(z)^2 + M(z)^3 + \ldots = M(z) \cdot (I - M(z))^{-1}$ is the weighted generating function of the reads of any size and any number of segments, that end with a segment of type $j$ and that can be appended to a segment of type $i$. The examples below will clarify the key steps of this strategy.

A complete description of how to compute seeding probabilities with the symbolic method is given by Filion (2017, 2018). The interested readers can also find more about analytic combinatorics in popular textbooks (Flajolet and Sedgewick, 2009; Sedgewick and Flajolet, 2013).

3.4. Example 1: On-Target Exact Seeds

We highlight the strategy above with an example that will turn out to be central for the development of the theory. In addition, it is simple enough to provide a gentle introduction to the general methodology. This example was described in detail by Filion (2017, 2018), but we repeat it here with a different formalism to fit the present article.

The first step is to note that the nucleotide sequence of the read is irrelevant. Indeed, the read has an on-target seed if and only if it contains a stretch of γ nucleotides without error. For this reason, we recode reads as sequences of correct or erroneous nucleotides.

We define the mismatch alphabet {□, ⇓, |}, where □ represents a correct nucleotide, ⇓ represents an erroneous nucleotide and | is a special symbol appended after the last nucleotide to mark the end of the read. The symbol | is not associated with a nucleotide and therefore has size 0.

This recoding allows us to partition the read in an important way.

Definition 7. A terminator is any symbol that is different from the symbol □. A segment is a sequence of 0 or more □ symbols followed by a terminator. The tail is the last segment of the read, where the terminator is always the special symbol |.

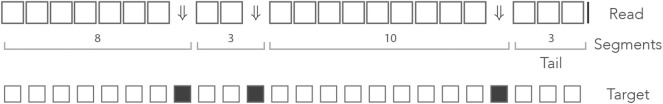

Since the decomposition of the read in segments is unique, we can view a read as a sequence of segments with a tail, instead of a sequence of nucleotides. Figure 6 shows an example of decomposition in segments. On-target seeds cannot contain sequencing errors, therefore they must be completely embedded in a segment. So the sizes of the segments indicate whether the read contains an on-target seed or not.
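The recoding and the decomposition in segments can be spelled out in a few lines. This is a sketch for illustration only: the function names and the toy sequences are assumptions, and the read is assumed to be aligned to the target without indels, as in the model.

```python
def encode(read, target):
    """Recode a read in the mismatch alphabet and append the end symbol."""
    symbols = ["□" if r == t else "⇓" for r, t in zip(read, target)]
    return "".join(symbols) + "|"


def segments(encoded):
    """Split an encoded read into segments: runs of □ closed by a terminator."""
    result, current = [], ""
    for symbol in encoded:
        current += symbol
        if symbol != "□":      # any symbol other than □ is a terminator
            result.append(current)
            current = ""
    return result


def has_on_target_seed(encoded, gamma):
    """A read has an on-target seed iff some segment has gamma or more □ symbols."""
    return any(len(segment) - 1 >= gamma for segment in segments(encoded))


encoded = encode("GATTACATGC", "GATTACAGGC")   # one error at the eighth nucleotide
parts = segments(encoded)
```

Here the read splits into one ⇓-segment with seven □ symbols and a tail with two, so it has an on-target seed for γ ≤ 7 and none for larger γ.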

Figure 6.

The mismatch encoding. An example read is represented in the mismatch alphabet. The symbol ⇓ represents a mismatch against the target (an erroneous nucleotide) and the symbol □ represents a match (a correct nucleotide). The symbol | is appended to the end of the read. The symbolic sequence of the target is represented below, where an open square stands for a match and a closed square stands for a mismatch.

The probability of occurrence of the symbol ⇓ is p (the error rate of the sequencer) so the probability of occurrence of the □ symbol is 1 − p = q. Both symbols have size 1, so their respective weighted generating functions are pz and qz. Using the rule for concatenation, we see that a segment of i symbols □ followed by the terminator ⇓ has weighted generating function $(qz)^i pz$. The final symbol | has size 0, so a tail segment of i symbols □ followed by the symbol | has weighted generating function $(qz)^i$.

The key insight is that the reads without on-target seed are exactly the reads that are made of segments with fewer than γ symbols □, where γ is the minimum seed size. The weighted generating function of such segments is $(1 + qz + \ldots + (qz)^{\gamma-1})pz$, and that of the tail is $1 + qz + \ldots + (qz)^{\gamma-1}$. This gives a construction plan that can be encoded in a transfer matrix.

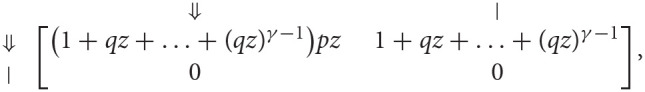

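Reads consist of only two kinds of objects: the segments terminated by ⇓ (or ⇓-segments for short) and the tails, so the dimension of the transfer matrix is 2 × 2. A ⇓-segment can be followed by another ⇓-segment or by the tail. The tail cannot be followed by anything. The expression of the transfer matrix M0(z) is thus

$$
M_0(z) = \begin{array}{c|cc}
 & \Downarrow & | \\ \hline
\Downarrow & \left(1 + qz + \ldots + (qz)^{\gamma-1}\right)pz & 1 + qz + \ldots + (qz)^{\gamma-1} \\
| & 0 & 0
\end{array}
$$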

where p is the error rate of the sequencer, q = 1 − p and γ is the minimum seed length. In the representation above, the different types of segments are identified by their terminator, indicated in the margins for clarity.

The entries of M0(z) correspond to sequences of one segment with no seed. Likewise, the entries of $M_0(z)^s$ correspond to sequences of s segments with no seed and the entries of $M_0(z) + M_0(z)^2 + M_0(z)^3 + \ldots$ correspond to sequences of any number of segments with no seed. The entry of interest is the top right term, associated with terminators ⇓ and |. To see why, observe that every read can be prepended by ⇓-segments and only by those (not by a tail). Thus, reads are precisely the sequences of segments that can follow the symbol ⇓ and that are terminated by a tail, whose weighted generating function is the top right entry of the matrix. In Appendix B.1, we show that this term is equal to

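$$
\frac{1 + qz + \ldots + (qz)^{\gamma-1}}{1 - pz\left(1 + qz + \ldots + (qz)^{\gamma-1}\right)} = \frac{1 - (qz)^{\gamma}}{1 - z + pz(qz)^{\gamma}}. \tag{2}
$$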

This function can be expanded as a Taylor series $a_0 + a_1 z + a_2 z^2 + \ldots$, but the coefficients a0, a1, a2, … are unknown. By construction, ak is the quantity of interest, i.e., it is the probability that a read of size k does not contain an on-target seed of minimum size γ, so we now need to extract the Taylor coefficients from expression (2). There are several methods to do so; the one we choose here is to build a recurrence equation. In Appendix B.2, we show that

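$$
a_k = \begin{cases}
1 & \text{if } k < \gamma, \\
1 - q^{\gamma} & \text{if } k = \gamma, \\
a_{k-1} - pq^{\gamma} \cdot a_{k-\gamma-1} & \text{if } k > \gamma.
\end{cases} \tag{3}
$$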

Note that for k < γ, the read is too short so the probability that it contains no seed is 1; for k = γ, the read contains a seed if and only if it has no error, which occurs with probability $q^{\gamma}$.

The terms of interest can be computed recursively using expression (3). This approach is very efficient because every iteration involves at most one multiplication and one subtraction. Also, the default floating-point arithmetic on modern computers gives sufficient precision to not worry about numeric instability for the problems considered here (we rarely need to compute those probabilities for reads above 500 nucleotides).
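The recursion can be sketched as follows. This is a minimal illustration rather than the Sesame implementation; it assumes the recurrence $a_k = a_{k-1} - pq^{\gamma} a_{k-\gamma-1}$ for k > γ with the initial values given in the note above, and the function name `no_seed_probabilities` is hypothetical.

```python
def no_seed_probabilities(k_max, gamma, p):
    """Return [a_0, ..., a_k_max], where a_k is the probability that a read
    of size k contains no on-target exact seed of minimum size gamma.

    p is the error rate of the sequencer (assumed to satisfy 0 <= p <= 1).
    """
    q = 1.0 - p
    c = p * q ** gamma                 # constant factor, computed once
    a = []
    for k in range(k_max + 1):
        if k < gamma:
            a.append(1.0)              # read too short to contain a seed
        elif k == gamma:
            a.append(1.0 - q ** gamma) # a seed exists only if there is no error
        else:
            a.append(a[k - 1] - c * a[k - gamma - 1])
    return a


# Example with the parameters used later in the text (k = 50, gamma = 19).
probabilities = no_seed_probabilities(50, 19, 0.01)
print(probabilities[50])
```

Each iteration above the initial values costs one multiplication and one subtraction, because the factor $pq^{\gamma}$ is computed only once.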

This example shows how the symbolic approach yields a non-trivial and yet simple algorithm to compute the probability that a read of size k does not contain an on-target exact seed.

3.5. Example 2: On-Target Spaced Seeds

The goal of our second example is to exhibit the mechanisms of our strategy in a more complex setting. In this example we propose to compute the probability that a read of size k contains a match for the spaced seed “11*1*1.” This problem has no concrete application, but it illustrates in a relatively simple way the general methodology to deal with spaced seeds.

Here we proceed exactly as in the previous section, replacing nucleotides by the symbols from the mismatch alphabet {□, ⇓, |}. Recall that □ represents a correct nucleotide, ⇓ represents an erroneous nucleotide and | is the special terminator that marks the end of the read. As explained in the previous example, the weighted generating functions of the symbols □, ⇓ and | are qz, pz, and 1, respectively, where p is the probability of a sequencing error and q = 1 − p. We again decompose reads into unique sequences of segments, where a segment is the concatenation of zero or more □ symbols with a terminator ⇓, or | for the last segment, also called the tail.

So far everything is identical to the previous example. The difference is how we characterize reads that do not contain a seed. This is where the transfer matrix comes in handy. We define four abstract states that represent how the end of the segment matches the seed model “11*1*1.” The states are represented as ⇓, □□⇓, □□⇓□⇓, and □□□□⇓. All the states finish with ⇓ because they correspond to the end of one or more internal segments, which are always terminated by ⇓. To these states we add | for the tail.

To fill the transfer matrix, we need to indicate how the segments bring the read from a state to another. We illustrate how this is done with the transitions from state ⇓. This state indicates that the longest alignment of the previous segment with the seed model has zero nucleotide. If the next segment is ⇓ or □⇓, the longest alignment with the seed model will again have length 0 and the state will remain ⇓. If the next segment is □□⇓ or □□□⇓, the last three nucleotides align with the beginning of the seed model “11*,” bringing the read to the state □□⇓. If the next segment is □□□□⇓ or □□□□□⇓, the last five nucleotides align with “11*1*,” bringing the read to the state □□□□⇓. Finally, segments with more than five □ symbols are disallowed because they create a match for the seed. In all of these segments, the ⇓ terminator can be replaced by | to indicate the end of the read.

Recall from the previous section that the weighted generating function of a segment with i symbols □ and a terminator ⇓ is $(qz)^i pz$, and that the weighted generating function of a tail with i symbols □ is $(qz)^i$. With this information we can fill the row of the transfer matrix that corresponds to state ⇓. With the same logic, we can also fill the other entries of the transfer matrix, making sure that we exclude all the possible matches with the seed. Doing this term by term, we obtain the transfer matrix M◇(z) equal to

where

The entries of $M_\diamond(z) + M_\diamond(z)^2 + M_\diamond(z)^3 + \ldots$ correspond to sequences of any number of segments with no match for the seed. The entry of interest is the top right term, associated with states ⇓ and |. To see why, observe that every read can be prepended by segments finishing in state ⇓ and only by those, otherwise the read would already match some part of the seed before the first nucleotide.

The matrix M◇(z) is simple enough that the sum of its powers can be computed explicitly using a computer algebra system. The weighted generating function of interest is found to be the ratio of two polynomials P(z)/Q(z) where P has degree 9 and Q has degree 10. From there, one can proceed as in Appendix B.2 to obtain a recurrence of order 10 that gives the coefficient ak in the Taylor expansion of P(z)/Q(z). By construction, this coefficient ak is the probability that a read of length k does not contain any match for the spaced seed “11*1*1.” Alternatively, one can compute ak directly from the powers of M◇(z) as explained in the next section.

This approach can be applied to any spaced seed. A seed model with m don't-care positions generates $2^m$ possible states and the dimension of the associated transfer matrix is $2^m + 1$ (one state is reserved for the tail). For large m it is impossible to compute the sum of the powers of M◇(z) analytically, but one can still compute these powers efficiently because the matrix is sparse. So in general, the computational method introduced in the next section is better suited.

3.6. Example 3: On-Target Skip Seeds

Let us now go through an example that will be important later. Here we devise a method to compute the probability that a read contains no on-target skip seed. Using the same strategy as in the previous example, we start by recoding the reads using a specialized alphabet to solve this problem.

We need to know whether a nucleotide is a sequencing error, but this time we also need to know its phase in the repeated cycles of skipped positions. For this, we define the skip-n alphabet $\{\square, \Downarrow_0, \Downarrow_1, \ldots, \Downarrow_n, |\}$. Again, the symbol □ represents a correct nucleotide and the symbol | is a terminator added at the end of the read. The symbols ⇓j (0 ≤ j ≤ n) represent sequencing errors and j indicates the number of nucleotides until the next non-skipped position (i.e., j = 0 for nucleotides immediately before a non-skipped position and j = n for nucleotides at a non-skipped position).

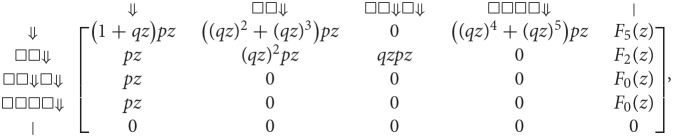

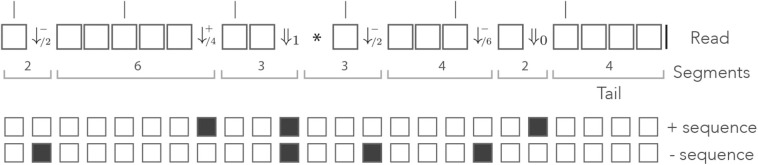

As per definition 7, segments in this alphabet are sequences of 0 or more □ symbols followed by any of the symbols ⇓j or by the symbol |. Given that this decomposition is unique, we can again view a read as a sequence of segments with a tail. The example of Figure 6 is shown again in Figure 7, where segments in the mismatch alphabet have been replaced by segments in the skip-3 alphabet.

Figure 7.

The skip encoding. The read of Figure 6 is represented in the skip-3 alphabet. The symbols ⇓j(j = 1, 2, 3) represent mismatches against the target (they are erroneous nucleotides) and the □ symbol represents a match (it is a correct nucleotide). The vertical bars indicate non-skipped positions (the potential start of a seed). The number j in ⇓j indicates the number of nucleotides until the next non-skipped position. For j = 0 the next nucleotide is not skipped. Other features are as in Figure 6.

The probability of occurrence of a sequencing error is p, so every symbol ⇓j has the same weighted generating function pz, provided the next non-skipped position is at distance j; otherwise the weighted generating function is 0. The weighted generating function of the symbol □ is again qz, so a segment with i symbols □ followed by the symbol ⇓j has weighted generating function $(qz)^i pz$ if the next non-skipped position is at distance j, and 0 otherwise. As in the previous example, a tail segment with i symbols □ followed by the symbol | has weighted generating function $(qz)^i$.

This case is more complex than the previous one: reads without on-target skip seed of minimum size γ can contain segments with γ or more □ symbols. For instance, the read shown in Figure 7 contains a stretch of 9 nucleotides without errors but it has no seed of minimum size γ = 9. More generally, if there is a sequencing error i nucleotides before the next non-skipped position, there can be up to γ + i − 1 symbols □ in a row.

Expressed in different words, it is possible to append segments with up to γ + i − 1 symbols □ after segments terminated by ⇓i (⇓i-segments for short). Each of those γ + i possible segments is associated with a different terminator, depending on how far ahead the next non-skipped position lies. In Figure 7, for instance, the second segment is terminated by ⇓1 because there is 1 nucleotide before the next non-skipped position. If the segment were 1 nucleotide longer, the terminator would have to be ⇓0.

The main issue is that segments of different lengths can be terminated by the same symbol. Going back to Figure 7, the third segment has length 10 and is terminated by ⇓3. It would also be terminated by ⇓3 if it had length 2 or length 6. In the general case, the entries of the transfer matrix show some periodicity modulo n + 1.

Denote Hi, j(z) the weighted generating function of ⇓j-segments that can follow a ⇓i-segment. The total number of segments that can follow a ⇓i-segment is γ + i. Among them, the shortest ⇓j-segment has size ℓ0 to be determined below, and the others have sizes ℓ0 + (n + 1), ℓ0 + 2(n + 1), …, ℓ0 + m(n + 1), for some integer m. This m is the largest number such that ℓ0 + m(n + 1) ≤ γ + i, so m = ⌊(γ + i − ℓ0)/(n + 1)⌋, where ⌊ … ⌋ is the “floor” function.

Those segments follow a ⇓i-segment, so they start i nucleotides before the next non-skipped position. The shortest following segment that ends j nucleotides before a non-skipped position has length ℓ0 = i − j if i > j, and n + 1 − j + i otherwise. This is equivalent to defining ℓ0 as x + 1 where x is the number of □ symbols of the shortest ⇓j-segment, i.e., x = i − j − 1 (mod n + 1).
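The sizes of the segments described above can be enumerated with a short sketch. The function name `following_segment_sizes` is hypothetical; the code only assumes the definitions of x, ℓ0 and m given in the text.

```python
def following_segment_sizes(i, j, n, gamma):
    """Sizes of the ⇓j-segments that can follow a ⇓i-segment without
    creating a skip-n seed of minimum size gamma.

    A segment of size s consists of s - 1 symbols □ and one terminator.
    """
    x = (i - j - 1) % (n + 1)            # □ symbols in the shortest segment
    m = (gamma + i - 1 - x) // (n + 1)   # number of extra periods of n + 1
    return [x + 1 + t * (n + 1) for t in range(m + 1)]


# Third segment of Figure 7: it follows a ⇓1-segment and ends with ⇓3,
# in the skip-3 alphabet with gamma = 9.
print(following_segment_sizes(1, 3, 3, 9))   # [2, 6, 10]
```

The output agrees with the observation that this segment could have length 2, 6 or 10. Summing the number of sizes over all terminators ⇓0 to ⇓3 gives γ + i = 10 possible segments, as stated above.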

With these notations, the shortest ⇓j-segment consists of x symbols □ followed by the ⇓j terminator, so it has weighted generating function $(qz)^x pz$. The other segments have a multiple of n + 1 extra □ symbols so their weighted generating functions are $(qz)^{x+(n+1)}pz$, $(qz)^{x+2(n+1)}pz$, …, $(qz)^{x+m(n+1)}pz$. Summing over those cases, we finally obtain

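$$
H_{i,j}(z) = (qz)^{x}\left(1 + (qz)^{n+1} + (qz)^{2(n+1)} + \ldots + (qz)^{m(n+1)}\right)pz, \quad x = i - j - 1 \pmod{n+1}, \quad m = \left\lfloor \frac{\gamma + i - 1 - x}{n+1} \right\rfloor. \tag{4}
$$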

Denote Ji(z) the weighted generating function of tail segments that can follow a ⇓i-segment. There are γ + i such tails, each consisting of 0 to γ + i − 1 symbols □ followed by the special | terminator for the end of the read. The | symbol has size 0 so its weighted generating function is 1. Once again summing over the different cases, we obtain

$$
J_i(z) = 1 + qz + (qz)^2 + \ldots + (qz)^{\gamma+i-1}. \tag{5}
$$

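Finally, the expression of Mn(z), the transfer matrix of reads in the skip-n alphabet, is

$$
M_n(z) = \begin{array}{c|ccccc}
 & \Downarrow_0 & \Downarrow_1 & \ldots & \Downarrow_n & | \\ \hline
\Downarrow_0 & H_{0,0}(z) & H_{0,1}(z) & \ldots & H_{0,n}(z) & J_0(z) \\
\Downarrow_1 & H_{1,0}(z) & H_{1,1}(z) & \ldots & H_{1,n}(z) & J_1(z) \\
\vdots & \vdots & \vdots & \ddots & \vdots & \vdots \\
\Downarrow_n & H_{n,0}(z) & H_{n,1}(z) & \ldots & H_{n,n}(z) & J_n(z) \\
| & 0 & 0 & \ldots & 0 & 0
\end{array} \tag{6}
$$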

where p is the error rate of the sequencer, q = 1 − p, n is the number of skipped nucleotides between potential seeds, γ is the minimum seed length, and polynomials Hi, j(z) and Ji(z) are defined in expressions (4) and (5).

In Appendix B.3, we present a transfer matrix with a simpler expression that will prove useful in section 5. The version of Appendix B.3 is simpler, but the expression above has some advantages that will be explained below.

The weighted generating function of interest is the top right entry of the matrix $M_n(z) + M_n(z)^2 + M_n(z)^3 + \ldots$. To see why, observe that, at the start of every read, the next nucleotide is a non-skipped position, so every read can be prepended by ⇓0-segments and only by those. Thus, reads are precisely the sequences of segments that can follow the symbol ⇓0 and that are terminated by a tail, whose weighted generating function is the entry of the matrix associated with terminators ⇓0 and |.

By construction, the Taylor expansion of the top right term in the matrix contains the probabilities of interest. More specifically, if the Taylor series of this term is $a_0 + a_1 z + a_2 z^2 + \ldots$, then ak is the probability that a read of size k contains no skip-n seed of minimum size γ.

But Mn(z) is too complex to find a closed expression for the sum of its powers or for the top right term of this sum. Instead, we return to the definition and show in Appendix B.4 that the coefficient of interest, ak, only depends on the first k + 1 terms of the sum. So for reads of size k or lower, we only need to compute the matrix $M_n(z) + M_n(z)^2 + \ldots + M_n(z)^{k+1}$ and work out the Taylor expansion of the top right term.

But we can do better: since we are only interested in the coefficients up to order k, we can perform all algebraic operations on truncated polynomials of order k, i.e., we discard the coefficients of order k + 1 or greater when multiplying two polynomials.
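The computation with truncated polynomials can be sketched as follows. This is an illustration under stated assumptions, not the Sesame implementation: polynomials are stored as plain lists of coefficients, the entries of the transfer matrix are built from the descriptions of Hi,j(z) and Ji(z) given above, and all function names are hypothetical. Only the top row of each power is propagated, because the entry of interest lies in that row.

```python
def poly_mul(f, g, k):
    """Multiply two coefficient lists, discarding terms of order above k."""
    out = [0.0] * (k + 1)
    for i, fi in enumerate(f):
        if fi == 0.0:
            continue
        for j, gj in enumerate(g[: k + 1 - i]):
            out[i + j] += fi * gj
    return out


def skip_transfer_matrix(k, gamma, n, p):
    """Transfer matrix of reads in the skip-n alphabet, truncated at order k.

    Rows and columns are ordered ⇓0, ..., ⇓n, then the tail |.
    """
    q = 1.0 - p
    size = n + 2
    M = [[[0.0] * (k + 1) for _ in range(size)] for _ in range(size)]
    for i in range(n + 1):
        for j in range(n + 1):
            x = (i - j - 1) % (n + 1)
            m = (gamma + i - 1 - x) // (n + 1)
            for t in range(m + 1):
                squares = x + t * (n + 1)        # number of □ symbols
                if squares + 1 <= k:
                    M[i][j][squares + 1] = q ** squares * p
        for squares in range(min(gamma + i, k + 1)):
            M[i][n + 1][squares] = q ** squares  # tail segments
    return M


def no_skip_seed_probabilities(k, gamma, n, p):
    """Return [a_0, ..., a_k]: probabilities that reads of size 0..k contain
    no on-target skip-n seed of minimum size gamma."""
    M = skip_transfer_matrix(k, gamma, n, p)
    size = n + 2
    row = M[0]                         # top row of the first power
    a = list(row[-1])
    for _ in range(k):                 # powers 2 to k + 1
        new_row = []
        for j in range(size):
            entry = [0.0] * (k + 1)
            for i in range(n + 1):     # the row of the tail is zero
                product = poly_mul(row[i], M[i][j], k)
                entry = [u + v for u, v in zip(entry, product)]
            new_row.append(entry)
        row = new_row
        a = [u + v for u, v in zip(a, row[-1])]
    return a


print(no_skip_seed_probabilities(50, 19, 3, 0.01)[50])
```

With n = 0 the function should return the same values as the recurrence for exact seeds, which gives a simple consistency check.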

But we can do even better: a read with s + 1 segments contains s errors, so the top right entry of $M_n(z)^{s+1}$ is the weighted generating function of reads with s errors that have no seed of minimum size γ. Computing the partial sum $M_n(z) + \ldots + M_n(z)^{s}$ instead of $M_n(z) + \ldots + M_n(z)^{k+1}$ corresponds to neglecting reads with s or more errors. For s sufficiently large, such reads are exceedingly rare so we can obtain accurate estimates without computing all the powers of Mn(z) up to order k.

The number of errors X in a read of size k has a Binomial distribution X ~ B(k, p). From Arratia and Gordon (1989) we can bound the probabilities of the tail with the expression

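$$
P(X \geq s) \leq \exp\left(-s\log\frac{s}{kp} - (k-s)\log\frac{k-s}{k(1-p)}\right), \quad kp < s < k. \tag{7}
$$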

Using the formula above, we can thus bound the probability that a read has s + 1 or more segments. We compute the partial sum $M_n(z) + \ldots + M_n(z)^{s}$, where the weighted generating functions have been replaced by truncated polynomials, and we extract the top right entry. When the right-hand side of expression (7) is lower than a set fraction ε of the current value of ak, we stop the computations. Typically ε = 0.01 so this method ensures that the probabilities that a read of size k has no on-target skip seed are accurate to within 1%.
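The stopping criterion relies on a bound of the binomial tail. The sketch below assumes that the bound takes the standard relative-entropy form $\exp(-kH)$ with $H = \frac{s}{k}\log\frac{s}{kp} + \left(1-\frac{s}{k}\right)\log\frac{k-s}{k(1-p)}$; this form is our assumption for the purpose of the illustration, and the function names are hypothetical. The exact tail is computed alongside for comparison.

```python
import math


def binomial_tail_bound(k, p, s):
    """Upper bound on P(X >= s) for X ~ Binomial(k, p), with 0 < p < 1.

    Assumes the relative-entropy (Chernoff-type) form of the bound.
    """
    if s <= k * p:
        return 1.0                      # uninformative below the mean
    if s > k:
        return 0.0
    if s == k:
        return p ** k                   # limit of the formula when s = k
    return math.exp(-s * math.log(s / (k * p))
                    - (k - s) * math.log((k - s) / (k * (1.0 - p))))


def binomial_tail(k, p, s):
    """Exact value of P(X >= s), for comparison."""
    return sum(math.comb(k, x) * p ** x * (1.0 - p) ** (k - x)
               for x in range(s, k + 1))


# Reads of size 50 with error rate 0.01: bound and exact tail for s errors.
for s in (2, 4, 6, 8):
    print(s, binomial_tail_bound(50, 0.01, s), binomial_tail(50, 0.01, s))
```

The bound is conservative, so stopping when it falls below ε times the current estimate of ak can only overestimate the neglected probability mass.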

With the transfer matrix of Appendix B.3, the top right entry of the power of order s + 1 is not the weighted generating function of reads with s errors, so (7) is not an upper bound for the neglected terms of the sum. As a consequence, one would have to compute more terms in the partial sum to reach the same accuracy. The transfer matrix shown in expression (6) is not the simplest, but it has the benefit of requiring fewer iterations.

Remark 1. Observe that when n = 0 the matrix Mn(z) is identical to the matrix M0(z) of section 3.4. This is consistent with the fact that exact seeds are skip-0 seeds.

4. Off-Target Exact Seeds

We now turn our attention to the problem of computing the probability that the seeding process is off-target when using exact seeds—recall from section 1.3 that off-target seeding means that the candidate set contains a duplicate but not the target.

If there is no duplicate (i.e., N = 0), seeding cannot be off-target; it can only be on-target or null. So from here we assume that the target has N ≥ 1 duplicates. Let S0 denote the event that there is an on-target seed and let Sj denote the event that there is a seed for the j-th duplicate. We are thus interested in computing $P\left(\bar{S}_0 \cap (S_1 \cup \ldots \cup S_N)\right)$, where $\bar{S}_j$ denotes the complement of the event Sj. First observe that

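$$
P\left(\bar{S}_0 \cap (S_1 \cup \ldots \cup S_N)\right) = P(\bar{S}_0) - P(\bar{S}_0 \cap \bar{S}_1 \cap \ldots \cap \bar{S}_N). \tag{8}
$$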

Since the duplicates are assumed to evolve independently of each other and through the same mutagenesis process, the events Sj (1 ≤ j ≤ N) are independent and identically distributed conditionally on $\bar{S}_0$. We can thus write

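$$
P(\bar{S}_0 \cap \bar{S}_1 \cap \ldots \cap \bar{S}_N) = P(\bar{S}_0) \cdot P(\bar{S}_1 \mid \bar{S}_0)^N = \frac{P(\bar{S}_0 \cap \bar{S}_1)^N}{P(\bar{S}_0)^{N-1}}. \tag{9}
$$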

Combining the two equations above, we obtain

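$$
P\left(\bar{S}_0 \cap (S_1 \cup \ldots \cup S_N)\right) = P(\bar{S}_0) - \frac{P(\bar{S}_0 \cap \bar{S}_1)^N}{P(\bar{S}_0)^{N-1}}. \tag{10}
$$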

Hence, the probability that seeding is off-target is a function of just two quantities: $P(\bar{S}_0)$ and $P(\bar{S}_0 \cap \bar{S}_1)$. The first is the probability that the read has no seed for the target, which we have already computed in section 3.4 using recursive expression (3). We now need to find a way to compute $P(\bar{S}_0 \cap \bar{S}_1)$.

Remark 2. Observe that expression (9) is the probability of null seeding (the read contains no seed for the target or any of its duplicates). Since it is also a function of just $P(\bar{S}_0)$ and $P(\bar{S}_0 \cap \bar{S}_1)$, it can be computed at no additional cost. This probability is less relevant than the probabilities that the seeding process is on-target or off-target, but at times, it may be useful to know the probability that a read is not mappable, especially when reads are relatively short.
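Once the two quantities are known, the probabilities of null and off-target seeding follow from simple arithmetic. The sketch below assumes that the probability of null seeding is $P(\bar{S}_0 \cap \bar{S}_1)^N / P(\bar{S}_0)^{N-1}$ and that the off-target probability is $P(\bar{S}_0)$ minus this term; the function names are hypothetical.

```python
def null_seeding_probability(p_no_target, p_no_target_no_dup, n_dup):
    """Probability that the read has no seed for the target or for any of
    its n_dup duplicates (n_dup >= 1, p_no_target > 0).

    p_no_target is the probability of no seed for the target, and
    p_no_target_no_dup the probability of no seed for the target and for
    one fixed duplicate.
    """
    return p_no_target_no_dup ** n_dup / p_no_target ** (n_dup - 1)


def off_target_probability(p_no_target, p_no_target_no_dup, n_dup):
    """Probability that the candidate set contains a duplicate but not the
    target."""
    return p_no_target - null_seeding_probability(
        p_no_target, p_no_target_no_dup, n_dup)


# Toy values chosen for illustration only.
print(off_target_probability(0.2, 0.1, 2))
```

When the two input probabilities are equal, the duplicates never contribute a seed that the target lacks and the off-target probability is 0 for every N.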

4.1. The Dual Encoding

$P(\bar{S}_0 \cap \bar{S}_1)$ is the probability that the read has no seed for the target or for the first duplicate (numbering is arbitrary here, the first duplicate can be any fixed duplicate). As in section 3, we first recode the reads using a specialized alphabet to simplify the problem.

It will be useful to consider a more general problem where we have two sequences of interest labeled + and −. The + sequence stands for the target and the − sequence stands for its duplicate. We then define the dual alphabet $\{\square, \downarrow^-_j, \downarrow^+_j, \Downarrow, |\}$. The symbols $\downarrow^-_j$ (j ≥ 1) signify that the nucleotide is a mismatch against the − sequence only, the symbols $\downarrow^+_j$ (j ≥ 1) signify that it is a mismatch against the + sequence only, and the symbol ⇓ signifies that it is a mismatch against both. As before, every other nucleotide is replaced by the symbol □, and the terminator | is appended to the end of the read. We again define reads as sequences of segments (zero or more □ symbols followed by a terminator), except that now the terminators are the symbols $\downarrow^-_j$, $\downarrow^+_j$ (j ≥ 1) and ⇓. The tail, as usual, is terminated by the symbol |.

The index j in the symbol $\downarrow^-_j$ (a mismatch against the − sequence only) indicates the match length of the + sequence (note that this is not the same as the number of □ symbols in the segment). Likewise, the index j in the symbol $\downarrow^+_j$ (a mismatch against the + sequence only) indicates the match length of the − sequence. For instance, the symbol $\downarrow^-_7$ indicates that the nucleotide is a mismatch against the − sequence, that it is a match for the + sequence, and that the six preceding nucleotides were also a match for the + sequence (but the nucleotide before that was a mismatch against the + sequence). The terminators thus encode the local state of the read.

Figure 8 shows an example of read in the dual encoding. The + and − sequences are shown below the read, with matches represented as open squares and mismatches as closed squares. It is visible from this example that the symbols $\downarrow^-_j$ and $\downarrow^+_j$ alternate whenever the mismatches hit different sequences. The symbol ⇓ occurs only when a nucleotide is a double mismatch.

Figure 8.

Example of dual encoding. An example of read is represented in the dual alphabet. The symbols $\downarrow^-_j$ (j ≥ 1) represent a mismatch against the − sequence, the symbols $\downarrow^+_j$ (j ≥ 1) represent a mismatch against the + sequence, and the symbol ⇓ represents a mismatch against both. The index j is the match length of the sequence that is not mismatched. The symbolic + and − sequences are represented below, where an open square stands for a match and a closed square stands for a mismatch.

Let us assume that for each nucleotide, a is the probability that the read matches both sequences, b is the probability that it matches only the + sequence, c is the probability that it matches only the − sequence and d is the probability that it matches neither. Since there are no other cases, we have a + b + c + d = 1.

With these definitions, the weighted generating functions of the symbols □, $\downarrow^-_j$, $\downarrow^+_j$ (j ≥ 1) and ⇓ are az, bz, cz, and dz, respectively. The next sections clarify how this is used to compute the weighted generating functions of interest.

4.2. Segments Following ⇓

After a ⇓ terminator, the match counter for both sequences is reset; the following segment can thus have up to γ − 1 matches for any of the two sequences. Each match corresponds to the □ symbol with weighted generating function az. The terminators ⇓ and | have respective generating function dz and 1 (recall that the tail symbol has size 0), so if the next terminator is ⇓ or |, the segments have weighted generating functions $(1 + az + \ldots + (az)^{\gamma-1})dz$ or $1 + az + \ldots + (az)^{\gamma-1}$, respectively.

If the next terminator is $\downarrow^-_j$ (a mismatch against the − sequence only), there is a match of length j for the + sequence, so the segment contains j − 1 symbols □ plus the terminator (which also matches the + sequence). The weighted generating function is thus $(az)^{j-1}bz$. By the same rationale, if the next terminator is $\downarrow^+_j$ (a mismatch against the + sequence only), the weighted generating function of the segment is $(az)^{j-1}cz$.

The terminators $\downarrow^-_j$ and $\downarrow^+_j$ are disallowed for j ≥ γ because this would create a seed for at least one of the sequences.

4.3. Segments Following $\downarrow^+_i$

At a $\downarrow^+_i$ terminator (a mismatch against the + sequence only), the match length of the + sequence is 0 and the match length of the − sequence is i. The next segment can thus have up to γ − 1 matches for the + sequence, but only γ − i − 1 matches for the − sequence, lowering the maximum size of the segment. If the next terminator is ⇓ or |, the segments have weighted generating functions $(1 + az + \ldots + (az)^{\gamma-i-1})dz$ and $1 + az + \ldots + (az)^{\gamma-i-1}$, respectively.

If the next terminator is $\downarrow^-_j$ (a mismatch against the − sequence only), the weighted generating function is $(az)^{j-1}bz$ as in the previous section. The difference is that the terminators $\downarrow^-_j$ are allowed only for 1 ≤ j ≤ γ − i, otherwise this would create a seed for the − sequence.

If the next terminator is $\downarrow^+_j$ (a mismatch against the + sequence only), the situation is slightly more complex because this imposes i < j ≤ γ − 1. Indeed, there were already i matches for the − sequence at the terminator $\downarrow^+_i$, and there will be more at the end of the following segment because it has no mismatch for the − sequence. Taking this into account, we see that the weighted generating function of those segments is $(az)^{j-i-1}cz$ with i < j ≤ γ − 1.

4.4. Segments Following $\downarrow^-_i$

We can find the weighted generating functions by just reversing the + and − signs in the previous section. This way, we see that the weighted generating functions of the segments terminated by ⇓ or | are $(1 + az + \ldots + (az)^{\gamma-i-1})dz$ and $1 + az + \ldots + (az)^{\gamma-i-1}$, respectively.

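Likewise, the weighted generating function of the segments terminated by $\downarrow^+_j$ (a mismatch against the + sequence only) is $(az)^{j-1}cz$, where 1 ≤ j ≤ γ − i; and the weighted generating function of the segments terminated by $\downarrow^-_j$ (a mismatch against the − sequence only) is $(az)^{j-i-1}bz$ where i < j ≤ γ − 1.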

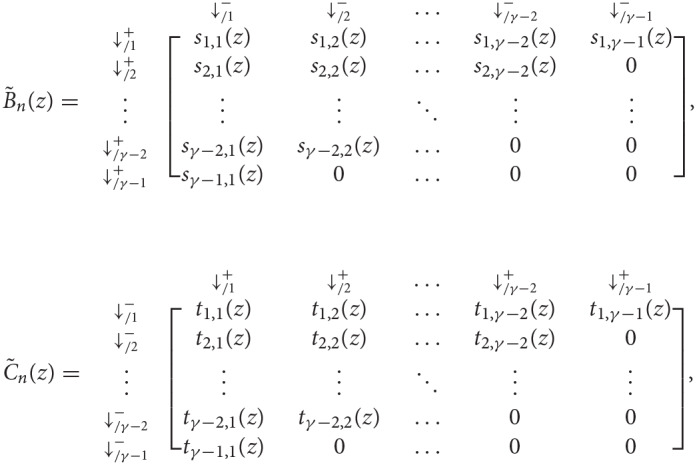

4.5. Transfer Matrix

We now have all the elements to specify the transfer matrix of reads with no seed for either sequence. Recall that a is the probability of a double match, b is the probability of a mismatch only against the − sequence, c is the probability of a mismatch only against the + sequence and d is the probability of a double mismatch. For notational convenience, we define

| (11) |

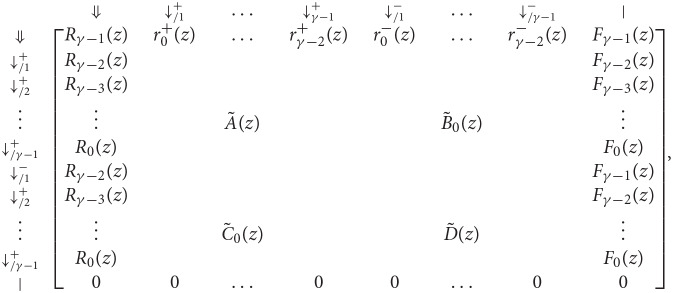

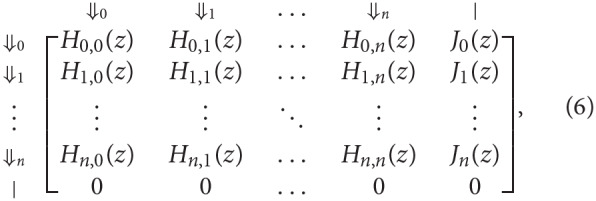

With these notations, the information from the previous sections can be summarized in the transfer matrix equal to

where γ is the minimum seed length, and where Ã(z), , , and are matrices of dimensions (γ − 1) × (γ − 1) that are defined as

As before, the term of interest is the top right entry of the sum of the powers of this transfer matrix. To see why, observe that every read can be prepended by ⇓-segments and only by those (every other terminator would imply that one of the two sequences has a nonzero match size at the start of the read). Thus, reads are precisely the sequences of segments that can follow the symbol ⇓ and that are terminated by a tail, the weighted generating function of which is the top right entry of the matrix.

The transfer matrix is too complex to compute a closed expression of the sum of its powers. It is easier to proceed as in section 3.6 and to compute the powers of the matrix up to a finite value. This is done once again using the arithmetic of truncated polynomials. Since each segment except the tail contains a mismatch against at least one sequence, the top right entry of the power of order s + 1 is the weighted generating function of reads that contain s mismatches (where double mismatches count as one). We thus replace the error rate p in formula (7) by the probability that a nucleotide is a mismatch against at least one sequence, i.e., 1 − a = b + c + d. The updated formula (7) now gives an upper bound of the probability that a read of size k contains s or more mismatches as

$$
\exp\left(-s\log\frac{s}{k(1-a)} - (k-s)\log\frac{k-s}{ka}\right), \quad k(1-a) < s < k.
$$

With this upper bound, we can compute the terms of the matrix partial sums until the ignored terms become negligible, i.e. until we can be sure that the coefficient of interest ak is accurate to within chosen ε.

Now returning to the problem of computing $P(\bar{S}_0 \cap \bar{S}_1)$, the + sequence is interpreted as the target and the − sequence as the duplicate. Based on the assumptions of the error model presented in section 3.1, this implies that a = (1 − p)(1 − μ), b = (1 − p)μ, c = pμ/3, and d = p(1 − μ/3).

With these values, we can fully specify the transfer matrix and compute its powers until the estimate of the coefficient of interest ak is accurate enough, finally giving a numerical value for $P(\bar{S}_0 \cap \bar{S}_1)$.
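As an independent check of such numerical values, the same probability can be obtained by a direct dynamic programming over the pair of current match lengths. This is not the transfer matrix method described above but a simpler alternative that should give the same result for exact seeds; it assumes the values of a, b, c and d stated in the text, and the function names are hypothetical.

```python
def dual_probabilities(p, mu):
    """Per-nucleotide probabilities (a, b, c, d) of a double match, a match
    for + only, a match for - only and a double mismatch."""
    a = (1.0 - p) * (1.0 - mu)
    b = (1.0 - p) * mu
    c = p * mu / 3.0
    d = p * (1.0 - mu / 3.0)
    return a, b, c, d


def no_seed_for_either(k, gamma, p, mu):
    """Probability that a read of size k has no exact seed of minimum size
    gamma for the target (+) nor for one duplicate (-)."""
    a, b, c, d = dual_probabilities(p, mu)
    # state[(i, j)] is the probability that the current match lengths are
    # i for the + sequence and j for the - sequence, with no seed so far.
    state = {(0, 0): 1.0}
    for _ in range(k):
        new_state = {}
        for (i, j), weight in state.items():
            moves = ((i + 1, j + 1, a), (i + 1, 0, b), (0, j + 1, c), (0, 0, d))
            for ni, nj, prob in moves:
                # Reaching a match length of gamma creates a seed: drop it.
                if ni < gamma and nj < gamma and prob > 0.0:
                    new_state[(ni, nj)] = new_state.get((ni, nj), 0.0) + weight * prob
        state = new_state
    return sum(state.values())


# Parameters of the illustration below, for one value of the divergence rate.
print(no_seed_for_either(50, 19, 0.01, 0.07))
```

For μ = 0 the duplicate is identical to the target, so the function should return the probability that the read has no seed for the target alone.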

4.6. Illustration

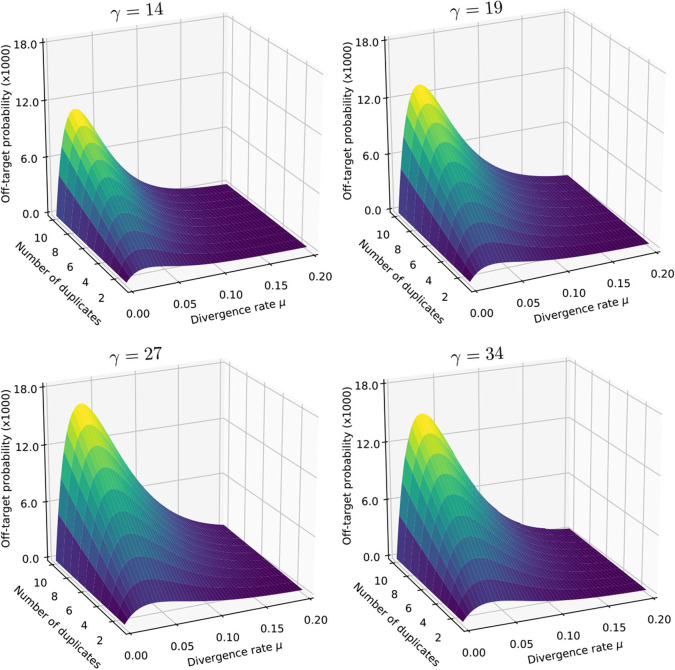

We illustrate the strategy delineated above for reads of size k = 50 sequenced with an instrument with error rate p = 0.01, when using exact seeds of size γ = 19.

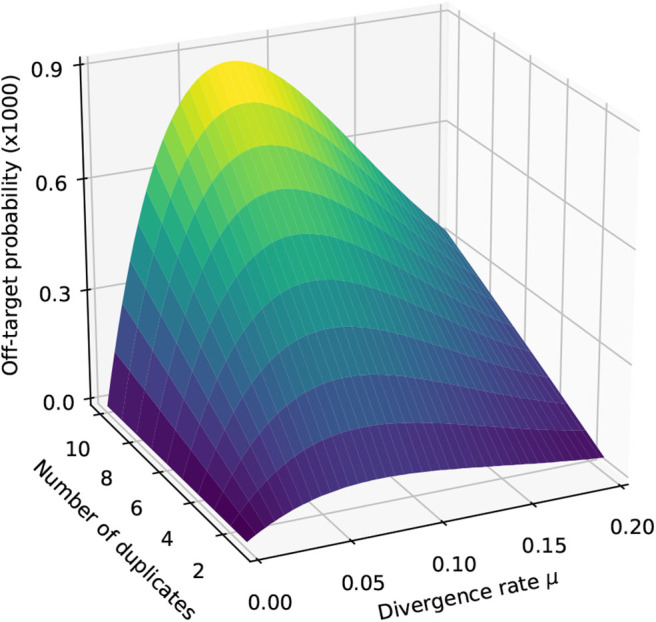

Figure 9 shows the result for a number of duplicates N from 1 to 10 and for a divergence rate μ from 0 to 0.20. The first observation is that the probability that seeding is off-target increases with N. This is also clear from expression (10). This can also be understood intuitively because the probability of not seeding the target is fixed and the probability of having an empty candidate set decreases as N increases. As a result the probability that the candidate set contains only invalid candidates increases with N.

Figure 9.

Off-target seeding probabilities (exact seeds). The surfaces show the probability that seeding is off-target as a function of the divergence rate μ and of the number N of duplicates. The results are shown for exact seeds of size γ = 19 and for reads of size k = 50 nucleotides sequenced with an error rate p = 0.01. The divergence rate μ is defined as the probability that a given duplicate differs from the target at any given position. Note the factor 1,000 in the scale of the z-axis.

The second observation is that there exists a “worst” value of μ situated around 0.070. When μ is much smaller, the duplicates tend to be exactly identical to the target, making it impossible that there is a seed for a duplicate but no seed for the target. When μ is much larger, the duplicates are far from the target and they are unlikely to be in the candidate set at all. In expression (10), the only term that depends on μ is $P(\bar{S}_0 \cap \bar{S}_1)$. Since expression (10) decreases when this term increases, the maximum of expression (10) corresponds to the minimum of $P(\bar{S}_0 \cap \bar{S}_1)$. This is why the worst value of μ is the same for every N.

5. Off-Target Skip Seeds

To compute the probability that the seeding process is off-target when using skip seeds, we observe that the logic of section 4 can be transposed with few modifications. In particular, the probability of interest can be computed through expression (10), where S0 is the event that the read has a skip seed for the target (instead of an exact seed) and S1 is the event that the read has a skip seed for the first duplicate (instead of an exact seed).

We have already seen how to compute $P(\bar{S}_0)$ in section 3.6; we now need to find a way to compute $P(\bar{S}_0 \cap \bar{S}_1)$ when using skip seeds.

5.1. The Skip Dual Encoding

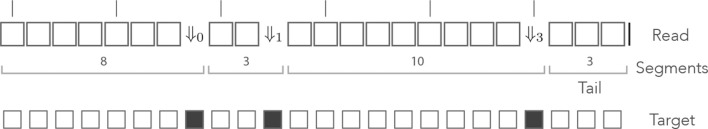

As before, we recode the reads in a specialized alphabet. We define the skip-n dual alphabet as $\{\square, \downarrow^-_j, \downarrow^+_j, \Downarrow_0, \ldots, \Downarrow_n, *, |\}$. The symbols □, |, $\downarrow^-_j$ and $\downarrow^+_j$ (j ≥ 1) have the same meaning as in the dual alphabet of section 4.1, i.e., the □ symbol stands for a double match, the | terminator marks the end of the read, the symbol $\downarrow^-_j$ indicates a mismatch against the − sequence with a match of length j for the + sequence, and conversely the symbol $\downarrow^+_j$ indicates a mismatch against the + sequence with a match of length j for the − sequence. The symbols ⇓j (0 ≤ j ≤ n) indicate that both sequences have match length 0 and that the next non-skipped position is j nucleotides further. The symbol * indicates that it does not matter whether the nucleotide is a match or a mismatch, as we will explain below.

Figure 10 shows the read from Figure 8 represented in the skip-3 dual encoding. It is important to note several differences with Figure 8. The first is that the symbols ⇓j (0 ≤ j ≤ n) are not always associated with double mismatches. For instance, the symbol ⇓0 on the right side of the read corresponds to a mismatch for the + sequence only. This happens whenever the + and the − sequences are mismatched in the same interval between non-skipped positions (the mismatches do not need to be on the same nucleotide).

Figure 10.

Example of skip dual encoding. The read of Figure 8 is represented in the skip dual alphabet. The vertical bars above the read indicate non-skipped positions. The symbols $\downarrow^-_j$ and $\downarrow^+_j$ (j ≥ 1) have the same meaning as in the dual alphabet. The symbols ⇓j indicate that both sequences have match length 0 and that the next non-skipped position is located j nucleotides downstream. Other features are as in Figure 8.

Also observe that the symbol ⇓1 is followed by a * symbol, indicating that it does not matter whether the nucleotide is a match or a mismatch for any of the two sequences. After the ⇓1 symbol, both sequences have match length 0 and we have to “wait” for a potential new seed one nucleotide further downstream. As in Appendix B.3, the ⇓j symbols are followed by j symbols *, unless the end of the read comes before the next non-skipped position. After a sequence of * symbols, the read is either finished or at a non-skipped position. In the second case, the read is in the same state as after a ⇓0 symbol, so we can consider that the * symbol allows the terminators ⇓j (1 ≤ j ≤ n) to just “fast forward” to either ⇓0 or |, as shown in Appendix B.3.

As in the previous section, let a, b, c, and d denote the probabilities of a double match, a mismatch against −, a mismatch against + and a double mismatch, respectively. With these definitions, the weighted generating functions of the symbols □, $\downarrow^-_j$ and $\downarrow^+_j$ (j ≥ 1) are az, bz, and cz, respectively. The weighted generating function of the * symbol is z, and that of the symbols ⇓j (0 ≤ j ≤ n) will be worked out in the sections below.

5.2. Segments Following ⇓i (1 ≤ i ≤ n)

A ⇓i terminator is followed by up to i symbols *. If there are fewer than i symbols *, the read is finished so the segment must be a tail. Recall that the weighted generating function of the | terminator is 1, so the weighted generating function of tail segments is $1 + z + \ldots + z^{i-1}$.

If there are i symbols *, the sequence ends at a non-skipped position, i.e. in the same state as after a ⇓0 terminator. This is not a segment proper, because there is no terminator, but in the transfer matrix the symbols ⇓i (1 ≤ i ≤ n) project directly to the symbol ⇓0 with weighted generating function zi.

5.3. Segments Following ⇓0

After a ⇓0 symbol, all the counters are reset as in the beginning of the read. If the next terminator is |, the segment is a tail and we only have to make sure that it contains fewer than γ symbols □, otherwise this would create a seed. Since each symbol □ is a double match with weighted generating function az, the weighted generating function of tail segments following ⇓0 is thus $1 + az + \ldots + (az)^{\gamma-1}$.

If the next terminator is a symbol (j ≥ 1), the segment is a match of size j for the + sequence. This imposes j < γ, otherwise the segment would create a seed. Such segments consist of j − 1 symbols □ followed by the terminator, so their weighted generating function is $(az)^{j-1}bz$ with 1 ≤ j < γ.

Conversely, if the next terminator is a symbol (j ≥ 1), we can apply the same rationale to see that the weighted generating function is $(az)^{j-1}cz$, where 1 ≤ j < γ.

Finally, if the next terminator is a ⇓j symbol (0 ≤ j ≤ n), we must not only make sure that the segment contains fewer than γ symbols □, but also keep track of the position of the next non-skipped position (stored in index j). Here we can follow verbatim the rationale of section 3.6 where we replace the probability of a match (previously q) by that of a double match (now a), and the probability of a mismatch (previously p) by that of a double mismatch (now d). Replacing the symbols in expression (4), we see that the weighted generating function is $(az)^x \cdot \left(1 + (az)^{n+1} + \ldots + (az)^{(n+1)m}\right)dz$, where x = n − j (mod n + 1) and m = ⌊(γ − 1 − x)/(n + 1)⌋.
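The last expression can be expanded into explicit polynomial coefficients. The following C function is a minimal sketch of that expansion; the function name and the array layout are assumptions made for illustration and are not taken from the Sesame library.

```c
#include <assert.h>

/* Coefficients of the weighted generating function
 *   (az)^x (1 + (az)^(n+1) + ... + (az)^((n+1)m)) dz
 * for segments that follow a reset terminator and end with a double
 * mismatch located j nucleotides before the next non-skipped position.
 * Hypothetical helper: coef must hold max_deg + 1 doubles and coef[k]
 * receives the weight of z^k. */
void wgf_reset_to_double_mismatch(int n, int gamma, int j,
                                  double a, double d,
                                  double *coef, int max_deg)
{
    assert(n >= 0 && gamma >= 1 && j >= 0 && j <= n && max_deg >= 0);
    for (int k = 0; k <= max_deg; k++) coef[k] = 0.0;

    int x = (n - j) % (n + 1);   /* double matches in the shortest segment */

    double w = d;                /* becomes a^x * d after the loop */
    for (int t = 0; t < x; t++) w *= a;

    double step = 1.0;           /* a^(n+1) */
    for (int t = 0; t <= n; t++) step *= a;

    /* The number of double matches must stay below gamma; stepping by
     * n + 1 enumerates the m + 1 admissible segment sizes. */
    for (int len = x; len <= gamma - 1; len += n + 1) {
        if (len + 1 > max_deg) break;
        coef[len + 1] = w;       /* + 1 for the terminator itself */
        w *= step;
    }
}
```

Iterating directly on the number of double matches avoids computing m with an integer division, which in C truncates toward zero and would need a special case when x exceeds γ − 1.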

5.4. Segments Following

At a terminator (i ≥ 1), the read contains a match of length i for the + sequence. If the next segment is a tail, we must make sure that the match length for the + sequence does not exceed γ − 1. This means that we can have up to γ − i − 1 symbols □ and thus that the weighted generating function of the tail segments is $1 + az + \ldots + (az)^{\gamma-i-1}$.

If the next terminator is a symbol, we must have j > i because there is no mismatch against the + sequence. In this case, we must only make sure that the total match length for the + sequence remains lower than γ. Such segments contain j − i − 1 symbols □ followed by the terminator so their weighted generating function is $(az)^{j-i-1}bz$ with i < j ≤ γ − 1.

For the last two types of terminators, we must pay attention to the fact that in general, a mismatch against + that follows a mismatch against − can be represented by either a ⇓j symbol (0 ≤ j ≤ n) or a symbol (j ≥ 1). The terminator is ⇓j if the two mismatches are within the same interval between non-skipped positions because both sequences locally have match length 0. If the mismatch against + is in another interval, the terminator is because in that case the − sequence has a positive match length. For now, bear in mind that a mismatch against the + sequence only can produce a ⇓j terminator (0 ≤ j ≤ n); this will be important later.

If the next terminator is (j ≥ 1), then it must be separated from the preceding terminator by a non-skipped position, which imposes a lower bound on the size of the segment. Since at the terminator the match length for + was i, there must be a non-skipped position i nucleotides before. The number of nucleotides from the terminator to the next non-skipped position is thus y = −i (mod n + 1), so the minimum segment length is y + 1. There is also an upper bound on the size of the segment because the match length for the + sequence cannot become higher than γ − 1, imposing the length to be lower than γ − i. The shortest segment is terminated by , and the longest by . Finally, the weighted generating function of segments terminated by following the terminator is $(az)^{y+j-1}cz$ with 1 ≤ j ≤ γ − y − i. Note that for some values of i and y, no j can satisfy the last inequality.
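As a worked instance of these bounds, consider skip-3 seeds (n = 3) with γ = 19. These values are chosen here for illustration only and do not correspond to a setting used elsewhere in the text.

```latex
\begin{align*}
i = 5:  &\quad y = -5 \bmod 4 = 3,  \quad 1 \le j \le 19 - 3 - 5 = 11,
         \quad \text{weights } (az)^{j+2}cz \\
i = 16: &\quad y = -16 \bmod 4 = 0, \quad 1 \le j \le 19 - 0 - 16 = 3,
         \quad \text{weights } (az)^{j-1}cz \\
i = 17: &\quad y = -17 \bmod 4 = 3, \quad 1 \le j \le 19 - 3 - 17 = -1,
         \quad \text{no admissible } j
\end{align*}
```

The last case shows how the constraint can be empty: with i = 17 the next non-skipped position is reached only after the match length for the + sequence has already hit γ.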

Finally, if the next terminator is ⇓j (0 ≤ j ≤ n) we have to distinguish two cases, depending on whether the segment ends with a double mismatch or with a single mismatch against the + sequence. For the first case we can apply the rationale of section 3.6. Recall that the index j indicates the number of nucleotides until the next non-skipped position. Let ℓ0 be the length of the shortest possible segment. The lengths of the other segments are of the form ℓ0 + n + 1, …, ℓ0 + m(n + 1), where the integer m must be chosen so that the segment does not create a seed. At the terminator, there is a match of length i for the + sequence, and the last nucleotide of the segment is a mismatch, so m is the largest integer such that i + ℓ0 + m(n + 1) − 1 < γ, i.e. m = ⌊(γ − i − ℓ0)/(n + 1)⌋.

The terminator is y = −i (mod n + 1) nucleotides before the next non-skipped position so the shortest segment has length ℓ0 = y − j if y > j, and n + 1 − j + y otherwise. This is equivalent to defining ℓ0 as x + 1 where x is the number of □ symbols in the shortest segment, i.e., x = −i − j − 1 (mod n + 1). Summing the weighted generating functions of the individual segments, we find $(az)^x\left(1 + (az)^{n+1} + \ldots + (az)^{m(n+1)}\right)dz$.
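The quantities y, ℓ0, x and m can be computed directly from n, γ, i and j. The sketch below is one possible way to do so in C; the type and function names are hypothetical and not part of Sesame. Because the `%` operator in C can return a negative remainder for a negative operand, the sketch uses a small helper to obtain the mathematical residue.

```c
#include <assert.h>

/* Non-negative remainder of v modulo m (m > 0), unlike the C operator %. */
static int mod_floor(int v, int m)
{
    int r = v % m;
    return r < 0 ? r + m : r;
}

/* Hypothetical container for the quantities defined in the text. */
typedef struct {
    int y;   /* nucleotides from the terminator to the next non-skipped position */
    int l0;  /* length of the shortest segment */
    int x;   /* number of double matches in the shortest segment */
    int m;   /* index of the longest admissible segment, -1 if there is none */
} seg_bounds_t;

seg_bounds_t seg_bounds(int n, int gamma, int i, int j)
{
    assert(n >= 0 && i >= 1 && i < gamma && j >= 0 && j <= n);
    seg_bounds_t s;
    s.y = mod_floor(-i, n + 1);
    s.l0 = (s.y > j) ? s.y - j : n + 1 - j + s.y;
    s.x = mod_floor(-i - j - 1, n + 1);
    assert(s.l0 == s.x + 1);  /* the two definitions of l0 agree */

    /* m = floor((gamma - i - l0) / (n + 1)); integer division truncates
     * toward zero in C, so the negative case is handled separately. */
    int room = gamma - i - s.l0;
    s.m = (room < 0) ? -1 : room / (n + 1);
    return s;
}
```

For instance, with n = 3, γ = 19, i = 5 and j = 1, the function gives y = 3, ℓ0 = 2, x = 1 and m = 3, so the admissible segment lengths are 2, 6, 10 and 14.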

The last remaining issue is that a mismatch against the + sequence only can produce a ⇓j terminator. This happens when the preceding terminator is not separated from the mismatch by a non-skipped position (in this case both sequences locally have match length 0). The terminator is located y nucleotides before the next non-skipped position, so if the terminator is from ⇓0 to ⇓y−1 we need to add the term $(az)^x cz$ to the previous weighted generating function, where c is the probability of a mismatch against the + sequence only. In conclusion, the weighted generating function of segments terminated by ⇓j is $(az)^x\left(1 + (az)^{n+1} + \ldots + (az)^{m(n+1)}\right)dz$, plus the term $(az)^x cz$ if j < y and 0 otherwise.

5.5. Segments Following

We can find the weighted generating functions by just reversing the + and − signs in the previous section, which also exchanges the roles of b and c. This way we can see that the weighted generating function of tail segments is $1 + az + \ldots + (az)^{\gamma-i-1}$.

Likewise, the weighted generating function of segments terminated by is $(az)^{j-i-1}cz$ with i < j ≤ γ − 1.

The weighted generating function of segments terminated by is $(az)^{y+j-1}bz$ with y = −i (mod n + 1) and 1 ≤ j ≤ γ − y − i.

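Finally, the weighted generating function of segments terminated by ⇓j is $(az)^x\left(1 + (az)^{n+1} + \ldots + (az)^{m(n+1)}\right)dz$, plus the term $(az)^x bz$ if j < y and 0 otherwise, where x, y and m are defined as in the previous section.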

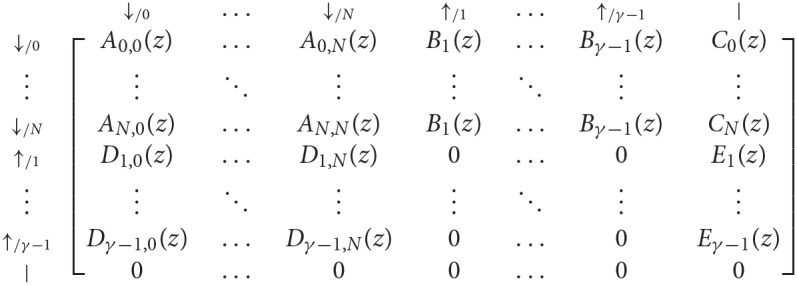

5.6. Transfer Matrix

We now have all the elements to specify the transfer matrix of reads with no skip-n seed for either sequence. Recall that n is the number of skipped nucleotides, γ is the minimum seed length, a is the probability of a double match, b is the probability of a mismatch against the − sequence only, c is the probability of a mismatch against the + sequence only and d is the probability of a double mismatch. For notational convenience we define

| (12) |

| (13) |

| (14) |

| (15) |

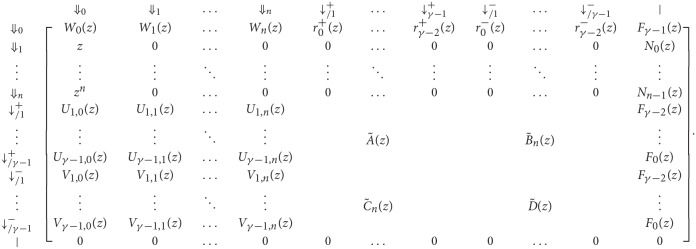

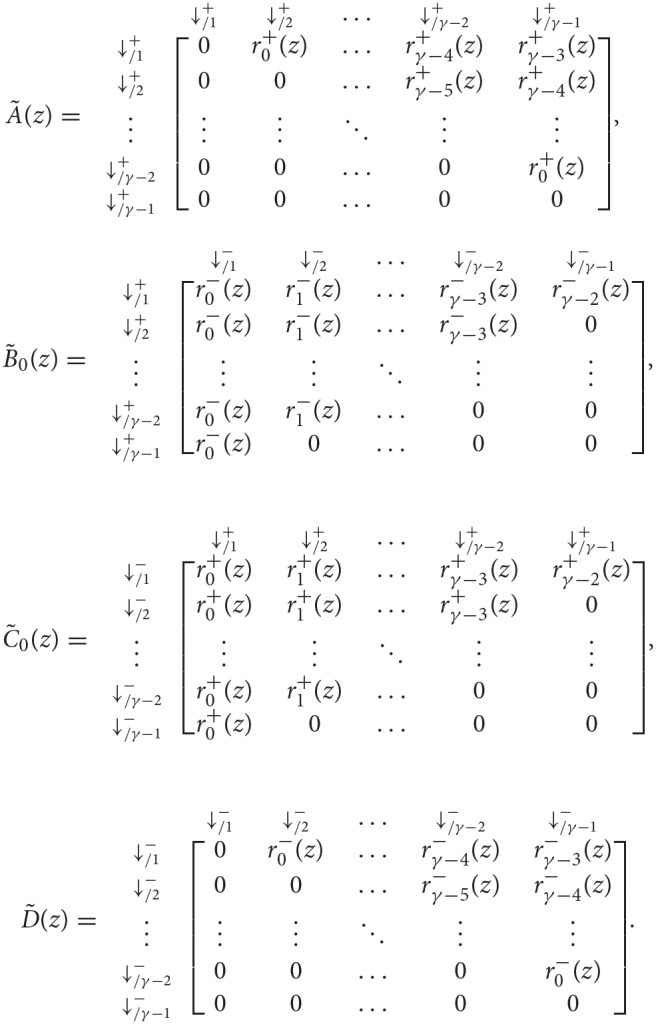

With these notations, the information from the previous sections can be summarized in the transfer matrix equal to

The matrices Ã(z) and in the expression of are the same as in section 4.1. They are reproduced here for convenience.

The matrices and are defined as

with

Now returning to the problem of computing , the + sequence is interpreted as the target and the − sequence as the duplicate. Based on the assumptions of the error model presented in section 3.1, this implies that a = (1 − p)(1 − μ), b = (1 − p)μ, c = pμ/3, and d = p(1 − μ/3).
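The four probabilities follow directly from p and μ. The C sketch below computes them and can be used to check that they sum to 1; the struct and function names are assumptions introduced for illustration, not identifiers from Sesame.

```c
#include <assert.h>

/* Hypothetical container for the per-nucleotide probabilities. */
typedef struct {
    double a;  /* double match */
    double b;  /* mismatch against the - sequence (duplicate) only */
    double c;  /* mismatch against the + sequence (target) only */
    double d;  /* double mismatch */
} dual_probs_t;

/* p: probability of a sequencing error; mu: divergence rate of the duplicate. */
dual_probs_t dual_probs(double p, double mu)
{
    assert(p >= 0.0 && p <= 1.0 && mu >= 0.0 && mu <= 1.0);
    dual_probs_t w;
    w.a = (1.0 - p) * (1.0 - mu);  /* no error, duplicate identical to target */
    w.b = (1.0 - p) * mu;          /* no error, duplicate differs from target */
    w.c = p * mu / 3.0;            /* error that happens to match the duplicate */
    w.d = p * (1.0 - mu / 3.0);    /* error that matches neither sequence */
    return w;
}
```

With p = 0.01 and μ = 0.1, this gives a = 0.891 and b = 0.099, while c and d are approximately 0.00033 and 0.00967.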

The computation is performed as described in section 4.1. We compute the successive powers of in the arithmetic of truncated polynomials and stop the iterations using the same criterion. The only modification is that the top right term of is not the weighted generating function of reads with s mismatches (where double mismatches count as one). The reason is that the sequences of * symbols are not terminated by a mismatch. However, there can be at most one sequence of * symbols for each mismatch or double mismatch, so the reads described by the top right term of have at least ⌊s/2⌋ mismatches.

We thus define as the upper bound on the probability of a mismatch, i.e., and use the updated formula (7) from section 4.5

With this upper bound, we can compute the terms of the partial sums until the ignored terms become negligible, i.e. until we can be sure that the coefficient of interest $a_k$ is accurate to within a chosen ε.
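The arithmetic of truncated polynomials used for the matrix powers relies on one basic operation: multiplying two polynomials while discarding every term of degree higher than k, the read length. The C function below is a minimal sketch of that operation under an assumed dense-array representation; it is not the implementation used in Sesame.

```c
#include <assert.h>
#include <stddef.h>

/* Multiply polynomials u and v and keep only the terms of degree <= k.
 * All three arrays hold k + 1 coefficients, index = degree.
 * Assumption of this sketch: out does not alias u or v. */
void trunc_poly_mul(const double *u, const double *v, double *out, size_t k)
{
    assert(u != out && v != out);
    for (size_t t = 0; t <= k; t++) out[t] = 0.0;
    for (size_t s = 0; s <= k; s++) {
        if (u[s] == 0.0) continue;          /* skip empty terms */
        for (size_t t = 0; s + t <= k; t++)
            out[s + t] += u[s] * v[t];      /* terms beyond z^k are dropped */
    }
}
```

For example, with k = 2 the product of 1 + z and 1 + z + z² is stored as 1 + 2z + 2z², the z³ term being discarded. Truncation is safe here because only the coefficient of z^k is needed and higher-degree terms never contribute to it.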

Remark 3. Observe that when n = 0 the matrix is identical to the matrix of section 4.1, again consistent with the fact that exact seeds are skip-0 seeds. The same applies to and .

5.7. Illustration

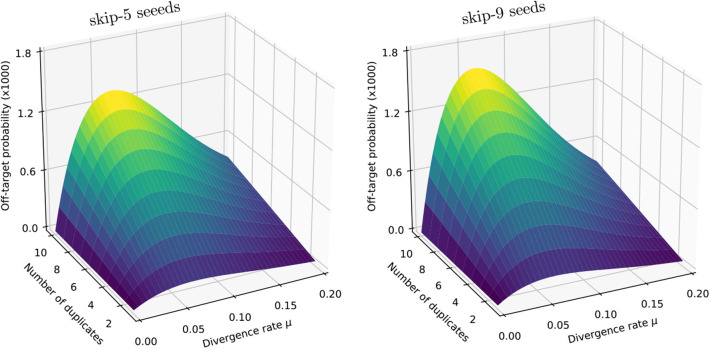

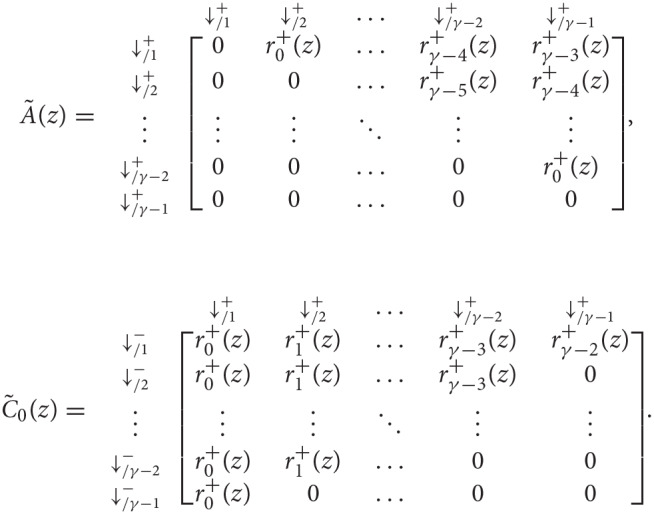

We illustrate the strategy delineated above using the same settings as in section 4.6 (reads of length k = 50, probability of sequencing error p = 0.01 and seeds of minimum size γ = 19), except that we replace exact seeds by skip-5 and skip-9 seeds.

Figure 11 shows the result for a number of duplicates N from 1 to 10 and for a divergence rate μ from 0 to 0.20. The surfaces have the same general aspect as in Figure 9. The probability that seeding is off-target increases with N and there is again a worst value of μ, because the maximum of minimizes expression (10) for every value of N. However, those values of μ are not the same (they are approximately 0.065 and 0.060 for skip-5 and skip-9 seeds, respectively).

Figure 11.

Off-target seeding probabilities (skip seeds). The surfaces show the probability that seeding is off-target for skip-5 and skip-9 seeds of size γ = 19. Read size and error rate are the same as in Figure 9, i.e., k = 50 and p = 0.01. The divergence rate μ is defined as the probability that a given duplicate differs from the target at any given position. Note the factor 1,000 in the scale of the z-axis.

Importantly, Figure 11 reveals that skipping 5 nucleotides increases the chances that seeding is off-target by a factor of approximately 1.5, and skipping 9 nucleotides by a factor of approximately 1.8 (compare with Figure 9). In this setting, skipping nucleotides seems to have little effect, but this is not a general conclusion. Indeed, skipping nucleotides decreases the probability of on-target seeding (skipping positions implies fewer on-target seeds) but increases the probability of null seeding (skipping positions implies fewer seeds overall), so the effects must be evaluated on a case-by-case basis. Skipping nucleotides can even decrease the probability that seeding is off-target. Concretely, for seeds of size γ = 19 in reads of size k = 50 with N = 10 duplicates at divergence μ = 0.1 and a sequencing error rate p = 0.1, the probability of off-target seeding is approximately 0.178 with exact seeds and 0.035 with skip-9 seeds, showing that skipping nucleotides can have different effects.

This kind of information is critical for choosing the best seeding strategy. Yet, the off-target seeding probability is not the only criterion. Equally important considerations are the probability of on-target seeding, the computational resources required to implement a particular seeding strategy and other sources of mapping errors (see discussion in section 8.1). The benefit of a theory to compute seeding probabilities is to have access to this knowledge.

6. Off-Target MEM Seeds

MEM seeds are substantially more complex than exact seeds and skip seeds because we need to take into account all the duplicates in the combinatorial construction.

6.1. Hard and Soft Masking

We first introduce two important notions that will be the key to understanding the behavior of MEM seeds.

Definition 8. At a given position of the read, a duplicate is a hard mask if its match length on the left side is strictly longer than the match length of the target. A duplicate is a soft mask if it has the same match length as the target.

Figure 12 gives a graphical intuition of hard and soft masks. It is important to bear in mind that hard and soft masks depend on the position of interest: a sequence can be a mask at the left end of the read and not at the right end, or the opposite.

Figure 12.

Example of hard and soft masks. Genomic sequences are shown below a read where open squares represent nucleotides. In the sequences, the open squares represent matches and the closed squares represent mismatches. The nucleotides contributing to the match length are represented as gray boxes. At the focus position, the match length of the target is 7. The first duplicate is a hard mask because its match length is 13 > 7. The second duplicate is a soft mask because its match length is 7, the same as the target. The third duplicate is not a mask because its match length is 2 < 7.
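Definition 8 translates into a simple comparison of match lengths. The C sketch below classifies a duplicate at a given position; the names are hypothetical, and the helper `left_match_length` assumes that the match length counts the consecutive matches ending at the focus position, that position included.

```c
#include <assert.h>

typedef enum { NOT_A_MASK, SOFT_MASK, HARD_MASK } mask_status_t;

/* Number of consecutive matches ending at position pos (inclusive).
 * is_match[t] is nonzero if nucleotide t of the read matches the sequence.
 * Assumption of this sketch: the focus position itself is counted. */
int left_match_length(const unsigned char *is_match, int pos)
{
    assert(pos >= 0);
    int len = 0;
    while (pos >= 0 && is_match[pos]) {
        len++;
        pos--;
    }
    return len;
}

/* Masking status of a duplicate, given the match lengths of the target
 * and of the duplicate at the same position of the read. */
mask_status_t classify_duplicate(int target_len, int duplicate_len)
{
    assert(target_len >= 0 && duplicate_len >= 0);
    if (duplicate_len > target_len) return HARD_MASK;
    if (duplicate_len == target_len) return SOFT_MASK;
    return NOT_A_MASK;
}
```

With the values of Figure 12, `classify_duplicate(7, 13)` returns `HARD_MASK`, `classify_duplicate(7, 7)` returns `SOFT_MASK` and `classify_duplicate(7, 2)` returns `NOT_A_MASK`.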

Hard and soft masks explain the counter-intuitive properties of MEM seeds. For instance, in Figure 4 the target cannot be discovered because every nucleotide of the read has a hard mask. In Figure 5, the target could be discovered if the read were shorter because a hard mask would turn into a soft one.

From the definition, we see that the last nucleotide of every strict on-target MEM seed is always unmasked. Conversely, an unmasked nucleotide always belongs to exactly one strict on-target MEM (not necessarily a seed because the size of the MEM can be less than γ). Also, the last nucleotide of every shared on-target MEM seed is always soft-masked, but a soft-masked nucleotide does not always belong to a shared on-target MEM.

Since hard and soft masks inform us about the positions of on-target MEM seeds, we construct an alphabet that encodes the masking status of the nucleotides.

6.2. The MEM Alphabet

As before, we recode the reads as sequences of letters from a specialized alphabet called the MEM alphabet .

The symbols ↓/m (m ≥ 0) indicate that the nucleotide is a sequencing error and m is the number of duplicates that match the nucleotide. Since a sequencing error is always a mismatch against the target, the symbol ↓/0 indicates that the nucleotide is a mismatch against every sequence. The symbols ↑/i indicate a change in masking status: the nucleotide is not masked but the previous is—this happens when all the masks fail to extend beyond this position. The index i ≥ 1 is the number of nucleotides since the last mismatch or since the beginning of the read. All the other nucleotides are represented by the symbol □, implying that □ symbols are never sequencing errors and always match the target. The symbol | is appended to the end of the read as before.

Note that in the symbols ↓/m and ↑/i, the numbers m and i have different meanings. In the symbol ↓/m, the index m is a number of sequences (0 ≤ m ≤ N where N is the number of duplicates); in the symbol ↑/i, the index i is a number of nucleotides. Figure 13 shows the encoding of a read in the MEM alphabet.

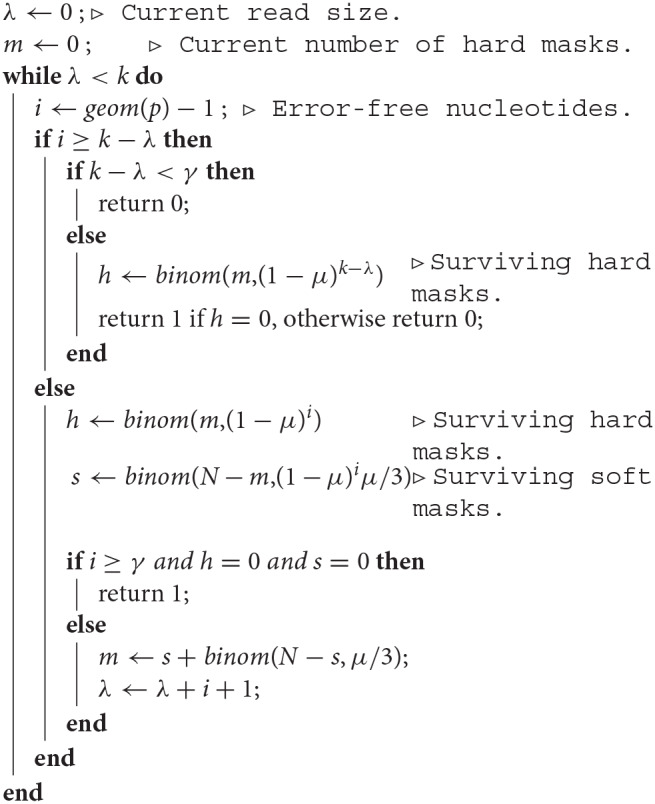

Figure 13.