Abstract

Progress of machine learning in critical care has been difficult to track, in part due to the absence of public benchmarks. Other fields of research (such as computer vision and natural language processing) have established various competitions and public benchmarks. The recent availability of large clinical datasets has made it possible to establish public benchmarks in critical care as well. Taking advantage of this opportunity, we propose a public benchmark suite to address four areas of critical care, namely mortality prediction, estimation of length of stay, patient phenotyping and risk of decompensation. We define each task and compare the performance of clinical models, baseline models and deep learning models using the eICU critical care dataset of around 73,000 patients. This is the first public benchmark on a multi-centre critical care dataset, comparing the performance of the clinical gold standard with our predictive model. We also investigate the impact of numerical variables as well as the handling of categorical variables on each of the defined tasks. The source code, detailing our methods and experiments, is publicly available so that anyone can replicate our results and build upon our work.

Introduction

The increasing availability of clinical data and advances in machine learning have made it possible to address a wide range of healthcare problems, such as risk assessment and prediction in acute, chronic and critical care. Critical care is a particularly data-intensive field, since continuous monitoring of patients in Intensive Care Units (ICUs) generates large streams of data that can be harnessed by machine learning algorithms. However, progress in harnessing digital health data faces several obstacles, including reproducibility of results and comparability between competing models. While other areas of machine learning research, such as image and natural language processing, have established a number of benchmarks and competitions (including the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) [1] and the National NLP Clinical Challenges (N2C2) [2], respectively), progress in machine learning for critical care has been difficult to measure, in part due to the absence of public benchmarks. The availability of large clinical datasets, including the Medical Information Mart for Intensive Care (MIMIC III) [3] and, more recently, the multi-centre eICU Collaborative Research Database [4], is opening the possibility of establishing public benchmarks and consequently tracking the progress of machine learning models in critical care. Taking advantage of this opportunity, we propose a public benchmark suite to address four areas of critical care, namely mortality prediction, estimation of length of stay, patient phenotyping and risk of decompensation. We define each task and evaluate our algorithms on a multi-centre dataset of 73,718 patients (containing 4,564,844 clinical records) collected from 335 ICUs across 208 hospitals. While previous work in this area has focused on the single-center MIMIC III clinical dataset [5], our work is the first to focus on a multi-center critical care dataset, the eICU database [4].
Evaluating models on a multi-center dataset typically results in the inclusion of a wider range of patient groups, a larger number of patients, greater external validity and lower systematic bias in comparison to a single-center dataset, resulting in increased generalisability of the study [6, 7]. However, building a predictive model on a multi-centre dataset is more challenging due to the heterogeneity of the data. Nevertheless, the performance of our models (as measured by AUC ROC) compares favourably with the performance of models using the single-center MIMIC III dataset, as reported in [5].

The main contributions of this work are as follows: i) we provide the baseline performance (using either the clinical gold standard or a Logistic/Linear Regression algorithm) and compare it against our benchmark result, achieved using a model based on bidirectional long short-term memory (BiLSTM); ii) we investigate the impact of categorical and numerical variables on all four benchmarking tasks; iii) we evaluate entity embedding for categorical variables versus one-hot encoding; iv) we show that for some tasks the number of variables can be reduced significantly without greatly impacting prediction performance; and v) we report six evaluation metrics for each of the tasks, facilitating direct comparison with future results. The source code for our experiments is publicly available at https://github.com/mostafaalishahi/eICU_Benchmark, so that anyone with access to the public eICU database can replicate our experiments and build upon our work.

Materials and methods

Ethics statement

This study was an analysis of a publicly-available, anonymised database with pre-existing institutional review board (IRB) approval; thus, no further approval was required.

eICU dataset description and cohort selection

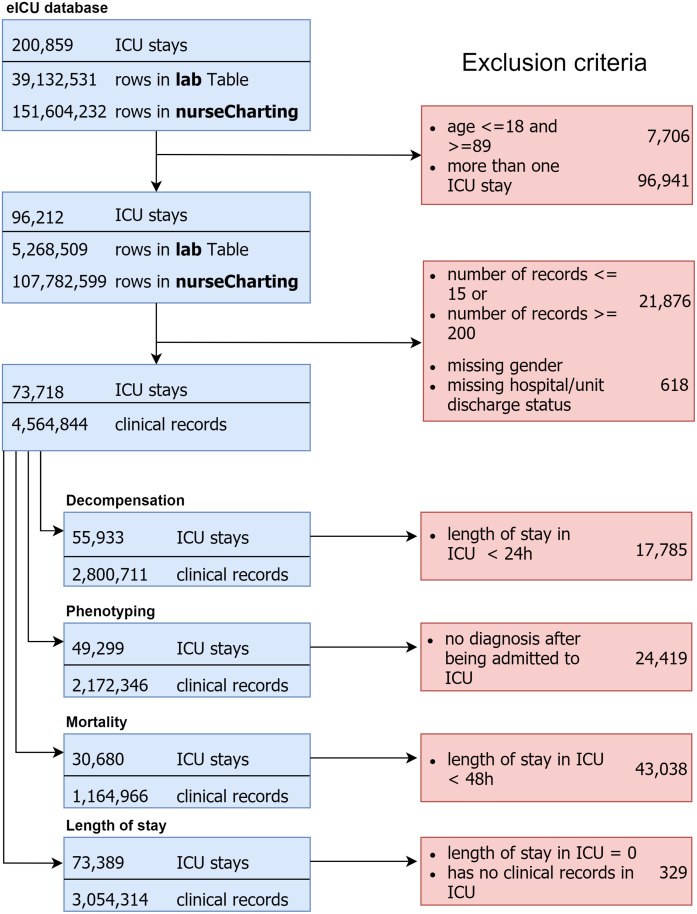

The eICU Collaborative Research Database [4] is a multi-center intensive care unit database with high-granularity data for over 200,000 admissions to ICUs monitored by eICU programs across the United States. The eICU database comprises 200,859 patient unit encounters for 139,367 unique patients admitted between 2014 and 2015 to 208 hospitals located throughout the US. We selected adult patients (age > 18) who had an ICU admission with at least 15 records, leading to 73,718 unique patients with a median age of 62.41 years (Q1–Q3: 52-75), of whom 45.5% were female. The hospital mortality rate was 8.3%, and the average length of stay in hospital and in unit were 5.29 days and 3.9 days respectively (further details are provided in Table 1). Cohort selection criteria are detailed in Fig 1.

Table 1. Characteristics and mortality outcome measures.

*LoS (Length of Stay). Continuous variables are presented as Median [Interquartile Range Q1–Q3]; binary or categorical variables as Count (%).

| | Overall | Dead at Hospital | Alive at Hospital |
|---|---|---|---|
| ICU Admissions | 73,718 | 6,167 | 67,551 |
| Age | 62.41 [52-75] | 68.12 [59-80] | 61.8 [52-75] |
| Gender (F) | 33,544 (45.5) | 2,830 (45.8) | 30,714 (45.4) |
| Ethnicity | | | |
| Caucasian | 56,973 (77.2) | 4,866 (78.9) | 52,107 (77.1) |
| African American | 7,982 (10.8) | 582 (9.4) | 7,400 (10.9) |
| Hispanic | 2,937 (3.98) | 226 (3.6) | 2,711 (4) |
| Asian | 1,174 (1.59) | 97 (1.5) | 1,077 (1.5) |
| Native American | 413 (0.56) | 42 (0.68) | 371 (0.54) |
| Unknown | 4,239 (5.7) | 354 (5.7) | 3,885 (5.7) |
| Outcomes | | | |
| Hospital LoS* (days) | 5.29 [2.53-6.84] | 3.9 [1.42-5.22] | 5.41 [2.65-6.92] |
| ICU LoS* (days) | 2.32 [1.01-2.91] | 3.17 [1.19-4.43] | 2.24 [1-2.83] |
| Hospital Death | 6,167 (8.36) | 6,167 (100) | - |
| ICU Death | 4,575 (6.2) | 4,575 (74.1) | - |

Fig 1. Cohort selection criteria.

The final patient cohort contained 4,564,844 clinical records. We grouped these records into 1-hour windows, imputed missing values with the mean of the corresponding window, and kept the last valid record of each window.
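The windowing step can be illustrated with a minimal sketch. It reflects one possible reading of the description above: records are bucketed by hour since admission, gaps in the last record of each bucket are filled with the mean of the values observed in that bucket, and that last record is kept. The function name `bin_hourly`, the tuple layout and the variable names are assumptions made for illustration and are not taken from the released source code.

```python
from collections import defaultdict
from statistics import mean


def bin_hourly(records):
    """Collapse raw records into one row per (stay, hour).

    `records` is a list of (stay_id, offset_minutes, values) tuples, where
    `values` maps a variable name to a number or None (missing).
    This layout is hypothetical and chosen only for the example.
    """
    windows = defaultdict(list)
    for stay_id, offset_min, values in sorted(records, key=lambda r: (r[0], r[1])):
        windows[(stay_id, offset_min // 60)].append(values)

    hourly = {}
    for key, rows in windows.items():
        variables = {name for row in rows for name in row}
        # Mean of the observed values of each variable inside this window.
        window_mean = {}
        for name in variables:
            observed = [row[name] for row in rows if row.get(name) is not None]
            window_mean[name] = mean(observed) if observed else None
        # Keep the last record of the window, filling its gaps with the window mean.
        last = rows[-1]
        hourly[key] = {
            name: last[name] if last.get(name) is not None else window_mean[name]
            for name in variables
        }
    return hourly


records = [
    (1, 5, {"hr": 80, "temp": None}),
    (1, 30, {"hr": 100, "temp": 37.0}),
    (1, 50, {"hr": None, "temp": None}),
    (1, 70, {"hr": 70, "temp": None}),
]
hourly = bin_hourly(records)
```

A variable that is never observed inside a window stays missing (`None`) in this sketch; how such gaps are handled downstream is not specified here.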

Out of the 31 tables in the eICU (v1.0) database, we selected variables from the following tables: patient (administrative information and patient demographics), lab (laboratory measurements collected during routine care), nurse charting (bedside documentation) and diagnosis. The selection was based on advice from a clinician as well as consistency with similar tasks reported in the related work section. The selected variables are shown in Table 2 and are common to all four tasks.

Table 2. Selected variables for all the four tasks.

| Variable | Data Type |
|---|---|
| Heart rate | Numerical |
| Mean arterial pressure | Numerical |
| Diastolic blood pressure | Numerical |
| Systolic blood pressure | Numerical |
| O2 | Numerical |
| Respiratory rate | Numerical |
| Temperature | Numerical |
| Glucose | Numerical |
| FiO2 | Numerical |
| pH | Numerical |
| Height | Numerical |
| Weight | Numerical |
| Age | Numerical |
| Admission diagnosis | Categorical |
| Ethnicity | Categorical |
| Gender | Categorical |
| Glasgow Coma Score Total | Categorical |
| Glasgow Coma Score Eyes | Categorical |
| Glasgow Coma Score Motor | Categorical |
| Glasgow Coma Score Verbal | Categorical |

Description of tasks

In this section, we define four different benchmark tasks, namely in-hospital mortality prediction, remaining length of stay (LoS) forecasting, patient phenotyping, and risk of physiologic decompensation. The patient cohorts that result from applying the selection criteria of each task are outlined in Table 3.

Table 3. Number of patients and records in four tasks.

| Task | No. of patients | Clinical records |
|---|---|---|
| In-hospital Mortality | 30,680 | 1,164,966 |
| Remaining LoS | 73,389 | 3,054,314 |
| Phenotyping | 49,299 | 2,172,346 |
| Physiologic Decompensation | 55,933 | 2,800,711 |

Mortality prediction

In-hospital mortality is defined as the patient’s outcome at hospital discharge. This is a binary classification task, where each data sample spans a 1-hour window. The cohort for this task was selected based on the presence of a hospital discharge status in the patient’s record and a length of stay of at least 48 hours (we focus on prediction during the first 24 and 48 hours). These selection criteria resulted in 30,680 patients with 1,164,966 records.
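As a rough illustration of these criteria, the sketch below builds one mortality sample per stay. The function name, the argument layout and the status strings `"Alive"` and `"Expired"` are assumptions made for the example; they are not taken from the released source code.

```python
def mortality_sample(hourly_rows, los_hours, discharge_status, window_hours=48):
    """Return (features, label) for one stay, or None if the stay is excluded.

    `hourly_rows` is a list of (hour, values) pairs, one per 1-hour window.
    The status strings are assumed values used only for illustration.
    """
    if discharge_status not in ("Alive", "Expired"):
        return None  # no hospital discharge status recorded
    if los_hours < 48:
        return None  # stay shorter than the 48-hour inclusion threshold
    # Keep only the observation window used for prediction (first 24 or 48 hours).
    features = [values for hour, values in hourly_rows if hour < window_hours]
    label = 1 if discharge_status == "Expired" else 0
    return features, label


rows = [(hour, {"hr": 80}) for hour in range(60)]
features, label = mortality_sample(rows, los_hours=60, discharge_status="Expired",
                                   window_hours=24)
```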

Length of stay prediction

Length of stay is one of the most important factors accounting for overall hospital costs; as such, its forecast could play an important role in healthcare management [8]. Length of stay is estimated through analysis of events occurring within a fixed time window, once every hour from the initial ICU admission. This is a regression task, where we use the 20 clinical variables described in Table 2. For this cohort we selected patients whose length of stay was present in their records. These selection criteria resulted in 73,389 ICU stays, containing 3,054,314 records. The mean length of stay was 1.86 days with standard deviation of 1.94 days, as shown in Table 1.
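A minimal sketch of how hourly regression targets could be derived is given below. It assumes that the remaining LoS at hour t is simply the total ICU length of stay minus the time elapsed since admission, expressed in days; this formula and the function name are assumptions, since the text above does not spell them out.

```python
def remaining_los_labels(los_hours, step_hours=1):
    """Remaining length of stay (in days) at each hourly step from admission."""
    labels = []
    t = 0
    while t < los_hours:
        labels.append((los_hours - t) / 24.0)
        t += step_hours
    return labels


labels = remaining_los_labels(48)
```

For a 48-hour stay this yields 48 targets, starting at 2.0 days and decreasing by 1/24 of a day per step.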

Phenotyping

Phenotyping is a classification problem where we classify whether a condition (ICD-9 code) is present in a particular ICU stay record. Since any given patient may have more than one ICD-9 code, this is defined as a multi-label classification problem.

While our definition is focused on diagnosis using ICD codes for this task, the definition of phenotyping may encompass other domains, such as procedures [9] [10] for example. However, expanding the definition of phenotyping beyond standardised ICD codes would have required development of non-standardised rules, as no common standard approach for defining and validating EHR phenotyping algorithms exists [11] [12]. Consequently, it would have been challenging to compare this work with the already published benchmarks. Furthermore, there is some concern regarding reproducibility of rule-based phenotyping as found in [12]. Considering these issues, as well as keeping consistent with previously published benchmarks, we settled on using ICD codes as the basis for the definition of this task. Accordingly, the dataset contains 767 unique ICD codes, which are grouped into 25 categories shown in Table 4. The cohort for this task, considering initial inclusion criteria as well as recorded diagnosis during the ICU stay, resulted in 49,299 patients.

Table 4. Phenotype categories.

| Type | Phenotype |
|---|---|
| Acute | 1. Respiratory failure; insufficiency; arrest 2. Fluid and electrolyte disorders 3. Septicemia 4. Acute and unspecified renal failure 5. Pneumonia 6. Acute cerebrovascular disease 7. Acute myocardial infarction 8. Gastrointestinal hemorrhage 9. Shock 10. Pleurisy; pneumothorax; pulmonary collapse 11. Other lower respiratory disease 12. Complications of surgical 13. Other upper respiratory disease |
| Chronic | 1. Hypertension with complications 2. Essential hypertension 3. Chronic kidney disease 4. Chronic obstructive pulmonary disease 5. Disorders of lipid metabolism 6. Coronary atherosclerosis and related 7. Diabetes mellitus without complication |
| Mixed | 1. Cardiac dysrhythmias 2. Congestive heart failure; non hypertensive 3. Diabetes mellitus with complications 4. Other liver diseases 5. Conduction disorders |
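The multi-label formulation can be sketched as a multi-hot target vector with one entry per phenotype category. The three-entry code-to-category mapping below is purely illustrative; the actual grouping of the 767 ICD codes into 25 categories is not reproduced here, and the function name is hypothetical.

```python
def phenotype_labels(icd_codes, code_to_category, categories):
    """Return a multi-hot vector: 1 where a category is present in the stay."""
    present = {code_to_category[c] for c in icd_codes if c in code_to_category}
    return [1 if category in present else 0 for category in categories]


# Illustrative subset only (hypothetical mapping, not the benchmark's own file).
categories = ["Septicemia", "Pneumonia", "Essential hypertension"]
code_to_category = {
    "038.9": "Septicemia",
    "486": "Pneumonia",
    "401.9": "Essential hypertension",
}

labels = phenotype_labels(["038.9", "486", "999.99"], code_to_category, categories)
```

Codes that fall outside the mapped categories (here `"999.99"`) simply do not contribute to any label.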

Physiologic decompensation

There are a number of ways to define decompensation; however, in clinical settings the majority of early warning systems, such as the National Early Warning Score (NEWS) [13], are based on prediction of mortality within the next time window (such as 24 hours after the assessment). Following suit and keeping consistent with previously published benchmarks [5], we also define decompensation as a binary classification problem, where the target label indicates whether the patient dies within the next 24 hours. The cohort for this task consists of 55,933 patients (2,800,711 records), with a decompensation rate of around 6.5% (3,664 patients).
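The labelling rule can be sketched as follows: every hourly step of a stay receives a label of 1 if death occurs within the following 24 hours, and 0 otherwise. The function name and the convention of measuring the time of death in hours since ICU admission are assumptions made for the example.

```python
def decompensation_labels(n_hours, death_hour=None, horizon=24):
    """Label each hourly step 1 if death occurs within `horizon` hours after it.

    `death_hour` is the (assumed) time of death in hours since ICU admission,
    or None for a patient who survives.
    """
    labels = []
    for t in range(n_hours):
        dies_soon = death_hour is not None and 0 <= death_hour - t <= horizon
        labels.append(1 if dies_soon else 0)
    return labels


deceased = decompensation_labels(48, death_hour=48)
survivor = decompensation_labels(48)
```

For a patient who dies at hour 48, the first 24 steps are labelled 0 and the last 24 steps are labelled 1, which is what makes the positive class rare at the record level.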

Prediction algorithms

Baselines

We compare our model with two standard baseline approaches, namely logistic/linear regression (LR) and a 1-layer artificial neural network (ANN). The embeddings for these models are learned in the same way as for the proposed BiLSTM model, as explained in the section that follows.

Deep learning models

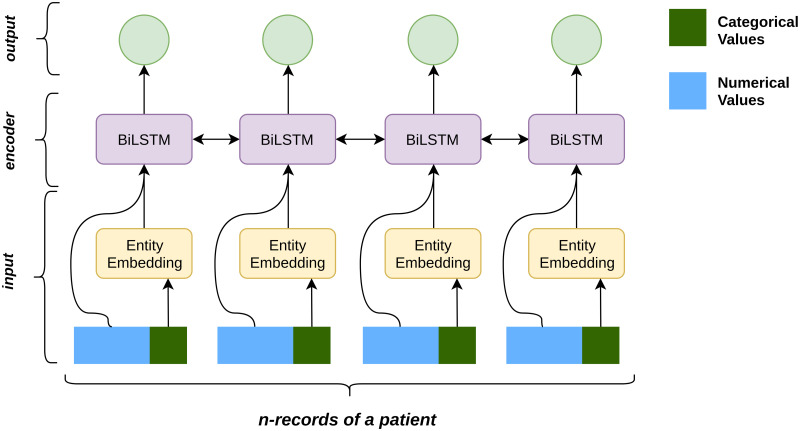

In this section, we describe the selected clinical variables, approaches to represent these variables as well as baseline and deep models used in this study. The architecture of this work consists of three modules, namely input module, encoder module and output module as shown in Fig 2.

Fig 2. Model architecture.

Input representation: We process and model numerical and categorical variables separately, as shown in Table 2. Categorical variables are represented using either one-hot encoding (OHE) or entity embedding (EE). OHE is the baseline approach, which converts the variables into a binary representation. Using this approach for our 7 categorical variables results in 429 unique records, rendering a large sparse matrix. In response, we represent each variable as an embedding and compare the performance with the OHE approach. We use entity embedding [14], where each categorical variable in the dataset is mapped to a vector and the corresponding embedding is added to the patient’s record. This entity embedding is learned by the neural network during the training phase along with the other parameters. The final representation of the input at time t is therefore as follows:

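$$x_t = [\mathrm{Num}_t \,;\, U\,\mathrm{Cat}_t]$$

where $\mathrm{Num}_t$ is the numerical variable, $\mathrm{Cat}_t$ is the categorical variable at time $t$, $U$ is the embedding matrix learned by the model, and $[\cdot\,;\cdot]$ denotes concatenation.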
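The difference between the two representations can be illustrated with a small sketch in plain Python. It assumes that the embedding of a categorical value is the row of the embedding matrix selected by the value's index (equivalent to multiplying a one-hot vector by the matrix) and that embeddings are concatenated to the numerical values. All names, sizes and the random initialisation are hypothetical; in the actual model the embeddings are learned during training.

```python
import random


def one_hot(index, size):
    """Binary OHE vector with a single 1 at `index`."""
    return [1.0 if i == index else 0.0 for i in range(size)]


def make_embedding(num_categories, dim, seed=0):
    """Randomly initialised embedding matrix (one row per category)."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(num_categories)]


def build_input(num_t, cat_t, embeddings):
    """Concatenate numerical values with one embedding per categorical variable."""
    x_t = list(num_t)
    for name, index in cat_t.items():
        x_t.extend(embeddings[name][index])
    return x_t


embeddings = {"gender": make_embedding(2, 3), "gcs_eyes": make_embedding(4, 3, seed=1)}
x_t = build_input([82.0, 36.9], {"gender": 1, "gcs_eyes": 3}, embeddings)

# Looking up row 1 is the same as multiplying the one-hot vector by the matrix.
U = embeddings["gender"]
via_one_hot = [sum(one_hot(1, 2)[i] * U[i][j] for i in range(2)) for j in range(3)]
```

With OHE the input width grows with the number of distinct categorical values, whereas with embeddings it grows only with the chosen embedding dimension per variable.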

Encoder: To capture sequential dependency in our data, we use a Recurrent Neural Network (RNN), which resembles a chain of repeating modules and can efficiently model sequential data [15]. An RNN takes sequential data $X = (x_1, x_2, \ldots, x_n)$ as input and provides a hidden representation $H = (h_1, h_2, \ldots, h_n)$ that captures the information at every time step of the input. Formally,

$$h_t = f(W\,[h_{t-1};\, x_t])$$

where $x_t$ is the input at time $t$, $h_{t-1}$ is the hidden representation of the previous time step, $W$ is the parameter of the RNN learned during training and $f$ is a non-linear operation such as sigmoid, tanh or ReLU.

A drawback of regular RNNs is that the input sequence is fed in one direction, normally from past to future. In order to capture both past and future context, we use a Bidirectional Long Short Term Memory (BiLSTM) [16] [17] for our model, which processes the input in both the forward and the backward direction. Using a BiLSTM, the model is able to capture the context of a record not only from its preceding records but also from the following records, allowing the model to produce more informed predictions. The input at time $t$ is represented by both its forward context $\overrightarrow{h_t}$ and backward context $\overleftarrow{h_t}$ as $h_t = [\overrightarrow{h_t}; \overleftarrow{h_t}]$. Similarly, the representation of the completed patient record is given by $h = [\overrightarrow{h_n}; \overleftarrow{h_1}]$.
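The bidirectional idea can be sketched without any deep learning library. The example below deliberately swaps the LSTM cell for a much simpler tanh recurrence with a scalar hidden state, so it only illustrates how forward and backward passes are combined, not the BiLSTM used in this work. All names and weights are hypothetical.

```python
import math


def rnn_pass(xs, w_in, w_rec):
    """Run a scalar-state tanh RNN over xs; return the hidden state at every step."""
    h, states = 0.0, []
    for x in xs:
        h = math.tanh(sum(w * v for w, v in zip(w_in, x)) + w_rec * h)
        states.append(h)
    return states


def bidirectional_encode(xs, fwd_params, bwd_params):
    """Combine a past-to-future pass with a future-to-past pass."""
    forward = rnn_pass(xs, *fwd_params)
    # Process the reversed sequence, then re-align the states with the original order.
    backward = rnn_pass(xs[::-1], *bwd_params)[::-1]
    per_step = [[f, b] for f, b in zip(forward, backward)]  # one vector per time step
    whole = [forward[-1], backward[0]]                      # summary of the full record
    return per_step, whole


xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
per_step, whole = bidirectional_encode(xs, ([0.5, -0.5], 0.1), ([0.3, 0.2], -0.2))
```

The per-step vectors are what a many-to-many output layer would consume, while the whole-record summary suits a many-to-one output layer.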

Output: The choice of output layer is based on whether the benchmarking task is a regression or a classification task.

Remaining LoS prediction is a regression task, in which we predict the remaining LoS record-wise. That is, each patient record is fed to the model to predict the remaining LoS for that specific time step. This task is realized using a many-to-many architecture, where we assign a label to each patient record. The score for this task is obtained using:

$$y_t = \mathrm{ReLU}(W h_t) \tag{1}$$

where $y_t$ is the predicted remaining LoS, $h_t$ is the hidden representation at time $t$, and ReLU is the non-linear activation function, used because the prediction of remaining LoS cannot be negative.

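In-hospital mortality and decompensation are binary classification tasks. For in-hospital mortality, a many-to-one architecture is applied and the classifier is as follows:

$$y = \sigma(W h) \tag{2}$$

where $h$ is the representation of the completed patient record and $\sigma$ is the sigmoid function.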

For the decompensation task, a many-to-many architecture is applied. The prediction at each time step is treated as a binary classification and the classifier is defined as:

$$y_t = \sigma(W h_t) \tag{3}$$

Phenotyping is defined as a multi-label task with 25 binary classifiers, one for each phenotype, and the score for the task is obtained using:

$$y_t^{(n)} = \sigma(W_n h_t) \tag{4}$$

where $t$ is the time step, $n$ is the phenotype being predicted and $W_n$ is the model parameter.
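The four output layers described above can be sketched in plain Python. The sketch assumes a single linear map without a bias term followed by ReLU or sigmoid, and it assumes that the phenotyping head is applied per time step; the function names and weights are hypothetical and only meant to show how the heads differ in activation and in whether they consume per-step or whole-record representations.

```python
import math


def dot(w, h):
    return sum(wi * hi for wi, hi in zip(w, h))


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    return max(0.0, z)


def los_head(per_step, w):
    """Many-to-many regression: a non-negative remaining LoS per time step."""
    return [relu(dot(w, h_t)) for h_t in per_step]


def mortality_head(whole, w):
    """Many-to-one classification on the whole-record representation."""
    return sigmoid(dot(w, whole))


def decompensation_head(per_step, w):
    """Many-to-many classification: one probability per time step."""
    return [sigmoid(dot(w, h_t)) for h_t in per_step]


def phenotype_head(per_step, w_by_phenotype):
    """Multi-label classification: one sigmoid per phenotype and time step."""
    return [[sigmoid(dot(w_n, h_t)) for w_n in w_by_phenotype] for h_t in per_step]


per_step = [[1.0, -1.0], [0.5, 0.5]]
los = los_head(per_step, [-2.0, 1.0])
risk = mortality_head([0.0, 0.0], [1.0, 1.0])
phenotypes = phenotype_head(per_step, [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
```

Because each phenotype has its own sigmoid, several phenotypes can be predicted as present for the same record, which is what distinguishes the multi-label setting from a softmax over classes.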

Results

In this section, we report benchmarking results of methods and prediction algorithms, focusing on answering the following questions: (a) How does the performance of our model compare to the performance of clinical scoring systems as well as baseline algorithms (logistic/linear regression in our case)? and (b) What is the impact on prediction performance when using different feature sets, such as categorical and numerical variables, solely categorical and solely numerical variables? We evaluate our model through 5-fold cross-validation using the following evaluation metrics: for the regression tasks we report the coefficient of determination R2 and Mean Absolute Error (MAE), while for the classification tasks we report AUROC (Area Under the Receiver Operating Characteristics), AUPRC (Area Under the Precision Recall Curve), Specificity at a fixed Sensitivity (set to 90% to facilitate direct comparison of results), Positive Predictive Value (PPV) and Negative Predictive Value (NPV).
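Reporting specificity, PPV and NPV at a fixed sensitivity requires choosing a decision threshold first. The sketch below shows one way to do this: it picks the highest threshold at which at least 90% of the positive cases are flagged and then counts the confusion-matrix entries. The function name and the tie-handling are assumptions; the sketch also assumes that at least one positive case is present.

```python
import math


def metrics_at_sensitivity(scores, labels, target_sensitivity=0.90):
    """Fix sensitivity at the target and report the remaining threshold metrics."""
    positives = sorted((s for s, y in zip(scores, labels) if y == 1), reverse=True)
    # Smallest number of positives that must be flagged to reach the target.
    needed = max(1, math.ceil(target_sensitivity * len(positives) - 1e-9))
    threshold = positives[needed - 1]

    tp = fp = tn = fn = 0
    for s, y in zip(scores, labels):
        flagged = s >= threshold
        if flagged and y == 1:
            tp += 1
        elif flagged:
            fp += 1
        elif y == 1:
            fn += 1
        else:
            tn += 1

    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp) if tn + fp else float("nan"),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn) if tn + fn else float("nan"),
    }


scores = [0.95, 0.9, 0.85, 0.8, 0.75, 0.7, 0.65, 0.6, 0.55, 0.2,
          0.9, 0.6, 0.5, 0.4, 0.3, 0.3, 0.2, 0.1, 0.1, 0.05]
labels = [1] * 10 + [0] * 10
result = metrics_at_sensitivity(scores, labels)
```

Tied scores among positive cases can push the achieved sensitivity slightly above the target in this sketch.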

Mortality prediction

Results from this task indicate that the proposed approach of learning embeddings for categorical variables is more effective than the OHE representation. This holds true for both baseline models (LR and ANN) as well as the BiLSTM model, and is reflected in the prediction performance of each model. Furthermore, the BiLSTM-based model outperforms all the other approaches in predicting mortality in both the 24-hour window and the 48-hour window, as shown in Table 5. It is interesting to note that using only categorical variables (reducing the number of variables from 20 to only 7) with embedding provides better performance than using numerical variables only for the first 24 hours (AUROC 78.57 vs. 76.63), while for the first 48 hours the two are nearly identical (80.59 vs. 80.77). These results suggest that EE of categorical features in vector space is effective in the prediction of mortality.

Table 5. In-hospital mortality prediction during first 24 and 48 hours in ICU.

(Num. and Cat. indicate presence of numerical and categorical variables respectively. Repn. indicates representation of categorical variables, either One Hot Encoding (OHE) or embedding (EMB)).

| Data | Model | Num. | Cat. | Repn. | AUROC | AUPRC | Spec. | Sens. | PPV | NPV |
|---|---|---|---|---|---|---|---|---|---|---|
| First 24 hours | APACHE | ✓ | ✓ | Not spec. | 77.30 | 41.23 | 38.74 | 86 | 57.09 | 93.07 |
| | LR | ✓ | ✓ | EMB | 79.88±0.67 | 40.50 | 46.01 | 90 | 64.53 | 90.06 |
| | ANN | ✓ | ✓ | EMB | 82.60±0.58 | 46.17 | 51.99 | 90 | 65.91 | 90.78 |
| | BiLSTM | ✓ | ✓ | EMB | 83.80±0.42 | 48.72 | 54.29 | 90 | 69.33 | 90.82 |
| | BiLSTM | ✓ | ✓ | OHE | 82.78±0.32 | 46.34 | 51.96 | 90 | 62.95 | 91.09 |
| | BiLSTM | ✕ | ✓ | EMB | 78.57±0.70 | 40.21 | 43.83 | 90 | 58.52 | 90.83 |
| | BiLSTM | ✓ | ✕ | ✕ | 76.63±0.75 | 38.56 | 36.30 | 90 | 66.00 | 90.82 |
| First 48 hours | LR | ✓ | ✓ | EMB | 82.34±0.65 | 45.39 | 51.07 | 90 | 69.06 | 90.33 |
| | ANN | ✓ | ✓ | EMB | 85.36±0.66 | 52.59 | 57.19 | 90 | 69.78 | 91.53 |
| | BiLSTM | ✓ | ✓ | EMB | 86.63±0.66 | 55.20 | 60.72 | 90 | 68.98 | 92.07 |
| | BiLSTM | ✓ | ✓ | OHE | 84.96±0.63 | 51.63 | 56.12 | 90 | 64.82 | 91.83 |
| | BiLSTM | ✕ | ✓ | EMB | 80.59±0.82 | 45.59 | 46.39 | 90 | 63.86 | 91.27 |
| | BiLSTM | ✓ | ✕ | ✕ | 80.77±1.29 | 45.48 | 44.02 | 90 | 67.94 | 90.63 |

Remaining length of stay in unit prediction

Predicting remaining LoS in the ICU requires capturing temporal dependencies between time steps. For this reason, the baseline models perform poorly, due to the lack of explicit modelling of temporal dependencies. The proposed BiLSTM model, on the other hand, is able to capture this dependency effectively and outperforms the baseline models, as shown in Table 6. We can also see that the numerical variables are the most effective in the prediction of remaining LoS. However, using categorical variables encoded with OHE reduces the model performance, while EE improves the R2 measure.

Table 6. Remaining length of stay prediction in the ICU, evaluated using R2 and Mean Absolute Error (MAE).

| Data | Model | Num. | Cat. | Repn. | R2 | MAE (days) |
|---|---|---|---|---|---|---|
| In ICU unit | LR | ✓ | ✓ | EMB | 0.024±0.001 | 1.292±0.008 |
| | ANN | ✓ | ✓ | EMB | 0.048±0.003 | 1.267±0.014 |
| | BiLSTM | ✓ | ✓ | EMB | 0.643±0.042 | 0.532±0.033 |
| | BiLSTM | ✓ | ✓ | OHE | 0.623±0.025 | 0.511±0.021 |
| | BiLSTM | ✕ | ✓ | EMB | 0.610±0.029 | 0.532±0.033 |
| | BiLSTM | ✓ | ✕ | ✕ | 0.610±0.042 | 0.479±0.016 |
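For reference, the two regression metrics reported in Table 6 follow their standard definitions, which can be written in a few lines. The function names and the toy values below are illustrative only.

```python
def mae(y_true, y_pred):
    """Mean Absolute Error: average magnitude of the prediction errors."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - residual sum of squares / total sum of squares."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


y_true = [1.0, 2.0, 3.0, 4.0]   # remaining LoS in days (toy values)
y_pred = [1.5, 2.0, 2.5, 4.0]
error = mae(y_true, y_pred)
fit = r2(y_true, y_pred)
```

An R2 close to zero, as obtained by the LR and ANN baselines, means the model explains little more of the variance than always predicting the mean remaining LoS.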

Phenotyping

For the phenotyping task, we focus on comparing the performance (AUROC) of the proposed model on different subsets of features, namely numerical versus categorical variables. Using only the categorical features, modelled as entity embeddings, yields a substantially higher performance (77.75) than using only the numerical features (67.05), as outlined in Table 7. Categorical features are clearly more effective in representing patients’ phenotypes, since integrating both subsets improves the result only modestly (79.89 from 77.75). In this task there is a wide difference between the performance of the model on individual diseases, varying from 61.55 (diabetes mellitus without complications) to 94.24 (acute cerebrovascular disease). As a general trend, prediction performance on acute diseases is higher (82.49) than on chronic diseases (73.55). This may be due to the slow-progressing nature of chronic diseases, where the recorded ICU data is relatively short and thus unable to fully capture events related to chronic diseases.

Table 7. Phenotyping task on eICU (reported scores are AUROC).

| Phenotype | Prevalence | Type | Num & cat | Num. | Cat. |
|---|---|---|---|---|---|
| Respiratory failure; insufficiency; arrest | 0.241 | acute | 83.31±0.32 | 73.09±0.41 | 81.24±0.19 |
| Fluid and electrolyte disorders | 0.156 | acute | 72.76±0.77 | 60.35±0.50 | 72.18±1.20 |
| Septicemia | 0.145 | acute | 91.54±0.15 | 71.43±0.50 | 90.86±0.50 |
| Acute and unspecified renal failure | 0.142 | acute | 75.93±0.68 | 65.41±0.66 | 74.14±1.32 |
| Pneumonia | 0.120 | acute | 89.34±0.51 | 70.28±0.77 | 88.47±0.24 |
| Acute cerebrovascular disease | 0.108 | acute | 94.24±0.58 | 74.37±0.75 | 93.63±0.49 |
| Acute myocardial infarction | 0.090 | acute | 91.35±0.67 | 70.56±0.74 | 91.18±0.87 |
| Gastrointestinal hemorrhage | 0.079 | acute | 91.38±0.74 | 61.33±1.33 | 90.66±0.83 |
| Shock | 0.068 | acute | 85.75±0.57 | 77.12±0.41 | 82.74±1.35 |
| Pleurisy; pneumothorax; pulmonary collapse | 0.039 | acute | 70.40±2.23 | 61.15±1.56 | 70.03±0.90 |
| Other lower respiratory disease | 0.030 | acute | 80.42±0.99 | 60.06±1.24 | 79.60±1.05 |
| Complications of surgical | 0.011 | acute | 68.45±3.91 | 54.01±4.79 | 65.43±3.17 |
| Other upper respiratory disease | 0.007 | acute | 77.46±5.46 | 53.56±3.17 | 74.18±4.52 |
| Macro-average (acute diseases) | - | - | 82.49±1.35 | 65.60±1.30 | 81.10±1.28 |
| Hypertension with complications | 0.019 | chronic | 85.70±2.59 | 81.27±1.29 | 81.61±2.97 |
| Essential hypertension | 0.203 | chronic | 72.16±0.74 | 66.58±0.31 | 68.31±0.66 |
| Chronic kidney disease | 0.104 | chronic | 65.96±1.66 | 62.06±1.39 | 65.05±0.90 |
| Chronic obstructive pulmonary disease | 0.093 | chronic | 75.62±1.44 | 63.73±0.60 | 74.48±1.67 |
| Disorders of lipid metabolism | 0.054 | chronic | 72.95±1.05 | 62.85±1.03 | 71.56±1.36 |
| Coronary atherosclerosis and related | 0.041 | chronic | 80.89±0.45 | 64.03±0.98 | 79.90±1.34 |
| Diabetes mellitus without complication | 0.006 | chronic | 61.55±4.52 | 58.89±5.77 | 59.12±3.56 |
| Macro-average (chronic diseases) | - | - | 73.55±1.78 | 65.63±1.63 | 70.72±1.78 |
| Cardiac dysrhythmias | 0.165 | mixed | 75.68±0.86 | 66.24±0.81 | 71.92±1.49 |
| Congestive heart failure; non hypertensive | 0.106 | mixed | 78.87±1.05 | 66.34±0.76 | 76.56±1.56 |
| Diabetes mellitus with complications | 0.047 | mixed | 93.59±0.65 | 90.38±1.41 | 89.59±0.99 |
| Other liver diseases | 0.039 | mixed | 78.33±1.71 | 68.20±1.02 | 75.51±2.32 |
| Conduction disorders | 0.013 | mixed | 83.58±1.68 | 72.90±2.43 | 80.81±1.66 |
| Macro-average (mixed diseases) | - | - | 82.01±1.19 | 72.81±1.29 | 78.88±1.60 |
| Macro-average (all diseases) | - | - | 79.89±1.44 | 67.05±1.39 | 77.75±1.48 |
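The macro-averages in Table 7 are consistent with unweighted means of the per-phenotype AUROC values, that is, each phenotype counts equally regardless of its prevalence. The short check below reproduces the "Num & cat" macro-averages from the per-phenotype rows of the table; the variable names are illustrative.

```python
from statistics import mean

# Per-phenotype AUROC from the "Num & cat" column of Table 7, grouped by type.
auroc = {
    "acute": [83.31, 72.76, 91.54, 75.93, 89.34, 94.24, 91.35,
              91.38, 85.75, 70.40, 80.42, 68.45, 77.46],
    "chronic": [85.70, 72.16, 65.96, 75.62, 72.95, 80.89, 61.55],
    "mixed": [75.68, 78.87, 93.59, 78.33, 83.58],
}

# Unweighted mean per group, then over all 25 phenotypes.
macro = {group: round(mean(values), 2) for group, values in auroc.items()}
macro["all"] = round(mean(v for values in auroc.values() for v in values), 2)
print(macro)
```

The printed values match the macro-average rows of the table (82.49, 73.55, 82.01 and 79.89).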

Decompensation prediction

As mentioned in the Physiologic decompensation section, decompensation is related to mortality prediction, with the difference that we predict whether the patient dies within the next 24 hours, given the current time step. As such, time-dependence is critical. Of the 7 categorical variables, 3 are time-independent and only 4 are time-dependent, which makes it difficult for the model to predict decompensation from categorical variables alone. For this reason, the model with only numerical variables outperforms the model with only categorical variables (AUROC 95.15 vs. 86.82), as shown in Table 8.

Table 8. Decompensation risk prediction in eICU.

| Data | Model | Num. | Cat. | Repn. | AUROC | AUPRC | Spec. | Sens. | PPV | NPV |
|---|---|---|---|---|---|---|---|---|---|---|
| In ICU unit | LR | ✓ | ✓ | EMB | 67.63±5.89 | 16.53 | 18.92 | 90.00 | NaN | 95.10 |
| | ANN | ✓ | ✓ | EMB | 80.59±0.60 | 22.86 | 47.65 | 90.00 | 45.73 | 95.32 |
| | BiLSTM | ✓ | ✓ | EMB | 95.35±0.60 | 68.69 | 88.45 | 90.00 | 78.51 | 97.27 |
| | BiLSTM | ✕ | ✓ | EMB | 86.82±0.70 | 36.34 | 61.08 | 90.00 | 57.31 | 96.13 |
| | BiLSTM | ✓ | ✕ | ✕ | 95.15±0.16 | 68.28 | 85.60 | 90.00 | 79.36 | 97.46 |

Discussion

In this study we have described four standardised benchmarks in machine learning for critical care research. Our definition of the benchmark tasks is consistent with previously published benchmarks, to facilitate comparison with already published results. However, in this work we focus on the more recent eICU database, where clinical data has been collected from 335 ICUs in 208 hospitals across the United States. Our dataset contains a larger number of patients and a wider range of patient groups in comparison to benchmarks published using a single-center dataset, which should result in lower systematic bias and increased generalisability of the study.

We provided a set of baselines for our benchmarks and showed that the BiLSTM model significantly outperforms the clinical gold standard as well as the baseline models. Of note is the impact of entity embedding of categorical variables in further improving the performance of our LSTM-based model. Clearly, interpretability remains a significant challenge for models based on deep neural networks, including our BiLSTM model. However, there has been significant progress in “opening the black box” [18], as demonstrated by a recently updated review of interpretability methods [19], bringing these models one step closer to clinical practice. As our work is meant to track the progress of machine learning in critical care, interpretability is certainly an important aspect of this progress. We believe that our work will provide a solid basis to further improve critical care decision making, and we provide the source code for other researchers who wish to replicate our experiments and build upon our results.

Related work

In this section, we provide a brief review of the most relevant studies related to each of the tasks: mortality, length of stay, phenotyping, and physiologic decompensation. We also briefly review the other benchmarking studies in critical care that are related to our work.

Mortality prediction

Many clinical scoring systems have been developed for mortality prediction, including Acute Physiology and Chronic Health Evaluation (APACHE III [20], APACHE IV [21]) and Simplified Acute Physiology Score [22] (SAPS II, SAPS III). Most of these scoring systems use logistic regression to identify predictive variables. Providing an accurate prediction of mortality risk for patients admitted to the ICU using the first 24/48 hours of ICU data could serve as an input to clinical decision making and reduce healthcare costs. In this regard, recent advances in deep learning have been shown to outperform conventional machine learning methods as well as clinical prediction techniques such as APACHE and SAPS [5] [23] [24]. Mortality prediction has been a popular application for deep learning researchers in recent years, though model architecture and problem definition vary widely. Convolutional neural networks and gradient boosted tree algorithms have been used by Darabi et al. [25] to predict long-term mortality risk (30 days) on a subset of the MIMIC-III dataset. Similarly, Celi et al. [26] developed mortality prediction models based on a subset of the MIMIC database using logistic regression, Bayesian networks and artificial neural networks.

Length of stay

Identifying patients with unexpectedly extended ICU stays would help decision-making systems to improve the quality of care and ICU resource allocation. Forecasting the length of stay (LoS) in the ICU is therefore important for providing high-quality care to patients and for avoiding extra costs for care providers. In this regard, Sotoodeh et al. [27] applied hidden Markov models to predict LoS using the first 48 hours of physiological measurements. Ma et al. [28] defined LoS as a classification problem in which the objective was to create a personalized model for patients to forecast LoS. Previous studies [23] [5] have shown that deep learning models obtain good results on forecasting length of stay in the ICU. Tu et al. [29] applied neural network based methods on a private Canadian dataset of cardiac surgery patients. The developed model was able to identify patients with low, intermediate, and high risk of prolonged stay in the ICU.

Phenotyping

Phenotyping has been a popular task in recent years [30] [31], although problem definition varies widely, from focusing on ICD based diagnosis [24] up to including clinical procedures and medications [9] [10]. Several works on phenotyping from clinical time series have focused on variations of tensor factorization and related models [30] [31] [32], and the most recently published studies on phenotyping are focused on deep learning methods. In this regard, Razavian et al [33] and Lipton et al [24] applied deep learning methods to predict diagnoses. While the first trained RNN LSTM and CNN for prediction of 133 diseases based on 18 laboratory tests on a private dataset including 298k patients, the latter applied an RNN LSTM on a single-center, private pediatric intensive care unit (PICU) dataset in order to classify 128 diagnoses given 13 clinical measurements.

Physiologic decompensation

Early detection of physiologic decompensation could be used to avoid or delay the occurrence of decompensation. Recently, researchers have started to apply various machine learning methods to predict decompensation incidents. A recent study by Ren et al. [34] applied gradient boosting models (GBM) to predict the need for intubation 3 hours ahead of time, using a cohort of 12,470 patients to predict unexpected respiratory decompensation. In contrast, Xu et al. [35] proposed a deep learning model to predict the decompensation event. The proposed attention-based model was applied to the MIMIC-III Waveform Database and outperformed several machine learning and deep learning models.

Benchmark

Harutyunyan et al. [5] developed a deep learning model based on RNN LSTM, called multi-task RNN, to predict a number of clinical tasks: in-hospital mortality, physiologic decompensation, phenotyping, and length of stay in the ICU. The proposed model was applied to the MIMIC-III dataset. Similarly, Purushotham et al. [23] provided a single-center benchmark of several machine learning and deep learning models trained on MIMIC-III for various tasks, showing that deep learning models consistently outperformed conventional machine learning models and clinical scoring systems. A common theme across the reviewed work is that the current literature focuses on single-center databases; we did not find any work in this area that addressed multi-centre datasets and their associated challenges.

Acknowledgments

We gratefully acknowledge the clinical input provided by Monica Moz, MD, from Humanitas Research Hospital, Italy, in cohort selection as well as in variable ranking and selection. The work in this paper was partially supported by the European Union’s Horizon 2020 research and innovation programme under grant agreement No 769765.

Data Availability

The dataset used in this study, the eICU Collaborative Research Database, is a freely available multi-center database for critical care research. The source code for our experiments is publicly available at https://github.com/mostafaalishahi/eICU_Benchmark, so that anyone with access to the public eICU database can replicate our experiments and build upon our work. The data are described in detail in a representative publication [4], and the procedure to access the data is described at https://eicu-crd.mit.edu/gettingstarted/access/. The certificate of access for this data bears Record ID 25280734. Representative publication: Pollard, T., Johnson, A., Raffa, J. et al. The eICU Collaborative Research Database, a freely available multi-center database for critical care research. Nature Scientific Data 5, 180178 (2018). https://doi.org/10.1038/sdata.2018.178. Available from: https://www.nature.com/articles/sdata2018178.

Funding Statement

VO was supported by the European Commission’s Horizon 2020 project WellCo, under grant agreement No 769765 (https://cordis.europa.eu/project/id/769765). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Russakovsky O, Deng J, Su H, Krause J, Satheesh S, Ma S, et al. ImageNet Large Scale Visual Recognition Challenge. International Journal of Computer Vision (IJCV). 2015;115(3):211–252. doi:10.1007/s11263-015-0816-y

- 2. Stubbs A, Filannino M, Soysal E, Henry S, Uzuner Ö. Cohort selection for clinical trials: n2c2 2018 shared task track 1. Journal of the American Medical Informatics Association. 2019;26(11):1163–1171. doi:10.1093/jamia/ocz163

- 3. Johnson AE, Pollard TJ, Shen L, Li-wei HL, Feng M, Ghassemi M, et al. MIMIC-III, a freely accessible critical care database. Scientific data. 2016;3:160035. doi:10.1038/sdata.2016.35

- 4. Pollard TJ, Johnson AE, Raffa JD, Celi LA, Mark RG, Badawi O. The eICU Collaborative Research Database, a freely available multi-center database for critical care research. Scientific data. 2018;5:180178. doi:10.1038/sdata.2018.178

- 5. Harutyunyan H, Khachatrian H, Kale DC, Ver Steeg G, Galstyan A. Multitask learning and benchmarking with clinical time series data. Scientific data. 2019;6(1):96. doi:10.1038/s41597-019-0103-9

- 6. Bellomo R, Warrillow SJ, Reade MC. Why we should be wary of single-center trials. Critical care medicine. 2009;37(12):3114–3119. doi:10.1097/CCM.0b013e3181bc7bd5

- 7. Youssef N, Reinhart K, Sakr Y. The pros and cons of multicentre studies. Neth J Crit Care. 2008;12(3).

- 8. Kılıç M, Yüzkat N, Soyalp C, Gülhaş N. Cost Analysis on Intensive Care Unit Costs Based on the Length of Stay. Turkish journal of anaesthesiology and reanimation. 2019;47(2):142. doi:10.5152/TJAR.2019.80445

- 9. Shahin TB, Balkan B, Mosier J, Subbian V. The Connected Intensive Care Unit Patient: Exploratory Analyses and Cohort Discovery From a Critical Care Telemedicine Database. JMIR medical informatics. 2019;7(1):e13006. doi:10.2196/13006

- 10. Mosier J, Subbian V. Rule-Based Cohort Definitions for Acute Respiratory Failure: Electronic Phenotyping Algorithm. JMIR Medical Informatics. 2020;8(4):e18402. doi:10.2196/18402

- 11. Denaxas S, Gonzalez-Izquierdo A, Fitzpatrick N, Direk K, Hemingway H. Phenotyping UK Electronic Health Records from 15 Million Individuals for Precision Medicine: The CALIBER Resource. Studies in health technology and informatics. 2019;262:220–223.

- 12. Denaxas S, Parkinson H, Fitzpatrick N, Sudlow C, Hemingway H. Analyzing the heterogeneity of rule-based EHR phenotyping algorithms in CALIBER and the UK Biobank. BioRxiv. 2019; p. 685156.

- 13. McGinley A, Pearse RM. A national early warning score for acutely ill patients; 2012.

- 14. Guo C, Berkhahn F. Entity embeddings of categorical variables. arXiv preprint arXiv:1604.06737. 2016.

- 15. Rumelhart DE, Hinton GE, Williams RJ, et al. Learning representations by back-propagating errors. Cognitive modeling. 1988;5(3):1.

- 16. Schuster M, Paliwal KK. Bidirectional recurrent neural networks. IEEE Transactions on Signal Processing. 1997;45(11):2673–2681. doi:10.1109/78.650093

- 17. Hochreiter S, Schmidhuber J. Long short-term memory. Neural computation. 1997;9(8):1735–1780. doi:10.1162/neco.1997.9.8.1735

- 18. Zhang Z, Beck MW, Winkler DA, Huang B, Sibanda W, Goyal H, et al. Opening the black box of neural networks: methods for interpreting neural network models in clinical applications. Annals of translational medicine. 2018;6(11). doi:10.21037/atm.2018.05.32

- 19. Molnar C. Interpretable Machine Learning; 2019.

- 20. Knaus WA, Wagner DP, Draper EA, Zimmerman JE, Bergner M, Bastos PG, et al. The APACHE III prognostic system: risk prediction of hospital mortality for critically ill hospitalized adults. Chest. 1991;100(6):1619–1636. doi:10.1378/chest.100.6.1619

- 21. Zimmerman JE, Kramer AA, McNair DS, Malila FM. Acute Physiology and Chronic Health Evaluation (APACHE) IV: hospital mortality assessment for today’s critically ill patients. Critical care medicine. 2006;34(5):1297–1310. doi:10.1097/01.CCM.0000215112.84523.F0

- 22. Le Gall JR, Lemeshow S, Saulnier F. A new simplified acute physiology score (SAPS II) based on a European/North American multicenter study. Jama. 1993;270(24):2957–2963.

- 23. Purushotham S, Meng C, Che Z, Liu Y. Benchmarking deep learning models on large healthcare datasets. Journal of Biomedical Informatics. 2018;83(April):112–134.

- 24. Lipton ZC, Kale DC, Elkan C, Wetzel R. Learning to diagnose with LSTM recurrent neural networks. arXiv preprint arXiv:1511.03677. 2015.

- 25. Darabi HR, Tsinis D, Zecchini K, Whitcomb WF, Liss A. Forecasting Mortality Risk for Patients Admitted to Intensive Care Units Using Machine Learning. Procedia Computer Science. 2018;140:306–313. doi:10.1016/j.procs.2018.10.313

- 26. Celi LA, Galvin S, Davidzon G, Lee J, Scott D, Mark R. A database-driven decision support system: customized mortality prediction. Journal of personalized medicine. 2012;2(4):138–148. doi:10.3390/jpm2040138

- 27. Sotoodeh M, Ho JC. Improving length of stay prediction using a hidden Markov model. AMIA Summits on Translational Science Proceedings. 2019;2019:425.

- 28. Ma X, Si Y, Wang Z, Wang Y. Length of stay prediction for ICU patients using individualized single classification algorithm. Computer methods and programs in biomedicine. 2020;186:105224. doi:10.1016/j.cmpb.2019.105224

- 29. Tu JV, Guerriere MRJ. Use of a Neural Network as a Predictive Instrument for Length of Stay in the Intensive Care Unit Following Cardiac Surgery. Computers and Biomedical Research. 1993;26(3):220–229. doi:10.1006/cbmr.1993.1015

- 30. Ho JC, Ghosh J, Sun J. Marble: high-throughput phenotyping from electronic health records via sparse nonnegative tensor factorization. In: Proceedings of the 20th ACM SIGKDD international conference on Knowledge discovery and data mining; 2014. p. 115–124.

- 31. Zhou J, Wang F, Hu J, Ye J. From micro to macro: data driven phenotyping by densification of longitudinal electronic medical records. In: Proceedings of the 20th ACM SIGKDD international conference on Knowledge discovery and data mining; 2014. p. 135–144.

- 32. Kim Y, El-Kareh R, Sun J, Yu H, Jiang X. Discriminative and distinct phenotyping by constrained tensor factorization. Scientific reports. 2017;7(1):1–12.

- 33. Razavian N, Marcus J, Sontag D. Multi-task prediction of disease onsets from longitudinal laboratory tests. In: Machine Learning for Healthcare Conference; 2016. p. 73–100.

- 34. Ren O, Johnson AE, Lehman EP, Komorowski M, Aboab J, Tang F, et al. Predicting and understanding unexpected respiratory decompensation in critical care using sparse and heterogeneous clinical data. In: 2018 IEEE International Conference on Healthcare Informatics (ICHI). IEEE; 2018. p. 144–151.

- 35. Xu Y, Biswal S, Deshpande SR, Maher KO, Sun J. Raim: Recurrent attentive and intensive model of multimodal patient monitoring data. In: Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. ACM; 2018. p. 2565–2573.
