Abstract

Attacking ECDSA implementations that use the wNAF representation for the scalar multiplication first requires some side-channel analysis to collect information, then lattice-based methods to recover the secret key. In this paper, we reinvestigate the construction of the lattice used in one of these methods, the Extended Hidden Number Problem (EHNP). We find the secret key with only 3 signatures, thus reaching a known theoretical bound, whereas the best previous methods required at least 4 signatures in practice. Given a specific leakage model, our attack is more efficient than previous attacks and, in most cases, has a better probability of success. To obtain such results, we perform a detailed analysis of the parameters used in the attack and introduce a preprocessing method which, for some parameters, reduces the total time to recover the secret key by a factor of up to 7. We also perform an error-resilience analysis, which had never been done before in the setup of EHNP. Our construction finds the secret key with a small amount of erroneous traces: up to  of false digits, and  with a specific type of error.

Keywords: Public key cryptography, ECDSA, Side channel attack, Windowed non-adjacent form, Lattice techniques

Introduction

The Elliptic Curve Digital Signature Algorithm (ECDSA) [13], first proposed in 1992 by Scott Vanstone [26], is a widely deployed standard public-key signature protocol. ECDSA is used in the latest version of TLS (1.3), in the email standard OpenPGP and in smart cards. It is also implemented in the OpenSSL library and can be found in cryptocurrencies such as Bitcoin, Ethereum and Ripple. It offers high security, based on the hardness of the elliptic curve discrete logarithm problem, and fast signing thanks to its small key size. Hence, it has been recognized as a standard signature algorithm by institutes such as ISO since 1998, ANSI since 1999, and IEEE and NIST since 2000.

The ECDSA signing algorithm requires a scalar multiplication of a point P on an elliptic curve by an ephemeral key k. Since this operation is often the most time-consuming part of the protocol, an efficient algorithm is needed. The Non-Adjacent Form (NAF) and its windowed variant (wNAF) were introduced as alternatives to the binary representation of the nonce k to reduce the execution time of the scalar multiplication. Indeed, the NAF representation does not allow two non-zero digits to be consecutive, which reduces the Hamming weight of the representation of the scalar. This speeds up execution, since the running time depends on the number of non-zero digits. The wNAF representation is used in implementations such as Bitcoin, as well as in the libraries Cryptlib, BouncyCastle and Apple's Common-Crypto. Moreover, until very recently (May 2019), wNAF was present in all three branches of OpenSSL.

However, implementing the scalar multiplication using the wNAF representation without any additional countermeasure makes the protocol vulnerable to side-channel attacks. Side-channel attacks were first introduced about two decades ago by Kocher et al. [14], and have since been used to break many implementations, in particular of cryptographic primitives such as AES, RSA and ECDSA. They recover secret information through observable leakage. In our case, this leakage corresponds to differences in the execution time of a part of the signing algorithm, which can be observed by monitoring the cache.

For ECDSA, cache side-channel attacks such as Flush&Reload [28, 29] have been used to recover information about either the sequence of operations used to execute the scalar multiplication or, for example in [8], the modular inversion. For the scalar multiplication, these operations are either a point doubling or a point addition, depending on the digits of k. This information is usually referred to as a double-and-add chain or the trace of k. A trace is created each time ECDSA produces a signature, so we use the terms signatures and traces interchangeably. The question is then how many traces need to be collected to successfully recover the secret key. From an attacker's perspective, the fewer traces needed, the more efficient the attack. This number depends on how much information can be extracted from a single trace and on how the information from multiple traces is combined to recover the key. We work on the latter to minimize the number of traces needed.

The nature of the information obtained from the side-channel attack determines which method should be used to recover the secret key. Attacks on ECDSA are inspired by attacks on a similar cryptosystem, DSA. In 2001, Howgrave-Graham and Smart [12] showed how knowing partial information about the nonce k in DSA can lead to a full recovery of the secret key. Later, Nguyen and Shparlinski [19] gave a polynomial-time algorithm that recovers the secret key in ECDSA as soon as some consecutive bits of the ephemeral keys are known. They showed that, using the information leaked by the side-channel attack, one can recover the secret key by constructing an instance of the Hidden Number Problem (HNP) [4]. The basic structure of the attack is to construct a lattice which encodes the knowledge of consecutive bits of the ephemeral keys, and to recover the secret key by solving CVP or SVP. This type of attack has been carried out in [3, 8, 26, 28]. However, these results assumed perfect traces, whereas obtaining traces without any misreadings is very rare. In 2018, Dall et al. [6] included an error-resilience analysis in their attack: they showed that key recovery with HNP is still possible in the presence of erroneous traces.

In 2016, Fan, Wang and Cheng [7] used another lattice-based method to attack ECDSA: the Extended Hidden Number Problem (EHNP) [11]. EHNP mostly differs from HNP in the nature of the information given as input. The information required to construct an instance of EHNP is not a sequence of consecutive bits, but the positions of the non-zero coefficients in a representation of some integers. This model, which we also consider in this article, is relevant for describing information coming from, for example, Flush&Reload or Prime&Probe attacks, the latter giving a more generic scenario with no shared data between the attacker and the victim. In [7], the authors extract 105.8 bits of information per signature on average, and thus in theory require only 3 signatures to recover a 256-bit secret key. In practice, they recovered the secret key using 4 error-free traces.
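The theoretical count of 3 signatures is a simple ceiling computation: if each trace leaks about 105.8 bits on average, u traces carry about 105.8·u bits, which must cover the 256 unknown bits of the key. The sketch below (the helper name `min_signatures` is ours) only reproduces this counting argument. It says nothing about whether lattice reduction actually succeeds, which is why 4 traces were still needed in practice in [7].

```python
import math


def min_signatures(key_bits: int, bits_per_signature: float) -> int:
    """Smallest number of traces whose leaked bits cover the key (counting bound only)."""
    return math.ceil(key_bits / bits_per_signature)


# 256-bit key, 105.8 leaked bits per signature on average (figure reported in [7]).
print(min_signatures(256, 105.8))  # 3
```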

To optimize an attack on ECDSA, several aspects should be considered. Minimizing the number of signatures required in the lattice construction minimizes the number of traces that must be collected during the side-channel attack. Moreover, reducing the time of the lattice part of the attack and improving the probability of successful key recovery reduce the overall time of the attack. In this paper, we improve on all three aspects. Furthermore, we propose the first error-resilience analysis for EHNP and show that key recovery is still possible with erroneous traces.

Contributions: In this work, we reinvestigate the attack against ECDSA with the wNAF representation for the scalar multiplication using EHNP. We focus on the lattice part of the attack, i.e., the exploitation of the information gathered by the side-channel attack. We first assume that we obtain a set of error-free traces from a side-channel analysis. We preselect some of these traces to optimize the attack. The main idea of the lattice part is then to use the ECDSA equation and the knowledge gained from the selected traces to construct a set of modular equations in which the secret key is an unknown. These modular equations are then incorporated into a lattice basis similar to the one given in [7], and a short vector in this lattice contains the information needed to reconstruct the secret key. We call one run of this algorithm an “experiment”. An experiment succeeds if the algorithm recovers the secret key.

A New Preprocessing Method. The idea of selecting good traces beforehand has already been explored in [27], where the authors suggest three rules to select traces that improve the lattice part of the attack. Given a (large) number of available traces, the lattice is usually built from a much smaller subset of them, so identifying beforehand the traces that lead to a better attack is a sensible option. The aim of our new preprocessing, which is completely different from that of [27], is to regulate the size of the coefficients in the lattice, which results in a faster lattice reduction. For instance, with 3 signatures, we were able to reduce the total time of the attack by a factor of 7.

Analyzing the Attack. Several parameters intervene while building and reducing the lattice. We analyze the performance of the attack with respect to these parameters and present the best parameters that optimize either the total time or the probability of success.

First, we focus on the attack time. When talking about the overall time of the attack, we mean the average time of a single experiment multiplied by the number of trials needed to recover the secret key. We compare our times with those reported in [7, Table 3] for method C. Methods A and B in [7] use extra information that comes from implementation choices, which we ignore because we want our analysis to remain as general as possible. The comparison is justified since we consider the same leakage model and run our experiments on similar machines. For 4 signatures, our attack is slightly slower than the timings in [7]. However, for more than 4 signatures, our attack is faster. We experiment with up to 8 signatures to further improve our overall time; in this case, our attack runs in 2 min 25 s at best. Timings for 8 signatures are not reported in [7], and the case of 3 signatures had never been reached before our work. In Table 1, we compare our times with the fastest times reported in [7]. For [7], we report their fastest times; for our attack, we report experiments that are faster (though not necessarily our fastest) and, when possible, have a better success probability than theirs.

Table 1.

Comparing attack times with [7], for 5000 experiments.

| Number of signatures | Our attack: time | Our attack: success (%) | [7]: time | [7]: success (%) |
|---|---|---|---|---|
| 3 | 39 h | 0.2 | – | – |
| 4 | 1 h 17 min | 0.5 | 41 min | 1.5 |
| 5 | 8 min 20 s | 6.5 | 18 min | 1 |
| 6 | … min | 25 | 18 min | 22 |
| 7 | … min | 17.5 | 34 min | 24 |
| 8 | … min | 29 | – | – |

The overall time of the attack also depends on the success probability of key recovery. Table 2 shows that our success probability is higher than that of [7], except for 7 signatures: they reach 68% success with their best parameters, whereas we only reach 45% in this case.

Table 2.

Comparing success probability with [7], for 5000 experiments.

| Number of signatures | Our attack: success (%) | Our attack: time | [7]: success (%) | [7]: time |
|---|---|---|---|---|
| 3 | 0.2 | 39 h | – | – |
| 4 | 4 | 25 h 28 min | 1.5 | 41 min |
| 5 | 20 | 2 h 42 min | 4 | 36 min |
| 6 | 40 | 1 h 4 min | 35 | 1 h 43 min |
| 7 | 45 | 2 h 36 min | 68 | 3 h 58 min |
| 8 | 45 | 5 h 2 min | – | – |

For the sake of completeness, we mention that in [21], the authors use HNP to recover the secret key using 13 signatures. Their success probability in this case is around 54% and their overall time is close to 20 s, hence much faster. However, as their leakage model is different, we do not discuss their work further.

Finding the Key with Only Three Signatures. Overall, combining a new preprocessing method, a modified lattice construction and a careful choice of parameters allows us to mount an attack which works in practice with only 3 signatures. However, the probability of success in this case is very low: we recovered the secret key only once with BKZ-35 over 5000 experiments. This result is difficult to quantify as a probability, but finding the key a single time over 5000 experiments is still much better than randomly guessing a 256-bit integer. If we assume the probability is around 0.02% (one success in 5000 experiments), then, as each trial costs 200 s on average, we can expect to find the secret key after about 12 days on a single core. This time can be greatly reduced by parallelizing the process, i.e., running each trial on a separate core. On the other hand, with our preprocessing method and 3 signatures, we obtain a probability of success of 0.2% and a total key-recovery time of 39 h, hence the factor of 7 improvement mentioned above. Despite the low probability of success, this result remains interesting: the authors of [7] reported that, in practice, the key could not be recovered with fewer than 4 signatures, and we improve on their result.
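The 12-day estimate and the factor of 7 follow from treating trials as independent, so that the expected number of trials is the inverse of the success probability. A minimal sketch of this arithmetic, taking the 0.02% probability as the assumption stated above (the function name is ours):

```python
def expected_total_seconds(seconds_per_trial: float, success_probability: float) -> float:
    """Per-trial time multiplied by the expected number of independent trials, 1/p."""
    return seconds_per_trial / success_probability


DAY = 86400.0
# Without preprocessing: assumed one success in 5000 experiments, about 200 s per trial.
no_prep = expected_total_seconds(200.0, 1 / 5000)
print(round(no_prep / DAY, 1))       # 11.6 days, i.e. roughly 12 days
# With preprocessing, Table 1 reports a total of 39 h for 3 signatures.
with_prep = 39 * 3600.0
print(round(no_prep / with_prep, 1))  # 7.1, the factor of about 7
```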

Resilience to Errors. We also investigate the resilience of our attack to errors. Such an analysis had not yet been done in the setup of EHNP. It is important to underline that collecting traces without any errors using any side-channel attack is very hard. Previous works used perfect traces to mount the lattice attack, which required collecting more traces. As pointed out in [7], roughly twice as many signatures are needed if errors are taken into account; in practice, this led [7] to gather 8 signatures on average to be able to find the key with 4 perfect traces. We show experimentally that we are still able to recover the secret key in the presence of faulty traces. In particular, we find the key using only 4 faulty traces, but with a very low probability of success. As the percentage of incorrect digits in the traces grows, the probability of success decreases, and more signatures are required to recover the secret key. For instance, if  of the digits are wrong among all the digits of a given set of traces, it is still possible to recover the key with 6 signatures. This result holds if errors are uniformly distributed over the digits. However, we have a better probability of recovering the key if the errors consist only of faulty readings of 0 digits, i.e., 0 digits read as non-zero. In other words, the attack could tolerate a higher percentage of errors, around  , if we could ensure, from the side-channel attack and some preprocessing, that none of the non-zero digits has been flipped to 0.

Organization: Sect. 2 gives background on ECDSA and the wNAF representation. In Sect. 3, we explain how to transform EHNP into a lattice problem; we make the lattice basis explicit and analyze the length of the short vectors found in the reduced basis. In Sect. 4, we introduce our preprocessing method, which reduces the overall time of our attack. In Sect. 5, we give experimental results. Finally, in Sect. 6, we give an error-resilience analysis.

Preliminaries

Elliptic Curve Digital Signature Algorithm

The ECDSA algorithm is a variant of the Digital Signature Algorithm, DSA [17], which uses elliptic curves instead of finite fields. The parameters used in ECDSA are an elliptic curve E over a finite field, a generator G of prime order q and a hash function H. The private key is an integer $\alpha$ such that $1 \le \alpha \le q-1$, and the public key is $p_k = [\alpha]G$, the scalar multiplication of G by $\alpha$.

To sign a message m using the private key $\alpha$, randomly select an ephemeral key $k$ with $1 \le k \le q-1$ and compute [k]G. Let r be the x-coordinate of [k]G, reduced modulo q. If $r = 0$, select a new nonce k. Then, compute $s = k^{-1}\,(H(m) + \alpha r) \bmod q$ and, again, if $s = 0$, select a new nonce k. Finally, the signature is given by the pair (r, s).

To verify a signature, first check that $1 \le r, s \le q-1$; otherwise the signature is not valid. Then, compute $v = s^{-1} \bmod q$, $u_1 = H(m)\,v \bmod q$ and $u_2 = r\,v \bmod q$. Finally, the signature is valid if the x-coordinate of $[u_1]G + [u_2]p_k$, reduced modulo q, equals r.
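To make the signing and verification steps concrete, here is a toy Python sketch on a small textbook curve that is far too small to be secure: $y^2 = x^3 + 2x + 2$ over $\mathbb{F}_{17}$, with $G = (5, 1)$ of prime order $q = 19$. The curve, the function names and the hashing shortcut (SHA-256 reduced modulo q rather than truncated to the bit length of q) are our assumptions for illustration; the sketch needs Python 3.8+ for modular inverses via `pow`. The last function shows why nonce leakage is fatal: from one signature and its nonce k, the signing equation gives $\alpha = (s k - H(m))\, r^{-1} \bmod q$.

```python
import hashlib

# Toy parameters (far too small for security):
# E: y^2 = x^3 + 2x + 2 over F_17, with G = (5, 1) of prime order q = 19.
P_FIELD, A_COEF = 17, 2
G = (5, 1)
Q = 19
INF = None  # point at infinity


def add(P1, P2):
    """Affine point addition, also handling doubling and the point at infinity."""
    if P1 is INF:
        return P2
    if P2 is INF:
        return P1
    (x1, y1), (x2, y2) = P1, P2
    if x1 == x2 and (y1 + y2) % P_FIELD == 0:
        return INF  # P2 = -P1
    if P1 == P2:
        lam = (3 * x1 * x1 + A_COEF) * pow(2 * y1, -1, P_FIELD) % P_FIELD
    else:
        lam = (y2 - y1) * pow(x2 - x1, -1, P_FIELD) % P_FIELD
    x3 = (lam * lam - x1 - x2) % P_FIELD
    return (x3, (lam * (x1 - x3) - y1) % P_FIELD)


def mul(k, P):
    """[k]P by right-to-left binary double-and-add (not the wNAF method)."""
    R = INF
    while k > 0:
        if k & 1:
            R = add(R, P)
        P = add(P, P)
        k >>= 1
    return R


def H(msg):
    # Simplification: SHA-256 reduced modulo q.
    return int.from_bytes(hashlib.sha256(msg).digest(), "big") % Q


def sign(alpha, msg, k):
    """Return (r, s), or None when the nonce k must be replaced (r = 0 or s = 0)."""
    r = mul(k, G)[0] % Q
    if r == 0:
        return None
    s = pow(k, -1, Q) * (H(msg) + alpha * r) % Q
    return (r, s) if s != 0 else None


def verify(pub, msg, sig):
    r, s = sig
    if not (1 <= r <= Q - 1 and 1 <= s <= Q - 1):
        return False
    v = pow(s, -1, Q)
    X = add(mul(H(msg) * v % Q, G), mul(r * v % Q, pub))
    return X is not INF and X[0] % Q == r


def key_from_nonce(msg, sig, k):
    """Signing equation solved for the key: alpha = (s*k - H(m)) * r^{-1} mod q."""
    r, s = sig
    return (s * k - H(msg)) * pow(r, -1, Q) % Q


alpha = 7                 # private key, 1 <= alpha <= q - 1
pub = mul(alpha, G)       # public key [alpha]G
msg = b"toy message"
for k in range(1, Q):
    sig = sign(alpha, msg, k)
    if sig is None:
        continue          # r = 0 or s = 0: pick another nonce
    assert verify(pub, msg, sig)
    assert key_from_nonce(msg, sig, k) == alpha
```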

We consider a 128-bit security level. Hence $\alpha$ and k are 256-bit integers.

wNAF Representation

The ECDSA algorithm requires the computation of [k]G, a scalar multiplication. In [10], various methods for fast exponentiation are presented. One family of such methods is called window methods, and these can be combined with the NAF representation. Recall that the NAF representation does not allow two non-zero digits to be consecutive, thus reducing the Hamming weight of the representation of the scalar. The basic idea of a window method is to consider chunks of w bits in the representation of the scalar k, compute powers in the window bit by bit, square w times and then multiply by the power in the next window. For any integer $k > 0$, a representation $k = \sum_{i=0}^{t-1} k_i 2^i$ with $k_i \in \{0, \pm 1\}$ is called a NAF if $k_{t-1} \neq 0$ and $k_i k_{i+1} = 0$ for all $0 \le i \le t-2$. Moreover, every k has a unique NAF representation, and this representation has the minimal number of non-zero digits among all signed binary representations of k with digits in $\{0, \pm 1\}$. The computation of the NAF representation is presented in Algorithm 1.
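For readers who want to experiment, the following Python sketch uses a textbook NAF conversion (our own code, not claimed to reproduce Algorithm 1 line by line) and compares the number of non-zero digits with that of the binary representation.

```python
def naf(k):
    """Non-adjacent form of k > 0, least significant digit first, digits in {-1, 0, 1}."""
    digits = []
    while k > 0:
        if k & 1:
            d = 2 - (k % 4)  # 1 if k = 1 (mod 4), -1 if k = 3 (mod 4)
            k -= d           # k is now divisible by 4, so the next digit is 0
        else:
            d = 0
        digits.append(d)
        k //= 2
    return digits


def weight(digits):
    """Number of non-zero digits (Hamming weight)."""
    return sum(1 for d in digits if d != 0)


k = 7
print(naf(k))                                                  # [-1, 0, 0, 1], i.e. 7 = 2^3 - 1
print(weight([int(b) for b in bin(k)[2:]]), weight(naf(k)))   # 3 2
```

Choosing the digit from k mod 4 is what forces the next digit to be zero, which gives the non-adjacency property directly.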

The NAF representation can be combined with a sliding-window method to further improve the execution time. For instance, in OpenSSL (up to version 1.1.1b, the latest versions using wNAF), the window size usually chosen was $w = 3$, which is the value we set for all our experiments. The scalar k is converted into wNAF form using Algorithm 2. The resulting digits belong to the set $\{0, \pm 1, \pm 3, \ldots, \pm(2^w - 1)\}$. We write k as the sum over its non-zero digits, renamed $k_j$. More precisely, we get $k = \sum_{j=1}^{\ell} k_j 2^{\lambda_j}$, where $\ell$ is the number of non-zero digits and $\lambda_j$ represents the position of the digit $k_j$ in the wNAF representation.
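The sketch below uses a standard conversion that produces digits with the properties described above: every non-zero digit is odd with absolute value at most $2^w - 1$, and any two non-zero digits are separated by at least w zeros. It is our own illustration with w = 3 as the default, not Algorithm 2 itself; OpenSSL's routine may differ in details. The helper `trace` (a hypothetical name) returns the pairs $(\lambda_j, k_j)$; the side channel is assumed to reveal only the positions $\lambda_j$.

```python
def wnaf(k, w=3):
    """wNAF digits of k > 0, least significant first: each digit is 0 or odd with
    |digit| <= 2^w - 1, and non-zero digits are separated by at least w zeros."""
    digits = []
    while k > 0:
        if k & 1:
            d = k % (1 << (w + 1))      # k mod 2^(w+1)
            if d >= 1 << w:
                d -= 1 << (w + 1)       # representative in (-2^w, 2^w)
            k -= d                      # k is now divisible by 2^(w+1)
        else:
            d = 0
        digits.append(d)
        k >>= 1
    return digits


def trace(k, w=3):
    """Pairs (lambda_j, k_j) of the non-zero digits, so that k = sum_j k_j * 2^lambda_j."""
    return [(pos, d) for pos, d in enumerate(wnaf(k, w)) if d != 0]


print(wnaf(9))   # [-7, 0, 0, 0, 1], i.e. 9 = 16 - 7
print(trace(9))  # [(0, -7), (4, 1)]
```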

Example 1

In binary, we can write  whereas in NAF representation, we have  . With  , the wNAF representation gives

Lattice Reduction Algorithms

An n-dimensional lattice is a discrete additive subgroup of $\mathbb{R}^n$. It is usually specified by a basis matrix $B \in \mathbb{R}^{n \times n}$. The lattice L(B) generated by B consists of all integer combinations of the row vectors in B. The determinant of a lattice is the absolute value of the determinant of a basis matrix: $\det L(B) = |\det B|$. The discreteness property ensures that there is a vector $v_1 \in L(B)$ reaching the minimum non-zero value of the Euclidean norm. Let us write $\lambda_1(L(B)) = \|v_1\|$, and let $\lambda_i(L(B))$ be the $i$-th successive minimum of the lattice. The LLL algorithm [15] takes as input a lattice basis and returns, in time polynomial in the lattice dimension n, a reduced lattice basis whose vectors $b_i$ satisfy the worst-case approximation bound $\|b_i\| \le 2^{(n-1)/2} \lambda_i(L(B))$. In practice, for random lattices, LLL obtains approximation factors such that  , as noted by Nguyen and Stehlé [18]. Moreover, for random lattices, the Gaussian heuristic implies that

$$\lambda_1(L(B)) \approx \sqrt{\frac{n}{2\pi e}}\, \det L(B)^{1/n}.$$

The BKZ algorithm [22, 24] is exponential in a given block-size $\beta$ and polynomial in the lattice dimension n. It outputs a reduced lattice basis whose vectors $b_i$ satisfy the approximation  [23], where $\gamma_\beta$ is the Hermite constant. In practice, Chen and Nguyen [5] observed that BKZ returns vectors such that $\|b_1\| \approx \delta_\beta^{\,n} \det L(B)^{1/n}$, where $\delta_\beta$ depends on the block-size $\beta$. For random lattices, they get  for a block-size  .
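Both estimates are easy to evaluate numerically. Since lattice determinants in this setting are huge, the following sketch (function names ours) takes $\log_2 \det L(B)$ as input. The root Hermite factor $\delta_\beta$ is left as a parameter because its value depends on the block-size; the numbers in the usage line are arbitrary placeholders, not values from our experiments.

```python
import math


def gaussian_heuristic(n: int, log2_det: float) -> float:
    """Gaussian heuristic: lambda_1 ~ sqrt(n / (2*pi*e)) * det^(1/n)."""
    return math.sqrt(n / (2 * math.pi * math.e)) * 2.0 ** (log2_det / n)


def predicted_b1(n: int, log2_det: float, delta: float) -> float:
    """Chen-Nguyen style prediction: ||b_1|| ~ delta^n * det^(1/n)."""
    return delta ** n * 2.0 ** (log2_det / n)


# Placeholder dimension, log-determinant and root Hermite factor.
n, log2_det = 100, 5000.0
print(gaussian_heuristic(n, log2_det), predicted_b1(n, log2_det, 1.01))
```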

Attacking ECDSA Using Lattices

Using a side-channel attack, one can recover information about the wNAF representation of the nonce k. In particular, it reveals the positions of the non-zero coefficients in the representation of k, but not the values of these coefficients. This information can be used in the setup of the Extended Hidden Number Problem (EHNP) to recover the secret key. For many messages m, we use ECDSA to produce signatures (r, s), and each run of the signing algorithm uses a nonce k. We assume we have the corresponding trace of the nonce, that is, the equivalent of the double-and-add chain of [k]G using wNAF. The goal of the attack is to recover the secret $\alpha$ while optimizing either the number of signatures required or the total time of the attack.

The Extended Hidden Number Problem

The Hidden Number Problem (HNP) allows one to recover a secret element $\alpha \in \mathbb{Z}_q$ if some information about the most significant bits of random multiples of $\alpha$ (mod q) is known, for some prime q. Boneh and Venkatesan show how to recover $\alpha$ in polynomial time with probability greater than 1/2. In [11], the authors extend the HNP and present a polynomial-time algorithm for solving instances of this extended problem. The Extended Hidden Number Problem is defined as follows. Given u congruences of the form

(1)

where the secret $\alpha$ and  are unknown, and the values  ,  ,  ,  ,  are known for  (see [11], Definition 3), one has to recover $\alpha$ in polynomial time. The EHNP can then be transformed into a lattice problem, and one recovers the secret $\alpha$ by solving a short vector problem in a given lattice.

Using EHNP to Attack ECDSA

From the ECDSA algorithm, we know that, given a message m, the algorithm outputs a signature (r, s) such that

$$\alpha r - s k + H(m) \equiv 0 \pmod q. \tag{2}$$

The value H(m) is simply a hash of the message m. We consider a set of u signature pairs $(r_i, s_i)$ with corresponding messages $m_i$ that satisfy Eq. (2). For each signature pair, we have a nonce k. Using the wNAF representation of k, we write $k = \sum_{j=1}^{\ell} k_j 2^{\lambda_j}$, with $k_j \in \{\pm 1, \pm 3, \ldots, \pm(2^w - 1)\}$, where the choice of w depends on the implementation. Note that the coefficients $k_j$ are unknown; however, the positions $\lambda_j$ are assumed to be known via some side-channel leakage. It is then possible to represent the ephemeral key k as the sum of a known part and an unknown part. As $k_j$ is odd, one can write $k_j = 2k_j' + 1$, where $-2^{w-1} \le k_j' \le 2^{w-1} - 1$. Using the same notations as in [7], set $d_j = k_j' + 2^{w-1}$, where $0 \le d_j \le 2^w - 1$. In the rest of the paper, we denote by $\mu_j$ the window-size of $d_j$. Note that here, $\mu_j = w$, but this window-size will be modified later. This allows us to rewrite the value of k as

$$k = \bar{k} + \sum_{j=1}^{\ell} 2^{\lambda_j + 1} d_j, \tag{3}$$

with $\bar{k} = \sum_{j=1}^{\ell} 2^{\lambda_j} (1 - 2^w)$. The expression of $\bar{k}$ represents the known part of k. By substituting Eq. (3) into Eq. (2), we get a system of modular equations:

$$\alpha r_i - \sum_{j=1}^{\ell_i} 2^{\lambda_{i,j}+1} s_i\, d_{i,j} - s_i \bar{k}_i + H(m_i) \equiv 0 \pmod q, \qquad 1 \le i \le u, \tag{4}$$

where the unknowns are $\alpha$ and the $d_{i,j}$. The known values are $\ell_i$, the number of non-zero digits in k for the $i$-th sample, $\lambda_{i,j}$, the position of the $j$-th non-zero digit in k for the $i$-th sample, and $\bar{k}_i$ defined above. Equation (4) is then used as input to EHNP, following the method explained in [11]. The problem of finding the secret key thus reduces to solving the short vector problem in the lattice presented in the following section.
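The decomposition (3) and the relation (4) can be checked numerically. The sketch below is our own illustration under stated assumptions: it uses the prime $2^{127} - 1$ as a stand-in for the group order q, draws $r$ and $H(m)$ at random instead of computing them on a curve (Eq. (4) only uses them as known residues), and uses the digit mapping $k_j = 2d_j - 2^w + 1$ with $0 \le d_j \le 2^w - 1$.

```python
import random

Q = 2**127 - 1  # stand-in prime modulus (assumption), not a real curve order
W = 3           # window size


def wnaf_digits(k, w=W):
    """Non-zero wNAF digits of k > 0 as (lambda_j, k_j) pairs."""
    out, pos = [], 0
    while k > 0:
        if k & 1:
            digit = k % (1 << (w + 1))
            if digit >= 1 << w:
                digit -= 1 << (w + 1)
            k -= digit
            out.append((pos, digit))
        k >>= 1
        pos += 1
    return out


def split_nonce(k, w=W):
    """Return (kbar, lambdas, ds) with k = kbar + sum_j 2^(lambda_j + 1) * d_j, as in Eq. (3)."""
    pairs = wnaf_digits(k, w)
    lambdas = [lam for lam, _ in pairs]
    ds = [(kj + (1 << w) - 1) // 2 for _, kj in pairs]     # k_j = 2 d_j - 2^w + 1
    kbar = sum((1 - (1 << w)) << lam for lam in lambdas)   # known part of k
    return kbar, lambdas, ds


def eq4_residue(alpha, r, s, h, kbar, lambdas, ds):
    """Left-hand side of Eq. (4) modulo q; it is 0 for a valid signature."""
    unknown_part = sum((s * d) << (lam + 1) for lam, d in zip(lambdas, ds))
    return (alpha * r - unknown_part - s * kbar + h) % Q


rng = random.Random(2020)
alpha = rng.randrange(1, Q)
k = rng.randrange(1, Q)
r, h = rng.randrange(1, Q), rng.randrange(Q)   # placeholders for r and H(m)
s = pow(k, -1, Q) * (h + alpha * r) % Q        # signing equation, Eq. (2)
kbar, lambdas, ds = split_nonce(k)
assert k == kbar + sum(d << (lam + 1) for lam, d in zip(lambdas, ds))
assert eq4_residue(alpha, r, s, h, kbar, lambdas, ds) == 0
```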

Constructing the Lattice

Before giving the lattice basis construction, we redefine Eq. (4) to reduce the number of unknown variables in the system. This will allow us to construct a lattice of smaller dimension. Again, we use the same notations as in [7].

Eliminating One Variable. One straightforward way to reduce the lattice dimension is to eliminate a variable from the system. Here, one can eliminate $\alpha$ from Eq. (4). Let $E_i$ denote the $i$-th equation of the system. Then, by computing $r_1 E_i - r_i E_1$ for $2 \le i \le u$, we get the following new modular equations

$$\sum_{j=1}^{\ell_1} 2^{\lambda_{1,j}+1} s_1 r_i\, d_{1,j} - \sum_{j=1}^{\ell_i} 2^{\lambda_{i,j}+1} s_i r_1\, d_{i,j} + r_1\big(H(m_i) - s_i \bar{k}_i\big) - r_i\big(H(m_1) - s_1 \bar{k}_1\big) \equiv 0 \pmod q, \qquad 2 \le i \le u. \tag{5}$$

Using the same notations as in [7], we define  ,  and  for  ,  . Even if $\alpha$ is eliminated from the equations, if we recover some $d_{i,j}$ values from a short vector in the lattice, we can recover $\alpha$ using any equation of the modular system (4). We now use Eq. (5) to construct the lattice basis.
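The elimination step and the recovery of $\alpha$ can be checked in the same way. As before, this is a sketch under our own assumptions (stand-in prime $2^{127}-1$, random placeholders for $r_i$ and $H(m_i)$, digit mapping $k_j = 2d_j - 2^w + 1$). It cancels $\alpha$ by forming $r_1 E_i - r_i E_1$, and then solves the first equation of system (4) for $\alpha$ once the $d_{1,j}$ are known, which is what a short vector of the right shape would provide.

```python
import random

Q = 2**127 - 1  # stand-in prime modulus (assumption)
W = 3


def nonce_trace(k, w=W):
    """Positions lambda_j and values d_j (k_j = 2 d_j - 2^w + 1) of the non-zero wNAF digits."""
    lambdas, ds, pos = [], [], 0
    while k > 0:
        if k & 1:
            digit = k % (1 << (w + 1))
            if digit >= 1 << w:
                digit -= 1 << (w + 1)
            k -= digit
            lambdas.append(pos)
            ds.append((digit + (1 << w) - 1) // 2)
        k >>= 1
        pos += 1
    return lambdas, ds


def kbar(lambdas, w=W):
    """Known part of the nonce: sum_j 2^lambda_j * (1 - 2^w)."""
    return sum((1 - (1 << w)) << lam for lam in lambdas)


def sample(alpha, rng):
    """One signature (r, s, H(m)) with random placeholders for r and H(m), plus its trace."""
    k = rng.randrange(1, Q)
    r, h = rng.randrange(1, Q), rng.randrange(Q)
    s = pow(k, -1, Q) * (h + alpha * r) % Q
    return (r, s, h) + nonce_trace(k)


def eq5_residue(first, other):
    """r_1 * E_i - r_i * E_1 evaluated at the true d's; alpha has cancelled out."""
    r1, s1, h1, lam1, d1 = first
    ri, si, hi, lami, di = other
    term_1 = sum((s1 * ri * d) << (lam + 1) for lam, d in zip(lam1, d1))
    term_i = sum((si * r1 * d) << (lam + 1) for lam, d in zip(lami, di))
    const = r1 * (hi - si * kbar(lami)) - ri * (h1 - s1 * kbar(lam1))
    return (term_1 - term_i + const) % Q


def alpha_from_first_equation(first):
    """Solve E_1 of system (4) for alpha once the d_{1,j} are known."""
    r1, s1, h1, lam1, d1 = first
    k1 = kbar(lam1) + sum(d << (lam + 1) for lam, d in zip(lam1, d1))
    return (s1 * k1 - h1) * pow(r1, -1, Q) % Q


rng = random.Random(3)
alpha = rng.randrange(1, Q)
sigs = [sample(alpha, rng) for _ in range(3)]   # u = 3 signatures
assert all(eq5_residue(sigs[0], other) == 0 for other in sigs[1:])
assert alpha_from_first_equation(sigs[0]) == alpha
```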

From a Modular System to a Lattice Basis. Let  be the lattice constructed for the attack, and we have  where the lattice basis  is given below. Let  for  and  . We set a scaling factor  to be defined later. The lattice basis is given by

Let  , with  , be the dimension of the lattice. The  first columns correspond to Eq. (5) for  . Each of the remaining columns, except the last one, corresponds to a  and contains coefficients that regulate the size of the  . The determinant of  is given by

The lattice is built such that there exists a vector w in it which contains the unknowns  . To find it, we know there exist some values  such that if  , we get  and

If we are able to find w in the lattice, then we can reconstruct the secret key $\alpha$. In order to find w, we estimate its norm and make sure that w appears in the reduced basis. After reducing the basis, we look for vectors of the correct shape, i.e., with sufficiently many zeros at the beginning and the correct last coefficient, and attempt to recover $\alpha$ from each of them.

How the Size of  Affects the Norms of the Short Vectors. In order to find the vector w in the lattice, we reduce  using LLL or BKZ. For w to appear in the reduced basis, one should at least set  such that

(6)

The vector w we expect to find has norm  . From Eq. (6), one can deduce the value of

. From Eq. (6), one can deduce the value of  needed to find w in the reduced lattice:

needed to find w in the reduced lattice:

|

In our experiments, the average value of  for  is  and thus  on average. Moreover, the average value of  is 7 and so on average  . Hence, if we compute  for  with these values, we obtain  , which does not help us to set this parameter. In practice, we verify that  allows us to recover the secret key. In Sect. 5, we vary the size of  to see whether a slightly larger value affects the probability of success.
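A common way to judge whether a target vector such as w can be expected in the reduced basis is to compare its norm with the Gaussian heuristic estimate of the shortest vector length, sqrt(d / (2·pi·e)) · det(L)^(1/d) for a d-dimensional lattice L. We do not claim this is exactly the criterion of Eq. (6); the sketch only illustrates the kind of norm comparison involved. It works with log2 det(L) so that the very large determinants of these lattices do not overflow floating-point numbers. The function names are hypothetical.

```python
import math


def gaussian_heuristic_log2(dim: int, log2_det: float) -> float:
    """log2 of the Gaussian heuristic sqrt(dim / (2*pi*e)) * det^(1/dim)."""
    return 0.5 * math.log2(dim / (2 * math.pi * math.e)) + log2_det / dim


def target_expected_shortest(norm_w: float, dim: int, log2_det: float) -> bool:
    """Heuristic check: is ||w|| below the Gaussian heuristic of the lattice?

    If so, w is plausibly among the shortest vectors and can be expected in a
    well-reduced basis; otherwise other lattice vectors are likely shorter.
    """
    return math.log2(norm_w) < gaussian_heuristic_log2(dim, log2_det)
```

For example, in a 100-dimensional lattice with log2 det(L) = 1000, the heuristic is about 2^11.27, so a target of norm 2^11 passes the check while one of norm 2^12 does not.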

Too Many Small Vectors. While running BKZ on  , we note that for some specific sets of parameters the reduced basis contains some undesired short vectors, i.e., vectors that are shorter than w. This can be explained by looking at two consecutive rows in the lattice basis given above, say the  row and the  row. For example, one can look at the rows which correspond to the  values, but the same argument is valid for the rows concerning the  . From the definitions of the  values we have  So  Thus the linear combination given by the  row minus  times the  row gives a vector

(7)

Such a vector is expected to have a smaller norm than w. Some experimental observations are detailed in Sect. 5.
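The cancellation argument can be made concrete on a toy basis. The sketch assumes a simplified row shape chosen only for illustration, not the exact basis of this paper: row j has the entry scale·2^(λ_j) in its first coordinate, a 1 at its own diagonal position, and zeros elsewhere, where λ_j are increasing digit positions. Subtracting 2^(λ_(j+1) − λ_j) times row j from row j+1 cancels the large first coordinate and leaves a vector of squared norm 4^(λ_(j+1) − λ_j) + 1, which is small whenever two non-zero digits are close.

```python
from typing import List


def toy_rows(positions: List[int], scale: int) -> List[List[int]]:
    """Toy basis rows: row j = (scale * 2**positions[j], unit vector e_j).

    Assumed, simplified shape used only to illustrate the short-vector effect.
    """
    n = len(positions)
    rows = []
    for j, lam in enumerate(positions):
        row = [scale * 2**lam] + [0] * n
        row[1 + j] = 1
        rows.append(row)
    return rows


def consecutive_combination(rows: List[List[int]], positions: List[int],
                            j: int) -> List[int]:
    """Row j+1 minus 2**(positions[j+1] - positions[j]) times row j."""
    factor = 2 ** (positions[j + 1] - positions[j])
    return [b - factor * a for a, b in zip(rows[j], rows[j + 1])]


def sq_norm(v: List[int]) -> int:
    """Squared Euclidean norm of an integer vector."""
    return sum(x * x for x in v)
```

With positions [3, 8, 10] and scale 2^64, every row has squared norm above 2^100, yet combining the last two rows gives [0, 0, -4, 1], of squared norm 17.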

Differences with the Lattice Construction Given in [7]. Let  be the lattice basis constructed in [7]. Our basis  is a rescaled version of  such that  . This rescaling ensures that all the coefficients in our lattice basis are integers. Note that the construction in [7] uses a value  which corresponds to  . In this work, we give a precise analysis of the value of  , both theoretically and experimentally (Sect. 5), an analysis that is missing from [7].

Improving the Lattice Attack

Reducing the Lattice Dimension: The Merging Technique

In [7], the authors present another way to further reduce the lattice dimension, which they call the merging technique. It aims at reducing the lattice dimension by reducing the number of non-zero digits of k. The lattice dimension depends on the value  , and thus reducing T reduces the dimension. To understand the attack, it suffices to know that after merging, we obtain some new values  corresponding to the new number of non-zero digits and  the positions of these digits for  . After merging, one can rewrite  , where the new  have a new window size which we denote  , i.e.,  .

We present our merging algorithm based on Algorithm 3 given in [7]. Our algorithm directly modifies the sequence  , whereas [7] works on the double-and-add chains. This helped us avoid some implementation issues, such as an index overrun in line 7 of Algorithm 3 in [7]. To make (our) Algorithm 3 easier to read, we work with dynamic tables. Let us first recall the standard methods we use in the algorithm:  inserts an element e at the end of the table, at(i) outputs the element at index i, and last() returns the last element of the table. We consider tables of integers indexed in  , where S is the size of the table.
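Since Algorithm 3 itself is not reproduced here, the following is only a hypothetical illustration of the idea behind merging, with a Python list as the dynamic table (append plays the role of inserting at the end, and [-1] the role of last()). The merging rule is our own simplifying assumption and is not the rule of [7] or of Algorithm 3: each non-zero digit at position λ is treated as an unknown block of `w` bits starting at λ, and the next digit is absorbed into the current block as long as the merged block is at most `max_width` bits wide.

```python
from typing import List, Tuple


def merge_digits(positions: List[int], w: int,
                 max_width: int) -> Tuple[List[int], List[int]]:
    """Greedily merge nearby non-zero digit positions into wider unknown blocks.

    Hypothetical rule: a block starting at position s whose last digit is at
    position p spans p + w - s bits; a digit at position q joins the current
    block if q + w - s <= max_width.
    Returns (new_positions, new_widths), one entry per merged block.
    """
    new_positions: List[int] = []
    new_widths: List[int] = []
    for q in sorted(positions):
        if new_positions and q + w - new_positions[-1] <= max_width:
            # Extend the last block so that it also covers the digit at q.
            new_widths[-1] = q + w - new_positions[-1]
        else:
            # Start a new block with the default window size w.
            new_positions.append(q)
            new_widths.append(w)
    return new_positions, new_widths
```

For instance, `merge_digits([0, 3, 5, 20, 22], 4, 10)` returns `([0, 20], [9, 6])`: five unknown digits become two wider ones, which is the kind of reduction of T that lowers the lattice dimension.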

A useful example of the merging technique is given in [7]. From 3 to 8 signatures, the approximate dimensions of the lattices using the elimination and merging techniques are 80, 110, 135, 160, 190 and 215, respectively. Each new lattice dimension is roughly  of the dimension of the lattice before applying these techniques, for the same number of signatures. For instance, with 8 signatures we would otherwise have a lattice of dimension 400 on average, far too large to be easily reduced. For the traces we consider, after merging, the mean of the  is 26, the minimum being 17 and the maximum 37, with a standard deviation of 3. One could further reduce the lattice dimension by selecting traces with small  . However, since the standard deviation is small, the reduction times should not be affected much.

Preprocessing the Traces

The two main pieces of information we can extract and use in our attack are, first, the number of non-zero digits in the wNAF representation of the nonce k, denoted  , and second, the weight of each non-zero digit, denoted  for  . Let  be the set of traces obtained from the side-channel leakage, each representing the wNAF representation of the nonce k used while producing an ECDSA signature. We consider the subset  . We choose to preselect traces in a subset  for small values of a. The idea behind this preprocessing is to regulate the size of the coefficients in the lattice. Indeed, when selecting u traces for the attack, by upper-bounding  for  , we force the coefficients to remain smaller than when taking traces at random.
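The preselection can be sketched as a simple filter. Because the exact bound defining the subsets is not reproduced here, the sketch takes the per-trace quantity to bound as a parameter; `preselect`, `stat`, and the example statistic used below (the largest window size among the non-zero digits of a trace) are hypothetical choices for illustration. The function also returns the proportion of retained traces, the kind of figure reported in the next paragraph.

```python
from typing import Callable, List, Sequence, Tuple, TypeVar

TraceT = TypeVar("TraceT")


def preselect(traces: Sequence[TraceT], stat: Callable[[TraceT], int],
              bound: int) -> Tuple[List[TraceT], float]:
    """Keep the traces whose statistic is at most `bound`.

    Returns the selected subset and its proportion of the full set.
    """
    selected = [t for t in traces if stat(t) <= bound]
    proportion = len(selected) / len(traces) if traces else 0.0
    return selected, proportion
```

For example, with traces given as lists of window sizes, `preselect([[4, 4, 6], [4, 9], [5, 5, 5, 5]], max, 6)` keeps the first and last traces, i.e., a proportion of 2/3.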

We work with a set  of 2000 traces such that for all traces  . The proportions of signatures corresponding to the different preprocessing subsets we consider in our experiments are  for  ,  for  and  for  . The effect of preprocessing on the total time is explained in Sect. 5.

Performance Analysis

Traces from the Real World. We work with the elliptic curve  , but none of the techniques introduced here is limited to this specific curve. We consider traces from a Flush&Reload attack, executed through hyperthreading, as it can in principle recover the largest amount of information (footnote 3).

To the best of our knowledge, the only information we can recover consists of the positions of the non-zero digits. We are not able to determine the sign or the value of the digits in the wNAF representation. In [7], the authors exploit the fact that the length of the binary string of k is fixed in implementations such as OpenSSL, so that more information can be recovered by comparing this length to the length of the double-and-add chain. In particular, they were able to recover the MSB of k, and in some cases the sign of the second MSB. We do not consider this extra information, as we want our analysis to remain general.
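To experiment with this leakage model, the sketch below computes a width-w NAF with the textbook recoding algorithm and extracts the positions of its non-zero digits, which is the only information our model assumes the side channel reveals. The function names are ours, and conventions for the window parameter differ by one across references and implementations, so `w` here should not be read as the exact parameter of any particular library.

```python
from typing import List


def wnaf(k: int, w: int) -> List[int]:
    """Width-w NAF of k >= 0, least significant digit first (textbook convention).

    Non-zero digits are odd with |d| < 2**(w - 1), and any w consecutive
    digits contain at most one non-zero digit.
    """
    if w < 2:
        raise ValueError("window width must be at least 2")
    digits = []
    while k > 0:
        if k & 1:
            d = k % (1 << w)       # k mod 2**w ...
            if d >= 1 << (w - 1):
                d -= 1 << w        # ... taken in the signed range
            k -= d                 # k is now divisible by 2**w
        else:
            d = 0
        digits.append(d)
        k >>= 1
    return digits


def ideal_trace(k: int, w: int) -> List[int]:
    """Positions of the non-zero wNAF digits: the information assumed to leak."""
    return [i for i, d in enumerate(wnaf(k, w)) if d != 0]
```

For example, `wnaf(7, 3)` returns `[-1, 0, 0, 1]` (7 = 2^3 − 1), so the corresponding error-free trace is `[0, 3]`: the positions are known, but neither the sign nor the value of the digits.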

We report computations run on error-free traces, where we evaluate the total time necessary to recover the secret key and the probability of success of the attack. Our experiments have two possible outcomes: either we reconstruct the secret key  and consider the experiment a success, or we do not recover the secret key and the experiment fails. In order to compute the success probability and the average time of one reduction, we run 5000 experiments for some specific sets of parameters, using either Sage’s default BKZ implementation [25] or a more recent implementation of the latest sieving strategies, the General Sieve Kernel (G6K) [1]. The experiments were run on the Grid’5000 cluster, on a single core of an Intel Xeon Gold 6130. The total time is the average time of a single reduction multiplied by the number of trials necessary to recover the key, that is, the number of experiments run until we obtain a success for a given set of parameters. For a fixed number of signatures, we optimize either the total time or the success probability. The results obtained with BKZ are reported in Tables 3 and 4 (footnote 4).
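The total time can be related to the single-reduction time and the success probability p. Assuming the number of trials is estimated by its expectation, 1/p for independent trials (a geometric distribution), the relation is a single division. The function names are hypothetical, and because the tables round both probabilities and times, quantities derived this way are only approximate.

```python
def expected_total_time(single_reduction_s: float, success_prob: float) -> float:
    """Average single-reduction time times the expected number of trials, 1/p."""
    if not 0 < success_prob <= 1:
        raise ValueError("success probability must be in (0, 1]")
    return single_reduction_s / success_prob


def implied_single_reduction(total_time_s: float, success_prob: float) -> float:
    """Inverse relation: average time of one reduction given a total time."""
    if not 0 < success_prob <= 1:
        raise ValueError("success probability must be in (0, 1]")
    return total_time_s * success_prob
```

For instance, the first row of Table 3 (39 h at a success probability of 0.2%) corresponds to an average single reduction of about 39 · 3600 · 0.002 ≈ 281 s under this model.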

Table 3.

Fastest key recovery with respect to the number of signatures.

| Number of signatures | Total time | BKZ | Preprocessing |  | Probability of success (%) |
|---|---|---|---|---|---|
| 3 | 39 h | 35 |  |  | 0.2 |
| 4 | 1 h 17 min | 25 |  |  | 0.5 |
| 5 | 8 min 20 s | 25 |  |  | 6.5 |
| 6 | 3 min 55 s | 20 |  |  | 7 |
| 7 | 2 min 43 s | 20 |  |  | 17.5 |
| 8 | 2 min 25 s | 20 |  |  | 29 |

Table 4.

Highest probability of success with respect to the number of signatures.

| Number of signatures | Probability of success (%) | BKZ | Preprocessing |  | Total time |
|---|---|---|---|---|---|
| 3 | 0.2 | 35 |  |  | 39 h |
| 4 | 4 | 35 |  |  | 25 h 28 min |
| 5 | 20 | 35 |  |  | 2 h 42 min |
| 6 | 40 | 35 |  |  | 1 h 04 min |
| 7 | 45 | 35 |  |  | 2 h 36 min |
| 8 | 45 | 35 |  |  | 5 h 02 min |

Comments on G6K: We do not report the full experiments run with G6K, since this implementation does not lead to the fastest total time for our attack: around 2 min using 8 signatures with BKZ, versus at best 5 min with G6K.

However, G6K can reduce lattices with much larger block-sizes than BKZ, and for comparable probabilities of success, G6K is faster. Considering the highest probability achieved, on the one hand, BKZ-35 leads to a probability of success of  , and a single reduction takes 133 min. On the other hand, to reach around the same probability of success with G6K, we increase the block-size to 80, and a single reduction takes only around 45 min on average. This is an improvement by a factor of about 3 in the reduction time.

Only 3 Signatures. Using  and no preprocessing, we recovered the secret key from 3 signatures only once with BKZ-35, and three times with BKZ-40. When using preprocessing  , BKZ-35 and  , the probability of success went up to  . Since all these probabilities remain much less than  , an extensive analysis would have taken too long. Thus, in the rest of the section, the number of signatures only varies between 4 and 8. However, we want to emphasize that it is precisely this detailed analysis on a slightly higher number of signatures that allowed us to understand the impact of the parameters on the performance of the attack, and to find the parameters that make the attack possible with 3 signatures.

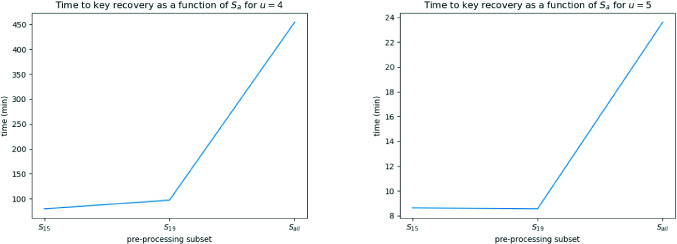

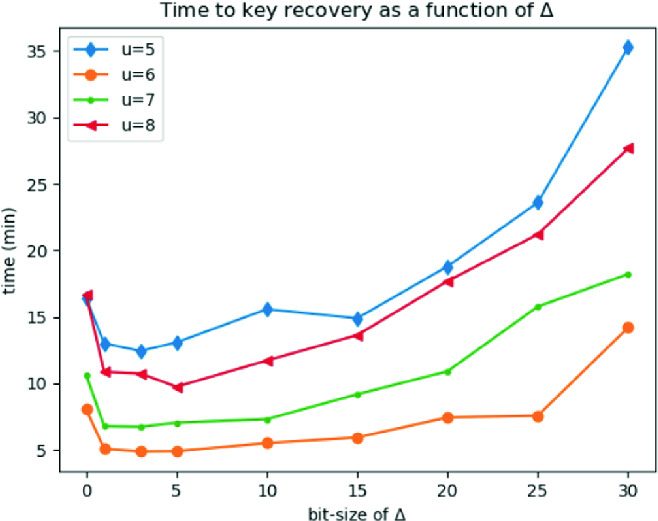

Varying the Bitsize of  . In Fig. 1, we analyze the total time of key recovery as a function of the bitsize of  . We fix the block-size of BKZ to 25 and take traces without any preprocessing. We are able to recover the secret key by setting  , which is the lowest theoretical value one can choose. However, we observed a slight increase in the probability of success when taking a larger  . Unsurprisingly, the total time to recover the secret key increases with the bitsize of  , as the coefficients in the lattice basis become larger.

Fig. 1.

Analyzing the overall time to recover the secret key as a function of the bitsize of  . We report the numbers for BKZ-25 and no preprocessing. The optimal value for  is around  , except for  where it is  .

Analyzing the Effect of Preprocessing. We analyze the influence of our preprocessing method on the attack time. We fix the BKZ block-size to 25. The effect of preprocessing depends on the bitsize of  , and we give an analysis here for  , since the effect is more noticeable.

The effect of preprocessing is difficult to predict, since its behavior varies depending on the parameters, with both positive and negative effects. On the one hand, we reduce the size of all the coefficients in the lattice, thus reducing the reduction time. On the other hand, we generate more potential small vectors (footnote 5) with norms smaller than the norm of w. For this reason, the probability of success of the attack decreases, since the vector w is more likely to be a linear combination of vectors already in the reduced basis. For example, with 7 signatures, we find w on average as the third or fourth vector of the reduced basis without preprocessing, whereas with  it is more likely to appear in position 40.

The positive effect of preprocessing is most noticeable for  and  , as shown in Fig. 2. For instance, using  and  lowers the overall time by a factor of up to 5.7. For  , we gain a factor close to 3 by using either  or  . For  , using preprocessed traces is less impactful. For large  such as  , we still note lower overall times when using  and  , up to a factor of 2. When the bitsize gets smaller, reducing the size of the coefficients in the lattice is less impactful.

Fig. 2.

Overall time to recover the secret key as a function of the preprocessing subset for 4 and 5 traces. The other parameters are fixed:  and BKZ-25.

Balancing the Block-size of BKZ. Finally, we vary the block-size in the BKZ algorithm. We fix  and use no preprocessing. We plot the results in Fig. 3 for 6 and 7 signatures; for other values of u, the plots are very similar, so we omit them. Unsurprisingly, as we increase the block-size, the probability of success increases, but the reduction time increases significantly as well. This explains the results shown in Tables 3 and 4: to reach the best probability of success, one needs to increase the block-size in BKZ (we did not try any block-size greater than 40), but to get the fastest key recovery, the block-size is chosen between 20 and 25, except for 3 signatures, where the probability of success is too low with these parameters.

Fig. 3.

Analyzing the number of trials needed to recover the secret key and the reduction time of the lattice as a function of the block-size of BKZ. We consider the cases where  and  . The dotted lines correspond to the number of trials, and the solid lines to the reduction time in seconds.

Error Resilience Analysis

It is not unexpected to have errors in the traces collected during side-channel attacks: obtaining error-free traces requires some amount of work on the signal processing side. Prior to [6], the presence of errors in traces was either ignored, or preprocessing was done on the traces until an error-free sample was found, see [2, 9]. In [6], it is shown that the lattice attack still successfully recovers the secret key even when traces contain errors. An error in the setup of [6] corresponds to an incorrect bound on the size of the values being collected. In our setup, a trace without errors is a trace where every single coefficient in the wNAF representation of k has been correctly identified as either zero or non-zero. The probability of having an error in our setup is thus much higher. Side-channel attacks without any errors are very rare. Both [21] and [6] give some analysis of the Flush&Reload and Prime&Probe attacks in real-life scenarios.

In [7], the results presented assume that Flush&Reload is carried out perfectly, without any error. In particular, to obtain 4 perfect traces, and thus be able to run their experiment and find the key, one would need on average 8 traces from Flush&Reload, since the probability of obtaining a perfect reading of a trace is 56%, as pointed out in [21]. In our work, we show that it is possible to recover the secret key using only 4 traces, even erroneous ones. However, the probability of success is then very low.

Recall that an error in our case corresponds to a flipped digit in the trace of k. Table 5 shows the success probability of the attack in the presence of errors. We used BKZ-25 and  , with traces taken from  . We average over 5000 experiments. We write  when the attack succeeded fewer than five times over 5000 experiments, which makes it difficult to evaluate the probability of success.

Table 5.

Error analysis using BKZ-25,  and  . Entries are probabilities of success (%).

| Number of signatures | 0 errors | 5 errors | 10 errors | 20 errors | 30 errors |
|---|---|---|---|---|---|
| 4 | 0.28 |  | 0 | 0 | 0 |
| 5 | 4.58 | 0.86 | 0.18 |  | 0 |
| 6 | 19.52 | 5.26 | 1.26 | 0.14 |  |
| 7 | 33.54 | 10.82 | 3.42 | 0.32 |  |
| 8 | 35.14 | 13.26 | 4.70 | 0.58 |  |

The attack tolerates up to  of errors. Indeed, for  , we recovered the secret key with 30 errors, i.e., 30 flipped digits over  digits.

Different Types of Errors. There are two possible types of errors. In the first case, a coefficient which is zero is read as a non-zero coefficient. In theory, this only adds a new variable to the system, i.e., the number  of non-zero coefficients is overestimated. This does not affect the probability of success much: we just have an overly-constrained system. We can see in Fig. 4 that the probability of success of the attack indeed decreases slowly as we add errors of this form. With errors only of this form, we were able to recover the secret key with up to nearly  of errors (for instance with  , using BKZ-35). The other type of error consists of a non-zero coefficient which is misread as a zero coefficient. In this case, we lose information necessary for the key recovery, and thus this type of error affects the probability of success far more, as can also be seen in Fig. 4. In this setup, we were not able to recover the secret key when more than 3 errors of this type appear in the set of traces considered.
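To reproduce this kind of experiment, errors of each type can be injected separately into an error-free trace. The sketch assumes a trace is encoded as a 0/1 list with one entry per digit of k, where 1 marks a digit read as non-zero; this encoding and the function name are our own choices.

```python
import random
from typing import List, Optional


def flip_digits(trace: List[int], n_zero_to_nonzero: int, n_nonzero_to_zero: int,
                rng: Optional[random.Random] = None) -> List[int]:
    """Inject the two types of reading errors into a 0/1 trace.

    Errors of the first type turn a 0 into a 1 (an extra unknown digit);
    errors of the second type turn a 1 into a 0 (a non-zero digit is missed).
    Raises ValueError if more flips are requested than digits available.
    """
    rng = rng or random.Random()
    zeros = [i for i, b in enumerate(trace) if b == 0]
    ones = [i for i, b in enumerate(trace) if b == 1]
    noisy = list(trace)
    for i in rng.sample(zeros, n_zero_to_nonzero):
        noisy[i] = 1
    for i in rng.sample(ones, n_nonzero_to_zero):
        noisy[i] = 0
    return noisy
```

Passing a seeded `random.Random` makes an experiment reproducible, so the same noisy traces can be fed to different lattice parameters.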

Fig. 4.

Probability of success for key recovery with various types of errors when using  , BKZ-25,  , and no preprocessing.

Strategy. If the signal processing method hesitates between a non-zero digit and 0, we recommend favoring a non-zero digit, so that any resulting error is of type 0 → non-zero, for which the attack is much more tolerant.
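If the signal processing step outputs, for each digit, an estimated probability that the digit is non-zero (an assumption about the pipeline, not something evaluated in this paper), the recommendation amounts to lowering the decision threshold. The function name and the threshold value are illustrative choices.

```python
from typing import List


def read_digits(p_nonzero: List[float], threshold: float = 0.5) -> List[int]:
    """Turn per-digit probabilities of being non-zero into a 0/1 trace.

    Lowering `threshold` below 0.5 resolves uncertain digits as non-zero,
    trading errors of type non-zero -> 0 for errors of type 0 -> non-zero.
    """
    return [1 if p >= threshold else 0 for p in p_nonzero]
```

With scores [0.9, 0.45, 0.1], the default threshold gives [1, 0, 0], while a threshold of 0.4 gives [1, 1, 0]: the uncertain middle digit is now read as non-zero.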

Conclusion and countermeasures

In the last decades, most implementations of ECDSA have been the target of microarchitectural attacks, and thus existing implementations have either been replaced by more robust algorithms, or layers of security have been added.

For example, one way of minimizing leakage from the scalar multiplication is to use the Montgomery ladder scalar-by-point multiplication [16], which is much more resilient to side-channel attacks due to the regularity of its operations. However, this does not entirely remove the risk of leakage [28], and additional countermeasures are necessary.
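The regularity of the Montgomery ladder can be seen in a generic sketch: each bit of the scalar triggers exactly one group addition and one doubling, so the sequence of group operations does not depend on the digits the way a wNAF double-and-add chain does. To stay self-contained, the sketch works over an arbitrary group given by an `add` function, and the usage below takes integers modulo n as a toy stand-in for elliptic curve points. It only shows the operation pattern: a real implementation must also avoid secret-dependent branches and memory accesses, which this Python code does not.

```python
from typing import Callable, TypeVar

G = TypeVar("G")


def montgomery_ladder(k: int, p: G, add: Callable[[G, G], G], identity: G) -> G:
    """Compute k*p with one addition and one doubling per bit of k.

    Invariant: r1 == r0 + p after each step. The branch on the key bit is
    not constant-time; it only illustrates the regular operation sequence.
    """
    r0, r1 = identity, p
    for i in reversed(range(k.bit_length())):
        if (k >> i) & 1:
            r0, r1 = add(r0, r1), add(r1, r1)
        else:
            r0, r1 = add(r0, r0), add(r0, r1)
    return r0
```

For example, in the additive group of integers modulo 1009, `montgomery_ladder(5, 17, lambda a, b: (a + b) % 1009, 0)` returns 85, i.e., 5 · 17.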

Among common countermeasures, many implementations use blinding or masking techniques [20], for example the BouncyCastle implementation of ECDSA. Blinding consists in randomizing the data before performing any operation on it, while masking randomizes all the data-dependent operations by applying random transformations, thus making any leakage unexploitable.
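As one illustration of blinding (our choice of example; this section does not specify which form of blinding BouncyCastle uses), scalar blinding replaces the nonce k by k + r·n, where n is the order of the base point and r is random. The result of the scalar multiplication is unchanged because n·P is the identity, but the recoded digits, and hence what a trace of the scalar multiplication reveals, change at every call. The function name and the 64-bit size of r are illustrative assumptions.

```python
import secrets


def blind_scalar(k: int, n: int, r_bits: int = 64) -> int:
    """Scalar blinding: return k + r*n for a fresh random r of r_bits bits.

    Since n*P is the identity for a point P of order n, (k + r*n)*P == k*P,
    but the digits actually processed differ from those of k.
    """
    r = secrets.randbits(r_bits)
    return k + r * n
```

In the toy group of integers modulo n = 1009, `(blind_scalar(123, 1009) * 17) % 1009` always equals `(123 * 17) % 1009`, whatever random r is drawn.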

However, it is important to keep these lattice attacks in mind, as they can be applied at any level of an implementation that leaks the right information.

Acknowledgement

We would like to thank Nadia Heninger for discussions about possible lattice constructions, Mehdi Tibouchi for answering our side-channel questions, Alenka Zajic and Milos Prvulovic for providing us with traces from OpenSSL that allowed us to confirm our results on a deployed implementation, Daniel Genkin for pointing us towards the Extended Hidden Number Problem, and Pierrick Gaudry for his precious support and careful reading. Experiments presented in this paper were carried out using the Grid’5000 testbed, supported by a scientific interest group hosted by Inria and including CNRS, RENATER and several universities as well as other organizations.

Footnotes

1. In order to have a fair comparison with our methodology, the times reported in [7] with which we compare ourselves have to be multiplied by the number of trials necessary for their attack to succeed, which increases their total time considerably. Using 5 signatures, their best total time would be around 15 h instead of 18 min.

2. For 4 signatures, no times are reported without method A. Thus, we have no other choice than to compare our times with theirs, using A. Yet their time for 4 signatures without A should be at least the time they report with it.

3. In practice, measurements done during the cache attack depend on the noise in the execution environment, the threat model and the target leaky implementation. For instance, Flush&Reload run from another core would be noisy. Prime&Probe would give the same information, with a more generic scenario. In an SGX scenario, it would recover the largest amount of information, but in a user/user threat model it would be too noisy to lead to practical key recovery.

4. In [7], the authors use an Intel Core i7-3770 CPU running at 3.40 GHz on a single core. In order for the time comparison to be meaningful, we ran experiments on a machine of comparable performance to estimate the timings of a single reduction. As we obtained similar timings with an older machine than the one used in [7], the variations we find when comparing ourselves to them come solely from the lattice construction and the reduction algorithm being used, rather than from hardware differences.

5. In the sense of the vectors exhibited in (7).

Contributor Information

Abderrahmane Nitaj, Email: abderrahmane.nitaj@unicaen.fr.

Amr Youssef, Email: youssef@ciise.concordia.ca.

Gabrielle De Micheli, Email: gabrielle.de-micheli@inria.fr.

References

- 1. Albrecht, M.R., Ducas, L., Herold, G., Kirshanova, E., Postlethwaite, E.W., Stevens, M.: The general sieve kernel and new records in lattice reduction. Cryptology ePrint Archive, Report 2019/089 (2019). https://eprint.iacr.org/2019/089

- 2. Angel, J., Rahul, R., Ashokkumar, C., Menezes, B.: DSA signing key recovery with noisy side channels and variable error rates. In: Patra, A., Smart, N.P. (eds.) Progress in Cryptology – INDOCRYPT 2017, pp. 147–165. Springer, Cham (2017)

- 3. Benger, N., van de Pol, J., Smart, N.P., Yarom, Y.: “Ooh Aah... Just a Little Bit”: a small amount of side channel can go a long way. In: Batina, L., Robshaw, M. (eds.) Cryptographic Hardware and Embedded Systems – CHES 2014, pp. 75–92. Springer, Heidelberg (2014)

- 4. Boneh, D., Venkatesan, R.: Hardness of computing the most significant bits of secret keys in Diffie-Hellman and related schemes. In: Koblitz, N. (ed.) Advances in Cryptology – CRYPTO ’96, pp. 129–142. Springer, Heidelberg (1996)

- 5. Chen, Y., Nguyen, P.Q.: BKZ 2.0: better lattice security estimates. In: Lee, D.H., Wang, X. (eds.) Advances in Cryptology – ASIACRYPT 2011, pp. 1–20. Springer, Heidelberg (2011)

- 6. Dall, F., et al.: CacheQuote: efficiently recovering long-term secrets of SGX EPID via cache attacks (2018)

- 7. Fan, S., Wang, W., Cheng, Q.: Attacking OpenSSL implementation of ECDSA with a few signatures. In: Weippl, E.R., Katzenbeisser, S., Kruegel, C., Myers, A.C., Halevi, S. (eds.) ACM CCS 2016, pp. 1505–1515. ACM Press, October 2016

- 8. García, C.P., Brumley, B.B.: Constant-time callees with variable-time callers. In: Kirda, E., Ristenpart, T. (eds.) USENIX Security 2017, pp. 83–98. USENIX Association, August 2017

- 9. Genkin, D., Pachmanov, L., Pipman, I., Tromer, E., Yarom, Y.: ECDSA key extraction from mobile devices via nonintrusive physical side channels. In: Weippl, E.R., Katzenbeisser, S., Kruegel, C., Myers, A.C., Halevi, S. (eds.) ACM CCS 2016, pp. 1626–1638. ACM Press, October 2016

- 10. Gordon, D.M.: A survey of fast exponentiation methods. J. Algorithms 27(1), 129–146 (1998). https://doi.org/10.1006/jagm.1997.0913

- 11. Hlaváč, M., Rosa, T.: Extended hidden number problem and its cryptanalytic applications. In: Biham, E., Youssef, A.M. (eds.) Selected Areas in Cryptography, pp. 114–133. Springer, Heidelberg (2007)

- 12. Howgrave-Graham, N.A., Smart, N.P.: Lattice attacks on digital signature schemes. Des. Codes Cryptogr. 23(3), 283–290 (2001). https://doi.org/10.1023/A:1011214926272

- 13. Johnson, D., Menezes, A., Vanstone, S.: The elliptic curve digital signature algorithm (ECDSA). Int. J. Inf. Secur. 1(1), 36–63 (2001). https://doi.org/10.1007/s102070100002

- 14. Kocher, P., Jaffe, J., Jun, B.: Differential power analysis. In: Wiener, M. (ed.) Advances in Cryptology – CRYPTO ’99, pp. 388–397. Springer, Heidelberg (1999)

- 15. Lenstra, A.K., Lenstra, H.W., Lovász, L.: Factoring polynomials with rational coefficients. Math. Ann. 261(4), 515–534 (1982). https://doi.org/10.1007/BF01457454

- 16. Montgomery, P.L.: Speeding the Pollard and elliptic curve methods of factorization. Math. Comput. 48(177), 243–264 (1987). https://doi.org/10.1090/S0025-5718-1987-0866113-7

- 17. National Institute of Standards and Technology: Digital Signature Standard (DSS) (2013)

- 18. Nguyen, P.Q., Stehlé, D.: LLL on the average. In: Hess, F., Pauli, S., Pohst, M. (eds.) Algorithmic Number Theory, pp. 238–256. Springer, Heidelberg (2006)

- 19. Nguyen, P.Q., Shparlinski, I.E.: The insecurity of the elliptic curve digital signature algorithm with partially known nonces. Des. Codes Cryptogr. 30(2), 201–217 (2003). https://doi.org/10.1023/A:1025436905711

- 20. Osvik, D.A., Shamir, A., Tromer, E.: Cache attacks and countermeasures: the case of AES. In: Pointcheval, D. (ed.) Topics in Cryptology – CT-RSA 2006, pp. 1–20. Springer, Heidelberg (2006)

- 21. van de Pol, J., Smart, N.P., Yarom, Y.: Just a little bit more. In: Nyberg, K. (ed.) Topics in Cryptology – CT-RSA 2015, pp. 3–21. Springer, Cham (2015)

- 22. Schnorr, C.P.: A hierarchy of polynomial time lattice basis reduction algorithms. Theoret. Comput. Sci. 53(2–3), 201–224 (1987). https://doi.org/10.1016/0304-3975(87)90064-8

- 23. Schnorr, C.P.: Block reduced lattice bases and successive minima. Comb. Probab. Comput. 3, 507–522 (1994). https://doi.org/10.1017/S0963548300001371

- 24. Schnorr, C.P., Euchner, M.: Lattice basis reduction: improved practical algorithms and solving subset sum problems. Math. Program. 66(2), 181–199 (1994). https://doi.org/10.1007/BF01581144

- 25. The FPLLL development team: FPLLL, a lattice reduction library (2016)

- 26. Vanstone, S.: Responses to NIST’s proposals (1992)

- 27. Wang, W., Fan, S.: Attacking OpenSSL ECDSA with a small amount of side-channel information. Sci. China Inf. Sci. 61(3), 032105 (2017). https://doi.org/10.1007/s11432-016-9030-0

- 28. Yarom, Y., Benger, N.: Recovering OpenSSL ECDSA nonces using the FLUSH+RELOAD cache side-channel attack. IACR Cryptol. ePrint Arch. 2014, 140 (2014)

- 29. Yarom, Y., Falkner, K.: FLUSH+RELOAD: a high resolution, low noise, L3 cache side-channel attack. In: Proceedings of the 23rd USENIX Conference on Security Symposium, SEC 2014, Berkeley, CA, USA, pp. 719–732. USENIX Association (2014)