Abstract

The entwined nature of perceptual and conceptual processes renders an understanding of the interplay between perceptual recognition and conceptual access a continuing challenge. Here, to disentangle perceptual and conceptual processing in the brain, we combine magnetoencephalography (MEG), picture and word presentation and representational similarity analysis (RSA). We replicate previous findings of early and robust sensitivity to semantic distances between objects presented as pictures, and show that earlier (~105 msec), but not later, representations can be accounted for by a contemporary computer model of visual similarity (AlexNet). Conceptual content for word stimuli is reliably present in two temporal clusters, the first ranging from 230 to 335 msec, the second from 345 to 665 msec. The time-course of picture-induced semantic content and the spatial location of conceptual representation were highly convergent before and after controlling for visual similarity, and the spatial distribution of both differed from that of words. While this may reflect differences between picture- and word-induced conceptual access, it also underscores potential confounds between visual perceptual and conceptual processing. On the other hand, using the stringent criterion that neural and conceptual spaces must align, the robust representation of semantic content by 230-240 msec for visually unconfounded word stimuli significantly advances estimates of the timeline of semantic access and its orthographic and lexical precursors.

1. Introduction

Successful interaction with the environment requires both the perception of objects and access to their meaning. At the neural level, the entwined nature of these two processes renders an understanding of the interplay between perceptual recognition and conceptual access a continuing challenge. The neural substrates of visual object perception are known to be highly sensitive to different semantic classes or ‘object-categories’ (e.g. faces, Kanwisher et al., 1997; places, Epstein & Kanwisher, 1998; body parts, Downing et al., 2001). However, visual features strongly covary with object category, and the selective activation of visual cortex may reflect variations in visual rather than semantic processing. Model-driven analytical techniques such as representational similarity analysis (RSA; Kriegeskorte et al., 2008), which assess the concordance between neural and cognitive representational spaces, may help disentangle the specific contribution of each process. In visual perception, neural representational distances between object categories indexed with functional magnetic resonance imaging (fMRI) conform to subjective similarity ratings in object-selective ventral visual cortex and to the Hmax computer model of vision (Serre et al., 2007) in V1 (Connolly et al., 2012). RSA has further shown that MEG representational spaces of visually presented objects evident at 90 msec conform to fMRI representational spaces in V1, while MEG representational spaces at 130 msec conform to fMRI representational spaces in ventral visual cortex (Cichy et al., 2014).

However, in both cases, representations in ventral visual cortex may also be attributable to visual features (Kaiser et al., 2016). Further attempts to resolve this ambiguity have used competing models to disentangle visual from semantic features. Clarke and colleagues (2015) demonstrated that semantic-feature models explained variance beyond that accounted for by Hmax visual models from 110 msec post stimulus onset (see also Bankson et al., 2018). However, in this context too, dissociating perceptual from conceptual contributions to content sensitivity is an ill-posed problem, contingent on the capacity of visual models to capture all perceptual contributions. As conceptual representations can be accessed not only through pictures but also through words, words offer a perceptually unconfounded method to cue conceptual representations. A growing number of fMRI studies that have employed word or cross-modal (word/picture) stimuli have observed sensitivity to semantic content in a much-reduced subset of brain regions, largely distinct from those showing sensitivity to object category when viewing pictures (Bruffaerts et al., 2013; Fairhall & Caramazza, 2013; Liuzzi et al., 2017; Simanova et al., 2014). These studies provide insight into the locus of conceptual representation in the brain but cannot reveal the temporal properties of access.

The goal of the present study is to identify the differential time courses of conceptual and perceptual processing. We used magnetoencephalography with RSA to assess the relationship between conceptual representational spaces and neural representational spaces during picture presentation. Then, similar to previous research, we controlled for visual similarity using a contemporary neural network model of vision, AlexNet (Krizhevsky et al., 2012). Finally, and critically, we examined the relationship between conceptual similarity and neural similarity spaces elicited by words, where perceptual features are dissociated from meaning, providing an unclouded view of conceptual access as well as a baseline for the extent to which current computer models of vision can fully account for human perceptual processes.

2. Materials & Methods

2.1. Participants

Twenty-five right-handed native Italian speakers underwent an MEG recording session (14 male; mean age 25.1 years, SD = 5.36). All participants had normal or corrected-to-normal vision and reported no history of neurological disorders. Prior to recording, they gave written informed consent to participate in the experiment. All procedures were approved by the ethical committee of the University of Trento.

2.2. Stimuli

Stimuli consisted of written Italian words and corresponding pictures of exemplars from five semantic categories: fruits, tools, clothes, mammals and birds. Each category comprised 32 exemplars, for a total of 160 exemplars. Word length did not differ between categories (F(4,155)=1.83, p=.126) and was uncorrelated with the conceptual model (r=-0.004). Likewise, word frequency (Lyding et al., 2014) did not differ across categories (F(4,155)=1.02, p=.40) or correlate with the conceptual model (r=0.03). On picture presentation trials, the picture was randomly selected from five different images of that object.
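The stimulus checks above can be illustrated with a minimal NumPy sketch. The text does not state how word length was related to the conceptual model; here we assume, for illustration only, a word-length RDM built from absolute length differences and a Pearson correlation over its upper triangle. The helper names are hypothetical.

```python
import numpy as np

def one_way_anova_f(values, groups):
    """One-way ANOVA F statistic with (between, within) degrees of freedom."""
    values = np.asarray(values, dtype=float)
    groups = np.asarray(groups)
    labels = np.unique(groups)
    grand_mean = values.mean()
    # Between-group sum of squares: group size times squared deviation of group mean.
    ss_between = sum(np.sum(groups == g) * (values[groups == g].mean() - grand_mean) ** 2
                     for g in labels)
    # Within-group sum of squares: squared deviations from each group's own mean.
    ss_within = sum(((values[groups == g] - values[groups == g].mean()) ** 2).sum()
                    for g in labels)
    df_between, df_within = len(labels) - 1, values.size - len(labels)
    return (ss_between / df_between) / (ss_within / df_within), df_between, df_within

def length_model_correlation(lengths, conceptual_rdm):
    """Pearson correlation between a word-length RDM (absolute length differences)
    and the conceptual RDM over the upper triangle (assumed formulation)."""
    lengths = np.asarray(lengths, dtype=float)
    length_rdm = np.abs(lengths[:, None] - lengths[None, :])
    iu = np.triu_indices(lengths.size, k=1)
    return np.corrcoef(length_rdm[iu], np.asarray(conceptual_rdm)[iu])[0, 1]
```

With five categories of 32 words, the degrees of freedom are (4, 155), matching the F(4,155) values reported above.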

2.3. Procedure

The experiment comprised seven runs in total, the first two using word stimuli and the remaining five using pictures. More picture runs were presented because the availability of differing exemplars was anticipated to engage participants more than the necessarily identical stimuli of the word runs. While this difference in the number of runs for words and pictures may affect variability across participants, it will not influence the magnitude of the similarity effect. Two subjects completed only three runs of pictures. Each run consisted of the presentation of all 160 exemplars, divided into 20 blocks of 8 stimuli from one category. Blocks were preceded by a word cue indicating the upcoming category (750 msec) followed by a fixation cross (750 msec). Block order was pseudorandomised within runs. Trials consisted of a stimulus (word or picture) presented for 400 msec followed by a fixation cross for 2100 msec. On each trial, participants rated the typicality of the exemplar as a member of the category on a scale from 1 (very typical) to 4 (not typical) via a bimanual VPixx button box (VPixx Technologies, Canada). Presenting stimuli in single-category blocks was necessary for subjects to perform the behavioural typicality task. Presentation was controlled using Psychtoolbox (Brainard, 1997). Each image was back-projected with a VPixx PROPixx projector at the centre of a translucent screen placed 120 cm from the eyes of the participants. The refresh rate of the screen was 120 frames per second. Timing was verified with a photodiode.
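The run structure described above (all 160 exemplars per run, 20 single-category blocks of 8 trials, shuffled block order) can be sketched in standard-library Python. The study's pseudo-randomisation constraints are not reported, so this hypothetical `make_run` helper simply shuffles the blocks; the timing helper only adds up the durations stated in the text.

```python
import random

CATEGORIES = ["fruits", "tools", "clothes", "mammals", "birds"]

def make_run(exemplars, block_size=8, seed=None):
    """Build one run: each exemplar appears once, grouped into single-category
    blocks of `block_size`, with block order shuffled across categories."""
    rng = random.Random(seed)
    blocks = []
    for category, items in exemplars.items():
        items = list(items)
        rng.shuffle(items)  # random assignment of exemplars to blocks
        for start in range(0, len(items), block_size):
            blocks.append((category, items[start:start + block_size]))
    # Plain shuffle; any additional pseudo-randomisation constraints are not modelled.
    rng.shuffle(blocks)
    return blocks

def block_duration_ms(n_trials, cue_ms=750, fixation_ms=750, stim_ms=400, iti_ms=2100):
    """Duration of one block: category cue, fixation, then n_trials stimulus + fixation periods."""
    return cue_ms + fixation_ms + n_trials * (stim_ms + iti_ms)
```

With the stated timings, one block lasts 750 + 750 + 8 × (400 + 2100) = 21,500 msec, so 20 blocks take roughly 7 minutes per run, excluding any pauses.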

2.4. MEG data acquisition

Continuous MEG data were recorded at the Center for Mind and Brain Sciences of the University of Trento using an Elekta Neuromag 306-channel MEG system (Elekta, Helsinki, Finland), composed of 102 magnetometers and 204 planar gradiometers, placed in a magnetically shielded room (AK3B, Vakuumschmelze, Hanau, Germany). Prior to the experiment, participants’ head shape was recorded using a 3D digitizer (Fastrak, Polhemus, Inc., Colchester, VT, USA), including the fiducial points (nasion, left and right preauricular points) and the positions of five coils (one on each mastoid, three on the forehead). Before each run, head position within the MEG helmet was recorded by inducing a non-invasive current through the five coils. Data were collected at 1000 Hz, and hardware filters were set to band-pass the MEG signal in the frequency range of 0.01-330 Hz.

2.5. MEG data preprocessing

Raw MEG data were visually inspected to identify bad channels according to their overall noise level and signal jumps. An average of 7 channels per run were discarded. After this procedure, 4 subjects were excluded due to a high number of bad channels (>12). For each subject, a reference run was selected as the one minimising the distance in head position, and all other runs were realigned to it so that head position was uniquely defined. Realignment and bad channel interpolation were conducted with the temporal signal space separation method (tSSS; Taulu & Simola, 2006) as implemented in the MaxFilter software, which reduces the dimensionality of the data by retaining only components that are not correlated with noise. Additional preprocessing was then conducted using the FieldTrip package (Oostenveld, Fries, Maris, & Schoffelen, 2011). Maxfiltered data were low-pass filtered at 80 Hz and high-pass filtered at 0.8 Hz to remove slow drifts in the signal. Although recent evidence shows that high-pass filtering has moderate effects on the temporal profile of classifier performance (van Driel et al., 2019), no such effect has been reported in the context of RSA. To understand its impact, we examined the time course of RSA results in a single, randomly chosen subject with and without filtering. The effect diminished as the filter cut-off increased, so the current filter settings should not lead to false positives. A notch filter was applied at 50 Hz to remove line noise. The photodiode signal was used to correct the stimulus timings recorded by the MEG system. These corrected timings were used to segment epochs of 1 second (from -0.2 to 0.8 s), resulting in 160 trials per run. Data were then down-sampled to 200 Hz to increase the signal-to-noise ratio in the multivariate analysis and to reduce computational time (Grootswagers, Wardle, & Carlson, 2017). Runs were concatenated and visually inspected.
Using the function ft_rejectvisual with the summary method, single-trial variance over time was computed and its mean over channels was plotted to identify trials that departed from the general trend of the individual subject. An average of 19 trials per subject were discarded. Each trial was baseline corrected by subtracting the mean activity in the baseline window (from -0.2 to 0 s) from the subsequent time points. To account for the different measurement units of magnetometer (T) and gradiometer (T/m) sensors, the signal recorded by magnetometers was multiplied by a factor of 17, corresponding to their distance in mm from the gradiometers, so as to place both sensor types on the same scale and give them a comparable influence on correlation values.
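The baseline correction and magnetometer scaling steps reduce to simple array operations. This sketch assumes epochs stored as a NumPy array of shape (trials, channels, time points) and a hypothetical boolean mask `is_mag` marking magnetometer channels; it is not the FieldTrip implementation used in the study.

```python
import numpy as np

def baseline_correct(epochs, times, window=(-0.2, 0.0)):
    """Subtract, per trial and channel, the mean over the baseline window.
    epochs: (n_trials, n_channels, n_times); times: sample times in seconds."""
    mask = (times >= window[0]) & (times <= window[1])
    return epochs - epochs[:, :, mask].mean(axis=2, keepdims=True)

def scale_magnetometers(epochs, is_mag, factor=17.0):
    """Multiply magnetometer channels by `factor` so that magnetometers (T) and
    gradiometers (T/m) contribute comparably to correlation-based distances."""
    out = epochs.copy()
    out[:, is_mag, :] *= factor
    return out
```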

2.6. Representational Similarity Analysis

In the present study, we used Representational Similarity Analysis (RSA; Kriegeskorte et al., 2008) to compare the representational structure of the MEG data with conceptual dissimilarity ratings (separately for pictures and words). We then used a computational model of the visual system to assess the influence of visual features on picture-based representational spaces. The goal of RSA is to compare the representational spaces associated with different experimental conditions by obtaining a measure of their pairwise (dis)similarity. This results in a representational dissimilarity matrix (RDM) containing in each cell the distance between a pair of conditions. The main advantage of RSA is the possibility to compare RDMs obtained from different kinds of data, because RDMs reflect only the representational structure and abstract away from the specific features used to construct them. Neural RDMs can then be compared to model RDMs to test the hypothesis that brain activity is shaped according to the model.

2.6.1. Neural RDMs

Preprocessed data were mean-centred by removing the mean across-trial pattern (Diedrichsen & Kriegeskorte, 2017). At each time point, we correlated the MEG activity between trial pairs, separately for the picture and word modalities. This yields a distance value (1 - Pearson correlation) that indicates the dissimilarity between trial pairs according to brain activity. By repeating this procedure for each trial pair, we constructed RDMs that span the whole representational space. Individual trials were used as input to the RDM calculation. To calculate the time-point by time-point RDMs, the vector for the 306 sensors was concatenated with those of the two preceding and the two succeeding time points, as implemented in CoSMoMVPA. This resulted in a vector of 1530 features reflecting brain activity spanning 20 msec. These RDMs were then correlated with conceptual similarity judgements (pictures and words) and with conceptual similarity judgements while removing visual similarity (pictures only).
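A minimal NumPy sketch of the time-resolved neural RDMs described above, assuming data shaped (trials, channels, time points). At 200 Hz, a ±2-sample window concatenates 5 × 306 = 1530 features. The text does not specify the correlation used to compare neural and model RDMs, so `model_correlation` uses Pearson over the upper triangle purely for illustration; this is not the CoSMoMVPA code.

```python
import numpy as np

def neural_rdms(data, half_width=2):
    """Time-resolved trial-by-trial RDMs (1 - Pearson correlation).
    data: (n_trials, n_channels, n_times). At each centre sample t, the sensor
    patterns at t-half_width..t+half_width are concatenated into one vector."""
    n_trials, _, n_times = data.shape
    # Remove the mean across-trial pattern at every channel and time point.
    centred = data - data.mean(axis=0, keepdims=True)
    centres = np.arange(half_width, n_times - half_width)
    rdms = np.empty((centres.size, n_trials, n_trials))
    for i, t in enumerate(centres):
        window = centred[:, :, t - half_width:t + half_width + 1]
        features = window.reshape(n_trials, -1)  # e.g. 306 channels x 5 samples = 1530
        rdms[i] = 1.0 - np.corrcoef(features)    # rows are trials
    return rdms, centres

def model_correlation(neural_rdm, model_rdm):
    """Pearson correlation between the upper triangles of two RDMs (illustrative choice)."""
    iu = np.triu_indices(neural_rdm.shape[0], k=1)
    return np.corrcoef(neural_rdm[iu], model_rdm[iu])[0, 1]
```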

2.6.2. Conceptual RDMs

An independent sample of 10 subjects took part in a separate behavioural experiment adapted from the multi-arrangement method (Kriegeskorte & Mur, 2012). Briefly, subjects positioned pictures of items in a 2-D space by mouse drag-and-drop, according to overall conceptual similarity. The pairwise distances between items were then calculated to obtain a conceptual RDM. This conceptual model was restructured for each participant to match the labels of the actual trials and correlated with the neural RDMs produced by pictures and words at each time point.
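The conceptual RDM construction can be sketched as follows. This assumes a single 2-D arrangement per subject, Euclidean distances, and scaling each subject's RDM to a maximum of 1 before averaging; the original multi-arrangement method (Kriegeskorte & Mur, 2012) combines several partial arrangements, and the study's exact scaling is not reported. `trial_items` is a hypothetical vector giving the item index of each retained MEG trial.

```python
import numpy as np

def arrangement_rdm(positions):
    """Euclidean distances between item positions (n_items, 2) on the arrangement canvas."""
    diff = positions[:, None, :] - positions[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))

def group_conceptual_rdm(all_positions):
    """Average of per-subject RDMs, each scaled to a maximum of 1 (assumed normalisation)."""
    rdms = [arrangement_rdm(np.asarray(p, dtype=float)) for p in all_positions]
    return np.mean([r / r.max() for r in rdms], axis=0)

def trial_level_rdm(item_rdm, trial_items):
    """Re-index the item RDM so that row/column i correspond to MEG trial i."""
    idx = np.asarray(trial_items)
    return item_rdm[np.ix_(idx, idx)]
```

Re-indexing by trial labels means that repeated presentations of the same item receive a model distance of 0, and trials rejected during preprocessing simply drop out of `trial_items`.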

To characterise the information represented in our conceptual model, we created a categorical model with a dissimilarity of 1 for item pairs from different categories and 0 for pairs from the same category. Its correlation with the conceptual model was r=.79, indicating that the categorical structure of individual concepts is represented in the conceptual model, but that more fine-grained differences persist.
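The categorical model and its comparison with the conceptual RDM take only a few lines. The correlation type behind r=.79 is not stated; Pearson correlation over the upper triangle (diagonal excluded) is assumed here.

```python
import numpy as np

def categorical_rdm(categories):
    """Dissimilarity 1 for pairs from different categories, 0 for pairs from the same category."""
    c = np.asarray(categories)
    return (c[:, None] != c[None, :]).astype(float)

def rdm_correlation(a, b):
    """Pearson correlation between the upper triangles (diagonal excluded) of two RDMs."""
    iu = np.triu_indices(a.shape[0], k=1)
    return np.corrcoef(a[iu], b[iu])[0, 1]
```

With 5 categories of 32 items, 2,480 of the 12,720 item pairs are within-category (0) and 10,240 are between-category (1).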

2.6.3. Visual RDMs

Convolutional neural networks (CNNs) are considered a reliable model of the human visual system, with their features reflecting its gradient in complexity (Cichy et al., 2016; Güçlü & van Gerven, 2015). In the present study, we used a pretrained AlexNet architecture (Krizhevsky, Sutskever, & Hinton, 2012) as implemented in Matlab (Figure 1B; the CNN architecture schematic was adapted from “Typical CNN architecture” by Aphex34, used under CC BY-SA 4.0). We gave all the images used in this study as input to the network and extracted the activations at four layers (conv1, conv3, conv5, fc8). The activation of a convolutional (conv) layer is a feature map, in arbitrary units, representing progressively larger local image features. The activation of the final fully connected layer (fc8) is instead a vector with 1000 entries, each one a score for classifying the input image as one of 1000 object classes. For each subject, we then matched the activations of each layer to the images actually presented and calculated the pairwise dissimilarity between feature vectors as 1 - Spearman correlation. We used these RDMs as regressors in a partial correlation approach, correlating the picture MEG RDM with the conceptual similarity RDM while removing the influence of visual similarity modelled through these RDMs. Visual RDMs have a mean (between-subject) correlation with the conceptual RDM of r=.19, .24, .25 and .47 for conv1, conv3, conv5 and fc8, respectively.
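A sketch of the visual RDMs (1 - Spearman correlation between layer activations) and of partialling them out of the neural-conceptual correlation. Feature extraction from AlexNet is not shown; `features` stands for any (images × units) activation matrix. We assume that all four layer RDMs are regressed out jointly and use a residual-based partial Pearson correlation; rank-transforming the inputs first would give a partial Spearman correlation. All helper names are hypothetical.

```python
import numpy as np

def rankdata(x):
    """Ranks starting at 1, with tied values given their average rank."""
    x = np.asarray(x, dtype=float)
    ranks = np.empty_like(x)
    ranks[np.argsort(x, kind="mergesort")] = np.arange(1, x.size + 1)
    _, inverse, counts = np.unique(x, return_inverse=True, return_counts=True)
    return (np.bincount(inverse, weights=ranks) / counts)[inverse]

def spearman_rdm(features):
    """1 - Spearman correlation between the activation vectors (rows) of each image."""
    ranked = np.apply_along_axis(rankdata, 1, np.asarray(features, dtype=float))
    return 1.0 - np.corrcoef(ranked)

def upper(rdm):
    """Upper triangle of an RDM as a vector, diagonal excluded."""
    return rdm[np.triu_indices(rdm.shape[0], k=1)]

def partial_corr(x, y, covariates):
    """Pearson correlation of x and y after regressing both on the covariates (plus intercept)."""
    z = np.column_stack([np.ones(len(x))] + [np.asarray(c, dtype=float) for c in covariates])
    rx = x - z @ np.linalg.lstsq(z, x, rcond=None)[0]
    ry = y - z @ np.linalg.lstsq(z, y, rcond=None)[0]
    return np.corrcoef(rx, ry)[0, 1]

def controlled_rsa(meg_rdm, conceptual_rdm, visual_rdms):
    """Neural-conceptual correlation with all visual-layer RDMs partialled out jointly."""
    return partial_corr(upper(meg_rdm), upper(conceptual_rdm), [upper(v) for v in visual_rdms])
```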

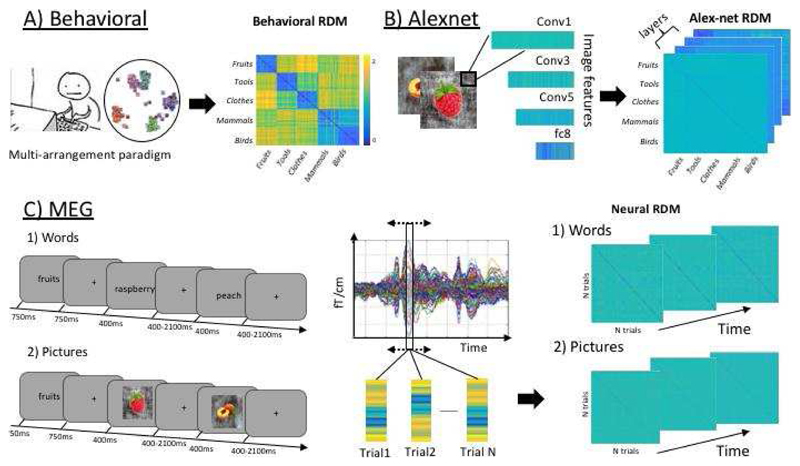

Figure 1.

A) A behavioural experiment with the multi-arrangement paradigm was conducted to obtain a measure of conceptual dissimilarity for 160 exemplars from 5 semantic categories. These were averaged across participants (N=10) to create a conceptual similarity RDM. B) For each image, we extracted activations at four selected layers of a pretrained AlexNet architecture and constructed visual similarity RDMs. C) In an MEG experiment (N=21), the same exemplars were presented first as words (2 runs) and then as pictures (5 runs). Each run was composed of 20 category-specific blocks in which subjects rated the typicality of 8 exemplars as members of the instructed category on a scale of 1-4. Preprocessed MEG trials were vectorised to calculate pairwise distances, resulting in a neural RDM at each time point, separately for pictures and words. Three separate RSAs were performed: one for pictures, one for pictures controlling for visual similarity, and one for words.

2.6.4. Searchlight analysis

To identify the spatially localised sources of information content, we ran a searchlight analysis over all channels in the four time windows showing maximal information content in the picture condition. Considering only planar gradiometers, we averaged data ±10 msec around 180, 280, 365 and 540 msec and performed the RSA described in the previous sections on pictures, pictures controlled for visual similarity, and words. For each sensor, we selected the closest 24 planar channels (i.e. 12 gradiometer pairs) as neighbours. The result of this analysis is a topography of correlation values indicating the extent to which each sensor (including its neighbours) is correlated with our semantic model.
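A sketch of the sensor-space searchlight, assuming 2-D sensor coordinates and patterns already averaged over the ±10 msec window. Whether the centre sensor counts among its 24 neighbours is not stated; here it is included. Pearson correlation with the model RDM is again an illustrative assumption.

```python
import numpy as np

def nearest_neighbours(positions, k=24):
    """Indices of the k sensors closest to each sensor (the sensor itself included)."""
    d = np.linalg.norm(positions[:, None, :] - positions[None, :, :], axis=-1)
    return np.argsort(d, axis=1, kind="stable")[:, :k]

def searchlight_rsa(patterns, positions, model_rdm, k=24):
    """patterns: (n_trials, n_sensors), already averaged over the +/-10 msec window.
    Returns one neural-model correlation per sensor neighbourhood."""
    iu = np.triu_indices(patterns.shape[0], k=1)
    out = np.empty(patterns.shape[1])
    for s, idx in enumerate(nearest_neighbours(positions, k)):
        rdm = 1.0 - np.corrcoef(patterns[:, idx])  # trials x trials
        out[s] = np.corrcoef(rdm[iu], model_rdm[iu])[0, 1]
    return out
```

Because the two planar gradiometers of a pair share a location, a 24-channel neighbourhood on real Neuromag layouts corresponds to 12 gradiometer pairs, as described above.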

2.7. Multivariate Pattern Analysis

Multivariate pattern analysis (MVPA; Haxby et al., 2014) exploits machine learning algorithms to solve a supervised classification problem. A subset of the data is used to find a linear boundary between the multivariate neural activation patterns that best distinguishes two conditions of interest (training), and its reliability is tested on previously unseen data (testing). An accuracy value is returned indicating how well the learned features predict the class of unlabelled data. An above-chance accuracy thus indicates that some features are shared between data partitions and are informative for the class distinction.

We used MVPA to investigate the extent to which the informational content represented in the MEG sensor array is shared across the picture and word modalities. Specifically, we trained a Linear Discriminant Analysis (LDA) classifier to distinguish between object categories. This was reduced to a two-class classification problem by repeating the analysis for all possible pairs of categories. Importantly, the classifier was trained on one modality and tested on the other, and vice versa. To avoid overfitting, we implemented a leave-one-chunk-out cross-validation approach: we partitioned the data into 8 chunks and repeated the analysis until every chunk had been used as both training and testing set.

To take into account possible delays between the processing time courses of the two modalities (Leonardelli et al., 2019), we used the temporal generalization approach (King & Dehaene, 2014). The training and testing procedure was thus repeated for all possible combinations of time points. The final result is a matrix of decoding accuracies indicating the time points at which the informational content that allows the distinction between object categories is shared across modalities.
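The cross-modal temporal-generalisation scheme can be sketched with a small two-class LDA written in NumPy, using a fixed shrinkage term (an assumption; the study's LDA settings are not reported). The classifier is trained at each time point of one modality and tested at every time point of the other; the hypothetical `bidirectional` helper averages both directions on a common (picture time × word time) grid. In the study this would be repeated for each of the 10 category pairs; the chunk-wise cross-validation is omitted from this sketch.

```python
import numpy as np

def fit_lda(x, y, shrinkage=0.1):
    """Two-class Fisher LDA on x (n_samples, n_features) with labels y in {0, 1}."""
    m0, m1 = x[y == 0].mean(axis=0), x[y == 1].mean(axis=0)
    centred = np.vstack([x[y == 0] - m0, x[y == 1] - m1])
    cov = centred.T @ centred / (len(x) - 2)
    # Shrink towards a scaled identity to keep the covariance invertible (assumed setting).
    nu = np.trace(cov) / cov.shape[0]
    cov = (1 - shrinkage) * cov + shrinkage * nu * np.eye(cov.shape[0])
    w = np.linalg.solve(cov, m1 - m0)
    b = -w @ (m0 + m1) / 2.0
    return w, b

def predict(w, b, x):
    return (x @ w + b > 0).astype(int)

def cross_modal_generalization(train, y_train, test, y_test):
    """Train at each time of `train`, test at every time of `test`.
    train/test: (n_trials, n_features, n_times). Returns acc[t_train, t_test]."""
    acc = np.empty((train.shape[2], test.shape[2]))
    for i in range(train.shape[2]):
        w, b = fit_lda(train[:, :, i], y_train)
        for j in range(test.shape[2]):
            acc[i, j] = np.mean(predict(w, b, test[:, :, j]) == y_test)
    return acc

def bidirectional(pic, y_pic, word, y_word):
    """Average picture->word and word->picture accuracies on a (picture time, word time) grid."""
    pw = cross_modal_generalization(pic, y_pic, word, y_word)
    wp = cross_modal_generalization(word, y_word, pic, y_pic)
    return (pw + wp.T) / 2.0
```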

2.8. Statistical analysis

RSA results were Fisher-transformed prior to statistical analysis to obtain normally distributed values. Significance was assessed using a one-tailed cluster-based permutation test. Following the approach described by Stelzer et al. (2013), 50 null RSA time courses were created for each subject by randomly shuffling condition labels and repeating the RSA with the resulting model. These random RSA time courses were combined across subjects to create 1,000,000 group-level null distributions, against which the data were statistically compared with a t-test at each time point. This approach allowed the construction of a null distribution that accurately reflected temporal correlation in the error term as well as other potential characteristics of the noise distribution. Correction for multiple comparisons was performed with a cluster-based approach. Consecutive significant (p<0.05) time points in the interval from 100 to 700 msec were considered to form clusters, whose probability was given by their position within the null distribution. A cluster-based permutation test (Maris & Oostenveld, 2007; 10000 permutations), as implemented in FieldTrip, was used to identify spatial clusters from the searchlight analysis. A cluster-based permutation approach (10000 permutations) was also used to assess the statistical significance of the time-generalised cross-modal MVPA in the time window between 100 and 600 msec.
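A group-level null in the style of Stelzer et al. (2013) can be sketched as follows: for each group null sample, one label-shuffled time course is drawn per subject and the drawn courses are averaged. For brevity, this sketch thresholds the group mean at the per-time-point (1 - alpha) quantile of the null instead of running a t-test, uses cluster size (number of consecutive supra-threshold samples) as the cluster statistic, and defaults to far fewer than the 1,000,000 group nulls used in the study. Data are assumed to be already restricted to the 100-700 msec window.

```python
import numpy as np

def clusters(mask):
    """(start, stop) index pairs of consecutive True values in a 1-D boolean array."""
    runs, start = [], None
    for i, m in enumerate(mask):
        if m and start is None:
            start = i
        elif not m and start is not None:
            runs.append((start, i))
            start = None
    if start is not None:
        runs.append((start, len(mask)))
    return runs

def stelzer_cluster_test(observed, null, n_group_null=1000, alpha=0.05, seed=0):
    """observed: (n_subjects, n_times) Fisher-z RSA time courses.
    null: (n_subjects, n_perm, n_times) per-subject label-shuffled time courses.
    Returns a list of (start, stop, p) for the observed clusters."""
    rng = np.random.default_rng(seed)
    n_sub, n_perm, _ = null.shape
    # Group-level null: draw one permutation per subject and average across subjects.
    picks = rng.integers(n_perm, size=(n_group_null, n_sub))
    group_null = null[np.arange(n_sub), picks].mean(axis=1)  # (n_group_null, n_times)
    # One-tailed, per-time-point threshold taken from the null distribution.
    thresh = np.quantile(group_null, 1 - alpha, axis=0)

    def max_cluster_size(series):
        return max((b - a for a, b in clusters(series > thresh)), default=0)

    null_max = np.array([max_cluster_size(s) for s in group_null])
    results = []
    for a, b in clusters(observed.mean(axis=0) > thresh):
        # Cluster p-value: position of the observed size in the null max-size distribution.
        p = (np.sum(null_max >= b - a) + 1) / (n_group_null + 1)
        results.append((a, b, float(p)))
    return results
```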

2.9. Data and Code Availability Statement

Data will be made available on request.

3. Results

3.1. Behavioural

Due to equipment malfunction, behavioural data were not available for four subjects. Mean typicality rating did not differ as a function of modality (F(1,20)=0.17, p=.68) or category (F(1.9,37.5)=2.35, p=.113, Greenhouse-Geisser corrected), although there was a moderate interaction between these two factors (F(4,80)=3.49, p=.011). Post-hoc pairwise comparisons between modalities did not reveal the origin of this interaction, even at uncorrected thresholds. Due to equipment asynchrony, absolute RTs were not available. However, relative (mean-centred) RTs were preserved, permitting full statistical analysis. There was no overall effect of modality (F(1,20)=0.09, p=.77), but there was a strong effect of category (F(4,80)=5.25, p=.001) and a modality by category interaction (F(4,80)=6.43, p<.0001). Critically, RT differences did not systematically correlate with the conceptual RDM across subjects for either words (t=.91) or pictures (t=.36) and will therefore not influence the RSA.

3.2. Neuroconceptual Representational Similarity Analysis

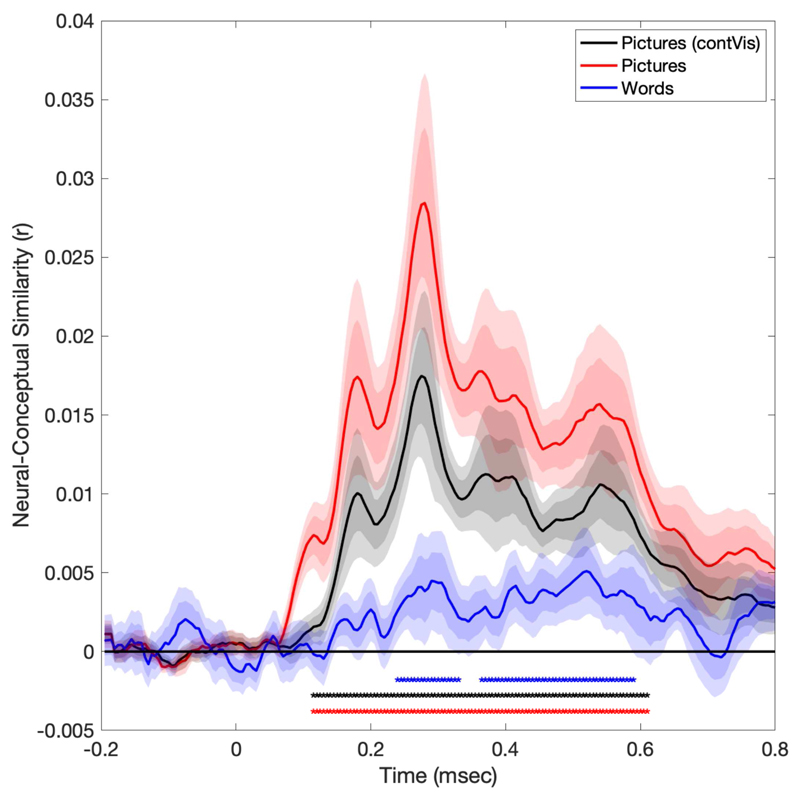

We calculated dissimilarity matrices at each time point of the MEG recordings, in which subjects were presented with either pictures or words depicting objects from five different semantic categories (fruits, tools, clothes, mammals, birds). We correlated these RDMs with a semantic model obtained from behavioural ratings collected with the multi-arrangement method in a separate experiment. Additionally, for the picture condition, we repeated the same analysis controlling for the visual features of each stimulus by regressing out the visual dissimilarity matrices calculated from the activations of four layers of the AlexNet CNN. Information content was pronounced for picture stimuli both before and after controlling for visual similarity via the AlexNet model (p < .00001, corrected), and the time courses of information content were highly similar (Figure 2). Picture-induced information content was characterised by four peaks centred at 180, 280, 365 and 540 msec. The sole exception to this pattern was an early peak at 105 msec, which was absent after controlling for visual similarity. For word stimuli, two temporal clusters survived correction for multiple comparisons, one from 230 to 335 msec (p = .0002, corrected) and a second from 345 to 665 msec (p < .00001, corrected).

Figure 2.

Time-course of group-level correlations between conceptual similarity ratings and three RDMs derived from MEG data: pictorial presentation (red), pictorial presentation after partialling out perceptual features through AlexNet (contVis, black) and word presentation (blue). Asterisks indicate clusters that survived multiple comparison correction within the time-window of interest (100 msec to 600 msec). All time points are significant for the two image-based analyses, and two temporal clusters are significant for word stimuli, 230 to 335 msec (p=.0002) and 345 to 665 msec (p<.00001). Darker shaded areas show the standard error of the mean, lighter shaded areas the 95% confidence interval.

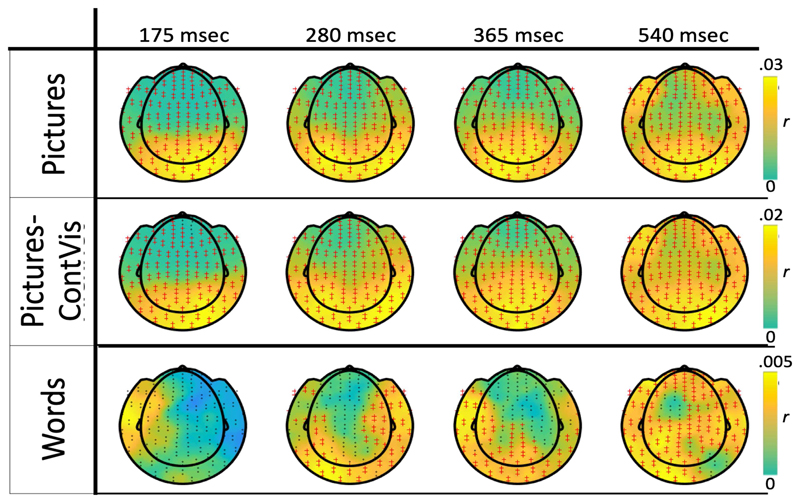

3.2.1. Searchlight analysis

To identify spatially localised sources of information content contributing to the time-series effects, we performed searchlight analyses on local subsets of 24 planar gradiometers within 20 msec time-windows centred on the peaks observed in the picture condition (see Methods). Picture-induced information content was detectable at each sensor location. Controlling for visual features did not alter this topographic distribution of information content. Within the first three time-windows, sensors over occipital sites contained the strongest representation of semantic content. In the last time-window, information content was spread more evenly across the sensor space, with frontal recording sites contributing comparable information. Formal statistical inference is not reported, as circularity between the definition of the time-windows of interest and the topographic data renders it invalid. However, the extent and strength of the effect are such that significance would persist even after correction for the entire time series. In the word modality, information content was more focally localised and survived correction for multiple comparisons across the four time-windows (critical alpha=.0125) in three of them. The first and second significant clusters of sensors, at 280 (p=.005) and 365 msec (p=.006), are present over occipital and temporal sites, with maximal information content over left occipitotemporal sites in the earlier time-window and over more anterior left temporal sites in the later window. As observed for picture stimuli, the cluster at 540 msec additionally encompasses more frontal recording sites, with semantic information present broadly across the brain (p<.001). A left-lateralised cluster of significant sensors evident in the first time-window for words (lower left topoplot) did not survive correction for multiple comparisons across the four time-windows (p<.03).

3.3. Cross-modal MVPA

To detect common conceptual representations produced by word and picture presentation, we performed cross-modal decoding analyses, training an LDA classifier on pictures and testing on words, and vice versa (cf. Fairhall & Caramazza, 2013). As access to conceptual representations may be faster during image presentation than during word reading (Leonardelli et al., 2019), we performed time-generalised MVPA (e.g. King & Dehaene, 2014). Specifically, the analysis aimed to decode object category, using a cross-validation strategy in which we trained the classifier in one modality and tested it in the other. This analysis failed to produce any significant effects (p=0.27).

3.4. Control Analyses

To determine the influence of conceptual similarity extending beyond category, the RSA between MEG data and subjective similarity was rerun partialling out the effect of category per se (Figure S1). The results show that neural representations of pictures capture conceptual relationships between items beyond object category across all time points (100-600 msec). In contrast, for word stimuli, effects were only observed in two later time windows, from 405-460 msec and 485-540 msec. Thus, it is uncertain whether word effects are driven by category alone or by a combination of category and non-categorical semantic similarity.

Given the relatively low dimensionality underlying our conceptual model compared to richer word corpora, we repeated the same RSA using a semantic model obtained from a word2vec algorithm trained on Italian Wikipedia (Berardi, 2015). Comparable timings of conceptual access were observed (Figure S2).
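For the word2vec control, a semantic RDM can be derived from the word vectors. The distance measure is not reported; cosine distance, a common choice for word embeddings, is assumed in this sketch, and loading the pretrained Italian vectors is not shown.

```python
import numpy as np

def cosine_rdm(vectors):
    """1 - cosine similarity between word vectors (one row per word)."""
    unit = vectors / np.linalg.norm(vectors, axis=1, keepdims=True)
    return 1.0 - unit @ unit.T
```

The resulting item-by-item RDM can replace the behavioural conceptual RDM in the same time-resolved RSA.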

To evaluate the effectiveness of our visual model, we repeated the searchlight analysis on the first information content peak (105 msec). While in the picture modality the majority of sensors already carried information at this latency, controlling for visual features using AlexNet effectively reduced the distribution of localised information content to a topography similar to that observed in the word modality (Figure S3).

To further investigate the contribution of the different levels of visual features to the neural response, we performed an RSA with each hierarchical layer separately as the model RDM, while removing the contribution of the other layers through a partial correlation approach. However, there was a high degree of correlation between RDMs based on the individual layers of AlexNet (r-values: 0.18 - 0.86), and the contribution of individual layers across time points was unclear. Future work on this question should select stimulus sets that have clear and dissociable representational spaces across layers.

Given the particular nature of fc8 activations (class scores), and to safeguard against bias related to the presented object not being one of the fc8 labels, we performed the same partial correlation analysis using the earlier fully connected layer, fc7. While fc8 explained moderately more variance than fc7, the time course of neural conceptual representation was identical when controlling for visual effects via either fc7 or fc8, indicating that the categorical nature of fc8 does not affect our results.

4. Discussion

To disentangle perceptual and conceptual contributions to object processing, we conducted an MEG study in which stimuli representing exemplars from five semantic categories were presented to participants in two different input modalities, pictures and written words. We used RSA to compare neural representational similarity across time with a model of conceptual similarity constructed from behavioural judgements. Additionally, we used a neural network for visual recognition to control for the influence of perceptual processing in the picture modality. The richness of information content was not uniform across time and followed a similar time course for pictures and for pictures controlled for visual similarity. While an initial peak in information content around 105 msec was present only for pictures not controlled for visual features, subsequent peaks at 180, 280, 365 and 540 msec were observed both for pictures and for pictures controlled for visual features. Words elicited semantic content across two significant clusters, the first ranging from 230 to 335 msec, the second from 345 to 665 msec.

The first observed peak (105 msec) is present only for picture stimuli. Removing the influence of visual features, either via the AlexNet model in the picture modality or by using words, attenuates this early peak. Such conceptual-like representations therefore appear to be attributable to the perception of visual features that covary with semantic category. As noted previously, representational spaces present in MEG at these early latencies align most closely with fMRI-indexed V1 representations (Cichy et al., 2014), further suggesting that cortical responses at these latencies are unrelated to conceptual processing.

In contrast, the second peak in conceptual-neural similarity at 180 msec was not fully explained by the AlexNet model. This result is in line with previous reports (Bankson et al., 2018; Clarke et al., 2015). However, the reliance on pictorial stimuli, and on the ability of the visual model to capture all features relevant to human perception, renders the meaning of this result ambiguous. While there was limited evidence for word-elicited conceptual representation at this time point in both the time-series and topographic analyses, this effect did not survive correction for multiple comparisons. The timing of this peak coincides with the word-selective N170 ERP, a left-lateralised component elicited more by letter strings than by non-letter stimuli, which is thought to reflect the first stage of orthographic processing (Bentin et al., 1999; Maurer et al., 2005; Tarkiainen et al., 1999). There is some evidence that more complex processing, such as orthographic-lexical interactions (Coch & Mitra, 2010) or lexico-semantic interactions (Hauk et al., 2006; Kim & Lai, 2012), may occur at this latency. Moreover, there is some evidence that intracranial recording sites may be able to distinguish words denoting animals and man-made objects in similarly early time windows (Chan et al., 2011).

The peak at 280 msec marks the first robust representation of semantic content for word stimuli and also coincides with the maximal representation of semantic content in the image modality. Word-induced evoked potentials within this period differentiate words and pseudowords from unpronounceable letter strings, are sensitive to word repetition, and are thought to reflect further pre-lexical orthographic processing or construction of the word form (Chauncey et al., 2008; Cohen et al., 2000; Dehaene, 1995; Dufau et al., 2008; Simon et al., 2004). The present finding demonstrates the scope of multivariate pattern analysis, in combination with magneto- and electrophysiological measures, to uncover neural processes normally inaccessible to univariate methods. The criterion that neural representational spaces conform to semantic representational spaces provides a strong and valid test that semantic representation underlies this neural response. This result substantially advances the estimated timeline not only for semantic access but also for the prerequisite orthographic and lexical processing.

Our experimental paradigm might have contributed to the rapidity of this semantic access. Electrophysiological studies of word processing generally employ the random presentation of unrelated words. In the present experiment, stimuli were presented in blocks of related content, allowing context to play a role in word processing. Context is an important aspect of skilled reading and can facilitate lexico-semantic processing, enabling easier and earlier access (Kutas & Federmeier, 2011). Such contextual facilitation, common during reading, may be lost in paradigms where individual, unrelated words are presented.

A stable, significant representation of conceptual content in all conditions is observed later in the time course, with an initial peak in the image modality at 365 msec. This coincides with the N400 potential, a negative deflection peaking around 400 msec in response to semantic violations (Kutas & Federmeier, 2011). The N400 is one of the most well-characterised indices of semantic processing and has been observed following the presentation of words (Bentin et al., 1985), faces (Barrett et al., 1988) and objects (Barrett & Rugg, 1990). Its amplitude is strongly influenced by prior processing, such as the predictability of a stimulus (Lau et al., 2008), and representations at this time may reflect the integration of semantic information with the working context (Hagoort, 2013). Analysis of the topographic distribution of localised information content shows that, for word stimuli, the relatively constrained occipitotemporal location of information extends to a more distributed representation at the later 540 msec peak, encompassing occipital, temporal and frontal sites, a pattern also evident for image stimuli. Both this distributed representation of semantic knowledge and the finding that this potential follows an earlier representation of semantic content further support the view that the semantic-violation-sensitive N400 reflects the integration of incoming semantic information within its working context, rather than indexing the first representation of semantic content.

While there is some consistency in the time course of semantic representation for pictures and words, the topography of information content differed. In the first three time windows, the searchlight analysis showed that picture-induced representations were detectable at all sensor locations but were strongest over occipital sites. In contrast, word-induced representations were localized to sensors overlying occipitotemporal areas. This suggests that different cortical generators are involved in representing conceptual-like information in the word and picture modalities. Only in the latest time window (530 msec) did word- and picture-induced representations share a similar topographic distribution. fMRI studies have demonstrated that large sections of the ventral visual cortex are highly sensitive to object categories and to the semantic distance between objects (Connolly et al., 2012; Fairhall & Caramazza, 2013). It is probable that representations of the presented images in visual cortex dominate the information measured at the sensor level. This may eclipse the more subtle patterns of conceptual representation, evident in the word condition, that underlie neural semantic representation in the 280 and 365 msec time windows. fMRI studies have implicated the posterior inferior/middle temporal gyrus, precuneus and perirhinal cortex in non-perceptual conceptual representation (Bruffaerts et al., 2013; Fairhall & Caramazza, 2013; Liuzzi et al., 2017; Simanova et al., 2014). Relating these earlier content representations, and those occurring at 530 msec, to components of the fMRI-indexed semantic system remains an important goal for future research.

As in previous studies, we used a visual model to account for the perceptual processing of pictures. The AlexNet model accounted for the image-based response during the earliest time window (~105 msec). Careful model selection is crucial for capturing the processing of the human visual system. Although no model is perfect, AlexNet captured the early visual response here, whereas earlier models such as HMax may not be able to disambiguate such responses from conceptual-like representations (Clarke et al., 2015). The most parsimonious explanation for the persistence of representations after this time point in the present study is a shortfall in the visual model, which leaves conceptual-like neural representations unexplained because of the close relationship between semantics and perceptual features. However, it is also possible that conceptual representations are activated differently when cued by a picture or a word. Viewing a hammer may cue conceptual associations relating to form, affordance or motion to a greater extent than reading the word. Thus, while we cannot be certain that picture-induced representations, once controlled for visual similarity, reflect only conceptual processing, neither can we be certain that word-induced representations reflect all the conceptual processing that may occur. In fact, the higher conceptual-neural similarity observed for pictures might suggest that some differences between modalities persist even at higher processing stages. In addition, in this study we found no evidence for shared crossmodal representations for word- and picture-induced conceptual access, in contradistinction to effects seen under comparable conditions in fMRI (Fairhall & Caramazza, 2013).
This may be due to the poorer spatial resolution of MEG: strong effects associated with visual perception and localized in visual cortices may overwhelm weaker influences of conceptual access at all recorded sensors (unlike fMRI, which can spatially segregate ‘modal’ and ‘amodal’ cortical sources). This may be further exacerbated by differences in the time course of conceptual access, which is more rapid during picture presentation because it does not require the linguistic processes involved in word reading (Leonardelli et al., 2019). Future MEG studies should take into consideration both the spatial overlap and the temporal discrepancy of the effects of interest.

Moreover, the particular influence of the typicality task on the nature of the activated conceptual representation remains uncertain. The task was selected because it encourages access to conceptual representations that are not constrained to specific object features (e.g. size, function) in an intuitive and naturalistic manner. Having participants rate how similar the specific object is to the category in general may have accentuated access to divergent, non-stereotypical features (see also Fairhall, 2020) or, conversely, accentuated those features typical of the category. While task demands may strongly influence the importance of feature co-occurrence and distinctiveness in the instantiated conceptual representation (Taylor et al., 2011), their specific influence in the present study remains uncertain.

In this work, using the stringent criterion that neural and conceptual spaces must align, we observed robust representation of semantic content by 230-240 msec for words. Processing in this time period is usually attributed to orthographic features, and the presence of semantic content at this time notably advances estimates of the time course of semantic processing and its orthographic and lexical antecedents. We observe different topographies for information extracted from words and pictures, with image-induced information content represented most strongly at occipital sensors, both before and after controlling for visual similarity. The spatial locations of word- and picture-induced conceptual representations align only at later time periods (~530 msec), when conceptual information is distributed broadly across recording sites. While words and pictures may cue different types of conceptual access, the differential pattern of information elicited by words and pictures after controlling for visual similarity argues for caution in interpreting picture-induced representations as purely conceptual.

Supplementary Material

Figure 3.

Topographies of similarity between conceptual and neural representational spaces. To assess the localisation of information contributing to conceptual content, we performed a searchlight analysis on the gradiometers (radius: 12 planar pairs, i.e. 24 sensors) at the four peaks of information content identified in the time-series analysis for the picture modality. Sensors forming significant clusters, corrected for comparisons across sensors and time windows, are indicated in red.
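The searchlight in Figure 3 can be sketched as follows. This reads "radius 12 planar pairs" as the 12 nearest gradiometer-pair locations, centre included, which is an assumption, and it further assumes 2-D pair positions and a channel order in which the two planar gradiometers of each location are adjacent. The correlation-distance RDM and Spearman comparison are likewise assumed conventions, and all function names are hypothetical.

```python
import numpy as np
from scipy.spatial.distance import pdist
from scipy.stats import spearmanr


def searchlight_neighbourhoods(pair_positions, n_pairs=12):
    """Indices of the n_pairs nearest gradiometer pairs (centre included) for every pair."""
    diff = pair_positions[:, None, :] - pair_positions[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)
    # the centre pair is at distance 0, so it sorts first in its own row
    return np.argsort(dist, axis=1, kind="stable")[:, :n_pairs]


def pair_to_channels(pairs):
    """Expand pair indices to channel indices, ASSUMING order pair0a, pair0b, pair1a, ..."""
    return np.stack([2 * pairs, 2 * pairs + 1], axis=-1).reshape(*pairs.shape[:-1], -1)


def searchlight_rsa(patterns_t, conceptual_rdm, pair_positions, n_pairs=12):
    """Conceptual-neural Spearman rho for each searchlight centre at a single time point.

    patterns_t: array (n_conditions, n_channels), with n_channels = 2 * n_pair_locations.
    """
    iu = np.triu_indices(conceptual_rdm.shape[0], k=1)
    model = conceptual_rdm[iu]
    channels = pair_to_channels(searchlight_neighbourhoods(pair_positions, n_pairs))
    rho = np.empty(len(channels))
    for i, ch in enumerate(channels):
        # 12 pairs -> 24 gradiometer channels form the pattern for this centre
        neural = pdist(patterns_t[:, ch], metric="correlation")
        rho[i], _ = spearmanr(neural, model)
    return rho
```

Each centre yields one rho value, so running the function at each of the four peak latencies produces one topography per peak, which can then be submitted to a cluster-level correction across sensors and time windows.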

Acknowledgements

The project was funded by the European Research Council (ERC) grant CRASK - Cortical Representation of Abstract Semantic Knowledge, awarded to Scott Fairhall under the European Union's Horizon 2020 research and innovation program (grant agreement no. 640594).

Footnotes

Giuliano Giari: Formal Analysis, Writing – Original Draft. Elisa Leonardelli: Formal Analysis, Writing – Review & Editing. Yuan Tao: Formal Analysis. Mayara Machado: Investigation. Scott L. Fairhall: Conceptualization, Formal Analysis, Writing – Original Draft, Supervision, Funding Acquisition.

References

- Bankson BB, Hebart MN, Groen IIA, Baker CI. The temporal evolution of conceptual object representations revealed through models of behavior, semantics and deep neural networks. NeuroImage. 2018;178:172–182. doi: 10.1016/j.neuroimage.2018.05.037.

- Barrett SE, Rugg MD, Perrett DI. Event-related potentials and the matching of familiar and unfamiliar faces. Neuropsychologia. 1988;26(1):105–117. doi: 10.1016/0028-3932(88)90034-6.

- Barrett SE, Rugg MD. Event-related potentials and the semantic matching of pictures. Brain and Cognition. 1990;14(2):201–212. doi: 10.1016/0278-2626(90)90029.

- Bentin S, Mouchetant-Rostaing Y, Giard MH, Echallier JF, Pernier J. ERP manifestations of processing printed words at different psycholinguistic levels: Time course and scalp distribution. Journal of Cognitive Neuroscience. 1999;11(3):235–260. doi: 10.1162/089892999563373.

- Bentin S, McCarthy G, Wood CC. Event-related potentials, lexical decision and semantic priming. Electroencephalography and Clinical Neurophysiology. 1985;60(4):343–355. doi: 10.1016/0013-4694(85)90008-2.

- Berardi G, Esuli A, Marcheggiani D. Word Embeddings Go to Italy: A Comparison of Models and Training Datasets. IIR. 2015.

- Bruffaerts R, Dupont P, Peeters R, De Deyne S, Storms G, Vandenberghe R. Similarity of fMRI Activity Patterns in Left Perirhinal Cortex Reflects Semantic Similarity between Words. Journal of Neuroscience. 2013;33(47):18597–18607. doi: 10.1523/JNEUROSCI.1548-13.2013.

- Chan AM, Baker JM, Eskandar E, Schomer D, Ulbert I, Marinkovic K, Halgren E. First-Pass Selectivity for Semantic Categories in Human Anteroventral Temporal Lobe. Journal of Neuroscience. 2011;31(49):18119–18129. doi: 10.1523/JNEUROSCI.3122-11.2011.

- Chauncey K, Holcomb PJ, Grainger J. Effects of stimulus font and size on masked repetition priming: An event-related potentials (ERP) investigation. Language and Cognitive Processes. 2008. doi: 10.1080/01690960701579839.

- Cichy RM, Khosla A, Pantazis D, Torralba A, Oliva A. Comparison of deep neural networks to spatio-temporal cortical dynamics of human visual object recognition reveals hierarchical correspondence. Scientific Reports. 2016 Jun;6:1–13. doi: 10.1038/srep27755.

- Cichy RM, Pantazis D, Oliva A. Resolving human object recognition in space and time. Nature Neuroscience. 2014;17(3):455–462. doi: 10.1038/nn.3635.

- Clarke A, Devereux BJ, Randall B, Tyler LK. Predicting the time course of individual objects with MEG. Cerebral Cortex. 2015;25(10):3602–3612. doi: 10.1093/cercor/bhu203.

- Coch D, Mitra P. Word and pseudoword superiority effects reflected in the ERP waveform. Brain Research. 2010;1329:159–174. doi: 10.1016/j.brainres.2010.02.084.

- Cohen L, Dehaene S, Naccache L, Lehericy S, Dehaene-Lambertz G, Henaff MA, Michel F. The visual word form area: spatial and temporal characterization of an initial stage of reading in normal subjects and posterior split-brain patients. Brain. 2000;123(Pt 2):291–307. doi: 10.1093/brain/123.2.291.

- Connolly AC, Guntupalli JS, Gors J, Hanke M, Halchenko YO, Wu Y-C, Haxby JV. The Representation of Biological Classes in the Human Brain. Journal of Neuroscience. 2012;32(8):2608–2618. doi: 10.1523/JNEUROSCI.5547-11.2012.

- Dehaene S. Electrophysiological evidence for category-specific word processing in the normal human brain. NeuroReport. 1995. doi: 10.1097/00001756-199511000-00014. Retrieved from http://www.unicog.org/publications/Dehaene_CatWords_NeuroRep1995.pdf.

- Diedrichsen J, Kriegeskorte N. Representational models: A common framework for understanding encoding, pattern-component, and representational-similarity analysis. PLoS Computational Biology. 2017;13. doi: 10.1371/journal.pcbi.1005508.

- Downing PE, Jiang Y, Shuman M, Kanwisher N. A Cortical Area Selective for Visual Processing of the Human Body. Science. 2001;293(5539):2470–2473. doi: 10.1126/science.1063414.

- Dufau S, Grainger J, Holcomb PJ. An ERP investigation of location invariance in masked repetition priming. Cognitive, Affective and Behavioral Neuroscience. 2008;8(2):222–228. doi: 10.3758/CABN.8.2.222.

- Epstein R, Kanwisher N. A cortical representation of the local visual environment. Nature. 1998;392(6676):598–601. doi: 10.1038/33402.

- Fairhall SL, Caramazza A. Brain Regions That Represent Amodal Conceptual Knowledge. Journal of Neuroscience. 2013;33(25):10552–10558. doi: 10.1523/JNEUROSCI.0051-13.2013.

- Fairhall SL. Cross Recruitment of Domain-Selective Cortical Representations Enables Flexible Semantic Knowledge. The Journal of Neuroscience. 2020 Apr 8;40(15):3096–3103. doi: 10.1523/JNEUROSCI.2224-19.2020.

- Grootswagers T, Wardle SG, Carlson TA. Decoding Dynamic Brain Patterns from Evoked Responses: A Tutorial on Multivariate Pattern Analysis Applied to Time Series Neuroimaging Data. Journal of Cognitive Neuroscience. 2017;29(4):667–697. doi: 10.1162/jocn.

- Güçlü U, van Gerven MAJ. Deep Neural Networks Reveal a Gradient in the Complexity of Neural Representations across the Brain’s Ventral Visual Pathway. The Journal of Neuroscience. 2015;35(27):10005–10014. doi: 10.1523/JNEUROSCI.5023-14.2015.

- Hagoort P. The memory, unification, and control (MUC) model of language. Automaticity and Control in Language Processing. 2013 Jul;4:243–270. doi: 10.4324/9780203968512.

- Hauk O, Davis MH, Ford M, Pulvermüller F, Marslen-Wilson WD. The time course of visual word recognition as revealed by linear regression analysis of ERP data. NeuroImage. 2006;30(4):1383–1400. doi: 10.1016/j.neuroimage.2005.11.048.

- Haxby JV, Connolly AC, Guntupalli JS. Decoding Neural Representational Spaces Using Multivariate Pattern Analysis. Annual Review of Neuroscience. 2014 Jul 8;37(1):435–456. doi: 10.1146/annurev-neuro-062012-170325.

- Kaiser D, Azzalini DC, Peelen MV. Shape-independent object category responses revealed by MEG and fMRI decoding. Journal of Neurophysiology. 2016. doi: 10.1152/jn.01074.2015.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. The Journal of Neuroscience. 1997;17(11):4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Kim A, Lai V. Rapid Interactions between Lexical Semantic and Word Form Analysis during Word Recognition in Context: Evidence from ERPs. Journal of Cognitive Neuroscience. 2012:1104–1112. doi: 10.1162/jocn_a_00148.

- King J-R, Dehaene S. Characterizing the Dynamics of Mental Representations: The Temporal Generalization Method. Trends in Cognitive Sciences. 2014 Apr;18(4):203–210. doi: 10.1016/j.tics.2014.01.002.

- Kriegeskorte N, Mur M. Inverse MDS: Inferring dissimilarity structure from multiple item arrangements. Frontiers in Psychology. 2012 Jul;3:1–13. doi: 10.3389/fpsyg.2012.00245.

- Kriegeskorte N, Mur M, Bandettini PA. Representational similarity analysis – connecting the branches of systems neuroscience. Frontiers in Systems Neuroscience. 2008 Nov;2:1–28. doi: 10.3389/neuro.06.004.2008.

- Krizhevsky A, Sutskever I, Hinton GE. ImageNet Classification with Deep Convolutional Neural Networks. Advances in Neural Information Processing Systems. 2012:1–9.

- Kutas M, Federmeier KD. Thirty Years and Counting: Finding Meaning in the N400 Component of the Event-Related Brain Potential (ERP). Annual Review of Psychology. 2011;62:621–647. doi: 10.1146/annurev.psych.093008.131123.

- Lau EF, Phillips C, Poeppel D. A cortical network for semantics: (De)constructing the N400. Nature Reviews Neuroscience. 2008;9(12):920–933. doi: 10.1038/nrn2532.

- Liuzzi AG, Bruffaerts R, Peeters R, Adamczuk K, Keuleers E, De Deyne S, et al. Cross-modal representation of spoken and written word meaning in left pars triangularis. NeuroImage. 2017 Feb;150:292–307. doi: 10.1016/j.neuroimage.2017.02.032.

- Maris E, Oostenveld R. Nonparametric statistical testing of EEG- and MEG-data. Journal of Neuroscience Methods. 2007;164(1):177–190. doi: 10.1016/j.jneumeth.2007.03.024.

- Maurer U, Brandeis D, McCandliss BD. Visual specialization for reading in English revealed by the topography of the N170 ERP response. Behavioral and Brain Functions. 2005;1:13. doi: 10.1186/1744-9081-1-13.

- Oostenveld R, Fries P, Maris E, Schoffelen JM. FieldTrip: Open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Computational Intelligence and Neuroscience. 2011;2011. doi: 10.1155/2011/156869.

- Serre T, Oliva A, Poggio T. A feedforward architecture accounts for rapid categorization. Proceedings of the National Academy of Sciences. 2007. doi: 10.1073/pnas.0700622104.

- Simanova I, Hagoort P, Oostenveld R, Van Gerven MAJ. Modality-independent decoding of semantic information from the human brain. Cerebral Cortex. 2014;24(2):426–434. doi: 10.1093/cercor/bhs324.

- Simon G, Bernard C, Largy P, Lalonde R. Chronometry of visual word recognition during passive and lexical decision tasks: An ERP investigation. International Journal of Neuroscience. 2004;114:1401–1432. doi: 10.1080/00207450490476057.

- Stelzer J, Chen Y, Turner R. Statistical inference and multiple testing correction in classification-based multi-voxel pattern analysis (MVPA): Random permutations and cluster size control. NeuroImage. 2013;65:69–82. doi: 10.1016/j.neuroimage.2012.09.063.

- Tarkiainen A, Helenius P, Hansen PC, Cornelissen PL, Salmelin R. Dynamics of letter string perception in the human occipitotemporal cortex. Brain. 1999;122(11):2119–2131. doi: 10.1093/brain/122.11.2119.

- Taulu S, Simola J. Spatiotemporal signal space separation method for rejecting nearby interference in MEG measurements. Physics in Medicine and Biology. 2006;51(7):1759–1768. doi: 10.1088/0031-9155/51/7/008.

- Taylor KI, Devereux BJ, Tyler LK. Conceptual structure: Towards an integrated neurocognitive account. Language and Cognitive Processes. 2011;26(9):1368–1401. doi: 10.1080/01690965.2011.568227.

- van Driel J, Olivers CNL, Fahrenfort JJ. High-Pass Filtering Artifacts in Multivariate Classification of Neural Time Series Data. bioRxiv. 2019 Jan 26. doi: 10.1101/530220.

Data Availability Statement

Data will be made available on request.