Highlights

• A new theoretical framework is developed to explore microblog information credibility by incorporating message format.
• Argument quality has a decreasing incremental effect on microblog information credibility, suggesting that argument quality is a hygiene factor.
• Source credibility was found to have a positive and linear effect on microblog information credibility, consistent with the role of a bivalent factor.
• Multimedia diagnosticity was found to have an increasing incremental effect on microblog information credibility.

Keywords: Microblog information credibility, Message format, Multimedia, Two-factor theory, Nonlinear relationship

Abstract

The spread of misinformation and disinformation is a serious problem on microblogs, making user evaluation of information credibility a critical issue. This study integrates two message format factors related to multimedia usage on microblogs (vividness and multimedia diagnosticity) with two well-discussed factors for information credibility (i.e., argument quality and source credibility) into a holistic framework to investigate user evaluation of microblog information credibility. Further, the study draws on two-factor theory and its variant, the three-factor lens, to explain the nonlinear effects of the above factors on microblog information credibility. An online survey was conducted to test the proposed framework by collecting data from microblog users. The research findings reveal that for the effects on microblog information credibility: (1) argument quality (a hygiene factor) exerts a decreasing incremental effect; (2) source credibility (a bivalent factor) exerts only a linear effect; and (3) multimedia diagnosticity (a motivating factor) exerts an increasing incremental effect. This study adds to current knowledge about information credibility by proposing a framework for understanding the key predictors of microblog information credibility and by examining the nonlinear effects of these predictors.

1. Introduction

Microblogs, characterized by brevity, real-time updates, and broadcasting, have become a major social medium through which individuals acquire, search for, and exchange information (Java, Song, Finin, & Tseng, 2007; Zhao & Rosson, 2009). Without strict gatekeepers, anyone can post or repost any information, regardless of whether it is accurate. As a result, the spread of misinformation and disinformation is widely acknowledged as a problem in society. The Institute for Public Relations’ (IPR) 2019 IPR Disinformation in Society Report indicated that more than half of the respondents (55%) believe microblogs (i.e., Twitter in the United States) should take responsibility for the problem of misinformation and disinformation in society.1 Thus, judging information credibility has become a common concern for microblog users. This raises an interesting question: how do users evaluate information credibility on microblogs?

Understanding user evaluation of microblog information credibility has important practical implications for society in general and for organizations more specifically. In relation to society, if a piece of misinformation is considered highly credible by many people, it is likely to be distributed widely and may cause social panic (Liu, Jin & Shen, 2019). For example, in 2020, COVID-19 affected the whole world, infecting many people very rapidly. During the outbreak, a piece of misinformation claiming that more than 100,000 people were infected was considered highly credible by many people, and this intensified the social panic. In relation to organizations, many organizations maintain official microblog accounts to connect with customers, and understanding user evaluation of microblog information credibility can assist organizations in managing their reputation (Peetz, De Rijke & Kaptein, 2016).

Existing studies have explored the potential factors affecting online information credibility in general (Cheung, Lee & Rabjohn, 2008, 2009; Fogg, 2003; Jung, Walsh-Childers & Kim, 2016; Qiu, Pang & Lim, 2012; Thon & Jucks, 2017) and information credibility on social media in particular (Aladhadh, Zhang & Sanderson, 2019; Borah & Xiao, 2018; Cooley & Parks-Yancy, 2019; Shariff, Zhang & Sanderson, 2017; Xie, Wang, Chen & Xiang, 2016; Yin, Sun, Fang & Lim, 2018). However, the research into user evaluation of microblog information credibility is still in an early stage, and a holistic perspective is lacking because few unique and contextual features of microblogs have been identified. Therefore, the first research question is formulated as follows: Which factors determine user evaluation of information credibility on microblogs? To address this research question, the study incorporates message format (i.e., multimedia usage in this study) into the previous perspectives on information credibility to propose a three-dimensional framework (i.e., message content, message format, and source), and identifies four key predictors of microblog information credibility: (1) argument quality (related to the message content dimension); (2) source credibility (related to the sender dimension); (3) vividness (related to the message format dimension); (4) multimedia diagnosticity (related to the message format dimension).

Further, previous studies have proposed only linear effects when examining information credibility on social media (Aladhadh et al., 2019; Borah & Xiao, 2018; Cooley & Parks-Yancy, 2019; Shariff et al., 2017; Xie et al., 2016; Yin et al., 2018). When evaluating information credibility on microblogs, the brief text in a posting may limit users’ understanding, making the evaluation of information credibility less straightforward. Meanwhile, credibility evaluation, as a type of psychological response to microblog information, is complex in nature; prior studies suggest that individuals’ psychological responses cannot be adequately described in a purely linear manner (Cheung & Lee, 2009; Ou & Sia, 2009, 2010). Further, investigating the nonlinear relationships in our proposed framework can deepen understanding of how the four factors under investigation affect microblog information credibility. Thus, the second research question is formulated as follows: Do the antecedents of microblog information credibility exert their effects in a nonlinear manner? If so, how? To address this research question, the study employs the two-factor theory and its variant, the three-factor lens, to argue that the factors influencing microblog information credibility play different roles, and thus exert different nonlinear effects.

The contribution of this study is two-fold. First, this work contributes to the literature on information credibility by incorporating message format into the perspectives of prior studies, thus building a holistic framework. Second, this study enriches the current knowledge about information credibility in the microblog context by verifying the effects of key predictors of credibility from a nonlinear perspective, which is an early effort to explore nonlinear relationships in user evaluation of microblog information credibility.

The remainder of this paper is organized as follows. Section 2 presents the key predictors of microblog information credibility and the two-factor theory. Section 3 builds the research model and the hypotheses. Section 4 discusses the methods employed for data collection and analysis and presents the results. Section 5 discusses the study's key findings, limitations, and implications. Section 6 concludes the paper.

2. Literature review

2.1. Studies of information credibility

This study examines the literature on online information credibility generally and microblog information credibility specifically (see Table 1) and finds that these studies have investigated many factors, especially message-related and sender-related factors. However, two research gaps remain to be addressed.

Table 1.

The literature on information credibility.

| Source | Context | Predictors of information credibility |
|---|---|---|
| Online information credibility | | |
| Fogg (2003) | Online information credibility | Message content: involvement, topic, task, and experience; Other factors: assumptions in users’ minds, skill/knowledge, and context |
| Cheung et al. (2009) | Credibility of electronic word-of-mouth | Message content: argument strength, recommendation framing, recommendation sidedness, and confirmation with prior beliefs; Sender-related factor: source credibility; Normative determinants: recommendation consistency, and recommendation rating |
| Cheung et al. (2012) | Online review credibility | Message content: argument quality, review consistency, and review sidedness; Sender-related factor: source credibility |
| Qiu et al. (2012) | Credibility of electronic word-of-mouth | Message content: conflicting aggregated rating |
| Jung et al. (2016) | Online diet-nutrition information credibility web sites | Message content: message accuracy; Sender-related factor: source expertise |
| Thon and Jucks (2017) | Online health information credibility | Sender-related factor: medical vs. nonmedical, authors’ language use (technical vs. every day) |
| Information credibility on social media | | |
| Xie et al. (2016) | Information credibility on microblogs | Sender-related factor: gatekeeping behavior of microblog users |
| Shariff et al. (2017) | Credibility evaluation of news on Twitter | Readers’ features: educational background and geo-location; Message content: topic keyword, alert phrase, and writing style |
| Borah and Xiao (2018) | Health information credibility on Facebook | Message content: message framing (gain vs. loss), and social endorsement (high vs. low); Sender-related factor: expert vs. non-expert |
| Yin et al. (2018) | Microblog information credibility | Sender-related factor: microblog platform credibility, source credibility, and social endorsement; Message format: vividness |
| Aladhadh et al. (2019) | Information credibility on social media | Sender-related factor: the distance between an information source and the event |
| Cooley and Parks-Yancy (2019) | Product information credibility on social media | Sender-related factor: celebrities, influencers, and people whom consumers know |
| Song, Zhao, Song and Zhu (2019) | Credibility of health rumors on social media | Message content: quality cues, attractiveness cues, and affective polarity |

First, when considering message-related factors, most previous studies focused on the content, and argument quality is a widely studied content-related factor (Bhattacherjee & Sanford, 2006; Cheung et al., 2008, 2009). Message format is also critical for user evaluation of information credibility (Hilligoss & Rieh, 2008), but it has received less attention. On social media (and for this study, microblogs in particular), users share information not only as text but also with pictures or videos, so information is shared among users in various formats (Li & Li, 2013; Webb & Roberts, 2016). The various formats of information on microblogs distinguish it from the information on traditional offline and static websites (Adomavicius, Bockstedt, Curley & Jingjing, 2019), and provide microblog users with a new dimension for evaluating microblog information. The omission of message format in previous work on information credibility evaluation makes the current findings insufficient for understanding user evaluation of microblog information credibility. In other words, a specific framework that includes the role of message format is needed. Thus, it is of theoretical and practical importance to develop a new framework within which both message content and message format are considered when understanding user evaluation of information credibility on microblogs.

Second, we found that existing studies explored only the direct and linear effects of antecedents on microblog information credibility. However, linear effects are insufficient to understand user evaluation of microblog information credibility for three reasons. First, investigations into nonlinear effects can complement the current understanding of linear effects. Although linear investigations have been widely used, “there is no apparent reason, intuitive or otherwise, as to why human behavior should be more linear than the behavior of other things, living and nonliving” (Brown & Henkel, 1995, p. 1). Second, microblogs form social networks, which are unstable and dynamic, and contain rich and complex relationships (Guo, Vogel, Zhou, Zhang & Chen, 2009). Linear relationships are simple and concrete, but may ignore some information. Third, user evaluation of information credibility is a psychological reaction that is complex in nature and cannot be simply depicted by linear descriptions (Cheung & Lee, 2009; Ou & Sia, 2009, 2010). Thus, the study is further designed to explore the possible nonlinear effects of the four proposed factors on user evaluation of microblog information credibility. Doing this allows the new framework to determine more precisely the factors affecting user evaluation of microblog information credibility.

2.2. Factors of microblog information credibility

To address the first gap in the literature, this study incorporates message format and develops a framework specific to the context of microblogs (see Table 2). In general, the framework contains both message-related and sender-related factors. The message-related factors are further classified into two parts, message content and message format, thus building a three-dimensional framework. Specifically, two previously well-discussed factors are included in the framework: argument quality, related to the message content (which refers to the strength of an argument) (Bhattacherjee & Sanford, 2006; Cheung et al., 2008, 2009), and source credibility, related to the sender (which is defined as the extent to which information sources are believable, competent, and trustworthy) (Bhattacherjee & Sanford, 2006).

Table 2.

Research framework in the context of microblogs.

| Dimensions | Message-related factors | | | Sender-related factors |
|---|---|---|---|---|
| | Message content | Message format | Message format | |
| Constructs in this study | Argument quality | Vividness | Multimedia diagnosticity | Source credibility |

The framework also includes factors related to message format. A microblog posting can include elements other than text, among which multimedia such as pictures, audio, or video is an important one. Multimedia is optional, but when added it is important to the message format and its association with information credibility (Yin et al., 2018). Multimedia has two characteristics: rich language, meaning that multimedia can convey rich language, and complementary cues, meaning that multimedia can add complementary cues to help individuals further understand information (Lim, Benbasat & Ward, 2000, 2005). Accordingly, two factors of multimedia use that influence message format are identified as the unique characteristics of information on social media, namely vividness, defined as the representational richness of a microblog posting (Steuer, 1992), and multimedia diagnosticity, defined as the extent to which individuals perceive that the multimedia embedded in a microblog posting are helpful for them to evaluate the facts (Jiang & Benbasat, 2004).

2.3. Nonlinear theoretical foundations

2.3.1. Two-factor theory and three-factor framework

Two-factor theory, which is also known as hygiene-motivator theory, was developed by Herzberg, Mausner and Snyderman (1967) to explain workers’ motivations. This theory identifies two categories of factors: hygiene factors, which are associated with basic needs and lead to dissatisfaction when absent but do not result in satisfaction when present, and motivators, which are associated with growth needs and predict satisfaction when present but do not trigger dissatisfaction when absent.

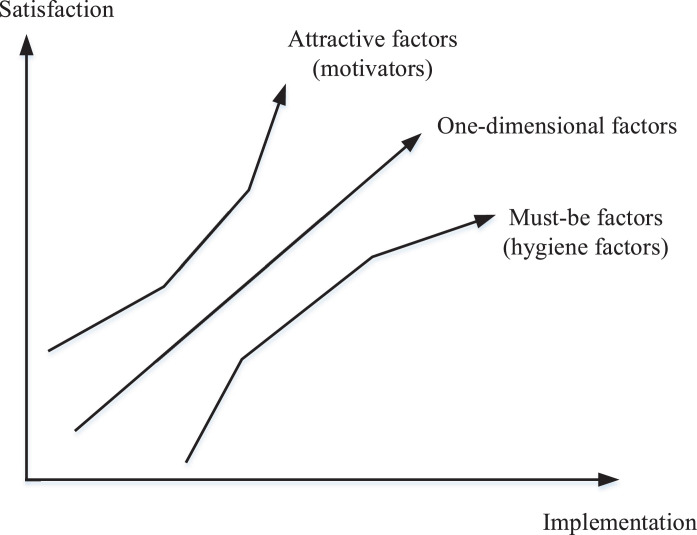

Furthermore, when adapting the two-factor theory to investigate customer satisfaction, Kano, Seraku, Takahashi and Tsuji (1984) discovered that some factors cannot be classified as hygiene factors or motivators because there is a third type of needs: performance needs. Basic needs are those that must be fulfilled, performance needs are those that are expected to be fulfilled, and growth needs (termed motivating needs in Kano et al., 1984) are those exceeding customers’ expectations. A three-factor framework was then built, and the factors associated with the three types of needs are termed must-be factors (matching hygiene factors), one-dimensional factors, and attractive factors (matching motivators) (Rashid, 2010). The underlying mechanism of these factors implies that they may exert effects in a nonlinear pattern, as illustrated in Fig. 1. Specifically, the must-be factors fulfill individuals’ basic needs, so their absence is unacceptable while their presence is taken for granted. This implies that these factors have a decreasing incremental effect on satisfaction. The one-dimensional factors are associated with performance needs, and they matter equally whether they are absent or present, thus exerting a linear effect. For the attractive factors that fulfill individuals’ growth needs, their absence is not a critical issue for individuals and their presence adds value, exerting an increasing incremental effect on satisfaction.

Fig. 1.

The nonlinear effects implied in two-factor theory and three-factor framework.
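As a purely illustrative sketch (not part of the original study), the three patterns in Fig. 1 can be mimicked with simple functions. The functional forms below (logarithmic, proportional, and quadratic), their coefficients, and all function names are assumptions chosen only to show how the increment per unit of a factor changes; the theories themselves do not prescribe these forms.

```python
import math


def hygiene_effect(level):
    """Concave curve: each extra unit adds less (decreasing incremental effect)."""
    return math.log1p(level)


def bivalent_effect(level):
    """Straight line: each extra unit adds the same amount (linear effect)."""
    return 0.5 * level


def motivating_effect(level):
    """Convex curve: each extra unit adds more (increasing incremental effect)."""
    return 0.1 * level ** 2


def increments(effect, levels):
    """Change in the outcome between consecutive levels of a factor."""
    return [effect(b) - effect(a) for a, b in zip(levels, levels[1:])]


# Hypothetical factor levels, evenly spaced.
levels = [0, 1, 2, 3, 4]
hygiene_steps = increments(hygiene_effect, levels)
bivalent_steps = increments(bivalent_effect, levels)
motivating_steps = increments(motivating_effect, levels)
```

Under these assumed forms, the steps shrink for the hygiene-type curve, stay constant for the bivalent-type line, and grow for the motivating-type curve.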

Originally used to explore employee satisfaction and customer satisfaction, the two-factor theory and three-factor framework were later adapted to other contexts, such as technology usage (Cenfetelli, 2004) and knowledge sharing using IT (Hendriks, 1999). The implied nonlinear effects led to a further adaptation of these two theories to study trust and distrust (Ou & Sia, 2009, 2010) and the nonlinear pattern of user satisfaction (Cheung & Lee, 2009). Therefore, to explore the possible nonlinear patterns, we argue that these two theories may also provide a theoretical foundation for the current context. Given that the terms used in the prior literature are not consistent, this study follows Ou and Sia's (2009) examination of trust and distrust and employs the terms hygiene factors, bivalent factors, and motivating factors. A general mapping among the different terms and the fulfilled needs can be found in Table 3.

Table 3.

The mapping among different terms.

| Fulfilled needs | Basic needs | Performance needs | Growth (motivating) needs |
|---|---|---|---|
| Two-factor theory | Hygiene factors | | Motivators |
| Three-factor framework | Must-be factors | One-dimensional factors | Attractive factors |
| The current study | Hygiene factors | Bivalent factors | Motivating factors |

2.3.2. Adaptation of nonlinear framework

The four factors identified in the research framework (i.e., argument quality, source credibility, vividness, and multimedia diagnosticity) (see Section 2.2) can be considered to reflect hygiene factors, bivalent factors, and motivating factors. The study considers argument quality a hygiene factor, source credibility a bivalent factor, and vividness and multimedia diagnosticity motivating factors, according to the needs that are fulfilled in the process of information credibility judgment, as elaborated below.

First, message content is the basic means through which information is conveyed (Cheung, Sia & Kuan, 2012). If the quality of message content (i.e., argument quality) is low, it is hard for users to understand the meaning of the information, which also hinders the credibility judgment. Since argument quality affects information judgment through the central route, it requires a high cognitive effort that users generally prefer to avoid when evaluating information credibility on microblogs (Bhattacherjee & Sanford, 2006; Yin et al., 2018). Therefore, argument quality is required to reach an acceptable level, and it is a need that should be fulfilled (i.e., a basic need) when evaluating information credibility, consistent with the meaning of hygiene factors. Thus, we consider argument quality a hygiene factor.

Second, given that information on microblogs is user-generated, there are no strict gatekeepers to control information quality, making the source credibility of the information critical for information credibility evaluation (Yin et al., 2018). When evaluating information credibility, users expect the source to be credible given the unsupervised mechanism of microblogs. Therefore, source credibility is an expected criterion to be fulfilled, which is consistent with the meaning of bivalent factors. Thus, we consider source credibility a bivalent factor.

Third, vividness and multimedia diagnosticity are two factors related to multimedia usage that contribute to message format. These two factors are specific to the context of microblogs. Multimedia usage is not a required part of microblogs, so users will not find it unacceptable if a piece of microblog information does not include multimedia or just includes an arbitrary picture. However, when used, multimedia can provide additional cues or information to users (Yin et al., 2018), which is beyond users’ expectations and is consistent with the motivating needs. Therefore, we consider vividness and multimedia diagnosticity to be motivating factors.

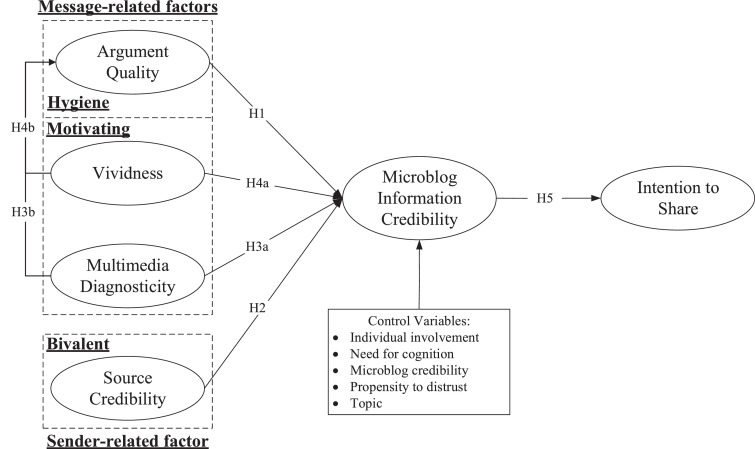

3. Research model and hypotheses

Building on the justifications presented in the previous section, we propose our research model as depicted in Fig. 2. We argue that argument quality as a hygiene factor will exert a decreasing incremental effect on microblog information credibility, source credibility as a bivalent factor will exert a symmetrical and linear effect on microblog information credibility, and vividness and multimedia diagnosticity as motivating factors will both exert an increasing incremental effect on microblog information credibility. In addition, prior literature has indicated that multimedia usage can increase information credibility through enhancing text-message comprehensiveness (Lim & Benbasat, 2000, 2002). Thus, we argue that vividness and multimedia diagnosticity also have increasing incremental effects on argument quality. We also included intention to share in the research model and consider it a consequence of microblog information credibility.

Fig. 2.

Research model.

3.1. Hypotheses development

Argument quality indicates “the persuasive strength of arguments embedded in an informational message” (Bhattacherjee & Sanford, 2006). The message content is the basic means to convey the information. When it is of low quality, users will find it unacceptable and will perceive the information as less credible. However, the situation is different when the argument quality is high. On microblogs, users tend to post information as short text messages, which reduces the comprehensiveness of the meaning of the information. Therefore, even if the text message of a microblog posting is well codified and of high quality, the microblog information credibility is not increased proportionally because of the limited comprehensiveness of the meaning of the information. Besides, users are less likely to put a high cognitive effort into judging argument quality because individuals tend to be cognitive misers (Yin et al., 2018). This personal attribute will also dilute the influence of argument quality, which requires individuals to exert mental effort to make judgments about quality. Overall, once argument quality is at an acceptable level, a higher level of argument quality will not increase the information credibility proportionally. Thus, the following hypothesis is proposed:

H1

Argument quality has a decreasing incremental effect on microblog information credibility.

Source credibility is defined as the extent to which an information source is believable, competent, and trustworthy (Bhattacherjee & Sanford, 2006). Studies have shown that source credibility positively predicts information credibility (Cheung et al., 2009, 2012; Yin et al., 2018). In the current context, microblog postings can be generated by any user without strict monitoring of information quality, making the identity of posting authors critical to information credibility. Users expect that highly credible sources present highly credible information and less credible sources present less credible information (Taylor & Thompson, 1982), which indicates that source credibility is associated with performance needs. Therefore, it is considered a bivalent factor, and its effect on microblog information credibility is proportional and linear. Thus, the following hypothesis is proposed:

H2

Source credibility has a symmetrically increasing effect on microblog information credibility.

Vividness refers to the representational richness of a microblog posting (Steuer, 1992; Yin et al., 2018). As stated, vividness can affect both microblog information credibility and argument quality. First, individuals are easily attracted by vivid information and will pay more attention to such information. These qualities of vivid information lead individuals to be more involved in information processing and decision making (Yin et al., 2018). Thus, we assert that vividness can increase microblog information credibility. Further, vivid information will make the text message look more convincing because it requires more effort to codify a piece of vivid information. Thus, vividness can also positively influence argument quality (Yin et al., 2018).

We further argue that the effects of vividness on microblog information credibility and argument quality are nonlinear. Specifically, less vivid information does not hinder the credibility judgment of the information or the understanding of the content. However, more vivid information can add value beyond users’ expectations. Therefore, the gain in perceived microblog information credibility and argument quality should grow as vividness increases. Thus, the following hypotheses are proposed:

H3a

Vividness has an increasing incremental effect on microblog information credibility.

H3b

Vividness has an increasing incremental effect on argument quality.

Multimedia diagnosticity is defined as the extent to which individuals perceive that the multimedia embedded in a microblog posting help them to evaluate the facts (Jiang & Benbasat, 2004). The inclusion of multimedia in microblog postings can reduce equivocality and help individuals to verify the meaning of the information (Lim & Benbasat, 2000). Therefore, with higher multimedia diagnosticity, individuals will better understand the facts stated by the posting, and have greater belief in the posting. Further, multimedia can also supplement the understanding of a posting and enrich its meaning, which can influence users’ judgment of argument quality.

We further suggest that multimedia diagnosticity increases microblog information credibility and argument quality at an increasing rate. If arbitrary multimedia that is unrelated to the message content is embedded in a microblog posting (i.e., multimedia diagnosticity is low), users will not perceive it as unacceptable because multimedia is not the major means to convey information. When the multimedia diagnosticity is high, however, users will find that multimedia adds unexpected cues to the understanding of the information. Therefore, multimedia diagnosticity possesses the motivating feature, and its effects on microblog information credibility and argument quality are increasing incrementally. Thus, the following hypotheses are proposed:

H4a

Multimedia diagnosticity has an increasing incremental effect on microblog information credibility.

H4b

Multimedia diagnosticity has an increasing incremental effect on argument quality.
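One common way to probe hypotheses of this kind is to add a squared term to a regression and inspect the sign of its coefficient. The sketch below illustrates that idea only; it is not presented as the analysis procedure of this study. The function names, the seven-point scale, the toy data, and the tolerance are all hypothetical, and a real analysis would test the statistical significance of the quadratic term rather than only its sign.

```python
def fit_quadratic(xs, ys):
    """Least-squares fit of y = b0 + b1*x + b2*x**2; returns (b0, b1, b2)."""
    # Build the normal equations (X'X) b = X'y for the design [1, x, x^2].
    rows = [[1.0, x, x * x] for x in xs]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(3)]
    # Solve the 3x3 system by Gauss-Jordan elimination with partial pivoting.
    aug = [xtx[i] + [xty[i]] for i in range(3)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(3):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return tuple(aug[i][3] / aug[i][i] for i in range(3))


def effect_pattern(b2, tolerance=1e-6):
    """Label the curvature implied by the quadratic coefficient (assumed rule)."""
    if b2 > tolerance:
        return "increasing incremental"
    if b2 < -tolerance:
        return "decreasing incremental"
    return "linear"


# Hypothetical predictor levels on an assumed seven-point scale.
levels = [1, 2, 3, 4, 5, 6, 7]
# Toy outcomes generated from known curves, purely for illustration.
concave_scores = [1 + 1.2 * x - 0.08 * x * x for x in levels]
linear_scores = [1 + 0.5 * x for x in levels]
convex_scores = [2 + 0.1 * x + 0.05 * x * x for x in levels]

patterns = [
    effect_pattern(fit_quadratic(levels, ys)[2])
    for ys in (concave_scores, linear_scores, convex_scores)
]
```

With a positive linear coefficient, a negative quadratic coefficient corresponds to the pattern hypothesized for a hygiene factor (H1), a coefficient near zero to a bivalent factor (H2), and a positive coefficient to motivating factors (H3a to H4b).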

We argue that microblog information credibility positively predicts individuals’ intention to share the microblog posting on their own microblog accounts. If individuals believe one posting is credible, they are likely to accept the content embedded in the posting and share it with others (Cheung et al., 2009). Following this logic, the following hypothesis is proposed:

H5

Microblog information credibility is positively associated with intention to share.

3.2. Control variables

Several control variables were included in the proposed research model, namely individual involvement, need for cognition, propensity to distrust, microblog credibility, and topic. Individual involvement refers to the extent to which the posting is relevant to the microblog user (Yin, Zhang & Liu, 2020); need for cognition refers to the extent to which the microblog user tends to engage in effortful analytic activity because of their disposition (Tam & Ho, 2005); propensity to distrust refers to the microblog user's dispositional tendency to trust or distrust (Benamati, Serva & Fuller, 2010); and microblog credibility measures the general credibility of microblog services as perceived by the users (Sussman & Siegal, 2003).

4. Methods

4.1. Research design

This study employs an online field survey as the data collection technique for two reasons. First, microblogs are online services, so collecting data online can maintain consistency between the research context and the data collection context. Second, our target respondents are microblog users who are online users, thus it is easier to reach these targets online.

For the survey, we first placed a filter question at the beginning of the questionnaire to target respondents who are active microblog users. Then, the target respondents were directed to complete the subsequent questionnaire step by step, following a series of instructions embedded in the questionnaire. They were first required to log onto their regularly used microblog accounts and then scan posts as usual. When encountering a posting that caught their attention, they were required to determine the credibility of that posting and to answer a series of questions without reviewing the posting. After that, the respondents were required to review the posting and provide some objective data related to the posting that they had just read, such as topic, author's name, and multimedia type. We were able to verify whether the respondents were completing the questionnaire with careful consideration by checking the consistency between the objective data and the questionnaire answers. For example, we asked respondents whether the posting used multimedia; if the answer was “yes” but the objective data showed that the number of multimedia items was zero, we identified this answer as invalid.
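The consistency check described above can be sketched as a simple filter. This is a minimal illustration under stated assumptions: the record layout, field names, and sample records are hypothetical, and only the one rule given as an example in the text (a "yes" answer paired with a multimedia count of zero) is implemented; other checks the study may have applied are not reproduced.

```python
from dataclasses import dataclass


@dataclass
class Response:
    respondent_id: str        # hypothetical identifier
    reports_multimedia: bool  # answer to "does the posting use multimedia?"
    multimedia_count: int     # objective count recorded when reviewing the posting


def is_valid(response: Response) -> bool:
    """Apply the rule from the text: a 'yes' answer with a zero count is invalid."""
    return not (response.reports_multimedia and response.multimedia_count == 0)


# Hypothetical records for illustration only.
responses = [
    Response("r1", True, 2),
    Response("r2", True, 0),   # contradicts the objective data
    Response("r3", False, 0),
]
valid_ids = [r.respondent_id for r in responses if is_valid(r)]
```

The reverse case (a "no" answer with a nonzero count) is not described in the text, so this sketch leaves such records untouched.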

4.2. Instrument

Measures for all the constructs were adapted from previous literature as presented in Table 4 .

Table 4.

Research instruments.

| Constructs | Items | Source |
|---|---|---|
| Microblog information credibility | I think this posting is factual; I think this posting is accurate; I think this posting is credible | (Cheung et al., 2009) |
| Argument quality | The text arguments in this posting are convincing; The text arguments in this posting are strong; The text arguments in this posting are persuasive; The text arguments in this posting are good | (Cheung et al., 2009) |
| Source credibility | The person who wrote this posting is trustworthy; The person who wrote this posting is reliable; The person who wrote this posting is knowledgeable on this topic; The person who wrote this posting is an expert on this topic^a | (Bhattacherjee & Sanford, 2006) |
| Vividness | In the posting I just read, I felt it is sensational; In the posting I just read, I felt it is vivid; In the posting I just read, I felt it is graphic | (Quick & Stephenson, 2008) |
| Multimedia diagnosticity | The multimedia used in this posting are helpful for me to evaluate the fact; The multimedia used in this posting are helpful in familiarizing me with the fact; The multimedia used in this posting are helpful for me to understand the fact | (Jiang & Benbasat, 2007) |
| Individual involvement | This topic is important to me; This topic is relevant to me^a; This topic is one that really matters to me; This topic affects me personally | (Stephenson, Benoit & Tschida, 2001) |
| Need for cognition | I do not like to have to do a lot of thinking (R)^a; I try to avoid situations that require thinking in-depth about something (R); I prefer to do something that challenges my thinking abilities rather than something that requires little thought; I prefer complex problems to simple problems; Thinking hard and for a long time about something gives me little satisfaction (R)^a | (Tam & Ho, 2005) |
| Microblog credibility | I think most information on microblogs is factual; I think most information on microblogs is accurate; I think most information on microblog is credible; Generally speaking, I believe the information on microblogs | (Sussman & Siegal, 2003) |
| Propensity to distrust | I usually distrust people until they give me a reason to trust them; I generally am wary of people's motives when I first meet them^a; My typical approach is to distrust new acquaintances unless they prove that I should trust them | (Benamati et al., 2010) |

^a Items deleted from the final analyses based on two criteria: (1) a significant decrease in reliability; (2) low factor loadings (< 0.75).

4.3. Data collection

The questionnaire was created on an online survey service website,² and a link to the questionnaire was generated. To obtain more responses, we employed two sampling techniques: snowball sampling (sampling technique 1), in which the questionnaire link was distributed via referrals on social networks, and the sampling service provided by the online survey website (sampling technique 2). We received 107 responses from sampling technique 1 and 257 responses from sampling technique 2.

We removed 18 invalid responses from sampling technique 1 and 47 invalid responses from sampling technique 2 according to one or more of the following criteria: (1) the time spent on the response was less than seven minutes;³ (2) more than 15 answers were the same;⁴ (3) there was an inconsistency between the subjective and objective data. A total of 299 valid responses were obtained. Given that we intended to test the role of multimedia, we further removed 65 responses that did not identify multimedia in the microblog posting. Finally, 234 responses remained for the final data analysis.
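The screening rules above can be sketched in code. This is only an illustration under stated assumptions: the `Response` fields are hypothetical names, criterion (2) is read here as "the most frequent answer value occurs more than 15 times", and criterion (3) is reduced to the single multimedia check described in Section 4.1. The original screening procedure may have differed in its details.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List

MIN_MINUTES = 7         # criterion (1): minimum plausible completion time
MAX_SAME_ANSWERS = 15   # criterion (2): cap on identical answers


@dataclass
class Response:
    # All field names are hypothetical; they are not taken from the study.
    minutes_spent: float    # completion time in minutes
    answers: List[int]      # Likert answers in questionnaire order
    says_multimedia: bool   # subjective answer: "the posting uses multimedia"
    multimedia_count: int   # objective count given after re-reading the posting


def is_valid(r: Response) -> bool:
    """Return True if the response passes all three screening criteria."""
    too_fast = r.minutes_spent < MIN_MINUTES
    # Assumed reading of criterion (2): count of the most repeated answer value.
    most_repeated = max(Counter(r.answers).values(), default=0)
    straight_lined = most_repeated > MAX_SAME_ANSWERS
    # Criterion (3), using only the example given in the text.
    inconsistent = r.says_multimedia and r.multimedia_count == 0
    return not (too_fast or straight_lined or inconsistent)


# Sample sizes reported in the text, reproduced as plain arithmetic.
valid_total = (107 - 18) + (257 - 47)
analysis_sample = valid_total - 65
print(valid_total, analysis_sample)
```

The last three lines only restate the reported counts: 89 and 210 valid responses from the two techniques give 299, and removing the 65 postings without multimedia leaves 234.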

The demographic statistics are presented in Table 5. The table shows that 30.3% of the respondents were male and 92.8% were aged 35 or younger. More than 80% of the respondents had a bachelor's degree or higher, and about 63% of the respondents had a monthly income between 2001 and 8000 RMB.

Table 5.

Demographic statistics.

| Variables | Levels | Frequency | Percentage |
|---|---|---|---|
| Gender | Male | 71 | 30.3% |
| | Female | 163 | 69.7% |
| Age | <=25 | 64 | 27.4% |
| | 26–35 | 153 | 65.4% |
| | 36–45 | 15 | 6.4% |
| | >=45 | 2 | 0.9% |
| Education | High school | 4 | 1.7% |
| | Junior college | 32 | 13.7% |
| | Undergraduate | 146 | 62.4% |
| | Graduate | 52 | 22.2% |
| Income per month (¥) | <=1000 | 24 | 10.3% |
| | 1001–2000 | 17 | 7.3% |
| | 2001–4000 | 70 | 29.9% |
| | 4001–8000 | 77 | 32.9% |
| | >=8000 | 46 | 19.7% |
| Internet experience | <=2 years | 2 | 0.9% |
| | 3–4 years | 17 | 7.3% |
| | 5–6 years | 51 | 21.8% |
| | 7–8 years | 55 | 23.5% |
| | >= 8 years | 109 | 46.6% |

4.4. Non-response test

Given that we collected data with two sampling techniques, a non-response test was conducted to ensure that the two samples could be pooled. The comparison of demographic and socioeconomic differences (CDSD) method was used in the current study (Sivo, Saunders, Chang & Jiang, 2006). The chi-square test was used for this comparison, and the results are presented in Table 6. These results reveal that the two samples differ in gender, age, education, and income because the first sampling technique reached more students in higher education. However, there was no significant difference in internet experience, indicating that pooling the data is acceptable.

Table 6.

Demographic distribution comparison.

| Variables | Levels | Channel 1 | Channel 2 | χ² | p value |
|---|---|---|---|---|---|
| Gender | Male | 38 | 63 | 4.505 | .034 |
| | Female | 51 | 147 | | |
| Age | <=25 | 35 | 42 | 18.164 | .000 |
| | 26–35 | 50 | 144 | | |
| | 36–45 | 2 | 23 | | |
| | >=45 | 2 | 1 | | |
| Education | Non-student | 51 | 183 | 56.760 | .000 |
| | High school | 2 | 4 | | |
| | Junior college | 6 | 31 | | |
| | Undergraduate | 39 | 156 | | |
| | Postgraduate | 42 | 19 | | |
| Income per month (¥) | <=1000 | 19 | 16 | 31.180 | .000 |
| | 1001–2000 | 6 | 11 | | |
| | 2001–4000 | 20 | 68 | | |
| | 4001–8000 | 17 | 86 | | |
| | >=8000 | 27 | 29 | | |
| Internet experience | <=2 years | 0 | 2 | 5.352 | .253 |
| | 3–4 years | 5 | 14 | | |
| | 5–6 years | 13 | 50 | | |
| | 7–8 years | 20 | 49 | | |
| | >= 8 years | 51 | 95 | | |
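As a worked illustration of the chi-square comparison, the sketch below recomputes the gender row of Table 6 from its four cell counts. It assumes a plain Pearson chi-square without continuity correction, which is an assumption about the original analysis rather than something the text states. The function name is illustrative, and the closed-form p-value used here holds only for one degree of freedom.

```python
import math


def pearson_chi_square(table):
    """Return (chi-square statistic, degrees of freedom) for a contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, dof


# Gender counts from Table 6: rows = male, female; columns = channel 1, channel 2.
gender = [[38, 63], [51, 147]]
chi2, dof = pearson_chi_square(gender)

# For one degree of freedom the upper-tail probability has a closed form.
p_value = math.erfc(math.sqrt(chi2 / 2))
print(f"chi2 = {chi2:.3f}, dof = {dof}, p = {p_value:.3f}")
```

Under these assumptions the result agrees with the reported gender row (χ² ≈ 4.505, p ≈ .034). Rows with more than two levels would need a general chi-square distribution function for the p-value.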

4.5. Common method bias

Common method bias was also assessed because the study relied on a self-report survey, which is susceptible to such bias (Podsakoff, Mackenzie, Lee & Podsakoff, 2003). Two methods were adopted. First, Harman's single-factor test was used, and the results show that a single factor explained only 32.24% of the total variance. Second, the unmeasured latent method construct approach was adopted (Podsakoff et al., 2003), and several models (null model, trait model, method model, and trait and method model) were compared (Kim, Cavusgil & Calantone, 2006). The results presented in the Appendix indicate that both the constructs and a method factor explain variance. However, the mean percentage of variance explained by the common method factor (6.96%) is small compared with the mean percentage explained by the constructs (85.42%). Therefore, we conclude that common method bias is not a major threat to our study.

4.6. Data analysis

4.6.1. Measurement model

The measurement model was analyzed using confirmatory factor analysis, and both reliability and construct validity were evaluated. Reliability was assessed with Cronbach's alpha, composite reliability, and average variance extracted (AVE). The threshold value for Cronbach's alpha and composite reliability is 0.7, and the AVE should exceed 0.5 (Chin, 1998). The results are shown in Table 7, and all indexes met these criteria. Construct validity was evaluated in terms of both convergent validity and discriminant validity. Convergent validity was assessed by verifying that the items loaded well on their target constructs, and discriminant validity was assessed by examining whether the items loaded higher on their target constructs than on the other constructs and whether the square root of each construct's AVE was higher than its correlations with the other constructs. The results presented in Tables 7 and 8 indicate good construct validity. Overall, the instrument is satisfactory.

Table 7.

Cronbach's alpha, composite reliability, average variance extracted (AVE), and correlations.

| | Alpha | C.R. | AVE | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AQ | 0.886 | 0.921 | 0.744 | 0.863 | | | | | | | | | |
| MD | 0.921 | 0.950 | 0.864 | 0.526 | 0.930 | | | | | | | | |
| II | 0.873 | 0.920 | 0.794 | 0.519 | 0.276 | 0.891 | | | | | | | |
| MC | 0.953 | 0.966 | 0.876 | 0.337 | 0.273 | 0.313 | 0.936 | | | | | | |
| NFC | 0.880 | 0.910 | 0.772 | 0.134 | 0.130 | 0.126 | 0.060 | 0.878 | | | | | |
| PtoD | 0.832 | 0.900 | 0.819 | −0.086 | 0.016 | −0.060 | −0.220 | −0.088 | 0.905 | | | | |
| SC | 0.793 | 0.876 | 0.702 | 0.624 | 0.434 | 0.341 | 0.142 | 0.067 | −0.018 | 0.838 | | | |
| VI | 0.898 | 0.936 | 0.831 | 0.568 | 0.447 | 0.377 | 0.313 | 0.086 | −0.067 | 0.401 | 0.911 | | |
| IC | 0.903 | 0.939 | 0.838 | 0.674 | 0.457 | 0.367 | 0.308 | 0.125 | −0.058 | 0.683 | 0.382 | 0.915 | |
| ItoS | 0.979 | 0.986 | 0.959 | 0.520 | 0.233 | 0.590 | 0.442 | −0.055 | −0.081 | 0.289 | 0.447 | 0.359 | 0.979 |

Note: (1) Alpha – Cronbach's Alpha; C.R. – Composite Reliability; AVE – Average Variance Extracted.

(2) The values on the diagonal are the square roots of the corresponding AVEs.

(3) NFC – Need for cognition; AQ – Argument Quality; IC – Information credibility; MD – Multimedia Diagnosticity; SC – Source Credibility; MC – Microblogging Credibility; PtoD – Propensity to Distrust; II – Individual Involvement; VI – Vividness; ItoS – Intention to Share.

Table 8.

Loadings and cross-loadings.

| | AQ | MD | IC | MC | NFC | PtoD | II | SC | ItoS | VI |
|---|---|---|---|---|---|---|---|---|---|---|
| AS1 | 0.870 | 0.425 | 0.676 | 0.272 | 0.129 | −0.086 | 0.437 | 0.546 | 0.459 | 0.485 |
| AS2 | 0.856 | 0.443 | 0.514 | 0.283 | 0.131 | −0.086 | 0.408 | 0.519 | 0.405 | 0.468 |
| AS3 | 0.882 | 0.504 | 0.577 | 0.289 | 0.131 | −0.071 | 0.442 | 0.556 | 0.449 | 0.559 |
| AS4 | 0.843 | 0.441 | 0.547 | 0.320 | 0.070 | −0.051 | 0.508 | 0.530 | 0.481 | 0.442 |
| Digno1 | 0.460 | 0.913 | 0.402 | 0.253 | 0.117 | 0.018 | 0.225 | 0.345 | 0.196 | 0.399 |
| Digno2 | 0.515 | 0.938 | 0.442 | 0.258 | 0.168 | 0.011 | 0.280 | 0.446 | 0.224 | 0.442 |
| Digno3 | 0.489 | 0.938 | 0.428 | 0.251 | 0.074 | 0.017 | 0.262 | 0.414 | 0.228 | 0.404 |
| ICred1 | 0.540 | 0.369 | 0.898 | 0.231 | 0.076 | −0.038 | 0.238 | 0.626 | 0.235 | 0.306 |
| ICred2 | 0.627 | 0.427 | 0.906 | 0.291 | 0.154 | −0.065 | 0.387 | 0.574 | 0.349 | 0.366 |
| ICred3 | 0.673 | 0.451 | 0.941 | 0.317 | 0.110 | −0.054 | 0.370 | 0.673 | 0.389 | 0.373 |
| MCred1 | 0.300 | 0.269 | 0.277 | 0.931 | 0.067 | −0.212 | 0.262 | 0.144 | 0.424 | 0.297 |
| MCred2 | 0.333 | 0.242 | 0.315 | 0.943 | 0.066 | −0.159 | 0.312 | 0.150 | 0.427 | 0.314 |
| MCred3 | 0.333 | 0.268 | 0.277 | 0.949 | 0.044 | −0.212 | 0.317 | 0.124 | 0.413 | 0.284 |
| MCred4 | 0.293 | 0.247 | 0.283 | 0.920 | 0.048 | −0.245 | 0.277 | 0.111 | 0.390 | 0.274 |
| NFC2 | 0.084 | 0.129 | 0.101 | −0.029 | 0.906 | −0.049 | 0.048 | 0.065 | −0.157 | 0.068 |
| NFC3 | 0.157 | 0.113 | 0.129 | 0.122 | 0.944 | −0.107 | 0.170 | 0.060 | 0.032 | 0.089 |
| NFC4 | 0.080 | 0.113 | 0.002 | 0.124 | 0.776 | −0.022 | 0.153 | 0.031 | 0.012 | 0.115 |
| PtoDis1 | −0.095 | 0.009 | −0.065 | −0.218 | −0.068 | 0.987 | −0.077 | −0.025 | −0.086 | −0.080 |
| PtoDis3 | −0.034 | 0.040 | −0.018 | −0.177 | −0.138 | 0.815 | 0.016 | 0.013 | −0.042 | −0.006 |
| Rele1 | 0.486 | 0.234 | 0.343 | 0.336 | 0.099 | −0.052 | 0.894 | 0.313 | 0.592 | 0.353 |
| Rele3 | 0.407 | 0.154 | 0.225 | 0.278 | 0.056 | −0.109 | 0.902 | 0.234 | 0.559 | 0.272 |
| Rele4 | 0.472 | 0.310 | 0.372 | 0.225 | 0.158 | −0.020 | 0.877 | 0.336 | 0.442 | 0.357 |
| SExpert1 | 0.486 | 0.264 | 0.442 | 0.039 | 0.177 | 0.009 | 0.321 | 0.787 | 0.195 | 0.350 |
| STrust2 | 0.547 | 0.387 | 0.522 | 0.138 | 0.069 | −0.051 | 0.321 | 0.852 | 0.245 | 0.380 |
| STrust1 | 0.536 | 0.414 | 0.701 | 0.157 | −0.030 | −0.003 | 0.241 | 0.873 | 0.273 | 0.300 |
| Share1 | 0.537 | 0.249 | 0.374 | 0.428 | −0.052 | −0.095 | 0.599 | 0.299 | 0.976 | 0.454 |
| Share2 | 0.515 | 0.228 | 0.352 | 0.433 | −0.052 | −0.068 | 0.593 | 0.287 | 0.985 | 0.445 |
| Share3 | 0.472 | 0.203 | 0.326 | 0.440 | −0.056 | −0.073 | 0.536 | 0.259 | 0.977 | 0.413 |
| Vivid1 | 0.545 | 0.378 | 0.374 | 0.295 | 0.091 | −0.030 | 0.379 | 0.399 | 0.481 | 0.890 |
| Vivid2 | 0.517 | 0.424 | 0.317 | 0.305 | 0.074 | −0.077 | 0.341 | 0.365 | 0.385 | 0.932 |
| Vivid3 | 0.487 | 0.422 | 0.352 | 0.252 | 0.070 | −0.079 | 0.305 | 0.327 | 0.348 | 0.912 |

Note: NFC – Need for cognition; AQ – Argument Quality; IC – Information credibility; MD – Multimedia Diagnosticity; SC – Source Credibility; MC – Microblogging Credibility; PtoD – Propensity to Distrust; II – Individual Involvement; VI – Vividness; ItoS – Intention to Share.
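To make the reliability and discriminant validity criteria concrete, the following sketch recomputes composite reliability, AVE, and the square root of the AVE for argument quality from the four own-construct loadings of items AS1 to AS4 in Table 8. It assumes the standard formulas for standardized loadings (error variance taken as one minus the squared loading). The function and variable names are illustrative, and the study's software may have computed these indexes slightly differently.

```python
import math


def composite_reliability(loadings):
    """Composite reliability from standardized loadings."""
    total = sum(loadings)
    error = sum(1 - l ** 2 for l in loadings)
    return total ** 2 / (total ** 2 + error)


def average_variance_extracted(loadings):
    """Mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


# Own-construct loadings of the four argument quality items (Table 8).
aq_loadings = [0.870, 0.856, 0.882, 0.843]
# Correlations of argument quality with the other nine constructs (Table 7).
aq_correlations = [0.526, 0.519, 0.337, 0.134, -0.086, 0.624, 0.568, 0.674, 0.520]

cr = composite_reliability(aq_loadings)
ave = average_variance_extracted(aq_loadings)
sqrt_ave = math.sqrt(ave)

# Discriminant validity check: sqrt(AVE) should exceed every correlation.
fornell_larcker_ok = all(sqrt_ave > abs(r) for r in aq_correlations)
print(f"CR = {cr:.3f}, AVE = {ave:.3f}, sqrt(AVE) = {sqrt_ave:.3f}, ok = {fornell_larcker_ok}")
```

With the rounded loadings, the computed values come out close to the Table 7 entries for argument quality (composite reliability 0.921, AVE 0.744, diagonal 0.863); small discrepancies in the third decimal are expected from rounding.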

4.6.2. Structural model

Given that we intended to test increasing or decreasing incremental effects, the quadratic terms of some constructs were included in the research model, following previous research (Ping, 2004). The sign of a quadratic term's coefficient reflects how the slope of the regression line changes: a positive sign indicates that the slope increases, whereas a negative sign indicates that it decreases. For example, to test the nonlinear effect of argument quality, we included the quadratic term AQ*AQ in the model. If its coefficient is significant and negative, the incremental effect of argument quality on microblog information credibility is decreasing.
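The same logic can be written as a short derivation. The equation below is a simplified sketch with a single predictor; the symbols β₀, β₁, β₂, and ε are introduced here for illustration and are not part of the original model specification.

```latex
\begin{aligned}
IC &= \beta_0 + \beta_1\,AQ + \beta_2\,AQ^{2} + \varepsilon, \\
\frac{\partial\, IC}{\partial\, AQ} &= \beta_1 + 2\beta_2\,AQ .
\end{aligned}
```

With β₁ > 0, a negative β₂ makes the slope shrink as AQ rises (a decreasing incremental effect), a positive β₂ makes it grow (an increasing incremental effect), and β₂ = 0 leaves a constant slope (a linear effect).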

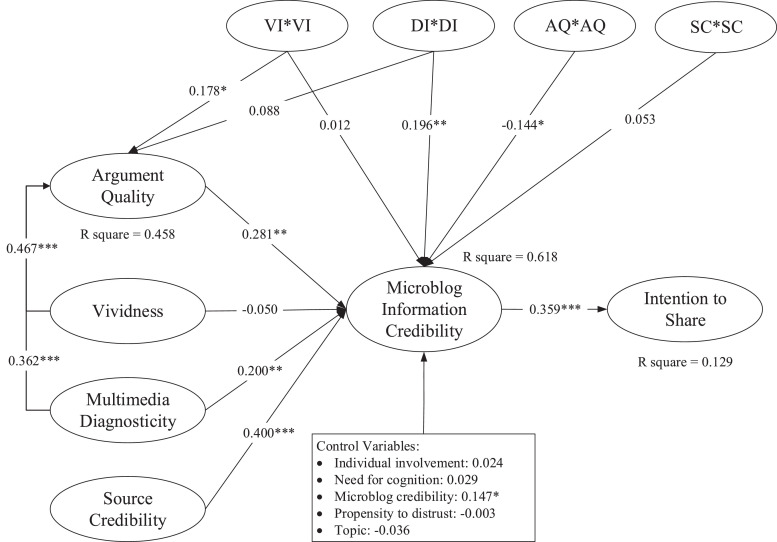

We employed partial least squares (PLS) with the product indicator (PI) approach (PLS-PI) to test the significance of the coefficients of the quadratic and linear terms in PLS-SEM because this method can obtain more accurate path estimates than other methods (Chin, Marcolin & Newsted, 2003). The quadratic terms of the constructs were included in the PLS-SEM as exogenous variables and were estimated using the PI approach. That is, if an independent variable X is measured by two items x1 and x2, its quadratic term X*X is specified by the product indicators x1*x1, x1*x2, and x2*x2. To reduce multicollinearity, we used the standardized scores of the items to compute the products. The results of PLS-PI are presented in Fig. 3.
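The construction of product indicators for a quadratic term can be sketched as follows. This is a minimal illustration, not the authors' implementation: the item scores are made up, the helper names are hypothetical, and standardizing with the population standard deviation is an assumption.

```python
from itertools import combinations_with_replacement
from statistics import mean, pstdev


def standardize(values):
    """Convert raw scores to z-scores (assumes the scores are not all equal)."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]


def product_indicators(items):
    """Build the indicators of X*X from the items of X.

    items maps an item name to its list of raw scores, one per respondent.
    Every unordered pair of items, including an item with itself, yields
    one product indicator of the standardized scores.
    """
    z = {name: standardize(scores) for name, scores in items.items()}
    products = {}
    for a, b in combinations_with_replacement(list(z), 2):
        products[f"{a}*{b}"] = [u * v for u, v in zip(z[a], z[b])]
    return products


# Made-up 7-point responses from five respondents to two items of X.
items = {"x1": [5, 6, 4, 7, 3], "x2": [4, 6, 5, 7, 2]}
quadratic_indicators = product_indicators(items)
print(sorted(quadratic_indicators))
```

For two items this yields three indicators (x1*x1, x1*x2, x2*x2), matching the example in the text; a construct with k items would yield k(k + 1)/2 of them.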

Fig. 3.

The results of the structural model.

Fig. 3 shows that H1 was supported: argument quality (a hygiene factor) had a decreasing incremental effect on microblog information credibility, with a significant positive linear effect (β = 0.281, t = 3.108, p < 0.01) and a significant negative quadratic effect (β = −0.144, t = 2.100, p < 0.05). Source credibility (a bivalent factor) had a significant positive linear effect (β = 0.400, t = 6.142, p < 0.001), and its quadratic term had no significant effect (β = 0.053, t = 0.692, n.s.), thus supporting H2. H3a was not supported: the increasing incremental effect of vividness (a motivating factor) on microblog information credibility was not found because both the linear (β = −0.050, t = 0.934, n.s.) and quadratic (β = 0.012, t = 0.292, n.s.) coefficients were insignificant. However, H3b was supported: vividness had a nonlinear effect on argument quality, with a significant positive linear effect (β = 0.467, t = 9.274, p < 0.001) and a significant positive quadratic effect (β = 0.178, t = 2.290, p < 0.05). H4a was strongly supported: multimedia diagnosticity (a motivating factor) exerted an increasing incremental effect on microblog information credibility, with a significant positive linear effect (β = 0.200, t = 2.726, p < 0.01) and a significant positive quadratic effect (β = 0.196, t = 2.721, p < 0.01). H4b was only partially supported: multimedia diagnosticity had a significant positive linear effect on argument quality (β = 0.362, t = 5.856, p < 0.001) but an insignificant quadratic effect (β = 0.088, t = 1.287, n.s.). Finally, H5 was supported: microblog information credibility had a positive relationship with intention to share (β = 0.359, t = 6.561, p < 0.001).
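To illustrate what the two significant quadratic effects on information credibility imply, the sketch below evaluates the slope of a quadratic curve at three levels of a predictor. It rests on strong simplifying assumptions: the reported path coefficients are treated as coefficients of a standardized score and its square, all other predictors are held fixed, and the product-indicator estimation is ignored. The numbers are therefore indicative only, and the function name is hypothetical.

```python
def marginal_effect(linear, quadratic, x):
    """Slope of linear * x + quadratic * x**2 evaluated at x."""
    return linear + 2 * quadratic * x


# Reported (linear, quadratic) coefficients on information credibility.
reported = {
    "argument quality": (0.281, -0.144),
    "multimedia diagnosticity": (0.200, 0.196),
}

# Evaluate one standard deviation below the mean, at the mean, and one above.
levels = (-1, 0, 1)
slopes = {
    name: [round(marginal_effect(b1, b2, x), 3) for x in levels]
    for name, (b1, b2) in reported.items()
}
for name, values in slopes.items():
    print(name, values)
```

Under these assumptions, the slope for argument quality falls from about 0.569 to about −0.007 across the three levels, whereas the slope for multimedia diagnosticity rises from about −0.192 to about 0.592, which is the pattern described as decreasing versus increasing incremental effects.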

5. Discussions

5.1. Key findings

This work investigates the key factors affecting microblog information credibility from a nonlinear perspective. Five key findings are derived from this study. First, microblog information credibility positively predicts users’ intention to share. This suggests that a microblog posting is more likely to gain publicity if it is perceived as more credible. Therefore, microblog information credibility is a critical issue that deserves further research attention.

Second, argument quality has a decreasing incremental effect on microblog information credibility, suggesting that argument quality is a hygiene factor. Previous studies have suggested that argument quality increases information credibility linearly (Cheung et al., 2009; Yin et al., 2018). Our result further shows that the incremental effect decreases as argument quality increases, suggesting that argument quality is a basic criterion for user evaluation of microblog information credibility. That is, high argument quality is taken for granted, while poor argument quality is unacceptable.

Third, source credibility was found to have a positive and linear effect on microblog information credibility, consistent with the role of a bivalent factor and echoing previous findings on the effect of source credibility on information credibility (Cheung et al., 2009; Yin et al., 2018). This is understandable given that there are no strict gatekeepers on microblogs to verify the content of the postings. Thus, users expect highly credible information from a source they perceive as highly credible.

Fourth, as expected, multimedia diagnosticity was found to have an increasing incremental effect on microblog information credibility, demonstrating its role as a motivating factor for user evaluation of microblog information credibility. This finding indicates that when multimedia helps users understand the facts, it serves as a value-added component of microblog information credibility. However, the analysis failed to confirm the motivating role of vividness; even its linear effect was insignificant. Prior research has suggested that vividness has an effect when diagnostic information is present (Taylor & Thompson, 1982), which may explain the insignificant effect of vividness. That is, if a picture or a video is included but does not add diagnostic information to users’ understanding of the facts in the microblog posting, vividness increases, but this increase does not affect user evaluation of microblog information credibility.

Fifth, vividness and multimedia diagnosticity were found to enhance the understanding of the content of the microblog postings (i.e., argument quality in this study), with the former showing an increasing incremental role and the latter showing a linear effect. This supports the conclusion that multimedia can enhance text comprehensiveness.

5.2. Limitations

This study has several limitations that must be acknowledged. First, the whole data collection procedure was unsupervised, and all the instructions were embedded in the questionnaire. Although we took some measures to control data quality (e.g., comparing subjective responses with objective data for consistency), it was still hard to ensure that all the respondents followed the instructions throughout the survey. Therefore, future studies can improve the research design to further increase data quality.

Second, our respondents were recruited from China only, and thus mainly used microblog platforms in Mandarin. Compared with Twitter postings written in English, a text of 140 Chinese characters contains a great deal more information than a text of 140 English characters. It would be interesting to examine whether this difference influences individuals’ perceptions of argument quality and, in turn, shapes the effect of this factor on microblog information credibility. Future studies may examine such cultural, linguistic, or contextual differences.

Third, this study focused on nonlinear relationships, but investigated only quadratic relationships. However, there are many other types of nonlinear relationships, and exploring more types of nonlinear relationships may provide a greater understanding of the complicated relationships among the analyzed constructs.

Fourth, this study did not distinguish the effects of different types of multimedia. A microblog posting can use different types of multimedia (e.g., picture, video, or audio). Different multimedia or their combinations may exert different effects, and considering multimedia as a whole may ignore this difference. In addition, the consistency between multimedia and text content was not considered in this study. That is, if the multimedia is poorly designed and is only loosely related to the text content, it might cause confusion or even make the information less credible. Therefore, future studies can investigate multimedia along these directions.

5.3. Implications

5.3.1. Theoretical implications

This study contributes to theory in four ways. First, this study contributes to the information credibility literature by proposing a holistic framework that incorporates message format. Although previous studies have explored predictors of user evaluation of microblog information credibility (Shariff et al., 2017; Xie et al., 2016; Yin et al., 2018), the role of message format has been ignored. By incorporating message format into user evaluation of microblog information credibility and building a research framework from a holistic perspective, this study furthers the existing knowledge related to user evaluation of information credibility.

Second, this study enriches the current understanding of microblog information by proposing and verifying the different nonlinear effects of key predictors of credibility. Although simple linear and positive effects were examined in previous studies (Shariff et al., 2017; Xie et al., 2016; Yin et al., 2018), this study suggests that the effects are more complex and should be considered from a nonlinear perspective because of the complicated psychological process involved in human responses. By examining these more complex effects, this study provides a nuanced perspective on the factors that increase user evaluation of information credibility, and suggests that their incremental effects differ. This enriches the previous information credibility literature, which took a linear perspective, and future studies may extend this line of research.

Third, this study extends the literature on the two-factor theory and the three-factor framework by generalizing them to the context of social media. The two-factor theory and three-factor framework provide theoretical explanations for trust and distrust research in the online context (Ou & Sia, 2009, 2010), which implies that these theories may also be applied to credibility evaluation on social media. By adapting these two theories to explain the nonlinear relationships between different predictors and information credibility, this study demonstrates that they can be generalized to the social media context, and suggests that future research should devote attention to this direction.

Finally, this work also extends the multimedia literature by identifying the motivating role of message formats in credibility evaluation. The effects of message formats have been demonstrated in many areas, for example in organizational information systems (Lim & Benbasat, 2000, 2002) and attention studies (Hong, Thong & Tam, 2004; Hsieh & Chen, 2011), but few empirical studies have been conducted in the social media context. Given that multimedia is widely used in microblog postings, specific research on the role of message formats in user evaluation of microblog information credibility should be conducted. This study confirms that the use of message formats can add value to user evaluation of information credibility by increasing information vividness and providing helpful cues, which reveals their motivating role.

5.3.2. Practical implications

This research also provides some guidance and suggestions for practice. First, microblog managers should pay attention to information credibility on microblogs because it can trigger users’ sharing, which expands information publicity. If the information is negative, increased publicity may lead to social panic (e.g., in public health incidents) or may destroy an organization's reputation. Therefore, understanding which factors influence microblog information credibility is a first step toward controlling the dissemination of information on microblogs.

Second, the nonlinear results of this study provide more nuanced suggestions to microblog managers on how to increase information credibility. Specifically, previous studies suggest that argument quality is critical in information credibility evaluation and that careful editing is needed to keep argument quality high. However, this work suggests that the effect of argument quality is limited because of its decreasing incremental effect on microblog information credibility: low argument quality is unacceptable, and high argument quality is taken for granted by users. Therefore, if an organization wants to publish a piece of credible information, the content of the posting, mainly presented as text, should be carefully edited to avoid obvious mistakes. However, once argument quality has reached an adequate level, less effort needs to be put into editing. As source credibility has a positive and linear effect on information credibility, microblog managers can pay more attention to increasing source credibility by citing official accounts.

Third, microblog practitioners should utilize multimedia to increase information credibility given multimedia can add value to user understanding of the facts of the information. Our results indicate that multimedia diagnosticity is key to user evaluation of microblog information credibility. Therefore, understanding appropriate and targeted utilization of multimedia and how to provide useful cues that complement or explain the facts contained in the text message (as opposed to inserting arbitrary pictures, audios, or videos in a post) would be beneficial to those microblog postings.

6. Conclusion

Microblog platforms have become an important channel through which individuals acquire information. Thus, information credibility is a critical issue in the context of microblogs. By incorporating message format, this study built a holistic research framework for user evaluation of microblog information credibility. Further, based on the two-factor theory and the three-factor framework, this study investigated the nonlinear effects of several key factors (i.e., argument quality, source credibility, vividness, and multimedia diagnosticity) on microblog information credibility. The empirical results show that, for user evaluation of microblog information credibility, argument quality (a hygiene factor) exerts a decreasing incremental effect, source credibility (a bivalent factor) exerts a linear effect, and multimedia diagnosticity (a motivating factor) exerts an increasing incremental effect. Our study enriches the existing literature on information credibility and the two-factor theory and provides some suggestions for practice.

CRediT authorship contribution statement

Chunxiao Yin: Conceptualization, Methodology, Writing - original draft. Xiaofei Zhang: Conceptualization, Writing - review & editing.

Acknowledgements

The authors wish to thank the Editors-in-Chief, Associate Editor, and four anonymous reviewers for their highly constructive comments. This study was partially funded by the National Natural Science Foundation of China (71701169, 71901127, and 71971123).

Footnotes

³ The pre-test revealed that the time required to complete the questionnaire was at least 8 minutes.

⁴ Having more than 15 answers that were the same suggested that the respondents did not answer the questionnaire with careful consideration.

Supplementary material associated with this article can be found, in the online version, at doi:10.1016/j.ipm.2020.102345.

Appendix

Table A1.

Assessment of common method bias.

| Model | Chi-square | df | p | CFI | NFI | RMSEA |
|---|---|---|---|---|---|---|
| M1: Null | 6649.495 | 465 | .000 | NA | NA | NA |
| M2: Trait model | 692.547 | 389 | .000 | 0.951 | 0.896 | 0.058 |
| M3: Method model | 4298.029 | 434 | .000 | 0.375 | 0.354 | 0.195 |
| M4: Trait and method model | 574.772 | 358 | .000 | 0.965 | 0.914 | 0.051 |

Model comparison

| Comparison | Δchi-square | Δdf | p | Conclusion |
|---|---|---|---|---|
| Testing for the presence of trait factors | | | | |
| M1–M2 | 5956.948 | 67 | <.01 | M1 > M2 ᵃ |
| M3–M4 | 3723.257 | 67 | <.01 | M3 > M4 ᵃ |
| Testing for the presence of a method factor | | | | |
| M1–M3 | 2351.466 | 31 | <.01 | M1 > M3 ᵇ |
| M2–M4 | 117.775 | 31 | <.01 | M2 > M4 ᵇ |

Note: CFI = Comparative Fit Index; NFI = Normed Fit Index; RMSEA = Root Mean Square Error of Approximation; NA = Not Applicable.

ᵃ Evidence supporting presence of trait factors.

ᵇ Evidence supporting presence of a method factor.

Appendix B. Supplementary materials

References

- Adomavicius G., Bockstedt J.C., Curley S.P., Jingjing Z. Reducing recommender system biases: An investigation of rating display designs. MIS Quarterly. 2019;43:1321–1341. [Google Scholar]

- Aladhadh S., Zhang X., Sanderson M. Location impact on source and linguistic features for information credibility of social media. Online Information Review. 2019;43(1):89–112. [Google Scholar]

- Benamati J.S., Serva M.A., Fuller M.A. The productive tension of trust and distrust: The coexistence and relative role of trust and distrust in online banking. Journal of Organizational Computing and Electronic Commerce. 2010;20:328–346. [Google Scholar]

- Bhattacherjee A., Sanford C. Influence processes for information technology acceptance: An elaboration likelihood model. MIS Quarterly. 2006;30:805–825. [Google Scholar]

- Borah P., Xiao X. The importance of ‘likes’: The interplay of message framing, source, and social endorsement on credibility perceptions of health information on Facebook. Journal of Health Communication. 2018;23:399–411. doi: 10.1080/10810730.2018.1455770. [DOI] [PubMed] [Google Scholar]

- Brown C., Henkel R.E. Sage; 1995. Chaos and catastrophe theories. [Google Scholar]

- Cenfetelli R.T. Inhibitors and enablers as dual factor concepts in technology usage. Journal of the Association for Information Systems. 2004;5(3):472–492. [Google Scholar]

- Cheung C.M.K., Lee M.K.O., Rabjohn N. The impact of electronic word-of-mouth: The adoption of online opinions in online customer communities. Internet Research. 2008;18:229–247. [Google Scholar]

- Cheung C.M., Lee M.K. User satisfaction with an internet-based portal: An asymmetric and nonlinear approach. Journal of the American Society for Information Science and Technology. 2009;60:111–122. [Google Scholar]

- Cheung M.Y., Luo C., Sia C.L., Chen H. Credibility of electronic word-of-mouth: Informational and normative determinants of on-line consumer recommendations. International Journal of Electronic Commerce. 2009;13:9–38. [Google Scholar]

- Cheung M.-.Y., Sia C.-.L., Kuan K.K. Is This review believable? A study of factors affecting the credibility of online consumer reviews from an ELM perspective. Journal of the Association for Information Systems. 2012;13:619–635. [Google Scholar]

- Chin W.W. The partial least squares approach for structural equation modeling. Modern Methods for Business Research. 1998;295:295–336. [Google Scholar]

- Chin W.W., Marcolin B.L., Newsted P.R. A Partial least squares latent variable modeling approach for measuring interaction effects: Results from a Monte Carlo simulation study and an electronic-mail emotion/adoption study. Information Systems Research. 2003;14:189–217. [Google Scholar]

- Cooley D., Parks-Yancy R. The effect of social media on perceived information credibility and decision making. Journal of Internet Commerce. 2019;18:249–269.

- Fogg B. Prominence-interpretation theory: Explaining how people assess credibility online. CHI’03 extended abstracts on Human Factors in Computing Systems. 2003:722–723.

- Guo X., Vogel D., Zhou Z., Zhang X., Chen H. Chaos theory as a lens for interpreting blogging. Journal of Management Information Systems. 2009;26:101–128.

- Hendriks P. Why share knowledge? The influence of ICT on the motivation for knowledge sharing. Knowledge and Process Management. 1999;6:91–100.

- Herzberg F., Mausner B., Snyderman B.B. Wiley; New York: 1967. The motivation to work.

- Hilligoss B., Rieh S.Y. Developing a unifying framework of credibility assessment: Construct, heuristics, and interaction in context. Information Processing & Management. 2008;44:1467–1484.

- Hong W., Thong J.Y.L., Tam K.Y. Does animation attract online users’ attention? The effects of flash on information search performance and perceptions. Information Systems Research. 2004;15:60–86.

- Hsieh Y.C., Chen K.H. How different information types affect viewer's attention on internet advertising. Computers in Human Behavior. 2011;27:935–945.

- Jiang Z.J., Benbasat I. The effects of presentation formats and task complexity on online consumers' product understanding. MIS Quarterly. 2007;31:475.

- Java A., Song X., Finin T., Tseng B. Why we twitter: Understanding microblogging usage and communities. Proceedings of the 9th WebKDD and 1st SNA-KDD 2007 workshop on Web mining and social network analysis. 2007:56–65.

- Jiang Z., Benbasat I. Virtual product experience: Effects of visual and functional control of products on perceived diagnosticity and flow in electronic shopping. Journal of Management Information Systems. 2004;21:111–147.

- Jung E.H., Walsh-Childers K., Kim H.-S. Factors influencing the perceived credibility of diet-nutrition information web sites. Computers in Human Behavior. 2016;58:37–47.

- Kano N., Seraku N., Takahashi F., Tsuji S. Attractive quality and must-be quality. The Journal of the Japanese Society for Quality Control. 1984;14:39–48.

- Kim D., Cavusgil S.T., Calantone R.J. Information system innovations and supply chain management: Channel relationships and firm performance. Journal of the Academy of Marketing Science. 2006;34:40–54.

- Li Y.-M., Li T.-Y. Deriving market intelligence from microblogs. Decision Support Systems. 2013;55:206–217.

- Lim K.H., Benbasat I. The effect of multimedia on perceived equivocality and perceived usefulness of information systems. MIS Quarterly. 2000;24:449–471.

- Lim K.H., Benbasat I. The influence of multimedia on improving the comprehension of organizational information. Journal of Management Information Systems. 2002;19:99–128.

- Lim K.H., Benbasat I., Ward L.M. The role of multimedia in changing first impression bias. Information Systems Research. 2000;11:115–136.

- Lim K.H., O'Connor M.J., Remus W.E. The impact of presentation media on decision making: Does multimedia improve the effectiveness of feedback. Information & Management. 2005;42:305–316.

- Liu Y., Jin X., Shen H. Towards early identification of online rumors based on long short-term memory networks. Information Processing & Management. 2019;56:1457–1467.

- Ou C.X., Sia C.L. To trust or to distrust, that is the question: Investigating the trust-distrust paradox. Communications of the ACM. 2009;52:135–139.

- Ou C.X., Sia C.L. Consumer trust and distrust: An issue of website design. International Journal of Human-Computer Studies. 2010;68:913–934.

- Peetz M.-H., De Rijke M., Kaptein R. Estimating reputation polarity on microblog posts. Information Processing & Management. 2016;52:193–216.

- Ping R.A. 2nd ed. 2004. Testing latent variable models with survey data. http://www.wright.edu/~robert.ping/lv.htm

- Podsakoff P.M., MacKenzie S.B., Lee J.Y., Podsakoff N.P. Common method biases in behavioral research: A critical review of the literature and recommended remedies. Journal of Applied Psychology. 2003;88:879–903. doi: 10.1037/0021-9010.88.5.879.

- Qiu L., Pang J., Lim K.H. Effects of conflicting aggregated rating on eWOM review credibility and diagnosticity: The moderating role of review valence. Decision Support Systems. 2012;54:631–643.

- Quick B.L., Stephenson M.T. Examining the role of trait reactance and sensation seeking on perceived threat, state reactance, and reactance restoration. Human Communication Research. 2008;34:448–476.

- Rashid M. A review of state-of-art on KANO model for research direction. International Journal of Engineering Science and Technology. 2010;2:7481–7490.

- Shariff S.M., Zhang X., Sanderson M. On the credibility perception of news on Twitter: Readers, topics and features. Computers in Human Behavior. 2017;75:785–796.

- Sivo S.A., Saunders C., Chang Q., Jiang J.J. How low should you go? Low response rates and the validity of inference in IS questionnaire research. Journal of the Association for Information Systems. 2006;7:351–414.

- Song X., Zhao Y., Song S., Zhu Q. The role of information cues on users' perceived credibility of online health rumors. Proceedings of the Association for Information Science and Technology. 2019:760–761.

- Stephenson M.T., Benoit W.L., Tschida D.A. Testing the mediating role of cognitive responses in the elaboration likelihood model. Communication Studies. 2001;52:324–337.

- Steuer J. Defining virtual reality: Dimensions determining telepresence. Journal of Communication. 1992;42:73–93.

- Sussman S.W., Siegal W.S. Informational influence in organizations: An integrated approach to knowledge adoption. Information Systems Research. 2003;14:47–65.

- Tam K.Y., Ho S.Y. Web personalization as a persuasion strategy: An elaboration likelihood model perspective. Information Systems Research. 2005;16:271.

- Taylor S.E., Thompson S.C. Stalking the elusive "vividness" effect. Psychological Review. 1982;89:155–181.

- Thon F.M., Jucks R. Believing in expertise: How authors’ credentials and language use influence the credibility of online health information. Health Communication. 2017;32:828–836. doi: 10.1080/10410236.2016.1172296.

- Webb S.H., Roberts S.J. Communication and social media approaches in small businesses. Journal of Marketing Development and Competitiveness. 2016;10(1):e1857.

- Xie B., Wang Y., Chen C., Xiang Y. Proceedings of the international conference on network and system security. Springer; 2016. Gatekeeping behavior analysis for information credibility assessment on Weibo; pp. 483–496.

- Yin C., Sun Y., Fang Y., Lim K. Exploring the dual-role of cognitive heuristics and the moderating effect of gender in microblog information credibility evaluation. Information Technology & People. 2018;31:741–769.

- Yin C., Zhang X., Liu L. Reposting negative information on microblogs: Do personality traits matter. Information Processing & Management. 2020;57

- Zhao D., Rosson M.B. Proceedings of the ACM 2009 international conference on supporting group work. ACM; 2009. How and why people Twitter: The role that microblogging plays in informal communication at work; pp. 243–252.

Associated Data
