Abstract

Pneumonia is a virulent disease that causes the death of millions of people around the world. Every year it kills more children than malaria, AIDS, and measles combined, and it accounts for approximately one in five child deaths worldwide. The invention of antibiotics and vaccines in the past century has notably increased the survival rate of Pneumonia patients. Currently, the primary challenge is to detect the disease at an early stage and determine its type so that the appropriate treatment can be initiated. Usually, a trained physician or a radiologist undertakes the task of diagnosing Pneumonia by examining the patient’s chest X-ray. However, the number of such trained individuals is small when compared to the 450 million people who are affected by Pneumonia every year. Fortunately, this challenge can be met by introducing modern computers and improved Machine Learning techniques in Pneumonia diagnosis. For the past two decades, researchers have been trying to develop methods that detect Pneumonia automatically by analyzing the symptoms of the disease and the chest radiographic images of the patients. With the development of capable Deep Learning algorithms, the formation of such an automatic system is now well within the realm of possibility. In this paper, a novel diagnostic method is proposed that uses Image Processing and Deep Learning techniques on chest X-ray images to detect Pneumonia. The method has been tested on a widely used chest radiography dataset, and the obtained results indicate that the model is well suited to be employed in an automatic Pneumonia diagnosis scheme.

Keywords: pneumonia, chest radiograph, medical image processing, deep learning

1. Introduction

Pneumonia refers to an acute respiratory infection that affects the air sacs of a person’s lungs. When a person inhales, their lungs fill with oxygen-rich air; however, due to infection, the tiny air sacs within the lungs (medically known as alveoli) may fill up with fluid and pus instead. This condition restricts the person’s ability to breathe properly, and that is when a person is said to have developed Pneumonia. Apart from difficulty in breathing, the symptoms of Pneumonia include coughing with mucus, sweating, fever, chest pain, exhaustion, fatigue, headaches, nausea, vomiting, and loss of appetite. Bacteria, viruses, and fungi are all capable of causing Pneumonia. Viral and bacterial Pneumonia are contagious and capable of propagating from person to person. Usually, a healthy person is affected by Pneumonia when they inhale air that has been contaminated by a Pneumonia patient through a sneeze or cough. Fungal Pneumonia is typically spread through the environment, not by a patient [1]. Pneumonia is classified based on how people acquire the disease. It can be Hospital-acquired Pneumonia (HAP), which develops during the period a person stays in the hospital; Ventilator-associated Pneumonia (VAP), which develops during a patient’s ventilation; Community-acquired Pneumonia (CAP), which is acquired in a non-medical environment; or Aspiration Pneumonia, which occurs when a person inhales or takes in Pneumonia-causing bacteria with food, drinks, or water.

The treatment of Pneumonia depends on the type of Pneumonia the patient has developed, the patient’s age, and the severity of the infection. However, the most crucial part of the treatment is the diagnosis of Pneumonia, which helps a physician to know whether a person showing multiple symptoms has actually developed some form of Pneumonia or not. This task is more clinically challenging if the patient has developed a form of HAP or VAP, as the associated symptoms may require more than 48 h to appear [2]. Among the many methods to diagnose Pneumonia, the method that is based on analyzing chest X-rays is widely used. However, a number of credible alternatives are also available. For instance, blood tests can be carried out to confirm the presence of any infection and to try to ascertain the organism that is causing it. However, in many cases, accurate identification is not possible from blood test results alone. Another alternative is the “Sputum test”, where a sample of mucus is collected from the deep cough of a patient and analyzed to find out the reason for the infection. Another substitute is the “Pulse oximetry” test, which helps doctors to measure the amount of oxygen present in the blood. It is usually done with the help of a sensor that is clipped or taped onto the patient’s finger. Alternatively, the doctors can perform a Bronchoscopy, which is a direct way of examining the bronchi using samples of tissue or fluid that are collected with the help of a flexible tube. This test is also useful for diagnosing the presence of lung problems other than Pneumonia. Pleural fluid culture, chest Computed Tomography (CT) scan, and the Arterial Blood Gas (ABG) test are some of the lesser-used methods in Pneumonia diagnosis.
Regardless of the method, if it is not diagnosed in time and goes untreated, Pneumonia can cause several long-term complications and permanent damage to the patient’s respiratory system and other associated organs, including lung abscess, lung failure, and even blood poisoning (septicemia) [3]. People of any age group can develop Pneumonia, but children under the age of five and elderly people over 65 are the most affected by it [2,4]. According to the World Health Organization (WHO), Pneumonia is responsible for the death of over 800,000 children around the world every year, which amounts to almost 16 percent of all child deaths [5]. Most of the children who lose their lives to Pneumonia live in the South Asian and Sub-Saharan regions.

Among the many forms of diagnosis, examining the chest X-ray is probably the most common for detecting Pneumonia. In this method, a physician or a pathologist determines the presence of any infiltration within the X-ray image of a potential patient who has exhibited multiple symptoms of the disease. If any infection is detected in the lower respiratory tract, then the person is identified as a “positive”. Additionally, examining chest X-rays reveals important information related to the exact location and extent of the infection, pleural effusion, cavitation, the lobes that are involved in the process, and necrotizing pneumonia [2]. Therefore, analyzing chest X-rays is a vital stage in the diagnosis of Pneumonia. Unfortunately, the number of experts who have attained this skill and can exercise it reliably is not adequate, and most of these capable individuals are employed by acclaimed medical institutions and pathological centers. People who live in developing areas and below the poverty line often do not receive proper diagnosis and timely treatment and, consequently, bear the risk of developing complicated medical conditions. Training more individuals to undertake the task of diagnosing Pneumonia can be a viable solution to the problem. However, such training requires considerable time and funding, and the trainees are prone to mistakes that lead to misdiagnosis until they acquire sufficient experience.

Another solution to this problem has emerged because of the recent breakthroughs in the fields of Artificial Intelligence (AI), Machine Learning (ML), and Digital Image Processing (DIP). Owing to the advancements in these domains, researchers can now develop Computer-Aided Diagnosis (CAD) techniques that diagnose Pneumonia by analyzing X-ray images of the chest [6]. Among other ML techniques, supervised deep-learning (DL) algorithms offer an elegant way of working with any form of images. These algorithms perform convolution operations to extract characteristic traits, known as “features”, from images containing diverse information; they then use those features to determine whether the images belong to the same category (i.e., contain similar information), known as a “class”, or to different categories. Based on this simple yet effective principle, supervised learning techniques can analyze and differentiate among countless images containing various objects, shapes, colors, and patterns that belong to numerous classes. The value of ML techniques has been appreciated by researchers working in diverse domains, including Computer Science, Mechanical Science, Material Science, Bio-medical Engineering, Construction and Civil Engineering, and even Business Studies. Apart from being used in detecting Pneumonia, ML and DIP techniques are increasingly used in various types of bio-signal classification, such as Electrocardiogram (ECG) [7], Electromyogram (EMG) [8], and Electroencephalogram (EEG) [9], as well as in human activity recognition (HAR) [10], skin disease detection and classification [11], Diabetic Retinopathy (DR) [12], Alzheimer detection [13], cancer detection [14], and many other areas to solve detection and classification problems.

The idea of involving computers in the diagnosis of Pneumonia is certainly not new. In fact, it dates back to 1963, when Lodwick et al. described a concept of converting chest X-ray images into numerical features and described a CAD technique for determining lung cancer (LC) [15]. Article [16] outlines the very first application of CAD in chest radiography. CAD brought a new dimension to the interpretation of X-ray images, and this field was extensively researched throughout the 70s and 80s [17]. Even before the advancement of deep-learning methods, non-deep decision tree-based methods, which are not ideal for working with high-dimensional data like images, were used in chest radiography. For instance, in 1989, Hong and Unrik published an article where they used generalize and specialize (GS), an attribute-based ML algorithm, to distinguish between Pneumonia and Tuberculosis (TB) cases based on the revealed symptoms, and showed that their proposed method outperformed AQ11 and ID3, which were two emerging ML techniques at that time [18]. Since the beginning of the 21st century, the capacity of machines has increased exponentially each year. This rise of computational power has allowed researchers to implement in practice many time-consuming and memory-hungry DL methods that were previously considered too complex for the machines. Consequently, novel CAD methods with high computational complexity and massive raw data could be experimented with and put to practical use. In 2007, Coppini et al. designed a neural-network-based diagnosis method for Chronic Obstructive Pulmonary Disease (COPD) patients, where they mathematically analyzed the posteroanterior and lateral radiographs of subjects with and without emphysema [19].

In 2011, Karargyris et al. provided a segmentation-based method for screening Pneumonia and Tuberculosis while using chest X-rays [20]. In 2014, Ebrahimian et al. proposed a solution to the tricky problem of discriminating Pulmonary Tuberculosis (PTB) and Lobar Pneumonia (PNEU) [21], Noor et al. described a method for distinguishing among PNEU, PTB, and LC using chest radiographs, where they extracted features using wavelet texture measures and principal component analysis (PCA) [22], and Abiyev et al. used Convolutional Neural Networks (CNN) for predicting the presence of chest disease through analyzing chest radiographs [23]. In 2016, Khobragade et al. designed an automatic detection scheme to detect lung diseases—TB, LC, and Pneumonia—by segmenting the images of lungs from chest radiographs, and then using Artificial Neural Network (ANN) for classification [24]. In 2017, Wang et al. carried out extensive experiments in order to separate eight different diseases and medical conditions related to thorax based on the evidence present in chest X-rays using Deep CNN (DCNN) as the classification algorithm [25]. In 2018, Guan et al. designed a DL model, named Attention Guided CNN (AG-CNN), to distinguish the eight conditions mentioned in the previous study [26]. Additionally, in 2018, Singh et al. assessed the accuracy of algorithms in detecting the abnormalities on frontal chest X-rays and assessing the stability and change in their findings over serial radiographs [27]. In recent times, Chandra and Verma proposed a binary classification method based on a few statistical features that were extracted from chest X-ray images for Pneumonia detection [28], and Togaçar et al. proposed a feature learning model based on 300 selected deep mRMR features extracted from chest X-ray images and three different DL algorithms for the detection of Pneumonia [29].

In this study, a novel ML method has been proposed for Pneumonia detection while using a multi-channel CNN model. Features have been extracted from chest radiographic images using three different DIP techniques, and they were simultaneously put into a CNN model to provide the algorithm with diverse information on the properties related to Pneumonia. Our approach differs from the previous ones in terms of the type of features extracted, the order in which the images were processed, and the architecture of the DL model. Together, the employed DIP and CNN algorithms provide a powerful model for Pneumonia diagnosis, as explained in the Results and Discussion section. The proposed model is capable of detecting the presence of Pneumonia with a high degree of reliability; however, it is not optimized in order to determine the type of disease. In order to ensure easy interpretation, necessary graphs, charts, tables, and other illustrations have been included while describing the operating principle of the method.

The rest of the paper is organized as follows. Section 2 explains the underlying principles of the employed methodology along with short discussions on its building blocks. Section 3 provides details on the experimental setup, presents the acquired results, and briefly discusses the outcomes where necessary. Finally, Section 4 provides an overview of the work done in this article and indicates some room for future exploration in this area of research.

2. Methodology

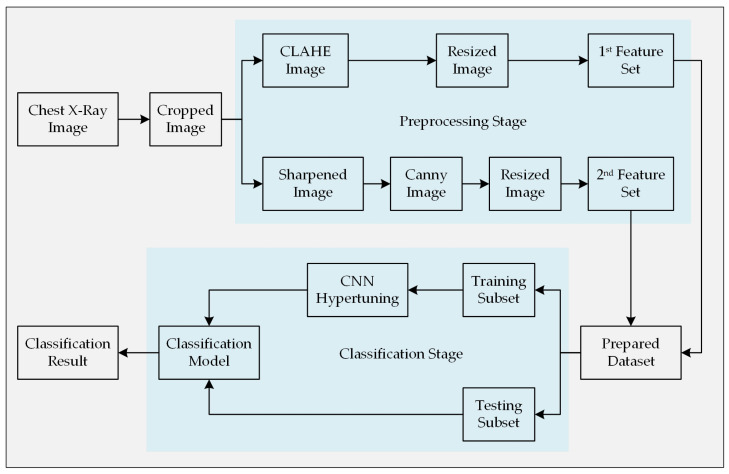

As stated earlier, this paper proposes an automatic Pneumonia detection algorithm through analyzing chest X-ray images and collecting features from them using a customized CNN architecture. A set of chest X-ray images containing both Pneumonia-positive and Pneumonia-negative instances is required for training the supervised learning model. These sample images were collected from a widely used public dataset. First of all, the images were cropped from the center in order to omit unnecessary information outside of the chest area and close to the border of the images. This step helps the algorithm to focus on the information relevant to this classification problem and reduces the complexity of the algorithm. Subsequently, the corresponding images were preprocessed using two different image processing techniques to draw out potential features that are more relevant for distinguishing them. Two different techniques were implemented with the intention of bringing diversity into the set of features that represents an image in the classification stage. Since not all of the images of the employed dataset have the same dimension (height and width in terms of pixels), a resize operation was performed to ensure equal dimensionality. Figure 1 illustrates the entire methodology step-by-step. Finally, the acquired results of the experiment were presented, and the performance of the model was compared with other similar models.

Figure 1. Methodology of the proposed automatic Pneumonia diagnosis model.

2.1. Chest Radiograph Dataset

Images that were used to build this diagnostic model were collected from a renowned chest X-ray image repository [30], which contains a total of 5856 images. Among those images, 4274 samples were collected from the patients of Pneumonia, whereas the rest of the images were collected from healthy subjects who did not have Pneumonia. The chest X-rays were collected from pediatric patients that were aged between one and five years in Guangzhou Women and Children’s Medical Center, Guangzhou. The data collection procedure was performed alongside the patients’ routine clinical care. After collection, all of the images were passed through a grading system that was comprised of multiple layers. Each image was labeled based on the information that fits the corresponding patient’s most recent diagnostic profile. First of all, all of the unreadable and low-quality scans were marked and excluded. The remaining images were then labeled by two expert physicians to prepare them for ML applications. Lastly, a third expert checked the images one more time in order to suppress any error in labeling before including them in the dataset. The associated repository provided the images in three separate folders for training, validation, and testing purposes [30]. However, their contents were combined and re-separated based on their labels for the experiments that are described in this study.

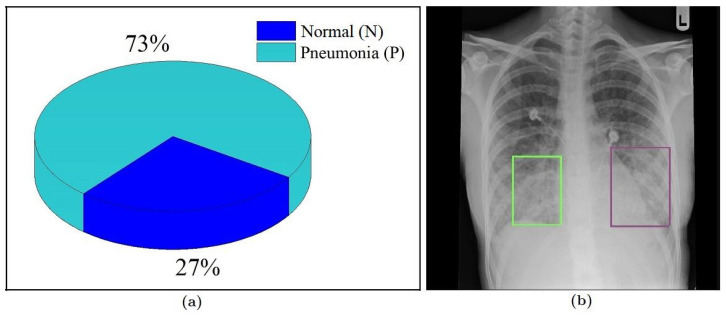

Figure 2a shows the proportion of the available images in the corresponding classes, which clearly indicates that the dataset is imbalanced in nature. The number of images collected from the Pneumonia-positive patients is roughly three times the number of samples that were collected from healthy people. Training a learning model using an imbalanced dataset often leads to decisions that are biased towards the superior class (i.e., the class with the larger sample size). This issue can be addressed by using various image augmentation techniques to increase the number of samples of the inferior class up to a point where the classes have an equal (or nearly equal) sample size. However, increasing the number of images to balance the dataset would significantly increase the overall size of the dataset and would not add any new information beyond the original images. Therefore, in the experimental section of this study (discussed in Section 3), we took a different approach to address this problem. We ensured that the predefined train-test-split ratio (in our case 70–30) is maintained within each class while splitting the images into the training subset and the testing subset (i.e., the training subset has 70% of the samples of both classes and the testing subset has the remaining 30%). If the samples were divided without preserving this condition, most of the samples of the superior class (Pneumonia-positive) could have ended up in the training subset, forcing most of the samples of the inferior class (Pneumonia-negative) into the testing subset. A split like this would not give the algorithm enough samples to analyze and properly “know” the latter class, which might lead to a high misclassification rate for that class and jeopardize the model’s efficacy. This approach might not solve the problems that come with imbalanced data altogether, but it can reduce their effects in the classification stage.
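The class-wise 70–30 split described above can be sketched as follows. This is a minimal illustration using only the Python standard library, not the authors' actual code; the function name `stratified_split`, the label strings, and the random seed are assumptions introduced here, while the class sizes (4274 positive, 5856 − 4274 = 1582 negative) come from the dataset description.

```python
import random

def stratified_split(labels, train_fraction=0.7, seed=0):
    """Return (train_idx, test_idx) so that each class keeps the same ratio."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)

    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)                       # shuffle within the class
        cut = round(len(indices) * train_fraction)  # 70% of this class
        train_idx.extend(indices[:cut])
        test_idx.extend(indices[cut:])
    return train_idx, test_idx

# Class sizes taken from the dataset description (label names are illustrative).
labels = ["pneumonia"] * 4274 + ["normal"] * 1582
train_idx, test_idx = stratified_split(labels)
```

Because the cut is computed per class, both subsets keep roughly the same positive-to-negative ratio as the full dataset, which is the property the paragraph above relies on.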

Figure 2. (a) Ratio of class samples in the dataset and (b) areas (marked) within the ribcage where indications of Pneumonia typically reside [31].

2.2. Image Cropping

Not all areas within an image carry the same level of information pertinent to the objective. We want the learning program to analyze the regions of interest within the image (i.e., where the distinctive features related to the task are) and to ignore or de-emphasize the other areas to reduce the complexity and execution time. In this case, the goal was to make the algorithm focus on the region from the center of the image to the lower section of the rib cage (as shown in Figure 2b). Since there is no information regarding the presence of Pneumonia outside the rib cage, a crop operation was performed on all of the X-ray images to omit most of the black regions near the left and right borders before processing them any further.
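A center crop of this kind can be sketched in a few lines. The fractions `keep_height` and `keep_width` below are hypothetical, since the text does not state the exact crop margins, and the image is represented as a plain list of pixel rows to keep the example dependency-free.

```python
def center_crop(image, keep_height=0.9, keep_width=0.8):
    """Crop a 2-D image (list of rows) around its center.

    keep_height / keep_width are illustrative fractions of the original
    size to retain; the actual margins used in the study are not given.
    """
    rows, cols = len(image), len(image[0])
    new_rows = max(1, round(rows * keep_height))
    new_cols = max(1, round(cols * keep_width))
    top = (rows - new_rows) // 2
    left = (cols - new_cols) // 2
    return [row[left:left + new_cols] for row in image[top:top + new_rows]]

# A 10 x 10 toy "radiograph" with dark (0) borders on the left and right.
toy = [[0, 0] + [200] * 6 + [0, 0] for _ in range(10)]
cropped = center_crop(toy, keep_height=1.0, keep_width=0.6)
```

In the toy example the two dark columns on each side are removed and only the bright central region remains.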

2.3. Image Preprocessing

Image preprocessing techniques are useful for improving the quality of an image or revealing more relevant information on the targeted object. Image enhancement techniques fundamentally require applying a series of mathematical operations to the sample images. In X-ray imaging, the amount of photons absorbed by different types of tissues in the targeted region is taken into account [32]. Bones are very dense, which is why they absorb most of the photons coming from the X-ray beam. Other tissues, like skin and muscle, absorb fewer photons and allow most of the beam to pass through them. This phenomenon creates an image, composed of variations of white, black, and gray, on the radiographic film that is placed opposite the X-ray beam and behind the subject. A physician then uses this image to observe the status of the internal organs. However, as a result of abrupt variations in the gray-level data, the information of the targeted area sometimes becomes misleading. In these cases, the properties of the images need to be modified to obtain a better view of the targeted object. There are several image enhancement techniques available. In this work, we have utilized the Contrast-Limited Adaptive Histogram Equalization (CLAHE) method and an image sharpening technique. Instead of collecting a single set of features from the input images, we extracted two different sets of features to provide the learning algorithm with two different viewpoints on the training data. The architecture of the proposed CNN model is designed such that the first channel processes the X-ray images whose contrast has been enhanced by the CLAHE method, and the second channel processes an edge-enhanced version of the input images obtained through a few intermediate preprocessing operations.

2.3.1. Data Preparation for the 1st Channel

In the 1st Channel, the contrast of the image is enhanced by the CLAHE method [33,34]. In this method, the contrast of the input image is enhanced by utilizing its local histogram information. The objectives of this operation are to provide an upper bound on the amplification of histogram and to perform a clipping operation to keep the gray level information within a limit. Instead of using global histogram information, this technique operates based on the local histogram information of the image by dividing the entire image into small non-overlapping cells. The working procedure of the applied method has been depicted in the following algorithm:

- Step 1: divide the whole image into $R$ disjoint non-overlapping regions, where each region contains $M$ pixels.
- Step 2: calculate the histogram ($h_r$) for each region $r$.
- Step 3: clip $h_r$ using a clipping threshold $T$, where

  $$T = \gamma\left(1 + \frac{\alpha}{100}\left(S_{max} - 1\right)\right) \tag{1}$$

  and

  $$\gamma = \frac{M}{L} \tag{2}$$

  Here, $L$ is the number of gray levels, $\alpha$ is the clip factor, and $S_{max}$ is the maximum allowable slope.

Let $C(\cdot)$ represent the CLAHE operator, which is performed on the image $I$. Then, the contrast-enhanced image $I_c$ can be represented as

$$I_c = C(I) \tag{3}$$

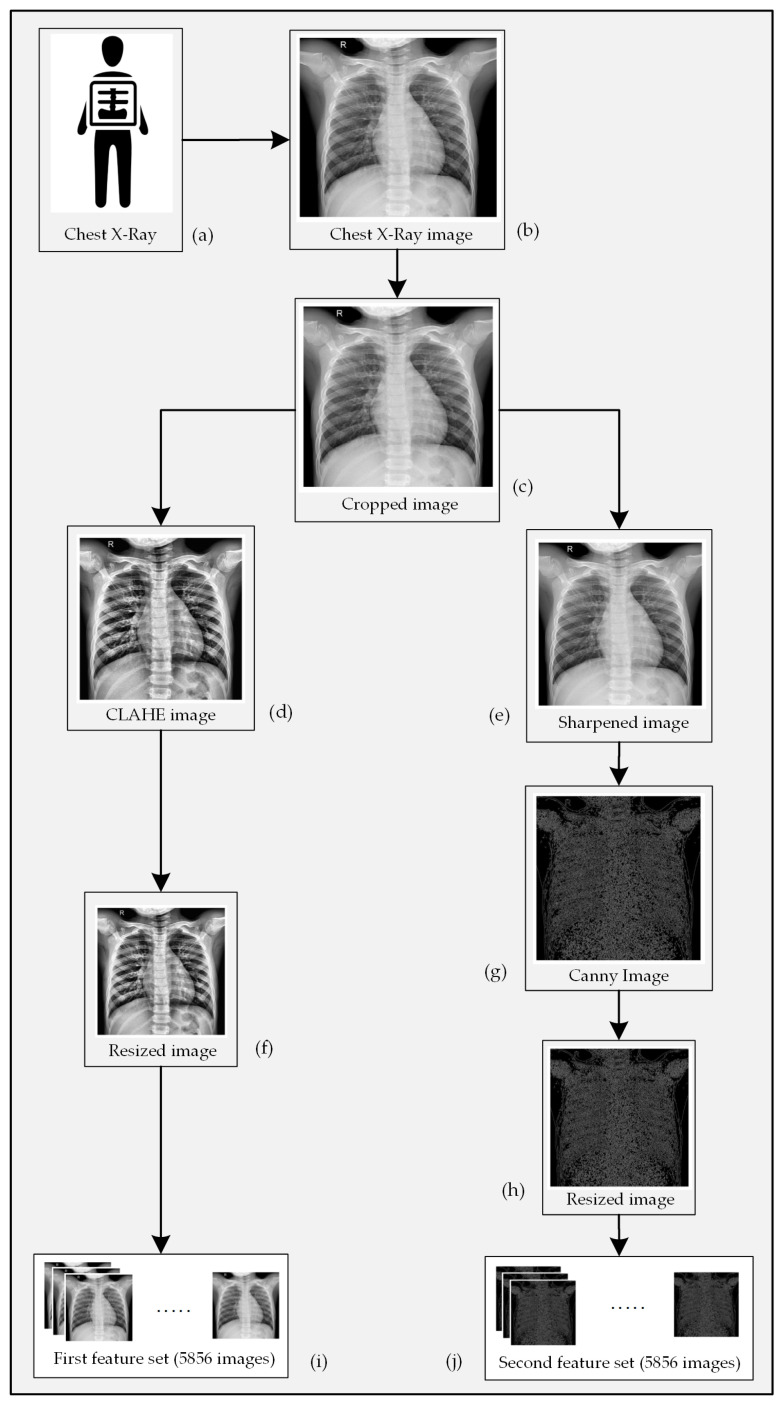

Figure 3d is a version of Figure 3c that has been enhanced through the CLAHE algorithm.
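The histogram clipping at the heart of CLAHE can be illustrated on a single region. The sketch below is a simplified, single-tile illustration and not the authors' implementation: it assumes a commonly quoted form of the clip limit (average bin height scaled by a clip factor and a maximum slope), redistributes the clipped excess uniformly, and omits the interpolation between neighboring tiles that full CLAHE performs. All function names and the toy tile are assumptions; in practice a library routine such as OpenCV's `cv2.createCLAHE` would normally be used.

```python
def clip_limit(pixels_per_tile, gray_levels, clip_factor, max_slope):
    """Clip threshold from tile size, gray levels, clip factor (%), max slope."""
    average = pixels_per_tile / gray_levels
    return average * (1 + (clip_factor / 100.0) * (max_slope - 1))

def clipped_histogram(tile, gray_levels, threshold):
    """Histogram of one tile, clipped at `threshold`; excess spread uniformly."""
    hist = [0] * gray_levels
    for row in tile:
        for value in row:
            hist[value] += 1
    excess = sum(max(0, count - threshold) for count in hist)
    bonus = excess / gray_levels
    return [min(count, threshold) + bonus for count in hist]

def equalize_tile(tile, gray_levels, threshold):
    """Map each pixel through the CDF of the clipped histogram."""
    hist = clipped_histogram(tile, gray_levels, threshold)
    total = sum(hist)
    cdf, running = [], 0.0
    for count in hist:
        running += count
        cdf.append(running / total)
    return [[round(cdf[v] * (gray_levels - 1)) for v in row] for row in tile]

# Toy 4 x 4 tile with 8 gray levels, dominated by dark pixels.
tile = [[0, 0, 0, 0],
        [0, 0, 0, 1],
        [1, 2, 3, 4],
        [5, 6, 7, 7]]
threshold = clip_limit(pixels_per_tile=16, gray_levels=8,
                       clip_factor=50, max_slope=4)
enhanced = equalize_tile(tile, gray_levels=8, threshold=threshold)
```

Clipping limits how much the over-represented dark bin can stretch the mapping, which is what keeps CLAHE from amplifying noise in nearly uniform regions.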

Figure 3. Processing of an X-ray image showing (a) chest radiograph collection, (b) conversion of 3(a) to a digital image, (c) elimination of unnecessary black pixels from 3(b), (d) CLAHE enhanced form of 3(c), (e) sharpened form of 3(c), (f) resized version of 3(d), (g) edge enhanced version of 3(e), (h) resized version of 3(g), and features collected from all X-ray images for the (i) first channel, and (j) second channel of CNN.

2.3.2. Data Preparation for the 2nd Channel

An edge refers to a curve within an image along which the intensity of the image changes rapidly, typically because the boundary of an object is encountered. We have provided a set of edge-enhanced images, derived from the original X-ray images, to the 2nd channel of our proposed CNN model. Edges within these images have been detected and enhanced using the Canny method. Prior to applying the method, an image sharpening operation was performed. This operation makes the edges of the objects within an image more prominent. An image can be sharpened by subtracting a reasonable amount of blurred information from the original image using the unsharp masking method [35]. A sample image can be transformed into a sharpened image by applying the following algorithm:

- Step 1: calculate the blurred image ($I_b$) from the original image ($I_o$) using the function $B(\cdot)$, such that

  $$I_b = B(I_o) \tag{4}$$

- Step 2: subtract $I_b$ from $I_o$ to obtain the edge enhanced image $I_e$, such that

  $$I_e = I_o - I_b \tag{5}$$

- Step 3: finally, acquire the sharpened image ($I_s$) through the following operation

  $$I_s = I_o + I_e \tag{6}$$
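The three unsharp masking steps above can be sketched as follows. The blur function is not specified in the text, so a 3 × 3 mean filter with edge replication is used here purely as a stand-in; the `amount` weight and the clamping to the range 0–255 are likewise illustrative assumptions.

```python
def box_blur(image):
    """3 x 3 mean blur with edge replication (stand-in for the blur function)."""
    rows, cols = len(image), len(image[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            total = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr = min(max(r + dr, 0), rows - 1)
                    cc = min(max(c + dc, 0), cols - 1)
                    total += image[rr][cc]
            out[r][c] = total / 9.0
    return out

def unsharp_mask(image, amount=1.0, max_value=255):
    """Sharpen by adding the (original - blurred) detail back to the original."""
    blurred = box_blur(image)                         # step 1: blur
    sharpened = []
    for row, blurred_row in zip(image, blurred):
        new_row = []
        for original, smooth in zip(row, blurred_row):
            detail = original - smooth                # step 2: edge component
            value = original + amount * detail        # step 3: add detail back
            new_row.append(min(max(value, 0), max_value))
        sharpened.append(new_row)
    return sharpened

# Toy image with a vertical step edge between columns 2 and 3.
step = [[50, 50, 50, 150, 150, 150] for _ in range(5)]
sharpened = unsharp_mask(step)
```

On the toy step edge, flat regions are left unchanged while the pixels on either side of the edge are pushed further apart, which is the overshoot that makes edges look crisper.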

Figure 3e represents a sharpened version of the sample image that is depicted in Figure 3c. An edge represents the occurrence of an abrupt change of color in a specific area of an image. A CNN model performs kernel operations all-over an image to extract the global features. Images that only contain the edge information can be a valuable source of prominent features at the classification stage. There are quite a few edge detection techniques available. We have selected the Canny method to extract the edge information from the input X-ray images [36]. The Canny method performs the following operation to detect edges within an image:

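- Perform a Gaussian filtering operation over the whole image $I$, such that

  $$I_g = G_\sigma * I \tag{7}$$

- Calculate the magnitude $M$ and angle $\theta$ of the gradient, such that

  $$M = \sqrt{g_x^2 + g_y^2} \tag{8}$$

  $$\theta = \tan^{-1}\left(\frac{g_y}{g_x}\right) \tag{9}$$

  here $g_x$ and $g_y$ represent the horizontal and vertical gradients, respectively.

- Calculate the threshold image $I_T$ from $M$, based on the threshold value $T$.

- Perform a non-maximal suppression on the edges of image $I_T$, such that

  $$I_N = \mathrm{NMS}(I_T) \tag{10}$$

- Perform a hysteresis linking operation on image $I_N$, such that

  $$I_E = \mathrm{HL}(I_N) \tag{11}$$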

Figure 3g is an edge enhanced version of Figure 3e derived while using the Canny method.
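The gradient and thresholding stages of the Canny method can be sketched as below. This is only a partial illustration under stated assumptions: Sobel kernels are assumed for the horizontal and vertical gradients, the `low` and `high` thresholds are hypothetical values, and the Gaussian smoothing, non-maximal suppression, and hysteresis linking stages are omitted for brevity. A complete implementation is available in libraries, for example OpenCV's `cv2.Canny`.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def apply_kernel(image, kernel, r, c):
    """Weighted sum of the 3 x 3 neighborhood centered at (r, c)."""
    return sum(kernel[i][j] * image[r + i - 1][c + j - 1]
               for i in range(3) for j in range(3))

def gradient(image):
    """Gradient magnitude and angle (degrees) for interior pixels."""
    rows, cols = len(image), len(image[0])
    magnitude = [[0.0] * cols for _ in range(rows)]
    angle = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = apply_kernel(image, SOBEL_X, r, c)   # horizontal gradient
            gy = apply_kernel(image, SOBEL_Y, r, c)   # vertical gradient
            magnitude[r][c] = math.hypot(gx, gy)
            angle[r][c] = math.degrees(math.atan2(gy, gx))
    return magnitude, angle

def double_threshold(magnitude, low, high):
    """Label pixels as strong (2), weak (1), or suppressed (0)."""
    return [[2 if m >= high else 1 if m >= low else 0 for m in row]
            for row in magnitude]

# Toy image with a vertical step edge between columns 2 and 3.
image = [[0, 0, 0, 100, 100, 100] for _ in range(5)]
magnitude, angle = gradient(image)
labels = double_threshold(magnitude, low=100, high=300)
```

Pixels next to the step edge receive a large gradient magnitude with a horizontal direction, while flat areas stay at zero and are suppressed by the thresholds.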

2.4. Pneumonia Identification Using Multichannel CNN

As discussed earlier, we used a CNN to extract global features from the X-ray images of the dataset, and used those features to detect the presence of Pneumonia in them. The CNN performs numerous convolution operations throughout the whole input image and, thus, extracts global features from the feature maps [37]. Let $x_i^{l}$ represent the $i$th feature map at the $l$th layer, and $k_{ij}^{l}$ represent the kernel matrix. Subsequently, the output map of layer $l$ can be represented as

$$x_j^{l} = f\left(z_j^{l}\right) \tag{12}$$

where,

$$z_j^{l} = \sum_{i} x_i^{l-1} * k_{ij}^{l} + b_j^{l}$$

here, $b_j^{l}$ is the bias value and $f(\cdot)$ is the activation function. An additional layer, called the sub-sampling layer, is implemented to reduce the overall computational complexity. Let $\mathrm{down}(\cdot)$ represent the sub-sampling function; then, the output of this sub-sampling layer can be represented as

$$x_j^{l} = \mathrm{down}\left(x_j^{l-1}\right) \tag{13}$$
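The convolution and sub-sampling operations can be illustrated with a small forward pass. This is a minimal sketch under a few assumptions that the text does not fix: a single input feature map, 'valid' borders with stride 1, ReLU as the activation, and non-overlapping max-pooling as the sub-sampling function. As in most deep-learning libraries, the kernel is applied without flipping (cross-correlation).

```python
def conv2d_valid(image, kernel, bias=0.0):
    """'Valid' 2-D cross-correlation of one feature map, followed by ReLU."""
    kh, kw = len(kernel), len(kernel[0])
    out_rows = len(image) - kh + 1
    out_cols = len(image[0]) - kw + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            z = bias + sum(kernel[i][j] * image[r + i][c + j]
                           for i in range(kh) for j in range(kw))
            row.append(max(0.0, z))  # ReLU activation
        output.append(row)
    return output

def max_pool(feature_map, size=2):
    """Non-overlapping size x size max-pooling (the sub-sampling step)."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [[max(feature_map[r * size + i][c * size + j]
                 for i in range(size) for j in range(size))
             for c in range(cols)]
            for r in range(rows)]

# 5 x 5 toy input whose pixel value is row index + column index.
image = [[r + c for c in range(5)] for r in range(5)]
kernel = [[1, 1], [1, 1]]                 # illustrative 2 x 2 kernel
feature_map = conv2d_valid(image, kernel)  # 4 x 4 output
pooled = max_pool(feature_map)             # 2 x 2 output
```

The 2 × 2 kernel shrinks each side by one pixel, and the pooling step halves the remaining size, which is how the sub-sampling layer reduces the computational load of later layers.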

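Whether it is a traditional Neural Network or a CNN, the last layer of the network is a Fully-Connected (FC) layer. Let the output of the last layer be represented as

$$\hat{y} = \sigma\left(w\,x + b\right) \tag{14}$$

here, $\sigma$ represents the logistic function and $\hat{y}$ represents the corresponding output. The output of the network is different from the original output $y$ due to the random selection of the weight ($w$) and bias ($b$) values. The difference between the real output and the predicted output produces an error, which can be expressed as

$$E_n = \frac{1}{2}\sum_{k=1}^{c}\left(y_{n,k} - \hat{y}_{n,k}\right)^2 \tag{15}$$

here, $c$ represents the number of classes. The total error over $N$ samples can then be calculated using

$$E = \sum_{n=1}^{N} E_n \tag{16}$$

The minimum value of $E$ provides the best output result, which can be found through error back-propagation. This back-propagation ultimately provides the optimum values of $w$. The values of $w$ can be updated as

$$w_{t+1} = w_t - \eta\,\frac{\partial E}{\partial w_t} \tag{17}$$

here, $\eta$ is the incremental factor. Equation (17) is known as the Stochastic Gradient Descent (SGD) method. However, this work has utilized the Adaptive Moment Estimation (ADAM) method for the optimization, such that

$$m_t = \beta_1 m_{t-1} + \left(1 - \beta_1\right) g_t \tag{18}$$

$$v_t = \beta_2 v_{t-1} + \left(1 - \beta_2\right) g_t^2 \tag{19}$$

$$\hat{m}_t = \frac{m_t}{1 - \beta_1^t} \tag{20}$$

$$\hat{v}_t = \frac{v_t}{1 - \beta_2^t} \tag{21}$$

$$w_{t+1} = w_t - \frac{\eta}{\sqrt{\hat{v}_t} + \epsilon}\,\hat{m}_t \tag{22}$$

where $g_t = \partial E / \partial w_t$ is the gradient at step $t$, $m_t$ and $v_t$ are the first and second moment estimates, $\beta_1$ and $\beta_2$ are their decay rates, and $\epsilon$ is a small constant that prevents division by zero.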
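To make the ADAM update concrete, the sketch below applies the standard ADAM rule to a single scalar weight. The toy objective $E(w) = (w - 3)^2$, the step count, and the hyperparameter values are illustrative assumptions and are not taken from the experiments in this paper.

```python
import math

def adam_minimize(grad_fn, w, steps=500, lr=0.1,
                  beta1=0.9, beta2=0.999, eps=1e-8):
    """Minimize a scalar objective with the standard ADAM update."""
    m, v = 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad_fn(w)                          # gradient at the current weight
        m = beta1 * m + (1 - beta1) * g         # first moment estimate
        v = beta2 * v + (1 - beta2) * g * g     # second moment estimate
        m_hat = m / (1 - beta1 ** t)            # bias-corrected first moment
        v_hat = v / (1 - beta2 ** t)            # bias-corrected second moment
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w

# Toy objective E(w) = (w - 3)^2 with gradient 2 (w - 3).
w_star = adam_minimize(lambda w: 2.0 * (w - 3.0), w=0.0)
```

Unlike plain SGD, the step size here is scaled by a running estimate of the gradient's magnitude, so the early updates have a nearly constant size regardless of how steep the objective is.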

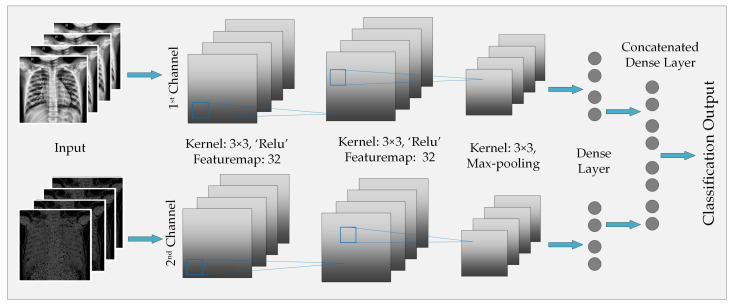

Figure 4 illustrates the architecture of the CNN model that has been implemented for global feature extraction and image classification. As the figure portrays, the 1st layer of the 1st channel contains seven feature maps, where we have employed a 2 × 2 kernel along with ReLU rectifier. 2nd convolution operation of the 1st channel also produces seven feature maps and it involves a 2 × 2 kernel. After the 2nd layer, another 2 × 2 kernel is utilized in order to perform a Max-pooling operation. After the Max-pooling operation, we can find the features extracted by the 1st channel at its Dense layer. The procedure is also the same for the 2nd channel. The only difference between the two channels is the type of image that they are given to process. After concatenating the two sets of features that were collected from the corresponding channels, a soft-max decision layer is employed to obtain the final decision regarding the presence of Pneumonia in the corresponding sample.

Figure 4. Architecture of the proposed multichannel CNN model for Pneumonia classification.
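A rough size estimate for one channel of this architecture can be worked out with simple arithmetic. The sketch below assumes stride-1 convolutions without padding, a stride-2 pooling window, and three-channel inputs; none of these details are stated explicitly in the text, and the size of the Dense layer is not given, so only the quantities up to the flattening step are computed. The function names are hypothetical.

```python
def conv_out(size, kernel=2, stride=1):
    """Output side length of an unpadded convolution."""
    return (size - kernel) // stride + 1

def channel_summary(input_size, in_channels=3, filters=7, kernel=2, pool=2):
    """Feature-map sizes and convolution parameter count for one CNN channel."""
    after_conv1 = conv_out(input_size, kernel)
    after_conv2 = conv_out(after_conv1, kernel)
    after_pool = after_conv2 // pool
    conv1_params = (kernel * kernel * in_channels + 1) * filters
    conv2_params = (kernel * kernel * filters + 1) * filters
    return {
        "spatial_size": after_pool,
        "flattened_features": after_pool * after_pool * filters,
        "conv_params": conv1_params + conv2_params,
    }

# The three image sizes considered in the experiments.
for size in (64, 128, 256):
    print(size, channel_summary(size))
```

Under these assumptions the number of flattened features grows roughly with the square of the image side, which illustrates why larger inputs demand noticeably more memory and processing time.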

3. Results and Discussion

The solution to the problem at hand requires us to perform a binary image classification operation. As the mentioned dataset contains a set of X-ray images divided into two subsets based on the presence of Pneumonia in the corresponding patients, an experiment was designed to implement the CNN architecture that is illustrated and described in Section 2. The performance of the proposed method has been evaluated by different metrics, such as accuracy, precision, recall, and f-measure values. The model loss has been evaluated by two measuring parameters, namely, Binary Cross-entropy (BCE) and Kullback–Leibler (KL) Divergence values. Furthermore, Matthews Correlation Coefficient (MCC) values and the Receiver Operating Characteristics (ROC) curve have also been provided to give a more in-depth view of the model’s performance. Another factor that was taken into consideration is the dimension (height, width, and the number of channels) of the X-ray images that are present in the dataset. Theoretically, an image with a higher dimension contains more information than its lower-dimensioned counterparts and, in most cases, produces better classification results. However, increasing the dimensionality and resolution of images within a dataset also increases its overall size, and processing large images requires more time and processing power than working with their smaller versions. Therefore, choosing the size of the images in the dataset becomes a matter of trade-off between performance (i.e., accuracy) on the one hand and processing power and required time on the other. In our experiment, we considered three different dimensions (height and width expressed in pixels) of the chest X-ray images: 64 × 64, 128 × 128, and 256 × 256, while keeping the information of all three color channels intact.
The reason behind experimenting with three different image sizes is to find out how the performance of our classifier varies with image dimension and to provide a rough estimate of the performance that can be obtained by implementing it on hardware of various capacities. These dimensions are not fixed; they can always be adjusted to obtain the desired performance or to fit the algorithm to the available device by making the necessary adjustments in the CNN architecture.

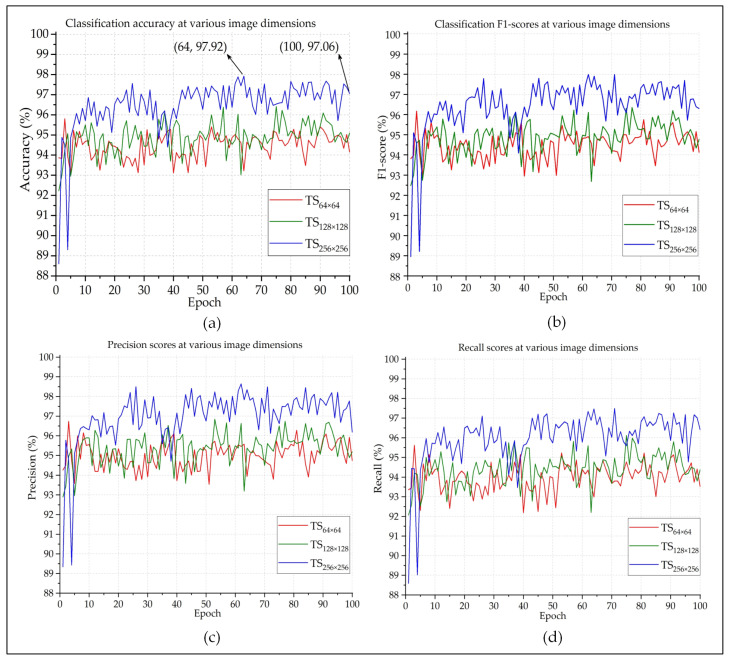

Figure 5 presents the accuracy, precision, recall, and F1-score of the proposed method on the test samples (TS) for three different image sizes (denoted as TS64 × 64, TS128 × 128, and TS256 × 256). The experiment was carried out for 100 epochs. As expected, this graph shows that images with the dimension 256 × 256 provide the most information and, hence, achieve the overall best performance when compared to the other two image sizes. According to Figure 5a, the recorded accuracy was 97.06% at the 100th epoch. However, the best classification accuracy, which is close to 98%, was achieved at the 64th epoch. Figure 5c,d represent the precision and recall scores of each classification, respectively. Both of them are common and widely used parameters when it comes to judging the performance of a classifier. Precision refers to the proportion of positive identifications that are actually correct, whereas recall indicates the proportion of the actual positive instances that were correctly identified [38]. Although both of the parameters are equally important, neither of them can individually provide an accurate estimation of the efficiency of the classifier.

Figure 5.

The (a) accuracy, (b) f-measure, (c) precision, and (d) recall of the performed classification.
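
As a minimal sketch of how accuracy, precision, and recall follow from the counts of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN), consider the Python functions below. The counts in the example are hypothetical and chosen only for illustration; they are not results from this experiment.

```python
def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Fraction of all samples that were classified correctly."""
    return (tp + tn) / (tp + tn + fp + fn)


def precision(tp: int, fp: int) -> float:
    """Fraction of positive predictions that are actually positive."""
    return tp / (tp + fp)


def recall(tp: int, fn: int) -> float:
    """Fraction of actual positives that were predicted as positive."""
    return tp / (tp + fn)


# Hypothetical counts, for illustration only (not taken from the paper).
tp, tn, fp, fn = 90, 95, 10, 5

acc = accuracy(tp, tn, fp, fn)
prec = precision(tp, fp)
rec = recall(tp, fn)
print(f"accuracy={acc:.3f} precision={prec:.3f} recall={rec:.3f}")
```

In this hypothetical case precision and recall differ because the classifier makes more false-positive than false-negative errors, which is why the two values are usually reported together.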

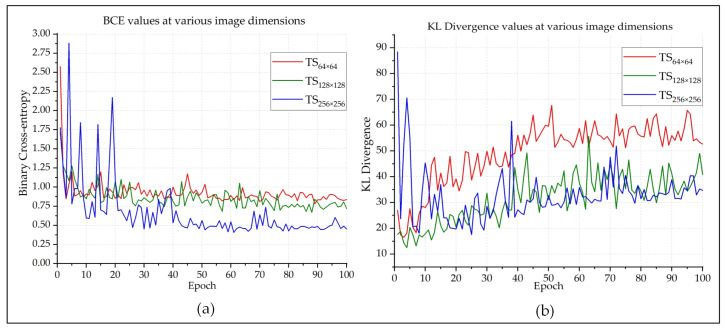

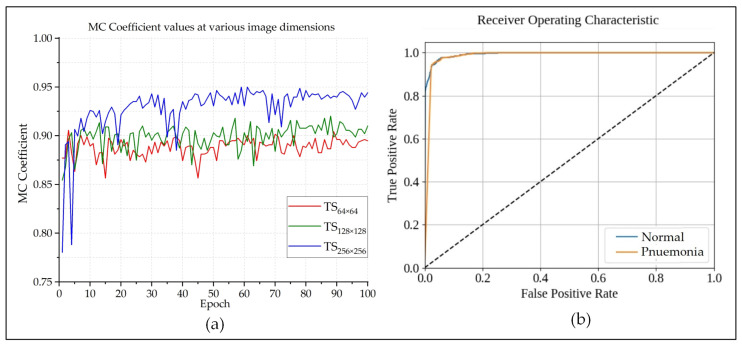

F1-score (also known as F-measure or F-score) is a more reliable parameter than precision or recall alone, since it is the harmonic mean of those two parameters. It is often regarded as a better indicator than classification accuracy when quantifying the quality of a classification algorithm. Figure 5b shows that the F1-score of each epoch is very close to the classification accuracy of that epoch. This suggests that the model’s outcomes are reliable and that it is not biased towards either class. Figure 6 presents the BCE and KL Divergences of the classifications. The BCE of a model should be as low as possible, and it would be 0 for a perfect classification outcome. Figure 6a shows that, as the model became better trained with each epoch, the BCE decreased continuously. Because the larger images provided better classification outcomes than the smaller ones, their BCE curve is, as expected, better than the others. The same holds for the KL Divergences, which are depicted in Figure 6b. The MCC is another useful parameter for evaluating binary classifiers. MCC values lie between −1 and +1. An MCC value of −1 represents a binary classifier with the worst performance, and a value of +1 indicates a classifier with the best performance. The MCC values achieved by the designed classifier are shown in Figure 7a; Table 1 reports an MCC of 0.94 for the 256 × 256 images at the 64th epoch. The ROC curve helps us to visualize the performance as a whole. Figure 7b presents the ROC curve of this experiment. The X-axis of this curve represents the False Positive Rate, and the Y-axis represents the True Positive Rate. The curve passes close to the top-left corner of the graph, that is, the (0,1) position, which means that the model classified most of the positive and negative instances correctly into their corresponding classes [39]. Table 1 provides an overview of the classification performances at different image dimensions in terms of the performance evaluating parameters described above.
As discussed earlier, the results acquired while working with the 256 × 256 images are superior to those of the other two sizes.
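
The harmonic-mean definition of the F1-score can be checked with a short sketch. The function name `f1_score` is chosen here for illustration; the precision and recall values in the first call are those reported in Table 1 for the 64 × 64 images, and the second call uses hypothetical values to show how the harmonic mean penalizes an imbalance between the two.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both on the same scale)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall (in %) reported in Table 1 for the 64 x 64 images.
f1_64 = f1_score(93.94, 92.99)
print(f"F1 for 64 x 64 images: {f1_64:.2f}%")

# Hypothetical, strongly imbalanced values: the arithmetic mean would be 75,
# but the harmonic mean is pulled towards the smaller value.
f1_skewed = f1_score(100.0, 50.0)
print(f"F1 for precision=100, recall=50: {f1_skewed:.2f}")
```

The first value agrees, after rounding, with the F1-score listed for that row of Table 1.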

Figure 6.

The (a) BCE and (b) KLD curves of the Pneumonia classifications.
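
For reference, the two loss measures can be sketched with their standard textbook definitions. This is an illustration under stated assumptions rather than the implementation used in the experiment: the clipping constant `EPS`, the function names, and the sample labels and predictions are all hypothetical, and the source does not state how KLD is aggregated over the samples, so the scale of these values need not match Table 1.

```python
import math

EPS = 1e-12  # assumed clipping constant to avoid log(0)


def binary_cross_entropy(y_true, y_pred):
    """Mean of -[y*log(p) + (1-y)*log(1-p)] over all samples."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, EPS), 1.0 - EPS)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


def kl_divergence(p, q):
    """KL(P || Q) for two discrete distributions given as probability lists."""
    return sum(pi * math.log(pi / max(qi, EPS)) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical labels and predicted probabilities, for illustration only.
y_true = [1, 0, 1, 1]
confident = [0.9, 0.1, 0.8, 0.95]
uncertain = [0.6, 0.4, 0.5, 0.7]

bce_confident = binary_cross_entropy(y_true, confident)
bce_uncertain = binary_cross_entropy(y_true, uncertain)
kl_same = kl_divergence([0.5, 0.5], [0.5, 0.5])
kl_diff = kl_divergence([0.9, 0.1], [0.5, 0.5])

print(f"BCE confident={bce_confident:.3f}, uncertain={bce_uncertain:.3f}")
print(f"KL identical={kl_same:.3f}, different={kl_diff:.3f}")
```

Both quantities shrink towards 0 as the predicted probabilities approach the true labels, which is the behavior the decreasing curves reflect.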

Figure 7.

The (a) Matthews Correlation Coefficient (MCC) and (b) Receiver Operating Characteristics (ROC) curve of the performed classification.
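
The following sketch shows how the MCC and a single point of the ROC curve are obtained from the four confusion-matrix counts, using the standard definitions. The function names and the example counts are hypothetical; returning 0 when the denominator vanishes is a common convention and an assumption here.

```python
import math


def mcc(tp: int, tn: int, fp: int, fn: int) -> float:
    """Matthews Correlation Coefficient from confusion-matrix counts."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denom == 0:
        return 0.0  # assumed convention for the undefined case
    return (tp * tn - fp * fn) / denom


def roc_point(tp: int, tn: int, fp: int, fn: int):
    """(False Positive Rate, True Positive Rate) for one decision threshold."""
    fpr = fp / (fp + tn)
    tpr = tp / (tp + fn)
    return fpr, tpr


# Hypothetical counts: a perfect classifier and a fully inverted one.
perfect = dict(tp=50, tn=50, fp=0, fn=0)
inverted = dict(tp=0, tn=0, fp=50, fn=50)

print(mcc(**perfect), roc_point(**perfect))
print(mcc(**inverted), roc_point(**inverted))
```

A perfect classifier reaches an MCC of +1 and sits at the (0, 1) corner of the ROC plane, while a classifier that inverts every label reaches −1 and sits at (1, 0).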

Table 1.

Summary of the classification outcome at the 64th epoch.

| Image Dimension | Annotation | Accuracy (%) | Precision (%) | Recall (%) | BCE | KLD | MCC | F1-Score (%) |
|---|---|---|---|---|---|---|---|---|
| 64 × 64 × 3 | TS64 × 64 | 93.22 | 93.94 | 92.99 | 0.99 | 56.68 | 0.87 | 93.46 |
| 128 × 128 × 3 | TS128 × 128 | 95.28 | 95.37 | 94.78 | 0.72 | 37.83 | 0.91 | 95.07 |
| 256 × 256 × 3 | TS256 × 256 | 97.92 | 98.38 | 97.47 | 0.46 | 29.77 | 0.94 | 97.91 |

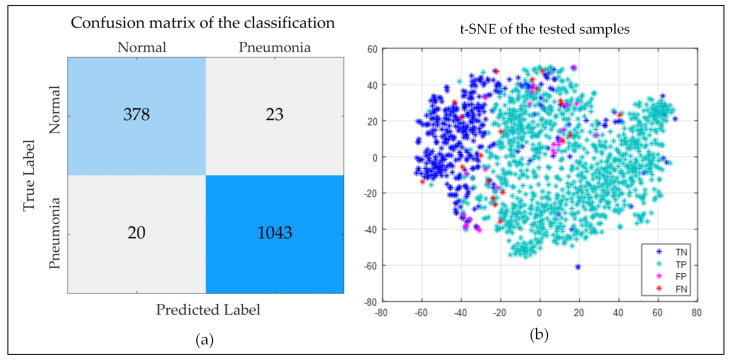

Figure 8a shows the confusion matrix of the last classification outcome (image dimension: 256 × 256, epoch: 100). It shows that only 43 of the 1464 testing samples were misclassified by the described algorithm. In terms of the individual classes, 98.19% of the Pneumonia-positive cases and 94.26% of the healthy cases were correctly identified. Figure 8b shows the t-distributed Stochastic Neighbor Embedding (t-SNE) graph of the samples of the testing subset. As the figure shows, most of the Pneumonia-positive (true-positive, TP) samples are clustered at the right side of the graph, and most of the Pneumonia-negative (true-negative, TN) instances are clustered at the left. However, there are a few areas where they overlap. False-positive (FP) and false-negative (FN) samples are scattered across the two-dimensional (2-D) plane. This representation can be useful when tuning the hyperparameters of the model for better performance.

Figure 8.

The (a) Confusion Matrix, and (b) t-SNE distribution of the tested samples.
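
The overall accuracy at the 100th epoch can be recovered from the two counts quoted for the confusion matrix (43 misclassified samples out of 1464). The short check below uses only those two numbers; the variable names are illustrative.

```python
total_samples = 1464   # testing samples reported for Figure 8a
misclassified = 43     # misclassified samples reported for Figure 8a

accuracy_pct = 100 * (total_samples - misclassified) / total_samples
print(f"accuracy = {accuracy_pct:.2f}%")  # prints "accuracy = 97.06%"
```

This matches the 97.06% accuracy recorded at the 100th epoch in Figure 5a.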

Table 2 compares the results of the proposed model with some of the state-of-the-art CNN-based Pneumonia diagnosis techniques. Abiyev and Ma’aitah [23] performed a CNN-based classification on the National Institutes of Health Clinical Center dataset. This dataset (known as ChestX-ray8) contains 112,120 frontal chest images [25]. The method described in [23] obtained a classification accuracy of 92.40%. The authors of the study [40] reported an accuracy of 93.63% and an F1-score of 92.70% on the same dataset in the following year. The study described in [41] used transfer learning on the dataset referenced in [42] and, after performing a four-class classification, obtained an accuracy of 92.80%. The outcomes of the studies described in [43,44,45] are directly comparable to this work, since they all perform binary classification and employ the same dataset. As the table shows, our method outperforms them by 4.19, 1.56, and 1.53 percentage points, respectively, in terms of classification accuracy. Although not all of the cited articles report every metric needed for a direct comparison (precision, recall, and F1-score), our method attained better scores than those that did report them. Only the method described in [45] recorded a better recall score than the proposed model; however, it attained a lower F1-score due to its lower precision. Based on these results, our method compares favorably with the other CNN-based methods listed here in detecting Pneumonia from chest radiographs.

Table 2.

Comparison of the acquired results.

| References | Dataset | Classes | Model | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|---|---|---|
| [23] | [25] | 12 | CNN | 92.40 | - | - | - |
| [40] | [25] | 2 | CNN | 93.63 | 93.90 | 93.00 | 92.70 |
| [41] | [42] | 4 | CNN | 92.80 | 87.20 | 93.20 | 90.10 |
| [43] | [30] | 2 | CNN | 93.73 | - | - | - |
| [44] | [30] | 2 | CNN | 96.36 | - | - | - |
| [45] | [30] | 2 | CNN | 96.39 | 93.28 | 99.62 | 96.35 |
| This Work | [30] | 2 | CNN | 97.92 | 98.38 | 97.47 | 97.97 |

“-” denotes that the information is not mentioned in the associated paper.
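
The accuracy margins over the three directly comparable studies are plain differences between the accuracy values listed in Table 2, expressed in percentage points. A minimal check, with variable names chosen for illustration:

```python
# Accuracy values (%) taken from Table 2.
this_work = 97.92
baselines = {"[43]": 93.73, "[44]": 96.36, "[45]": 96.39}

# Difference in percentage points, rounded to two decimals.
margins = {ref: round(this_work - acc, 2) for ref, acc in baselines.items()}

for ref, margin in margins.items():
    print(f"{ref}: +{margin:.2f} percentage points")
```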

4. Conclusions

This paper described a novel ML method to diagnose Pneumonia using a two-channel CNN. The acquired results suggest that the method is highly accurate in detecting whether or not a person has Pneumonia based on the radiographic image of their chest. The described method can provide better detection outcomes than the contemporary ones, as it incorporates two different sets of features extracted from each training image. Additionally, the results of the conducted experiment suggest that increasing the size of the image (height and width in pixels) can lead to a better classification outcome, as a large image usually contains more information than a smaller one. However, working with larger images significantly increases the size of the dataset, which in turn makes the model more complex, so that it requires more time to build as well as a machine with a high capacity to accommodate it. There will be a trade-off between the desired performance and the affordable cost when designing the hardware to implement this model. We intend to tune the hyperparameters of the model more thoroughly in order to achieve even better classification performance before implementing it in a device for online and automatic Pneumonia detection, which is the ultimate goal of this research project.

Acknowledgments

The authors would like to thank Daniel Kermany, Kang Zhang, and Michael Goldbaum for constructing the dataset entitled “Labeled Optical Coherence Tomography (OCT) and Chest X-Ray Images for Classification” and making it public, which allowed us to use its contents to train and validate the proposed diagnostic model [42]. The authors would also like to express their gratitude to Deakin University, Australia, for supplying the necessary resources to support this research.

Author Contributions

Conceptualization, A.-A.N. and N.S.; Formal analysis, A.-A.N., N.S. and A.K.B.; Investigation, A.-A.N. and N.S.; Methodology, A.-A.N., N.S. and M.A.R.; Project administration, A.-A.N.; Resources, A.Z.K.; Software, M.A.P.M.; Supervision, A.-A.N.; Validation, A.K.B., M.A.R., M.M., A.Z.K. and M.A.P.M.; Visualization, A.-A.N. and N.S.; Writing—original draft, A.-A.N. and N.S.; Writing—review & editing, A.K.B., M.A.R., M.M., A.K. and M.A.P.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Biggers A. Pneumonia: Symptoms, Causes, Treatment, and More. 2019. Available online: https://www.healthline.com/health/pneumonia (accessed on 20 October 2019).

- 2. Torres A., Cillóniz C. Clinical Management of Bacterial Pneumonia. Springer; Berlin, Germany: 2015.

- 3. Pneumonia - NHS. 2019. Available online: https://www.nhs.uk/conditions/pneumonia (accessed on 20 April 2019).

- 4. Pneumonia. 2019. Available online: https://www.who.int/news-room/fact-sheets/detail/pneumonia (accessed on 20 October 2019).

- 5. UNICEF. A Child Dies of Pneumonia Every 39 Seconds. 2019. Available online: https://data.unicef.org/topic/child-health/pneumonia/ (accessed on 20 April 2020).

- 6. Chumbita M., Cillóniz C., Puerta-Alcalde P., Moreno-García E., Sanjuan G., Garcia-Pouton N., Soriano A., Torres A., Garcia-Vidal C. Can Artificial Intelligence Improve the Management of Pneumonia. J. Clin. Med. 2020;9:248. doi: 10.3390/jcm9010248.

- 7. Rahman A., Sikder N., Nahid A.-A. Heart Condition Monitoring Using Ensemble Technique Based on ECG Signals’ Power Spectrum; Proceedings of the International Conference on Computer, Communication, Chemical, Materials and Electronic Engineering (IC4ME2); Rajshahi, Bangladesh. 11–12 July 2019; pp. 1–4.

- 8. Bhattachargee C.K., Sikder N., Hasan M.T., Nahid A.-A. Finger Movement Classification Based on Statistical and Frequency Features Extracted from Surface EMG Signals; Proceedings of the International Conference on Computer, Communication, Chemical, Materials and Electronic Engineering (IC4ME2); Rajshahi, Bangladesh. 11–12 July 2019; pp. 1–4.

- 9. Wang X.W., Nie D., Lu B.L. Emotional state classification from EEG data using machine learning approach. Neurocomputing. 2014;129:94–106. doi: 10.1016/j.neucom.2013.06.046.

- 10. Sikder N., Chowdhury M.S., Arif A.S.M., Nahid A.-A. Human Activity Recognition Using Multichannel Convolutional Neural Network; Proceedings of the 5th International Conference on Advances in Electrical Engineering (ICAEE); Dhaka, Bangladesh. 26–28 September 2019; pp. 560–565.

- 11. Shrivastava V.K., Londhe N.D., Sonawane R.S., Suri J.S. Reliable and accurate psoriasis disease classification in dermatology images using comprehensive feature space in machine learning paradigm. Expert Syst. Appl. 2015;42:6184–6195. doi: 10.1016/j.eswa.2015.03.014.

- 12. Chowdhury M.S., Taimy F.R., Sikder N., Nahid A.-A. Diabetic Retinopathy Classification with a Light Convolutional Neural Network; Proceedings of the International Conference on Computer, Communication, Chemical, Materials and Electronic Engineering (IC4ME2); Rajshahi, Bangladesh. 11–12 July 2019; pp. 1–4.

- 13. Moradi E., Pepe A., Gaser C., Huttunen H., Tohka J. Machine learning framework for early MRI-based Alzheimer’s conversion prediction in MCI subjects. NeuroImage. 2015;104:398–412. doi: 10.1016/j.neuroimage.2014.10.002.

- 14. Esteva A., Kuprel B., Novoa R.A., Ko J., Swetter S.M., Blau H.M., Thrun S. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115–118. doi: 10.1038/nature21056.

- 15. Lodwick G.S., Keats T.E., Dorst J.P. The Coding of Roentgen Images for Computer Analysis as Applied to Lung Cancer. Radiology. 1963;81:185–200. doi: 10.1148/81.2.185.

- 16. Van Ginneken B., Hogeweg L., Prokop M. Computer-aided diagnosis in chest radiography: Beyond nodules. Eur. J. Radiol. 2009;72:226–230. doi: 10.1016/j.ejrad.2009.05.061.

- 17. Van Ginneken B., Ter Haar Romeny B.M., Viergever M.A. Computer-aided diagnosis in chest radiography: A survey. IEEE Trans. Med. Imaging. 2001;20:1228–1241. doi: 10.1109/42.974918.

- 18. Hong J., Unrik C. A new attribute-based learning algorithm GS and a comparison with existing algorithms. J. Comput. Sci. Technol. 1989;4:218–228. doi: 10.1007/BF02943537.

- 19. Coppini G., Miniati M., Paterni M., Monti S., Ferdeghini E.M. Computer-aided diagnosis of emphysema in COPD patients: Neural-network-based analysis of lung shape in digital chest radiographs. Med. Eng. Phys. 2007;29:76–86. doi: 10.1016/j.medengphy.2006.02.001.

- 20. Karargyris A., Antani S., Thoma G. Segmenting anatomy in chest x-rays for tuberculosis screening; Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society; EMBS, Boston, MA, USA. 30 August–3 September 2011; pp. 7779–7782.

- 21. Ebrahimian H., Rijal O.M., Noor N.M., Yunus A., Mahyuddin A.A. Phase congruency parameter estimation and discrimination ability in detecting lung disease chest radiograph; Proceedings of the IECBES 2014, IEEE Conference on Biomedical Engineering and Sciences: “Miri, Where Engineering in Medicine and Biology and Humanity Meet”; Sarawak, Malaysia. 8–10 December 2014; pp. 729–734.

- 22. Noor N.M., Rijal O.M., Yunus A., Mahayiddin A.A., Peng G.C., Ling O.E., Abu Bakar S.A.R. Pair-wise discrimination of some lung diseases using chest radiography; Proceedings of the IEEE TENSYMP 2014 - 2014 IEEE Region 10 Symposium; Kuala Lumpur, Malaysia. 14–16 April 2014; pp. 151–156.

- 23. Abiyev R.H., Ma’aitah M.K.S. Deep Convolutional Neural Networks for Chest Diseases Detection. J. Healthc. Eng. 2018. doi: 10.1155/2018/4168538.

- 24. Khobragade S., Tiwari A., Patil C.Y., Narke V. Automatic detection of major lung diseases using Chest Radiographs and classification by feed-forward artificial neural network; Proceedings of the 1st IEEE International Conference on Power Electronics, Intelligent Control and Energy Systems, ICPEICES 2016; Delhi, India. 4–6 July 2016.

- 25. Wang X., Peng Y., Lu L., Lu Z., Bagheri M., Summers R.M. ChestX-ray8: Hospital-scale chest X-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases; Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, 2017-Janua; Honolulu, HI, USA. 21–26 July 2017; pp. 3462–3471.

- 26. Guan Q., Huang Y., Zhong Z., Zheng Z., Zheng L., Yang Y. Diagnose like a Radiologist: Attention Guided Convolutional Neural Network for Thorax Disease Classification. arXiv. 2018. arXiv:1801.09927.

- 27. Singh R., Kalra M.K., Nitiwarangkul C., Patti J.A., Homayounieh F., Padole A., Rao P., Putha P., Muse V.V., Sharma S., et al. Deep learning in chest radiography: Detection of findings and presence of change. PLoS ONE. 2018;13:e0204155. doi: 10.1371/journal.pone.0204155.

- 28. Chandra T.B., Verma K. Pneumonia Detection on Chest X-ray Using Machine Learning Paradigm; Proceedings of the Third International Conference on Computer Vision & Image Processing; Jaipur, India. 27–29 September 2019; pp. 21–33.

- 29. Toğaçar M., Ergen B., Cömert Z. A Deep Feature Learning Model for Pneumonia Detection Applying a Combination of mRMR Feature Selection and Machine Learning Models. IRBM. 2019. doi: 10.1016/j.irbm.2019.10.006.

- 30. Kermany D., Zhang K., Goldbaum M. Chest X-Ray Images (Pneumonia) | Kaggle. 2018. Available online: https://www.kaggle.com/paultimothymooney/chest-xray-pneumonia (accessed on 3 June 2020).

- 31. Zahavi G. What Are Lung Opacities? | Kaggle. 2018. Available online: https://www.kaggle.com/zahaviguy/what-are-lung-opacities (accessed on 21 April 2020).

- 32. Johansson L. Principles of Translational Science in Medicine: From Bench to Bedside. 2nd ed. Elsevier; Amsterdam, The Netherlands: 2015. Biomarkers: Translational Imaging Research; pp. 189–194.

- 33. Yadav G., Maheshwari S., Agarwal A. Contrast limited adaptive histogram equalization based enhancement for real time video system; Proceedings of the 2014 International Conference on Advances in Computing, Communications and Informatics, ICACCI 2014; Delhi, India. 24–27 September 2014; pp. 2392–2397.

- 34. Reza A.M. Realization of the contrast limited adaptive histogram equalization (CLAHE) for real-time image enhancement. J. VLSI Signal Process. Syst. Signal Image Video Technol. 2004;38:35–44. doi: 10.1023/B:VLSI.0000028532.53893.82.

- 35. Polesel A., Ramponi G., Mathews V.J. Image enhancement via adaptive unsharp masking. IEEE Trans. Image Process. 2000;9:505–510. doi: 10.1109/83.826787.

- 36. Canny J. A Computational Approach to Edge Detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986;PAMI-8:679–698. doi: 10.1109/TPAMI.1986.4767851.

- 37. Krizhevsky A., Sutskever I., Hinton G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM. 2017;60:84–90. doi: 10.1145/3065386.

- 38. Hackeling G. Mastering Machine Learning with Scikit-Learn. Packt Publishing Ltd. 2014. Available online: https://www.packtpub.com/big-data-and-business-intelligence/mastering-machine-learning-scikit-learn (accessed on 30 May 2019).

- 39. Fawcett T. An introduction to ROC analysis. Pattern Recognit. Lett. 2006;27:861–874. doi: 10.1016/j.patrec.2005.10.010.

- 40. Xu S., Wu H., Bie R. CXNet-m1: Anomaly Detection on Chest X-Rays with Image-Based Deep Learning. IEEE Access. 2019;7:4466–4477. doi: 10.1109/ACCESS.2018.2885997.

- 41. Kermany D.S., Goldbaum M., Cai W., Valentim C.C.S., Liang H., Baxter S.L., McKeown A., Yang G., Wu X., Yanet F., et al. Identifying Medical Diagnoses and Treatable Diseases by Image-Based Deep Learning. Cell. 2018;172:1122–1131.e9. doi: 10.1016/j.cell.2018.02.010.

- 42. Kermany D., Zhang K., Goldbaum M. Labeled Optical Coherence Tomography (OCT) and Chest X-Ray Images for Classification. Mendeley Data. 2018. doi: 10.17632/RSCBJBR9SJ.2.

- 43. Stephen O., Sain M., Maduh U.J., Jeong D.U. An Efficient Deep Learning Approach to Pneumonia Classification in Healthcare. J. Healthc. Eng. 2019. doi: 10.1155/2019/4180949.

- 44. Mittal A., Kumar D., Mittal M., Saba T., Abunadi I., Rehman A., Roy S. Detecting pneumonia using convolutions and dynamic capsule routing for chest X-ray images. Sensors. 2020;20:1068. doi: 10.3390/s20041068.

- 45. Chouhan V., Singh S.K., Khamparia A., Gupta D., Tiwari P., Moreira C., Damasevicius R., de Albuquerque V.H.C. A Novel Transfer Learning Based Approach for Pneumonia Detection in Chest X-ray Images. Appl. Sci. 2020;10:559. doi: 10.3390/app10020559.