Abstract

We conducted an audit study - a resume correspondence experiment - to measure discrimination in hiring faced by Indigenous Peoples in the United States (Native Americans, Alaska Natives, and Native Hawaiians). We sent employers 13,516 realistic resumes of Indigenous or white applicants for common jobs in 11 cities. We signalled Indigenous status in one of four different ways. Interview offer rates do not differ by race, a result that holds after an extensive battery of robustness checks. We discuss multiple concerns such as the saliency of signals, selection of cities and occupations, and labour market tightness that could affect the results of our audit study and those of others. We also conduct decompositions of wages, unemployment rates, unemployment durations, and employment durations to explore whether discrimination might exist in contexts outside our experiment. We conclude by highlighting the essential tests and considerations that are important for future audit studies, regardless of whether they find discrimination.

JEL Codes: J15, J7, C93

Keywords: Indigenous Peoples, employment discrimination, Native American, Alaska Native, Native Hawaiian, Indian reservations, audit study, resume experiment, Gelbach decomposition, Oaxaca decomposition

Introduction

Indigenous Peoples in North America faced perpetual injustices throughout history. A summary includes, but is not limited to, the colonization, annexation, and military occupation of Hawaii (Sai, 2008; Silva, 2004), genocide (Thornton, 1987), massacres (e.g., Wounded Knee, Brown 2007), forced relocation (e.g., the “Trail of Tears”) and isolation in Indian reservations (Foreman, 1972), disenfranchisement (Wolfley, 1991), the slaughter of the bison (Feir, Gillezeau, & Jones, 2017), and the forcible assimilation of Indigenous children through Indian boarding schools (Adams, 1995; Feir, 2016b, 2016a). See Nabokov (1999) for a historical summary.

These injustices extend to contemporary racial disparities, which are some of the largest. Among racial and ethnic minorities, American Indians and Alaska Natives (AIANs) have the lowest employment-to-population ratio (54.6%, with 59.9% for whites), the highest unemployment rate (9.9%, with 4.6% for whites) (U.S. Bureau of Labor Statistics, 2016), and they earn significantly less income (median income of $35,060 in 2010, compared to $50,046 for the nation as a whole) (U.S. Census Bureau, 2015). These disparities are less stark for Native Hawaiian and Pacific Islanders (NHPIs), as they have the highest employment-to-population ratio (62.8%), though this reflects a stronger economy in Hawaii. Even so, unemployment rates are still higher for NHPIs relative to whites (5.7%, versus 4.6%) (U.S. Bureau of Labor Statistics, 2016). Poverty rates among those who identify as AIAN alone (NHPI) are nearly double (1.5 times) the rates of those in the general population (U.S. Census Bureau, 2015; WHIAAPI, 2010). For AIANs, these disparities are even more substantial for the 22% of AIANs who reside or used to reside on one of the 326 federal or state Indian reservations (Gitter & Reagan, 2002; Taylor & Kalt, 2005; U.S. Census Bureau, 2015) or in Alaska Native Statistical Areas (U.S. Census Bureau, 2015).1 These disparities are only becoming more relevant as Indigenous populations grow.2

Several factors could contribute to these disparities, such as differences in education, geography (especially in or near Indian reservations), and the intergenerational legacy of colonialism, such as the harmful effects of Indian Residential schools (Adams, 1995; Feir, 2016a, 2016b) and the slaughter of the bison by colonial settlers (Feir et al., 2017).

Another possible explanation is employment discrimination, which anecdotal and survey evidence3 suggest may be occurring. Indigenous Peoples also face negative stereotypes, some of which could contribute to discrimination.4 Economists typically distinguish between three sources of employment discrimination: taste-based discrimination (Becker, 1957),5 levels-based statistical discrimination (Arrow, 1973; Phelps, 1972),6 and variance-based statistical discrimination (Aigner & Cain, 1977)7 (see Lahey & Oxley (2018) for an excellent discussion). Psychology adds the concept of implicit bias, where discrimination occurs due to unconscious bias (Greenwald & Banaji, 1995).8 Sociologists conceptualize discrimination as being caused by numerous factors that are intrapsychic, organizational, or structural (Pager & Shepherd, 2008). Employment discrimination against Indigenous Peoples could occur from any of these sources.

We are only aware of one peer-reviewed quantitative study that studied employment discrimination against Indigenous Peoples in the United States.9 Hurst (1997) decomposed the AIAN-white earnings gap using the Oaxaca-Blinder decomposition method. Hurst (1997) found that, while observable factors such as education and geography explain a large part (87%) of the gap, there is “still a substantial unexplained differential in earnings between the various categories of Indians and non-Indians.” (p. 805).

Quantifying employment discrimination against Indigenous Peoples is essential to inform policies to reduce these significant economic disparities. If there is little discrimination, then disparities are primarily caused by factors other than employment discrimination like differences in education, which policymakers may be able to target directly. However, if there is significant discrimination, then this may suggest that supply-side policy measures like education or skills training10 may be less effective at closing this gap. In this case, stronger discrimination laws, or stronger enforcement of them, could be more helpful, as could efforts that seek to reduce discriminatory attitudes or behaviours or our abilities to act upon them.

To quantify discrimination, we conducted a field experiment of hiring discrimination—a resume correspondence study—sending applications to job openings. Resume correspondence studies are the preferred method of estimating employment discrimination because they can hold all factors other than minority status constant (Bertrand & Duflo, 2017; Gaddis, 2018; Neumark, 2018) which is not the case for decomposition studies that use survey data (e.g., Hurst, 1997).

In our field experiment, job applications are identical on average but are either signalled to be white or Indigenous (Native American, Alaska Native, or Native Hawaiian). Our general approach follows previous studies of this nature (e.g., Baert, Cockx, & Verhaest, 2013; Bertrand & Mullainathan, 2004; Carlsson & Rooth, 2007; Neumark, Burn, & Button, 2019; Pager, 2003) by estimating hiring discrimination by comparing interview offer rates (“callbacks”) by race.
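To make the callback comparison concrete, the following is a minimal sketch of a raw difference in callback rates with a normal-approximation standard error. The function name and the counts in the usage note are hypothetical, and the sketch ignores the controls, fixed effects, and clustering that a full analysis of paired applications would use.

```python
from math import sqrt


def callback_gap(callbacks_a, n_a, callbacks_b, n_b):
    """Return (difference in callback rates, standard error).

    Group A and group B are treated as independent samples, which is a
    simplifying assumption; paired designs usually call for clustering.
    """
    rate_a = callbacks_a / n_a
    rate_b = callbacks_b / n_b
    diff = rate_a - rate_b
    # Unpooled standard error of a difference in two proportions.
    se = sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    return diff, se
```

For example, with hypothetical counts of 150 callbacks out of 1,000 Indigenous applications and 160 out of 1,000 white applications, `callback_gap(150, 1000, 160, 1000)` gives a gap of −0.01 with a standard error of roughly 0.016, which would not be distinguishable from zero.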

Since signalling Indigenous status is not straightforward, we use four different methods. Our most common signal is volunteer experience, where we mention Indigenous status in the description of a volunteer experience, mirroring Tilcsik (2011), Ameri et al. (2018), and Namingit, Blankenau, & Schwab (2017). Our second most common signal is language, through listing an Indigenous language along with English as mother tongues in a language section on the resume. We also occasionally signal Native Hawaiian status using Native Hawaiian first names or Native American status by using last names of Navajo ancestry.

We also quantify whether there is an additional bias against Native Americans from Indian reservations. Employers may have negative perceptions of these reservations, as poverty rates there are higher (Collett, Limb, & Shafer, 2016), incomes are lower (Akee & Taylor, 2014), economic conditions are worse (Akee & Taylor, 2014; Gitter & Reagan, 2002; Taylor & Kalt, 2005), and educational quality can be lower (DeVoe, Darling-Churchill, & Snyder, 2008). If Native Americans from Indian Reservations, who move to urban centres, experience discrimination because of their upbringing, then this makes it more difficult for them to move to these urban centres. Thus, migration is a less useful way for them to seek economic opportunities and possibly escape poverty. Estimating this potential bias against Native Americans who move from Indian reservations to urban centres is increasingly vital given increasing migration over time from Indian Reservations to urban centres (e.g., Snipp 1997, Pickering, 2000).

Our large-scale field experiment, based on 13,516 job applications in 11 cities and five occupations, shows no evidence of discrimination in callbacks against Indigenous Peoples in any of these cities and occupations. We similarly find no additional bias against Native Americans who lived on an Indian Reservation. Our results do not vary by which combination of four signals for Indigenous status we use. Our results hold under a battery of robustness checks which include alternative functional forms and clustering, weighting, alternative callback measures, and correcting for the variance of unobservables (Neumark, 2012).

Our finding of no difference in callbacks is uncommon in the literature (Baert, 2018; Neumark, 2018). Of all resume correspondence studies from 2005 to 2018, 80 (78.4%) show significantly negative discrimination, 17 (16.7%) show no statistical evidence of discrimination, and five (4.9%) show significant preference for the minority group (Baert, 2018). However, publication bias may be a problem, as discrimination experiments with null results are less likely to be published (Zigerell, 2018).

We also conduct complementary Gelbach (2016) and Oaxaca-Blinder decompositions of disparities in wages, unemployment rates, unemployment durations, and employment durations. We decompose the extent to which differences in observable characteristics explain these economic disparities, and what portion of the disparities remains unexplained, which could suggest discrimination. This provides an alternative measure of discrimination that, while problematic, is broader than our experiment by, for example, covering more cities and occupations, and covering contexts outside of hiring discrimination.
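As an illustration of the explained and unexplained components, the sketch below implements a textbook two-fold Oaxaca-Blinder decomposition with a single covariate, using the reference group's coefficients to value the difference in characteristics. It is a simplified teaching example under stated assumptions, not the specification used in this paper: the function names and the toy data are hypothetical, and the Gelbach decomposition is not shown.

```python
from statistics import mean


def ols_line(x, y):
    """Return (intercept, slope) from a one-regressor OLS fit."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def oaxaca_blinder(x_ref, y_ref, x_cmp, y_cmp):
    """Split the mean outcome gap (reference minus comparison group).

    explained   = slope_ref * (mean x_ref - mean x_cmp)
    unexplained = (intercept_ref - intercept_cmp)
                  + (slope_ref - slope_cmp) * mean x_cmp
    The two parts sum to the raw gap in mean outcomes.
    """
    a_ref, b_ref = ols_line(x_ref, y_ref)
    a_cmp, b_cmp = ols_line(x_cmp, y_cmp)
    gap = mean(y_ref) - mean(y_cmp)
    explained = b_ref * (mean(x_ref) - mean(x_cmp))
    unexplained = (a_ref - a_cmp) + (b_ref - b_cmp) * mean(x_cmp)
    return gap, explained, unexplained


# Hypothetical toy data: x is years of schooling, y is a log wage.
x_white, y_white = [10, 12, 14, 16], [6.0, 7.0, 8.0, 9.0]
x_aian, y_aian = [8, 10, 12, 14], [4.5, 5.5, 6.5, 7.5]
gap, explained, unexplained = oaxaca_blinder(x_white, y_white, x_aian, y_aian)
```

In this toy data the two groups have the same return to schooling, so the unexplained part comes entirely from the intercept difference. In survey data the unexplained part also absorbs omitted variables, which is one reason the decomposition is only suggestive of discrimination.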

In our preferred decomposition, we find that AIANs have a large, unexplained gap in unemployment rates of 4.3 percentage points and weak evidence of slightly longer unemployment durations. We do not find unexplained gaps in within-occupation wages or in employment durations. For NHPIs, we find an unexplained gap (within occupation) of 4.1% lower wages, a 0.7 percentage point higher unemployment rate, and weak evidence of slightly shorter unemployment durations. When not controlling for occupational differences, the wage gaps increase for both AIANs and NHPIs, suggesting lower access to higher-paying occupations.

Despite not finding discrimination in callbacks against Indigenous Peoples in our audit field experiment, this does not imply that Indigenous Peoples in the United States do not face employment discrimination at all. We discuss how certain choices for our experiment could have affected our estimates, such as the saliency of our racial signals, our choice of cities and occupations, and the relatively higher labour market tightness during our study. Our decomposition of economic disparities, despite the problems with this approach, also suggests that there could be hiring discrimination outside the context of our audit study. This is evidenced by Indigenous Peoples facing higher unexplained unemployment rates and holding lower-wage occupations.

In addition to being the first audit study of discrimination against Indigenous Peoples in North America, our study makes several methodological contributions to the audit study literature more broadly. We conduct numerous robustness checks and discuss other considerations that are crucial to the interpretation of audit studies. These include, but are not limited to, pre-registering our experiment, using more than one method of signalling minority status, testing how salient signals of minority status and other resume features are, controlling for the variance of unobservables (Neumark, 2012), using population and occupation weighting to generate study-population-representative results, testing and discussing how our results vary by occupation and city, and exploring how our results vary by labour market tightness and economic cycles. These are essential checks and discussions for audit studies more broadly, regardless of whether they find discrimination. We also extend the decomposition literature by studying disparities in economic outcomes that are not usually studied (unemployment rates, unemployment durations, and employment durations), and we are among the first to apply the newer Gelbach decomposition.

Field Experiment Design

In this section, we summarize how we designed our field experiment. We discuss issues such as our pre-analysis plan, how we signalled race, how we constructed the resumes, which jobs we targeted, and which cities we selected. Our goal was to design the field experiment to be as externally valid as possible, and we aim in this section to be transparent in our design, especially as our choices and discussion may be helpful to others designing these experiments. Additional details on the design of our field experiment are in Online Appendix A.

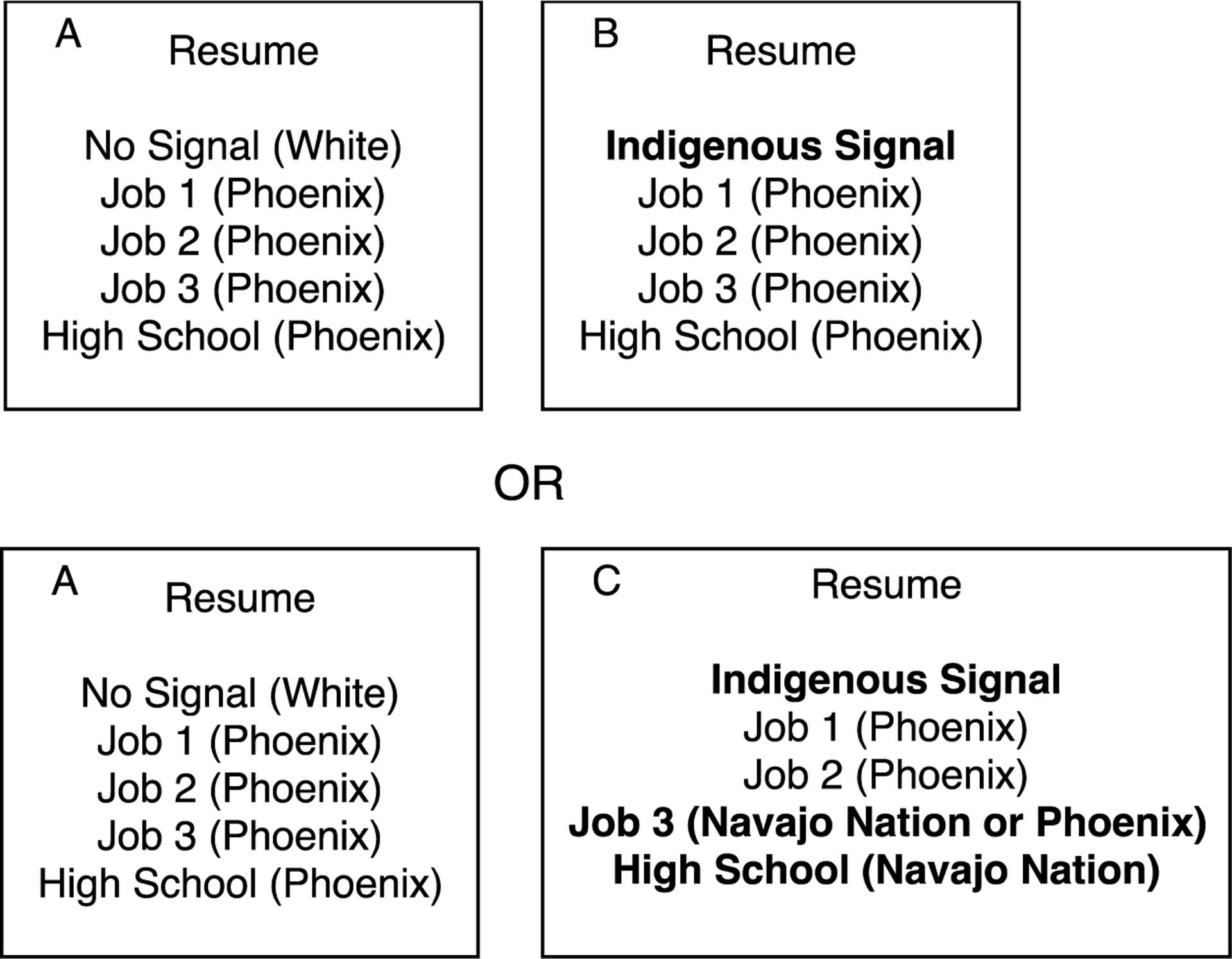

To briefly summarize the general experimental design, we sent two applications in a random order to each job in retail sales, server, kitchen staff, janitor, and security. One application was from an Indigenous applicant (Native American, Native Hawaiian, or Alaska Native), with the Indigenous status signalled in four possible ways (volunteer experience, language, Native Hawaiian first name, Navajo last name). The other application was from a non-Indigenous (white) applicant who had no minority status signals. All applicants had a high school diploma and relevant work experience in the occupation, with resumes constructed partly from publicly posted resumes from a popular job search website. We applied to jobs in 11 cities: Albuquerque, Anchorage, Billings, Chicago, Honolulu, Houston, Los Angeles, New York, Oklahoma City, Phoenix, and Sioux Falls. We measured discrimination by comparing callback rates - interview offers or other positive responses - by race. Figure 1 presents a diagram that summarizes how we generally create and pair resumes.

Figure 1. Example of Pairs of Applicants for Jobs in Phoenix with Navajo Applicants.

Notes: We always sent the A–B pair when the Indigenous applicant was Native Hawaiian or Alaska Native. For pairs with a Native American applicant, we used the A–B or A–C pairs with equal probability. Of the A–C pairs, half have Job 3 for resume type C be a job on the Indian reservation while the other half have the equivalent job in the local city as in resume type A.

Pre-Analysis Plan

Before putting this experiment into the field, we filed a pre-analysis plan and registered it with the American Economic Association’s Randomized Control Trial Registry.11 The goal was to pre-specify any variables, models, sample sizes, or decisions to prevent data mining or p-hacking while simultaneously avoiding tying our hands too much in ways that would negatively affect our ability to conduct this research later (see Olken 2015 and Lahey & Beasley 2018). The pre-analysis plan is also useful given the apparent publication bias in studies of discrimination, where studies that do not find discrimination are less likely to be published (see Zigerell, 2018). We discuss this pre-analysis plan in greater detail in Online Appendix B.

Signalling Indigenous Status

There is no obvious or perfect way to signal Indigenous status, and different possibilities have strengths and weaknesses. Names are a common way to signal race since names always need to be revealed in the application process. However, names could be a weak signal as we discuss in detail later or could signal socioeconomic status in addition to race (Barlow & Lahey, 2018; Darolia, Koedel, Martorell, Wilson, & Perez-Arce, 2016; Fryer & Levitt, 2004; Gaddis, 2017a, 2017b). On the other hand, disclosing minority status through work or volunteer experience (e.g., Tilcsik 2011; Ameri et al. 2018; Namingit, Blankenau, and Schwab 2017) or through listing an Indigenous language under the skills section of a resume, could be stronger signals but also less externally valid since minority groups may prefer to avoid signalling minority status to avoid potential discrimination. It also may be less common to have these sorts of volunteer experiences or to speak an Indigenous language and list it on a resume. Because there is no clear best option for signalling, as Indigenous Populations are very heterogeneous,12 we used four possible ways to signal that the job applicant is Indigenous: volunteer experience, languages spoken, first names for Native Hawaiians, and last names for Native Americans of Navajo ancestry. We discuss these choices in much more detail below.

Our most common signal was volunteer experience only, used for 3,029 of the Indigenous resumes, followed by language only (1,723), Native Hawaiian first names (475), and then Navajo last names (222). We also sometimes combine racial signals, using two signals (three signals) for 823 (92) resumes. In addition to these racial signals, we also signalled that some Native American applicants grew up on an Indian reservation by listing that they had graduated from a high school on an Indian reservation.

Table 1 presents our matching of possible racial signals to Indigenous groups. We explain these signals and why we assign them in this way in more detail below (with sample resumes in Online Appendix H). Overall, our goal was to ensure that all signals were appropriate for each tribal group so that any signals we use in combination are compatible.13 Our intent was not to study discrimination for particular tribal groups (e.g., comparing Navajo to Osage), but rather to ensure that our signals were as valid as possible.

Table 1. Summary of Possible Racial Signals by Indigenous Group

| Indigenous Group | Signal: Volunteer Experience | Signal: Language | Signal: First Name | Signal: Last Name | Indian Reservation Possible |
|---|---|---|---|---|---|
| Navajo | X | X (Navajo) | | X | X (Navajo Nation) |
| Apache | X | X (Apache) | | | X (Fort Apache or San Carlos) |
| Blackfeet | X | | | | X (Blackfeet) |
| Tohono O’odham | X | X (Pima) | | | X (Tohono O’odham) |
| Oglala Lakota | X | X (Lakota) | | | X (Pine Ridge) |
| Osage | X | | | | X (Osage) |
| Alaska Native | X | X (Yup’ik) | | | |
| Native Hawaiian | X | X (Hawaiian) | X | | |

Notes: The language signal is not possible for Blackfeet or Osage because Indigenous language use for those tribes is not sufficiently common (see Online Appendix Table A1).

Volunteer experience as an Indigenous signal.

Volunteer and work experience have been used before to signal minority status. Tilcsik (2011) and others signal sexual orientation through volunteer experience with a lesbian, gay, bisexual, and transgender (LGBT) group. Ameri et al. (2018) signal disability partly through a relevant volunteer experience as an accountant at a fictional disability group. Namingit, Blankenau, & Schwab (2017) disclose an illness-related gap in employment history partly through a volunteer experience (Cancer survivor’s group) on a resume. Relatedly, Baert & Vujić (2016, 2018) find that volunteer experience boosts callbacks, especially for immigrants.

We follow a similar approach by using volunteer experience as one way to signal race, specifically experience as a youth mentor with the Big Brothers and Big Sisters of America. In this volunteer experience, it is typical for “Bigs” to be matched with “Littles” based on race or other socioeconomic factors to improve mentorship. We list this in a volunteer experience section with a title such as “Youth Mentor,” and a description such as: “I mentored youth in my [Native American/Native Hawaiian/Alaska Native] community. I worked with youth on social skills, academics, and understanding our [Native American/Native Hawaiian/Alaska Native] culture.” For an example, see the resumes presented in Online Appendix H.

A concern with using a volunteer experience to signal race is that it could be valuable to employers, independent of the racial signal. To control for this, all resumes, regardless of race or signals used, list a volunteer experience. For the white resume in a pair where the Indigenous resume has the volunteer signal, the white resume has a volunteer experience either at a local Boys & Girls Club or at a local food bank. For any resume pair where the Indigenous applicant does not signal through volunteer experience, one resume chosen at random gets the Big Brothers and Big Sisters volunteer experience without a mention of race, and the other resume gets either Boys & Girls Club or food bank. Thus, we can directly identify the effect of the Big Brothers and Big Sisters volunteer experience, relative to the control volunteer experiences, separately from its use as a racial signal. We find no differences in callback rates by the type of volunteer experience.

Language as an Indigenous signal.

We found few audit studies of discrimination that used language as a signal of minority status (one example may be Oreopoulos, 2011, to some extent). The American Community Survey codes 169 AIAN languages, plus Hawaiian and Hawaiian Pidgin. While most Indigenous people primarily speak English, Indigenous languages are somewhat common: 26.8% of AIANs spoke a language other than English at home in 2014, compared to 21.2% nationally (U.S. Census Bureau, 2015). Among those who identified as NHPI alone and were born in the United States, 30.3% spoke a language other than English at home (U.S. Census Bureau, 2014). Since it is rare for non-Indigenous people to speak an Indigenous language, especially as a native speaker, this makes for a robust racial signal. We thus used Indigenous languages to signal Indigenous status in some cases for most (but not all) of the tribal groups since Indigenous language use varies by tribal group. Table 1 presents the languages that we selected for each Indigenous group, and Online Appendix A presents our analysis of Census data to determine the frequency of each Indigenous language and thus to what extent signalling through language is appropriate.

It is unclear how employers would view this signal.14 To investigate this, we added the Irish Gaelic language as a control to 10% of the white resumes. Irish Gaelic, like Indigenous languages, is uncommonly used in the United States. It is also one that is unlikely to signal that the applicant might have worse English skills. While this control is imperfect, we find no difference in callback rates between resumes with an Indigenous language or Irish Gaelic or between resumes with Irish Gaelic and no languages listed.

First name as an Indigenous signal (Native Hawaiian only).

We signalled race through first names for some Native Hawaiian applicants only. To determine possible first names, we first considered names within the top 100 baby names from Social Security records for the state of Hawaii in order to get a list of common first names only.15 We then investigated which of these popular names were Native Hawaiian, using various sources.16 We settled on three male names: Kekoa, Ikaika, and Keoni, and one female name: Maile. When using the first name as a racial signal, we randomly assigned one of these names, conditional on gender. We did not use first names to signal race for Alaska Natives or Native Americans because there was little information on first names for these populations.17

Last name as an Indigenous signal (Native American, Navajo, only).

To find Indigenous-specific last names, we use tabulations from the 2000 Census of the racial composition of each last name.18 Unfortunately, these data also do not include information on NHPI individuals, so we can only use this data to determine names for AIAN individuals. We used this data and other sources on the ancestry of names to select four names of Navajo origin: Begay, Yazzie, Benally, and Tsosie. These are among the most common last names that are almost exclusively held by individuals who identify as AIAN alone. Online Appendix A provides more details of our process for selecting these names.

We also considered the possibility of assigning some Native American last names that were perhaps stronger signals (e.g., Sittingbull, Whitebear). However, these names are rare19 and are difficult to assign appropriately to tribal groups. Further, we had concerns that the names signalled stereotypical tropes of Native Americans from popular media (McLaurin, 2012; Tan, Fujioka, & Lucht, 1997).20 That said, these sorts of names would have been a stronger signal of Indigenous status, an issue that may have affected our results, as we discuss in detail later.

Assigning racial signals.

Table 1 summarizes which of the signals we used for each tribal or Indigenous group. We allocated Indigenous signals as follows. For Navajo and Native Hawaiian applicants, where three signals were possible, we assigned signals with the following probabilities: name only (30%), language only (25%), volunteer only (25%), name and language (5%), name and volunteer (5%), language and volunteer (5%), and all three (5%). For Alaska Native, Apache, Tohono O’odham, and Oglala Lakota applicants, where language and volunteer were possible, we assigned signals with the following probabilities: language only (40%), volunteer only (40%), and both (20%). For Osage and Blackfeet applicants, only the volunteer signal was possible. Assigning more than one signal allowed us to test whether discrimination increased with higher saliency. Table 8 later in the paper presents how often we use each combination of signals, although the volunteer signal is by far the most common, followed by the language signal, and then the name signals.
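The allocation rule described above can be sketched as a weighted random draw. This is a minimal illustration that uses the probabilities stated in the text; the dictionary layout, the function name `assign_signals`, and the use of Python's `random.choices` are assumptions for illustration and do not describe the software actually used to generate the resumes.

```python
import random

# Probabilities from the text for groups where three signals were possible.
THREE_SIGNAL_SCHEME = {
    ("name",): 0.30,
    ("language",): 0.25,
    ("volunteer",): 0.25,
    ("name", "language"): 0.05,
    ("name", "volunteer"): 0.05,
    ("language", "volunteer"): 0.05,
    ("name", "language", "volunteer"): 0.05,
}
# Probabilities from the text for groups with language and volunteer signals.
TWO_SIGNAL_SCHEME = {
    ("language",): 0.40,
    ("volunteer",): 0.40,
    ("language", "volunteer"): 0.20,
}
# Groups for which only the volunteer signal was possible.
VOLUNTEER_ONLY_SCHEME = {("volunteer",): 1.0}

SCHEME_BY_GROUP = {
    "Navajo": THREE_SIGNAL_SCHEME,
    "Native Hawaiian": THREE_SIGNAL_SCHEME,
    "Alaska Native": TWO_SIGNAL_SCHEME,
    "Apache": TWO_SIGNAL_SCHEME,
    "Tohono O'odham": TWO_SIGNAL_SCHEME,
    "Oglala Lakota": TWO_SIGNAL_SCHEME,
    "Osage": VOLUNTEER_ONLY_SCHEME,
    "Blackfeet": VOLUNTEER_ONLY_SCHEME,
}


def assign_signals(group, rng):
    """Draw one combination of signals for an applicant from `group`."""
    scheme = SCHEME_BY_GROUP[group]
    combos = list(scheme)
    weights = [scheme[combo] for combo in combos]
    return rng.choices(combos, weights=weights, k=1)[0]


# Example draw with a seeded generator so that the draw is reproducible.
example = assign_signals("Navajo", random.Random(2024))
```

Passing the random number generator in explicitly keeps the assignment reproducible, which helps when an assignment procedure needs to be audited against a pre-analysis plan.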

Table 8. Discrimination Estimates by Indigenous Signal Type

| | (1) | (2) |
|---|---|---|
| Volunteer Only (β1) (n = 3,029) | −0.006 (0.010) | −0.006 (0.010) |
| Language Only (β2) (n = 1,723) | 0.006 (0.010) | … |
| Language Only (Native A. or Alaska N.) (n = 1,356) | … | 0.010 (0.012) |
| Language Only (Native Hawaiian) (n = 346) | … | −0.009 (0.021) |
| First Name (Native Hawaiian) Only (β3) (n = 475) | −0.017 (0.018) | −0.017 (0.012) |
| Last Name (Navajo) Only (β4) (n = 222) | −0.007 (0.026) | −0.007 (0.026) |
| Two Signals (β5) (n = 823) | 0.003 (0.015) | … |
| Language + Last Name (n = 78) | … | 0.009 (0.033) |
| Volunteer + Last Name (n = 89) | … | −0.040 (0.030) |
| Language + First Name (n = 112) | … | −0.011 (0.032) |
| Volunteer + First Name (n = 117) | … | 0.023 (0.041) |
| Volunteer + Language (Native A. or Alaska N.) (n = 578) | … | 0.002 (0.019) |
| Volunteer + Language (Native Hawaiian) (n = 125) | … | 0.043 (0.054) |
| Three Signals (β6) (n = 92) | 0.038 (0.037) | 0.007 (0.062) |
| Boys & Girls Club (Volunteer Control) (α1) (n = 3,298) | −0.007 (0.009) | −0.007 (0.009) |
| Food Bank (Volunteer Control) (α2) (n = 3,460) | −0.006 (0.009) | −0.005 (0.009) |
| Irish Gaelic (Language Control) (α3) (n = 831) | −0.017 (0.013) | −0.016 (0.013) |

Notes: N=13,516 for the entire sample and the n in each row corresponds to the number of resumes with that feature. See also the notes to Table 6. Regressions include the “Regular Controls” and occupation and city fixed effects from Table 6 (Column (2)). Different from zero at 1-per cent level (***), 5-per cent level (**) or 10-per cent level (*).

Indian Reservation Upbringing

We assigned half of the Native American applicants an upbringing on an Indian reservation rather than in the city. We signalled this through having graduated from a high school on an Indian reservation, rather than a local high school. We considered the seven Indian reservations shown in Table 1. These fall within the top ten most populous reservations (Norris et al., 2012). We used one to three high schools per reservation, depending on availability. We specifically chose high schools with names that were a clear signal that the high school was on an Indian reservation. We also specified the location of the high school as “City, Reservation Name, State” to ensure the saliency of this signal. For the white, Native Hawaiian, and Alaska Native resumes, and the other half of the Native American resumes without an Indian reservation upbringing, we assigned one of two to four high schools local to the city (from Neumark, Burn, & Button, 2019, and Neumark, Burn, Button, & Chehras, 2019).

For half of the Indigenous applicants with an Indian reservation upbringing, we also had their first job out of high school (the least recent job, Job 3 in Figure 1) listed on the resume as having been on the reservation, while the others had a local job. In addition to strengthening the reservation signal, this on-reservation work experience is realistic for many Indigenous people who grew up on an Indian reservation and later migrated to a city. Since we randomized the addition of this on-reservation work experience, we can identify whether this has any independent effect beyond the location of the high school. A typical entry-level job on a reservation that was also common off a reservation, according to publicly posted resumes, was a cashier at a grocery store. Thus, for pairs of applicants where we sent Native American applicants, we set Job 3 for both resumes to be a cashier at a grocery store, with the store location either being on the reservation or in the local city. All subsequent jobs are in the targeted occupation.

Employers may prefer local or non-rural applicants, which challenges our ability to identify differential treatment by Indian Reservation upbringing. We investigate this by randomly assigning a rural upbringing to white resumes in pairs where we sent a Native American resume. We added a high school in a small town to 25% of these white resumes, and then in half of these, we also assigned a Job 3 location in that same rural town, mirroring the reservation job.21 Adding reservation signals may also increase the likelihood that the employer detects that the applicant is Native American. We attempted to control for this by sometimes assigning Indigenous applicants to have more than one racial signal to see if this affects results (it does not).
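The randomization of upbringing features for pairs that include a Native American applicant can be sketched as follows. The shares (one half, one half, 25%, one half) come from the text. The function name, the dictionary keys, and the assumption that the white applicant's rural draw is independent of the Native American applicant's reservation draw are illustrative only.

```python
import random


def assign_upbringing(rng):
    """Draw upbringing features for one pair with a Native American applicant."""
    # Half of Native American applicants attended a high school on a reservation.
    reservation_school = rng.random() < 0.5
    # Of those, half also list Job 3 as having been on the reservation.
    reservation_job = reservation_school and rng.random() < 0.5
    # 25% of white resumes in these pairs get a small-town high school
    # (assumed here to be drawn independently of the reservation draw).
    white_rural_school = rng.random() < 0.25
    # Of those, half also list Job 3 in the same rural town.
    white_rural_job = white_rural_school and rng.random() < 0.5
    return {
        "reservation_school": reservation_school,
        "reservation_job": reservation_job,
        "white_rural_school": white_rural_school,
        "white_rural_job": white_rural_job,
    }


# Simulate many pairs to check the realized shares against the design.
rng = random.Random(7)
draws = [assign_upbringing(rng) for _ in range(10_000)]
share_reservation = sum(d["reservation_school"] for d in draws) / len(draws)
share_rural = sum(d["white_rural_school"] for d in draws) / len(draws)
```

Because the job location is only randomized among resumes that already carry the school signal, the effect of the on-reservation (or rural) job can be separated from the effect of the school location.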

Cities

We focused on cities where more Indigenous Peoples live to get more population-representative estimates of discrimination. We applied for jobs in eight of the ten cities with the most people who identify as AIAN (Norris et al., 2012). These are, in decreasing order of AIAN population: New York, Los Angeles, Phoenix, Oklahoma City, Anchorage, Albuquerque, Chicago, and Houston.22 We then added two additional smaller cities with a higher proportion who are AIAN: Billings and Sioux Falls. These cities give us additional variation in the proportion of the population that is Indigenous, which lends power to testing whether discrimination varies by the size of the minority population, as discussed in sociology,23 psychology,24 and tested in some audit studies (e.g., Giulietti, Tonin, & Vlassopoulos, 2019; Hanson & Hawley, 2011). Billings and Sioux Falls are also noteworthy because these cities increase the geographical coverage of our experiment and are near a few Indian reservations of interest (e.g., Pine Ridge) (Pickering, 2000).

To study discrimination against Native Hawaiians, we applied to jobs in Honolulu, the city with the most Native Hawaiians. We also applied for some jobs in Los Angeles with Native Hawaiian applicants, as Los Angeles is the most popular mainland city for Native Hawaiians to live in (Hixson, Hepler, & Kim, 2012). While we cover more cities than most prior resume correspondence studies, we cannot study discrimination in all contexts. For example, it is often untenable in resume correspondence studies to identify and apply for jobs in small cities or rural areas, which in turn makes it difficult to study discrimination in these contexts. We discuss these and related issues and their implications in detail later.

Occupations

We chose common occupational categories where there were many jobs posted online that usually allowed applications by email and were common for applicants of about age 30. Tables 2 and 3 show the popularity of our selected occupations by race and sex for those ages 25 to 35, based on the Current Population Survey (CPS). These are sorted and ranked based on the per cent of whites of that sex who are in the occupation. Online Appendix A presents more detailed tables and additional information on how we selected occupations.

Table 2. Frequency of our Selected Occupations for Men, by Race

| Occupation (Rank) | Proportion of Entire Race: White | Proportion of Entire Race: AIAN | Proportion of Entire Race: NHPI | Ratio to White: AIAN | Ratio to White: NHPI |
|---|---|---|---|---|---|
| Retail salespersons 41–2031 (#5) (retail sales) | 2.18% | 0.83% | 0.46% | 0.0119 | 0.0020 |
| Grounds maintenance workers 37–3010 (#6) (janitor) | 2.06% | 2.36% | 2.11% | 0.0359 | 0.0097 |
| Cooks 35–2010 (#9) (kitchen staff) | 1.65% | 3.73% | 2.51% | 0.0707 | 0.0144 |
| Janitors and building cleaners 31–201X (#10) (janitor) | 1.49% | 1.68% | 2.00% | 0.0355 | 0.0128 |
| Waiters and waitresses 35–3031 (#24) (server) | 0.94% | 0.57% | 0.08% | 0.0189 | 0.0008 |
| Cashiers 41–2010 (#31) (retail sales) | 0.84% | 1.26% | 0.50% | 0.0469 | 0.0056 |
| Security guards and gaming surveillance (#37) (security) | 0.74% | 1.44% | 2.74% | 0.0614 | 0.0353 |

Notes: Data come from all months of the 2015 Current Population Survey. Estimates are weighted using person-level sampling weights. Occupations are ranked based on the decreasing share of white men that have this occupation out of all white men. White corresponds to those who report that they are white only, while AIAN (NHPI) correspond to those who report AIAN (NHPI) either alone or in combination with another race. Our sample includes those aged 25 to 35 only. Ratio to white presents the number of AIAN (NHPI) individuals in the occupation for each white individual. In parentheses is the larger occupation grouping to which this finer CPS occupation belongs. See Online Appendix A and Online Appendix Table A3 for a larger table with additional occupations.

Table 3. Frequency of our Selected Occupations for Women, by Race

| Occupation (Rank) | Proportion of Entire Race: White | Proportion of Entire Race: AIAN | Proportion of Entire Race: NHPI | Ratio to White: AIAN | Ratio to White: NHPI |
|---|---|---|---|---|---|
| Cashiers 41–2010 (#4) (retail sales) | 2.65% | 3.30% | 3.25% | 0.0503 | 0.0113 |
| Waiters and waitresses 35–3031 (#5) (server) | 2.65% | 0.80% | 0.47% | 0.0122 | 0.0016 |
| Retail salespersons 41–2031 (#8) (retail sales) | 2.00% | 1.94% | 1.50% | 0.0391 | 0.0069 |
| Cooks 35–2010 (#27) (kitchen staff) | 1.00% | 1.11% | 1.81% | 0.0449 | 0.0167 |
| Bartenders 35–3011 (#34) (server) | 0.81% | 0.32% | 0.86% | 0.0161 | 0.0098 |
| Janitors and building cleaners 31–201X (#38) (janitor) | 0.75% | 0.40% | 1.03% | 0.0217 | 0.0127 |

Notes: See the notes to Table 2. Occupations are ranked based on the decreasing share of white women that have this occupation out of all white women. See Online Appendix A and Online Appendix Table A4 for a larger table with other occupations.

We settled on five broad occupations with high ranks: retail sales, kitchen staff, server, janitors, and security guards.25 We used male and female applicants for all occupations except security guard as women infrequently hold that occupation.

Education

All applicants had a high school diploma only. We focused on this group for a few reasons. First, it is much less common for Indigenous Peoples to have a post-secondary education.26 Second, advanced degrees are usually not required in our selected occupations, but high school education almost always is. Third, we wanted to focus on less-educated individuals who might be closer to the margins of poverty.

Job Histories

We modelled our job histories and descriptions off of publicly posted resumes from a popular job search website. We randomly assigned three jobs with matching descriptions from a list of twelve possible jobs per city-occupation combination. The employer and job title came from real resumes or from active businesses. We randomly generated job tenure distributions, conditional on all three jobs spanning high school graduation to near the present.27 All applicants within each pair were either both employed with 25% probability or both unemployed (as of the month before the job application) with 75% probability. Since kitchen staff jobs are very heterogeneous, covering experienced cooks down to entry-level dishwashers, we created separate resumes for cooks and more entry-level positions (e.g., food preparation, fast food, dishwasher).28

Age and Names

We set the age of all applicants to be approximately 29 to 31, via a high school graduation year of 2004 or 2005. We used common first and last names for age 30 from Neumark, Burn, & Button (2019), who got these names from Social Security tabulations of popular names by age.

Residential Addresses, Phone Numbers, and Email Addresses

Within each pair of applications sent to a job, the two applicants had different residential addresses, taken from Neumark, Burn, & Button (2019) and Neumark, Burn, Button, & Chehras (2019). We assigned each applicant a unique email address and one of 88 phone numbers.29

Collecting Data

Pairing Applicants to Apply to Jobs

After creating the final resumes, we combined them into pairs to apply to each job (see Figure 1). Each pair always had one white and one Indigenous applicant. Table 4 presents how we matched Indigenous tribal groups to cities. We did this so that, in cities with a high Indigenous proportion, our Indigenous applicants reflected the Indigenous groups in the area. All other resume characteristics were randomized with replacement except the following, which we randomized without replacement within each pair so that the two resumes were sufficiently differentiated: first and last names, resume template styles, addresses, email address domain, employers listed in the job history, exact phrasing describing skills or jobs on the resume or cover letter, and the specific volunteer experience.

Table 4. Applicant Types Sent by City

| City | Applicant Types Sent |
|---|---|
| Albuquerque | White (A), Navajo (60%)/Apache (40%) (B or C, 50% probability each) |
| Anchorage | White (A), Alaska Native (B) |
| Billings | White (A), Blackfeet (B or C, 50% probability each) |
| Chicago | White (A), Navajo (25%)/Apache (15%)/Blackfeet (15%)/Osage (15%)/Tohono O’odham (15%)/Oglala Lakota (15%) (B or C, 50% probability each) |
| Honolulu | White (A), Native Hawaiian (B) |
| Houston | See Chicago |
| Los Angeles | White (A), Native Hawaiian (B) (25%) or White (A), Navajo (18.75%)/Apache (11.25%)/Blackfeet (11.25%)/Osage (11.25%)/Tohono O’odham (11.25%)/Oglala Lakota (11.25%) (B or C, 50% probability each) |
| New York | See Chicago |
| Oklahoma City | White (A), Osage (B or C, 50% probability each) |
| Phoenix | White (A), Navajo (40%)/Apache (20%)/Tohono O’odham (40%) (B or C, 50% probability each) |
| Sioux Falls | White (A), Oglala Lakota (B or C, 50% probability each) |

Notes: Two applications, one Indigenous and one white, were sent in random order to each job ad. A, B, and C refer to the resume types presented in Figure 1, where A is always a white applicant, B is always an Indigenous applicant who grew up in the urban centre, and C is always a Native American applicant who grew up on an Indian reservation.
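The assignment rules in Table 4 can be illustrated with a short sketch. This is not the authors' code: the function name `draw_pair`, the dictionary layout, and the use of Python's `random` module are assumptions made for illustration, and only three cities are included for brevity. The probabilities themselves are copied from Table 4.

```python
import random

# Tribal-group probabilities by city, copied from Table 4 (three cities shown).
# The dictionary layout and every name below are illustrative assumptions.
GROUP_PROBS = {
    "Albuquerque": {"Navajo": 0.60, "Apache": 0.40},
    "Phoenix": {"Navajo": 0.40, "Apache": 0.20, "Tohono O'odham": 0.40},
    "Sioux Falls": {"Oglala Lakota": 1.00},
}


def draw_pair(city, rng):
    """Return the two applications for one job ad, in random sending order."""
    groups = list(GROUP_PROBS[city])
    weights = [GROUP_PROBS[city][g] for g in groups]
    group = rng.choices(groups, weights=weights, k=1)[0]
    # Type B grew up in the city; type C grew up on a reservation (50% each).
    resume_type = rng.choice(["B", "C"])
    pair = [
        {"type": "A", "group": "White"},
        {"type": resume_type, "group": group},
    ]
    rng.shuffle(pair)  # the two applications are sent in random order
    return pair


rng = random.Random(0)  # fixed seed so the draw is reproducible
pair = draw_pair("Phoenix", rng)
```

Cities such as Anchorage and Honolulu, where only type B resumes were sent, would need a separate branch that skips the B-or-C draw.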

Sample Size

In our pre-analysis plan, we conducted a power analysis to determine how many observations would be necessary to detect meaningful differences in callback rates between Indigenous and white applicants. Based on previous studies, we decided that we wanted to have the power to detect at least a three-percentage point difference in the callback rate. Through our calculations, we anticipated needing to apply to 4,211 jobs (8,422 applications). We ultimately decided to collect more data (13,516 total applications) to have the power to detect differences smaller than three percentage points and to detect other moderators of discrimination with more precision (e.g., reservation upbringing, geography, gender, and occupation). We followed our commitment in our pre-analysis plan to do our principal analysis both with the final sample size (13,516) and with 8,422 applications. Our results are similar either way. See Online Appendix B for this analysis and additional details about our pre-analysis plan and power analysis.
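To give a sense of how such a calculation works, the sketch below uses the textbook normal-approximation formula for comparing two independent proportions. It is an illustration only: the 20% baseline callback rate, the 5% two-sided significance level, and the 80% power target are assumed placeholder values, and the formula ignores the paired design and clustering. It is therefore not expected to reproduce the 4,211 figure from the pre-analysis plan, which is documented in Online Appendix B.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(p_control, diff, alpha=0.05, power=0.80):
    """Applications per group needed to detect a callback gap of `diff`.

    Normal approximation for two independent proportions; all defaults
    are assumptions, not values taken from the pre-analysis plan.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = z.inv_cdf(power)
    p_treat = p_control - diff
    variance = p_control * (1 - p_control) + p_treat * (1 - p_treat)
    return ceil((z_alpha + z_beta) ** 2 * variance / diff ** 2)


# Hypothetical inputs: 20% baseline callback rate, 3 or 2 point gaps.
n_3pp = n_per_group(0.20, 0.03)
n_2pp = n_per_group(0.20, 0.02)
```

The required sample grows roughly with the inverse square of the gap, which is why detecting differences smaller than three percentage points calls for substantially more applications.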

Identifying Job Ads

We identified viable jobs to apply for using a popular job-posting website (see Online Appendix B for more details). The jobs had to fit our occupational categories, be non-supervisor roles, and not require in-person applications, inquiries by phone, or application through an external website. We ignored job ads that required documents that we did not prepare (e.g., headshots or salary history) or required skills,30 training, or education that our resumes did not have. We applied for jobs between March 2017 and December 2017.

Emailing Applications

We used a different email subject line, opening, body, closing, and signature order for each application in a pair to ensure that applicants from the same pair were not perceived as related. We based some of these scripts on examples and advice from job search experts.31 The content of our emails mirrored cover letters, and we followed the standard practice for these jobs of including this content in the body of the email (requests for separate cover letters were rare).

Coding Employer Responses

We coded employer responses as positive (e.g., “Please call to schedule an interview”), ambiguous (e.g., “We reviewed your application and have a few questions”), or negative (e.g., “We have filled the position”). To avoid having to classify the heterogeneous ambiguous responses through a subjective process, we follow others (e.g., Neumark, Burn, & Button, 2019) and treat both positive and ambiguous responses as callbacks, but our results are robust to using strict interview requests only (Online Appendix Table D9).

Data Analysis Methodology

We first assessed callback rates by race without regression controls. For this analysis, we computed raw callback rates by race and used Fisher’s exact test (two-sided) to test whether callback rates differed statistically significantly by race. We pooled all Indigenous groups together to test for a difference between white and Indigenous applicants. Then we compared Native American, Alaska Native, Native Hawaiian, and white applicants separately.

We then estimated the following regression:

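$$
\begin{aligned}
\text{Callback}_{i} ={}& \beta_0 + \beta_1 \text{NA}_i + \beta_2 \text{Reservation}_i + \beta_3 \text{Reservation Job}_i + \beta_4 \text{AN}_i + \beta_5 \text{NH}_i \\
&+ \alpha_1 \text{Rural}_i + \alpha_2 \text{Rural Job}_i + \text{Controls}_i \delta + \mu_o + \mu_c + \varepsilon_i \qquad [1]
\end{aligned}
$$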

where i indexes each application, o indexes the occupation, and c indexes the city. NA is an indicator variable for being Native American, AN is an indicator variable for being Alaska Native, NH is an indicator variable for being Native Hawaiian, Reservation is an indicator variable for being a Native American applicant who grew up on an Indian Reservation, Reservation Job is an indicator variable for being a Native American applicant who grew up on an Indian Reservation and their oldest job listed on the resume (first job out of high school) was on the reservation, Rural is an indicator variable for being a white applicant who grew up in a rural area, and Rural Job is an indicator variable for being a white applicant who grew up in a rural town and their oldest job was in the rural town. White is the excluded racial category, so all estimates reflect callback differences relative to white applicants. μo and μc are occupation and city fixed effects, respectively. The city fixed effects are important to account for how we sent different types of Indigenous resumes by city (see Table 4). Controls is a vector of resume controls. We used three versions: (1) no resume controls, (2) regular controls32 (the default for all our analysis), and (3) full controls, which includes additional controls33 on top of the regular controls.

Following Neumark, Burn, & Button (2019), we cluster our standard errors on the resume. There may also be random influences at the level of the job ad, which would suggest clustering on the job, or two-way clustering on the job and the resume simultaneously (Cameron, Gelbach, & Miller, 2011). Our results are the same regardless of how we cluster (Online Appendix D).

After this primary analysis, we ran regressions to analyse callback rates for Indigenous Peoples, compared to whites, separately by occupation, by occupation and gender, by city, and by the Indigenous signal(s) we used. In these and all subsequent analyses, we use the regular controls and include occupation and city fixed effects.

Results

Effects by Race and Indian Reservation Upbringing

Table 5 presents the raw callback rates by race. The callback rates were nearly identical for whites and Indigenous Peoples at 19.8% and 20.1%, respectively. By subgroup, the callback rates were 19.6% for Native Americans, 21.3% for Native Hawaiians, and 25.5% for Alaska Natives. However, these estimates do not account for clustering, and, more importantly, they do not control for city-specific callback rates, which is important since callback rates in general are higher in Honolulu and especially in Anchorage (24.8% versus 19.8%).

Table 5. Mean Callback Differences by Indigenous Status

| Callback: | No | Yes | Total |
|---|---|---|---|
| White | 80.2% (5,421) | 19.8% (1,337) | 6,758 |
| Indigenous | 79.9% (5,397) | 20.1% (1,361) | 6,758 |
| Native American | 80.4% (4,187) | 19.6% (1,018) | 5,205 |
| Native Hawaiian | 78.7% (1,000) | 21.3% (271) | 1,271 |
| Alaska Native | 74.5% (210) | 25.5% (72) | 282 |
| Total | 80.0% (10,818) | 20.0% (2,698) | 13,516 |

| Test of independence (p-value): | White | N.A. | N.H. |
|---|---|---|---|
| White | … | … | … |
| Native American | 0.763 | … | … |
| Native Hawaiian | 0.165 | 0.132 | … |
| Alaska Native | 0.022 | 0.017 | 0.153 |

Notes: The p-values reported for the tests of independence are from Fisher’s exact test (two-sided).

Table 6 presents the estimates from Equation [1]. The regression without controls (column (1)) shows again that Alaska Natives have a higher callback rate. However, adding city fixed effects (column (2)) removes this difference. In the regression with regular controls and occupation and city fixed effects, our preferred and default specification (column (2)), Native American applicants (without a reservation upbringing) have only a 0.4 percentage point lower callback rate, but this is not statistically significant. Alaska Natives (Native Hawaiians) have a 0.5 percentage point higher (0.3 percentage point lower) callback rate, but this is again not statistically significant.

Table 6. Callback Estimates by Race and Indian Reservation Upbringing

| | No Controls (1) | Regular Controls (2) | Full Controls (3) |
|---|---|---|---|
| Native American (β1) | −0.011 (0.010) | −0.004 (0.009) | −0.005 (0.009) |
| … x Reservation (β2) | 0.000 (0.015) | −0.000 (0.012) | −0.000 (0.012) |
| … x Reservation x Reservation Job (β3) | 0.022 (0.020) | 0.006 (0.016) | 0.005 (0.016) |
| Alaska Native (β4) | 0.052** (0.026) | 0.005 (0.035) | 0.003 (0.035) |
| Native Hawaiian (β5) | 0.012 (0.013) | −0.003 (0.013) | −0.002 (0.013) |
| Non-Reservation Rural (α1) | −0.038** (0.016) | −0.016 (0.013) | −0.015 (0.013) |
| … x Rural Job (α2) | 0.018 (0.023) | 0.002 (0.018) | 0.002 (0.018) |
| Occupation and city fixed effects: | No | Yes | Yes |
| Callback Rate for White: | 19.8% | | |

Notes: N=13,516. Standard errors are clustered at the resume level. Significantly different from zero at 1-per cent level (***), 5-per cent level (**) or 10-per cent level (*). The regular controls are indicator variables for employment status, added quality features (Spanish, no typos in the cover letter, better cover letter, and two occupation-specific skills), gender, resume sending order, and volunteer experience. The full controls include the regular controls plus the graduation year (we randomize between two years), the start month of the oldest job (job 3), the gap (in months) between job 3 and job 2, the gap between job 2 and job 1, the duration of the volunteer experience (in months), and indicator variables for the naming structure for the resume, the version of the e-mail script, the formatting of the e-mail, the structure of the subject line in the e-mail, the opening greeting in the e-mail, the structure of the e-mail, the structure of the e-mail signature, the domain of the e-mail address, and the voicemail greeting. We also tested for β2 = α1 (β3 = α2) in column (2) and found that these were not statistically different, with a p-value of 0.2830 (0.9186).

After adding the regular controls, city fixed effects, and occupation fixed effects (column (2)), the callback rates are identical for Native Americans with and without a reservation upbringing. Callback rates are 0.6 percentage points higher for those who worked on the Indian reservation, compared to those who just went to high school on the reservation, but this is not statistically significant. Our estimates are robust to the inclusion of the full set of controls (column (3)). Therefore, these regression estimates show no evidence of hiring discrimination.
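One way to read these null results is through the effect sizes that the estimates can rule out. The sketch below converts the column (2) point estimates and standard errors from Table 6 into approximate 95% confidence intervals. The intervals rely on a normal approximation that we assume here; they are an illustration and not figures reported in the paper.

```python
from statistics import NormalDist

# Point estimates and clustered standard errors from Table 6, column (2).
ESTIMATES = {
    "Native American": (-0.004, 0.009),
    "Alaska Native": (0.005, 0.035),
    "Native Hawaiian": (-0.003, 0.013),
}


def conf_interval(estimate, std_err, level=0.95):
    """Normal-approximation confidence interval (an assumed method)."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return estimate - z * std_err, estimate + z * std_err


for group, (est, se) in ESTIMATES.items():
    low, high = conf_interval(est, se)
    print(f"{group}: {100 * low:+.1f} to {100 * high:+.1f} percentage points")
```

Under these assumptions, the interval for Native American applicants runs from roughly −2.2 to +1.4 percentage points, while the interval for Alaska Natives is considerably wider because of the larger standard error.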

Effects by Occupation and Gender

Table 7 presents discrimination estimates by occupation from a similar regression to Equation [1], but pooling Indigenous groups into one indicator variable, and interacting this with each occupation. The callback rates are nearly identical in all occupations.34

Table 7. Discrimination Estimates by Occupation

| Indigenous | Estimate | Callback Rate for Whites | N |
|---|---|---|---|
| … x Retail | 0.004 (0.013) | 17.3% | 2,926 |
| … x Server | −0.001 (0.013) | 16.4% | 2,774 |
| … x Kitchen | −0.006 (0.012) | 22.2% | 4,858 |
| … x Janitor | −0.001 (0.016) | 16.8% | 1,652 |
| … x Security | 0.011 (0.022) | 27.4% | 1,306 |

In Online Appendix Table D4, we present estimates by occupation and gender. These show no racial or intersectional discrimination. We find a strong preference for female applicants for server (retail) positions: a 6.5 (3.7) percentage point higher callback rate for white women compared to white men (who have a callback rate of 13.3% [16.3%]).

Effects by City

Online Appendix Table D5 shows results by city. Callback differences are within two percentage points for all cities except Phoenix (Albuquerque), where Indigenous applicants have a 4.1 percentage point higher (3.7 percentage point lower) callback rate. Only the estimate for Phoenix is statistically significant, and only at the 10% level.35

Estimates by Indigenous Signal Type

We then explore if our results differed by how we signalled Indigenous status, as follows:

| [2] |

where Volunteer Only is an indicator variable for being an Indigenous applicant with the volunteer (Big Brothers and Big Sisters) signal only, Language Only is an indicator variable for being an Indigenous applicant with the language signal only, NH First Name Only is an indicator variable for being a Native Hawaiian applicant with the first name signal only, Navajo Last Name Only is an indicator variable for being a Native American applicant of Navajo ancestry with a Navajo last name only,36 Two (Three) Signals is an indicator variable for any combinations of two (three) signals, Boys & Girls is an indicator variable for having the Boys & Girls Club control volunteer experience, Food Bank is an indicator variable for having the food bank control volunteer experience,37 and Gaelic is an indicator variable for having the Irish Gaelic control language.

We also extended Equation [2] to split Language Only into Native American and Alaska Native versus Native Hawaiian and to split Two Signals into all possible combinations. This more saturated model allows us to compare these estimates for more granular signals that match our signal saliency survey, described later.

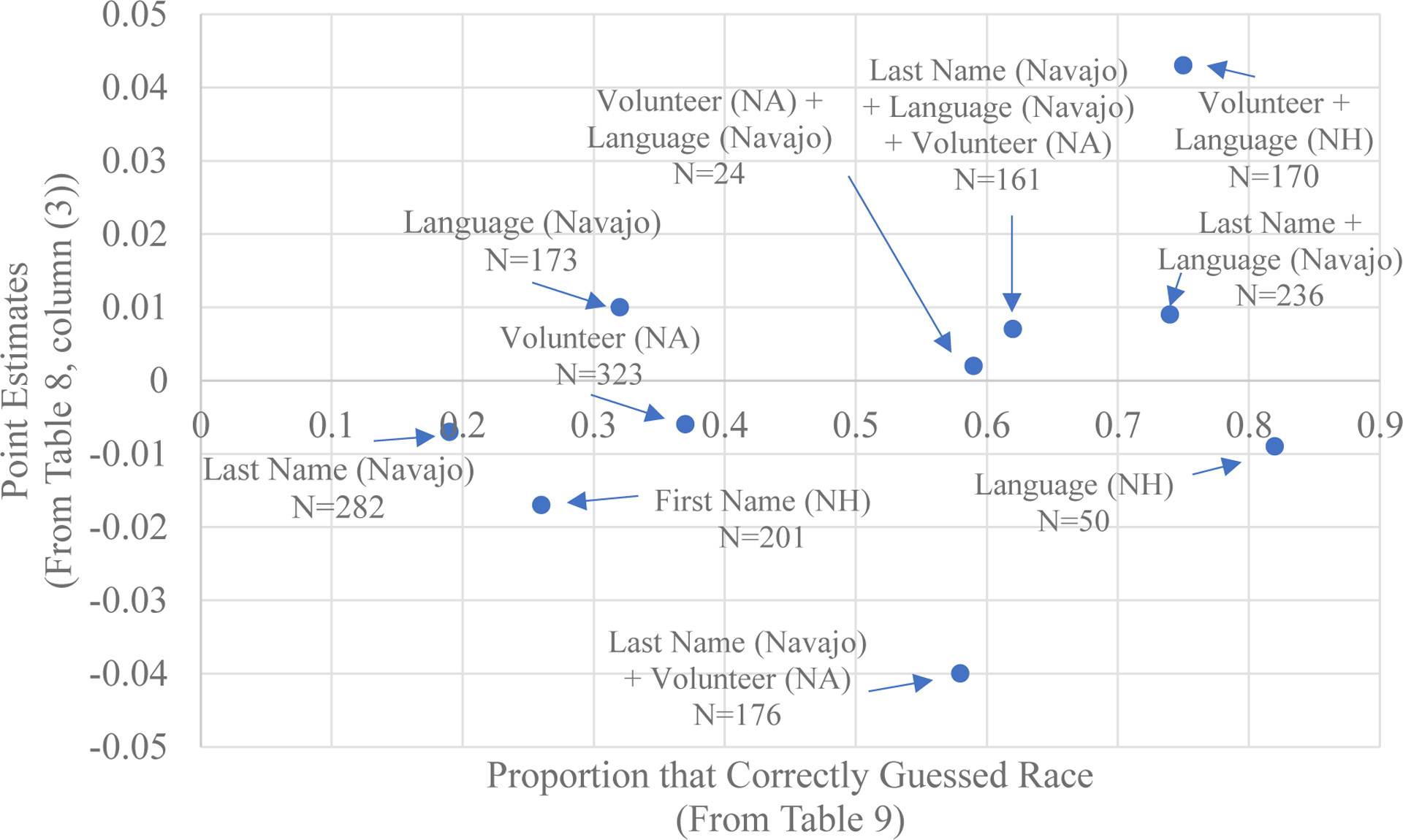

Table 8 presents the estimates by signal type, from Equation [2] (in column (1)), with the more saturated version in column (2). Here we discuss the results from column (1), but the results in column (2) are similar. Across all variables and columns, the results are never statistically significant and do not suggest any differences by signal.

For Indigenous applicants with the volunteer (language) signal only, the callback rate is 0.6 percentage points lower (higher), but this is statistically insignificant with a standard error of 1.0 percentage points. The estimates on the controls for volunteer and language experiences are also statistically insignificant, which suggests that callback rates do not differ by which control volunteer experience is used or by whether the Irish Gaelic control is used. Results are also similar for our name signals. For Native Hawaiian first name (Navajo last name) as the only signal, the estimate is a 1.7 (0.7) percentage point lower callback rate, again statistically insignificant.

The estimates with two or three signals are positive but again statistically insignificant. These estimates are imprecise, however, for three signals, given that most resumes had only one or two signals. Thus, there is no evidence to support that having multiple signals decreases the callback rate. The fact that there is no difference in callback rates by Indian reservation upbringing is further evidence that our discrimination estimates do not vary by signal type or by saliency.

Robustness Checks

Here we present a summary of two major robustness checks: weighting our estimates and the effects of correcting for possible differences in the variance of unobservables. In Online Appendices C and D, we present additional details and results for these robustness checks, and we also conduct other robustness checks such as using a probit instead of a linear probability model, alternative methods of clustering, and using interview offers instead of callbacks.

Robustness to Population and Occupation Weighting

We attempted to apply for all eligible job openings that met our criteria in each city and occupation. Weighting the estimates by the population distribution of Indigenous Peoples across these cities would generate more population-representative estimates (Hanson, Hawley, Martin, & Liu, 2016; Neumark, Burn, Button, & Chehras, 2019). Similarly, we can weight by the popularity of occupations according to the CPS data in case our sample by occupation differs from the national data. We can also weight by both. In Online Appendix D, we discuss how we construct these weights, and we present our main results, from Table 6, under different types of weighting. Our results are unaffected by how we weight the data.
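The general idea of population weighting can be sketched as follows. Each application receives a weight equal to its city's target population share divided by its share of the sample, so that over-sampled cities count less. The city labels, sample counts, population shares, and callback gaps below are entirely hypothetical, and the actual construction of the weights is the one described in Online Appendix D, which may differ from this simplified version.

```python
def city_weights(sample_counts, population_shares):
    """Per-application weight so that cities match target population shares."""
    n_total = sum(sample_counts.values())
    share_total = sum(population_shares.values())
    weights = {}
    for city, n in sample_counts.items():
        sample_share = n / n_total
        target_share = population_shares[city] / share_total
        weights[city] = target_share / sample_share
    return weights


def weighted_mean(values, sample_counts, weights):
    """Average of city-level values, weighting each application."""
    num = sum(weights[c] * sample_counts[c] * values[c] for c in values)
    den = sum(weights[c] * sample_counts[c] for c in values)
    return num / den


# Hypothetical inputs for three unnamed cities (not data from the study).
sample_counts = {"City A": 500, "City B": 300, "City C": 200}
population_shares = {"City A": 0.2, "City B": 0.3, "City C": 0.5}
gaps = {"City A": 0.01, "City B": -0.02, "City C": 0.00}

weights = city_weights(sample_counts, population_shares)
unweighted_gap = weighted_mean(gaps, sample_counts, {c: 1.0 for c in gaps})
weighted_gap = weighted_mean(gaps, sample_counts, weights)
```

In a regression setting, the same weights would be passed to a weighted least squares routine rather than applied to city-level means.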

Correcting for the Variance of Unobservables using the Neumark (2012) Correction

Audit and correspondence studies, especially resume-correspondence studies like ours, could face the “Heckman-Siegelman critique” (Heckman, 1998; Heckman and Siegelman, 1993). This critique holds that while these studies control for average differences in observable characteristics (information included in the job application), discrimination estimates can still be biased, in either direction, through differences in the variance of unobservable characteristics - which relates to variance-based statistical discrimination (Aigner & Cain, 1977). Neumark (2012) shows how this can occur using a model of hiring decisions, and Neumark and Rich (2016) show that about half of the resume-correspondence studies were biased because of this issue. In Online Appendix C we discuss this in more detail, including with a formal model, and test for this bias.

To summarize, we correct for this possible bias by randomly adding quality features to the applications. As discussed in Neumark (2012) and Online Appendix C, these quality features shift the probability of a callback, allowing us to identify to what extent differences in the variance of unobservables between white and Indigenous applicants lead to bias. We find no evidence of bias in our main results due to the variance of unobservables issue, and the estimated variances of unobservables are nearly identical for white and Indigenous applicants.

Implications of our Experimental Design

Here we discuss numerous choices in our experimental design that could have impacted our results: the saliency of our signals, our choice of occupations and cities, our choice of job board, our use of callbacks to measure hiring discrimination, and our sample size. These broader discussions bring attention to the limitations of our experiment and others.

Implications of the Saliency of our Indigenous Signals

A key question in any audit study is whether the tested subjects detected and correctly interpreted the signal(s) of minority status. Usually, this is just assumed. We are only aware of a few audit studies that carefully test for saliency and interpretation of their signals (Kroft, Notowidigdo, & Lange, 2013; Lahey & Oxley, 2018). If the signal is only detected sometimes, then results are attenuated towards zero. If the signal is interpreted differently than intended, then the results may not reflect what the experimenters expect to test (Barlow & Lahey, 2018; Darolia et al., 2016; Fryer & Levitt, 2004; Gaddis, 2017a, 2017b; Ghoshal, 2019).
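The attenuation argument can be illustrated with a small simulation. It assumes, for illustration only, that employers who miss the signal treat the applicant exactly as they would a white applicant, in which case the expected measured gap equals the saliency rate times the true gap. The baseline callback rate, true gap, and saliency below are hypothetical values, and the function name is our own.

```python
import random


def simulate_gap(base_rate, true_gap, saliency, n_pairs, seed=0):
    """Measured Indigenous-minus-white callback gap under partial detection."""
    rng = random.Random(seed)
    white_callbacks = 0
    indigenous_callbacks = 0
    for _ in range(n_pairs):
        white_callbacks += rng.random() < base_rate
        # Assumption: employers who miss the signal treat the applicant as white.
        detected = rng.random() < saliency
        rate = base_rate + true_gap if detected else base_rate
        indigenous_callbacks += rng.random() < rate
    return (indigenous_callbacks - white_callbacks) / n_pairs


# Hypothetical values: 20% baseline, a true 5 point penalty, 40% saliency.
measured = simulate_gap(base_rate=0.20, true_gap=-0.05,
                        saliency=0.40, n_pairs=200_000)
expected = 0.40 * -0.05  # saliency times true gap
```

With these inputs, a true five percentage point penalty would show up as a gap of about two percentage points, which is why low saliency can make real discrimination harder to detect.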

We use four different signals in our study (volunteer experience, language, Native Hawaiian first name, and Navajo last name). Despite our results not differing by signal type, or when more than one signal is used (Table 8), it still may be the case that each signal has different saliency. To investigate this, we fielded two surveys, both described in more detail in Online Appendix E (“resume survey”) and Online Appendix F (“names survey”).

First, we fielded the resume survey, a survey similar to Kroft, Notowidigdo, and Lange (2013). Specifically, we asked individuals on MTurk to read a resume from our study and to consider the candidate for a job position in the relevant occupation. We then asked the subjects to recall characteristics of the applicant (race or ethnicity, languages spoken, age, education, employment status). We included surveys showing resumes without signals (white) or with some combination of signals for either Native American or Native Hawaiian applicants. We included respondents from both a national sample and an Arizona and New Mexico only sample for the Navajo resumes since we primarily send Navajo resumes to positions in Albuquerque and Phoenix.

Table 9 summarizes our main results, which combine both samples, with additional results and details in Online Appendix E. To summarize, the white resumes (no signals) are identified as white 86.8% of the time. However, resumes with a Native American (Native Hawaiian) signal were detected as AIAN (NHPI) at rates between 18.8% and 74.2% (26.4% and 82.0%). More specifically, the Navajo last name only signal is very weak (18.8%) compared to the language signal only (32.4%) or the volunteer signal only (37.2%), which are stronger, but still not strong. Saliency is significantly higher when using more than one signal, ranging from 58.0% for Navajo last name and volunteer experience to 74.2% for Navajo last name and Navajo language listed. In the Arizona and New Mexico sample only, saliency was significantly higher, ranging from 58.3% (Navajo last name only) to 76.7% (Navajo last name and Navajo language).

Table 9. Responses to “What is the race or ethnicity of this applicant?” from the Resume Survey

| Resume Type | (1) | (2) | (3) | (4) | (5) | (6) | (7) | (8) | (9) | (10) | (11) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| No Signals (White) | X | | | | | | | | | | |
| First Name (Native Hawaiian) | | X | | | | | | | | | |
| Last Name (Navajo) | | | X | | | X | X | | X | | |
| Language (Navajo) | | | | X | | X | | X | X | | |
| Volunteer (Native American) | | | | | X | | X | X | X | | |
| Language (Hawaiian) | | | | | | | | | | X | |
| Volunteer + Language (Native Hawaiian) | | | | | | | | | | | X |
| Response (distribution of responses by resume type) | | | | | | | | | | | |
| White | **86.8%** | 35.8% | 58.9% | 46.8% | 32.2% | 17.0% | 23.9% | 21.8% | 20.5% | 10.0% | 20.1% |
| AIAN | 1.5% | 1.5% | **18.8%** | **32.4%** | **37.2%** | **74.2%** | **58.0%** | **59.4%** | **62.1%** | 0% | 0% |
| NHPI | 0% | **26.4%** | 2.1% | 14.5% | 15.8% | 3.8% | 4.0% | 12.9% | 6.8% | **82.0%** | **75.0%** |
| Hispanic | 1.5% | 6.5% | 8.5% | 2.3% | 4.3% | 2.1% | 5.1% | 1.2% | 3.7% | 0% | 4.2% |
| Black | 4.4% | 19.9% | 4.6% | 2.3% | 3.4% | 1.7% | 2.8% | 0.6% | 3.1% | 2.0% | 0% |
| Asian | 0% | 1.5% | 1.1% | 0% | 0.9% | 0% | 1.1% | 1.2% | 0% | 2.0% | 0% |
| Other | 5.9% | 8.5% | 6.0% | 1.7% | 6.2% | 1.3% | 5.1% | 2.9% | 3.7% | 4.0% | 0% |
| N | 205 | 201 | 282 | 173 | 323 | 236 | 176 | 170 | 161 | 50 | 24 |

Notes: The sample includes both a national sample (no restriction based on the state of residence) and an oversample of Arizona and New Mexico. Estimates are bolded to highlight the signalled race in each case.

However, the saliency estimates presented in Table 9 are lower bounds for a few reasons. First, the saliency for the Native American resumes, particularly those with the volunteer signal, may have been higher had we listed “Native American” as a race option, rather than the more “official” Census category of “American Indian or Alaska Native”. Some individuals may not have equated “Native American”, as stated in the volunteer signal, with “American Indian” in the survey question. Second, we add an Indian reservation upbringing to half of the Native American resumes, which further strengthens the racial signal, but our estimates above are for resumes without this additional signal. Third, most Native Hawaiian resumes were sent to jobs in Honolulu, where saliency would be higher. However, we were unable to perform an oversample of Hawaii, so our saliency estimates for Native Hawaiian names and signals are significantly biased downwards, reflecting more so how Native Hawaiians are perceived on the mainland.

To compare the saliency of our different types and combinations of signals for Indigenous status to the discrimination estimates when using these signals, we match the estimates in Table 8, column (2), with their corresponding estimates from Table 9 and plot them in Figure 2 below. Figure 2 shows no clear relationship between saliency and discrimination, as the relationship is very flat, or perhaps slightly upward sloping. This suggests that while our saliency was often (but not always) somewhat low, saliency does not seem to affect our results.

Figure 2. Signal Saliency vs Discrimination Point Estimates.

Note: the size of each dot corresponds to the number of applicants that use that signal in our experiment, as shown in Table 8, column (2), above.

Since the saliency of the Navajo last name signal was the lowest, we also conducted three robustness checks where we: (1) recoded those with Navajo last names as the only signal as “white” and re-estimated Table 6, column (2); (2) controlled for resumes with the Navajo last name only with a separate indicator variable and re-estimated Table 6, column (2); and (3) re-estimated the results in Table 8, column (1), but recoded the signals as if the Navajo last name signal did not exist. As shown in Online Appendix Tables D7 and D8, these tests again do not change our results.

Implications of the Saliency and Perception of our Indigenous Name Signals

Some recent audit study literature discusses or tests how names signal race, ethnicity, and socioeconomic status, finding that individual names may not signal what researchers assume and names can drive results in unexpected ways (Barlow & Lahey, 2018; Darolia et al., 2016; Gaddis, 2017b, 2017a; Ghoshal, 2019). We tested the specific names we used to signal Indigenous status through our resume survey, discussed earlier. We also fielded a second survey on MTurk specifically on our Navajo last names, similar to how Gaddis (2017a, 2017b) and Ghoshal (2019) test names. We simply showed those surveyed a name (e.g., Daniel Begay, Emily Adams) and asked them to indicate the race of the individual (in addition to asking them about other perceptions). We present more details and full results from both surveys in Online Appendix E (resume survey) and Online Appendix F (names survey).

To summarize the names survey, out of the four Navajo last names, saliency was highest for Tsosie (47.1% nationally thought this person was AIAN and 70.0% in Arizona and New Mexico only), followed by Yazzie (12.5%, 28.6%), Begay (10.0%, 35.0%), and Benally (5.7%, 15.0%).38 We also learn from both our surveys that individuals perceive Indigenous Peoples to be more likely to have been born outside the United States - an odd result, but one seen in other research using the Native Implicit Association Test.39 The lower saliency of our Navajo names highlights the trade-off between saliency and representativeness (see the discussion on page 14) in choosing between our chosen names and more “stereotypical” names (e.g., Whitebear).

Implications of the Saliency of Other Resume Features

Following Kroft, Notowidigdo, and Lange (2013), we measured the saliency of other frequently-used signals: gender, age, education, employment status, employment duration, and whether a second language was listed on the resume. More details are in Online Appendix E.

Across all tested resumes, survey respondents correctly recalled gender from our gender-specific names 71.4% of the time, highest completed education 86.4% of the time, employment status 68.3% of the time, and whether there was a second language on the resume 75.3% of the time. The mean difference between recalled and actual age (or between recalled and actual duration of the last job held) was −1.60 years (−0.90 years), with a standard deviation of 4.69 years (3.15 years).
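For concreteness, the recall-error statistics for continuous signals such as age can be computed as in the sketch below. The function name and the example values are hypothetical, and the sample standard deviation is an assumption about the exact formula used.

```python
import statistics


def recall_error_summary(recalled, actual):
    """Mean and sample standard deviation of (recalled - actual)."""
    errors = [r - a for r, a in zip(recalled, actual)]
    return statistics.mean(errors), statistics.stdev(errors)


# Hypothetical survey responses (NOT our data): recalled vs. actual age in years.
recalled_age = [28, 30, 35]
actual_age = [30, 30, 30]

mean_error, sd_error = recall_error_summary(recalled_age, actual_age)
```

A negative mean indicates that respondents, on average, recall a value below the one shown on the resume.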

These results suggest that one should never assume that signals, even ones like employment status, will always be detected. Researchers should test the saliency of their signals in all contexts and discuss how this affects their results. The fact that not all signals are always detected suggests that the estimates in most audit field experiments are lower bounds.

Implications of our Choice of Occupations

We chose common occupations for those age 30. These positions do skew more low-skilled or lower-experience relative to some other possible occupations, although this is a broader concern facing resume-correspondence studies (Baert, 2018; Neumark, 2018). A key question is if there is discrimination in occupations that we do not study. We discuss arguments on both sides. First, there are arguments that our chosen occupations are likely to have more discrimination than others, so our chosen occupations did not give us our “no differences” result. Second, there are arguments that our occupations would have less discrimination, suggesting that perhaps our “no differences” result is a function of our occupations and is not more broadly generalizable. After this discussion, we leave it to the reader to decide the extent to which our occupations drove our result.

An argument against the claim that our occupations drove our result is the fact that previous audit studies found discrimination in these specific occupations, albeit for different minority groups. Numerous studies used retail sales, server, and kitchen staff positions and found discrimination (Baert, 2018; Neumark, 2018). Neumark, Burn, & Button (2016, 2019) also apply for janitor and security jobs and find some evidence of discrimination.

Another argument against the claim that our occupations drove our result is that Helleseter, Kuhn, & Shen (2014) and Kuhn & Shen (2013) find that discrimination is more likely in low-skilled occupations than in higher-skilled jobs. Our occupations are lower-skilled, suggesting that higher-skilled occupations could be even less likely to have discrimination.

However, there are arguments that our chosen occupations are ones where discrimination is less likely to occur, such that we may have found discrimination had we chosen other occupations. First, our occupations may be “typed” to be for Indigenous Peoples or are otherwise more “friendly” to Indigenous Peoples. Sociology research suggests that individuals sometimes “type” jobs as being more suitable for individuals of certain races or genders (Kaufman, 2002). While we found no research on this typing for Indigenous Peoples, we do not think that Indigenous Peoples are “typed” into retail sales or server positions. In these occupations, there is a significant amount of customer interaction such that customer taste-based discrimination, if it exists, may cause a preference for white employees. Typing, however, may be relevant for kitchen staff, janitor, and security jobs. For kitchen staff, there is the potential notion that people of colour are more likely to be “back of the house” (kitchen) than “front of the house” (servers, hosts, bartenders) staff, and this manifests in the CPS data (Tables 2 and 3). However, discrimination does not appear to vary across the five occupations we study (Table 7 and Online Appendix Tables D3 and D4), suggesting that this concern likely does not affect our results.

A related issue is that typing could vary by city based on the size of the Hispanic population, as certain jobs may be typed as more or less “Hispanic”.40 In Online Appendix Table D14, we show estimates by the relative size of the Hispanic population in each occupation-city-gender combination. We used this information to re-estimate our main results (Table 6, Column (2)) excluding occupation-city-gender combinations where Hispanics outnumber whites. Our results, available upon request, are unchanged.

Implications of our Choice of Jobs Within our Occupations

There is also the related question of whether the jobs we applied to, within our occupations, were ones where discrimination does not occur. Kuhn & Shen (2013) find that almost a third of the variation in age and gender discriminatory job ads in China occurs between firms in the same occupation, which suggests that a non-representative sample of jobs from an occupation could drive a particular result in an audit study.

It is difficult to know whether our jobs are representative of all those within an occupation. While we use a popular job board, especially for these occupations, no job board is representative. Our job board skews towards smaller firms since larger firms either post job ads elsewhere or require submissions through their website, regardless of posting site. We argue that these smaller firms could be even more likely to discriminate because they are less likely to have Human Resources departments and are less likely to be covered by Title VII of the Civil Rights Act (which applies to firms with at least 15 employees). However, it is unclear whether our selection of jobs is representative of our occupations, a broader concern facing all audit studies.

Implications of our Choice of Cities

Our selection of 11 cities focuses on those with the most Indigenous Peoples to get more population-representative estimates. Despite covering more cities relative to most resume correspondence studies, discrimination may vary geographically in ways we cannot account for.

A related concern is that where Indigenous Peoples live could be endogenous to the geographical distribution of discrimination.41 Suppose, as many have suggested to us, that Indigenous Peoples face more discrimination in rural areas than in urban areas, especially in rural areas near reservations. We cannot capture this because we do not include rural areas.

We attempted to account somewhat for these differences in geography by including Billings and Sioux Falls, which are cities with a much smaller population in more rural states. However, our analysis of these small cities is underpowered as it was challenging to collect observations (e.g., few eligible job postings), a common issue in studies with small cities (see, e.g., Neumark, Burn, Button, & Chehras, 2019).42 Even then, these smaller cities might not represent rural areas enough. Given this, we cannot comment on whether discrimination differs in urban versus rural contexts. Similarly, we cannot speak to discrimination on a reservation.

Our selection of cities also does not allow us to explore whether discrimination differs in cities that neither have large Indigenous populations nor have a high percentage Indigenous. Numerous cities (e.g., Salt Lake City, Indianapolis, Atlanta) fit into this broad category. It is unclear whether discrimination in these cities differs from the larger cities in our experiment (New York, Los Angeles, Chicago, and Houston), although we believe this to be unlikely.

A trade-off we faced in our selection of cities was balancing population-representativeness with increasing the variation in our chosen cities to test for moderators of discrimination. While our selection of cities incorporates significant variation in the proportion AIAN,43 we could have instead swapped out some of our chosen cities (e.g., Houston) with other cities that, while having a lower number of Indigenous Peoples, have a higher proportion. This would have improved our ability to test how discrimination varies by the proportion Indigenous, at the cost of population representativeness. These trade-offs are essential for researchers to consider.

Implications of the Timing of the Study and Labour Market Tightness

Discrimination could occur more often when economic conditions are worse (Baert, Cockx, Gheyle, & Vandamme, 2015; Johnston & Lordan, 2016; Kroft et al., 2013; Neumark & Button, 2014), although there is also evidence that discrimination decreases when economic conditions are worse (Carlsson, Fumarco, & Rooth, 2018). Therefore, resume-correspondence studies could generate larger (smaller) discrimination estimates during a downturn (a boom) in labour markets. We investigate this in two ways. First, we compare the timing of our study to that of all other employment audit studies conducted in the United States listed in the literature reviews of Baert (2018) or Neumark (2018). This comparison informs whether discrimination estimates are a function of national economic cycles. Second, we follow the approaches of Kroft, Notowidigdo, & Lange (2013), Baert et al. (2015), and Carlsson et al. (2018) and explore how cross-sectional differences in labour market tightness by city and occupation relate to our discrimination estimates.

To see how discrimination estimates from different studies in the United States varied with national economic cycles, we created Online Appendix Table D15, which presents the timing of data collection in each study and matches this with the national, seasonally adjusted unemployment rates during that time. This table shows that our study took place during a time (March to December 2017) with lower unemployment rates of 4.1 to 4.4 per cent (16th to 24th percentile of the seasonally adjusted rate from 1948 to 2018). This percentile range overlaps with the ranges faced by Pager (2003) (23rd to 56th percentile) and Kleykamp (2009) (21st to 35th), both of which find statistically significant effects, although under different contexts. The unemployment rates during our study were not as extreme as those in over a third of the other studies, which occurred during the Great Recession, when unemployment rates reached record highs. This comparison of previous studies and the national unemployment rates they faced does not provide clear evidence that lower unemployment rates drove our results, but it does not rule this out.
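The percentile comparison can be illustrated with the sketch below, which ranks an unemployment rate within a historical series. The percentile definition used here (share of observations at or below the value) is an assumption, and the short series is a hypothetical placeholder rather than the actual 1948 to 2018 monthly data.

```python
def percentile_rank(value, history):
    """Percentage of historical observations at or below `value`."""
    at_or_below = sum(1 for h in history if h <= value)
    return 100.0 * at_or_below / len(history)


# Hypothetical placeholder series (NOT the actual monthly unemployment rates).
historical_rates = [3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]

low = percentile_rank(4.1, historical_rates)
high = percentile_rank(4.4, historical_rates)
```

Applying the same function to the rates faced by each earlier study would give percentile ranges that can be compared across studies on a common scale.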

Another way to investigate whether discrimination depends on labour market tightness is to use cross-sectional variation; that is, to see whether discrimination varies with the tightness of labour markets by city and occupation. We follow Kroft, Notowidigdo, & Lange (2013) and construct two variables that measure labour market tightness. First, we estimate the unemployment rate for each city and occupation combination using data from the CPS.44 Second, we use the callback rate by occupation and city for our white applicants as a measure of tightness. Online Appendix D presents the details of our methodology and Online Appendix Tables D17 and D18 present our results. We do not find that this cross-sectional tightness of occupation and city affects our results, regardless of which measure of tightness we use.
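The second tightness measure can be sketched as a simple tabulation of callback rates by city-occupation cell. The record fields below (`city`, `occupation`, `race`, `callback`) are hypothetical names, and this tabulation only illustrates how the measure is constructed; the estimates themselves come from the regressions described in Online Appendix D.

```python
from collections import defaultdict


def callback_rates_by_cell(applications):
    """Return {(city, occupation): {"white": rate, "indigenous": rate}}.

    A rate is None when a cell has no applicants of that race.
    """
    # For each cell and race, track [number of callbacks, number of applications].
    counts = defaultdict(lambda: {"white": [0, 0], "indigenous": [0, 0]})
    for app in applications:
        tally = counts[(app["city"], app["occupation"])][app["race"]]
        tally[0] += app["callback"]
        tally[1] += 1
    return {
        cell: {race: (c / n if n else None) for race, (c, n) in by_race.items()}
        for cell, by_race in counts.items()
    }


# Hypothetical records (NOT our data).
apps = [
    {"city": "Houston", "occupation": "server", "race": "white", "callback": 1},
    {"city": "Houston", "occupation": "server", "race": "white", "callback": 0},
    {"city": "Houston", "occupation": "server", "race": "indigenous", "callback": 1},
    {"city": "Billings", "occupation": "janitor", "race": "white", "callback": 0},
]
rates = callback_rates_by_cell(apps)
```

The white callback rate in each cell then serves as the tightness proxy, which can be interacted with the Indigenous indicator to test whether the callback gap varies with tightness.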

Do Callbacks Capture Hiring Discrimination?

There is the recurring question of whether callbacks capture hiring discrimination. Many others discuss this issue (e.g., Booth, Leigh, & Varganova, 2012; Cahuc, Carcillo, Minea, & Valfort, 2019; Neumark, Burn, & Button, 2019). There are again arguments on both sides.

There are many reasons to think that callbacks capture a significant share of hiring discrimination. At the callback stage, it is far less likely that discrimination can be detected or enforced, relative to later when company personnel systems may have more detailed records of applicants (Neumark, Burn, & Button, 2019). At this callback stage, employers are also more likely to make quick decisions and fall victim to implicit bias (Bertrand, Mullainathan, & Chugh, 2005; Rooth, 2010). Audit studies that have actors go to interviews show that 75% to 90% of hiring discrimination occurs at the callback stage (Bendick, Brown, & Wall, 1999; Riach & Rich, 2002).

However, recent work by Cahuc et al. (2019) argues that callbacks can fail to capture hiring discrimination when interviewing costs are low. Cahuc et al. (2019) use a model of hiring and interviewing behaviour to show that as the cost of interviewing decreases, callbacks become less able to capture hiring discrimination because discrimination shifts to after the interview stage. Since we study the case where Cahuc et al. (2019) argue that interviewing costs are high (private-sector jobs in competitive industries), we believe that their critique does not apply much to our study. Their argument also does not explain why our results differ from those of the other resume studies, almost all of which were for private-sector jobs.