BACKGROUND

Randomized controlled trials (RCTs) have historically addressed whether one treatment is superior to another; their null hypothesis is therefore that there is no difference between the two treatments. In recent years, another type of RCT, the non-inferiority trial (NIT), has become prevalent in the medical literature.1–4 Unlike superiority trials, NITs ask whether a treatment is no worse than the control treatment by more than a predefined non-inferiority margin. The null hypothesis of an NIT is that the treatment is worse than the control by more than the non-inferiority margin; rejecting it means the treatment is not inferior to the control (i.e., any loss of efficacy relative to the control is smaller than the margin).

NITs offer some advantages over superiority trials. They are preferred when giving placebo to patients with certain conditions would be unethical; in these cases, NITs allow both groups to receive active treatment. NITs have also been regarded as a way to reduce sample size and the cost of conducting superiority trials,5, 6 and to show non-inferiority of new treatments for regulatory approval before superiority trials are conducted.4, 6–8 However, NITs have long been criticized for limitations in design, conduct, analysis, reporting, and interpretation, including unverifiable assumptions,9–13 reliance on the non-inferiority margin for sample size calculation and interpretation of results,14, 15 complex study planning and analysis,16 poor reporting,1, 15, 17, 18 and inappropriate interpretation by readers.5, 16 NITs are widely believed to be at higher risk of bias than superiority trials.

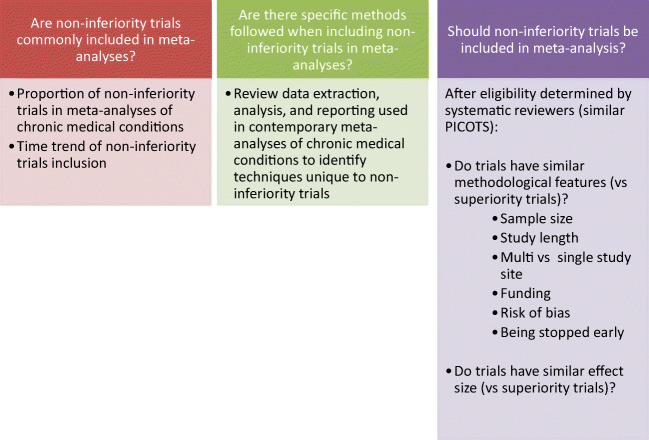

Despite this criticism, the number of NITs conducted and published in the medical literature has increased in recent years. Meta-analysis, an important tool for supporting decision-makers, also faces the challenge of how to handle NITs. It is unclear how often NITs are included in meta-analyses and whether they require different approaches. This study therefore addresses the following questions (Fig. 1): (1) Are NITs commonly included in meta-analyses? (2) Are specific methods followed when including NITs in meta-analyses? (3) Should NITs be included in meta-analyses? We sought to answer these questions using meta-epidemiological methodology, which uses systematic reviews and/or meta-analyses to evaluate methodological questions, such as the impact of certain characteristics of clinical studies and the distribution, heterogeneity, and plausible bias of research evidence.19 We evaluated treatments of chronic medical conditions because of their associated morbidity and mortality. We selected meta-analyses published in the 10 journals with the highest impact factors because, within each meta-analysis, all original trials, whether NITs or superiority trials, addressed the same question, and because meta-analyses published in these journals have wide reach and the potential to change practice.

Figure 1.

Including non-inferiority trials in meta-analyses.

METHODS

This study follows the reporting guidelines of meta-epidemiologic research.19 The detailed literature search and data extraction methods of this study have been previously published.20

Data Sources and Study Selection

We searched the 10 general medical journals with the highest impact factors (New England Journal of Medicine, Journal of the American Medical Association, Lancet, British Medical Journal, Annals of Internal Medicine, PLOS Medicine, Mayo Clinic Proceedings, BioMed Central Medicine, Canadian Medical Association Journal, and Journal of the American Medical Association Internal Medicine/Archives of Internal Medicine) for all systematic reviews published between January 1, 2007, and June 10, 2019.

Eligible meta-analyses had to meet the following criteria: (1) evaluated patients with chronic medical conditions; (2) compared different medications or devices; (3) reported dichotomous outcomes; (4) were derived from a systematic review; and (5) included only RCTs, with a minimum of 5 RCTs. Following the National Institutes of Health’s definition, we defined chronic medical conditions as conditions that last a year or more, require ongoing medical attention, and affect daily activities.21 We included meta-analyses of dichotomous outcomes because their effect sizes (e.g., log odds ratios) are comparable across meta-analyses and typically more patient-centered. We excluded diagnostic and prognostic meta-analyses, meta-analyses that included observational studies, meta-analyses of behavioral interventions, and meta-analyses of continuous outcomes. We also excluded meta-analyses of fewer than 5 RCTs to reduce chance findings and improve the stability of the statistical methods. When multiple meta-analyses within a systematic review were eligible, we selected the one with the outcome most important to patients (e.g., mortality, stroke, or myocardial infarction). When more than 1 such outcome was reported, we selected the analysis with the largest number of RCTs.

Independent, experienced systematic reviewers working in pairs screened the titles and abstracts of systematic reviews, and then the full texts, against predefined inclusion and exclusion criteria. A third reviewer resolved conflicts.

Data Extraction

For each eligible meta-analysis, we identified and retrieved the full text of the included RCTs. We used a standardized data extraction form, piloted on 5 randomly selected studies, to extract data from these RCTs and meta-analyses, including author, publication date, outcome, study type (superiority trial vs NIT), and methodological features that might affect the validity of trials. These prespecified methodological features included sample size, study length (from the start of treatment to the end of follow-up), risk of bias (low vs high or unclear risk of bias in terms of random sequence generation, allocation concealment, blinding of participants and personnel, blinding of outcome assessment, incomplete outcome data, selective reporting, and other sources of bias), whether trials were stopped early, funding source (nonprofit vs for-profit/unclear), and number of study sites (single vs multiple). Reviewers extracted study details independently, and an additional reviewer checked the extraction and resolved conflicts.

Outcome Measures

We evaluated the prevalence and time trend of including NITs in meta-analyses and any methodological or statistical approaches specific to NITs. We also examined differences between NITs and superiority trials in pooled effect sizes and methodological features, and the impact of including NITs on the statistical significance of the final pooled outcome and on its heterogeneity, as measured by the I2 index.

Statistical Analysis

We applied the intention-to-treat principle when extracting the 2 × 2 table from each RCT (number of events and sample size in the intervention and control groups). For trials that reported only a hazard ratio, we extracted the log-transformed hazard ratio and its variance and, to estimate the observed events in the intervention and control groups, used the Mantel-Haenszel version of the log-rank statistic for RCTs with 1:1 randomization and its extension by Kalbfleisch and Prentice for RCTs with other randomization ratios.22, 23 We calculated the log-transformed odds ratio and its standard error for each RCT. We pooled individual RCTs with the DerSimonian and Laird (D-L) random-effects method, regardless of the methods used in the original meta-analyses. We chose the D-L method because it is the most commonly used method in meta-analyses and often yields confidence intervals that are too narrow (overly precise). For our purpose this is conservative: narrower intervals make it easier, not harder, to detect a difference between NITs and superiority trials. For meta-analyses that included both NITs and superiority trials, we used Altman and Bland’s interaction test to compare the pooled effect sizes of NITs and superiority trials.24 To compare methodological features between NITs and superiority trials, we used mixed-effects random-intercept linear regression models for continuous features and mixed-effects random-intercept logistic regression models for binary features, with study type (NIT vs superiority trial) as the fixed effect and a random intercept for each meta-analysis. In a sensitivity analysis, we excluded NITs whose primary outcome was the same as the outcome used in the meta-analysis, because hypothesis testing, and thus the interpretation of results, differs between NITs and superiority trials.
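The data-preparation steps above can be sketched in Python. This is a minimal sketch under stated assumptions, not the authors' Stata code. `events_from_hr` is a hypothetical helper implementing the Parmar et al. approximation (V ≈ 1/Var(ln HR), O − E ≈ ln(HR)·V, and V ≈ d·r/(1 + r)² for randomization ratio r, so that d = 4V under 1:1 allocation); it assumes the expected events split in proportion to the randomization ratio. `log_or_se` computes the log odds ratio and its standard error from a 2 × 2 table; its 0.5 continuity correction for zero cells is also an assumption, because the text does not say how zero cells were handled.

```python
import math


def events_from_hr(log_hr, var_log_hr, n_t, n_c):
    """Approximate observed events per arm from a reported log hazard ratio.

    Hypothetical helper sketching the Parmar et al. log-rank approximation:
      V      ~ 1 / Var(ln HR)        (log-rank variance)
      O - E  ~ ln(HR) * V            (intervention arm)
      V      ~ d * r / (1 + r)**2    (r = n_t / n_c; d = 4V when r = 1)
    Assumes expected events split in proportion to the randomization ratio.
    """
    r = n_t / n_c
    v = 1.0 / var_log_hr
    o_minus_e = log_hr * v
    total_events = v * (1 + r) ** 2 / r
    expected_t = total_events * r / (1 + r)
    observed_t = expected_t + o_minus_e
    observed_c = total_events - observed_t
    return observed_t, observed_c


def log_or_se(events_t, n_t, events_c, n_c, cc=0.5):
    """Log odds ratio (intervention vs control) and its standard error.

    Adds continuity correction `cc` to every cell when any cell is zero
    (an assumed convention, not stated in the text).
    """
    a, b = events_t, n_t - events_t
    c, d = events_c, n_c - events_c
    if min(a, b, c, d) == 0:
        a, b, c, d = a + cc, b + cc, c + cc, d + cc
    log_or = math.log((a * d) / (b * c))
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    return log_or, se


# Usage: a trial reporting only HR = 0.80, Var(ln HR) = 0.02, 500 patients per arm.
o_t, o_c = events_from_hr(math.log(0.80), 0.02, 500, 500)
print(round(o_t, 1), round(o_c, 1))  # 88.8 111.2
print(log_or_se(o_t, 500, o_c, 500))
```

Reconstructed event counts are usually non-integer; the sketch keeps them as floats rather than rounding, which is one of several defensible choices.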
As a sensitivity analysis, we repeated the D-L analysis with the modified Hartung-Knapp-Sidik-Jonkman (HKSJ) variance correction.25, 26 We did not make comparisons across meta-analyses because trials in different meta-analyses addressed different questions. A 2-tailed P value of less than 0.05 was considered statistically significant. All statistical analyses were conducted using Stata/SE version 15.1 (StataCorp LLC, College Station, TX).
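The pooling step and its HKSJ sensitivity analysis can be sketched as follows. This is a minimal Python illustration, not the authors' Stata code: `dersimonian_laird` estimates the between-trial variance τ² with the D-L method-of-moments estimator and returns the random-effects pooled log odds ratio, and `hksj_se` rescales the D-L standard error by the square root of the weighted residual variance, truncated below at 1 as in the modified HKSJ approach described by Röver et al. The HKSJ interval uses a t distribution with k − 1 degrees of freedom; the quantile is passed in as a number (for example, from `scipy.stats.t.ppf`) to keep the sketch dependency-free. The example data are made up.

```python
import math
from statistics import NormalDist


def dersimonian_laird(y, se):
    """Random-effects (D-L) pooling of log odds ratios y with standard errors se."""
    w = [1 / s ** 2 for s in se]                                   # inverse-variance weights
    sw = sum(w)
    y_fixed = sum(wi * yi for wi, yi in zip(w, y)) / sw
    q = sum(wi * (yi - y_fixed) ** 2 for wi, yi in zip(w, y))      # Cochran's Q
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - (len(y) - 1)) / c)                        # method-of-moments tau^2
    w_re = [1 / (s ** 2 + tau2) for s in se]                       # random-effects weights
    mu = sum(wi * yi for wi, yi in zip(w_re, y)) / sum(w_re)
    se_mu = math.sqrt(1 / sum(w_re))
    return mu, se_mu, tau2, w_re


def hksj_se(y, se, modified=True):
    """HKSJ-adjusted SE of the D-L pooled estimate; `modified` truncates the scale at 1."""
    mu, se_dl, _, w_re = dersimonian_laird(y, se)
    scale = sum(wi * (yi - mu) ** 2 for wi, yi in zip(w_re, y)) / (len(y) - 1)
    if modified:
        scale = max(1.0, scale)  # never narrower than the D-L standard error
    return math.sqrt(scale) * se_dl


def ci(mu, se_mu, crit):
    """Confidence interval back-transformed to the odds-ratio scale."""
    return math.exp(mu - crit * se_mu), math.exp(mu + crit * se_mu)


# Usage with made-up trial data (log ORs and SEs are illustrative only).
y = [-0.40, -0.10, -0.25, 0.05, -0.30]
se = [0.20, 0.15, 0.25, 0.30, 0.18]
mu, se_dl, tau2, _ = dersimonian_laird(y, se)
z = NormalDist().inv_cdf(0.975)
print("D-L OR and 95% CI:", math.exp(mu), ci(mu, se_dl, z))
t_4 = 2.776  # 0.975 quantile of t with 4 df, e.g. scipy.stats.t.ppf(0.975, 4)
print("HKSJ 95% CI:", ci(mu, hksj_se(y, se), t_4))
```

Because the modified HKSJ scale is never below 1 and the t quantile exceeds the normal one, the HKSJ interval is always at least as wide as the D-L interval, which is why it serves as a check on the D-L results.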

RESULTS

The literature search identified 3166 systematic reviews published in the 10 general medical journals between January 1, 2007, and June 10, 2019. Eighty-eight meta-analyses met our inclusion criteria and were included in our analyses (Appendix online, Fig. 1). These meta-analyses included 1114 RCTs (average, 12.66 RCTs per meta-analysis) with 1,103,114 patients (average, 12,535 patients per meta-analysis).

Thirty-one (35.23%) meta-analyses included at least one NIT; 57 (64.77%) did not include any NIT. These 31 meta-analyses included 84 NITs (vs 326 superiority trials), an average of 2.71 NITs per meta-analysis (range, 1 to 15). Two meta-analyses included only NITs.27, 28 Over time, the proportion of meta-analyses that included NITs increased from 30.00% between 2007 and 2010 to 41.67% between 2015 and 2019. The average number of NITs per meta-analysis increased from 6.12 to 52.27%.
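The descriptive figures above follow directly from the reported counts. The short Python check below recomputes them from the numbers given in the text; the variable names are illustrative only.

```python
def pct(num, den):
    """Percentage rounded to 2 decimals, as reported in the text."""
    return round(100 * num / den, 2)


n_meta, n_rct, n_patients = 88, 1114, 1_103_114
n_meta_with_nit, n_nit = 31, 84

summary = {
    "meta-analyses with >=1 NIT (%)": pct(n_meta_with_nit, n_meta),
    "meta-analyses without NITs (%)": pct(n_meta - n_meta_with_nit, n_meta),
    "NITs per meta-analysis with NITs": round(n_nit / n_meta_with_nit, 2),
    "RCTs per meta-analysis": round(n_rct / n_meta, 2),
    "patients per meta-analysis": round(n_patients / n_meta),
}
for label, value in summary.items():
    print(f"{label}: {value}")
```

Note that the 2.71 average is taken over the 31 meta-analyses that included any NIT, not over all 88.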

All 31 meta-analyses either adopted the intention-to-treat principle or did not state their analytic principle. None explicitly stated that NITs were included; none reported or used different methods to extract or pool NITs and superiority trials; and none reported or conducted subgroup analyses by trial type (NIT vs superiority trial). Five of the 84 NITs (5.95%) had the same primary outcome as the outcome used in the meta-analysis.

Table 1 lists the pooled effect sizes and key methodological features of NITs and superiority trials. We found no statistically significant difference in pooled effect size between NITs and superiority trials (p = 0.64). When NITs were added to superiority trials, the pooled effect size changed from nonsignificant to statistically significant in 6 of the 29 meta-analyses that also included superiority trials (20.69%), and I2 decreased or did not change in 25 of 31 (median change, −0.06%; interquartile range, −7.89% to 0%).
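The two comparisons in this paragraph can be illustrated with a short sketch. Assuming pooled log odds ratios and standard errors for the NIT and superiority subgroups are already available (for example, from a D-L pooling step), `interaction_test` applies Altman and Bland's test for the difference between two independent estimates and returns the ratio of odds ratios (ROR) with its 95% CI and two-sided P value; `i_squared` computes I2 from Cochran's Q so heterogeneity can be compared with and without NITs. Function names and example numbers are hypothetical.

```python
import math
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def interaction_test(log_or_a, se_a, log_or_b, se_b, level=0.95):
    """Altman-Bland test comparing two independent pooled log odds ratios."""
    diff = log_or_a - log_or_b                     # log of the ratio of odds ratios
    se_diff = math.sqrt(se_a ** 2 + se_b ** 2)     # independent estimates: variances add
    z = diff / se_diff
    p = 2 * (1 - _STD_NORMAL.cdf(abs(z)))          # two-sided P value
    crit = _STD_NORMAL.inv_cdf(0.5 + level / 2)
    ci = (math.exp(diff - crit * se_diff), math.exp(diff + crit * se_diff))
    return math.exp(diff), ci, p


def i_squared(y, se):
    """I2 (%) from Cochran's Q with inverse-variance fixed-effect weights."""
    w = [1 / s ** 2 for s in se]
    y_fixed = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    q = sum(wi * (yi - y_fixed) ** 2 for wi, yi in zip(w, y))
    df = len(y) - 1
    return 0.0 if q <= 0 else max(0.0, (q - df) / q) * 100


# Usage with made-up numbers: pooled NIT vs superiority-trial log ORs.
ror, (lo, hi), p = interaction_test(-0.20, 0.10, -0.25, 0.06)
print(f"ROR = {ror:.2f} (95% CI {lo:.2f} to {hi:.2f}), P = {p:.2f}")

# Change in I2 when hypothetical NIT results are added to superiority trials.
sup_y, sup_se = [-0.30, -0.10, -0.45, 0.05], [0.15, 0.20, 0.25, 0.18]
nit_y, nit_se = [-0.20, -0.25], [0.12, 0.14]
delta = i_squared(sup_y + nit_y, sup_se + nit_se) - i_squared(sup_y, sup_se)
print(f"Change in I2 after adding NITs: {delta:.2f} percentage points")
```

An ROR whose CI includes 1, as in Table 1, indicates no detectable difference between the two subgroups' pooled odds ratios.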

Table 1.

The Pooled Effect Size and Key Methodological Features Between NITs and Superiority Trials

| Findings (31 meta-analyses with 440 RCTs) | Non-inferiority trials (N = 84) vs superiority trials (N = 326) | P value |
|---|---|---|
| Difference in pooled effect size between NITs and superiority trials | ROR = 1.03 (95% CI, 0.90 to 1.15) | 0.64 |
| Number of meta-analyses with statistically significant difference between NITs and superiority trials | 2/29 (6.90%) | |
| Changes of statistical significance in meta-analysis by adding NITs (from nonsignificant to significant) | 6/29 (20.69%) | |
| Number of meta-analyses with lower I2 or no change on I2 by adding NITs | 25/31 (80.65%) | |
| Absolute difference between log transformed OR from the trials and the pooled log transformed OR* | MD = −0.03 (95% CI, −0.10 to 0.05) | 0.53 |
| Being early in the chain of evidence (being chronologically the first 2 studies in a meta-analysis) | OR = 1.43 (95% CI, 0.75 to 2.73) | 0.28 |
| Number of patients | MD = 163.47 (95% CI, −349.26 to 676.20) | 0.53 |
| Study length (months) | MD = −5.76 (95% CI, −16.61 to 5.09) | 0.30 |
| Study sites (multiple vs single) | OR = 0.39 (95% CI, 0.16 to 0.98) | 0.05 |
| Funding source (nonprofit vs for-profit/unclear) | OR = 0.46 (95% CI, 0.16 to 1.32) | 0.15 |
| Trials stopped early (yes vs no/unclear) | OR = 2.73 (95% CI, 0.85 to 8.75) | 0.09 |
| Sequence generation (low risk vs high/unclear risk) | OR = 0.37 (95% CI, 0.18 to 0.78) | 0.01 |
| Allocation concealment (low risk vs high/unclear risk) | OR = 0.34 (95% CI, 0.16 to 0.69) | 0.003 |
| Participants and personnel blinding (low risk vs high/unclear risk) | OR = 0.53 (95% CI, 0.26 to 1.06) | 0.07 |
| Outcome assessment blinding (low risk vs high/unclear risk) | OR = 0.30 (95% CI, 0.13 to 0.72) | 0.01 |
| Incomplete outcome data (low risk vs high/unclear risk) | OR = 0.88 (95% CI, 0.42 to 1.87) | 0.75 |
| Selective reporting (low risk vs high/unclear risk) | OR = 0.36 (95% CI, 0.16 to 0.78) | 0.01 |
| Other sources of bias (low risk vs high/unclear risk) | OR = 1.06 (95% CI, 0.44 to 2.53) | 0.90 |

*A measure of the deviation of the pooled effect sizes of NITs or superiority trials from the pooled effect size of the meta-analysis

MD: mean difference; OR: odds ratio; ROR: ratio of odds ratios

NITs were found to have significantly better methodological features than superiority trials, including more study sites and lower risk of bias in sequence generation, allocation concealment, blinding of outcome assessors, and selective outcome reporting (Table 1 and Appendix online, Table 1). We found no statistically significant differences in number of patients, study length, being early in the chain of evidence, or funding source.

The sensitivity analysis using the HKSJ method yielded results similar to those of the D-L method (Appendix online, Table 2). Excluding the 5 NITs whose primary outcome was the same as the outcome used in the meta-analysis did not change the conclusions.

DISCUSSION

In this meta-epidemiological study of meta-analyses of chronic medical conditions, we found that more than 1 in 3 included at least one NIT (average, 2.71 NITs per meta-analysis that included NITs). None of these meta-analyses reported or used methods for NITs that differed from those used for superiority trials. We found no statistically significant difference in effect sizes between NITs and superiority trials. Six of 29 meta-analyses changed from non-significant to significant after NITs were added to superiority trials. On average, NITs were better than superiority trials on several methodological features.

NITs have been criticized for methodological and reporting shortcomings. However, with the increasing adoption and publication of NITs, our study confirmed that NITs continue to be included in meta-analyses, which means they are influencing guidelines and daily decisions about patient care.

Criticisms of NITs have largely focused on issues related to primary outcomes, such as inappropriate and arbitrary non-inferiority margins, composite outcomes, and wrong or misleading interpretation of non-inferiority versus superiority.1, 5, 14–18 In our study, for most NITs (79/84), the outcome pooled in the meta-analysis was not the NIT’s primary outcome. This may be partly explained by the wide use of composite outcomes in NITs (37/84 in our study) but not in meta-analyses. Because secondary outcomes in NITs are, in almost all cases, tested under a superiority hypothesis, we believe the approaches to data extraction, analysis, and reporting that meta-analyses use for superiority trials are also appropriate for these secondary outcomes of NITs. In addition, for the small number of NITs whose primary outcome was the same as the meta-analysis outcome, excluding these NITs from the meta-analyses did not change the conclusions.

To be eligible for meta-analysis, studies not only need to address the same research question(s) and report similar patient populations, interventions, comparisons, outcomes, timing, settings, and study designs (PICOTS), but should also have similar methodological features. Ultimately, if the included studies report similar effect sizes, we have more confidence in the findings of the meta-analysis. Together, these considerations constitute heterogeneity evaluation, a critical step in determining whether a meta-analysis is sound and appropriate. In this study, NITs did not have worse methodological features than superiority trials, and their findings did not differ significantly. Heterogeneity measured by I2 was reduced or unchanged in most meta-analyses (25/31). Because the similarity of research questions and PICOTS was determined by the original systematic reviews, our findings provide a rationale for combining NITs with superiority trials: doing so added no heterogeneity and potentially increased the precision of the totality of the evidence. It is appropriate to pool NITs with superiority trials for decision-making in chronic medical conditions, at least in the meta-analyses included in this study.

This study has several limitations. We only identified meta-analyses published in the 10 highest-impact general medical journals, which may not represent the whole literature. We did not evaluate the similarity of PICOTS across trials and relied on the judgments of the original systematic reviewers. The non-significant difference in effect sizes and the lack of change in conclusions after adding NITs could be due to small sample size. Focusing on binary outcomes further reduced the sample size of our analysis. We also did not investigate how the primary outcomes of NITs should be integrated into meta-analyses, although our limited findings showed no difference after excluding these NITs. Future research should investigate this issue.

CONCLUSION

This study found no empirical evidence to suggest that NITs differ from superiority trials in evaluated methodological features and effect sizes, suggesting that pooling NITs with superiority trials for decision-making in chronic medical conditions is appropriate.

Electronic Supplementary Material

(DOCX 39 kb)

Compliance with Ethical Standards

Conflict of Interest

The authors declare that they do not have a conflict of interest.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. Bikdeli B, Welsh JW, Akram Y, Punnanithinont N, Lee I, Desai NR, et al. Noninferiority Designed Cardiovascular Trials in Highest-Impact Journals. Circulation. 2019;140(5):379–89. doi: 10.1161/CIRCULATIONAHA.119.040214.

2. Suda KJ, Hurley AM, McKibbin T, Motl Moroney SE. Publication of noninferiority clinical trials: changes over a 20-year interval. Pharmacotherapy. 2011;31(9):833–9. doi: 10.1592/phco.31.9.833.

3. Murthy VL, Desai NR, Vora A, Bhatt DL. Increasing proportion of clinical trials using noninferiority end points. Clin Cardiol. 2012;35(9):522–3. doi: 10.1002/clc.22040.

4. Mauri L, D'Agostino RB Sr. Challenges in the Design and Interpretation of Noninferiority Trials. N Engl J Med. 2017;377(14):1357–67. doi: 10.1056/NEJMra1510063.

5. Garattini S, Bertele V. Non-inferiority trials are unethical because they disregard patients' interests. Lancet. 2007;370(9602):1875–7. doi: 10.1016/S0140-6736(07)61604-3.

6. Head SJ, Kaul S, Bogers AJ, Kappetein AP. Non-inferiority study design: lessons to be learned from cardiovascular trials. Eur Heart J. 2012;33(11):1318–24. doi: 10.1093/eurheartj/ehs099.

7. Kaul S, Diamond GA, Weintraub WS. Trials and tribulations of non-inferiority: the ximelagatran experience. J Am Coll Cardiol. 2005;46(11):1986–95. doi: 10.1016/j.jacc.2005.07.062.

8. United States Government Accountability Office. New Drug Approval: FDA’s Consideration of Evidence from Certain Clinical Trials. 2010.

9. Kaul S, Diamond GA. Good enough: a primer on the analysis and interpretation of noninferiority trials. Ann Intern Med. 2006;145(1):62–9. doi: 10.7326/0003-4819-145-1-200607040-00011.

10. James Hung HM, Wang SJ, Tsong Y, Lawrence J, O'Neil RT. Some fundamental issues with non-inferiority testing in active controlled trials. Stat Med. 2003;22(2):213–25. doi: 10.1002/sim.1315.

11. Hung HM, Wang SJ, O'Neill R. A regulatory perspective on choice of margin and statistical inference issue in non-inferiority trials. Biom J. 2005;47(1):28–36. doi: 10.1002/bimj.200410084.

12. D'Agostino RB Sr, Massaro JM, Sullivan LM. Non-inferiority trials: design concepts and issues - the encounters of academic consultants in statistics. Stat Med. 2003;22(2):169–86. doi: 10.1002/sim.1425.

13. Snapinn SM. Alternatives for discounting in the analysis of noninferiority trials. J Biopharm Stat. 2004;14(2):263–73. doi: 10.1081/BIP-120037178.

14. Jones B, Jarvis P, Lewis JA, Ebbutt AF. Trials to assess equivalence: the importance of rigorous methods. BMJ. 1996;313(7048):36–9. doi: 10.1136/bmj.313.7048.36.

15. Althunian TA, de Boer A, Klungel OH, Insani WN, Groenwold RH. Methods of defining the non-inferiority margin in randomized, double-blind controlled trials: a systematic review. Trials. 2017;18(1):107. doi: 10.1186/s13063-017-1859-x.

16. Gøtzsche PC. Lessons from and cautions about noninferiority and equivalence randomized trials. JAMA. 2006;295(10):1172–4. doi: 10.1001/jama.295.10.1172.

17. Piaggio G, Elbourne DR, Pocock SJ, Evans SJ, Altman DG; CONSORT Group. Reporting of noninferiority and equivalence randomized trials: extension of the CONSORT 2010 statement. JAMA. 2012;308(24):2594–604. doi: 10.1001/jama.2012.87802.

18. Rehal S, Morris TP, Fielding K, Carpenter JR, Phillips PP. Non-inferiority trials: are they inferior? A systematic review of reporting in major medical journals. BMJ Open. 2016;6(10):e012594. doi: 10.1136/bmjopen-2016-012594.

19. Murad MH, Wang Z. Guidelines for reporting meta-epidemiological methodology research. Evid Based Med. 2017;22(4):139–42. doi: 10.1136/ebmed-2017-110713.

20. Alahdab F, Farah W, Almasri J, Barrionuevo P, Zaiem F, Benkhadra R, et al. Treatment Effect in Earlier Trials of Patients With Chronic Medical Conditions: A Meta-Epidemiologic Study. Mayo Clin Proc. 2018;93(3):278–83. doi: 10.1016/j.mayocp.2017.10.020.

21. Parekh AK, Goodman RA, Gordon C, Koh HK; HHS Interagency Workgroup on Multiple Chronic Conditions. Managing multiple chronic conditions: a strategic framework for improving health outcomes and quality of life. Public Health Rep. 2011;126(4):460–71. doi: 10.1177/003335491112600403.

22. Parmar MK, Torri V, Stewart L. Extracting summary statistics to perform meta-analyses of the published literature for survival endpoints. Stat Med. 1998;17(24):2815–34. doi: 10.1002/(sici)1097-0258(19981230)17:24<2815::aid-sim110>3.0.co;2-8.

23. Kalbfleisch JD, Prentice RL. The statistical analysis of failure time data. 2nd ed. Hoboken: J. Wiley; 2002.

24. Altman DG, Bland JM. Interaction revisited: the difference between two estimates. BMJ. 2003;326(7382):219. doi: 10.1136/bmj.326.7382.219.

25. Röver C, Knapp G, Friede T. Hartung-Knapp-Sidik-Jonkman approach and its modification for random-effects meta-analysis with few studies. BMC Med Res Methodol. 2015;15:99. doi: 10.1186/s12874-015-0091-1.

26. Veroniki AA, Jackson D, Bender R, Kuss O, Langan D, Higgins JPT, et al. Methods to calculate uncertainty in the estimated overall effect size from a random-effects meta-analysis. Res Synth Methods. 2019;10(1):23–43. doi: 10.1002/jrsm.1319.

27. Zhang XL, Zhu L, Wei ZH, Zhu QQ, Qiao JZ, Dai Q, et al. Comparative Efficacy and Safety of Everolimus-Eluting Bioresorbable Scaffold Versus Everolimus-Eluting Metallic Stents: A Systematic Review and Meta-analysis. Ann Intern Med. 2016;164(11):752–63. doi: 10.7326/M16-0006.

28. Cassese S, Byrne RA, Ndrepepa G, Kufner S, Wiebe J, Repp J, et al. Everolimus-eluting bioresorbable vascular scaffolds versus everolimus-eluting metallic stents: a meta-analysis of randomised controlled trials. Lancet. 2016;387(10018):537–44. doi: 10.1016/S0140-6736(15)00979-4.
