ABSTRACT

Aims

The aim was to help physicians engage with NHS and other policymakers about the use, procurement and regulation of artificial intelligence, algorithms and clinical decision support systems (CDSS) in the NHS by identifying the professional benefits of and concerns about these systems.

Methods

We piloted a three-page survey instrument with closed and open-ended questions on SurveyMonkey, then circulated it to specialty societies via email. Both quantitative and qualitative methods were used to analyse responses.

Results

The results include the current usage of CDSS; identified benefits; concerns about quality; concerns about regulation, professional practice, ethics and liability, as well as actions being taken by the specialty societies to address these; and aspects of CDSS quality that need to be tested.

Conclusion

While results confirm many expected benefits and concerns about CDSS, they raise new professional concerns and suggest further actions to explore with partners on behalf of the physician community.

KEYWORDS: Professional concerns, artificial intelligence, decision support systems, evaluation, regulation

Introduction and study aims

Clinical decision support systems (CDSS) are computer systems that generate patient-specific scores, interpretation, advice or risk estimates to support clinical decisions such as diagnosis, treatment or test ordering, and are known to be effective in many settings.1,2 Many CDSS use artificial intelligence (AI) techniques such as machine learning, and CDSS are probably the best-known medical application of AI, although other applications such as medical image analysis, speech recognition and robotics are being developed rapidly. However, there is a body of evidence showing that CDSS have not improved patient outcomes in some clinical specialty areas and that they can even lead to unintended adverse consequences.3–6 Additionally, there is considerable concern and activity around the NHS and government about the safety and impact of all these AI tools, particularly with the growth of so-called deep learning algorithms, which are ‘black boxes’, meaning that the basis for their advice or risk estimates is not transparent.7 For example, the Government Office for Science organised a cross-government workshop on this topic in 2018, national reports have both advocated greater use of AI in healthcare and discussed potential risks, and NHS England announced its code of conduct on the procurement and use of these tools in 2018.8–11

The Royal College of Physicians (RCP) Health Informatics Unit had over 30 years’ combined experience of developing and evaluating decision support systems and was commissioned by the Medicines and Healthcare products Regulatory Agency (MHRA) and NHS Digital to convene an expert panel to investigate the QRISK2 incident.12 The incident resulted from this well-designed algorithm being poorly implemented by the supplier of one general practice record system, influencing hundreds of thousands of cardiovascular risk consultations.

Various surveys of doctors’ attitudes to and concerns about CDSS have been conducted since 1981.13 To acquaint the RCP Patient Safety Committee and physicians with current views on CDSS, AI, machine learning etc (both benefits and harms), and to help clinicians to contribute to NHS policy in this area, the RCP Patient Safety Committee decided to carry out a short study of specialty society views on and experience with these technologies.

We considered seeking the views of individual physicians on these tools, but this would have entailed surveying many physicians, most of whom had not yet encountered this technology. We therefore worked initially via medical specialty societies to obtain the most rapid and focused response. The survey was based on current evidence, and the study used mixed methods, analysing both quantitative and qualitative data from the survey respondents in duplicate to reduce confirmation bias.

The aim of the study was to help scope potential clinical guidance for physicians using and procuring AI, algorithms and clinical decision support systems for their healthcare organisation by identifying some of the professional benefits and concerns about these systems and their use by physicians.

Methods

Survey instrument design and piloting

We developed and piloted a three-page survey instrument with both closed and open-ended questions which was implemented on SurveyMonkey. Requests to complete the survey were emailed to the RCP College Safety Committee. Specialty representatives (11 officers) of the RCP Patient Safety Committee were emailed a link to the survey with an explanatory letter outlining the definition and examples of CDSS. Two reminders were sent and no incentives offered. After receiving a small number of responses over August and September 2018, the wording of both invitation letter and survey questions was slightly improved with the aim of wider circulation.

Target organisations and survey process

The final survey (supplementary material S1) was put up on SurveyMonkey in October 2018, with a link to the survey sent to a further 36 RCP specialty informatics contacts. This generated another five responses. An additional nine responses were received when the survey was sent out again in November 2018 to 60 contacts, including informatics leads who had not previously responded as well as the president of each specialty society. In total, the survey was sent to 44 medical specialty societies, with 19 responses received, a response rate of 43%.
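As a quick arithmetic check, the short sketch below recomputes the response rate from the counts given in the text. It assumes the denominator is the 44 societies surveyed; the variable and function names are illustrative only. Because the Results report that two of the 19 responses came from one society, the sketch also shows the rate counted per responding society.

```python
# Counts taken from the text: 44 societies surveyed, 19 responses received,
# 18 distinct responding societies (two responses came from one society).
societies_surveyed = 44
responses_received = 19
societies_responding = 18


def rate(numerator, denominator):
    """Return a percentage rounded to the nearest whole number."""
    return round(100 * numerator / denominator)


per_response = rate(responses_received, societies_surveyed)
per_society = rate(societies_responding, societies_surveyed)
print(per_response, per_society)
```

The first figure matches the 43% reported; the second is the slightly lower rate obtained if each society is counted once.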

Data analysis

Responses to closed-ended questions were imported into MS Excel version 2016, which was used for all quantitative analysis. Each response to the questions on concerns about CDSS was scored one if the concern was rated ‘very important’, a half if ‘fairly important’ and zero otherwise, and the scores were summed across respondents to give a total score for each concern.
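The scoring rule for concerns can be illustrated with a minimal sketch. The analysis itself was carried out in MS Excel; the Python below is only an illustration of the same weighting, and the function name, the label normalisation and the example ratings are hypothetical rather than taken from the survey data.

```python
# Weights as described in the text: 'very important' scores one,
# 'fairly important' scores a half, and any other rating scores zero.
WEIGHTS = {"very important": 1.0, "fairly important": 0.5}


def concern_score(ratings):
    """Sum the weighted ratings that one concern received across respondents."""
    return sum(WEIGHTS.get(r.strip().lower(), 0.0) for r in ratings)


# Hypothetical ratings for a single concern (illustrative, not survey data).
example_ratings = [
    "Very important", "Very important", "Fairly important",
    "Not important", "Fairly important", "Very important",
]
score = concern_score(example_ratings)
print(score)  # 4.0
```

Under this rule, the maximum possible total for a single concern equals the number of respondents who rated it.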

Responses to open-ended questions were independently read by two authors who separately carried out thematic analysis by identifying the core theme(s) underlying each comment. These themes were discussed and grouped under a small number of high-level themes for this article.

Results

Box 1 lists the specialty societies that responded (18 out of 44, with two responses received from the Renal Association).

Box 1.

List of responding specialty societies and committees

| Association of British Clinical Diabetologists |

| Association of Cancer Physicians |

| British Association for Sexual Health and HIV |

| British Association of Audiovestibular Physicians |

| British Association of Dermatologists |

| British Association of Stroke Physicians |

| British Cardiovascular Society |

| British Infection Association |

| British Pharmacological Society |

| British Society for Rheumatology |

| British Society of Rehabilitation Medicine |

| Clinical Genetics Society |

| Royal College of Physicians Joint Specialty Committee on Clinical Pharmacology & Therapeutics |

| Royal Pharmaceutical Society |

| Society for Acute Medicine |

| Society for Endocrinology |

| The Renal Association |

| UK Palliative Medicine Association |

Current reported usage of CDSS was to support safe prescribing (19 responses, consisting of assisting in choice of therapy (eight) and calculating drug dose (11)), calculate risk or prognosis (13), report or interpret investigation results (10), monitor disease activity in long-term conditions (seven) or assist in diagnosis (six). This agrees with the systematic review evidence of which clinical tasks are most likely to be influenced by CDSS.14

The identified benefits were improved patient safety (13), improved medicines management (seven), better patient outcomes (seven), more efficient clinical work (seven), more accurate diagnoses (six), fewer drug side effects (four), better use of investigations (four), preventive care activities (four) and reduced resource utilisation (two) – again in line with the evidence.

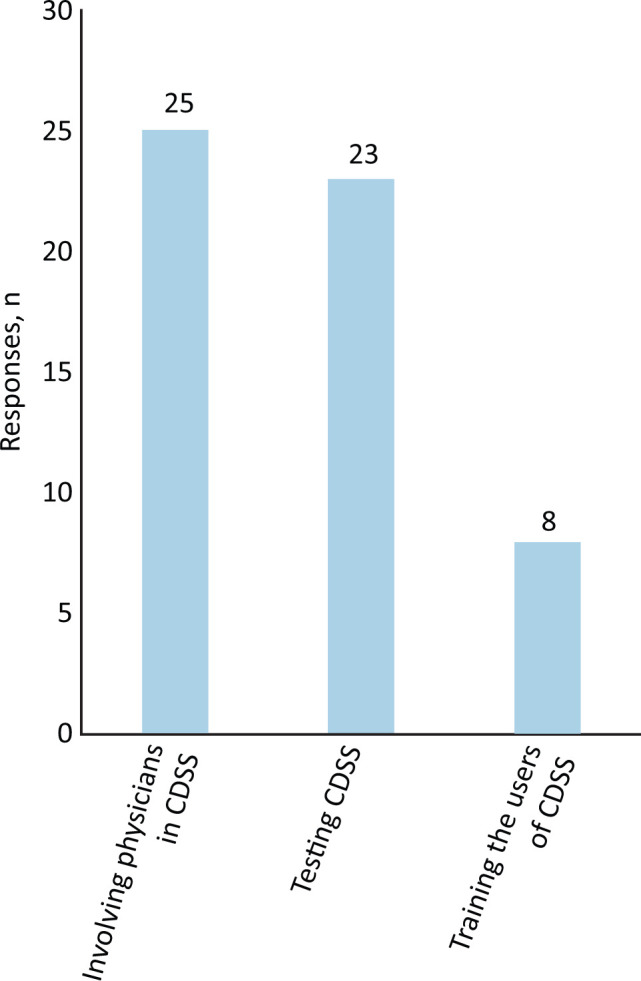

The actions needed to improve the benefits of CDSS (Fig 1) included involving clinicians in system design (15 responses), system procurement (four) or system updates (six), testing the accuracy of CDSS (12) or their impact (11), training system users (eight) or extending the scope of the CDSS (one). No respondent felt that involving physicians in writing the business case for CDSS was useful to help realise their benefits.

Fig 1.

The three most important actions to help realise clinical decision support systems benefits. CDSS = clinical decision support systems.

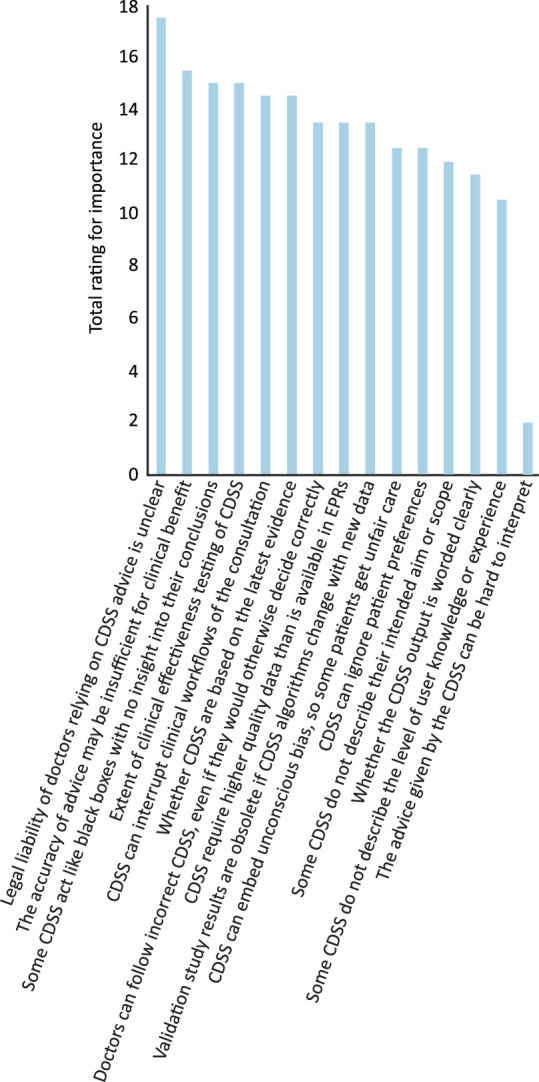

Responses in the section on concerns about CDSS (Fig 2) were scored one if the concern was rated ‘very important’ and a half if ‘fairly important’. Important quality concerns included insufficiently accurate advice (total score 15.5), lack of testing of system effectiveness (15), doubt over whether CDSS are based on the latest evidence (14.5), disrupting the clinical consultation (14.5), that CDSS often ignore patient preferences (12.5) and concerns that CDSS output may not be clearly worded (11.5) or can be hard to interpret (two).

Fig 2.

Concerns about clinical decision support systems quality, regulation, ethics and liability. Concerns about clinical decision support systems scored one if the concern was rated ‘very important’ and a half if ‘fairly important’. CDSS = clinical decision support systems; EPRs = electronic patient records.

Concerns about regulation included poor system labelling (total 22.5; including concerns that CDSS fail to describe their aim or scope of use (12) or the level of user experience required for safe use (10.5)); that CDSS require high-quality patient data that NHS systems rarely provide (13.5); and that study results on CDSS can become obsolete due to fast-changing algorithms (13.5).

Concerns about professional practice, ethics and liability included that the legal liability of doctors following advice is unclear (17.5), that black box systems fail to provide explanation for their advice (15), that doctors may mistakenly follow incorrect advice (15; ‘automation bias’), and that CDSS can embed unconscious bias, leading to unfair care for some patients (12.5). Seven respondents stated that their specialty was taking action to address some of these concerns. These actions ranged from preliminary discussions and workshops on the role of AI to consulting lawyers and the RCP Joint Specialty Committee for Clinical Pharmacology & Therapeutics about their concerns. Others are actively engaging with projects migrating to electronic patient records (EPRs) and increased use of embedded CDSS, or engaging in the development and validation of CDSS, and one specialty society is developing comparable tools to evaluate clinical aspects to complement those being solely undertaken by clinical scientists with no patient contact.

Turning to the regulation of CDSS, the organisations which respondents favoured for setting quality standards were regulators like the MHRA (16 responses), the RCP or other colleges (11), NHS Digital (seven), National Institute for Health and Care Excellence (NICE; six), specialty societies (six) and NHS England (three). The British Standards Institution (BSI) received one mention and trade associations received none. The organisations which respondents suggested should test CDSS against these standards were regulators like the MHRA (11; in fact the MHRA never conducts tests on devices but relies on notified bodies to conduct and report tests; notified bodies received only four responses in the survey), RCP or other colleges (seven), CDSS suppliers (seven; this ignores the fact that testing should be done by an independent body), NICE (five) and specialty societies (four). Individual clinicians, the BSI, hospital trusts and trade associations received zero responses. In a comment, it was suggested that a local EPR project team should test the CDSS.

Finally, we enquired about the three most important aspects of CDSS quality that need to be tested to assure specialty society members that a CDSS is fit for purpose. The largest total response was for testing the impact of the CDSS (23 total responses, consisting of testing CDSS impact on patient outcomes (10), on clinical decisions (six), on clinical actions (four) and on NHS resource utilisation (three)). Next was testing the extent to which CDSS content matches clinical evidence (12), its ease of use in the clinical environment (seven), the accuracy of the advice (six), the acceptability of the advice (six), cost effectiveness (four) and ease of understanding explanations (one). One comment suggested testing CDSS compatibility with other systems, open application programming interface etc.

Final comments on CDSS

Box 2 summarises the final free text comments under three broad themes: the use of and training about CDSS; clinical governance and CDSS; and regulation and evaluation of CDSS. Please see supplementary material S2 for full comments.

Box 2.

Summary of overall comments volunteered about clinical decision support systems, organised under three broad themes

| Use of and training about these systems |

|

| Clinical governance and CDSS |

|

| Regulation and evaluation of CDSS |

|

AI = artificial intelligence; CDSS = clinical decision support system; EPR = electronic patient record.

Discussion

Main results

Some specialties welcome CDSS and are benefiting from their input to support decisions on a range of clinical tasks. Better training about, and clinical governance of, installed CDSS are required, and the NHS and regulators need to ensure that only high-quality CDSS are made available. We captured informed clinician views on what factors make a CDSS high quality, including accuracy and impact, which may differ from the views of regulators such as MHRA and NHS Digital, who take a more technical safety and standards-based approach.

Study strengths

Rather than surveying unselected clinicians who might have no experience of AI and CDSS, we surveyed the better-informed informatics leads of specialty societies, to whom concerns about these tools would be directed and whose role requires that they reflect on these. In addition, as well as capturing quantitative survey data, we took account of their responses to open questions (qualitative data) and analysed this in duplicate to reduce confirmation bias. Our study thus used mixed methods to generate a better understanding of the views of senior clinicians about these tools.

Study weaknesses

The study was small and it is possible that other specialties (eg in surgery or mental health) may have had different views on AI or CDSS.

Conclusion

Implications for practitioners, professional bodies, regulators and developers

While small, this survey of specialty society officers who have carefully considered the professional opportunities, implications and concerns about CDSS has consequences for practice and education. For example, to promote greater uptake of these tools, a more recent analysis of the legal position of doctors who follow CDSS advice, and of those who choose not to do so, is needed to update a 30-year-old Lancet article.15 Once this is available, we need to assemble relevant educational material for CDSS users and to empower physicians to get involved in developing or procuring these systems by publishing a quality checklist, listing questions to ask about CDSS. This could be modelled on the RCP apps checklist.16 There is also a need for education about CDSS to be included in lists of core competencies and curricula, both for clinical informatics specialists and for general physicians. Regulators (for example, the MHRA and NHS England) may be interested in the types of evidence that senior physicians expect to be made available to support an informed choice about using a CDSS (for example, evidence about accuracy as well as impact), and may wish to reflect on how their processes can encourage CDSS developers to include this information in product literature or labels. Finally, developers need to design these systems to promote, not inhibit, understanding and learning from failure, especially for junior doctors. They also need to pay attention to the quality dimensions raised in the section on concerns, avoiding black box systems and systems that constantly change their algorithm in response to new data, as validation study results are then rapidly outdated.

Implications for researchers and research funders

We need to understand better how clinicians of various grades and professional backgrounds choose to use a CDSS that is provided for them, whether there are thresholds for key performance criteria such as accuracy, and how they trade off the potential benefits and risks of CDSS. For example, might they use a ‘black box’ CDSS that is unable to provide explanations of its advice once its accuracy exceeds a certain threshold?

Supplementary material

Additional supplementary material may be found in the online version of this article at www.rcpjournals.org/clinmedicine:

S1 – Final questionnaire about clinical decision support systems.

S2 – Analysis of free text comments made on the overall position.

References

1. Roshanov PS, Fernandes N, Wilczynski JM, et al. Features of effective computerised clinical decision support systems: meta-regression of 162 randomised trials. BMJ 2013;346:f657.

2. Ranji SR, Rennke S, Wachter RM. Computerised provider order entry combined with clinical decision support systems to improve medication safety: a narrative review. BMJ Qual Saf 2014;23:773–80.

3. Groenhof TKJ, Asselbergs FW, Groenwold RHH, et al. The effect of computerized decision support systems on cardiovascular risk factors: a systematic review and meta-analysis. BMC Med Inform Decis Mak 2019;19:108.

4. Jaspers MW, Smeulers M, Vermeulen H, Peute LW. Effects of clinical decision-support systems on practitioner performance and patient outcomes: a synthesis of high-quality systematic review findings. J Am Med Inform Assoc 2011;18:327–34.

5. Jia P, Zhang L, Chen J, Zhao P, Zhang M. The effects of clinical decision support systems on medication safety: an overview. PLoS One 2016;11:e0167683.

6. Stone EG. Unintended adverse consequences of a clinical decision support system: two cases. J Am Med Inform Assoc 2018;25:564–7.

7. Wyatt J. Nervous about artificial neural networks? Lancet 1995;346:1175–7.

8. Department for Business, Energy & Industrial Strategy. Industrial Strategy: building a Britain fit for the future. GOV.UK, 2018. www.gov.uk/government/publications/industrial-strategy-building-a-britain-fit-for-the-future

9. Department for Business, Energy & Industrial Strategy. The Grand Challenge missions. GOV.UK, 2019. www.gov.uk/government/publications/industrial-strategy-the-grand-challenges/missions

10. Brundage M, Avin S, Clark J, et al. The malicious use of artificial intelligence: Forecasting, prevention, and mitigation. Oxford: Future of Humanity Institute, 2018.

11. Harwich E, Laycock K. Thinking on its own: AI in the NHS. Reform, 2018.

12. BBC News. Statins alert over IT glitch in heart risk tool. BBC, 2016. www.bbc.co.uk/news/health-36274791

13. Teach RL, Shortliffe EH. An analysis of physician attitudes regarding computer-based clinical consultation systems. Comput Biomed Res 1981;14:542–58.

14. Garg AX, Adhikari NK, McDonald H, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA 2005;293:1223–38.

15. Brahams D, Wyatt J. Decision-aids and the law. Lancet 1989;2:632–4.

16. Wyatt JC, Thimbleby H, Rastall P, et al. What makes a good clinical app? Introducing the RCP Health Informatics Unit checklist. Clin Med 2015;15:519–21.