Abstract

Generalised anxiety disorder (GAD) is prevalent among college students in India; however, barriers like stigma, treatment accessibility and cost prevent engagement in treatment. Web- and mobile-based, or digital, mental health interventions have been proposed as a potential solution to increasing treatment access. With the ultimate goal of developing an engaging digital mental health intervention for university students in India, the current study sought to understand students’ reactions to a culturally and digitally adapted evidence-based cognitive behavioural therapy (CBT) for GAD intervention. Specifically, through theatre testing and focus groups with a non-clinical sample of 15 college students in India, the present study examined initial usability, acceptability and feasibility of the “Mana Maali Digital Anxiety Program.” Secondary objectives comprised identifying students’ perceived barriers to using the program and eliciting recommendations. Results indicated high usability, with the average usability rating ranking in the top 10% of general usability scores. Participants offered actionable changes to improve usability and perceived acceptability among peers struggling with mental health issues. Findings highlight the benefits of offering digital resources that circumvent barriers associated with accessing traditional services. Results build on existing evidence that digital interventions can be a viable means of delivering mental healthcare to large, defined populations.

Keywords: College students, Cognitive behavioural therapy, Digital interventions, India

Mental health disorders are a public health concern among college students in India (Sunitha & Gururaj, 2014). Anxiety disorders are the most prevalent disorder among Indian college students, with approximately 19% of young adults in India experiencing generalised anxiety disorder (GAD; Sahoo & Khess, 2010). Untreated GAD is associated with significant distress, reduced quality of life, increased prevalence of medical problems, increased costs of healthcare, and higher rates of comorbid mental health issues (Bereza, Machado, & Einarson, 2009; Yonkers, Bruce, Dyck, & Keller, 2003). As the average age of onset of GAD is early adulthood, college-aged youth are particularly vulnerable (Kessler et al., 2007).

For students in India, academic and parental pressures are primary drivers of mental health concerns (Banu, Deb, Vardhan, & Rao, 2015). Specifically, academic stress, often induced by tests, grades, studying and a self-imposed desire to succeed, has been linked to anxiety among college students in India (Banu et al., 2015). This self-imposed academic pressure is often compounded by parental pressure to succeed, with students frequently anxious about meeting parental expectations for academic achievement (Banu et al., 2015).

A key barrier to accessing treatment in India is the limited availability of mental healthcare professionals. Nationwide, there are only approximately 3800 psychiatrists, 900 clinical psychologists, 850 psychiatric social workers and 1500 psychiatric nurses (Sabha, 2015). In a population of over 1.3 billion individuals, this results in roughly one mental health professional per 185,000 people, a ratio that is considerably greater than the U.S. rate of one mental health provider per 580 individuals (Fairburn & Patel, 2014). Moreover, traditional counselling is rarely offered in Indian colleges and, when it is, students cite barriers like stigma and confidentiality concerns as preventing access (Menon, Sarkar, & Kumar, 2015). A focus on alternative forms of treatment has been proposed to address such a significant treatment gap (Murthy & Isaac, 2016).
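The provider-to-population ratio quoted above can be reproduced with simple arithmetic. The sketch below uses only the workforce counts and the U.S. comparison figure cited in the text; the population value of exactly 1.3 billion is an assumption (the text says "over 1.3 billion"), so the result is an approximation rather than an official statistic.

```python
# Workforce counts as cited in the text (Sabha, 2015).
professionals = {
    "psychiatrists": 3800,
    "clinical_psychologists": 900,
    "psychiatric_social_workers": 850,
    "psychiatric_nurses": 1500,
}

# Assumption: the text says "over 1.3 billion"; 1.3 billion is used as a round figure.
population = 1_300_000_000

total_professionals = sum(professionals.values())
people_per_professional = population / total_professionals

# U.S. comparison figure as cited in the text (one provider per 580 individuals).
us_people_per_provider = 580
fold_difference = people_per_professional / us_people_per_provider

print(total_professionals)             # 7050
print(round(people_per_professional))  # 184397, i.e. roughly 185,000
print(round(fold_difference))          # 318
```

Under these assumptions, each professional in India would serve a few hundred times as many people as a U.S. provider.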

Cognitive behavioural therapy (CBT) has been shown to be successful in treating anxiety disorders (Olatunji, Cisler, & Deacon, 2010), including among Indian adolescents (Sharma, Mehta, & Sagar, 2016). Research has also confirmed the efficacy of Internet-delivered CBT for treating anxiety disorders, with the majority of studies targeting GAD. A meta-analysis of 11 randomised controlled trials found a significant reduction (d = −0.91) in GAD symptoms when comparing treatment versus control groups (Richards, Richardson, Timulak, & McElvaney, 2015). Further, research has replicated positive outcomes when delivering digital health interventions to college students (Davies, Morriss, & Glazebrook, 2014).

Despite accumulating evidence suggesting the value of digital CBT-based interventions for treating anxiety among college students, limited research has been replicated in India (for a discussion, see Kanuri et al., 2015a; Kanuri, Taylor, Cohen, & Newman, 2015b). For example, Sharma et al. (2016) demonstrated CBT to be effective at treating GAD among Indian adolescents. However, to date, such studies have been few in number and small in sample size, with treatments delivered face-to-face.

Leveraging technology to deliver mental health interventions could reduce key barriers to access like counsellor availability, stigma and cost (Fairburn & Patel, 2017). Prior or in parallel to evaluating the effectiveness of digital health interventions, examining implementation outcomes is critical to ensuring the use and sustainability of interventions in real-life settings (Drotar & Lemanek, 2001). Proctor et al. (2011) outlined several key outcomes and the importance of assessing each when disseminating evidence-based treatments. Acceptability, or the perception among stakeholders that a given treatment is satisfactory, and feasibility, or the extent to which a new treatment can be successfully carried out within a given setting, have been highlighted as key variables to consider when developing and implementing interventions (Proctor et al., 2011). When examining digital health interventions in particular, the importance of assessing usability, or the extent to which a product can be used by the intended audience to achieve intended goals, has also been underscored (Ben-Zeev et al., 2014).

CURRENT STUDY

Although the literature reinforces the use of digital mental health interventions to reduce symptoms of GAD, few studies have assessed the effectiveness of such interventions developed for and delivered to college students in India (for a discussion and example, see Mehrotra, Sudhir, Rao, Thirthalli, & Srikanth, 2018). Considering the challenges associated with the delivery of digital mental health interventions (Mohr, Weingardt, Reddy, & Schueller, 2017) and the unique mental health needs of the Indian college-aged population (Sunitha & Gururaj, 2014), research examining the usability, acceptability and feasibility of interventions among this population is needed. Accordingly, the purpose of this study was to evaluate the initial usability, acceptability and feasibility of a digital GAD intervention for use with Indian college students. Secondary objectives were to examine students’ perceived barriers to using the program and elicit recommendations for modification prior to formal implementation and evaluation among an at-risk, clinical population.

METHODS

As an initial step in the evaluation of a digital anxiety program, we sought to assess its usability, acceptability and feasibility with Indian college students. “Theatre testing,” an innovative approach frequently leveraged in treatment adaptation research wherein participants preview a new intervention and share their feedback (Wingood & DiClemente, 2008), was employed. Theatre testing with non-clinical samples has been proposed as a beneficial initial step prior to engagement in a more formal feasibility study with a clinical sample in order to further optimise usability and acceptability prior to a costlier rollout to an at-risk population. In this study, usability was conceptualised as students’ perception of the ease of using the program; acceptability as students’ perception of the program’s ability to satisfactorily address mental health concerns; and feasibility as students’ perception of the ability to engage with the program via a digital platform.

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards.

PARTICIPANTS

Fifteen students from a leading engineering university in India were included in this study. Participants ranged in age from 18 to 22 years, with an average of 19.07 years (SD = 1.33). Most participants were male (n = 13; 86.7%), which was representative of the 4:1 ratio of males to females at this university and most engineering schools in India. Most students were in their first year of college (n = 9; 60.0%). Students’ most common field of study was chemical engineering (n = 7; 46.7%) followed by computer science (n = 3; 20.0%). All participants had at least an intermediate level of English fluency and most also spoke Hindi (n = 14; 93.3%). Approximately 60% (n = 9) reported speaking at least one additional regional language (e.g., Gujarati, Telugu), with six distinct languages identified. All participants had at least one parent with a bachelor’s degree. See Table 1 for participant demographics.

TABLE 1.

Student demographic data

| | Number | Percentage (%) |
|---|---|---|
| Gender | | |
| Male | 13 | 86.7 |
| Female | 2 | 13.3 |
| Age | | |
| 18 | 7 | 46.7 |
| 19 | 4 | 26.7 |
| 20 | 1 | 6.7 |
| 21 | 2 | 13.3 |
| 22 | 1 | 6.7 |
| Year in college | | |
| 1st year | 9 | 60.0 |
| 2nd year | 2 | 13.3 |
| 3rd year | 4 | 26.7 |
| English proficiency | | |
| Fluent | 7 | 46.7 |
| Intermediate | 8 | 53.3 |
| Beginner | - | - |
| Field of study<sup>a</sup> | | |
| Master of Science (M. Sc) | | |
| Physics | 1 | 6.7 |
| Economics | 1 | 6.7 |
| Chemistry | 1 | 6.7 |
| Biological Sciences | 2 | 13.3 |
| Bachelor of Engineering (B. E. Honours) | | |
| Computer Science | 3 | 20.0 |
| Chemical Engineering | 7 | 46.7 |
| Mechanical Engineering | 1 | 6.7 |
| Electrical and Electronics Engineering | 2 | 13.3 |
| Electronics & Instrumentations | 2 | 13.3 |
| Parent education | | |
| Bachelor’s Degree | 6 | 40.0 |
| Master’s Degree | 5 | 33.3 |
| Advanced graduate work/PhD | 4 | 26.7 |
| Estimated monthly family income | | |
| Prefer not to say | 4 | 26.7 |
| Less than 25,000 rupees/month | 2 | 13.3 |
| 25,000 to 50,000 rupees/month | 3 | 20.0 |
| 1 lakh rupees to 5 lakh rupees/month | 6 | 40.0 |
| Additional languages spoken<sup>a</sup> | | |
| Hindi | 14 | 93.3 |
| Malayalam | 1 | 6.7 |
| Punjabi | 1 | 6.7 |
| Telugu | 3 | 20.0 |
| Gujarati | 2 | 13.3 |
| Odia | 1 | 6.7 |
| Tamil | 1 | 6.7 |

Note: Percentages may not add to 100% due to rounding.

<sup>a</sup> Categories are not mutually exclusive.

MEASURES

Usability

The System Usability Scale (SUS; Brooke, 1996) is a 10-item measure that assesses usability, which encompasses whether users can achieve their objectives, how much effort is expended in doing so, and whether the experience was satisfactory. Items are rated on a 5-option Likert scale ranging from “strongly disagree” (1) to “strongly agree” (5). To score the SUS, the following directions are employed: for each odd numbered item, one is subtracted from each score; for each even numbered item, the response value is subtracted from the number five; the total score of the preceding items is then multiplied by 2.5. The final scores range from zero to one hundred, with higher scores indicating a greater level of usability. Scores above 68 are considered to be above average, whereas scores above 80.3 are in the top 10%. In an analysis of over 10 years of data from 206 studies on the SUS, the survey was found to be a highly reliable and valid measure of usability, with Cronbach’s alphas consistently reported above .90 (Bangor, Kortum, & Miller, 2008). For this study, item wording was adapted, replacing “system” with “website.” Cronbach’s alpha, calculated using IBM’s Statistical Package for the Social Sciences (SPSS), was 0.87.
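The scoring directions above can be sketched in a few lines of Python. This is an illustrative sketch, not the study's analysis code (the study reports using SPSS). The function name `sus_score` and the example responses are hypothetical, and the function assumes responses are supplied in the standard SUS item order (item 1 first).

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Score one respondent's 10 SUS answers (each 1-5) on the 0-100 scale."""
    if len(responses) != 10:
        raise ValueError("the SUS has exactly 10 items")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")

    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1  # odd-numbered item: subtract one
        else:
            total += 5 - response  # even-numbered item: subtract from five
    return total * 2.5


# Hypothetical respondents for illustration only; these are not study data.
best_case = [5, 1, 5, 1, 5, 1, 5, 1, 5, 1]
neutral = [3] * 10

print(sus_score(best_case))  # 100.0
print(sus_score(neutral))    # 50.0
```

After this transformation each item contributes between 0 and 4 points, which is why the 10-item sum (0 to 40) is multiplied by 2.5 to reach the 0 to 100 range.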

Acceptability

The Treatment Satisfaction and Acceptability Measure (TSAM; Yoman, Hong, Kanuri, & Stanick, 2018) is a nascent, 14-item measure designed to assess treatment acceptability. Items are rated on a six-point Likert scale from “strongly disagree” (1) to “strongly agree” (6), with six items reverse scored. Scores range from 14 to 84, with higher scores indicating higher acceptability. Items include statements like, “I would expect great improvement as a result of this treatment.” Given that our study targeted a non-clinical student population, statements were modified by adding the dependent clause, “if I were struggling with mental health problems.” Thus, “hypothetical acceptability” was measured. As the measure is relatively new, no data on reliability and validity are available. Although the lack of data is a limitation, at this initial stage of the research, gathering student responses to definitive items was determined to be more important than gathering responses to peer-reviewed but imperfect items. Cronbach’s alpha was 0.77.
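Reverse scoring and Cronbach’s alpha can be illustrated with a short sketch. The positions of the six reverse-scored items in `REVERSE_ITEMS` are hypothetical placeholders, since the text does not give the TSAM item order, and the function names and example data are likewise illustrative. The alpha function uses the standard formula, α = k/(k − 1) × (1 − Σ item variances / variance of total scores); it is not the SPSS routine used in the study.

```python
from statistics import variance
from typing import Sequence, Set

# Hypothetical positions (1-indexed) of the six reverse-scored items.
REVERSE_ITEMS: Set[int] = {3, 8, 9, 11, 13, 14}


def tsam_score(responses: Sequence[int], reverse_items: Set[int] = REVERSE_ITEMS) -> int:
    """Sum 14 responses (each 1-6), reverse scoring flagged items as 7 - response."""
    if len(responses) != 14:
        raise ValueError("the TSAM has exactly 14 items")
    if any(r < 1 or r > 6 for r in responses):
        raise ValueError("each response must be between 1 and 6")
    return sum(
        (7 - r) if item_number in reverse_items else r
        for item_number, r in enumerate(responses, start=1)
    )


def cronbach_alpha(rows: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha; one row per respondent, one column per (already scored) item."""
    k = len(rows[0])
    item_variances = [variance(column) for column in zip(*rows)]
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical respondent who fully endorses the treatment: 6 on positively
# worded items and 1 on reverse-scored items gives the maximum score.
most_favourable = [1 if i in REVERSE_ITEMS else 6 for i in range(1, 15)]
print(tsam_score(most_favourable))  # 84

# Toy data with perfectly consistent items, so alpha is (approximately) 1.
toy_rows = [[1, 1, 1], [2, 2, 2], [3, 3, 3]]
print(round(cronbach_alpha(toy_rows), 6))  # 1.0
```

Reverse scoring maps 1 to 6, 2 to 5, and so on, so that a higher value always indicates higher acceptability before items are summed.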

Focus group protocol

A semi-structured interview protocol was developed by the research team in collaboration with local student research assistants reviewing for language and readability (see Appendix). Most of the 13 questions had dynamic follow-up questions to elicit more feedback. The questions addressed topics related to usability (i.e., the ease of interacting with the program), acceptability (i.e., the appropriateness of the content in terms of language, length and format) and feasibility (i.e., how likely the mental health program could be delivered to students via a web-based platform). Participants were allowed to comment on any topic.

Demographic questionnaire

Participants reported on age, gender, year in college, major, languages spoken, fluency in English, estimated monthly family income, and highest level of education obtained by parents.

PROCEDURE

Convenience sampling was used to identify participants. Per the university’s request and in line with the theatre testing approach, we sought a general student perspective of the potential use of a digital anxiety program in the university setting. Members of the on-campus student mental health advocacy club disseminated information about the study opportunity. Interested students (n = 27) learned about the study goals and provided consent. Demographic questionnaires were completed by participants after providing consent. Participants then received account information and a “User Guide” comprising: (a) a description of the digital program; (b) session navigation instructions; and (c) a suggested timeline of use.

Participants were granted access to the 20-session program for a four-week period. We recommended completing one session each weekday in order to best experience the treatment as designed; however, participants were free to complete the sessions at their convenience. Of the 27 students, 11 did not initiate the program due to reported time constraints of school. The remaining 16 completed two or more sessions, with an average of 10.73 sessions. They were asked to complete a post-study survey at the end of the four-week period. Fifteen completed post-assessments.

These 15 students then participated in semi-structured focus groups conducted on campus. One of the local research assistants, a student at the university known to the participants, was present at all groups. Two team members joined via Google Hangouts, specifically a mental health professional originally licensed in India who led the focus group and an undergraduate who took notes. Focus groups included four to six individuals and lasted between 41 and 70 minutes (M = 53.5 minutes). Discussions were audio recorded with participant consent for transcription and coding. The study was approved by the Johns Hopkins Institutional Review Board and the governing body at the participating Indian college. Informed consent was obtained from all individual adult participants included in the study.

THE MANA MAALI DIGITAL ANXIETY INTERVENTION

The Mana Maali, Hindi for “gardener of the mind,” digital anxiety program was based on an evidence-based CBT for GAD intervention (Newman & Borkovec, 1995). Newman and Borkovec’s CBT for GAD treatment protocol for clinicians was a 14-session, face-to-face intervention including a variety of approaches like relaxation training and cognitive restructuring. In order to improve cultural fit for the Indian college-aged population, modifications were made to the content. Specifically, in collaboration with the original authors, a team of clinical psychologists, psychiatrists, and researchers from the U.S. and India adapted Newman and Borkovec’s intervention for cultural competence. Modifications were informed by student feedback gathered in prior studies in which the research team delivered a digital version of the original intervention to college students in India (Kanuri et al., 2015a, 2015b). With regard to modification, the language was simplified to suit a population for whom English was often a second language. Illustrative scenarios were also adapted. For example, one vignette detailed the stress of leaving home to attend college in an urban city, whereas another addressed managing anxiety related to parental pressure to achieve academically (Banu et al., 2015). All audio and video were recorded by locals to ensure the accent and pronunciation were easy to understand.

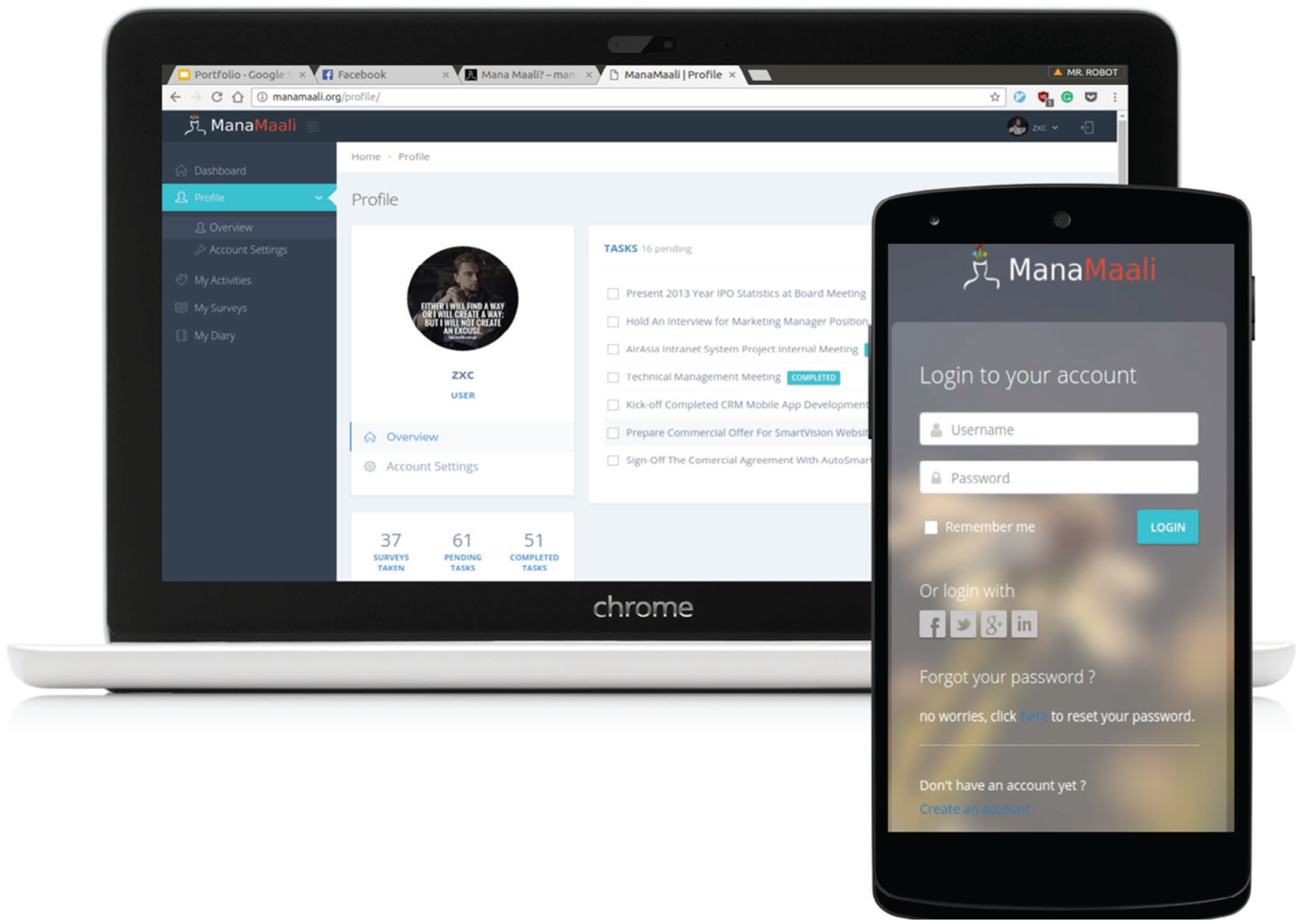

Adaptations were also made to translate an originally face-to-face intervention into a digitally-delivered program. See Figure 1 for a depiction of the web-based portal. Content was reduced to ensure sessions could be completed in 10–15 minutes. Brief relaxation exercises were designed for limited internet connectivity environments. Additionally, techniques like breathing exercises and mindfulness were repeated to facilitate skills rehearsal and ultimately acquisition.

Figure 1.

The Mana Maali Digital Anxiety Program.

The resulting GAD program comprised 20 sessions, designed to be completed in 10–15 minutes daily, with the goal of helping students: (a) learn about anxiety; (b) identify symptoms; (c) monitor thoughts and feelings; and (d) cope with their anxiety. Each session comprised two parts. The first didactic component educated students about the cause or experience of anxiety (e.g., worry, logical errors, automatic negative thoughts). The second practical component taught students a technique to cope with anxiety (e.g., diaphragmatic breathing, muscle relaxation). While the content was restructured to enable “micro” 10-minute daily sessions, the content of both components was derived from Newman and Borkovec’s evidence-based CBT for GAD intervention (Newman & Borkovec, 1995). The program comprised text, audio recordings and videos, either embedded in the program or linked to a third-party site (e.g., YouTube).

Technical platform development

A platform to support the secure delivery of the intervention content, track objective data on program use, and evaluate participant outcomes was developed. The platform was built over a six-month semester by a team of five graduate and undergraduate software engineers from a leading engineering university in India. Biweekly meetings were held with a research team situated in the U.S. and India.

DATA ANALYSIS

Quantitative data regarding acceptability and usability were analysed using descriptive statistics. Qualitative responses collected via the focus groups were audio recorded and transcribed. Using a qualitative data analysis approach for health services research outlined by Bradley, Curry, and Devers (2007), student responses were coded and analysed for themes by three research members. Based on the constructs being evaluated and the associated questions included in the interview guide, the research team created an a priori coding structure defining themes of usability, acceptability and feasibility. Team members independently coded one transcript for these themes and any other themes that emerged. They then met to agree on a final coding structure and used the revised structure to recode all three focus group transcripts individually. The team met a final time to align on final codes per transcript, specifically using Bradley et al.’s systematic approach to resolve any code discrepancies via discussion, re-review and voting.

RESULTS

Quantitative analysis

The mean number of sessions completed over the 4-week period was 10.73 (SD = 4.86, Range = 2–18). The average score on the SUS (Brooke, 1996) was 82.33 (SD = 11.89, Range = 55–100), which is considered to be in the top 10% of general usability scores. The item with the highest mean score (M = 4.00; SD = 0.00) was “I thought the website was easy to use.” See Table 2 for the average scores and SDs for each item.

TABLE 2.

Means and SDs for each item of the System Usability Scale (SUS)

| SUS item | Mean score | SD |
|---|---|---|
| I thought the website was easy to use | 4.00 | - |
| I think that I would need the support of a technical person to be able to use this website | 3.73 | 0.59 |
| I needed to learn a lot of things before I could get going with this website | 3.47 | 0.83 |
| I would imagine that most people would learn to use this website very quickly | 3.33 | 1.11 |
| I felt very confident using the website | 3.27 | 0.46 |
| I found the website very cumbersome to use | 3.27 | 0.59 |
| I found the website unnecessarily complex | 3.20 | 1.21 |
| I think that I would like to use this website frequently | 3.00 | 0.76 |
| I thought there was too much inconsistency in this website | 2.87 | 0.64 |
| I found the various functions in this website were well integrated | 2.80 | 0.77 |

Note: All participants (n = 15) responded to every item.

Odd-numbered items are scored by subtracting one from the response value, while even-numbered items are scored by subtracting the response value from the number five. The sum of all items is then multiplied by 2.5.
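As a consistency check, the item means in Table 2 can be related to the overall SUS mean reported in the text. The sketch below assumes the tabled means are on the scored 0 to 4 scale described in the note (an inference from the values, not something the table states outright). Because the mean of a sum equals the sum of the means, summing the ten item means and multiplying by 2.5 should approximately recover the reported average of 82.33, with small differences expected from rounding of the item means.

```python
# Item means copied from Table 2 (assumed to be scored 0-4 contributions).
item_means = [4.00, 3.73, 3.47, 3.33, 3.27, 3.27, 3.20, 3.00, 2.87, 2.80]

reported_mean_sus = 82.33  # overall mean reported in the text

# Sum of item means, rescaled to the 0-100 SUS range.
reconstructed_mean_sus = sum(item_means) * 2.5

print(f"{reconstructed_mean_sus:.2f}")                           # 82.35
print(abs(reconstructed_mean_sus - reported_mean_sus) < 0.05)    # True
```

The reconstructed value differs from the reported mean by about 0.02 points, which is within what rounding each item mean to two decimals would produce.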

The average TSAM (Yoman et al., 2018) score was 66.27 (SD = 6.63, Range = 55–77). Following reverse scoring, the items with the highest means were, “If I was experiencing mental health problems in the future, I would be likely to seek out this treatment” (M = 5.07, SD = 0.59) and “If I were struggling with mental health problems, I would like this treatment.” (M = 5.07, SD = 0.70). See Table 3 for the average scores and SDs for each item.

TABLE 3.

Means and SDs for each item of the Treatment Satisfaction and Acceptability Measure (TSAM)

| TSAM item | Mean score | SD |
|---|---|---|
| If I was experiencing mental health problems in the future, I would be likely to seek out this treatment. | 5.07 | 0.59 |
| If I were struggling with mental health problems, I would like this treatment. | 5.07 | 0.70 |
| If I were struggling with mental health problems, this treatment suggested that there was something seriously wrong with me.<sup>a</sup> | 5.07 | 1.49 |
| I think this treatment was worth the time commitment and effort if I were struggling with mental health problems. | 4.87 | 0.64 |
| This treatment gave me more power over my problems if I were struggling with mental health problems. | 4.87 | 0.83 |
| If I knew someone who was struggling with mental health problems, I would tell them about this treatment. | 4.87 | 1.06 |
| This treatment fit well with the changes I would like to make in my life if I were struggling with mental health problems. | 4.80 | 0.56 |
| If I were struggling with mental health problems, I would not expect the effects of this treatment to last.<sup>a</sup> | 4.80 | 1.01 |
| This treatment suggested that I was to blame for my problems if I were struggling with mental health problems.<sup>a</sup> | 4.80 | 1.61 |
| I knew what I could do to get the most help from this treatment if I were struggling with mental health problems. | 4.73 | 1.10 |
| If I were struggling with mental health problems, I would feel uncomfortable with this treatment.<sup>a</sup> | 4.73 | 1.22 |
| If I were struggling with mental health problems, I would expect great improvement as a result of this treatment. | 4.53 | 0.74 |
| If I were struggling with mental health problems, I would not think this treatment is as good as other treatments.<sup>a</sup> | 4.47 | 1.13 |
| I would not expect to see rapid improvement as a result of this treatment if I were struggling with mental health problems.<sup>a</sup> | 3.73 | 1.71 |

Note: All participants (n = 15) responded to every item.

<sup>a</sup> These six items are reverse scored.

Qualitative analysis

Themes of data collected from the focus group interviews and representative quotes are presented below.

Usability

Participants indicated the platform was intuitive to navigate. One student said, “It’s like using Coursera [an online learning platform] or like how we study here.” However, several participants reported that, unlike the desktop version, the program was not user-friendly when accessed via their personal cell phones, a common method among participants. One student reported, “The interface was good, but alignment of text and other features [on the cell phone] could be improved.” Accordingly, students advised developing a more mobile-friendly design given students’ increasing reliance on mobile phones to access the Web. Some suggested an app, while others indicated a Web-based program optimised for mobile access would be sufficient and quicker to load.

Although many students agreed that session content was helpful, they wanted delivery to be more engaging. One student mentioned, “The text was actually good. But I think it was too textual, like three to four pages of complete text. There were no pictures, so it was pretty bland. It starts to feel boring.” Participants suggested including more illustrations, animations, and videos. They preferred content organised on multiple pages versus each session requiring significant scrolling to view all parts. Another student suggested an organisational framework: “I think it should be more like a road map, like it should be a journey where … you are learning to tackle your problems right … have some checkpoints [to show] you completed this … it will give you motivation to continue further.”

Students suggested a search tool to find sessions tagged with particular keywords and techniques labelled with short descriptions. For example, one student envisioned entering a keyword like “depression,” finding a list of sessions including content and/or techniques related to managing depression, and hovering over each session title to reveal a brief description. This would enable selection of the best technique in a moment of need.

Finally, participants described web links to third-party sites (e.g., YouTube) as suboptimal. Instead, the majority agreed that accessing videos and audio content within the website itself would streamline the experience and decrease load time. The majority also suggested videos should be concise, no longer than a few minutes. In addition, participants suggested that videos demonstrating techniques like breathing exercises could also be offered as step-by-step illustrations to enable access even in limited connectivity environments.

Acceptability

Participants believed the program could effectively address unmet needs among the Indian college-aged population. The majority indicated the digital program would help peers suffering from anxiety and that they would recommend the program to a friend. Of note, most participants perceived the program as helpful to most students, even those without a clinical anxiety disorder. Indeed, many participants mentioned that they continued to use certain techniques after the study period as they found them to be beneficial. One student stated, “Yes, the material was quite relevant … it’s a nice thing because emotional education is not emphasized or spoken about in our country.” After sharing the program content with a peer, one student detailed, “I showed him some of the modules, some of the activities. He was quite happy to see [the program].”

Additionally, the majority of students in each focus group agreed the program would help students overcome barriers related to seeking help for mental healthcare. As one participant stated, “Sometimes some people have … problems actually accepting and seeking out treatment for mental health issues. Since the website does not require much effort and it can be done at home, that’s an advantage.”

Finally, participants were divided regarding the potential incorporation of an online program guide, or coach. Some indicated they would prefer to work through lessons on their own and maybe engage a coach later on. Others believed a coach would significantly improve the experience through personalization and focused attention. One student said, “At the end of the day, I do feel human conversation is actually necessary … it helps a lot if you are under stress.”

Feasibility

Students nearly unanimously agreed this digital mental health program was a practical option for their peers and feasible to implement on their campus. They perceived the effort required to incorporate this program into their daily schedule as reasonable. One student shared, “One of the most important things was [the modules were] very short, like 10 or 15 minutes per session … which makes a person feel like he can come back and do it.”

Barriers to use

Although not directly queried, students raised potential barriers to using the program. Students suggested mental health stigma might still limit engagement. To address this concern, they recommended incorporating content regarding stigma to normalise feelings of shame or embarrassment and, hopefully, increase the likelihood of continued use. One student shared, “We can show it is completely fine to talk about your mental health and it is not a big deal … you should feel free to share with the counsellor [on campus] or with your coach [if you have one].” Further, considering students’ limited knowledge about mental health generally, students suggested presenting first-time users with introductory information about the potential benefits of mental health treatment, the way in which the treatment methods work, and a preview of activities (e.g., diaphragmatic breathing) to help those considering its use.

Recommendations

Students across the focus groups brought up the potential benefits of connecting with others who are going through the same thing. While they acknowledged the possible risks to anonymity and privacy, most believed in the potential of peer support if designed in the right way:

Interviewer (I): Okay, what do you think will make it [the program] better?

Student (S1): In such situations one of the most important things is to know that you are not alone. So after a few weeks of use it is better if we can contact with someone else who is facing the same problems maybe. Which is possible if the online counsellor recommends someone or something.

S2: Or a place where you could post like this was my worry, how did I overcome it and like you can explain it to others. Like an anonymous chat forum or something.

I: Within the program you mean?

S1 and S2: Yeah.

S1: If the online counsellor is talking to someone, maybe he can talk to a group of people facing the same problem at the same time, so that all of them can pitch in and learn from each other’s problems.

Students also suggested adding a rating system for the techniques taught in the program, reminders and notifications beyond email, and a way to show students their results over time (e.g., a dashboard).

DISCUSSION

The current study found that the Mana Maali Digital Anxiety Program achieved generally high levels of usability, acceptability and feasibility as evaluated by a non-clinical population of college students in India. High scores on the SUS (Brooke, 1996) suggested students perceived the program to be user-friendly. Likewise, high scores on the TSAM (Yoman et al., 2018), along with qualitative feedback, indicated a belief in the program’s ability to meet the needs of peers struggling with mental health issues. Focus group themes confirmed the intervention was culturally acceptable, owing in large part to the program’s ability to circumvent barriers associated with more conventional treatments by offering confidentiality and convenience. Feasibility was also rated highly, with most participants believing the program could be easily incorporated into a student’s schedule. The primary perceived barrier to use was clunky and time-consuming activities, which could ultimately impede long-term engagement and benefit. Students’ suggestions included reducing the amount of content on a single page, inserting more rich media, ensuring swift access to exercises accessed via mobile devices, and enabling content tagging and search functions to tailor the experience based on immediate need.

College students in India check their phones more than 150 times per day (Khan, 2018). Given India has the world’s second largest and fastest growing population of smartphone users (GSMA, 2019), designing and delivering easy and engaging mental health treatments via mobile phones has significant public health potential. To effectively engage students, participants suggested including information about the potential benefits of the treatment and how it works. Research by Yeager, Shoji, Luszczynska, and Benight (2018) underscores this suggestion, demonstrating that priming outcome expectancy and treatment self-efficacy increases engagement. Delivering a mental health literacy intervention prior to the mental health intervention itself could prove beneficial for longer-term engagement.

These results corroborate findings of similar studies in the digital mental health intervention literature, specifically that digital programs can prove an acceptable and effective means of accessing mental health treatment in environments with limited access to care and pervasive stigma (Lewis, Pearce, & Bisson, 2012). Naslund et al. (2017) found that, across 13 studies of online mental health programs delivered in low-resource countries, individuals who completed the programs reduced anxiety and depression symptoms and improved quality of life. However, they reported high attrition rates, suggesting a usable, engaging platform is critical to achieving outcomes. Berry, Lobban, Emsley, and Bucci (2016) suggest privacy is also critical. In a systematic review of online and mobile mental health interventions, they demonstrated that enhanced privacy increased acceptability among those with mental health problems.

This research suggests the Mana Maali Digital Anxiety Program is perceived as both usable and confidential. Such findings pave the way toward an evaluation with larger clinical samples of university students in India. In a usability study of a smartphone application for youth with anxiety, Stoll, Pina, Gary, and Amresh (2017) discovered that system usability was significantly correlated with greater system satisfaction, and concluded usability evaluations should be conducted prior to establishing effectiveness.

Research supports the potential efficacy of digital anxiety interventions. In a systematic review, Lewis et al. (2012) demonstrated that online interventions for anxiety disorders achieved a large effect size when compared to a waitlist control group, suggesting online interventions can be effective in low-resource settings in which licensed therapists are in short supply. That being said, such “low intensity” interventions are suggested to function best when implemented in a stepped-care system (Haaga, 2000). In such a closed-loop system, those who do not improve can be “stepped up” to the next level of care (e.g., an on-campus counsellor) (Kanuri et al., 2015b). Given the scarcity of research on digital mental health programs for college students in India, these findings will enable the development and implementation of digital mental health interventions that meet this population’s needs and expectations.

LIMITATIONS

There are a number of limitations associated with this study. First, the small, non-random participant sample limits the generalizability of the findings. Although feedback indicated the program could be useful to all students, regardless of current mental health issues, the non-clinical nature of the sample further reduces generalizability. Specifically, students asked to rate their perceived satisfaction as if they had mental health concerns might respond differently than students currently struggling with mental health issues. Another limitation was that students were exposed to variable amounts of the program, completing only 50% of it on average. However, as the aim of the study was an initial evaluation of the perceived acceptability, feasibility, and usability of the digital anxiety program, any amount of exposure to the program’s interface and content was determined sufficient to elicit feedback to inform future program iterations prior to additional evaluation. Future research should seek to engage a clinical sample of college-aged students in a true feasibility study, followed by a randomised trial incorporating a control group with a larger clinical sample of college students in India. Future research on program efficacy should also control for program exposure.

Another limitation concerns the measures used. Namely, psychometric support for the TSAM (Yoman et al., 2018) is not yet available. However, this measure was selected for its alignment with constructs of interest and was combined with qualitative focus group data, which supported conclusions drawn from the quantitative measure. Nonetheless, future research should seek to use validated measures of treatment acceptability.

CONCLUSION

This study was the first to develop and test a digital intervention designed to treat GAD among college students in India. Findings bolster the evidence base supporting the use of digital mental healthcare for college students in India. In an increasingly competitive world, in both academic and professional environments, college students will continue to face academic stress. The need for more accessible, scalable and cost-effective means of delivering mental healthcare to college students has never been greater. Digital programs, capable of adapting to individual student needs, can help meet that growing need. While in-person support with a trained professional may continue to be the most effective and likely most desired form of therapy, these technologies can begin to close the widening treatment gap by enabling new ways of human connection and exponentially increasing access to evidence-based treatments.

APPENDIX

Focus Group Guide

INTRODUCTION

“I would like to thank you all for being here today. First, let’s go around the room and say our names. I will start. My name is … (All members say their names.) Thank you. Today we are interested in hearing what you all think about the Mana Maali website as a way for Indian college students like yourselves to learn more about mental health and gain useful techniques to manage stress, anxiety or depression. Before we start, we want to go over some rules for this discussion.”

Confidentiality: “The first rule is confidentiality. Everything discussed in this room stays in the room, as some information shared may be considered personal or sensitive. If you are talking about someone other than yourself, please do not use his or her name. So, instead of saying, ‘Bona (use name of co-researcher) at my school told me that she experiences anxiety,’ you can say, ‘Someone at my school told me …’”

- Respect: “We want to make sure respect is demonstrated by and for everyone in this group.”

- No interrupting someone when they are talking

- No talking over someone else

- If your thoughts conflict with those of another participant, please share them in a respectful way. It is OK to have different opinions, but please be considerate of other people’s opinions.

- Others: “Also, please remember … ”

- Only share what you feel comfortable sharing. You do not have to share anything you do not want to. You can stop us at any time and ask a question if you do not understand anything we bring up.

- You can leave the group at any time if you choose to do so.

Questions: “Does anyone have any questions before we begin?”

USABILITY

- A “Did you find the website to be easy to use?”

- If YES:

- “What about the website made it easy to use?”

- “Which feature did you find the most helpful?”

- “Were you able to navigate to find what you needed easily?”

- If NO:

- “What about the website did you find challenging?”

- “Which features do you think worsened your experience?”

- B “Did you find the layout and color scheme of the website pleasing?”

- If YES:

- “What did you find most appealing?”

- If NO:

- “What did you find most unappealing?”

- “Do you think it affected your willingness to use the program?”

- C “What would you change about the website?”

ACCEPTABILITY

- D “What did you think of the content included in the sessions?”

- If limited response: “What did you think of the length of each session?”

- If too long:

- “What aspects did you find unnecessary?”

- If too short:

- “What else do you think should be added?”

- “What do you think about combining sessions together?”

- E “Was the language easy to understand?”

- If YES:

- “Were the illustrations or videos helpful in understanding the content?”

- If NO:

- “What would make the content clearer?”

- If limited response, mention the following examples:

- Cut down on wordiness

- Use fewer technical terms

- Include more illustrations or videos

- F “Did you enjoy practicing the techniques/activities?”

- If YES:

- “Which one did you find the most helpful?”

- “Do you think these techniques could be used regularly if needed?”

- “Did you find the examples included helpful and relevant?”

- If NO:

- “Why did you not like practicing the techniques?”

- If limited response, mention the following examples:

- Not helpful

- Takes too long

- Too difficult

- Did not understand how to practice the techniques

- G “Did you find the material relevant to you (or a college student who may struggle with anxiety)?”

- If YES:

- “How so?”

- “Would you recommend this to a peer/friend?”

- If NO:

- “Why not?”

- “What kind of material would you like to see included instead or additionally?”

FEASIBILITY

- H “Do you think Indian college students could be reliably recruited and motivated to use this mental health website, as it is designed, to learn techniques to manage their anxiety, stress, or depression?”

- If limited response: “Do you believe mental health is a concern for your peers?”

- If YES:

- “Do you think college students with mental health problems ask for help if they need it?”

- “Do you think your peers would find using this website an acceptable way of getting help?”

- If NO:

- “Why do you think mental health is not a concern for your peers?”

- I “What do you think might get in the way of accessing mental health support for your peers?”

- J “Which, if any, barriers to mental healthcare do you think this mental health website reduces?”

- K “Do you think many people in your college would commit to use this website regularly?”

- L “Do you think students would engage with the website long enough to actually learn the techniques?”

OTHER QUESTIONS

- M “Is there anything else about the program that we did not ask about that you want to make sure we know before we end this discussion? Any other questions you think should have been asked?”

CONCLUDING AND DEBRIEFING

“Thank you for being a part of this focus group! We really appreciate what you shared with us. We will use your feedback to better understand how a program like this can be developed to increase access to mental health support for Indian college students. I want to remind everyone about the confidentiality rule that was discussed at the beginning of this focus group. Please make sure that what we have discussed here today stays in this room. I will stick around afterwards for any questions or concerns you may have. Thank you again for being part of this discussion!”

CODING STRUCTURE

Usability

Usability—technology—positive: Any comments indicating there were no issues related to technology development, bugs, glitches, formatting issues, etc., for example, “The website supported the program really well.”

Usability—technology—negative: Any comments indicating there were issues related to technology development, bugs, glitches, formatting issues, etc., for example, “The website doesn’t show up properly on my phone.”

Usability—program navigation—positive: Any comments regarding easily becoming oriented to the webpage and easily being able to navigate to find the right tabs, links and pages. Organised architecture and interface. For example, “I could find what I needed easily.”

Usability—program navigation—negative: Any comments regarding difficulty becoming oriented to the webpage and being able to navigate to find the right tabs, links and pages. Disorganised architecture and interface. For example, “It was difficult to find the specific technique I wanted.”

Usability—colour scheme—positive: Any comments regarding the aesthetics of the colour scheme used for the website being appropriate or pleasing.

Usability—colour scheme—negative: Any comments regarding the aesthetics of the colour scheme used for the website being inappropriate or displeasing.

Feasibility

Feasibility—time—positive: Any positive comments related to the length of time of each module or the overall program.

Feasibility—time—negative: Any negative comments related to the length of time of each module or the overall program.

Feasibility—program delivery—positive: Any comments related to the proper functioning of the program in the environment of use (i.e., university campus) or for users (i.e., students).

Feasibility—program delivery—challenges: Any comments related to the improper functioning of the program in the environment of use (i.e., university campus) or for users (i.e., students).

Acceptability

Acceptability—program design—positive: Any comments endorsing the organisation and flow of content and program features, or how dynamic it is. For example, “I liked that the introduction to anxiety came before we learned specific techniques,” or “I like that we were told to practice just one technique for the entire week.” Note: This code might overlap with Acceptability—engagement—positive.

Acceptability—program design—negative: Any comments critiquing the organisation and flow of content and program features. For example, “I would put the worry time technique before learning about meditation.”

Acceptability—content—appropriate: Any comments identifying the topics covered and information included as appropriate for the intended target audience (Indian college students). This covers relevancy of the program content.

Acceptability—content—inappropriate: Any comments identifying the topics covered and information included as inappropriate for the intended target audience (Indian college students). Or any suggestions to modify or change information or topics covered in the program. Or any comments indicating something is confusing.

Acceptability—feature—positive: Any comments indicating a specific feature was beneficial.

Acceptability—feature—negative: Any comments indicating a specific feature was not beneficial.

Acceptability—language—appropriate: Any comments indicating the language used was appropriate and/or easy to understand.

Acceptability—language—inappropriate: Any comments indicating the language used was inappropriate and/or difficult to understand. For example, “I could not comprehend the text.”

Acceptability—engagement—positive: Any positive comments related to the program’s ability to evoke or maintain interest, or keep users engaged and free from boredom.

Acceptability—engagement—challenges: Any comments related to the program’s inability to evoke or maintain interest, or keep users engaged and free from boredom.

Acceptability—videos/pictures—positive: Any comments indicating the videos and pictures were effective or engaging.

Acceptability—videos/pictures—challenges: Any comments expressing concerns about the appropriateness or effectiveness of videos or pictures. Or any suggestions for incorporating alternative forms of media into the program.

Acceptability—helpfulness—positive: Any comments indicating this program would be helpful for the designated audience, either individual students or the broader student population. Any positive comments indicating what a student can expect to gain from using this program.

Acceptability—helpfulness—negative: Any comments indicating this program would not be helpful for the designated audience, either individual students or the broader student population. Any comments indicating a lack of belief in the program’s benefits for students.

Acceptability—novelty of program—positive: Any comments indicating that the program content was novel to the user.

Acceptability—novelty of program—negative: Any comments indicating that the program content was *not* novel to the user.

Impact

Impact—effectiveness—positive: Any comments that indicate the program was actually beneficial to the person using it (vs. potentially beneficial to others who are in need).

Impact—effectiveness—negative: Any comments that indicate the program was not actually beneficial to the person using it (vs. potentially beneficial to others who are in need).

Other

Other—stigma of mental health: Any comments that reflect on the perceptions or stigma of mental health and the level to which it is prevalent or absent on campus.

Other—need for mental health resources: Any comments that reflect on the disparity of mental health resources on or off campus or the availability, access and visibility of existing mental health resources for students.

Other—adding new features: Any comments that suggest the addition of a new feature or aspect that is not already included in the program.

Other—adding new features—technique: Any suggestions about adding a specific technique to the program (e.g., diary, etc.).

Other—adding new features—media: Any suggestions about adding pictures or videos to the program.

Other—adding new features—content: Any suggestions about adding specific information to the program.

Other—adding new features—program design: Any suggestions about modifying the ordering/flow of content in the program (e.g., make the first sessions shorter).

Other—remove features: Any comments about not liking a feature or suggesting removing a feature or aspect that is already included in the program.

Other—sharing/venting: Any comments revealing personal experiences with mental health or frustrations regarding mental health.
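The code labels above follow a consistent pattern (a domain, one or more sub-themes, and in most cases a valence term), so coded excerpts could be tallied programmatically. The following is a minimal sketch under stated assumptions: the `Code` type, the `parse_code` helper, the set of valence terms and the example excerpt labels are all hypothetical and are not part of the study’s analysis procedure.

```python
from collections import Counter
from typing import NamedTuple, Optional

SEPARATOR = "\u2014"  # the dash character that separates levels in the code labels
# Valence terms observed in the coding structure (assumed to be exhaustive).
VALENCES = {"positive", "negative", "appropriate", "inappropriate", "challenges"}


class Code(NamedTuple):
    domain: str             # e.g. "Usability"
    subtheme: str           # e.g. "technology"
    valence: Optional[str]  # e.g. "positive"; None when the label has no valence


def parse_code(label: str) -> Code:
    """Split a code label into its domain, sub-theme and optional valence."""
    parts = [part.strip() for part in label.split(SEPARATOR)]
    if len(parts) < 2 or not all(parts):
        raise ValueError(f"not a valid code label: {label!r}")
    domain, rest = parts[0], parts[1:]
    valence = None
    # Treat the final level as a valence only if it is a known valence term.
    if len(rest) > 1 and rest[-1].lower() in VALENCES:
        valence = rest.pop().lower()
    return Code(domain, " / ".join(rest), valence)


# Hypothetical codes assigned to a handful of excerpts (not study data).
assigned = [
    "Usability—technology—negative",
    "Usability—program navigation—positive",
    "Acceptability—helpfulness—positive",
    "Other—adding new features—technique",
]
tally = Counter(parse_code(label).domain for label in assigned)
print(tally)
```

Keeping the valence separate from the sub-theme makes it straightforward to compare, for example, positive and negative comments within a single domain.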

Representative Selection of Qualitative Feedback and Associated Codes

Now that you have used it, what do you think about the website and the program in general?

| Response | Relevant codes |
|---|---|
| | |

What is one thing that you would want to change about the website or the program?

| Response | Relevant codes |
|---|---|
| | |

Any particular technique or content that you liked a lot or found very helpful?

| Response | Relevant codes |
|---|---|
| | |

I also heard a few of you say that sometimes it was text heavy. Was there anything you found unnecessary?

| Response | Relevant codes |
|---|---|
| | |

So after using this program, would you recommend this to someone who has mental health issues?

| Response | Relevant codes |
|---|---|
| | |

Is there anything that you personally learned, something you will take away, from the program?

| Response | Relevant codes |
|---|---|
| | |

Is there anything else that you would like to share?

| Response | Relevant codes |
|---|---|
| | |

REFERENCES

- Bangor A, Kortum PT, & Miller JT (2008). An empirical evaluation of the system usability scale. International Journal of Human-Computer Interaction, 24(6), 574–594. 10.1080/10447310802205776

- Banu P, Deb S, Vardhan V, & Rao T (2015). Perceived academic stress among university students across gender, academic streams, semester and academic performance. Indian Journal of Health and Wellbeing, 6, 412–416.

- Ben-Zeev D, Brenner CJ, Begale M, Duffecy J, Mohr DC, & Mueser KT (2014). Feasibility, acceptability, and preliminary efficacy of a smartphone intervention for schizophrenia. Schizophrenia Bulletin, 40(6), 1244–1253. 10.1093/schbul/sbu033

- Bereza BG, Machado M, & Einarson TR (2009). Systematic review and quality assessment of economic evaluations and quality-of-life studies related to generalized anxiety disorder. Clinical Therapeutics, 31(6), 1279–1308. 10.1016/j.clinthera.2009.06.004

- Berry N, Lobban F, Emsley R, & Bucci S (2016). Acceptability of interventions delivered online and through mobile phones for people who experience severe mental health problems: A systematic review. Journal of Medical Internet Research, 18(5), e121. 10.2196/jmir.5250

- Bradley EH, Curry LA, & Devers KJ (2007). Qualitative data analysis for health services research: Developing taxonomy, themes, and theory. Health Services Research, 42(4), 1758–1772. 10.1111/j.1475-6773.2006.00684.x

- Brooke J (1996). SUS: A quick and dirty usability scale. In Jordan PW, Thomas B, Weerdmeester BA, & McClelland IL (Eds.), Usability evaluation in industry (pp. 189–194). London, U.K.: Taylor & Francis.

- Davies EB, Morriss R, & Glazebrook C (2014). Computer-delivered and web-based interventions to improve depression, anxiety, and psychological well-being of university students: A systematic review and meta-analysis. Journal of Medical Internet Research, 16(5), e130. 10.2196/jmir.3142

- Drotar D, & Lemanek K (2001). Steps toward a clinically relevant science of interventions in pediatric settings. Journal of Pediatric Psychology, 26, 385–394. 10.1093/jpepsy/26.7.385

- Fairburn CG, & Patel V (2014). The global dissemination of psychological treatments: A road map for research and practice. American Journal of Psychiatry, 171, 495–498. 10.1176/appi.ajp.2013.13111546

- Fairburn CG, & Patel V (2017). The impact of digital technology on psychological treatments and their dissemination. Behaviour Research and Therapy, 88, 19–25. 10.1016/j.brat.2016.08.012

- GSMA. (2019). The mobile economy. Retrieved from GSM Association website https://www.gsma.com/r/mobileeconomy/.

- Haaga DAF (2000). Introduction to the special section on stepped care models in psychotherapy. Journal of Consulting and Clinical Psychology, 68(4), 547–548. 10.1037/0022-006X.68.4.547

- Kanuri N, Newman MG, Ruzek JI, Kuhn E, Manjula M, Jones M, … Taylor CB (2015a). The feasibility, acceptability, and efficacy of delivering internet-based self-help and guided self-help interventions for generalized anxiety disorder to Indian university students: Design of a randomized controlled trial. JMIR Research Protocols, 4(4), e136. 10.2196/resprot.4783

- Kanuri N, Taylor CB, Cohen JM, & Newman MG (2015b). Classification models for subthreshold generalized anxiety disorder in a college population: Implications for prevention. Journal of Anxiety Disorders, 34, 43–52. 10.1016/j.janxdis.2015.05.011

- Kessler RC, Angermeyer M, Anthony JC, De Graaf R, Demyttenaere K, Gasquet I, … Ustun TB (2007). Lifetime prevalence and age-of-onset distributions of mental disorders in the World Health Organization’s World Mental Health Survey Initiative. World Psychiatry: Official Journal of the World Psychiatric Association (WPA), 6(3), 168–176.

- Khan MN (2018). Smartphone dependency, hedonism and purchase behaviour: Implications for Digital India initiatives. Indian Council of Social Science Research, The Ministry of Human Resource Development. New Delhi, India: Unpublished raw data.

- Lewis C, Pearce J, & Bisson JI (2012). Efficacy, cost-effectiveness and acceptability of self-help interventions for anxiety disorders: Systematic review. The British Journal of Psychiatry: the Journal of Mental Science, 200(1), 15–21. 10.1192/bjp.bp.110.084756

- Mehrotra S, Sudhir P, Rao G, Thirthalli J, & Srikanth TK (2018). Development and pilot testing of an internet-based self-help intervention for depression for Indian users. Behavioral Sciences (Basel), 8, 36. 10.3390/bs8040036

- Menon V, Sarkar S, & Kumar S (2015). Barriers to healthcare seeking among medical students: A cross sectional study from South India. Postgraduate Medical Journal, 91, 477–482. 10.1136/postgradmedj-2015-133233

- Mohr DC, Weingardt KR, Reddy M, & Schueller SM (2017). Three problems with current digital mental health research … and three things we can do about them. Psychiatric Services, 68(5), 427–429. 10.1176/appi.ps.201600541

- Murthy P, & Isaac MK (2016). Five-year plans and once-in-a-decade interventions: Need to move from filling gaps to bridging chasms in mental health care in India. Indian Journal of Psychiatry, 58(3), 253–258. 10.4103/0019-5545.192010

- Naslund JA, Aschbrenner KA, Araya R, Marsch LA, Unützer J, Patel V, & Bartels SJ (2017). Digital technology for treating and preventing mental disorders in low-income and middle-income countries: A narrative review of the literature. Lancet Psychiatry, 4(6), 486–500. 10.1016/S2215-0366(17)30096-2

- Newman MG, & Borkovec TD (1995). Cognitive-behavioral treatment of generalized anxiety disorder. Clinical Psychologist, 48(4), 5–7.

- Olatunji BO, Cisler JM, & Deacon BJ (2010). Efficacy of cognitive behavioral therapy for anxiety disorders: A review of meta-analytic findings. Psychiatric Clinics of North America, 33(3), 557–577. 10.1016/j.psc.2010.04.002

- Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, … Hensley M (2011). Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health, 38(2), 65–76. 10.1007/s10488-010-0319-7

- Richards D, Richardson T, Timulak L, & McElvaney J (2015). The efficacy of internet-delivered treatment for generalized anxiety disorder: A systematic review and meta-analysis. Internet Interventions, 2(3), 272–282. 10.1016/j.invent.2015.07.003

- Sabha R (2015). Starred Question No. 253 to be answered on the 22nd December, 2015: Infrastructure to treat mental illness. Department of Health and Family Welfare, Ministry of Health and Family Welfare, Government of India. [Last access on 2018 May 24]. Retrieved from http://164.100.47.234/question/annex/237/As253.pdf.

- Sahoo S, & Khess CR (2010). Prevalence of depression, anxiety, and stress among young male adults in India. The Journal of Nervous and Mental Disease, 198(12), 901–904. 10.1097/nmd.0b013e3181fe75dc

- Sharma P, Mehta M, & Sagar R (2016). Efficacy of transdiagnostic cognitive-behavioral group therapy for anxiety disorders and headache in adolescents. Journal of Anxiety Disorders, 46, 78–84. 10.1016/j.janxdis.2016.11.001

- Stoll RD, Pina AA, Gary K, & Amresh A (2017). Usability of a smartphone application to support the prevention and intervention of anxiety in youth. Cognitive and Behavioral Practice, 24(4), 393–404. 10.1016/j.cbpra.2016.11.002

- Sunitha S, & Gururaj G (2014). Health behaviours & problems among young people in India: Cause for concern & call for action. The Indian Journal of Medical Research, 140(2), 185–208.

- Wingood GM, & DiClemente RJ (2008). The ADAPT-ITT model: A novel method of adapting evidence-based HIV interventions. Journal of Acquired Immune Deficiency Syndromes, 47, S40–S46. 10.1097/QAI.0b013e3181605df1

- Yeager CM, Shoji K, Luszczynska A, & Benight CC (2018). Engagement with a trauma recovery internet intervention explained with the health action process approach (HAPA): Longitudinal study. JMIR Mental Health, 5(2), e29. 10.2196/mental.9449

- Yoman J, Hong N, Kanuri N, & Stanick C (2018). The development of a treatment satisfaction and acceptability measure. Unpublished manuscript.

- Yonkers KA, Bruce SE, Dyck IR, & Keller MB (2003). Chronicity, relapse, and illness –course of panic disorder, social phobia, and generalized anxiety disorder: Findings in men and women from 8 years of follow-up. Depression and Anxiety, 17(3), 173–179. 10.1002/da.10106