Abstract

Improving the reproducibility of biomedical research is a major challenge. Transparent and accurate reporting is vital to this process; it allows readers to assess the reliability of the findings and repeat or build upon the work of other researchers. The ARRIVE guidelines (Animal Research: Reporting In Vivo Experiments) were developed in 2010 to help authors and journals identify the minimum information necessary to report in publications describing in vivo experiments. Despite widespread endorsement by the scientific community, the impact of ARRIVE on the transparency of reporting in animal research publications has been limited. We have revised the ARRIVE guidelines to update them and facilitate their use in practice. The revised guidelines are published alongside this paper. This explanation and elaboration document was developed as part of the revision. It provides further information about each of the 21 items in ARRIVE 2.0, including the rationale and supporting evidence for their inclusion in the guidelines, elaboration of details to report, and examples of good reporting from the published literature. This document also covers advice and best practice in the design and conduct of animal studies to support researchers in improving standards from the start of the experimental design process through to publication.

The NC3Rs developed the ARRIVE guidelines in 2010 to help authors and journals identify the minimum information necessary to report in publications describing in vivo experiments. This article explains the rationale behind each item in the revised and updated ARRIVE guidelines 2019, clarifying key concepts and providing illustrative examples.

See S1 Annotated byline for individual authors’ positions at the time this article was submitted.

See S1 Annotated References for further context on the works cited in this article.

Introduction

Transparent and accurate reporting is essential to improve the reproducibility of scientific research; it enables others to scrutinise the methodological rigour of the studies, assess how reliable the findings are, and repeat or build upon the work.

However, evidence shows that the majority of publications fail to include key information and there is significant scope to improve the reporting of studies involving animal research [1–4]. To that end, the UK National Centre for the 3Rs (NC3Rs) published the ARRIVE (Animal Research: Reporting In Vivo Experiments) guidelines in 2010. The guidelines are a checklist of information to include in a manuscript to ensure that publications contain enough information to add to the knowledge base [5]. The guidelines have received widespread endorsement from the scientific community and are currently recommended by more than a thousand journals, with further endorsement from research funders, universities, and learned societies worldwide.

Studies measuring the impact of ARRIVE on the quality of reporting have produced mixed results [6–11], and there is evidence that in vivo scientists are not sufficiently aware of the importance of reporting the information covered in the guidelines and fail to appreciate the relevance to their work or their research field [12].

As a new international working group—the authors of this publication—we have revised the guidelines to update them and facilitate their uptake; the ARRIVE guidelines 2.0 are published alongside this paper [13]. We have updated the recommendations in line with current best practice, reorganised the information, and classified the items into two sets. The ARRIVE Essential 10 constitute the minimum reporting requirement, and the Recommended Set provides further context to the study described. Although reporting both sets is best practice, an initial focus on the most critical issues helps authors, journal staff, editors, and reviewers use the guidelines in practice and allows a pragmatic implementation. Once the Essential 10 are consistently reported in manuscripts, items from the Recommended Set can be added to journal requirements over time until all 21 items are routinely reported in all manuscripts. Full methodology for the revision and the allocation of items into sets is described in the accompanying publication [13].

A key aspect of the revision was to develop this explanation and elaboration document to provide background and rationale for each of the 21 items of ARRIVE 2.0. Here, we present additional guidance for each item and subitem, explain the importance of reporting this information in manuscripts that describe animal research, elaborate on what to report, and provide supporting evidence. The guidelines apply to all areas of bioscience research involving living animals. That includes mammalian species as well as model organisms such as Drosophila or Caenorhabditis elegans. Each item is equally relevant to manuscripts centred around a single animal study and broader-scope manuscripts describing in vivo observations along with other types of experiments. The exact type of detail to report, however, might vary between species and experimental setup; this is acknowledged in the guidance provided for each item.

We recognise that the purpose of the research influences the design of the study. Hypothesis-testing research evaluates specific hypotheses, using rigorous methods to reduce the risk of bias and a statistical analysis plan that has been defined before the study starts. In contrast, exploratory research often investigates many questions simultaneously without adhering to strict standards of rigour; this flexibility is used to develop or test novel methods and generate theories and hypotheses that can be formally tested later. Both study types make valuable contributions to scientific progress. Transparently reporting the purpose of the research and the level of rigour used in the design, execution, and analysis of the study enables readers to decide how to use the research, whether the findings are groundbreaking and need to be confirmed before building on them, or whether they are robust enough to be applied to other research settings.

To contextualise the importance of reporting information described in the Essential 10, this document also covers experimental design concepts and best practices. This has two main purposes: First, it helps authors understand the relevance of this information for readers to assess the reliability of the reported results, thus encouraging thorough reporting. Second, it supports the implementation of best practices in the design and conduct of animal research. Consulting this document at the start of the process when planning an in vivo experiment will enable researchers to make the best use of it, implement the advice on study design, and prepare for the information that will need to be collected during the experiment to report the study in adherence with the guidelines.

To ensure that the recommendations are as clear and useful as possible to the target audience, this document was road tested alongside the revised guidelines with researchers preparing manuscripts describing in vivo research [13]. Each item is written as a self-contained section, enabling authors to refer to particular items independently, and a glossary (Box 1) explains common statistical terms. Each subitem is also illustrated with examples of good reporting from the published literature. Explanations and examples are also available from the ARRIVE guidelines website: https://www.arriveguidelines.org.

Box 1. Glossary

Bias: The over- or underestimation of the true effect of an intervention. Bias is caused by inadequacies in the design, conduct, or analysis of an experiment, resulting in the introduction of error.

Descriptive and inferential statistics: Descriptive statistics are used to summarise the data. They generally include a measure of central tendency (e.g., mean or median) and a measure of spread (e.g., standard deviation or range). Inferential statistics are used to make generalisations about the population from which the samples are drawn. Hypothesis tests such as ANOVA, Mann-Whitney, or t tests are examples of inferential statistics.

Effect size: Quantitative measure of differences between groups, or strength of relationships between variables.

Experimental unit: Biological entity subjected to an intervention independently of all other units, such that it is possible to assign any two experimental units to different treatment groups. Sometimes known as unit of randomisation.

External validity: Extent to which the results of a given study enable application or generalisation to other studies, study conditions, animal strains/species, or humans.

False negative: Statistically nonsignificant result obtained when the alternative hypothesis (H1) is true. In statistics, it is known as the type II error.

False positive: Statistically significant result obtained when the null hypothesis (H0) is true. In statistics, it is known as the type I error.

Independent variable: Variable that either the researcher manipulates (treatment, condition, time) or is a property of the sample (sex) or a technical feature (batch, cage, sample collection) that can potentially affect the outcome measure. Independent variables can be scientifically interesting, or nuisance variables. Also known as predictor variable.

Internal validity: Extent to which the results of a given study can be attributed to the effects of the experimental intervention, rather than some other, unknown factor(s) (e.g., inadequacies in the design, conduct, or analysis of the study introducing bias).

Nuisance variable: Variables that are not of primary interest but should be considered in the experimental design or the analysis because they may affect the outcome measure and add variability. They become confounders if, in addition, they are correlated with an independent variable of interest, as this introduces bias. Nuisance variables should be considered in the design of the experiment (to prevent them from becoming confounders) and in the analysis (to account for the variability and sometimes to reduce bias). For example, nuisance variables can be used as blocking factors or covariates.

Null and alternative hypotheses: The null hypothesis (H0) is that there is no effect, such as a difference between groups or an association between variables. The alternative hypothesis (H1) postulates that an effect exists.

Outcome measure: Any variable recorded during a study to assess the effects of a treatment or experimental intervention. Also known as dependent variable, response variable.

Power: For a predefined, biologically meaningful effect size, the probability that the statistical test will detect the effect if it exists (i.e., the null hypothesis is rejected correctly).

Sample size: Number of experimental units per group, also referred to as n.

Definitions are adapted from [14,15] and placed in the context of animal research.

ARRIVE Essential 10

The ARRIVE Essential 10 (Box 2) constitute the minimum reporting requirement to ensure that reviewers and readers can assess the reliability of the findings presented. There is no ranking within the set; items are presented in a logical order.

Box 2. ARRIVE Essential 10

1. Study design

2. Sample size

3. Inclusion and exclusion criteria

4. Randomisation

5. Blinding

6. Outcome measures

7. Statistical methods

8. Experimental animals

9. Experimental procedures

10. Results

Item 1. Study design

For each experiment, provide brief details of study design including:

1a. The groups being compared, including control groups. If no control group has been used, the rationale should be stated.

Explanation. The choice of control or comparator group is dependent on the experimental objective. Negative controls are used to determine whether a difference between groups is caused by the intervention (e.g., wild-type animals versus genetically modified animals, placebo versus active treatment, sham surgery versus surgical intervention). Positive controls can be used to support the interpretation of negative results or determine if an expected effect is detectable.

It may not be necessary to include a separate control with no active treatment if, for example, the experiment aims to compare a treatment administered by different methods (e.g., intraperitoneal administration versus oral gavage) or animals that are used as their own control in a longitudinal study. A pilot study, such as one designed to test the feasibility of a procedure, might also not require a control group.

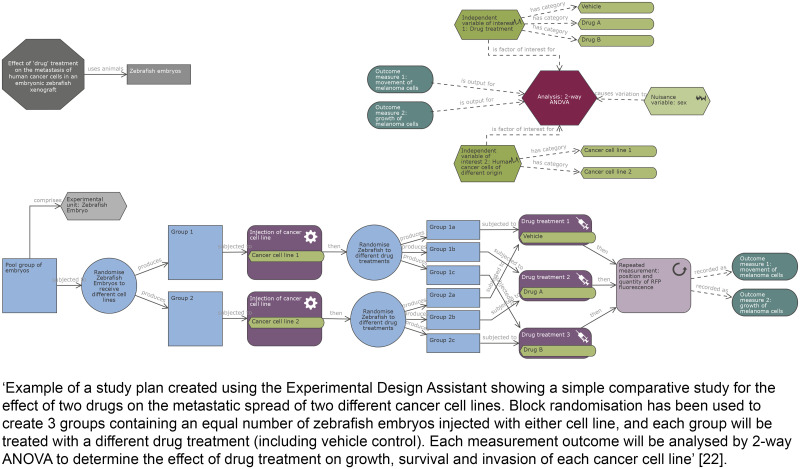

For complex study designs, a visual representation is more easily interpreted than a text description, so a timeline diagram or flowchart is recommended. Diagrams facilitate the identification of which treatments and procedures were applied to specific animals or groups of animals and at what point in the study these were performed. They also help to communicate complex design features such as whether factors are crossed or nested (hierarchical/multilevel designs), blocking (to reduce unwanted variation, see Item 4. Randomisation), or repeated measurements over time on the same experimental unit (repeated measures designs); see [16–18] for more information on different design types. The Experimental Design Assistant (EDA) is a platform to support researchers in the design of in vivo experiments; it can be used to generate diagrams to represent any type of experimental design [19].

For each experiment performed, clearly report all groups used. Selectively excluding some experimental groups (for example, because the data are inconsistent or conflict with the narrative of the paper) is misleading and should be avoided [20]. Ensure that test groups, comparators, and controls (negative or positive) can be identified easily. State clearly if the same control group was used for multiple experiments or if no control group was used.

Examples

Subitem 1a—Example 1

‘The DAV1 study is a one-way, two-period crossover trial with 16 piglets receiving amoxicillin and placebo at period 1 and only amoxicillin at period 2. Amoxicillin was administered orally with a single dose of 30 mg.kg⁻¹. Plasma amoxicillin concentrations were collected at same sampling times at each period: 0.5, 1, 1.5, 2, 4, 6, 8, 10 and 12 h’ [21].

Subitem 1a—Example 2

Fig 1. Reproduced from reference [22].

1b. The experimental unit (e.g., a single animal, litter, or cage of animals).

Explanation. Within a design, biological and technical factors will often be organised hierarchically, such as cells within animals and mitochondria within cells, or cages within rooms and animals within cages. Such hierarchies can make determining the sample size difficult (is it the number of animals, cells, or mitochondria?). The sample size is the number of experimental units per group. The experimental unit is defined as the biological entity subjected to an intervention independently of all other units, such that it is possible to assign any two experimental units to different treatment groups. It is also sometimes called the unit of randomisation. In addition, the experimental units should not influence each other on the outcomes that are measured.

Commonly, the experimental unit is the individual animal, each independently allocated to a treatment group (e.g., a drug administered by injection). However, the experimental unit may be the cage or the litter (e.g., a diet administered to a whole cage, or a treatment administered to a dam and investigated in her pups), or it could be part of the animal (e.g., different drug treatments applied topically to distinct body regions of the same animal). Animals may also serve as their own controls, receiving different treatments separated by washout periods; here, the experimental unit is an animal for a period of time. There may also be multiple experimental units in a single experiment, such as when a treatment is given to a pregnant dam and then the weaned pups are allocated to different diets [23]. See [17,24,25] for further guidance on identifying experimental units.

Conflating experimental units with subsamples or repeated measurements can lead to artificial inflation of the sample size. For example, measurements from 50 individual cells from a single mouse represent n = 1 when the experimental unit is the mouse. The 50 measurements are subsamples and provide an estimate of measurement error and so should be averaged or used in a nested analysis. Reporting n = 50 in this case is an example of pseudoreplication [26]. It underestimates the true variability in a study, which can lead to false positives and invalidate the analysis and resulting conclusions [26,27]. If, however, each cell taken from the mouse is then randomly allocated to different treatments and assessed individually, the cell might be regarded as the experimental unit.
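The averaging step described above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed analysis: the measurements, the mouse identifiers, and the helper name `average_per_unit` are all hypothetical, and it assumes the mouse is the experimental unit. A nested (multilevel) analysis is an alternative to averaging.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical data: (mouse_id, group, value), with several cells measured per mouse.
measurements = [
    ("m1", "control", 10.2), ("m1", "control", 9.8), ("m1", "control", 10.0),
    ("m2", "control", 11.1), ("m2", "control", 10.9),
    ("m3", "treated", 12.4), ("m3", "treated", 12.6), ("m3", "treated", 12.5),
    ("m4", "treated", 13.0), ("m4", "treated", 13.2),
]

def average_per_unit(rows):
    """Collapse subsamples to one value per experimental unit (here, the mouse)."""
    values_by_unit = defaultdict(list)
    group_of = {}
    for unit, group, value in rows:
        values_by_unit[unit].append(value)
        group_of[unit] = group
    return {unit: (group_of[unit], mean(vals)) for unit, vals in values_by_unit.items()}

unit_means = average_per_unit(measurements)

# n per group counts mice, not cells.
n_per_group = defaultdict(int)
for group, _ in unit_means.values():
    n_per_group[group] += 1

print(dict(n_per_group))
```

Here the 10 cell-level measurements reduce to n = 2 mice per group; reporting n = 5 per group would be pseudoreplication.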

Clearly indicate the experimental unit for each experiment so that the sample sizes and statistical analyses can be properly evaluated.

Examples

Subitem 1b—Example 1

‘The present study used the tissues collected at E15.5 from dams fed the 1X choline and 4X choline diets (n = 3 dams per group, per fetal sex; total n = 12 dams). To ensure statistical independence, only one placenta (either male or female) from each dam was used for each experiment. Each placenta, therefore, was considered to be an experimental unit’ [28].

Subitem 1b—Example 2

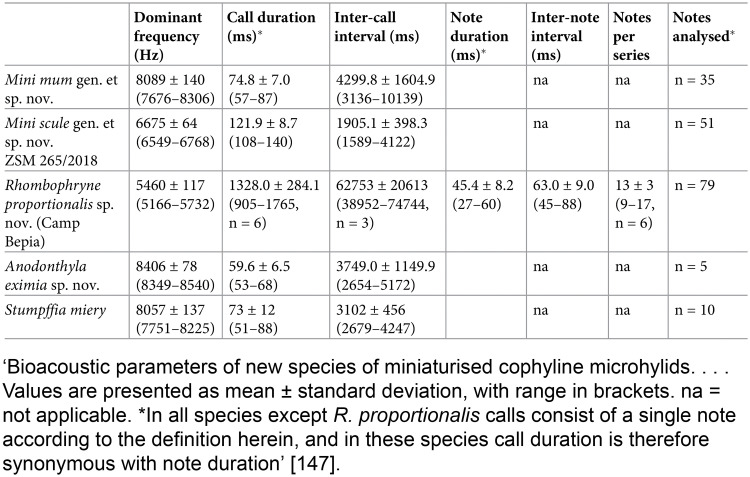

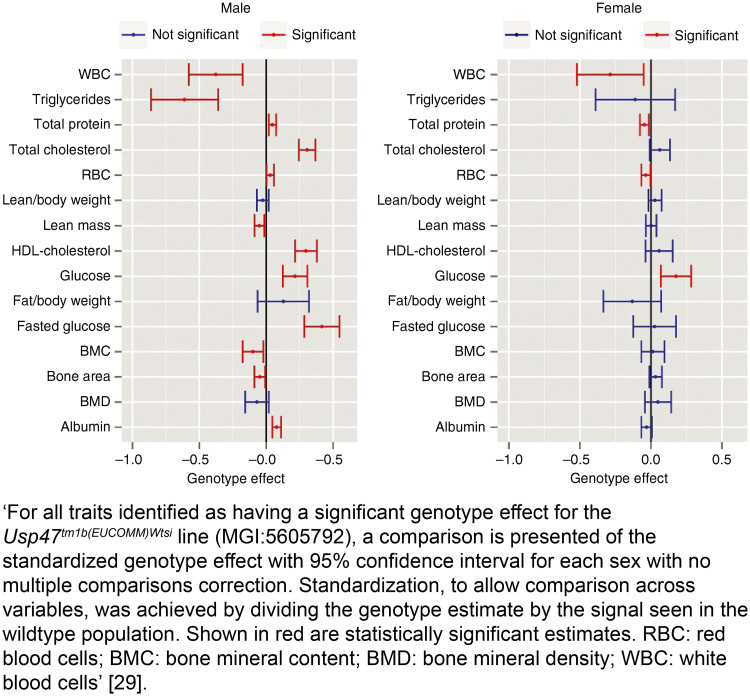

‘We have used data collected from high-throughput phenotyping, which is based on a pipeline concept where a mouse is characterized by a series of standardized and validated tests underpinned by standard operating procedures (SOPs)…. The individual mouse was considered the experimental unit within the studies’ [29].

Subitem 1b—Example 3

‘Fish were divided in two groups according to weight (0.7–1.2 g and 1.3–1.7 g) and randomly stocked (at a density of 15 fish per experimental unit) in 24 plastic tanks holding 60 L of water’ [30].

Subitem 1b—Example 4

‘In the study, n refers to number of animals, with five acquisitions from each [corticostriatal] slice, with a maximum of three slices obtained from each experimental animal used for each protocol (six animals each group)’ [31].

Item 2. Sample size

2a. Specify the exact number of experimental units allocated to each group, and the total number in each experiment. Also indicate the total number of animals used.

Explanation. The sample size relates to the number of experimental units in each group at the start of the study and is usually represented by n (see Item 1. Study design for further guidance on identifying and reporting experimental units). This information is crucial to assess the validity of the statistical model and the robustness of the experimental results.

The sample size in each group at the start of the study may be different from the n numbers in the analysis (see Item 3. Inclusion and exclusion criteria); this information helps readers identify attrition or if there have been exclusions and in which group they occurred. Reporting the total number of animals used in the study is also useful to identify whether any were reused between experiments.

Report the exact value of n per group and the total number in each experiment (including any independent replications). If the experimental unit is not the animal, also report the total number of animals to help readers understand the study design. For example, in a study investigating diet using cages of animals housed in pairs, the number of animals is double the number of experimental units.
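As a small illustration of the paired-housing example, the sketch below reports n per group alongside the total number of experimental units and animals. The group names and numbers are hypothetical; it assumes the cage is the experimental unit and every cage holds two animals.

```python
# Hypothetical diet study: the cage is the experimental unit, two animals per cage.
cages_per_group = {"control diet": 8, "test diet": 8}
animals_per_cage = 2

total_units = sum(cages_per_group.values())
total_animals = total_units * animals_per_cage

for group, n in cages_per_group.items():
    print(f"{group}: n = {n} cages ({n * animals_per_cage} animals)")
print(f"Total: {total_units} experimental units, {total_animals} animals")
```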

Example

Subitem 2a—Example 1

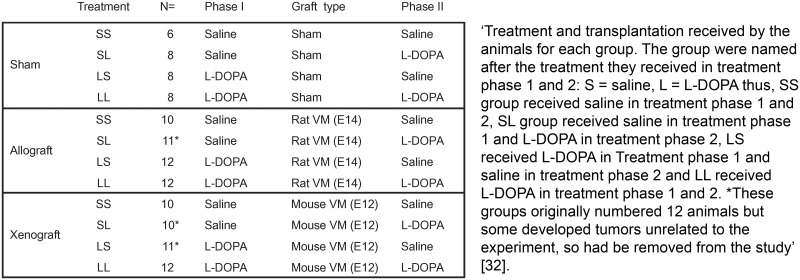

Fig 2. Reproduced from reference [32].

2b. Explain how the sample size was decided. Provide details of any a priori sample size calculation, if done.

Explanation. For any type of experiment, it is crucial to explain how the sample size was determined. For hypothesis-testing experiments, in which inferential statistics are used to estimate the size of the effect and to determine the weight of evidence against the null hypothesis, the sample size needs to be justified to ensure experiments are of an optimal size to test the research question [33,34] (see Item 13. Objectives). Sample sizes that are too small (i.e., underpowered studies) produce inconclusive results, whereas sample sizes that are too large (i.e., overpowered studies) raise ethical issues over unnecessary use of animals and may produce trivial findings that are statistically significant but not biologically relevant [35]. Low power has three effects: first, within the experiment, real effects are more likely to be missed; second, when an effect is detected, this will often be an overestimation of the true effect size [24]; and finally, when low power is combined with publication bias, there is an increase in the false positive rate in the published literature [36]. Consequently, low-powered studies contribute to the poor internal validity of research and risk wasting animals used in inconclusive research [37].
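The first two effects of low power can be illustrated with a small simulation. The sketch below is a simplified illustration under stated assumptions, not an analysis method from the guidelines: it uses a two-sided z test with a known standard deviation (a real analysis would more likely use a t test), and the function name, effect size, and group size are hypothetical.

```python
import random
from statistics import NormalDist, mean

def simulate_significant_estimates(true_effect, sd, n_per_group, alpha=0.05,
                                   n_experiments=2000, seed=1):
    """Simulate two-group experiments and return the estimated effects from
    those that reached two-sided significance (z test, sd assumed known)."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    se = sd * (2 / n_per_group) ** 0.5  # standard error of the difference in means
    significant = []
    for _ in range(n_experiments):
        control = [rng.gauss(0.0, sd) for _ in range(n_per_group)]
        treated = [rng.gauss(true_effect, sd) for _ in range(n_per_group)]
        estimate = mean(treated) - mean(control)
        if abs(estimate) / se > z_crit:
            significant.append(estimate)
    return significant

n_experiments = 2000
true_effect = 0.5  # hypothetical true difference, in units of sd
hits = simulate_significant_estimates(true_effect, sd=1.0, n_per_group=5,
                                      n_experiments=n_experiments)

observed_power = len(hits) / n_experiments
mean_significant_effect = mean(abs(e) for e in hits)
print(f"Proportion of experiments detecting the effect: {observed_power:.2f}")
print(f"Mean |estimate| among significant results: {mean_significant_effect:.2f} "
      f"(true effect: {true_effect})")
```

With five units per group, most simulated experiments miss the effect. The estimates that do reach significance must exceed the critical difference (about 1.24 here), so they overstate the true effect of 0.5.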

Study design can influence the statistical power of an experiment, and the power calculation used needs to be appropriate for the design implemented. Statistical programmes to help perform a priori sample size calculations exist for a variety of experimental designs and statistical analyses, both freeware (web-based applets and functions in R) and commercial software [38–40]. Choosing the appropriate calculator or algorithm to use depends on the type of outcome measures and independent variables, and the number of groups. Consultation with a statistician is recommended, especially when the experimental design is complex or unusual.

When the experiment tests the effect of an intervention on the mean of a continuous outcome measure, the sample size can be calculated a priori, based on a mathematical relationship between the predefined, biologically relevant effect size, variability estimated from prior data, chosen significance level, power, and sample size (see Box 3 and [17,41] for practical advice). If you have used an a priori sample size calculation, report the following details (listed after Box 3):

Box 3. Information used in a power calculation

Sample size calculation is based on a mathematical relationship between the following parameters: effect size, variability, significance level, power, and sample size. Questions to consider are the following:

The primary objective of the experiment—What is the main outcome measure?

The primary outcome measure should be identified in the planning stage of the experiment; it is the outcome of greatest importance, which will answer the main experimental question.

The predefined effect size—What is a biologically relevant effect size?

The effect size is estimated as a biologically relevant change in the primary outcome measure between the groups under study. This can be informed by similar studies and involves scientists exploring what magnitude of effect would generate interest and would be worth taking forward into further work. In preclinical studies, the clinical relevance of the effect should also be taken into consideration.

What is the estimate of variability?

Estimates of variability can be obtained:

From data collected from a preliminary experiment conducted under identical conditions to the planned experiment, e.g., a previous experiment in the same laboratory, testing the same treatment under similar conditions on animals with the same characteristics;

From the control group in a previous experiment testing a different treatment;

From a similar experiment reported in the literature.

Significance threshold—What risk of a false positive is acceptable?

The significance level or threshold (α) is the probability of obtaining a false positive. If it is set at 0.05, then the risk of obtaining a false positive is 1 in 20 for a single statistical test. However, the threshold or the p-values will need to be adjusted in scenarios of multiple testing (e.g., by using a Bonferroni correction).

Power—What risk of a false negative is acceptable?

For a predefined, biologically meaningful effect size, the power (1 − β) is the probability that the statistical test will detect the effect if it genuinely exists (i.e., true positive result). A target power between 80% and 95% is normally deemed acceptable, which entails a risk of false negative between 5% and 20%.

Directionality—Will you use a one- or two-sided test?

The directionality of a test depends on the distribution of the test statistic for a given analysis. For tests based on t or z distributions (such as t tests), whether the data will be analysed using a one- or two-sided test relates to whether the alternative hypothesis is directional or not. An experiment with a directional (one-sided) alternative hypothesis can be powered and analysed with a one-sided test with the goal of maximising the sensitivity to detect this directional effect. Controversy exists within the statistics community on when it is appropriate to use a one-sided test [42]. The use of a one-sided test requires justification of why a treatment effect is only of interest when it is in a defined direction and why the investigators would treat a large effect in the unexpected direction no differently from a nonsignificant difference [43]. Following the use of a one-sided test, the investigator cannot then test for the possibility of missing an effect in the untested direction. Choosing a one-sided test for the sole purpose of attaining statistical significance is not appropriate.

Two-sided tests with a nondirectional alternative hypothesis are much more common and allow researchers to detect the effect of a treatment regardless of its direction.

Note that analyses such as ANOVA and chi-squared are based on asymmetrical distributions (F-distribution and chi-squared distribution) with only one tail. Therefore, these tests do not have a directionality option.

the analysis method (e.g., two-tailed Student t test with a 0.05 significance threshold)

the effect size of interest and a justification explaining why an effect size of that magnitude is relevant

the estimate of variability used (e.g., standard deviation) and how it was estimated

the power selected
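To show how the parameters in Box 3 combine, the sketch below uses a common normal-approximation formula for comparing the means of two equally sized groups: n per group ≈ 2(z₁₋α/₂ + z₁₋β)²σ²/δ², where δ is the effect size of interest and σ the standard deviation. This is an assumption-laden illustration rather than the method of any particular calculator: the function name `n_per_group` and the example values are hypothetical, and calculations based on the t distribution give slightly larger sample sizes. Anticipated attrition is not accounted for.

```python
import math
from statistics import NormalDist

def n_per_group(effect_size, sd, alpha=0.05, power=0.80, two_sided=True):
    """Approximate number of experimental units per group needed to compare
    two means (normal approximation, equal group sizes and variances)."""
    if effect_size <= 0 or sd <= 0:
        raise ValueError("effect_size and sd must be positive")
    if not (0 < alpha < 1 and 0 < power < 1):
        raise ValueError("alpha and power must lie between 0 and 1")
    std_normal = NormalDist()
    tail = alpha / 2 if two_sided else alpha
    z_alpha = std_normal.inv_cdf(1 - tail)  # significance threshold
    z_beta = std_normal.inv_cdf(power)      # power = 1 - beta
    n = 2 * ((z_alpha + z_beta) * sd / effect_size) ** 2
    return math.ceil(n)

# Effect size equal to one standard deviation, two-sided alpha = 0.05, 80% power.
print(n_per_group(effect_size=1.0, sd=1.0))  # 16

# Halving the effect size of interest roughly quadruples the sample size.
print(n_per_group(effect_size=0.5, sd=1.0))  # 63

# A Bonferroni-adjusted threshold for three comparisons needs more units per group.
print(n_per_group(effect_size=1.0, sd=1.0, alpha=0.05 / 3))
```

Reporting the inputs alongside the result, as listed above, lets readers reproduce the calculation with the software of their choice.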

There are several types of studies in which a priori sample size calculations are not appropriate. For example, the number of animals needed for antibody or tissue production is determined by the amount required and the production ability of an individual animal. For studies in which the outcome is the successful generation of a sample or a condition (e.g., the production of transgenic animals), the number of animals is determined by the probability of success of the experimental procedure.

In early feasibility or pilot studies, the number of animals required depends on the purpose of the study. When the objective of the preliminary study is primarily logistic or operational (e.g., to improve procedures and equipment), the number of animals needed is generally small. In such cases, power calculations are not appropriate and sample sizes can be estimated based on operational capacity and constraints [44]. Pilot studies alone are unlikely to provide adequate data on variability for a power calculation for future experiments. Systematic reviews and previous studies are more appropriate sources of information on variability [45].

If no power calculation was used to determine the sample size, state this explicitly and provide the reasoning that was used to decide on the sample size per group. Regardless of whether a power calculation was used or not, when explaining how the sample size was determined take into consideration any anticipated loss of animals or data, for example, due to exclusion criteria established upfront or expected attrition (see Item 3. Inclusion and exclusion criteria).

Examples

Subitem 2b—Example 1

‘The sample size calculation was based on postoperative pain numerical rating scale (NRS) scores after administration of buprenorphine (NRS AUC mean = 2.70; noninferiority limit = 0.54; standard deviation = 0.66) as the reference treatment… and also Glasgow Composite Pain Scale (GCPS) scores… using online software (Experimental design assistant; https://eda.nc3rs.org.uk/eda/login/auth). The power of the experiment was set to 80%. A total of 20 dogs per group were considered necessary’ [46].

Subitem 2b—Example 2

‘We selected a small sample size because the bioglass prototype was evaluated in vivo for the first time in the present study, and therefore, the initial intention was to gather basic evidence regarding the use of this biomaterial in more complex experimental designs’ [47].

Item 3. Inclusion and exclusion criteria

3a. Describe any criteria used for including or excluding animals (or experimental units) during the experiment, and data points during the analysis. Specify if these criteria were established a priori. If no criteria were set, state this explicitly.

Explanation. Inclusion and exclusion criteria define the eligibility or disqualification of animals and data once the study has commenced. To ensure scientific rigour, the criteria should be defined before the experiment starts and data are collected [8,33,48,49]. Inclusion criteria should not be confused with animal characteristics (see Item 8. Experimental animals) but can be related to these (e.g., body weights must be within a certain range for a particular procedure) or related to other study parameters (e.g., task performance has to exceed a given threshold). In studies in which selected data are reanalysed for a different purpose, inclusion and exclusion criteria should describe how data were selected.

Exclusion criteria may result from technical or welfare issues such as complications anticipated during surgery or circumstances in which test procedures might be compromised (e.g., development of motor impairments that could affect behavioural measurements). Criteria for excluding samples or data include failure to meet quality control standards, such as insufficient sample volumes, unacceptable levels of contaminants, poor histological quality, etc. Similarly, how the researcher will define and handle data outliers during the analysis should also be decided before the experiment starts (see subitem 3b for guidance on responsible data cleaning).

Exclusion criteria may also reflect the ethical principles of a study in line with its humane endpoints (see Item 16. Animal care and monitoring). For example, in cancer studies, an animal might be dropped from the study and euthanised before the predetermined time point if the size of a subcutaneous tumour exceeds a specific volume [50]. If losses are anticipated, these should be considered when determining the number of animals to include in the study (see Item 2. Sample size). Whereas exclusion criteria and humane endpoints are typically included in the ethical review application, reporting the criteria used to exclude animals or data points in the manuscript helps readers with the interpretation of the data and provides crucial information to other researchers wanting to adopt the model.

Best practice is to include all a priori inclusion and exclusion/outlier criteria in a preregistered protocol (see Item 19. Protocol registration). At the very least, these criteria should be documented in a laboratory notebook and reported in manuscripts, explicitly stating that the criteria were defined before any data were collected.

Example

Subitem 3a—Example 1

‘The animals were included in the study if they underwent successful MCA occlusion (MCAo), defined by a 60% or greater drop in cerebral blood flow seen with laser Doppler flowmetry. The animals were excluded if insertion of the thread resulted in perforation of the vessel wall (determined by the presence of sub-arachnoid blood at the time of sacrifice), if the silicon tip of the thread became dislodged during withdrawal, or if the animal died prematurely, preventing the collection of behavioral and histological data’ [51].

3b. For each experimental group, report any animals, experimental units, or data points not included in the analysis and explain why. If there were no exclusions, state so.

Explanation. Animals, experimental units, or data points that are unaccounted for can lead to instances in which conclusions cannot be supported by the raw data [52]. Reporting exclusions and attrition provides valuable information to other investigators who are evaluating the results or who intend to repeat the experiment or test the intervention in other species. It may also provide important safety information for human trials (e.g., exclusions related to adverse effects).

There are many legitimate reasons for experimental attrition, some of which are anticipated and controlled for in advance (see subitem 3a on defining exclusion and inclusion criteria), but some data loss might not be anticipated. For example, data points may be excluded from analyses because of an animal receiving the wrong treatment, unexpected drug toxicity, infections or diseases unrelated to the experiment, sampling errors (e.g., a malfunctioning assay that produced a spurious result, inadequate calibration of equipment), or other human error (e.g., forgetting to switch on equipment for a recording).

Most statistical analysis methods are extremely sensitive to outliers and missing data. In some instances, it may be scientifically justifiable to remove outlying data points from an analysis, such as obvious errors in data entry or measurement with readings that are outside a plausible range. Inappropriate data cleaning has the potential to bias study outcomes [53]; providing the reasoning for removing data points enables the distinction to be made between responsible data cleaning and data manipulation. Missing data, common in all areas of research, can impact the sensitivity of the study and also lead to biased estimates, distorted power, and loss of information if the missing values are not random [54]. Analysis plans should include methods to explore why data are missing. It is also important to consider and justify analysis methods that account for missing data [55,56].

There is a movement toward greater data sharing (see Item 20. Data access), along with an increase in strategies such as code sharing to enable analysis replication. These practices, however transparent, still need to be accompanied by a disclosure on the reasoning for data cleaning and whether methods were defined before any data were collected.

Report all animal exclusions and loss of data points, along with the rationale for their exclusion. For example, this information can be summarised as a table or a flowchart describing attrition in each treatment group. Accompanying this information should be an explicit description of whether researchers were blinded to the group allocations when data or animals were excluded (see Item 5. Blinding and [57]). Explicitly state when built-in models in statistics packages have been used to remove outliers (e.g., GraphPad Prism’s outlier test).
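A summary table of attrition can be generated directly from an exclusion log. The following is a minimal sketch, assuming a hypothetical log in which each record holds a group, an animal identifier, and a reason for exclusion (None for animals that were analysed). The group names, reasons, and the function name attrition_table are illustrative only and are not taken from any cited study.

```python
from collections import Counter, defaultdict

# Hypothetical exclusion log: (group, animal_id, reason).
# reason is None when the animal was included in the analysis.
LOG = [
    ("control", "C1", None),
    ("control", "C2", None),
    ("control", "C3", "humane endpoint reached"),
    ("treated", "T1", None),
    ("treated", "T2", "wrong dose administered"),
    ("treated", "T3", "humane endpoint reached"),
]

def attrition_table(log):
    """Per group: number allocated, number analysed, exclusion counts by reason."""
    table = defaultdict(lambda: {"allocated": 0, "analysed": 0, "excluded": Counter()})
    for group, _animal, reason in log:
        row = table[group]
        row["allocated"] += 1
        if reason is None:
            row["analysed"] += 1
        else:
            row["excluded"][reason] += 1
    return dict(table)

table = attrition_table(LOG)
for group, row in table.items():
    reasons = "; ".join(f"{r} (n = {n})" for r, n in row["excluded"].items()) or "none"
    print(f"{group}: allocated {row['allocated']}, analysed {row['analysed']}, excluded: {reasons}")
```

Keeping the reason alongside each exclusion means the same log can feed either a table or a flowchart, and groups with no exclusions are still listed explicitly.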

Examples

Subitem 3b—Example 1

‘Pen was the experimental unit for all data. One entire pen (ZnAA90) was removed as an outlier from both Pre-RAC and RAC periods for poor performance caused by illness unrelated to treatment…. Outliers were determined using Cook’s D statistic and removed if Cook’s D > 0.5. One steer was determined to be an outlier for day 48 liver biopsy TM and data were removed’ [58].

Subitem 3b—Example 2

‘Seventy-two SHRs were randomized into the study, of which 13 did not meet our inclusion and exclusion criteria because the drop in cerebral blood flow at occlusion did not reach 60% (seven animals), postoperative death (one animal: autopsy unable to identify the cause of death), haemorrhage during thread insertion (one animal), and disconnection of the silicon tip of the thread during withdrawal, making the permanence of reperfusion uncertain (four animals). A total of 59 animals were therefore included in the analysis of infarct volume in this study. In error, three animals were sacrificed before their final assessment of neurobehavioral score: one from the normothermia/water group and two from the hypothermia/pethidine group. These errors occurred blinded to treatment group allocation. A total of 56 animals were therefore included in the analysis of neurobehavioral score’ [51].

Subitem 3b—Example 3

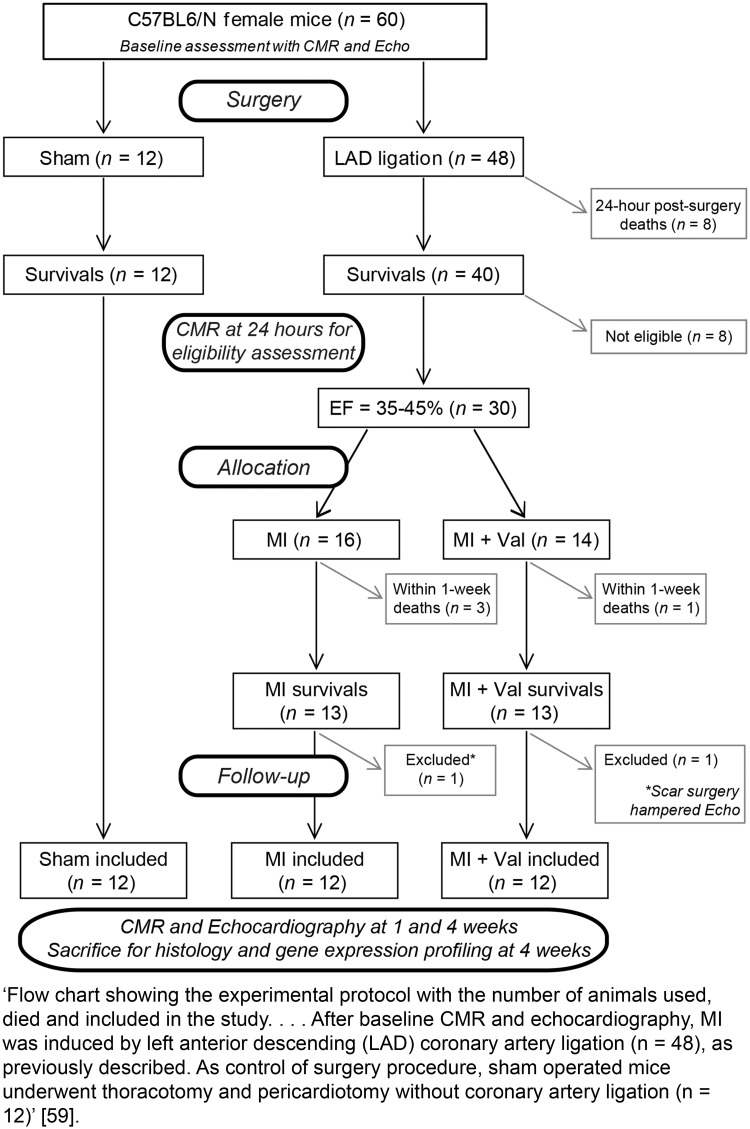

Fig 3. Reproduced from reference [59].

3c. For each analysis, report the exact value of n in each experimental group.

Explanation. The exact number of experimental units analysed in each group (i.e., the n number) is essential information for the reader to interpret the analysis; it should be reported unambiguously. All animals and data used in the experiment should be accounted for in the data presented. Sometimes, for good reasons, animals may need to be excluded from a study (e.g., illness or mortality), or data points excluded from analyses (e.g., biologically implausible values). Reporting losses helps the reader to understand the experimental design process and replicate the methods, and it provides adequate tracking of animal numbers in a study, especially when sample size numbers in the analyses do not match the original group numbers.

For each outcome measure, indicate numbers clearly within the text or on figures and provide absolute numbers (e.g., 10/20, not 50%). For studies in which animals are measured at different time points, explicitly report the full description of which animals undergo measurement and when [33].
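Because n can differ between analyses of the same experiment, it can help to derive it from the data actually analysed rather than from the planned group sizes. The sketch below assumes a hypothetical data set in which a missing value is stored as None; the outcome names, values, and the function name n_per_group are invented for illustration.

```python
# Hypothetical records; None marks a value that was not available for analysis.
RECORDS = [
    {"group": "control", "infarct_volume": 41.2, "neuro_score": 3},
    {"group": "control", "infarct_volume": 38.5, "neuro_score": None},
    {"group": "treated", "infarct_volume": 29.9, "neuro_score": 2},
    {"group": "treated", "infarct_volume": 33.1, "neuro_score": 1},
    {"group": "treated", "infarct_volume": None, "neuro_score": None},
]

def n_per_group(records, outcome):
    """Return {group: (n_analysed, n_allocated)} for one outcome measure."""
    counts = {}
    for record in records:
        analysed, allocated = counts.get(record["group"], (0, 0))
        has_value = record[outcome] is not None
        counts[record["group"]] = (analysed + int(has_value), allocated + 1)
    return counts

for outcome in ("infarct_volume", "neuro_score"):
    for group, (analysed, allocated) in n_per_group(RECORDS, outcome).items():
        # Absolute numbers are printed rather than percentages.
        print(f"{outcome}, {group}: n = {analysed} (of {allocated} allocated)")
```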

Examples

Subitem 3c—Example 1

‘Group F contained 29 adult males and 58 adult females in 2010 (n = 87), and 32 adult males and 66 adult females in 2011 (n = 98). The increase in female numbers was due to maturation of juveniles to adults. Females belonged to three matrilines, and there were no major shifts in rank in the male hierarchy. Six mid to low ranking individuals died and were excluded from analyses, as were five mid-ranking males who emigrated from the group at the beginning of 2011’ [60].

Subitem 3c—Example 2

‘The proportion of test time that animals spent interacting with the handler (sniffed the gloved hand or tunnel, made paw contact, climbed on, or entered the handling tunnel) was measured from DVD recordings. This was then averaged across the two mice in each cage as they were tested together and their behaviour was not independent…. Mice handled with the home cage tunnel spent a much greater proportion of the test interacting with the handler (mean ± s.e.m., 39.8 ± 5.2 percent time of 60 s test, n = 8 cages) than those handled by tail (6.4 ± 2.0 percent time, n = 8 cages), while those handled by cupping showed intermediate levels of voluntary interaction (27.6 ± 7.1 percent time, n = 8 cages)’ [61].

Item 4. Randomisation

4a. State whether randomisation was used to allocate experimental units to control and treatment groups. If done, provide the method used to generate the randomisation sequence.

Explanation. Using appropriate randomisation methods during the allocation to groups ensures that each experimental unit has an equal probability of receiving a particular treatment and provides balanced numbers in each treatment group. Selecting an animal ‘at random’ (i.e., haphazardly or arbitrarily) from a cage is not statistically random, as the process involves human judgement. It can introduce bias that influences the results, as a researcher may (consciously or subconsciously) make judgements in allocating an animal to a particular group, or because of unknown and uncontrolled differences in the experimental conditions or animals in different groups. Using a validated method of randomisation helps minimise selection bias and reduce systematic differences in the characteristics of animals allocated to different groups [62–64]. Inferential statistics based on nonrandomised group allocation are not valid [65,66]. Thus, the use of randomisation is a prerequisite for any experiment designed to test a hypothesis. Examples of appropriate randomisation methods include online random number generators (e.g., https://www.graphpad.com/quickcalcs/randomize1/) or a function like Rand() in spreadsheet software such as Excel, Google Sheets, or LibreOffice. The EDA has a dedicated feature for randomisation and allocation concealment [19].

Systematic reviews have shown that animal experiments that do not report randomisation or other bias-reducing measures such as blinding are more likely to report exaggerated effects that meet conventional measures of statistical significance [67–69]. It is especially important to use randomisation in situations in which it is not possible to blind all or parts of the experiment, but even with randomisation, researcher bias can pervert the allocation. This can be avoided by using allocation concealment (see Item 5. Blinding). In studies in which sample sizes are small, simple randomisation may result in unbalanced groups; here, randomisation strategies to balance groups such as randomising in matched pairs [70–72] and blocking are encouraged [17]. Reporting the precise method used to allocate animals or experimental units to groups enables readers to assess the reliability of the results and identify potential limitations.

Report the type of randomisation used (simple, stratified, randomised complete blocks, etc.; see Box 4), the method used to generate the randomisation sequence (e.g., computer-generated randomisation sequence, with details of the algorithm or programme used), and what was randomised (e.g., treatment to experimental unit, order of treatment for each animal). If this varies between experiments, report this information specifically for each experiment. If randomisation was not the method used to allocate experimental units to groups, state this explicitly and explain how the groups being compared were formed.
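As an illustration of a computer-generated sequence that can be reported in full, the sketch below shuffles the list of experimental units with a seeded pseudorandom number generator and deals them into groups. This shuffle-and-deal approach forces near-equal group sizes and is only one possible implementation; the function name, animal identifiers, and seed are assumptions made for the example.

```python
import random

def simple_randomisation(unit_ids, groups, seed):
    """Allocate units to groups of (near-)equal size using a seeded shuffle."""
    rng = random.Random(seed)  # recording the seed makes the sequence reproducible
    shuffled = list(unit_ids)
    rng.shuffle(shuffled)
    # Deal the shuffled units to the groups in turn.
    return {unit: groups[i % len(groups)] for i, unit in enumerate(shuffled)}

# Hypothetical identifiers for 12 animals.
animal_ids = [f"rat{i:02d}" for i in range(1, 13)]
allocation = simple_randomisation(animal_ids, ["control", "treated"], seed=2020)
for animal in animal_ids:
    print(animal, allocation[animal])
```

Reporting the software, the function used, and the seed would allow a reader to regenerate the same sequence. The allocation should still be concealed from those assigning animals to groups.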

Box 4. Considerations for the randomisation strategy

Simple randomisation

All animals/samples are simultaneously randomised to the treatment groups without considering any other variable. This strategy is rarely appropriate, as it cannot ensure that comparison groups are balanced for other variables that might influence the result of an experiment.

Randomisation within blocks

Blocking is a method of controlling natural variation among experimental units. This splits up the experiment into smaller subexperiments (blocks), and treatments are randomised to experimental units within each block [17,66,73]. This takes into account nuisance variables that could potentially bias the results (e.g., cage location, day or week of procedure).

Stratified randomisation uses the same principle as randomisation within blocks, only the strata tend to be traits of the animal that are likely to be associated with the response (e.g., weight class or tumour size class). This can lead to differences in the practical implementation of stratified randomisation as compared with block randomisation (e.g., there may not be equal numbers of experimental units in each weight class).
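Randomisation within blocks can be sketched as follows. The example assumes that the day of surgery is the blocking factor and that each block contains a multiple of the number of treatments; the block names, animal identifiers, treatments, and seed are hypothetical.

```python
import random

def randomise_within_blocks(units_by_block, treatments, seed):
    """Randomise treatments to units separately within each block."""
    rng = random.Random(seed)
    allocation = {}
    for block, units in units_by_block.items():
        if len(units) % len(treatments) != 0:
            raise ValueError(f"block {block!r} cannot be split evenly between treatments")
        # Each treatment appears equally often within the block.
        labels = list(treatments) * (len(units) // len(treatments))
        rng.shuffle(labels)
        for unit, treatment in zip(units, labels):
            allocation[unit] = (block, treatment)
    return allocation

# Hypothetical design: four animals operated on per day.
UNITS_BY_DAY = {
    "day1": ["m01", "m02", "m03", "m04"],
    "day2": ["m05", "m06", "m07", "m08"],
    "day3": ["m09", "m10", "m11", "m12"],
}
allocation = randomise_within_blocks(UNITS_BY_DAY, ["vehicle", "drug"], seed=7)
for unit, (block, treatment) in allocation.items():
    print(unit, block, treatment)
```

The block label is kept with each allocation so that the blocking factor can be included in the analysis.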

Other randomisation strategies

Minimisation is an alternative strategy to allocate animals/samples to treatment group to balance variables that might influence the result of an experiment. With minimisation, the treatment allocated to the next animal/sample depends on the characteristics of those animals/samples already assigned. The aim is that each allocation should minimise the imbalance across multiple factors [74]. This approach works well for a continuous nuisance variable such as body weight or starting tumour volume.
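A minimal sketch of minimisation is given below. It assumes categorical factors (here sex and weight class) and a simple deterministic rule: each animal goes to the treatment that currently has the fewest animals sharing its characteristics, with ties broken at random. Published schemes often add a random element to every allocation; that refinement is omitted here, and all names and data are hypothetical.

```python
import random
from collections import defaultdict

def minimise(animals, treatments, factors, seed):
    """Allocate animals sequentially, minimising imbalance across the given factors."""
    rng = random.Random(seed)
    # counts[treatment][(factor, level)] = animals with that level already allocated
    counts = {t: defaultdict(int) for t in treatments}
    allocation = {}
    for animal in animals:
        # Imbalance score: animals already in each treatment that share this animal's levels.
        scores = {t: sum(counts[t][(f, animal[f])] for f in factors) for t in treatments}
        best = min(scores.values())
        chosen = rng.choice([t for t in treatments if scores[t] == best])
        allocation[animal["id"]] = chosen
        for f in factors:
            counts[chosen][(f, animal[f])] += 1
    return allocation

# Hypothetical animals, listed in order of enrolment.
ANIMALS = [
    {"id": "a1", "sex": "F", "weight_class": "light"},
    {"id": "a2", "sex": "M", "weight_class": "heavy"},
    {"id": "a3", "sex": "F", "weight_class": "heavy"},
    {"id": "a4", "sex": "M", "weight_class": "light"},
    {"id": "a5", "sex": "F", "weight_class": "light"},
    {"id": "a6", "sex": "M", "weight_class": "heavy"},
]
allocation = minimise(ANIMALS, ["control", "treated"], ["sex", "weight_class"], seed=11)
print(allocation)
```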

Examples of nuisance variables that can be accounted for in the randomisation strategy

Time or day of the experiment

Litter, cage, or fish tank

Investigator or surgeon—different level of experience in the people administering the treatments, performing the surgeries, or assessing the results may result in varying stress levels in the animals or duration of anaesthesia

Equipment (e.g., PCR machine, spectrophotometer)—calibration may vary

Measurement of a study parameter (e.g., initial tumour volume)

Animal characteristics (e.g., sex, age bracket, weight bracket)

Location—exposure to light, ventilation, and disturbances may vary in cages located at different height or on different racks, which may affect important physiological processes

Implication for the analysis

If blocking factors are used in the randomisation, they should also be included in the analysis. Nuisance variables increase variability in the sample, which reduces statistical power. Including a nuisance variable as a blocking factor in the analysis accounts for that variability and can increase the power, thus increasing the ability to detect a real effect with fewer experimental units. However, blocking uses up degrees of freedom and thus reduces the power if the nuisance variable does not have a substantial impact on variability.

Examples

Subitem 4a—Example 1

‘Fifty 12-week-old male Sprague-Dawley rats, weighing 320–360g, were obtained from Guangdong Medical Laboratory Animal Center (Guangzhou, China) and randomly divided into two groups (25 rats/group): the intact group and the castration group. Random numbers were generated using the standard = RAND() function in Microsoft Excel’ [75].

Subitem 4a—Example 2

‘Animals were randomized after surviving the initial I/R, using a computer based random order generator’ [76].

Subitem 4a—Example 3

‘At each institute, phenotyping data from both sexes is collected at regular intervals on age-matched wildtype mice of equivalent genetic backgrounds. Cohorts of at least seven homozygote mice of each sex per pipeline were generated…. The random allocation of mice to experimental group (wildtype versus knockout) was driven by Mendelian Inheritance’ [29].

4b. Describe the strategy used to minimise potential confounders such as the order of treatments and measurements, or animal/cage location. If confounders were not controlled, state this explicitly.

Explanation. Ensuring there is no systematic difference between animals in different groups apart from the experimental exposure is an important principle throughout the conduct of the experiment. Identifying nuisance variables (sources of variability or conditions that could potentially bias results) and managing them in the design and analysis increases the sensitivity of the experiment. For example, rodents in cages at the top of the rack may be exposed to higher light levels, which can affect stress [77].

Reporting the strategies implemented to minimise potential differences that arise between treatment groups during the course of the experiment enables others to assess the internal validity. Strategies to report include standardising (keeping conditions the same, e.g., all surgeries done by the same surgeon), randomising (e.g., the sampling or measurement order), and blocking or counterbalancing (e.g., position of animal cages or tanks on the rack), to ensure groups are similarly affected by a source of variability. In some cases, practical constraints prevent some nuisance variables from being randomised, but they can still be accounted for in the analysis (see Item 7. Statistical methods).

Report the methods used to minimise confounding factors alongside the methods used to allocate animals to groups. If no measures were used to minimise confounders (e.g., treatment order, measurement order, cage or tank position on a rack), explicitly state this and explain why.
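Two of the strategies described above, randomising the measurement order and counterbalancing cage position, can be sketched as follows. The rack layout uses a cyclic rotation so that each treatment appears once on every shelf and, when the number of shelves equals the number of treatments, once in every column. Function names, identifiers, and the seed are assumptions made for illustration.

```python
import random

def daily_testing_orders(animal_ids, n_days, seed):
    """Return an independently shuffled testing order for each test day."""
    rng = random.Random(seed)
    orders = []
    for _day in range(n_days):
        order = list(animal_ids)
        rng.shuffle(order)
        orders.append(order)
    return orders

def counterbalanced_rack(treatments, n_shelves):
    """Rotate treatments by one position per shelf (a cyclic Latin square layout)."""
    k = len(treatments)
    return [[treatments[(shelf + col) % k] for col in range(k)] for shelf in range(n_shelves)]

orders = daily_testing_orders(["m1", "m2", "m3", "m4"], n_days=3, seed=5)
for day, order in enumerate(orders, start=1):
    print(f"day {day}: {order}")

rack = counterbalanced_rack(["A", "B", "C", "D"], n_shelves=4)
for shelf in rack:
    print(shelf)
```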

Examples

Subitem 4b—Example 1

‘Randomisation was carried out as follows. On arrival from El-Nile Company, animals were assigned a group designation and weighed. A total number of 32 animals were divided into four different weight groups (eight animals per group). Each animal was assigned a temporary random number within the weight range group. On the basis of their position on the rack, cages were given a numerical designation. For each group, a cage was selected randomly from the pool of all cages. Two animals were removed from each weight range group and given their permanent numerical designation in the cages. Then, the cages were randomized within the exposure group’ [78].

Subitem 4b—Example 2

‘… test time was between 08.30am to 12.30pm and testing order was randomized daily, with each animal tested at a different time each test day’ [79].

Subitem 4b—Example 3

‘Bulls were blocked by BW into four blocks of 905 animals with similar BW and then within each block, bulls were randomly assigned to one of four experimental treatments in a completely randomized block design resulting in 905 animals per treatment. Animals were allocated to 20 pens (181 animals per pen and five pens per treatment)’ [80].

Item 5. Blinding

Describe who was aware of the group allocation at the different stages of the experiment (during the allocation, the conduct of the experiment, the outcome assessment, and the data analysis).

Explanation. Researchers often expect a particular outcome and can unintentionally influence the experiment or interpret the data in such a way as to support their preferred hypothesis [81]. Blinding is a strategy used to minimise these subjective biases.

Although there is primary evidence of the impact of blinding in the clinical literature that directly compares blinded versus unblinded assessment of outcomes [82], there is limited empirical evidence in animal research [83,84]. There are, however, compelling data from systematic reviews showing that nonblinded outcome assessment leads to the treatment effects being overestimated, and the lack of bias-reducing measures such as randomisation and blinding can contribute to as much as 30%–45% inflation of effect sizes [67,68,85].

Ideally, investigators should be unaware of the treatment(s) animals have received or will be receiving, from the start of the experiment until the data have been analysed. If this is not possible for every stage of an experiment (see Box 5), it should always be possible to conduct at least some of the stages blind. This has implications for the organisation of the experiment and may require help from additional personnel—for example, a surgeon to perform interventions, a technician to code the treatment syringes for each animal, or a colleague to code the treatment groups for the analysis. Online resources are available to facilitate allocation concealment and blinding [19].

Box 5. Blinding during different stages of an experiment

During allocation

Allocation concealment refers to concealing the treatment to be allocated to each individual animal from those assigning the animals to groups, until the time of assignment. Together with randomisation, allocation concealment helps minimise selection bias, which can introduce systematic differences between treatment groups.

During the conduct of the experiment

When possible, animal care staff and those who administer treatments should be unaware of allocation groups to ensure that all animals in the experiment are handled, monitored, and treated in the same way. Treating different groups differently based on the treatment they have received could alter animal behaviour and physiology and produce confounds.

Welfare or safety reasons may prevent blinding of animal care staff, but in most cases, blinding is possible. For example, if hazardous microorganisms are used, control animals can be considered as dangerous as infected animals. If a welfare issue would only be tolerated for a short time in treated but not control animals, a harm-benefit analysis is needed to decide whether blinding should be used.

During the outcome assessment

The person collecting experimental measurements or conducting assessments should not know which treatment each sample/animal received and which samples/animals are grouped together. Blinding is especially important during outcome assessment, particularly if there is a subjective element (e.g., when assessing behavioural changes or reading histological slides) [83]. Randomising the order of examination can also reduce bias.

If the person assessing the outcome cannot be blinded to the group allocation (e.g., obvious phenotypic or behavioural differences between groups), some, but not all, of the sources of bias could be mitigated by sending data for analysis to a third party who has no vested interest in the experiment and does not know whether a treatment is expected to improve or worsen the outcome.

During the data analysis

The person analysing the data should know which data are grouped together to enable group comparisons but should not be aware of which specific treatment each group received. This type of blinding is often neglected but is important, as the analyst makes many semisubjective decisions such as applying data transformation to outcome measures, choosing methods for handling missing data, and handling outliers. How these decisions will be made should also be decided a priori.

Data can be coded prior to analysis so that the treatment group cannot be identified before analysis is completed.
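The coding step might look like the sketch below, which is intended to be run by a colleague who is not involved in the analysis. It assumes a hypothetical data set with a group field; the key linking codes to treatments is generated with the operating system's random source (so it cannot be regenerated from a seed) and should be stored separately until the analysis is complete.

```python
import random

def make_blinding_key(treatments):
    """Map each treatment name to a neutral code in a random, non-reproducible order."""
    rng = random.SystemRandom()
    codes = [f"group_{i + 1}" for i in range(len(treatments))]
    rng.shuffle(codes)
    return dict(zip(treatments, codes))

def blind(records, key):
    """Return copies of the records with the treatment name replaced by its code."""
    return [{**record, "group": key[record["group"]]} for record in records]

# Hypothetical unblinded data.
RECORDS = [
    {"animal": "m1", "group": "saline", "score": 4},
    {"animal": "m2", "group": "pilocarpine", "score": 9},
    {"animal": "m3", "group": "saline", "score": 5},
]
key = make_blinding_key(["saline", "pilocarpine"])  # kept by the third party
blinded = blind(RECORDS, key)                       # passed to the analyst
print(blinded)
```

The analyst can still see which data belong together, but not which treatment each code represents.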

Specify whether blinding was used or not for each step of the experimental process (see Box 5) and indicate what particular treatment or condition the investigators were blinded to, or aware of.

If blinding was not used at any of the steps outlined in Box 5, explicitly state this and provide the reason why blinding was not possible or not considered.

Examples

Item 5—Example 1

‘For each animal, four different investigators were involved as follows: a first investigator (RB) administered the treatment based on the randomization table. This investigator was the only person aware of the treatment group allocation. A second investigator (SC) was responsible for the anaesthetic procedure, whereas a third investigator (MS, PG, IT) performed the surgical procedure. Finally, a fourth investigator (MAD) (also unaware of treatment) assessed GCPS and NRS, mechanical nociceptive threshold (MNT), and sedation NRS scores’ [46].

Item 5—Example 2

‘… due to overt behavioral seizure activity the experimenter could not be blinded to whether the animal was injected with pilocarpine or with saline’ [86].

Item 5—Example 3

‘Investigators could not be blinded to the mouse strain due to the difference in coat colors, but the three-chamber sociability test was performed with ANY-maze video tracking software (Stoelting, Wood Dale, IL, USA) using an overhead video camera system to automate behavioral testing and provide unbiased data analyses. The one-chamber social interaction test requires manual scoring and was analyzed by an individual with no knowledge of the questions’ [87].

Item 6. Outcome measures

6a. Clearly define all outcome measures assessed (e.g., cell death, molecular markers, or behavioural changes).

Explanation. An outcome measure (also known as a dependent variable or a response variable) is any variable recorded during a study (e.g., volume of damaged tissue, number of dead cells, specific molecular marker) to assess the effects of a treatment or experimental intervention. Outcome measures may be important for characterising a sample (e.g., baseline data) or for describing complex responses (e.g., ‘haemodynamic’ outcome measures including heart rate, blood pressure, central venous pressure, and cardiac output). Failure to disclose all the outcomes that were measured introduces bias in the literature, as positive outcomes (e.g., those statistically significant) are reported more often [88–91].

Explicitly describe what was measured, especially when measures can be operationalised in different ways. For example, activity could be recorded as time spent moving or distance travelled. When possible, the recording of outcome measures should be made in an unbiased manner (e.g., blinded to the treatment allocation of each experimental group; see Item 5. Blinding). Specify how the outcome measure(s) assessed are relevant to the objectives of the study.

Example

Subitem 6a—Example 1

‘The following parameters were assessed: threshold pressure (TP; intravesical pressure immediately before micturition); post-void pressure (PVP; intravesical pressure immediately after micturition); peak pressure (PP; highest intravesical pressure during micturition); capacity (CP; volume of saline needed to induce the first micturition); compliance (CO; CP to TP ratio); frequency of voiding contractions (VC) and frequency of non-voiding contractions (NVCs)’ [92].

6b. For hypothesis-testing studies, specify the primary outcome measure, i.e., the outcome measure that was used to determine the sample size.

Explanation. In a hypothesis-testing experiment, the primary outcome measure answers the main biological question. It is the outcome of greatest importance, identified in the planning stages of the experiment and used as the basis for the sample size calculation (see Box 3). For exploratory studies, it is not necessary to identify a single primary outcome, and often multiple outcomes are assessed (see Item 13. Objectives).

In a hypothesis-testing study powered to detect an effect on the primary outcome measure, data on secondary outcomes are used to evaluate additional effects of the intervention, but subsequent statistical analysis of secondary outcome measures may be underpowered, making results and interpretation less reliable [88,93]. Studies that claim to test a hypothesis but do not specify a predefined primary outcome measure or those that change the primary outcome measure after data were collected (also known as primary outcome switching) are liable to selectively report only statistically significant results, favouring more positive findings [94].

Registering a protocol in advance protects the researcher against concerns about selective outcome reporting (also known as data dredging or p-hacking) and provides evidence that the primary outcome reported in the manuscript accurately reflects what was planned [95] (see Item 19. Protocol registration).

In studies using inferential statistics to test a hypothesis (e.g., t test, ANOVA), if more than one outcome was assessed, explicitly identify the primary outcome measure, state whether it was defined as such prior to data collection and whether it was used in the sample size calculation. If there was no primary outcome measure, explicitly state so.

Examples

Subitem 6b—Example 1

‘The primary outcome of this study will be forelimb function assessed with the staircase test. Secondary outcomes constitute Rotarod performance, stroke volume (quantified on MR imaging or brain sections, respectively), diffusion tensor imaging (DTI) connectome mapping, and histological analyses to measure neuronal and microglial densities, and phagocytic activity…. The study is designed with 80% power to detect a relative 25% difference in pellet-reaching performance in the Staircase test’ [96].

Subitem 6b—Example 2

‘The primary endpoint of this study was defined as left ventricular ejection fraction (EF) at the end of follow-up, measured by magnetic resonance imaging (MRI). Secondary endpoints were left ventricular end diastolic volume and left ventricular end systolic volume (EDV and ESV) measured by MRI, infarct size measured by ex vivo gross macroscopy after incubation with triphenyltetrazolium chloride (TTC) and late gadolinium enhancement (LGE) MRI, functional parameters serially measured by pressure volume (PV-)loop and echocardiography, coronary microvascular function by intracoronary pressure- and flow measurements and vascular density and fibrosis on histology’ [76].

Item 7. Statistical methods

7a. Provide details of the statistical methods used for each analysis, including software used.

Explanation. The statistical analysis methods implemented will reflect the goals and the design of the experiment; they should be decided in advance before data are collected (see Item 19. Protocol registration). Both exploratory and hypothesis-testing studies might use descriptive statistics to summarise the data (e.g., mean and SD, or median and range). In exploratory studies in which no specific hypothesis was tested, reporting descriptive statistics is important for generating new hypotheses that may be tested in subsequent experiments, but it does not allow conclusions beyond the data. In addition to descriptive statistics, hypothesis-testing studies might use inferential statistics to test a specific hypothesis.

Reporting the analysis methods in detail is essential to ensure readers and peer reviewers can assess the appropriateness of the methods selected and judge the validity of the output. The description of the statistical analysis should provide enough detail so that another researcher could reanalyse the raw data using the same method and obtain the same results. Make it clear which method was used for which analysis.

Analysing the data using different methods and selectively reporting those with statistically significant results constitutes p-hacking and introduces bias in the literature [90,94]. Report all analyses performed in full. Relevant information to describe the statistical methods includes:

the outcome measures

the independent variables of interest

the nuisance variables taken into account in each statistical test (e.g., as blocking factors or covariates)

what statistical analyses were performed and references for the methods used

how missing values were handled

adjustment for multiple comparisons

the software package and version used, including computer code if available [97]
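To illustrate what an adjustment for multiple comparisons involves, the sketch below implements the Holm step-down procedure. This is one of several possible methods, chosen here only as an example; the guidelines do not prescribe a particular adjustment, and the P values shown are invented.

```python
def holm_adjust(p_values):
    """Return Holm-adjusted P values in the same order as the input."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices from smallest to largest P
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # The smallest P value is multiplied by m, the next by m - 1, and so on.
        candidate = min(1.0, (m - rank) * p_values[i])
        # Adjusted values must not decrease as the raw P values increase.
        running_max = max(running_max, candidate)
        adjusted[i] = running_max
    return adjusted

raw = [0.01, 0.04, 0.03]  # hypothetical P values from three comparisons
for p, p_adj in zip(raw, holm_adjust(raw)):
    print(f"raw P = {p:.3f}, adjusted P = {p_adj:.3f}")
```

Whichever method is used, naming it and stating the family of comparisons it was applied to allows readers to reproduce the adjusted values.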

The outcome measure is potentially affected by the treatments or interventions being tested but also by other factors, such as the properties of the biological samples (sex, litter, age, weight, etc.) and technical considerations (cage, time of day, batch, experimenter, etc.). To reduce the risk of bias, some of these factors can be taken into account in the design of the experiment, for example, by using blocking factors in the randomisation (see Item 4. Randomisation). Factors deemed to affect the variability of the outcome measure should also be handled in the analysis, for example, as a blocking factor (e.g., batch of reagent or experimenter) or as a covariate (e.g., starting tumour size at point of randomisation).

Furthermore, to conduct the analysis appropriately, it is important to recognise the hierarchy that can exist in an experiment. The hierarchy can induce a clustering effect; for example, cage, litter, or animal effects can occur when the outcomes measured for animals from the same cage/litter, or for cells from the same animal, are more similar to each other. This relationship has to be managed in the statistical analysis by including cage/litter/animal effects in the model or by aggregating the outcome measure to the cage/litter/animal level. Thus, describing the reality of the experiment and the hierarchy of the data, along with the measures taken in the design and the analysis to account for this hierarchy, is crucial to assessing whether the statistical methods used are appropriate.
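Aggregating the outcome measure to the level of the experimental unit can be sketched as follows. The example assumes that the cage is the experimental unit and that two animals are measured per cage; the treatment names and values are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical measurements: (cage, treatment, value for one animal).
MEASUREMENTS = [
    ("cage1", "tunnel", 40.0), ("cage1", "tunnel", 44.0),
    ("cage2", "tunnel", 36.0), ("cage2", "tunnel", 38.0),
    ("cage3", "tail", 6.0), ("cage3", "tail", 8.0),
    ("cage4", "tail", 5.0), ("cage4", "tail", 9.0),
]

def cage_means(measurements):
    """Average animal-level values within each cage; return {cage: (treatment, mean)}."""
    values_by_cage = defaultdict(list)
    treatment_of = {}
    for cage, treatment, value in measurements:
        # A cage can only be aggregated if all its animals received the same treatment.
        if treatment_of.setdefault(cage, treatment) != treatment:
            raise ValueError(f"{cage} contains more than one treatment")
        values_by_cage[cage].append(value)
    return {cage: (treatment_of[cage], mean(vals)) for cage, vals in values_by_cage.items()}

summary = cage_means(MEASUREMENTS)
for cage, (treatment, value) in summary.items():
    print(cage, treatment, value)
# n for the analysis is the number of cages per treatment, not the number of animals.
```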

For bespoke analysis—for example, regression analysis with many terms—it is essential to describe the analysis pipeline in detail. This could include detailing the starting model and any model simplification steps.

When reporting descriptive statistics, explicitly state which measure of central tendency is reported (e.g., mean or median) and which measure of variability is reported (e.g., standard deviation, range, quartiles, or interquartile range). Also describe any modification made to the raw data before analysis (e.g., relative quantification of gene expression against a housekeeping gene). For further guidance on statistical reporting, refer to the Statistical Analyses and Methods in the Published Literature (SAMPL) guidelines [98].
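The sketch below computes the descriptive statistics mentioned above using Python's standard statistics module, with each value labelled by the measure it represents. The data are invented. Quartiles in particular can be calculated by more than one convention, so the method used is worth stating alongside the software and version.

```python
from statistics import mean, median, quantiles, stdev

def describe(values):
    """Return labelled measures of central tendency and variability."""
    q1, _q2, q3 = quantiles(values, n=4)  # default 'exclusive' method
    return {
        "n": len(values),
        "mean": mean(values),
        "sd": stdev(values),  # sample standard deviation (n - 1 denominator)
        "median": median(values),
        "range": (min(values), max(values)),
        "quartiles": (q1, q3),
        "iqr": q3 - q1,
    }

data = [2, 4, 4, 5, 7, 9, 10, 12]  # hypothetical measurements
for name, value in describe(data).items():
    print(f"{name}: {value}")
```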

Examples

Subitem 7a—Example 1

‘Analysis of variance was performed using the GLM procedure of SAS (SAS Inst., Cary, NC). Average pen values were used as the experimental unit for the performance parameters. The model considered the effects of block and dietary treatment (5 diets). Data were adjusted by the covariant of initial body weight. Orthogonal contrasts were used to test the effects of SDPP processing (UV vs no UV) and dietary SDPP level (3% vs 6%). Results are presented as least squares means. The level of significance was set at P < 0.05’ [99].

Subitem 7a—Example 2

‘All risk factors of interest were investigated in a single model. Logistic regression allows blocking factors and explicitly investigates the effect of each independent variable controlling for the effects of all others…. As we were interested in husbandry and environmental effects, we blocked the analysis by important biological variables (age; backstrain; inbreeding; sex; breeding status) to control for their effect. (The role of these biological variables in barbering behavior, particularly with reference to barbering as a model for the human disorder trichotillomania, is described elsewhere …). We also blocked by room to control for the effect of unknown environmental variables associated with this design variable. We tested for the effect of the following husbandry and environmental risk factors: cage mate relationships (i.e. siblings, non-siblings, or mixed); cage type (i.e. plastic or steel); cage height from floor; cage horizontal position (whether the cage was on the side or the middle of a rack); stocking density; and the number of adults in the cage. Cage material by cage height from floor; and cage material by cage horizontal position interactions were examined, and then removed from the model as they were nonsignificant. N = 1959 mice were included in this analysis’ [100].

7b. Describe any methods used to assess whether the data met the assumptions of the statistical approach, and what was done if the assumptions were not met.

Explanation. Hypothesis tests are based on assumptions about the underlying data. Describing how assumptions were assessed and whether these assumptions are met by the data enables readers to assess the suitability of the statistical approach used. If the assumptions are incorrect, the conclusions may not be valid. For example, the assumptions for data used in parametric tests (such as a t test, z test, ANOVA, etc.) are that the data are continuous, the residuals from the analysis are normally distributed, the responses are independent, and different groups have similar variances.

There are various tests for normality, for example, the Shapiro-Wilk and Kolmogorov-Smirnov tests. However, these tests have to be used cautiously. If the sample size is small, they will struggle to detect non-normality; if the sample size is large, the tests will detect unimportant deviations. An alternative approach is to evaluate data with visual plots, e.g., normal probability plots, box plots, scatterplots. If the residuals of the analysis are not normally distributed, the assumption may be satisfied using a data transformation in which the same mathematical function is applied to all data points to produce normally distributed data (e.g., natural logarithm, base-10 logarithm, square root).
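
To make the idea of a data transformation concrete, the sketch below applies the natural logarithm to every value in a hypothetical right-skewed sample and compares the skewness of the residuals (deviations from the group mean) before and after. The data are invented, and sample skewness is used here only as a crude numerical summary for illustration; it is not a substitute for the plots or tests discussed above.

```python
import math
from statistics import mean


def residuals(values):
    """Deviations of each value from the group mean."""
    m = mean(values)
    return [v - m for v in values]


def skewness(values):
    """Moment-based skewness; values near 0 suggest a symmetric distribution."""
    m = mean(values)
    devs = [v - m for v in values]
    m2 = mean([d ** 2 for d in devs])
    m3 = mean([d ** 3 for d in devs])
    return m3 / m2 ** 1.5


# Hypothetical right-skewed measurements from a single group.
raw = [1.0, 2.0, 4.0, 8.0, 16.0, 32.0, 64.0]

# The same function (natural log) is applied to every data point.
transformed = [math.log(v) for v in raw]

skew_raw = skewness(residuals(raw))
skew_log = skewness(residuals(transformed))

print(f"skewness of residuals, raw scale: {skew_raw:.2f}")
print(f"skewness of residuals, log scale: {skew_log:.2f}")
```

In a report, the transformation and the outcome measures it was applied to would be named precisely, as would the scale on which results are presented.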

Other types of outcome measures (binary, categorical, or ordinal) will require different methods of analysis, and each will have different sets of assumptions. For example, categorical data are summarised by counts and percentages or proportions and are analysed by tests of proportions; these analysis methods assume that data are binary, ordinal or nominal, and independent [18].

For each statistical test used (parametric or nonparametric), report the type of outcome measure and the methods used to test the assumptions of the statistical approach. If data were transformed, identify precisely the transformation used and which outcome measures it was applied to. Report any changes to the analysis if the assumptions were not met and an alternative approach was used (e.g., a nonparametric test was used, which does not require the assumption of normality). If the relevant assumptions about the data were not tested, state this explicitly.

Examples

Subitem 7b—Example 1

‘Model assumptions were checked using the Shapiro-Wilk normality test and Levene’s Test for homogeneity of variance and by visual inspection of residual and fitted value plots. Some of the response variables had to be transformed by applying the natural logarithm or the second or third root, but were back-transformed for visualization of significant effects’ [101].

Subitem 7b—Example 2

‘The effects of housing (treatment) and day of euthanasia on cortisol levels were assessed by using fixed-effects 2-way ANOVA. An initial exploratory analysis indicated that groups with higher average cortisol levels also had greater variation in this response variable. To make the variation more uniform, we used a logarithmic transform of each fish’s cortisol per unit weight as the dependent variable in our analyses. This action made the assumptions of normality and homoscedasticity (standard deviations were equal) of our analyses reasonable’ [102].

Item 8. Experimental animals

8a. Provide species-appropriate details of the animals used, including species, strain and substrain, sex, age or developmental stage, and, if relevant, weight.

Explanation. The species, strain, substrain, sex, weight, and age of animals are critical factors that can influence most experimental results [103–107]. Reporting the characteristics of all animals used is equivalent to standardised human patient demographic data; these data support both the internal and external validity of the study results. It enables other researchers to repeat the experiment and generalise the findings. It also enables readers to assess whether the animal characteristics chosen for the experiment are relevant to the research objectives.

When reporting age and weight, include summary statistics for each experimental group (e.g., mean and standard deviation) and, if possible, baseline values for individual animals (e.g., as supplementary information or a link to a publicly accessible data repository). As body weight might vary during the course of the study, indicate when the measurements were taken. For most species, precise reporting of age is more informative than a description of the developmental status (e.g., a mouse referred to as an adult can vary in age from 6 to 20 weeks [108]). In some cases, however, reporting the developmental stage is more informative than chronological age—for example, in juvenile Xenopus, in which rate of development can be manipulated by incubation temperature [109].

Reporting the weight or the sex of the animals used may not be feasible for all studies. For example, sex may be unknown for embryos or juveniles, or weight measurement may be particularly stressful for some aquatic species. If these characteristics could reasonably be expected to be reported for the species used and the experimental setting but are not, provide a justification.

Examples

Subitem 8a—Example 1

‘One hundred and nineteen male mice were used: C57BL/6OlaHsd mice (n = 59), and BALB/c OlaHsd mice (n = 60) (both from Harlan, Horst, The Netherlands). At the time of the EPM test the mice were 13 weeks old and had body weights of 27.4 ± 0.4 g and 27.8 ± 0.3 g, respectively (mean ± SEM)’ [110].

Subitem 8a—Example 2

‘Histone Methylation Profiles and the Transcriptome of X. tropicalis Gastrula Embryos. To generate epigenetic profiles, ChIP was performed using specific antibodies against trimethylated H3K4 and H3K27 in Xenopus gastrula-stage embryos (Nieuwkoop-Faber stage 11–12), followed by deep sequencing (ChIP-seq). In addition, polyA-selected RNA (stages 10–13) was reverse transcribed and sequenced (RNA-seq)’ [111].

8b. Provide further relevant information on the provenance of animals, health/immune status, genetic modification status, genotype, and any previous procedures.

Explanation. The animals’ provenance, their health or immune status, and their history of previous testing or procedures can influence their physiology and behaviour, as well as their response to treatments, and thus impact on study outcomes. For example, animals of the same strain but from different sources, or animals obtained from the same source but at different times, may be genetically different [16]. The immune or microbiological status of the animals can also influence welfare, experimental variability, and scientific outcomes [112–114].

Report the health status of all animals used in the study and any previous procedures the animals have undergone. For example, if animals are specific pathogen free (SPF), list the pathogens that they were declared free of. If health status is unknown or was not tested, explicitly state this.

For genetically modified animals, describe the genetic modification status (e.g., knockout, overexpression), genotype (e.g., homozygous, heterozygous), manipulated gene(s), genetic methods and technologies used to generate the animals, how the genetic modification was confirmed, and details of animals used as controls (e.g., littermate controls [115]).

Reporting the correct nomenclature is crucial to understanding the data and ensuring that the research is discoverable and replicable [116–118]. Useful resources for reporting nomenclature for different species include:

Mice—International Committee on Standardized Genetic Nomenclature (https://www.jax.org/jax-mice-and-services/customer-support/technical-support/genetics-and-nomenclature)

Rats—Rat Genome and Nomenclature Committee (https://rgd.mcw.edu/)

Zebrafish—Zebrafish Information Network (http://zfin.org/)

Xenopus—Xenbase (http://www.xenbase.org/entry/)

Drosophila—FlyBase (http://flybase.org/)

C. elegans—WormBase (https://wormbase.org/)

Examples

Subitem 8b—Example 1

‘A construct was engineered for knockin of the miR-128 (miR-128-3p) gene into the Rosa26 locus. Rosa26 genomic DNA fragments (~1.1 kb and ~4.3 kb 5′ and 3′ homology arms, respectively) were amplified from C57BL/6 BAC DNA, cloned into the pBasicLNeoL vector sequentially by in-fusion cloning, and confirmed by sequencing. The miR-128 gene, under the control of tetO-minimum promoter, was also cloned into the vector between the two homology arms. In addition, the targeting construct also contained a loxP sites flanking the neomycin resistance gene cassette for positive selection and a diphtheria toxin A (DTA) cassette for negative selection. The construct was linearized with ClaI and electroporated into C57BL/6N ES cells. After G418 selection, seven-positive clones were identified from 121 G418-resistant clones by PCR screening. Six-positive clones were expanded and further analyzed by Southern blot analysis, among which four clones were confirmed with correct targeting with single-copy integration. Correctly targeted ES cell clones were injected into blastocysts, and the blastocysts were implanted into pseudo-pregnant mice to generate chimeras by Cyagen Biosciences Inc. Chimeric males were bred with Cre deleted mice from Jackson Laboratories to generate neomycin-free knockin mice. The correct insertion of the miR-128 cassette and successful removal of the neomycin cassette were confirmed by PCR analysis with the primers listed in Supplementary Table… ’ [119].

Subitem 8b—Example 2

‘The C57BL/6J (Jackson) mice were supplied by Charles River Laboratories. The C57BL/6JOlaHsd (Harlan) mice were supplied by Harlan. The α-synuclein knockout mice were kindly supplied by Prof…. (Cardiff University, Cardiff, United Kingdom.) and were congenic C57BL/6JCrl (backcrossed for 12 generations). TNFα−/− mice were kindly supplied by Dr…. (Queens University, Belfast, Northern Ireland) and were inbred on a homozygous C57BL/6J strain originally sourced from Bantin & Kingman and generated by targeting C57BL/6 ES cells. T286A mice were obtained from Prof…. (University of California, Los Angeles, CA). These mice were originally congenic C57BL/6J (backcrossed for five generations) and were then inbred (cousin matings) over 14 y, during which time they were outbred with C57BL/6JOlaHsd mice on three separate occasions’ [120].

Item 9. Experimental procedures

For each experimental group, including controls, describe the procedures in enough detail to allow others to replicate them, including:

9a. What was done, how it was done, and what was used.

Explanation. Essential information to describe in the manuscript includes the procedures used to develop the model (e.g., induction of the pathology), the procedures used to measure the outcomes, and pre- and postexperimental procedures, including animal handling, welfare monitoring, and euthanasia. Animal handling can be a source of stress, and the specific method used (e.g., mice picked up by tail or in cupped hands) can affect research outcomes [61,121,122]. Details about animal care and monitoring intrinsic to the procedure are discussed in further detail in Item 16. Animal care and monitoring. Provide enough detail to enable others to replicate the methods and highlight any quality assurance and quality control used [123,124]. A schematic of the experimental procedures with a timeline can give a clear overview of how the study was conducted. Information relevant to distinct types of interventions and resources is described in Table 1.

Table 1. Examples of information to include when reporting specific types of experimental procedures and resources.

| Procedures | Resources |
|---|---|
| Pharmacological procedures (intervention and control) | Cell lines |
| Surgical procedures (including sham surgery) | Reagents (e.g., antibodies, chemicals) |
| Pathogen infection (intervention and control) | Equipment and software |
| Euthanasia | |

AVMA, American Veterinary Medical Association; RRID, Research Resource Identifier.

When available, cite the Research Resource Identifier (RRID) for reagents and tools used [126,127]. RRIDs are unique and stable, allowing unambiguous identification of reagents or tools used in a study, aiding other researchers to replicate the methods.

Detailed step-by-step procedures can also be saved and shared online, for example, using Protocols.io [128], which assigns a digital object identifier (DOI) to the protocol and allows cross-referencing between protocols and publications.

Examples

Subitem 9a—Example 1

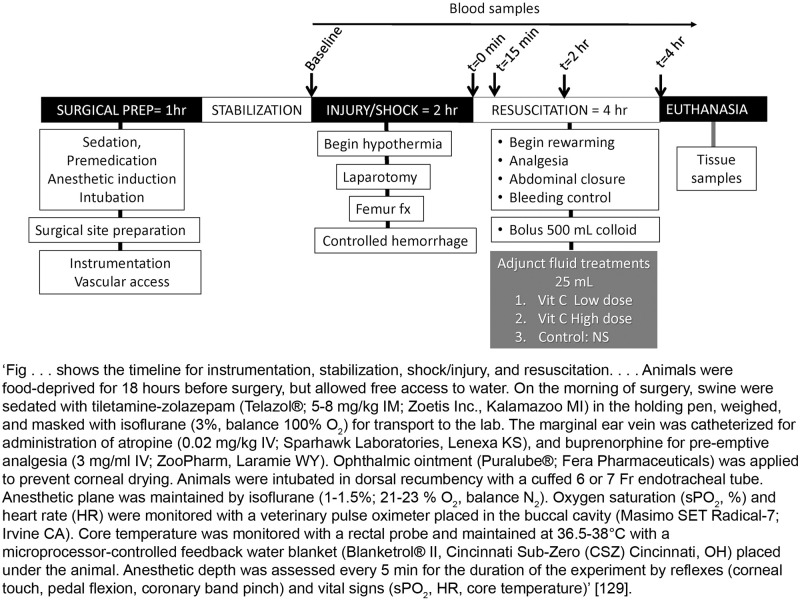

Fig 4. This figure is an alternative version of the figure published in reference [129].

Subitem 9a—Example 2