Abstract

We propose a definition of saliency by considering what the visual system is trying to optimize when directing attention. The resulting model is a Bayesian framework from which bottom-up saliency emerges naturally as the self-information of visual features, and overall saliency (incorporating top-down information with bottom-up saliency) emerges as the pointwise mutual information between the features and the target when searching for a target. An implementation of our framework demonstrates that our model’s bottom-up saliency maps perform as well as or better than existing algorithms in predicting people’s fixations in free viewing. Unlike existing saliency measures, which depend on the statistics of the particular image being viewed, our measure of saliency is derived from natural image statistics, obtained in advance from a collection of natural images. For this reason, we call our model SUN (Saliency Using Natural statistics). A measure of saliency based on natural image statistics, rather than based on a single test image, provides a straightforward explanation for many search asymmetries observed in humans; the statistics of a single test image lead to predictions that are not consistent with these asymmetries. In our model, saliency is computed locally, which is consistent with the neuroanatomy of the early visual system and results in an efficient algorithm with few free parameters.

Keywords: saliency, attention, eye movements, computational modeling

Introduction

The surrounding world contains a tremendous amount of visual information, which the visual system cannot fully process (Tsotsos, 1990). The visual system thus faces the problem of how to allocate its processing resources to focus on important aspects of a scene. Despite the limited amount of visual information the system can handle, sampled by discontinuous fixations and covert shifts of attention, we experience a seamless, continuous world. Humans and many other animals thrive using this heavily downsampled visual information. Visual attention as overtly reflected in eye movements partially reveals the sampling strategy of the visual system and is of great research interest as an essential component of visual cognition. Psychologists have investigated visual attention for many decades using psychophysical experiments, such as visual search tasks, with carefully controlled stimuli. Sophisticated mathematical models have been built to account for the wide variety of human performance data (e.g., Bundesen, 1990; Treisman & Gelade, 1980; Wolfe, Cave, & Franzel, 1989). With the development of affordable and easy-to-use modern eye-tracking systems, the locations that people fixate when they perform certain tasks can be explicitly recorded and can provide insight into how people allocate their attention when viewing complex natural scenes. The proliferation of eye-tracking data over the last two decades has led to a number of computational models attempting to account for the data and addressing the question of what attracts attention. Most models have focused on bottom-up attention, where the subjects are free-viewing a scene and salient objects attract attention. Many of these saliency models use findings from psychology and neurobiology to construct plausible mechanisms for guiding attention allocation (Itti, Koch, & Niebur, 1998; Koch & Ullman, 1985; Wolfe et al., 1989). More recently, a number of models attempt to explain attention based on more mathematically motivated principles that address the goal of the computation (Bruce & Tsotsos, 2006; Chauvin, Herault, Marendaz, & Peyrin, 2002; Gao & Vasconcelos, 2004, 2007; Harel, Koch, & Perona, 2007; Kadir & Brady, 2001, Oliva, Torralba, Castelhano, & Henderson, 2003; Renninger, Coughlan, Verghese, & Malik, 2004; Torralba, Oliva, Castelhano, & Henderson, 2006; Zhang, Tong, & Cottrell, 2007). Both types of models tend to rely solely on the statistics of the current test image for computing the saliency of a point in the image. We argue here that natural statistics (the statistics of visual features in natural scenes, which an organism would learn through experience) must also play an important role in this process.

In this paper, we make an effort to address the underlying question: What is the goal of the computation performed by the attentional system? Our model starts from the simple assumption that an important goal of the visual system is to find potential targets and builds up a Bayesian probabilistic framework of what the visual system should calculate to optimally achieve this goal. In this framework, bottom-up saliency emerges naturally as self-information. When searching for a particular target, top-down effects from a known target emerge in our model as a log-likelihood term in the Bayesian formulation. The model also dictates how to combine bottom-up and top-down information, leading to pointwise mutual information as a measure of overall saliency. We develop a bottom-up saliency algorithm that performs as well as or better than state-of-the-art saliency algorithms at predicting human fixations when free-viewing images. Whereas existing bottom-up saliency measures are defined solely in terms of the image currently being viewed, ours is instead defined based on natural statistics (collected from a set of images of natural scenes), to represent the visual experience an organism would acquire during development. This difference is most notable when comparing with models that also use a Bayesian formulation (e.g., Torralba et al., 2006) or self-information (e.g., Bruce & Tsotsos, 2006). As a result of using natural statistics, our model provides a straightforward account of many human search asymmetries that cannot be explained based on the statistics of the test image alone. Unlike many models, our measure of saliency only involves local computation on images, with no calculation of global image statistics, saliency normalization, or winner-take-all competition. This makes our algorithm not only more efficient, but also more biologically plausible, as long-range connections are scarce in the lower levels of the visual system. Because of the focus on learned statistics from natural scenes, we call our saliency model SUN (Saliency Using Natural statistics).

Previous work

In this section we discuss previous saliency models that have achieved good performance in predicting human fixations in viewing images. The motivation for these models has come from psychophysics and neuroscience (Itti & Koch, 2001; Itti et al., 1998), classification optimality (Gao & Vasconcelos, 2004, 2007), the task of looking for a target (Oliva et al., 2003; Torralba et al., 2006), or information maximization (Bruce & Tsotsos, 2006). Many models of saliency implicitly assume that covert attention functions much like a spotlight (Posner, Rafal, Choate, & Vaughan, 1985) or a zoom lens (Eriksen & St James, 1986) that focuses on salient points of interest in much the same way that eye fixations do. A model of saliency, therefore, can function as a model of both overt and covert attention. For example, although originally intended primarily as a model for covert attention, the model of Koch and Ullman (1985) has since been frequently applied as a model of eye movements. Eye fixations, in contrast to covert shifts of attention, are easily measured and allow a model’s predictions to be directly verified. Saliency models are also compared against human studies where either a mix of overt and covert attention is allowed or covert attention functions on its own. The similarity between covert and overt attention is still debated, but there is compelling evidence that the similarities commonly assumed may be valid (for a review, see Findlay & Gilchrist, 2003).

Itti and Koch’s saliency model (Itti & Koch, 2000, 2001; Itti et al., 1998) is one of the earliest and the most used for comparison in later work. The model is an implementation of and expansion on the basic ideas first proposed by Koch and Ullman (1985). The model is inspired by the visual attention literature, such as feature integration theory (Treisman & Gelade, 1980), and care is taken in the model’s construction to ensure that it is neurobiologically plausible. The model takes an image as input, which is then decomposed into three channels: intensity, color, and orientation. A center-surround operation, implemented by taking the difference of the filter responses from two scales, yields a set of feature maps. The feature maps for each channel are then normalized and combined across scales and orientations, creating conspicuity maps for each channel. The conspicuous regions of these maps are further enhanced by normalization, and the channels are linearly combined to form the overall saliency map. This process allows locations to vie for conspicuity within each feature dimension but has separate feature channels contribute to saliency independently; this is consistent with the feature integration theory. This model has been shown to be successful in predicting human fixations and to be useful in object detection (Itti & Koch, 2001; Itti et al., 1998; Parkhurst, Law, & Niebur, 2002). However, it can be criticized as being ad hoc, partly because the overarching goal of the system (i.e., what it is designed to optimize) is not specified, and it has many parameters that need to be hand-selected.

Several saliency algorithms are based on measuring the complexity of a local region (Chauvin et al., 2002; Kadir & Brady, 2001; Renninger et al., 2004; Yamada & Cottrell, 1995). Yamada and Cottrell (1995) measure the variance of 2D Gabor filter responses across different orientations. Kadir and Brady (2001) measure the entropy of the local distribution of image intensity. Renninger and colleagues measure the entropy of local features as a measure of uncertainty, and the most salient point at any given time during their shape learning and matching task is the one that provides the greatest information gain conditioned on the knowledge obtained during previous fixations (Renninger et al., 2004; Renninger, Verghese, & Coughlan, 2007). All of these saliency-as-variance/entropy models are based on the idea that the entropy of a feature distribution over a local region measures the richness and diversity of that region (Chauvin et al., 2002), and intuitively a region should be salient if it contains features with many different orientations and intensities. A common critique of these models is that highly textured regions are always salient regardless of their context. For example, human observers find an egg in a nest highly salient, but local-entropy-based algorithms find the nest to be much more salient than the egg (Bruce & Tsotsos, 2006; Gao & Vasconcelos, 2004).

Gao and Vasconcelos (2004, 2007) proposed a specific goal for saliency: classification. That is, a goal of the visual system is to classify each stimulus as belonging to a class of interest (or not), and saliency should be assigned to locations that are useful for that task. This was first used for object detection (Gao & Vasconcelos, 2004), where a set of features are selected to best discriminate the class of interest (e.g., faces or cars) from all other stimuli, and saliency is defined as the weighted sum of feature responses for the set of features that are salient for that class. This forms a definition that is inherently top-down and goal directed, as saliency is defined for a particular class. Gao and Vasconcelos (2007) define bottom-up saliency using the idea that locations are salient if they differ greatly from their surroundings. They use difference of Gaussians (DoG) filters and Gabor filters, measuring the saliency of a point as the Kullback–Leibler (KL) divergence between the histogram of filter responses at the point and the histogram of filter responses in the surrounding region. This addresses a previously mentioned problem commonly faced by complexity-based models (as well as some other saliency models that use linear filter responses as features): these models always assign high saliency scores to highly textured areas. In the Discussion section, we will discuss a way that the SUN model could address this problem by using non-linear features that model complex cells or neurons in higher levels of the visual system.

Oliva and colleagues proposed a probabilistic model for visual search tasks (Oliva et al., 2003; Torralba et al., 2006). When searching for a target in an image, the probability of interest is the joint probability that the target is present in the current image, together with the target’s location (if the target is present), given the observed features. This can be calculated using Bayes’ rule:

| (1) |

where O = 1 denotes the event that the target is present in the image, L denotes the location of the target when O = 1, F denotes the local features at location L, and G denotes the global features of the image. The global features G represent the scene gist. Their experiments show that the gist of a scene can be quickly determined, and the focus of their work largely concerns how this gist affects eye movements. The first term on the right side of Equation 1 is independent of the target and is defined as bottom-up saliency; Oliva and colleagues approximate this conditional probability distribution using the current image’s statistics. The remaining terms on the right side of Equation 1 respectively address the distribution of features for the target, the likely locations for the target, and the probability of the target’s presence, all conditioned on the scene gist. As we will see in the Bayesian framework for saliency section, our use of Bayes’ rule to derive saliency is reminiscent of this approach. However, the probability of interest in the work of Oliva and colleagues is whether or not a target is present anywhere in the test image, whereas the probability we are concerned with is the probability that a target is present at each point in the visual field. In addition, Oliva and colleagues condition all of their probabilities on the values of global features. Conditioning on global features/gist affects the meaning of all terms in Equation 1, and justifies their use of current image statistics for bottom-up saliency. In contrast, SUN focuses on the effects of an organism’s prior visual experience.

Bruce and Tsotsos (2006) define bottom-up saliency based on maximum information sampling. Information, in this model, is computed as Shannon’s self-information, −log p(F), where F is a vector of the visual features observed at a point in the image. The distribution of the features is estimated from a neighborhood of the point, which can be as large as the entire image. When the neighborhood of each point is indeed defined as the entire image of interest, as implemented in (Bruce & Tsotsos, 2006), the definition of saliency becomes identical to the bottom-up saliency term in Equation 1 from the work of Oliva and colleagues (Oliva et al., 2003; Torralba et al., 2006). It is worth noting, however, that the feature spaces used in the two models are different. Oliva and colleagues use biologically inspired linear filters of different orientations and scales. These filter responses are known to correlate with each other; for example, a vertical bar in the image will activate a filter tuned to vertical bars but will also activate (to a lesser degree) a filter tuned to 45-degree-tilted bars. The joint probability of the entire feature vector is estimated using multivariate Gaussian distributions (Oliva et al., 2003) and later multivariate generalized Gaussian distributions (Torralba et al., 2006). Bruce and Tsotsos (2006), on the other hand, employ features that were learned from natural images using independent component analysis (ICA). These have been shown to resemble the receptive fields of neurons in primary visual cortex (V1), and their responses have the desirable property of sparsity. Furthermore, the features learned are approximately independent, so the joint probability of the features is just the product of each feature’s marginal probability, simplifying the probability estimation without making unreasonable independence assumptions.

The Bayesian Surprise theory of Itti and Baldi (2005, 2006) applies a similar notion of saliency to video. Under this theory, organisms form models of their environment and assign probability distributions over the possible models. Upon the arrival of new data, the distribution over possible models is updated with Bayes’ rule, and the KL divergence between the prior distribution and posterior distribution is measured. The more the new data forces the distribution to change, the larger the divergence. These KL scores of different distributions over models combine to produce a saliency score. Itti and Baldi’s implementation of this theory leads to an algorithm that, like the others described here, defines saliency as a kind of deviation from the features present in the immediate neighborhood, but extending the notion of neighborhood to the spatiotemporal realm.

Bayesian framework for saliency

We propose that one goal of the visual system is to find potential targets that are important for survival, such as food and predators. To achieve this, the visual system must actively estimate the probability of a target at every location given the visual features observed. We propose that this probability, or a monotonic transformation of it, is visual saliency.

To formalize this, let z denote a point in the visual field. A point here is loosely defined; in the implementation described in the Implementation section, a point corresponds to a single image pixel. (In other contexts, a point could refer other things, such as an object; Zhang et al., 2007.) We let the binary random variable C denote whether or not a point belongs to a target class, let the random variable L denote the location (i.e., the pixel coordinates) of a point, and let the random variable F denote the visual features of a point. Saliency of a point z is then defined as p(C = 1 | F = fz, L = lz) where fz represents the feature values observed at z, and l represents the location (pixel coordinates) of z. This probability can be calculated using Bayes’ rule:

| (2) |

We assume1 for simplicity that features and location are independent and conditionally independent given C = 1:

| (3) |

| (4) |

This entails the assumption that the distribution of a feature does not change with location. For example, Equation 3 implies that a point in the left visual field is just as likely to be green as a point in the right visual field. Furthermore, Equation 4 implies (for instance) that a point on a target in the left visual field is just as likely to be green as a point on a target in the right visual field. With these independence assumptions, Equation 2 can be rewritten as:

| (5) |

| (6) |

| (7) |

To compare this probability across locations in an image, it suffices to estimate the log probability (since logarithm is a monotonically increasing function). For this reason, we take the liberty of using the term saliency to refer both to sz and to log sz, which is given by:

| (8) |

The first term on the right side of this equation, −log p(F = fz), depends only on the visual features observed at the point and is independent of any knowledge we have about the target class. In information theory, −log p(F = fz) is known as the self-information of the random variable F when it takes the value fz. Self-information increases when the probability of a feature decreases—in other words, rarer features are more informative. We have already discussed self-information in the context of previous work, but as we will see later, SUN’s use of self-information differs from that of previous approaches.

The second term on the right side of Equation 8, log p(F = fz | C = 1), is a log-likelihood term that favors feature values that are consistent with our knowledge of the target. For example, if we know that the target is green, then the log-likelihood term will be much larger for a green point than for a blue point. This corresponds to the top-down effect when searching for a known target, consistent with the finding that human eye movement patterns during iconic visual search can be accounted for by a maximum likelihood procedure for computing the most likely location of a target (Rao, Zelinsky, Hayhoe, & Ballard, 2002).

The third term in Equation 8, log p(C = 1 | L = lz), is independent of visual features and reflects any prior knowledge of where the target is likely to appear. It has been shown that if the observer is given a cue of where the target is likely to appear, the observer attends to that location (Posner & Cohen, 1984). For simplicity and fairness of comparison with Bruce and Tsotsos (2006), Gao and Vasconcelos (2007), and Itti et al. (1998), we assume location invariance (no prior information about the locations of potential targets) and omit the location prior; in the Results section, we will further discuss the effects of the location prior.

After omitting the location prior from Equation 8, the equation for saliency has just two terms, the self-information and the log-likelihood, which can be combined:

| (9) |

| (10) |

| (11) |

The resulting expression, which is called the pointwise mutual information between the visual feature and the presence of a target, is a single term that expresses overall saliency. Intuitively, it favors feature values that are more likely in the presence of a target than in a target’s absence.

When the organism is not actively searching for a particular target (the free-viewing condition), the organism’s attention should be directed to any potential targets in the visual field, despite the fact that the features associated with the target class are unknown. In this case, the log-likelihood term in Equation 8 is unknown, so we omit this term from the calculation of saliency (this can also be thought of as assuming that for an unspecified target, the likelihood distribution is uniform over feature values). In this case, the overall saliency reduces to just the self-information term: log sz = −log p(F = fz). We take this to be our definition of bottom-up saliency. It implies that the rarer a feature is, the more it will attract our attention.

This use of −log p(F = fz) differs somewhat from how it is often used in the Bayesian framework. Often, the goal of the application of Bayes’ rule when working with images is to classify the provided image. In that case, the features are given, and the −log p(F = fz) term functions as a (frequently omitted) normalizing constant. When the task is to find the point most likely to be part of a target class, however, −log p(F = fz) plays a much more significant role as its value varies over the points of the image. In this case, its role in normalizing the likelihood is more important as it acts to factor in the potential usefulness of each feature to aid in discrimination. Assuming that targets are relatively rare, a target’s feature is most useful if that feature is comparatively rare in the background environment, as otherwise the frequency with which that feature appears is likely to be more distracting than useful. As a simple illustration of this, consider that even if you know with absolute certainty that the target is red, i.e. p(F = red | C = 1) = 1, that fact is useless if everything else in the world is red as well.

When one considers that an organism may be interested in a large number of targets, the usefulness of rare features becomes even more apparent; the value of p(F = fz | C = 1) will vary for different target classes, while p(F = fz) remains the same regardless of the choice of targets. While there are specific distributions of p(F = fz | C = 1) for which SUN’s bottom-up saliency measure would be unhelpful in finding targets, these are special cases that are not likely to hold in general (particularly in the free-viewing condition, where the set of potential targets is largely unknown). That is, minimizing p(F = fz) will generally advance the goal of increasing the ratio p(F = fz | C = 1) / p(F = fz), implying that points with rare features should be found “interesting.”

Note that all of the probability distributions described here should be learned by the visual system through experience. Because the goal of the SUN model is to find potential targets in the surrounding environment, the probabilities should reflect the natural statistics of the environment and the learning history of the organism, rather than just the statistics of the current image. (This is especially obvious for the top-down terms, which require learned knowledge of the targets.)

In summary, calculating the probability of a target at each point in the visual field leads naturally to the estimation of information content. In the free-viewing condition, when there is no specific target, saliency reduces to the self-information of a feature. This implies that when one’s attention is directed only by bottom-up saliency, moving one’s eyes to the most salient points in an image can be regarded as maximizing information sampling, which is consistent with the basic assumption of Bruce and Tsotsos (2006). When a particular target is being searched for, on the other hand, our model implies that the best features to attend to are those that have the most mutual information with the target. This has been shown to be very useful in object detection with objects such as faces and cars (Ullman, Vidal-Naquet, & Sali, 2002).

In the rest of this paper, we will concentrate on bottom-up saliency for static images. This corresponds to the free-viewing condition, when no particular target is of interest. Although our model was motivated by the goal-oriented task of finding important targets, the bottom-up component remains task blind and has the statistics of natural scenes as its sole source of world knowledge. In the next section, we provide a simple and efficient algorithm for bottom-up saliency that (as we demonstrate in the Results section) produces state-of-the-art performance in predicting human fixations.

Implementation

In this section, we develop an algorithm based on our SUN model that takes color images as input and calculates their saliency maps (the saliency at every pixel in an image) using the task-independent, bottom-up portion of our saliency model. Given a probabilistic formula for saliency, such as the one we derived in the previous section, there are two key factors that affect the final results of a saliency model when operating on an image. One is the feature space, and the other is the probability distribution over the features.

In most existing saliency algorithms, the features are calculated as responses of biologically plausible linear filters, such as DoG (difference of Gaussians) filters and Gabor filters (e.g., Itti & Koch, 2001; Itti et al., 1998; Oliva et al., 2003; Torralba et al., 2006). In Bruce and Tsotsos (2006), the features are calculated as the responses to filters learned from natural images using independent component analysis (ICA). In this paper, we conduct experiments with both kinds of features.

Below, we describe the SUN algorithm for estimating the bottom-up saliency that we derived in the Bayesian framework for saliency section, −log p(F = fz). Here, a point z corresponds to a pixel in the image. For the remainder of the paper, we will drop the subscript z for notational simplicity. In this algorithm, F is a random vector of filter responses, F = [F1, F2, …], where the random variable Fi represents the response of the ith filter at a pixel, and f = [f1, f2, …] are the values of these filter responses at this pixel location.

Method 1: Difference of Gaussians filters

As noted above, many existing models use a collection of DoG (difference of Gaussians) and/or Gabor filters as the first step of processing the input images. These filters are popular due to their resemblance to the receptive fields of neurons in the early stages of the visual system, namely the lateral geniculate nucleus of the thalamus (LGN) and the primary visual cortex (V1). DoGs, for example, give the well-known “Mexican hat” center-surround filter. Here, we apply DoGs to the intensity and color channels of an image. A more complicated feature set composed of a mix of DoG and Gabor filters was also initially evaluated, but results were similar to those of the simple DoG filters used here.

Let r, g, and b denote the red, green, and blue components of an input image pixel. The intensity (I), red/green (RG), and blue/yellow (BY) channels are calculated as:

| (12) |

The DoG filters are generated by2

| (13) |

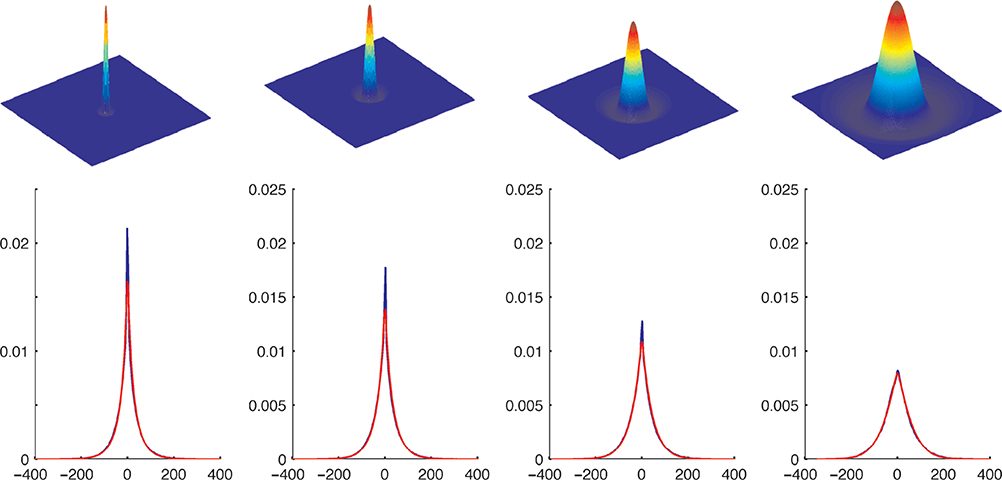

where (x, y) is the location in the filter. These filters are convolved with the intensity and color channels (I, RG, and BY) to produce the filter responses. We use four scales of DoG (σ = 4, 8, 16, or 32 pixels) on each of the three channels, leading to 12 feature response maps. The filters are shown in Figure 1, top.

Figure 1.

Four scales of difference of Gaussians (DoG) filters were applied to each channel of a set of 138 images of natural scenes. Top: The four scales of difference of Gaussians (DoG) filters that were applied to each channel. Bottom: The graphs show the probability distribution of filter responses for these four filters (with σ increasing from left to right) on the intensity (I) channel collected from the set of natural images (blue line), and the fitted generalized Gaussian distributions (red line). Aside from the natural statistics in this training set being slightly sparser (the blue peak over 0 is slightly higher than the red peak of the fitted function), the generalized Gaussian distributions provide an excellent fit.

By computing these feature response maps on a set of 138 images of natural scenes (photographed by the first author), we obtained an estimate of the probability distribution over the observed values of each of the 12 features. Note that the images used to gather natural scene statistics were different than those used in the experiments that we used in our evaluation. To parameterize this estimated distribution for each feature Fi, we used an algorithm proposed by Song (2006) to fit a zero-mean generalized Gaussian distribution, also known as an exponential power distribution, to the filter response data:

| (14) |

In this equation, Γ is the gamma function, θ is the shape parameter, σ is the scale parameter, and f is the filter response. This resulted in one shape parameter, θi, and one scale parameter, σi, for each of the 12 filters: i = 1, 2, …, 12. Figure 1 shows the distributions of the four DoG filter responses on the intensity (I) channel across the training set of natural images and the fitted generalized Gaussian distributions. As the figure shows, the generalized Gaussians provide an excellent fit to the data.

Taking the logarithm of Equation 14, we obtain the log probability over the possible values of each feature:

| (15) |

| (16) |

where the constant term does not depend on the feature value. To simplify the computations, we assume that the 12 filter responses are independent. Hence the total bottom-up saliency of a point takes the form:

| (17) |

Method 2: Linear ICA filters

In SUN’s final formula for bottom-up saliency (Equation 17), we assumed independence between the filter responses. However, this assumption does not always hold. For example, a bright spot in an image will generate a positive filter response for multiple scales of DoG filters. In this case the filter responses, far from being independent, will be highly correlated. It is not clear how this correlation affects the saliency results when a weighted sum of filter responses is used to compute saliency (as in Itti & Koch, 2001; Itti et al., 1998) or when independence is assumed in estimating probability (as in our case). Torralba et al. (2006) used a multivariate generalized Gaussian distribution to fit the joint probability of the filter responses. However, although the response of a single filter has been shown to be well fitted by a univariate generalized Gaussian distribution, it is less clear that the joint probability follows a multivariate generalized Gaussian distribution. Also, much more data are necessary for a good fit of a high-dimensional probability distribution than for one-dimensional distributions. It has been shown that estimating the moments of a generalized Gaussian distribution has limitations even for the one-dimensional case (Song, 2006), and it is much less likely to work well for the high-dimensional case.

To obtain the linear features used in their saliency algorithm, Bruce and Tsotsos (2006) applied independent component analysis (ICA) to a training set of natural images. This has been shown to yield features that qualitatively resemble those found in the visual cortex (Bell & Sejnowski, 1997; Olshausen & Field, 1996). Although the linear features learned in this way are not entirely independent, they have been shown to be independent up to third-order statistics (Wainwright, Schwartz, & Simoncelli, 2002). Such a feature space will provide a much better match for the independence assumptions we made in Equation 17. Thus, in this method we follow (Bruce & Tsotsos, 2006) and derive complete ICA features to use in SUN. It is worth noting that although Bruce and Tsotsos (2006) use a set of natural images to train the feature set, they determine the distribution over these features solely from a single test image when calculating saliency.

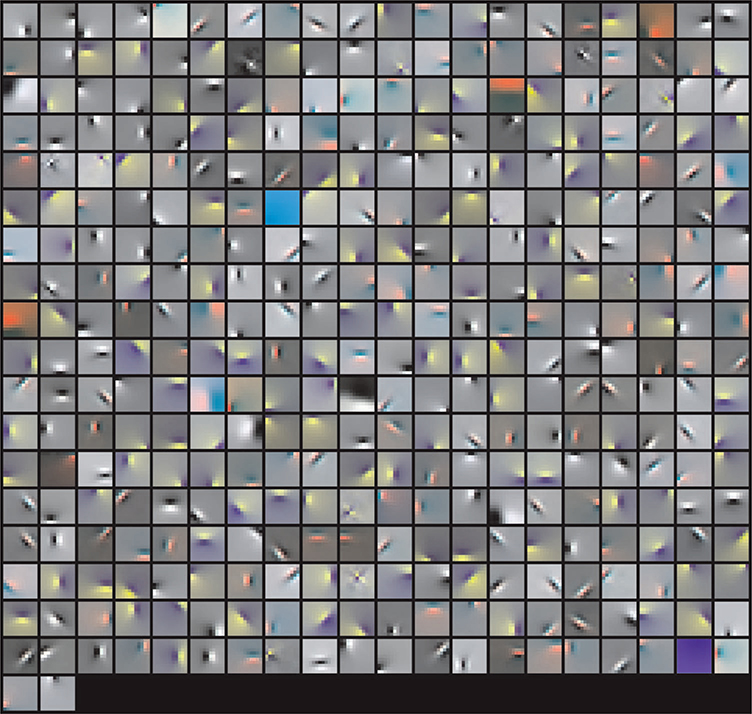

We applied the FastICA algorithm (Hyvärinen & Oja, 1997) to 11-pixel × 11-pixel color natural image patches drawn from the Kyoto image data set (Wachtler, Doi, Lee, & Sejnowski, 2007). This resulted in 11 × 11 × 3 − 1 = 362 features.3 Figure 2 shows the linear ICA features obtained from the training image patches.

Figure 2.

The 362 linear features learned by applying a complete independent component analysis (ICA) algorithm to 11 × 11 patches of color natural images from the Kyoto data set.

Like the DoG features from method 1, the ICA feature responses to natural images can be fitted very well using generalized Gaussian distributions, and we obtain the shape and scale parameters for each ICA filter by fitting its response to the ICA training images. The formula for saliency is the same as in Method 1 (Equation 17), except that the sum is now over 362 ICA features (rather than 12 DoG features). The matlab code for computing saliency maps with ICA features is available at http://journalofvision.org/8/7/32/supplement/supplement.html.

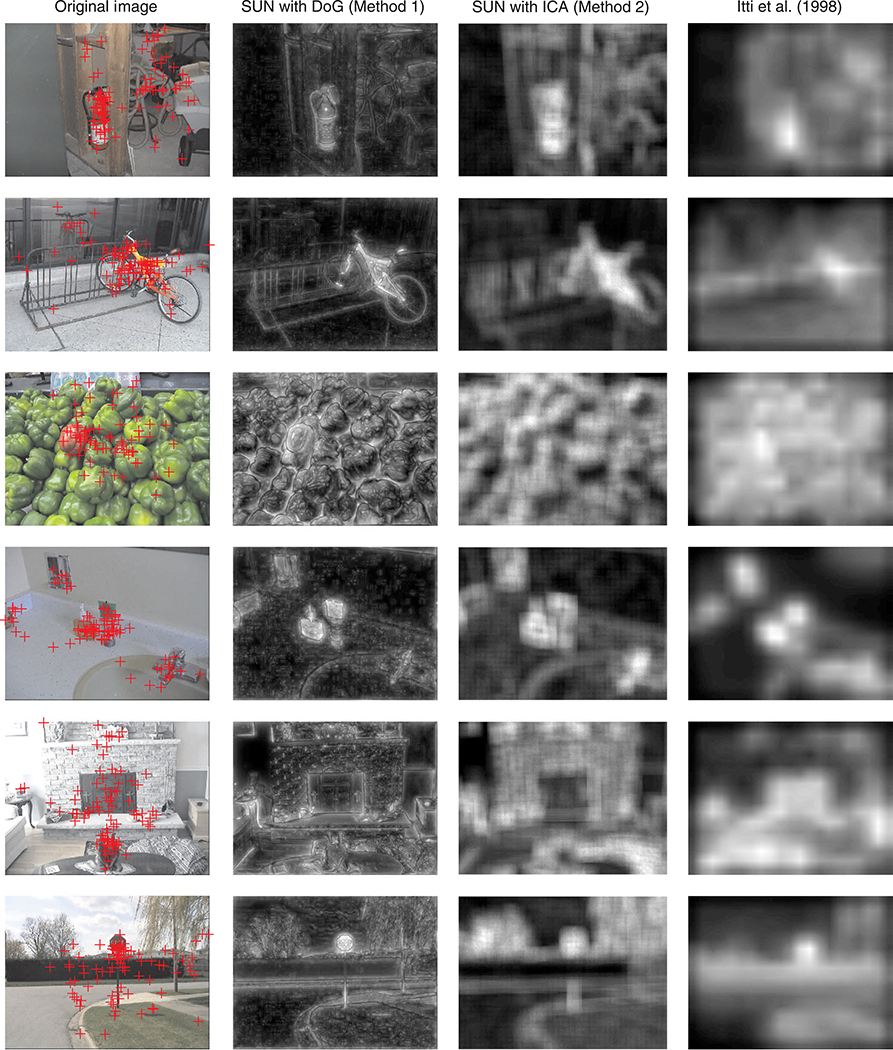

Some examples of bottom-up saliency maps computed using the algorithms from Methods 1 and 2 are shown in Figure 3. Each row displays an original test image (from Bruce & Tsotsos, 2006) with human fixations overlaid as red crosses and the saliency maps on the image computed using Method 1 and Method 2. For comparison, the saliency maps generated by Itti et al. (1998) are included. For Method 1, we applied the DoG filters to 511 × 681 images; for computational efficiency of Method 2, we downsampled the images by a factor of 4 before applying the ICA-derived filters. Figure 3 is included for the purpose of qualitative comparison; the next section provides a detailed quantitative evaluation.

Figure 3.

Examples of saliency maps for qualitative comparison. Each row contains, from left to right: An original test image with human fixations (from Bruce & Tsotsos, 2006) shown as red crosses; the saliency map produced by our SUN algorithm with DoG filters (Method 1); the saliency map produced by SUN with ICA features (Method 2); and the maps from (Itti et al., 1998, as reported in Bruce & Tsotsos, 2006) as a comparison.

Results

Evaluation method and the center bias

ROC area

Several recent publications (Bruce & Tsotsos, 2006; Harel et al., 2007; Gao & Vasconcelos, 2007; Kienzle, Wichmann, Schölkopf, & Franz, 2007) use an ROC area metric to evaluate eye fixation prediction. Using this method, the saliency map is treated as a binary classifier on every pixel in the image; pixels with larger saliency values than a threshold are classified as fixated while the rest of the pixels in that image are classified as non-fixated. Human fixations are used as ground truth. By varying the threshold, an ROC curve can be drawn and the area under the curve indicates how well the saliency map predicts actual human eye fixations. This measurement has the desired characteristic of transformation invariance, in that the area under the ROC curve does not change when applying any monotonically increasing function (such as logarithm) to the saliency measure.

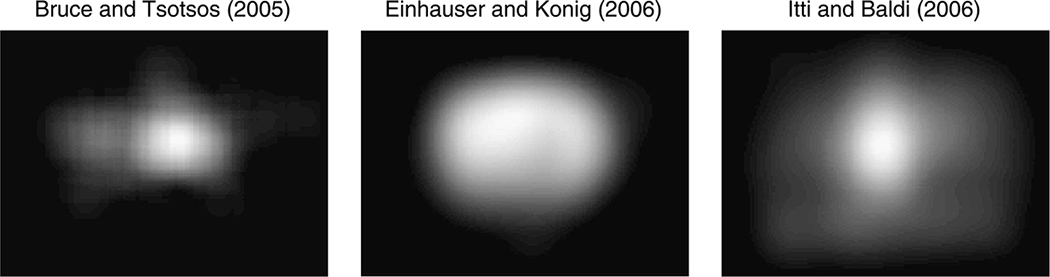

Assessing performance in this manner runs into problems because most human fixation data sets collected with head-mounted eye tracking systems have a strong center bias. This bias has often been attributed to factors related to the setup of the experiment, such as subjects being centered with respect to the center of the screen and framing effects caused by the monitor, but also reflects the fact that human photographers tend to center objects of interest (Parkhurst & Niebur, 2003; Tatler, Baddeley, & Gilchrist, 2005). More recent evidence suggests that the center bias exists even in the absence of these factors and may be due to the center being the optimal viewpoint for screen viewing (Tatler, 2007). Figure 4 shows the strong center bias of human eye fixations when free-viewing color static images (data from Bruce & Tsotsos, 2006), gray static images (data from Einhäuser, Kruse, Hoffmann, & König, 2006), and videos (data from Itti & Baldi, 2006). In fact, simply using a Gaussian blob centered in the middle of the image as the saliency map produces excellent results, consistent with findings from Le Meur, Le Callet, and Barba (2007). For example, on the data set collected in (Bruce & Tsotsos, 2006), we found that a Gaussian blob fitted to the human eye fixations for that set has an ROC area of 0.80, exceeding the reported results of 0.75 (in Bruce & Tsotsos, 2006) and 0.77 (in Gao & Vasconcelos, 2005) on this data set.

Figure 4.

Plots of all human eye fixation locations in three data sets. Left: subjects viewing color images (Bruce & Tsotsos, 2006); middle: subjects viewing gray images (Einhäuser et al., 2006); right: subjects viewing color videos (Itti & Baldi, 2006).

KL divergence

Itti and colleagues make use of the Kullback–Leibler (KL) divergence between the histogram of saliency sampled at eye fixations and that sampled at random locations as the evaluation metric for their dynamic saliency (Itti & Baldi, 2005, 2006). If a saliency algorithm performs significantly better than chance, the saliency computed at human-fixated locations should be higher than that computed at random locations, leading to a high KL divergence between the two histograms. This KL divergence, similar to the ROC measurement, has the desired property of transformation invariance-applying a continuous monotonic function (such as logarithm) to the saliency values would not affect scoring (Itti & Baldi, 2006). In (Itti & Baldi, 2005, 2006), the random locations are drawn from a uniform spatial distribution over each image frame. Like the ROC performance measurement, the KL divergence awards excellent performance to a simple Gaussian blob due to the center bias of the human fixations. The Gaussian blob discussed earlier, trained on the (Bruce & Tsotsos, 2006) data, yields a KL divergence of 0.44 on the data set of Itti and Baldi (2006), exceeding their reported result of 0.24. Thus, both the ROC area and KL divergence measurements are strongly sensitive to the effects of the center bias.

Edge effects

These findings imply that models that make use of a location prior (discussed in the section on the SUN model) would better model human behavior. Since all of the aforementioned models (Bruce & Tsotsos, 2006; Gao & Vasconcelos, 2007; Itti & Koch, 2000; Itti et al., 1998) calculate saliency at each pixel without regard to the pixel’s location, it would appear that both the ROC area measurement and the KL divergence provide a fair comparison between models since no model takes advantage of this additional information.

However, both measures are corrupted by an edge effect due to variations in the handling of invalid filter responses at the borders of images. When an image filter lies partially off the edge of an image, the filter response is not well defined, and various methods are used to deal with this problem. This is a well-known artifact of correlation, and it has even been discussed before in the visual attention literature (Tsotsos et al., 1995). However, its significance for model evaluation has largely been overlooked.

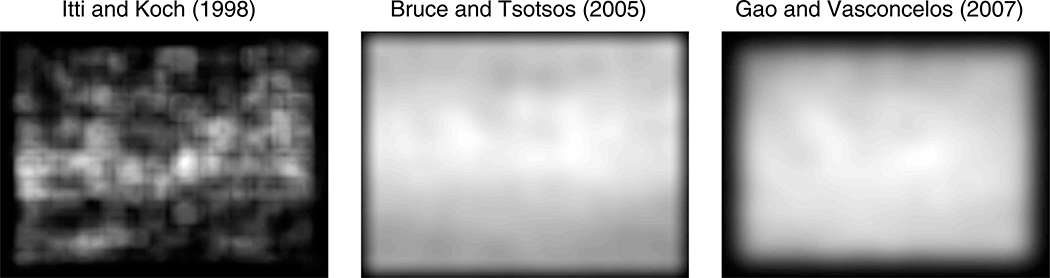

Figure 5 shows the average of all of the image saliency maps using each of the algorithms of (Bruce & Tsotsos, 2006; Gao & Vasconcelos, 2007; Itti & Koch, 2001) on the data set of Bruce and Tsotsos (2006). It is clear from Figure 5 that all three algorithms have borders with decreased saliency but to varying degrees. These border effects introduce an implicit center bias on the saliency maps; “cool borders” result in the bulk of salience being located at the center of the image. Because different models are affected by these edge effects to varying degrees, it is difficult to determine using the previously described measures whether the difference in performance between models is due to the models themselves, or merely due to edge effects.4

Figure 5.

The average saliency maps of three recent algorithms on the stimuli used in collecting human fixation data by Bruce and Tsotsos (2006). Averages were taken across the saliency maps for the 120 color images. The algorithms used are, from left to right, Bruce and Tsotsos (2006), Gao and Vasconcelos (2007), and Itti et al. (1998). All three algorithms exhibit decreased saliency at the image borders, an artifact of the way they deal with filters that lie partially off the edge of the images.

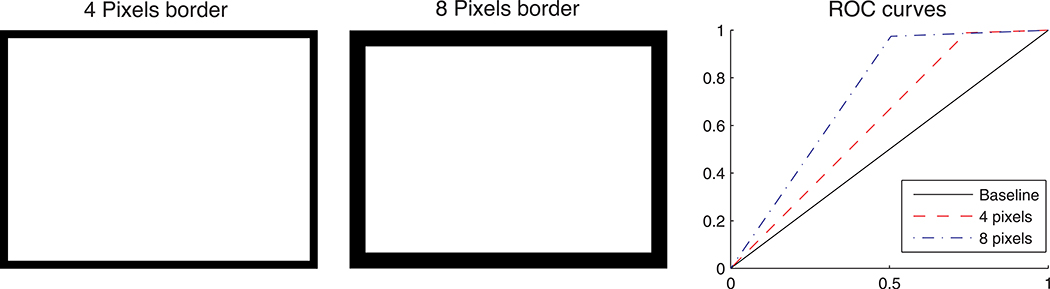

Figure 6 illustrates the impact that varying amounts of edge effects can have on the ROC area evaluation score by examining the performance of dummy saliency maps that are all 1’s except for a border of 0’s. The map with a four-pixel border yields an ROC area of 0.62, while the map with an eight-pixel border has an area of 0.73. All borders are small relative to the 120 × 160 pixel saliency map, and for these measurements, we assume that the border points are never fixated by humans, which corresponds well with actual human fixation data. A dummy saliency map of all 1’s with no border has a baseline ROC area of 0.5.

Figure 6.

Illustration of edge effects on performance. Left: a saliency map of size 120 × 160 that consists of all 1’s except for a four-pixel-wide border of 0’s. Center: a saliency map of size 120 × 160 that consists of all 1’s except for an eight-pixel-wide border of 0’s. Right: the ROC curves of these two dummy saliency maps, as well as for a baseline saliency map (all 1’s). The ROC areas for these two curves are 0.62 and 0.73, respectively. (The baseline ROC area is 0.5.)

The KL measurement, too, is quite sensitive to how the filter responses are dealt with at the edges of images. Since the human eye fixations are rarely near the edges of the test images, the edge effects primarily change the distribution of saliency of the random samples. For the dummy saliency maps used in Figure 6, the baseline map (of all 1’s) gives a KL divergence of 0, the four-pixel-border map gives a KL divergence of 0.12, and the eight-pixel-border map gives a KL divergence of 0.25.

While this dummy example presents a somewhat extreme case, we have found that in comparing algorithms on real data sets (using the ROC area, the KL divergence, and other measures), the differences between algorithms are dwarfed by differences due to how borders are handled. Model evaluation and comparisons between models using these metrics are common throughout the saliency literature, and these border effects appear to distort these metrics such that they are of little use.

Eliminating border effects

Parkhurst and Niebur (2003) and Tatler et al. (2005) have pointed out that random locations should be drawn from the distribution of actual human eye fixations. In this paper, we measure the KL divergence between two histograms: the histogram of saliency at the fixated pixels of a test image and the histogram of saliency at the same pixel locations but of a randomly chosen image from the test set (effectively shuffling the saliency maps with respect to the images). This method of comparing models has several desired properties. First, it avoids the aforementioned problem that a static saliency map (such as a centered Gaussian blob) can receive a high score even though it is completely independent of the input image. By shuffling the saliency maps, any static saliency map will give a KL divergence of zero—for a static saliency map, shuffling has no effect, and the salience values at the human-fixated pixels are identical to those from the same pixel locations at a random image. Second, shuffling saliency maps also diminishes the effect of variations in how borders are handled since few eye fixations are located near the edges. These properties are also true of Tatler’s version of the ROC area metric described previously—rather than compare the saliency of attended points for the current image against the saliency of unattended points for that image, he compares the saliency of attended points against the saliency in that image for points that are attended during different images from the test set. Like our modified KL evaluation, Tatler’s ROC method compares the saliency of attended points against a baseline based on the distribution of human saccades rather than the uniform distribution. We assess SUN’s performance using both of these methods to avoid problems of the center bias.

The potential problem with both these methods is that because photos taken by humans are often centered on interesting objects, the center is often genuinely more salient than the periphery. As a result, shuffling saliency maps can bias the random samples to be at more salient locations, which leads to an underestimate of a model’s performance (Carmi & Itti, 2006). However, this does not affect the validity of this evaluation measurement for comparing the relative performance of different models, and its properties make for a fair comparison that is free from border effects.

Performance

We evaluate our bottom-up saliency algorithm on human fixation data (from Bruce & Tsotsos, 2006). Data were collected from 20 subjects free-viewing 120 color images for 4 seconds each. As described in the Implementation section, we calculated saliency maps for each image using DoG filters (Method 1) and linear ICA features (Method 2). We also obtained saliency maps for the same set of images using the algorithms of Itti et al. (1998), obtained from Bruce and Tsotsos,5 Bruce and Tsotsos (2006), implemented by the original authors,6 and Gao and Vasconcelos (2007), implemented by the original authors. The performance of these algorithms evaluated using the measures described above is summarized in Table 1. For the evaluation of each algorithm, the shuffling of the saliency maps is repeated 100 times. Each time, KL divergence is calculated between the histograms of unshuffled saliency and shuffled saliency on human fixations. When calculating the area under the ROC curve, we also use 100 random permutations. The mean and the standard errors are reported in the table.

Table 1.

Performance in predicting human eye fixations when viewing color images. Comparison of our SUN algorithm (Method 1 using DoG filters and Method 2 using linear ICA features) with previous algorithms. The KL divergence metric measures the divergence between the saliency distributions at human fixations and at randomly shuffled fixations (see text for details); higher values therefore denote better performance. The ROC metric measures the area under the ROC curve formed by attempting to classify points attended on the current image versus points attended in different images from the test set based on their saliency (Tatler et al., 2005).

| Model | KL(SE) | ROC(SE) |

|---|---|---|

| Itti et al. (1998) | 0.1130 (0.0011) | 0.6146 (0.0008) |

| Bruce and Tsotsos (2006) | 0.2029 (0.0017) | 0.6727 (0.0008) |

| Gao and Vasconcelos (2007) | 0.1535 (0.0016) | 0.6395 (0.0007) |

| SUN: Method 1 (DoG filters) | 0.1723 (0.0012) | 0.6570 (0.0007) |

| SUN: Method 2 (ICA filters) | 0.2097 (0.0016) | 0.6682 (0.0008) |

The results show that SUN with DoG filters (Method 1) significantly outperforms Itti and Koch’s algorithm ( p < 10−57) and Gao and Vasconcelos’ (2007) algorithm ( p < 10−14), where significance was measured with a two-tailed t-test over different random shuffles using the KL metric. Between Method 1 (DoG features) and Method 2 (ICA features), the ICA features work significantly better ( p < 10−32). There are further advantages to using ICA features: efficient coding has been proposed as one of the fundamental goals of the visual system (Barlow, 1994), and linear ICA has been shown to generate receptive fields akin to those found in primary visual cortex (V1) (Bell & Sejnowski, 1997; Olshausen & Field, 1996). In addition, generating the feature set using natural image statistics means that both the feature set and the distribution over features can be calculated simultaneously. However, it is worth noting that the online computations for Method 1 (using DoG features) take significantly less time since only 12 DoG features are used compared to 362 ICA features in Method 2. There is thus a trade off between efficiency and performance in our two methods. The results are similar (but less differentiated) using the ROC area metric of Tatler et al. (2005).

SUN with linear ICA features (Method 2) performs significantly better than Bruce and Tsotsos’ algorithm ( p = 0.0035) on this data set by the KL metric, and worse by the ROC metric, although in both cases the scores are numerically quite close. This similarity in performance is not surprising, for two reasons. First, since both algorithms construct their feature sets using ICA, the feature sets are qualitatively similar. Second, although SUN uses the statistics learned from a training set of natural images whereas Bruce and Tsotsos (2006) calculate these statistics using only the current test image, the response distribution for a low-level feature on a single image of a complex natural scene will generally be close to overall natural scene statistics. However, the results clearly show that SUN is not penalized by breaking from the standard assumption that saliency is defined by deviation from one’s neighbors; indeed, SUN actually performs at the state of the art. In the next section, we’ll argue why SUN’s use of natural statistics is actually preferable to methods that only use local image statistics.

Discussion

In this paper, we have derived a theory of saliency from the simple assumption that a goal of the visual system is to find potential targets such as prey and predators. Based on a probabilistic description of this goal, we proposed that bottom-up saliency is the self-information of visual features and that overall saliency is the pointwise mutual information between the visual features and the desired target. Here, we have focused on the bottom-up component. The use of self-information as a measure of bottom-up saliency provides a surface similarity between our SUN model and some existing models (Bruce & Tsotsos, 2006; Oliva et al., 2003; Torralba et al., 2006), but this belies fundamental differences between our approach and theirs. In this section, we explain that the core motivating intuitions behind SUN lead to a use of different statistics, which better account for a number of human visual search asymmetries.

Test image statistics vs. natural scene statistics

Comparison with previous work

All of the existing bottom-up saliency models described in the Previous work section compute saliency by comparing the feature statistics at a point in a test image with either the statistics of a neighborhood of the point or the statistics of the entire test image. When calculating the saliency map of an image, these models only consider the statistics of the current test image. In contrast, SUN’s definition of saliency (derived from a simple intuitive assumption about a goal of the visual system) compares the features observed at each point in a test image to the statistics of natural scenes. An organism would learn these natural statistics through a lifetime of experience with the world; in the SUN algorithm, we obtained them from a collection of natural images.

SUN’s formula for bottom-up saliency is similar to the one in the work of Oliva and colleagues (Oliva et al., 2003; Torralba et al., 2006) and the one in (Bruce & Tsotsos, 2006) in that they are all based on the notion of self-information. However, the differences between current image statistics and natural statistics lead to radically different kinds of self-information. Briefly, the motivation for using self-information with the statistics of the current image is that a foreground object is likely to have features that are distinct from the features of the background. The idea that the saliency of an item is dependent on its deviation from the average statistics of the image can find its roots in the visual search model proposed in (Rosenholtz, 1999), which accounted for a number of motion pop-out phenomena, and can be seen as a generalization of the center-surround-based saliency found in Koch and Ullman (1985). SUN’s use of natural statistics for self-information, on the other hand, corresponds to the intuition that since targets are observed less frequently than background during an organism’s lifetime; rare features are more likely to indicate targets. The idea that infrequent features attract attention has its origin in findings that novelty attracts the attention of infants (Caron & Caron, 1968; Fagan, 1970; Fantz, 1964; Friedman, 1972) and that novel objects are faster to find in visual search tasks (for a review, see Wolfe, 2001). This fundamental difference in motivation between SUN and existing saliency models leads to very different predictions about what attracts attention.

In the following section, we show that by using natural image statistics, SUN provides a simple explanation for a number of psychophysical phenomena that are difficult to account for using the statistics of either a local neighborhood in the test image or the entire test image. In addition, since natural image statistics are computed well in advance of the test image presentation, in the SUN algorithm the estimation of saliency is strictly local and efficient.

Visual search asymmetry

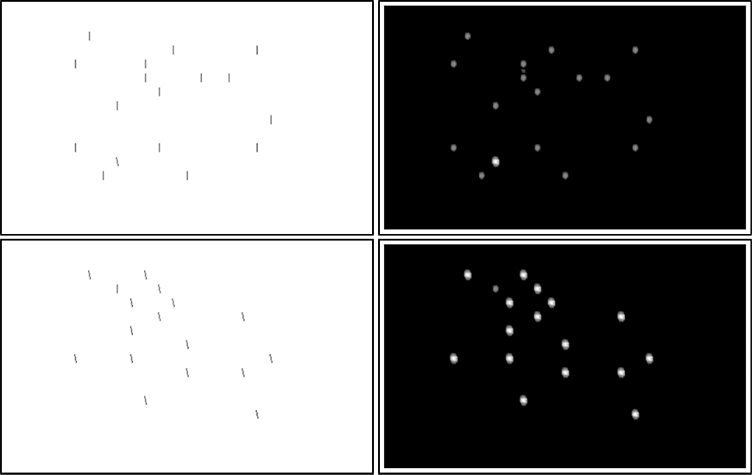

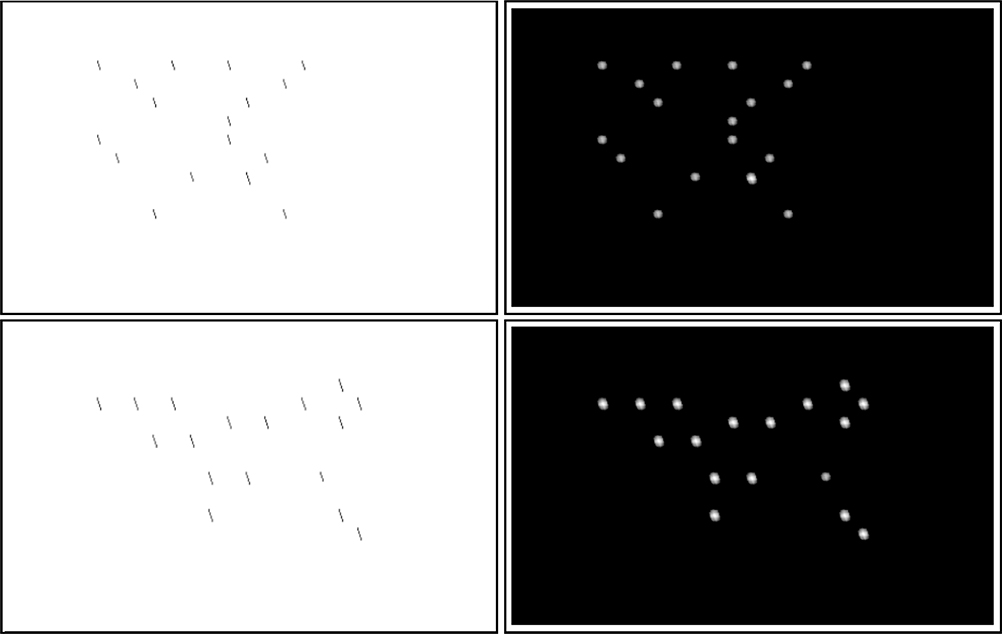

When the probability of a feature is based on the distribution of features in the current test image, as in previous saliency models, a straightforward consequence is that if all items in an image are identical except for one, this odd item will have the highest saliency and thus attract attention. For example, if an image consists of a number of vertical bars with one bar that is slightly tilted from the vertical, the tilted bar “pops out” and attracts attention almost instantly (Treisman & Gormican, 1988); see Figure 7, top left, for an illustration. If, on the other hand, an image consists of a number of slightly-tilted-from-vertical bars with one vertical bar, saliency based on the statistics of the current image predicts the same pop-out effect for the vertical bar. However, this simply is not the case, as humans do not show the same pop-out effect: it requires more time and effort for humans to find a vertical bar within a sea of tilted bars (Treisman & Gormican, 1988); see Figure 7, bottom left, for an illustration. This is known in the visual search literature as a search asymmetry, and this particular example corresponds to findings that “prototypes do not pop out” because the vertical is regarded as a prototypical orientation (Treisman & Gormican, 1988; Treisman & Souther, 1985; Wolfe, 2001).

Figure 7.

Illustration of the “prototypes do not pop out” visual search asymmetry (Treisman & Gormican, 1988). Here we see both the original image (left) and a saliency map for that image computed using SUN with ICA features (right). Top row: a tilted bar in a sea of vertical bars pops out-the tilted bar can be found almost instantaneously. Bottom row: a vertical bar in sea of tilted bars does not pop out. The bar with the odd-one-out orientation in this case requires more time and effort for subjects to find than in the case illustrated in the image in the top row. This psychophysical asymmetry is in agreement with the calculated saliency maps on the right, in which the tilted bars are more salient than the vertical bars.

Unlike saliency measures based on the statistics of the current image or of a neighborhood in the current image, saliency based on natural statistics readily predicts this search asymmetry. The vertical orientation is prototypical because it occurs more frequently in natural images than the tilted orientation (van der Schaaf & van Hateren, 1996). As a result, the vertical bar will have smaller salience than the surrounding tilted bars, so it will not attract attention as strongly.

Another visual search asymmetry exhibited by human subjects involves long and short line segments. Saliency measures based on test image statistics or local neighborhood statistics predict that a long bar in a group of short bars (illustrated in the top row of Figure 8) should be as salient as a short bar in a group of long bars (illustrated in the bottom row in Figure 8). However, it has been shown that humans find a long bar among short bar distractors much more quickly than they find a short bar among long bars (Treisman & Gormican, 1988). Saliency based on natural statistics readily predicts this search asymmetry, as well. Due to scale invariance, the probability distribution over the lengths of line segments in natural images follows the power law (Ruderman, 1994). That is, the probability of the occurrence of a line segment of length v is given by p(V = v) ∝ 1/v. Since longer line segments have lower probability in images of natural scenes, the SUN model implies that longer line segments will be more salient.

Figure 8.

Illustration of a visual search asymmetry with line segments of two different lengths (Treisman & Gormican, 1988). Shown are the original image (left) and the corresponding saliency map (right) computed using SUN with ICA features (Method 2). Top row: a long bar is easy to locate in a sea of short bars. Bottom row: a short bar in a sea of long bars is harder to find. This corresponds with the predictions from SUN’s saliency map, as the longer bars have higher saliency.

We can demonstrate that SUN predicts these search asymmetries using the implemented model described in the Implementation section. Figures 7 and 8 show saliency maps produced by SUN with ICA features (Method 2). For ease of visibility, the saliency maps have been thresholded. For the comparison of short and long lines, we ensured that all line lengths were less than the width of the ICA filters. The ICA filters used were not explicitly designed to be selective for orientation or size, but their natural distribution is sensitive to both these properties. As Figures 7 and 8 demonstrate, SUN clearly predicts both search asymmetries described here.

Visual search asymmetry is also observed for higher-level stimuli such as roman letters, Chinese characters, animal silhouettes, and faces. For example, people are faster to find a mirrored letter in normal letters than the reverse (Frith, 1974). People are also faster at searching for an inverted animal silhouette in a sea of upright silhouettes than the reverse (Wolfe, 2001) and faster at searching for an inverted face in a group of upright faces than the reverse (Nothdurft, 1993). These phenomena have been referred to as “the novel target is easier to find.” Here, “novel” means that subjects have less experience with the stimulus, indicating a lower probability of encounter during development. This corresponds well with SUN’s definition of bottom-up saliency, as items with novel features are more salient by definition.

If the saliency of an item depends upon how often it has been encountered by an organism, then search asymmetry should vary among people with different experience with the items involved. This seems to indeed be the case. Modified/inverted Chinese characters in a sea of real Chinese characters are faster to find than the reverse situation for Chinese readers, but not for non-Chinese readers (Shen & Reingold, 2001; Wang, Cavanagh, & Green, 1994). Levin (1996) found an “other-race advantage” as American Caucasians are faster to search for an African American face among Caucasian faces than to search for a Caucasian face among African American faces. This is consistent with what SUN would predict for American Caucasian subjects that have more experience with Caucasian faces than with African American faces. In addition, Levin found that Caucasian basketball fans who are familiar with many African American basketball players do not show this other-race search advantage (Levin, 2000). These seem to provide direct evidence that experience plays an important role in saliency (Zhang et al., 2007), and the statistics of the current image alone cannot account for these phenomena.

Efficiency comparison with existing saliency models

Table 2 summarizes some computational components of several algorithms previously discussed. Computing feature statistics in advance using a data set of natural images allows the SUN algorithm to compute saliency of a new image quickly compared with algorithms that require calculations of statistics on the current image. In addition, SUN requires strictly local operations, which is consistent with implementation in the low levels of the visual system.

Table 2.

Some computational components of saliency algorithms. Notably, our SUN algorithm requires only offline probability distribution estimation and no global computation over the test image in calculating saliency.

| Model | Statistics calculated using | Global operations | Statistics calculated on image |

|---|---|---|---|

| Itti et al. (1998) | N/A | Sub-map normalization | None |

| Bruce and Tsotsos (2006) | Current image | Probability estimation | Once for each image |

| Gao and Vasconcelos (2007) | Local region of current image | None | Twice for each pixel |

| SUN | Training set of natural images (pre-computed offline) | None | None |

Higher-order features

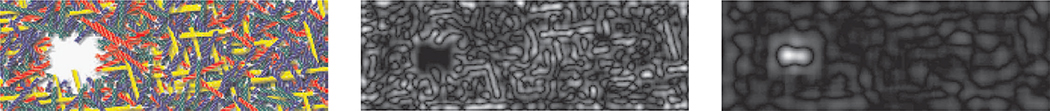

The range of visual search asymmetry phenomena described above seem to suggest that the statistics of observed visual features are estimated by the visual system at many different levels, including basic features such as color and local orientation as well as higher-level features. The question of exactly what feature set is employed by the visual system is beyond the scope of this paper. In the current implementation of SUN, we only consider linear filter responses as features for computational efficiency. This use of linear features (DoG or linear ICA features) causes highly textured areas to have high saliency, a characteristic shared with complexity-based algorithms (Chauvin et al., 2002; Kadir & Brady, 2001; Renninger et al., 2004; Yamada & Cottrell, 1995). In humans, however, it is often not the texture itself but a change in texture that attracts attention. Saliency algorithms that use local region statistics, such as (Gao & Vasconcelos, 2007), address this problem explicitly.

Our SUN model could resolve this problem implicitly by using a higher-order non-linear feature space. Whereas linear ICA features learned from natural images respond to discontinuities in illumination or color, higher-order non-linear ICA features are found to respond to discontinuity in textures (Karklin & Lewicki, 2003; Osindero, Welling, & Hinton, 2006; Shan, Zhang, & Cottrell, 2007). Figure 9 shows an image of birthday candles, the response of a linear DoG filter to that image, and the response of a non-linear feature inspired by the higher-order ICA features learned in (Shan et al., 2007). Perceptually, the white hole in the image attracts attention (Bruce & Tsotsos, 2006). Whereas the linear feature has zero response to this hole, the higher-order feature responds strongly in this region. In future work, we will explore the use of the higher order features from (Shan et al., 2007). These higher-order features are completely determined by the same natural statistics that form the basis of our model, thus avoiding the danger that feature sets would be selected to fit the model to the data.

Figure 9.

Demonstration that non-linear features could capture discontinuity of textures without using a statistical model that explicitly measures the local statistics. Left: the input image, adapted from (Bruce & Tsotsos, 2006). Middle: the response of a linear DoG filter. Right: the response of a non-linear feature. The non-linear feature is constructed by applying a DoG filter, then non-linearly transforming the output before another DoG is applied (see Shan et al., 2007, for details on the non-linear transformation). Whereas the linear feature has zero response to the white hole in the image, the non-linear feature responds strongly in this region, consistent with the white region’s perceptual salience.

Conclusions

Based on the intuitive assumption that one goal of the visual system is to find potential targets, we derived a definition of saliency in which overall visual saliency is the pointwise mutual information between the observed visual features and the presence of a target, and bottom-up saliency is the self-information of the visual features. Using this definition, we developed a simple algorithm for bottom-up saliency that can be expressed in a single equation (Equation 17). We applied this algorithm using two different set of features, difference of Gaussians (DoG) and ICA-derived features, and compared the performance to several existing bottom-up saliency algorithms. Not only does SUN perform as well as or better than the state-of-the-art algorithms, but it is also more computationally efficient. In the process of evaluation, we also revealed a critical flaw in the methods of assessment that are commonly used, which results from their sensitivity to border effects.

In its use of self-information to measure bottom-up saliency, SUN is similar to the algorithms in (Bruce & Tsotsos, 2006; Oliva et al., 2003; Torralba et al., 2006), but stems from a different set of intuitions and is calculated using different statistics. In SUN, the probability distribution over features is learned from natural statistics (which corresponds to an organism’s visual experience over time), whereas the previous saliency models compute the distribution over features from each individual test image. We explained that several search asymmetries that may pose difficulties for models based on test image statistics can be accounted for when feature probabilities are obtained from natural statistics.

We do not claim that SUN attempts to be a complete model of eye movements. As with many models of visual saliency, SUN is likely to function best for short durations when the task is simple (it is unlikely to model well the eye movements during Hayhoe’s sandwich-making task (Hayhoe & Ballard, 2005), for instance). Nor is the search for task-relevant targets the sole goal of the visual system-once the location of task- or survival-relevant targets are known, eye movements also play other roles in which SUN is unlikely to be a key factor. Our goal here was to show that natural statistics, learned over time, play an important role in the computation of saliency.

In future work, we intend to incorporate the higher-level non-linear features described in the Discussion section. In addition, our definition of overall saliency includes a top-down term that captures the features of a target. Although this is beyond the scope of the present paper, we plan to examine top-down influences on saliency in future research; preliminary work with images of faces shows promise. We also plan to extend the implementation of SUN from static images into the domain of video.

Acknowledgments

The authors would like to thank Neil D. Bruce and John K. Tsotsos for sharing their human fixation data and simulation results; Dashan Gao and Nuno Vasconcelos for sharing their simulation results; Laurent Itti and Pierre Baldi for sharing their human fixation data; and Wolfgang Einhäuser-Treyer and colleagues for sharing their human fixation data. We would also like to thank Dashan Gao, Dan N. Hill, Piotr Dollar, and Michael C. Mozer for helpful discussions, and everyone in GURU (Gary’s Unbelievable Research Unit), PEN (the Perceptual Expertise Network), and the reviewers for helpful comments. This work is supported by the NIH (grant #MH57075 to G.W. Cottrell), the James S. McDonnell Foundation (Perceptual Expertise Network, I. Gauthier, PI), and the NSF (grant #SBE-0542013 to the Temporal Dynamics of Learning Center, G.W. Cottrell, PI and IGERT Grant #DGE-0333451 to G.W. Cottrell/V.R. de Sa.).

Footnotes

Commercial relationships: none.

These independence assumptions do not generally hold (they could be relaxed in future work). For example, illumination is not invariant to location: as sunshine normally comes from above, the upper part of the visual field is likely to be brighter. But illumination contrast features, such as the responses to DoG (Difference of Gaussians) filters, will be more invariant to location changes.

Equation 13 is adopted from the function filter_DOG_2D, from Image Video toolbox for Matlab by Piotr Dollar. The toolbox can be found at http://vision.ucsd.edu/~pdollar/toolbox/doc/.

The training image patches are considered as 11 × 11 × 3 = 363-dimensional vectors, z-scored to have zero mean and unit standard deviation, then processed by principal component analysis (where one dimension is lost due to mean subtraction).

When comparing different feature sets within the same model, edge effects can also make it difficult to assess which features are best to use; larger filters result in a smaller valid image after convolution, which can artificially boost performance.

The saliency maps that produce the score for Itti et al. in Table 1 come from Bruce and Tsotsos (2006) and were calculated using the online Matlab saliency toolbox (http://www.saliencytoolbox.net/index.html) using the parameters that correspond to (Itti et al., 1998). Using the default parameters of this online toolbox generates inferior binary-like saliency maps that give a KL score of 0.1095 (0.00140).

The results reported in (Bruce & Tsotsos, 2006) used ICA features of size 7 × 7. The results reported here, obtained from Bruce and Tsotsos, used features of size 11 × 11, which they say achieved better performance.

Contributor Information

Lingyun Zhang, Department of Computer Science and Engineering, UCSD, La Jolla, CA, USA.

Matthew H. Tong, Department of Computer Science and Engineering, UCSD, La Jolla, CA, USA

Tim K. Marks, Department of Computer Science and Engineering, UCSD, La Jolla, CA, USA

Honghao Shan, Department of Computer Science and Engineering, UCSD, La Jolla, CA, USA.

Garrison W. Cottrell, Department of Computer Science and Engineering, UCSD, La Jolla, CA, USA

References

- Barlow H (1994). What is the computational goal of the neocortex? In Koch C (Ed.), Large scale neuronal theories of the brain (pp. 1–22). Cambridge, MA: MIT Press. [Google Scholar]

- Bell AJ, & Sejnowski TJ (1997). The “independent components” of natural scenes are edge filters. Vision Research, 37, 3327–3338. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bruce N, & Tsotsos J (2006). Saliency based on information maximization In Weiss Y, Schölkopf B, & Platt J (Eds.), Advances in neural information processing systems 18 (pp. 155–162). Cambridge, MA: MIT Press. [Google Scholar]

- Bundesen C (1990). A theory of visual attention. Psychological Review, 97, 523–547. [DOI] [PubMed] [Google Scholar]

- Carmi R, & Itti L (2006). The role of memory in guiding attention during natural vision. Journal of Vision, 6(9):4, 898–914, http://journalofvision.org/6/9/4/, doi: 10.1167/6.9.4. [Article] [DOI] [PubMed] [Google Scholar]

- Caron RF, & Caron AJ (1968). The effects of repeated exposure and stimulus complexity on visual fixation in infants. Psychonomic Science, 10, 207–208. [Google Scholar]

- Chauvin A, Herault J, Marendaz C, & Peyrin C (2002). Natural scene perception: Visual attractors and image processing In Lowe W & Bullinaria JA (Eds.), Connectionist models of cognition and perception (pp. 236–248). Singapore: World Scientific. [Google Scholar]

- Einhäuser W, Kruse W, Hoffmann KP, & König P (2006). Differences of monkey and human overt attention under natural conditions. Vision Research, 46, 1194–1209. [DOI] [PubMed] [Google Scholar]

- Eriksen CW, & St James JD (1986). Visual attention within and around the field of focal attention: A zoom lens model. Perception & Psychophysics, 40, 225–240. [DOI] [PubMed] [Google Scholar]

- Fagan JF III. (1970). Memory in the infant. Journal of Experimental Child Psychology, 9, 217–226. [DOI] [PubMed] [Google Scholar]

- Fantz RL (1964). Visual experience in infants: Decreased attention to familiar patterns relative to novel ones. Science, 146, 668–670. [DOI] [PubMed] [Google Scholar]

- Findlay JM, & Gilchrist ID (2003). Active vision: A psychology of looking and seeing. New York: Oxford University Press. [Google Scholar]

- Friedman S (1972). Habituation and recovery of visual response in the alert human newborn. Journal of Experimental Child Psychology, 13, 339–349. [DOI] [PubMed] [Google Scholar]

- Frith U (1974). A curious effect with reversed letters explained by a theory of schema. Perception & Psychophysics, 16, 113–116. [Google Scholar]

- Gao D, & Vasconcelos N (2004). Discriminant saliency for visual recognition from cluttered scenes In Saul LK, Weiss Y, & Bottou L (Eds.), Advances in neural information processing systems 17 (pp. 481–488). Cambridge, MA: MIT Press. [Google Scholar]

- Gao D, & Vasconcelos N (2005). Integrated learning of saliency, complex features, and object detectors from cluttered scenes. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05) (vol. 2, pp. 282–287). Washington, DC: IEEE Computer Society. [Google Scholar]

- Gao D, & Vasconcelos N (2007). Bottom-up saliency is a discriminant process. In IEEE International Conference on Computer Vision (ICCV’07) Rio de Janeiro, Brazil. [Google Scholar]

- Harel J, Koch C, & Perona P (2007). Graph-based visual saliency In Advances in neural information processing systems 19 Cambridge, MA: MIT Press. [Google Scholar]

- Hayhoe M, & Ballard D (2005). Eye movements in natural behavior. Trends in Cognitive Sciences, 9, 188–194. [DOI] [PubMed] [Google Scholar]

- Hyvärinen A, & Oja E (1997). A fast fixed-point algorithm for independent component analysis. Neural Computation, 9, 148–1492. [Google Scholar]

- Itti L, & Baldi P (2005). A principled approach to detecting surprising events in video. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05) (vol. 1, pp. 631–637). Washington, DC: IEEE Computer Society. [Google Scholar]

- Itti L, & Baldi P (2006). Bayesian surprise attracts human attention In Weiss Y, Schölkopf B, & Platt J (Eds.), Advances in neural information processing systems 18 (pp. 1–8). Cambridge, MA: MIT press. [Google Scholar]

- Itti L, & Koch C (2000). A saliency-based search mechanism for overt and covert shifts of visual attention. Vision Research, 40, 1489–1506. [DOI] [PubMed] [Google Scholar]

- Itti L, & Koch C (2001). Computational modeling of visual attention. Nature Reviews, Neuroscience, 2, 194–203. [DOI] [PubMed] [Google Scholar]

- Itti L, Koch C, & Niebur E (1998). A model of saliency-based visual attention for rapid scene analysis. IEEE Transactions on Pattern Analysis and Machine Intelligence, 20, 1254–1259. [Google Scholar]

- Kadir T, & Brady M (2001). Saliency, scale and image description. International Journal of Computer Vision, 45, 83–105. [Google Scholar]

- Karklin Y, & Lewicki MS (2003). Learning higher-order structures in natural images. Network, 14, 483–499. [PubMed] [Google Scholar]

- Kienzle W, Wichmann FA, Schölkopf B, & Franz MO (2007). A nonparametric approach to bottom-up visual saliency In Schölkopf B, Platt J, & Hoffman T (Eds.), Advances in neural information processing systems 19 (pp. 689–696). Cambridge, MA: MIT Press. [Google Scholar]

- Koch C, & Ullman S (1985). Shifts in selective visual attention: Towards the underlying neural circuitry. Human Neurobiology, 4, 219–227. [PubMed] [Google Scholar]

- Le Meur O, Le Callet P, & Barba D (2007). Predicting visual fixations on video based on low-level visual features. Vision Research, 47, 2483–2498. [DOI] [PubMed] [Google Scholar]

- Levin D (1996). Classifying faces by race: The structure of face categories. Journal of Experimental Psychology: Learning, Memory, and Cognition, 22, 1364–1382. [Google Scholar]

- Levin DT (2000). Race as a visual feature: Using visual search and perceptual discrimination tasks to understand face categories and the cross-race recognition deficit. Journal of Experimental Psychology: General, 129, 559–574. [DOI] [PubMed] [Google Scholar]

- Nothdurft HC (1993). Faces and facial expressions do not pop out. Perception, 22, 1287–1298. [DOI] [PubMed] [Google Scholar]

- Oliva A, Torralba A, Castelhano M, & Henderson J (2003). Top-down control of visual attention in object detection In Proceedings of International Conference on Image Processing (pp. 253–256). Barcelona, Catalonia: IEEE Press. [Google Scholar]

- Olshausen BA, & Field DJ (1996). Emergence of simple-cell receptive field properties by learning a sparse code for natural images. Nature, 381, 607–609. [DOI] [PubMed] [Google Scholar]

- Osindero S, Welling M, & Hinton GE (2006). Topographic product models applied to natural scene statistics. Neural Computation, 18, 381–414. [DOI] [PubMed] [Google Scholar]

- Parkhurst D, Law K, & Niebur E (2002). Modeling the role of salience in the allocation of overt visual attention. Vision Research, 42, 107–123. [DOI] [PubMed] [Google Scholar]

- Parkhurst DJ, & Niebur E (2003). Scene content selected by active vision. Spatial Vision, 16, 125–154. [DOI] [PubMed] [Google Scholar]