Abstract

Recent technological developments in mobile brain and body imaging are enabling new frontiers of real-world neuroscience. Simultaneous recordings of body movement and brain activity from highly skilled individuals, as they demonstrate their exceptional skills in real-world settings, can shed new light on the neurobehavioural structure of human expertise. Driving is a real-world skill which many of us acquire to different levels of expertise. Here we ran a case study on a subject with the highest level of driving expertise: a Formula E Champion. We studied the driver’s neural and motor patterns while he drove a sports car on the “Top Gear” race track under extreme conditions (high speed, low visibility, low temperature, wet track). His brain activity, eye movements and hand/foot movements were recorded. Brain activity in the delta, alpha, and beta frequency bands showed a Granger-causal relation to hand movements. We herein demonstrate the feasibility of using mobile brain and body imaging even in very extreme conditions (race car driving) to study the sensory inputs, motor outputs, and brain states which characterise complex human skills.

Subject terms: Motor control, Sensorimotor processing

Introduction

One of the hallmarks of being human is our unique ability to develop skills and expertise. While all animals develop skills like walking, running, fruit picking or hunting, we as humans can develop a much broader and more diverse set of skills. With practice, most of us can learn to play a musical instrument, play a sport, or do arts and crafts. Nevertheless, only some of us reach the highest level of expertise. Contrary to the widespread view that this is entirely driven by practice1, there is accumulating evidence that practice is not enough2, which makes the individual musicians, artists, athletes, and craftspeople who take expertise to new heights of particular research interest. Novel technology for mobile brain and body imaging now enables us to study neurobehaviour in real-world settings3–5. When carried out in natural environments, these measures can enable a meaningful understanding of human behaviour during real-life tasks. Studying the relations and inter-dependencies between the brain activity and body movements of experts, while they perform their skills in real-world settings, can help us unpack what sets their expertise apart.

In recent years there has been an accumulating body of literature studying the neural signals associated with expertise, particularly in sports6. EEG studies link expert performance to changes in EEG alpha and beta rhythms. However, most of these studies use lab-based tasks, and their findings have therefore had little impact on sports professionals6 (for example, the Go/No-Go task was used to study baseball expertise7). While such studies showed that experts perform better and have more robust EEG inhibition responses, which tells us something about their skill, a lab task is far from the expert skill itself. Other studies addressed expertise in a real task with a trial-by-trial design. For example, expert rifle shooters exhibited a longer quiet-eye period before shooting and an increased asymmetry in alpha and beta power (an increase in the left hemisphere and a decrease in the right hemisphere) during the preparatory period8. Also, expert golf players show a greater reduction in EEG theta, high-alpha, and beta power during action preparation9. Measuring and interpreting the neurobehaviour of expertise during the continuous performance of a real-world task is the next challenge. Here we present a case study in the real-world neuroscience of expertise, measuring the brain activity and body movement of a professional race car driver (Formula E Champion) while driving under extreme conditions (high speed, low visibility and a slippery road). Driving is a skill that most of us acquire and use in daily life. Previous literature on driving mostly addressed it as such an everyday skill, and thus focused on evaluating brain signals, eye gaze, or body movements in order to measure the driver’s attention10,11, fatigue12–14 and drowsiness15,16, which are significant causes of road accidents. Here, we use driving as an exciting test-bed to demonstrate the feasibility of studying real-world expertise under extreme conditions in-the-wild.
We address the driver’s natural perceptual input (vision), motor output (eye and limb movements) and brain activity comprehensively, in an attempt to assess his neuromotor responses under challenging driving conditions, a hallmark of expertise in race driving. As in any case study, this work is limited in key components of scientific inquiry such as comparison and generalisation. Further work is required to determine whether the reported neural signatures are specific to expertise and to distinguish them from those of non-experts. However, this work highlights a unique neurobehavioural characteristic that could be tested for generalisation in future studies. This study is, in essence, a proof of concept showing the feasibility of real-world neuroscience in-the-wild, even in extreme conditions. The second objective of this study was to characterise the neuromotor behaviour of a highly skilled expert in a high-skill task, performed in real-life conditions outside a laboratory or simulator, thus creating a reference point for future real-world and simulator driving studies with expert and non-expert drivers. A better understanding of expert drivers’ neural and motor interdependencies while facing driving challenges can also potentially foster the development of technologies to prevent critical conditions and improve driving safety, as well as safety procedures for autonomous and semi-autonomous cars.

Methods

Experimental setup

The experiment took place at the Dunsfold Aerodrome (Surrey, UK), commonly known as the Top Gear race track. The driver (co-author) was the Formula E champion Lucas Di Grassi (Audi Sport ABT Schaeffler team), with over 15 years of professional racing experience, including karting, Formula 3, Formula One and Formula E racing. The participant, LDG, is an author on this paper and gave informed consent to participate in the experiment and to publish the information and images in an online open-access publication. All methods used in the study were approved by the Imperial College Research Ethics Committee and performed in accordance with the Declaration of Helsinki; the study was also a self-experimentation by an author17. The test drive was prescheduled for video production purposes by the racing team, who race in these conditions frequently, and it gave us a unique opportunity for this scientific observation of motor expertise in the wild. Although they were unlikely to be needed, emergency response units were present. A promo video of the film by Averner Films18 is accessible here: https://vimeo.com/248167533.

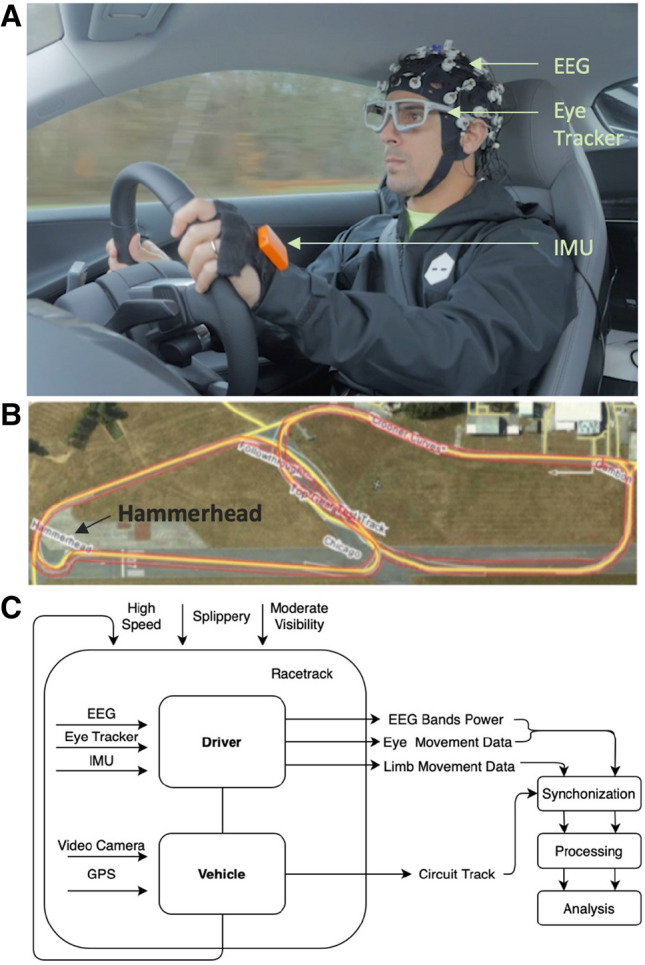

The driver was equipped with: a 32-channel wireless EEG system (LiveAmp, Brain Products GmbH, Germany) with dry electrodes (actiCAP Xpress Twist, Brain Products GmbH, Germany); binocular eye-tracking glasses (SMI ETG 2W A, SensoMotoric Instruments, Germany); and four inertial measurement units (IMUs) on his hands and feet (MTw Awinda, Xsens Technologies BV, The Netherlands), as shown in Fig. 1A. The car was equipped with a GPS and a camera recording the inside of the car. The car’s driver-assistance systems were turned off. The full architecture of the experimental setup is presented in Fig. 1C.

Figure 1.

Experimental setup involving the expert driver (LDG): (A) equipment used (EEG, eye-tracking and body motion tracking systems) and its placement on the driver; (B) the Top Gear racetrack with the highlighted race-critical curve, the Hammerhead; (C) architecture of our data collection system, with driver/car/road parameter inputs and data flows.

The Top Gear race track has specific curve types that subject drivers to different driving challenges. In particular, the Hammerhead curve lies in the south-west of the track (left side of the image in Fig. 1B). As the name suggests, it is a hammerhead-shaped curve, designed to test cars and drivers’ skill because it is technically challenging19. It is considered the critical curve of the track and is used in this work to assess the driver’s performance in challenging scenarios; this extreme curve was selected to highlight features of the driver’s neuromotor behaviour. The car was driven at a mean speed of 120 km/h and a top speed of 178 km/h on a 2.82 km track with 12 curves. The experiment consisted of 6 sets of 2 to 3 laps each, stopping after every set for system check-up and recalibration.

Data processing

All data were pre-processed using custom software written in MATLAB (R2017a, The MathWorks, Inc., MA, USA). During the recording in the car, all data streams were synchronised using the computer’s real-time clock (RTC). We verified the synchronisation offline and corrected for any drifts and offsets by computing temporal cross-correlations. Since the car’s acceleration had an equal effect on the accelerometers of the limb IMUs, the built-in IMU of the GPS and the EEG headset, timestamps were synchronised across all these data streams using the cross-correlation between the accelerations recorded by all systems, and minor offsets between timestamps were corrected for each of the six sets. The gaze data were synchronised to the motion capture sensors (Xsens) using the video from the eye tracker’s egocentric camera, in which the driver’s hands are clearly visible. During the race, the driver was repeatedly in states of almost complete stillness (while driving on a straight path), followed by rapid hand movements (as the car started to slip on the road or drove into a sharp curve). We automatically detected these extreme peaks in hand acceleration, inspected the video frames around those peaks, and corrected the minor offsets between timestamps (at most 33 ms, i.e. one frame of the 30 fps egocentric camera). The data streams were then segmented into laps, and laps with missing data in any stream were removed.
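The offset-correction step can be illustrated with a minimal NumPy sketch. It assumes two acceleration traces (for example the car IMU and a limb IMU) that have already been resampled to a common rate, and returns the lag at which their cross-correlation peaks. The function name `estimate_offset` is hypothetical, and this is not the authors’ MATLAB code.

```python
import numpy as np


def estimate_offset(reference, other):
    """Lag (in samples) by which `other` trails `reference`.

    Both inputs are 1-D acceleration traces on the same time base.
    A positive result means events appear later in `other`.
    """
    ref = np.asarray(reference, dtype=float)
    oth = np.asarray(other, dtype=float)
    # Remove the means so the peak reflects shared fluctuations,
    # not a constant offset such as gravity.
    ref = ref - ref.mean()
    oth = oth - oth.mean()
    # Full cross-correlation; entry k corresponds to lag k - (len(ref) - 1).
    xcorr = np.correlate(oth, ref, mode="full")
    lags = np.arange(-(len(ref) - 1), len(oth))
    return int(lags[np.argmax(xcorr)])
```

At 100 Hz, a returned lag of 7 samples would correspond to a 70 ms offset, to be removed from the trailing stream’s timestamps.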

Car movement data

Car GPS data were obtained from an iPhone placed inside the car. Its acceleration and rotation recordings were resampled to a stable 100 Hz sampling rate, for timestamp synchronisation and for cleaning the body data. The data were filtered with a zero-phase-lag fourth-order Butterworth low-pass filter with a 10 Hz cut-off frequency20,21.
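The resampling and filtering steps can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the authors’ implementation. Irregular samples are linearly interpolated onto a 100 Hz grid. The fourth-order Butterworth low-pass is built from two second-order sections (the bilinear-transform biquad formulas of the RBJ Audio EQ Cookbook, with the Butterworth Q factors) and applied forward and then backward, which cancels the phase lag. In MATLAB or SciPy this step is usually done with `butter` followed by `filtfilt`, which also pads the signal edges; here the filter state is simply initialised to the first sample. All function names are hypothetical.

```python
import numpy as np


def resample_uniform(t, x, fs=100.0):
    """Linearly interpolate (possibly irregular) samples onto a uniform fs grid."""
    t = np.asarray(t, dtype=float)
    x = np.asarray(x, dtype=float)
    grid = np.arange(t[0], t[-1], 1.0 / fs)
    return grid, np.interp(grid, t, x)


def _lowpass_biquad(fc, fs, q):
    """Second-order low-pass section (bilinear transform, prewarped at fc)."""
    w0 = 2.0 * np.pi * fc / fs
    alpha = np.sin(w0) / (2.0 * q)
    cosw = np.cos(w0)
    b = np.array([(1.0 - cosw) / 2.0, 1.0 - cosw, (1.0 - cosw) / 2.0])
    a = np.array([1.0 + alpha, -2.0 * cosw, 1.0 - alpha])
    return b / a[0], a / a[0]


def _run_biquad(b, a, x):
    """Direct-form I filtering; state starts as if x[0] had been held forever."""
    y = np.empty_like(x)
    x1 = x2 = y1 = y2 = x[0]
    for n, xn in enumerate(x):
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y[n] = yn
    return y


def butter4_zero_phase(x, fc=10.0, fs=100.0):
    """4th-order Butterworth low-pass run forward and backward (zero phase lag)."""
    x = np.asarray(x, dtype=float)
    # Q factors of the two second-order sections of a 4th-order Butterworth filter.
    qs = (1.0 / (2.0 * np.cos(np.pi / 8)), 1.0 / (2.0 * np.cos(3 * np.pi / 8)))
    sections = [_lowpass_biquad(fc, fs, q) for q in qs]
    y = x
    for b, a in sections:  # forward pass
        y = _run_biquad(b, a, y)
    y = y[::-1]
    for b, a in sections:  # backward pass over the time-reversed signal
        y = _run_biquad(b, a, y)
    return y[::-1]
```

Running the filter twice squares its magnitude response, so the effective attenuation is that of an eighth-order filter. This is also what `filtfilt` does.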

Body movement data

Body data were collected by four Xsens MTw Awinda IMUs placed on the hands and feet. These sensors recorded rotation and acceleration at a stable sampling rate of 100 Hz. Since the driver was in a moving car, the motion captured by the motion capture system was a combination of the car’s movement and the driver’s limb movements inside the car. Linear regression was used to remove the car movement (based on the GPS recordings) from the motion tracking data, separately for rotation and acceleration; the regression residuals capture the limb movement that is not explained by the car movement. The data were then filtered with a zero-phase-lag fourth-order Butterworth low-pass filter with a 10 Hz cut-off frequency20,21.
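The car-motion regression can be sketched as an ordinary least-squares fit, assuming the limb and car signals are already aligned on the same 100 Hz time base. The choice of regressors (e.g. the three car acceleration axes) and the intercept term are assumptions, since the source does not specify the exact design matrix. `remove_car_motion` is a hypothetical name.

```python
import numpy as np


def remove_car_motion(limb, car):
    """Regress car motion out of one limb IMU channel and return the residuals.

    limb: (n_samples,) e.g. right-hand acceleration along one axis.
    car:  (n_samples,) or (n_samples, n_car) car-motion regressors.
    """
    limb = np.asarray(limb, dtype=float)
    car = np.asarray(car, dtype=float).reshape(len(limb), -1)
    # Design matrix: intercept column plus the car regressors.
    design = np.column_stack([np.ones(len(limb)), car])
    coef, *_ = np.linalg.lstsq(design, limb, rcond=None)
    # Residuals: the part of the limb signal not linearly explained by the car.
    return limb - design @ coef
```

The same function would be applied separately to the rotation and the acceleration channels, as the text describes.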

Gaze data

The eye gaze was acquired with binocular SMI ETG 2W A eye-tracking glasses, which use infrared light and a camera to track the position of the pupil. The pupil centre and the corneal reflection (CR) define a vector in the camera image that can be mapped to gaze coordinates22,23. Eye-tracking glasses simplify the analysis of gaze targets for a freely moving head in a freely moving car, because they are not calibrated to a fixed physical coordinate system but only to the device’s built-in egocentric camera, which captures the visual scene that the subject sees. The glasses and the camera move with the head, so no correction for head movements is needed. The egocentric camera captured the scene view and therefore enabled the reconstruction of the frame-by-frame gaze point in the real-world scene (e.g. the position of the gaze target relative to the side of the road). The gaze data were collected at 120 Hz and included multiple standardised eye measurements, such as pupil size, eye position, point of regard, and gaze vector. When measuring gaze behaviour in-the-wild, fixations and saccades become under-specified concepts24. For example, a freely moving head tracking a location on a moving road involves smooth eye movements and saccades during fixation, which are considered mutually exclusive in fixed-head settings. Conventional definitions of these types of eye movements, and the corresponding analyses, therefore do not apply. Thus, our analysis focused on three measures: the point of regard, computed as the RMS of the binocular point of regard measured along the X and Y axes; the gaze vector, computed separately for the right and left eyes as the RMS of its X, Y and Z components; and the change in gaze vector, calculated from two consecutive measurements. These measures were resampled to 100 Hz, and linear interpolation was applied to fill in missing data points in the gaze recording.
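The three gaze measures can be sketched as below. The sketch reads the description literally and makes assumptions where it is terse. The point-of-regard measure is taken as the RMS of its X and Y components, and the gaze-vector measure as the RMS of its X, Y and Z components. The change in gaze vector is taken as the Euclidean norm of the difference between consecutive vectors. Dropped samples are assumed to be marked as NaN. The function name and dictionary keys are hypothetical.

```python
import numpy as np


def gaze_features(t, por_xy, gaze_left, gaze_right, fs_out=100.0):
    """Gaze measures moved from ~120 Hz onto a uniform fs_out grid.

    t:          (n,) timestamps in seconds.
    por_xy:     (n, 2) binocular point of regard (X, Y); NaN rows mark dropped samples.
    gaze_left, gaze_right: (n, 3) gaze vectors (X, Y, Z) of each eye.
    """
    t = np.asarray(t, dtype=float)
    por = np.asarray(por_xy, dtype=float)
    feats = {"por_rms": np.sqrt(np.mean(por ** 2, axis=1))}
    for side, vec in (("left", gaze_left), ("right", gaze_right)):
        vec = np.asarray(vec, dtype=float)
        # RMS over the X, Y and Z components of the gaze vector.
        feats["vec_rms_" + side] = np.sqrt(np.mean(vec ** 2, axis=1))
        # Change between consecutive gaze vectors (undefined for the first sample).
        step = np.linalg.norm(np.diff(vec, axis=0), axis=1)
        feats["change_" + side] = np.concatenate([[np.nan], step])
    grid = np.arange(t[0], t[-1], 1.0 / fs_out)
    out = {"t": grid}
    for name, values in feats.items():
        valid = ~np.isnan(values)
        # Linear interpolation bridges missing samples while moving to the new grid.
        out[name] = np.interp(grid, t[valid], values[valid])
    return out
```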

EEG data

The brain activity was recorded using a 32-channel EEG cap with dry electrodes (placed according to the 10-20 system), at a stable sampling rate of 250 Hz. EEG data were analysed with the EEGLAB toolbox (https://sccn.ucsd.edu/eeglab; 25). The processing steps included (i) high-pass filtering with a cut-off frequency of 1 Hz26–28; (ii) line noise removal at the selected frequencies of 60 Hz and 120 Hz26; (iii) removal of bad channels, defined as those with less than 60% correlation to their own reconstruction from neighbouring channels26; (iv) re-referencing the EEG dataset to the average of all channels, to minimise the impact of a channel with bad contact on the variance of the entire dataset26; (v) Independent Component Analysis (ICA) to separate signal sources29–31; and (vi) artefact removal using runica, the infomax ICA algorithm in EEGLAB, to identify and remove head movement, eye movement and blinking artefacts. The algorithm searches for maximal statistical independence between sources. Artefact sources were identified based on a predefined set of criteria for scalp topographies and spectra (e.g. a source located by the ears with high, spiky power at high frequencies indicates movement artefacts; a source located between the eyes suggests eye blink artefacts; a source located in the eye indicates lateral eye movement artefacts)25. EEG data were then transformed into the time-frequency domain as power in decibels (dB) at 100 Hz (to match the other data streams) in the delta (δ, 0.5 to 4 Hz), theta (θ, 4 to 8 Hz), alpha (α, 8 to 12 Hz) and beta (β, 12 to 30 Hz) frequency bands. The transformation was applied separately to the individual IC located over the left motor cortex and to the mean brain activity, averaged across the cleaned ICs.
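The band-power step can be illustrated with a minimal sketch. The source does not state which time-frequency transform was used (EEGLAB offers several), so this sketch swaps in a simple, non-authoritative technique. It combines an ideal FFT band-pass with the analytic-signal (Hilbert) envelope, converts the result to dB, and interpolates it from 250 Hz onto a 100 Hz grid. It assumes a single IC time course as input. The band edges follow the text, and `band_power_db` is a hypothetical name.

```python
import numpy as np

# Band edges in Hz, as given in the text (half-open intervals).
BANDS = {"delta": (0.5, 4.0), "theta": (4.0, 8.0), "alpha": (8.0, 12.0), "beta": (12.0, 30.0)}


def band_power_db(x, fs=250.0, fs_out=100.0, bands=BANDS):
    """Instantaneous band power (dB) of one component time course, resampled to fs_out."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    spectrum = np.fft.fft(x)
    freqs = np.fft.fftfreq(n, d=1.0 / fs)
    t_in = np.arange(n) / fs
    t_out = np.arange(0.0, t_in[-1], 1.0 / fs_out)
    power = {}
    for name, (lo, hi) in bands.items():
        # Keep only positive frequencies inside the band and double them: an
        # ideal band-pass filter and the analytic (Hilbert) signal in one step.
        mask = (freqs >= lo) & (freqs < hi)
        analytic = np.fft.ifft(np.where(mask, 2.0 * spectrum, 0.0))
        # Squared envelope in dB; the small constant avoids log10(0).
        p_db = 10.0 * np.log10(np.abs(analytic) ** 2 + 1e-12)
        power[name] = np.interp(t_out, t_in, p_db)
    return t_out, power
```

An ideal (brick-wall) band-pass rings in time, so smoother filters or wavelets are usually preferred for real recordings. The sketch only shows the shape of the computation.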

Results

The Results section of this case study was written to characterise the neuromotor behaviour of a professional driver while driving in extreme conditions, which can be used as a reference point for future driving studies. The initial analysis aimed to understand the interdependencies between the different neurobehavioural data streams and their level of complexity. The analysis then focused on specific driving events, such as responses to challenging conditions (skidding, curves and straights), in order to assess whether behaviour during those moments is distinguishable. Lastly, we addressed causality across the different data streams.

Data characteristics

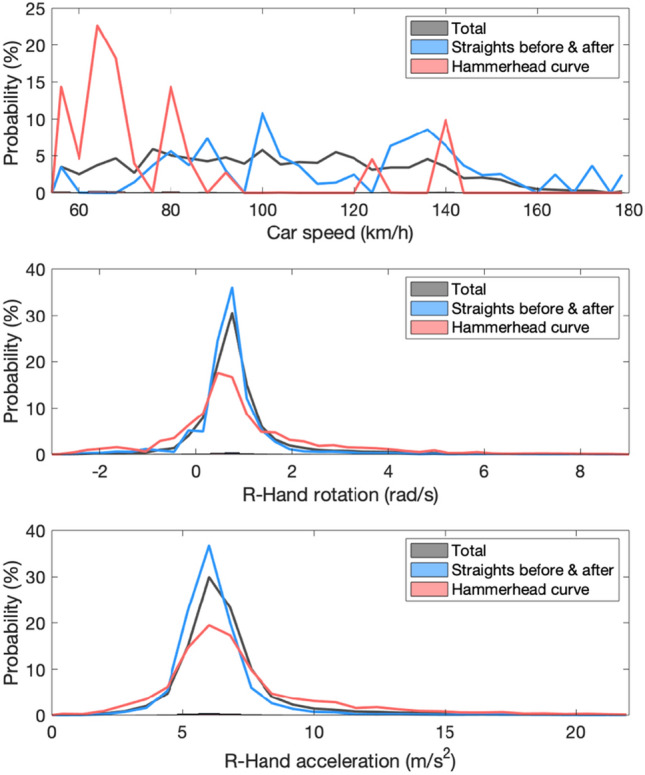

The distributions of the car speed, the right-hand rotation, and the right-hand acceleration were assessed across the entire track, in the straight segments before and after the Hammerhead curve, and in the Hammerhead curve itself (Fig. 2). The average speed throughout the experiment was 120 km/h. As expected, speed in the curves was relatively low (between 54 and 82 km/h), while in the straight segments speed was much higher and more broadly distributed, as the car decelerated towards a curve and accelerated after it. Frames in the Hammerhead critical curve made up 11.3% of the total recording, and straights 25.5%.

Figure 2.

Histograms detailing the extreme driving scenario of this experiment. (top) Car speed, with an average of 120 km/h, a critical-curve average of 78 km/h and a straight-segment average of 130 km/h, all above conventional driving speed limits; (middle) right-hand gyroscope, with an average value of 0.9 rad/s overall, whereas for the intense driving style (critical curve and skidding moments) the average values are 1 and 3 rad/s respectively, above the normal movement expected from the literature; and (bottom) right-hand accelerometer data showing absolute acceleration, with a distribution similar to that of the gyroscope data. Data from the straights correspond to 25.5% of the entire data set and data from the Hammerhead curve to 11.3%.

Since the movements of the two hands were highly correlated, we show only the right hand. Both gyroscope and accelerometer distributions show similar tendencies, with a narrow distribution during the straight segments and a slightly wider one in curves; the distribution for the whole data set lies in between. The gyroscope values during abrupt responses (skidding) have a mean of 3 rad/s, considerably higher than the 1 rad/s reported in the literature for normal forearm movement32, which is expected given the intense car handling. Data classified as abrupt responses correspond to 6% of the dataset.

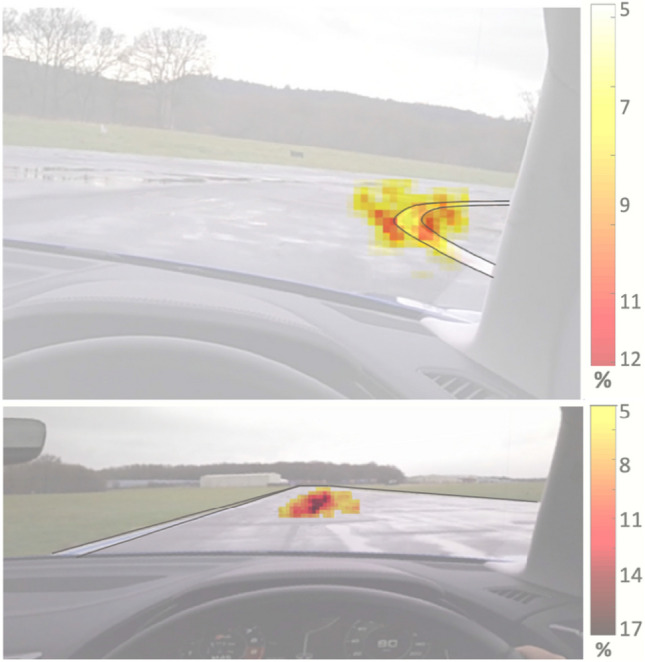

Eye gaze data showed a strong tendency to track the tangent point of the curve, as illustrated in Fig. 3 (top) and as previously reported for non-expert drivers33. In the top panel, the gaze can be seen seeking the tangent point of the curve (internal and external), marked in white on the road, where it remains throughout the entire curve. During straight segments, the gaze focuses straight ahead at a stable distance on the horizon, with minor saccadic deviations, as illustrated in Fig. 3 (bottom). The heat maps were built using data recorded during the critical curve (top) or the straights before and after that curve (bottom). The gaze point position in the egocentric view was annotated every ten frames in an overlapping position matrix, and the heat map was built from the percentage of annotations falling in each cell of this matrix.
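The heat-map construction can be sketched as a normalised 2-D histogram of annotated gaze positions. The grid resolution, the pixel coordinates and the name `gaze_heatmap` are assumptions. The source only states that positions were annotated every ten frames and that the map shows the percentage of annotations per cell.

```python
import numpy as np


def gaze_heatmap(points, frame_size, grid=(32, 18)):
    """Percentage of annotated gaze points falling in each cell of a coarse grid.

    points:     (n, 2) annotated gaze positions (x, y) in video pixels.
    frame_size: (width, height) of the egocentric video frame in pixels.
    grid:       number of cells along x and y (a hypothetical choice).
    """
    pts = np.asarray(points, dtype=float)
    counts, _, _ = np.histogram2d(
        pts[:, 0], pts[:, 1],
        bins=grid,
        range=[[0, frame_size[0]], [0, frame_size[1]]],
    )
    # Transpose so rows index image y and columns index image x, then express as %.
    return 100.0 * counts.T / counts.sum()
```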

Figure 3.

Heat maps of eye gaze using data recorded during the Hammerhead critical curve (top), highlighting the driver’s tendency to track the tangent point of the curve, and during the straight segments before and after the curve (bottom), where the driver’s gaze focuses on the horizon.

Global dataset assessment

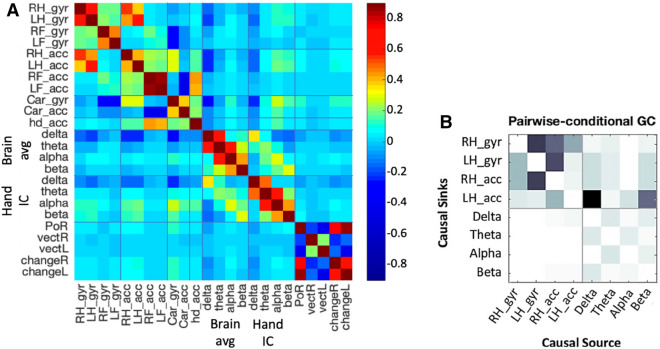

For an overall understanding of the interdependencies between the neurobehavioural data streams, a correlation matrix was computed (Fig. 4A). The variables considered were: the RMS of the acceleration and the rotation of the four limbs, the car, and the head; EEG band power for the mean brain activity and for the right-hand IC; and eye movement data, including the point of regard, the gaze vectors, and the change in gaze vector for both eyes. The right and left hands are strongly correlated, as expected since the driver had both hands on the steering wheel. There are other correlations within domains (e.g. neighbouring band powers are correlated), as well as weaker, but statistically significant, correlations between domains, most importantly between the EEG band powers and the hand movements. We found a positive correlation of the hands’ acceleration and rotation with the alpha and beta power in the right-hand IC, and a negative correlation with the delta power (P < 0.001). Cross-correlation shows that while the delta band is synchronised with the movement, the neural signal in the alpha and beta bands precedes the movement by 100 ms.
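The lead/lag reading of the cross-correlation can be made concrete with a short sketch that computes Pearson correlations over a range of lags. It assumes two equally long signals on the same 100 Hz time base, for example alpha power of the hand IC and right-hand acceleration. At that sampling rate, a peak at lag 10 would correspond to the 100 ms lead reported above. `lagged_correlation` is a hypothetical name.

```python
import numpy as np


def lagged_correlation(x, y, max_lag):
    """Pearson correlation between x[t] and y[t + lag] for lag in [-max_lag, max_lag].

    A peak at a positive lag means x leads y (e.g. EEG band power leading hand motion).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    lags = np.arange(-max_lag, max_lag + 1)
    r = np.empty(len(lags))
    for i, lag in enumerate(lags):
        if lag >= 0:
            a, b = x[: len(x) - lag], y[lag:]
        else:
            a, b = x[-lag:], y[: len(y) + lag]
        r[i] = np.corrcoef(a, b)[0, 1]
    return lags, r
```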

Figure 4.

Global assessments: (A) instantaneous correlation matrix between all data groups: gyroscope rotation (gyr) and acceleration (acc) of the right and left hands (RH, LH), the right and left feet (RF, LF), the car (Car), and the head (hd); the band power in the delta, theta, alpha, and beta bands across the brain (Brain avg) and in the left motor cortex (Hand IC); eye gaze point of regard (PoR), the gaze vectors (vect) and the change in gaze vector (change) for the right (R) and the left (L) eye; (B) pairwise Granger causality results for the data groups separated by grey lines. Darker colours indicate more robust causality; brain data Granger-cause hand movements more strongly than the other way around. These conclusions hold at a significance level of p = 0.05 with Bonferroni correction.

Granger causality

To address the direction of causality between variables, we used Granger causality34. Granger causality is based on the idea that causes precede, and help predict, their effects. The technique aims to identify direct interactions between time series, in both the time and frequency domains. Granger causality was calculated using the MVGC Multivariate Granger Causality toolbox (mvgc_v1.0)35,36, which uses vector autoregressive modelling to find linear interdependencies between time series based on their past values. The Granger causality analysis was conducted on the hands’ acceleration and gyroscope rotation data and on the EEG band power of the right-hand IC. The results are presented in Fig. 4B as a pairwise matrix of causality from causal sources to causal sinks (darker indicates more robust causality) for statistically significant causality links (p < 0.05 after Bonferroni correction). We found a one-directional causality in which the EEG band power Granger-causes hand movements, especially in the delta and beta bands.
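The idea behind the analysis can be illustrated with a pairwise, time-domain Granger causality computed by least squares. The sketch compares the residual variance of a model predicting y from its own past with that of a model that also uses the past of x. It is a simplified stand-in, not the MVGC toolbox. The fixed model order `p = 5` is a hypothetical choice (MVGC fits a full multivariate model and supports choosing the order by information criteria such as AIC or BIC), and significance testing is omitted. `granger_pairwise` is a hypothetical name.

```python
import numpy as np


def _lagged(series, p):
    """Matrix whose row for time t (t >= p) holds series[t-1], ..., series[t-p]."""
    n = len(series)
    return np.column_stack([series[p - k: n - k] for k in range(1, p + 1)])


def granger_pairwise(x, y, p=5):
    """Time-domain Granger causality from x to y with model order p.

    Returns ln(var_restricted / var_full): near 0 when the past of x adds
    nothing to predicting y, and larger the more it improves the prediction.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    target = y[p:]
    restricted = np.column_stack([np.ones(len(target)), _lagged(y, p)])
    full = np.column_stack([restricted, _lagged(x, p)])

    def residual_variance(design):
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        return np.var(target - design @ coef)

    return float(np.log(residual_variance(restricted) / residual_variance(full)))
```

With a Bonferroni correction across m tested links, each link would be assessed at a threshold of 0.05/m.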

Specific driving related events

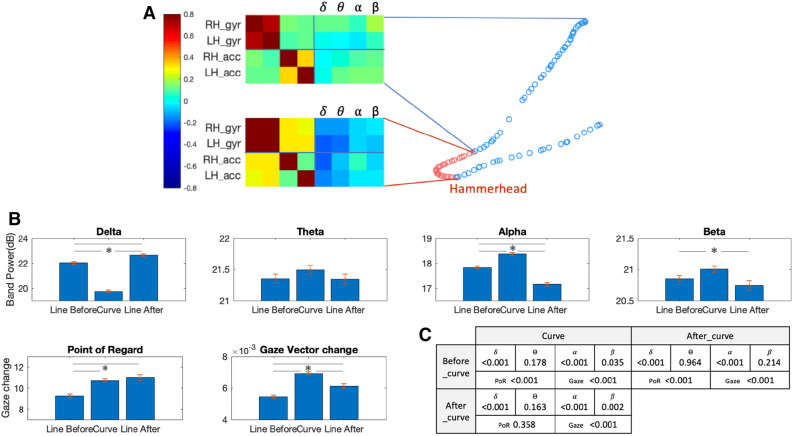

Here we compared the neurobehavioural signature of the challenging Hammerhead curve with that of the straight segments leading into and out of it. Based on the GPS data and the egocentric videos, we annotated the segments of the critical Hammerhead curve in the track and the straight paths leading into and out of it. Correlation matrices of the hand IMU and EEG data streams for these segments show differences in correlation structure between the two types of segments (Fig. 5A). While in the straight segments there was no correlation between the hands’ rotation and acceleration and the EEG power, in the curves there was a negative correlation between these brain and body measurements. Statistical analysis of the differences between segments shows a decrease in delta power and an increase in alpha and beta power during the curve (Fig. 5B, C). The segments before and after the curve also differed: delta power was lower before and higher after the curve, while alpha power showed the opposite trend. The eye gaze vector also changed more during the curves.
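The segment comparison can be sketched with Welch’s two-sample t statistic. The source only states that t-tests were used, so the unequal-variance variant is an assumption. The p-value step is left out to keep the sketch NumPy-only; with SciPy, `scipy.stats.ttest_ind(a, b, equal_var=False)` returns the same statistic together with its p-value. `welch_t` is a hypothetical name.

```python
import numpy as np


def welch_t(a, b):
    """Welch's two-sample t statistic and Welch-Satterthwaite degrees of freedom."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    # Squared standard errors of each sample mean.
    va = a.var(ddof=1) / len(a)
    vb = b.var(ddof=1) / len(b)
    t = (a.mean() - b.mean()) / np.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return float(t), float(df)
```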

Figure 5.

(A) Identification of the straight (blue) and curve (red) periods, with a fragment of the correlation matrix obtained for each period; (B) statistical analysis of the mean and SEM for curves and straights, for band power and eye data. Despite lower correlation values, the brain shows a structured correlation with the hand when performing the curve, with delta and theta anticorrelation and alpha and beta positive correlation, instead of a generalised correlation with the body. Statistically significant differences between before, during and after the critical curve were found for delta, alpha, beta, point of regard and change in gaze vector. (C) p-values obtained with t-tests.

Discussion

This work is an attempt to assess neurobehavioural signatures of real-world motor behaviour in-the-wild. We demonstrate the feasibility of simultaneously measuring brain activity, eye and body movement behaviours in-the-wild under extreme conditions (race track driving), assessing neurobehavioural interdependencies and inferring causal relations. First, we have demonstrated that body movements, eye movements, and wireless EEG data can be collected in very extreme conditions in-the-wild. Second, we have demonstrated that these measurements are meaningful not only from a purely descriptive perspective but also in that they are predictive of each other and related to car performance and race track location. Third, we used these data to characterise the neuromotor behaviour of an expert while driving in extreme conditions, which can be used as a reference point for future driving studies. This proof of “possibility” will act as a foundation for further research in real-world settings.

Our results show changes in EEG power and gaze characteristics during sharp curves, where control of the car is most challenging. While many previous studies found correlations between hand movements and brain activity in lab-based tasks with repeated trials37, here we show such correlations in continuous movement in-the-wild. Moreover, a controlled lab experiment has a clear trial order in which the timing of stimulus appearance, go-cue, etc. is well defined. Accordingly, the direction of causality (if it exists) is clear: neural activity after a stimulus can be caused by it but cannot cause it, and neural activity before a movement can cause the movement but cannot be caused by it. In-the-wild, causal relationships may reverse or be bi-directional. Here we show not only the correlation but also the (Granger) causality from brain activity to body movement in an unconstrained setting. Interestingly, the EEG power changes are in line with previous results on general creative solution finding and interventions38.

Comparing the driver’s neurobehaviour in the sharp curves with that in the straight segments before and after them enabled us to assess world-championship-level skilled responses to extreme driving conditions. Our results suggest differences in EEG power, point of regard, and gaze change vector between the different segments: before, during and after sharp curves. This shows that neurobehavioural differences between more and less demanding segments of the drive can be detected from ongoing in-the-wild EEG and gaze recordings, and it suggests possible neurobehavioural metrics of task demand that would presumably differ with expertise. During the curves, the driver showed a power increase in the alpha and beta bands and a decrease in the delta band. The increase in alpha is potentially a signature of the increased creativity demand in these segments38,39. The alpha and beta power increases we observed are also in line with previous work showing an increase in left-hemisphere alpha and beta power in expert rifle shooters during the preparatory pre-shot period8.

Neurobehavioural data collection in-the-wild is subject to more noise sources and interference than standard data collection in-the-lab. This concern is especially relevant for the EEG signal, which is always contaminated by noise, and any EEG recording during movement is subject to movement and muscle artefacts. We therefore find our cross-correlation and Granger causality results very encouraging, as they suggest that the EEG activity precedes and predicts the movement, and not the other way around. If the EEG results were simply movement artefacts, we would have expected the opposite causality: the movement would precede and predict the EEG artefact. Since the EEG activity precedes the movement, we believe the EEG results cannot be dismissed as noise artefacts.

The driver’s gaze during curves followed the tangent point of the curve, as described in the classic paper by Land and Lee33. During the straight segments, his gaze was entirely focused on the centre of the road, which made it more stable in straight segments than in curves, although in both segment types the driver’s gaze was exceptionally stable, as illustrated in Fig. 3.

Being able to collect real-world data that capture a significant portion of the sensory input to the brain (visual scene and locus of attention), the motor output of the brain (hand, head and arm movements during driving) and the state of the brain (EEG signals) is a further realisation of our human ethomics approach. This is insightful not only for understanding the brain and its behaviour, but also for devising artificial intelligence to improve driving safety in autonomous and semi-autonomous cars. In recent work40, we demonstrated how the gaze behaviour of human drivers in a virtual reality driving simulator could be used to train a deep convolutional neural network to predict, moment by moment, where a human driver was looking. This human-like visual attention model enabled us to mask “irrelevant” visual information (where the human driver was not looking) and train a self-driving car algorithm to drive successfully. Crucially, our human-attention-based AI system learned to drive much faster and reached a higher end-of-learning performance than AI systems that had no knowledge of human visual attention. The work we present here takes this en passant human annotation of skilled behaviour to the next level, by collecting real-world data on the rich input and output characteristics of the brain. Just as we used drivers’ gaze in a driving simulator to train a self-driving car algorithm in that simulator, we could use rich neurobehavioural data from an expert driver in extreme conditions to train a real self-driving car algorithm to respond successfully in extreme conditions. Likewise, on the control-systems side, we have shown how ethomic data obtained from natural tasks (movement data41, electrophysiological data42, decision-making data43) can be harnessed to boost AI system performance. The neurobehavioural approach demonstrated here suggests how we may, in future, close the loop between person and vehicle.

In summary, we demonstrated the feasibility of studying the neurobehavioral signatures of real-world expertise in-the-wild. We showed evidence of specific brain activity and gaze patterns during driving in extreme conditions, in which, presumably, the expertise of the driver makes a crucial difference. Future work is required to generalise these findings from this single case study.

Acknowledgements

First, we would like to acknowledge Alex Verner and Joel Verner (Averner Films) whose video production idea and work enabled this research. We thank them and the race team for inviting us to participate in their production and collect the data for this study. We also thank Pavel Orlov for his help with the gaze data. The study was enabled by financial support to a Royal Society-Kohn International Fellowship (NF170650; SH and AAF) and by eNHANCE (http://www.enhance-motion.eu) under the European Union’s Horizon 2020 research and innovation programme Grant Agreement No. 644000 (SH and AAF).

Author contributions

A.A.F. conceived and designed the scientific study; L.D.G. performed the experiment; I.R.L. and A.A.F. acquired the data; I.R.L., A.A.F. and S.H. analysed the data; I.R.L., S.H. and A.A.F. interpreted the data; I.R.L. and S.H. drafted the paper; I.R.L., S.H., L.D.G. and A.A.F. revised the paper.

Competing interests

The experimental data analysed here were collected as part of a promotional video production for Roborace, a racing competition for autonomously driving, electrically powered vehicles. L.D.G. is the CEO of Roborace. Averner Films was commissioned by Roborace to produce the video. L.D.G. and A.A.F. appeared in the video. I.R.L. and S.H. declare no competing financial interests. Within the domain of this paper, A.A.F. has consulted for Airbus, Averner Films and Celestial Group, and A.A.F.’s lab has received research funding and donations from Microsoft and NVIDIA. This scientific publication was neither commissioned nor expected as part of the film production.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

These authors contributed equally: Ines Rito Lima and Shlomi Haar.

References

- 1. Ericsson KA, Krampe RT, Tesch-Römer C. The role of deliberate practice in the acquisition of expert performance. Psychological Review. 1993;100:363. doi: 10.1037/0033-295X.100.3.363.

- 2. Hambrick DZ, et al. Deliberate practice: Is that all it takes to become an expert? Intelligence. 2014;45:34–45. doi: 10.1016/j.intell.2013.04.001.

- 3. Haar S, van Assel CM, Faisal AA. Kinematic signatures of learning that emerge in a real-world motor skill task. bioRxiv. 2019:612218. doi: 10.1101/612218.

- 4. Haar S, Faisal AA. Neural biomarkers of multiple motor-learning mechanisms in a real-world task. bioRxiv. 2020:2020.03.04.976951. doi: 10.1101/2020.03.04.976951.

- 5. Haar S, Sundar G, Faisal AA. Embodied virtual reality for the study of real-world motor learning. bioRxiv. 2020:2020.03.19.998476. doi: 10.1101/2020.03.19.998476.

- 6. Park JL, Fairweather MM, Donaldson DI. Making the case for mobile cognition: EEG and sports performance. Neuroscience & Biobehavioral Reviews. 2015;52:117–130. doi: 10.1016/j.neubiorev.2015.02.014.

- 7. Muraskin J, Sherwin J, Sajda P. Knowing when not to swing: EEG evidence that enhanced perception-action coupling underlies baseball batter expertise. NeuroImage. 2015;123:1–10. doi: 10.1016/j.neuroimage.2015.08.028.

- 8. Janelle CM, et al. Expertise differences in cortical activation and gaze behavior during rifle shooting. Journal of Sport and Exercise Psychology. 2000;22:167–182. doi: 10.1123/jsep.22.2.167.

- 9. Cooke A, et al. Preparation for action: Psychophysiological activity preceding a motor skill as a function of expertise, performance outcome, and psychological pressure. Psychophysiology. 2014;51:374–384. doi: 10.1111/psyp.12182.

- 10. Busso C, Jain J. Advances in multimodal tracking of driver distraction. In: Digital Signal Processing for In-Vehicle Systems and Safety. 2012:253–270. doi: 10.1007/978-1-4419-9607-7_18.

- 11. Baldwin CL, et al. Detecting and Quantifying Mind Wandering during Simulated Driving. Frontiers in Human Neuroscience. 2017;11:1–15. doi: 10.3389/fnhum.2017.00406.

- 12. Lal SK, Craig A, Boord P, Kirkup L, Nguyen H. Development of an algorithm for an EEG-based driver fatigue countermeasure. Journal of Safety Research. 2003;34:321–328. doi: 10.1016/S0022-4375(03)00027-6.

- 13. Zhao C, Zhao M, Liu J, Zheng C. Electroencephalogram and electrocardiograph assessment of mental fatigue in a driving simulator. Accident Analysis and Prevention. 2012;45:83–90. doi: 10.1016/j.aap.2011.11.019.

- 14. Li W, He QC, Fan XM, Fei ZM. Evaluation of driver fatigue on two channels of EEG data. Neuroscience Letters. 2012;506:235–239. doi: 10.1016/j.neulet.2011.11.014.

- 15. Li G, Chung WY. Combined EEG-Gyroscope-tDCS Brain Machine Interface System for Early Management of Driver Drowsiness. IEEE Transactions on Human-Machine Systems. 2017;48:50–62. doi: 10.1109/THMS.2017.2759808.

- 16. Borghini G, Astolfi L, Vecchiato G, Mattia D, Babiloni F. Measuring neurophysiological signals in aircraft pilots and car drivers for the assessment of mental workload, fatigue and drowsiness. Neuroscience and Biobehavioral Reviews. 2014;44:58–75. doi: 10.1016/j.neubiorev.2012.10.003.

- 17. Hanley BP, Bains W, Church G. Review of scientific self-experimentation: Ethics history, regulation, scenarios, and views among ethics committees and prominent scientists. Rejuvenation Research. 2019;22:31–42. doi: 10.1089/rej.2018.2059.

- 18. The Human Machine | Audi R8. An Averner Films project, featuring Audi Sport and Formula E Champion Lucas Di Grassi. https://www.averner.com/work.

- 19. BBC Top Gear Track Plan. https://www.bbc.co.uk/programmes/articles/1jckx859NGhPCNrL6vQD9Wl/track-plan.

- 20. Ostry DJ, Cooke JD, Munhall KG. Velocity curves of human arm and speech movements. Experimental Brain Research. 1987;68:37–46. doi: 10.1007/BF00255232.

- 21. Atkeson CG, Hollerbach JM. Kinematic Features of Unrestrained Vertical Arm Movements. The Journal of Neuroscience. 1985;5:2318–2330. doi: 10.1523/JNEUROSCI.05-09-02318.1985.

- 22. Kogkas AA, Darzi A, Mylonas GP. Gaze-contingent perceptually enabled interactions in the operating theatre. International Journal of Computer Assisted Radiology and Surgery. 2017;12:1131–1140. doi: 10.1007/s11548-017-1580-y.

- 23. Morimoto CH, Mimica MR. Eye gaze tracking techniques for interactive applications. Computer Vision and Image Understanding. 2005;98:4–24. doi: 10.1016/j.cviu.2004.07.010.

- 24. Lappi O. Eye movements in the wild: Oculomotor control, gaze behavior & frames of reference. Neuroscience & Biobehavioral Reviews. 2016;69:49–68. doi: 10.1016/j.neubiorev.2016.06.006.

- 25. Delorme A, Makeig S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods. 2004;134:9–21. doi: 10.1016/j.jneumeth.2003.10.009.

- 26. Bigdely-Shamlo N, Mullen T, Kothe C, Su K-M, Robbins KA. The PREP pipeline: standardized preprocessing for large-scale EEG analysis. Frontiers in Neuroinformatics. 2015;9:1–20. doi: 10.3389/fninf.2015.00016.

- 27. Dias NS, Carmo JP, Mendes PM, Correia JH. Wireless instrumentation system based on dry electrodes for acquiring EEG signals. Medical Engineering and Physics. 2012;34:972–981. doi: 10.1016/j.medengphy.2011.11.002.

- 28. Gargiulo G, et al. A new EEG recording system for passive dry electrodes. Clinical Neurophysiology. 2010;121:686–693. doi: 10.1016/j.clinph.2009.12.025.

- 29. Sahonero-Alvarez G, Calderón H. A comparison of SOBI, FastICA, JADE and Infomax algorithms. Proceedings of the 8th International Multi-Conference on Complexity, Informatics and Cybernetics. 2017:17–22.

- 30. Lourenço PR, Abbott WW, Faisal AA. Supervised EEG ocular artefact correction through eye-tracking. Biosystems and Biorobotics. 2016;12:99–113. doi: 10.1007/978-3-319-26242-0_7.

- 31. Lee TW, Girolami M, Sejnowski TJ. Independent Component Analysis Using an Extended Infomax Algorithm for Mixed Subgaussian and Supergaussian Sources. Neural Computation. 1999;11:417–441. doi: 10.1162/089976699300016719.

- 32. Hasan Z. Optimized movement trajectories and joint stiffness in unperturbed, inertially loaded movements. Biological Cybernetics. 1986;53:373–382. doi: 10.1007/BF00318203.

- 33. Land MF, Lee DN. Where we look when we steer. Nature. 1994;369:742–744. doi: 10.1038/369742a0.

- 34. Granger CW. Investigating causal relations by econometric models and cross-spectral methods. Econometrica. 1969;37:424–438.

- 35. Barnett L, Seth AK. The MVGC multivariate Granger causality toolbox: A new approach to Granger-causal inference. Journal of Neuroscience Methods. 2014;223:50–68. doi: 10.1016/j.jneumeth.2013.10.018.

- 36. Seth AK, Barrett AB, Barnett L. Granger causality analysis in neuroscience and neuroimaging. The Journal of Neuroscience. 2015;35. doi: 10.1523/JNEUROSCI.4399-14.2015.

- 37. Morash V, Bai O, Furlani S, Lin P, Hallett M. Classifying EEG signals preceding right hand, left hand, tongue, and right foot movements and motor imageries. Clinical Neurophysiology. 2008;119:2570–2578. doi: 10.1016/j.clinph.2008.08.013.

- 38. Fink A, Benedek M. EEG alpha power and creative ideation. Neuroscience & Biobehavioral Reviews. 2014;44:111–123. doi: 10.1016/j.neubiorev.2012.12.002.

- 39. Fink A, Graif B, Neubauer AC. Brain correlates underlying creative thinking: EEG alpha activity in professional vs. novice dancers. NeuroImage. 2009;46:854–862. doi: 10.1016/j.neuroimage.2009.02.036.

- 40. Makrigiorgos A, Shafti A, Harston A, Gerard J, Faisal AA. Human visual attention prediction boosts learning & performance of autonomous driving agents. arXiv preprint arXiv:1909.05003. 2019.

- 41. Belić JJ, Faisal AA. Decoding of human hand actions to handle missing limbs in neuroprosthetics. Frontiers in Computational Neuroscience. 2015;9:27. doi: 10.3389/fncom.2015.00027.

- 42. Xiloyannis M, Gavriel C, Thomik AA, Faisal AA. Gaussian process autoregression for simultaneous proportional multi-modal prosthetic control with natural hand kinematics. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2017;25:1785–1801. doi: 10.1109/TNSRE.2017.2699598.

- 43. Gottesman O, et al. Guidelines for reinforcement learning in healthcare. Nature Medicine. 2019;25:16–18. doi: 10.1038/s41591-018-0310-5.