Abstract

SMT-based model checkers, especially IC3-style ones, are currently the most effective techniques for verification of infinite state systems. They infer global inductive invariants via local reasoning about a single step of the transition relation of a system, while employing SMT-based procedures, such as interpolation, to mitigate the limitations of local reasoning and allow for better generalization. Unfortunately, these mitigations intertwine model checking with the heuristics of the underlying SMT-solver, negatively affecting the stability of model checking.

In this paper, we propose to tackle the limitations of locality in a systematic manner. We introduce explicit global guidance into the local reasoning performed by IC3-style algorithms. To this end, we extend the SMT-IC3 paradigm with three novel rules, designed to mitigate fundamental sources of failure that stem from locality. We instantiate these rules for the theory of Linear Integer Arithmetic and implement them on top of the Spacer solver in Z3. Our empirical results show that GSpacer, Spacer extended with global guidance, is significantly more effective than both Spacer and sole global reasoning, and, furthermore, is insensitive to interpolation.

Introduction

SMT-based Model Checking algorithms that combine SMT-based search for bounded counterexamples with interpolation-based search for inductive invariants are currently the most effective techniques for verification of infinite state systems. They are widely applicable, including for verification of synchronous systems, protocols, parameterized systems, and software.

The Achilles heel of these approaches is the mismatch between the local reasoning used to establish the absence of bounded counterexamples and a global reason for the absence of unbounded counterexamples (i.e., the existence of an inductive invariant). This is particularly apparent in IC3-style algorithms [7], such as Spacer [18]. IC3-style algorithms establish bounded safety by repeatedly computing predecessors of error (or bad) states, blocking them by local reasoning about a single step of the transition relation of the system, and, later, using the resulting lemmas to construct a candidate inductive invariant for the global safety proof. The whole process is driven by the choice of local lemmas. Good lemmas lead to quick convergence; bad lemmas make even simple-looking problems difficult to solve.

The effect of local reasoning is somewhat mitigated by the use of interpolation in lemma construction. In addition to the usual inductive generalization by dropping literals from a blocked bad state, interpolation is used to further generalize the blocked state using theory-aware reasoning. For example, when blocking a bad state $x = 1 \wedge y = 1$, inductive generalization would infer a sub-clause of $x \neq 1 \vee y \neq 1$ as a lemma, while interpolation might infer $x \neq y$, a predicate that might be required for the inductive invariant. Spacer, which is based on this idea, is extremely effective, as demonstrated by its performance in recent CHC-COMP competitions [10]. The downside, however, is that the approach leads to a highly unstable procedure that is extremely sensitive to syntactic changes in the system description, changes in interpolation algorithms, and any algorithmic changes in the underlying SMT-solver.

An alternative approach, often called invariant inference, is to focus on the global safety proof, i.e., an inductive invariant. This has long been advocated by such approaches as Houdini [15], and, more recently, by a variety of machine-learning inspired techniques, e.g., FreqHorn [14], LinearArbitrary [28], and ICE-DT [16]. The key idea is to iteratively generate positive (i.e., reachable states) and negative (i.e., states that reach an error) examples and to compute a candidate invariant that separates these two sets. The reasoning is more focused on the invariant, and the search is restricted by predicates, templates, grammars, or some combination of these. Invariant inference approaches are particularly good at finding simple inductive invariants. However, they do not generalize well to a wide variety of problems. In practice, they are often used to complement other SMT-based techniques.

In this paper, we present a novel approach that extends what we call the local reasoning of IC3-style algorithms with global guidance inspired by the invariant inference algorithms described above. Our main insight is that the set of lemmas maintained by IC3-style algorithms hints at a potential global proof. However, these hints are lost in existing approaches. We observe that letting the current set of lemmas, which represent candidate global invariants, guide local reasoning by introducing new lemmas and states to be blocked is often sufficient to direct IC3 towards a better global proof.

We present and implement our results in the context of Spacer, a solver for Constrained Horn Clauses (CHC) implemented in the Z3 SMT-solver [13]. Spacer is used by multiple software model checking tools, has performed remarkably well in CHC-COMP competitions [10], and is open source. However, our results are fundamental and apply to any other IC3-style algorithm. While our implementation works with arbitrary CHC instances, we simplify the presentation by focusing on infinite state model checking of transition systems.

We illustrate the pitfalls of local reasoning using three examples shown in Fig. 1. All three examples are small, simple, and have simple inductive invariants. All three are challenging for Spacer. While these examples are based on Spacer-specific design choices, each exhibits a fundamental deficiency that stems from local reasoning. We believe they can be adapted for any other IC3-style verification algorithm. The examples assume basic familiarity with the IC3 paradigm. Readers who are not familiar with it may find it useful to read the examples after reading Sect. 2.

Fig. 1.

Verification tasks to illustrate sources of divergence for Spacer. The call nd() non-deterministically returns a Boolean value.

Myopic Generalization.

Spacer diverges on the example in Fig. 1(a) by iteratively learning lemmas of the form  for different values of k, where a, b, c, d are the program variables. These lemmas establish that there are no counterexamples of longer and longer lengths. However, the process never converges to the desired lemma  , which excludes counterexamples of any length. The lemmas are discovered using interpolation, based on proofs found by the SMT-solver. A close examination of the corresponding proofs shows that the relationship between  and  does not appear in the proofs, making it impossible to find the desired lemma by tweaking local interpolation reasoning. On the other hand, looking at the global proof (i.e., the set of lemmas discovered to refute a bounded counterexample), it is almost obvious that  is an interesting generalization to try. Amusingly, a small, syntactic, but semantics-preserving change of swapping line 2 for line 3 in Fig. 1(a) changes the SMT-solver proofs, affects local interpolation, and makes the instance trivial for Spacer.

Excessive (Predecessor) Generalization.

Spacer diverges on the example in Fig. 1(b) by computing an infinite sequence of lemmas of the form  , where a and b are program variables, and  and  are integers. The root cause is excessive generalization in predecessor computation. The $\mathit{Bad}$ states are  , and their predecessors are states such as  ,  , etc., or, more generally, regions  ,  , etc. Spacer always attempts to compute the most general predecessor states. This is the best local strategy, but blocking these regions by learning their negation leads to the aforementioned lemmas. According to the global proof, these lemmas do not converge to a linear invariant. An alternative strategy that under-approximates the problematic regions by (numerically) simpler regions and, as a result, learns simpler lemmas is desired (and is effective on this example). For example, region  can be under-approximated by  , eventually leading to a lemma  that is a part of the final invariant:  .

Stuck in a Rut. Finally, Spacer converges on the example in Fig. 1(c), but only after unrolling the system for 100 iterations. During the first 100 iterations, Spacer learns that program states with  are not reachable because a is bounded by 1 in the first iteration, by 2 in the second, and so on. In each iteration, the global proof is updated by replacing a lemma of the form  by a lemma of the form  for different values of k. Again, the strategy is good locally: the total number of lemmas does not grow and the bounded proof is improved. Yet, globally, it is clear that no progress is made since the same set of bad states is blocked again and again in slightly different ways. An alternative strategy is to abstract the literal  from the formula that represents the bad states, and, instead, conjecture that no states in  are reachable.

Our Approach: Global Guidance. As shown in the examples above, in all the cases where Spacer diverges, the missteps are not obvious locally, but are clear when the overall proof is considered. We propose three new rules, Subsume, Concretize, and Conjecture, that provide global guidance, by considering existing lemmas, to mitigate the problems illustrated above. Subsume introduces a lemma that generalizes existing ones, Concretize under-approximates partially-blocked predecessors to focus on repeatedly unblocked regions, and Conjecture over-approximates a predecessor by abstracting away regions that are repeatedly blocked. The rules are generic, and apply to arbitrary SMT theories. Furthermore, we propose an efficient instantiation of the rules for the theory of Linear Integer Arithmetic.

We have implemented the new strategy, called GSpacer, in Spacer and compared it to the original implementation of Spacer. We show that GSpacer outperforms Spacer in benchmarks from CHC-COMP 2018 and 2019. More significantly, we show that the performance is independent of interpolation. While Spacer is highly dependent on interpolation parameters, and performs poorly when interpolation is disabled, the results of GSpacer are virtually unaffected by interpolation. We also compare GSpacer to LinearArbitrary [28], a tool that infers invariants using global reasoning. GSpacer outperforms LinearArbitrary on the benchmarks from [28]. These results indicate that global guidance mitigates the shortcomings of local reasoning.

The rest of the paper is structured as follows. Sect. 2 presents the necessary background. Sect. 3 introduces our global guidance as a set of abstract inference rules. Sect. 4 describes an instantiation of the rules to Linear Integer Arithmetic (LIA). Sect. 5 presents our empirical evaluation. Finally, Sect. 7 describes related work and concludes the paper.

Background

Logic. We consider first-order logic modulo theories, and adopt the standard notation and terminology. A first-order language modulo theory $\mathcal{T}$ is defined over a signature $\Sigma$ that consists of constant, function and predicate symbols, some of which may be interpreted by $\mathcal{T}$. As always, terms are constant symbols, variables, or function symbols applied to terms; atoms are predicate symbols applied to terms; literals are atoms or their negations; cubes are conjunctions of literals; and clauses are disjunctions of literals. Unless otherwise stated, we only consider closed formulas (i.e., formulas without any free variables). As usual, we use sets of formulas and their conjunctions interchangeably.

MBP. Given a set of constants $\vec{v}$, a formula $\varphi$ and a model $M \models \varphi$, Model Based Projection (MBP) of $\varphi$ over the constants $\vec{v}$, denoted $\textsc{Mbp}(\vec{v}, \varphi, M)$, computes a model-preserving under-approximation of $\varphi$ projected onto $\Sigma \setminus \vec{v}$. That is, $\textsc{Mbp}(\vec{v}, \varphi, M)$ is a formula over $\Sigma \setminus \vec{v}$ such that $M \models \textsc{Mbp}(\vec{v}, \varphi, M)$ and any model $M' \models \textsc{Mbp}(\vec{v}, \varphi, M)$ can be extended to a model $M'' \models \varphi$ by providing an interpretation for $\vec{v}$. There are polynomial time algorithms for computing MBP in Linear Arithmetic [5, 18].
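The definition can be made concrete with a small example. The Python sketch below rests on simplifying assumptions of our own. It works over linear real arithmetic rather than LIA. Cubes contain only non-strict inequalities, and one constant is projected at a time. It keeps the lower bound on the projected constant that is largest in the model, which is the usual model-guided choice over the reals. Integer MBP, as in the cited algorithms, must also handle divisibility, and that is not shown here. The names `mbp`, `value` and `le` are hypothetical.

```python
from fractions import Fraction
from typing import Dict, List, Tuple

# A constraint sum(coeffs[v] * v) <= bound over the rationals.
Constraint = Tuple[Dict[str, Fraction], Fraction]
# An affine term sum(coeffs[v] * v) + const.
Term = Tuple[Dict[str, Fraction], Fraction]


def value(term: Term, model: Dict[str, Fraction]) -> Fraction:
    """Evaluate an affine term in a model."""
    coeffs, const = term
    return sum((c * model[v] for v, c in coeffs.items()), Fraction(0)) + const


def le(t1: Term, t2: Term) -> Constraint:
    """The constraint t1 <= t2, normalised to the form coeffs . vars <= bound."""
    coeffs = dict(t1[0])
    for v, c in t2[0].items():
        coeffs[v] = coeffs.get(v, Fraction(0)) - c
    return ({v: c for v, c in coeffs.items() if c != 0}, t2[1] - t1[1])


def mbp(var: str, cube: List[Constraint], model: Dict[str, Fraction]) -> List[Constraint]:
    """Project `var` out of `cube`, guided by `model` (which must satisfy `cube`).

    The result mentions only the other constants, is satisfied by `model`,
    and implies (exists var. cube)."""
    rest: List[Constraint] = []
    lowers: List[Term] = []  # terms t with t <= var
    uppers: List[Term] = []  # terms t with var <= t
    for coeffs, bound in cube:
        a = Fraction(coeffs.get(var, 0))
        others = {v: Fraction(c) for v, c in coeffs.items() if v != var and c != 0}
        if a == 0:
            rest.append((others, Fraction(bound)))
            continue
        # a*var + others <= bound, so var is bounded by (bound - others) / a:
        # from above if a > 0, from below if a < 0.
        term = ({v: -c / a for v, c in others.items()}, Fraction(bound) / a)
        (uppers if a > 0 else lowers).append(term)
    if not lowers:
        # No lower bound: a small enough value of var satisfies every upper bound.
        return rest
    # Use the lower bound that is largest in the model as the witness for var.
    best = max(lowers, key=lambda t: value(t, model))
    rest += [le(t, best) for t in lowers if t is not best]
    rest += [le(best, t) for t in uppers]
    return rest


# Project y out of (x <= y) and (y <= 5) under the model x = 1, y = 3.
projected = mbp("y", [({"x": 1, "y": -1}, 0), ({"y": 1}, 5)], {"x": 1, "y": 3})
# projected == [({"x": 1}, 5)], i.e. the cube x <= 5
```

The result $x \le 5$ holds in the model. It also implies $\exists y.\,(x \le y \wedge y \le 5)$, because $y := x$ is a witness.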

Interpolation. Given an unsatisfiable formula $A \wedge B$, an interpolant, denoted $\textsc{Itp}(A, B)$, is a formula I over the shared signature of A and B such that $A \Rightarrow I$ and $I \Rightarrow \neg B$.
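The two interpolant conditions can be checked by brute force on a bounded domain. The sketch below only illustrates the definition and is not an interpolation procedure. The formulas A and B, the integer box, and the names `is_interpolant`, `formula_a` and `formula_b` are hypothetical. The shared-signature condition is not checked by the code.

```python
from itertools import product
from typing import Callable, Dict, Iterable

Env = Dict[str, int]
Formula = Callable[[Env], bool]


def is_interpolant(a: Formula, b: Formula, itp: Formula, box: Iterable[Env]) -> bool:
    """Check A => I and I => not B on every assignment in the finite `box`.

    Only a bounded sanity check: it says nothing outside `box`, and it does not
    check that I mentions only symbols shared by A and B."""
    for env in box:
        if a(env) and not itp(env):
            return False  # A => I fails at env
        if itp(env) and b(env):
            return False  # I => not B fails at env
    return True


# Hypothetical example: A = (x <= 0 and y = x), B = (y >= 1 and z = y); shared: y.
BOX = [dict(zip("xyz", vals)) for vals in product(range(-5, 6), repeat=3)]


def formula_a(e: Env) -> bool:
    return e["x"] <= 0 and e["y"] == e["x"]


def formula_b(e: Env) -> bool:
    return e["y"] >= 1 and e["z"] == e["y"]


print(is_interpolant(formula_a, formula_b, lambda e: e["y"] <= 0, BOX))  # True
```

Here $y \le 0$ passes both checks and mentions only the shared symbol y. A weaker candidate such as $y \le 5$ fails, because it is consistent with B.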

Safety Problem. A transition system is a pair $\langle \mathit{Init}, \mathit{Tr} \rangle$, where $\mathit{Init}$ is a formula over $\Sigma$ and $\mathit{Tr}$ is a formula over $\Sigma \cup \Sigma'$, where $\Sigma' = \{s' \mid s \in \Sigma\}$.¹ The states of the system correspond to structures over $\Sigma$, $\mathit{Init}$ represents the initial states and $\mathit{Tr}$ represents the transition relation, where $\Sigma$ is used to represent the pre-state of a transition, and $\Sigma'$ is used to represent the post-state. For a formula $\varphi$ over $\Sigma$, we denote by $\varphi'$ the formula obtained by substituting each $s \in \Sigma$ by $s' \in \Sigma'$. A safety problem is a triple $\langle \mathit{Init}, \mathit{Tr}, \mathit{Bad} \rangle$, where $\langle \mathit{Init}, \mathit{Tr} \rangle$ is a transition system and $\mathit{Bad}$ is a formula over $\Sigma$ representing a set of bad states.

The safety problem $\langle \mathit{Init}, \mathit{Tr}, \mathit{Bad} \rangle$ has a counterexample of length k if the following formula is satisfiable: $\mathit{Init}^0 \wedge \bigwedge_{i=0}^{k-1} \mathit{Tr}^i \wedge \mathit{Bad}^k$, where $\varphi^i$ is defined over $\Sigma^i$ (a copy of the signature used to represent the state of the system after the execution of i steps) and is obtained from $\varphi$ by substituting each $s \in \Sigma$ by $s^i \in \Sigma^i$, and $\mathit{Tr}^i$ is obtained from $\mathit{Tr}$ by substituting $s \in \Sigma$ by $s^i \in \Sigma^i$ and $s' \in \Sigma'$ by $s^{i+1} \in \Sigma^{i+1}$. The transition system is safe if the safety problem has no counterexample, of any length.
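For a finite, explicitly enumerated system, the length-k formula is satisfiable exactly when a $\mathit{Bad}$ state is reachable from an initial state in exactly k steps. The sketch below illustrates that reading. The integer-counter toy system and the names `has_cex_of_length`, `init`, `succ` and `bad` are hypothetical. SMT-based model checkers answer the same question symbolically, not by enumerating states.

```python
from typing import Callable, Iterable, Set

State = int  # hypothetical toy encoding: a state is a single integer x


def has_cex_of_length(k: int,
                      init: Callable[[State], bool],
                      succ: Callable[[State], Iterable[State]],
                      bad: Callable[[State], bool],
                      candidates: Iterable[State]) -> bool:
    """Explicit-state reading of Init^0 and Tr^0 and ... and Tr^(k-1) and Bad^k:
    is some Bad state reachable from an initial state in exactly k steps?
    Initial states are enumerated from the finite `candidates`."""
    layer: Set[State] = {s for s in candidates if init(s)}  # states after 0 steps
    for _ in range(k):
        layer = {t for s in layer for t in succ(s)}        # states after one more step
    return any(bad(s) for s in layer)


# Toy system: x starts at 0 and grows by 1 or 2 per step; Bad is x = 3.
def init(x: State) -> bool:
    return x == 0


def succ(x: State) -> Iterable[State]:
    return (x + 1, x + 2)


def bad(x: State) -> bool:
    return x == 3


# Safety would need this to be False for every k; a loop can only try finitely many.
print([k for k in range(4) if has_cex_of_length(k, init, succ, bad, range(10))])
# prints [2, 3]
```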

Inductive Invariants. An inductive invariant is a formula $\mathit{Inv}$ over $\Sigma$ such that (i) $\mathit{Init} \Rightarrow \mathit{Inv}$, (ii) $\mathit{Inv} \wedge \mathit{Tr} \Rightarrow \mathit{Inv}'$, and (iii) $\mathit{Inv} \Rightarrow \neg \mathit{Bad}$. If such an inductive invariant exists, then the transition system is safe.
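Conditions (i) to (iii) can be checked directly when the states can be enumerated. The following bounded sketch illustrates this under assumptions of our own. States are integers, formulas are Python predicates, and a finite domain stands in for "all states". The toy system and the names `is_inductive_invariant` and `even_nonneg` are hypothetical.

```python
from typing import Callable, Iterable

Pred = Callable[[int], bool]


def is_inductive_invariant(inv: Pred, init: Pred, succ, bad: Pred,
                           domain: Iterable[int]) -> bool:
    """Bounded check of conditions (i)-(iii); quantifiers range over `domain` only."""
    states = list(domain)
    initiation = all(inv(s) for s in states if init(s))                   # (i)
    consecution = all(inv(t) for s in states if inv(s) for t in succ(s))  # (ii)
    safety = not any(bad(s) for s in states if inv(s))                    # (iii)
    return initiation and consecution and safety


# Toy system: x starts at 0 and is incremented by 2; Bad is x = 7.
def init(x: int) -> bool:
    return x == 0


def succ(x: int):
    return (x + 2,)


def bad(x: int) -> bool:
    return x == 7


def even_nonneg(x: int) -> bool:
    return x >= 0 and x % 2 == 0


print(is_inductive_invariant(even_nonneg, init, succ, bad, range(-20, 50)))  # True
```

The candidate $x \ge 0$ alone satisfies (i) and (ii) but fails (iii). The candidate "even and at most 10" fails (ii).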

Spacer. The safety problem defined above is an instance of a more general problem, CHC-SAT, of satisfiability of Constrained Horn Clauses (CHC). Spacer is a semi-decision procedure for CHC-SAT. However, to simplify the presentation, we describe the algorithm only for the particular case of the safety problem. We stress that Spacer, as well as the developments of this paper, apply to the more general setting of CHCs (both linear and non-linear). We assume that the only uninterpreted symbols in $\Sigma$ are constant symbols, which we denote $\vec{x}$. Typically, these represent program variables. Without loss of generality, we assume that $\mathit{Bad}$ is a cube.

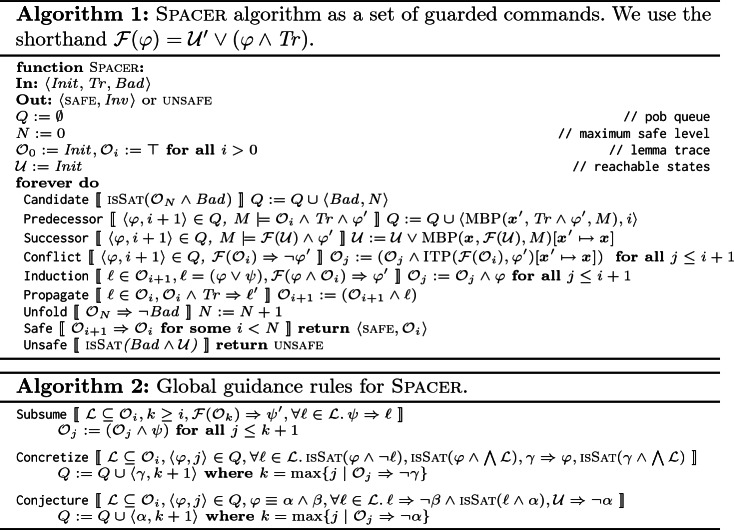

Algorithm 1 presents the key ingredients of Spacer as a set of guarded commands (or rules). It maintains the following: (1) the current unrolling depth N at which a counterexample is searched (there are no counterexamples with depth less than N); (2) a trace $\mathcal{O} = (\mathcal{O}_0, \ldots, \mathcal{O}_N)$ of frames, such that each frame $\mathcal{O}_i$ is a set of lemmas, and each lemma $\ell \in \mathcal{O}_i$ is a clause; (3) a queue of proof obligations Q, where each proof obligation (pob) in Q is a pair $\langle \varphi, i \rangle$ of a cube $\varphi$ and a level number i, $0 \le i \le N$; and (4) an under-approximation $\mathcal{U}$ of reachable states. Intuitively, each frame $\mathcal{O}_i$ is a candidate inductive invariant s.t. $\mathcal{O}_i$ over-approximates states reachable up to i steps from $\mathit{Init}$. The latter is ensured since $\mathcal{O}_0 = \mathit{Init}$, the trace is monotone, i.e., $\mathcal{O}_{i+1} \subseteq \mathcal{O}_i$ (as sets of lemmas, so $\mathcal{O}_i \Rightarrow \mathcal{O}_{i+1}$), and each frame is inductive relative to its previous one, i.e., $\mathcal{O}_i \wedge \mathit{Tr} \Rightarrow \mathcal{O}'_{i+1}$. Each pob $\langle \varphi, i \rangle$ in Q corresponds to a suffix of a potential counterexample that has to be blocked in $\mathcal{O}_i$, i.e., has to be proven unreachable in i steps.
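The three trace properties can be checked mechanically when the state space is small and explicit. The sketch below illustrates this under assumptions of our own. States are integers, lemmas are Python predicates, frames are lists of lemmas, and a finite domain stands in for all states. The names `check_trace`, `frame_holds`, `ge0`, `le0` and `le1` are hypothetical and are not part of Spacer.

```python
from typing import Callable, Iterable, List

Pred = Callable[[int], bool]  # a lemma over a single integer x (toy encoding)


def frame_holds(frame: List[Pred], s: int) -> bool:
    """A frame is the conjunction of its lemmas."""
    return all(lemma(s) for lemma in frame)


def check_trace(trace: List[List[Pred]], init: Pred, succ, domain: Iterable[int]) -> bool:
    """Bounded check of the three trace properties on a finite domain."""
    states = list(domain)
    # O_0 = Init (compared semantically on the domain)
    ok_init = all(frame_holds(trace[0], s) == init(s) for s in states)
    # monotone: O_{i+1} is a subset of O_i as a set of lemmas
    ok_mono = all(set(trace[i + 1]) <= set(trace[i]) for i in range(len(trace) - 1))
    # relative induction: O_i and Tr imply O'_{i+1}
    ok_rel = all(frame_holds(trace[i + 1], t)
                 for i in range(len(trace) - 1)
                 for s in states if frame_holds(trace[i], s)
                 for t in succ(s))
    return ok_init and ok_mono and ok_rel


def ge0(x: int) -> bool:
    return x >= 0


def le0(x: int) -> bool:
    return x <= 0


def le1(x: int) -> bool:
    return x <= 1


def init(x: int) -> bool:
    return x == 0


def succ(x: int):
    return (x + 1,)


print(check_trace([[ge0, le0, le1], [ge0, le1], [ge0]], init, succ, range(-5, 10)))  # True
```

Replacing the middle frame by `[ge0, le0]` keeps the trace monotone but breaks relative induction. The step from 0 to 1 leaves the frame.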

The Candidate rule adds an initial pob $\langle \mathit{Bad}, N \rangle$ to the queue. If a pob $\langle \varphi, i \rangle$ cannot be blocked because $\varphi$ is reachable from frame $\mathcal{O}_{i-1}$, the Predecessor rule generates a predecessor $\psi$ of $\varphi$ using MBP and adds $\langle \psi, i-1 \rangle$ to Q. The Successor rule updates the set of reachable states if the pob is reachable. If the pob is blocked, the Conflict rule strengthens the trace $\mathcal{O}$ by using interpolation to learn a new lemma $\ell$ that blocks the pob, i.e., $\ell$ implies $\neg\varphi$. The Induction rule strengthens a lemma by inductive generalization and the Propagate rule pushes a lemma to a higher frame. If the $\mathit{Bad}$ state has been blocked at N, the Unfold rule increments the depth of unrolling N. In practice, the rules are scheduled to ensure progress towards finding a counterexample.

Global Guidance of Local Proofs

As illustrated by the examples in Fig. 1, while Spacer is generally effective, its local reasoning is easily confused. Its effectiveness depends heavily on the local computation of predecessors using model-based projection, and of lemmas using interpolation. In this section, we extend Spacer with three additional global reasoning rules, inspired by the deficiencies illustrated by the motivating examples in Fig. 1. We present the rules abstractly, independent of any underlying theory, focusing on pre- and post-conditions. In Sect. 4, we specialize the rules for Linear Integer Arithmetic, and show how they are scheduled with the other rules of Spacer in an efficient verification algorithm. The new global rules are summarized in Algorithm 2. We use the same guarded command notation as in the description of Spacer in Algorithm 1. Note that the rules supplement, rather than replace, the ones in Algorithm 1.

Subsume is the most natural rule to explain. It says that if there is a set of lemmas $\mathcal{L}$ at level i, and there exists a formula $\psi$ such that (a) $\psi$ is stronger than every lemma in $\mathcal{L}$, and (b) $\psi$ over-approximates states reachable in at most k steps, where $k \ge i$, then $\psi$ can be added to the trace to subsume $\mathcal{L}$. This rule reduces the size of the global proof, that is, the total number of non-subsumed lemmas. Note that the rule allows $\psi$ to be at a level k that is higher than i. The choice of $\psi$ is left open. The details are likely to be specific to the theory involved. For example, when instantiated for LIA, Subsume is sufficient to solve the example in Fig. 1(a). Interestingly, Subsume is not likely to be effective for propositional IC3. In that case, $\psi$ is a clause and the only way for it to be stronger than $\mathcal{L}$ is for $\psi$ to be a syntactic sub-sequence of every lemma in $\mathcal{L}$, but such a $\psi$ is already explored by local inductive generalization (rule Induction in Algorithm 1).
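The Subsume pre-condition can be illustrated on an explicitly enumerated toy system. The sketch below is a bounded check under assumptions of our own. Formulas are Python predicates over a small finite box of states. The candidate $\psi$ is supplied by hand, since the rule leaves its choice open (Sect. 4.3 instantiates it for LIA). The toy system, the lemmas of the form $(a \le k) \Rightarrow (b \le k)$, and the names `reachable_within` and `subsume_applies` are hypothetical.

```python
from itertools import product
from typing import Callable, Iterable, List, Set, Tuple

State = Tuple[int, int]          # hypothetical toy state (a, b)
Pred = Callable[[State], bool]   # a formula, given as its characteristic function


def reachable_within(k: int, init: Pred, succ, domain: Iterable[State]) -> Set[State]:
    """States reachable from `init` in at most k steps (initial states taken from `domain`)."""
    reach = {s for s in domain if init(s)}
    frontier = set(reach)
    for _ in range(k):
        frontier = {t for s in frontier for t in succ(s)} - reach
        reach |= frontier
    return reach


def subsume_applies(psi: Pred, lemmas: List[Pred], k: int, init: Pred, succ,
                    domain: Iterable[State]) -> bool:
    """Bounded check of the Subsume pre-condition for a candidate psi."""
    states = list(domain)
    # (a) psi is stronger than every lemma: psi => l for each l in L
    stronger = all(lemma(s) for s in states if psi(s) for lemma in lemmas)
    # (b) psi over-approximates the states reachable in at most k steps
    over_approx = all(psi(s) for s in reachable_within(k, init, succ, states))
    return stronger and over_approx


# Toy system: a and b start at 0 and are incremented together.
def init(s: State) -> bool:
    return s == (0, 0)


def succ(s: State):
    return ((s[0] + 1, s[1] + 1),)


# Hypothetical lemmas (a <= k) => (b <= k) for k = 0..3, playing the role of L.
LEMMAS = [lambda s, k=k: s[0] > k or s[1] <= k for k in range(4)]
DOMAIN = list(product(range(-3, 8), repeat=2))

print(subsume_applies(lambda s: s[1] <= s[0], LEMMAS, 5, init, succ, DOMAIN))  # True
```

Here the single lemma $b \le a$ replaces all four lemmas. The weaker candidate $b \le a + 1$ fails condition (a), and the too-strong $b < a$ fails condition (b).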

Concretize applies to a pob, unlike Subsume. It is motivated by the example in Fig. 1(b), which highlights the problem of excessive local generalization. Spacer always computes predecessors that are as general as possible. This is necessary for refutational completeness since in an infinite state system there are infinitely many potential predecessors. Computing the most general predecessor ensures that Spacer finds a counterexample, if it exists. However, this also forces Spacer to discover more general, and sometimes more complex, lemmas than might be necessary for an inductive invariant. Without a global view of the overall proof, it is hard to determine when the algorithm generalizes too much. The intuition for Concretize is that generalization is excessive when there is a single pob $\langle \varphi, j \rangle$ that is not blocked, yet there is a set of lemmas $\mathcal{L}$ such that every lemma $\ell \in \mathcal{L}$ partially blocks $\varphi$. That is, for any $\ell \in \mathcal{L}$, there is a sub-region $\varphi_\ell$ of pob $\varphi$ that is blocked by $\ell$ (i.e., $\ell \Rightarrow \neg\varphi_\ell$), and there is at least one state $s \in \varphi$ that is not blocked by any existing lemma in $\mathcal{L}$ (i.e., $s \models \varphi \wedge \bigwedge \mathcal{L}$). In this case, Concretize computes an under-approximation $\gamma$ of $\varphi$ that includes some not-yet-blocked state s. The new pob is added to the lowest level at which $\gamma$ is not yet blocked. Concretize is useful to solve the example in Fig. 1(b).
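Here is a bounded, explicit-state sketch of the Concretize pre-condition. It also shows one admissible choice of $\gamma$: the part of the pob that no lemma in $\mathcal{L}$ blocks yet. This choice, the toy pob and lemmas, and the name `concretize` are assumptions for illustration. The sketch does not reproduce the LIA instantiation of Sect. 4.4, and it does not model the level at which the new pob is added.

```python
from itertools import product
from typing import Callable, Iterable, List, Optional, Set, Tuple

State = Tuple[int, int]          # hypothetical toy state (a, b)
Pred = Callable[[State], bool]


def concretize(pob: Pred, lemmas: List[Pred], domain: Iterable[State]) -> Optional[Set[State]]:
    """If every lemma blocks part of `pob` but some pob state survives all
    lemmas, return an under-approximation gamma of the pob made of such
    states; otherwise return None (the pre-condition does not hold)."""
    pob_states = [s for s in domain if pob(s)]
    # each lemma blocks a non-empty sub-region of the pob
    partially = bool(lemmas) and all(
        any(not lemma(s) for s in pob_states) for lemma in lemmas)
    # pob states that satisfy every lemma, i.e. are not blocked yet
    unblocked = {s for s in pob_states if all(lemma(s) for lemma in lemmas)}
    if not partially or not unblocked:
        return None
    # One simple choice of gamma: the whole not-yet-blocked part of the pob.
    return unblocked


DOMAIN = list(product(range(-3, 4), repeat=2))
gamma = concretize(lambda s: s[0] + s[1] <= -1,
                   [lambda s: s[0] >= -1, lambda s: s[1] >= -1], DOMAIN)
print(sorted(gamma))  # [(-1, -1), (-1, 0), (0, -1)]
```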

Conjecture guides the algorithm away from being stuck in the same part of the search space. A single pob $\varphi$ might be blocked by a different lemma at each level that $\varphi$ appears in. This indicates that the lemmas are too strong, and cannot be propagated successfully to a higher level. The goal of the Conjecture rule is to identify such a case and guide the algorithm to explore alternative proofs with a better potential for generalization. This is done by abstracting away the part of the pob that has been blocked in the past. The pre-condition for Conjecture has two parts. First, there is a pob $\langle \varphi, j \rangle$ such that $\varphi$ is split into two (not necessarily disjoint) sets of literals, $\alpha$ and $\beta$. Second, there must be a set of lemmas $\mathcal{L}$, at a (typically much lower) level $i < j$, such that every lemma $\ell \in \mathcal{L}$ blocks $\varphi$, and, moreover, blocks $\varphi$ by blocking $\beta$ (i.e., $\ell \Rightarrow \neg\beta$). Intuitively, this implies that while there are many different lemmas (i.e., all lemmas in $\mathcal{L}$) that block $\varphi$ at different levels, all of them correspond to a local generalization of $\neg\beta$ that could not be propagated to block $\varphi$ at higher levels. In this case, Conjecture abstracts the pob $\varphi$ into $\alpha$, hoping to generate an alternative way to block $\varphi$. Of course, $\alpha$ is conjectured only if it is not already blocked and does not contain any known reachable states. Conjecture is necessary for a quick convergence on the example in Fig. 1(c). In some respect, Conjecture is akin to widening in Abstract Interpretation [12]: it abstracts a set of states by dropping constraints that appear to prevent further exploration. Of course, it is also quite different since it does not guarantee termination. While Conjecture is applicable to propositional IC3 as well, it is much more significant in the SMT-based setting since in many FOL theories a single literal in a pob might result in infinitely many distinct lemmas.
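The Conjecture pre-condition can be sketched the same way. In this bounded, explicit-state illustration, a pob is a list of literal predicates split into `alpha` and `beta`. `frame` stands for the lemmas at the pob's level, and `reached` for the known reachable states $\mathcal{U}$. The toy literals and lemmas, the omission of levels, and the names `conjecture` and `holds` are assumptions made for the example only.

```python
from itertools import product
from typing import Callable, Iterable, List, Optional, Set, Tuple

State = Tuple[int, int]          # hypothetical toy state (a, b)
Pred = Callable[[State], bool]


def holds(cube: List[Pred], s: State) -> bool:
    """A cube is the conjunction of its literals."""
    return all(lit(s) for lit in cube)


def conjecture(alpha: List[Pred], beta: List[Pred], lemmas: List[Pred],
               frame: List[Pred], reached: Set[State],
               domain: Iterable[State]) -> Optional[List[Pred]]:
    """Bounded sketch of Conjecture for a pob alpha-and-beta.
    Returns alpha as the conjectured pob, or None."""
    states = list(domain)
    # every lemma in L blocks the pob by blocking beta: lemma => not beta
    via_beta = bool(lemmas) and all(
        not lemma(s) for lemma in lemmas for s in states if holds(beta, s))
    if not via_beta:
        return None
    # alpha is not already blocked: some alpha-state satisfies the frame
    not_blocked = any(holds(alpha, s) and holds(frame, s) for s in states)
    # alpha contains no known reachable state
    no_reached = not any(holds(alpha, s) for s in reached)
    return alpha if not_blocked and no_reached else None


DOMAIN = list(product(range(12), repeat=2))
ALPHA = [lambda s: s[1] >= 10]                           # literals that are kept
BETA = [lambda s: s[0] >= 5]                             # literals the lemmas keep blocking
LEMMAS = [lambda s, k=k: s[0] <= k for k in (1, 2, 3)]   # a <= 1, a <= 2, a <= 3
FRAME = [lambda s: s[0] <= 3]
REACHED = {(0, 0), (1, 0)}
print(conjecture(ALPHA, BETA, LEMMAS, FRAME, REACHED, DOMAIN) is ALPHA)  # True
```

A lemma such as $b \le 9$ blocks the pob through $\alpha$, not $\beta$, so the pre-condition fails for it.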

Each of the rules can be applied by itself, but they are most effective in combination. For example, Concretize creates less general predecessors that, in the worst case, lead to many simple lemmas. At the same time, Subsume combines lemmas into more complex ones. The interaction of the two produces lemmas that neither one can produce in isolation. Meanwhile, Conjecture helps the algorithm get unstuck from a single unproductive pob, allowing the other rules to take effect.

Global Guidance for Linear Integer Arithmetic

In this section, we present a specialization of our general rules, shown in Algorithm 2, to the theory of Linear Integer Arithmetic (LIA). This requires solving two problems: identifying subsets of lemmas for pre-conditions of the rules (clearly using all possible subsets is too expensive), and applying the rule once its pre-condition is met. For lemma selection, we introduce a notion of syntactic clustering based on anti-unification. For rule application, we exploit basic properties of LIA for an effective algorithm. Our presentation is focused on LIA exclusively. However, the rules extend to combinations of LIA with other theories, such as the combined theory of LIA and Arrays.

The rest of this section is structured as follows. We begin with a brief background on LIA in Sect. 4.1. We then present our lemma selection scheme, which is common to all the rules, in Sect. 4.2, followed by a description of how the rules Subsume (in Sect. 4.3), Concretize (in Sect. 4.4), and Conjecture (in Sect. 4.5) are instantiated for LIA. We conclude in Sect. 4.6 with an algorithm that integrates all the rules together.

Linear Integer Arithmetic: Background

In the theory of Linear Integer Arithmetic (LIA), formulas are defined over a signature that includes interpreted function symbols $+$, $-$, $\times$, interpreted predicate symbols $<$, $\le$, $\mid$, interpreted constant symbols $0, 1, 2, \ldots$, and uninterpreted constant symbols $a, b, \ldots, x, y, \ldots$. We write $\mathbb{Z}$ for the set of interpreted constant symbols, and call them integers. We use constants to refer exclusively to the uninterpreted constants (these are often called variables in LIA literature). Terms (and accordingly formulas) in LIA are restricted to be linear, that is, multiplication is never applied to two constants.

We write  for the fragment of LIA that excludes divisiblity (d

for the fragment of LIA that excludes divisiblity (d h) predicates. A literal in

h) predicates. A literal in  is a linear inequality; a cube is a conjunction of such inequalities, that is, a polytope. We find it convenient to use matrix-based notation for representing cubes in

is a linear inequality; a cube is a conjunction of such inequalities, that is, a polytope. We find it convenient to use matrix-based notation for representing cubes in  . A ground cube

. A ground cube  with p inequalities (literals) over k (uninterpreted) constants is written as

with p inequalities (literals) over k (uninterpreted) constants is written as  , where A is a

, where A is a  matrix of coefficients in

matrix of coefficients in  ,

,  is a column vector that consists of the (uninterpreted) constants, and

is a column vector that consists of the (uninterpreted) constants, and  is a column vector in

is a column vector in  . For example, the cube

. For example, the cube  is written as

is written as  In the sequel, all vectors are column vectors, super-script T denotes transpose, dot is used for a dot product and

In the sequel, all vectors are column vectors, super-script T denotes transpose, dot is used for a dot product and  stands for a matrix of column vectors

stands for a matrix of column vectors  and

and  .

.

Lemma Selection

A common pre-condition for all of our global rules in Algorithm 2 is the existence of a subset of lemmas $\mathcal{L} \subseteq F_i$ of some frame $F_i$. Attempting to apply the rules for every subset of $F_i$ is infeasible. In practice, we use syntactic similarity between lemmas as a predictor that one of the global rules is applicable, and restrict $\mathcal{L}$ to subsets of syntactically similar lemmas. In the rest of this section, we formally define what we mean by syntactic similarity, and how syntactically similar subsets of lemmas, called clusters, are maintained efficiently throughout the algorithm.

Syntactic Similarity. A formula $\pi$ with free variables is called a pattern. Note that we do not require $\pi$ to be in LIA. Let $\sigma$ be a substitution, i.e., a mapping from variables to terms. We write $\pi[\sigma]$ for the result of replacing all occurrences of free variables in $\pi$ with their mapping under $\sigma$. A substitution $\sigma$ is called numeric if it maps every variable to an integer, i.e., the range of $\sigma$ is $\mathbb{Z}$. We say that a formula $\varphi$ numerically matches a pattern $\pi$ iff there exists a numeric substitution $\sigma$ such that $\varphi = \pi[\sigma]$. Note that, as usual, the equality is syntactic. For example, consider the pattern $\pi(v_0, v_1) \triangleq v_0 a + v_1 b \le 0$ with free variables $v_0$ and $v_1$ and uninterpreted constants $a$ and $b$. The formula $\varphi_1 \triangleq 3a + 4b \le 0$ matches $\pi$ via the numeric substitution $\sigma_1 = \{v_0 \mapsto 3, v_1 \mapsto 4\}$. However, $\varphi_2 \triangleq 4b + 3a \le 0$, while semantically equivalent to $\varphi_1$, does not match $\pi$. Similarly, $\varphi_3 \triangleq a + b \le 0$ does not match $\pi$ either.

Matching is extended to patterns in the usual way by allowing a substitution $\sigma$ to map variables to variables. We say that a pattern $\pi_1$ is more general than a pattern $\pi_2$ if $\pi_2$ matches $\pi_1$. A pattern $\pi$ is a numeric anti-unifier for a pair of formulas $\varphi_1$ and $\varphi_2$ if both $\varphi_1$ and $\varphi_2$ match $\pi$ numerically. We write $\mathit{anti}(\varphi_1, \varphi_2)$ for a most general numeric anti-unifier of $\varphi_1$ and $\varphi_2$. We say that two formulas $\varphi_1$ and $\varphi_2$ are syntactically similar if there exists a numeric anti-unifier between them (i.e., $\mathit{anti}(\varphi_1, \varphi_2)$ is defined). Anti-unification is extended to sets of formulas in the usual way.
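The definitions of numeric matching and anti-unification can be illustrated with a small Python sketch. The tuple encoding of formulas is a hypothetical choice made for this example, not the representation used in Spacer. `numeric_match` returns the numeric substitution $\sigma$ when it exists. `anti_unify` abstracts only the numeral positions at which the two formulas disagree and reuses one variable per distinct pair of numerals; this particular choice of anti-unifier is our assumption. No rewriting (for example, commutativity of $+$) is applied, so matching is purely syntactic.

```python
from itertools import count

# Hypothetical encoding: a term is an int (numeral), a str (an uninterpreted
# constant such as "a"), or a tuple (op, arg1, arg2, ...). Pattern variables
# are strings that start with "?", e.g. "?v0".


def numeric_match(phi, pattern):
    """Return a numeric substitution sigma with pattern[sigma] == phi, else None."""
    sigma = {}

    def go(p, f):
        if isinstance(p, str) and p.startswith("?"):
            # A pattern variable may only be bound to a numeral, consistently.
            return isinstance(f, int) and sigma.setdefault(p, f) == f
        if isinstance(p, tuple) and isinstance(f, tuple):
            return (p[0] == f[0] and len(p) == len(f)
                    and all(go(a, b) for a, b in zip(p[1:], f[1:])))
        return p == f  # constants and numerals must be syntactically equal

    return sigma if go(pattern, phi) else None


def anti_unify(t1, t2):
    """Return a numeric anti-unifier of t1 and t2, or None if none exists."""
    fresh = count()
    table = {}  # (numeral in t1, numeral in t2) -> pattern variable

    def go(s, t):
        if isinstance(s, int) and isinstance(t, int):
            if s == t:
                return s
            if (s, t) not in table:
                table[(s, t)] = f"?v{next(fresh)}"
            return table[(s, t)]
        if isinstance(s, tuple) and isinstance(t, tuple):
            if s[0] != t[0] or len(s) != len(t):
                return None
            args = [go(a, b) for a, b in zip(s[1:], t[1:])]
            return None if any(a is None for a in args) else (s[0], *args)
        return s if s == t else None  # differing non-numerals: not numeric

    return go(t1, t2)


pi = ("<=", ("+", ("*", "?v0", "a"), ("*", "?v1", "b")), 0)
phi1 = ("<=", ("+", ("*", 3, "a"), ("*", 4, "b")), 0)  # 3a + 4b <= 0
phi2 = ("<=", ("+", ("*", 4, "b"), ("*", 3, "a")), 0)  # 4b + 3a <= 0
phi3 = ("<=", ("+", "a", "b"), 0)                      # a + b <= 0
print(numeric_match(phi1, pi))  # {'?v0': 3, '?v1': 4}
print(numeric_match(phi2, pi))  # None
print(numeric_match(phi3, pi))  # None
print(anti_unify(phi1, phi3))   # None
```

Because the sketch does no normalization, $\varphi_2$ and $\varphi_3$ fail to match even though $\varphi_2$ is equivalent to $\varphi_1$.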

Clusters. We use anti-unification to define clusters of syntactically similar formulas. Let $\Phi$ be a fixed set of formulas, and $\pi$ a pattern. A cluster, $\mathcal{C}_\Phi(\pi)$, is a subset of $\Phi$ such that every formula $\varphi \in \mathcal{C}_\Phi(\pi)$ numerically matches $\pi$. That is, $\pi$ is a numeric anti-unifier for $\mathcal{C}_\Phi(\pi)$. In the implementation, we restrict the pre-conditions of the global rules so that a subset of lemmas $\mathcal{L} \subseteq F_i$ is a cluster for some pattern $\pi$, i.e., $\mathcal{L} = \mathcal{C}_{F_i}(\pi)$.

Clustering Lemmas. We use the following strategy to efficiently keep track of available clusters. Let $\ell_{\mathit{new}}$ be a new lemma to be added to $F_i$. Assume there is at least one lemma $\ell \in F_i$ that numerically anti-unifies with $\ell_{\mathit{new}}$ via some pattern $\pi$. If such an $\ell$ does not belong to any cluster, a new cluster $\mathcal{C}_{F_i}(\pi) = \{\ell_{\mathit{new}}, \ell\}$ is formed, where $\pi = \mathit{anti}(\ell_{\mathit{new}}, \ell)$. Otherwise, for every lemma $\ell \in F_i$ that numerically anti-unifies with $\ell_{\mathit{new}}$ and every cluster $\mathcal{C}_{F_i}(\hat{\pi})$ containing $\ell$, $\ell_{\mathit{new}}$ is added to $\mathcal{C}_{F_i}(\hat{\pi})$ if $\ell_{\mathit{new}}$ matches $\hat{\pi}$, or a new cluster is formed using $\ell$, $\ell_{\mathit{new}}$, and any other lemmas in $\mathcal{C}_{F_i}(\hat{\pi})$ that anti-unify with them. Note that a new lemma $\ell_{\mathit{new}}$ might belong to multiple clusters.

For example, suppose $\ell_{\mathit{new}} \triangleq \neg(x \le 2 \land y \le 3)$, and there is already a cluster $\mathcal{C}_{F_i}(\pi) = \{\neg(x \le 0 \land y \le 0), \neg(x \le 5 \land y \le 5)\}$, where $\pi(v_0) \triangleq \neg(x \le v_0 \land y \le v_0)$. Since $\ell_{\mathit{new}}$ anti-unifies with each of the lemmas in the cluster, but does not match the pattern $\pi$, a new cluster that includes all of them is formed w.r.t. a more general pattern: $\pi'(v_0, v_1) \triangleq \neg(x \le v_0 \land y \le v_1)$.

In the presentation above, we assumed that anti-unification is completely syntactic. This is problematic in practice since it significantly limits the applicability of the global rules. Recall, for example, that $3a + 4b \le 0$ and $a + b \le 0$ do not anti-unify numerically according to our definitions, and, therefore, do not cluster together. In practice, we augment syntactic anti-unification with simple rewrite rules that are applied greedily. For example, we normalize all terms, take care of implicit multiplication by 1, and of associativity and commutativity of addition. In the future, it is interesting to explore how advanced anti-unification algorithms, such as [8, 27], can be adapted for our purpose.

Subsume Rule for LIA

Recall that the Subsume rule (Algorithm 2) takes a cluster of lemmas $\mathcal{L} = \mathcal{C}_{F_i}(\pi)$ and computes a new lemma $\ell$ that subsumes all the lemmas in $\mathcal{L}$, that is $\ell \Rightarrow \bigwedge \mathcal{L}$. We find it convenient to dualize the problem. Let $\mathcal{S} = \{\neg \ell' \mid \ell' \in \mathcal{L}\}$ be the dual of $\mathcal{L}$; clearly $\ell \Rightarrow \bigwedge \mathcal{L}$ iff $\bigvee \mathcal{S} \Rightarrow \neg \ell$. Note that $\mathcal{L}$ is a set of clauses, $\mathcal{S}$ is a set of cubes, $\ell$ is a clause, and $\neg \ell$ is a cube. In the case of $\mathrm{LIA}^{-\mathrm{div}}$, this means that $\bigvee \mathcal{S}$ represents a union of convex sets, and $\neg \ell$ represents a convex set that the Subsume rule must find. The strongest such $\neg \ell$ in $\mathrm{LIA}^{-\mathrm{div}}$ exists, and is the convex closure of $\mathcal{S}$. Thus, applying Subsume in the context of $\mathrm{LIA}^{-\mathrm{div}}$ is reduced to computing a convex closure of a set of (negated) lemmas in a cluster. Full LIA extends $\mathrm{LIA}^{-\mathrm{div}}$ with divisibility constraints. Therefore, Subsume obtains a stronger $\neg \ell$ by adding such constraints.

Example 1

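For example, consider the following cluster:

$$\mathcal{L} = \{\neg(x \le 2 \land -x \le -2 \land y \le 3),\ \neg(x \le 4 \land -x \le -4 \land y \le 5),\ \neg(x \le 8 \land -x \le -8 \land y \le 9)\}$$
$$\pi(v_1, v_2, v_3) \triangleq \neg(x \le v_1 \land -x \le v_2 \land y \le v_3)$$

Writing $x = n$ for $x \le n \land -x \le -n$, the convex closure of $\mathcal{S} = \{x = 2 \land y \le 3,\ x = 4 \land y \le 5,\ x = 8 \land y \le 9\}$ in $\mathrm{LIA}^{-\mathrm{div}}$ is $2 \le x \le 8 \land y \le x + 1$. However, a stronger over-approximation exists in LIA: $2 \le x \le 8 \land y \le x + 1 \land 2 \mid x$.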

In the sequel, we describe subsumeCube (Algorithm 3), which computes a cube $\varphi$ that over-approximates $\bigvee \mathcal{S}$. Subsume is then implemented by removing from $\mathcal{L}$ lemmas that are already subsumed by existing lemmas in $F_i$, dualizing the result into $\mathcal{S}$, invoking subsumeCube on $\mathcal{S}$ and returning $\neg \varphi$ as a lemma that subsumes $\mathcal{L}$.

Recall that Subsume is tried only in the case $\mathcal{L} \subseteq \mathrm{LIA}^{-\mathrm{div}}$. We further require that the negated pattern, $\neg \pi$, is of the form $A \cdot \vec{x} \le \vec{v}$, where $A$ is a coefficients matrix, $\vec{x}$ is a vector of constants and $\vec{v} = (v_1 \cdots v_p)^T$ is a vector of $p$ free variables. Under this assumption, $\mathcal{S}$ (the dual of $\mathcal{L}$) is of the form $\{A \cdot \vec{x} \le \vec{n}_i \mid 1 \le i \le q\}$, where $q = |\mathcal{S}|$, and for each $1 \le i \le q$, $\vec{n}_i$ is a numeric substitution to $\vec{v}$ from which one of the negated lemmas in $\mathcal{S}$ is obtained. That is, $A \cdot \vec{x} \le \vec{n}_i$ is $(\neg \pi)[\vec{v} \mapsto \vec{n}_i]$. In Example 1,

$$A = \begin{pmatrix} 1 & 0 \\ -1 & 0 \\ 0 & 1 \end{pmatrix}, \quad \vec{x} = \begin{pmatrix} x \\ y \end{pmatrix}, \quad \vec{n}_1 = \begin{pmatrix} 2 \\ -2 \\ 3 \end{pmatrix}, \quad \vec{n}_2 = \begin{pmatrix} 4 \\ -4 \\ 5 \end{pmatrix}, \quad \vec{n}_3 = \begin{pmatrix} 8 \\ -8 \\ 9 \end{pmatrix}.$$

Each cube $A \cdot \vec{x} \le \vec{n}_i$ is equivalent to $\exists \vec{v}.\ A \cdot \vec{x} \le \vec{v} \land \vec{v} = \vec{n}_i$. Finally, $\bigvee \mathcal{S} \equiv \exists \vec{v}.\ A \cdot \vec{x} \le \vec{v} \land \bigvee_{i=1}^{q} \vec{v} = \vec{n}_i$. Thus, computing the over-approximation of $\bigvee \mathcal{S}$ is reduced to (a) computing the convex hull $H$ of a set of points $\{\vec{n}_1, \ldots, \vec{n}_q\}$, (b) computing divisibility constraints $D$ that are satisfied by all the points, (c) substituting $H \land D$ for the disjunction in the equation above, and (d) eliminating variables $\vec{v}$. Both the computation of $H$ and the elimination of $\vec{v}$ may be prohibitively expensive. We, therefore, over-approximate them. Our approach for doing so is presented in Algorithm 3, and explained in detail below.

Computing the convex hull of $\{\vec{n}_i\}$. Lines 3 to 8 compute the convex hull of $\{\vec{n}_1, \ldots, \vec{n}_q\}$ as a formula over $\vec{v}$, where variable $v_j$, for $1 \le j \le p$, represents the $j$-th coordinates in the vectors (points) $\vec{n}_i$. Some of the coordinates, $v_j$, in these vectors may be linearly dependent upon others. To simplify the problem, we first identify such dependencies and compute a set of linear equalities that expresses them ($L$ in line 4). To do so, we consider a matrix $N \in \mathbb{Z}^{q \times p}$, where the $i$-th row consists of $\vec{n}_i^T$. The $j$-th column in $N$, denoted $N_{*,j}$, corresponds to the $j$-th coordinate, $v_j$. The rank of $N$ is the number of linearly independent columns (and rows). The other columns (coordinates) can be expressed by linear combinations of the linearly independent ones. To compute these linear combinations we use the kernel of $[N, \vec{1}]$ ($N$ appended with a column vector of 1's), which is the set of all vectors $\vec{y}$ such that $[N, \vec{1}] \cdot \vec{y} = \vec{0}$, where $\vec{0}$ is the zero vector. Let $K$ be a basis for the kernel of $[N, \vec{1}]$. Then $|K| = p + 1 - \mathrm{rank}([N, \vec{1}])$, and for each vector $\vec{y} \in K$, the linear equality $[v_1 \cdots v_p\ 1] \cdot \vec{y} = 0$ holds in all the rows of $N$ (i.e., all the given vectors satisfy it). We accumulate these equalities, which capture the linear dependencies between the coordinates, in $L$. Further, the equalities are used to compute $p - |K|$ coordinates (columns in $N$) that are linearly independent and, modulo $L$, uniquely determine the remaining coordinates. We denote by $\vec{v}^I \subseteq \vec{v}$ the subset of $\vec{v}$ that consists of the linearly independent coordinates. We further denote by $\vec{n}_i^I$ the projection of $\vec{n}_i$ to these coordinates and by $N^I$ the projection of $N$ to the corresponding columns. We have that $N^I = [\vec{n}_1^I, \ldots, \vec{n}_q^I]^T$.
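The dependency analysis above can be sketched in Python with exact rational arithmetic (`fractions.Fraction`). As an assumption of this sketch, the column of 1's is placed first rather than appended, so that Gaussian elimination picks it as a pivot before any coordinate. The pivot columns among the coordinates are then a set of coordinates that, modulo the equalities, determine the rest; the kernel itself is the same up to reordering of vector components. The function names are hypothetical and this is not the Z3 implementation.

```python
from fractions import Fraction


def rref(rows):
    """Reduced row echelon form over the rationals.

    Returns the reduced matrix and the list of pivot columns.
    """
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0])):
        pr = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pr is None:
            continue  # column c is a combination of earlier pivot columns
        m[r], m[pr] = m[pr], m[r]
        pv = m[r][c]
        m[r] = [x / pv for x in m[r]]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
    return m, pivots


def linear_dependencies(points):
    """Kernel basis of [1, N] and the indices of the independent coordinates.

    Each kernel vector k encodes the equality k[0] + sum_j k[j] * v_j = 0
    (coordinates are numbered from 1 in k, from 0 in `independent`).
    """
    m, pivots = rref([[1, *p] for p in points])
    ncols = len(points[0]) + 1
    kernel = []
    for free in (c for c in range(ncols) if c not in pivots):
        k = [Fraction(0)] * ncols
        k[free] = Fraction(1)
        for row, pc in enumerate(pivots):
            k[pc] = -m[row][free]
        kernel.append(k)
    independent = [c - 1 for c in pivots if c > 0]
    return kernel, independent


# Example 1: the rows of N are n_1, n_2, n_3.
kernel, independent = linear_dependencies([(2, -2, 3), (4, -4, 5), (8, -8, 9)])
print([[int(x) for x in k] for k in kernel])  # [[0, 1, 1, 0], [-1, -1, 0, 1]]
print(independent)                            # [0], i.e. only v_1
```

The two kernel vectors encode $v_1 + v_2 = 0$ and $v_3 = v_1 + 1$, so only $v_1$ remains as an independent coordinate.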

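In Example 1, the numeral matrix is $N = \begin{pmatrix} 2 & -2 & 3 \\ 4 & -4 & 5 \\ 8 & -8 & 9 \end{pmatrix}$, for which $K = \{(1, 1, 0, 0)^T, (1, 0, -1, 1)^T\}$. Therefore, $L$ is the conjunction of equalities $v_1 + v_2 = 0 \land v_1 - v_3 + 1 = 0$, or, equivalently $v_2 = -v_1 \land v_3 = v_1 + 1$, $\vec{v}^I = (v_1)$, and

$$N^I = \begin{pmatrix} 2 \\ 4 \\ 8 \end{pmatrix}.$$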

Next, we compute the convex closure of $N^I$, and conjoin it with $L$ to obtain $H$, the convex closure of $N$.

If the dimension of $\vec{v}^I$ is one, as is the case in the example above, the convex closure, $C$, of $N^I$ is obtained by bounding the sole element of $\vec{v}^I$ based on its values in $N^I$ (line 6). In Example 1, we obtain $C = 2 \le v_1 \le 8$.

If the dimension of $\vec{v}^I$ is greater than one, just computing the bounds of one of the constants is not sufficient. Instead, we use the concept of syntactic convex closure from [2] to compute the convex closure of $N^I$ as $\exists \vec{\alpha}.\ C$, where $\vec{\alpha}$ is a vector that consists of $q$ fresh rational variables and $C$ is defined as follows (line 8): $C \triangleq \vec{\alpha} \ge 0 \land \sum_{i=1}^{q} \alpha_i = 1 \land \vec{\alpha}^T \cdot N^I = (\vec{v}^I)^T$. $C$ states that $(\vec{v}^I)^T$ is a convex combination of the rows of $N^I$, or, in other words, $\vec{v}^I$ is a convex combination of $\{\vec{n}_i^I\}$.

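To illustrate the syntactic convex closure, consider a second example with a set of cubes: $\mathcal{S} = \{x \le 0 \land y \le 6,\ x \le 6 \land y \le 0,\ x \le 5 \land y \le 5\}$. The coefficient matrix $A$, and the numeral matrix $N$ are then: $A = \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix}$ and $N = \begin{pmatrix} 0 & 6 \\ 6 & 0 \\ 5 & 5 \end{pmatrix}$. Here, $K$ is empty: all the columns are linearly independent, hence, $L = \mathit{true}$ and $\vec{v}^I = \vec{v}$. Therefore, syntactic convex closure is applied to the full matrix $N$, resulting in

$$C = \alpha_1 \ge 0 \land \alpha_2 \ge 0 \land \alpha_3 \ge 0 \land \alpha_1 + \alpha_2 + \alpha_3 = 1 \land v_1 = 6\alpha_2 + 5\alpha_3 \land v_2 = 6\alpha_1 + 5\alpha_3.$$

The convex closure of $\mathcal{S}$ is then $\exists \vec{v}, \vec{\alpha}.\ A \cdot \vec{x} \le \vec{v} \land C$, which is $x \le 6 \land y \le 6 \land x + 5y \le 30 \land 5x + y \le 30$ here.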

Divisibility Constraints. Inductive invariants for verification problems often require divisibility constraints. We, therefore, use such constraints, denoted $D$, to obtain a stronger over-approximation of $\bigvee \mathcal{S}$ than the convex closure. To add a divisibility constraint for $v_j \in \vec{v}^I$, we consider the column $N^I_{*,j}$ that corresponds to $v_j$ in $N^I$. We find the largest positive integer $d$ such that each integer in $N^I_{*,j}$ leaves the same remainder when divided by $d$; namely, there exists $0 \le r < d$ such that $n \bmod d = r$ for every $n \in N^I_{*,j}$. This means that $d \mid (v_j - r)$ is satisfied by all the points $\vec{n}_i$. Note that such $r$ always exists for $d = 1$. To avoid this trivial case, we add the constraint $d \mid (v_j - r)$ only if $d \ne 1$ (line 12). We repeat this process for each $v_j \in \vec{v}^I$.

In Example 1, all the elements in the (only) column of the matrix $N^I$, which corresponds to $v_1$, are divisible by 2, and no larger $d$ has a corresponding $r$. Thus, line 12 of Algorithm 3 adds the divisibility condition $2 \mid v_1$ to $D$.
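For a one-dimensional $\vec{v}^I$, the bound of line 6 and the divisibility constraint of line 12 reduce to integer arithmetic on a single column. The following Python sketch (function name hypothetical) uses the fact that the largest $d$ giving every value the same remainder is the greatest common divisor of the differences to the first value. As an assumption of the sketch, a column whose values are all equal ($d = 0$) yields no constraint; in the construction above such a column would be fixed by $L$ rather than appear in $\vec{v}^I$.

```python
from functools import reduce
from math import gcd


def one_dim_closure(values):
    """Bounds (line 6) and an optional divisibility constraint (line 12).

    Returns (lo, hi, div): lo <= v <= hi is the convex closure, and div is
    (d, r), meaning d | (v - r), or None when only the trivial d = 1 exists.
    """
    lo, hi = min(values), max(values)
    # All values share a remainder mod d iff d divides every difference.
    d = reduce(gcd, (v - values[0] for v in values), 0)
    div = (d, values[0] % d) if d > 1 else None
    return lo, hi, div


print(one_dim_closure([2, 4, 8]))  # (2, 8, (2, 0)): 2 <= v_1 <= 8 and 2 | v_1
print(one_dim_closure([3, 5, 9]))  # (3, 9, (2, 1))
print(one_dim_closure([1, 2, 4]))  # (1, 4, None)
```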

Eliminating Existentially Quantified Variables Using MBP. By combining the linear equalities exhibited by $N$, the convex closure of $N^I$ and the divisibility constraints on $\vec{v}^I$, we obtain $\exists \vec{\alpha}.\ L \land C \land D$ as an over-approximation of $\{\vec{n}_1, \ldots, \vec{n}_q\}$. Accordingly, $\exists \vec{v}, \vec{\alpha}.\ \psi$, where $\psi \triangleq A \cdot \vec{x} \le \vec{v} \land L \land C \land D$, is an over-approximation of $\bigvee \mathcal{S}$ (line 13). In order to get a LIA cube that over-approximates $\bigvee \mathcal{S}$, it remains to eliminate the existential quantifiers. Since quantifier elimination is expensive, and does not necessarily generate convex formulas (cubes), we approximate it using MBP. Namely, we obtain a cube $\varphi$ that under-approximates $\exists \vec{v}, \vec{\alpha}.\ \psi$ by applying MBP on $\psi$ and a model $M \models \psi$. We then use an SMT solver to drop literals from $\varphi$ until it over-approximates $\exists \vec{v}, \vec{\alpha}.\ \psi$, and hence also $\bigvee \mathcal{S}$ (lines 16 to 19). The result is returned by Subsume as an over-approximation of $\bigvee \mathcal{S}$.

Models $M$ that satisfy $\psi$ and do not satisfy any of the cubes in $\mathcal{S}$ are preferred when computing MBP (line 14) as they ensure that the result of MBP is not subsumed by any of the cubes in $\mathcal{S}$.

Note that the $\vec{\alpha}$ are rational variables and $\vec{v}$ are integer variables, which means we require MBP to support a mixture of integer and rational variables. To achieve this, we first relax all constants to be rationals and apply MBP over LRA to eliminate $\vec{\alpha}$. We then adjust the resulting formula back to integer arithmetic by multiplying each atom by the least common multiple of the denominators of the coefficients in it. Finally, we apply MBP over the integers to eliminate $\vec{v}$.
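The adjustment back to integer arithmetic can be sketched for a single atom $\sum_x a_x x \le b$ with rational coefficients. The function name is hypothetical, and as an assumption of this sketch the denominator of the constant $b$ is included in the least common multiple so that the whole atom becomes integral. `math.lcm` requires Python 3.9 or later.

```python
from fractions import Fraction
from math import lcm  # Python 3.9+


def to_integer_atom(coeffs, bound):
    """Scale the atom sum(coeffs[x] * x) <= bound to integer coefficients."""
    terms = [Fraction(c) for c in coeffs.values()] + [Fraction(bound)]
    m = lcm(*(t.denominator for t in terms))
    # m > 0, so multiplying both sides preserves the direction of <=.
    scaled = {x: int(Fraction(c) * m) for x, c in coeffs.items()}
    return scaled, int(Fraction(bound) * m)


# (1/2)x + (1/3)y <= 5/6 becomes 3x + 2y <= 5.
print(to_integer_atom({"x": Fraction(1, 2), "y": Fraction(1, 3)}, Fraction(5, 6)))
# ({'x': 3, 'y': 2}, 5)
```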

Considering Example 1 again, we get that $\psi = x \le v_1 \land -x \le v_2 \land y \le v_3 \land v_2 = -v_1 \land v_3 = v_1 + 1 \land 2 \le v_1 \le 8 \land 2 \mid v_1$ (the first three conjuncts correspond to $A \cdot \vec{x} \le \vec{v}$). Note that in this case we do not have rational variables $\vec{\alpha}$ since $|\vec{v}^I| = 1$. Depending on the model, the result of MBP can be one of several cubes (…). However, preferring a model that does not satisfy any cube in $\mathcal{S}$ rules out the two possibilities on the right. None of these cubes covers (…), hence generalization is used.

If the first cube is obtained by MBP, it is generalized into (…); the second cube is already an over-approximation; the third cube is generalized into (…). Indeed, each of these cubes over-approximates $\bigvee \mathcal{S}$.

.

Concretize Rule for LIA

The Concretize rule (Algorithm 2) takes a cluster of lemmas $\mathcal{L} = \mathcal{C}_{F_i}(\pi)$ and a pob $\varphi$ such that each lemma in $\mathcal{L}$ partially blocks $\varphi$, and creates a new pob $\gamma$ that is still not blocked by $\mathcal{L}$, but is more concrete, i.e., $\gamma \Rightarrow \varphi$. In our implementation, this rule is applied when $\varphi$ is in $\mathrm{LIA}^{-\mathrm{div}}$. We further require that the pattern, $\pi$, of $\mathcal{L}$ is non-linear, i.e., some of the constants appear in $\pi$ with free variables as their coefficients. We denote these constants by $U$. An example is the pattern $\pi(v_0, v_1) \triangleq x + v_0 y \le v_1$, where $U = \{y\}$. Having such a cluster is an indication that attempting to block $\varphi$ in full with a single lemma may require tracking non-linear correlations between the constants, which is impossible to do in LIA. In such cases, we identify the coupling of the constants in $U$ in pobs (and hence in lemmas) as the potential source of non-linearity. Hence, we concretize (strengthen) $\varphi$ into a pob $\gamma$ where the constants in $U$ are no longer coupled to any other constant.

Coupling. Formally, constants $u$ and $v$ are coupled in a cube $c$, denoted $u \sim_c v$, if there exists a literal $\mathit{lit}$ in $c$ such that both $u$ and $v$ appear in $\mathit{lit}$ (i.e., their coefficients in $\mathit{lit}$ are non-zero). For example, $x$ and $y$ are coupled in $x + y \le 0 \land z \le 0$, whereas neither of them is coupled with $z$. A constant $u$ is said to be isolated in a cube $c$, denoted $\mathit{isolated}_c(u)$, if it appears in $c$ but it is not coupled with any other constant in $c$. In the above cube, $z$ is isolated.

Concretization by Decoupling. Given a pob $\varphi$ (a cube) and a cluster $\mathcal{L}$, Algorithm 4 presents our approach for concretizing $\varphi$ by decoupling the constants in $U$, namely those that have variables as coefficients in the pattern of $\mathcal{L}$ (line 2). Concretization is guided by a model $M \models \varphi \land \bigwedge \mathcal{L}$, representing a part of $\varphi$ that is not yet blocked by the lemmas in $\mathcal{L}$ (line 3). Given such $M$, we concretize $\varphi$ into a model-preserving under-approximation that isolates all the constants in $U$ and preserves all other couplings. That is, we find a cube $\gamma$, such that

$$\gamma \Rightarrow \varphi, \quad M \models \gamma, \quad \forall u \in U.\ \mathit{isolated}_\gamma(u), \quad \forall u, v \notin U.\ (u \sim_\varphi v \Rightarrow u \sim_\gamma v) \qquad (1)$$

Note that $\gamma$ is not blocked by $\mathcal{L}$ since $M$ satisfies both $\bigwedge \mathcal{L}$ and $\gamma$. For example, if $\varphi = x + y \le 0 \land x - y \le 0 \land x + z \le 0$ and $M = \{x \mapsto -1, y \mapsto 1, z \mapsto 0\}$, then $\gamma = x \le -1 \land y \le 1 \land -y \le -1 \land x + z \le 0$ is a model-preserving under-approximation that isolates $y$.

Algorithm 4 computes such a cube $\gamma$ by a point-wise concretization of the literals of $\varphi$ followed by the removal of subsumed literals. Literals that do not contain constants from $U$ remain unchanged. A literal of the form $t \le b$, where $t = \sum_i a_i x_i$ (recall that every literal in $\mathrm{LIA}^{-\mathrm{div}}$ can be normalized to this form), that includes constants from $U$ is concretized into a cube by (1) isolating each of the summands $a_i x_i$ in $t$ that include $U$ from the rest, and (2) for each of the resulting sub-expressions creating a literal that uses its value in $M$ as a bound. Formally, $t$ is decomposed to $s + \sum_{x_i \in U} a_i x_i$, where $s = \sum_{x_i \notin U} a_i x_i$. The concretization of $t \le b$ is the cube $\gamma_t \triangleq s \le M[s] \land \bigwedge_{x_i \in U} a_i x_i \le M[a_i x_i]$, where $M[e]$ denotes the interpretation of $e$ in $M$. Note that $\gamma_t \Rightarrow t \le b$ since the bounds are stronger than the original bound on $t$: $M[s] + \sum_{x_i \in U} M[a_i x_i] = M[t] \le b$. This ensures that $\gamma$, obtained by the conjunction of literal concretizations, implies $\varphi$. It trivially satisfies the other conditions of Eq. (1).

For example, the concretization of the literal $x + y \le 0$ with respect to $U = \{y\}$ and $M = \{x \mapsto -1, y \mapsto 1, z \mapsto 0\}$ is the cube $x \le -1 \land y \le 1$. Applying concretization in a similar manner to all the literals of the cube $\varphi$ from the previous example, we obtain the concretization $x \le -1 \land y \le 1 \land -y \le -1 \land x + z \le 0$. Note that the last literal is not concretized as it does not include $y$.

Conjecture Rule for LIA

The Conjecture rule (see Algorithm 2) takes a set of lemmas $\mathcal{L}$ and a pob $\varphi \equiv \alpha \land \beta$ such that all lemmas in $\mathcal{L}$ block $\beta$, but none of them blocks $\alpha$, where $\alpha$ does not include any known reachable states. It returns $\alpha$ as a new pob.

For LIA, Conjecture is applied when the following conditions are met: (1) the pob $\varphi$ is of the form $\gamma_1 \land (t \ge b) \land \gamma_2$, where $t$ is a linear term and $b \in \mathbb{Z}$, and $\gamma_1$ and $\gamma_2$ are any cubes. The sub-cube $\gamma_1 \land \gamma_2$ acts as $\alpha$, while the sub-cube $t \ge b$ acts as $\beta$. (2) The cluster $\mathcal{L}$ consists of $\{t \le b_1, \ldots, t \le b_n\}$, where $b > b_1 > b_2 > \cdots > b_n$. This means that each of the lemmas in $\mathcal{L}$ blocks $\beta$, and they may be ordered as a sequence of increasingly stronger lemmas, indicating that they were created by trying to block the pob at different levels, leading to too strong lemmas that failed to propagate to higher levels. (3) The formula $\gamma_1 \land \gamma_2 \land \bigwedge \mathcal{L}$ is satisfiable, that is, none of the lemmas in $\mathcal{L}$ blocks $\alpha$, and (4) $\mathit{Reach} \land \gamma_1 \land \gamma_2$ is unsatisfiable, where $\mathit{Reach}$ denotes the known reachable states, that is, no state in $\alpha$ is known to be reachable. If all four conditions are met, we conjecture $\alpha = \gamma_1 \land \gamma_2$. This is implemented by conjecture, which returns $\alpha$ (or no pob when the pre-conditions are not met).

For example, consider the pob  and a cluster of lemmas  . In this case,  ,  ,  , and  . Each of the lemmas in  blocks  , but none of them blocks  . Therefore, we conjecture  :  .
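The following minimal sketch restates the abstract pre-conditions of Conjecture in executable form. It is not GSpacer's LIA implementation: formulas are plain Python predicates over integer states `(x, y)`, satisfiability is decided by enumerating a small bounded box (a toy stand-in for an SMT query), and the names `conjecture`, `keep`, `drop` and `reach` are hypothetical. `keep` plays the role of the sub-cube that is conjectured as a new pob, and `drop` the role of the sub-cube that every lemma in the cluster blocks. The ordering of the cluster into increasingly stronger lemmas is not checked here.

```python
from itertools import product
from typing import Callable, Optional, Sequence, Tuple

State = Tuple[int, int]              # a state assigns integers to (x, y)
Formula = Callable[[State], bool]    # a cube or lemma as a predicate on states

# Toy stand-in for an SMT query: search a bounded box of integer states.
BOX = range(-20, 21)


def sat(f: Formula) -> bool:
    """True if some state in the bounded box satisfies f."""
    return any(f(s) for s in product(BOX, repeat=2))


def conj(*fs: Formula) -> Formula:
    """Conjunction of predicates."""
    return lambda s: all(f(s) for f in fs)


def blocks(lemma: Formula, cube: Formula) -> bool:
    """A lemma blocks a cube when no state satisfies both."""
    return not sat(conj(lemma, cube))


def conjecture(keep: Formula, drop: Formula,
               cluster: Sequence[Formula], reach: Formula) -> Optional[Formula]:
    """Return the conjectured pob `keep`, or None if a pre-condition fails."""
    if not cluster:
        return None
    # every lemma in the cluster blocks the dropped sub-cube
    if not all(blocks(lemma, drop) for lemma in cluster):
        return None
    # some state of `keep` survives all lemmas, so none of them blocks `keep`
    if not sat(conj(keep, *cluster)):
        return None
    # no state of `keep` is known to be reachable
    if sat(conj(keep, reach)):
        return None
    return keep


# Illustrative data: pob (x >= 5 and y <= 3) and (x + y >= 10), lemmas that
# get stronger as the bound shrinks, and known reachable states x <= 0, y == 0.
keep = lambda s: s[0] >= 5 and s[1] <= 3
drop = lambda s: s[0] + s[1] >= 10
cluster = [lambda s, b=b: s[0] + s[1] <= b for b in (9, 8, 7)]
reach = lambda s: s[0] <= 0 and s[1] == 0

print(conjecture(keep, drop, cluster, reach) is keep)  # True
```

Each lemma excludes every state with x + y >= 10 yet admits the state (5, 0) of `keep`, and `keep` is disjoint from the reachable states, so the weaker cube `keep` is returned as a new pob.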

Putting It All Together

Having explained the implementation of the new rules for LIA, we now put all the ingredients together into an algorithm, GSpacer. In particular, we present our choices as to when to apply the new rules, and on which clusters of lemmas and pobs. As can be seen in Sect. 5, this implementation works very well on a wide range of benchmarks.

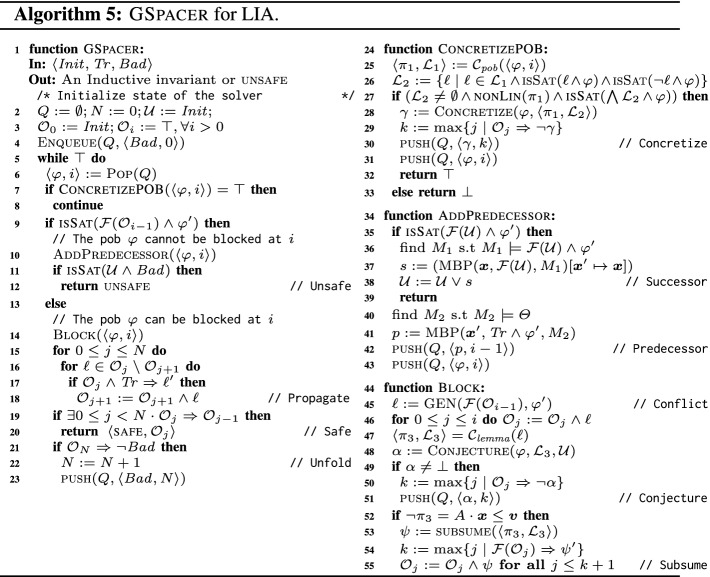

Algorithm 5 presents GSpacer. The comments on the right side of a line refer to the abstract rules in Algorithms 1 and 2. Just like Spacer, GSpacer iteratively computes predecessors (line 10) and blocks them (line 14) in an infinite loop. Whenever a pob is proven to be reachable, the set of reachable states is updated (line 38). If  intersects with a reachable state, GSpacer terminates and returns unsafe (line 12). If one of the frames is an inductive invariant, GSpacer terminates and returns safe (line 20).


When a pob is handled, we first apply the Concretize rule, if possible (line 7). Recall that Concretize (Algorithm 4) takes as input a cluster that partially blocks the pob and has a non-linear pattern. To obtain such a cluster, we first find, using  , a cluster  , where  , that includes some lemma (from frame k) that blocks the pob; if none exists,  . We then filter out from this cluster the lemmas that completely block the pob as well as the lemmas that are irrelevant to it; that is, we obtain a sub-cluster by keeping only the lemmas that partially block the pob. We apply Concretize on this sub-cluster to obtain a new pob that under-approximates the original one if (1) the remaining sub-cluster is non-empty, (2) its pattern is non-linear, and (3)  is satisfiable, i.e., a part of the pob is not blocked by any lemma in the sub-cluster.
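A minimal sketch of the sub-cluster selection and the three pre-conditions described above, under the same toy assumptions as before: predicates over `(x, y)` and satisfiability by bounded enumeration. A lemma is taken to partially block a pob when it excludes some, but not all, of the pob's states; lemmas that exclude all of them (complete blocking) or none of them (irrelevant) are dropped. Whether the pattern is non-linear is passed in as a flag, since pattern analysis is outside this sketch. The names `partially_blocks` and `concretize_subcluster` are hypothetical.

```python
from itertools import product
from typing import Callable, List, Optional, Sequence, Tuple

State = Tuple[int, int]
Formula = Callable[[State], bool]

BOX = range(-20, 21)  # toy stand-in for an SMT query: bounded enumeration


def sat(f: Formula) -> bool:
    """True if some state in the bounded box satisfies f."""
    return any(f(s) for s in product(BOX, repeat=2))


def partially_blocks(lemma: Formula, pob: Formula) -> bool:
    """The lemma excludes some, but not all, states of the pob."""
    excludes_some = sat(lambda s: pob(s) and not lemma(s))
    keeps_some = sat(lambda s: pob(s) and lemma(s))
    return excludes_some and keeps_some


def concretize_subcluster(pob: Formula, cluster: Sequence[Formula],
                          pattern_is_nonlinear: bool) -> Optional[List[Formula]]:
    """Return the sub-cluster to pass to Concretize, or None if it does not apply."""
    # keep only lemmas that block part, but not all, of the pob
    sub = [lemma for lemma in cluster if partially_blocks(lemma, pob)]
    if not sub:                      # (1) sub-cluster must be non-empty
        return None
    if not pattern_is_nonlinear:     # (2) pattern must be non-linear
        return None
    # (3) part of the pob is not blocked by any lemma in the sub-cluster
    if not sat(lambda s: pob(s) and all(lemma(s) for lemma in sub)):
        return None
    return sub


pob = lambda s: 0 <= s[0] <= 10 and 0 <= s[1] <= 10
partial = lambda s: s[0] * s[1] <= 20      # excludes e.g. (5, 5)
complete = lambda s: s[0] <= -1            # excludes every pob state
irrelevant = lambda s: s[1] <= 100         # excludes no pob state

print(concretize_subcluster(pob, [partial, complete, irrelevant], True) == [partial])  # True
```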

Once a pob is blocked and a new lemma that blocks it is added to the frames, an attempt is made to apply the Subsume and Conjecture rules on a cluster that includes this lemma. To that end, the function  finds a cluster to which the lemma belongs (Sect. 4.2). Note that the choice of cluster is arbitrary. The rules are applied on this cluster if the required pre-conditions are met (line 49 and line 53, respectively). When applicable, Subsume returns a new lemma that is added to the frames, while Conjecture returns a new pob that is added to the queue. Note that the latter is a may pob, in the sense that some of the states it represents may not lead to a safety violation.
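The evaluation notes that clustering is implemented by anti-unification. As an illustration only, the sketch below computes the first-order anti-unifier (least general generalization) of two terms and uses it to locate a cluster for a new lemma. The tuple encoding of terms, the membership criterion (a lemma joins a cluster when its anti-unifier with the cluster's first member abstracts only integer constants), and the names `anti_unify` and `find_cluster` are assumptions, not GSpacer's code. Returning the first matching cluster reflects the remark that the choice of cluster is arbitrary.

```python
from typing import Dict, List, Optional, Tuple, Union

# A term is an integer numeral, a variable name, or (operator, *arguments).
Term = Union[int, str, tuple]


def anti_unify(t1: Term, t2: Term) -> Tuple[Term, Dict[Tuple[Term, Term], str]]:
    """Least general generalization of two terms (first-order anti-unification).

    Returns the pattern and a table mapping each pair of differing
    subterms to the pattern variable that replaced it."""
    table: Dict[Tuple[Term, Term], str] = {}

    def go(a: Term, b: Term) -> Term:
        if a == b:
            return a
        if (isinstance(a, tuple) and isinstance(b, tuple)
                and len(a) == len(b) and a[0] == b[0]):
            # same operator and arity: generalize argument-wise
            return (a[0],) + tuple(go(x, y) for x, y in zip(a[1:], b[1:]))
        # the same pair of differing subterms always gets the same variable
        if (a, b) not in table:
            table[(a, b)] = f"?v{len(table)}"
        return table[(a, b)]

    return go(t1, t2), table


def find_cluster(lemma: Term, clusters: List[List[Term]]) -> Optional[int]:
    """Index of the first cluster whose first member differs from `lemma`
    only in integer constants, or None."""
    for i, members in enumerate(clusters):
        _, diffs = anti_unify(lemma, members[0])
        if all(isinstance(a, int) and isinstance(b, int) for a, b in diffs):
            return i
    return None


l1 = ("<=", ("+", "x", ("*", 2, "y")), 5)   # x + 2*y <= 5
l2 = ("<=", ("+", "x", ("*", 3, "y")), 7)   # x + 3*y <= 7
l3 = ("<=", ("+", "x", "y"), 5)             # x + y <= 5

print(anti_unify(l1, l2)[0])  # ('<=', ('+', 'x', ('*', '?v0', 'y')), '?v1')
print(find_cluster(l2, [[l1]]), find_cluster(l3, [[l1]]))  # 0 None
```

Here `l1` and `l2` share the pattern `x + ?v0*y <= ?v1`, while `l3` differs structurally (the term `y` versus `2*y`) and therefore does not join that cluster.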

Ensuring Progress.

Spacer always makes progress: as its search continues, it establishes the absence of counterexamples of increasing depth. However, GSpacer does not ensure progress. Specifically, unrestricted application of the Concretize and Conjecture rules can make GSpacer diverge even on executions of a fixed bound. In our implementation, we ensure progress by allotting a fixed amount of gas to each pattern that forms a cluster. Each time Concretize or Conjecture is applied to a cluster with a given pattern, that pattern loses some gas. Whenever a pattern runs out of gas, the rules are no longer applied to any cluster with that pattern. There are finitely many patterns (assuming LIA terms are normalized). Thus, in each bounded execution of GSpacer, the Concretize and Conjecture rules are applied only a finite number of times, thereby ensuring progress. Since the Subsume rule does not hinder progress, it is applied without any restriction on gas.
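The gas mechanism can be sketched as a per-pattern budget. In this minimal sketch, the initial amount of gas and the cost per application are hypothetical parameters (the text only states that each pattern receives a fixed amount and loses some gas on each application), and patterns are any hashable value, here simply strings. Concretize and Conjecture draw on the same budget, while Subsume is never restricted.

```python
from typing import Dict, Hashable


class GasTank:
    """Per-pattern budget for the Concretize and Conjecture rules (hypothetical)."""

    def __init__(self, initial_gas: int = 3, cost: int = 1) -> None:
        self.initial_gas = initial_gas  # fixed amount allotted to each pattern
        self.cost = cost                # gas lost per rule application
        self.remaining: Dict[Hashable, int] = {}

    def try_spend(self, pattern: Hashable) -> bool:
        """Charge one application to `pattern`; False once it is out of gas."""
        left = self.remaining.get(pattern, self.initial_gas)
        if left < self.cost:
            return False
        self.remaining[pattern] = left - self.cost
        return True


def may_apply(rule: str, pattern: Hashable, tank: GasTank) -> bool:
    """Decide whether a global-guidance rule may fire on a cluster with `pattern`."""
    if rule == "subsume":
        return True                     # Subsume does not hinder progress: no gas
    if rule in ("concretize", "conjecture"):
        return tank.try_spend(pattern)  # both rules share the pattern's budget
    raise ValueError(f"unknown rule: {rule}")


tank = GasTank(initial_gas=2)
p = "x + ?v0*y <= ?v1"   # a pattern, represented here simply as a string
print([may_apply("conjecture", p, tank) for _ in range(3)])  # [True, True, False]
print(may_apply("subsume", p, tank))                          # True
```

Because there are finitely many normalized patterns and each starts with a finite budget, the total number of Concretize and Conjecture applications is bounded, which is the progress argument given above.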

Evaluation

We have implemented² GSpacer (Algorithm 5) as an extension to Spacer. To reduce the dimension of a matrix (in subsume, Sect. 4.3), we compute pairwise linear dependencies between all pairs of columns instead of computing the full kernel. This does not necessarily reduce the dimension of the matrix to its rank, but it is sufficient for our benchmarks. We have experimented with computing the full kernel using SageMath [25], but the overall performance did not improve. Clustering is implemented by anti-unification. LIA terms are normalized using the default Z3 simplifications. Our implementation also supports global generalization for non-linear CHCs. We have also extended our work to the theory of LRA; we defer the details of this extension to an extended version of the paper.
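To make the difference between pairwise dependencies and a full kernel concrete, here is a minimal sketch. It assumes that a pairwise linear dependency means one column is a rational multiple of another, and it treats the matrix as plain integer rows; how subsume builds the matrix (Sect. 4.3) is not reproduced. The names `scalar_multiple` and `reduce_columns` are hypothetical.

```python
from fractions import Fraction
from typing import List, Optional, Sequence, Tuple


def scalar_multiple(u: Sequence[int], v: Sequence[int]) -> Optional[Fraction]:
    """Return k such that v == k * u, or None if no such k exists."""
    pivot = next((i for i, x in enumerate(u) if x != 0), None)
    if pivot is None:                # u is the zero column: not used as a basis
        return None
    k = Fraction(v[pivot], u[pivot])
    return k if all(b == k * a for a, b in zip(u, v)) else None


def reduce_columns(matrix: List[List[int]]) -> Tuple[List[int], List[Tuple[int, int, Fraction]]]:
    """Drop each column that is a scalar multiple of an earlier kept column.

    Returns the indices of kept columns and (dropped, kept, k) triples with
    column[dropped] == k * column[kept]. Only pairwise dependencies are found,
    so more columns than the rank may survive."""
    cols = [list(c) for c in zip(*matrix)]
    kept: List[int] = []
    deps: List[Tuple[int, int, Fraction]] = []
    for j, col in enumerate(cols):
        for i in kept:
            k = scalar_multiple(cols[i], col)
            if k is not None:
                deps.append((j, i, k))
                break
        else:                        # no kept column explains this one
            kept.append(j)
    return kept, deps


matrix = [
    [1, 2, 0, 1],
    [2, 4, 1, 3],
    [3, 6, 1, 4],
]
kept, deps = reduce_columns(matrix)
print(kept, deps)  # [0, 2, 3] [(1, 0, Fraction(2, 1))]
```

The second column is twice the first and is dropped, but the fourth column is the sum of the first and third, a dependency no pairwise check can see. The matrix has rank 2, yet three columns survive, which is exactly the gap between this shortcut and a full kernel computation.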

To evaluate our implementation, we have conducted two sets of experiments³. All experiments were run on an Intel E5-2690 V2 CPU at 3 GHz with 128 GB of memory, with a timeout of 10 min. First, to evaluate the performance of local reasoning with global guidance against pure local reasoning, we have compared GSpacer with the latest Spacer, to which we refer as the baseline. We took the benchmarks from CHC-COMP 2018 and 2019 [10]. We compare to Spacer because it dominated the competition by solving  of the benchmarks in CHC-COMP 2019 (  more than the runner-up) and  of the benchmarks in CHC-COMP 2018 (  more than the runner-up). Our evaluation shows that GSpacer outperforms Spacer both in terms of the number of solved instances and, more importantly, in overall robustness.

Second, to examine the performance of local reasoning with global guidance compared to solely global reasoning, we have compared GSpacer with LinearArbitrary [28], an ML-based, data-driven invariant inference tool. Compared to other similar approaches, LinearArbitrary stands out by supporting invariants with arbitrary Boolean structure over arbitrary linear predicates. It is completely automated and does not require user-provided predicates, grammars, or any other guidance. For the comparison with LinearArbitrary, we have used both the CHC-COMP benchmarks and the benchmarks from the artifact evaluation of [28]. The machine and timeout remain the same. Our evaluation shows that GSpacer is superior in this case as well.

Comparison with Spacer. Table 1 summarizes the comparison between Spacer and GSpacer on CHC-COMP instances. Since both tools can use a variety of interpolation strategies during lemma generalization (line 45 of Algorithm 5), we compare three different configurations of each: bw and fw stand for two interpolation strategies, backward and forward, respectively, already implemented in Spacer, and sc stands for turning interpolation off and generalizing lemmas only by subset clauses computed by inductive generalization.

Table 1.

Comparison between Spacer and GSpacer on CHC-COMP.

Any configuration of GSpacer solves significantly more instances than even the best configuration of Spacer. Figure 2 provides a more detailed comparison between the best configurations of both tools in terms of running time and depth of convergence. There is no clear trend in running time on instances solved by both tools. This is not surprising: SMT-solving run time is highly non-deterministic, and any change in strategy has a significant impact on the performance of the SMT queries involved. In terms of depth, it is clear that GSpacer converges at the same or a lower depth. The depth is significantly lower for instances solved only by GSpacer.

Fig. 2.

Best configurations: GSpacer versus Spacer.

Moreover, the performance of GSpacer is not significantly affected by the interpolation strategy used. In fact, the configuration sc in which interpolation is disabled performs the best in CHC-COMP 2018, and only slightly worse in CHC-COMP 2019! In comparison, disabling interpolation hurts Spacer significantly.

Figure 3 provides a detailed comparison of GSpacer with and without interpolation. Interpolation makes no difference to the depth of convergence. This implies that the lemmas discovered by interpolation are discovered just as efficiently by the global rules of GSpacer. On the other hand, interpolation significantly increases the running time. Interestingly, the time spent in interpolation itself is insignificant. However, the lemmas produced by interpolation tend to slow down other parts of the algorithm: most of the slowdown comes from increased time spent in inductive generalization and in the computation of predecessors. The comparison between the other interpolation-enabled strategy and GSpacer (sc) shows a similar trend.

Fig. 3.

Comparing GSpacer with different interpolation tactics.

Comparison with LinearArbitrary. In [28], the authors show that LinearArbitrary, which we refer to as LArb for short, significantly outperforms Spacer on a curated subset of benchmarks from the SV-COMP [24] competition.

At first, we attempted to compare LArb against GSpacer on the CHC-COMP benchmarks. However, LArb did not perform well on them; even the baseline Spacer outperformed LArb significantly. Therefore, for a more meaningful comparison, we have also compared Spacer, LArb and GSpacer on the benchmarks from the artifact evaluation of [28]. The results are summarized in Table 2. As expected, LArb outperforms the baseline Spacer on the safe benchmarks. On unsafe benchmarks, Spacer is significantly better than LArb. In both categories, GSpacer dominates: it solves more safe benchmarks than either Spacer or LArb, while matching the performance of Spacer on unsafe instances. Furthermore, GSpacer remains orders of magnitude faster than LArb on benchmarks that are solved by both. This comparison shows that incorporating global guidance into local reasoning not only mitigates the shortcomings of local reasoning but also surpasses global data-driven reasoning.

Table 2.

Comparison with LArb.

Related Work

The limitations of local reasoning in SMT-based infinite state model checking are well known. Most commonly, they are addressed with either (a) different strategies for local generalization in interpolation (e.g., [1, 6, 19, 23]), or (b) shifting the focus to global invariant inference by learning an invariant of a restricted shape (e.g., [9, 14–16, 28]).