Abstract

Much of what is known about the course of auditory learning following cochlear implantation is based on behavioral indicators that users are able to perceive sound. Prelingually deafened children and postlingually deafened adults who receive cochlear implants have highly variable speech and language processing outcomes, though the basis for this is poorly understood. To date, measuring neural activity within the auditory cortex of implant recipients of all ages has been challenging, primarily because the use of traditional neuroimaging techniques is limited by the implant itself. Functional near-infrared spectroscopy (fNIRS) is an imaging technology that works with implant users of all ages because it is non-invasive, compatible with implant devices, and not subject to electrical artifacts. Thus, fNIRS can provide insight into processing factors that contribute to variations in spoken language outcomes in implant users, both children and adults. There are important considerations to be made when using fNIRS, particularly with children, both to maximize the signal-to-noise ratio and to best identify and interpret cortical responses. This review considers these issues, recent data, and future directions for using fNIRS as a tool to understand spoken language processing in both children and adults who hear through a cochlear implant.

1. Introduction

Hearing loss is the fourth most common developmental disorder in the United States and the most common sensory disorder (Boyle et al., 2011). Over 90% of deaf children are born to hearing parents (Mitchell & Karchmer, 2002), and whether or not hearing parents opt to learn sign language with their deaf child, the vast majority will opt for the surgical placement of a cochlear implant, an electronic device that processes incoming sounds and bypasses the inner ear to electrically stimulate the auditory nerve. Cochlear implantation has become the most widely used computer-brain interface and is now the most successful intervention for total sensory function loss (Prochazka, 2017), proving particularly impactful for hearing loss intervention in deaf infants and young children. Since 2000, the United States Food and Drug Administration (FDA) has approved cochlear implantation in children 12 months old and older based on the results of clinical studies. However, many parents are opting to have their deaf infants implanted at even younger ages, guided by increasing awareness that significant perceptual tuning in normal hearing infants takes place across the first year of life (Miyamoto, Colson, Henning, & Pisoni, 2018). In this review, data from both prelingually deafened pediatric implant users and postlingually deafened adult implant users will be presented, as both are informative to the goal of developing fNIRS for clinical and research applications for improving cochlear implant outcomes.

Cochlear implant mediated speech is not the same as normal speech. Despite continued advances in implant technology, multiple auditory components are somewhat degraded relative to the speech typical hearers experience (Caldwell, Jiam, & Limb, 2017). Learning to use this degraded speech signal when one already has a language system in place to fill in the gaps, as in the case of postlingually deafened adults, should on its face be quite a different process from that of learning language through an implant the first time around and with no such prior knowledge. The latter situation is what prelingually deafened children who learn language through a cochlear implant face. Despite this, outcomes for both prelingually (Fisher et al., 2015; Geers, Nicholas, Tobey, & Davidson, 2016) and postlingually (Lenarz, Joseph, Sönmez, Büchner, & Lenarz, 2011) deafened individuals are highly variable. More than half of implanted children score in the average range on assessments of spoken language skills (Geers, Brenner, & Tobey, 2011; Geers, Tobey, & Moog, 2011), and this percentage increases by high school age as listening experience is accrued (Geers & Sedey, 2011). Among implanted adults, most have a moderately high satisfaction level with their device (Ou, Dunn, Bentler, & Zhang, 2008), but younger users have significantly better speech perception scores than older users (Roberts, Lin, Herrmann, & Lee, 2013). In other words, on average, neither group of implant users performs at the level of a group of normal hearing individuals of the same age. Although outcomes are continually improving with improved technology, identifying the sources of this variability is a critical challenge to researchers and clinicians, who stand to benefit from additional tools to help maximize spoken language outcomes for all implant users.

Despite a minority of prelingually deafened children having difficulty using their implant even after years, many can and do learn to use the implant’s signal to acquire age-appropriate speech production and spoken language comprehension skills (Lazard et al., 2012; Miyamoto et al., 1994). The factors that contribute to variability in individual outcomes following cochlear implantation are diverse and poorly understood (Lazard et al., 2014; Peterson et al., 2010) but one critical issue is whether the implant accurately conveys acoustic information to the auditory nerve and beyond. This depends at least in part on the many steps that take place prior to activation of the device itself, including hearing loss diagnosis, implant candidacy evaluation, implant surgery, and surgical recovery. Although the device is tested for successful placement and function during surgery (intraoperatively), the audiologist who initiates and programs the device following surgery will act as the initial interface between an implanted child’s previous knowledge of sound, if any, and the new auditory percept the implant provides.

Device programming, the process of adjusting electrical stimulation levels across the implant’s different electrodes following initial activation, incorporates both behavioral and objective measures. Behavioral measures evaluate the child’s response to the device’s electrical thresholds, which are adjustable by the audiologist, and the child’s comfort with those thresholds. Objective measures are manufacturer-provided indicators of the device’s function, including impedance telemetry, electrically evoked compound action potentials, and acoustic reflex (Teagle, 2016). These measures generally focus on early stage interactions between the electrical stimulation provided by the device and the biological system with which it interfaces: the inner ear and auditory nerve. To maximize auditory learning, ideally both the behavioral and objective measures will be used by the audiologist and other specialists in conjunction with a range of other information (i.e., about the child, the family, pre- and post-implant communicative mode, and therapeutic interventions) in a dynamic manner from the time of activation onward.

Although the causes of outcome variability are not well understood, a child’s hearing history and age of implantation have been shown to be the most predictive (Ching et al., 2014; Niparko et al., 2010). These factors implicate the plasticity, or lack thereof, of cortical and subcortical structures that will support learning language from the implant-mediated auditory signal. Of particular concern is the status of the auditory pathways that carry sound information from the auditory nerve to the primary auditory cortex. Subsequent stages of cortical processing, including corticocortical connectivity, are also important to consider (Dahmen & King, 2007; Kral, Yusuf, & Land, 2017). As demonstrated systematically with animal models (Yusuf, Hubka, Tillein, & Kral, 2017), the effects of prior auditory experience, age of hearing loss, and facility with perceptual learning are all issues of relevance to human cochlear implant users of all ages. For example, lack or loss of sound-evoked neural stimulation early in development can result in the auditory cortex being co-opted by other sensory modalities (e.g., vision), although the degree to which such cross-modal reorganization takes place in humans who experience some hearing prior to deafness appears to be limited to secondary brain regions (Glick & Sharma, 2017). Nonetheless, given lack of auditory input from birth, as is the case for congenitally deaf individuals, cross-modal reorganization can limit the influence of whatever auditory input subsequently is provided by the implant, and early implantation maximizes auditory benefits (Silva et al., 2017). Thus, it is clear that differential experience over the lifespan modifies whether and how the auditory cortex processes sensory input, with implications for speech and language outcomes.

A notable constraint on our understanding of outcome differences in cochlear implant users has been a general focus by the clinical community on measures of post-implant performance (e.g., standardized speech and language evaluations), rather than on developing measures that might support a more nuanced understanding of the processes underlying that performance. Fortunately, this bias has been changing (Moberly, Castellanos, Vasil, Adunka, & Pisoni, 2018). One approach has been to determine the developmental status of the human auditory cortex by comparing latencies of the cortical auditory evoked potential (CAEP) across individuals (Dorman, Sharma, Gilley, Martin, & Roland, 2007). Data obtained using this technique highlight the importance of age of implantation in pediatric implant users and further underscore the idea that earlier implantation is better. Despite the fact that the CAEP has been used quite productively (for a recent review, see Ciscare, Mantello, Fortunato-Queiroz, Hyppolito, & Dos Reis, 2017), this line of research is somewhat constrained in what it can say about the processes underlying good and poor implant outcomes. Moreover, limitations imposed by implant-electrode interaction constrain the ecological validity of the stimuli used to elicit the CAEP (Paulraj, Subramaniam, Yaccob, Bin Adom, & Hema, 2015).

A promising approach that has been reported recently is the use of structural information about the brains of implant recipients (Feng et al., 2018). This approach evaluates the initial status of the deaf individuals’ brain tissue itself as a function of specific characteristics of their hearing loss and age, among other things. In a recent study, pre-surgical morphological data about pediatric cochlear implant candidates’ brains were used to predict the speech and language outcomes following implantation (Feng et al., 2018). Based on comparisons between the neuroanatomical density and spatial pattern similarities in structural magnetic resonance images (MRIs) from the implant candidates and from age-matched normal hearing children, the researchers identified brain networks that were either affected or unaffected by auditory deprivation. Using these data, they then constructed machine-learning algorithms to classify another set of pre-implant data into categories reflecting projected improvement in speech perception. The resulting models made relatively accurate predictions about each implant user’s ranking in speech outcome measures, demonstrating that pre-surgical neuroanatomical data can be used to predict speech and language outcomes post-implantation. This goes well beyond the current use of structural MRI to evaluate anatomical fitness for implant candidacy. It also presents a novel way of assessing plasticity pre-implantation, taking a systems neuroscience approach (Kral, 2013) to understanding the sources of variability in post-implant outcomes.

Despite these advances, assessing the activity elicited by implant-mediated speech in the brains of individuals of all ages remains difficult, particularly in young children. For one thing, typical research techniques for such measures are not practical or feasible with this population. Hemodynamic-based methods, such as positron emission tomography (PET) and functional magnetic resonance imaging (fMRI), are generally considered impractical or unsafe for use with healthy infants. PET involves the use of radioactive isotopes and, regardless of age, patients with cochlear implants cannot have an MRI because the implant itself is ferromagnetic. Electrophysiological measures, including the CAEP and other electroencephalogram-based measures, are hampered by stimulation artifacts from the device. During the time it takes for the implant-driven signal to propagate from the auditory nerve up to the cortex (6–10 ms), the implant’s processor interferes with the signal being acquired. Thus, electrophysiological measures typically are based on short stimuli (i.e., square wave pulses) that allow the cortical response to the stimulus to be separated from implant-induced artifacts (Gransier et al., 2016). As a result, the main advantage of taking measurements from the auditory cortex, namely identifying differential responses to different forms of meaningful speech, is lost using this approach. Although there are methods to better remove artifacts from the cortical signal, this is not trivial and it is still unclear how accurately the signal reflects actual neural activity (Friesen & Picton, 2010; Mc Laughlin, Lopez Valdes, Reilly, & Zeng, 2013; Miller & Zhang, 2014; Somers, Verschueren, & Francart, 2018). Moreover, the use of electrophysiological measures requires infants and young children to remain quite still, something difficult to achieve without sedation.

2. Functional near-infrared spectroscopy: Background and general principles

Functional near-infrared spectroscopy (fNIRS) is a tool that operates outside these limitations, providing a non-invasive assessment of localized changes in blood oxygenation. Thus, the technology presents the first opportunity to measure focal changes in blood oxygen concentration in cochlear implant users, many of whom are hearing for the first time. The benefit that fNIRS can provide to cochlear implant research is two-fold: 1) it introduces an important alternative to the measures that are traditionally used to assess speech and spoken language development in implant users, and 2) it allows examination of localization of function as it applies to the emergence of speech and speech-related skills in both normal hearing and hearing-impaired populations. Because speech perception occurs within and beyond the auditory cortex, neuroimaging with fNIRS provides an additional means of assessing whether auditory information relayed by an implant is delivered to the auditory cortex and beyond (e.g., to language-specific cortical regions of the brain) (Pasley et al., 2012). Thus, fNIRS can supplement behavioral tests, which are particularly limited in young children (Santa Maria & Oghalai, 2014), providing an important addition to the limited array of neuroimaging modalities suitable for use with this population.

fNIRS uses red-to-near-infrared (NIR) light to detect cortical blood oxygenation, which itself is a proxy for neural activation because active brain regions demand the delivery of oxygen to support their metabolic needs. Optical absorption changes are recorded across the scalp over time and converted to relative concentrations of oxygenated and deoxygenated hemoglobin, which are then mapped to specific areas of underlying cerebral cortex. The localization specificity is nowhere near that of fMRI, but fNIRS can track cortical responses to within 1 to 2 cm of the area targeted (Ferrari & Quaresima, 2012; Scholkmann et al., 2014). Because the equipment is quiet and tolerates some movement, it is ideal for testing auditory processing while people are awake and behaving, making it compatible for both speech processing and developmental research. fNIRS is noninvasive, poses no risks and, given the optical nature of the technology, does not interact with the implant’s components (i.e., electronic, ferromagnetic). Of note, it can be used to measure cortical responses to any auditory signal, including relatively long samples of speech, which is particularly important for assessing speech and spoken language processing. As mentioned, PET is the only other neuroimaging modality that provides a matching level of compatibility with implants. However, unlike PET, fNIRS does not require tracers to be injected into the blood stream and thus does not expose individuals to radiation. This also means that the number of test runs that can be conducted with a single individual is not restricted, making fNIRS ideal for longitudinal studies.

Although there are different forms of fNIRS imaging, the focus here will be on continuous wave systems because they are the most commonly used for human neuroimaging. This is, in part, because these systems rely on lower cost photon detectors, which allows for more spatially resolved measurements. Continuous wave fNIRS uses a stable light source that sends a continuous beam of light into the tissue while the exiting light is monitored. The intensity of the detected light is used to determine the amount of optical (light) absorption that has occurred. Other forms of optical imaging, operating in the time-domain (Torricelli et al., 2014; Pifferi et al., 2016) and the frequency-domain (Jiang, Paulsen, Osterberg, Pogue, & Patterson, 1996), use more complex light sources to measure the phase of returning light or the temporal distribution of the light following migration through the targeted tissue and are beyond the scope of this review (for an overview of optical approaches, see Zhang, 2014).

For human neuroimaging, fNIRS involves placing sets of light sources and light detectors over the scalp with the goal of measuring the amount of light that exits the skull. Light will be scattered as it passes through the bone and tissue and, critically, some of the scattered light will be absorbed by the hemoglobin present in the superficial layer of the cortex. A critical aspect of fNIRS is that the spectrum of light absorbed by hemoglobin depends on whether the hemoglobin is oxygenated or not. For this reason, two or more optical fibers are coupled to deliver two wavelengths of light through each source, and each of the two wavelengths is selected for maximal absorption by either oxygenated (HbO) or deoxygenated (HbR) hemoglobin. Thus, the choice of wavelength pairs is important, as this affects the quality of the fNIRS signals (Strangman, Franceschini, & Boas, 2003; Sato, Kiguchi, Kawaguchi, & Maki, 2004). Except at the isosbestic point (808 nm), where the extinction coefficients of the two chromophores (i.e., forms of hemoglobin) are equal, HbO and HbR differentially absorb light in the red-to-NIR spectral range. Although different fNIRS systems use slight variations in wavelength pairs, it is always the case that one wavelength is absorbed more by HbO and the other by HbR. Generally speaking, wavelengths below the isosbestic point can be used to measure HbR (below 760–770 nm), whereas longer wavelengths measure HbO (up to 920 nm) (Scholkmann et al., 2014). While some have argued that the highest signal-to-noise ratios are obtained when one wavelength is below 720 nm and the other is above 730 nm (see Uludağ, Steinbrink, Villringer, & Obrig, 2004, for a detailed discussion of cross-talk and source separability), other factors influence the quality of the signal as well and this is reflected in the variability in wavelength pairs available commercially.

In terms of depth of penetration, fNIRS can be used to interrogate an adult brain to a depth of about 1.5 cm from the scalp itself (Elwell & Cooper, 2011). This is because biological tissue absorbs light in the visible spectrum while remaining relatively transparent to light in the red-to-NIR range (650–1000 nm), meaning that the latter penetrates past the superficial layers of the head and interacts with cortical tissue (Wilson, Nadeau, Jaworski, Tromberg, & Durkin, 2015). When a light source and detector are placed in contact with the scalp with at least 2 cm of space between them, a small fraction of the incident light will scatter (“optical scattering”) within the scalp, skull, and cerebral cortex, and then repeat this random journey to eventually reach back to the detector. Although the fraction of light that scatters through the cortex and ultimately reaches the detector can be quite small, it nonetheless provides both spatial and temporal information about the metabolic state of the cortical tissue it has traveled through. This backscattering geometry produces a canonical “banana-shaped” profile of light arcing from an emitter to a nearby detector that characterizes the tissue measured using fNIRS (Bhatt, Ayyalasomayajula, & Yalavarthy, 2016). In a typical study, an array of such source-detector pairs (an “optode array”) is positioned on the scalp with the distance between each source-detector pair ranging from 2 to 5 cm, depending on the age of the person being tested. Each source-detector pair represents a localized “channel,” a term that refers to the convex banana-shaped region of tissue through which light is passing and whose metabolic characteristics are thus measured by that particular source-detector pair. A channel thus corresponds to the sampling of tissue that underlies a particular source-detector pair by the light path passing through it. 
Based on the loss of light intensity at the point of each detector relative to the source, oxygen concentration of the blood in the cortex underlying that channel can then be calculated using a formula called the modified Beer-Lambert Law (Villringer & Chance, 1997).
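
The calculation just described can be sketched as follows, assuming a two-wavelength continuous wave system. In its usual form, the modified Beer-Lambert law relates the change in optical density at each wavelength to the concentration changes of the two chromophores: dOD(wavelength) = [e_HbO(wavelength) x dHbO + e_HbR(wavelength) x dHbR] x d x DPF(wavelength), where d is the source-detector distance and DPF is a differential pathlength factor that accounts for scattering. With two wavelengths this is a 2 x 2 linear system. The function names, the extinction coefficients, and the DPF values below are illustrative placeholders rather than values taken from any study cited here; an actual analysis would use tabulated coefficients and age-appropriate pathlength factors.

```python
import math

# ASSUMPTION: placeholder extinction coefficients (per mM per cm), chosen only
# so that the shorter wavelength is absorbed more by HbR and the longer one
# more by HbO. Keys are wavelengths in nm; values are (eps_HbO, eps_HbR).
EXTINCTION = {
    690: (0.35, 2.10),
    830: (1.00, 0.78),
}


def optical_density_change(intensity_baseline, intensity_now):
    """Change in optical density computed from two detected light intensities."""
    return -math.log10(intensity_now / intensity_baseline)


def mbll_two_wavelengths(delta_od, distance_cm, dpf):
    """Solve the two-wavelength modified Beer-Lambert system.

    delta_od and dpf are dicts keyed by wavelength (nm).
    Returns (delta_HbO, delta_HbR) in mM.
    """
    w1, w2 = sorted(delta_od)
    # Effective optical path length at each wavelength.
    path1 = distance_cm * dpf[w1]
    path2 = distance_cm * dpf[w2]
    a11, a12 = EXTINCTION[w1][0] * path1, EXTINCTION[w1][1] * path1
    a21, a22 = EXTINCTION[w2][0] * path2, EXTINCTION[w2][1] * path2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("wavelength pair cannot separate HbO from HbR")
    # Cramer's rule for the 2 x 2 system.
    d_hbo = (delta_od[w1] * a22 - a12 * delta_od[w2]) / det
    d_hbr = (a11 * delta_od[w2] - a21 * delta_od[w1]) / det
    return d_hbo, d_hbr
```

The determinant check reflects the point made earlier about wavelength selection: if both wavelengths were absorbed in the same proportion by HbO and HbR, the two chromophores could not be separated.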

In sum, the major spatial limitation of fNIRS is that it only probes a thin top layer of the cortex (1.5 cm from the scalp means light reaches only the top 5–8 mm of the brain itself), a considerable drawback for studies that aim to investigate deeper regions of the brain. Depth resolution is further influenced by the age of the person being tested, and varies somewhat across brain regions even within a particular age group (Beauchamp et al., 2011). In adults, thicker scalp, soft tissue (i.e., dura and meninges), and skull significantly restrict NIR light from penetrating as deeply as it can in children. This influences the accuracy of data recording and is the basis for adjusting source-detector pair distances as a function of a person’s age, as mentioned above. Although deeper neural activity can be probed by increasing the source-detector distance, this is generally at the cost of signal-to-noise ratio due to an overall reduction in transmitted photons. To reach the cortex, the current consensus is that source-detector distances should be between 2 and 3 cm in infants and 3 and 5 cm in adults (Quaresima, Bisconti, & Ferrari, 2012). Tools for better spatial localization continue to be improved and streamlined as well. For probe placement prior to data acquisition, the 10–20 (EEG) system is used in the same way it is for the acquisition of whole-head EEG data. For greater spatial precision, a digital localizer also can be used to record the 3-D location of each source and detector on a digital model. Source encoding, in which the different wavelengths within sources are flashed on and off at different points in time, helps further differentiate location and is important for localizing optode arrays with large numbers of sources and detectors (Wojtkiewicz, Sawosz, Milej, Treszczanowicz, & Liebert, 2014). 
Although raw fNIRS data do not provide an anatomical image of the brain, data from an individual or from group averages can be imposed on an individual’s MRI or on a template for better visualization. Cochlear implant users generally have structural MRIs of their brains taken prior to implantation, which are helpful when used to guide interpretation of specific probe localization, though this is not at all necessary to the process.

Although many of the benefits of fNIRS relate to its fMRI-like characteristics, its effective temporal resolution is actually higher than that of fMRI. Indeed, the sampling rate of fNIRS is the highest among the hemodynamic neuroimaging techniques, with continuous wave systems reaching up to 100 Hertz (Huppert, Hoge, Diamond, Franceschini, & Boas, 2006). Of course, as a blood-based measure, the temporal resolution of fNIRS is inferior to EEG and MEG by an order of magnitude. Nonetheless, the high temporal resolution (due to the high sampling rate) relative to other hemodynamic measurements allows for the use of event-related experimental paradigms, not to mention detailed interrogation of the temporal dynamics of cortical blood flow (Taga, Watanabe, & Homae, 2011). The spatial resolution, typically estimated at 1 to 2 cm (Ferrari & Quaresima, 2012; Scholkmann et al., 2014), enables localization of cortical responses with reasonable precision, and this can be further manipulated through variations in the arrangement and density of sources and detectors. For example, increasing the density of channels over a target area achieves finer sampling of the cortex (e.g., Olds et al., 2016; Pollonini et al., 2014). Moreover, it is possible to generate three-dimensional images of the optical properties of the brain given a sufficient number of sources and detectors (Eggebrecht et al., 2014). But even the most basic fNIRS system allows for quantitative monitoring of HbO, HbR, and total hemoglobin, which makes for robust evaluation of the components underlying the cortical hemodynamic response.
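
As an illustration of how an event-related analysis might proceed on a single channel, the sketch below cuts a concentration time series into epochs around stimulus onsets, subtracts each epoch's pre-stimulus baseline, and averages across epochs. The function name, the argument names, and the choice to discard incomplete epochs are assumptions made for this example, not a description of any particular analysis package.

```python
def event_related_average(signal, onsets, fs_hz, pre_s, post_s):
    """Average baseline-corrected epochs around stimulus onsets.

    signal: list of HbO (or HbR) samples for one channel.
    onsets: sample indices at which stimuli began.
    fs_hz:  sampling rate; pre_s and post_s are the epoch limits in seconds.
    """
    n_pre = int(round(pre_s * fs_hz))
    n_post = int(round(post_s * fs_hz))
    epochs = []
    for onset in onsets:
        start, stop = onset - n_pre, onset + n_post
        if start < 0 or stop > len(signal):
            continue  # skip epochs that run off either end of the recording
        epoch = signal[start:stop]
        # Mean of the pre-stimulus samples serves as the baseline.
        baseline = sum(epoch[:n_pre]) / n_pre if n_pre else 0.0
        epochs.append([value - baseline for value in epoch])
    if not epochs:
        raise ValueError("no complete epochs in the recording")
    n_epochs = len(epochs)
    # Average sample by sample across epochs.
    return [sum(column) / n_epochs for column in zip(*epochs)]
```

Because the hemodynamic response unfolds over seconds, the post-stimulus window in practice is much longer than the stimulus itself; the short windows used in a toy example are for readability only.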

There are many factors to consider in analyzing and interpreting fNIRS data. Because changes in blood volume in the scalp and muscles underlying the optodes can influence the data, ongoing discussions in the field have led to the development of techniques to separate signals that originate from the brain from those coming from extra-cerebral tissues (e.g., Goodwin, Gaudet, & Berger, 2014). Moreover, physiological noise that originates from the cardiac pulse and from breathing can influence measurements and must be addressed prior to or in the process of data analysis (Gagnon et al., 2012). Removing noise from the raw signal requires analytical strategies, some provided through custom software development and others through more widely used software packages. As with any type of data that requires extensive processing, pipeline standardization becomes important to data quality and reliability. The current lack of standardization in fNIRS data analysis (Tak & Ye, 2014) is being addressed through the efforts of a working group organized by the Society for functional Near-Infrared Spectroscopy (SfNIRS).
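
To give a sense of what the simplest form of physiological noise reduction looks like, the following sketch attenuates the cardiac pulse with a moving average whose window spans one heartbeat. This is a deliberately basic stand-in and is not the method of any of the studies cited above; the function names and the assumption of a known, steady heart rate are illustrative only. Published pipelines typically rely on band-pass filtering, short-separation regression, or related techniques.

```python
def moving_average(signal, window):
    """Moving average over full windows only (one output per complete window)."""
    if window < 1 or window > len(signal):
        raise ValueError("window must be between 1 and len(signal)")
    running = sum(signal[:window])
    smoothed = [running / window]
    for i in range(window, len(signal)):
        # Slide the window forward by one sample.
        running += signal[i] - signal[i - window]
        smoothed.append(running / window)
    return smoothed


def suppress_cardiac(signal, fs_hz, heart_rate_hz):
    """Average over one cardiac cycle to cancel a steady pulse oscillation.

    ASSUMPTION: the heart rate is known and roughly constant.
    """
    window = max(1, round(fs_hz / heart_rate_hz))
    return moving_average(signal, window)
```

Averaging over exactly one cycle cancels a steady oscillation at that frequency while leaving slower changes, such as the hemodynamic response, largely intact. The cost is some temporal smearing, which is one reason more selective filters are generally preferred.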

Finally, with regard to usability, the footprint and mobility of the fNIRS physical system is important to consider. The setup typically consists of a cart for the acquisition computer, a tabletop NIRS module, and the optical fibers, which are connected to that module. The optic fibers that deliver the NIR light to the probe are flexible and relatively lightweight, allowing researchers to test participants in a range of positions and postures. The cart itself can be on wheels to increase portability and allow for measurements to be taken more easily in clinical settings. And, of course, optical technology is advancing rapidly; wireless, wearable, multi-channel fNIRS systems are already available and are rapidly improving (Piper et al., 2014; McKendrick, Parasuraman, & Ayaz, 2015; Pinti et al., 2015; Huve, Takahashi, & Hashimoto, 2017; Kassab et al., 2018). Although this is not generally considered to be low-cost instrumentation, after EEG, fNIRS is among the most affordable neuroimaging modalities available. There are no disposables and minimal maintenance is required for the system itself. In short, fNIRS is a user-friendly technology for implementation in either clinic- or lab-based research.

3. Functional near-infrared spectroscopy in speech research

Appropriate to its emergence as a tool to assess speech perception and processing in cochlear implant users, speech processing was one of the first research topics addressed using fNIRS. Sakatani and colleagues (Sakatani, Xie, Lichty, Li, & Zuo, 1998) first compared performance of healthy adults and stroke patients on a series of speech processing tasks, focusing on language-related changes in the left prefrontal cortex (roughly Broca’s area). A study published the same year by Watanabe and colleagues (Watanabe et al., 1998) focused on establishing hemispheric lateralization of language in healthy and epileptic adults while they performed a word-generation task. Critically, their fNIRS results confirmed language dominance data collected from the epileptic group using the Wada test. The first fNIRS speech comprehension study was published shortly thereafter (Sato, Takeuchi, & Sakai, 1999). In it, healthy adult participants performed a dichotic listening task with stimuli that varied in complexity (tones, sentences, stories). Results demonstrated greater cortical activity (increases in oxygenated hemoglobin and decreases in deoxygenated hemoglobin) in the left temporal region during the story condition relative to the other two auditory conditions. Since this early work, numerous auditory processing studies have been conducted using fNIRS with people of all ages, including infants. The research of particular relevance for those considering using fNIRS with cochlear implantees belongs to three primary areas: speech and language processing in infants and young children, adult speech perception and processing in ideal listening conditions, and perception and processing of degraded speech. I will provide a brief overview of each body of data.

Prelingually deafened children with cochlear implants often present with difficulties that include performing below grade level on phonological awareness, on sentence comprehension, and on reading (Johnson & Goswami, 2010; Lund, 2016; Nittrouer, Sansom, Low, Rice, & Caldwell-Tarr, 2014). Thus, having a tool to identify early sound processing problems, which may form the foundation for these subsequent difficulties, is both theoretically and clinically beneficial, and there is now substantial evidence that fNIRS can be used to examine a range of issues relevant to the development of early language processing abilities. Although the first application of fNIRS to infant research (Meek et al., 1998) was published around the time of the initial fNIRS adult language processing research reviewed earlier, the first use of fNIRS to examine infant speech processing was published five years later (Peña et al., 2003). This influential study introduced fNIRS to the language development community and the technique is now a recognized component of the infant research toolkit. In this study, newborn infants (none more than 5 days old) were presented with forward and reversed speech. The forward speech produced a stronger hemodynamic response (increases in oxygenated hemoglobin; decreases in deoxygenated hemoglobin) in the left than the right hemisphere, hinting that language lateralization already manifests at birth. This precocious left lateralization to speech was confirmed in my own (Bortfeld, Fava, & Boas, 2009; Bortfeld, Wruck, & Boas, 2007) and others’ subsequent work (Minagawa-Kawai et al., 2011; Sato et al., 2012).

A variety of other early speech processing questions have been explored using fNIRS. These include categorical perception (Minagawa-Kawai, Mori, Naoi, & Kojima, 2007), prosodic processing (Homae, Watanabe, Nakano, Asakawa, & Taga, 2006; Homae, Watanabe, Nakano, & Taga, 2007), syllable segmentation (Gervain, Macagno, Cogoi, Pena, & Mehler, 2008), and hierarchical language processing (Obrig, Rossi, Telkemeyer, & Wartenburger, 2010). In short, application has been pursued across a range of speech processing subdomains, each of which is relevant to early language development. The result is a substantial and growing body of research demonstrating how to address speech-specific questions in infants and young children using fNIRS. The technology also is being used to characterize speech and language processing in older children (e.g., Jasińska, Berens, Kovelman, & Petitto, 2017). These and other findings provide a solid foundation for characterizing what a normal hearing infant’s response to speech should look like, a necessary step for the purposes of identifying when a child is not developing normally.

Identifying speech processing in adults that deviates from “typical” patterns will also improve our understanding of sources of variability in outcomes for cochlear implant users. Postlingually deafened adults with implants often demonstrate impressive speech recognition performance in ideal listening conditions, but problems in central hearing abilities remain, including poor understanding in less ideal hearing situations, such as in competitive listening (i.e., speech in noise) and in the perception of suprasegmental aspects of speech (i.e., prosodic processing). There is also substantial variability across individuals, a fact that continues to stymie researchers. An important advantage of fNIRS for addressing these and other questions is that it is completely silent. Although fMRI is not currently an option for testing cochlear implant users of any age, researchers often use fMRI data collected from normal hearing individuals to guide expectations about “good” and “poor” cortical processing patterns in cochlear implant users. Given that scanner noise influences any fMRI data collected during auditory processing tasks (Peelle, 2014), such an approach may well be flawed. Thus, a technique that can establish normal hearing individuals’ cortical processing patterns while they process speech in silence can guide the identification of deviant patterns in postlingually deafened cochlear implant users (Peelle, 2017).

In truth, relatively little work has been done using fNIRS to examine speech and language processing in normal hearing adults; with its unparalleled spatial resolution, fMRI is the ideal modality to answer such questions. But interest in applying fNIRS to investigate central auditory processing has been growing (Chen, Sandmann, Thorne, Herrmann, & Debener, 2015; Hong & Santosa, 2016). Recently, Hassanpour and colleagues (Hassanpour, Eggebrecht, Culver, & Peelle, 2015) used a high density (i.e., spatially sensitive) form of fNIRS in an auditory sentence comprehension task to evaluate the technology’s ability to map the cortical networks that support speech processing. Using sentences with two levels of linguistic complexity and a control condition consisting of unintelligible noise-vocoded speech, these researchers were able to map a hierarchically organized speech network consistent with results from fMRI studies using the same stimuli. This marks an important advance in the specificity of fNIRS speech processing data, because processing connected speech is substantially more complex than processing a single syllable or single word, engaging broader cortical networks (Friederici & Gierhan, 2013; Price, 2012). Thus, the Hassanpour et al. study demonstrates that accurate characterization of the activation patterns underlying normal speech processing can be achieved using fNIRS.

Finally, for cochlear implant users, understanding what happens in the brain during the processing of degraded speech is just as important as understanding what happens during speech processing in ideal listening conditions. Fortunately, the amount of research using fMRI to delineate the neurocognitive processes underlying effortful listening has exploded in recent years (e.g., Adank, Davis, & Hagoort, 2012; Eckert, Teubner-Rhodes, & Vaden, 2016; Erb, Henry, Eisner, & Obleser, 2013; Golestani, Hervais-Adelman, Obleser, & Scott, 2013; Hervais-Adelman, Carlyon, Johnsrude, & Davis, 2012; Lewis & Bates, 2013; Scott & McGettigan, 2013; Wild et al., 2012), providing substantial evidence that cortical activation patterns during effortful listening deviate markedly from those observed during the processing of clear speech. There is far less evidence from fNIRS, but recent work, our own and others’, is beginning to make the transition across imaging modalities (Pollonini et al., 2014; Wijayasiri, Hartley, & Wiggins, 2017).

4. Functional near-infrared spectroscopy as a research tool: challenges and insights

Over the past fifteen years, my colleagues and I have worked to develop this brain-based measure as a supplement to existing techniques of measuring speech and spoken language-related functions in infants and young children, both in the lab and in the clinic. In the process, we transitioned from a four-channel system to a 140-channel system, allowing us to generate topographic activation maps of the auditory cortex based on high-density sampling (Olds et al., 2016; Pollonini et al., 2014; Sevy et al., 2010).

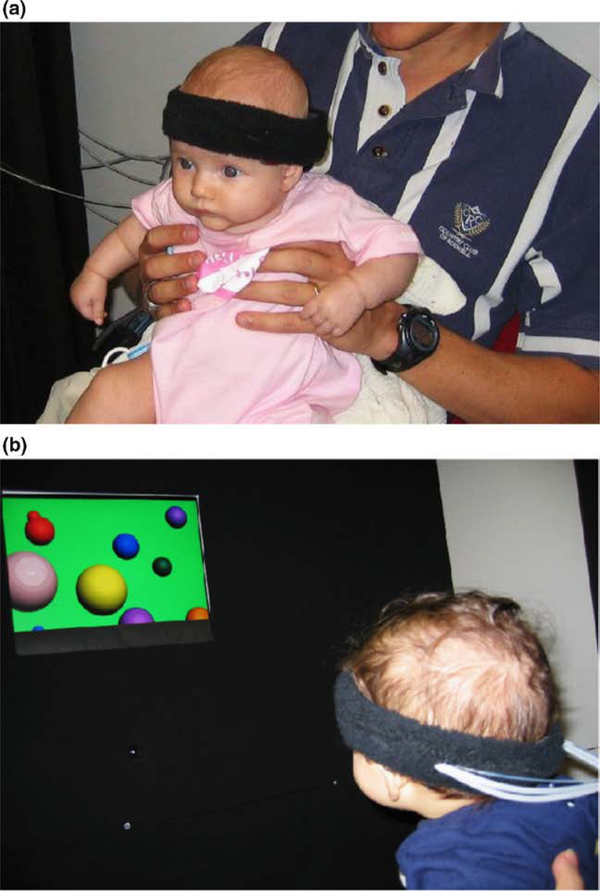

Our focus initially was entirely on typically developing infants, the goal being to compare changes in regional cerebral blood volume and oxygenation within and between age groups. Using a continuous-wave fNIRS system, we conducted several studies relating early functional patterns of activation in infants’ brains to their behavioral performance in traditional looking time paradigms (Bortfeld, Fava, & Boas, 2009; Bortfeld, Wruck, & Boas, 2007; Fava, Hull, Baumbauer, & Bortfeld, 2014; Fava, Hull, & Bortfeld, 2014; Fava, Hull, & Bortfeld, 2011; Wilcox et al., 2009; Wilcox, Bortfeld, Woods, Wruck, & Boas, 2005, 2008). In this way, we were able to establish the efficacy of using fNIRS to identify meaningful patterns of brain activity as they relate to early infant perceptual and cognitive development (Figure 1), and speech perception and processing in particular. At the same time, we extended the use of fNIRS to monitor cortical activity in adults during speech perception and production tasks (Chen, Vaid, Boas, & Bortfeld, 2011; Chen, Vaid, Bortfeld, & Boas, 2008; Hull, Bortfeld, & Koons, 2009). We also introduced another form of optical imaging, frequency-domain near-infrared spectroscopy, as an infant brain monitoring device (Franceschini et al., 2007). Together, these studies helped establish fNIRS as a viable tool for cognitive research on a range of topics.

Figure 1. Normal hearing infant fitted with an early fNIRS array (a) and another oriented towards stimulus during preferential looking paradigm (b).

Figure adapted from (Bortfeld et al., 2007).

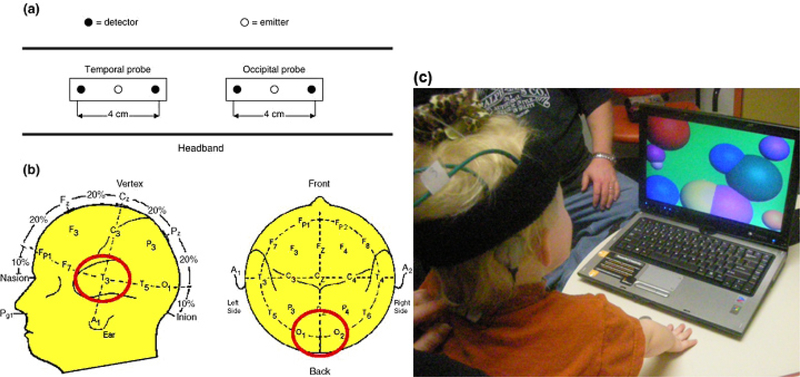

Not surprisingly, the transition from testing typically developing infants and toddlers to pediatric cochlear implant users was slow. We first had to demonstrate that the fNIRS system would be uncorrupted by the implant itself. We also had to learn to accommodate the unique demands of testing in an active pediatric hearing clinic (Figure 2). One factor critical to maintaining a high signal-to-noise ratio and good quality recording is the degree of contact between each optode and the scalp. Hair is a problem in fNIRS recordings for two reasons: it interferes with scalp-optode contact and its pigments scatter and absorb NIR light, attenuating whatever signal is detected. Particularly problematic is thick, dark hair. Researchers can (and do) spend considerable time and effort optimizing the optode positioning to maximize the signal-to-noise ratio. With the children, we found that a dab of saline gel helped restrain hair that has to be moved out of the way. Needless to say, this is why the best recordings often come from subjects who are bald (such as babies or elderly individuals) or who have thin, light colored hair. But our development of a scalp-optode coupling measure helped us overcome this limitation (Pollonini, Bortfeld, & Oghalai, 2016). This numerically-based application computes an objective measure of the signal-to-noise ratio related to optical coupling to the scalp for each measurement channel, an approach that is akin to electrode conductivity testing used in electroencephalography. At the optode level, it determines and displays the coupling status of each source-detector pair in real time on a digital model of a human head. This helped us shorten pre-acquisition preparation time by providing a visual display of which optodes require further adjustment for optimum scalp coupling, thus allowing us to maximize the signal-to-noise ratio of all optical channels contributing to functional hemodynamic mapping. 
The application has been implemented in a software tool, PHOEBE (Placing Headgear Optodes Efficiently Before Experimentation), which is freely available for use by the fNIRS community (Pollonini, Bortfeld, & Oghalai, 2016).
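
The idea of an objective, per-channel coupling check can be illustrated with a minimal sketch. It is not the PHOEBE implementation. It assumes, as one plausible criterion, that a well-coupled source-detector pair picks up the cardiac pulsation at both wavelengths, so the two raw signals of a channel are strongly correlated. The function names, the 0.75 threshold, and the toy signals are all hypothetical.

```python
import math

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0  # a flat signal carries no pulsation to correlate
    return sxy / math.sqrt(sxx * syy)

def coupling_status(wl1, wl2, threshold=0.75):
    """Label a channel 'good' or 'adjust' from its two wavelength signals.

    The threshold is an assumed value chosen for illustration only.
    """
    return "good" if pearson(wl1, wl2) >= threshold else "adjust"

# Toy data (assumed): 10 s sampled at 10 Hz with a 1.2 Hz "cardiac" oscillation.
fs, pulse_hz = 10, 1.2
t = [i / fs for i in range(100)]
pulse = [math.sin(2 * math.pi * pulse_hz * ti) for ti in t]

# Well-coupled channel: both wavelengths carry the pulse, with different gains.
good_wl1 = [1.0 + 0.05 * p for p in pulse]
good_wl2 = [0.8 + 0.03 * p for p in pulse]

# Poorly coupled channel: the second wavelength shows an unrelated oscillation.
bad_wl2 = [0.8 + 0.03 * math.sin(2 * math.pi * 3.7 * ti + 1.0) for ti in t]

print(coupling_status(good_wl1, good_wl2))
print(coupling_status(good_wl1, bad_wl2))
```

In practice the raw signals would first be filtered to the cardiac band, and the resulting status of each channel could drive a color-coded display like the one described above.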

Figure 2. Example of early fNIRS array on pediatric cochlear implant user.

(a&b) Localization of the probe over T3 (for left temporal auditory cortex measurements) and over O1 and O2 (for occipital visual cortex measurements). (c) A child undergoing fNIRS testing for cortical activity during the cochlear implant activation session. The computer is used to provide auditory (experimental) stimuli and visual (control) stimuli. The black NIRS headband and the brown cochlear implant behind the ear are visible.

Additional considerations relevant to testing cochlear implant users of any age center on probe design and placement. Of concern are the logistical challenges of securing the optode array around the external magnet of the implant itself. Depending on the array, the external magnet can interfere with probe placement. In such circumstances (i.e., high-density arrays), it is fine to place the headset over the magnet. While this obstructs the scalp contact of certain channels, the remaining channels will acquire data without any interference. In this case, however, using a digital localizer to register only those sources and detectors that are not overlying the external magnet is helpful. In our experience thus far, the external magnet is generally posterior and inferior enough that it does not interfere with placement of more than a couple of optodes, thus permitting measurement of responses within all other regions of interest, particularly bilateral temporal, visual, and frontal areas. Nonetheless, this is a practical issue that must be considered when processing data: how to account for lost data at the individual level. For lower-density arrays this may be less of an issue, but for high-density arrays that target the area where an implant magnet is situated, it will limit data acquisition. While there are merits to using a standard localization approach for all participants, for implant users it may well make more sense to implement a participant-specific optode placement approach that takes the external magnet location into account to maximize data acquisition from key regions of interest. This is an issue that will no doubt continue to be addressed by future research. Regardless of the approach used, care always must be taken not to displace the magnet, which would interrupt acoustic-to-electrical signal transmission.
Finally, when placing the optode array in the crease between the pinna and the temporal skin (i.e., behind the ear), it is important to avoid brushing the implant’s microphone, which creates the sensation of unpleasant noise for the implant user. This can be particularly problematic if the user is a young child who already may be anxious about wearing the probe.

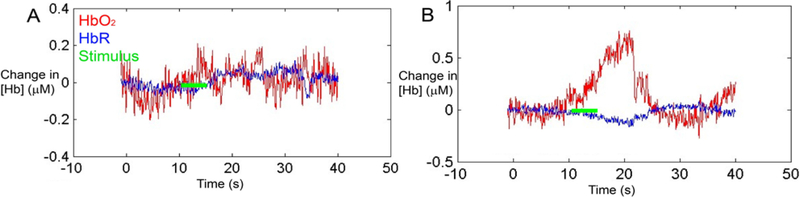

Our initial attempt at data acquisition from pediatric implant users was successful (Figure 3), demonstrating that fNIRS was able to measure differences in cortical activation from pre- to post-implant even in children who never before had been exposed to sound. Based on these data, we were able to pursue what proved to be the first research study using fNIRS with pediatric cochlear implant users (Sevy et al., 2010). Our goal was to compare speech-evoked cortical responses across four subject cohorts: normal-hearing adults, normal-hearing children, deaf children who had over 4 months of experience hearing through a cochlear implant, and deaf children who were tested on the day of initial cochlear implant activation. The speech stimuli consisted of digital recordings from children’s stories in English. Critically, we were able to successfully record auditory cortical activity using a four-channel continuous-wave fNIRS system in 100% of normal-hearing adults, 82% of normal-hearing children, 78% of deaf children who had been implanted for at least four months, and 78% of deaf children on the day of their initial implant activation.

Figure 3. Representative measurements from the auditory cortex in a deaf child in response to auditory stimulation on the day of cochlear implant activation.

(A) Before cochlear implant activation, auditory stimulation (green line) produced no changes in oxygenated and deoxygenated hemoglobin (HbO2 (red) and HbR (blue), respectively). (B) Immediately after cochlear implant activation, auditory stimulation evoked an increase in HbO2 and a decrease in HbR. This is the expected hemodynamic response to an increase in neuronal activity.

In this early work, it was critical to validate our fNIRS paradigm against fMRI data obtained with the same paradigm. We did so in three normal-hearing adults, finding similar speech-evoked responses in superior temporal gyrus using both fNIRS and fMRI. These results served to demonstrate that fNIRS is a feasible neuroimaging technique in implant users. We later evaluated whether fNIRS was sensitive enough to detect differences in cortical activation evoked by different quality levels of speech in normal-hearing individuals (Pollonini et al., 2014), an important proof-of-concept for application in implant users as a means of identifying quality of implant-based speech perception. In this case, we began using a 140-channel fNIRS system (NIRScout, NIRx Medical Technologies LLC, Glen Head, NY), which allowed us to design a tight array of source-detector pairs and thus provide spatial oversampling of the cortical tissue of interest. Averaging between channels further improved the signal-to-noise ratio (SNR) of the data obtained. By increasing the number of channels, we were able to generate topographic maps of the region of interest, allowing measurement of area of activation and center of mass of activation for each individual tested.
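
To make the two map-based measures concrete, the following is a minimal sketch, not the analysis code used in the studies cited here. It assumes a topographic map stored as a small 2D grid of activation values, a hypothetical activation threshold, and a hypothetical pixel area. Area of activation is taken as the number of suprathreshold pixels scaled by pixel area, and center of mass as the activation-weighted mean position of those pixels.

```python
def activation_metrics(grid, threshold, pixel_area_cm2=1.0):
    """Return (area, (row_com, col_com)) for suprathreshold pixels of a 2D map.

    grid           -- list of rows of activation values (hypothetical units)
    threshold      -- pixels strictly above this value count as active (assumed)
    pixel_area_cm2 -- cortical area represented by one pixel (assumed)
    """
    total = 0.0
    row_sum = 0.0
    col_sum = 0.0
    count = 0
    for r, row in enumerate(grid):
        for c, value in enumerate(row):
            if value > threshold:
                count += 1
                total += value
                row_sum += r * value
                col_sum += c * value
    if count == 0:
        return 0.0, None  # nothing active, so no center of mass is defined
    return count * pixel_area_cm2, (row_sum / total, col_sum / total)

# Toy map (assumed values): a 2 x 2 block of strong activation plus weak noise.
toy_map = [
    [0.0, 0.1, 0.0, 0.0],
    [0.1, 1.0, 1.0, 0.0],
    [0.0, 1.0, 1.0, 0.1],
]
area, com = activation_metrics(toy_map, threshold=0.5)
print(area, com)
```

Computed per participant and per stimulus condition, the two numbers give a compact summary of how large an activated region is and where it is centered.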

Our experimental paradigm consisted of four different stimulus types: normal speech, channelized (vocoded) speech, scrambled speech, and environmental noise (for previous use of these stimulus types as cross-controls see, for example, Humphries, Willard, Buchsbaum, & Hickok, 2001). Results clearly demonstrated that differences in speech intelligibility produced different patterns of cortical activation in the temporal cortex as measured using fNIRS. Specifically, in normal hearing adults, the strongest cortical response was evoked by normal speech, less region-specific activation was evoked by distorted speech, and the smallest response was to environmental sounds. We again validated our experimental paradigm against fMRI data collected from a single participant, with consistent outcomes across the imaging modalities. Critically, results from this study served to demonstrate that fNIRS detected differences in the response of the auditory cortex to variations in speech intelligibility in normal hearing adults. These findings were a necessary step in our journey to using fNIRS as a diagnostic tool for speech processing in cochlear implant users, showing that it provided objective measurements of whether a normal-hearing subject was hearing normal or distorted speech. In other words, at this point it was clear that fNIRS had the potential to assess differential levels of cortical activation in the brains of cochlear implant users during speech processing.

Because the Pollonini et al. (2014) study did not involve cochlear implant users, our next move was to test adult implant users with a similar paradigm (Olds et al., 2016), using comparable stimulus conditions and fNIRS instrumentation. Our goal in designing this study was to better understand the variability in speech perception across cochlear implant users with different speech perception abilities. Again, a NIRScout instrument (NIRx Medical Technologies, LLC, Glen Head, NY) with 140 channels was used to record the cortical responses in bilateral temporal regions of 32 postlingually deafened adults hearing through a cochlear implant and 35 normal-hearing adults. As in our earlier study, four auditory stimuli with varying degrees of speech intelligibility were employed: normal speech, channelized speech, scrambled speech and environmental noise. Gold standard behavioral measures (e.g., speech reception thresholds, monosyllabic consonant-nucleus-consonant word scores and AzBio sentence recognition scores) were used as the basis for assessing individual speech perception abilities across the implant users.

Results demonstrated that the cortical activation pattern in implanted adults with good speech perception was similar to that of normal hearing controls. Consistent with our earlier findings, in both these groups, as speech stimuli became less intelligible, less cortical activation was observed. In contrast, implant users with poor speech perception displayed large, indistinguishable patterns of cortical activation across all four stimulus classes. As we hypothesized, the findings of this study demonstrated that activation patterns in the auditory cortex of implant recipients correlate with the quality of speech perception and do so in interesting ways. Importantly, when the fNIRS measurements were repeated with each user’s implant turned off, we observed significantly reduced cortical activations in all participants. This confirmed that, although sound information was being conveyed to the auditory cortex of all the implant users, those with poor speech perception abilities were unable to discriminate the intelligible speech from the other forms of information they were hearing.

5. Potential theoretical and clinical applications

Since the publication of our initial findings using fNIRS with pediatric cochlear implant users (Sevy et al., 2010), the research community has embraced the technology for this purpose (e.g., Bisconti et al., 2016). In particular, there has been an effort to use fNIRS to understand cortical reorganization associated with deafness and cochlear implantation (Dewey & Hartley, 2015; Lawler, Wiggins, Dewey, & Hartley, 2015). Based on their preliminary findings, these authors reported that auditory deprivation is associated with cross-modal plasticity of visual inputs to auditory cortex. Practically speaking, these results demonstrate the ability of fNIRS to accurately record cortical changes associated with neural plasticity in profoundly deaf individuals. The application of fNIRS to understanding plasticity in the adult implant user population has since accelerated (see Anderson, Wiggins, Kitterick, & Hartley, 2017, for a recent example), highlighting the promise of fNIRS as an objective neuroimaging tool to detect and monitor cross-modal plasticity prior to and following cochlear implantation.

Promising future clinical applications of fNIRS include using cortical responsivity to guide post-implant programming in the service of improving speech and language outcomes in both child and adult users. As outlined here, the programming process is ongoing and critical to ensure that sound information is being conveyed accurately to the auditory nerve and, ultimately, to the auditory cortex. If the language areas of the brain are appropriately activated, then the child has the best chance of developing normal speech and language. Early identification of children who are perceiving poorly is therefore critical, as prompt intervention can prevent delay in both linguistic and psychosocial development (Teagle, 2016). Likewise, an objective measure of how well speech information is processed beyond the auditory cortex across the range of perceiver abilities, from good to poor, will inform models of how different patterns of corticocortical connectivity relate to post-implant performance.

Critical to fNIRS being used for this purpose is demonstrating its repeatability and reliability at both the group and the individual levels. Recent work highlights its repeatability in infants (Blasi, Lloyd-Fox, Johnson, & Elwell, 2014) and in adults (Wiggins, Anderson, Kitterick, & Hartley, 2016), particularly at the group level. Substantially more work will be needed on this front as this will undoubtedly impact the transition of this technique from the research to the clinical setting, where obtaining interpretable individual measures will be an important factor in adoption of the technology.

In short, fNIRS is ideal for evaluating sound-evoked brain activation in cochlear implant users. As outlined in this review, the addition of this technology to the measurement toolkit is helping researchers and clinicians achieve the long-term goal of ensuring that cochlear implant users of all ages obtain better hearing and a higher quality of life.

Figure 4. fNIRS headset placement over a cochlear implant device.

A) The location of the cochlear implant’s external magnet interferes with headset placement over the temporal area. B) The fNIRS headset is simply apposed over the magnet (shaded area). C) Custom analytic software demonstrating the quality of scalp contact for each optode using live fNIRS recordings. The optodes obstructed by the magnet postero-superiorly lose their scalp contact (red), while the remaining optodes are unaffected and can still be used (green). The status of scalp contact remained indeterminate for certain optodes (yellow). Figure adapted from (Saliba, Bortfeld, Levitin, & Oghalai, 2016).

References

- Adank P, Davis MH, & Hagoort P (2012). Neural dissociation in processing noise and accent in spoken language comprehension. Neuropsychologia, 50(1), 77–84. 10.1016/j.neuropsychologia.2011.10.024

- Anderson CA, Wiggins IM, Kitterick PT, & Hartley DEH (2017). Adaptive benefit of cross-modal plasticity following cochlear implantation in deaf adults. Proceedings of the National Academy of Sciences, 114(38), 201704785. 10.1073/pnas.1704785114

- Beauchamp MS, Beurlot MR, Fava E, Nath AR, Parikh NA, Saad ZS, … Oghalai JS (2011). The developmental trajectory of brain-scalp distance from birth through childhood: Implications for functional neuroimaging. PLoS ONE, 6(9), 1–9. 10.1371/journal.pone.0024981

- Bhatt M, Ayyalasomayajula KR, & Yalavarthy PK (2016). Generalized Beer–Lambert model for near-infrared light propagation in thick biological tissues. Journal of Biomedical Optics, 21(7), 076012. 10.1117/1.JBO.21.7.076012

- Bisconti S, Shulkin M, Hu X, Basura GJ, Kileny PR, & Kovelman I (2016). Functional near-infrared spectroscopy brain imaging investigation of phonological awareness and passage comprehension abilities in adult recipients of cochlear implants. Journal of Speech, Language, and Hearing Research, 59(2), 239–253.

- Blasi A, Lloyd-Fox S, Johnson MH, & Elwell C (2014). Test–retest reliability of functional near infrared spectroscopy in infants. Neurophotonics, 1(2), 025005. 10.1117/1.NPh.1.2.025005

- Bortfeld H, Fava E, & Boas D (2009). Identifying cortical lateralization of speech processing in infants using near-infrared spectroscopy. Developmental Neuropsychology, 34(1), 52–65. 10.1080/87565640802564481

- Bortfeld H, Wruck E, & Boas DA (2007). Assessing infants’ cortical response to speech using near-infrared spectroscopy. NeuroImage, 34(1), 407–415.

- Boyle CA, Boulet S, Schieve LA, Cohen RA, Blumberg SJ, Yeargin-Allsopp M, … Kogan MD (2011). Trends in the prevalence of developmental disabilities in US children, 1997–2008. Pediatrics, 127(6), 1034–1042. Retrieved from http://pediatrics.aappublications.org/content/127/6/1034.abstract

- Caldwell MT, Jiam NT, & Limb CJ (2017). Assessment and improvement of sound quality in cochlear implant users. Laryngoscope Investigative Otolaryngology, 2(3), 119–124. 10.1002/lio2.71

- Chen HC, Vaid J, Boas D, & Bortfeld H (2011). Examining the phonological neighborhood density effect using near infrared spectroscopy. Human Brain Mapping, 32, 1363–1370. 10.1002/hbm.21115

- Chen HC, Vaid J, Bortfeld H, & Boas DA (2008). Optical imaging of phonological processing in two distinct orthographies. Experimental Brain Research, 184, 427–433. 10.1007/s00221-007-1200-0

- Chen LC, Sandmann P, Thorne JD, Herrmann CS, & Debener S (2015). Association of concurrent fNIRS and EEG signatures in response to auditory and visual stimuli. Brain Topography, 28(5), 710–725. 10.1007/s10548-015-0424-8

- Ching TYC, Day J, Van Buynder P, Hou S, Zhang V, Seeto M, … Flynn C (2014). Language and speech perception of young children with bimodal fitting or bilateral cochlear implants. Cochlear Implants International, 15(sup1), S43–S46. 10.1179/1467010014Z.000000000168

- Ciscare GKS, Mantello EB, Fortunato-Queiroz CAU, Hyppolito MA, & Dos Reis ACMB (2017). Auditory speech perception development in relation to patient’s age with cochlear implant. International Archives of Otorhinolaryngology, 21(3), 206–212. 10.1055/s-0036-1584296

- Dahmen JC, & King AJ (2007). Learning to hear: plasticity of auditory cortical processing. Current Opinion in Neurobiology, 17(4), 456–464. 10.1016/j.conb.2007.07.004

- Dewey RS, & Hartley DEH (2015). Cortical cross-modal plasticity following deafness measured using functional near-infrared spectroscopy. Hearing Research, 325, 55–63. 10.1016/j.heares.2015.03.007

- Dorman MF, Sharma A, Gilley P, Martin K, & Roland P (2007). Central auditory development: Evidence from CAEP measurements in children fit with cochlear implants. Journal of Communication Disorders, 40(4), 284–294. 10.1016/j.jcomdis.2007.03.007

- Eckert MA, Teubner-Rhodes S, & Vaden KI (2016). Is listening in noise worth it? The neurobiology of speech recognition in challenging listening conditions. Ear and Hearing, 37, 101S–110S. 10.1097/AUD.0000000000000300

- Eggebrecht AT, Ferradal SL, Robichaux-Viehoever A, Hassanpour MS, Dehghani H, Snyder AZ, … Culver JP (2014). Mapping distributed brain function and networks with diffuse optical tomography. Nature Photonics, 8(6), 448–454. 10.1038/nphoton.2014.107

- Elwell CE, & Cooper CE (2011). Making light work: illuminating the future of biomedical optics. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences, 369(1955), 4358–4379. 10.1098/rsta.2011.0302

- Erb J, Henry MJ, Eisner F, & Obleser J (2013). The brain dynamics of rapid perceptual adaptation to adverse listening conditions. Journal of Neuroscience, 33(26), 10688–10697. 10.1523/JNEUROSCI.4596-12.2013

- Fava E, Hull R, Baumbauer K, & Bortfeld H (2014). Hemodynamic responses to speech and music in preverbal infants. Child Neuropsychology, 20(4). 10.1080/09297049.2013.803524

- Fava E, Hull R, & Bortfeld H (2011). Linking behavioral and neurophysiological indicators of perceptual tuning to language. Frontiers in Psychology, 2(AUG).

- Fava E, Hull R, & Bortfeld H (2014). Dissociating cortical activity during processing of native and non-native audiovisual speech from early to late infancy. Brain Sciences, 4, 471–487. 10.3390/brainsci4030471

- Feng G, Ingvalson EM, Grieco-Calub TM, Roberts MY, Ryan ME, Birmingham P, … Wong PCM (2018). Neural preservation underlies speech improvement from auditory deprivation in young cochlear implant recipients. Proceedings of the National Academy of Sciences, 201717603. 10.1073/pnas.1717603115

- Ferrari M, & Quaresima V (2012). A brief review on the history of human functional near-infrared spectroscopy (fNIRS) development and fields of application. NeuroImage, 63(2), 921–935. 10.1016/j.neuroimage.2012.03.049

- Fisher LM, Wang N, Barnard JM, Fisher LM, Johnson KC, Eisenberg LS, … Niparko JK (2015). A prospective longitudinal study of U.S. children unable to achieve open-set speech recognition 5 years after cochlear implantation. Otology & Neurotology, 36(6), 985–992. 10.1097/MAO.0000000000000723

- Franceschini MA, Thaker S, Themelis G, Krishnamoorthy KK, Bortfeld H, Diamond SG, … Grant PE (2007). Assessment of infant brain development with frequency-domain near-infrared spectroscopy. Pediatric Research, 61(5), 546–551. 10.1203/pdr.0b013e318045be99

- Friederici AD, & Gierhan SME (2013). The language network. Current Opinion in Neurobiology, 23(2), 250–254. 10.1016/j.conb.2012.10.002

- Friesen LM, & Picton TW (2010). A method for removing cochlear implant artifact. Hearing Research, 259(1–2), 95–106. 10.1016/j.heares.2009.10.012

- Gagnon L, Cooper RJ, Yücel MA, Perdue KL, Greve DN, & Boas DA (2012). Short separation channel location impacts the performance of short channel regression in NIRS. NeuroImage, 59(3), 2518–2528. 10.1016/j.neuroimage.2011.08.095

- Geers AE, Brenner CA, & Tobey EA (2011). Long-term outcomes of cochlear implantation in early childhood: Sample characteristics and data collection methods. Ear and Hearing, 32(1), 2S–12S.

- Geers AE, Nicholas JG, Tobey E, & Davidson L (2016). Persistent language delay versus late language emergence in children with early cochlear implantation. Journal of Speech, Language, and Hearing Research, 59(February), 155–170. 10.1044/2015

- Geers AE, & Sedey AL (2011). Language and verbal reasoning skills in adolescents with 10 or more years of cochlear implant experience. Ear and Hearing, 32(1 Suppl), 39S–48S. 10.1097/AUD.0b013e3181fa41dc

- Geers AE, Tobey EA, & Moog JS (2011). Editorial: Long-Term Outcomes of Cochlear Implantation in Early Childhood. Ear and Hearing, 32(Ci), 1S. 10.1097/AUD.0b013e3181ffd5dc

- Gervain J, Macagno F, Cogoi S, Pena M, & Mehler J (2008). The neonate brain detects speech structure. Proceedings of the National Academy of Sciences, 105(37), 14222–14227. 10.1073/pnas.0806530105

- Glick H, & Sharma A (2017). Cross-modal plasticity in developmental and age-related hearing loss: Clinical implications. Hearing Research, 343, 191–201. 10.1016/j.heares.2016.08.012

- Golestani N, Hervais-Adelman A, Obleser J, & Scott SK (2013). Semantic versus perceptual interactions in neural processing of speech-in-noise. NeuroImage, 79, 52–61. 10.1016/j.neuroimage.2013.04.049

- Goodwin JR, Gaudet CR, & Berger AJ (2014). Short-channel functional near-infrared spectroscopy regressions improve when source-detector separation is reduced. Neurophotonics, 1(1), 015002. 10.1117/1.NPh.1.1.015002

- Gransier R, Deprez H, Hofmann M, Moonen M, van Wieringen A, & Wouters J (2016). Auditory steady-state responses in cochlear implant users: Effect of modulation frequency and stimulation artifacts. Hearing Research, 335, 149–160. 10.1016/j.heares.2016.03.006

- Hassanpour MS, Eggebrecht AT, Culver JP, & Peelle JE (2015). Mapping cortical responses to speech using high-density diffuse optical tomography. NeuroImage, 117, 319–326. 10.1016/j.neuroimage.2015.05.058 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hervais-Adelman AG, Carlyon RP, Johnsrude IS, & Davis MH (2012). Brain regions recruited for the effortful comprehension of noise-vocoded words. Language and Cognitive Processes, 27(7–8), 1145–1166. 10.1080/01690965.2012.662280 [DOI] [Google Scholar]

- Homae F, Watanabe H, Nakano T, Asakawa K, & Taga G (2006). The right hemisphere of sleeping infant perceives sentential prosody. Neuroscience Research, 54(4), 276–280. 10.1016/j.neures.2005.12.006 [DOI] [PubMed] [Google Scholar]

- Homae F, Watanabe H, Nakano T, & Taga G (2007). Prosodic processing in the developing brain. Neuroscience Research, 59(1), 29–39. 10.1016/j.neures.2007.05.005 [DOI] [PubMed] [Google Scholar]

- Hong KS, & Santosa H (2016). Decoding four different sound-categories in the auditory cortex using functional near-infrared spectroscopy. Hearing Research, 333, 157–166. 10.1016/j.heares.2016.01.009 [DOI] [PubMed] [Google Scholar]

- Hull R, Bortfeld H, & Koons S (2009). Near-infrared spectroscopy and cortical responses to speech production. The Open Neuroimaging Journal. 10.2174/1874440000903010026

- Humphries C, Willard K, Buchsbaum B, & Hickok G (2001). Role of anterior temporal cortex in auditory sentence comprehension: An fMRI study. NeuroReport, 12(8), 1749–1752. 10.1097/00001756-200106130-00046

- Huppert TJ, Hoge RD, Diamond SG, Franceschini MA, & Boas DA (2006). A temporal comparison of BOLD, ASL, and NIRS hemodynamic responses to motor stimuli in adult humans. NeuroImage, 29(2), 368–382. 10.1016/j.neuroimage.2005.08.065

- Huve G, Takahashi K, & Hashimoto M (2017). Brain activity recognition with a wearable fNIRS using neural networks. 2017 IEEE International Conference on Mechatronics and Automation (ICMA), 1573–1578. 10.1109/ICMA.2017.8016051

- Jasińska KK, Berens MS, Kovelman I, & Petitto LA (2017). Bilingualism yields language-specific plasticity in left hemisphere’s circuitry for learning to read in young children. Neuropsychologia, 98(November 2016), 34–45. 10.1016/j.neuropsychologia.2016.11.018

- Jiang H, Paulsen KD, Osterberg UL, Pogue BW, & Patterson MS (1996). Optical image reconstruction using frequency-domain data: simulations and experiments. Journal of the Optical Society of America A, 13(2), 253. 10.1364/JOSAA.13.000253

- Johnson C, & Goswami U (2010). Phonological awareness, vocabulary, and reading in deaf children with cochlear implants. Journal of Speech, Language, and Hearing Research, 53(April), 237–262. 10.1044/1092-4388(2009/08-0139)

- Kassab A, Le Lan J, Tremblay J, Vannasing P, Dehbozorgi M, Pouliot P, … Nguyen DK (2018). Multichannel wearable fNIRS-EEG system for long-term clinical monitoring. Human Brain Mapping, 39(1), 7–23. 10.1002/hbm.23849

- Kral A (2013). Auditory critical periods: A review from system’s perspective. Neuroscience, 247, 117–133. 10.1016/j.neuroscience.2013.05.021

- Kral A, Yusuf PA, & Land R (2017). Higher-order auditory areas in congenital deafness: Top-down interactions and corticocortical decoupling. Hearing Research, 343, 50–63. 10.1016/j.heares.2016.08.017

- Lawler CA, Wiggins IM, Dewey RS, & Hartley DEH (2015). The use of functional near-infrared spectroscopy for measuring cortical reorganisation in cochlear implant users: A possible predictor of variable speech outcomes? Cochlear Implants International, 16(S1), S30–S32. 10.1179/1467010014Z.000000000230

- Lenarz M, Joseph G, Sönmez H, Büchner A, & Lenarz T (2011). Effect of technological advances on cochlear implant performance in adults. Laryngoscope, 121(12), 2634–2640. 10.1002/lary.22377

- Lewis GJ, & Bates TC (2013). The long reach of the gene. Psychologist, 26(3), 194–198. 10.1162/jocn

- Lund E (2016). Vocabulary knowledge of children with cochlear implants: A meta-analysis. Journal of Deaf Studies and Deaf Education, 21(2), 107–121. 10.1093/deafed/env060

- Mc Laughlin M, Lopez Valdes A, Reilly RB, & Zeng FG (2013). Cochlear implant artifact attenuation in late auditory evoked potentials: A single channel approach. Hearing Research, 302, 84–95. 10.1016/j.heares.2013.05.006

- McKendrick R, Parasuraman R, & Ayaz H (2015). Wearable functional near infrared spectroscopy (fNIRS) and transcranial direct current stimulation (tDCS): expanding vistas for neurocognitive augmentation. Frontiers in Systems Neuroscience, 9(March), 1–14. 10.3389/fnsys.2015.00027

- Meek JH, Firbank M, Elwell CE, Atkinson J, Braddick O, & Wyatt JS (1998). Regional Hemodynamic Responses to Visual Stimulation in Awake Infants. Pediatric Research, 43, 840. 10.1203/00006450-199806000-00019

- Miller S, & Zhang Y (2014). Validation of the cochlear implant artifact correction tool for auditory electrophysiology. Neuroscience Letters, 577, 51–55. 10.1016/j.neulet.2014.06.007

- Minagawa-Kawai Y, Mori K, Naoi N, & Kojima S (2007). Neural attunement processes in infants during the acquisition of a language-specific phonemic contrast. Journal of Neuroscience, 27(2), 315–321. 10.1523/JNEUROSCI.1984-06.2007

- Minagawa-Kawai Y, van der Lely H, Ramus F, Sato Y, Mazuka R, & Dupoux E (2011). Optical Brain Imaging Reveals General Auditory and Language-Specific Processing in Early Infant Development. Cerebral Cortex, 21(2), 254–261. 10.1093/cercor/bhq082

- Mitchell RE, & Karchmer MA (2002). Chasing the mythical ten percent: Parental hearing status of deaf and hard of hearing students in the United States. Sign Language Studies, 4(2), 138–163.

- Miyamoto RT, Colson B, Henning S, & Pisoni D (2018). Cochlear implantation in infants below 12 months of age. World Journal of Otorhinolaryngology - Head and Neck Surgery, 3(4), 214–218. 10.1016/j.wjorl.2017.12.001

- Moberly AC, Castellanos I, Vasil KJ, Adunka OF, & Pisoni DB (2018). “Product” versus “process” measures in assessing speech recognition outcomes in adults with cochlear implants. Otology and Neurotology, 39(3). 10.1097/MAO.0000000000001694

- Niparko JK, Tobey EA, Thal DJ, Eisenberg LS, Wang N-Y, Quittner AL, & Fink NE (2010). Spoken language development in children following cochlear implantation. JAMA, 303(15), 1498–1506. 10.1001/jama.2010.451

- Nittrouer S, Sansom E, Low K, Rice C, & Caldwell-Tarr A (2014). Language structures used by kindergartners with cochlear implants: Relationship to phonological awareness, lexical knowledge and hearing loss. Ear and Hearing, 35(5), 506–518. 10.1097/AUD.0000000000000051

- Obrig H, Rossi S, Telkemeyer S, & Wartenburger I (2010). From acoustic segmentation to language processing: evidence from optical imaging. Frontiers in Neuroenergetics. Retrieved from https://www.frontiersin.org/article/10.3389/fnene.2010.00013

- Olds C, Pollonini L, Abaya H, Larky J, Loy M, Bortfeld H, … Oghalai JS (2016). Cortical activation patterns correlate with speech understanding after cochlear implantation. Ear and Hearing, 37(3). 10.1097/AUD.0000000000000258

- Ou H, Dunn CC, Bentler RA, & Zhang X (2008). Measuring cochlear implant satisfaction in postlingually deafened adults with the SADL inventory. Journal of the American Academy of Audiology, 19(9), 721–734. 10.3766/jaaa.19.9.7

- Pasley BN, David SV, Mesgarani N, Flinker A, Shamma SA, Crone NE, … Chang EF (2012). Reconstructing speech from human auditory cortex. PLoS Biology, 10(1). 10.1371/journal.pbio.1001251

- Paulraj MP, Subramaniam K, Yaccob S. Bin, Bin Adom AH, & Hema CR (2015). Auditory evoked potential response and hearing loss: A review. Open Biomedical Engineering Journal, 9, 17–24. 10.2174/1874120701509010017

- Peelle JE (2014). Methodological challenges and solutions in auditory functional magnetic resonance imaging. Frontiers in Neuroscience, 8, 253. 10.3389/fnins.2014.00253

- Peelle JE (2017). Optical neuroimaging of spoken language. Language, Cognition and Neuroscience, 32(7), 847–854. 10.1080/23273798.2017.1290810

- Peña M, Maki A, Kovacic D, Dehaene-Lambertz G, Koizumi H, Bouquet F, & Mehler J (2003). Sounds and silence: An optical topography study of language recognition at birth. Proceedings of the National Academy of Sciences, 100(20), 11702–11705. 10.1073/pnas.1934290100

- Pifferi A, Contini D, Mora AD, Farina A, Spinelli L, & Torricelli A (2016). New frontiers in time-domain diffuse optics, a review. Journal of Biomedical Optics, 21(9), 091310. 10.1117/1.JBO.21.9.091310

- Pinti P, Aichelburg C, Lind F, Power S, Swingler E, Merla A, … Tachtsidis I (2015). Using Fiberless, Wearable fNIRS to Monitor Brain Activity in Real-world Cognitive Tasks. Journal of Visualized Experiments, (106), 1–13. 10.3791/53336

- Piper SK, Krueger A, Koch SP, Mehnert J, Habermehl C, Steinbrink J, … Schmitz CH (2014). A wearable multi-channel fNIRS system for brain imaging in freely moving subjects. NeuroImage, 85, 64–71. 10.1016/j.neuroimage.2013.06.062

- Pollonini L, Bortfeld H, & Oghalai JS (2016). PHOEBE: A method for real time mapping of optodes-scalp coupling in functional near-infrared spectroscopy. Biomedical Optics Express, 7(12). 10.1364/BOE.7.005104

- Pollonini L, Olds C, Abaya H, Bortfeld H, Beauchamp MS, & Oghalai JS (2014). Auditory cortex activation to natural speech and simulated cochlear implant speech measured with functional near-infrared spectroscopy. Hearing Research, 309, 84–93. 10.1016/j.heares.2013.11.007

- Price CJ (2012). A review and synthesis of the first 20 years of PET and fMRI studies of heard speech, spoken language and reading. NeuroImage, 62(2), 816–847. 10.1016/j.neuroimage.2012.04.062

- Prochazka A (2017). Neurophysiology and neural engineering: a review. Journal of Neurophysiology, 118(2), 1292–1309. 10.1152/jn.00149.2017

- Quaresima V, Bisconti S, & Ferrari M (2012). A brief review on the use of functional near-infrared spectroscopy (fNIRS) for language imaging studies in human newborns and adults. Brain and Language, 121(2), 79–89. 10.1016/j.bandl.2011.03.009

- Roberts DS, Lin HW, Herrmann BS, & Lee DJ (2013). Differential cochlear implant outcomes in older adults. Laryngoscope, 123(8), 1952–1956. 10.1002/lary.23676

- Sakatani K, Xie Y, Lichty W, Li S, & Zuo H (1998). Language-activated cerebral blood oxygenation and hemodynamic changes of the left prefrontal cortex in poststroke aphasic patients. Stroke, 29(7), 12–14. 10.1161/01.STR.29.7.1299

- Saliba J, Bortfeld H, Levitin DJ, & Oghalai JS (2015). Functional near-infrared spectroscopy for neuroimaging in cochlear implant recipients. Hearing Research. 10.1016/j.heares.2016.02.005

- Santa Maria PL, & Oghalai JS (2014). When is the best timing for the second implant in pediatric bilateral cochlear implantation? Laryngoscope, 124(7), 1511–1512. 10.1002/lary.24465

- Sato H, Hirabayashi Y, Tsubokura H, Kanai M, Ashida T, Konishi I, … Maki A (2012). Cerebral hemodynamics in newborn infants exposed to speech sounds: A whole-head optical topography study. Human Brain Mapping, 33(9), 2092–2103. 10.1002/hbm.21350

- Sato H, Kiguchi M, Kawaguchi F, & Maki A (2004). Practicality of wavelength selection to improve signal-to-noise ratio in near-infrared spectroscopy. NeuroImage, 21(4), 1554–1562. 10.1016/j.neuroimage.2003.12.017

- Sato H, Takeuchi T, & Sakai KL (1999). Temporal cortex activation during speech recognition: An optical topography study. Cognition, 73(3), 55–66. 10.1016/S0010-0277(99)00060-8

- Scholkmann F, Kleiser S, Metz AJ, Zimmermann R, Mata Pavia J, Wolf U, & Wolf M (2014). A review on continuous wave functional near-infrared spectroscopy and imaging instrumentation and methodology. NeuroImage, 85, 6–27. 10.1016/j.neuroimage.2013.05.004

- Scott SK, & McGettigan C (2013). The neural processing of masked speech. Hearing Research, 303, 58–66. 10.1016/j.heares.2013.05.001

- Sevy ABG, Bortfeld H, Huppert TJ, Beauchamp MS, Tonini RE, & Oghalai JS (2010). Neuroimaging with near-infrared spectroscopy demonstrates speech-evoked activity in the auditory cortex of deaf children following cochlear implantation. Hearing Research, 270, 39–47. 10.1016/j.heares.2010.09.010

- Silva LAF, Couto MIV, Magliaro FCL, Tsuji RK, Bento RF, De Carvalho ACM, & Matas CG (2017). Cortical maturation in children with cochlear implants: Correlation between electrophysiological and behavioral measurement. PLoS ONE, 12(2), 1–18. 10.1371/journal.pone.0171177