Abstract

Background:

Epidemiologic studies often use diagnosis codes to identify dementia outcomes. It remains unknown to what extent cognitive screening test results add value in identifying dementia cases in big data studies leveraging electronic health record (EHR) data. We examined test scores from EHR data and compared results with dementia algorithms.

Methods:

This retrospective cohort study included patients 60+ years of age from Kaiser Permanente Washington (KPWA) during 2013–2018 and the Veterans Health Administration (VHA) during 2012–2015. Results from two cognitive screening exams, the Mini Mental State Examination (MMSE) and the Saint Louis University Mental Status Examination (SLUMS), were classified as showing dementia or not. Multiple dementia algorithms were created using combinations of diagnosis codes, pharmacy records, and specialty care visits. Correlations between test scores and algorithms were assessed.

Results:

Of 112,917 KPWA patients, 3,690 had cognitive test results in the EHR, as did 2,981 of 102,981 VHA patients. Depending on the algorithm used, dementia prevalence ranged from 6.4% to 8.1% in KPWA and from 8.9% to 12.1% in the VHA. The algorithm that agreed best with test scores required ≥2 dementia diagnosis codes within 12 months: at KPWA, 14.8% of people meeting this algorithm had an MMSE score, of whom 65% had a score indicating dementia. In the VHA, 6.2% had a SLUMS score, of whom 77% had a score indicating dementia.

Conclusions:

Although cognitive test results were rarely available, agreement was good with algorithms requiring ≥2 dementia diagnosis codes, supporting the accuracy of this algorithm.

Implications:

These scores may add value in identifying dementia cases for EHR-based research studies.

Keywords: electronic health record, dementia, algorithms, cognitive screening

INTRODUCTION:

Studies estimate that between 2.4 and 5.5 million Americans have been diagnosed with dementia [1–3], and the prevalence of Alzheimer’s disease and related dementias is projected to double by 2060 [4]. To address the growing burden of dementia, research on dementia prevention, surveillance, and treatment is needed. Electronic health records (EHRs) provide valuable opportunities for such research, but a key challenge is how best to identify cases of dementia. Methods for identifying dementia cases in the EHR can support efforts to rapidly enroll patients with dementia into randomized controlled trials or to target dementia-related care programs to the appropriate population [5].

Previous dementia-related research has utilized diagnosis codes available in electronic health record data [6], and in general, more specific algorithms have limited sensitivity while less specific algorithms identify more false-positives. Many data elements beyond diagnoses alone are included in EHR data, such as medications prescribed, types of visits attended and cognitive screening test results. Physicians often rely on a combination of medical history, laboratory and imaging test results, and cognitive or neuropsychological assessments [7, 8] to make a dementia diagnosis. Results from these cognitive tests are often stored in the EHR and may provide important information to assist in identifying patients with dementia for EHR-based epidemiologic studies [9, 10]. It is unknown whether cognitive tests are widely used, and whether these tests can assist in the identification of patients diagnosed with dementia for research studies. Understanding how to utilize these data can enhance the ability to identify and study those living with dementia. Imfeld et al. have shown that the incorporation of data elements such as the presence of cognitive screening tests (e.g., Mini Mental State Examination, Clock Drawing Test, or Abbreviated Mental Test) into algorithms using other electronic health data can aid identification of a cohort of patients with dementia [11]. However, no prior study has explored the use of cognitive test scores, a rich element of EHR data, and how their presence and results compare to diagnostic algorithms for dementia.

To address the lack of knowledge about how cognitive screening test results might augment standard methods of identifying dementia from healthcare data, we examined the availability of results from two cognitive screening tests, the Mini Mental State Examination (MMSE) [12] and the Saint Louis University Mental Status Examination (SLUMS) [13], in two healthcare systems’ EHRs, and we evaluated the relationship of these screening test results with dementia algorithms based on other EHR data elements. We evaluated how often cognitive screening tests were completed around the time dementia algorithm criteria were met, and how often dementia algorithm criteria were met around the time that cognitive test results suggested dementia was present.

MATERIALS AND METHODS:

Overview:

Eligible patients were identified from Kaiser Permanente Washington (KPWA; formerly Group Health), an integrated healthcare system in the Northwest United States, and the Veterans Health Administration (VHA), which provides government-funded healthcare for veterans across the United States. This study was approved by the Institutional Review Boards of participating institutions with a waiver of consent.

Data Sources:

Data for KPWA and VHA patients were obtained from each system’s electronic health databases. In both healthcare systems, records included diagnosis codes from healthcare encounters (International Classification of Diseases, Ninth Revision [ICD-9], and in KPWA also Tenth Revision [ICD-10]), pharmacy dispensing data, specialty care encounters, cognitive screening test results, and demographic information. In KPWA, MMSE results became much more common in electronic databases starting in 2013, so we included data from 1/1/2013–4/30/2018. In the VHA, SLUMS results were primarily available starting in 2012, and data were available through 2015 from an ongoing cohort study of metformin use and incident dementia, so we included data from 10/1/2012–9/30/2015. VHA data included patient encounters from community-based outpatient clinics and VHA medical centers across the United States.

Study Settings and Population:

KPWA is an integrated healthcare delivery system that provides both insurance coverage and healthcare to about 710,000 individuals in the Pacific Northwest. The KPWA cohort consisted of individuals who were 60 years or older during 1/1/2013–4/30/2018 and had been enrolled in KPWA’s integrated group practice for two or more years before study entry. Members of the group practice receive all or nearly all their care from KPWA providers at KPWA-owned clinics, so the EHR is expected to have complete data on cognitive tests performed in this subgroup. The VHA cohort was a subset of a larger study of 2,311,519 patients with yearly visits in fiscal years 2000 and 2001 [14]. The current analyses were limited to patients with a yearly visit during 10/1/2010–9/30/2011 and 10/1/2011–9/30/2012, at least one visit during 10/1/2012–9/30/2015, and an age of 60 or more years on 10/1/2012 (n=922,635). The visit requirements were designed to ensure that enrollees were actively using the VHA for their medical care. Because the VHA cohort was very large, we limited subsequent analyses to a subsample including 100% of patients with a SLUMS result (n=2,981) and a random sample of 100,000 (approximately 10%) of patients without a SLUMS result, for a total of 102,981 VHA patients.
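The VHA subsampling step amounts to a two-stratum draw: every patient with a SLUMS result is kept, and a simple random sample is taken from the rest. The sketch below is a minimal Python illustration, not the study's own code (the VHA analyses were run in SAS); the function name `build_vha_subsample`, the predicate `has_slums`, and the seed are hypothetical.

```python
import random
from typing import Callable, List, TypeVar

T = TypeVar("T")


def build_vha_subsample(
    patients: List[T],
    has_slums: Callable[[T], bool],
    n_without: int = 100_000,
    seed: int = 2012,
) -> List[T]:
    """Keep 100% of patients with a SLUMS result plus a random sample of those without one."""
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    with_result = [p for p in patients if has_slums(p)]
    without_result = [p for p in patients if not has_slums(p)]
    # Sample without replacement; cap at the stratum size so small inputs still work.
    sampled = rng.sample(without_result, min(n_without, len(without_result)))
    return with_result + sampled


# Toy example: 1,000 hypothetical patient IDs, 10 of whom have a SLUMS result.
ids = list(range(1000))
subsample = build_vha_subsample(ids, lambda p: p % 100 == 0, n_without=50)
print(len(subsample))  # 10 with a result + 50 sampled without
```

With the study's counts, the same call would return 2,981 + 100,000 = 102,981 patients.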

Cognitive Screening Ascertainment:

The electronic health records of all study patients were searched for structured data containing results from two cognitive screening tests: the MMSE in KPWA and the SLUMS in the VHA. The MMSE was the predominant cognitive screening test at KPWA but has historically been used infrequently at the VHA, where the SLUMS is the predominant test; the SLUMS is not used at KPWA. We therefore focused on a different test in each system, reflecting its patterns of healthcare utilization. We searched only for results in structured data formats (not in free-text clinical notes). We classified cognitive test results based on the total score as indicating dementia (<24/30 points on the MMSE or <21/30 on the SLUMS) or not indicating dementia (≥24/30 on the MMSE or ≥21/30 on the SLUMS) [12, 13]. Incomplete tests were excluded (<2% of KPWA tests; the VHA had no information on incomplete tests).
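The scoring rule reduces to one comparison per test. A minimal Python sketch, assuming each result arrives as a (test name, total score) pair and that an incomplete test is represented by a missing total (`None`); the function names are hypothetical.

```python
from typing import Iterable, List, Optional, Tuple

# Primary cut-offs from the text: a total score BELOW the value is "consistent with dementia".
PRIMARY_CUTOFFS = {"MMSE": 24, "SLUMS": 21}


def indicates_dementia(test: str, total: int, cutoffs=PRIMARY_CUTOFFS) -> bool:
    """Classify one complete 30-point screening result."""
    if not 0 <= total <= 30:
        raise ValueError(f"{test} total must be between 0 and 30, got {total}")
    return total < cutoffs[test]


def classify_results(results: Iterable[Tuple[str, Optional[int]]]) -> List[bool]:
    """Drop incomplete tests (total is None) and classify the rest."""
    return [indicates_dementia(test, total) for test, total in results if total is not None]


print(classify_results([("MMSE", 23), ("MMSE", 24), ("SLUMS", 20), ("SLUMS", None)]))
# [True, False, True]
```

Keeping the cut-offs in a dictionary makes it simple to rerun the same classification with alternative thresholds.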

Algorithm Creation:

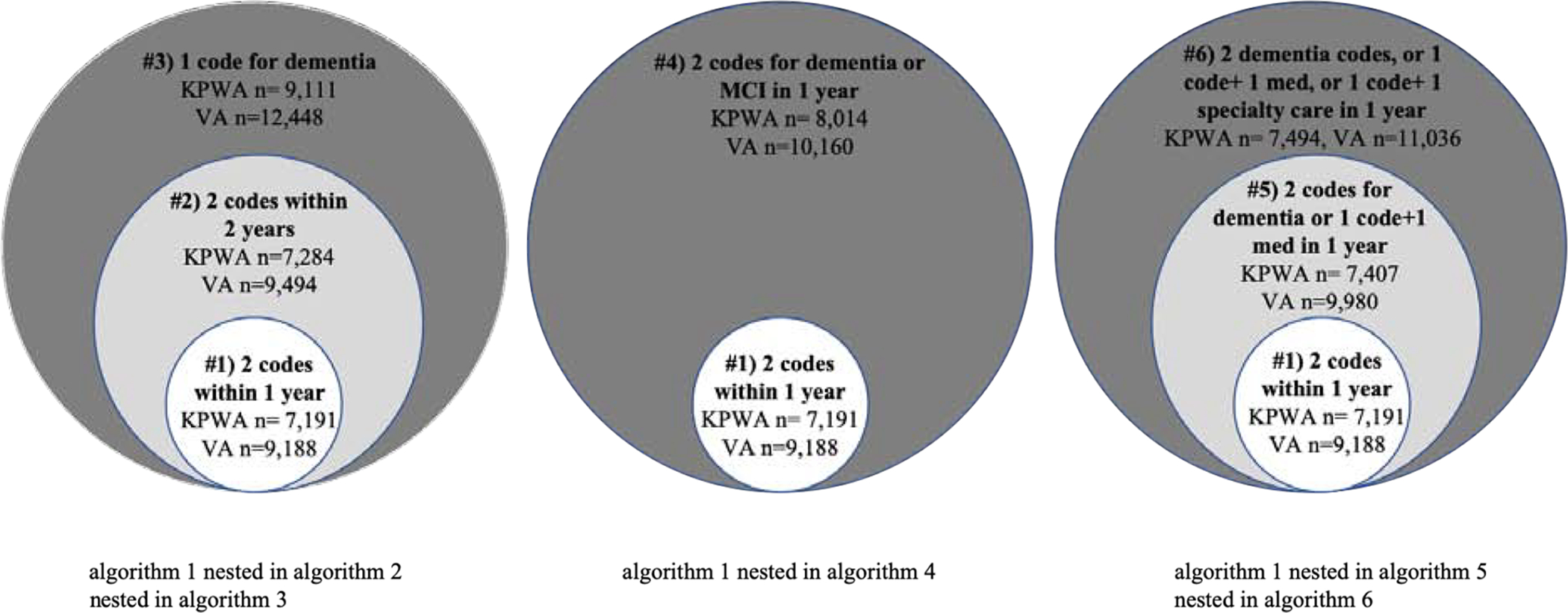

From the KPWA and VHA EHRs, we extracted diagnosis codes for dementia and mild cognitive impairment (MCI), prescription fills for dementia medications, and utilization information for dementia-related specialty care visits (codes, medications, and specialty care visits are listed in Supplemental Table 1). We developed 6 algorithms to identify cases of dementia (Table 1). The onset of dementia was defined as the first date a person met all criteria for a particular algorithm. These algorithms were informed by the literature [9, 11] and were designed to be hierarchical. Algorithm 1 was the strictest, requiring 2 or more diagnosis codes for dementia within a 12-month period, while subsequent algorithms relaxed the criteria sequentially. The algorithms were also arranged in 3 groups, and within each group, the algorithms were nested in one another (Figure 1). Group 1 includes Algorithms 1, 2, and 3, which use only dementia diagnosis codes but vary the required number and timing of codes. Group 2 includes Algorithms 1 and 4; Algorithm 4 also counts diagnosis codes for MCI. Group 3 includes Algorithms 1, 5, and 6; Algorithms 5 and 6 add information on prescription medications and specialty care visits. In the literature, dementia algorithms commonly require ≥1 or ≥2 dementia diagnosis codes (Algorithms 3 and 1 in the present study, respectively) [6].
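The six definitions in Table 1 can be expressed as small functions over a patient's dated events. The sketch below is a minimal Python illustration, not the study's Stata or SAS code. It assumes events arrive as (date, kind) pairs with hypothetical kind labels (`dementia_dx`, `mci_dx`, `dementia_rx`, `specialty_visit`), approximates 12 and 24 months as 365 and 730 days, counts codes on the same day separately, and reads "1 diagnosis code" as at least one. Table 1 gives no time window for the specialty-visit branch of Algorithm 6, whereas Table 3b states one year for the VHA, so that window is a parameter (`visit_window`, no limit by default).

```python
from datetime import date, timedelta
from typing import Dict, Iterable, List, Optional, Tuple

YEAR = timedelta(days=365)  # assumption: "12 months" approximated as 365 days


def first_repeat(dates: List[date], window: timedelta) -> Optional[date]:
    """Date of the first code that falls within `window` of an earlier code, else None."""
    ordered = sorted(dates)
    for prev, cur in zip(ordered, ordered[1:]):
        # Once dates are sorted, checking adjacent pairs finds the earliest qualifying date.
        if cur - prev <= window:
            return cur
    return None


def first_pair(dx: List[date], other: List[date],
               window: Optional[timedelta]) -> Optional[date]:
    """Earliest date by which a dementia code and another event have both occurred,
    within `window` of each other (window=None means no time limit)."""
    done = [max(d, o) for d in dx for o in other
            if window is None or abs(o - d) <= window]
    return min(done) if done else None


def earliest(*candidates: Optional[date]) -> Optional[date]:
    """Earliest non-missing date among the candidates."""
    found = [c for c in candidates if c is not None]
    return min(found) if found else None


def onset_dates(events: Iterable[Tuple[date, str]],
                visit_window: Optional[timedelta] = None) -> Dict[int, Optional[date]]:
    """Onset (first date all criteria are met) for each Table 1 algorithm; None if never met."""
    by_kind: Dict[str, List[date]] = {}
    for day, kind in events:
        by_kind.setdefault(kind, []).append(day)
    dx = by_kind.get("dementia_dx", [])
    mci = by_kind.get("mci_dx", [])
    rx = by_kind.get("dementia_rx", [])
    visits = by_kind.get("specialty_visit", [])

    alg1 = first_repeat(dx, YEAR)
    alg5 = earliest(alg1, first_pair(dx, rx, YEAR))
    return {
        1: alg1,
        2: first_repeat(dx, 2 * YEAR),
        3: min(dx) if dx else None,
        4: first_repeat(dx + mci, YEAR),  # dementia and MCI codes pooled
        5: alg5,
        6: earliest(alg5, first_pair(dx, visits, visit_window)),
    }


# Hypothetical patient: two dementia codes 516 days apart plus one dementia prescription.
events = [
    (date(2014, 1, 1), "dementia_dx"),
    (date(2014, 3, 1), "dementia_rx"),
    (date(2015, 6, 1), "dementia_dx"),
]
for algorithm, onset in onset_dates(events).items():
    print(algorithm, onset)
```

For this hypothetical patient, Algorithm 1 is never met because the two codes are 516 days apart. Algorithm 2 is met on 2015-06-01, Algorithm 3 on 2014-01-01, and Algorithms 5 and 6 on 2014-03-01 through the diagnosis-plus-prescription branch. Because each loosened algorithm only adds ways to qualify, anyone meeting Algorithm 1 also meets every other algorithm no later than Algorithm 1's onset, which mirrors the nesting in Figure 1.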

Table 1:

Algorithm definitions

| Algorithm | Definition |
|---|---|
| 1 | ≥2 diagnosis codes for dementia within a 12-month period |
| 2 | ≥2 diagnosis codes for dementia within a 24-month period |
| 3 | ≥1 diagnosis code for dementia ever |
| 4 | ≥2 diagnosis codes for dementia and/or MCI within a 12-month period |
| 5 | ≥2 diagnosis codes for dementia within a 12-month period, or 1 diagnosis code for dementia and ≥1 prescription for a dementia drug within a 12-month period |
| 6 | ≥2 diagnosis codes for dementia within a 12-month period, or 1 diagnosis code for dementia and ≥1 prescription for a dementia drug within a 12-month period, or 1 diagnosis code for dementia and ≥1 visit to dementia-related specialty care |

Abbreviations: MCI, mild cognitive impairment.

Figure 1:

Nested algorithms in the study. This figure shows the three groups of nested, hierarchical algorithms. The necessary criteria for each algorithm are shown along with the number of patients from each population (KPWA or VHA) who met each algorithm. Larger circles indicate algorithms with less stringent criteria; moving toward the center, the criteria become more stringent, requiring either more data or a shorter time period.

Analysis:

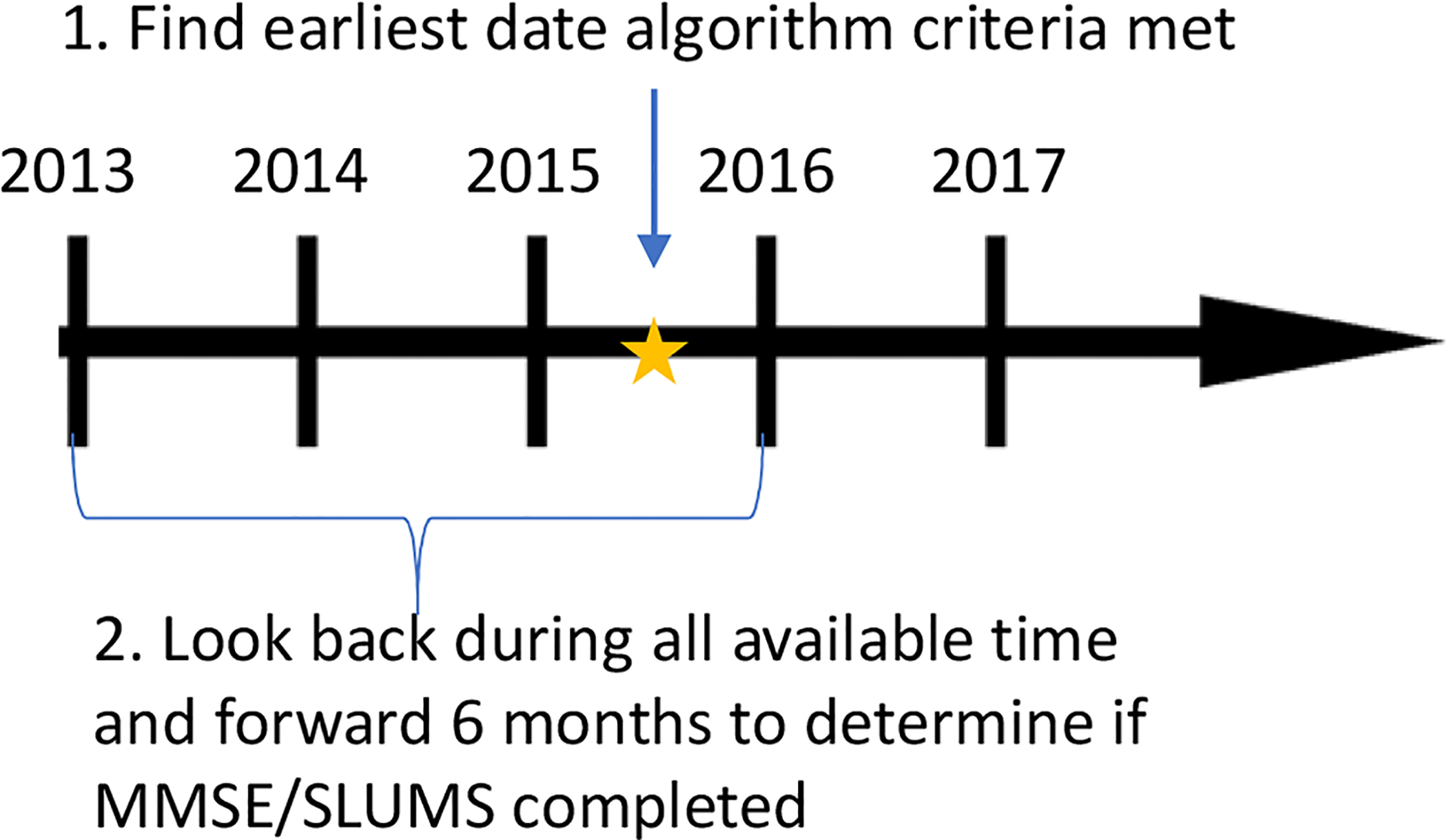

To evaluate the relationship between dementia algorithms and cognitive test scores, we conducted two sets of analyses. In the first set, we assessed how many individuals met each diagnostic algorithm. We then determined whether a cognitive test was performed any time before or within 6 months after the date a given algorithm was met, and we reported the proportion of these tests that were scored below the threshold for dementia (<24/30 points on the MMSE or <21/30 on the SLUMS) (Figure 2). We considered these analyses akin to positive predictive value (PPV), in that they were intended to determine which algorithm was most accurate relative to results from a widely accepted cognitive screening test. We could not estimate actual PPV because we lacked a true gold standard. Cognitive screening tests are not a true gold standard because they themselves have imperfect sensitivity and specificity. Also, without universal routine screening, dementia status is unknown for most patients, so no data element in the EHR can serve as a true gold standard.
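The first analysis can be sketched as a single pass over patients, each carrying an algorithm onset date and a list of dated scores. This is a minimal Python illustration under stated assumptions: "6 months" is approximated as 182 days, and when a patient has several tests in the window the lowest score is used (the text does not say which test was used). The names `ppv_like` and `Person` are hypothetical.

```python
from datetime import date, timedelta
from typing import Iterable, List, Optional, Tuple

SIX_MONTHS = timedelta(days=182)  # assumption: "6 months" approximated as 182 days

# One record per patient: (algorithm onset date or None, [(test date, total score), ...])
Person = Tuple[Optional[date], List[Tuple[date, int]]]


def ppv_like(cohort: Iterable[Person], cutoff: int,
             after: timedelta = SIX_MONTHS) -> Tuple[int, int, int]:
    """Return (met, tested, below): people meeting the algorithm, those with a test any time
    before or within `after` of onset, and those whose score is below `cutoff`."""
    met = tested = below = 0
    for onset, tests in cohort:
        if onset is None:  # algorithm never met
            continue
        met += 1
        # No lower bound on the window: all available look-back is used.
        scores = [score for day, score in tests if day <= onset + after]
        if scores:
            tested += 1
            if min(scores) < cutoff:  # assumption: the lowest in-window score is used
                below += 1
    return met, tested, below


cohort = [
    (date(2015, 1, 1), [(date(2014, 5, 1), 20)]),  # tested before onset, low score
    (date(2015, 1, 1), [(date(2016, 1, 1), 18)]),  # tested too late to count
    (date(2015, 1, 1), [(date(2015, 3, 1), 26)]),  # tested within 6 months, score >= cut-off
    (None, [(date(2015, 1, 1), 10)]),              # never met the algorithm
]
met, tested, below = ppv_like(cohort, cutoff=24)
print(f"{tested}/{met} tested; {below}/{tested} below the MMSE cut-off")
```

Applied to Algorithm 1 in Table 3a, the two ratios correspond to 1,067/7,191 (14.8%) and 693/1,067 (about 65%).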

Figure 2:

Anchor date and time window for assessing the correlation between cognitive test results and dementia algorithms. This figure shows whether a cognitive test was completed during the time window of interest, given that criteria for a dementia algorithm were met. The anchor date is the date an algorithm was met; we looked back over all available history and forward 6 months from this date to determine whether a cognitive test was completed.

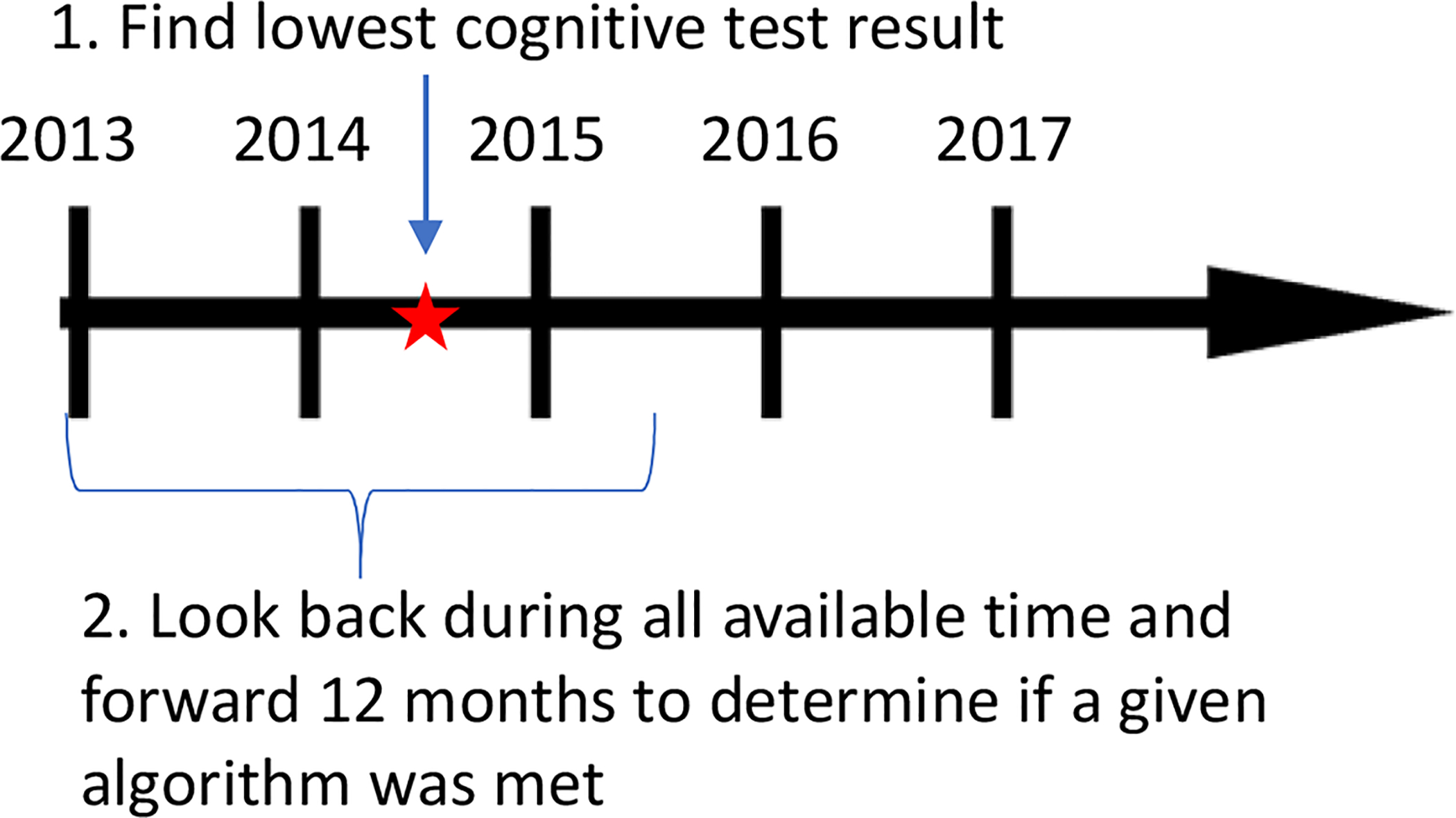

In the second set of analyses, we identified the date of the lowest scoring cognitive screening test per person (most people had only one result available). Then, we determined whether patients met criteria for each of the 6 dementia algorithms any time before or within 12 months after the date of the screening test (Figure 3). We examined the lowest test score available because of concerns that if an individual had a cognitive test done in the past that was low, their doctor may continue assigning diagnosis codes repeatedly while not necessarily repeating the cognitive test. We thought of these analyses as akin to sensitivity, in that they were intended to determine the likelihood that algorithms would identify patients as having dementia given that objective results from a widely accepted cognitive test suggested dementia. We were not able to estimate actual sensitivity because we lacked a true gold standard.
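The second analysis reverses the direction: it starts from each person's lowest score and looks for an algorithm onset. A minimal Python sketch, assuming "12 months" is approximated as 365 days and that ties for the lowest score are broken by the earliest test date (the text does not specify); the names `sensitivity_like` and `Person` are hypothetical.

```python
from datetime import date, timedelta
from typing import Iterable, List, Optional, Tuple

TWELVE_MONTHS = timedelta(days=365)  # assumption: "12 months" approximated as 365 days

# One record per patient: (algorithm onset date or None, [(test date, total score), ...])
Person = Tuple[Optional[date], List[Tuple[date, int]]]


def sensitivity_like(cohort: Iterable[Person], cutoff: int,
                     after: timedelta = TWELVE_MONTHS) -> Tuple[int, int]:
    """Return (low_scorers, captured): people whose lowest score is below `cutoff`, and those
    who met the algorithm any time before or within `after` of that test date."""
    low_scorers = captured = 0
    for onset, tests in cohort:
        if not tests:
            continue
        # Anchor on the lowest score; ties go to the earliest date (an assumption).
        anchor_day, lowest = min(tests, key=lambda t: (t[1], t[0]))
        if lowest >= cutoff:
            continue
        low_scorers += 1
        if onset is not None and onset <= anchor_day + after:
            captured += 1
    return low_scorers, captured


cohort = [
    (date(2015, 6, 1), [(date(2015, 1, 1), 19), (date(2016, 1, 1), 25)]),  # met within 12 months
    (date(2017, 1, 1), [(date(2015, 1, 1), 18)]),  # met too late to count
    (None, [(date(2015, 1, 1), 15)]),              # low score, algorithm never met
    (date(2015, 1, 1), [(date(2015, 1, 1), 28)]),  # score above the cut-off: not counted
]
print(sensitivity_like(cohort, cutoff=21))  # (3, 1)
```

The ratio captured/low_scorers corresponds to the proportions plotted in Figure 4.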

Figure 3:

Anchor date and time window for assessing the correlation between cognitive test results and dementia algorithms. This figure shows which algorithms were met during the time window of interest, given that a cognitive test was completed. The anchor date is the date a cognitive test (MMSE or SLUMS) was completed (the lowest-scoring test per person); we looked back over all available history and forward 12 months from this date to determine whether one or more algorithms were met.

Sensitivity analyses:

We altered the threshold for a score consistent with dementia on the MMSE to be <26/30 instead of <24/30 and on the SLUMS to be <27/30 instead of <21/30 and reported the new proportion of patients that met algorithms who scored below the threshold for dementia. This sensitivity analysis addressed findings from prior research that a higher cut-off on cognitive tests, particularly among highly educated populations, may result in improved sensitivity for detecting dementia [15–17].
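The two cut-off changes differ in size. A small Python check, with hypothetical names, lists the score values that move into the dementia range when each cut-off is raised.

```python
PRIMARY_CUTOFFS = {"MMSE": 24, "SLUMS": 21}      # primary analysis: score < cut-off
SENSITIVITY_CUTOFFS = {"MMSE": 26, "SLUMS": 27}  # sensitivity analysis: score < cut-off


def newly_positive(test, scores):
    """Scores classified as dementia only under the sensitivity-analysis cut-off."""
    return [s for s in scores if PRIMARY_CUTOFFS[test] <= s < SENSITIVITY_CUTOFFS[test]]


possible = range(31)  # both instruments are scored 0-30
print(newly_positive("MMSE", possible))   # [24, 25]
print(newly_positive("SLUMS", possible))  # [21, 22, 23, 24, 25, 26]
```

The MMSE change reclassifies two score values (24–25), whereas the SLUMS change reclassifies six (21–26).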

All analyses at KPWA were performed using Stata version 13. All analyses at the VHA were performed using SAS version 9.4.

RESULTS:

In the KPWA population, 112,917 people with a mean age of 70.1 years (±sd 8.6) were included in analyses, of whom 3,690 (3%) had one or more MMSE tests during the observation period. The proportion receiving an MMSE test each study year increased from 0.81% in 2013 to 0.91% in 2016, then decreased slightly (coinciding with the introduction of the Montreal Cognitive Assessment for cognitive screening at KPWA in later study years). Patients with an MMSE result were older (mean age 76.6, ±sd 8.5) and had more comorbid conditions than patients with no MMSE tests (Table 2). Approximately 20% had more than one MMSE test during the study period. Overall, 1,434 (39%) of patients with an MMSE had a score consistent with dementia.

Table 2.

Characteristics at baseline of KPWA and VHA study populations aged ≥60 yearsᵃ

| Cohort characteristic | KPWAᵇ overall (N=112,917), n | % | KPWA with ≥1 complete MMSE (N=3,690), n | % | VHAᶜ overall (N=102,981), n | % | VHA with ≥1 SLUMS (N=2,981), n | % |
|---|---|---|---|---|---|---|---|---|
| Age, mean (±sd) | 70.1 (±8.6) | | 76.6 (±8.5) | | 73.4 (±9.0) | | 73.0 (±8.8) | |
| Age category | | | | | | | | |
| 60–69 | 66,520 | 59 | 872 | 24 | 44,123 | 43 | 1,346 | 45 |
| 70–79 | 28,133 | 25 | 1,367 | 37 | 29,040 | 28 | 807 | 27 |
| ≥80 | 18,264 | 16 | 1,451 | 39 | 29,818 | 29 | 828 | 28 |
| Female | 63,281 | 56 | 2,267 | 61 | 3,486 | 3 | 91 | 3 |
| Race | | | | | | | | |
| White | 93,831 | 83 | 3,275 | 89 | 85,624 | 83 | 2,533 | 85 |
| Black | 3,518 | 3 | 89 | 2 | 15,441 | 15 | 401 | 13 |
| Asian | 7,637 | 7 | 185 | 5 | – | – | – | – |
| Other/unknown | 7,931 | 7 | 141 | 4 | 1,916 | 2 | 47 | 2 |
| Diabetes | 24,599 | 22 | 980 | 27 | 52,899 | 51 | 1,669 | 56 |
| Hypertension | 69,692 | 62 | 2,733 | 74 | 93,868 | 91 | 2,765 | 93 |
| History of stroke | 7,755 | 7 | 460 | 13 | 13,333 | 13 | 488 | 16 |
| History of ischemic heart disease | 20,299 | 18 | 1,068 | 29 | 55,655 | 54 | 1,737 | 58 |
| History of congestive heart failure | 11,458 | 10 | 576 | 16 | 24,781 | 24 | 830 | 28 |
| Atrial fibrillation | 13,397 | 12 | 738 | 20 | 20,695 | 20 | 635 | 21 |
| History of traumatic brain injury | 11,682 | 10 | 647 | 18 | 12,046 | 12 | 523 | 18 |
| Depression | 25,113 | 22 | 1,183 | 32 | 34,267 | 33 | 1,656 | 56 |
| Post-traumatic stress disorder | 1,302 | 1 | 58 | 2 | 20,049 | 20 | 1,021 | 34 |
| Other anxiety | 13,606 | 12 | 630 | 17 | 18,405 | 18 | 926 | 31 |
| History of smoking | 34,920 | 31 | 1,234 | 33 | 57,526 | 56 | 1,860 | 62 |
| Alcohol use | 6,549 | 6 | 257 | 7 | 18,482 | 18 | 810 | 27 |
| Illicit drug abuse/dependence | 2,636 | 2 | 94 | 3 | 9,841 | 10 | 507 | 17 |
| Number of complete test results | | | | | | | | |
| 1 | NA | | 2,863 | 78 | NA | | 2,601 | 87 |
| 2 | NA | | 556 | 15 | NA | | 306 | 10 |
| 3 | NA | | 170 | 5 | NA | | 54 | 2 |
| 4 | NA | | 62 | 2 | NA | | 9 | <1 |
| 5+ | NA | | 39 | 1 | NA | | 11 | <1 |

Abbreviations: KPWA, Kaiser Permanente Washington; VHA, Veterans Health Administration; MMSE, Mini-Mental State Examination; SLUMS, Saint Louis University Mental Status Examination.

ᵃ Variables shown indicate history of medical conditions using all available look-back.

ᵇ Study baseline in KPWA is the date that a person had ≥2 years of enrollment in KPWA during the study years 1/1/2013 to 4/30/2018.

ᶜ Study baseline in the VHA was 10/1/2012.

In the VHA population, there were 102,981 patients with a mean age of 73.4 years (±sd 9.0), of whom 2,981 had one or more SLUMS tests during the observation period. The proportion receiving a SLUMS test each study year increased from 0.87% in 2013 to 1.40% in 2015. Patients with a SLUMS test during follow-up had a greater comorbidity burden than patients with no SLUMS tests (Table 2). Only 13% had more than one SLUMS test during the study period. Overall, 1,605 (54%) of patients with a SLUMS had a score consistent with dementia.

In the KPWA population, 7,191 (6.4%) met criteria for the algorithm requiring ≥2 dementia diagnosis codes within 12 months (algorithm 1), and between 6.5% and 8.1% met criteria for the other algorithms. In the VHA population, 9,188 (8.9%) met criteria for algorithm 1, and between 9.3% and 12.1% met criteria for the other algorithms (Figure 1).

At KPWA, the best correlation between algorithms and MMSE test scores was seen among those meeting algorithm 1, and the lowest correlation among those meeting the algorithm requiring ≥2 diagnosis codes for dementia and/or MCI within a 12-month period (algorithm 4). Among those meeting algorithm 1, 15% had an MMSE available, and 65% of these scores were below the threshold for dementia. In contrast, relaxing the criteria to algorithm 4 (allowing MCI codes) added 823 people who did not meet algorithm 1. Of these 823 patients, a larger proportion had completed the MMSE (19%), but only 28% of these had scores indicating dementia (Table 3a). Among patients who had a score indicating dementia and went on to meet an algorithm, the median time between the test and meeting algorithm criteria was shortest for the algorithm requiring at least one dementia code ever (algorithm 3, 89 days) and longest for the algorithm requiring two or more dementia diagnoses within 24 months (algorithm 2, 190 days) (Supplemental Table 2a).

Similarly, in the VHA, 6% of people meeting algorithm 1 had a SLUMS, and a large majority (77%) of these scores indicated dementia. Relaxing the criteria to algorithm 4 classified an additional 972 patients as having dementia. Of these 972 patients, a larger proportion had completed the SLUMS (9%), but only 47% of these had scores consistent with dementia (Table 3b). The median time between a score and meeting algorithm criteria was shortest for the algorithm requiring at least one dementia code ever (algorithm 3, 56 days) and longest for the algorithm requiring two or more dementia diagnoses within 12 months (algorithm 1, 106 days) (Supplemental Table 2b).
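Each percentage in Tables 3a and 3b uses a different denominator, which is easy to misread. The Python sketch below (function and key names are ours, not the authors') recomputes the three percentages for a loosened-algorithm row from its counts; the tested percentage is taken over the additional patients only, not over everyone meeting the loosened algorithm.

```python
def table3_row(alg1_total: int, added: int, tested: int, below: int) -> dict:
    """Derived percentages for a loosened-algorithm row of Table 3a/3b."""

    def pct(numerator: int, denominator: int) -> float:
        return round(100 * numerator / denominator, 1)

    return {
        "added_pct_of_alg1": pct(added, alg1_total),       # % beside the additional n
        "tested_pct_of_added": pct(tested, added),         # % beside the MMSE/SLUMS n
        "below_cutoff_pct_of_tested": pct(below, tested),  # % scoring in the dementia range
    }


print(table3_row(7191, 823, 155, 44))  # KPWA, Algorithm 4
print(table3_row(9188, 972, 91, 43))   # VHA, Algorithm 4
```

For KPWA Algorithm 4 this reproduces 11.4%, 18.8%, and 28.4%; for VHA Algorithm 4, 10.6%, 9.4%, and 47.3%.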

Table 3a:

Number meeting various dementia algorithms and proportion with a cognitive test score indicating dementia among 112,917 KPWA participants

| Algorithm met during follow-up | Total meeting algorithm, n | Added beyond Algorithm 1 when criteria are loosened, n | %ᵇ | MMSE any time before or within 6 months afterᵃ, n | %ᶜ | MMSE score 0–23 (dementia), n | %ᵈ |
|---|---|---|---|---|---|---|---|
| Algorithm 1, ≥2 dx codes for dementia within 1 year | 7,191 | NA | NA | 1,067 | 14.8 | 693 | 65.0 |
| Algorithm 2, ≥2 dx codes for dementia within 2 years | 7,284 | 93 | 1.3 | 20 | 21.5 | 11 | 55.0 |
| Algorithm 3, ≥1 dx code for dementia ever | 9,111 | 1,920 | 26.7 | 234 | 12.2 | 118 | 50.4 |
| Algorithm 1, ≥2 dx codes for dementia (not MCI) within 1 year | 7,191 | NA | NA | 1,067 | 14.8 | 693 | 65.0 |
| Algorithm 4, Algorithm 1 OR MCI within 1 year | 8,014 | 823 | 11.4 | 155 | 18.8 | 44 | 28.4 |
| Algorithm 1, ≥2 dx codes for dementia (not MCI) within 1 year | 7,191 | NA | NA | 1,067 | 14.8 | 693 | 65.0 |
| Algorithm 5, Algorithm 1 OR 1 dx code for dementia and ≥1 prescription for a dementia drug within 1 year | 7,407 | 216 | 3.0 | 47 | 21.8 | 19 | 40.4 |
| Algorithm 6, Algorithm 1 OR Algorithm 5 OR 1 dx code for dementia and ≥1 visit to a relevant specialist | 7,494 | 303 | 4.2 | 120 | 39.6 | 50 | 41.6 |

Abbreviations: KPWA, Kaiser Permanente Washington; MCI, mild cognitive impairment; MMSE, Mini-Mental State Examination; dx, diagnosis.

ᵃ Comparison shown visually in Figure 2.

ᵇ Percent of those meeting Algorithm 1 (n=7,191).

ᶜ Percent of the additional people meeting the algorithm when criteria are loosened (for Algorithm 1, percent of all 7,191 meeting it).

ᵈ Percent of the people who have an MMSE score recorded, given that the algorithm was met.

Table 3b:

Number meeting various dementia algorithms and proportion with a cognitive test score indicating dementia among 102,981 VHA participants

| Algorithm met during follow-up | Total meeting algorithm, n | Added beyond Algorithm 1 when criteria are loosened, n | %ᵇ | SLUMS any time before or within 6 months afterᵃ, n | %ᶜ | SLUMS score 0–20 (dementia), n | %ᵈ |
|---|---|---|---|---|---|---|---|
| Algorithm 1, ≥2 dx codes for dementia within 1 year | 9,188 | NA | NA | 574 | 6.2 | 444 | 77.4 |
| Algorithm 2, ≥2 dx codes for dementia within 2 years | 9,494 | 306 | 3.3 | 24 | 7.8 | 17 | 70.8 |
| Algorithm 3, ≥1 dx code for dementia ever | 12,448 | 3,260 | 35.5 | 218 | 6.7 | 153 | 70.2 |
| Algorithm 1, ≥2 dx codes for dementia within 1 year | 9,188 | NA | NA | 574 | 6.2 | 444 | 77.4 |
| Algorithm 4, Algorithm 1, including MCI in dx codes | 10,160 | 972 | 10.6 | 91 | 9.4 | 43 | 47.3 |
| Algorithm 1, ≥2 dx codes for dementia within 1 year | 9,188 | NA | NA | 574 | 6.2 | 444 | 77.4 |
| Algorithm 5, Algorithm 1 OR (1 dx code for dementia and ≥1 prescription for a dementia drug within 1 year) | 9,980 | 792 | 8.6 | 69 | 8.7 | 53 | 76.8 |
| Algorithm 6, Algorithm 1 OR Algorithm 5 OR (≥1 dx code for dementia and ≥1 visit to a relevant specialist within 1 year) | 11,036 | 1,848 | 20.1 | 179 | 9.7 | 126 | 70.4 |

Abbreviations: VHA, Veterans Health Administration; MCI, mild cognitive impairment; SLUMS, Saint Louis University Mental Status Examination; dx, diagnosis.

ᵃ Comparison shown visually in Figure 2.

ᵇ Percent of those meeting Algorithm 1 (n=9,188).

ᶜ Percent of the additional people meeting the algorithm when criteria are loosened (for Algorithm 1, percent of all 9,188 meeting it).

ᵈ Percent of the people who have a SLUMS score recorded, given that the algorithm was met.

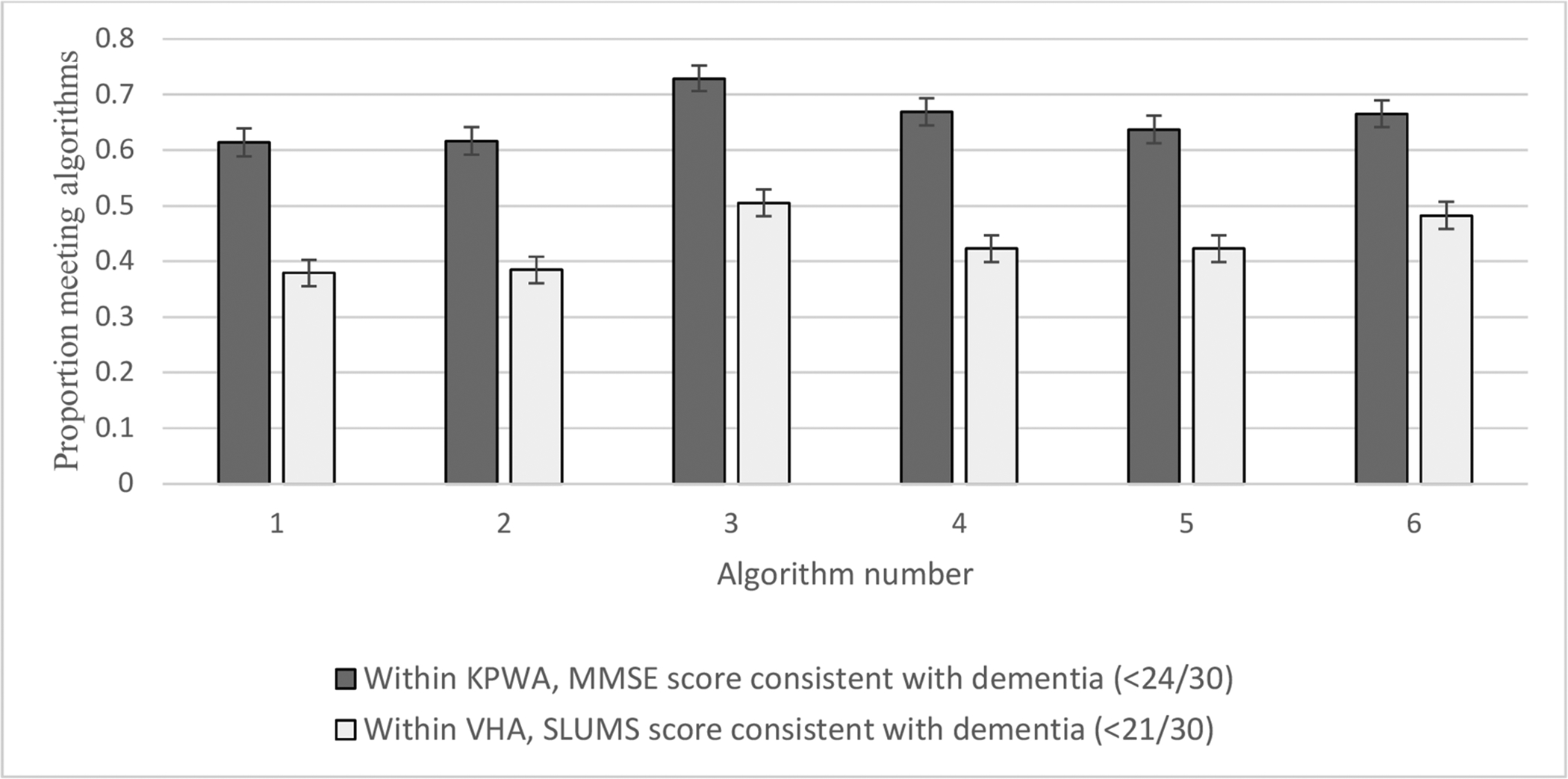

From KPWA, among those with an MMSE score consistent with dementia, approximately 61% met algorithm 1. The algorithm met by the greatest proportion of study patients with an MMSE score indicating dementia (72.9%) was algorithm 3, which required only one dementia diagnosis code (Figure 4). Similar results were found in the VHA, where those with a SLUMS score in the dementia range were least likely to meet algorithm 1 in the time window of interest (38%) and most likely to meet algorithm 3 (51%) (Figure 4).

Figure 4:

Among people with a cognitive test score indicating dementia, proportion meeting each algorithm any time before or within 12 months after the test. This figure shows, for each population, the proportion of patients with a screening score indicating dementia who met each algorithm.

Results from sensitivity analyses altering the cut-off for a score consistent with dementia on the MMSE in KPWA were consistent with the primary analysis (Supplemental Table 3a). In contrast, raising the SLUMS cut-off in the VHA produced a high level of correlation for most algorithms, making it harder to distinguish which algorithms performed best (Supplemental Table 3b).

DISCUSSION:

In this retrospective cohort study, we describe the availability of results from two widely accepted cognitive screening exams in two healthcare settings and their correlation with EHR-based algorithms to identify patients with dementia. We found that requiring ≥2 dementia-specific diagnosis codes within 12 months (algorithm 1) identified the people most likely to have cognitive screening results consistent with dementia, suggesting this algorithm may be best when the research objective is to reliably identify patients with dementia. The preferred algorithm may vary with the goals of a given study. For instance, if resources are available to validate cases (e.g., through additional prospective cognitive assessment), researchers may want to cast a wider net and use less specific algorithms requiring only one code for dementia (algorithm 3) or a combination of dementia and MCI codes (algorithm 4), which identify many more potential dementia cases.

There have been other efforts to use EHR data to find patients with dementia for epidemiologic studies. Imfeld et al. [11] sought to determine dementia incidence rates using algorithms incorporating varying combinations of diagnosis codes, dementia medication prescriptions, presence of cognitive tests, referrals to a specialist, and neuro-imaging tests and validated these algorithms by sending questionnaires to patients’ general practitioners asking for confirmation of dementia status. Imfeld et al.’s algorithms included information on whether a cognitive test was done but did not make use of actual cognitive test scores as we have done here.

Many factors may explain the inconsistencies between cognitive test scores and algorithms in this study, that is, why some patients appeared to have dementia based on one approach but not the other. First, cognitive tests tend not to perform well in highly educated populations [15–17]. For example, in highly educated individuals, a higher cutoff on the MMSE (i.e., ≥27) results in better sensitivity for dementia [15]. It is possible that some patients in the present study had dementia but achieved a high score on the MMSE or SLUMS. Additionally, patients with conditions including delirium, psychosis, or depression may perform poorly on cognitive tests [18, 19]. Therefore, relying on cognitive test scores alone can result in inaccuracies when defining a population of individuals with dementia [10]. Instead, in clinical practice, clinicians conduct a full dementia workup incorporating many factors in order to make a diagnosis [20], which may explain why some people with low scores in our study did not receive a dementia diagnosis.

To our knowledge, our study is the first to extract and describe cognitive test scores from the EHR for a large cohort of patients and compare them with other EHR data elements indicating dementia-related care. Strengths of this study include the use of two different study populations with very different demographic and clinical characteristics and two different dementia screening instruments, allowing for greater generalizability of findings. An additional strength was the exploration of a wide range of algorithms to identify dementia from readily available EHR data elements.

There are some important limitations to consider. First, cognitive screening tests were done infrequently at both KPWA and the VHA (<5% of patients in each setting had completed a test), so results were often not available for patients meeting dementia algorithms. Cognitive screening tests are not done routinely or universally in our healthcare systems, in line with guideline recommendations stating that there is currently insufficient evidence to recommend universal screening for dementia [21]. Thus, we expect that patients who complete these tests are more likely to be showing signs or symptoms of cognitive problems. Second, we would have liked to study individuals’ cognitive score trajectories over time, but too few patients had repeated testing to support such analyses. Third, cognitive screening tests are not a true gold standard against which dementia algorithms can be validated. A true gold standard would consist of a full dementia evaluation, including detailed cognitive testing, history and physical exam, and laboratory and/or imaging results, which would be highly resource intensive. Rather than validating dementia algorithms against results from cognitive tests, we focused on describing the use of cognitive testing and its correlation with EHR algorithms for dementia. Fourth, as discussed above, cognitive screening tests do not always perform well, depending on characteristics of the population studied (e.g., education, language spoken). Fifth, in both settings, some cognitive screening results may have been recorded only in clinical notes rather than as structured data in the EHR, which could have led to the exclusion of some cognitive tests in our study. Finally, between 29% and 76% of patients with dementia or probable dementia in the primary care setting are undiagnosed [22, 23], so any approach relying on EHR data to find people living with dementia will miss a substantial proportion of cases. Because of this, even chart review would be insufficient to generate a “gold standard” cohort against which to compare dementia cases identified by cognitive test scores.

CONCLUSION:

In conclusion, although cognitive test results were available for few people, there was good agreement with algorithms requiring ≥2 dementia diagnosis codes within 12 months, which supports the use of this algorithm in epidemiologic studies. Our findings suggest that cognitive test scores could be a valuable addition to other methods for identifying dementia cases using EHR data. As more health systems adopt EHRs, cognitive screening results may become more widely accessible for integration into research. However, because cognitive screening tests are done in a highly selected group, more work is required to understand how and when cognitive test scores should be incorporated into dementia-related research that uses large electronic health databases.

Supplementary Material

Funding:

This study was supported by National Institute on Aging grant R21 AG055604 and by Kaiser Permanente Washington Health Research Institute Development Funds. Dr. Harding is supported by a National Heart, Lung and Blood Institute grant T32HL007828. This material is the result of work supported with resources and the use of facilities at the Harry S. Truman Memorial Veterans’ Hospital. Support for VA/CMS data is provided by the Department of Veterans Affairs, Veterans Health Administration, Office of Research and Development, Health Services Research and Development, VA Information Resource Center (Project Numbers SDR 02-237 and 98-004). The funders had no role in study design, data analysis or in the writing of the report.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Conflict of interest: Dr. Floyd has consulted for Shionogi Inc. Other authors have no conflicts of interest to disclose.

REFERENCES:

1. Chodosh J, et al., Physician recognition of cognitive impairment: evaluating the need for improvement. J Am Geriatr Soc, 2004. 52(7): p. 1051–9.

2. Plassman BL, et al., Prevalence of dementia in the United States: the aging, demographics, and memory study. Neuroepidemiology, 2007. 29(1–2): p. 125–32.

3. Querfurth HW and LaFerla FM, Alzheimer’s disease. N Engl J Med, 2010. 362(4): p. 329–44.

4. Matthews KA, et al., Racial and ethnic estimates of Alzheimer’s disease and related dementias in the United States (2015–2060) in adults aged ≥65 years. Alzheimers Dement, 2019. 15(1): p. 17–24.

5. Reuben DB, et al., An Automated Approach to Identifying Patients with Dementia Using Electronic Medical Records. J Am Geriatr Soc, 2017. 65(3): p. 658–659.

6. Wilkinson T, et al., Identifying dementia cases with routinely collected health data: A systematic review. Alzheimers Dement, 2018. 14(8): p. 1038–1051.

7. Knopman DS, Boeve BF, and Petersen RC, Essentials of the proper diagnoses of mild cognitive impairment, dementia, and major subtypes of dementia. Mayo Clin Proc, 2003. 78(10): p. 1290–308.

8. Karlawish JH and Clark CM, Diagnostic evaluation of elderly patients with mild memory problems. Ann Intern Med, 2003. 138(5): p. 411–9.

9. Ladeira RB, et al., Combining cognitive screening tests for the evaluation of mild cognitive impairment in the elderly. Clinics (Sao Paulo), 2009. 64(10): p. 967–73.

10. Holsinger T, et al., Does this patient have dementia? JAMA, 2007. 297(21): p. 2391–404.

11. Imfeld P, et al., Epidemiology, co-morbidities, and medication use of patients with Alzheimer’s disease or vascular dementia in the UK. J Alzheimers Dis, 2013. 35(3): p. 565–73.

12. Folstein MF, Folstein SE, and McHugh PR, “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res, 1975. 12(3): p. 189–98.

13. Tariq SH, et al., Comparison of the Saint Louis University mental status examination and the mini-mental state examination for detecting dementia and mild neurocognitive disorder--a pilot study. Am J Geriatr Psychiatry, 2006. 14(11): p. 900–10.

14. Scherrer JF, et al., Association Between Metformin Initiation and Incident Dementia Among African American and White Veterans Health Administration Patients. Ann Fam Med, 2019. 17(4): p. 352–362.

15. O’Bryant SE, et al., Detecting dementia with the mini-mental state examination in highly educated individuals. Arch Neurol, 2008. 65(7): p. 963–7.

16. Spering CC, et al., Diagnostic accuracy of the MMSE in detecting probable and possible Alzheimer’s disease in ethnically diverse highly educated individuals: an analysis of the NACC database. J Gerontol A Biol Sci Med Sci, 2012. 67(8): p. 890–6.

17. Chapman KR, et al., Mini Mental State Examination and Logical Memory scores for entry into Alzheimer’s disease trials. Alzheimers Res Ther, 2016. 8: p. 9.

18. Lam RW, et al., Cognitive dysfunction in major depressive disorder: effects on psychosocial functioning and implications for treatment. Can J Psychiatry, 2014. 59(12): p. 649–54.

19. Barch DM and Sheffield JM, Cognitive impairments in psychotic disorders: common mechanisms and measurement. World Psychiatry, 2014. 13(3): p. 224–32.

20. van der Flier WM and Scheltens P, Epidemiology and risk factors of dementia. J Neurol Neurosurg Psychiatry, 2005. 76 Suppl 5: p. v2–7.

21. Tschanz JT, et al., Conversion to dementia from mild cognitive disorder: the Cache County Study. Neurology, 2006. 67(2): p. 229–34.

22. Valcour VG, et al., The detection of dementia in the primary care setting. Arch Intern Med, 2000. 160(19): p. 2964–8.

23. Olafsdottir M, Skoog I, and Marcusson J, Detection of dementia in primary care: the Linkoping study. Dement Geriatr Cogn Disord, 2000. 11(4): p. 223–9.
