Abstract

Theoretically informed measures of eHealth literacy that consider the social affordances of eHealth are limited. This study describes the psychometric testing of a multi-dimensional instrument to measure functional, communicative, critical, and translational eHealth literacies, as informed by the Transactional Model of eHealth Literacy (TMeHL). A 3-phase rating scale construction process was conducted to engage eHealth experts and end-users. In Phase 1, experts (N = 5) and end-users (N = 25) identified operational behaviors to measure each eHealth literacy dimension. In Phase 2, end-users (N = 10) participated in think-aloud interviews to provide feedback on items reviewed and approved by experts. In Phase 3, a field test was conducted with a random sample of patients recruited from a university-based research registry (N = 283). Factor analyses and Rasch procedures examined the internal structure of the scores produced by each scale. Pearson’s r correlations provided evidence for external validity of scores. The instrument measures four reliable (ω = .92-.96) and correlated (r = .44-.64) factors: functional (4 items), communicative (5 items), critical (5 items), and translational (4 items). Researchers and providers can use this theory-driven instrument to measure four eHealth literacies that are fundamental to the social affordances of the eHealth experience.

Keywords: eHealth literacy, measurement, transactional communication, participatory medicine

INTRODUCTION

eHealth literacy was operationalized in 2006 as “the ability to seek, find, understand, and appraise health information from electronic sources and apply the knowledge gained to addressing or solving a health problem” (Norman & Skinner, 2006a, p. 2). This seminal definition corresponds with the eHealth Literacy Scale (eHEALS), a widely used 8-item measure that assesses self-efficacy related to online health information seeking, appraisal, and application (Norman & Skinner, 2006b). Despite its popularity and evidence for psychometrically sound properties across the lifespan (Griebel et al., 2017; Paige, Miller, Krieger, Stellefson, & Cheong, 2018a), researchers have noted limitations in its content validity alongside the evolving social capabilities afforded by eHealth technology. Shaw and colleagues (2017) demonstrate that eHealth comprises three fluid domains that afford users the opportunity to manage exchanges from multiple sources in synchronous or asynchronous formats, and appraise and engage in self-disclosures and storytelling with other users. These affordances are transactional in nature; the exchange of information between online sources is both continuous and dynamic, meaning that the relational and cultural context both influences and is influenced by the social exchange (Barnlund, 1970).

Norman (2011) recommended that future eHealth literacy instruments should extend beyond the eHEALS to measure skills that capture transactional features afforded by eHealth. He summarized these skills as technological (e.g., navigating the functional features of devices) and interpersonal communication (e.g., exchanging and appraising information from diverse online sources) management. Researchers have extended the scope of eHealth literacy to include the term “communication” in definitions. With the exception of one measure that considers users’ knowledge about how to protect the privacy of themselves and others through selective self-disclosures (van der Vaart & Drossaert, 2017), remaining models and measures operationalize the transactional features of eHealth as an outcome (i.e., creating a text-based message, talking to a doctor about online information) rather than a central function or process of using eHealth to exchange information for the purposes of health promotion (Paige et al., 2018b). Researchers note that the scientific community’s understanding of eHealth literacy and its measurement is hindered by the historic absence of a theoretical model (Griebel et al., 2017). This study presents the development and testing of a brief instrument informed by a theory-driven model that draws upon a decade’s worth of eHealth literacy and transactional computer-mediated communication literature.

The Transactional Model of eHealth Literacy: A Communication Perspective

The Transactional Model of eHealth Literacy (TMeHL) defines eHealth literacy as “the ability to locate, understand, exchange, and evaluate health information from the Internet in the presence of dynamic contextual factors, and to apply the knowledge gained for the purposes of maintaining or improving health” (Paige et al., 2018b, p. 8). The TMeHL demonstrates that eHealth literacy is a multi-dimensional, intrapersonal skillset that enables consumers to negotiate online transactions among diverse sources in the face of adversity (e.g., dexterity limitations, unique jargon, topic drift). Unlike other eHealth literacy concepts and measures, the TMeHL highlights transactional features as central to the use of eHealth for health promotion, describing how consumers access and engage with other users and the information exchanged.

The operational skills outlined in the TMeHL’s proposed definition of eHealth literacy (i.e., locate and understand, exchange, evaluate, and apply) correspond with four literacies (i.e., functional, communicative, critical, translational). Consistent with prior work in health literacy (Nutbeam, 2000) and eHealth literacy (Chan & Kaufman, 2011), these operational skills are cognitive processes that are interdependent and serve as building blocks to one another. Without skills to read and type health-related messages on the Internet, an individual will likely have a limited ability to effectively communicate, critically appraise, and apply knowledge to offline action. In the TMeHL, functional eHealth literacy is the ability to use baseline features of technology (e.g., typing, reading on a screen) to access and comprehend health-related context, whereas translational eHealth literacy comprises the highest-level ability to create and carry out an action plan to apply knowledge that is obtained, negotiated, and evaluated for health promotion (Paige et al., 2018b).

eHealth Literacy Measurement: Centralizing Communication

The TMeHL provides an updated conceptualization of eHealth literacy, allowing healthcare researchers and practitioners to attend to the unique aspects of eHealth skills that are central to the transactional features afforded by online media. Measures of eHealth literacy exist, but they do not capture these unique tenets. van der Vaart and Drossaert (2017), for example, included an item in their eHealth literacy measure to assess the ability of an Internet user to create a text-based message. Per the tenets of the TMeHL, this item constitutes a functional eHealth skill and does not capture the critical, translational, and transactional skills that are central to the communicative aspects of eHealth literacy. These constructs and their underlying processes are theoretically supported by the TMeHL (Paige et al., 2018b), yet there is a need for behavioral research scientists and members of the eHealth community to explicate and confirm their operationalization and functionality within this context. With the high volume of health information produced from online sources with varying degrees of credibility, an instrument is needed to assess patients’ abilities to produce text messages, communicate with multiple online users and determine their source credibility, as well as translate knowledge gained from the online transaction to offline action.

Purpose

The purpose of this study is to describe the development and psychometric testing of a multi-dimensional eHealth literacy instrument informed by the TMeHL, called the Transactional eHealth Literacy Instrument (or TeHLI). The TeHLI is intended to measure patients’ perceived skills related to their capacity to understand, exchange, evaluate, and apply health information from diverse online sources and multimedia.

MATERIALS AND METHODS

Sample and Procedures

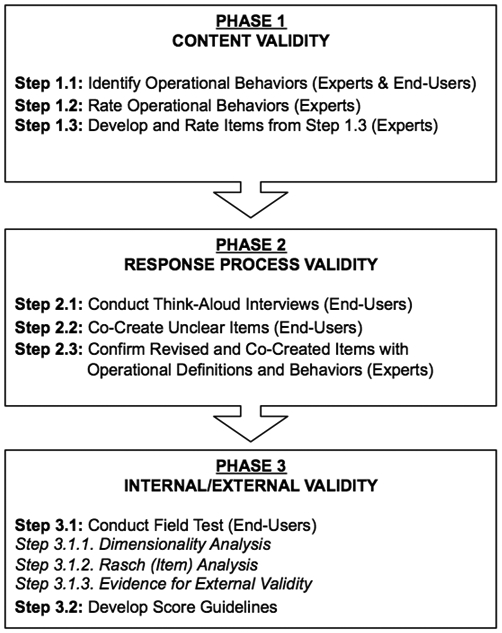

Figure 1 presents the three-phase instrument development process approved by the lead researcher’s Institutional Review Board (Crocker & Algina, 1986). Phase 1 establishes content validity, or the degree that empirical evidence supports the operationalization of the construct and the wording of items used to measure the construct. Phase 2 includes response process validity, to ensure that the answering process of the test takers is consistent with the construct as defined by the researchers. Phase 3 is internal/external validity. Internal validity of scores produced by the new scale provides evidence for its multi-dimensionality and item function as a rating scale. External validity evidence supports the degree that the scale produces scores appropriately associated with measures of similar, dissimilar, and related constructs. Each phase includes best practices for social scientists seeking to develop and revise scales with sufficient validity evidence (Boateng, Neilands, Frongillo, Melgar-Quiñonez, & Young, 2018; Jensen et al., 2014).

Figure 1.

Flow-chart of the instrument development process

This study systematically considers feedback from both experts and end-users throughout instrument development. Including both experts and end-users as equally collaborative members in this process enhances the generalizability of results and ensures that the final product is pragmatic and reflective of the community’s reality (Agency for Toxic Substances and Disease Registry, 2015; Boote, Baird, & Beecroft, 2010). eHealth experts were identified by perusing the lead investigator’s university’s college and department websites to identify faculty with publications, grants, and/or research interests in at least one of the following areas: health literacy, eHealth literacy, human-computer interaction, instrument development. Six (42.86%) of the 14 invited experts agreed to participate; however, one withdrew at the final step of Phase 1. eHealth end-users were recruited from a community-engaged research program (Phases 1-2), which has a mission to alleviate disparities in healthcare and research participation, and a large university research registry (Phase 3). eHealth end-users were patients over the age of 40 who were at risk for or living with obstructive lung diseases, predominantly Chronic Obstructive Pulmonary Disease. This instrument is informed by triangulation methods that draw on the literature, eHealth experts, and end-users.

Phase 1: Operational Behaviors and Item Construction

Step 1.1. Identifying Operational Behaviors: Experts & End-Users.

Definitions from the TMeHL (Paige et al., 2018b) were used as codes to conduct a secondary content analysis of interviews with end-users (N = 25) who talked about eHealth experiences (Paige, Stellefson, Krieger, & Alber, 2019). eHealth experts (N = 5) were given an online scenario about a patient interested in supplementing their healthcare with eHealth. Following this scenario, experts were asked to provide a list of skills the patient would need to successfully use eHealth to supplement their healthcare experience.

Operational behaviors that reflect each dimension of the TMeHL (functional, communicative, critical, translational) were compiled from (a) end-user interviews, (b) expert review scenario, and (c) the literature. See Table 1. The operational behaviors for functional and translational eHealth literacies highlight the functional nature of technology, or skills to use the Internet. This was expected, as basic technological functionality is both a foundational eHealth skill and a prerequisite for translating what is learned from the online experience to the offline sphere. The translational eHealth literacy operational behaviors described by experts and end-users extended beyond functional skills to imply that there is learning-oriented action that occurs as part of this translation (e.g., engage in health promoting behaviors for yourself or others, correctly take medication as directed from an online healthcare provider). This is comparable to functional eHealth literacy operational behaviors, described as knowing how to use different technological features that promote informational accessibility and comprehension (e.g., use a browser to access webpages, use a keyboard, know basic health-related terms).

Table 1.

eHealth Literacy Dimensions: eHealth Expert and End-User Feedback

| eHealth Literacy Dimension | Skills, Behaviors, and Attributes of eHealth Literacy: Experts (N = 6) | Skills, Behaviors, and Attributes of eHealth Literacy: Lay End-Users (N = 25) |
|---|---|---|
| Functional eHealth Literacy: Basic skills in reading and typing about health to effectively function on the Internet. | | |
| Communicative eHealth Literacy: The ability to collaborate, adapt, and control communication about health with users on social online environments with multimedia. | | |
| Critical eHealth Literacy: The ability to evaluate the credibility, relevance, and risks of sharing and receiving health information on the Internet. | | |
| Translational eHealth Literacy: The ability to apply health knowledge gained from the Internet across diverse ecological contexts. | | |

The operational behaviors to describe communicative eHealth literacy were unique between experts and end-users, highlighting the value of integrating feedback from both groups in this process. Experts and end-users highlighted the functional aspect of being able to use technology to communicate; however, end-users highlighted interpersonal features associated with online communication, including knowing when and how to self-disclose content and develop relationships with other users. These transactional features are foundational to the TMeHL and have not been highlighted in previous models or measures.

Step 1.2. Rating Operational Behaviors and Items: Experts.

eHealth experts (N = 5) reviewed the compiled list and identified the most important operational behaviors to define functional, communicative, critical, and translational eHealth literacies. These operational behaviors informed four tables of specification (one for each of the eHealth literacy dimensions). On a 5-point scale (1 = strongly disagree, 5 = strongly agree), all experts (100%) “strongly agreed” or “agreed” that the operational behaviors outlined in the tables of specification appropriately corresponded with their respectively assigned cognitive processes. Operational behaviors were used to identify items inspired by and adapted from existing eHealth literacy scales (Chinn & McCarthy, 2013; Koopman, Petroski, Canfield, Stuppy, & Mehr, 2014; Norman & Skinner, 2006b; Rubin & Martin, 1994; Seçkin, Yeatts, Hughes, Hudson, & Bell, 2016; Spitzberg & Cupach, 1984; van der Vaart & Drossaert, 2017). Best practices for item design were used where items could not be adapted (Osterlind, 1998).

Step 1.3. Develop and Rate Instrument Items: Experts.

Experts reviewed the item pool and rated (1 = strongly disagree, 5 = strongly agree) each item’s focus (is this item directly related to the dimension measured?) on its eHealth literacy dimension, with respect to the dimension’s operational definition. Over 80% of experts “agreed” or “strongly agreed” that each item was focused; however, recommendations for making items more concise, clear, and readable were offered. For example, experts suggested replacing the phrase “technological device” with “computer,” because it is a broader term that encompasses all devices of eHealth. Experts also recommended replacing academic verbiage (e.g., replacing “generate” with “create,” “disclose” with “share,” “assist” with “help,” and “reflect the tone of a conversation” with “style” or “emotion”).

Phase 2: Think-Aloud Interviews

Community end-users (N = 10) participated in audio-recorded think-aloud face-to-face interviews on a desktop computer. The think-aloud interview method is a theoretically driven and practical approach to obtain reliable data reflecting participants’ cognitive processing while responding to a survey item (Charters, 2003). Think-aloud procedures fall under the umbrella of cognitive interviewing, where participants actively verbalize their thoughts and interpretation of the items and answer retrospective probes from the interviewer. Participants verbally articulate the item, retrieve information from short- and long-term memory to attend to the item, and ultimately make sense of how the item fits their current cognitive schema (Ryan, Gannon-Slater, & Culbertson, 2012). This method is especially useful in instrument development, as there is evidence that it better identifies comprehension issues and patterns of response processes (Pepper, Hodgen, Kamesoo, Koiv, & Tolboom, 2016). Think-aloud interviews provide evidence that there is harmony between what the item was intended to measure and how it is processed by the respondent (Crocker & Algina, 1986). It is a critical phase of instrument development, and it should not be ignored regardless of financial or capital limitations (Ryan et al., 2012).

Think-aloud participants were 45-92 years old (M = 64.40 years; SD = 16.35 years), male (n = 6; 60%), white (n = 6; 60%) or Black/African American (n = 4; 40%), college educated (n = 6; 60%) but low income (less than $20K/year; n = 8; 80%). Participants provided socio-demographic information and completed the eHealth literacy scale (eHEALS; Norman & Skinner, 2006b). A “mock” think-aloud interview was conducted with the eHEALS, to enhance familiarity with the method prior to reviewing the new instrument.

Following procedures of McGinnis (2009) and Willis (1987), participants read aloud each item and verbally expressed thought processes during their response with any necessary researcher prompts. Participants were probed about item interpretation (“what does this item mean to you?”), paraphrase (“what is this item asking you?”), process-oriented (“talk me through your decision to select this answer.”), evaluative (“what makes this item easy or difficult to answer?”), and recall factors (“do you have an example of your personal online experience that led you to this answer?”). At the end of the interview, any items that were unclear, confusing, or interpreted differently than intended were revised through an iterative co-creation process.

Participants believed that the items of the new instrument were straightforward but identified concerns with: (1) perceived double-barreled items; (2) context of answering the questions (e.g., basic vs. disease-specific); (3) unintended response options (i.e., select “disagree” rather than “agree” if they had the skills to engage in the behavior but prefer not to engage in the behavior); and (4) visual presentation of response options (e.g., small vs. large radio buttons). Problematic items underwent minor wording adjustments and were ultimately tested with subsequent participants and revised for further refinement. Senior research team members with expertise in eHealth and health communication reviewed items to confirm the content still reflected operational behaviors and cognitive processes from the predetermined expert review.

Phase 3: Instrument Assessment

Step 3.1. Conduct Field Test: End-Users.

A randomly selected cohort of 2,100 patients enrolled in a large university research registry was recruited for a web-based survey. The survey included socio-demographic items, online health behavior scales, existing eHealth and health literacy measures, as well as the battery of items co-created by eHealth experts and end-users in Phases 1 and 2. Eligible participants were at least 40 years old and had an ICD-10 code (J40-J47) in their electronic health record, indicating risk or diagnosis of chronic obstructive lung disease. The survey was delivered to patients via their personal email address, followed by one reminder (3 days after the initial invitation) and a final “last call” message (1 week after the initial invitation). A total of 283 participants completed the survey (13.48% response rate), a rate similar to that of another COPD research registry study (M. L. Stellefson et al., 2017). Participants were remunerated with a $5 eGift Card. Missing data were handled with list-wise deletion.

Step 3.1.1. Dimensionality Analysis.

The initial item pool consisted of 30 items (functional = 6; communicative = 8; critical = 10; translational = 6). In SPSS v24, a principal components analysis (PCA) with Promax (oblique) rotation was conducted to examine the number of components, their explained variance, and the pattern matrix of items. Items that loaded on multiple components and showed high (ρ > .85) or low (ρ < .30) bivariate inter-item correlations were identified. Mplus v8.0 was used to conduct a series of confirmatory factor analyses (CFAs) with weighted least squares mean- and variance-adjusted (WLSMV) estimation. Item pairs with absolute residual correlations of .10 or greater (|r| ≥ .10) and modification indices greater than 10.0 were flagged as threats to local independence and ultimately removed. The final item pool consisted of 18 items (functional = 4; communicative = 5; critical = 5; translational = 4). To confirm dimensionality, Mplus v8.0 was used to conduct a CFA with maximum likelihood estimation. Global model fit indices were used to establish “good fit” (Hu & Bentler, 1999): Root Mean Square Error of Approximation (RMSEA) value less than or equal to .08, non-significant (p > .05) chi-squared value, Comparative Fit Index/Tucker-Lewis Index (CFI/TLI) values greater than .90, and Standardized Root Mean Square Residual (SRMR) value, or average residual correlation, ≤ .08. Cronbach’s α and ω coefficients ≥ .70 indicated acceptable reliability.
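As a point of reference for the reliability criterion above, Cronbach’s α for a k-item scale can be computed from the item variances and the variance of the summed score. The following is a minimal Python sketch under stated assumptions, not the SPSS procedure used in the study; the function name `cronbach_alpha` and the response data are hypothetical and serve only to illustrate the formula.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondent rows (one score per item)."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha requires at least two items")
    # Sample variance of each item (column) across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's summed scale score.
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical 5-point responses from six respondents on a 4-item scale.
example = [
    [5, 4, 5, 4],
    [4, 4, 4, 3],
    [3, 3, 2, 3],
    [5, 5, 4, 5],
    [2, 2, 3, 2],
    [4, 3, 4, 4],
]

alpha = cronbach_alpha(example)
print(f"alpha = {alpha:.2f}; meets the .70 criterion: {alpha >= 0.70}")
```

The coefficient approaches 1 as items covary more strongly relative to their individual variances, which is why it is read as an index of internal consistency.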

Step 3.1.2. Item Analysis.

SPSS v24 was used to compute descriptive statistics for items on each scale. Item difficulty (M and SD), corrected inter-item correlations (≥ .30 is acceptable), and item skewness (within ±1.96 is acceptable) were also computed. Data were fit to a Rasch Rating Scale Model (RSM) with the eRm package in RStudio (Mair, Hatzinger, Maier, & Rusch, 2018). The RSM functions under the assumption that the probability of selecting a response option depends on item difficulty and a person’s placement on the latent continuum (eHealth literacy dimension score; x-axis). Rating scale category effectiveness was evaluated following Linacre’s (2002) guidelines. Item responses should be neither overly random nor overly predictable, meaning infit and outfit values should be between .5 and 1.5. Items should be sufficiently represented across each latent continuum, so that item separation and reliability are greater than .80. Assumptions of monotonicity should be met, so that the probability of selecting “agree” or “strongly agree” for each item will be greatest at the most positive end of the latent continuum. The distance between adjacent thresholds, the points on the continuum at which adjacent response options (e.g., “agree” and “strongly agree”) are equally likely to be selected, should be between 1.4 and 5.0 logits. This determines the relationship between response options, including optimal variability. Finally, test (TICs) and item (IICs) information curves (inverse of standard error of measurement across the latent continuum) were estimated.
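To make the rating scale model concrete, the sketch below computes the probability of each response category for one item, given a person location and the item’s ordered thresholds, using the standard Andrich rating scale formulation. It is an illustration in Python rather than the eRm routine used in the study; the function name `rsm_category_probs` and the threshold values are hypothetical, and thresholds are assumed to be expressed as item location plus step parameter.

```python
import math


def rsm_category_probs(theta, thresholds):
    """Return P(X = 0..m) for one item under the rating scale model.

    theta: person location in logits.
    thresholds: m ordered category thresholds in logits
                (assumed to be item location + step parameter).
    """
    # Category k's exponent is the sum of (theta - threshold_j) for j <= k;
    # category 0 has an empty sum, i.e. 0.
    exponents = [0.0]
    for tau in thresholds:
        exponents.append(exponents[-1] + (theta - tau))
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(exponents)
    weights = [math.exp(e - peak) for e in exponents]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical thresholds for a 5-category item; not taken from the study.
thresholds = [-1.0, 0.5, 2.0, 4.5]
for theta in (-2.0, 1.0, 6.0):
    probs = rsm_category_probs(theta, thresholds)
    print(theta, [round(p, 3) for p in probs])
```

Under this formulation, two adjacent categories are equally probable when the person location equals the threshold between them, and the most probable category moves upward as the person location increases, which is the monotonic pattern described above.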

Step 3.1.3. External Validity.

SPSS v24 was used to conduct a series of Pearson r correlations. To examine how the scales functioned in relation to the TMeHL (Paige et al., 2018b), correlations were computed between TeHLI scale scores and anteceding elements, online health information seeking challenges, and perceived usefulness of the Internet for health. Scores produced by the TeHLI were compared to existing eHealth literacy (Norman & Skinner, 2006b) and health literacy (Chinn & McCarthy, 2013) scales. Finally, the relationship between TeHLI scores and interactive (Ramirez, Walther, Burgoon, & Sunnafrank, 2002) and active (Eheman et al., 2009) online health information seeking was computed.
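For readers less familiar with the statistic, the sketch below shows how a Pearson r between a scale score and an external variable is computed. It is a plain Python illustration rather than the SPSS procedure used in the study; the function name `pearson_r` and both data vectors are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of cross-products and squared deviations about the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical mean scale scores and perceived-usefulness ratings.
scale_scores = [3.2, 4.0, 2.6, 4.4, 3.8, 3.0]
usefulness = [3.0, 4.5, 2.5, 4.0, 4.0, 3.5]
print(f"r = {pearson_r(scale_scores, usefulness):.2f}")
```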

RESULTS

Sample Characteristics

Table 2 shows that the sample comprised predominantly non-Hispanic white adults who were, on average, about 64 years old (M = 64.34 years; SD = 10.49 years). Most participants had at least some college education, and about half earned more than $50,000/year. About half (50.5%; n = 143) of the participants reported a physician COPD diagnosis with a moderate degree of respiratory symptom severity (M = 2.44; SD = .99). Nearly 90% used the Internet in the past year for health-related purposes. About three-quarters (73%) used social media for 0-1 hours per week (on average). Participants were more likely to be active (M = 3.03; SD = 0.71; min = 1, max = 5) rather than interactive (M = 1.65; SD = 0.78; min = 1, max = 4.60) online health information seekers.

Table 2.

Socio-Demographic Characteristics of the Sample

| Demographic Characteristics | n (%) unless otherwise noted |
|---|---|
| Age, M (SD) | 64.34 (10.49) |
| Number of chronic diseases,^a M (SD) | 2.30 (1.47) |
| Gender | |
| Female | 160 (56.5) |
| Male | 120 (42.4) |
| Missing | 3 (1.1) |
| Race | |
| White/Caucasian | 255 (90.1) |
| Black/African American | 9 (3.18) |
| American Indian | 3 (1.06) |
| Asian American | 6 (2.12) |
| Multi-Racial | 9 (3.18) |
| Missing | 1 (0.35) |
| Ethnicity | |
| Non-Hispanic | 207 (73.1) |
| Hispanic | 43 (15.2) |
| Missing | 33 (11.7) |
| Annual income (pre-tax) | |
| $24,999 or less | 60 (21.2) |
| $25K-$34,999 | 30 (10.6) |
| $35K-$49,999 | 29 (10.2) |
| $50K-$74,999 | 56 (19.8) |
| $75K or more | 90 (31.8) |
| Missing | 18 (6.4) |
| Education | |
| None | 1 (0.4) |
| Grades 1-8 | 1 (0.4) |
| Grades 9-11 | 10 (3.5) |
| Grade 12 or high school equivalent | 36 (12.7) |
| College 1-3 years | 107 (37.8) |
| College 4 or more years | 126 (44.5) |
| Missing | 2 (0.7) |
| Employment | |
| Full time | 55 (19.4) |
| Part time | 12 (4.2) |
| Unemployed | 8 (2.8) |
| Retired | 126 (44.5) |
| Disabled | 70 (24.7) |
| Other | 8 (2.8) |
| Missing | 4 (1.4) |
| Marital Status | |
| Married | 161 (56.9) |
| Divorced | 64 (22.6) |
| Widowed | 32 (11.3) |
| Separated | 6 (2.1) |
| Single, never married | 18 (6.4) |
| Missing | 2 (0.7) |

Note. N = 283

^a min = 0 and max = 7

Selected all that apply, % may not = 100%

Dimensionality

The correlated and reliable (ω = .92-.96) 4-factor measurement model yielded acceptable model fit (RMSEA = .07, 90% CI = .06-.08; χ² = 308.714, p < .05; CFI = .95; TLI = .94; SRMR = .06) with statistically significant standardized factor loadings (λ = .53-1.0). All factors had a moderate-to-high positive association with one another (r = .44-.64; p < .001).
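The reported indices can be compared against the cutoffs stated in the Methods with a short check. The Python sketch below is illustrative only; the function name `check_global_fit` is hypothetical, and because the exact chi-square p-value is not reported, the chi-square criterion is passed as a flag rather than a number.

```python
def check_global_fit(rmsea, cfi, tli, srmr, chi2_nonsignificant):
    """Compare fit indices with the cutoffs listed in the Methods section."""
    return {
        "RMSEA <= .08": rmsea <= 0.08,
        "CFI > .90": cfi > 0.90,
        "TLI > .90": tli > 0.90,
        "SRMR <= .08": srmr <= 0.08,
        "chi-square p > .05": chi2_nonsignificant,
    }


# Values as reported for the 4-factor model; chi-square was significant (p < .05).
reported = check_global_fit(
    rmsea=0.07, cfi=0.95, tli=0.94, srmr=0.06, chi2_nonsignificant=False
)
for criterion, met in reported.items():
    print(f"{criterion}: {'met' if met else 'not met'}")
```

With these inputs, every criterion except the non-significant chi-square is met. The chi-square test is known to be sensitive to sample size, which is one reason approximate fit indices are commonly reported alongside it.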

Item Analysis

Each scale exhibited acceptable internal consistency (α = .87-.92), and scale items had acceptable corrected inter-item correlations (.62-.85) and skewness (−1.78 to −.10). The average response for each item was within an acceptable range: functional, 3.94 (SD = 1.04) to 4.27 (SD = .88); communicative, 3.03 (SD = 1.11) to 3.36 (SD = .98); critical, 2.99 (SD = 1.10) to 3.79 (SD = .85); and translational, 3.77 (SD = .84) to 4.13 (SD = .65).

Table 3 shows the summary statistics of each scale under the RSM. The infit and outfit mean square values were generally below 1.0, indicating some degree of predictability in the model and redundancy in responses, but within a range that is nevertheless conducive to productive measurement. Reliability estimates for each scale were above the recommended .80 and separation indices ranged from 4.37 to 10.55.

Table 3.

Rasch Rating Scale Model Item Fit Statistics for the TeHLI

| Transactional eHealth Literacy Instrument (TeHLI) | MSQ Infit | MSQ Outfit |
|---|---|---|
| Functional^a | | |
| 1. I can summarize basic health information from the Internet in my own words. | 0.76 | 0.84 |
| 2. I know how to access basic health information on the Internet. | 0.58 | 0.47 |
| 3. I can use my computer to create messages that describe my health needs. | 0.83 | 0.77 |
| 4. I have the skills I need to tell someone how to find basic health information on the Internet. | 0.69 | 0.68 |
| Communicative (COM)^b | | |
| 1. I can achieve my health information goals on the Internet while helping other users achieve theirs. | 1.14 | 1.22 |
| 2. I have the skills I need to talk about health topics on the Internet with multiple users at the same time. | 0.70 | 0.70 |
| 3. I can identify the emotional tone of a health conversation on the Internet. | 0.89 | 0.91 |
| 4. I have the skills I need to contribute to health conversations on the Internet. | 0.61 | 0.60 |
| 5. I have the skills I need to build personal connections with other Internet users who share health information. | 0.72 | 0.71 |
| Critical (CRIT)^c | | |
| 1. I can tell when an Internet user is a credible source of health information. | 0.62 | 0.64 |
| 2. I can tell when health information on the Internet is fake. | 0.62 | 0.61 |
| 3. I can tell when a health website is safe for sharing my personal health information. | 0.95 | 0.95 |
| 4. I can tell when information on the Internet is relevant to my health needs. | 0.97 | 0.91 |
| 5. I know how to evaluate the credibility of Internet users who share health information. | 0.86 | 0.88 |
| Translational (TRANSL)^d | | |
| 1. I can use the Internet to learn how to manage my health in a positive way. | 0.69 | 0.71 |
| 2. I can use the Internet as a tool to improve my health. | 0.67 | 0.64 |
| 3. I can use information on the Internet to make an informed decision about my health. | 0.78 | 0.78 |
| 4. I can use the Internet to learn about topics that are relevant to me. | 0.59 | 0.51 |

Note. MSQ = Mean Square

^a Reliability = .88 and Separation = 7.51

^b Reliability = .91 and Separation = 10.55

^c Reliability = .88 and Separation = 7.85

^d Reliability = .81 and Separation = 4.37
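The infit and outfit mean squares in Table 3 can be screened against the .5 to 1.5 range given in the Methods. The Python sketch below is one way to do so; the values are transcribed from Table 3, while the function name `outside_range` and the tuple layout are hypothetical.

```python
LOWER, UPPER = 0.5, 1.5  # acceptable mean-square range stated in the Methods

# (scale, item number, infit MSQ, outfit MSQ), transcribed from Table 3.
table3 = [
    ("Functional", 1, 0.76, 0.84), ("Functional", 2, 0.58, 0.47),
    ("Functional", 3, 0.83, 0.77), ("Functional", 4, 0.69, 0.68),
    ("Communicative", 1, 1.14, 1.22), ("Communicative", 2, 0.70, 0.70),
    ("Communicative", 3, 0.89, 0.91), ("Communicative", 4, 0.61, 0.60),
    ("Communicative", 5, 0.72, 0.71),
    ("Critical", 1, 0.62, 0.64), ("Critical", 2, 0.62, 0.61),
    ("Critical", 3, 0.95, 0.95), ("Critical", 4, 0.97, 0.91),
    ("Critical", 5, 0.86, 0.88),
    ("Translational", 1, 0.69, 0.71), ("Translational", 2, 0.67, 0.64),
    ("Translational", 3, 0.78, 0.78), ("Translational", 4, 0.59, 0.51),
]


def outside_range(rows, lower=LOWER, upper=UPPER):
    """Return rows whose infit or outfit falls outside [lower, upper]."""
    return [
        row for row in rows
        if not (lower <= row[2] <= upper and lower <= row[3] <= upper)
    ]


for scale, item, infit, outfit in outside_range(table3):
    print(f"{scale} item {item}: infit = {infit}, outfit = {outfit}")
```

With the values as tabulated, the only statistic outside the range is the outfit of the second functional item (0.47), which falls marginally below the lower bound.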

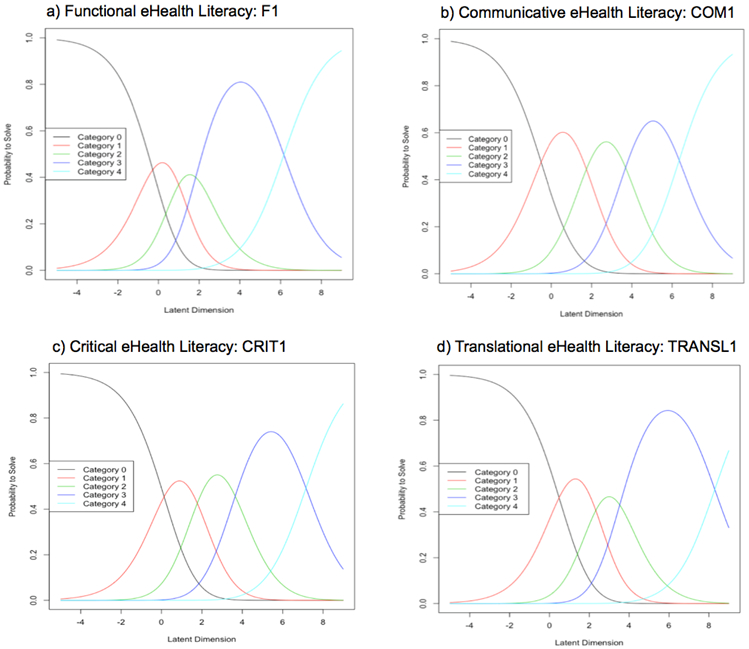

In Table 4, the threshold values are presented. For the communicative and critical scales, adjacent thresholds advanced in order and within the recommended 1.4 to 5.0 logits. The difference in Thresholds 1-2 (“strongly disagree/disagree” and “disagree/neutral”) and 2-3 (“disagree/neutral” to “neutral/agree”) on the functional scale, as well as Thresholds 2-3 (“disagree/neutral” to “neutral/agree”) on the translational scale, were below the recommended 1.4 logits, but only by .13 logits. This is not detrimental to response option variability.

Table 4.

Threshold Values Under the Rasch Rating Scale Model (RSM)

| TeHLI | Location | Threshold 1 (Strongly Disagree/Disagree) | Threshold 2 (Disagree/Neutral) | Threshold 3 (Neutral/Agree) | Threshold 4 (Agree/Strongly Agree) |
|---|---|---|---|---|---|
| Functional | | | | | |
| F1: Summarize | 2.18 | −0.28 | 1.00 | 1.83 | 6.16 |
| F2: Access | 1.61 | −0.85 | 0.43 | 1.26 | 5.59 |
| F3: Type Message | 2.92 | 0.47 | 1.74 | 2.57 | 6.90 |
| F4: Explain | 3.11 | 0.66 | 1.93 | 2.77 | 7.09 |
| Communicative | | | | | |
| COM1: Mutual Help | 2.82 | −0.51 | 1.74 | 3.69 | 6.35 |
| COM2: Engagement | 3.47 | 0.14 | 2.39 | 4.34 | 7.00 |
| COM3: Emotional Tone | 3.70 | 0.37 | 2.62 | 4.57 | 7.23 |
| COM4: Contribute | 3.07 | −0.26 | 2.00 | 3.94 | 6.60 |
| COM5: Connect with Others | 3.60 | 0.27 | 2.53 | 4.48 | 7.14 |
| Critical | | | | | |
| CRIT1: Credible Users | 3.18 | 0.13 | 1.77 | 3.65 | 7.15 |
| CRIT2: Fake Information | 3.14 | 0.09 | 1.74 | 3.61 | 7.12 |
| CRIT3: Safe Websites | 3.80 | 0.76 | 2.40 | 4.27 | 7.78 |
| CRIT4: Relevant Information | 1.72 | −1.32 | 0.32 | 2.20 | 5.70 |
| CRIT5: Online Credibility | 3.39 | 0.34 | 1.98 | 3.86 | 7.37 |
| Translational | | | | | |
| TRANSL1: Manage Health | 3.66 | 0.48 | 2.33 | 3.53 | 8.30 |
| TRANSL2: Improve Health | 2.99 | −0.19 | 1.65 | 2.85 | 7.62 |
| TRANSL3: Informed Decision | 3.90 | 0.72 | 2.56 | 3.76 | 8.53 |
| TRANSL4: Learn about Health | 2.17 | −1.01 | 0.83 | 2.03 | 6.80 |
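The distances between adjacent thresholds can be derived directly from Table 4 and compared with the 1.4 to 5.0 logit range. The Python sketch below uses the first item of each scale as tabulated, on the assumption that the rating scale model applies the same threshold spacing to every item within a scale (the remaining rows agree to within rounding). The function name `adjacent_gaps` and the dictionary layout are hypothetical.

```python
MIN_GAP, MAX_GAP = 1.4, 5.0  # recommended range for adjacent thresholds (logits)

# Thresholds 1-4 for the first item of each scale, transcribed from Table 4.
first_item_thresholds = {
    "Functional": [-0.28, 1.00, 1.83, 6.16],
    "Communicative": [-0.51, 1.74, 3.69, 6.35],
    "Critical": [0.13, 1.77, 3.65, 7.15],
    "Translational": [0.48, 2.33, 3.53, 8.30],
}


def adjacent_gaps(thresholds):
    """Differences between consecutive thresholds, rounded to 2 decimals."""
    return [round(b - a, 2) for a, b in zip(thresholds, thresholds[1:])]


for scale, thresholds in first_item_thresholds.items():
    gaps = adjacent_gaps(thresholds)
    flags = ["ok" if MIN_GAP <= g <= MAX_GAP else "outside" for g in gaps]
    print(scale, list(zip(gaps, flags)))
```

Each printed pair gives the gap for Thresholds 1-2, 2-3, and 3-4 in turn, flagged as "outside" when it falls below 1.4 or above 5.0 logits.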

Figure 2 depicts item characteristic curves (ICCs) from the TeHLI and shows that each response option had the highest probability of being selected at corresponding values on the latent continuum. Threshold 1 (“strongly disagree” to “disagree”) was generally represented at the average score (“0” logits) on the latent continuum. Respondents below this point had the highest probability of selecting “strongly disagree,” but respondents with an above average (“+0” logits) score had the highest probability of selecting positive response options, which occurred in ascending order (i.e., “disagree,” “neutral,” “agree,” “strongly agree”). As expected, IICs and TICs for each scale showed the greatest amount of information peaked at 0 logits, ranging from −2 to 6 logits.

Figure 2.

An item characteristic curve for each TeHLI scale

Note. Category 0 = strongly disagree; Category 4 = strongly agree
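The ordering of response options along the latent continuum can be illustrated with a minimal sketch. It assumes that the thresholds reported in Table 4 are Rasch step thresholds on the logit scale, that is, the points where adjacent category curves intersect under a partial credit (or rating scale) parameterization. The function names are hypothetical and are not part of the eRm package used in the study; only the F1 threshold values come from Table 4.

```python
import math


def category_probabilities(theta, thresholds):
    """Category probabilities for one polytomous Rasch item.

    theta: person location in logits.
    thresholds: ordered step thresholds in logits
                (4 thresholds give 5 response categories).
    """
    # Category k has exponent sum_{j<=k} (theta - tau_j); category 0 has 0.
    exponents = [0.0]
    for tau in thresholds:
        exponents.append(exponents[-1] + (theta - tau))
    # Subtract the largest exponent before exponentiating for stability.
    peak = max(exponents)
    weights = [math.exp(e - peak) for e in exponents]
    total = sum(weights)
    return [w / total for w in weights]


def most_likely_category(theta, thresholds):
    """Index of the response category with the highest probability."""
    probs = category_probabilities(theta, thresholds)
    return max(range(len(probs)), key=probs.__getitem__)


# Thresholds for item F1 (Summarize) as reported in Table 4.
F1_THRESHOLDS = [-0.28, 1.00, 1.83, 6.16]
LABELS = ["strongly disagree", "disagree", "neutral", "agree", "strongly agree"]

# In Table 4, the first numeric column matches the mean of the four thresholds.
f1_location = sum(F1_THRESHOLDS) / len(F1_THRESHOLDS)
print(f"F1 mean threshold: {f1_location:.2f} logits")

for theta in (-1.0, 0.5, 1.5, 4.0, 7.0):
    k = most_likely_category(theta, F1_THRESHOLDS)
    print(f"theta = {theta:+.1f} logits: most likely response is '{LABELS[k]}'")
```

Under this assumption, a respondent located between two adjacent thresholds is most likely to select the category bounded by them, which is the ascending pattern the ICCs in Figure 2 display.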

External Validity

Table 5 shows that scores from the functional TeHLI scale were associated with more education, but had no statistical relationship with age, gender, race, ethnicity, or income. Scores from the communicative TeHLI scale were associated with being younger, identifying as a woman, and having a greater number of chronic conditions. Scores from the critical TeHLI scale were not associated with any demographic variables; however, one notable relationship, identifying as a woman, approached statistical significance (p = .05). Finally, greater scores on the TeHLI translational scale were associated with being younger, identifying as a woman, and having a greater number of chronic conditions. Unlike scores from the communicative, critical, and translational TeHLI scales, scores from the functional scale were not associated with the number of social media platforms used. The relationship between functional skills and number of devices used approached statistical significance (p = .05). Each scale had a positive relationship with perceived usefulness of the Internet for health-related purposes and an inverse relationship with perceived online information seeking challenges. Each scale was positively associated with active and interactive online health information seeking; however, communicative skills and interactive online health information seeking behaviors had the strongest correlation.

Table 5.

Bivariate Analyses to Examine Relationship between TeHLI Scores and Related Variables

| Participant Characteristics | Functional eHealth Literacy | Communicative eHealth Literacy | Critical eHealth Literacy | Translational eHealth Literacy |
|---|---|---|---|---|
| User-Oriented Characteristics | | | | |
| Age | −0.09 | −0.27*** | −0.10 | −0.21** |
| Genderᵃ | 0.06 | 0.21*** | 0.16 | 0.15* |
| Raceᵇ | −0.01 | 0.04 | 0.05 | 0.01 |
| Ethnicityᶜ | 0.09 | −0.11 | −0.03 | −0.06 |
| Education | 0.16** | 0.09 | 0.03 | 0.09 |
| Income | −0.02 | −0.09 | −0.11 | 0.01 |
| Marriedᵈ | −0.23 | −0.04 | −0.07 | −0.02 |
| Number of chronic diseases | 0.05 | 0.18** | 0.02 | 0.13* |
| Task/Technology-Oriented Characteristics | | | | |
| Internet use for health in past 12 months | 0.14* | 0.17** | 0.22** | 0.32** |
| Number of social media platforms used | 0.05 | 0.26*** | 0.13* | 0.16** |
| Number of digital devices used | 0.11 | 0.20** | 0.13* | 0.18** |
| Online Health Information Seeking Strategies | | | | |
| Interactive online health information seeking | 0.20*** | 0.52*** | 0.33*** | 0.30*** |
| Active online health information seeking | 0.36*** | 0.43*** | 0.31*** | 0.49*** |
| eHealth Perceptions | | | | |
| Perceived online health information seeking challenges | −0.27*** | −0.46*** | −0.36*** | −0.36*** |
| Perceived usefulness of the Internet for health | 0.35*** | 0.50*** | 0.41*** | 0.63*** |

Note. ᵃ Male = 0, Female = 1. ᵇ White = 0, Non-white = 1. ᶜ Hispanic = 1, Non-Hispanic = 0. ᵈ No = 0, Yes = 1. *p < .05; **p < .01; ***p < .001.

eHEALS scores had a moderate-to-high positive association with scores on the functional (r = .47; p < .01), communicative (r = .63; p < .01), critical (r = .66; p < .01), and translational (r = .65; p < .01) scales. The TeHLI scales and AAHLS functional scale scores were not statistically significantly associated (r = −0.08; p = .21). A low-to-moderate statistically significant relationship did exist between TeHLI scales and AAHLS’ communicative (r = .15; p < .05) and critical (r = .33; p < .01) scores. Similarly, a positive moderate relationship between AAHLS’ critical health literacy and TeHLI “translational” scores also existed (r = .28; p < .001).

DISCUSSION

This study developed and tested the Transactional eHealth Literacy Instrument (TeHLI), a theory-driven and multi-dimensional measure of eHealth literacy. The TeHLI consists of 18 items, with 4-5 items comprising each scale. The brevity and psychometric properties of the TeHLI have important implications for its use in research and practice.

Principal Findings

The TeHLI consists of four correlated scales that measure functional, communicative, critical, and translational eHealth literacy. Scale items functioned as expected, with response options advancing across the latent continuum in a uniform fashion. The greatest degree of precision in measurement was seen in scores considered “above average.” Research among baby boomers and older adults (Stellefson et al., 2017), including those with chronic disease (Paige, Krieger, Stellefson, & Alber, 2017), shows that the seminal eHealth literacy instrument has limited precision in the measurement of higher-level abilities due to extreme response behaviors. Extreme response styles are typical among self-reported measures assessing confidence or abilities, and they are significant limitations in eHealth skill assessment (van der Vaart et al., 2011). The TeHLI generally has a good degree of precision at these higher-level self-reported abilities.

Scores produced by each TeHLI scale exhibit sufficient evidence for external validity, given their expected associations with socio-demographic variables. High functional TeHLI scores were associated with greater education but not age, as strongly demonstrated in the eHealth (Neter & Brainin, 2012; Norman & Skinner, 2006b; Paige, Krieger, & Stellefson, 2017) and health literacy literature (Chinn & McCarthy, 2013; Kobayashi, Wardle, Wolf, & von Wagner, 2015; Wolf et al., 2012). Although older adults are less likely to participate in social online environments than younger adults (Anderson & Perrin, 2017), this sample comprised a large proportion of Internet (~90%) and social media users (~70%) over the age of 40 with confidence in their skills to engage with other online users. Women and chronic disease patients are active online health information seekers and engage in participatory online forums (Fox, 2014; Fox & Purcell, 2010); therefore, the finding that these subgroups had significantly higher communicative and translational TeHLI scores was expected. Interestingly, a statistically significant relationship between critical TeHLI scores and socio-demographics did not exist. Unlike other eHealth literacy instruments, the critical TeHLI scale measures skills related to evaluating the credibility, relevance, and security of health information from online sources (e.g., users) and channels (e.g., websites) as suitable exchanges for health information. Diviani and colleagues (2016) report that eHealth users apply a variety of evaluative techniques to assess the credibility of online health information, and these techniques are not universal to all users. To obtain a comprehensive understanding of patients’ evaluative skills throughout the eHealth experience, research should include the critical TeHLI scale in a battery of measures.

Perceived usefulness of the Internet as a health information resource was strongly associated with scores produced by all TeHLI scales. Interestingly, this relationship was strongest for communicative (r = .50) and translational (r = .63) eHealth literacies, as compared to critical (r = .41) and functional (r = .35) literacies. This finding supports the notion that simply having the functional skills to search for and review static, informational webpages does not guarantee optimal satisfaction as it once did; rather, eHealth is perceived as most useful when users have the perceived ability to effectively connect with other online users. This is consistent with a prior study in which patients reported that the perceived usefulness of participatory online media (e.g., Facebook) for Chronic Obstructive Pulmonary Disease (COPD) self-management is hindered by the technological separation imposed by challenges in making meaningful connections with other online users (Paige et al., 2019).

Limitations

This self-reported instrument was predominantly tested among members of the baby boomer and silent generations. The findings cannot be generalized across the lifespan, nor can they be assumed equivalent to performance ability. It should be noted, however, that the initial phases of the instrument development process were informed by an extensive review of the literature that included evidence across the lifespan. eHealth experts provided feedback about the operational behaviors and items with generic users in mind, not specifically those who are older or living with chronic conditions. The content of the TeHLI is therefore expected to be relevant across the lifespan. Research is needed to test the TeHLI with younger adults and different modalities (e.g., telephone, paper and pencil).

Practical Implications

Results demonstrate that the TeHLI captures and sufficiently measures self-reported skills related to transactional features of eHealth, particularly among a clinic-based cohort of baby boomers and older adults. The multi-dimensional TeHLI will allow behavioral research scientists and healthcare practitioners to determine if patients’ strengths or deficits are related to using a computer (functional) or a combination of exchanging information (communicative), evaluating content credibility (critical), and applying online information to wellness plans (translational). With this instrument, researchers and practitioners will be able to identify patients who want to use the Internet for health, but may only have the skills to review websites rather than engage in exchanges with other users. As such, this instrument is useful for practitioners and researchers to direct their patients to resources that correspond with their eHealth attitudes, preferences, and skills.

This study demonstrates the value of engaging experts and end-users in the instrument development process. Experts had unique values and beliefs regarding eHealth from a professional perspective, whereas end-users provided insight into the behaviors and skills perceived as effective for navigating the online experience. Experts provided operational behaviors about communicative eHealth literacy that corresponded with TMeHL’s functional skillset, whereas end-users provided operational behaviors about how to effectively and appropriately communicate with other users through mediated platforms. As such, this study presents a blueprint for actively engaging both experts and end-users in instrument development procedures that result in a brief, reliable measure that produces scores with sufficient validity evidence to support its intended use.

Acknowledgements:

The authors would like to thank the experts and patients who participated in this study. This work was supported by the National Heart, Lung, and Blood Institute of the National Institutes of Health under grant #F31HL132463.

Footnotes

Declaration of Interest Statement: No potential conflict of interest exists among authors.

Contributor Information

Samantha R. Paige, STEM Translational Communication Center, University of Florida, Gainesville FL USA.

Michael Stellefson, Department of Health Education & Promotion, East Carolina University, Greenville NC USA.

Janice L. Krieger, STEM Translational Communication Center, Department of Health Outcomes & Biomedical Informatics, University of Florida, Gainesville FL USA.

M. David Miller, School of Human Development & Organizational Studies, University of Florida, Gainesville FL USA.

JeeWon Cheong, Department of Health Education & Behavior, University of Florida, Gainesville FL USA.

Charkarra Anderson-Lewis, College of Nursing and Health Professions, University of Southern Mississippi, Hattiesburg MS USA.

REFERENCES

- Agency for Toxic Substances and Disease Registry. (2015, June 25). Principles of Community Engagement. Retrieved from https://www.atsdr.cdc.gov/communityengagement/index.html

- Anderson M, & Perrin A (2017, May 17). Technology use among seniors. Retrieved from http://www.pewinternet.org/2017/05/17/technology-use-among-seniors/

- Barnlund D (1970). Communication: The Context of Change. Harper & Row.

- Boateng GO, Neilands TB, Frongillo EA, Melgar-Quiñonez HR, & Young SL (2018). Best practices for developing and validating scales for health, social, and behavioral research: A primer. Frontiers in Public Health, 6, 149. 10.3389/fpubh.2018.00149

- Boote J, Baird W, & Beecroft C (2010). Public involvement at the design stage of primary health research: A narrative review of case examples. Health Policy, 95(1), 10–23. 10.1016/j.healthpol.2009.11.007

- Chan CV, & Kaufman DR (2011). A framework for characterizing eHealth literacy demands and barriers. Journal of Medical Internet Research, 13(4), e94. 10.2196/jmir.1750

- Charters E (2003). The use of think-aloud methods in qualitative research: An introduction to think-aloud methods. Brock Education: A Journal of Educational Research and Practice, 12(2). 10.26522/brocked.v12i2.38

- Chinn D, & McCarthy C (2013). All aspects of health literacy scale (AAHLS): Developing a tool to measure functional, communicative, and critical health literacy in primary healthcare settings. Patient Education and Counseling, 90(2), 247–253. 10.1016/j.pec.2012.10.019

- Crocker L, & Algina J (1986). Introduction to Classical and Modern Test Theory. Orlando, Florida: CBS College Publishing.

- Diviani N, van den Putte B, Meppelink CS, & van Weert JCM (2016). Exploring the role of health literacy in the evaluation of online health information: Insights from a mixed-methods study. Patient Education and Counseling, 99(6), 1017–1025. 10.1016/j.pec.2016.01.007

- Eheman C, Berkowitz Z, Lee J, Mohile S, Purnell J, Rodriguez E, … Morrow G (2009). Information-seeking styles among cancer patients before and after treatment by demographics and use of information sources. Journal of Health Communication, 14(5), 487–502. 10.1080/10810730903032945

- Fox S (2014, January 15). The social life of health information. Retrieved May 7, 2018, from Pew Research Center: http://www.pewresearch.org/fact-tank/2014/01/15/the-social-life-of-health-information/

- Fox S, & Purcell K (2010, March 24). Social media and health. Retrieved February 27, 2018, from Pew Research Center website: http://www.pewinternet.org/2010/03/24/social-media-and-health/

- Griebel L, Enwald H, Gilstad H, Pohl A-L, Moreland J, & Sedlmayr M (2017). eHealth literacy research—Quo vadis? Informatics for Health and Social Care, 0(0), 1–16. 10.1080/17538157.2017.1364247

- Hu L, & Bentler PM (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling, 5(1), 1–55.

- Jensen JD, Carcioppolo N, King AJ, Scherr CL, Jones CL, & Niederdeppe J (2014). The cancer information overload (CIO) scale: Establishing predictive and discriminant validity. Patient Education and Counseling, 94(1), 90–96. 10.1016/j.pec.2013.09.016

- Kobayashi LC, Wardle J, Wolf MS, & von Wagner C (2015). Cognitive function and health literacy decline in a cohort of aging English adults. Journal of General Internal Medicine, 30(7), 958–964. 10.1007/s11606-015-3206-9

- Koopman RJ, Petroski GF, Canfield SM, Stuppy JA, & Mehr DR (2014). Development of the PRE-HIT instrument: Patient readiness to engage in health information technology. BMC Family Practice, 15(18). Retrieved from https://bmcfampract.biomedcentral.com/articles/10.1186/1471-2296-15-18

- Linacre JM (2002). Optimizing rating scale category effectiveness. Journal of Applied Measurement, 3(1), 85–106.

- Mair P, Hatzinger R, Maier MJ, & Rusch T (2018). Package “eRm”: Extended rasch modeling (Version 0.16–1) [RStudio CRAN]. Retrieved from https://cran.r-project.org/web/packages/eRm/eRm.pdf

- McGinnis D (2009). Text comprehension products and processes in young, young-old, and old-old adults. Journal of Gerontology Series B: Psychological Sciences and Social Sciences, 64B(2), 202–211.

- Neter E, & Brainin E (2012). eHealth Literacy: Extending the digital divide to the realm of health information. Journal of Medical Internet Research, 14(1), e19. 10.2196/jmir.1619

- Norman C (2011). eHealth Literacy 2.0: Problems and opportunities with an evolving concept. Journal of Medical Internet Research, 13(4), e125. 10.2196/jmir.2035

- Norman CD, & Skinner HA (2006a). eHealth Literacy: Essential skills for consumer health in a networked world. Journal of Medical Internet Research, 8(2), e9. 10.2196/jmir.8.2.e9

- Norman CD, & Skinner HA (2006b). eHEALS: The eHealth literacy scale. Journal of Medical Internet Research, 8(4), e27. 10.2196/jmir.8.4.e27

- Nutbeam D (2000). Health literacy as a public health goal: A challenge for contemporary health education and communication strategies into the 21st century. Health Promotion International, 15(3), 259–267. 10.1093/heapro/15.3.259

- Osterlind SJ (1998). Constructing Test Items: Multiple-Choice, Constructed-Response, Performance and Other Formats (2nd ed.). Retrieved from https://www.springer.com/gp/book/9780792380771

- Paige SR, Stellefson M, Krieger JL, Anderson-Lewis C, Cheong J, & Stopka C (2018b). The transactional model of electronic health (eHealth) literacy. Journal of Medical Internet Research, 20(10), e10175. 10.2196/10175

- Paige SR, Krieger JL, Stellefson M, & Alber JM (2017). eHealth literacy in chronic disease patients: An item response theory analysis of the eHealth literacy scale (eHEALS). Patient Education and Counseling, 100(2), 320–326. 10.1016/j.pec.2016.09.008

- Paige SR, Krieger JL, & Stellefson ML (2017). The influence of eHealth literacy on perceived trust in online health communication channels and sources. Journal of Health Communication, 22(1), 53–65. 10.1080/10810730.2016.1250846

- Paige SR, Miller MD, Krieger JL, Stellefson M, & Cheong J (2018a). eHealth literacy across the lifespan: A measurement invariance study. Journal of Medical Internet Research, 20(7), e10434. 10.2196/10434

- Paige SR, Stellefson M, Krieger JL, & Alber JM (2019). Computer-mediated experiences of patients with chronic obstructive pulmonary disease. American Journal of Health Education, 50(2), 127–134. 10.1080/19325037.2019.1571963

- Pepper D, Hodgen J, Lamesoo K, Koiv P, & Tolboom J (2016). Think aloud: Using cognitive interviewing to validate the PISA assessment of student self-efficacy in mathematics. International Journal of Research and Method in Education, 41, 3–16. 10.1080/1743727X.2016.1238891

- Ramirez A, Walther JB, Burgoon JK, & Sunnafrank M (2002). Information-seeking strategies, uncertainty, and computer-mediated communication. Human Communication Research, 28(2), 213–228.

- Rubin RB, & Martin MM (1994). Development of a measure of interpersonal communication competence. Communication Research Reports, 11(1), 33–44.

- Ryan K, Gannon-Slater N, & Culbertson MJ (2012). Improving survey methods with cognitive interviews in small- and medium-scale evaluations. American Journal of Evaluation, 33(3), 414–430. 10.1177/1098214012441499

- Seçkin G, Yeatts D, Hughes S, Hudson C, & Bell V (2016). Being an informed consumer of health information and assessment of electronic health literacy in a national sample of Internet users: Validity and reliability of the e-HLS instrument. Journal of Medical Internet Research, 18(7), e161. 10.2196/jmir.5496

- Shaw T, McGregor D, Brunner M, Keep M, Janssen A, & Barnet S (2017). What is eHealth (6)? Development of a conceptual model for eHealth: Qualitative study with key informants. Journal of Medical Internet Research, 19(10), e324. 10.2196/jmir.8106

- Spitzberg BH, & Cupach WR (1984). Interpersonal communication competence. University of Michigan: Sage Publications.

- Stellefson ML, Shuster JJ, Chaney BH, Paige SR, Alber JM, Chaney JD, & Sriram PS (2017). Web-based health information seeking and eHealth literacy among patients living with chronic obstructive pulmonary disease (COPD). Health Communication, 1–15. 10.1080/10410236.2017.1353868

- Stellefson M, Paige SR, Tennant B, Alber JM, Chaney BH, Chaney D, & Grossman S (2017). Reliability and validity of the telephone-based eHealth literacy scale among older adults: Cross-sectional survey. Journal of Medical Internet Research, 19(10), e362. 10.2196/jmir.8481

- van der Vaart R, & Drossaert C (2017). Development of the digital health literacy instrument: Measuring a broad spectrum of health 1.0 and health 2.0 skills. Journal of Medical Internet Research, 19(1), e27. 10.2196/jmir.6709

- van der Vaart R, van Deursen AJ, Drossaert CH, Taal E, van Dijk JA, & van de Laar MA (2011). Does the eHealth literacy scale (eHEALS) measure what it intends to measure? Validation of a Dutch version of the eHEALS in two adult populations. Journal of Medical Internet Research, 13(4). 10.2196/jmir.1840

- Willis S (1987). Cognitive training and everyday competence. Annual Review of Gerontology and Geriatrics, 7, 159–188.

- Wolf MS, Curtis LM, Wilson EAH, Revelle W, Waite KR, Smith SG, … Baker DW (2012). Literacy, cognitive function, and health: Results of the LitCog Study. Journal of General Internal Medicine, 27(10), 1300–1307. 10.1007/s11606-012-2079-4