Abstract

Purpose of review:

To provide a comprehensive review of usability testing of eHealth interventions for HIV.

Recent Findings:

We identified 28 articles that assessed the usability of eHealth interventions for HIV, most of which were published within the past 3 years. The majority of the eHealth interventions for HIV were developed on a mobile platform and focused on HIV prevention as the intended health outcome. Usability evaluation methods included: eye-tracking, questionnaires, semi-structured interviews, contextual interviews, think-aloud protocols, cognitive walkthroughs, heuristic evaluations and expert reviews, focus groups, and scenarios.

Keywords: Usability, eHealth, mHealth, HIV, Telemedicine, Digital Health

Summary:

A wide variety of methods are available to evaluate the usability of eHealth interventions. Employing multiple methods may provide a more comprehensive assessment of the usability of eHealth interventions as compared to inclusion of only a single evaluation method.

Introduction

Approximately two thirds of the population worldwide are connected by mobile devices and more than three billion are smartphone users [1, 2]. Even in limited-resource settings, there is growing use of the internet and increasing accessibility to internet-capable technologies such as computers, tablets, and smartphones [3, 4]. eHealth takes advantage of the proliferation of technology users by delivering health information and interventions through information and communication technologies. eHealth interventions can be delivered through a variety of technology platforms including mobile phones (mHealth), internet-based websites, tablets, electronic devices, and desktop computers [5]. With substantially rising numbers of internet and electronic device users, eHealth can reach patients across the HIV care cascade, from HIV prevention and testing to medication adherence for people living with HIV (PLWH) [6–11].

While there have been many promising eHealth HIV interventions, many lack reports of having been developed using a rigorous design process or rigorously evaluated through usability testing prior to deployment. Lack of formative evaluation may result in a failure to achieve usability, which is broadly defined as ‘the extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency, and satisfaction in a specified context of use’ [12]. The core metrics of effectiveness, efficiency, and satisfaction can be measured to determine the usability of a health information technology intervention [13, 14]. In sum, usability is a critical determinant of successful use and implementation of eHealth interventions [15]. Without evidence of usability, an eHealth intervention may result in frustrated users, reduced efficiency, increased costs, interruptions in workflow, and increases in healthcare errors, which can hinder adoption of an eHealth intervention [16]. Given the importance of assessing the usability of health information technology interventions and the growing development of HIV-related eHealth interventions, this paper presents a review of the published literature of usability evaluations conducted during the development of eHealth HIV interventions.

METHODS

Our team conducted a comprehensive search of usability evaluations of eHealth HIV interventions using PubMed, Embase, CINAHL, IEEE, Web of Science, and Google Scholar (first 10 pages of results). The search was limited to English-language articles published from January 2005 to September 2019. An informationist assisted with tailoring search strategies for online reference databases. The final list of search terms included: eHealth, mHealth, HIV, telemedicine, intervention or implementation science, user testing, user-centered, effectiveness, ease of use, performance speed, error prevention, heuristic, and usability. We included studies that measured and reported usability evaluation methods of eHealth HIV-related interventions. We excluded articles based on the following criteria: (1) did not focus on an eHealth intervention; (2) did not focus on HIV; (3) focused on an eHealth HIV intervention without providing information on the usability of the intervention; (4) were systematic reviews, conference posters, or presentations; or (5) were not published in English.

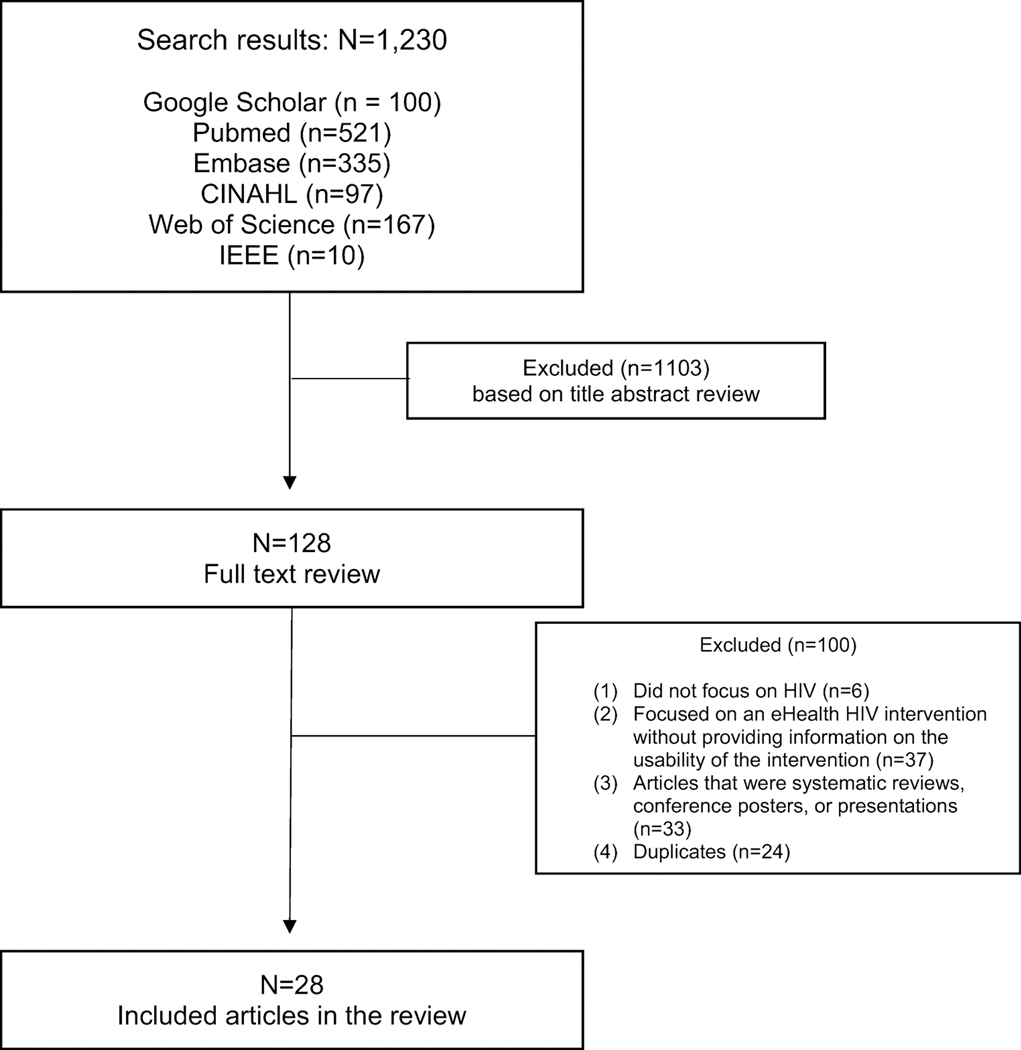

Two authors (RD, JG) divided the online reference databases and conducted the initial title/abstract review. All articles recommended for full-text review were recorded in an MS Excel spreadsheet. The two investigators then independently reviewed the full texts of all 128 articles selected from the title/abstract review (see Figure 1). Any discrepancies regarding article inclusion for the review were discussed by the two investigators until consensus was reached.

Figure 1.

Flowchart of article selection

RESULTS

We located 28 studies that included usability evaluations of eHealth HIV interventions (see Table 1), the majority (71%, n=20) of which were published within the past 3 years. More than half of the studies (57%, n=16) used more than one method of evaluation to assess the usability of the eHealth interventions. Platforms for the delivery of the interventions varied: mobile applications (68%, n=19), websites (25%, n=7), and desktop-based programs (7%, n=2). Two included articles evaluated mVIP, using different usability evaluation methods in each article [17, 18], and two included articles evaluated MyPEEPS Mobile, also using different usability evaluation methods in each article [19, 20].
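The platform percentages reported above follow from simple proportions of the 28 included studies. The short sketch below reproduces that arithmetic; the counts come from the text, while the function and variable names are illustrative assumptions rather than anything used by the review authors.

```python
# Counts taken from the Results text; names below are illustrative only.
TOTAL_STUDIES = 28
platform_counts = {
    "mobile applications": 19,
    "websites": 7,
    "desktop-based programs": 2,
}


def percent_of_total(count: int, total: int = TOTAL_STUDIES) -> int:
    """Return count as a whole-number percentage of total."""
    return round(100 * count / total)


# Percentage of included studies delivered on each platform.
platform_percents = {
    name: percent_of_total(n) for name, n in platform_counts.items()
}

for name, pct in platform_percents.items():
    print(f"{name}: {pct}% (n={platform_counts[name]})")
```

Because every percentage uses the same denominator, the counts should sum to 28, which gives a quick consistency check on the reported figures.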

Table 1.

Studies evaluating eHealth HIV interventions using usability evaluation methods

| First Author & Reference | Title | Year | Country | Intervention Name | Technology | Purpose | Target Population | Usability Evaluation Type(s) | Results |

|---|---|---|---|---|---|---|---|---|---|

| Beauchemin [21] | A multistep usability evaluation of a self-management app to support medication adherence in persons living with HIV | 2019 | USA | WiseApp | Mobile app and electronic pill bottle | ART adherence and health management | PLWH aged 18+ | Cognitive walkthrough, Think-aloud, Heuristic evaluation, Questionnaire (Health-ITUES), Task analysis | Researchers created a mobile app, used in conjunction with an electronic pill bottle, to encourage ART adherence among PLWH. A think-aloud performed by end-users revealed the need to increase the visibility of app components, a finding supported by the heuristic evaluations. A cognitive walkthrough confirmed that the app and pill bottle were usable for end-users. |

| Cho [17] | Understanding the predisposing, enabling, and reinforcing factors influencing the use of a mobile-based HIV management app: A real world usability evaluation | 2018 | USA | mVIP | Mobile app | Health management | PLWH aged 18+ | Focus groups | A mobile app was developed to provide self-care strategies to aid in symptom self-management. Focus groups with end-users revealed the predisposing, enabling, and reinforcing factors that influenced the app’s usability in a real-world setting. |

| Cho [20] | A mobile health intervention for HIV prevention among racially and ethnically diverse young men | 2018 | USA | MyPEEPS Mobile | Mobile app | Risk reduction | Healthy males aged 15–18 | Heuristic evaluation, Think-aloud, Questionnaire (PSSUQ), Scenarios | Researchers translated an HIV-prevention curriculum into a mobile app. The heuristic evaluation revealed that user navigability within the app needed the most refinement. End-users were generally able to complete the use-case scenarios. The end-users’ PSSUQ scores indicated strong user acceptance. |

| Cho [18] | A multi-level usability evaluation of mobile health applications: A case study | 2018 | USA | mVIP | Mobile app | Health management | PLWH aged 18+ | Think-aloud, Heuristic evaluation, Semi-structured interviews, Questionnaire (Health-ITUES), Scenarios, Task analysis, Eye-tracking | A mobile app was developed to aid with self-management of HIV symptoms for PLWH. Researchers employed a three-level usability evaluation. Through this multilevel evaluation, the content, interface, and functionality of the app were iteratively refined at each level. End-users rated the app as highly usable. |

| Cho [19] | Eye-tracking retrospective think-aloud as a novel approach for a usability evaluation | 2019 | USA | MyPEEPS Mobile | Mobile app | Risk reduction | Healthy males aged 15–18 | Eye-tracking, Think-aloud, Questionnaire (Health-ITUES) | Researchers translated an HIV-prevention curriculum into a mobile app. The eye-tracking retrospective think-aloud enabled the researchers to identify critical usability problems and to gain an in-depth understanding of the usability issues related to interactions between end-users and the app. |

| Coppock [22] | Development and usability of a smartphone application for tracking antiretroviral medication refill data for human immunodeficiency virus | 2017 | Botswana | | Mobile app | ART adherence | Pharmacists | Questionnaire (CSUQ), Contextual interview | Researchers developed a pharmacy-based ART adherence mobile app. End-user evaluators provided recommendations such as a feature for rapid adherence calculations and wanted ongoing technical improvements. End-users rated the app as highly usable. |

| Cordova [23] | The usability and acceptability of an adolescent mHealth HIV/STI and drug abuse preventive intervention in primary care | 2018 | USA | Storytelling 4 Empowerment | Mobile app | Risk reduction | Healthy youth aged 13–22 | Focus groups, Semi-structured interviews | A mobile app was adapted from a face-to-face intervention for HIV/STI and drug abuse prevention among adolescents. Qualitative findings indicated favorable usability and acceptability of the app’s format, content, and process. |

| Danielson [24] | SiHLEWeb.com: Development and usability testing of an evidence-based HIV/STI prevention website for female African American adolescents | 2016 | USA | SiHLEWeb.com | Website | Risk reduction | Healthy African American females aged 13–18 | Think-aloud, Questionnaire (WAMMI) | A website was developed for risk-reduction among female African American adolescents. During the evaluation, users reported issues with website navigation. Users found that the intervention was helpful, easy to use, and generally attractive. In addition, it improved knowledge and learning of HIV prevention. |

| Hightow-Weidman [25] | HealthMpowerment.org: Development of a theory-based HIV/STI website for young black MSM | 2011 | USA | HealthMpowerment.org | Website | Risk reduction | Healthy African American males aged 18–30 | Think-aloud | An internet-based intervention was developed with input from young African American men who have sex with men. The evaluations indicated acceptable usability and provided potential innovations to the website. |

| Himelhoch [26] | Pilot feasibility study of Heart2HAART: A smartphone application to assist with adherence among substance users living with HIV | 2017 | USA | Heart2HAART | Mobile app | ART adherence, Substance use treatment | PLWH aged 18–64 | Questionnaire (non-validated instrument) | A mobile app was developed to support ART adherence in conjunction with directly observed treatment. Although the study participants generally found the app usable, some counselors found the participation required on their part burdensome. |

| Horvath [27] | eHealth literacy and intervention tailoring impacts the acceptability of a HIV/STI testing intervention and sexual decision making among young gay and bisexual men | 2017 | USA | Get Connected! | Website | Risk reduction | Healthy males aged 15–24 | Questionnaire (non-validated instrument) | An online intervention was developed to increase HIV testing among YMSM. The study explored the effect of tailoring the intervention to a participant’s background and the effect of eHealth literacy on HIV/STI testing among YMSM and intervention usability. Tailoring and participant health literacy did not have an impact on overall user satisfaction and perceived usefulness, but they did alter perceived quality. |

| Kawakyu [28] | Development and implementation of a mobile phone-based prevention of mother-to-child transmission of HIV cascade analysis tool: Usability and feasibility testing in Kenya and Mozambique | 2019 | Kenya & Mozambique | mPCAT | Mobile app | Risk reduction | Health workers and facility managers | Think-aloud, Focus groups | Researchers created a mobile phone-based PMTCT cascade analysis tool for health workers. Following training on the use of the app, two rounds of usability testing were conducted among facility workers and managers. Health facility workers found that the app was useful and wanted to implement it in their clinics. |

| Luque [29] | Bridging the digital divide in HIV care: a pilot study of an iPod personal health record | 2013 | USA | MyMedical | Mobile app | Health management | PLWH aged 18+ | Questionnaire (non-validated instrument) | Potential users, who also participated in a 6-week self-management training curriculum, evaluated a personal health record (PHR) delivered on an iPod touch. Usability for both the iPod and the PHR was assessed post-training. Both aspects of the intervention were highly rated in terms of usability, but most participants expressed that they did not intend to use the PHR to remind them to take their medications. |

| Mitchell [30] | Smartphone-based contingency management intervention to improve pre-exposure prophylaxis adherence: Pilot trial | 2018 | USA | mSMART | Mobile app | Risk reduction | Healthy males aged 18–30 years | Semi-structured interviews, Questionnaire (SUS) | A mobile app was developed to encourage PrEP adherence among MSM. The intervention received a moderate usability rating, but future studies are recommended due to the sample’s homogeneity. |

| Musiimenta [31] | Acceptability and feasibility of real time antiretroviral therapy and adherence interventions in rural Uganda: Mixed method pilot randomized control trial | 2018 | Uganda | | SMS and electronic pill bottle | ART adherence | PLWH aged 18+ and their social supporters aged 18+ | Semi-structured interviews | The intervention was developed to provide real-time ART adherence monitoring via text messaging and an electronic pill bottle. PLWH and their supporters generally approved of the intervention, but usability was restricted by environmental factors such as shared phones and limited electricity. |

| Sabben [32] | A smartphone game to prevent HIV among young Africans (Tumaini): assessing intervention and study acceptability among adolescents and their parents in an RCT | 2019 | Kenya | Tumaini | Mobile app | Risk reduction | Healthy adolescents aged 11–14 years & their parents | Questionnaire (non-validated instrument), Focus groups | A narrative-based smartphone game was created for risk reduction among healthy adolescents aged 11–14. The eHealth intervention scored high for usability. There is opportunity for improving game mechanics and communication to parents about their role in the intervention. |

| Schnall [33] | Usability evaluation of a prototype mobile app for health management for persons living with HIV | 2016 | USA | | Mobile app | Health management | PLWH aged 18+ | Heuristic evaluation, Questionnaire (PSSUQ), Scenarios, Think-aloud | A mobile app was created to support self-management and improve health outcomes. The usability evaluation process led to 77 intervention changes recommended by the expert evaluators and 83 changes recommended by the end users. |

| Schnall [34] | A user-centered model for designing consumer mobile health application (apps) | 2016 | USA | | Mobile app | Risk reduction | Healthy males aged 13–64 | Heuristic evaluation, Questionnaire (PSSUQ), Scenarios, Think-aloud | A mobile app was developed for risk reduction among high-risk MSM. Usability evaluation methods were used to iteratively develop the mobile app. The study demonstrated the value of the ISR (Information Systems Research) Framework as a method to incorporate end-users’ preferences when creating mHealth interventions. |

| Shegog [35] | “+Click”: pilot of a web-based training program to enhance ART adherence among HIV-positive youth | 2012 | USA | +CLICK | Web-based application | ART adherence | PLWH aged 14–22 | Questionnaire (non-validated instrument) | A web-based application was created for ART adherence among youth PLWH. Evaluation ratings indicated that the intervention was easy to use, trustworthy, and easy to understand. |

| Shegog [36] | “It’s your game”: an innovative multimedia virtual world to prevent HIV/STI and pregnancy in middle school youth | 2007 | USA | It’s Your Game, Keep It Real | Desktop-based curriculum | Risk reduction | Healthy adolescents aged 12–14 | Questionnaire (non-validated instrument) | The multimedia desktop-based HIV/STD and pregnancy prevention intervention for middle school students was highly rated in terms of ease of use, credibility, understandability, and acceptability. |

| Skeels [37] | CARE+ user study: usability and attitudes towards a tablet PC computer counseling tool for HIV+ men and women | 2006 | USA | CARE+ | Desktop-based curriculum | ART adherence | PLWH aged 18+ | Semi-structured interviews, Contextual interview | A computer-counseling tool was created to support ART medication adherence. The study found the intervention was usable by participants of varying experience with computers. Most participants found computer counseling useful, but still wanted to maintain consultations with a human provider. |

| Stonbraker [38] | Usability testing of a mHealth app to support self-management of HIV-associated non-AIDS related symptoms | 2018 | USA | VIP-HANA | Mobile app | Health management | PLWH aged 18+ | Heuristic evaluation, Think-aloud, Scenarios, Questionnaire (Health-ITUES & PSSUQ) | The mobile app was created to support PLWH in managing symptoms associated with HIV. Experts and end-users rated the application as highly usable but gave different feedback regarding essential components for usability. Experts focused more on the app design than end-users did. |

| Sullivan [39] | Usability and acceptability of a mobile comprehensive HIV prevention app for men who have sex with men: A pilot study | 2017 | USA | HealthMindr | Mobile app | Risk reduction | Healthy males aged 18+ | Questionnaire (SUS) | A mobile app was created as a risk reduction intervention for MSM. Participants gave the mobile application an above-average usability rating. Nearly ten percent of the PrEP-eligible participants initiated PrEP over the course of the trial. |

| Vargas [40] | Usability and acceptability of a comprehensive HIV and other sexually transmitted infections prevention app | 2019 | Spain | Preparadxs | Mobile app | Risk reduction | Healthy males and females aged 18+ | Questionnaire (SUS) | The mobile app was created to improve HIV/STI prevention among adults. Evaluators rated the application as having good usability and believed that the app had the potential to reduce future incidence of HIV and other STIs. |

| Widman [41] | ProjectHeartForGirls.com: Development of a web-based HIV/STD prevention program for adolescent girls emphasizing sexual communication skills | 2016 | USA | ProjectHeartForGirls.com | Website | Risk reduction | Healthy females aged 15–19 | Think-aloud | An interactive web-based program was developed for risk reduction among adolescent girls. All recommendations served as part of an iterative process of development and usability testing. |

| Williams [42] | An evaluation of the experiences of rural MSM who assess an online HIV/AIDS health promotion intervention | 2010 | USA | HOPE project | Website | Risk reduction | Healthy males aged 18+ | Questionnaire (non-validated instrument) | A website was created for risk reduction among MSM residing in rural areas. Participants who completed the intervention found it easy to navigate and access the intervention from home. Perceived usefulness increased from the first to the last module. |

| Winstead-Derlega [43] | A pilot study of delivering peer health messages in a HIV clinic via mobile media | 2012 | USA | | Mobile app | Health management | PLWH aged 18+ | Questionnaire (non-validated instrument) | A mobile app consisting of peer health videos regarding health management was developed for PLWH. Participants found the intervention acceptable in a clinic setting and found the app easy to use. After the intervention, there were no significant changes in participant perception regarding engagement in care or HIV status disclosure. |

| Ybarra [44] | Usability and navigability of an HIV/AIDS internet intervention for adolescents in a resource limited setting | 2012 | Uganda | CyberSenga | Website | Risk reduction | Healthy adolescents | Think-aloud, Focus groups, Contextual interview | A website was created for risk reduction among secondary school students in Uganda. Participants were most interested in the interactive activities. From the field-testing, researchers found that those wanting to implement this intervention should consider user preparedness, user literacy, a source of internet connection, bandwidth, and electricity. |

ART: Antiretroviral Therapy; CSUQ: Computer System Usability Questionnaire; Health-ITUES: Health Information Technology (IT) Usability Evaluation Scale; HIV: Human Immunodeficiency Virus; MSM: Men who have Sex with Men; PLWH: People living with HIV; PMTCT: Prevention of mother-to-child transmission; PSSUQ: Post-Study System Usability Questionnaire; SMS: Short message service; STI: Sexually Transmitted Infections; SUS: System Usability Scale; WAMMI: Website Analysis and MeasureMent Inventory; YMSM: Young Men who have Sex with Men

The target populations for the eHealth interventions included healthy youth participants (39%, n=11), people living with HIV (39%, n=11), healthy adults, including men who have sex with men (MSM) (21%, n=6), and health professionals (7%, n=2). The eHealth interventions also focused on a variety of topics including HIV prevention (54%, n=15), ART medication adherence (21%, n=6), and health management for PLWH (21%, n=6).

Our findings are organized by usability evaluation methods. The methodological approach is detailed in Table 2. The narrative describes how each study operationalized the usability evaluation method.

Table 2.

Overview of Usability Evaluation Methods

| Usability Evaluation Method | Description | Strengths | Limitations |

|---|---|---|---|

| Eye-tracking [45] | Eye-tracking involves a device recording the motion of the eye as a participant views the eHealth intervention. The device traces the pupil movement within the eye and determines the direction and focus of the participant’s gaze. | This method can provide information about the duration of the participant’s focus on a spot, movement of the participant’s focus, specific items on the screen that draw attention, and the participant’s navigation of the page. With this method, researchers can compare patterns of eye movements among participants. | This method cannot reveal the intent behind a participant’s gaze. Eye-tracking cannot capture peripheral vision, so researchers cannot be certain that the participant did not see an item outside the immediate scope of vision. It can be expensive, as software is required to track eye motion and specialists may be required to conduct eye-tracking sessions and interpret the results. |

| Questionnaires [46] | A questionnaire involves participants scoring items using a predetermined scale. There are numerous standardized and validated questionnaires to quantitatively evaluate usability of eHealth interventions. | Validated questionnaires can be quick and easy to administer to participants. They can differentiate between usable and unusable systems. | Questionnaires cannot identify which components of the technology need to be fixed, and the scoring systems for questionnaires can be complex. |

| Semi-structured Interviews [47, 48] | Semi-structured interviews involve a face-to-face interview focusing on usability-related topics such as functionality, navigability, and ease of use of the eHealth intervention. | These interviews are useful for gathering information regarding an individual’s attitudes, beliefs, practices, and experiences. The confidential setting of an interview allows the interviewer to ask sensitive questions about social or personal experiences. | This approach requires preparation time to design questions and probes and may incur costs to train the interviewer. |

| Contextual Interview [45] | A contextual interview is a usability assessment that involves observation of end-users as they work with the eHealth program in their own environment. | This method allows researchers to identify issues that users are facing and learn more about the setting of the user environment; researchers are able to ascertain the speed of the internet connection, the layout of the space, and what additional resources are needed for optimal use of the eHealth program. | Researchers need to travel to the user environment to conduct the contextual interview. This method provides data based only on researcher observations. |

| Think-aloud [49, 15] | The think-aloud method is a usability assessment that gathers information on functionality, navigability, and ease of use while end-users interact with the eHealth intervention technology. End-users and/or experts (as part of a heuristic evaluation) express their thoughts and questions in real time as they perform tasks, allowing the observers to see the cognitive processes associated with task completion. During the think-aloud, trained research staff usually use software to record the end-user’s responses, including verbal comments and physical responses such as eye movements. | This method provides insight into usability flaws by identifying actual problems encountered by end-users and the causes underlying the encountered problems. | This can be an expensive evaluation method because recording software is involved. In addition, this method can only reveal usability problems of the specific tasks given to end-users. |

| Cognitive Walkthrough [50, 49] | A cognitive walkthrough determines whether the end-user’s background knowledge and the technological cues embedded in the computer system interface are enough to facilitate successful completion of a task. This method evaluates the learnability of the eHealth tool. The method involves a monitored task simulation with the end-user going through the sequence of actions necessary to complete a task. | A cognitive walkthrough focuses on cognitive actions such as identifying icons and behavioral or physical actions such as mouse clicks needed for task completion. This method can identify usability problems that can hinder completion of a task and is especially useful when developing technology for populations with low literacy. | This approach requires intensive preparation time to detail the correct sequence of actions needed to complete a task. These written sequences may limit the usability evaluation of the whole eHealth system. |

| Heuristic Evaluation & Expert Review [49, 15] | A heuristic evaluation is a method in which a small group of experts evaluates the user interface design against a list of usability principles. The heuristic principles include the following: (1) simple to use and natural dialogue, (2) speak the end-user’s language, (3) minimize end-user’s memory load, (4) be consistent, (5) provide feedback, (6) provide clearly marked exits, (7) provide shortcuts, (8) provide good error messages, (9) prevent errors, and (10) provide help and documentation. The purpose of the heuristic evaluation is to uncover usability problems of the eHealth technology by identifying unmet usability principles. The think-aloud method, scenarios, and cognitive walkthrough may be used with experts as part of the heuristic evaluation. | This method can provide quick feedback, and experts can provide suggestions to correct any problems related to usability. | Multiple experts may be hard to recruit and potentially expensive. There may be a lack of consistency or overlap in detected usability problems between experts. |

| Focus Group Discussion [45] | Focus groups are moderated discussions on a range of preset topics with approximately 5 to 10 participants from the target population. Care should be taken to recruit participants who are representative of the target population, considering specific traits such as age, experience, gender, and education. | Focus groups are a way to assess attitudes, beliefs, knowledge, practices, desires, and reactions to topics. | The content from the focus group discussions cannot be verified. In addition, the group setting may influence the way that respondents speak about topics. |

| Scenario [45] | Scenarios are used to situate tasks in realistic settings for the use of an eHealth program. The scenarios contain key tasks to provide representative data on usability of the system. These tasks should encourage an action without providing instructions on how to use the interface. | This method shows how the end-user accomplishes a task and gives information on whether the interface facilitates completing the scenario. | Poorly written scenarios can lead to poor usability data. This method requires a trained facilitator and intensive preparation time to create the scenarios. Scenarios must be used in the context of the think-aloud method and heuristic evaluation to obtain feedback from both end-users and experts. |

Eye-tracking

Cho and colleagues used eye-tracking to evaluate the usability of mVIP, a health management mobile app. Gaze plots illustrating participants’ eye movements were reviewed along with notes of critical incidents during task completion. Participant difficulty with a task in the app was characterized by long eye fixations or distractive eye movements. For further insight into the unusual eye movements, a retrospective think-aloud protocol was conducted in which participants watched the recording of their task performance and verbalized their thoughts. This combination of methods allowed Cho and colleagues to decipher eye movements and further understand participants’ expectations of where information should be in the app. For example, one identified usability problem was the placement of the ‘Continue’ button in the app when displayed on a mobile device. Due to the small screen of a mobile device, participants had to scroll down to find the ‘Continue’ button. To resolve the placement issue, Cho and colleagues transitioned the mVIP app from a native app to a mobile responsive web-app [18].

In another study, Cho and colleagues evaluated the MyPEEPS Mobile intervention using eye-tracking and a retrospective think-aloud. The combination of the two methods allowed for the identification of critical errors with the system and the time spent on each task. By analyzing participant fixations on the problem areas of the app, the study team was able to identify critical usability problems [19].
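As an illustration of how long fixations might be used to flag problem areas, the sketch below counts fixations that meet or exceed a duration threshold, grouped by task and screen area. It is not the procedure used in the reviewed studies: the `Fixation` record layout, the 1000 ms threshold, and the sample values are all hypothetical assumptions chosen for the example.

```python
from collections import Counter
from typing import Iterable, NamedTuple


class Fixation(NamedTuple):
    """One fixation exported from an eye tracker (hypothetical layout)."""
    task: str
    area: str
    duration_ms: float


def count_long_fixations(
    fixations: Iterable[Fixation], threshold_ms: float = 1000.0
) -> Counter:
    """Count fixations at or above threshold_ms per (task, area) pair.

    The default threshold is an assumption for illustration, not a
    validated cut-off for "long" fixations.
    """
    return Counter(
        (f.task, f.area) for f in fixations if f.duration_ms >= threshold_ms
    )


# Made-up sample data, for demonstration only.
sample_fixations = [
    Fixation("Log symptoms", "Continue button", 1450.0),
    Fixation("Log symptoms", "Continue button", 1200.0),
    Fixation("Log symptoms", "Symptom list", 300.0),
    Fixation("View strategies", "Menu", 450.0),
]

long_counts = count_long_fixations(sample_fixations)
for (task, area), n in long_counts.most_common():
    print(f"{task} / {area}: {n} long fixation(s)")
```

Areas with repeated long fixations would then be candidates for follow-up in the retrospective think-aloud, where participants can explain what they were looking for.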

Questionnaires

The majority of studies (68%, n=19) included questionnaires as part of their usability evaluation of the eHealth intervention [26, 27, 29, 35, 36, 39, 40, 42, 43, 21, 20, 18, 38, 22, 24, 30, 33, 34, 19]. The complete list of validated questionnaires is described in Table 3. Among the studies that utilized only a single usability assessment (32%, n=9), a questionnaire was always used [26, 27, 29, 35, 36, 39, 40, 42, 43]. Many different questionnaires were used, including the Health Information Technology Usability Evaluation Scale (Health-ITUES) [21, 20, 18, 38, 19], the Computer System Usability Questionnaire (CSUQ) [22], the Website Analysis and Measurement Inventory (WAMMI) [24], the System Usability Scale (SUS) [39, 30, 40], and the Post-Study System Usability Questionnaire (PSSUQ) [38, 33, 34, 20]. Notably, a study by Stonbraker and colleagues used two different questionnaires, Health-ITUES and PSSUQ, among end-users in combination with heuristic evaluation, think-aloud, and scenario methods to evaluate the Video Information Provider-HIV-associated non-AIDS (VIP-HANA) app. This combination of methods provided feedback on overall usability. The end-users rated the app with high usability scores on both questionnaires [38].

Table 3.

Types of validated questionnaires commonly used to evaluate usability of eHealth interventions

| Questionnaire | Description | Benefits and challenges |
|---|---|---|
| System Usability Scale (SUS) [51] | A widely used and quick usability assessment scale. Participants are asked to score 10 items with a five-point scale ranging from strongly agree to strongly disagree. | The overall score can be complex to interpret. The scale is meant to give a quick, rough estimate of perceived usability. |
| Health Information Technology Usability Evaluation Scale (Health-ITUES) [52] | A customizable usability assessment instrument in which participants are asked to score 20 items with a five-point scale ranging from strongly disagree to strongly agree. The questionnaire comprises four subscales: (1) quality of work life, (2) perceived usefulness, (3) perceived ease of use, and (4) user control. | This questionnaire is customizable to the health system and the needs of a study. However, it is one of the longer questionnaires to administer. |
| Post-Study System Usability Questionnaire (PSSUQ) [53] | The PSSUQ consists of 19 items rated on a seven-point scale ranging from strongly agree to strongly disagree and includes an additional option for “not applicable” (N/A). The questionnaire addresses the following components of usability: quick completion of work, ease of learning, high-quality documentation, high-quality online information, functional adequacy, rapid acquisition of usability experts, and rapid acquisition of several different user groups. | The seven-point scale allows end-users to give a more nuanced response than a five-point scale. |
| Website Analysis and Measurement (WAMMI) [46] | The WAMMI questionnaire uses a standardized 20-item assessment to evaluate user experience and user satisfaction. Participants rate items on a five-point scale from strongly agree to strongly disagree. The items are scored to produce five subscales measuring attractiveness, controllability, efficiency, helpfulness, and learnability of the website. | This instrument is specifically recommended for use with websites and is one of the longer assessment tools available. |
| IBM Computer System Usability Questionnaire (CSUQ) [54] | A scenario-based psychometric questionnaire developed to assess subjective usability and end-users’ reactions to the eHealth intervention. Participants rate items on a Likert scale ranging from 1 to 7. | The seven-point scale allows end-users to give a more nuanced response than a five-point scale. This instrument can be used within scenario-based usability testing. |
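
The SUS entry in Table 3 notes that the overall score can be complex to interpret. As an illustration only, the following Python sketch applies the conventional SUS scoring rule: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a score from 0 to 100. It assumes responses are coded from 1 (strongly disagree) to 5 (strongly agree) and listed in questionnaire order. The function name `sus_score` and the example responses are hypothetical and are not taken from any of the reviewed studies.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Return the 0-100 SUS score for one participant's ten item responses.

    Assumption: responses are coded 1 = strongly disagree to
    5 = strongly agree and are given in questionnaire order.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            # Odd-numbered items are positively worded: response - 1.
            total += response - 1
        else:
            # Even-numbered items are negatively worded: 5 - response.
            total += 5 - response

    # The summed contributions (0 to 40) are rescaled to 0 to 100.
    return total * 2.5


# Hypothetical responses from a single participant (illustrative only).
example_responses = [4, 2, 5, 1, 4, 2, 4, 2, 5, 1]
print(sus_score(example_responses))  # 85.0
```

Because the result is a rescaled sum rather than a percentage, a single SUS value is best read alongside qualitative findings, which is consistent with the table's description of the scale as a quick, rough estimate.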

Semi-structured Interviews

Semi-structured interviews were conducted by 18% (n=5) of the included studies [22, 24, 31, 25, 42]. Interviews were conducted to evaluate a variety of technological platforms including mobile applications, websites, and a desktop-based curriculum. This usability evaluation method was primarily conducted with end-users [22, 24, 31, 42]. One unique study by Musiimenta and colleagues conducted semi-structured interviews to evaluate an SMS reminder intervention with both study participants and social supporters encouraging ART adherence [25]. This method provided in-depth details of an end-user’s experience with the intervention. One participant reported feeling motivated by the text message notifications: “I also like it [SMS notification] because when I have many people reminding me it gives great strength. My sister calls me when she receives an SMS reminder and asks why I didn’t swallow.” Findings from the semi-structured interviews led to the conclusion that the eHealth intervention was generally acceptable and feasible in a resource-limited country.

Contextual Interviews

Contextual interviews were conducted in only three studies [44, 37, 22]. This method was used with end-users in all three studies. Two of these studies were conducted in a low-resource setting [44, 22]. Coppock and colleagues conducted two rounds of contextual interviews to observe pharmacists using a mobile application during clinical sessions [22]. Ybarra and colleagues used this method to evaluate a website targeting risk reduction among adolescents [44]. Skeels and colleagues observed end-users working through CARE+, a tablet-based application [37].

Think-Aloud

The think-aloud method was used by 43% of studies (n=12) [38, 21, 20, 18, 24, 25, 28, 33, 34, 41, 44, 19]. This method was used to evaluate the usability of websites and mobile applications. The study by Beauchemin and colleagues used the think-aloud method to evaluate a mobile app linked to an electronic pill bottle [21]. All studies conducted the think-aloud protocol among end-users. Five studies conducted the method with both end-users and experts.

Cognitive Walkthrough

One study by Beauchemin and colleagues conducted a cognitive walkthrough in combination with a think-aloud and heuristic evaluation to assess the usability of the WiseApp, a health management mobile application linked to an electronic pill bottle [21]. Of the 31 tasks in total, 61% were easy to complete, requiring fewer than two steps on average. The more difficult tasks involved finding a specific item within the mobile application. For example, participants reported that the “To-Do” list was hard to locate on the home screen. This feedback was incorporated into iterative updates to the app and into onboarding procedures for future end-users.

Heuristic Evaluation and Expert Reviews

Multiple studies (21%, n=6) conducted a heuristic evaluation with experts in combination with other usability evaluation methods [21, 18, 33, 34, 38, 20]. All of these studies used the heuristic evaluation to assess the usability of mobile applications. The majority used a think-aloud protocol with experts as they completed tasks using the eHealth program, and these sessions were conducted with five experts. The resulting feedback focused mainly on interface design, navigability, and functionality issues, along with expert recommendations for resolving them.

Focus groups

Focus groups were conducted by 18% (n=5) of all included studies [17, 23, 32, 28, 44]. Four studies evaluated mobile applications [17, 23, 32, 28] and one study evaluated a website [44]. The studies conducted between two and four focus groups, each with 5 to 12 participants. Sabben and colleagues conducted focus groups with participants and their parents to evaluate a risk reduction mobile application for healthy adolescents [32]. Parents were divided into focus groups by the age of their children [32]. The focus groups revealed positive feedback and acceptability among participants and no safety concerns about the application among parents.

Scenarios

Five studies used scenarios to evaluate the usability of mobile applications with end-users and experts [20, 18, 33, 34, 38]. These studies employed case scenarios that reflected the main functions of the system and used the same scenarios for both end-users and experts. This evaluation method was consistently used alongside heuristic evaluation and think-aloud methods to obtain qualitative data on usability from experts and end-users. Scenarios cannot be paired with methods that do not involve direct interaction with the system, such as a questionnaire or focus group discussion that takes place after the system has been used.

DISCUSSION

This paper provides a broad overview of some of the most frequently employed usability evaluation methods. This compilation of methods can be considered by others in the future development of eHealth interventions. Most of the studies used multiple usability evaluation methods to evaluate eHealth HIV interventions. Questionnaires were the most frequently used method of usability evaluation. In cases where only one usability evaluation was conducted, the questionnaire was the preferred method.

Questionnaires can be quick and cost-effective tools to quantitatively assess one or two aspects of usability and therefore are frequently used. However, they cannot provide a comprehensive evaluation of usability issues and instead simply provide a score to indicate the level of usability of an eHealth tool. Therefore, both quantitative and qualitative methods are recommended for evaluating complex interventions, such as eHealth interventions targeting HIV [55]. Questionnaires should be used in conjunction with other validated methods, such as a cognitive walkthrough, as part of a multistep process to evaluate usability. If questionnaires are used alone, overall usability can be determined, but it is nearly impossible to identify the specific issues in the technology that need to be changed in response.

The cognitive walkthrough was an underutilized evaluation method among the studies in our review. This method specifically evaluates end-user learnability and ease of use through a series of tasks performed using the system. It can pinpoint challenging tasks or complicated features associated with an eHealth intervention [21, 50, 49].

Future research should consider incorporating multiple methods as part of their overall usability evaluation of eHealth interventions.

When multiple usability evaluation methods are used, the results may vary across methods. One study by Beauchemin and colleagues administered the Health-ITUES questionnaire to both end-users and experts to evaluate WiseApp, a mobile application linked to an electronic pill bottle [21]. Compared to end-users, the experts gave the eHealth intervention a lower score and emphasized design issues [20]. The authors then used a think-aloud method and cognitive walkthrough for further clarification on the cited issues [21].

Another study by Stonbraker and colleagues assessed the usability of the VIP-HANA app, a mobile application targeting symptom management for PLWH, with both end-users and experts. The researchers used multiple usability evaluation methods including heuristic evaluation, think-aloud, scenarios, and two questionnaires. The heuristic evaluation with experts indicated that there were design issues and that the areas needing the most improvement were navigation between sections in the app and the addition of a help feature. In contrast, end-users did not comment on the lack of a back button. Further, end-users indicated that app features needed to be more clearly marked rather than specifying a need for a help feature. The combination of multiple usability methods allowed for detailed identification of usability concerns, and the researchers were able to refine the app to make it more usable while reconciling the experts’ and end-users’ feedback [33].

Several limitations should be considered when reading this review. Comprehensive search strategies were built under the guidance of an informationist. However, the results from the search strategies may not include all eligible studies. In addition, publication bias should be considered, as we may have missed relevant unpublished work.

Conclusions:

In summary, this paper provides a review of the usability evaluation methods employed in the assessment of eHealth HIV interventions. eHealth is a growing platform for delivery of HIV interventions and there is a need to critically evaluate the usability of these tools before deployment. Each usability evaluation method has its own set of advantages and disadvantages. Cognitive walkthroughs and eye-tracking are underutilized usability evaluation methods. Both are useful approaches that provide detailed information on usability violations and guidance on key factors that need to be fixed to ensure the efficacious use of eHealth tools. Further, given the limitations of any one usability evaluation method, technology developers will likely need to employ multiple usability evaluation methods to gain a comprehensive understanding of the usability of an eHealth tool.

Human and Animal Rights

All reported studies/experiments with human or animal subjects performed by authors have been previously published and complied with all applicable ethical standards (including Helsinki declaration and its amendments, institutional/national research committee standards, and international/national/institutional guidelines).

Acknowledgements

We would like to acknowledge the contributions of John Usseglio, an Informationist at Columbia University Irving Medical Center. Mr. Usseglio provided his expertise on constructing comprehensive search strategies for this review. RD is funded by the Mervyn W. Susser Post-doctoral Fellowship Program at the Gertrude H. Sergievsky Center. RS is supported by the National Institute of Nursing Research of the National Institutes of Health under award number K24NR018621. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Footnotes

Conflict of Interest

Rindcy Davis, Jessica Gardner and Rebecca Schnall declare that they have no conflict of interest.

Publisher's Disclaimer: This Author Accepted Manuscript is a PDF file of an unedited peer-reviewed manuscript that has been accepted for publication but has not been copyedited or corrected. The official version of record that is published in the journal is kept up to date and so may therefore differ from this version.

REFERENCES

- 1. Hollander R. Two-thirds of the world’s population are now connected by mobile devices. Business Insider. 2017.

- 2. Holst A. Number of smartphone users worldwide 2014–2020. Statista, Available at: https://www.statista.com/statistics/330695/number …; 2019.

- 3. Center PR. Communications technology in emerging and developing nations. Pew Research Center. 2015.

- 4. Akhlaq A, McKinstry B, Muhammad KB, Sheikh A. Barriers and facilitators to health information exchange in low-and middle-income country settings: a systematic review. Health policy and planning. 2016;31(9):1310–25.

- 5. Eysenbach G. What is e-health? J Med Internet Res. 2001;3(2):e20. doi: 10.2196/jmir.3.2.e20.

- 6. Page TF, Horvath KJ, Danilenko GP, Williams M. A cost analysis of an internet based medication adherence intervention for people living with HIV. Journal of acquired immune deficiency syndromes (1999). 2012;60(1):1.

- 7. Patel AR, Kessler J, Braithwaite RS, Nucifora KA, Thirumurthy H, Zhou Q et al. Economic evaluation of mobile phone text message interventions to improve adherence to HIV therapy in Kenya. Medicine. 2017;96(7).

- 8. Schnall R, Travers J, Rojas M, Carballo-Diéguez A. eHealth interventions for HIV prevention in high-risk men who have sex with men: a systematic review. Journal of medical Internet research. 2014;16(5):e134.

- 9. Noar SM, Willoughby JF. eHealth interventions for HIV prevention. AIDS care. 2012;24(8):945–52.

- 10. Chaiyachati KH, Ogbuoji O, Price M, Suthar AB, Negussie EK, Bärnighausen T. Interventions to improve adherence to antiretroviral therapy: a rapid systematic review. Aids. 2014;28:S187–S204.

- 11. Henny KD, Wilkes AL, McDonald CM, Denson DJ, Neumann MS. A rapid review of eHealth interventions addressing the continuum of HIV care (2007–2017). AIDS and Behavior. 2018;22(1):43–63.

- 12. Bevan N, Carter J, Earthy J, Geis T, Harker S, editors. New ISO Standards for Usability, Usability Reports and Usability Measures. Human-Computer Interaction Theory, Design, Development and Practice; 2016; Cham: Springer International Publishing.

- 13. Seffah A, Kececi N, Donyaee M, editors. QUIM: a framework for quantifying usability metrics in software quality models. Proceedings Second Asia-Pacific Conference on Quality Software; 2001: IEEE.

- 14. Sheehan B, Lee Y, Rodriguez M, Tiase V, Schnall R. A comparison of usability factors of four mobile devices for accessing healthcare information by adolescents. Applied clinical informatics. 2012;3(04):356–66.

- 15. Yen P-Y, Bakken S. Review of health information technology usability study methodologies. Journal of the American Medical Informatics Association. 2012;19(3):413–22.

- 16. Kaufman D, Roberts WD, Merrill J, Lai T-Y, Bakken S. Applying an evaluation framework for health information system design, development, and implementation. Nursing research. 2006;55(2):S37–S42.

- 17. Cho H, Porras T, Baik D, Beauchemin M, Schnall R. Understanding the predisposing, enabling, and reinforcing factors influencing the use of a mobile-based HIV management app: A real-world usability evaluation. International journal of medical informatics. 2018;117:88–95.

- 18. Cho H, Yen P-Y, Dowding D, Merrill JA, Schnall R. A multi-level usability evaluation of mobile health applications: A case study. Journal of biomedical informatics. 2018;86:79–89. • This paper provides an overview of the combination of multiple usability methods to develop a self-management app.

- 19. Cho H, Powell D, Pichon A, Kuhns LM, Garofalo R, Schnall R. Eye-tracking retrospective think-aloud as a novel approach for a usability evaluation. International journal of medical informatics. 2019;129:366–73. doi: 10.1016/j.ijmedinf.2019.07.010.

- 20. Cho H, Powell D, Pichon A, Thai J, Bruce J, Kuhns LM et al. A mobile health intervention for HIV prevention among racially and ethnically diverse young men: usability evaluation. JMIR mHealth and uHealth. 2018;6(9):e11450.

- 21. Beauchemin M, Gradilla M, Baik D, Cho H, Schnall R. A Multi-step Usability Evaluation of a Self-Management App to Support Medication Adherence in Persons Living with HIV. International Journal of Medical Informatics. 2019;122:37–44. doi: 10.1016/j.ijmedinf.2018.11.012. • This article describes the use of a cognitive walkthrough for evaluating the usability of an HIV prevention intervention. Cognitive walkthrough is a rarely used evaluation method but is very useful for pinpointing challenging tasks or complicated features associated with an eHealth intervention.

- 22. Coppock D, Zambo D, Moyo D, Tanthuma G, Chapman J, Re III VL et al. Development and usability of a smartphone application for tracking antiretroviral medication refill data for human immunodeficiency virus. Methods of information in medicine. 2017;56(05):351–9.

- 23. Cordova D, Alers-Rojas F, Lua FM, Bauermeister J, Nurenberg R, Ovadje L et al. The usability and acceptability of an adolescent mHealth HIV/STI and drug abuse preventive intervention in primary care. Behavioral Medicine. 2018;44(1):36–47.

- 24. Danielson CK, McCauley JL, Gros KS, Jones AM, Barr SC, Borkman AL et al. SiHLEWeb.com: Development and usability testing of an evidence-based HIV prevention website for female African-American adolescents. Health informatics journal. 2016;22(2):194–208.

- 25. Hightow-Weidman LB, Fowler B, Kibe J, McCoy R, Pike E, Calabria M et al. HealthMpowerment.org: development of a theory-based HIV/STI website for young black MSM. AIDS Educ Prev. 2011;23(1):1–12. doi: 10.1521/aeap.2011.23.1.1.

- 26. Himelhoch S, Kreyenbuhl J, Palmer-Bacon J, Chu M, Brown C, Potts W. Pilot feasibility study of Heart2HAART: a smartphone application to assist with adherence among substance users living with HIV. AIDS care. 2017;29(7):898–904. doi: 10.1080/09540121.2016.1259454.

- 27. Horvath KJ, Bauermeister JA. eHealth Literacy and Intervention Tailoring Impacts the Acceptability of a HIV/STI Testing Intervention and Sexual Decision Making Among Young Gay and Bisexual Men. AIDS Educ Prev. 2017;29(1):14–23. doi: 10.1521/aeap.2017.29.1.14.

- 28. Kawakyu N, Nduati R, Munguambe K, Coutinho J, Mburu N, DeCastro G et al. Development and Implementation of a Mobile Phone-Based Prevention of Mother-To-Child Transmission of HIV Cascade Analysis Tool: Usability and Feasibility Testing in Kenya and Mozambique. JMIR mHealth and uHealth. 2019;7(5):e13963. doi: 10.2196/13963.

- 29. Luque AE, Corales R, Fowler RJ, DiMarco J, van Keken A, Winters P et al. Bridging the digital divide in HIV care: a pilot study of an iPod personal health record. J Int Assoc Provid AIDS Care. 2013;12(2):117–21. doi: 10.1177/1545109712457712.

- 30. Mitchell JT, LeGrand S, Hightow-Weidman LB, McKellar MS, Kashuba AD, Cottrell M et al. Smartphone-Based Contingency Management Intervention to Improve Pre-Exposure Prophylaxis Adherence: Pilot Trial. JMIR mHealth and uHealth. 2018;6(9):e10456. doi: 10.2196/10456.

- 31. Musiimenta A, Atukunda EC, Tumuhimbise W, Pisarski EE, Tam M, Wyatt MA et al. Acceptability and Feasibility of Real-Time Antiretroviral Therapy Adherence Interventions in Rural Uganda: Mixed-Method Pilot Randomized Controlled Trial. JMIR mHealth and uHealth. 2018;6(5):e122. doi: 10.2196/mhealth.9031.

- 32. Sabben G, Mudhune V, Ondeng’e K, Odero I, Ndivo R, Akelo V et al. A Smartphone Game to Prevent HIV Among Young Africans (Tumaini): Assessing Intervention and Study Acceptability Among Adolescents and Their Parents in a Randomized Controlled Trial. JMIR mHealth and uHealth. 2019;7(5):e13049. doi: 10.2196/13049.

- 33. Schnall R, Bakken S, Brown III W, Carballo-Dieguez A, Iribarren S. Usability Evaluation of a Prototype Mobile App for Health Management for Persons Living with HIV. Studies in health technology and informatics. 2016;225:481–5.

- 34. Schnall R, Rojas M, Bakken S, Brown W, Carballo-Dieguez A, Carry M et al. A user-centered model for designing consumer mobile health (mHealth) applications (apps). Journal of biomedical informatics. 2016;60:243–51. doi: 10.1016/j.jbi.2016.02.002.

- 35. Shegog R, Markham CM, Leonard AD, Bui TC, Paul ME. “+CLICK”: pilot of a web-based training program to enhance ART adherence among HIV-positive youth. AIDS care. 2012;24(3):310–8. doi: 10.1080/09540121.2011.608788.

- 36. Shegog R, Markham C, Peskin M, Dancel M, Coton C, Tortolero S, editors. ‘It’s Your Game’: An Innovative Multimedia Virtual World to Prevent HIV/STI and Pregnancy in Middle School Youth. Medinfo 2007: Proceedings of the 12th World Congress on Health (Medical) Informatics; Building Sustainable Health Systems; 2007: IOS Press.

- 37. Skeels MM, Kurth A, Clausen M, Severynen A, Garcia-Smith H, editors. CARE+ user study: usability and attitudes towards a tablet pc computer counseling tool for HIV+ men and women. AMIA Annual Symposium Proceedings; 2006: American Medical Informatics Association.

- 38. Stonbraker S, Cho H, Hermosi G, Pichon A, Schnall R. Usability testing of a mhealth app to support self-management of HIV-associated non-AIDS Related Symptoms. Studies in health technology and informatics. 2018;250:106.

- 39. Sullivan PS, Driggers R, Stekler JD, Siegler A, Goldenberg T, McDougal SJ et al. Usability and acceptability of a mobile comprehensive HIV prevention app for men who have sex with men: a pilot study. JMIR mHealth and uHealth. 2017;5(3):e26.

- 40. Rodríguez Vargas B, Sánchez-Rubio Ferrández J, Garrido Fuentes J, Velayos R, Morillo Verdugo R, Sala Piñol F et al. Usability and Acceptability of a Comprehensive HIV and Other Sexually Transmitted Infections Prevention App. J Med Syst. 2019;43(6):175. doi: 10.1007/s10916-019-1323-4.

- 41. Widman L, Golin CE, Kamke K, Massey J, Prinstein MJ. Feasibility and acceptability of a web-based HIV/STD prevention program for adolescent girls targeting sexual communication skills. Health Educ Res. 2017;32(4):343–52. doi: 10.1093/her/cyx048.

- 42. Williams M, Bowen A, Ei S. An evaluation of the experiences of rural MSM who accessed an online HIV/AIDS health promotion intervention. Health Promot Pract. 2010;11(4):474–82. doi: 10.1177/1524839908324783.

- 43. Winstead-Derlega C, Rafaly M, Delgado S, Freeman J, Cutitta K, Miles T et al. A pilot study of delivering peer health messages in an HIV clinic via mobile media. Telemedicine and e-Health. 2012;18(6):464–9.

- 44. Ybarra M, Biringi R, Prescott T, Bull SS. Usability and navigability of an HIV/AIDS internet intervention for adolescents in a resource limited setting. Computers, informatics, nursing: CIN. 2012;30(11):587.

- 45. US Department of Health Human Services. Usability testing. 2012. www.usability.gov/how-to-and-tools/methods/usability-testing.html [accessed 2017-12-29][WebCite Cache ID 6w4kyZEBQ].

- 46. Gruenstein A, McGraw I, Badr I, editors. The WAMI toolkit for developing, deploying, and evaluating web-accessible multimodal interfaces. Proceedings of the 10th international conference on Multimodal interfaces; 2008.

- 47. Pope C, Mays N. Reaching the parts other methods cannot reach: an introduction to qualitative methods in health and health services research. BMJ (Clinical research ed). 1995;311(6996):42–5. doi: 10.1136/bmj.311.6996.42.

- 48. DiCicco-Bloom B, Crabtree BF. The qualitative research interview. Medical Education. 2006;40(4):314–21. doi: 10.1111/j.1365-2929.2006.02418.x.

- 49. Jaspers MW. A comparison of usability methods for testing interactive health technologies: methodological aspects and empirical evidence. International journal of medical informatics. 2009;78(5):340–53.

- 50. Kaufman DR, Patel VL, Hilliman C, Morin PC, Pevzner J, Weinstock RS et al. Usability in the real world: assessing medical information technologies in patients’ homes. Journal of biomedical informatics. 2003;36(1–2):45–60.

- 51. Lewis JR, Sauro J, editors. The factor structure of the system usability scale. International conference on human centered design; 2009: Springer.

- 52. Schnall R, Cho H, Liu J. Health Information Technology Usability Evaluation Scale (Health-ITUES) for usability assessment of mobile health technology: validation study. JMIR mHealth and uHealth. 2018;6(1):e4. •• This study validates the use of a customizable usability questionnaire for assessing the usability of mobile technology.

- 53. Lewis JR. Psychometric evaluation of the PSSUQ using data from five years of usability studies. International Journal of Human-Computer Interaction. 2002;14(3–4):463–88.

- 54. Lewis JR. IBM computer usability satisfaction questionnaires: psychometric evaluation and instructions for use. International Journal of Human-Computer Interaction. 1995;7(1):57–78.

- 55. Campbell M, Fitzpatrick R, Haines A, Kinmonth AL, Sandercock P, Spiegelhalter D et al. Framework for design and evaluation of complex interventions to improve health. Bmj. 2000;321(7262):694–6.