Abstract

Intervention effects observed in efficacy trials are rarely seen when programs are broadly disseminated, underscoring the need to better understand factors influencing fidelity. The Michigan Model for Health™ (MMH) is an evidence-based health curriculum disseminated in schools throughout Michigan that is widely adopted but delivered with limited fidelity. Understanding implementation determinants and how they influence fidelity is essential to achieving desired implementation and behavioral outcomes.

This study surveyed health teachers throughout Michigan (n=171) on MMH implementation, guided by the Consolidated Framework for Implementation Research. We investigated relationships between context, intervention, and provider factors and dose delivered (i.e., the proportion of the curriculum delivered by teachers), a dimension of fidelity. We also examined whether provider factors moderated the relationship between intervention factors and fidelity.

Our results indicated that program packaging ratings were associated with dose delivered (fidelity) and that this relationship was moderated by teacher experience: its strength diminished with increasing experience, and there was no relationship among the most experienced teachers. Intervention adaptability was also associated with dose delivered. Neither health education policies (context) nor provider beliefs were associated with dose delivered.

Intervention factors are important determinants of fidelity. Our results suggest that providers with more experience may need materials tailored to their knowledge and skill level to support materials’ continued usefulness and fidelity long-term. Our results also suggest that promoting adaptability may help enhance fidelity. Implementation strategies that focus on systematically adapting evidence-based health programs may be well suited to enhancing the fidelity of the MMH curriculum across levels of teacher experience.

Keywords: implementation science; prevention; program evaluation; evidence-based practice; health, school; youth

Introduction

Intervention effects observed in efficacy trials are rarely seen when programs are broadly implemented outside of research settings. There is often an observed “voltage drop” when interventions are implemented in real-world practice settings, which can be attributed, in part, to decreased intervention fidelity (Chambers et al., 2013; Weisz et al., 2013). This underscores the need to better identify key implementation determinants and understand how they influence fidelity, the extent to which a program is delivered as intended by program developers or the original protocol (Lyon & Bruns, 2019b; Proctor et al., 2011). Substance use prevention and other areas of prevention (e.g., violence) have made notable gains in developing effective interventions (Dusenbury et al., 2003). Yet these programs are rarely delivered as intended (i.e., with fidelity; Lee & Gortmaker, 2018). Fidelity can include multiple dimensions, such as dose delivered (i.e., the proportion of the intervention delivered), quality of program delivery, and adherence to the intervention protocol (Proctor et al., 2011). The Michigan Model for Health™ (MMH), the health curriculum for the State of Michigan, is one example: it is a theoretically based, comprehensive, skills-focused program that has demonstrated effectiveness in reducing adolescent substance use (O’Neill et al., 2011). MMH is recognized by the Collaborative for Academic, Social, and Emotional Learning (CASEL) and the National Registry of Evidence-Based Programs and Practices (CASEL, 2018; SAMHSA, 2018). In addition, MMH is aligned with both the State of Michigan and National Health Education Standards. Prevailing policy and practice have encouraged its adoption within many Michigan school districts.
The program is grounded in Social Learning Theory (Bandura, 1977) and the Health Belief Model (Rosenstock, 1974), and it addresses several developmentally appropriate cognitive, social-emotional, attitudinal, and contextual factors related to health behaviors (www.michigan.gov/mdhhs). School-based prevention programs such as MMH offer an opportunity to reach large populations of young people, including those underserved in other settings. Although MMH is widely adopted, with 91% of high school teachers using it, only 31% meet state-identified standards of fidelity. These standards are specified in terms of dose delivered, a dimension of fidelity: teachers are expected to deliver 80% or more of the curriculum (Proctor et al., 2011; Rockhill, 2017).

The field of implementation science developed to address the research-to-practice gap. Implementation science is the study of methods to promote the systematic uptake of research findings and other evidence-based interventions (EBIs) into routine practice to improve the quality and effectiveness of these programs and practices (Eccles & Mittman, 2006). While implementation research has made notable progress in developing and applying frameworks to identify implementation determinants, work that pinpoints key determinants and explains how they influence implementation outcomes (e.g., fidelity), so that implementation strategies can be designed effectively, is only beginning to emerge (Lyon & Bruns, 2019b). Most implementation research has been conducted in clinical settings; comparatively little has applied implementation frameworks to school-based programs (Lee & Gortmaker, 2018; Lyon & Bruns, 2019b). Although research supporting the implementation of selected and indicated (Tiers 2 and 3) interventions has developed considerably, far less has examined the implementation of universal (Tier 1) prevention EBIs in schools (Chafouleas et al., 2019). Yet this research is critical to producing the generalizable knowledge needed to identify and deploy effective implementation strategies (i.e., implementation interventions) that enhance the fidelity of universal prevention programs and support their sustainment in schools.

Multilevel Influences on School-Based EBI Implementation

The Consolidated Framework for Implementation Research (CFIR; Damschroder et al., 2009) provides a useful guide to examine implementation determinants for school-based EBI implementation. CFIR is well suited to investigating these determinants as implementation inherently is a multilevel endeavor (Lyon & Bruns, 2019b). CFIR is a comprehensive framework that includes domains across multiple levels (e.g., context, provider and intervention factors) that can aid in understanding and improving EBI delivery in a given setting. This framework has been widely applied in clinical implementation research to improve fidelity in community settings. Researchers have identified specific domains/levels as critical to school-based implementation and possible targets for implementation strategies (Lyon & Bruns, 2019b). These levels include 1) the context, 2) the providers, and 3) the intervention. Consequently, including multi-level determinants is critical when evaluating implementation and identifying key targets for implementation strategies (Lyon & Bruns, 2019b).

Context

Determinants within the immediate organizational context where implementation occurs (i.e., schools) include policies related to the evidence-based intervention and its delivery. These are school- or district-level policies and practices that support the health and academic needs of students. In 2014, the Centers for Disease Control and Prevention partnered with ASCD (the Association for Supervision and Curriculum Development) to develop a framework to guide such policies, called the WSCC (Whole School, Whole Child, Whole Community; Lewallen et al., 2015). They identified policies and practices related to health education as a key component in achieving positive academic and health outcomes among youth; in particular, the WSCC model, founded on concepts of coordinated school health, is designed to support policies and practices that help schools implement and sustain school health interventions (Rasberry et al., 2015). Policies may be measured at the individual level via perceptions of the policies, or by coding actual policies at the local school level or the larger district level (Rasberry et al., 2015). Policies related to health education, including both perceived and actual policies, have the potential to influence implementation, but more research is needed to understand how policies influence fidelity. Herein, we focus on perceptions of district policies, which may be critical to delivering evidence-based school health curricula such as MMH with fidelity.

Provider-level factors

Provider-level implementation determinants include characteristics and experiences of the front-line providers (i.e., teachers) delivering the EBI; researchers have found that provider-level characteristics such as beliefs about the EBI and teaching experience influence program uptake and fidelity (Lyon & Bruns, 2019b). Social and behavioral science theories, including Social Cognitive Theory (Bandura, 1977), have long emphasized the role that individual-level factors such as attitudes and beliefs play in behavior and behavior change. The same holds among educators: educator characteristics have been argued to be a necessary component of change in educational practice within schools (Guskey, 1986). For example, researchers found that providers with negative perceptions of a school-based substance use prevention curriculum were less likely to deliver it with fidelity (Mihalic et al., 2008). Consequently, beliefs about the EBI are a key individual-level determinant to consider when evaluating curriculum fidelity.

Teacher experience is another key factor that may influence curriculum fidelity. Teachers’ implementation of evidence-based curricula is likely to evolve with experience (Hall & Hord, 1987). With more experience, teachers may be less likely to adhere to the curricula and more likely to make adaptations based on their perceptions of student needs and context (Hall & Hord, 1987). While some of this research has focused on the first several iterations of a specific curriculum (Ringwalt et al., 2010), these findings may also apply more broadly to teachers’ level of experience teaching health. Consistent with this, other researchers have found that teachers with less experience are more likely than teachers with more experience to deliver a program with high fidelity (Rohrbach et al., 2010). Taken together, these results suggest that teachers who have more experience may be less likely to deliver EBIs with fidelity.

Intervention

Implementation science tends to focus on contextual and provider levels of influence, with less attention to characteristics of the intervention itself, such as program packaging (i.e., curriculum materials) and curriculum adaptability (Lyon & Bruns, 2019b). Manualized interventions are the primary mechanism by which research discoveries are translated into action to improve the public’s health (Garland et al., 2014). The MMH intervention, for example, includes a detailed manual consisting of six core units; the packaging consists of step-by-step lesson instructions, including PowerPoint slides, lesson activities, assessments, and rubrics. Intervention characteristics, including perceptions of program packaging and adaptability, are critical features of EBI fidelity. Researchers found that teachers who rated intervention characteristics more favorably (e.g., as an asset) delivered a school-based drug use prevention program with higher fidelity (i.e., dose delivered and adherence) than those who rated the intervention less favorably (Mihalic et al., 2008). However, that study aggregated intervention factors. Examining them separately will help clarify how each characteristic uniquely influences fidelity and inform suitable implementation strategies to address them.

Intervention adaptability also plays a key role in intervention delivery, as deliberate adaptation is essential to meet the needs of the context and promote successful adoption and scale-up (Chambers & Azrin, 2013). Balancing fidelity and flexibility in program delivery remains a challenge, but researchers increasingly acknowledge that fidelity and adaptation can coexist and that “there is no implementation without adaptation” (Lyon & Bruns, 2019a, p. 3). Adaptation is an important complement to fidelity and not necessarily in conflict with it; optimizing interventions often involves specific culturally focused adaptations to enhance the fit between the EBI and its intended recipients (Barrera et al., 2017). Yet adaptation remains a key but understudied implementation determinant. Taken together, this work suggests that more research is needed to understand the relationship between intervention factors and fidelity.

Intervention characteristics are understudied, and implementation science researchers increasingly recognize that implementation outcomes such as fidelity are inseparable from the intervention itself (Lyon & Bruns, 2019a, 2019b). This has led to a growing focus on implementation strategies that address intervention-related barriers, such as user-centered design, an approach to intervention development and adaptation guided by information about, and characteristics of, the product’s end-users (i.e., the teachers; Lyon & Bruns, 2019a). A critical first step in employing implementation strategies that incorporate user-centered design, however, is investigating whether and how provider (teacher) characteristics moderate the relationship between intervention factors and fidelity. Understanding how end-users interact with the intervention itself can help target specific implementation strategies to provider needs and improve implementation success.

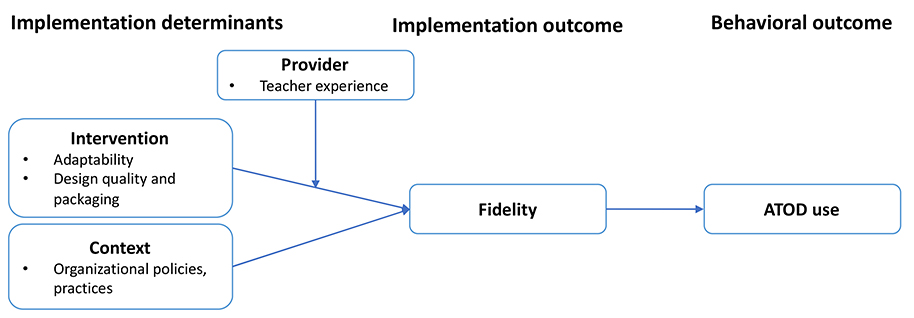

Researchers suggest that the aforementioned categories of determinants (context, provider, and intervention factors) can each exert independent effects on fidelity, but they may also exert combined effects (Allen et al., 2018). Exploring moderation can deepen our understanding of these combined effects and of how implementation determinants influence fidelity. In the current study, we examine multilevel implementation determinants and EBI fidelity, specifically measured as dose delivered (see Figure 1). We also investigate possible moderators of fidelity, notably provider and intervention characteristics; end-users’ (i.e., providers’) interaction with the intervention offers a promising opportunity to better tailor interventions to the needs of providers and the context and to support fidelity long-term. We focus specifically on the interaction between curriculum materials (i.e., program packaging) and provider factors, based on prior research suggesting that teachers’ relationship with curriculum materials changes as they gain experience (Ringwalt et al., 2010). Examining moderation can also inform suitable methods to address implementation determinants, such as implementation strategies informed by user-centered design. We test the hypothesis that factors across CFIR domains, including context, provider, and intervention factors, will be associated with MMH fidelity among health teachers. We also hypothesize that provider factors (e.g., teacher experience) will moderate the relationship between program packaging and MMH fidelity.

Figure 1:

Hypothesized mechanism for relationship between implementation determinants, program fidelity and student outcomes. The current study examines provider experience as a moderator of intervention characteristics on Michigan Model for Health™ fidelity (as measured via dose delivered).

Method

The study, which was reviewed and approved by local institutional review boards, involved high school health teachers from schools across Michigan. Teachers possessing appropriate state certification (i.e., health certification) and currently teaching health in high school were eligible to participate. We focus on high schools as this is a developmental period characterized by heightened engagement in health risk behaviors (e.g., substance use, violence) and poor mental health outcomes. We also focus on this period because, in Michigan, health class is required only in high school.

The survey was guided by CFIR and includes items addressing the context (e.g., organizational policies and practices), the provider (e.g., experience teaching health), and the intervention (e.g., program packaging). The survey was also guided by the Implementation Outcomes Framework (IOF), which provides a framework to conceptualize and operationalize implementation outcomes such as fidelity (Proctor et al., 2011). The IOF puts forth a heuristic for understanding the relationship between implementation and intervention effectiveness: implementation outcomes are a necessary precondition for attaining desired behavioral outcomes with EBIs (Proctor et al., 2011). The hypothesized relationships, including possible interactions, are shown in Figure 1.

Participants

We recruited high school health teachers throughout Michigan for the study in collaboration with the Michigan Department of Health and Human Services (MDHHS) and their state-wide network of Regional School Health Coordinators (RSHCs). RSHCs are professionals who promote the health and safety of youth and families using evidence-based approaches. RSHCs work with school districts and teachers in their assigned regions (generally multiple adjacent counties) to support school health programs, including providing MMH curriculum training and technical assistance for teachers. RSHCs contacted all eligible participants (high school health teachers in their region) via email. The health teachers received an email letter that included a short study description and a link for those interested in completing the online survey. According to our MDHHS partners, most Michigan high schools have one certified health teacher. Given this, to ensure anonymity in responses, teachers were only asked for their school’s county. Potential participants completed a screening survey with a unique identifier to verify eligibility and had to meet the following criteria: (1) Be 18 years of age or older, (2) work with high school students/in a high school building and (3) teach high school-level health classes.

Procedure

The RSHCs distributed a recruitment email regarding study participation to the high school health teachers in their region, informing them about the study. The letter included a short study description and information about study participation, including a link (URL) for those interested in completing the online survey. The survey was self-administered using a secure, online survey administration program. Following the screening survey, eligible participants were directed to an informed consent document. Participants were linked to the Health Education Experts Survey using a second unique identifier. The current study was exempted (Category 1: Research conducted in common educational settings involving normal educational practices) from IRB approval. Participants received $10 remuneration for completing the online survey.

Measures

Fidelity: Dose delivered

We measured fidelity by asking about the proportion of MMH delivered. Teachers were asked what proportion of the curriculum they typically delivered from 1: less than 25% to 4: 75% or more. This measure is consistent with a previous evaluation of MMH (Rockhill, 2017).

Implementation Determinants

Context: organizational policies and practices

We asked health teachers about the school context, specifically the organizational policies and practices related to health and learning. This measure is based on school (i.e., organizational) characteristics as defined by Mihalic et al. (2008), informed by the CFIR inner and outer settings (Damschroder et al., 2009), and adapted based on MDHHS input. Teachers rated organizational policies and practices related to health and learning from 1: significant barrier to 5: significant asset to delivering MMH.

Provider factors

Beliefs

We asked teachers about their beliefs regarding the effectiveness of the intervention. Participants rated their level of agreement with the statement “MMH effectively addresses substance use” on a 5-point Likert scale. We focused on this aspect of MMH because the curriculum has demonstrated efficacy in reducing substance use in multiple trials, drug use prevention is a core component of the MMH curriculum, and substance use is an issue of primary concern among youth (www.healthypeople.gov/2020; O’Neill et al., 2011).

Gender and teaching experience

We assessed the number of years teaching health in 5-year increments, from 1: less than 5 years to 5: more than 20 years. We assessed gender with the response options male, female, and other; because no respondents reported “other,” we dichotomized the gender variable.

Intervention

Design quality and packaging

Teachers were asked about MMH curriculum design quality and packaging; specifically, they rated perceptions of MMH intervention materials, including curriculum handouts and activities from 1: significant barrier to 5: significant asset. The item was adapted from Mihalic et al. (2008) and informed by our MDHHS partners.

Adaptability

Teachers were asked about the perceived adaptability of MMH; specifically, how much they agreed with the statement that they could make changes to the curriculum to make it work more effectively in their setting, from 1: strongly disagree to 5: strongly agree. This item was guided by the construct of adaptability as described by Damschroder et al. (2009).

Analytic Approach

Preliminary analyses included descriptive statistics and unadjusted analyses among all study variables. We evaluated study variables for violations of statistical assumptions as indicated and then evaluated missing data on all study variables. Our analytic sample was n=159 (93% of the initial study sample, n=171) because of missing data on our dependent variable. We compared participants with and without missing outcome data on demographic characteristics (e.g., gender, age, years teaching) using t-tests and chi-square tests. We used multiple imputation for missing data on independent variables as needed. In accordance with our study hypothesis, our analyses included statistical interaction terms. Following the procedure described by Jose (2013), when an interaction was significant we probed the moderation effect by graphing it and examining simple slopes.
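The moderation model probed here can be summarized as follows. This is a sketch in our own notation rather than a reproduction of the analysis code: Pack denotes the curriculum design quality and packaging rating, Exp the years-teaching-health category code, and z the remaining covariates. The simple slope of packaging at a fixed experience level w, and its standard error, follow from treating that slope as a linear combination of regression coefficients.

```latex
\begin{aligned}
\text{Fidelity}_i &= b_0 + b_1\,\text{Pack}_i + b_2\,\text{Exp}_i + b_3\,(\text{Pack}_i \times \text{Exp}_i) + \boldsymbol{\gamma}^{\top}\mathbf{z}_i + \varepsilon_i \\
\theta(w) &= \left.\frac{\partial\,\mathbb{E}[\text{Fidelity} \mid \text{Pack}, \text{Exp}, \mathbf{z}]}{\partial\,\text{Pack}}\right|_{\text{Exp}=w} = b_1 + b_3\,w \\
\operatorname{SE}\big(\hat\theta(w)\big) &= \sqrt{\operatorname{Var}(\hat b_1) + w^{2}\operatorname{Var}(\hat b_3) + 2w\,\operatorname{Cov}(\hat b_1, \hat b_3)}
\end{aligned}
```

A negative b₃ means the packaging slope shrinks as experience increases. Each simple slope is tested against zero using its own standard error, which is how significant slopes at lower experience levels can coexist with nonsignificant slopes at higher levels.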

We adopted a model-building approach driven by theory and previous empirical research to select variables for inclusion in regression models. Specifically, we included variables across CFIR domains that have been associated with fidelity in schools but limited the number of variables to avoid model overfitting. Overfitting occurs when too many parameters are estimated for the sample size, which can produce biased and untrustworthy estimates (Harrell, 2015). We conducted multiple linear regression analyses in two steps. In step 1 (the main effects model), we included context, provider, and intervention factors, testing the hypothesis that multilevel implementation determinants predict fidelity (i.e., dose delivered). In step 2, we added a two-way interaction term, testing the hypothesis that provider factors would moderate the relationship between intervention factors and fidelity. We used multiple imputation (MI) in Stata (StataCorp, 2019) to handle notable proportions of missing data on independent variables (i.e., >10%; Bennett, 2001). MI accounts for missing data conditional on all variables in the imputation model (Enders, 2010). We used 10 imputations and imputed by simulating from the joint distribution of the covariates and response included in the main model. To compute predicted probabilities using the MI model, we used adjusted predictions/marginal effects with multiple imputation. For our outcome variable, we compared participants with and without missing data on all sociodemographic variables to examine possible differences.
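Stata's `mi estimate` combines the regression results from each imputed dataset using Rubin's rules. The sketch below illustrates that combining step for a single coefficient in plain Python; the function name `pool_rubin` and the example inputs are hypothetical and are not values from this study. It shows why the pooled standard error is at least as large as the average within-imputation standard error: the total variance adds a between-imputation component inflated by (1 + 1/m).

```python
import math
from statistics import mean, variance


def pool_rubin(estimates, std_errors):
    """Pool one regression coefficient across m imputed datasets (Rubin's rules).

    estimates:  the coefficient estimated separately in each imputed dataset
    std_errors: the matching standard errors
    Returns (pooled estimate, pooled standard error).
    """
    m = len(estimates)
    if m < 2 or m != len(std_errors):
        raise ValueError("need at least two imputations with one SE per estimate")
    pooled = mean(estimates)                      # average point estimate
    within = mean(se ** 2 for se in std_errors)   # average within-imputation variance
    between = variance(estimates)                 # between-imputation variance (m - 1 denominator)
    total = within + (1 + 1 / m) * between        # Rubin's total variance
    return pooled, math.sqrt(total)


# Hypothetical results for one coefficient from m = 10 imputed datasets
example_estimates = [0.44, 0.46, 0.45, 0.43, 0.47, 0.45, 0.44, 0.46, 0.45, 0.45]
example_ses = [0.12] * 10
b, se = pool_rubin(example_estimates, example_ses)
print(f"pooled b = {b:.3f}, pooled SE = {se:.4f}")
```

With m = 10, the (1 + 1/m) factor is 1.1; when the imputed datasets yield similar estimates, the pooled standard error stays close to the average within-imputation standard error.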

Results

Table 1 provides descriptive statistics and bivariate correlations for the study variables. Fifty-eight of Michigan’s 83 counties were represented in the sample, and 13% of teachers did not report their county. Forty-three percent of the sample was male, and mean years teaching health fell between the 5–10 and 11–15 year categories, indicating a notable range of experience teaching health. Ninety-three percent of the sample was White, 4% African American, and 2% Latino/a. Although our sample includes approximately 21% of all certified high school health teachers in Michigan, our results suggest that it is sociodemographically similar to the larger population of health teachers across the state, according to the Center for Educational Performance and Information (www.michigan.gov/cepi/). The mean dose delivered was 2.45 (on the 1–4 scale), suggesting that most teachers did not meet the state-identified fidelity standard of 80%. In our analysis of missing data on the outcome, participants with and without missing data differed: more males than females were missing (χ² = 9.29, p < .01), and mean years teaching health was higher among those missing the outcome than among those not missing it (t = −2.69, p < .01).
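As a rough check on the significance levels of the missing-data comparisons, the reported test statistics can be converted to p-values with the Python standard library alone. This sketch assumes the chi-square test had 1 degree of freedom (a 2 × 2 table of dichotomized gender by missingness) and, because the t-test degrees of freedom are not reported, approximates the t reference distribution with a standard normal. The helper names are hypothetical.

```python
import math


def chi2_df1_p(stat):
    """Upper-tail p-value for a chi-square statistic with 1 degree of freedom.

    A chi-square(1) variable is the square of a standard normal Z, so
    P(X > stat) = P(|Z| > sqrt(stat)) = erfc(sqrt(stat / 2)).
    """
    return math.erfc(math.sqrt(stat / 2))


def two_sided_normal_p(z):
    """Two-sided p-value using a standard normal reference distribution."""
    return math.erfc(abs(z) / math.sqrt(2))


p_gender = chi2_df1_p(9.29)          # gender (2 levels) x missingness (2 levels): df = 1
p_years = two_sided_normal_p(-2.69)  # large-sample normal approximation to the t test
print(f"chi-square p = {p_gender:.4f}, t-test p (normal approx.) = {p_years:.4f}")
```

Under these assumptions both p-values fall below .01 (roughly .002 and .007). Using the exact t distribution with about 169 degrees of freedom (assuming a pooled-variance test on all 171 respondents) makes the second value slightly larger, but it remains below .01.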

Table 1:

Descriptive statistics and intercorrelations of study variables

| Variable | 1 | 2 | 3 | 4 | 5 | 6 | M (SD) or % |
|---|---|---|---|---|---|---|---|
| 1. MMH dose delivered | 1 | | | | | | 2.45 (1.19) |
| 2. Organizational policies & practices | 0.21* | 1 | | | | | 3.51 (0.88)ᵃ |
| 3. Male | 0.28* | 0.17* | 1 | | | | 43% |
| 4. Effectiveness of MMH ATOD unit | 0.26* | 0.16* | 0.13 | 1 | | | 3.76 (1.20)ᵃ |
| 5. Years teaching health | −0.05 | −0.06 | 0.13* | −0.16* | 1 | | 2.8 (1.36)ᵇ |
| 6. Curriculum design quality & packaging | 0.48* | 0.14 | 0.18* | 0.27* | −0.04 | 1 | 3.62 (0.99)ᵃ |
| 7. Curriculum adaptability | 0.45* | 0.21* | 0.16* | 0.17* | −0.01 | 0.45* | 4.37 (0.78)ᵃ |

* p < .05. ATOD: alcohol, tobacco, and other drugs.

ᵃ Higher ratings represent more favorable perceptions.

ᵇ Represents years teaching between the 5–10 and 11–15 years categories.

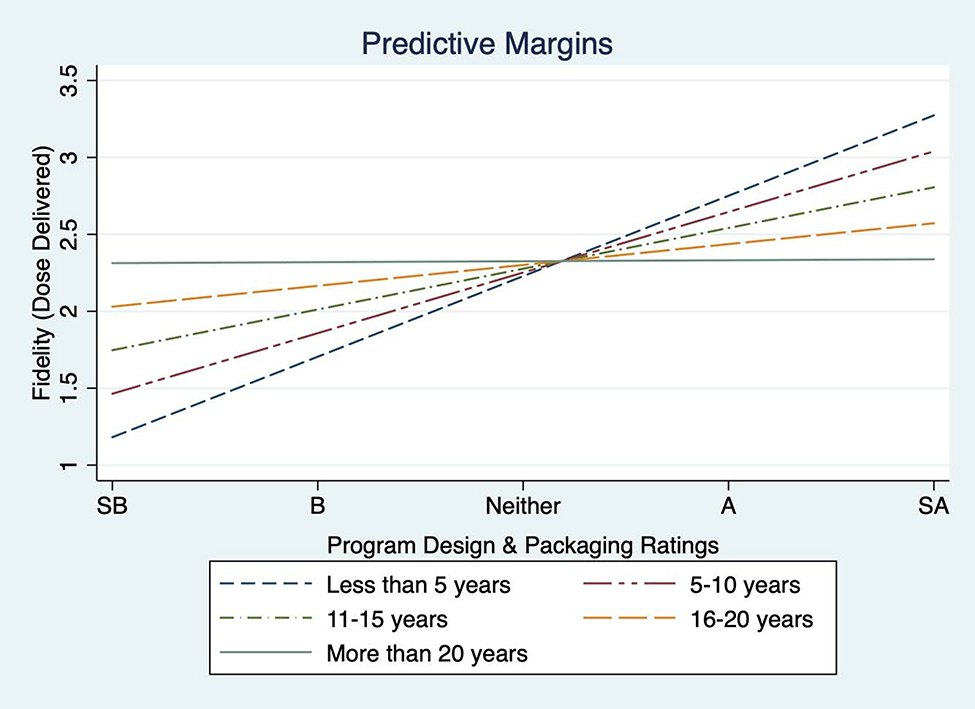

The multivariate models are presented in Table 2. As a first step in our analysis, we examined the assumptions for regression, including normality and homoscedasticity. Graphical examination of residuals indicated limited deviation from normality, and the Cook–Weisberg test indicated that heteroscedasticity was likely not an issue (χ² = 1.02). In the main effects model, being male, curriculum design quality and packaging ratings, and adaptability were positively associated with fidelity; years teaching health, perceived effectiveness of the drug use prevention unit, and organizational policies and practices were not. In the full model including the interaction term, teacher experience moderated the relationship between ratings of curriculum design quality and packaging and fidelity (see full model results, Table 2). To further explore this interaction, we examined simple slopes at each level of teacher experience and graphed the moderation effect (see Figure 2). Table 2 reports the simple slopes: the change in fidelity associated with a one-unit change in program design and packaging ratings, holding teacher experience constant at each level. This change decreased as teachers reported more experience teaching health, and among teachers with 16 or more years of experience, there was no relationship. Perceived adaptability and gender were also associated with fidelity: a one-unit increase in perceived adaptability was associated with a 0.45-unit increase in fidelity, and male teachers reported fidelity ratings 0.40 units higher than female teachers. Perceived curriculum effectiveness and organizational policies related to health education were not associated with fidelity.
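The simple slopes in Table 2 follow directly from the full-model coefficients, because the slope of packaging at experience level w is b₁ + b₃·w. The sketch below reproduces the point estimates under our assumption, consistent with the reported values, that years teaching health entered the model uncentered as its 1–5 category code. Because the published coefficients are rounded, the reconstruction differs slightly from the reported slopes at the two highest experience levels (0.13 vs. 0.14 and 0.00 vs. 0.01). Standard errors would also require the coefficient covariance matrix, which is not reported, so they are not reproduced here.

```python
# Full-model coefficients as printed in Table 2 (rounded to two decimals)
B_PACKAGING = 0.65      # packaging rating (slope when the experience code is 0)
B_INTERACTION = -0.13   # packaging x years-teaching-health interaction

# Experience category codes as described in the Measures section
EXPERIENCE_LEVELS = {
    1: "less than 5 years",
    2: "5-10 years",
    3: "11-15 years",
    4: "16-20 years",
    5: "more than 20 years",
}


def simple_slope(b_focal, b_interaction, moderator_value):
    """Slope of the focal predictor at a fixed moderator value: b1 + b3 * w."""
    return b_focal + b_interaction * moderator_value


for code, label in EXPERIENCE_LEVELS.items():
    slope = simple_slope(B_PACKAGING, B_INTERACTION, code)
    print(f"{label:>20}: {slope:.2f}")
```

Treating the ordinal experience code as continuous constrains the packaging slope to decline by the same amount (|b₃| = 0.13) per category; entering experience as a set of indicator variables would instead allow each level its own slope.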

Table 2:

Main effects and full model results

| Parameter | Main effects: b | SE | 95% CI | Full model: b | SE | 95% CI |
|---|---|---|---|---|---|---|
| Intercept | −0.82 | 0.58 | −1.96, 0.32 | −2.17* | 0.86 | −3.88, −0.46 |
| Effectiveness of MMH ATOD unit | 0.07 | 0.08 | −0.08, 0.22 | 0.06 | 0.07 | −0.09, 0.21 |
| Male | 0.40* | 0.18 | 0.04, 0.76 | 0.39* | 0.18 | 0.04, 0.75 |
| Organizational policies & practices | −0.01 | 0.10 | −0.20, 0.19 | 0.02 | 0.10 | −0.17, 0.22 |
| Curriculum adaptability | 0.45** | 0.12 | 0.21, 0.68 | 0.45** | 0.12 | 0.21, 0.68 |
| Curriculum design quality & packaging | 0.29** | 0.09 | 0.10, 0.47 | 0.65** | 0.20 | 0.26, 1.04 |
| Years teaching health | −0.04 | 0.06 | −0.17, 0.08 | 0.41 | 0.23 | −0.04, 0.86 |
| Design quality & packaging × years teaching health | | | | −0.13* | 0.06 | −0.25, −0.01 |

| Years teaching health | Simple slopeᵃ | SE | p-value | 95% CI |
|---|---|---|---|---|
| Less than 5 years | 0.52* | 0.14 | <0.01 | 0.23, 0.81 |
| 5–10 years | 0.39* | 0.11 | <0.01 | 0.18, 0.60 |
| 11–15 years | 0.26* | 0.09 | 0.01 | 0.08, 0.45 |
| 16–20 years | 0.14 | 0.12 | 0.25 | −0.10, 0.37 |
| More than 20 years | 0.01 | 0.16 | 0.96 | −0.32, 0.33 |

* p < .05; ** p < .01. b: beta; SE: standard error; CI: confidence interval.

ᵃ Simple slope of packaging ratings on fidelity, holding teacher experience constant at each level.

Figure 2:

Conditional effects of program design and packaging ratings on fidelity across levels of teacher experience. The association between program design and packaging ratings and fidelity diminishes with increasing experience and is not present at 16–20 years or more than 20 years of experience. SB: significant barrier, B: barrier, A: asset, SA: significant asset.

Discussion

Understanding which implementation determinants influence fidelity (e.g., dose delivered), and how, is vital to improving the fidelity of evidence-based health interventions in schools and realizing their public health benefits. Researchers have generally found that higher levels of fidelity are associated with better participant or student-level outcomes, including for school-based interventions (Dusenbury et al., 2003; Rohrbach et al., 2010). Evidence also suggests that implementation determinants across multiple levels, including context, provider, and intervention determinants, may influence the fidelity of EBIs. Yet how these determinants influence fidelity may depend on other characteristics; how intervention factors influence fidelity, for example, may depend on provider characteristics. The current study investigates multilevel implementation determinants for a universal prevention EBI, and possible interactions between determinants, in relation to dose delivered, a dimension of fidelity.

We found that teacher experience moderated the relationship between program packaging and fidelity. Specifically, the relationship between program packaging ratings and fidelity diminished as teachers reported more experience, such that among teachers with the highest levels of experience (i.e., 16 or more years of teaching health), we found no relationship. This is consistent with Ringwalt et al. (2010), who found that teachers early in program delivery followed the curriculum more closely, and may thus have found the materials more helpful, but made changes to the materials as they gained experience. Our results suggest that options for program packaging, as well as training and support for using tailored versions of curriculum materials, may need to evolve as teachers gain experience. This could include versions of the curriculum with different levels of structure: one version, for example, could include more structure for newer teachers and another less structure for more experienced teachers. In this way, experienced teachers could retain the functional elements of the curriculum critical to its success while also having space to integrate their own experience and teaching style. Ongoing engagement of teachers, through tailored trainings and curriculum packaging options, is vital to the continued delivery of programs with fidelity (Quinn & Kim, 2017). Training and support can help teachers remain engaged by addressing emerging challenges to student health and intervention delivery and by providing guidance on innovative, fidelity-consistent adaptations.

We also found that, among implementation determinants, higher ratings of curriculum adaptability were associated with higher dose delivered regardless of teacher experience. Although researchers have written extensively on the importance of intervention adaptability for EBIs (see Barrera et al., 2017; Castro & Yasui, 2017; Quinn & Kim, 2017), few have examined this relationship empirically. Our results support the notion that adaptability is key to successful implementation, as “there is no implementation without adaptation” (Lyon & Bruns, 2019a, p. 3). EBIs need to be adapted to meet local needs, although there remains some tension over what should and should not be adapted. Our results support the need to promote adaptability, which may in turn enhance fidelity and, ultimately, program effectiveness when implementing evidence-based programs. This can be accomplished by providing guidance and structure to support fidelity-consistent adaptations: modifications that retain the functional, effective program elements but tailor form elements to meet local needs, thereby promoting both fit and fidelity in implementation (Stirman et al., 2013).

Taken together, our results are consistent with the notion that, when designing implementation strategies for prevention interventions, practitioners and researchers may benefit from focusing on methods that enhance both fidelity and adaptation. Teachers may benefit from greater intervention flexibility to make adaptations based on the needs of their students, their context, or their teaching style and experience, notably by adding tailoring options through a user-centered design approach. This approach may also warrant more advanced training so that teachers can adapt the curriculum effectively while retaining fidelity to the functional or core elements that are critical to the intervention’s success. In this way, teachers who have developed a deeper knowledge of the underlying theory and pedagogy of an EBI, for example, can make adaptations without compromising, and perhaps even while enhancing, program effectiveness (Castro & Yasui, 2017; Quinn & Kim, 2017). This is consistent with “both-and” views of fidelity and adaptation, which hold that some degree of EBI adaptation is necessary to suit the context, and that strategic adaptations grounded in an understanding of underlying EBI theory can resolve implementation challenges and enhance engagement of program participants (Castro & Yasui, 2017). However, future research testing these assertions is needed.

An important step in this process is understanding the nature and impact of curriculum adaptations and the level of fidelity needed to achieve desired outcomes (Stirman et al., 2013). Tracking adaptations and examining their impact will help guide training and support for more experienced teachers, with the goal of keeping the curriculum an asset by accounting for how teachers’ use of the materials evolves with experience.

We found an association between teacher gender and fidelity, with male teachers reporting greater fidelity than female teachers. Research on teacher gender as a predictor of student academic outcomes has primarily focused on how teacher gender may influence student learning; the results of this research, however, are mixed, and researchers have not reached a consensus on the potential effects of teacher gender on student learning (Dee, 2006). Few studies have examined potential gender differences in curriculum delivery. In general, the field of education supports gender diversity among teachers, but more research is needed to understand possible differences in curriculum fidelity by gender.

We did not find an association between perceptions of organizational policies and practices related to health education and fidelity. This may be because the organizational (i.e., school-level) policies themselves were locally developed and not evidence-based. Researchers have found that isolated (i.e., within-school or within-district) health-related policies may not be sufficient to effect change in health curriculum delivery; policies that follow a comprehensive school health approach may be necessary to enhance health curriculum fidelity (Laxer et al., 2019). Alternatively, these findings may reflect measurement issues, as we measured perceptions of policies using a single item rather than a multi-item scale with demonstrated validity or objective coding of the policies themselves.

Our findings also have implications for ongoing support related to health curriculum implementation. Unlike curricula in other academic areas, effective universal prevention curricula such as MMH focus on skill development and active learning approaches in addition to knowledge acquisition (www.cdc.gov/healthyschools). Moreover, the issues addressed in the health curriculum (e.g., substance use) are constantly evolving. Accessible, timely materials that complement the existing curriculum and address emerging issues may therefore be an important feature of maintaining fidelity to the functional curriculum components. Sharing information with teachers that provides up-to-date statistics, videos, and active learning activities or scenarios related to relevant and emerging issues may help teachers stay engaged with the curriculum, and this information can be tailored to their level of experience (Ringwalt et al., 2010).

Limitations

Despite the novel data reported, several limitations warrant mention. Fidelity was operationalized as a single construct, dose delivered, which was measured via self-report. Our dose-delivered measure, however, is consistent with previous evaluations of MMH (Rockhill, 2017). Future studies would benefit from assessing fidelity across multiple dimensions, including quality of delivery and adherence in addition to dose delivered, and from using multiple approaches to triangulate the findings (e.g., self-report, observation, administrative data). In addition, our study used single-item measures for the CFIR constructs. Although, compared with previous research using composite determinant measures, this approach allowed us to investigate the distinct contribution of each determinant within each domain to fidelity, future research would benefit from developing standardized, multi-item measures to assess determinants within each domain. The current study investigated the perceived adaptability of MMH; we did not investigate specific adaptations to the program (e.g., adding or removing elements) or whether these adaptations were fidelity-consistent or fidelity-inconsistent (Stirman et al., 2013). Future research on school-based prevention programs would benefit from monitoring adaptations to the curriculum and evaluating their impact on fidelity. Still, our study is an important step in identifying adaptability as a critical determinant of fidelity. We did not include school-level identifiers in the current study, so it is possible that more than one teacher responded from the same school. However, according to MDHHS, the vast majority of Michigan high schools have one certified health teacher, making this unlikely. This study included only end-user perceptions of CFIR factors/implementation determinants. Yet information from the provider or end-user’s perspective is critical to the development of effective implementation strategies in schools. Future research would benefit from multiple data sources, including student and administrative data.

Although a substantial amount of implementation research indicates that the organizational context is vital to the successful adoption of evidence-based programs, the majority of this research has been conducted in healthcare settings, and the research that has been done in schools has focused on the provision of mental health services (Damschroder et al., 2009; Lyon et al., 2018). Consequently, contextual determinants relevant to the provision of prevention curricula are not well understood, and other relevant factors not included here may better predict fidelity (Lyon et al., 2018). Future research would benefit from exploring a range of contextual factors for prevention programs in schools to identify relevant determinants.

Teachers with missing data on our outcome differed somewhat from those without missing data (e.g., they were more likely to be male and had more years of teaching experience). However, the demographics of our analytic sample still largely reflect the larger health teacher population. In addition, the differences in years of experience would reduce our power to detect differences among the most experienced teachers; that we nonetheless detected moderation by experience points to the robustness of our findings. Finally, our survey is cross-sectional; future research would benefit from examining teachers’ engagement with the intervention over time.

Conclusions

Data from this study underscore the importance of identifying key implementation determinants and understanding how they influence EBI fidelity (i.e., dose delivered). Specifically, program design quality and packaging were associated with higher MMH dose delivered among teachers with less experience but became less relevant among those with 16 or more years of experience. Curriculum adaptability was also associated with greater dose delivered, which highlights the need to promote systematic adaptation that enhances fidelity and program effectiveness. Given that schools have limited time and resources for health education training, ongoing support, and monitoring, data from this study suggest that implementation strategies focused on tailoring program packaging are key to facilitating effective delivery among less experienced teachers, whereas a focus on flexibility (e.g., optional lessons, variations in practice exercises) may enhance dose delivered among all teachers regardless of experience.

Acknowledgments

This research was supported by the Michigan Institute for Clinical and Health Research Grant UL1TR002240 and the National Institute on Drug Abuse Grant K01DA044279-01A1 (PI: Eisman).

Footnotes

Conflict of Interest

The authors declare they have no conflict of interest.

Compliance with Ethical Standards

Ethical Approval

The University of Michigan Institutional Review Board reviewed all study procedures and deemed the research exempt, category 2. All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed Consent

Informed consent was obtained from all participants included in this study.

Publisher's Disclaimer: This Author Accepted Manuscript is a PDF file of an unedited peer-reviewed manuscript that has been accepted for publication but has not been copyedited or corrected. The official version of record that is published in the journal is kept up to date and so may therefore differ from this version.

References

- Allen J, Linnan L, & Emmons K (2018). Fidelity and Its Relationship to Implementation Effectiveness, Adaptation, and Dissemination. In Brownson R, Colditz G, & Proctor E (Eds.), Dissemination and implementation research in health: Translating science to practice (2nd ed., pp. 281–304). Oxford University Press.

- Bandura A (1977). Social learning theory. Prentice Hall.

- Barrera M, Berkel C, & Castro F (2017). Directions for the Advancement of Culturally Adapted Preventive Interventions: Local Adaptations, Engagement, and Sustainability. Prevention Science, 18(6), 640–648.

- Bennett D (2001). How can I deal with missing data in my study? Australian and New Zealand Journal of Public Health, 25(5), 464–469.

- CASEL (2018). Elementary SELect: Michigan Model for Health. CASEL: Collaborative for Academic Social and Emotional Learning. https://casel.org/guideprogramsmichigan-model-for-health/

- Castro F, & Yasui M (2017). Advances in EBI Development for Diverse Populations: Towards a Science of Intervention Adaptation. Prevention Science, 18(6), 623–629.

- Chafouleas S, Koriakin T, Roundfield K, & Overstreet S (2019). Addressing Childhood Trauma in School Settings: A Framework for Evidence-Based Practice. School Mental Health, 11(1), 40–53.

- Chambers D, & Azrin S (2013). Research and Services Partnerships: Partnership: A Fundamental Component of Dissemination and Implementation Research. Psychiatric Services, 64(6), 509–511.

- Chambers D, Glasgow R, & Stange KC (2013). The dynamic sustainability framework: Addressing the paradox of sustainment amid ongoing change. Implementation Science, 8(1), 117.

- Damschroder L, Aron D, Keith R, Kirsh S, Alexander J, & Lowery J (2009). Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science, 4(50), 1–15.

- Dee T (2006). Teachers and the Gender Gaps in Student Achievement. Journal of Human Resources, 42(3), 27.

- Dusenbury L, Brannigan R, Falco M, & Hansen W (2003). A review of research on fidelity of implementation: Implications for drug abuse prevention in school settings. Health Education Research, 18(2), 237–256.

- Eccles M, & Mittman B (2006). Welcome to Implementation Science. Implementation Science, 1(1), 1.

- Enders C (2010). Applied missing data analysis. Guilford Press.

- Garland AF, Accurso EC, Haine-Schlagel R, Brookman-Frazee L, Roesch S, & Zhang JJ (2014). Searching for Elements of Evidence-based Practices in Children’s Usual Care and Examining their Impact. Journal of Clinical Child and Adolescent Psychology, 43(2), 201–215.

- Gusky T (1986). Staff Development and the Process of Teacher Change. Educational Researcher, 15(5), 5–12.

- Hall GE, & Hord SM (1987). Change in schools: Facilitating the process. State University of New York Press.

- Harrell FE Jr. (2015). Regression Modeling Strategies: With Applications to Linear Models, Logistic and Ordinal Regression, and Survival Analysis (2nd ed.). Springer International Publishing.

- Jose P (2013). Doing statistical mediation and moderation. The Guilford Press.

- Laxer R, Dubin J, Brownson R, Cooke M, Chaurasia A, & Leatherdale S (2019). Noncomprehensive and Intermittent Obesity-Related School Programs and Policies May Not Work: Evidence from the COMPASS Study. Journal of School Health, 89(10), 818–828.

- Lee R, & Gortmaker S (2018). Health Dissemination and Implementation within Schools. In Brownson R, Colditz G, & Proctor E (Eds.), Dissemination and implementation research in health: Translating science to practice (2nd ed., pp. 401–416). Oxford University Press.

- Lewallen T, Hunt H, Potts-Datema W, Zaza S, & Giles W (2015). The Whole School, Whole Community, Whole Child Model: A New Approach for Improving Educational Attainment and Healthy Development for Students. Journal of School Health, 85(11), 729–739.

- Lyon A, & Bruns E (2019a). User-Centered Redesign of Evidence-Based Psychosocial Interventions to Enhance Implementation—Hospitable Soil or Better Seeds? JAMA Psychiatry, 76(1), 3.

- Lyon A, & Bruns E (2019b). From Evidence to Impact: Joining Our Best School Mental Health Practices with Our Best Implementation Strategies. School Mental Health, 11(1), 106–114.

- Lyon A, Cook C, Brown E, Locke J, Davis C, Ehrhart M, & Aarons G (2018). Assessing organizational implementation context in the education sector: Confirmatory factor analysis of measures of implementation leadership, climate, and citizenship. Implementation Science, 13(1), 5.

- Mihalic S, Fagan A, & Argamaso S (2008). Implementing the Life Skills Training drug prevention program: Factors related to implementation fidelity. Implementation Science, 3(5).

- O’Neill J, Clark J, & Jones J (2011). Promoting mental health and preventing substance abuse and violence in elementary students: A randomized control study of the Michigan Model for Health. Journal of School Health, 81(6), 320–330.

- Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, Griffey R, & Hensley M (2011). Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health and Mental Health Services Research, 38(2), 65–76.

- Quinn D, & Kim J (2017). Scaffolding Fidelity and Adaptation in Educational Program Implementation: Experimental Evidence From a Literacy Intervention. American Educational Research Journal, 54(6), 1187–1220.

- Rasberry CN, Slade S, Lohrmann DK, & Valois RF (2015). Lessons Learned From the Whole Child and Coordinated School Health Approaches. Journal of School Health, 85(11), 759–765.

- Ringwalt CL, Pankratz MM, Jackson-Newsom J, Gottfredson NC, Hansen WB, Giles SM, & Dusenbury L (2010). Three-year trajectory of teachers’ fidelity to a drug prevention curriculum. Prevention Science, 11(1), 67–76.

- Rockhill S (2017). Use of the Michigan Model for Health Curriculum among Michigan Public Schools: 2017. Michigan Department of Health and Human Services, Lifecourse Epidemiology and Genomics Division, Child Health Epidemiology Section.

- Rohrbach L, Sun P, & Sussman S (2010). One-year follow-up evaluation of the Project Towards No Drug Abuse (TND) dissemination trial. Preventive Medicine, 51(3–4), 313–319.

- Rosenstock IM (1974). Historical Origins of the Health Belief Model. Health Education Monographs, 2(4), 328–335.

- SAMHSA (2018). National Registry of Evidence-Based Programs and Practices (NREPP). Substance Abuse and Mental Health Services Administration (SAMHSA). www.nrepp.samhsa.gov

- StataCorp (2019). Stata Multiple-Imputation Reference Manual: Release 16. StataCorp LP. https://www.stata.com/manuals/mi.pdf

- Stirman SW, Miller CJ, Toder K, & Calloway A (2013). Development of a framework and coding system for modifications and adaptations of evidence-based interventions. Implementation Science, 8(1).

- Weisz J, Kuppens S, Eckshtain D, Ugueto A, Hawley K, & Jensen-Doss A (2013). Performance of evidence-based youth psychotherapies compared with usual clinical care: A multilevel meta-analysis. JAMA Psychiatry, 70(7), 750–761.