Abstract

Background

Program directors (PDs) in emergency medicine (EM) receive an abundance of applications for very few residency training spots. It is unclear which selection strategies will yield the most successful residents. Many authors have attempted to determine which items in an applicant’s file predict future performance in EM.

Objectives

The purpose of this scoping review is to examine the breadth of evidence related to the predictive value of selection factors for performance in EM residency.

Methods

The authors systematically searched four databases and four organizational websites for peer‐reviewed and gray literature related to EM resident selection published between 1992 and February 2019. Two reviewers screened titles and abstracts for articles that met the inclusion criteria, according to the scoping review study protocol. The authors included studies if they specifically examined selection factors and whether those factors predicted performance in EM residency training in the United States.

Results

After screening 23,243 records, the authors selected 60 for full review. From these, the authors selected 15 published manuscripts, one unpublished manuscript, and 11 abstracts for inclusion in the review. These studies examined the United States Medical Licensing Examination (USMLE), Standardized Letters of Evaluation, Medical Student Performance Evaluation, medical school attended, clerkship grades, membership in honor societies, and other less common factors and their association with future EM residency training performance.

Conclusions

The USMLE was the most common factor studied. It unreliably predicts clinical performance, but more reliably predicts performance on licensing examinations. All other factors were less commonly studied and, similar to the USMLE, yielded mixed results.

Selecting residents for a graduate medical education (GME) training program is a difficult task. The average emergency medicine (EM) program receives 940 applications for 11 first postgraduate year (PGY‐1) positions.1 Programs typically rank 12.8 applicants per PGY‐1 position,1, 2 investing considerable time and resources in the screening, reviewing, and interviewing of medical student applicants.

When deciding where an applicant should fall on a program’s rank list, a program director (PD) may consider many factors, such as clerkship grades, United States Medical Licensing Examination (USMLE) scores, Standardized Letters of Evaluation (SLOEs), or an applicant’s potential to contribute to the program and the community.1 It is difficult to predict which selection strategies will identify the most successful residents.

Residency programs aim to build a strong cohort of residents who will become successful physicians. The definition of a “successful” resident is controversial,1, 3 but may include one who is clinically competent, as measured by the Accreditation Council for Graduate Medical Education (ACGME) core competencies4 and milestones,5 or one who passes board qualifying examinations.

Several studies across specialties have analyzed which applicant qualities best predict future resident performance. For example, one multidisciplinary single‐institution study found that a student’s class rank or quantile on the dean’s letter, now known as the Medical Student Performance Evaluation (MSPE), predicted intern performance.6 Another study in orthopedic surgery found that USMLE Step 2 scores, number of clerkship honors, and Alpha Omega Alpha (AOA) membership correlated strongly with objective and subjective measures of resident success.7 However, the qualities that predict a successful internal medicine or orthopedic resident may not predict success in EM. Multiple studies have attempted to analyze the predictive value of specific EM resident selection strategies.8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34 Some studies have reported the relative importance PDs place on each factor during the selection process, but these reports reflect opinion rather than measured outcomes.35, 36, 37 A recent review studied selection factors as they related to resident performance for all specialties,38 but no comprehensive review has evaluated the predictive value of selection factors specific to EM residency performance. The purpose of this scoping review is to examine the breadth of evidence related to the predictive value of selection factors for performance in EM residency.

Methods

After confirming that no other systematic or scoping review on this topic had been performed, the authors conducted a scoping review to describe which resident selection items predict a successful EM resident. The objectives, inclusion criteria, and methods for this scoping review were specified in advance in a protocol, which can be found in Data Supplement S1, Appendix S1 (available as supporting information in the online version of this paper, which is available at http://onlinelibrary.wiley.com/doi/10.1002/aet2.10411/full).

We used the scoping review methodology first proposed by Arksey and O’Malley39 and further revised by the Joanna Briggs Institute40 to address our study objective. We then incorporated the guidelines delineated by the PRISMA Extension for Scoping Reviews: Checklist and Explanation into the appendix table (Data Supplement S1, Appendix S2).41 We appraised the quality of the literature using the STROBE Statement checklist and expressed it as a percentage of the total possible score.42
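The paper does not publish its item-level scoring rubric, so the following is only a minimal sketch of expressing a checklist-based quality appraisal as a percentage of the total possible score. It assumes, hypothetically, that each checklist item carries a fixed maximum number of points; the helper `quality_percentage` and the example scores are illustrative and do not come from the included studies.

```python
def quality_percentage(earned, possible):
    """Return earned checklist points as a percentage of the total possible score.

    Hypothetical helper: earned[i] is the score awarded for item i and
    possible[i] is that item's maximum score (an assumed scoring scheme).
    """
    if len(earned) != len(possible):
        raise ValueError("earned and possible must list the same items")
    if any(e < 0 or e > p for e, p in zip(earned, possible)):
        raise ValueError("each item score must lie between 0 and its maximum")
    return 100.0 * sum(earned) / sum(possible)


# Illustrative study: one point per item on a 22-item checklist, 18 items met.
earned = [1] * 18 + [0] * 4
possible = [1] * 22
print(f"{quality_percentage(earned, possible):.1f}%")  # 81.8%
```

Weighting items by their maximum points, rather than simply counting items, lets the same function handle checklists whose items are scored on different scales.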

Eligibility Criteria (Inclusion Criteria)

Participants in each included study were residents or graduates of EM residency programs in the United States. Included studies were published papers, abstracts, and available unpublished papers that correlated one or more selection factors from medical school (e.g., USMLE scores) or from the start of residency (e.g., factors assessed during the first month of residency intended to act as surrogates for medical school factors) with performance outcomes during or after EM residency training. Eligible residency programs were 3‐ or 4‐year EM residency programs formed in or after 1970 and accredited by the ACGME or the American Osteopathic Association.

Information Sources (Search Strategy)

We comprehensively searched PubMed, Scopus, ERIC, and Web of Science for publications of all study designs, written in English, and pertaining to U.S. programs from 1992 to February 2019. The librarian (LM) designed the search strategy and applied it in each of the selected databases. The complete PubMed search strategy is provided as an example in Data Supplement S1, Appendix S1.

To identify gray literature, the authors searched the websites of four organizations (Society for Academic Emergency Medicine, Council of Residency Directors in Emergency Medicine [CORD‐EM], American College of Emergency Physicians, and Association of American Medical Colleges [AAMC]) for national meeting abstracts on the subject. For abstracts that met the inclusion criteria, we contacted the study authors to request a copy of their unpublished or published manuscript. We conducted the most recent search in February 2019.

Study Screening and Selection

Using our predefined inclusion and exclusion criteria, two reviewers (CG and AY) independently screened the titles and/or abstracts of articles retrieved by our search query. The senior investigator (MBO) resolved interreviewer discrepancies; approximately 16 of the original 60 articles required adjudication by this third reviewer. After screening titles, we read the full text of articles to determine whether each study should be included in the final review. The reviewers then screened the references and PubMed related articles for all included studies. We ensured literature saturation by using the Google Scholar “cited by” and “relevant articles” features for each included study.

Data Charting Process

We identified selection factor and outcome variable categories. We worked with a trained statistician (SS) to describe the relationship between each selection factor and each outcome variable.

Results

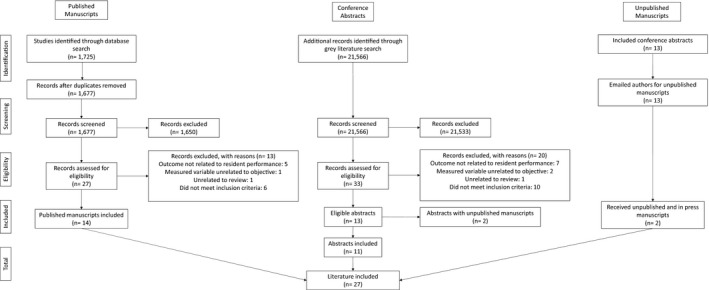

We identified 1,725 studies through our electronic database keyword search. We screened an additional 21,566 abstracts. After removing duplicates, the reviewers screened a total of 23,243 article titles for study eligibility. We read the full text of 27 articles and 33 abstracts, ultimately including 14 papers and 13 abstracts in the review. We attempted to contact the authors of each of the 13 abstracts to request the manuscript and received one unpublished manuscript23 and one in press (now published) manuscript22 from these queries. In total, we included 15 published articles,8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22 one unpublished manuscript,23 and 11 abstracts24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34 (Figure 1).
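The screening counts above can be reconciled with simple arithmetic. The sketch below uses only numbers reported in the text; the number of duplicates removed is not stated, so `implied_duplicates` is an inference that assumes both sources were pooled before deduplication.

```python
# Counts reported in the Results; only implied_duplicates is inferred.
database_records = 1_725       # electronic database keyword search
additional_abstracts = 21_566  # additional abstracts screened
titles_screened = 23_243       # after removing duplicates
implied_duplicates = database_records + additional_abstracts - titles_screened

full_text_reviewed = 27 + 33   # full-text articles + abstracts
included_papers, included_abstracts = 14, 13
in_press, unpublished = 1, 1   # manuscripts obtained by contacting abstract authors

# Each manuscript obtained replaces its abstract in the final tally.
published = included_papers + in_press
abstracts_only = included_abstracts - in_press - unpublished
total_included = published + unpublished + abstracts_only

print(implied_duplicates, full_text_reviewed)                  # 48 60
print(published, unpublished, abstracts_only, total_included)  # 15 1 11 27
```

The totals match the 60 records selected for full review and the 27 included studies reported in the Abstract.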

Figure 1.

Flowsheet for inclusion and exclusion of studies in the scoping review.

The 27 included studies examined the following selection factor categories: standardized tests, letters of evaluation or recommendation, MSPE, interview, medical school reputation, clerkship grades, audition elective, honor society membership, and other infrequently cited factors (e.g., sending thank you letters). Some authors studied whether the student’s position on the National Resident Matching Program (NRMP) rank order list (ROL) predicted success.

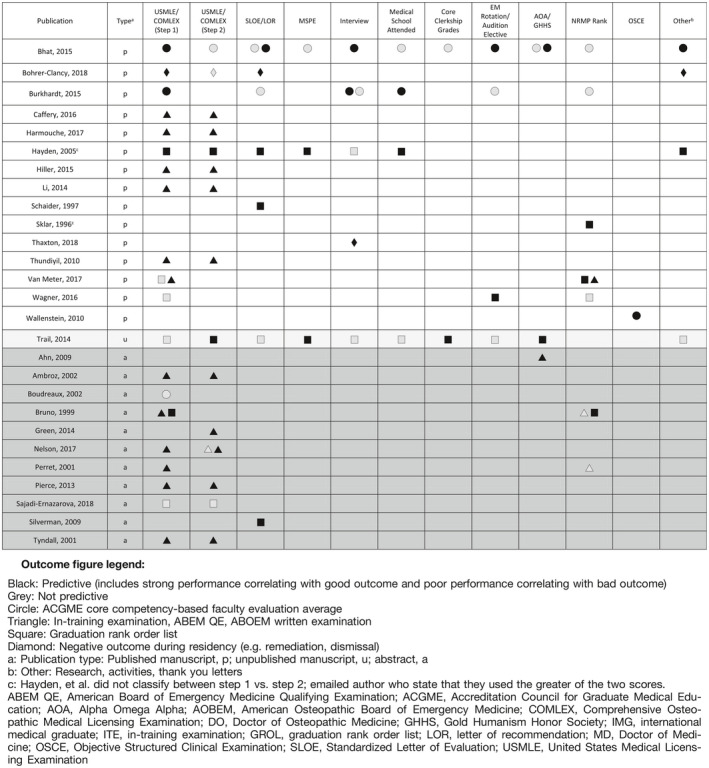

The authors of these studies defined success by the following: in‐training examination (ITE) performance,13, 18, 19, 25, 27, 28, 29, 30, 34 passage of the American Board of Emergency Medicine (ABEM) Qualifying Examination (QE)11, 22, 29 or the American Osteopathic Board of Emergency Medicine (AOBEM) written examination,14, 31 average ACGME core competency‐based Likert‐scale faculty evaluation scores,8, 10, 21, 26 or a graduation rank order list (GROL).12, 15, 16, 19, 20, 23, 27, 32, 33 A GROL is a ranked list of graduates, determined by a vote of faculty or program leaders at or after graduation and made specifically for the purposes of the study. Table 1 and Data Supplement S1, Appendix S2, list each included study, the selection factors it studied, the assessment method it used, and whether each selection factor was predictive or not significantly predictive of success in residency. Table 1 considers “predictive” both studies that linked strong performance on a selection factor with strong performance during or after residency and studies that linked poor performance on a selection factor with poor performance during or after residency. Here we describe each selection factor and its predictive value according to the published literature.

Table 1.

Selection factors and their association with residency outcomes. The table includes each study in the review, the selection factors it studied, the outcome measured (symbols), and whether that factor was predictive of the outcome (black symbol for predictive; gray/outlined symbol for not predictive). An item is marked “predictive” (black icon) even if it was predictive only in the univariable analysis.

Interviews

Two studies investigated traditional, nonstandardized interviews. Bhat and colleagues8 examined 277 EM residents from nine programs in a retrospective cohort study. They found that the interview score (top, middle, or lower third), during a traditional interview, was one of several predictive factors for residency success, as measured by faculty evaluations at the end of residency. Hayden et al.,12 however, did not find a correlation between the traditional interview and overall residency success in 54 residents.

A standardized, behavioral interview was explored by Thaxton and colleagues,17 who interviewed 106 first‐month interns from a single institution over 8 years. The authors coded interns’ responses to the question “Why is medicine important to you?” as “self‐focused” or “other‐focused” (e.g., “I enjoy the challenge” vs. “I enjoy helping patients”). A higher proportion of residents who gave “self‐focused” answers underwent remediation during residency than did those who gave “other‐focused” answers.

Only one study in EM has tested the predictive value of the multiple mini interview (MMI). Burkhardt and colleagues10 sought to determine whether the MMI would provide additional value beyond traditional selection factors. They administered an eight‐station MMI to 65 interns at three programs during their first month of residency. In univariable analysis, the MMI correlated with overall performance on an end‐of‐intern‐year ACGME core competency‐based assessment. However, it did not remain in the multivariable model that included other selection factors.
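Why can a factor correlate with an outcome on its own yet drop out of a multivariable model? A first‐order partial correlation, r_xy·z = (r_xy − r_xz·r_yz) / √((1 − r_xz²)(1 − r_yz²)), is a simple stand‐in for the regression models used in these studies (which are not reproduced here): if the MMI’s association with performance is fully explained by another selection factor that correlates with both, adjusting for that factor removes it. All correlations below are hypothetical.

```python
import math


def partial_correlation(r_xy, r_xz, r_yz):
    """First-order partial correlation of x and y after controlling for z."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Hypothetical correlations (not taken from any included study):
r_mmi_outcome = 0.30    # x = MMI score, y = end-of-internship rating
r_mmi_other = 0.60      # z = another selection factor correlated with the MMI
r_other_outcome = 0.50  # z with y

# The univariable association (0.30) vanishes once z is held constant.
print(round(partial_correlation(r_mmi_outcome, r_mmi_other, r_other_outcome), 3))  # 0.0
```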

The standardized video interview (SVI) was developed by the AAMC and introduced to EM during the 2017 to 2018 application cycle.43, 44 At the time of publication, no studies had examined whether the SVI could predict future performance. In October 2019, the AAMC announced that they would discontinue use of the SVI for EM in the 2020 to 2021 application season.45

Medical School Attended

Hayden and colleagues12 retrospectively studied 54 residents over a 9‐year period from a single residency program. They found that the competitiveness of the medical school attended (MSA), as measured by published medical school ratings and faculty experience, was the strongest predictor of overall success at the time of graduation. Burkhardt et al.10 found MSA was one of several applicant factors that correlated with end of internship performance in the multivariable analysis; however, Bhat et al.8 did not find a statistically significant correlation between MSA and resident performance.

Audition Elective and Emergency Medicine Clerkship Performance

Wagner and colleagues20 reviewed 93 residents from four California residency programs and found that matching into the program at which a student completed an EM clerkship “audition rotation” correlated with strong performance during residency. Bhat et al.8 found an association between students receiving honors grades on EM clerkships and top‐third performance at the end of residency. At the end of internship, Burkhardt et al.10 did not find a similar correlation with overall performance.

Standardized Tests

Twenty‐one studies examined the relationship between standardized tests during medical school and resident performance in EM.8, 9, 10, 11, 12, 13, 14, 18, 19, 20, 22, 23, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34

USMLE and Clinical Performance

Studies have found mixed results as to whether USMLE scores are associated with clinical performance during residency, as measured by core competency‐based assessments or a GROL. Bhat et al.8 found that USMLE Step 1, but not Step 2, correlated with being classified in the top one‐third of one’s residency class; however, it was a weaker predictor than other variables, namely, EM rotation grades, AOA designation, and publications. Burkhardt et al.10 and Hayden et al.12 found a similar correlation between USMLE scores and performance. However, other similarly sized studies found different results. Van Meter and colleagues19 examined 286 residents from five EM programs to determine the correlation among USMLE Step 1, ITE scores, NRMP ROL, and GROL and found no correlation between USMLE Step 1 performance and GROL; neither did Wagner et al.20 Several abstracts and an unpublished manuscript yielded mixed results for both examinations.23, 26, 27, 32

Bohrer‐Clancy and colleagues9 retrospectively studied 260 residents over a 19‐year period at a single institution to determine whether selection factors are associated with negative outcomes during residency, defined as formal letters of deficiency, letters of reprimand, extension of residency for academic reasons, or failure to finish residency.9, 46 Failing USMLE Step 1 correlated with adverse outcomes in the univariable analysis but not in the multivariable analysis; results for Step 2 were not significant.9

USMLE and ITE Performance

The relationship between the USMLE and ITE or ABEM QE performance appears to be stronger than its relationship with clinical performance. Thundiyil and colleagues18 retrospectively examined 51 residents from a single program and found a correlation between USMLE scores and mean ITE scores: moderate for USMLE Step 2 and mild for Step 1. Hiller and colleagues13 observed 62 interns from six residency programs to determine whether first‐month intern performance on the National Board of Medical Examiners (NBME) EM Advanced Clinical Examination (ACE) correlated with ITE scores. In addition to a significant association between EM‐ACE and ITE scores, the authors found significant associations between the ITE and USMLE Steps 1 and 2 in univariable analysis, but USMLE Step 2 did not remain in the multivariable model. Van Meter et al.19 found a weak positive correlation between USMLE Step 1 performance and ITE performance in a multilevel model. Several abstracts have shown a positive relationship between Step 1 and/or Step 2 and the ITE, but none had been published as full manuscripts at the time of this study.25, 27, 28, 29, 30, 34

USMLE and ABEM QE Performance

Harmouche et al.11 retrospectively studied 197 residents from nine EM programs and found that residents who passed the ABEM QE on the first attempt (n = 187) had higher mean USMLE Step 1 and Step 2 scores than those who failed (n = 10; passed vs. failed, 220 vs. 206 for Step 1 and 229 vs. 203 for Step 2). Residents who passed both the QE and the oral examination had a significantly higher mean USMLE Step 2 score than those who failed either examination on the first attempt (229 vs. 201); differences in USMLE Step 1 scores were not statistically significant.11 These results are supported by an abstract by Nelson and Calandrella,29 which found significantly higher USMLE scores among residents who passed the ABEM QE (passed vs. failed, 220 vs. 207 for Step 1 and 228 vs. 208 for Step 2).29 A study of 101 residents from two programs found that a score cutoff 7 points below the USMLE national mean yielded 64% sensitivity and 81% specificity for passage of the ABEM QE on the first attempt.22
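Sensitivity and specificity describe how well a score cutoff separates residents who pass from those who fail. The sketch below treats a score at or above the cutoff as predicting a first‐attempt pass, so sensitivity is the share of passers at or above the cutoff and specificity is the share of non‐passers below it; the cited study may define its positive class differently. The scores, outcomes, cutoff, and the helper `cutoff_performance` are hypothetical and do not reproduce the data from the 101‐resident study.

```python
def cutoff_performance(scores, passed, cutoff):
    """Sensitivity and specificity of 'score >= cutoff' as a predictor of passing.

    Positive class (an assumption): the resident passed on the first attempt.
    """
    tp = fn = tn = fp = 0
    for score, did_pass in zip(scores, passed):
        predicted_pass = score >= cutoff
        if did_pass and predicted_pass:
            tp += 1  # passer correctly flagged by the cutoff
        elif did_pass:
            fn += 1  # passer who scored below the cutoff
        elif predicted_pass:
            fp += 1  # non-passer who scored at or above the cutoff
        else:
            tn += 1  # non-passer correctly below the cutoff
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical residents: USMLE-style scores and first-attempt pass (True) or fail (False).
scores = [250, 240, 235, 228, 222, 215, 210, 205, 200, 195]
passed = [True, True, True, True, False, True, False, True, False, False]
sens, spec = cutoff_performance(scores, passed, cutoff=212)
print(f"sensitivity = {sens:.2f}, specificity = {spec:.2f}")  # sensitivity = 0.83, specificity = 0.75
```

Raising the cutoff can only lower (or leave unchanged) sensitivity and raise (or leave unchanged) specificity, so any single threshold reflects a trade‐off rather than a clean separation.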

Comprehensive Osteopathic Licensing Examination

Li et al.14 investigated 451 EM residency graduates who took AOBEM Part 1 in 2011 and 2012 and found significant correlations between Comprehensive Osteopathic Licensing Examination (COMLEX) Level 1, 2, and 3 scores and both AOBEM scores and passage. One abstract correlated COMLEX Level 1 and 2 performance with ABEM ITE performance among 86 residents.31

Letters of Recommendation and Evaluation

In 1995, CORD‐EM created a standardized letter of recommendation (SLOR), which was renamed the SLOE in 2013.47, 48 Before 1995, some programs used a preprinted questionnaire (PPQ) that was conceptually similar to the SLOR/SLOE.15 One study of 17 residents found that these PPQs predicted success during residency, as measured by a GROL.15

Studies on the SLOR/SLOE have yielded mixed results. In multivariable analysis, Burkhardt et al.10 did not find that SLORs predicted performance during intern year. Hayden et al.12 reported that letters of recommendation (LORs, assumed to be SLORs) were a significant predictor of success for applicants from non–top tier medical schools. Bhat et al.8 unexpectedly found that the final two “ranking” questions (global assessment and competitiveness) on SLORs written by faculty other than residency program leadership correlated with resident performance at graduation. Bohrer‐Clancy et al.9 found that all four applicants who had a red flag either on a LOR/SLOR/SLOE or during a clerkship rotation had a negative outcome during residency. An abstract by Silverman et al.33 reviewed the SLOR forms of six interns at a single institution and found that the question asking how highly the applicant would be placed on a program’s match list correlated with overall EM intern performance.

MSPE, Class Rank, and Clerkship Grades

The MSPE is a summative evaluation of a student’s experiences, attributes, and academic performance during medical school.49 It usually contains a ranking statement that places the student’s overall performance in a quantile.50, 51, 52 Trail et al.23 and Hayden et al.12 found a correlation between MSPE rank and performance; Trail et al.23 linked a higher GROL position and higher overall assessment during residency to an “outstanding” MSPE category or an honors grade in the internal medicine clerkship. Bhat et al.,8 however, did not find a significant association between the MSPE or core clerkship grades and performance.

Honor Society Membership

Bhat et al.8 and Trail et al.23 found that AOA designation was associated with higher performance during residency. Gold Humanism Honor Society membership, however, was not significantly associated with top one‐third performance.8 An abstract by Ahn et al.24 surveyed PDs and found that programs in which more than half of residents had AOA distinction performed better on the QE and oral boards.

Other Factors

Distinctive Leadership

Hayden et al.12 examined “other distinctive factors” from a student’s application, such as athletic accomplishments or leadership involvement, and found that they predicted overall success in residency.

Objective Structured Clinical Examination

Wallenstein et al.21 studied 18 first‐month interns’ performance on a five‐station objective structured clinical examination (OSCE) and found that it correlated with performance 18 months later on ACGME core competency‐based EM faculty global evaluations.

Publications

Bhat et al.8 found that having five or more presentations or publications before or during medical school was associated with top one‐third performance during residency.

Professionalism

The study by Bohrer‐Clancy et al.9 reported that students who did not send interview thank‐you notes or who took any leave of absence during medical school were more likely to have a negative outcome during residency.

NRMP Rank

Several papers and abstracts evaluated whether a resident’s position on a program’s NRMP ROL predicts performance, with mixed results. Sklar et al.16 studied 20 residents from a single institution and found that NRMP ROL position correlated moderately with resident performance at graduation; Van Meter et al.19 found a weak positive correlation, as did one abstract.27 Bhat et al.,8 Wagner et al.,20 and Burkhardt et al.10 did not find a correlation with clinical performance, and two abstracts did not find a correlation between ROL position and ITE performance.27, 30
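Because both NRMP ROL position and a GROL are rankings, a rank correlation such as Spearman’s rho is one natural way to quantify their agreement; the included studies may have used other statistics. The sketch below first converts each matched resident’s ROL position (which can skip numbers, because not every ranked applicant matches) into within‐class ranks and then applies the no‐ties formula ρ = 1 − 6Σd² / (n(n² − 1)). The data and helper names are hypothetical.

```python
def within_class_ranks(values):
    """Convert positions (lower = better) to ranks 1..n; assumes no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        ranks[index] = rank
    return ranks


def spearman_rho(x, y):
    """Spearman's rho using the no-ties formula 1 - 6*sum(d^2) / (n*(n^2 - 1))."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences of at least 2 items")
    rx, ry = within_class_ranks(x), within_class_ranks(y)
    n = len(x)
    d_squared = sum((a - b) ** 2 for a, b in zip(rx, ry))
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))


# Hypothetical class of six matched residents.
rol_position = [3, 7, 12, 15, 22, 30]  # position on the program's rank order list
grol_position = [2, 1, 4, 3, 6, 5]     # position on the graduation rank order list
print(round(spearman_rho(rol_position, grol_position), 3))  # 0.829
```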

Discussion

Many authors have attempted to quantify the qualities that predict future performance during EM residency. Results for each selection factor are mixed, and no single factor or combination of factors reliably predicts a “successful” resident. However, defining “success” is difficult. One may feel that a successful resident is one who appears at the top of an unofficial GROL, possessing many measurable and immeasurable qualities that make them intangibly “the best.” Although many studies used a GROL or another outcome such as ITE scores, passing the ABEM QE, or high faculty evaluations, all of these outcome measures have inherent flaws. A recent study identified 20 factors (traits, skills, and behaviors) as important in describing a successful EM resident.3

In current EM resident selection, over three‐quarters of PDs cite interviews, USMLE scores, SLOEs, and audition electives as important factors in making ranking decisions.1 Although the SLOE is rated as one of the most important factors for interview selection and ranking decisions,1, 47, 48 it is unclear whether it can accurately predict resident success.8, 10, 12, 15, 33 These studies probably suffer from selection bias: each program presumably used the SLOE in its selection decisions, residents were assessed program by program, and authors may have been unable to detect a performance difference if there was little variability in SLOE scores among matched residents. Future studies could leverage the eSLOE49 database to determine performance trends on a national scale. The SLOE has several published benefits, namely, inter‐rater reliability and ease and efficiency of review,47, 55, 56 which likely account for its high utilization.1, 53, 54, 55
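The selection‐bias concern above is often described as range restriction. The simulation below is a hypothetical sketch, not a model of any real SLOE data: it assumes a letter rating and later performance that correlate at 0.5 across all applicants and that a program selects residents solely on that rating. Under these assumptions, the correlation observed among selected residents is markedly weaker than in the full applicant pool, even though the underlying relationship is unchanged.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


random.seed(2020)
TRUE_R = 0.5  # assumed population correlation between letter rating and performance
applicants = []
for _ in range(5000):
    letter = random.gauss(0.0, 1.0)  # hypothetical standardized letter rating
    performance = TRUE_R * letter + math.sqrt(1 - TRUE_R ** 2) * random.gauss(0.0, 1.0)
    applicants.append((letter, performance))

full_r = pearson([a[0] for a in applicants], [a[1] for a in applicants])

# Only the top 10% by letter rating become residents whose performance is observed.
selected = sorted(applicants, key=lambda a: a[0], reverse=True)[:500]
restricted_r = pearson([s[0] for s in selected], [s[1] for s in selected])

print(f"all applicants: r = {full_r:.2f}; selected residents: r = {restricted_r:.2f}")
```

Real selection combines many factors, so the size of the attenuation in practice is unknown; the sketch only shows the direction of the effect.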

The USMLE is the most frequently studied selection factor in our review. Not surprisingly, the connection between USMLE performance and ITE and ABEM QE performance is stronger than its connection with clinical performance. The NBME cautions against differentiating between applicants using small variations in students’ scores, but does endorse the consideration of USMLE scores in conjunction with other selection factors.57 Currently, there is a national discourse regarding the elimination of numeric USMLE scores to move to a pass/fail system.58

Relying too heavily on any one application item has pitfalls; in isolation, many selection factors do not reliably predict performance. Even when combined for a more global picture, such as NRMP rank list position, correlation is not reliably demonstrated. Each selection factor may suffer from inconsistency or, worse, bias. Black and Asian medical students are less likely to be inducted into AOA than their white counterparts.59, 60 Writers of the MSPE may use less positive language for certain racial/ethnic and gender groups.61 Additionally, the MSPE may be inconsistent in its categories and rankings.50, 61

To reduce reliance on items such as the USMLE and AOA, the AAMC created task forces to better assess students’ global performance and noncognitive domains. For example, the AAMC revised its MSPE guidelines in 2017 to improve the consistency of MSPEs across schools.49 The AAMC also piloted the SVI in EM.43, 44 The AAMC reports that SVI scores do not show bias and do not correlate with USMLE scores, suggesting that the SVI could provide new information for programs;62, 63 however, in 2019 the AAMC announced that it would discontinue the SVI in EM for the 2020‐2021 application season.45

The MMI has been more heavily studied in undergraduate medical education admissions than in GME.64, 65 While the MMI is valuable because it can assess desired constructs during selection, it may be operationally poorly suited to EM. With a high match rate for U.S. seniors applying to EM,66 many applicants have the luxury of interviewing a program as much as the program is interviewing them. Thus, the MMI may be too one‐sided for selection in EM.67, 68, 69 Other innovative techniques in EM and other specialties include virtual reality–based and simulation‐based assessments.70, 71 Structured, behavioral interviews may measure noncognitive competencies better than traditional interviews.17, 72 We would not recommend using published interview questions to predict performance; once the applicant pool is familiar with the questions, their predictive value may be diminished.

Although the included studies seek to predict a highly successful resident, some may argue that, after years of training and selection, the vast majority of residents are successful. Realistically, many PDs are satisfied graduating capable, competent physicians who do not have professionalism issues. A better question for PDs may be, “Would I choose this resident again?” The time spent remediating a problematic resident may matter more than the benefit of graduating a resident who is “more successful.” Thus, studies that predict unprofessionalism, such as those of Bohrer‐Clancy et al.9 and Thaxton et al.,17 may be more relevant to PDs. Several studies in other specialties have similarly examined early markers of unprofessionalism. For example, Papadakis and colleagues73 found that medical students who had concerning, problematic, or extreme unprofessional behavior noted in their medical school file were much more likely to be disciplined later by state medical boards. A study in psychiatry found similar results, with negative comments on the dean’s letter/MSPE being associated with negative actions during residency.74 We interpret studies such as that of Bohrer‐Clancy et al.9 with caution, because practices that “flag” a student with a previous leave of absence could discriminate against students who took pregnancy, bereavement, or medical leave.

While avoiding unprofessional and problematic residents is satisfactory, ideally, PDs hope to graduate residents who go on to make a positive impact on the health care system. It is unclear whether the constructs measured in GME actually predict who will have such an impact. For example, a “top resident” who goes on to work in a community already well served by physicians may not have the same impact as an “average” resident who goes on to improve health outcomes in a medically underserved community or by working in an academic medical center. Ideally, future research would link selection factors with patient and community outcomes, although this would be difficult to study.

Limitations

As discussed, defining “success” is difficult. Furthermore, success during residency may not equate to success as a practicing physician. Many of the included studies have flawed endpoints. For example, the relationship between resident performance and a resident’s place on a GROL is nonlinear. For some programs and graduation years, there may be very little difference between the top and bottom residents; in other years, the lowest‐performing resident may be substantially weaker than the middle or top cohort. Moreover, because a GROL ranks graduates, every resident on it should be competent to graduate.
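The nonlinearity of a GROL can be illustrated with two hypothetical graduating classes whose composite performance scores differ in spread but produce identical rank orders. The scores and the helpers `grol` and `gaps` are illustrative assumptions, not study data.

```python
def grol(scores):
    """Hypothetical graduation rank order list: names from highest to lowest score."""
    return sorted(scores, key=scores.get, reverse=True)


def gaps(scores):
    """Score differences between adjacent residents on the list."""
    ordered = sorted(scores.values(), reverse=True)
    return [higher - lower for higher, lower in zip(ordered, ordered[1:])]


# Two hypothetical graduating classes with composite performance scores (0-100).
class_a = {"Resident 1": 92, "Resident 2": 90, "Resident 3": 89, "Resident 4": 88}
class_b = {"Resident 1": 95, "Resident 2": 91, "Resident 3": 87, "Resident 4": 60}

print(grol(class_a) == grol(class_b))  # True: identical rank orders
print(gaps(class_a))  # [2, 1, 1]
print(gaps(class_b))  # [4, 4, 27]
```

A study that uses only GROL positions treats the last resident in both classes identically, even though the performance gap in the second class is far larger.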

Some papers may not have listed all the variables they studied and may have reported only factors that they found to be predictive. The included literature may also suffer from publication bias, because negative results tend to remain unpublished. We tried to minimize this by searching conference abstracts, although conference abstracts may also be subject to publication bias.

Conclusions

There is mixed evidence for whether selection factors can predict emergency medicine resident performance. The United States Medical Licensing Examination was the most common factor studied. It unreliably predicts clinical performance, but more reliably predicts performance on licensing examinations. All other factors were less commonly studied and, similar to the United States Medical Licensing Examination, yielded mixed results. No single factor has reliably been shown to predict resident performance.

Supporting information

Data Supplement S1. Supplemental material.

AEM Education and Training 2020;4:191–201

The authors have no relevant financial information or potential conflicts to disclose.

Author contributions: MBO, AY, and CG conceived the study; MBO, LM, AY, and CG designed the study; MBO supervised the study; LM, AY, and CG performed literature review; SS provided statistical expertise; CG, AY, SS, ST, and MBO drafted the manuscript; and all authors contributed substantially to its revision. MBO takes responsibility for the paper as a whole.

References

- 1. National Resident Matching Program, Data Release and Research Committee . Results of the 2018 NRMP Program Director Survey. Washington, DC: National Resident Matching Program, 2018. Available at: https://www.nrmp.org/wp-content/uploads/2018/07/NRMP-2018-Program-Director-Survey-for-WWW.pdf. Accessed June 11, 2019. [Google Scholar]

- 2. National Resident Matching Program, Data Release and Research Committee . Impact of Length of Rank Order List on Match Results: 2002‐2018 Main Residency Match. Available at: http://www.nrmp.org/wp-content/uploads/2018/03/Impact-of-Length-of-Rank-Order-List-on-Match-Results-2018-Main-Match.pdf. Accessed June 11, 2019. [Google Scholar]

- 3. Pines JM, Alfaraj S, Batra S, et al. Factors important to top clinical performance in emergency medicine residency: results of an ideation survey and Delphi panel. AEM Educ Train 2018;2:269–76.

- 4. Accreditation Council for Graduate Medical Education. Milestones Guidebook for Residents and Fellows. Available at: https://www.acgme.org/Portals/0/PDFs/Milestones/MilestonesGuidebookforResidentsFellows.pdf. Accessed June 11, 2019.

- 5. Accreditation Council for Graduate Medical Education and American Board of Emergency Medicine. The Emergency Medicine Milestone Projects. Available at: https://www.acgme.org/Portals/0/PDFs/Milestones/EmergencyMedicineMilestones.pdf?ver=2015-11-06-120531-877. Accessed June 11, 2019.

- 6. Lurie SJ, Lambert DR, Grady‐Weliky TA. Relationship between dean’s letter rankings and later evaluations by residency program directors. Teach Learn Med 2007;19:251–6.

- 7. Raman T, Alrabaa RG, Sood A, Maloof P, Benevenia J, Berberian W. Does residency selection criteria predict performance in orthopaedic surgery residency? Clin Orthop Relat Res 2016;474:908–14.

- 8. Bhat R, Takenaka K, Levine B, et al. Predictors of a top performer during emergency medicine residency. J Emerg Med 2015;49:505–12.

- 9. Bohrer‐Clancy J, Lukowski L, Turner L, Staff I, London S. Emergency medicine residency applicant characteristics associated with measured adverse outcomes during residency. West J Emerg Med 2018;19:106–11.

- 10. Burkhardt JC, Stansfield RB, Vohra T, Losman E, Turner‐Lawrence D, Hopson LR. Prognostic value of the multiple mini‐interview for emergency medicine residency performance. J Emerg Med 2015;49:196–202.

- 11. Harmouche E, Goyal N, Pinawin A, Nagarwala J, Bhat R. USMLE scores predict success in ABEM initial certification: a multicenter study. West J Emerg Med 2017;18:544–9.

- 12. Hayden SR, Hayden M, Gamst A. What characteristics of applicants to emergency medicine residency programs predict future success as an emergency medicine resident? Acad Emerg Med 2005;12:206–10.

- 13. Hiller K, Franzen D, Heitz C, Emery M, Poznanski S. Correlation of the National Board of Medical Examiners Emergency Medicine Advanced Clinical Examination given in July to intern American Board of Emergency Medicine in‐training examination scores: a predictor of performance? West J Emerg Med 2015;16:957–60.

- 14. Li F, Gimpel JR, Arenson E, Song H, Bates BP, Ludwin F. Relationship between COMLEX‐USA scores and performance on the American Osteopathic Board of Emergency Medicine Part I certifying examination. J Am Osteopath Assoc 2014;114:260–6.

- 15. Schaider JJ, Rydman RJ, Greene CS. Predictive value of letters of recommendation vs questionnaires for emergency medicine resident performance. Acad Emerg Med 1997;4:801–5.

- 16. Sklar DP, Tandberg D. The relationship between National Resident Match Program rank and perceived performance in an emergency medicine residency. Am J Emerg Med 1996;14:170–2.

- 17. Thaxton RE, Jones WS, Hafferty FW, April CW, April MD. Self vs. other focus: predicting professionalism remediation of emergency medicine residents. West J Emerg Med 2018;19:35–40.

- 18. Thundiyil JG, Modica RF, Silvestri S, Papa L. Do United States Medical Licensing Examination (USMLE) scores predict in‐training test performance for emergency medicine residents? J Emerg Med 2010;38:65–9.

- 19. Van Meter M, Williams M, Banuelos R, et al. Does the National Resident Match Program Rank List predict success in emergency medicine residency programs? J Emerg Med 2017;52:77–82.

- 20. Wagner JG, Schneberk T, Zobrist M, et al. What predicts performance? A multicenter study examining the association between resident performance, rank list position, and United States Medical Licensing Examination Step 1 scores. J Emerg Med 2017;52:332–40.

- 21. Wallenstein J, Heron S, Santen S, Shayne P, Ander D. A core competency‐based Objective Structured Clinical Examination (OSCE) can predict future resident performance. Acad Emerg Med 2010;17:S67–71.

- 22. Caffery T, Fredette J, Musso MW, Jones GN. Predicting American Board of Emergency Medicine qualifying examination passage using United States Medical Licensing Examination step scores. Ochsner J 2018;18:204–8.

- 23. Trail L, Hays J, Vohra T, Goyal N, Lewandowski C, Charlotte B, Krupp S. The right stuff: can selection criteria predict success in emergency medicine residency training? [Abstract 110]. West J Emerg Med 2014;15(5).

- 24. Ahn J, Christian MR, Patel SR, Allen NG, Theodosis C, Babcock C. Characteristics of emergency medicine residency curricula that affect board performance [Abstract 30]. Ann Emerg Med 2009;54:S10.

- 25. Ambroz KG, Chan SB. Correlation of the USMLE with the emergency residents’ in‐service exam [Abstract 328]. Acad Emerg Med 2002;9:480.

- 26. Boudreaux ED, Perret N, Mandry CV, Broussard J, Wood K. The relation between standardized test scores and evaluations of medical competence among emergency medicine residents [Abstract 370]. Acad Emerg Med 2002;9:495.

- 27. Bruno R, Su M, Lucchesi M. Predictors of EM Residency Applicants’ Success Early in Residency [Abstract 131]. Presented at the Society for Academic Emergency Medicine Annual Meeting, Boston, MA, May 1999.

- 28. Green WL, Velez LI, Roppolo LP, et al. What Study Materials and Study Habits Correlate With High ABEM In‐training Examination Scores? [Abstract 404]. Presented at the Society for Academic Emergency Medicine Annual Meeting, Denver, CO, May 2014.

- 29. Nelson M, Calandrella C. Does USMLE Step 1 & 2 scores predict success on ITE and ABEM qualifying exam: a review of an emergency medicine residency program from its inception [Abstract 146]. Ann Emerg Med 2017;70:S58–S59.

- 30. Perret JN, Boudreaux ED, Francis JL. The relation between match rank, USMLE‐I, and ABEM performance for an emergency medicine residency program [Abstract 153]. Acad Emerg Med 2001;8:470.

- 31. Pierce DL, Mirre‐Gonzalez MA, Carter MA, Nug D, Salamanca Y. Performance on the COMLEX‐USA exams predicts performance on EM‐Residency in‐training exams. Acad Emerg Med 2013;20(5 Suppl 1).

- 32. Sajadi‐Ernazarova K, Ramoska E, Saks M. USMLE scores do not predict ultimate clinical performance in an emergency medicine residency program. West J Emerg Med 2018;19:S24–5.

- 33. Silverman M, Mayer C, Shih R. Does the Standard Letter of Recommendation Help Predict Emergency Medicine Intern Performance? [Abstract 104]. Presented at the Society for Academic Emergency Medicine Annual Meeting, New Orleans, LA, May 2009.

- 34. Tyndall JA, Leber M, Yeh B. USMLE scores as a predictor of resident performance on the in‐training examination [Abstract 154]. Acad Emerg Med 2001;8:470–1.

- 35. Balentine J, Gaeta T, Spevack T. Evaluating applicants to emergency medicine residency programs. J Emerg Med 1999;17:131–4.

- 36. Crane JT, Ferraro CM. Selection criteria for emergency medicine residency applicants. Acad Emerg Med 2000;7:54–60.

- 37. Breyer MJ, Sadosty A, Biros M. Factors affecting candidate placement on an emergency medicine residency program’s rank order list. West J Emerg Med 2012;13:458–62.

- 38. Hartman ND, Lefebvre CW, Manthey DE. A narrative review of the evidence supporting factors used by residency program directors to select applicants for interviews. J Grad Med Educ 2019;11:268–73.

- 39. Arksey H, O’Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol 2005;8:19–32.

- 40. Peters MD, Godfrey CM, Khalil H, McInerney P, Parker D, Soares CB. Guidance for conducting systematic scoping reviews. Int J Evid Based Healthc 2015;13:141–6.

- 41. Tricco AC, Lillie E, Zarin W, et al. PRISMA extension for scoping reviews (PRISMA‐ScR): checklist and explanation. Ann Intern Med 2018;169:467–73.

- 42. STROBE Statement—Checklist of Items That Should Be Included in Reports of Observational Studies. Available at: https://www.strobe-statement.org/fileadmin/Strobe/uploads/checklists/STROBE_checklist_v4_combined.pdf. Accessed June 23, 2019.

- 43. Bird S, Blomkalns A, Deiorio NM, Gallahue FE. Stepping up to the plate: emergency medicine takes a swing at enhancing the residency selection process. AEM Educ Train 2018;2:61–5.

- 44. Bird SB, Hern HG, Blomkalns A, et al. Innovation in residency selection: the AAMC standardized video interview. Acad Med 2019;94:1489–97.

- 45. Association of American Medical Colleges. AAMC Standardized Video Interview Evaluation Summary. AAMC.org. Available at: https://students-residents.aamc.org/applying-residency/article/svi-evaluation-summary/. Accessed October 22, 2019.

- 46. Asher S, Kilby KA. Proceed with caution before assigning “red flags” in residency applications. West J Emerg Med 2018;19:737–8.

- 47. Keim S, Rein J, Chisholm C, et al. A standardized letter of recommendation for residency application. Acad Emerg Med 1999;6:1141–6.

- 48. Martin DR, McNamara R. The CORD standardized letter of evaluation: have we achieved perfection or just a better understanding of our limitations? J Grad Med Educ 2014;6:353–4.

- 49. Association of American Medical Colleges. Recommendations for Revising the Medical Student Performance Evaluation. Available at: https://www.aamc.org/download/470400/data/mspe-recommendations.pdf. Accessed June 11, 2019.

- 50. Boysen‐Osborn M, Mattson J, Yanuck J, et al. Ranking practice variability in the medical student performance evaluation: so bad, it’s “good”. Acad Med 2016;91:1540–5.

- 51. Horn J, Richman I, Hall P, et al. The state of medical student performance evaluations: Improved transparency or continued obfuscation? Acad Med 2016;91:1534–9.

- 52. Love JN, Smith J, Weizberg M, et al. Council of Emergency Medicine Residency Directors’ standardized letter of recommendation: the program director’s perspective. Acad Emerg Med 2014;21:680–7.

- 53. Negaard M, Assimacopoulos E, Harland K, Van Heukelom J. Emergency medicine residency selection criteria: an update and comparison. AEM Educ Train 2018;2:146–53.

- 54. Council of Emergency Medicine Residency Directors (CORD). The Standardized Letter of Evaluation (SLOE): Instructions for Authors. Available at: https://www.cordem.org/resources/residency-management/sloe/esloe/. Accessed May 9, 2019.

- 55. Love JN, Deiorio NM, Ronan‐Bentle S, et al. Characterization of the Council of Emergency Medicine Residency Directors’ standardized letter of recommendation in 2011–2012. Acad Emerg Med 2013;20:926–32.

- 56. Girzadas DV, Harwood RC, Dearie J, Garrett S. A comparison of standardized and narrative letters of recommendation. Acad Emerg Med 1998;5:1101–4.

- 57. United States Medical Licensing Examination. USMLE Score Interpretation Guidelines. Available at: https://www.usmle.org/pdfs/transcripts/USMLE_Step_Examination_Score_Interpretation_Guidelines.pdf. Accessed June 11, 2019.

- 58. United States Medical Licensing Examination. InCUS Meeting Concludes. Available at: https://www.usmle.org/pdfs/incus/Post-conference_statement.pdf. Accessed June 11, 2019.

- 59. Boatright D, Ross D, O’Connor P, Moore E, Nunez‐Smith M. Racial disparities in medical student membership in the Alpha Omega Alpha Honor Society. JAMA Intern Med 2017;177:659–65.

- 60. Wijesekera TP, Kim M, Moore EZ, Sorenson O, Ross DA. All other things being equal: exploring racial and gender disparities in medical school honor society induction. Acad Med 2019;94:562–9.

- 61. Ross DA, Boatright D, Nunez‐Smith M, Jordan A, Chekroud A, Moore EZ. Differences in words used to describe racial and gender groups in medical student performance evaluations. PLoS ONE 2017;12:e0181659.

- 62. Egan D, Husain A, Bond MC, et al. Standardized video interviews do not correlate to United States Medical Licensing Examination Step 1 and Step 2 scores. West J Emerg Med 2019;20:87–91.

- 63. Gallahue FE, Hiller KM, Bird SB, et al. The AAMC standardized video interview: reactions and use by residency program directors during the 2018 application cycle. Acad Med 2019;94:1506–12.

- 64. Rees EL, Hawarden AW, Dent G, Hays R, Bates J, Hassell AB. Evidence regarding the utility of multiple mini‐interview (MMI) for selection to undergraduate health programs: a BEME systematic review: BEME guide no. 3. Med Teach 2016;38:433–55.

- 65. Eva KW, Rosenfeld J, Reiter HI, Norman GR. An admissions OSCE: the multiple mini‐interview. Med Educ 2004;38:324–36.

- 66. National Resident Matching Program. Results and Data: 2019 Main Residency Match®. Washington, DC, 2019. Available at: https://mk0nrmpcikgb8jxyd19h.kinstacdn.com/wp-content/uploads/2019/04/NRMP-Results-and-Data-2019_04112019_final.pdf. Accessed June 11, 2019.

- 67. Soares WE, Sohoni A, Hern HG, Wills CP, Alter HJ, Simon BC. Comparison of the multiple mini‐interview with the traditional interview for US emergency medicine residency applicants: a single institution experience. Acad Med 2015;90:76–81.

- 68. Hopson LR, Burkhardt JC, Stansfield RB, Vohra T, Turner‐Lawrence D, Losman ED. The multiple mini‐interview for emergency medicine resident selection. J Emerg Med 2014;46:537–43.

- 69. Boysen‐Osborn M, Wray A, Hoonpongsimanont W, et al. A multiple‐mini interview for emergency medicine residency admissions: a brief report and qualitative analysis. J Adv Med Educ Prof 2018;6:176–80.

- 70. Crawford SB, Monks SM, Wells RN. Virtual reality as an interview technique in evaluation of emergency medicine applicants. AEM Educ Train 2018;2:328–33.

- 71. Masood MM, Stephenson ED, Farquhar DR, et al. Surgical simulation and applicant perception in otolaryngology residency interviews. Laryngoscope 2018;128:2503–7.

- 72. Marcus‐Blank B, Dahlke J, Braman JP, et al. Predicting performance of first‐year residents: correlations between structured interview, licensure exam, and competency scores in a multi‐institutional study. Acad Med 2019;94:378–87.

- 73. Papadakis MA, Teherani A, Banach MA, et al. Disciplinary action by medical boards and prior behavior in medical school. N Engl J Med 2005;353:2673–82.

- 74. Brenner AW, Mathai S, Jain S, Mohl PC. Can we predict “problem residents”? Acad Med 2010;85:1147–51.
