Abstract

Structural information about protein-protein interactions, often missing at the interactome scale, is important for mechanistic understanding of cells and rational discovery of therapeutics. Protein docking provides a computational alternative for such information. However, ranking near-native docked models high among a large number of candidates, often known as the scoring problem, remains a critical challenge. Moreover, estimating model quality, also known as the quality assessment problem, is rarely addressed in protein docking.

In this study the two challenging problems in protein docking are regarded as relative and absolute scoring, respectively, and addressed in one physics-inspired deep learning framework. We represent protein and complex structures as intra- and inter-molecular residue contact graphs with atom-resolution node and edge features. And we propose a novel graph convolutional kernel that aggregates interacting nodes’ features through edges so that generalized interaction energies can be learned directly from 3D data. The resulting energy-based graph convolutional networks (EGCN) with multi-head attention are trained to predict intra- and inter-molecular energies, binding affinities, and quality measures (interface RMSD) for encounter complexes. Compared to a state-of-the-art scoring function for model ranking, EGCN significantly improves ranking for a CAPRI test set involving homology docking; and is comparable or slightly better for Score_set, a CAPRI benchmark set generated by diverse community-wide docking protocols not known to training data. For Score_set quality assessment, EGCN shows about 27% improvement to our previous efforts. Directly learning from 3D structure data in graph representation, EGCN represents the first successful development of graph convolutional networks for protein docking.

Keywords: protein-protein interactions, protein docking, scoring function, quality estimation, machine learning, graph convolutional networks, energy-based models

INTRODUCTION

Protein-protein interactions (PPIs) underlie many important cellular processes. Structural information about these interactions often helps reveal their mechanisms, understand diseases, and develop therapeutics. However, such information is often unavailable at the scale of protein-protein interactomes 1, which calls for computational protein docking methods. Two major tasks for protein docking are sampling (aiming at generating many near-native models or decoys) and scoring (aiming at identifying those near-natives among a large number of candidate decoys), both of which present tremendous challenges 2.

This study focuses on scoring by filling two gaps in protein docking. First, current scoring functions for protein docking often aim at relative scoring, in other words, ranking near-natives high 2. But they do not score the decoy/model quality directly (absolute scoring), which is more often known as quality estimation or quality assessment (without known native structures) in the protein-structure community of CASP 3. Although quality estimation methods based on machine learning are emerging for single-protein structure prediction4–9, such methods are still rare for protein-complex structure prediction, i.e., protein docking 10. Second, state-of-the-art scoring functions (for relative scoring) in protein docking are often based on machine learning with hand-engineered features, such as physical-energy terms 11, 12, statistical potentials 13, 14, and graph kernels 15. These features, heavily relying on domain expertise, are often not specifically tailored or optimized for scoring purposes. Recently, deep learning has achieved tremendous success in image recognition and natural language processing 16, 17, largely due to its automated feature/representation learning from raw inputs of image pixels or words. This trend has also rippled to structural bioinformatics and will be briefly reviewed later.

To fill the aforementioned gaps for scoring in protein docking, we propose a deep learning framework with automated feature learning to estimate the quality of protein-docking models (measured by interface RMSD or iRMSD) and to rank them accordingly. In other words, the framework simultaneously addresses both relative scoring (ranking) and absolute scoring (quality assessment) using features directly learned from data. To that end, our deep learning framework predicts binding free energy values of docking models (encounter complexes) whereas these values are correlated to known binding free energy values of native complexes according to model quality.

Technical challenges remain for the framework of deep learning: how to represent protein-complex structure data and learn from such data effectively? Current deep learning methods in structural bioinformatics often use 3D volumetric representations of molecular structures. For instance, for protein-structure quality assessment, atom density maps have been used as the raw input to 3D Convolutional Neural Networks (CNN) 18, 19. For protein-ligand interaction prediction, the Atomic Convolutional Neural Network (ACNN) 20 uses the neighbor matrix as input and uses radical pooling to simulate the additive pairwise interaction. And for RNA structure QA, grid representations of the structures are used as the input to 3D CNN 21. However, learning features from volumetric data of molecular structures present several drawbacks 22. First, representing the volumetric input data as pixel data through tensors would require discretization, which may lose some biologically-meaningful features while costing time. Second, such input data are often sparse, resulting in many convolutional operations for zero-valued pixels and thus low efficiency. Third, the convolutional operation is not rotation-invariant, which demands rotational augmentation of training data and increased computational cost.

To effectively learn features from and predict labels for protein-docking structure models, we represent proteins and protein-complexes as intra- and inter-molecular residue contact graphs with atom-resolution node and edge features. Such a representation naturally captures the spatial relationship of protein-complex structures while overcoming the aforementioned drawbacks of learning from volumetric data. Moreover, we learn from such graph data by proposing a physics-inspired graph convolutional kernel that pool interacting nodes’ interactions through their node and edge features. We use two resulting energy-based graph convolutional networks (EGCN) of the same architecture but different parameters to predict intra- and inter-molecular energy potentials for protein-docking models (encounter complexes), which further predicts these encounter complexes’ binding affinity and quality (iRMSD) values. Therefore, our EGCN is capable of both model ranking and quality estimation (or quality assessment without known native structures). We note the recent surging of graph neural networks in protein modeling, such as protein interface prediction 23 and protein structure classification 22. We also note that this is the first study of graph neural networks for protein docking.

The rest of the paper is organized as follows. In the Methods section, we first describe the data sets for training, validating, and testing our EGCN models. We proceed to introduce contact graph representations for proteins and protein complexes and initial features for nodes and edges; our energy-based graph convolutional networks as the intra- or inter-molecular energy predictor for encounter complexes; and additional contents (label, loss function, and optimization) for training our EGCN. Next we compare in Results EGCN’s performances against a state-of-the-art scoring function for ranking and against our previous efforts for both ranking and quality estimation. We also share in Discussion our thoughts on how EGCN can be further improved in prediction accuracy and training efficiency. Lastly, we summarize major contributions to method development and major results of method performances in Conclusion.

MATERIALS AND METHODS

Data

Training and Validation Sets

We randomly choose 50 protein-protein pairs from protein benchmark 4.024, all with known binding affinities (kd) values32–34, as in our earlier work 10 and split them into training and validation pairs with the ratio 4:1. For each training or validation pair, we perform rigid docking using ClusPro25, retain the top-1000 decoys according to ClusPro’s default scoring, and introduce flexible perturbation for each decoy using cNMA10, 26, 27. In total, we have 40,000 training and 10,000 validation decoys.

Test Sets

We consider three test sets of increasing difficulty levels.

The first test set includes the rest 107 protein pairs from the protein benchmark set 4.024. As in the training and validation sets, these unbound protein pairs are rigidly docked through ClusPro. The top 1000 decoys after scoring are flexibly perturbed through cNMA, leading to 107,000 decoys.

This second test set includes 14 recent CAPRI targets10 undergoing the same protocol above (ClusPro + cNMA) for 14,000 decoys. Besides unbound docking seen in the benchmark test set, many of the targets here involve homology-unbound and homology-homology docking26. The homology models were automatically built using the webserver iTASSER when CARPI targets were released to predictors, with few exceptions detailed in our previous study26.

The last test set is Score_set, a CAPRI benchmark for scoring28. It includes 13 earlier CAPRI targets, each of which has 400 to 2,000 flexible decoys generated through various protocols by the community.

We summarize PDB IDs for training, validation, and the three test sets in the supplementary Tables S1–S5. Binding affinities for some of the benchmark test set and nearly all of the CAPRI test set and Score_set (marked with stars in the supplementary tables) were not available and instead predicted from protein sequences35.

Graphs and Features

The structure of a protein or protein complex is represented as a graph where each residue corresponds to a node and each intra- or inter-molecular residue-pair defines an edge. Such intra- and inter-molecular contact graphs can be united under bipartite graphs: the two sets of nodes correspond to the same protein for intra-molecular graphs and they do to binding partners for inter-molecular graphs. Atom-resolution features are further introduced for nodes and edges of such bipartite contact graphs. In other words, to represent , we have initialized two node feature matrices (denoted by XA ∈ RN1×M and XB ∈ RN2×M) and an edge feature tensor (denoted by A ∈ RN1×N2×K), where N1 or N2 denote the number of residues for either protein A or B (which can be the same protein in the case of intra-molecular contact graphs), M the number of features per node, and K the number of features per edge. As revealed later in our graph convolutional networks, node feature matrices will be learned, i.e. updated layer after layer, by interacting with neighboring nodes’ features through the fixed edge tensors.

For node-feature matrix X, we use M = 4 features for each node. Each side chain is represented as a pseudo atom whose position is the geometric center of the side chain. And the first 3 node features are the side-chain pseudo atom’s charge, non-bonded radii, and distance-to-Cα, as parameterized in the Rosetta coarse-grained energy model 29. The last node feature is the solvent accessible surface area (SASA) of the whole residue, as calculated by the FreeSASA program 30.

For edge-feature tensor A, we use K = 11 features for each edge. These features are related to pairwise atomic distances between the two corresponding residues. Specifically, each residue is a point cloud of 6 atoms, including 5 backbone atoms (N, HN, CA, C, and O as named in CHARMM27) and 1 side-chain pseudo atom (named SC). For numerical efficiency, we have picked 11 pairwise distances (including potential hydrogen bonds) out of the total 6 × 6 = 36 (see Table I) and used their reciprocals for the edge features. We use a cutoff of 12 Å for pairwise atomic distances and set edge features at zero when corresponding distances are above the cutoff.

Table I.

The atom pairs whose distance are converted to edge features. Atom names follow the convention in CHARMM, except that “SC” corresponds to pseudo-atoms for side chains. Note that the last two features are set at 0 for pairs involving prolines (without HN).

| Index | Atom 1 | Atom 2 |

|---|---|---|

| 1 | SC | SC |

| 2 | SC | O |

| 3 | SC | N |

| 4 | O | SC |

| 5 | O | O |

| 6 | O | N |

| 7 | N | SC |

| 8 | N | O |

| 9 | N | N |

| 10 | HN | O |

| 11 | O | HN |

In this study, in order to address input proteins of varying sizes, we consider the maximum number of residues to be 500 and hence fix N at 500 for contact graphs. Correspondingly, smaller proteins’ feature matrices/tensors X and A are zero-padded as needed so that feature matrices/tensors for all input data are of the same sizes: X ∈ RN×M and A ∈ RN×N×K.

Energy-based Graph Convolutional Networks

Background on principle-driven energy model

To score protein-docking models, we try to use machine learning to model ΔG, the binding energy of encounter complexes, which can be written as:

where GC, GRu, and GLu denote the Gibbs free energies of the complex, unbound receptor and unbound ligand, respectively. For GC, we can further decompose it into the intra-molecular energies within two individual proteins and the inter-molecular energy between two proteins:

where GR, GL, GRL are the Gibbs free energies within the encountered receptor, within the encountered ligand and between them, respectively. Therefore, the binding energy in classical force fields can be written as:

In flexible docking, unbound and encountered structures of the same protein are often different. Such protein conformational changes indicate that GR − GRu ≠ 0 and GL − GLu ≠ 0.

Extension to data-driven energy model

It is noteworthy that the expression of ΔG above consists of four terms measuring intra-molecular free energies of individual proteins and one one term measuring inter-molecular free energies across two proteins. Therefore, we decide to use two machine-learning models f of the same neural-network architecture but different parameters to approximate the two types of energy terms as follows:

where fθ and fθ′ are the intra- and inter-molecular energy models (graph convolutional networks here) parameterized by θ and θ′, respectively. Subscripts are included for node feature matrices X or edge feature matrices A to indicate identities of molecules or molecular pairs. The parameters are to be learned from data specifically for the purpose of quality estimation.

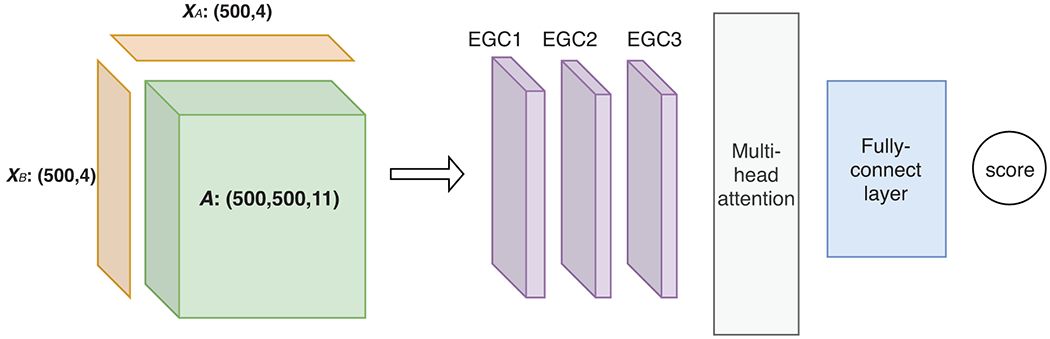

The architecture of the neural networks is summarized in Figure 1 whereas individual components are detailed below.

Figure 1.

The architecture of the proposed graph convolutional network (GCN) models for intra- or inter-molecular energies. In our work, there are five types of such models together for predicting encounter-complex binding energy, including 4 intra-molecular models with shared parameters for the unbound or encountered receptor or ligand as well as 1 inter-molecular model for the encounter complex. In each type of model, the inputs (to the left of the arrow) include a pair of node-feature matrices (XA and XB) for individual protein(s) and an edge-feature tensor (A) for intra- or inter-molecular contacts. And the inputs are fed through 3 layers of our energy-based graph convolution layers that learn from training data to aggregate and transform atomic interactions, followed by multi-head attention module and fully-connected layers for the output of intra- or inter-molecular energy.

Energy-based Graph Convolutional layer (EGC)

The node matrices (XA and XB) and the edge tensor A of each contact graph are first fed to three consecutive graph convolutional layers inspired by physics. Specifically, energy potentials are often pairwise additive and those between atoms (i and j) are often of the form , where xi and xj are atom “features”, r is the distance between them, and a is an integer. For instance, x being a scalar charge and a being 1 lead to a Coulombic potential.

Inspired by the energy form, we propose a novel graph convolutional kernel that “pool” neighbor features to update protein A’s node features layer by layer:

where subscript i and j are node indices for proteins A and B, respectively, p node-feature index, and k edge-feature index; superscript (l) and (l + 1) indicate layer indices. Accordingly, of size M(l) × 1 is the node feature vector for the ith node of protein A in layer is the pth node-feature for the ith node of protein A in layer (l + 1) and of size 10 × M(l) is the trainable weight matrix for the kth edge feature and the pth node feature (p = 1, …, M(l+1)) in the same layer (l + 1). In this way we sum all the interactions between node i in protein A and all neighboring nodes j in protein B through the 11 edge features. Similarly we update node features for each node in protein B by following

where is an element of Ã, a permuted A with the first two dimensions swapped. When calculating intra-molecular energy within a single protein, the second molecule’s feature update can be skipped for numerical efficiency.

We only do the convolution on the node features, while keeping the edge features the same across all the EGC layers. The output node-feature matrices and for both proteins, together with the edge feature tensor A, will be used as the input (after batch normalization) for the next layer (l + 1). Whereas M(0) = M = 4, we choose M(1) = 2 and M(2) = M(3) = 5 without particular optimization.

Multi-head attention and fully-connected layers.

After the 3 EGC layers the output node feature matrices XA(L) and XB(L) (L = 3 in this study) for both proteins (or two copies of the single matrix in the intra-molecular case) are concatenated and fed into a multi-head attention module 16, 31, whose output subsequently goes through three fully-connected (FC) layers with 128, 64, and 1 output, respectively. A dropout rate of 20% is applied to all but the last FC layer.

Label, loss function, and model training

The label here is the binding energy ΔG although it is not available for encounter complexes. We thus use the same idea as in our previous work 10. Specifically, using known kd32–34, binding affinity of native complexes, or their values predicted from protein sequences 35 , we estimate , the binding affinity of an encounter complex, to weaken with the worsening quality of the encounter complex (measured by iRMSD):

where α and q are hyperparameters optimized using the validation set. Specifically, α is searched between 0.5 and 10 with a stepsize of 0.5 and q among 0.25, 0.5, 0.75, 1, 1.5, and 2. The optimized α and q are 1.5 and 0.5, respectively. Therefore, for each sample (corresponding to an encounter complex or decoy), the predicted label is the previously defined , the actual label is , and the error is simply the difference between the two.

We learn parameters θ and θ′ of two graph convolutional networks of the same architecture, by minimizing the loss function of mean squared errors (MSE) over samples. Model parameters include in the EGC layers as well as those in the multi-head attention module and FC layers. One set of learned parameter values, θ are shared among all intra-molecular energy models, and the other set, θ′ are for the inter-molecular energy model.

The model is trained for 50 epochs through the optimizer Adam36 with a batch size of 16 and the learning rate is tuned to be 0.01 using the validation set. The training and validation losses over the 50 epochs are shown in the supplementary Figure S1.

Note that all training and validation cases, but not all test cases, are with known kd values32–34. Those test cases with unknown kd values instead used sequence-predicted values35. Although the accuracy of affinity prediction for these cases is not available without ground truth, the original study35 reported a correlation range of 0.836–0.998 and 0.739–0.992 for their self-consistency and jack-knife test sets, respectively. More importantly, the accuracy of affinity prediction only affects absolute scoring but does not do so for relative scoring (as a constant additive error would be introduced to all structure models of the same target).

Assessment Metrics

We are aiming at not only relative scoring (ranking) but also absolute scoring (quality estimation) of protein-docking models. Two assessment metrics are therefore introduced.

For ranking models for a given protein-protein pair, we use enrichment factor which is the number of acceptable protein-docking models among the top P% ranked by a scoring function divided by that ranked by random. Conceptually, enrichment factor measures the fold improvement of ranking acceptable models high relative to random ranking. Here acceptable models are defined according to the CAPRI criteria37 with iRMSD within 4 Å (ligand RMSD or native contacts were not considered).

In addition, for all targets in each given test set, we use the number of targets with at least acceptable docking-models (including that of medium or high-quality models according to the CAPRI iRMSD criteria37) when such models were among top 1, 5, or 10 ranked by a scoring function. To remove redundancy among decoys, we clustered all decoys of each target using the program FCC (Fraction of Common Contacts)38 with default parameters and only passed those FCC-retained cluster representatives to each scoring function.

For quality estimation of all docking models in various quality ranges, we use the root mean square error (RMSE) between the real iRMSD and our predicted iRMSD (as obtained from our predicted labels). Conceptually, RMSE measures the proximity between predicted and actual quality measures.

Baseline Methods

We use two baseline scoring functions here. The first one, only applicable to model ranking, is IRAD 12, one of the top-performing scorers in recent CAPRI. IRAD uses a linear combination of atom-based ZRANK potentials (van der Waals, electrostatics, and ACE desolvation contact energy) and five residue-based potentials. It optimizes the weights of these potentials through optimizing hit rates for ZDOCK 3.0 decoys generated for docking benchmark 3.012.

The second one is a Random Forest (RF) model from our previous work 10, which both ranks models and estimates model quality. For comparability among models, the RF model is re-trained using the same training and validation sets in this study. Similar to IRAD, RF is a combination of 8 potentials, although all atom-based and calculated in the CHARMM27 force field, namely bond, angle, dihedral and Urey-Bradley terms of internal energy, van der Waals, nonpolar contribution of the solvation energy based on solvent-accessible surface area (SASA), and two solvation energy terms based on Generalized Born with a simple SWitching (GBSW). Unlike IRAD, RF combines the 8 atom-based potentials in random forest. More importantly, RF is trained to optimize binding-energy estimation and absolute scoring for encounter complexes in our data set and can be used (although not optimized) to rank models using predicted absolute scores ([relative] binding affinity or iRMSD).

Just like RF, EGCN is trained to optimize absolute scoring and can be applied to (although not optimized to) relative scoring. However, unlike both baselines where classical energy terms from various force fields are calculated for structure models and then combined for scoring these models, EGCN directly learns energies from structure data to optimize energy estimation and absolute scoring. Representing 3D structure data as spatial graphs, EGCN uses physics-inspired, energy-based graph convolutional networks newly-developed for this purpose, as detailed earlier.

RESULTS

Relative Scoring (Model Ranking)

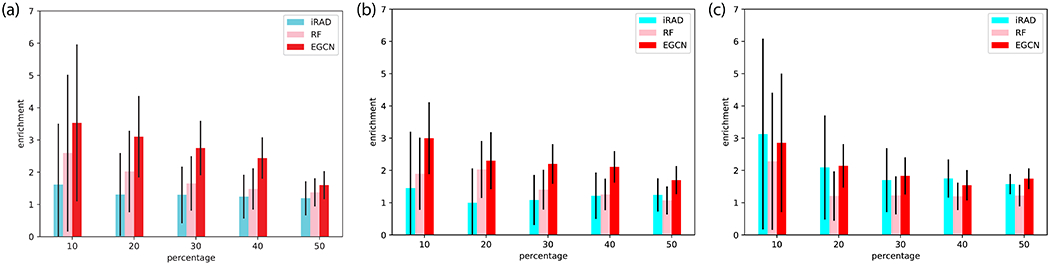

We first analyze our model’s performances of relative scoring and compare them to IRAD. Note that real affinity values are essentially not needed for EGCN just for relative scoring and errors in affinity prediction would not affect EGCN’s ranking results. For both benchmark and CAPRI test sets, we note that the proposed EGCN significantly outperforms both RF and IRAD (Figure 2A and 2B). In particular, for the top-ranked 10% for the benchmark test set, EGCN achieved an average enrichment factor of 3.5, which represents a nearly 40% improvement compared to RF (2.6) and nearly 120% improvement against IRAD (1.6). In all cases, the improvement margins decrease as the top-ranked percentages increase (that is, more models are retained), due to the fact that limited and relatively few acceptable models are available among the total available decoys. Although the training/validation set and the benchmark test set involve unbound docking, the CAPRI test set additionally involves the more challenging cases of homology docking. Nevertheless, for the more challenging CAPRI test set, EGCN’s average enrichment factor only slightly dropped from 3.5 to 3.0 in the top-ranked 10% and did so from 3.1 to 2.3 in the top-ranked 20%.

Figure 2.

Comparing relative scoring (ranking) performances among IRAD, RF, and EGCN. Reported are enrichments ratios of acceptable models among the first P percentage, top-ranked decoys for (a) benchmark test set, (b) CAPRI test set, and (c) Score_set, a CAPRI benchmark for scoring.

For Score_set, the CAPRI historical benchmark set, EGCN outperforms RF as shown in Figure 2C. Its average performance is comparable to IRAD although it has considerably lower variance across targets (Figure 2C). It is noteworthy that, although both the benchmark and CAPRI test decoys are generated by the same protocol (ClusPro + cNMA) as the training/validation decoys, Score_set represents completely different and heterogeneous decoy-generating protocols from the community. Impressively, for the top-ranked 10%, EGCN’s average enrichment factor is almost the same (nearly 3.0) for Score_set as it is for the CAPRI test set. For the top-ranked 20%, the factor slightly decreased to 2.1 for Score_set compared to 2.3 for the CAPRI test set.

We have also evaluated relative scoring performances using the CAPRI iRMSD criteria37 and compared IRAD, RF, and EGCN in Table II. For both the benchmark and CAPRI test sets, EGCN significantly outperformed IRAD. First, for the benchmark test set of 107 cases, EGCN generated 46 targets with at least acceptable top-5 predictions (the most possible number being 70 for the decoy set), representing more than 50% increase compared to 30 achieved by IRAD. The performance improvement for ranking medium and high-quality predictions was even more impressive: EGCN generated 21 and 5 targets with medium and high-quality top-5 predictions, respectively, representing 62% and 150% increases compared to IRAD. Second, for the CAPRI test set, although the total number of targets (14) can be too few to provide statistical significance, EGCN again increased the number of at least acceptable, medium, and high-quality top-5 models from IRAD’s 4, 2, 0 to 6, 3, 2, respectively. Last, even for Score_set where test decoys were generated by diverse protocols different from EGCN training decoys, EGCN had comparable or slightly better ranking performances, producing 6 targets with at least acceptable top-5 predictions (including 4 targets with medium and 1 with high-quality predictions). We find the EGCN ranking performance particularly impressive considering that, unlike IRAD, the current EGCN was trained for absolute scoring and not optimized for relative scoring. More thoughts to further improve its ranking performance will be described in Discussion.

Table II.

Comparing relative scoring performance among IRAD, RF and EGCN for three testing sets under the CAPRI criteria. As an upper limit, the best possible performance for each decoy set is given by ranking the best model (with the lowest iRMSD) as the first. Following the CAPRI convention, reported are the numbers of targets with at least an acceptable, at most a medium (**), or at most a high-quality (***) model within top 1/5/10 predictions ranked by individual scoring functions. For instance, 7/4**/2*** means that a scoring function has generated 7 targets with at least acceptable top-ranked models, including 4 with medium and 2 with high-quality predictions at best.

| Top 1 | Top 5 | Top 10 | ||

|---|---|---|---|---|

| Benchmark Test Set (107) | IRAD | 10/0**/0*** | 30/13**/2*** | 40/18/7*** |

| RF | 13/5**/0*** | 35/17**/5*** | 42/25**/10*** | |

| EGCN | 17/8**/2*** | 46/21**/5*** | 51/28**/11*** | |

| Best Possible | 70/47**/20*** | 70/47**/20*** | 70/47**/20*** | |

| CAPRI Test Set (14) | IRAD | 3/1**/0*** | 4/2**/0*** | 6/3**/1*** |

| RF | 4/1**/1*** | 6/2**/1*** | 8/3/2*** | |

| EGCN | 5/1**/1*** | 6/3**/2*** | 7/4/2*** | |

| Best Possible | 9/6**/3*** | 9/6**/3*** | 9/6**/3*** | |

| Score Set (13) | IRAD | 3/2**/0*** | 5/4**/1*** | 7/4**/2*** |

| RF | 1/0**/0*** | 3/2**/0*** | 3/2**/1*** | |

| EGCN | 3/2**/0*** | 6/4**/1*** | 7/4**/1*** | |

| Best Possible | 11/9**/3*** | 11/9**/3*** | 11/9**/3*** |

Absolute Scoring (Quality Estimation)

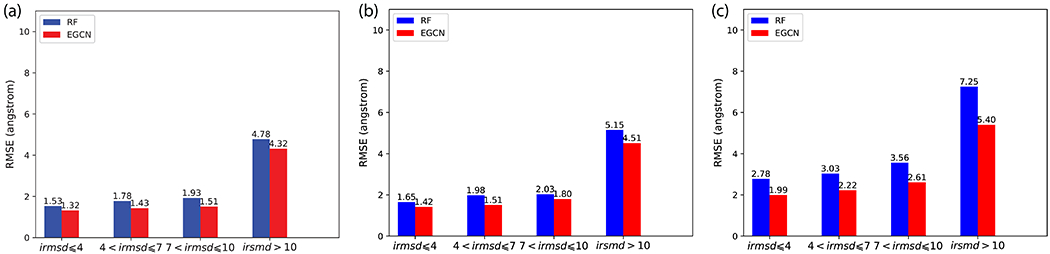

We next analyze EGCN’s absolute scoring performances and compare them to our previous RF model. IRAD, among many other scoring functions, is only for relative scoring or ranking and thus not compared here. EGCN significantly outperforms RF in quality estimation across all test sets (Figure 3). For the benchmark test set, EGCN estimates all docking models’ interface RMSD values with an error of 1.32 Å, 1.43 Å, and 1.51 Å when the models’ iRMSD values are within 4 Å (acceptable), between 4 Å and 7 Å, and between 7 Å and 10 Å, respectively. These values represent 14%-22% improvement against RF’s iRMSD prediction errors. Both EGCN and RF’s prediction errors remain relatively flat when models are acceptable or close with iRMSD values within 10 Å whereas they rise out the range when precise quality estimation is no longer desired for those far incorrect models. This performance trend is partially by chance, reflecting the quality distribution in the training set. But it can be guaranteed by design through re-weighting training decoys of different quality ranges, as we did before 10.

Figure 3.

Comparing absolute scoring (quality estimation) performances among RF and EGCN. Reported are the RMSE of iRMSD predictions for (a) benchmark test set, (b) CAPRI test set, and (c) Score_set, a CAPRI benchmark for scoring.

Very similar performances and trends are observed for the CAPRI test set and the CAPRI Score_set. EGCN’s quality estimation performances only deteriorates slightly in the more challenging CAPRI test set involving homology docking: an error of 1.42 Å, 1.51 Å, and 1.80 Å when the models’ iRMSD values are within 4 Å, between 4 Å and 7 Å, and between 7 Å and 10 Å, respectively. For Score_set involving diverse and distinct decoy generation protocols, EGCN’s quality-estimation performances further deteriorates slightly to an error of 1.99 Å, 2.22 Å, and 2.61 Å when the models’ iRMSD values are within 4 Å, between 4 Å and 7 Å, and between 7 Å and 10 Å, respectively, which has shown even more improvements (27%-28%) relative to RF. It is noteworthy that these two test sets are more challenging than the benchmark test set for additional reasons. Unlike the case of relative scoring, binding affinities of native complexes are needed for absolute scoring. But their values have to be predicted for all but one target pairs in these two test sets (13 out of 14 or 12 out of 14), compared to just 34 out of 107 for the benchmark test set.

To further assess the impact of affinity prediction on absolute scoring, we split the benchmark test set into two subsets (73 with known affinity values and 34 without) and analyzed EGCN on these two subsets separately (supplementary Figure S2). Indeed, the subset with known affinity values had seen lower errors in iRMSD prediction. Specifically, the RMSE values for decoy models within 4 Å, between 4 Å and 7 Å, and between 7 Å and 10 Å were 1.20 Å, 1.22 Å, and 1.50 Å, respectively, when affinity values were known, compared to 1.52 Å, 1.73 Å, and 1.53 Å, respectively, when affinity values were predicted from sequences. The deterioration in iRMSD prediction due to the unavailable affinity values did not appear to be a major concern, although its sensitivity to the accuracy of affinity prediction is unclear due to the lack of ground-truth affinity values.

Taken together, these results suggest that, learning energy models directly from structures as in EGCN represents a much more accurate and robust data-driven approach than doing so from structure-derived energy terms as in RF. The EGCN model performances are less sensitive to target difficulty than they are to the training versus test data distributions (reflected in the ways training/test decoys are generated).

DISCUSSION

The accuracy of the EGCN model could be further improved along a few directions. The first and the foremost important direction is to provide better-quality training data whose distributions align better to those of the test data. Specifically in our case, this demands more diverse protocols to generate training decoys (as opposed to the only ClusPro + cNMA protocol used in the study). Moreover, decoys in different quality ranges could be given different priorities as well. The second direction is to train deeper models (going beyond L = 3 in this study) and to include more edge features (for instance, including distances besides their reciprocals so that internal energies polynomial in distances can be captured). The third direction is to split the two objectives of relative and absolute ranking. We are training just one model for both model ranking and quality estimation whereas the loss function is perfectly aligned to quality estimation alone. Instead, a separate model with its own loss function can be trained just for model ranking.

The computational cost of training the EGCN model can also be reduced. Currently, we use an edge feature tensor A ∈ RN×N×K and pool all other nodes’ features to update each node’s feature vector. However, due to the sparsity of protein contact graphs, the edge feature tensor can enjoy a sparse representation of A ∈ RN×N′×K where N′ is the maximum number of node neighbors and N′ ≪ N. Here two residues are neighbors if any of the 11 atom-pair distances is below the threshold (12 Å in this study). Accordingly, when updating the feature vector for node i, i.e. , we just need to sum over all its neighboring nodes j for an equivalent expression.

CONCLUSION

In this paper, we propose a novel, energy-based graph convolutional network (EGCN) for scoring protein-docking models in both relative and absolute senses: ranking docking models and estimating their quality measures (iRMSD). We represent a protein or an encounter complex as an intra-molecular or inter-molecular residue contact graph with atom-resolution node features and edge features. Inspired by physics, we design a novel graph convolutional kernel that maps the inputs (current node features and fixed edge features) to energy-like outputs (next node features). Using such energy-based graph convolution layers and state-of-the-art attention mechanisms, we train two graph convolutional networks of identical architecture and distinct parameters to predict intra- and inter-molecular free-energy, respectively. The two networks are trained to together predict an encounter complex’s energy value whereas the true value is approximated by the corresponding native complex’s energy discounted according to the quality of the encounter complex.

The first GCN development for protein docking, our EGCN model is tested against three data sets with increasing difficulty levels: unbound docking with a single decoy-generating mechanism, unbound and homology docking with the single mechanism, as well as unbound and homology docking with diverse decoy-generating mechanisms from the community. For ranking protein-docking models (decoys), EGCN is found to perform better or equally well compared to a state-of-the-art method (IRAD) that has found great success in CAPRI as well as our previous RF model10. For quality estimation which has seen few method developments in the field, EGCN is again found to outperform our previous RF model. In both cases, EGCN is relatively insensitive to target difficulty and is of limited sensitivity to training decoys (specifically, the way they are generated). Compared to our previous RF model that learns indirectly from structure-derived energy terms, the EGCN model learns directly from structures and thus shows improved accuracy and robustness.

Supplementary Material

ACKNOWLEDGEMENTS

This work was supported by the National Institutes of Health (R35GM124952). Portions of this research were conducted with high performance research computing resources provided by Texas A&M University.

REFERENCES

- 1.Mosca R, Céol A, Aloy P. Interactome3D: adding structural details to protein networks. Nat Methods 2013;10(1):47. [DOI] [PubMed] [Google Scholar]

- 2.Porter KA, Desta I, Kozakov D, Vajda S. What method to use for protein–protein docking? Curr Opin Struct Biol 2019;55:1–7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Kryshtafovych A, Barbato A, Fidelis K, Monastyrskyy B, Schwede T, Tramontano A. Assessment of the assessment: evaluation of the model quality estimates in CASP10. Proteins Struct Funct Bioinforma 2014;82:112–126. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Cao R, Bhattacharya D, Hou J, Cheng J. DeepQA: improving the estimation of single protein model quality with deep belief networks. BMC Bioinformatics 2016;17(1):495. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Manavalan B, Lee J, Lee J. Random forest-based protein model quality assessment (RFMQA) using structural features and potential energy terms. PloS One 2014;9(9):e106542. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.McGuffin LJ. The ModFOLD server for the quality assessment of protein structural models. Bioinformatics 2008;24(4):586–587. [DOI] [PubMed] [Google Scholar]

- 7.Popov P, Grudinin S. Knowledge of Native Protein–Protein Interfaces Is Sufficient To Construct Predictive Models for the Selection of Binding Candidates. J Chem Inf Model 2015;55(10):2242–2255. [DOI] [PubMed] [Google Scholar]

- 8.Qiu J, Sheffler W, Baker D, Noble WS. Ranking predicted protein structures with support vector regression. Proteins Struct Funct Bioinforma 2008;71(3):1175–1182. [DOI] [PubMed] [Google Scholar]

- 9.Ray A, Lindahl E, Wallner B. Improved model quality assessment using ProQ2. BMC Bioinformatics 2012;13(1):224. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Cao Y, Shen Y. Bayesian active learning for optimization and uncertainty quantification in protein docking. ArXiv Prepr ArXiv190200067 2019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Gray JJ, Moughon S, Wang C, Schueler-Furman O, Kuhlman B, Rohl CA, Baker D. Protein–protein docking with simultaneous optimization of rigid-body displacement and side-chain conformations. J Mol Biol 2003;331(1):281–299. [DOI] [PubMed] [Google Scholar]

- 12.Vreven T, Hwang H, Weng Z. Integrating atom-based and residue-based scoring functions for protein–protein docking. Protein Sci 2011;20(9):1576–1586. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Huang S-Y, Zou X. ITScorePro: An Efficient Scoring Program for Evaluating the Energy Scores of Protein Structures for Structure Prediction In: Protein Structure Prediction. Springer; 2014. p 71–81. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Pons C, Talavera D, Cruz X de la, Orozco M, Fernandez-Recio J Scoring by intermolecular pairwise propensities of exposed residues (SIPPER): a new efficient potential for protein–protein docking. J Chem Inf Model 2011;51(2):370–377. [DOI] [PubMed] [Google Scholar]

- 15.Geng C, Jung Y, Renaud N, Honavar V, Bonvin AM, Xue LC. iScore: A novel graph kernel-based function for scoring protein-protein docking models. BioRxiv 2018:498584. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Bahdanau D, Cho K, Bengio Y. Neural machine translation by jointly learning to align and translate. ArXiv Prepr ArXiv14090473 2014. [Google Scholar]

- 17.Krizhevsky A, Sutskever I, Hinton GE. Imagenet classification with deep convolutional neural networks. In: Advances in neural information processing systems; 2012; . p 1097–1105. (Advances in neural information processing systems). [Google Scholar]

- 18.Charmettant B, Grudinin S. Protein model quality assessment using 3D oriented convolutional neural networks. Bioinformatics 2019. [DOI] [PubMed] [Google Scholar]

- 19.Derevyanko G, Grudinin S, Bengio Y, Lamoureux G. Deep convolutional networks for quality assessment of protein folds. Bioinformatics 2018;34(23):4046–4053. [DOI] [PubMed] [Google Scholar]

- 20.Gomes J, Ramsundar B, Feinberg EN, Pande VS. Atomic convolutional networks for predicting protein-ligand binding affinity. ArXiv Prepr ArXiv170310603 2017. [Google Scholar]

- 21.Li J, Zhu W, Wang J, Li W, Gong S, Zhang J, Wang W. RNA3DCNN: Local and global quality assessments of RNA 3D structures using 3D deep convolutional neural networks. PLoS Comput Biol 2018;14(11):e1006514. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Zamora-Resendiz R, Crivelli S. Structural Learning of Proteins Using Graph Convolutional Neural Networks. bioRxiv 2019:610444. [Google Scholar]

- 23.Fout A, Byrd J, Shariat B, Ben-Hur A. Protein interface prediction using graph convolutional networks. In: Advances in Neural Information Processing Systems; 2017; . p 6530–6539. (Advances in Neural Information Processing Systems). [Google Scholar]

- 24.Hwang H, Vreven T, Janin J, Weng Z. Protein-Protein Docking Benchmark Version 4.0. Proteins 2010;78(15):3111–3114. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Kozakov D, Hall DR, Xia B, Porter KA, Padhorny D, Yueh C, Beglov D, Vajda S. The ClusPro web server for protein–protein docking. Nat Protoc 2017;12(2):255. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Chen H, Sun Y, Shen Y. Predicting protein conformational changes for unbound and homology docking: learning from intrinsic and induced flexibility. Proteins Struct Funct Bioinforma 2017;85(3):544–556. [DOI] [PubMed] [Google Scholar]

- 27.Oliwa T, Shen Y. cNMA: a framework of encounter complex-based normal mode analysis to model conformational changes in protein interactions. Bioinformatics 2015;31(12):i151–i160. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Lensink MF, Wodak SJ. Score_set: a CAPRI benchmark for scoring protein complexes. Proteins Struct Funct Bioinforma 2014;82(11):3163–3169. [DOI] [PubMed] [Google Scholar]

- 29.Das R, Baker D. Macromolecular modeling with rosetta. Annu Rev Biochem 2008;77:363–382. [DOI] [PubMed] [Google Scholar]

- 30.Mitternacht S FreeSASA: An open source C library for solvent accessible surface area calculations. F1000Research 2016;5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, Kaiser Ł, Polosukhin I. Attention is all you need. In: Advances in neural information processing systems; 2017; . p 5998–6008. (Advances in neural information processing systems). [Google Scholar]

- 32.Kastritis PL, Bonvin AM. Are scoring functions in protein–protein docking ready to predict interactomes? Clues from a novel binding affinity benchmark. J Proteome Res 2010;9(5):2216–2225. [DOI] [PubMed] [Google Scholar]

- 33.Vreven T, Moal IH, Vangone A, Pierce BG, Kastritis PL, Torchala M, Chaleil R, Jiménez-García B, Bates PA, Fernandez-Recio J. Updates to the integrated protein–protein interaction benchmarks: docking benchmark version 5 and affinity benchmark version 2. J Mol Biol 2015;427(19):3031–3041. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Kastritis PL, Moal IH, Hwang H, Weng Z, Bates PA, Bonvin AM, Janin J. A structure-based benchmark for protein–protein binding affinity. Protein Sci 2011;20(3):482–491. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Yugandhar K, Gromiha MM. Protein–protein binding affinity prediction from amino acid sequence. Bioinformatics 2014;30(24):3583–3589. [DOI] [PubMed] [Google Scholar]

- 36.Kingma DP, Ba J. Adam: A method for stochastic optimization. ArXiv Prepr ArXiv14126980 2014. [Google Scholar]

- 37.Lensink MF, Brysbaert G, Nadzirin N, Velankar S, Chaleil RAG, Gerguri T, Bates PA, Laine E, Carbone A, Grudinin S, Kong R, Liu R-R, Xu X-M, Shi H, Chang S, Eisenstein M, Karczynska A, Czaplewski C, Lubecka E, Lipska A, Krupa P, Mozolewska M, Golon Ł, Samsonov S, Liwo A, Crivelli S, Pagès G, Karasikov M, Kadukova M, Yan Y, Huang S-Y, Rosell M, Rodríguez-Lumbreras LA, Romero-Durana M, Díaz-Bueno L, Fernandez-Recio J, Christoffer C, Terashi G, Shin W-H, Aderinwale T, Subraman SRMV, Kihara D, Kozakov D, Vajda S, Porter K, Padhorny D, Desta I, Beglov D, Ignatov M, Kotelnikov S, Moal IH, Ritchie DW, Beauchêne IC de, Maigret B, Devignes M-D, Echartea MER, Barradas-Bautista D, Cao Z, Cavallo L, Oliva R, Cao Y, Shen Y, Baek M, Park T, Woo H, Seok C, Braitbard M, Bitton L, Scheidman-Duhovny D, DapkŪnas J, Olechnovič K, Venclovas Č, Kundrotas PJ, Belkin S, Chakravarty D, Badal VD, Vakser IA, Vreven T, Vangaveti S, Borrman T, Weng Z, Guest JD, Gowthaman R, Pierce BG, Xu X, Duan R, Qiu L, Hou J, Merideth BR, Ma Z, Cheng J, Zou X, Koukos PI, Roel-Touris J, Ambrosetti F, Geng C, Schaarschmidt J, Trellet ME, Melquiond ASJ, Xue L, Jiménez-García B, Noort CW van, Honorato RV, Bonvin AMJJ, Wodak SJ. Blind prediction of homo- and hetero- protein complexes: The CASP13-CAPRI experiment. Proteins: Structure, Function, and Bioinformatics 2019; 87(12):1200–1221. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Rodrigues JP, Trellet M, Schmitz C, Kastritis P, Karaca E, Melquiond AS, & Bonvin AM. Clustering biomolecular complexes by residue contacts similarity. Proteins: Structure, Function, and Bioinformatics (2012); 80(7): 1810–1817. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.