Abstract

Wing disc pouches of fruit flies are a powerful genetic model for studying physiological intercellular calcium (Ca2+) signals, enabling dynamic analysis of cell signaling in organ development and disease. A key step in analyzing the spatial-temporal patterns of Ca2+ signal waves is to accurately align the pouches across image sequences. However, pouches in different image frames may exhibit extensive intensity oscillations due to Ca2+ signaling dynamics, and commonly used multimodal non-rigid registration methods may fail to achieve satisfactory results. In this paper, we develop a new two-stage non-rigid registration approach to register pouches in image sequences. First, we segment the region of interest (i.e., the pouches) using a deep neural network model. Second, we use B-spline based registration to obtain an optimal transformation and align the pouches across the image sequences. Evaluated on both synthetic data and real pouch data, our method considerably outperforms state-of-the-art non-rigid registration methods.

Keywords: Non-rigid registration, Deep neural networks, Biomedical image segmentation, Calcium imaging

1. INTRODUCTION

Calcium (Ca2+) is a ubiquitous second messenger in organisms [1]. Quantitatively analyzing the spatial-temporal patterns of intercellular Ca2+ signaling in tissues is important for understanding biological functions. Wing disc pouches of fruit flies are a commonly used genetic model system of organ development and have recently been used to study the decoding of Ca2+ signaling in epithelial tissues [1, 2]. However, wing discs can undergo considerable movement and deformation during live imaging experiments. Therefore, an effective automatic image registration approach is needed to align pouches across image sequences.

Registering tissues that deform over time in time-lapse image sequences is a challenging problem. For example, wing disc pouches imaged at different time points move and deform, both because of tissue growth and morphogenesis and because of general movement during the live imaging process. Furthermore, a time-lapse movie can contain many frames, and many movies are needed to obtain reliable measurements of Ca2+ activity because the signals are noisy and stochastic, which makes processing more complicated and costly. Common approaches perform poorly because Ca2+ oscillations cause severe intensity distortions within the tissue. A method that minimizes the error residual between the local phase-coherence representations of two images was proposed to handle non-homogeneity in images [3], but it relies heavily on structural information. In our problem, the intensity inside the pouches may change substantially, which can cause such methods to fail. A Markov-Gibbs random field model with pairwise interactions was used to learn the prior appearance of a given prototype, making it possible to align complex images [4]. Incorporating spatial and geometric information was proposed to address the limitations of a static local intensity relationship [5]. However, the computational complexity of these methods is high. In our problem, a single time-lapse movie may have hundreds of frames, and hundreds of movies are analyzed; hence, these methods are not well suited to our problem. In general, known methods do not adequately handle the complex intensity distortions in our images at a reasonable computational cost.

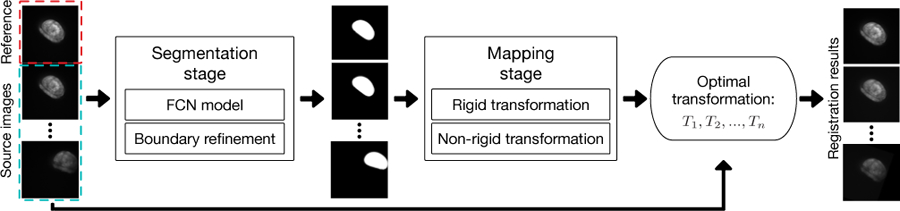

To address spatial intensity distortion together with various ROI deformations and movements, we propose a new non-rigid registration approach to effectively align pouches at different time points in image sequences. Our approach consists of two main stages: (1) a segmentation stage and (2) a mapping stage (see Fig. 1). The segmentation stage is based on a fully convolutional network (FCN). Because the accuracy of the ROI boundary is a key factor influencing the registration results, we apply a graph search algorithm to refine the segmented pouch boundaries. The mapping stage uses the segmentation results from the first stage to compute an optimal transformation. We first apply a rigid transformation to compensate for the movement of the pouches, and then design a B-spline based non-rigid algorithm with object pre-detection to produce the final mapping.

Fig. 1. An overview of our proposed registration approach.

2. METHODOLOGY

In this section, we present a complete pipeline, which takes time-lapse image sequences of pouches as input and produces the registered sequences (see Fig. 1). We first discuss the segmentation stage (i.e., FCN model and boundary refinement), and then the mapping stage (i.e., rigid transformation and non-rigid transformation).

2.1. Segmentation Stage

Our registration approach relies on accurate pouch segmentation. Pouches in our images are commonly surrounded by, and in contact with, extra tissues of similar intensity and texture, and the boundary separating pouches from extra tissues is usually poorly visible. Meanwhile, noise induced by the live imaging process makes segmentation more challenging. Thus, our segmentation algorithm must leverage morphological and topological context in order to correctly segment the shape of each pouch, especially its boundary, from the noisy background. For this, we employ an FCN model to exploit semantic context for accurate segmentation, and a graph search algorithm to further refine the boundaries.

FCN module

Recently, deep learning methods have emerged as powerful image segmentation tools. Fully convolutional networks (FCN) are widely used in general semantic segmentation and biomedical image segmentation [6, 7].

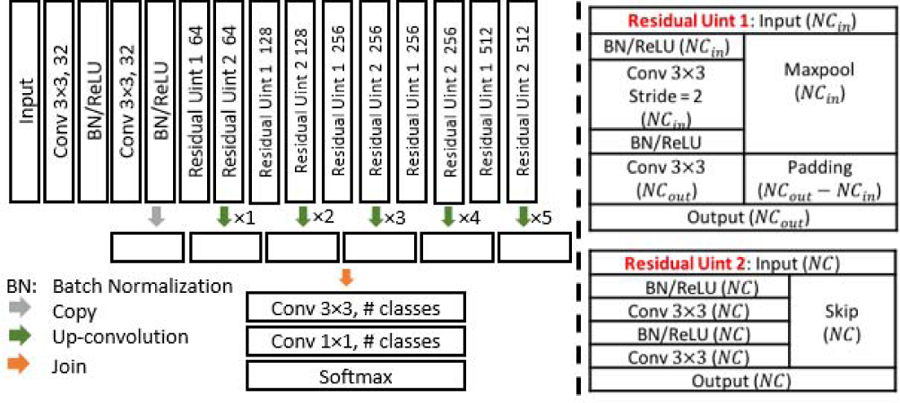

It is worth mentioning that in our images, the separation boundary between a pouch and other tissues is usually subtle (only 3 to 5 pixels wide) and obscure, while the whole contextual region (including both the pouch and extra tissues) can be relatively large (more than 200 × 200 pixels). Therefore, the FCN model must exploit both fine details and a very large context. For this purpose, we design the FCN architecture following the model in [8] to obtain a large receptive field without sacrificing model simplicity or neglecting fine details. The exact structure of our FCN model is depicted in Fig. 2.
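Whether a network can take in a 200 × 200 pixel context can be checked with the standard receptive-field recurrence: each layer with kernel k, stride s, and dilation d adds d(k − 1) times the current input-pixel spacing ("jump") to the receptive field, and multiplies the jump by s. The minimal sketch below implements that recurrence; the layer list is a hypothetical U-Net-like encoder chosen for illustration, not the architecture of Fig. 2.

```python
from typing import List, Tuple

# (kernel_size, stride, dilation) for each layer, in input-to-output order.
Layer = Tuple[int, int, int]


def receptive_field(layers: List[Layer]) -> int:
    """Receptive field (in input pixels) of one output unit of a conv/pool stack."""
    rf, jump = 1, 1  # jump = input-pixel distance between adjacent units
    for kernel, stride, dilation in layers:
        effective_kernel = dilation * (kernel - 1) + 1
        rf += (effective_kernel - 1) * jump
        jump *= stride
    return rf


# Hypothetical encoder: 4 stages of two 3x3 convs + 2x2 max-pool, then two
# 3x3 convs at the bottleneck. NOT the FCN of Fig. 2.
conv, pool = (3, 1, 1), (2, 2, 1)
encoder = [conv, conv, pool] * 4 + [conv, conv]
print(receptive_field(encoder))
```

For this hypothetical stack the function returns 140, which is smaller than the 200 × 200 pouch-plus-context region; an extra pooling stage or dilated convolutions (dilation > 1) are the usual ways to enlarge it.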

Fig. 2. The structure of our FCN model.

Boundary refinement

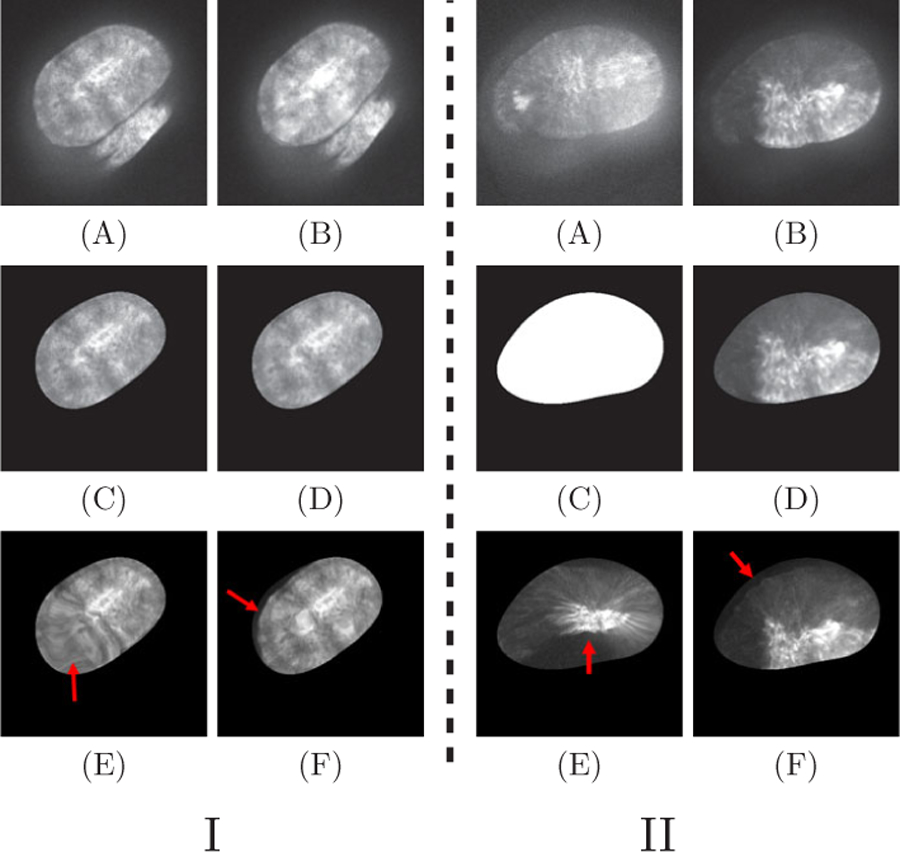

We observed that the boundaries of the pouches output by our FCN model may be fuzzy or irregularly shaped in difficult cases (see Fig. 4(F)). To improve boundary accuracy, we first apply a Gaussian smoothing filter to reduce the influence of intensity variations inside the pouches, and then employ a graph search algorithm whose node weights are the negative gradient magnitudes to refine the shape boundaries. This allows the subsequent mapping stage to build upon more accurate segmentation and produce better registration results. Details of this process are omitted due to the page limit.
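Since the details are omitted, the following is only one common way to realize such a graph search, offered as an assumption rather than the authors' procedure: unwrap the smoothed image around the pouch center into an (angle × radius) grid, use the negative gradient magnitude as the node cost, and find one radius per angle by dynamic programming with a smoothness limit |Δr| ≤ 1. The function names, nearest-neighbour ray sampling, and the open (non-closed) path are all hypothetical simplifications; Gaussian smoothing is assumed to have been applied beforehand (for example with `scipy.ndimage.gaussian_filter`).

```python
import numpy as np


def polar_cost(image, center, n_angles=64, n_radii=21):
    """Sample -|grad I| along rays from `center` (row, col) into an (angle, radius) grid."""
    img = np.asarray(image, dtype=float)  # assumed already Gaussian-smoothed
    gy, gx = np.gradient(img)             # derivatives along rows, then columns
    grad_mag = np.hypot(gx, gy)
    angles = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    radii = np.arange(n_radii)
    # Nearest-neighbour sampling along each ray, clipped to the image.
    rows = np.rint(center[0] + np.outer(np.sin(angles), radii)).astype(int)
    cols = np.rint(center[1] + np.outer(np.cos(angles), radii)).astype(int)
    rows = np.clip(rows, 0, img.shape[0] - 1)
    cols = np.clip(cols, 0, img.shape[1] - 1)
    # Node weight = negative gradient magnitude, so strong edges are cheap.
    return -grad_mag[rows, cols], angles, radii


def min_cost_boundary(cost, max_jump=1):
    """DP search for one radius index per angle, |r[a+1] - r[a]| <= max_jump.

    The path is open: the first and last angles are not forced to match.
    """
    n_angles, n_radii = cost.shape
    acc = cost[0].copy()
    back = np.zeros((n_angles, n_radii), dtype=int)
    for a in range(1, n_angles):
        new_acc = np.empty(n_radii)
        for r in range(n_radii):
            lo, hi = max(0, r - max_jump), min(n_radii, r + max_jump + 1)
            best = lo + int(np.argmin(acc[lo:hi]))
            back[a, r] = best
            new_acc[r] = acc[best] + cost[a, r]
        acc = new_acc
    # Trace back from the cheapest end point.
    r = int(np.argmin(acc))
    path = [r]
    for a in range(n_angles - 1, 0, -1):
        r = int(back[a, r])
        path.append(r)
    return np.array(path[::-1])


# Usage on a synthetic disk of radius 10 centred at (32, 32).
yy, xx = np.mgrid[:64, :64]
disk = (((yy - 32) ** 2 + (xx - 32) ** 2) <= 100).astype(float)
cost, angles, radii = polar_cost(disk, (32, 32))
r = radii[min_cost_boundary(cost)]
```

A production version would also enforce closure (first radius equal to last) and map the radii back to image coordinates.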

Fig. 4. Segmentation results of different methods on a somewhat difficult pouch image (BR = Boundary Refinement). The red arrow indicates a location that demonstrates the effect of BR.

2.2. Mapping Stage

The goal of registration is to find, for every point in the source image, the optimal corresponding point in the reference image. A key observation is that the intensity profile of the same pouch may change substantially across frames of an image sequence as Ca2+ signal waves pass through it. Hence, intensity is not a reliable cue for finding correspondences between points in different frames. Instead, we utilize the results of the segmentation stage.

Rigid transformation

Since pouches undergo substantial rigid movement (i.e., rotation and translation) in image sequences, we first compute an optimal rigid transformation to reduce its influence. This optimization uses a regular-step gradient descent algorithm. In practice, however, the optimizer can become trapped in local optima. Specifically, since pouches are often oval, a local optimum may align the object with the opposite orientation. To resolve this issue, we initialize the optimization using the optimal parameters computed for the preceding frame, since pouch movement between consecutive frames is usually small.
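The optimizer and the warm-start strategy can be sketched as follows. This is a minimal illustration, assuming a generic scalar cost over a parameter vector (for the rigid case, something like (θ, tx, ty)); it follows the general idea of regular-step optimizers such as those in ITK and MATLAB, but the function names, default step sizes, and finite-difference gradient are hypothetical choices, not the authors' settings.

```python
import numpy as np


def numerical_grad(cost, x, h=1e-6):
    """Central-difference gradient of a scalar cost at parameter vector x."""
    g = np.zeros_like(x)
    for i in range(x.size):
        e = np.zeros_like(x)
        e[i] = h
        g[i] = (cost(x + e) - cost(x - e)) / (2.0 * h)
    return g


def regular_step_gd(cost, x0, step=1.0, min_step=1e-4, relax=0.5, max_iter=2000):
    """Regular-step gradient descent (sketch).

    Each iteration moves a fixed distance `step` along the negative gradient
    direction. When the new gradient points against the previous one
    (negative dot product), the optimum was overshot, so the step is scaled
    by `relax`; the search stops once the step falls below `min_step`.
    """
    x = np.asarray(x0, dtype=float).copy()
    prev_g = None
    for _ in range(max_iter):
        g = numerical_grad(cost, x)
        norm = np.linalg.norm(g)
        if norm == 0.0:
            break
        if prev_g is not None and np.dot(g, prev_g) < 0.0:
            step *= relax
            if step < min_step:
                break
        x = x - step * g / norm
        prev_g = g
    return x


def register_sequence(frame_costs, x0):
    """Warm-start each frame's fit from the previous frame's optimum."""
    params, x = [], np.asarray(x0, dtype=float)
    for cost in frame_costs:
        x = regular_step_gd(cost, x)
        params.append(x)
    return params
```

Starting each frame at the previous optimum keeps the search inside the basin of the correct orientation, which is the point of the initialization rule above; restarting from zero on every frame would expose the optimizer to the flipped-orientation optimum.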

Non-rigid transformation

The non-rigid registration seeks an optimal transformation $T: (x, y, t) \mapsto (x', y', t_0)$, which maps any point in the source image at time t to time t0 (i.e., to the reference image). We use the free-form deformation (FFD) transformation model, based on B-splines [9], to find an optimal transformation.

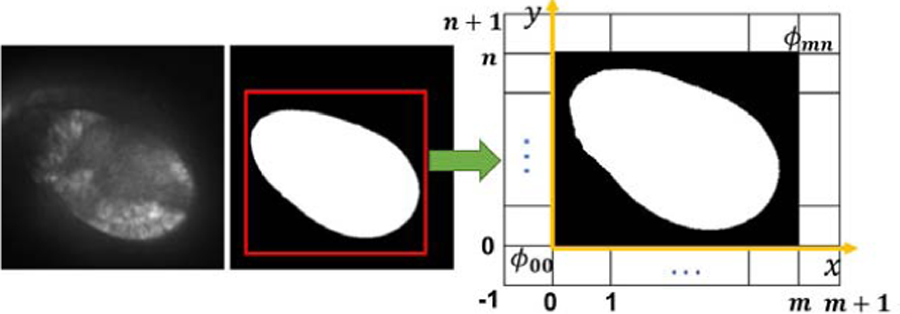

For our problem, few control points are needed outside a pouch, because we only need to focus on the ROI. Thus, detecting the ROI first saves computation time. After rigid registration, pouches lie near the same position in all frames, making it possible to perform the non-rigid transformation only around the ROI. Based on this observation, we crop an area around the pouch in the first frame and apply a lattice Φ to this area in the subsequent frames. We define the lattice Φ as an (m + 3) × (n + 3) grid over the domain Ω (see Fig. 3).
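The cropping and lattice placement can be sketched as below. This is a minimal illustration under stated assumptions: the crop is the bounding box of the first-frame pouch mask padded by a hypothetical `margin`, the m × n cells span that box along x (columns) and y (rows), and the extra control points at indices −1 and m + 1 (or n + 1) account for the "+3" in the lattice size. The function name and margin are hypothetical.

```python
import numpy as np


def roi_lattice(mask, m, n, margin=10):
    """Crop box around a binary pouch mask and an (m+3) x (n+3) control lattice.

    Returns (y0, y1, x0, x1) and the x/y coordinates of every control point,
    both of shape (m + 3, n + 3), indexed [i, j] with i along x and j along y.
    """
    ys, xs = np.nonzero(mask)
    y0 = max(int(ys.min()) - margin, 0)
    y1 = min(int(ys.max()) + margin + 1, mask.shape[0])
    x0 = max(int(xs.min()) - margin, 0)
    x1 = min(int(xs.max()) + margin + 1, mask.shape[1])
    # Control spacing such that m (resp. n) cells exactly span the box.
    dx = (x1 - x0) / m
    dy = (y1 - y0) / n
    # Lattice indices run from -1 to m+1 (resp. n+1): m+3 (resp. n+3) points.
    gx = x0 + dx * np.arange(-1, m + 2)
    gy = y0 + dy * np.arange(-1, n + 2)
    grid_x, grid_y = np.meshgrid(gx, gy, indexing="ij")
    return (y0, y1, x0, x1), grid_x, grid_y


mask = np.zeros((100, 100), dtype=bool)
mask[30:60, 40:80] = True  # stand-in for a segmented pouch
box, grid_x, grid_y = roi_lattice(mask, m=4, n=4, margin=5)
```

The same box and lattice are then reused for every later frame, which is valid only because rigid registration has already brought the pouches to roughly the same position.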

Fig. 3. Configuration of lattice grid Φ for non-rigid transformation.

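We define the registration model as follows. Let $\Omega = \{(x, y) \mid 0 \le x < X,\ 0 \le y < Y\}$ be a rectangular domain in the xy plane, where X and Y specify the boundary of the detection area (with x and y measured in units of the control-point spacing). To approximate the intensity of scattered points, I(x, y), in a pouch, we formulate a function f as a uniform cubic B-spline function:

$$f(x, y) = \sum_{k=0}^{3} \sum_{l=0}^{3} B_k(s)\, B_l(t)\, \phi_{(i+k)(j+l)},$$

where $i = \lfloor x \rfloor - 1$, $j = \lfloor y \rfloor - 1$, $s = x - \lfloor x \rfloor$, $t = y - \lfloor y \rfloor$, and $\phi_{ij}$ is the value of the ij-th control point of Φ. In addition, $B_k$ represents the k-th basis function of the uniform cubic B-spline:

$$B_0(t) = \frac{(1-t)^3}{6}, \quad B_1(t) = \frac{3t^3 - 6t^2 + 4}{6}, \quad B_2(t) = \frac{-3t^3 + 3t^2 + 3t + 1}{6}, \quad B_3(t) = \frac{t^3}{6}.$$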
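The tensor-product formula can be evaluated directly from the four basis functions. The sketch below is a minimal illustration, assuming coordinates already expressed in lattice units (pixel position minus crop origin, divided by control spacing) and storing Φ in an array whose index p corresponds to lattice index p − 1. The function names are hypothetical; in an FFD the same evaluation would typically be run once per displacement component, with Φ holding control-point displacements.

```python
import numpy as np


def bspline_basis(t):
    """Uniform cubic B-spline basis functions B0..B3 at t in [0, 1)."""
    return np.array([
        (1 - t) ** 3 / 6,
        (3 * t**3 - 6 * t**2 + 4) / 6,
        (-3 * t**3 + 3 * t**2 + 3 * t + 1) / 6,
        t**3 / 6,
    ])


def bspline_eval(phi, x, y):
    """f(x, y) = sum_k sum_l B_k(s) B_l(t) phi_(i+k)(j+l).

    phi has shape (m+3, n+3); array index p stores lattice index p - 1, so
    lattice point i + k with i = floor(x) - 1 lives at phi[floor(x) + k].
    Valid for 0 <= x < m and 0 <= y < n (lattice units).
    """
    fx, fy = int(np.floor(x)), int(np.floor(y))
    bs = bspline_basis(x - fx)          # weights B_k(s) along x
    bt = bspline_basis(y - fy)          # weights B_l(t) along y
    patch = phi[fx:fx + 4, fy:fy + 4]   # the 4 x 4 control points in support
    return float(bs @ patch @ bt)
```

Two sanity checks follow from the basis definitions: the four weights sum to 1 for any t (so a constant lattice reproduces the constant), and a lattice whose values equal their own lattice indices reproduces f(x, y) = x + y exactly. Both fail if B2 is written with a −6t² term instead of +3t² + 3t + 1.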

The resolution of the control points determines the degree of non-rigidity and strongly affects computation time: a higher resolution allows more freedom of deformation but also increases computation time. To balance this trade-off, we use a multi-level mesh grid approach [10] to devise a computationally efficient algorithm. Let Φ1, Φ2, …, Φg denote a hierarchy of control-point meshes with increasing resolutions, and let T1, T2, …, Tg be the deformation functions associated with each mesh. We first apply a coarse deformation and then refine it gradually. The size of the mesh grid increases by a factor of 2 at each level while the control-point spacing stays the same, so the raw image is down-sampled to the corresponding size at each level. The final deformation is the composition of these functions: $T(\Omega) = T_g(\cdots T_2(T_1(\Omega))\cdots)$.
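One reading of this coarse-to-fine scheme can be sketched as a schedule plus a composition helper. The sketch assumes that at level l (1 = coarsest) the lattice has m·2^(l−1) × n·2^(l−1) cells (plus the 3 border points) and the image is down-sampled by 2^(g−l), which keeps the control spacing in pixels constant across levels. Both function names are hypothetical, and lattice refinement by B-spline subdivision is not shown.

```python
from typing import Callable, List, Tuple


def level_schedule(image_shape: Tuple[int, int], m: int, n: int, g: int):
    """(down-sampled image shape, lattice shape) for levels 1..g, coarse to fine."""
    levels = []
    for level in range(1, g + 1):
        factor = 2 ** (g - level)            # image down-sampling factor
        cells = 2 ** (level - 1)             # lattice refinement factor
        img = (image_shape[0] // factor, image_shape[1] // factor)
        lattice = (m * cells + 3, n * cells + 3)
        levels.append((img, lattice))
    return levels


def compose(transforms: List[Callable]):
    """Return T with T(p) = T_g(...T_2(T_1(p))...): T_1 is applied first."""
    def T(p):
        for t in transforms:
            p = t(p)
        return p
    return T


schedule = level_schedule((512, 512), m=4, n=4, g=3)
```

For a 512 × 512 image with a 4 × 4 base lattice and three levels, this gives (128 × 128, 7 × 7), (256 × 256, 11 × 11), and (512 × 512, 19 × 19). The order in `compose` matters: the coarse deformation T1 acts on the input points before any finer correction.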

To obtain an optimal transformation (i.e., optimal control-point values of Φ), we minimize the energy function $E = F_s + Q S$, where the first term is the similarity measure and the second term is for regularization: Q is the curvature penalization and S is the displacement of the control points. The similarity measure we use is the sum of squared differences (SSD):

$$F_s = \frac{1}{N} \sum_{(x, y) \in \Omega} \big(A(x, y) - B_T(x, y)\big)^2,$$

where A is the reference image intensity function, $B_T$ is the intensity function of the transformed image of a source image B under the current transformation T, and N is the number of pixels.

This process iteratively updates the transformation parameters T using a gradient descent algorithm. The algorithm terminates when the cost function falls below ε or the number of iterations exceeds $N_{max}$.
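The energy and the stopping rule can be sketched as follows. The SSD term follows the formula above; the regularizer is a hypothetical reading of the Q·S term (a weight times the sum of squared second differences of control-point displacements along both lattice axes, a common discrete bending penalty), and the plain gradient-descent loop simply shows the two termination conditions (cost below ε, or N_max iterations). All names are hypothetical.

```python
import numpy as np


def ssd(A, B_T):
    """F_s = (1/N) * sum over pixels of (A - B_T)^2."""
    A = np.asarray(A, dtype=float)
    B_T = np.asarray(B_T, dtype=float)
    return float(np.mean((A - B_T) ** 2))


def curvature_penalty(disp):
    """Hypothetical curvature term: squared second differences of the
    control-point displacements along both lattice axes (zero for affine fields)."""
    d = np.asarray(disp, dtype=float)
    dxx = np.diff(d, n=2, axis=0)
    dyy = np.diff(d, n=2, axis=1)
    return float(np.sum(dxx ** 2) + np.sum(dyy ** 2))


def energy(A, B_T, disp, weight):
    """E = F_s + weight * curvature penalty (one reading of E = F_s + QS)."""
    return ssd(A, B_T) + weight * curvature_penalty(disp)


def gradient_descent(cost, grad, x0, lr, eps, n_max):
    """Stop when cost(x) < eps or after n_max updates; returns (x, updates)."""
    x = np.asarray(x0, dtype=float).copy()
    for it in range(n_max):
        if cost(x) < eps:
            return x, it
        x = x - lr * grad(x)
    return x, n_max
```

For example, minimizing x² from x = 1 with learning rate 0.25 halves x at each update, so the cost first drops below ε = 10⁻⁶ after 10 updates.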

3. EXPERIMENTS AND EVALUATIONS

We evaluate our registration approach from two perspectives. First, we evaluate the accuracy of the segmentation method, because the segmentation accuracy is crucial to our overall approach. Second, we conduct experiments using both synthetic data and real biological data to assess the registration performance of different approaches.

3.1. Segmentation Evaluations

We conduct experiments on 100 images of 512 × 512 pixels selected from 10 randomly chosen control videos. We compare our method with a traditional method (level set [11]) and state-of-the-art FCN models (U-Net [7] and CUMedNet [12]). The FCN models are trained using the Adam optimizer [13] with a learning rate of 0.0005, and data augmentation (random rotation and flipping) is used during training. We use mean IU (intersection over union) and the F1 score as metrics. Table 1 shows the quantitative results of the different methods, and Fig. 4 shows segmentation examples. Our FCN model outperforms the compared methods on both metrics (mean IU 0.9586 vs. 0.9479 for U-Net), and boundary refinement further improves the results (mean IU 0.9617), yielding more accurate ROI boundaries.
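Both metrics can be computed from binary masks as sketched below. This assumes IU and F1 are computed for the pouch (foreground) class on each image and then averaged over images; the paper does not state whether "mean IU" averages over images or over classes, so that choice, and the function names, are assumptions.

```python
import numpy as np


def iou_f1(pred, gt):
    """Foreground IU and F1 (Dice) for one pair of binary masks."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    tp = np.logical_and(pred, gt).sum()
    fp = np.logical_and(pred, ~gt).sum()
    fn = np.logical_and(~pred, gt).sum()
    union = tp + fp + fn
    if union == 0:            # both masks empty: count as a perfect match
        return 1.0, 1.0
    return float(tp / union), float(2 * tp / (2 * tp + fp + fn))


def mean_scores(preds, gts):
    """Average IU and F1 over a set of images."""
    scores = np.array([iou_f1(p, g) for p, g in zip(preds, gts)])
    return float(scores[:, 0].mean()), float(scores[:, 1].mean())
```

For a prediction covering two pixels of which one is the single ground-truth pixel, IU is 1/2 and F1 is 2/3; F1 is always at least as large as IU, which is consistent with every row of Table 1.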

Table 1. Comparison results of segmentation.

| Method | Mean IU | F1 score |
|---|---|---|
| Level set | 0.8235 | 0.8294 |
| CUMedNet | 0.9394 | 0.9454 |
| U-Net | 0.9479 | 0.9542 |
| Our FCN | 0.9586 | 0.9643 |
| Our FCN + BR | 0.9617 | 0.9682 |

3.2. Registration Evaluations

We compare registration performance with the Demon algorithm [14] and a B-spline method based on Residual Complexity (RC) [15], two state-of-the-art non-rigid registration methods for images with spatially varying intensity.

Synthetic data

For synthetic data, we choose 8 pouch images as reference images. For each reference image R, we generate 20 source images $S_i = IT_i(GT_i(R))$, $i = 1, \dots, 20$, by applying a geometric distortion $GT_i$ and an intensity distortion $IT_i$, to simulate an image sequence undergoing movement and Ca2+ signaling. For geometric distortion, we apply an elastic spline deformation that perturbs points according to a grid deformation, together with a rigid transformation. For intensity distortion, we first add Gaussian noise in random disk-shaped regions within the pouch to simulate calcium signal waves and then rescale the intensity to [0, 1]. Our goal is to find the optimal transformation $T_i$ from every source image $S_i$ to the corresponding reference image R. To quantify performance, we compute the intensity root mean square error (RMSE) between the reference image R and the clean registered images $C_i = T_i(GT_i(R))$, which are obtained by applying $T_i$ to the geometrically distorted images without intensity distortion. This evaluates whether a registration algorithm finds the correct geometric transformation without damaging the texture. Fig. 5.I shows visual results of the different methods, and Table 2 gives the quantitative results. Our approach achieves the lowest RMSE on all 8 movies, with an average of 0.094 compared with 0.120 for RC and 0.151 for Demon.
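The intensity distortion and the error measure can be sketched as follows; the elastic geometric distortion is omitted. This is a minimal illustration assuming a binary pouch mask, a fixed disk radius, and a single noise standard deviation, all of which are hypothetical parameters rather than the values used to build the benchmark.

```python
import numpy as np


def add_disk_noise(image, mask, n_disks, radius, sigma, rng):
    """Hypothetical intensity distortion IT: Gaussian noise inside random
    disks centred within the pouch mask, then min-max rescaling to [0, 1]."""
    out = np.asarray(image, dtype=float).copy()
    mask = np.asarray(mask, dtype=bool)
    h, w = out.shape
    yy, xx = np.mgrid[:h, :w]
    inside = np.argwhere(mask)
    for _ in range(n_disks):
        cy, cx = inside[rng.integers(len(inside))]   # random centre in the pouch
        disk = ((yy - cy) ** 2 + (xx - cx) ** 2 <= radius ** 2) & mask
        out[disk] += rng.normal(0.0, sigma, size=int(disk.sum()))
    lo, hi = out.min(), out.max()
    return (out - lo) / (hi - lo) if hi > lo else np.zeros_like(out)


def rmse(a, b):
    """Intensity root mean square error between two images."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.sqrt(np.mean((a - b) ** 2)))


rng = np.random.default_rng(0)
yy, xx = np.mgrid[:32, :32]
pouch = (yy - 16) ** 2 + (xx - 16) ** 2 <= 100   # stand-in pouch mask
distorted = add_disk_noise(np.zeros((32, 32)), pouch, n_disks=3, radius=4, sigma=0.5, rng=rng)
```

In the evaluation protocol above, `rmse` would be applied to R and C_i rather than to the intensity-distorted source, so texture changes caused by the simulated Ca2+ waves do not count as registration error.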

Fig. 5. Registration results. I: Synthetic data. (A) A reference image; (B) A source image; (C) The expected registration result; (D) Our method; (E) RC; (F) Demon. II: Real pouches. (C) Intermediate segmentation; (D) Our method; (E) RC; (F) Demon. The red arrows point at some areas with unsatisfactory registration.

Table 2. Registration results of synthetic data (intensity RMSE).

| Movie No. | Our method RMSE | RC RMSE | Demon RMSE |
|---|---|---|---|
| 1 | 0.097 ± 0.019 | 0.137 ± 0.039 | 0.150 ± 0.023 |
| 2 | 0.097 ± 0.021 | 0.133 ± 0.025 | 0.184 ± 0.043 |
| 3 | 0.091 ± 0.012 | 0.137 ± 0.033 | 0.162 ± 0.021 |
| 4 | 0.098 ± 0.009 | 0.119 ± 0.024 | 0.128 ± 0.018 |
| 5 | 0.086 ± 0.011 | 0.094 ± 0.020 | 0.124 ± 0.010 |
| 6 | 0.088 ± 0.013 | 0.132 ± 0.059 | 0.149 ± 0.015 |
| 7 | 0.095 ± 0.018 | 0.096 ± 0.013 | 0.133 ± 0.021 |
| 8 | 0.099 ± 0.019 | 0.109 ± 0.019 | 0.175 ± 0.034 |
| Average | 0.094 ± 0.015 | 0.120 ± 0.029 | 0.151 ± 0.023 |

Wing disc pouch data

We randomly choose 8 movies from 150 control videos. In each movie, we take the first frame as the reference image and all other frames as source images. To validate the registration results, we apply the transformation T to the annotations of the source images to obtain registered annotation boundaries and compare them with the reference annotation using the Hausdorff distance (HD). Table 3 gives the quantitative results. Our approach achieves the lowest HD on all 8 movies, with an average of 5.303 pixels compared with 6.077 for RC and 6.273 for Demon. Our method also preserves clear texture inside the ROIs, as shown by the examples in Fig. 5.II.
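The boundary comparison can be sketched with a brute-force symmetric Hausdorff distance between two sets of boundary pixels. This minimal NumPy version assumes the annotations are binary masks whose boundary is the set of foreground pixels with at least one 4-connected background neighbour; the function names are hypothetical. SciPy's `scipy.spatial.distance.directed_hausdorff` computes the one-directional distance for larger point sets.

```python
import numpy as np


def boundary_points(mask):
    """(row, col) of foreground pixels with a 4-connected background neighbour."""
    m = np.pad(np.asarray(mask, dtype=bool), 1)
    core = m[1:-1, 1:-1]
    interior = core & m[:-2, 1:-1] & m[2:, 1:-1] & m[1:-1, :-2] & m[1:-1, 2:]
    return np.argwhere(core & ~interior)


def hausdorff(a, b):
    """Symmetric Hausdorff distance between point sets a (N x 2) and b (M x 2)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)  # N x M distances
    return float(max(d.min(axis=1).max(), d.min(axis=0).max()))
```

The registered source annotation is assumed to have been warped by T beforehand; the distance is then `hausdorff(boundary_points(warped), boundary_points(reference))`, in pixels.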

Table 3. Registration results of real pouch data.

| Movie No. | Our method HD (pixels) | RC HD (pixels) | Demon HD (pixels) |
|---|---|---|---|
| 1 | 5.199 ± 0.484 | 5.490 ± 0.876 | 5.633 ± 0.517 |
| 2 | 5.556 ± 1.250 | 6.189 ± 0.833 | 6.819 ± 1.193 |
| 3 | 5.568 ± 0.702 | 6.403 ± 0.812 | 6.164 ± 0.514 |
| 4 | 4.342 ± 1.347 | 6.160 ± 1.311 | 6.761 ± 0.787 |
| 5 | 4.622 ± 0.684 | 5.461 ± 0.824 | 5.072 ± 0.330 |
| 6 | 4.913 ± 0.527 | 5.328 ± 1.394 | 6.696 ± 0.703 |
| 7 | 6.882 ± 1.162 | 7.413 ± 0.574 | 7.192 ± 0.836 |
| 8 | 5.344 ± 0.443 | 6.173 ± 0.805 | 5.845 ± 0.576 |
| Average | 5.303 ± 0.825 | 6.077 ± 0.929 | 6.273 ± 0.682 |

4. DISCUSSIONS AND CONCLUSIONS

In this paper, we propose a new two-stage non-rigid image registration approach and apply it to analyze live imaging data of wing disc pouches for Ca2+ signaling studies. Compared to state-of-the-art non-rigid methods for biomedical image registration, our approach achieves higher accuracy in aligning images with substantial texture distortions. Our approach lays a foundation for quantitative analysis of pouch image sequences in whole-organ studies of Ca2+ signaling related diseases. It may be extended to other biomedical image registration problems, especially when the intensity profiles and texture patterns of the target objects change significantly. The mapping stage of our approach is application-dependent, while our segmentation method is general and can be applied to many problems by modifying only the graph search based boundary refinement procedure.

5. ACKNOWLEDGMENT

This research was supported in part by the Nanoelectronics Research Corporation (NERC), a wholly-owned subsidiary of the Semiconductor Research Corporation (SRC), through Extremely Energy Efficient Collective Electronics (EXCEL), an SRC-NRI Nanoelectronics Research Initiative under Research Task ID 2698.005, and by NSF grants CCF-1640081, CCF-1217906, CNS-1629914, CCF-1617735, CBET-1403887, and CBET-1553826, NIH R35GM124935, Harper Cancer Research Institute Research like a Champion awards, Walther Cancer Foundation Interdisciplinary Interface Training Project, the Notre Dame Advanced Diagnostics and Therapeutics Berry Fellowship, the Notre Dame Integrated Imaging Facility, and the Bloomington Stock Center for fly stocks.

6. REFERENCES

- [1] Wu Q, Brodskiy P, Narciso C, Levis M, Chen J, Liang P, Arredondo-Walsh N, Chen DZ, and Zartman JJ, “Inter-cellular calcium waves are controlled by morphogen signaling during organ development,” bioRxiv, no. 104745, 2017.

- [2] Ardiel EL, Kumar A, Marbach J, Christensen R, Gupta R, Duncan W, Daniels JS, Stuurman N, Colón-Ramos D, and Shroff H, “Visualizing calcium flux in freely moving nematode embryos,” Biophysical Journal, vol. 112, no. 9, pp. 1975–1983, 2017.

- [3] Wong A and Orchard J, “Robust multimodal registration using local phase-coherence representations,” Journal of Signal Processing Systems, vol. 54, no. 1–3, p. 89, 2009.

- [4] El-Baz A, Farag A, Gimel’farb G, and Abdel-Hakim AE, “Image alignment using learning prior appearance model,” in ICIP, pp. 341–344, 2006.

- [5] Woo J, Stone M, and Prince JL, “Multimodal registration via mutual information incorporating geometric and spatial context,” IEEE Transactions on Image Processing, vol. 24, no. 2, pp. 757–769, 2015.

- [6] Chen J, Yang L, Zhang Y, Alber M, and Chen DZ, “Combining fully convolutional and recurrent neural networks for 3D biomedical image segmentation,” in NIPS, pp. 3036–3044, 2016.

- [7] Ronneberger O, Fischer P, and Brox T, “U-Net: Convolutional networks for biomedical image segmentation,” in MICCAI, pp. 234–241, 2015.

- [8] Yang L, Zhang Y, Chen J, Zhang S, and Chen DZ, “Suggestive annotation: A deep active learning framework for biomedical image segmentation,” in MICCAI, pp. 399–407, 2017.

- [9] Lee S, Wolberg G, Chwa K-Y, and Shin SY, “Image metamorphosis with scattered feature constraints,” IEEE Transactions on Visualization and Computer Graphics, vol. 2, no. 4, pp. 337–354, 1996.

- [10] Rueckert D, Sonoda L, Denton E, Rankin S, Hayes C, Leach MO, Hill D, and Hawkes DJ, “Comparison and evaluation of rigid and non-rigid registration of breast MR images,” in SPIE, vol. 3661, pp. 78–88, 1999.

- [11] Li C, Kao C-Y, Gore JC, and Ding Z, “Minimization of region-scalable fitting energy for image segmentation,” IEEE Transactions on Image Processing, vol. 17, no. 10, pp. 1940–1949, 2008.

- [12] Chen H, Qi X, Cheng J-Z, Heng P-A, et al., “Deep contextual networks for neuronal structure segmentation,” in AAAI, pp. 1167–1173, 2016.

- [13] Kingma D and Ba J, “Adam: A method for stochastic optimization,” arXiv preprint arXiv:1412.6980, 2014.

- [14] Kroon D-J and Slump CH, “MRI modality transformation in demon registration,” in ISBI, pp. 963–966, 2009.

- [15] Myronenko A and Song X, “Image registration by minimization of residual complexity,” in CVPR, pp. 49–56, 2009.