Abstract

High-resolution real-time intraocular imaging of the retina at the cellular level is very challenging due to the vulnerable and confined space within the eyeball as well as the limited availability of appropriate modalities. A probe-based confocal laser endomicroscopy (pCLE) system is a potential imaging modality for improved diagnosis. The ability to visualize the retina at the cellular level could provide information that may predict surgical outcomes. The adoption of intraocular pCLE scanning is currently limited by the narrow field of view and the micron-scale range of focus. In the absence of motion compensation, physiological tremor of the surgeon’s hand and patient movements also degrade the image quality.

Therefore, an image-based hybrid control strategy is proposed to mitigate the above challenges. The proposed hybrid control strategy enables shared control of the pCLE probe between the surgeon and the robot, so that the retina can be scanned precisely and free of hand tremor while an image-based auto-focus algorithm optimizes the quality of the pCLE images. The hybrid control strategy is deployed on two frameworks: cooperative and teleoperated. Better image quality, smoother motion, and reduced workload are all achieved in a statistically significant manner with the hybrid control frameworks.

I. Introduction

Among the various vision-threatening medical conditions, retinal detachment is one of the most common, occurring at a rate of about 1 in 10,000 per eye per year worldwide [1]. Mechanistically, retinal detachment represents a separation of the neural retina from the necessary underlying supporting tissues, such as the retinal pigment epithelium and the underlying choroidal blood vessels, which collectively provide multiple types of support required for retinal survival. If not treated promptly, retinal detachment can result in a permanent loss of vision. One possible regenerative therapy for the injured but still viable retina is to deliver a neuroprotective agent, such as stem cells, to targeted retinal cells. However, this requires identifying the viable retinal cells using cellular-level information [2].

A recent promising technique for in-vivo characterization and real-time visualization of biological tissues at the cellular level is probe-based confocal laser endomicroscopy (pCLE). pCLE is an optical visualization technique that can facilitate cellular-level imaging of biological tissues in confined spaces. The effectiveness of pCLE has previously been demonstrated using real-time visualization of the gastrointestinal tract [3], thyroid gland [4], breast [5] and gastric [6] tissue. The use of benchtop confocal microscopy systems for imaging externally mounted retinal tissue has been reported [7][8]. However, the small footprint of a pCLE probe (typically on the order of 1 mm) makes pCLE a suitable technique for intraocular cellular-level visualization. To our knowledge, there is only one prior work on intraocular pCLE scanning of the retina (M. Paques, personal communication, March 22, 2020), which used a contact-based CellVizio ProFlex probe (Mauna Kea Technologies). The work by M. Paques et al. acquired only a single image, without attempting to scan a large area. The image was acquired manually without considering the safety of the retina.

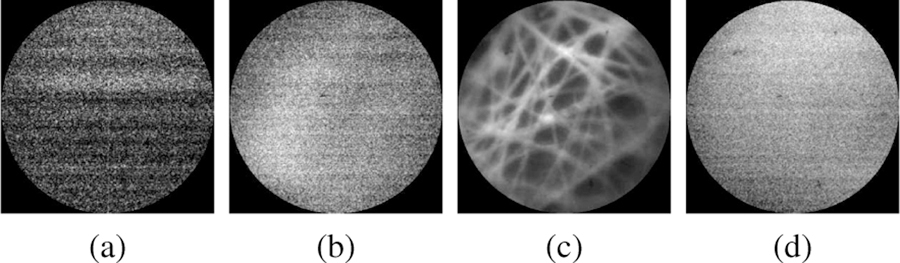

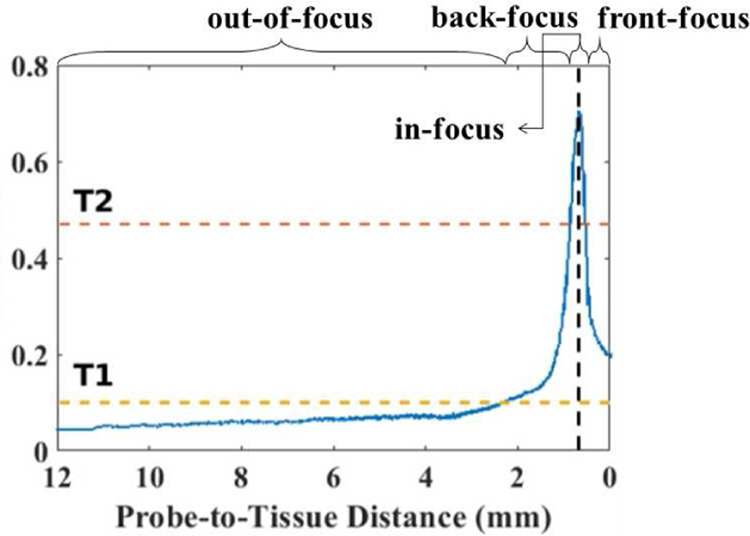

There are, however, several steep challenges to manual intraocular scanning of the retina using a pCLE probe for real-time in-vivo diagnosis. First, the extreme fragility and non-regenerative nature of retinal tissue leave no margin for damage. It has been shown that forces as low as 7.5 mN can tear the retina [9], thus necessitating a non-contact scanning probe. Previous reports of contactless in vivo cornea scanning [10][11] support the feasibility of the non-contact scanning principle. Furthermore, due to the small size of pCLE fiber bundles, pCLE has a narrow field of view, usually on the sub-millimeter scale [12]. Considering that the average diameter of the human eye is approximately 23.5 mm, a single pCLE image covers only a tiny portion of the scanning region. A solution to this is to use mosaicking algorithms. However, mosaicking algorithms require consistently high-quality images for feature matching and image stitching. The micron-scale focus range of a pCLE system makes the manual acquisition of high-quality images extremely difficult, as the image quality drops considerably outside the optimal focus range [13]. For example, the non-contact pCLE system with a distal-focus lens in this study has an optimal focus distance of approximately 700 µm and a focus range of approximately 200 µm. Fig. 1 shows a comparison of views from our non-contact probe for four cases: 1) out-of-range, 2) back-focus, 3) in-focus, and 4) front-focus, measured at a probe-to-tissue distance of 2.34 mm, 1.16 mm, 0.69 mm, and fully in contact with the tissue, respectively. The physiological tremor of the human hand is on the order of several hundred microns [14][15], making it almost impossible to consistently maintain the pCLE probe in the optimal range required for high-quality image acquisition while assuring no or minimal contact. In addition, patient movement, as well as the movement of the detached retina during the repair procedure, adds to the complexity of manual scanning of the detached retinal tissue.

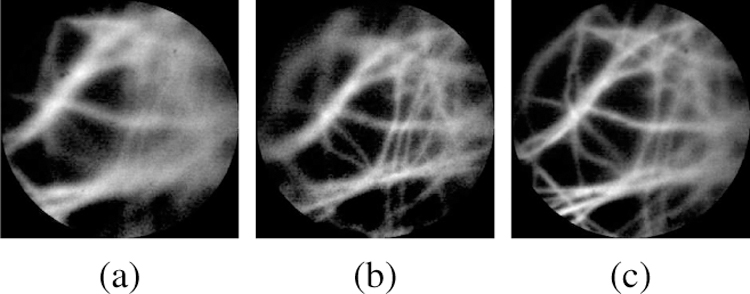

Fig. 1.

Sample views of a non-contact pCLE system: (a) out-of-range, (b) back-focus, (c) in-focus, and (d) front-focus views, measured at a probe-to-tissue distance of (a) 2.34 mm, (b) 1.16 mm, (c) 0.69 mm, and (d) fully in contact with the tissue, respectively.

These challenges highlight the need for robot-assisted manipulation of the pCLE probe for intraocular scanning of the retinal tissue. While robotic systems have been developed in the literature to mitigate the difficulties of manipulating pCLE probes, most are not yet suitable for intraocular use. In [16], a hand-held device with a voice-coil actuator and a force sensor is presented to enable consistent probe-tissue contact. This device uses the measured forces to control the probe motion relative to the tissue. In [17], a hollow tube is added surrounding the pCLE probe to provide friction-based adherence during contact with the tissue to enable a “steady motion”. All the discussed techniques, however, are only compatible with contact-based pCLE probes, which, together with the large footprint of the devices, makes them inappropriate for scanning the delicate retinal tissue inside the confined space of the eyeball.

Robotic integration of a pCLE probe that does not require probe-tissue contact has also been achieved in [18], which presents a system that tracks the tip position of the pCLE probe with an external tracker. However, in that work, the scanning task was performed on a flat surface, with the geometry and position of the tissue assumed to be known. The curvature of the eyeball and the uneven surface of the detached retina violate these assumptions, rendering this system inapplicable to retinal surgery.

Therefore, in this paper, a semi-autonomous hybrid approach is proposed for real-time pCLE scanning of the retina. Features of the proposed hybrid approach are briefly described:

a robot-assisted control that allows the surgeon to maneuver the probe freely along the tissue surface in the lateral directions with micron-level precision, where the use of a robot eliminates the effect of hand tremor,

an auto-focus algorithm based on the pCLE images that actively and optimally adjusts the probe-to-tissue distance for best image quality and enhanced safety.

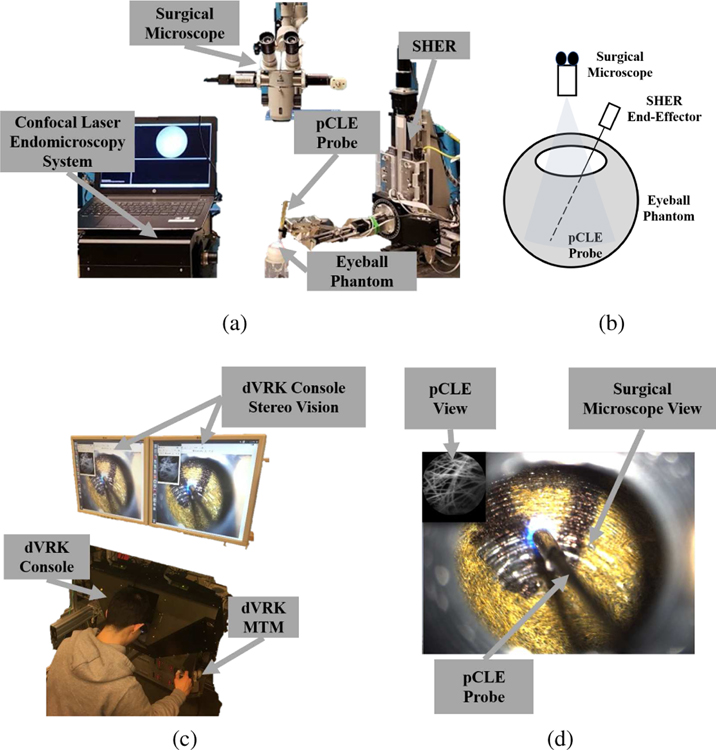

The hybrid control strategy is deployed on two frameworks, cooperative and teleoperated, which have been shown to significantly improve the position precision in retinal surgery [19]. Both implementations use the Steady-Hand Eye Robot (SHER, developed at LCSR, Johns Hopkins University) end-effector to hold the customized non-contact fiber-bundle pCLE imaging probe. The pCLE probe is connected to a high-speed Line-Scan Confocal Laser Endomicroscopy system (developed at the Hamlyn Centre, Imperial College [20]). The teleoperated framework includes the da Vinci Research Kit (dVRK, developed at LCSR, Johns Hopkins University) to allow surgeons to remotely control the pCLE probe using the master tool manipulator (MTM) and the stereo-vision console. A surgical microscope is used to visualize the surgical scene within the eyeball. Fig. 2 shows the complete setup.

Fig. 2.

The complete setup: (a) the SHER robot, the surgical microscope, the confocal laser endomicroscopy system, the pCLE probe, and an artificial eyeball phantom, (b) sketch of the setup, (c) the dVRK stereo vision, console and MTM, (d) the user’s view, where the pCLE view is overlaid on the top left of the surgical microscope view looking through the eyeball opening, and the pCLE probe can be seen.

The proposed hybrid cooperative and teleoperated frameworks have been validated through a series of experiments and a set of user studies, in which they are compared to manual operation, traditional cooperative and teleoperated control systems. We have discovered that the proposed hybrid frameworks result in statistically significant improvements in image quality, motion smoothness, and user workload.

Contribution:

To the best of our knowledge, this work is the first reported attempt to perform intraocular pCLE scanning of a large area of the retina with a distal-focus probe and with robot assistance. The introduction of an auto-focus, robot-assisted, non-contact pCLE scanning system addresses the previously discussed challenges and provides surface information of the retina with enhanced safety and efficiency. The technical contributions reported can be summarized as follows:

A novel hybrid teleoperated control framework is proposed to enable significant motion scaling for improved precision of pCLE control, thus leading to consistently high-quality images. An improved hybrid cooperative framework extending the system previously presented in [21] is also described.

The proposed hybrid controller includes a novel auto-focus algorithm to find the optimal position for best image quality while enhancing operation safety by avoiding probe-retina contact. A prior model of the retina curvature is included to speed up the algorithm and relax the assumption of scanning a flat surface within a static eye, which limited our previous work in [21].

Three experiment scenarios validate the challenge of manual scanning, the efficacy of the proposed auto-focus algorithm, and the enhanced smoothness of the scanning path and improved image quality using the hybrid framework over the traditional one.

A user study involving 14 participants demonstrates the enhanced image-quality and reduced user workload in a statistically significant manner using the proposed hybrid system.

The remainder of the paper is organized as follows: Section II explains the implementation of hybrid control strategy by using the hybrid cooperative framework; Section III presents the hybrid teleoperated framework; Section IV presents the three experiment evaluations; Section V presents the user study, results and discussion; Section VI concludes the paper.

II. Hybrid Cooperative Framework

The hybrid cooperative framework setup consists of the pCLE system and the SHER. The SHER is a cooperatively controlled robot with 5-Degrees-of-Freedom (DOF) developed for vitreoretinal surgery. It has a positioning resolution of 1 µm and a bidirectional repeatability of 3 µm. The pCLE system used for real-time image acquisition is a high-speed line-scanning fiber bundle endomicroscope, coupled to a customized probe (FIGH-30–800G, Fujikura Ltd.) of 30,000-core fiber bundle with distal micro-lens (SELFOC ILW-1.30, Go!Foton Corporation). The lens-probe distance is set such that the probe has an optimal focus distance of about 700 µm and a focus range of 200 µm.

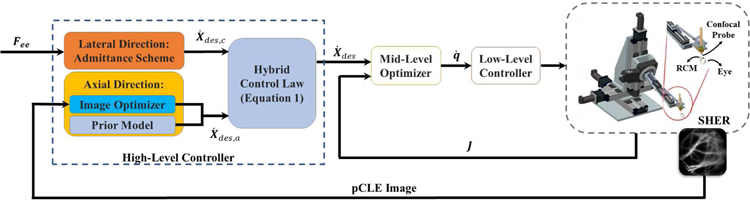

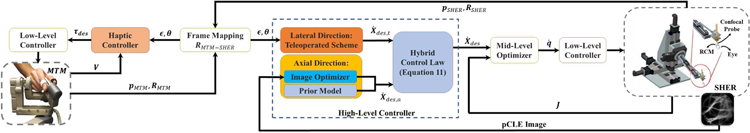

The hybrid cooperative framework consists of a high-level motion controller (which implements the semi-autonomous hybrid control strategy), a mid-level optimizer, and a low-level controller. Fig. 3 illustrates the block diagram of the overall closed-loop architecture.

Fig. 3.

General schematic of the proposed hybrid cooperative control strategy. Hybrid Control Law is described in Equation 1. Lateral Direction control is described in Section II-A2. Axial Direction control is described in Section II-A3 and Section II-A4. Mid-Level Optimizer and Low-Level Controller are described in Section II-B.

A. High-Level Controller

The high-level controller enables dual functionality:

the control of the pCLE probe by surgeons with robot assistance in directions lateral to the scanning surface. Surgeons can maneuver the pCLE probe to scan regions of interest while the robot cancels out hand tremors.

the autonomous control of the pCLE probe by the robot in the axial direction (along the normal direction of the tissue’s surface) to actively control the probe-tissue distance for optimized image quality. This active image-based feedback can reduce the task complexity and the workload on surgeons while improving the image quality.

The four components of the high-level controller will be discussed separately.

1). Hybrid Control Law:

The hybrid control law can be formulated as:

| (1) |

where subscripts des, c, and a denote the desired hybrid motion, the cooperative motion by the surgeon (used for lateral control), and the auto-focus motion by the robot (used for axial control), respectively; X denotes the Cartesian position of the pCLE probe tip expressed in the base frame of the SHER. Kc and Ka denote the motion specification matrices [22] that map a given motion to the lateral and axial directions of the retina surface, respectively, and are defined as follows:

| (2) |

| (3) |

where R denotes the orientation of the tissue normal expressed in the base frame of the SHER robot.
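The way the two motion specification matrices split the commanded motion can be illustrated with a small numerical sketch. The following is not the formulation used in this paper; it assumes a translation-only (3-DOF) case in which the axial direction is a unit tissue normal `n`, the axial projector is `n nᵀ`, and the lateral projector is its complement. The function names `motion_specification` and `hybrid_velocity` are hypothetical.

```python
import numpy as np

def motion_specification(normal):
    """Return (K_c, K_a) for a translation-only case.

    Assumption: K_a projects onto the tissue normal and K_c onto the
    plane orthogonal to it. The paper's matrices are defined via R.
    """
    n = np.asarray(normal, dtype=float)
    n = n / np.linalg.norm(n)          # make sure the normal has unit length
    k_a = np.outer(n, n)               # axial projector
    k_c = np.eye(3) - k_a              # lateral projector
    return k_c, k_a

def hybrid_velocity(normal, v_user, v_auto):
    """Combine the surgeon's lateral command with the robot's axial command."""
    k_c, k_a = motion_specification(normal)
    v_user = np.asarray(v_user, dtype=float)
    v_auto = np.asarray(v_auto, dtype=float)
    return k_c @ v_user + k_a @ v_auto
```

With the normal along the z axis, any axial component in the surgeon's command is discarded and replaced by the auto-focus command, which is the behavior the hybrid law is meant to enforce.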

2). Lateral Direction - Admittance Scheme:

The motion of the pCLE probe along the lateral direction is set to be controlled cooperatively [23] by the SHER and the surgeon together to enable tremor-free scanning of regions of interest. Following [23], this is realized using an admittance control scheme, as follows:

| (4) |

where subscripts ee and r indicate the end-effector frame and the base frame of the SHER, respectively; Fee denotes the interaction forces applied by the user’s hand, expressed in the end-effector frame. Fee is measured by a 6-DOF force/torque sensor (ATI Nano 17, ATI Industrial Automation, Apex, NC, USA) attached on the end-effector; α is the admittance gain; Adr,ee is the adjoint transformation matrix that maps the desired motion in the end-effector frame to the base frame of the SHER, and is calculated as

| (5) |

where Rr,ee and pr,ee are the rotation matrix and translation vector of the end-effector frame expressed in the base frame of the SHER. Also, skew(pr,ee) denotes the skew-symmetric matrix associated with the vector pr,ee. The resultant desired cooperative motion, along with the motion specification matrix Kc, specifies the lateral component of the desired motion given in Equation 1.
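As an illustration of the admittance scheme, the sketch below scales a measured hand wrench by the admittance gain and maps the result to the robot base frame with an adjoint matrix built from a rotation, a translation, and the skew-symmetric operator. It assumes the common block layout for twists ordered as linear then angular components; the ordering used in this paper may differ, and the gain value and function names are hypothetical.

```python
import numpy as np

def skew(p):
    """Skew-symmetric matrix such that skew(p) @ w equals the cross product p x w."""
    x, y, z = p
    return np.array([[0.0, -z, y],
                     [z, 0.0, -x],
                     [-y, x, 0.0]])

def adjoint(rotation, translation):
    """6x6 adjoint for twists ordered [linear; angular] (assumed convention)."""
    rotation = np.asarray(rotation, dtype=float)
    ad = np.zeros((6, 6))
    ad[:3, :3] = rotation
    ad[:3, 3:] = skew(translation) @ rotation
    ad[3:, 3:] = rotation
    return ad

def admittance_velocity(wrench_ee, rotation, translation, alpha=0.01):
    """Desired base-frame velocity: gain times hand wrench, mapped by the adjoint."""
    velocity_ee = alpha * np.asarray(wrench_ee, dtype=float)
    return adjoint(rotation, translation) @ velocity_ee
```

For an identity rotation, an offset of one unit along x, and a pure torque about z, the mapped velocity gains a linear component along negative y, as expected from the cross product term.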

3). Axial Direction - Image Optimizer:

The desired motion along the axial direction is defined such that the confocal image quality is optimized through an auto-focus control using a gradient-ascent search. The autonomous control is performed in a sensorless manner, meaning that no additional sensing modality is required for depth/contact measurement. As discussed previously, the confined space within the eyeball and the fragility of the retinal tissue make it very challenging, if not impossible, to add extra sensing modalities. The proposed sensorless and contactless control strategy relies on the image quality as an indirect measure of the probe-to-tissue distance, and aims to maximize this quality autonomously.

Using image blur metrics as a depth-sensing modality has been previously discussed in [13]. The control strategy presented therein, however, is only applicable to contact-based pCLE probes, which are not suitable for scanning the delicate retinal tissue. Besides, the control system presented in [13] is dependent on the characteristics of the tissue and requires a pre-operative calibration phase. The calibration process necessitates pressing the contact-based probe onto the tissue and collecting a series of images from the pCLE system along with the force values applied to the tissue. However, this calibration process (which requires exertion and measurement of force values) is not feasible for retinal scanning due to the fragility of the retina. Therefore, in this paper, an image-based auto-focus methodology is proposed for optimizing image sharpness and quality during non-contact pCLE scanning without the necessity for any extra sensing modality.

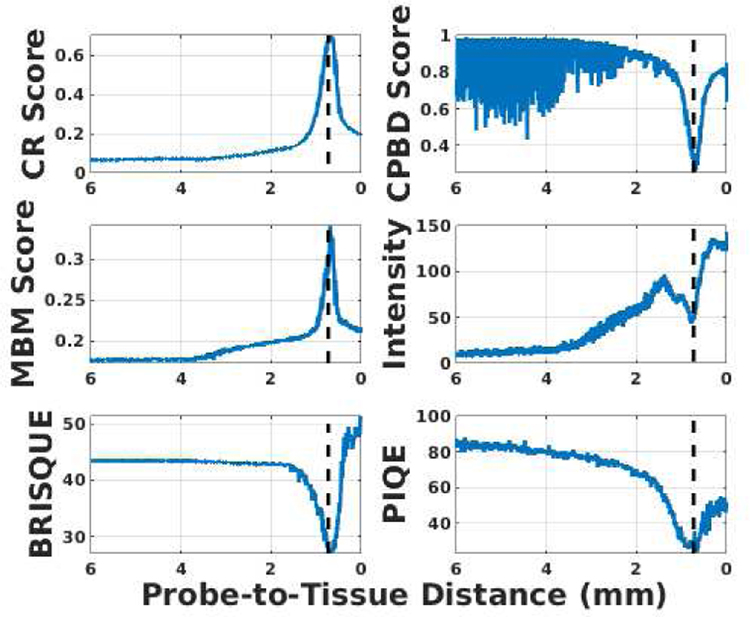

For this purpose, the effectiveness of several blur metrics was evaluated for use in real-time control of the robot. The selected metrics are: Crété-Roffet (CR) [24], Marziliano Blurring Metric (MBM) [25], Cumulative Probability of Blur Detection (CPBD) [26], Blind/Referenceless Image Spatial QUality Evaluator (BRISQUE) [27] and Perception-based Image Quality Evaluator (PIQE) [28], which are all standard no-reference image metrics for quality assessment. In addition, the image intensity is calculated, since the pCLE image appears dark in back-focus and bright in front-focus. An experiment was conducted by commanding the SHER to move from a far distance to almost touching a scanned tissue surface. The six metrics were calculated for the pCLE images during the experiment, as shown in Fig. 4. The optimal image view in this experiment was achieved around a probe-to-tissue distance of 690 µm. A consistent pattern was observed for the six metrics around the optimal view (i.e., maximized value for MBM and CR scores, and minimized value for intensity, CPBD, BRISQUE, and PIQE). Among these six metrics, the lowest level of noise and the highest signal-to-noise ratio belong to CR, which was therefore chosen to be incorporated into our auto-focus control strategy. CR is a no-reference blur metric and can evaluate both motion and focal blurs. It has a low implementation cost and high robustness to the presence of noise in the image. All of the above attributes make CR an efficient and effective metric for real-time image-based control. Moreover, since pCLE images exhibit blur patterns that CR can capture (as shown in Fig. 1 and Fig. 5), CR is a preferred metric to evaluate the clarity and indicate the clinical diagnostic value of a given pCLE image.

Fig. 4.

Evaluation of 6 image metrics with respect to (w.r.t) the probe-to-tissue distance (linear scale). The optimal view at a probe-to-tissue distance of 690 µm is indicated by the vertical dashed line.

Fig. 5.

CR score w.r.t the probe-to-tissue distance, with the two horizontal lines indicating two thresholds T1 and T2, the vertical line indicating the optimal probe-to-tissue distance of approximately 690 µm. The four focus regions (out-of-focus, back-focus, in-focus, and front-focus) are labeled respectively.

The working principle of the CR score is based on the fact that blurring an already blurred image does not result in significant changes in intensities of neighboring pixels, unlike blurring a sharp image. The CR score for an image I of size M × N can be obtained by first calculating the accumulated absolute backward neighborhood differences in the image along the x and y axes, denoted as dI,x and dI,y:

| (6) |

Then, the input image I is convolved with a low-pass filter to obtain a blurred image B, also of size M × N. Afterwards, the changes of neighborhood differences due to the low-pass filtration, denoted as dIB,x and dIB,y, are calculated along x and y axes with a minimum value of 0:

| (7) |

The accumulated changes dIB,x and dIB,y are then normalized using the values obtained in Equation 6, indicating the level of blur present in the input image as

| (8) |

The quality, q, of the image I, i.e. the amount of high-frequency content, is then calculated as the maximum blur level along the x and y axes as:

| (9) |
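The steps described above (neighborhood differences, low-pass filtering, clamped change in differences, normalization, and combination along both axes) can be sketched as follows. This is a minimal illustration rather than the implementation used in this work. It assumes a 9-tap box filter applied along the same axis as the differences, and it assumes that the quality is reported as one minus the larger of the two blur levels, so that sharper images score higher. The function names are hypothetical, and each image side is assumed to be at least as long as the filter.

```python
import numpy as np

def box_blur(img, axis, taps=9):
    """Low-pass filter the image along one axis with a moving average."""
    kernel = np.ones(taps) / taps
    return np.apply_along_axis(
        lambda line: np.convolve(line, kernel, mode="same"), axis, img)

def blur_level(img, axis):
    """Normalized blur level along one axis, in the range [0, 1]."""
    blurred = box_blur(img, axis)
    d_img = np.abs(np.diff(img, axis=axis))        # neighbor differences of I
    d_blur = np.abs(np.diff(blurred, axis=axis))   # neighbor differences of B
    change = np.maximum(0.0, d_img - d_blur)       # loss of variation, floored at 0
    total = d_img.sum()
    if total == 0.0:
        return 1.0                                 # flat image: treated as fully blurred
    return (total - change.sum()) / total

def cr_quality(img):
    """Quality score: one minus the worst blur level (assumed convention)."""
    img = np.asarray(img, dtype=float)
    return 1.0 - max(blur_level(img, 0), blur_level(img, 1))
```

A sharp image loses most of its neighbor variation when blurred, which gives a low blur level and a high score, whereas re-blurring an already blurred image changes little and gives a low score.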

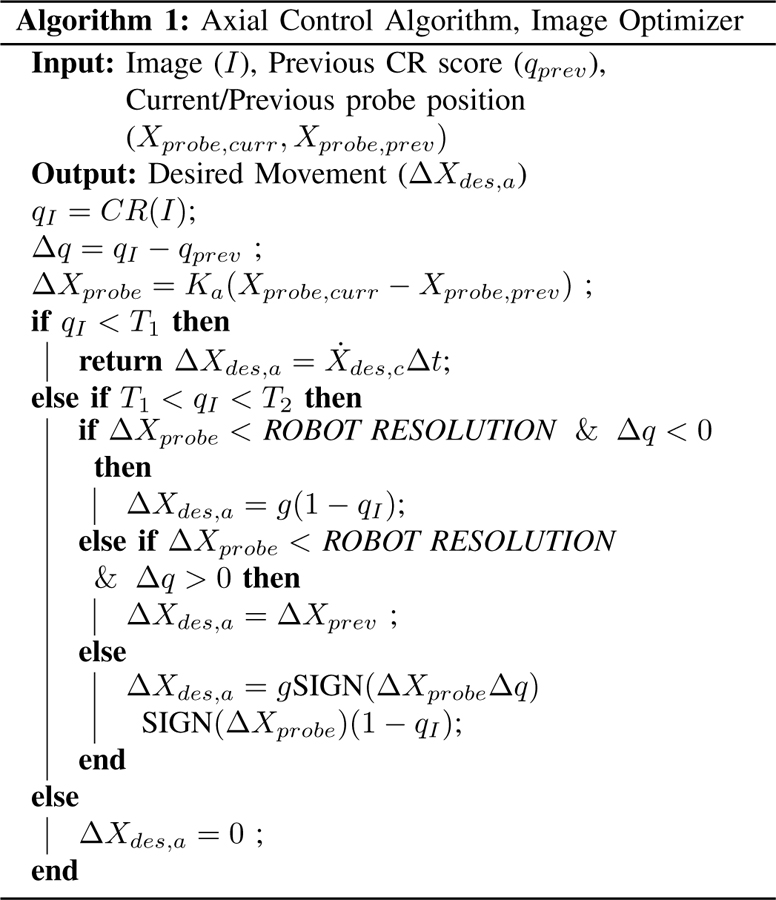

Fig. 5 shows an example of the CR score w.r.t the probe-to-tissue distance acquired from the previous experiment. The CR metric has minimal variation and the lowest value when the pCLE probe is out-of-focus (illustrated in Fig. 1a); the maximum value (i.e. the image quality is maximized) when the probe-to-tissue distance is optimized and the pCLE probe is in-focus (illustrated in Fig. 1c); and the CR metric drops sharply when the probe is either back-focus (Fig. 1b) or front-focus (Fig. 1d). Accordingly, the autonomous probe-to-tissue adjustment algorithm (Algorithm 1) is designed based on a stochastic gradient-ascent approach to maximize the image quality.

An interesting observation in Fig. 5 is that the metric has an almost symmetric pattern around the optimal in-focus probe-to-tissue distance, while having an asymmetric pattern in the out-of-range region. This asymmetry is used in our proposed framework to identify probe-to-tissue distances that are too far, and to avoid the vanishing gradient problem that may occur. For this purpose, a pre-defined threshold T1 is chosen, below which the pCLE probe is considered to be out-of-focus. When the probe-to-tissue distance is large and the pCLE probe is far from the retina (e.g., when the pCLE probe is first inserted into the eyeball), the CR score drops below T1. The surgeon can obtain full control of the pCLE probe in both the lateral and axial direction, and all autonomous movement is disabled. It should be noted that this transfer of control only happens when the probe is sufficiently far from the retina and in the out-of-range region. The robot takes over the axial control as soon as the probe approaches the retina and enters the back-focus region. This ensures the safety of the patient as there is no longer the risk of the surgeon suddenly touching or puncturing the retina tissue.

Due to the symmetry around the peak of the CR score (where the image quality is optimal and the probe is in-focus), the history of the axial movement of the probe relative to the tissue is used to determine the gradient of the CR score w.r.t the probe-to-tissue distance, such that the direction that increases the CR score is found. If the relative motion of the probe has the same sign as the variation of the image score between the current state and the previous state, the robot is commanded by the algorithm to continue moving in the same direction; otherwise, it is commanded to reverse the direction of motion. For example, in the back-focus region, the probe will move closer to the tissue since the gradient is positive; in the front-focus region, the probe will move further from the tissue since the gradient is negative. The magnitude of the relative desired movement ΔXdes,a is calculated as the product of a gain value g (to convert the image score to a distance value) and 1 − qI. The term 1 − qI is used as an adaptation factor of the step size, so that the motion of the probe slows down as it gets closer to the optimal distance. The direction of the desired relative motion ΔXdes,a is determined by SIGN (ΔXprobeΔq) · SIGN (ΔXprobe), where SIGN (·) is the sign (positive or negative) of the input (·).

In Algorithm 1, a threshold T2 is chosen as a termination condition, indicating that the optimal probe-to-tissue distance and, thereby, the in-focus condition is met. When an image quality higher than T2 is achieved, an axial motion of zero is sent to the robot. Therefore, the axial motion of the robot is zero unless the score drops below the threshold T2. This could happen due to patient motion or a sudden curvature change on the surface of the retina. In this scenario, the robot does not have a previous state of motion to rely on to identify the desired direction of motion. Therefore, it will perform an exploration step to determine the appropriate direction of motion. As a safety consideration, the exploration step is always away from the retina to ensure no contact between the pCLE probe and the retina.

In this framework, a CR score of 0.47, where the image view appears in-focus visually, is chosen for T2. A CR score of 0.10 is chosen for T1, where the pCLE probe is out-of-range. A comparison of three images collected at CR values of 0.45, 0.47 and 0.61 is shown in Fig. 6. It should be mentioned that, since the proposed approach relies only on the gradient of the CR score, it is not sensitive to the exact shape of CR w.r.t the probe-to-tissue distance. Also, only knowing a rough estimation of the two threshold values would suffice for the algorithm, which can be specified pre-operatively.
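The auto-focus logic described in this section can be sketched as a single update step together with a toy simulation. This is a simplified illustration and not Algorithm 1 itself. It assumes an axial coordinate equal to the probe-to-tissue distance in µm (positive means away from the retina), a hypothetical gain of 20 µm, a direction rule that continues when the score improved and reverses otherwise, and a made-up bell-shaped score curve `simulated_cr` that peaks at 690 µm. Only the thresholds 0.10 and 0.47 come from the text.

```python
import math

def autofocus_step(q, prev_q, prev_dx, gain=20.0, t1=0.10, t2=0.47):
    """Return the next axial displacement in µm, or None when the surgeon has control."""
    if q < t1:
        return None                      # out-of-range: autonomous motion disabled
    if q >= t2:
        return 0.0                       # in-focus: hold position
    step = gain * (1.0 - q)              # step shrinks as the score improves
    if prev_q is None or prev_dx == 0.0 or q == prev_q:
        return step                      # exploration step, always away from the retina
    # Continue if the last move improved the score, otherwise reverse.
    return math.copysign(step, prev_dx * (q - prev_q))

def simulated_cr(distance_um, best_um=690.0, width_um=250.0):
    """Hypothetical score curve used only for this demonstration."""
    return 0.05 + 0.60 * math.exp(-((distance_um - best_um) / width_um) ** 2)

def run_autofocus(start_um, iterations=200):
    """Iterate the update on the simulated curve and return (distance, score)."""
    d, prev_q, prev_dx = start_um, None, 0.0
    for _ in range(iterations):
        q = simulated_cr(d)
        dx = autofocus_step(q, prev_q, prev_dx)
        if dx is None:
            break
        d, prev_q, prev_dx = d + dx, q, dx
    return d, simulated_cr(d)
```

Starting from either the back-focus side (for example 1000 µm) or the front-focus side (for example 500 µm) of the simulated curve, the loop first explores away from the tissue, then settles once the score reaches the in-focus threshold.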

Fig. 6.

Sample probe views at different CR scores: (a) CR=0.45 at 0.51 mm, (b) CR=0.47 at 0.62 mm, and (c) CR=0.61 at 0.69 mm.

The desired axial velocity used in Equation 1 is calculated from the control-loop sampling time Δt and the desired axial displacement ΔXdes,a, as ΔXdes,a/Δt.

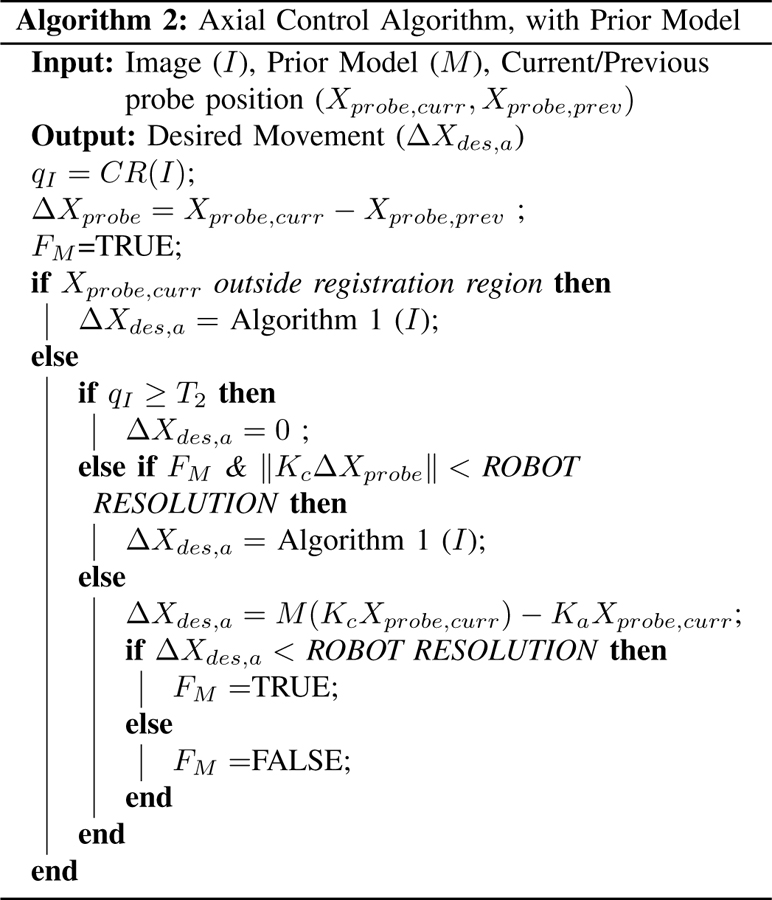

4). Axial Direction - Prior Model:

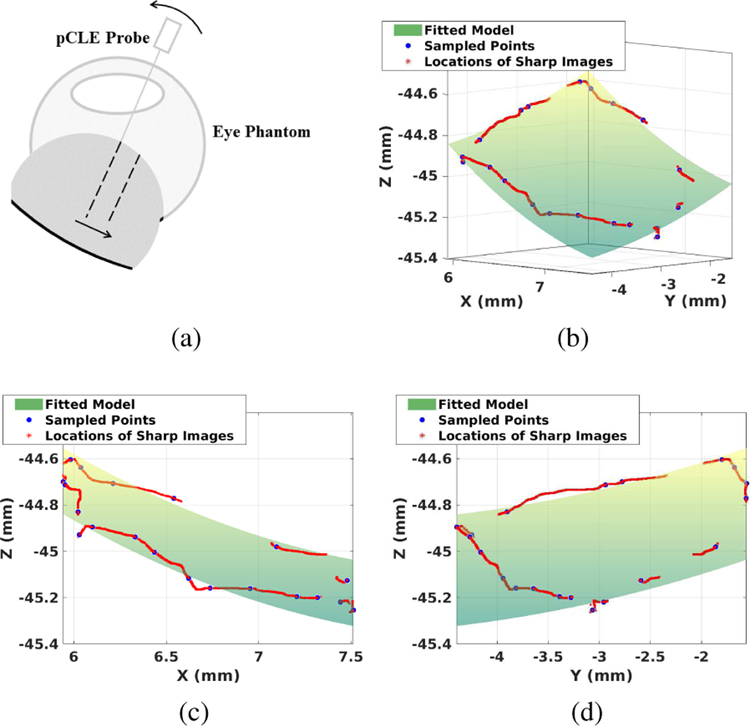

For safety purposes, Algorithm 1 is designed such that the exploration step is always away from the retina. When scanning a curved surface, this retracting motion may move the probe to a gradient-diminishing region, as shown in Fig. 7a, before the system captures the right direction of motion again. This can prolong the process of finding a dominant gradient for correcting the motion direction and, thereby, lead to poor user experience.

Fig. 7.

(a) Illustration of the failure case for Algorithm 1. Assume that the pCLE probe is currently in-focus. As the probe moves in the direction of the arrow, without any movement in the axial direction, the image score decreases due to the larger probe-to-tissue distance. The implemented safety feature then moves the probe away from the tissue. (b)(c)(d) Example of the fitted model, where (c) is the projected view of the XZ plane and (d) is the projected view of the YZ plane; Green surface: fitted local polynomial model of the retina. Red points: scanning path during the registration process. Blue points: sampled points where images are in-focus.

To address this, a prior model of the retina is integrated into the algorithm to provide it with a suitable initialization state. To obtain the retina curvature model, the perimeter of the area of interest is scanned once. During the scanning, both the CR score and the probe position are recorded. Based on the CR score threshold T2, the image qualities at different positions are classified as either in-focus or not. By spatially sampling 20 of the in-focus positions, a second-order polynomial surface is fitted. The resulting model is denoted as M(·), where the input (·) is the lateral probe position KcXprobe,curr, and the output is the desired axial position of the probe, both expressed in the base frame of the SHER. Fig. 7b shows an example of the prior model.
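Fitting a second-order surface to sampled in-focus positions can be sketched with an ordinary least-squares solve. The following is an illustrative sketch only. It assumes the model is a full quadratic in two lateral coordinates with six coefficients, which is one possible reading of the fitted model, and the function names `fit_prior_model` and `predict_axial` are hypothetical.

```python
import numpy as np

def design_matrix(xy):
    """Columns 1, x, y, x^2, x*y, y^2 for each lateral sample."""
    x, y = xy[:, 0], xy[:, 1]
    return np.column_stack([np.ones_like(x), x, y, x * x, x * y, y * y])

def fit_prior_model(xy, z):
    """Least-squares fit of axial position z over lateral positions xy."""
    xy = np.asarray(xy, dtype=float)
    z = np.asarray(z, dtype=float)
    coeffs, *_ = np.linalg.lstsq(design_matrix(xy), z, rcond=None)
    return coeffs

def predict_axial(coeffs, xy):
    """Evaluate the fitted surface at one or more lateral positions."""
    xy = np.atleast_2d(np.asarray(xy, dtype=float))
    return design_matrix(xy) @ coeffs
```

One practical point with this form is that samples lying exactly on a circle or ellipse make some quadratic terms indistinguishable from the constant term. In that case `numpy.linalg.lstsq` returns the minimum-norm solution, so a spread of samples is preferable when it is available.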

The prior model is not accurate enough at the micron scale to keep the probe focused on its own, but it can complement the previously mentioned gradient-ascent approach (Algorithm 1), which fine-tunes the image quality. Thus, Algorithm 2 is proposed to take both the prior model and the gradient-ascent image optimizer into account. The flag FM indicates whether the in-focus axial position has been reached according to the prior model. When the flag FM is set to true and the image quality is still far from desirable, the gradient-ascent approach is then activated to further fine-tune and improve the image quality. If the current position of the probe tip Xprobe,curr is outside the registration region on which the model of the retina curvature is based, the auto-focus algorithm will only use the gradient-ascent approach given in Algorithm 1.

If the CR score is above the optimal image quality threshold T2, the desired axial motion is set to zero and the probe stops moving. Otherwise, a score below T2 indicates that the optimal image quality has not been reached and further adjustment is needed. The algorithm checks whether the position inferred by the prior model has been reached, i.e. whether FM is TRUE, and whether the user has moved laterally along the tissue surface, by comparing the lateral motion with ROBOT RESOLUTION. If the model-inferred position has been reached while the user has not moved laterally, i.e. if FM is TRUE and the lateral motion is smaller than ROBOT RESOLUTION, the prior model differs from the actual retina curvature due to external factors, e.g., patient movement or registration error; in this case, Algorithm 1 is applied to fine-tune the image quality using the gradient-ascent image optimizer. In any other case, the probe moves to the axial position specified by the prior model and the flag is set accordingly, i.e. ΔXa is set to the difference between the model-inferred position M(KcXprobe,curr) and KaXprobe,curr. If ΔXa is less than ROBOT RESOLUTION, the position inferred from the prior model has been reached and the flag FM is set to TRUE. Otherwise, if ΔXa is larger than ROBOT RESOLUTION, the position inferred from the prior model has not yet been reached and FM is set to FALSE.
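The decision logic described above can be summarized in a short sketch. This is an illustrative reading of the text and not Algorithm 2 itself. It assumes scalar axial positions in µm, a resolution of 1 µm, and that the gradient-ascent displacement from Algorithm 1 has already been computed and is passed in as `gradient_step`. All names are hypothetical.

```python
def prior_model_step(q, lateral_move, z_curr, z_model, flag_fm,
                     gradient_step, in_region=True, t2=0.47, resolution=1.0):
    """Return (axial displacement, updated flag) for one control cycle."""
    if q >= t2:
        return 0.0, flag_fm              # already in-focus: hold position
    if not in_region:
        return gradient_step, flag_fm    # no model available here: gradient ascent only
    if flag_fm and abs(lateral_move) < resolution:
        # Model position reached, user idle, image still poor:
        # the model is off, so fall back to the gradient-ascent step.
        return gradient_step, True
    dz = z_model - z_curr                # move toward the model-inferred position
    return dz, abs(dz) < resolution      # flag is TRUE once within robot resolution
```

Passing the gradient-ascent displacement as an argument keeps the two algorithms decoupled in this sketch, so either part can be tested on its own.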

B. Mid-Level Optimizer and Low-Level Controller

The desired motion, which is the output of the high-level controller, is then sent to the mid-level optimizer, which calculates the equivalent desired joint values q while satisfying the limits of the robot’s joints. The mid-level optimizer is formulated as

| (10) |

where J is the Jacobian of the SHER; qL and qU denote the lower and upper limits of the joint values, and the joint velocities are bounded by corresponding lower and upper limits.
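For orientation, a constrained least-squares problem of the following general form is consistent with the description above. It is a sketch under stated assumptions, not necessarily the exact formulation of Equation 10: the velocity-level objective, the squared Euclidean norm, the first-order update of the joint values over the sampling time, and the symbols for the velocity bounds are all assumptions made here.

```latex
\begin{aligned}
\dot{q}^{*} = \arg\min_{\dot{q}} \;& \left\lVert J\,\dot{q} - \dot{X}_{des} \right\rVert_2^2 \\
\text{s.t.}\;& q_L \le q + \dot{q}\,\Delta t \le q_U, \\
& \dot{q}_L \le \dot{q} \le \dot{q}_U
\end{aligned}
```

In this form, the objective tracks the desired Cartesian motion as closely as possible, while the two box constraints keep the joint values and joint velocities within their limits.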

The desired joint values are then sent to the low-level PID controller of the SHER, as a result of which the desired objectives generated based on the high-level controller are satisfied.

III. Hybrid Teleoperated Framework

In the previous section, the proposed hybrid strategy was explained using the hybrid cooperative framework. In this section, the hybrid teleoperated framework is discussed. In addition to filtering the surgeon’s hand tremor, the hybrid teleoperated framework enables substantial down-scaling of the surgeon’s motions, allowing precise and minute manipulations inside the confined space of the eyeball. The proposed hybrid teleoperated framework has the same patient-side platform, mid-level optimizer and low-level controller architecture at the SHER side as the hybrid cooperative platform. However, the lateral motion of the pCLE probe is controlled remotely by the surgeon.

For this purpose, the dVRK system has been used to enable remote control of the SHER. The dVRK system is an open-source telerobotic research platform developed at Johns Hopkins University. The system consists of the first-generation da Vinci surgical system with a master console, including the surgeon interface with stereo display and MTM to control the pCLE probe.

The proposed hybrid teleoperated framework differs from the previous hybrid cooperative framework in three aspects: 1) a hybrid control law using the teleoperated commands (Section III-A1), 2) a teleoperated scheme to control the lateral direction (Section III-A2), and 3) a haptic controller on the MTM side (Section III-B). Fig. 8 shows the block diagram of the proposed hybrid teleoperated framework.

Fig. 8.

General schematic of the proposed hybrid teleoperated control strategy. Hybrid Control Law is described in Equation 11. Lateral Direction control is described in Section III-A2. Axial Direction control is described in Section II-A3 and Section II-A4. Mid-Level Optimizer and Low-Level Controller are described in Section II-B. Haptic Controller is described in Section III-B.

A. High-Level Controller

1). Hybrid Control Law:

Like the hybrid cooperative framework, the hybrid motion controller of the teleoperated platform has the lateral and axial components at the SHER side with the following formulations:

| (11) |

where subscripts t and a refer to the teleoperated (lateral) and autonomous (axial) components of the motion, respectively; Kt is the motion specification matrix along the lateral direction, the same as in the hybrid cooperative framework, i.e., Kt = Kc. The desired axial motion component of the SHER is derived using the autonomous control strategy discussed in Section II-A3 and Section II-A4 to maximize the image quality.
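As a rough illustration of how such a control law splits the motion between the surgeon and the robot, the sketch below combines a teleoperated command and an autonomous command through per-axis selection weights. It assumes the law is a weighted sum of the two components and that the motion specification matrices are diagonal; the names `hybrid_velocity`, `K_T`, and `K_A`, the axis ordering, and all numeric values are hypothetical and not taken from the paper.

```python
def hybrid_velocity(v_teleop, v_auto, k_t, k_a):
    """Combine lateral (teleoperated) and axial (autonomous) velocity commands.

    k_t and k_a are per-axis weights standing in for the diagonals of the
    motion specification matrices (an assumption made for this sketch).
    """
    return [kt * vt + ka * va
            for kt, vt, ka, va in zip(k_t, v_teleop, k_a, v_auto)]


# Hypothetical 6-DOF layout [x, y, z, rx, ry, rz] in the probe frame:
# x, y, and the rotations follow the surgeon; z (probe axis) follows auto-focus.
K_T = [1, 1, 0, 1, 1, 1]
K_A = [0, 0, 1, 0, 0, 0]

surgeon_cmd = [0.10, -0.05, 0.30, 0.0, 0.0, 0.0]    # made-up values, mm/s
autofocus_cmd = [0.0, 0.0, -0.02, 0.0, 0.0, 0.0]    # made-up values, mm/s

command = hybrid_velocity(surgeon_cmd, autofocus_cmd, K_T, K_A)
```

With these weights the surgeon's axial input (0.30) is discarded and replaced by the auto-focus command, which is the intended effect of separating lateral and axial control.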

2). Lateral Direction - Teleoperated Scheme:

The desired lateral component of the motion is received from a remote master console as:

| (12) |

such that

| (13) |

where all the above variables are expressed in the base frame of the SHER, and the two velocity terms are the translational and rotational components of the desired user-commanded velocity. ϵ and θ denote the current translational and rotational components of the user-commanded increments, which are calculated as the tracking error of the SHER. The tracking error, i.e., the difference between the commanded position (the Cartesian position of the MTM transformed into the base frame of the SHER) and the actual position of the pCLE probe, can be written as

| (14) |

where β is the teleoperation motion scaling factor; RMTM−SHER is the orientation mapping between the base frames of the MTM and SHER; RMTM and RSHER, respectively, denote the rotation of the MTM in its own base frame and the rotation of pCLE probe in the base frame of the SHER. ΔpMTM refers to the translation offset between the current position of the MTM (pMTM) and the initial position of the MTM (pMTM,0) in the base frame of MTM. ΔpSHER denotes the translation offset between the current position (pSHER) and the initial position (pSHER,0) of the pCLE probe in the base frame of the SHER.

| (15) |

This relative position and absolute orientation control setup allows the surgeon to control the pCLE probe intuitively.
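The relative-position, absolute-orientation scheme described above can be sketched as follows. This is only an illustration under stated assumptions: the translational error is taken as the scaled MTM offset (mapped into the SHER base frame) minus the probe offset, and the rotational error as the axis-angle vector between the mapped MTM orientation and the probe orientation. The function names and the exact composition of the rotations are hypothetical.

```python
import math


def mat_vec(m, v):
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def mat_mul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transpose(m):
    return [[m[j][i] for j in range(3)] for i in range(3)]


def translation_error(beta, r_map, p_mtm, p_mtm0, p_sher, p_sher0):
    """Scaled master offset (in the SHER base frame) minus the probe offset."""
    dp_mtm = [a - b for a, b in zip(p_mtm, p_mtm0)]
    dp_sher = [a - b for a, b in zip(p_sher, p_sher0)]
    mapped = mat_vec(r_map, dp_mtm)
    return [beta * m - s for m, s in zip(mapped, dp_sher)]


def rotation_error(r_map, r_mtm, r_sher):
    """Axis-angle vector rotating the probe orientation onto the commanded one.

    Assumes the commanded orientation is r_map * r_mtm; angles close to pi
    are not handled in this sketch.
    """
    r_err = mat_mul(mat_mul(r_map, r_mtm), transpose(r_sher))
    trace = r_err[0][0] + r_err[1][1] + r_err[2][2]
    angle = math.acos(max(-1.0, min(1.0, (trace - 1.0) / 2.0)))
    if angle < 1e-9:
        return [0.0, 0.0, 0.0]
    s = 2.0 * math.sin(angle)
    axis = [(r_err[2][1] - r_err[1][2]) / s,
            (r_err[0][2] - r_err[2][0]) / s,
            (r_err[1][0] - r_err[0][1]) / s]
    return [angle * a for a in axis]
```

Because only offsets from the initial positions enter the translational term, the surgeon can start the MTM from any position, while the orientation of the probe follows the MTM orientation directly.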

The output of the high-level hybrid controller given in Equation 11 is then sent to the mid-level optimizer and low-level PID controller at the SHER side (discussed in Section II-B) to fulfill the desired motion.

B. Haptic Controller at the MTM Side

To provide the surgeon with sensory situational awareness from the SHER side, a haptic controller is designed at the MTM side.

The first component of an effective haptic feedback strategy is gravity compensation, which cancels out the weight of the MTM. To enable a zero-gravity, low-friction controller, a multi-step least-squares estimation/compensation approach is used, which also accounts for elastic force and friction in the dynamics model. Details of the gravity compensation algorithm can be found in the open-source code.

In addition, the haptic controller implemented at the MTM side includes a compliance wrench, defined in the MTM base frame, to enable haptic feedback. The compliance wrench has two parts:

an elastic part, which is proportional to the tracking error (Equation 14) of the SHER, and

a damping part, which is proportional to the Cartesian linear velocities of the MTM

The compliance wrench W can be formulated as

| (16) |

where kp and kR are the elasticity coefficients of the translation error ϵ and rotation error θ, respectively; b is the damping coefficient applied to V, the Cartesian linear velocity of the MTM (defined in the base frame of the MTM). The compliance wrench is then converted to the corresponding joint torque using the inverse Jacobian J⁻¹ as

| (17) |

The final desired torque is the sum of the compliance wrench torque and the gravity compensation torque:

| (18) |

The desired torque is then sent to the low-level PID controller of the MTM to achieve the desired haptic feedback.
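A minimal sketch of the compliance-wrench idea is given below, with several assumptions made explicit. The signs are chosen so that the wrench opposes both the tracking error and the MTM velocity, which is one plausible convention and not necessarily the authors'. The text maps the wrench to joint torques through the inverse Jacobian; the sketch instead uses the transpose relation (joint torque = Jᵀ × wrench), a common static mapping that also works for non-square Jacobians. All function names and numeric values other than the 50 N/m elasticity coefficient are hypothetical.

```python
def compliance_wrench(eps, theta, v_lin, k_p, k_r, b):
    """Return a 6-D wrench [fx, fy, fz, tx, ty, tz] in the MTM base frame.

    Elastic part: proportional to the tracking error (eps, theta).
    Damping part: proportional to the Cartesian linear velocity v_lin.
    The negative signs (restoring and dissipative) are an assumption.
    """
    force = [-k_p * e - b * v for e, v in zip(eps, v_lin)]
    torque = [-k_r * t for t in theta]
    return force + torque


def desired_joint_torque(jacobian, wrench, tau_gravity):
    """Map the wrench to joint space and add gravity compensation.

    jacobian is a 6 x n nested list; J^T * wrench is used here as an assumed
    stand-in for the mapping described in the text.
    """
    n = len(tau_gravity)
    tau_w = [sum(jacobian[i][j] * wrench[i] for i in range(6))
             for j in range(n)]
    return [tw + tg for tw, tg in zip(tau_w, tau_gravity)]
```

For example, with a 1 mm translational error and kp = 50 N/m, the elastic part contributes a 0.05 N restoring force along that axis before damping is added.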

IV. Experiment Results

Fig. 2 shows the experiment setup. Elbow support was provided to the user while interacting with the SHER. The sampling frequency of the pCLE is set to 60 Hz. The mid-level optimizers of the SHER and dVRK both run at 200 Hz. The cooperative gain, α, is set to 10 μm/s per 1 N. The motion scaling factor of the teleoperated framework, β, is set to 15 μm/s per 1 mm/s. The elasticity and damping coefficients of the haptic feedback are set to 50 N/m and , respectively. The software framework is built upon the CSA library developed at Johns Hopkins University [29]. The eyeball phantom was built in-house with a spherical shape and an outer diameter of 30 mm. A thin layer of tissue paper with an uneven surface is attached to the inside of the eyeball phantom to represent the detached retina. A 10 mm opening is made at the top to simulate the open-sky procedure. The phantom is 3D printed in ABS. Before the experiment, the tissue was stained with 0.01% acriflavine, chosen for its ready availability. For clinical applications, fluorescein, which is biocompatible, should be used.

To validate the effectiveness of the proposed frameworks, three experiments were conducted, as discussed below. In this set of experiments, a user highly familiar with the SHER and the dVRK was chosen to avoid additional variability in the experiment outcome caused by an insufficient skill level of the user.

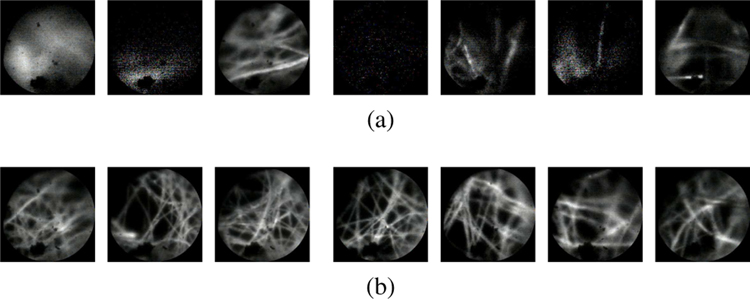

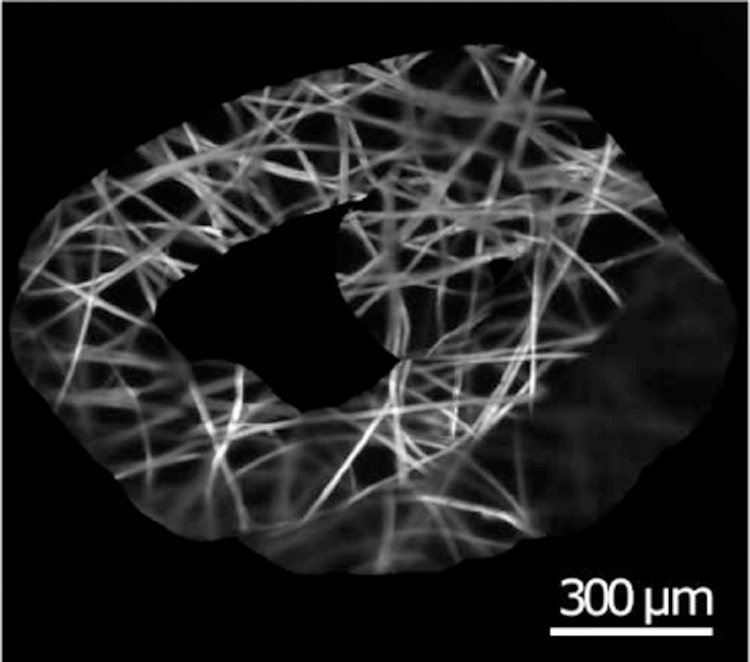

A. Experiment #1

The purpose of this experiment was to demonstrate the impracticality of manual pCLE scanning. As discussed previously, manual control of a pCLE probe within the micron-order focus range is extremely challenging, if not impossible, due to several factors including hand tremor and patient motion. In this experiment, the user was instructed to try their best to control the probe to obtain a clear stream of images. The user, however, was unsuccessful in performing the task. Fig. 9a shows a sequence of images acquired during this task. As can be seen, the quality of the images is far from desirable. By way of comparison, a sequence of images acquired using the proposed hybrid teleoperated framework is presented in Fig. 9b, which shows a considerable improvement in image quality, and an example of the generated mosaicking image is shown in Fig. 10. The complete result of this experiment can be found in the video supplemental material of the paper.

Fig. 9.

A sequence of images acquired from (a) manual scanning, (b) the proposed hybrid teleoperated framework, sub-sampled at 1.5 Hz. As can be seen, the manual scanning image quality is far from desirable.

Fig. 10.

An example of generated mosaicking images.

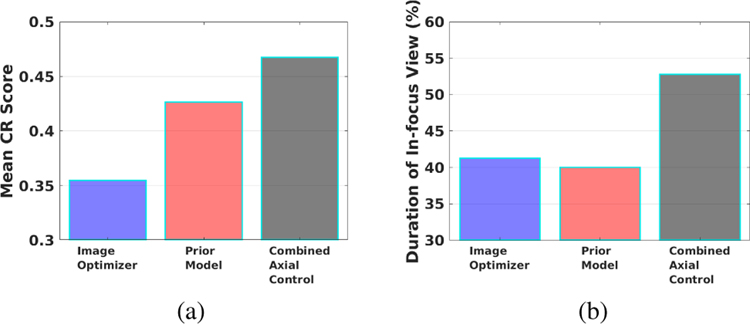

B. Experiment #2

The second experiment was designed to validate the improvement due to the addition of the prior model to the image optimizer as previously proposed in [21]. For this purpose, three experiments were conducted with: 1) only the gradient-ascent image optimizer (no prior model), 2) only the prior model (no image optimizer), and 3) the combined axial controller (image optimizer and prior model) as proposed in this work. In these experiments, the task was defined to follow a triangular path with a side length of approximately 3 cm using the teleoperated setup. Two metrics were used to evaluate and compare the efficacy of the three experiments:

Mean CR score: The average of CR scores throughout the scanning task, whose formulation was given in Section II-A3.

Duration of In-focus View: The percentage of the instances throughout the scanning task that the pCLE probe is in-focus (indicated as a CR score higher than the threshold T2).
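The two metrics above reduce to simple statistics over the per-frame CR scores. The sketch below assumes the scores are available as a list with one value per pCLE frame; the function names are illustrative, and the threshold value used in the paper is not assumed here.

```python
def mean_cr(scores):
    """Average CR score over all frames of a scanning task."""
    return sum(scores) / len(scores)


def in_focus_duration(scores, threshold):
    """Percentage of frames whose CR score exceeds the in-focus threshold."""
    in_focus = sum(1 for s in scores if s > threshold)
    return 100.0 * in_focus / len(scores)
```

Since the frames are sampled at a fixed rate, the fraction of in-focus frames equals the fraction of task time spent in focus.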

Fig. 11a and Fig. 11b present a comparison of the two metrics for the three experiment scenarios. The combined axial controller outperformed both the image-optimizer-only and the prior-model-only approaches. The mean CR score of the combined controller was 0.47, higher than that of the prior-model-only approach (0.43) and the image-optimizer-only approach (0.35). The in-focus duration of the combined controller was 53%, also higher than that of the prior-model-only approach (40%) and the image-optimizer-only approach (41%). A higher mean CR score and a longer in-focus duration make the combined axial controller more effective than either of the individual components alone.

Fig. 11.

Experiment results of the image optimizer only, prior model only, and combined axial controller. Comparison of (a) CR scores, (b) duration of in-focus view.

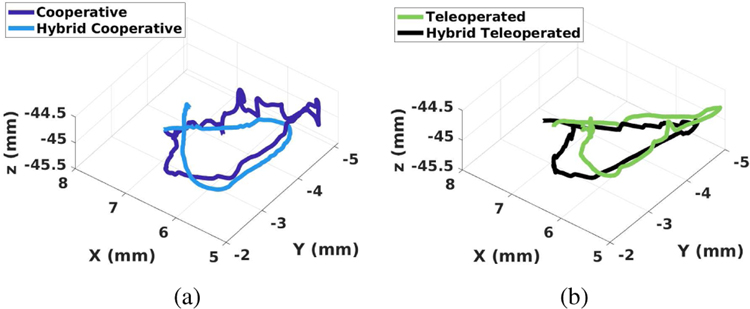

C. Experiment #3

The third experiment was designed to compare the performance with and without autonomous axial control using the cooperative and teleoperated frameworks. As in Experiment #2, the task was defined to follow a triangular path with a side length of approximately 3 cm. The user was instructed to try their best to maintain the optimal image quality during the task. In this set of experiments, the orientation of the pCLE probe was locked for more accurate trajectory comparison and easier maneuvering for the user.

Fig. 12a and Fig. 12b show a comparison of the 3-D paths between the frameworks (the proposed hybrid cooperative vs. traditional cooperative, and the proposed hybrid teleoperated vs. conventional teleoperated). As shown in Fig. 12a, the proposed hybrid cooperative framework resulted in a significantly smoother path with lower elevational variability along the axial direction (Z-axis) than the traditional cooperative approach. Smaller deviations along the axial direction of the probe shaft indicate better (less jerky) manipulation and better control of the probe around the optimal probe-to-tissue distance.

Fig. 12.

Comparison of scanning path between (a) Cooperative and hybrid cooperative frameworks, (b) Teleoperated and hybrid teleoperated frameworks.

Interestingly, as can be seen in Fig. 12b, the teleoperated and hybrid teleoperated frameworks resulted in a visually comparable elevational motion along the axial direction. Both trajectories appear to be smooth visually. To further assess the motion made during these two cases, Motion smoothness (MS) [30] was calculated as a quantitative measure of smoothness. MS is calculated as the time-integrated squared jerk, where jerk is defined as the third-order derivative of position:

| (19) |

where

| (20) |

is the third-order central finite difference of the discrete trajectory signal along the u-axis. A smoother trajectory results in a smaller jerk and thus a smaller MS value. Based on the experiment, the MS scores are 6.477E−2 for the cooperative framework, 3.481E−2 for the hybrid cooperative framework, 4.201E−2 for the teleoperated framework, and 4.202E−2 for the hybrid teleoperated framework. The hybrid cooperative framework outperformed the traditional cooperative framework by 46.3%, while the two teleoperated frameworks yielded almost the same motion smoothness scores. The quantitative evaluation of MS agrees with the visual inspection. The total duration of the scanning task was 61.92 s for the cooperative framework, 35.95 s for the hybrid cooperative framework, 60.66 s for the teleoperated framework, and 42.43 s for the hybrid teleoperated framework.
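The motion smoothness computation can be sketched as follows, using the standard five-point central stencil for the third derivative, (x[i+2] − 2x[i+1] + 2x[i−1] − x[i−2]) / (2Δt³), and a rectangle-rule approximation of the time integral. Whether the paper applies any normalization (for example by duration or path length) is not known from the text, so none is applied here; the function names and the dictionary layout are assumptions.

```python
def third_derivative(x, dt):
    """Third-order central finite difference of a uniformly sampled signal.

    Only interior samples (two away from each end) are evaluated.
    """
    return [(x[i + 2] - 2.0 * x[i + 1] + 2.0 * x[i - 1] - x[i - 2])
            / (2.0 * dt ** 3)
            for i in range(2, len(x) - 2)]


def motion_smoothness(trajectory, dt):
    """Time-integrated squared jerk, summed over all axes.

    trajectory maps an axis name (e.g. "x", "y", "z") to a list of positions
    sampled every dt seconds. Smaller values mean smoother motion.
    """
    total = 0.0
    for samples in trajectory.values():
        jerk = third_derivative(samples, dt)
        total += sum(j * j for j in jerk) * dt
    return total
```

As a sanity check, a cubic trajectory x(t) = t³ has a constant jerk of 6, which this stencil reproduces up to rounding error, while a constant-velocity trajectory yields an MS of zero.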

V. User Study

A. Study Protocol

To further evaluate and compare the proposed frameworks, a set of user studies was conducted. The study protocol was approved by the Homewood Institutional Review Board, Johns Hopkins University. In this study, 14 participants without clinical experience were recruited at the Laboratory for Computational Sensing and Robotics (LCSR), with all participants being right-handed.

The participants were asked to perform a path-following task in four cases:

using the traditional cooperative framework, i.e., without the auto-focus algorithm

using the proposed hybrid shared cooperative framework, i.e., with the auto-focus algorithm

using the traditional teleoperated framework, i.e., without the auto-focus algorithm

using the proposed hybrid shared teleoperated framework, i.e., with the auto-focus algorithm

The task was defined to perform pCLE scanning of a triangular region of a side length of approximately 3 mm within an eyeball phantom using the four setups. The participants were instructed to perform the scanning task while trying their best to maintain the best image quality. Fig. 2d shows the view provided to the users during the experiments.

Before each trial, the participants were given around 10 minutes to familiarize themselves with the system. The order of the experiments was randomized to eliminate the learning-curve effect. After each trial, the participants were asked to fill out a post-study questionnaire. The questionnaire included a form of the NASA Task Load Index (NASA TLX) survey for evaluation of operator workload. Data recording started when the participants pressed the activation pedal of the robot. This ensured consistent and trackable start timing between participants.

In our previous study [21], we observed that manipulating the robot at micron-level precision within the confined space of the eye is very challenging for novices. Therefore, the orientation of the pCLE probe was locked to reduce the complexity of the scanning task.

B. Metrics Extraction and Evaluation

To evaluate task performance during the four experiments, six quantitative metrics were used: CR score, duration of in-focus view, and MS (discussed above), as well as task completion time, Cumulative Probability for Blur Detection (CPBD), and Marziliano Blurring Metric (MBM). A NASA TLX questionnaire with six qualitative metrics, answered by the participants, was also analyzed.

While CR, MBM, and CPBD are image-quality metrics, they use different approaches to assess the sharpness of an image. These metrics were chosen to validate the consistent improvement of the image quality regardless of the metric type, demonstrating the generalizability and consistency of the outcome.

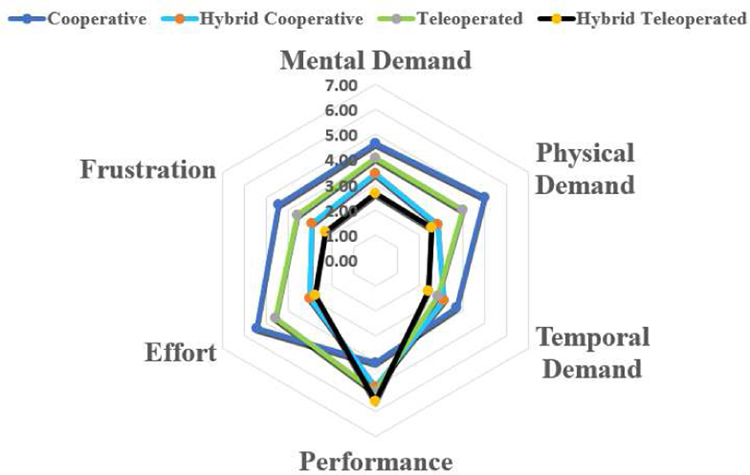

C. Results and Discussion

Fig. 13 shows the results of the NASA TLX questionnaire for the 14 users. The questionnaire includes six criteria: mental demand, physical demand, temporal demand, performance level perceived by the users themselves, level of effort, and frustration level, each with a maximum value of 7. Single-factor ANOVA was used to statistically evaluate the results, and statistical significance was observed in the mental demand (p-value=3.0E−2), physical demand (p-value=2.0E−3), effort (p-value=6.3E−5), and frustration level (p-value=1.0E−2), while no statistical significance was observed in the temporal demand (p-value=0.182) and performance (p-value=0.062).

Fig. 13.

NASA TLX questionnaire result

Tukey’s Honest Significant Difference test was then applied to the four categories with statistical significance (w.s.s.). The mental demand decreased from 5.43 for the cooperative framework to 2.78 for the hybrid teleoperated framework. The physical demand decreased from 5.00 for the cooperative framework and 4.00 for the teleoperated framework to 2.57 for the hybrid teleoperated framework. Similarly, the effort level decreased from 5.43 for the cooperative framework and 4.57 for the teleoperated framework to 2.78 for the hybrid teleoperated framework. A lower frustration level was observed for the hybrid teleoperated framework (2.29) than for the cooperative framework (4.43). Out of the 14 users, 11 indicated that the hybrid teleoperated framework was their most preferred modality.
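For readers unfamiliar with the first step of this analysis, the sketch below computes the F statistic of a single-factor (one-way) ANOVA from scratch. It is only an illustration: the function name is hypothetical, the p-value (which requires the F distribution and is usually obtained from a statistics package) and the Tukey post hoc test are not implemented, and any scores passed to it in an example would be made up, not the study data.

```python
def one_way_anova_f(groups):
    """Return (F statistic, df_between, df_within) for a one-way ANOVA.

    groups is a list of lists, one list of scores per condition
    (e.g. one list per control framework).
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, means))
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, means) for x in g)

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

A large F statistic indicates that the differences between the framework means are large relative to the spread within each framework, which is what motivates the pairwise post hoc comparison.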

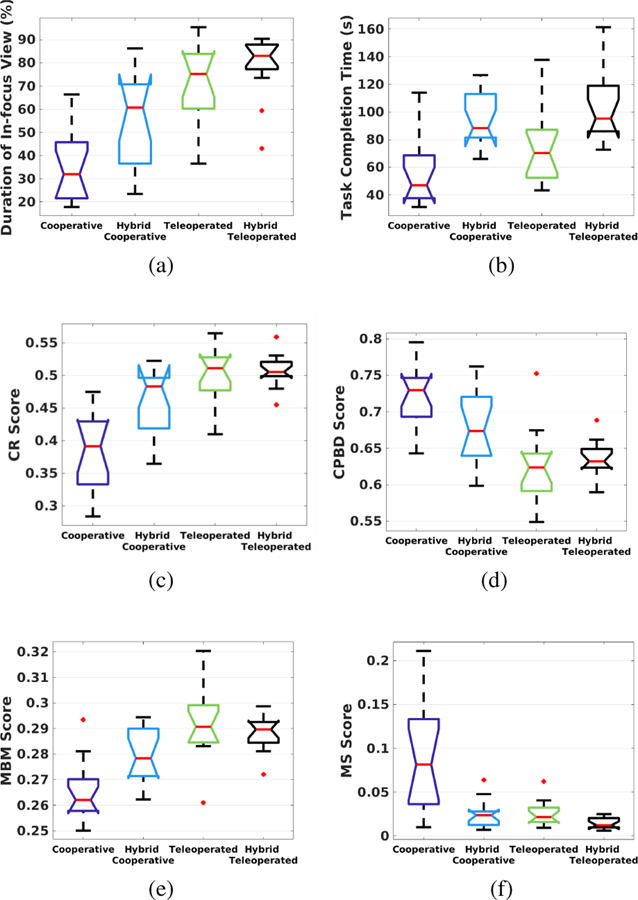

The quantitative results are shown as boxplots in Fig. 14, with six metrics compared: in-focus duration, task completion time, CR, CPBD, MBM, and MS. Applying single-factor ANOVA, statistically significant differences were observed between the modes of operation for all the metrics, with p-values equal to 1.0E−8, 4.7E−5, 1.1E−9, 4.4E−7, 2.7E−7, and 9.2E−8, respectively.

Fig. 14.

Results of the user study: (a) Duration of in-focus view, (b) Task completion time, (c) CR score, (d) CPBD score, (e) MBM score, and (f) MS score.

Post hoc analysis using Tukey’s test showed that, in terms of in-focus duration, both the hybrid teleoperated (79%) and teleoperated (73%) frameworks outperformed the hybrid cooperative (56%) and cooperative (36%) frameworks w.s.s. The hybrid cooperative framework also outperformed the cooperative framework w.s.s. The mean CR scores of the hybrid teleoperated (CR=0.51), teleoperated (CR=0.50), and hybrid cooperative (CR=0.46) frameworks were better than that of the cooperative framework (CR=0.38) w.s.s. The mean MBM score of the teleoperated framework (MBM=0.2920) was better than those of the hybrid cooperative (MBM=0.2792) and cooperative (MBM=0.2657) frameworks w.s.s. The hybrid teleoperated (MBM=0.2886) and hybrid cooperative frameworks also outperformed the cooperative framework w.s.s.

Task completion time, CPBD, and MS (metrics for which a lower value indicates higher performance) were also analyzed with Tukey’s test, as discussed below. The task completion time for the hybrid teleoperated framework (105 s) was longer than those of the teleoperated (76 s) and cooperative (58 s) frameworks w.s.s. The hybrid cooperative framework (95 s) also took longer than the cooperative framework (58 s) w.s.s. However, it should be noted that the task completion times for the hybrid teleoperated and hybrid cooperative frameworks include the pre-operative eyeball registration time as well. Excluding the pre-operative registration time, the task completion times for the hybrid teleoperated and hybrid cooperative frameworks become 51 s and 44 s, respectively.

The CPBD score for the hybrid teleoperated framework (CPBD=0.6342) was lower than that of the cooperative framework (CPBD=0.7233) w.s.s. The CPBD score for the teleoperated framework (CPBD=0.6270) was lower than those of both the hybrid cooperative (CPBD=0.6773) and cooperative frameworks w.s.s. The hybrid cooperative framework also had a lower CPBD than the cooperative framework w.s.s. The MS scores for the hybrid teleoperated (MS=1.407E−2), teleoperated (MS=2.608E−2), and hybrid cooperative (MS=2.514E−2) frameworks were smaller than that of the cooperative framework (MS=8.944E−2) w.s.s.

In general, the hybrid cooperative framework was shown to be more advantageous than the traditional cooperative framework, both qualitatively and quantitatively. The user workload decreased when using the hybrid cooperative framework, and the image quality was considerably improved. The comparison between the hybrid teleoperated framework and the traditional teleoperated framework showed that the hybrid teleoperated framework reduced the user’s workload while providing equally high-quality images.

Compared with the hybrid cooperative framework previously proposed in [21], the hybrid teleoperated framework proposed in this work demonstrated better performance, with a higher percentage of the task duration during which the pCLE probe is in focus. One possible reason for the improved performance is the ability to apply a large motion scaling, which is unavailable in a cooperative setting. Overall, the hybrid teleoperated system demonstrated clear advantages: quantitatively, it produced consistently high-quality images, and qualitatively, 78.6% of the users indicated it as the most favorable framework.

However, several limitations remain to be addressed in future studies. First, none of the users were clinicians, although they had a wide range of skills in controlling robotic frameworks. Part of our future work will focus on comparing and evaluating the four frameworks with a larger set of users, including surgeons. Second, in this study, an artificial eyeball phantom was used, and the technical aspects of the work were demonstrated and validated. In the future, cadaveric eyes will be used along with image mosaicking and clinical studies to evaluate the systems in clinical settings. Lastly, the stain material should be switched to fluorescein for biocompatibility.

VI. Summary

A novel hybrid strategy was proposed for real-time endomicroscopy imaging for retinal surgery. The proposed strategy was deployed on two control frameworks: cooperative and teleoperated. The setup consists of the dVRK, SHER, and a distal-focus pCLE system. The hybrid frameworks allow surgeons to scan the area of interest in the retina without worrying about the loss of image quality and hand tremor. The effectiveness of the hybrid frameworks was demonstrated via a series of experiments in comparison with the traditional teleoperated and cooperative frameworks. A user study with 14 participants showed that both hybrid frameworks led to a statistically significant reduction in workload and improved image quality, both qualitatively and quantitatively.

Acknowledgments

This work was funded in part by: NSF NRI Grants IIS-1327657, 1637789; Natural Sciences and Engineering Research Council of Canada (NSERC) Postdoctoral Fellowship #516873; Johns Hopkins internal funds; Robotic Endobronchial Optical Tomography (REBOT) Grant EP/N019318/1; EP/P012779/1 Micro-robotics for Surgery; and NIH R01 Grant 1R01EB023943-01.

References

- [1] Mitry D, Charteris DG, Fleck BW, Campbell H, and Singh J, “The epidemiology of rhegmatogenous retinal detachment: geographical variation and clinical associations,” British Journal of Ophthalmology, vol. 94, no. 6, pp. 678–684, 2010.

- [2] Pardue MT and Allen RS, “Neuroprotective strategies for retinal disease,” Progress in retinal and eye research, vol. 65, pp. 50–76, 2018.

- [3] Meining A, Bajbouj M, Delius S, and Prinz C, “Confocal laser scanning microscopy for in vivo histopathology of the gastrointestinal tract,” Arab J. Gastroenterol, vol. 8, no. 1, pp. 1–4, 2007.

- [4] Wang H, Wang S, Li J, and Zuo S, “Robotic scanning device for intraoperative thyroid gland endomicroscopy,” Annals of biomedical engineering, vol. 46, no. 4, pp. 543–554, 2018.

- [5] Zuo S, Hughes M, Seneci C, Chang TP, and Yang G-Z, “Toward intraoperative breast endomicroscopy with a novel surface-scanning device,” IEEE Transactions on Biomedical Engineering, vol. 62, no. 12, pp. 2941–2952, 2015.

- [6] Ping Z, Wang H, Chen X, Wang S, and Zuo S, “Modular robotic scanning device for real-time gastric endomicroscopy,” Annals of biomedical engineering, vol. 47, no. 2, pp. 563–575, 2019.

- [7] Tan PEZ et al., “Quantitative confocal imaging of the retinal microvasculature in the human retina,” Investigative ophthalmology & visual science, vol. 53, no. 9, pp. 5728–5736, 2012.

- [8] Ramos D et al., “The use of confocal laser microscopy to analyze mouse retinal blood vessels,” Confocal Laser Microscopy-Principles and Applications in Medicine, Biology, and the Food Sciences, 2013.

- [9] Gupta PK, Jensen PS, and de Juan E, “Surgical forces and tactile perception during retinal microsurgery,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 1999, pp. 1218–1225.

- [10] Pritchard N, Edwards K, and Efron N, “Non-contact laser-scanning confocal microscopy of the human cornea in vivo,” Contact Lens and Anterior Eye, vol. 37, no. 1, pp. 44–48, 2014.

- [11] Pascolini M et al., “Non-contact, laser-based confocal microscope for corneal imaging,” Investigative Ophthalmology & Visual Science, vol. 60, no. 11, pp. 014–014, 2019.

- [12] Giataganas P, Hughes M, and Yang G-Z, “Force adaptive robotically assisted endomicroscopy for intraoperative tumour identification,” International journal of computer assisted radiology and surgery, vol. 10, no. 6, pp. 825–832, 2015.

- [13] Varghese RJ, Berthet-Rayne P, Giataganas P, Vitiello V, and Yang G-Z, “A framework for sensorless and autonomous probe-tissue contact management in robotic endomicroscopic scanning,” in Robotics and Automation (ICRA), 2017 IEEE International Conference on. IEEE, 2017, pp. 1738–1745.

- [14] Singhy S and Riviere C, “Physiological tremor amplitude during retinal microsurgery,” in Proceedings of the IEEE 28th Annual Northeast Bioengineering Conference (IEEE Cat. No. 02CH37342). IEEE, 2002, pp. 171–172.

- [15] Zhang T, Gong L, Wang S, and Zuo S, “Hand-held instrument with integrated parallel mechanism for active tremor compensation during microsurgery,” Annals of Biomedical Engineering, vol. 48, no. 1, pp. 413–425, 2020.

- [16] Latt WT, Newton RC, Visentini-Scarzanella M, Payne CJ, Noonan DP, Shang J, and Yang G-Z, “A hand-held instrument to maintain steady tissue contact during probe-based confocal laser endomicroscopy,” IEEE transactions on biomedical engineering, vol. 58, no. 9, pp. 2694–2703, 2011.

- [17] Erden MS, Rosa B, Boularot N, Gayet B, Morel G, and Szewczyk J, “Conic-spiraleur: A miniature distal scanner for confocal microlaparoscope,” IEEE/ASME transactions on mechatronics, vol. 19, no. 6, pp. 1786–1798, 2013.

- [18] Zhang L, Ye M, Giataganas P, Hughes M, and Yang G-Z, “Autonomous scanning for endomicroscopic mosaicing and 3d fusion,” in 2017 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2017, pp. 3587–3593.

- [19] Gijbels A, Vander Poorten EB, Gorissen B, Devreker A, Stalmans P, and Reynaerts D, “Experimental validation of a robotic comanipulation and telemanipulation system for retinal surgery,” in 5th IEEE RAS/EMBS International Conference on Biomedical Robotics and Biomechatronics. IEEE, 2014, pp. 144–150.

- [20] Hughes M and Yang G-Z, “Line-scanning fiber bundle endomicroscopy with a virtual detector slit,” Biomedical optics express, vol. 7, no. 6, pp. 2257–2268, 2016.

- [21] Li Z et al., “A novel semi-autonomous control framework for retina confocal endomicroscopy scanning,” in 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2019, pp. 7083–7090.

- [22] Khatib O, “A unified approach for motion and force control of robot manipulators: The operational space formulation,” IEEE Journal on Robotics and Automation, vol. 3, no. 1, pp. 43–53, 1987.

- [23] Taylor R, Jensen P, Whitcomb L, Barnes A, Kumar R, Stoianovici D, Gupta P, Wang Z, Dejuan E, and Kavoussi L, “A steady-hand robotic system for microsurgical augmentation,” The International Journal of Robotics Research, vol. 18, no. 12, pp. 1201–1210, 1999.

- [24] Crete F, Dolmiere T, Ladret P, and Nicolas M, “The blur effect: perception and estimation with a new no-reference perceptual blur metric,” in Human vision and electronic imaging XII, vol. 6492. International Society for Optics and Photonics, 2007, p. 64920I.

- [25] Marziliano P, Dufaux F, Winkler S, and Ebrahimi T, “A no-reference perceptual blur metric,” in Proceedings. International Conference on Image Processing, vol. 3. IEEE, 2002, pp. III–III.

- [26] Narvekar ND and Karam LJ, “A no-reference perceptual image sharpness metric based on a cumulative probability of blur detection,” in 2009 International Workshop on Quality of Multimedia Experience. IEEE, 2009, pp. 87–91.

- [27] Mittal A, Moorthy AK, and Bovik AC, “No-reference image quality assessment in the spatial domain,” IEEE Transactions on image processing, vol. 21, no. 12, pp. 4695–4708, 2012.

- [28] Venkatanath N, Praneeth D, Bh MC, Channappayya SS, and Medasani SS, “Blind image quality evaluation using perception based features,” in 2015 Twenty First National Conference on Communications (NCC). IEEE, 2015, pp. 1–6.

- [29] Chalasani P, Deguet A, Kazanzides P, and Taylor RH, “A computational framework for complementary situational awareness (CSA) in surgical assistant robots,” Proceedings - 2nd IEEE International Conference on Robotic Computing, IRC 2018, vol. 2018-Janua, pp. 9–16, 2018.

- [30] Shahbazi M, Atashzar SF, Ward C, Talebi HA, and Patel RV, “Multimodal sensorimotor integration for expert-in-the-loop telerobotic surgical training,” IEEE Trans. on Robotics, no. 99, pp. 1–16, 2018.
