Abstract

Objective

To test the effectiveness of physician incentives for increasing patient medication adherence in three drug classes: diabetes medication, antihypertensives, and statins.

Data Sources

Pharmacy and medical claims from a large Medicare Advantage Prescription Drug Plan from January 2011 to December 2012.

Study Design

We conducted a randomized experiment (911 primary care practices and 8,935 nonadherent patients) to test the effect of paying physicians for increasing patient medication adherence in three drug classes: diabetes medication, antihypertensives, and statins. We measured patients’ medication adherence for 18 months before and 6 months after the intervention.

Data collection/extraction methods

We obtained data directly from the health insurer.

Principal Findings

We found no evidence that physician incentives increased adherence in any drug class. Our results rule out increases of more than 4.2 percentage points in the proportion of days covered by medication.

Conclusions

Physician incentives of $50 per patient per drug class are not effective for increasing patient medication adherence among the drug classes and primary care practices studied. Such incentives may be more likely to improve measures under physicians’ direct control rather than those that predominantly reflect patient behaviors. Additional research is warranted to disentangle whether physician effort is not responsive to these types of incentives, or medication adherence is not responsive to physician effort. Our results suggest that significant changes in the incentive amount or program design may be necessary to produce responses from physicians or patients.

Keywords: health economics, medication adherence, physician payment incentives, primary care, quality improvement

What this Study Adds.

Nonadherence to medication is a significant health problem that increases mortality, morbidity, and costs, accounting for 33‐69 percent of medication‐related hospital admissions and $100 billion in avoidable hospitalizations in the United States.

Pay‐for‐performance arrangements that incentivize physicians to improve processes of care and health outcomes have become widespread, but there is little evidence on whether they can improve patient behaviors such as medication adherence.

In a cluster‐randomized trial of 911 primary care practices and 8,935 patients, physician incentives of $50 per patient per drug class were not effective for increasing patient medication adherence to diabetes medication, antihypertensives, or statins.

1. INTRODUCTION

Nonadherence to medication is a significant health problem that increases mortality, morbidity, and costs. Between 33 and 69 percent of medication‐related hospital admissions in the United States are due to poor adherence, 1 and better antihypertensive adherence alone could prevent 89 000 deaths in the United States per year. 2 Each year, $100 billion is spent on avoidable hospitalizations due to medication nonadherence. 2

Pay‐for‐performance schemes that incentivize physicians to improve health care quality have become increasingly common and are salient features of reforms spanning Medicare, Medicaid, and commercial insurers. 3 , 4 , 5 However, there is a shortage of robust evidence from randomized trials on their effects on health care quality 6 , 7 and patient outcomes. 8 Moreover, the evidence that does exist for the effectiveness of pay for performance is mixed. 3 , 7 Studies that find positive effects tend to be those where the examined outcome is under physicians’ direct control—for example, processes of care such as screening and preventive services. 9 In contrast, studies on intermediate health outcomes such as blood pressure, blood glucose levels, and low‐density lipoprotein find inconsistent results. 7 , 10 , 11

We conceive of adherence as an intermediate behavioral outcome that sits between the writing of a prescription and the medication's ultimate effect on physical health. Adherence may be more difficult for physicians to control than processes of care but may be on par with changing intermediate health outcomes such as blood pressure. Training providers to improve patient communication has been shown to improve patient medication adherence, 12 , 13 suggesting that physicians have the ability to influence adherence. We hypothesized that physician incentives would encourage physicians to exert more effort to increase adherence, for example by communicating more intensively with patients. Consistent with the role of the provider in patient adherence, Cutler and Everett 2 identify payment reform as an essential component for improving adherence and argue that “goals for medication adherence should be explicitly written into the performance measures for medical homes, accountable care organizations, and care transition teams.” Our study provides one piece of evidence for whether physician incentives for adherence are likely to be effective.

In a randomized controlled trial, we tested financial incentives for physicians to improve patient medication adherence, measured using pharmacy claims data, in three drug classes for which adherence is an important determinant of health outcomes: diabetes medication, 14 antihypertensives, 15 , 16 and cholesterol‐lowering (statin) drugs. 16 Motivated by the hypothesis that simple incentives may be more motivating than complex incentives, we tested incentive schemes that only reward adherence improvements in one drug class (“focused incentives”) and incentive schemes that reward increases in adherence in any of the three drug classes (“broad incentives”). We also tested a “light‐touch” intervention where physicians received reports listing their nonadherent patients, which could be placed in these patients’ charts so that the physician could recognize them as nonadherent during an appointment.

2. BACKGROUND

Our incentives targeted adherence to diabetes medication, antihypertensives, and statins because these outcomes are rewarded in the Medicare Part C & D Star Rating System, which under the Affordable Care Act provides incentives in the form of bonus payments and marketing privileges to insurers offering Medicare Advantage (Medicare Part C), prescription drug plans (Medicare Part D), and combined Part C and D plans. 5 , 17 , 18 These incentives are substantial: between 2012 and 2014, incentive payments from the Medicare Quality Bonus Payment Demonstration totaled $10.9 billion, or about 2.6 percent of all payments to Medicare Advantage during those years. 19

Incentives are based on a plan's “star rating,” which is a weighted average of up to 48 quality measures in nine domains. 18 Medication adherence is an important component of these ratings: In 2018, adherence to diabetes medication, antihypertensives, and statins accounted for 32 and 11 percent of the total star rating for Part D and combined plans, respectively. 17 , 18

3. METHODS

We randomized physician practices with patients who in 2011 were nonadherent for at least one targeted drug class. We preregistered our trial, including details on our outcome variable and intervention groups, on ClinicalTrials.gov (NCT01603329). We obtained approval from the National Bureau of Economic Research Human Subjects Committee and the Harvard Committee on the Use of Human Subjects.

3.1. Measuring adherence

We measured adherence on the basis of how many prescriptions patients filled in three drug classes: diabetes medications, antihypertensives (ACE inhibitors and ARBs), and statins. Our primary outcome measure is the “proportion of days covered,” or PDC, which is defined as the number of days’ supply of a drug an individual obtained at the pharmacy (covered days) divided by the total number of days that the individual should be taking the drug. We computed PDC separately for each calendar year, restricting our sample to only include patients with at least two prescription fills any time in that year (as was done to compute the star rating adherence measures 18 ). For patients taking multiple different drugs within a class, the number of covered days was computed as the number of days covered by any drug. That is, our PDC measure did not distinguish between periods during which a patient filled prescriptions for one drug versus two drugs within a drug class. PDC is a widely used measure in the literature, 1 , 20 is considered an accurate and objective measure of overall adherence, 1 and has been shown to predict clinical outcomes. 21 Following the Medicare Advantage definition, we considered patients “nonadherent” for a time period if their PDC for that time period was below 80 percent.
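The PDC definition above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' or CMS's code: the function names are hypothetical, the denominator is assumed to run from the patient's first fill in the year to December 31 (the text says only "the total number of days that the individual should be taking the drug"), and overlapping supply from early refills of the same drug is simply counted once rather than shifted forward. Days covered by two drugs in the same class are also counted once, matching the "covered by any drug" rule.

```python
from datetime import date, timedelta

ADHERENCE_THRESHOLD = 0.80  # Medicare Advantage cutoff: PDC below 80 percent is nonadherent


def proportion_of_days_covered(fills, year_end, min_fills=2):
    """Hypothetical sketch of PDC for one patient and one drug class in one calendar year.

    fills: list of (fill_date, days_supply) tuples for any drug in the class.
    Assumption: the denominator runs from the first fill date to year_end.
    Returns None when the patient has fewer than min_fills fills in the year.
    """
    if len(fills) < min_fills:
        return None
    first_fill = min(fill_date for fill_date, _ in fills)
    covered = set()  # a set, so a day covered by two drugs in the class counts once
    for fill_date, days_supply in fills:
        for offset in range(days_supply):
            day = fill_date + timedelta(days=offset)
            if day <= year_end:  # supply extending past the year is ignored
                covered.add(day)
    eligible_days = (year_end - first_fill).days + 1
    return len(covered) / eligible_days


def is_nonadherent(pdc):
    return pdc is not None and pdc < ADHERENCE_THRESHOLD


# Two 90-day fills starting January 1 and July 1 cover 180 of 365 days.
fills_2011 = [(date(2011, 1, 1), 90), (date(2011, 7, 1), 90)]
pdc_2011 = proportion_of_days_covered(fills_2011, date(2011, 12, 31))
print(round(pdc_2011, 3), is_nonadherent(pdc_2011))  # 0.493 True
```

Using a set of dates makes the "any drug in the class" rule explicit: a patient filling two diabetes drugs on the same days gets the same PDC as a patient filling one.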

3.2. Selecting the initial sample of physicians

In February 2012, we received data on every patient of a large health insurer's Medicare Advantage Prescription Drug Plan for whom a 2011 PDC for diabetes, high blood pressure, or cholesterol medication could be computed, excluding patients of five‐star plans and plans in Florida or Puerto Rico.

We limited our analyses to physician practices with no more than five physicians because we could contact only a limited number of practices and physicians. Larger practices were therefore costlier to include and added less statistical power than multiple smaller practices. We also dropped practices employing physicians who were associated with more than one practice. These restrictions reduced the number of practices from 25 857 to 22 231 and the number of physicians from 55 511 to 30 206. Then, for each practice, we computed the number of nonadherent patients in each drug class. To maximize statistical power, we required that the minimum of these three patient counts be greater than 1 for one‐physician practices, greater than 3 for two‐physician practices, greater than 5 for three‐physician practices, greater than 7 for four‐physician practices, and greater than 9 for five‐physician practices. This left us with an intermediate sample of 1034 practices and 1237 physicians.
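The practice-size thresholds above follow a simple pattern: a practice with n physicians needed more than 2n − 1 nonadherent patients in each of the three drug classes. The hypothetical helper below encodes only that rule; it does not cover the other exclusions (such as physicians affiliated with several practices) or the later relaxation for one-physician practices.

```python
MAX_PHYSICIANS = 5  # practices with more than five physicians were excluded


def meets_nonadherent_threshold(n_physicians, nonadherent_counts):
    """Return True if a practice passes the initial power-based screen.

    n_physicians: number of physicians in the practice.
    nonadherent_counts: nonadherent-patient counts for the three drug classes
    (diabetes, ACEI/ARB, statin).
    """
    if not 1 <= n_physicians <= MAX_PHYSICIANS:
        return False
    threshold = 2 * n_physicians - 1  # 1, 3, 5, 7, 9 for 1 to 5 physicians
    return min(nonadherent_counts) > threshold


print(meets_nonadherent_threshold(2, (4, 6, 5)))   # True: smallest count 4 > 3
print(meets_nonadherent_threshold(3, (12, 5, 9)))  # False: smallest count 5 is not > 5
```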

Next, we updated the patient‐to‐physician attribution to be current as of December 31, 2011, and dropped patients who were no longer active with the health plan as of March 31, 2012. Following this update, we allowed one‐physician practices to have a minimum nonadherent patient count of 1 but maintained the same thresholds for practices with two or more physicians. We ended up with a randomization sample of 911 practices with 1005 physicians and 8935 noncompliant patients, all of whom could be uniquely matched to a practice.

3.3. Description of treatments

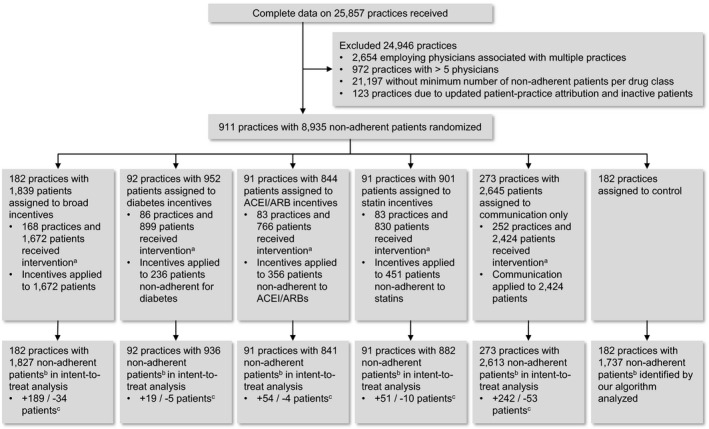

We randomized the 911 physician practices to a no‐intervention control group and five treatment arms (Figure 1 gives a graphical summary of the randomization procedure). Mailings to physicians were sent on July 10, 2012. The incentives described below were specific to this experiment and unrelated to the health plan's standard physician reimbursement practices, STARS rewards, and quality incentive programs, which rewarded improvements for several Healthcare Effectiveness Data and Information Set (HEDIS) measures, including diabetes management, LDL control, and A1C control. These other incentives amounted to about $50 per year for each insurer‐covered patient.

FIGURE 1.

This CONSORT flow diagram shows the flow of physician practices and patients. It includes the reasons for excluding practices from randomization as well as the number of practices and patients randomized to each group, the number receiving interventions, and the number included in the analysis.

Note: a, Patients/practices omitted because practice mailing address missing or patients not noncompliant under the updated PDC calculation. b, Across all three drug classes. c, Number of patients in incentivized drug class(es) added (+) and subtracted (−) when our algorithm was used to identify noncompliant patients. Source: Authors’ analysis of claims data on prescription fills, 2011‐2012.

Physicians in practices assigned to the “communications” treatment arm were mailed a report on all the practice's Medicare Advantage patients who were nonadherent (PDC < 80 percent) in 2011 for at least one drug class (diabetes, ACE inhibitors or ARBs, or statins). Each page of the report contained information on only one patient. The introductory letter in the mailing suggested that each page of the report be placed into the respective patient's chart to help physicians recognize the patient as nonadherent during an appointment. (Additionally, we randomized the report's paper color—yellow or white—across providers. We hypothesized that yellow chart inserts would be more salient to physicians, but we found no efficacy differences between the two colors, so we do not discuss this result further.)

In each of three other treatment arms, we sent physicians an analogous report that listed patients who were nonadherent in one of three medication domains: diabetes medication, ACE inhibitors or ARBs, and statins. Physicians in these “focused incentives” arms were paid $50 for each listed patient who became adherent (PDC ≥ 80 percent) in the specified drug class between July 1, 2012, and December 31, 2012. For example, physicians in the “focused diabetes” incentive group received a report only on their patients who were nonadherent to diabetes medication and were paid $50 for each of these patients who became adherent to diabetes medication.

The final “broad incentives” treatment arm received the same report as the communications arm, but physicians in this arm were paid $50 each time a patient became adherent in any of the three drug classes (therefore up to $150 for a patient who went from being nonadherent in all three drug classes to being adherent in all three drug classes).
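The payment rules for the incentive arms can be summarized as a per-patient function. The sketch below is illustrative only: the function and argument names are hypothetical, and it assumes a patient counts as adherent in a class when the July to December 2012 PDC is at least 80 percent, as described above.

```python
INCENTIVE_PER_CLASS = 50  # dollars per patient per drug class
DRUG_CLASSES = ("diabetes", "acei_arb", "statin")


def incentive_payment(arm, listed_classes, adherent_classes):
    """Hypothetical sketch of the payment owed for one patient.

    arm: "broad", one of DRUG_CLASSES for a focused arm, or anything else
         (control, report only) for arms with no incentive.
    listed_classes: drug classes in which the patient was listed as nonadherent.
    adherent_classes: drug classes with PDC >= 0.80 in July-December 2012.
    """
    if arm == "broad":
        rewarded = DRUG_CLASSES
    elif arm in DRUG_CLASSES:
        rewarded = (arm,)
    else:
        return 0
    return sum(
        INCENTIVE_PER_CLASS
        for drug_class in rewarded
        if drug_class in listed_classes and drug_class in adherent_classes
    )


all_three = set(DRUG_CLASSES)
print(incentive_payment("broad", all_three, all_three))     # 150
print(incentive_payment("diabetes", all_three, all_three))  # 50
print(incentive_payment("report", all_three, all_three))    # 0
```

The same patient moving from nonadherent to adherent in all three classes is worth $150 under broad incentives but only $50 under any single focused incentive.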

3.4. Treatment assignment and power calculations

Twice as many practices were assigned to the broad incentives arm as to each focused incentives arm because we were interested in comparing how a portfolio of practices narrowly incentivized on different medication classes performed against a portfolio of practices that were all broadly incentivized. To maximize the statistical power of this comparison, the broad incentives arm should be equal in size to the sum of the focused incentives arms, but budget constraints prevented us from making the broad incentives arm three times as large as each focused incentives arm.

Ex‐ante power calculations, assuming a 5 percent significance level, demonstrated that our experiment had 80 percent power to detect a difference in PDC of about 3 percentage points between each focused incentives group and the control group, and similar power to detect differences between broad and focused incentives.
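For readers who want to reproduce this kind of calculation, a standard textbook formula for the minimum detectable effect (MDE) of a two-arm comparison under cluster randomization is sketched below. The inputs used for the original calculation are not reported here, so every number in the example call (outcome standard deviation, arm sizes, intraclass correlation, patients per practice) is a hypothetical placeholder, and the result should not be read as the study's calculation. Adjusting for baseline PDC, as the main specification does, would lower the residual standard deviation and hence the MDE.

```python
from math import sqrt
from statistics import NormalDist


def minimum_detectable_effect(sd, n_treat, n_control, alpha=0.05, power=0.80,
                              icc=0.0, cluster_size=1.0):
    """MDE for a difference in means, inflated by the design effect
    1 + (cluster_size - 1) * icc for clustered assignment."""
    std_normal = NormalDist()
    multiplier = std_normal.inv_cdf(1 - alpha / 2) + std_normal.inv_cdf(power)
    design_effect = 1 + (cluster_size - 1) * icc
    standard_error = sd * sqrt((1 / n_treat + 1 / n_control) * design_effect)
    return multiplier * standard_error


# Hypothetical inputs, in PDC units (0-1 scale); not the study's values.
mde = minimum_detectable_effect(sd=0.30, n_treat=500, n_control=1500,
                                icc=0.05, cluster_size=8)
print(f"MDE = {100 * mde:.1f} percentage points")
```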

3.5. Data description

We conducted our experimental analysis using pharmacy and medical claims data from a large health insurer. The patient sample consists of everyone who took diabetes, hypertension, or cholesterol medication in 2011—whether adherent or nonadherent—who had been attributed to the physicians in our randomization sample in our original dataset (before the updated patient‐to‐physician attribution). The claims data run from January 1, 2011, to December 31, 2012, and include every pharmacy and medical claim for these patients. The data include indicators for treatment group assignment and whether the patient was the subject of a mailing.

3.6. Sample restrictions

After random assignment, the set of patients and physicians who were actually subjected to treatment was further modified by two developments. First, the mailing addresses of 46 physicians who were assigned to receive a mailing could not be located, so these physicians did not receive a mailing. Second, CMS updated its specifications and drug lists for calculating PDCs. The reports of any patients who were no longer considered nonadherent according to the new PDC methodology were excluded, which dropped 24 physicians from the sample.

These two modifications reduced the number of practices mailed a report from 729 to 672 and the number of patients who were the subject of an incentive and/or communication from 7181 to 5139. This attrition was balanced across treatment arms; however, we did not receive information from the health plan on how these modifications would have affected the control group (182 practices) if it had been sent treatment mailings and incentives. Because of this, we estimated intent‐to‐treat effects, including all 729 + 182 = 911 practices subject to random assignment regardless of whether they eventually received a letter.

We further restricted our analysis to noncompliant patients, since we expected little effect of the treatments on already‐compliant patients who were not mentioned in the letters. Because the claims data do not flag noncompliant patients in the control group and in nonincentivized drug classes, we used the 2011 claims data to construct our own 2011 PDC measure and identify noncompliant patients. We used individuals assigned to treatment groups to validate our PDC algorithm: among these individuals, our algorithm's classification matched the health plan's 97.9 percent of the time. To maintain consistency across all treatment arms, all the analyses below use the sample of noncompliant patients identified using our algorithm.

3.7. Regression analyses

Our main outcome variable is the patient's PDC in the six incentivized months (July to December 2012) for a given drug class. Our main independent variables are indicators for whether the patient's physician received only a report, whether the patient's physician received a focused incentive in the drug class, whether the patient's physician received a broad incentive (which encompassed all three drug classes), and whether the patient's physician received a focused incentive in one of the other two drug classes (to test whether incentives in one drug class crowded out PDC in unincentivized drug classes). To maximize our statistical power to detect effects, we also controlled for the patient's PDC in the same drug class in the six months prior to the incentives (January to June 2012) and the patient's PDC in the same drug class in 2011. 22 , 23
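Written out in our own notation (the symbols are illustrative, not taken from the original), the main specification for drug class c is the following, where i indexes patients and p(i) is patient i's practice. Each treatment indicator equals 1 if the practice was assigned to that arm; the equation is estimated separately for each drug class by ordinary least squares, with standard errors clustered by practice, as reported in Table 2:

$$
\mathrm{PDC}^{\text{Jul-Dec 2012}}_{ic} = \alpha_c
+ \beta_{1c}\,\mathrm{Report}_{p(i)}
+ \beta_{2c}\,\mathrm{Focused}_{p(i),c}
+ \beta_{3c}\,\mathrm{FocusedOther}_{p(i),c}
+ \beta_{4c}\,\mathrm{Broad}_{p(i)}
+ \gamma_{1c}\,\mathrm{PDC}^{\text{Jan-Jun 2012}}_{ic}
+ \gamma_{2c}\,\mathrm{PDC}^{2011}_{ic}
+ \varepsilon_{ic}
$$

Here $\mathrm{FocusedOther}_{p(i),c}$ equals 1 when the practice received a focused incentive in one of the other two drug classes, so $\beta_{3c}$ captures possible crowd-out, while $\beta_{2c}$ and $\beta_{4c}$ are the effects of focused and broad incentives on the targeted class.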

After receiving the data and performing initial analyses, we specified several secondary outcomes post hoc: the number of prescription fills during the treatment period, the likelihood that patients saw their physician during the treatment period, and the likelihood of patients filling a prescription within the first two and four weeks after seeing their physician.

For diabetes medications, we also conducted several follow‐up analyses to examine the effect of broad incentives on the number of prescriptions: We decomposed the dependent variable by subclass of diabetes medication (metformin, sulfonylureas, thiazolidinediones, and dipeptidyl peptidase (DPP)‐IV inhibitors), estimated the effect of incentives on prescription characteristics (number of days’ supply, copay, pill quantity, and combination formulations), and allowed the treatment effect to vary by patients’ prior utilization of the drug in the six months preceding the intervention.

All analyses were performed using Stata.

4. RESULTS

4.1. Baseline variable values

Table 1 shows summary statistics on PDCs from January to December 2011 and January to June 2012 for each experimental group. The average PDC was lower in 2011 (about 58 percent across drug classes) than in January to June 2012 (about 80 percent across drug classes). At least some of this difference is likely because our sample of nonadherent individuals was selected based on having low PDC in 2011, and these individuals regressed to a higher mean PDC in 2012. Some of the increase in average PDC could also reflect upward trends in adherence over time.

TABLE 1.

Summary statistics for each study arm

| Study arm | Diabetes, Jan‐Dec 2011 | ACEI/ARB, Jan‐Dec 2011 | Statin, Jan‐Dec 2011 | Diabetes, Jan‐Jun 2012 | ACEI/ARB, Jan‐Jun 2012 | Statin, Jan‐Jun 2012 | Diabetes, Jul‐Dec 2012 | ACEI/ARB, Jul‐Dec 2012 | Statin, Jul‐Dec 2012 |
|---|---|---|---|---|---|---|---|---|---|
| Control | 57.7 | 57.0 | 57.0 | 80.6 | 81.4 | 80.3 | 71.3 | 72.7 | 68.9 |
| Report only | 59.1 | 57.6 | 56.9 | 79.7 | 79.9 | 79.9 | 70.3 | 70.6 | 70.0 |
| Broad incentives | 57.5 | 58.1 | 56.8 | 77.0 | 79.4 | 78.2 | 70.3 | 70.2 | 67.6 |
| Focused diabetes incentive | 59.3 | 58.0 | 56.4 | 79.1 | 81.4 | 79.7 | 71.4 | 71.3 | 70.4 |
| Focused ACEI/ARB incentive | 59.2 | 59.2 | 57.5 | 82.2 | 84.2 | 78.9 | 67.8 | 72.5 | 69.9 |
| Focused statin incentive | 58.7 | 57.8 | 58.4 | 80.4 | 81.2 | 80.5 | 69.6 | 70.8 | 68.5 |
| P‐value of six groups’ equality | .452 | .429 | .479 | .102 | .011 | .598 | .846 | .654 | .547 |

Proportion of days covered (PDC) is expressed as a percentage (100 × PDC).

Source: Authors’ analysis of claims data on prescription fills, 2011‐2012.

Our six study arms were balanced on PDC across all drug classes in 2011: F‐tests did not reject the null hypothesis of joint equality across arms. In the January to June 2012 period, joint equality was not rejected for diabetes and statin PDCs, but ACEI/ARB PDC was somewhat higher for the focused ACEI/ARB incentive group (84.2 percent) than for the other groups (about 80 percent; P = .011). Our main specification controls for the January to June 2012 PDC and the January to December 2011 PDC for each drug class; including these controls does not substantially change any of our inferences relative to alternative specifications without them.

4.2. Main results

Table 2 shows coefficients from ordinary least‐squares regressions where the dependent variable is PDC during the six treatment months (July to December 2012) for each drug class. We found that reports and incentives had no statistically significant effects on PDC in the targeted drug class(es). No 95 percent confidence interval has an upper bound that exceeds 4.2 percentage points. There is some evidence that focused incentives in nondiabetes drug classes decreased diabetes drug PDC by 4.2 percentage points (P = .03; see Table 2, Column 1). However, this crowd‐out effect is not significant for ACEI/ARBs, and the effect goes in the opposite direction and is marginally significant (P < .10; see Table 2, Column 3) for statins.

TABLE 2.

Treatment effects on proportion of days covered (PDC)

| | Diabetes | ACEI/ARB | Statins |
|---|---|---|---|
| Report only | −0.012 (0.017) | −0.014 (0.014) | 0.018 (0.012) |
| Focused incentive in drug class | 0.002 (0.020) | −0.023 (0.018) | −0.003 (0.016) |
| Focused incentive in other drug classes | −0.042ᵇ (0.019) | −0.019 (0.013) | 0.022ᵃ (0.013) |
| Broad incentives | 0.002 (0.018) | −0.018 (0.014) | 0.003 (0.014) |
| Jan‐Jun 2012 PDC | 0.575ᶜ (0.030) | 0.567ᶜ (0.023) | 0.595ᶜ (0.022) |
| Jan‐Dec 2011 PDC | 0.207ᶜ (0.042) | 0.149ᶜ (0.033) | 0.245ᶜ (0.031) |
| Constant | 0.126ᶜ (0.032) | 0.170ᶜ (0.025) | 0.053ᵇ (0.023) |
| # patients | 1754 | 3003 | 3317 |
| # practices | 831 | 874 | 879 |
| F statistic | 92.9 | 130.7 | 191.6 |
| R² | .25 | .21 | .25 |

The dependent variable is the Jul‐Dec 2012 PDC of the drug class shown in the column header; explanatory treatment dummy variables in rows. Standard errors (in parentheses) are clustered at the practice level.

Source: Authors’ analysis of claims data on prescription fills, 2011‐2012.

ᵃ Significant at 10 percent level.

ᵇ Significant at 5 percent level.

ᶜ Significant at 1 percent level.
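The 4.2 percentage point bound quoted above can be checked approximately from Table 2. The sketch below uses a normal approximation (coefficient ± 1.96 × standard error), which may differ slightly from exact clustered confidence intervals. It includes only the rows that measure effects on targeted drug classes (report only, focused incentive in the drug class, and broad incentives) and excludes the crowd-out row; it also recovers the approximate P value for the diabetes crowd-out coefficient.

```python
from statistics import NormalDist

# (coefficient, standard error) from Table 2, PDC on a 0-1 scale.
# Only rows estimating effects on targeted drug classes are included.
TARGETED_EFFECTS = {
    ("Report only", "Diabetes"): (-0.012, 0.017),
    ("Report only", "ACEI/ARB"): (-0.014, 0.014),
    ("Report only", "Statins"): (0.018, 0.012),
    ("Focused incentive", "Diabetes"): (0.002, 0.020),
    ("Focused incentive", "ACEI/ARB"): (-0.023, 0.018),
    ("Focused incentive", "Statins"): (-0.003, 0.016),
    ("Broad incentives", "Diabetes"): (0.002, 0.018),
    ("Broad incentives", "ACEI/ARB"): (-0.018, 0.014),
    ("Broad incentives", "Statins"): (0.003, 0.014),
}
Z_975 = NormalDist().inv_cdf(0.975)  # about 1.96


def upper_bound(coef, se):
    return coef + Z_975 * se


max_upper = max(upper_bound(b, se) for b, se in TARGETED_EFFECTS.values())
print(f"Largest upper bound: {100 * max_upper:.1f} percentage points")  # 4.2

# Two-sided P value for the diabetes crowd-out coefficient (-0.042, SE 0.019).
z_crowd_out = -0.042 / 0.019
p_crowd_out = 2 * (1 - NormalDist().cdf(abs(z_crowd_out)))
print(f"Crowd-out P value: {p_crowd_out:.2f}")  # 0.03
```

The largest upper bound comes from the report-only effect on statins (0.018 + 1.96 × 0.012 ≈ 0.0415).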

We considered several secondary outcomes in post hoc analysis. We first examined whether incentives affected the number of 30‐day‐equivalent prescriptions filled during the six‐month treatment period. This differs from our primary outcome because it distinguishes between cases in which a patient fills only one kind of prescription within a drug class versus multiple different prescriptions within a drug class in a given time period. PDC only distinguishes between filling any prescriptions versus no prescriptions within a drug class in a given time period. Moreover, for PDC to be calculated for a patient, we required that at least two prescriptions be filled in the calendar year; we did not apply this restriction to the number of 30‐day‐equivalent prescriptions.
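A toy example shows why the two outcomes can diverge. The sketch below assumes that a 30-day-equivalent prescription is the days' supply divided by 30 (the exact conversion is not stated here) and uses hypothetical fills: a patient who fills a second diabetes drug on the same dates as the first doubles the prescription count, while the number of covered days, and hence PDC, is unchanged.

```python
from datetime import date, timedelta


def thirty_day_equivalents(fills):
    """Assumed conversion: days' supply / 30, so a 90-day fill counts as 3."""
    return sum(days_supply / 30 for _, days_supply in fills)


def covered_days(fills):
    """Days covered by any fill; overlapping supply is counted once."""
    days = set()
    for fill_date, days_supply in fills:
        for offset in range(days_supply):
            days.add(fill_date + timedelta(days=offset))
    return len(days)


# Six consecutive 30-day fills of one drug during July-December 2012.
one_drug = [(date(2012, 7, 1) + timedelta(days=30 * m), 30) for m in range(6)]
# A second drug in the same class, filled on the same dates.
two_drugs = one_drug + one_drug

print(thirty_day_equivalents(one_drug), covered_days(one_drug))    # 6.0 180
print(thirty_day_equivalents(two_drugs), covered_days(two_drugs))  # 12.0 180
```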

We found evidence that broad incentives result in a modest and statistically significant increase in diabetes‐related prescription fills (0.51 prescriptions per patient against a backdrop of 3.9 on average over six months, P = .006, Table 3, Column 1). However, we found no such effects for other drug classes. The effect of focused incentives on diabetes prescriptions was similar in magnitude (0.32 prescriptions) but not statistically significant. Focused incentives in the ACEI/ARB drug class appear to result in fewer prescription fills for that drug class (Table 3, Column 2), but this effect is only marginally significant at P < .10.

TABLE 3.

Treatment effects on number of prescriptions filled

| | Diabetes | ACEI/ARB | Statins |
|---|---|---|---|
| Report only | 0.22 (0.17) | −0.15 (0.11) | 0.07 (0.10) |
| Focused incentive in drug class | 0.32 (0.24) | −0.24ᵃ (0.13) | −0.15 (0.12) |
| Focused incentive in other drug classes | −0.02 (0.18) | −0.18 (0.11) | 0.13 (0.10) |
| Broad incentives | 0.51ᶜ (0.18) | −0.12 (0.12) | −0.01 (0.10) |
| # scripts Jan‐Jun 2012 | 0.47ᶜ (0.02) | 0.51ᶜ (0.02) | 0.47ᶜ (0.02) |
| # scripts 2011 | 0.17ᶜ (0.02) | 0.11ᶜ (0.02) | 0.15ᶜ (0.01) |
| Constant | 0.62ᶜ (0.19) | 0.90ᶜ (0.12) | 0.60ᶜ (0.11) |
| # patients | 2459 | 4319 | 4763 |
| # practices | 908 | 911 | 910 |
| F statistic | 116.6 | 260.0 | 266.7 |
| R² | .31 | .31 | .28 |

The dependent variable is the number of prescriptions filled during July‐December 2012 for the drug class shown in column header; explanatory treatment dummy variables in rows. Standard errors (in parentheses) are clustered at the practice level.

Source: Authors’ analysis of claims data on prescription fills, 2011‐2012.

ᵃ Significant at 10 percent level.

ᵇ Significant at 5 percent level.

ᶜ Significant at 1 percent level.

Additional analyses revealed that the effect of broad incentives on diabetes prescriptions is driven by increases in metformin (0.34 prescriptions, P = .01) and DPP‐IV inhibitors (0.11 prescriptions, P = .04). These increases do not appear to be primarily driven by physicians trying to change the treatment regimen in order to improve adherence, as we did not find much evidence that physicians in the broad incentives group increased prescriptions of drugs or drug subclasses that their patients had never taken before (in our sample). Broad incentives did not affect characteristics of diabetes prescriptions that might indirectly improve adherence, including the share of 90‐day versus 30‐day prescriptions, average copay, pill quantity, or use of combination formulations.

We examined two additional outcomes, pooling patients across drug classes. First, we tested whether patients in the treatment groups were more likely to see their physician in the six treatment months (Appendix Table S1). Among patients who saw their physician during the treatment months, we tested for the existence of short‐term effects on adherence (Appendix Table S2) by focusing on prescriptions filled within four weeks of a physician visit. We found only weak evidence (P < .10) that focused incentives increased the probability of a physician visit and no evidence of short‐term adherence effects.
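The short-term outcome can be expressed as an indicator for whether a fill in the relevant drug class occurred within a fixed window after a visit. A minimal sketch, assuming the window starts on the visit date and includes its last day (these boundary conventions are not specified in the text); the function name is hypothetical, and the two-week variant mentioned in the Methods corresponds to weeks=2.

```python
from datetime import date, timedelta


def filled_within_window(visit_date, fill_dates, weeks=4):
    """True if any fill falls on or after the visit and within `weeks` weeks of it."""
    window_end = visit_date + timedelta(weeks=weeks)
    return any(visit_date <= fill_date <= window_end for fill_date in fill_dates)


visit = date(2012, 8, 1)
fills = [date(2012, 6, 15), date(2012, 8, 20)]
print(filled_within_window(visit, fills))           # True: Aug 20 is within four weeks
print(filled_within_window(visit, fills, weeks=2))  # False: window ends Aug 15
```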

5. DISCUSSION

We know of only one other study 24 that tests the effects of physician incentives on patient medication adherence. To our knowledge, ours is the first large randomized study to specifically reward improvements in adherence (rather than intermediate health outcomes such as LDL cholesterol or blood pressure 24 ) and the first to compare broad incentives to focused incentives.

Our primary finding is that physician incentives (on the order of $50 to $150 per patient) did not increase patient adherence. One possible explanation for our null effect is that the physicians in our sample had limited opportunities to interact with patients. Only 60 percent of targeted patients saw their physician during the treatment period, and our incentives did not significantly increase the likelihood of an office visit.

We found no consistent difference between broad and focused incentives, and no effect of communications without financial incentives. However, we did find some evidence (P = .03) that focused incentives in the antihypertensive and statin drug classes decreased PDC for diabetes drugs. One explanation for this result is that physicians who are induced to focus on one drug class become less focused on increasing adherence among patients who are nonadherent in other drug classes. However, this effect would need to pertain specifically to diabetes medication, as ACEI/ARBs were unaffected and statins exhibited a small effect in the opposite direction. Given that focused incentives for diabetes medication did not increase adherence to diabetes medication—indicating that either the incentives did not induce significant physician effort or that diabetes medication adherence is not very responsive to changes in physician effort—this crowd‐out result seems likely to be a type I error.

In secondary analyses, we found evidence that broad incentives increased prescription fills for diabetes medications (P = .006) but not for other drug classes. We hypothesized that this could be due to the greater flexibility inherent in the diabetes drug class, which encompasses four subclasses of drugs. However, in follow‐up analysis, we found that patients in the broad incentives group were no more likely to begin using new drugs or drug subclasses than those in the control group. The fact that PDC did not also increase under broad incentives indicates that the additional fills were made by patients who were contemporaneously filling prescriptions for other drugs in the same drug class. (An alternative possibility is that the additional prescriptions went to patients with only one fill in 2012 and who thus did not have a PDC calculated for them. However, setting the total prescriptions variable to 0 for those who had only one fill in 2012 only slightly affects the treatment effect point estimate, indicating that this channel is not important.)

The increase in diabetes‐related prescriptions was not statistically significant in the focused diabetes incentive group. This could be due to larger standard errors resulting from the smaller number of patients randomized to the focused diabetes incentive arm. It is also possible that broad incentives, which have a maximum incentive of $150 per patient (versus $50 per patient for focused incentives) and cover more drug classes, were more salient to physicians.

We conclude that, in general, modest physician incentives are ineffective at increasing the total amount of time that patients maintain prescription fills. Our findings are consistent with prior literature showing that physician incentives have mixed results, and they provide some mechanistic insight into studies in which pay for performance produced significant positive effects on intermediate health outcomes. The most similar study to ours, Asch et al 24 , randomly assigned incentives for reducing LDL cholesterol levels, an intermediate health outcome, to patients, their physicians, or both. The patient incentives were conditional on both adherence and improvements in LDL. They found that only the group with both patient and physician incentives significantly decreased LDL cholesterol, despite incentives as large as $1024 per patient in the physician incentive arm. They also found that physician incentives did not improve medication adherence (as measured by electronic pill bottles), but that physician incentives were important for medication intensification (i.e., statin initiation or increases in dosage or potency). We replicated the null effect of physician incentives on adherence to statins using a larger number of physician practices, a PDC measure of adherence, and multiple drug classes.

When physician incentives have improved intermediate health outcomes, our results suggest that the improvement is unlikely to stem from better patient adherence. Rather, effects are likely driven by other channels, such as increased provision of new services or medications to patients. 24 , 25 For example, Bardach et al 10 and Petersen et al 11 find that physician incentives of similar magnitude to ours improved blood pressure control; our results suggest that these improvements more likely came from new prescribing of antihypertensives than from improved adherence by patients with existing prescriptions. This is consistent with the broader literature on physician incentives, which finds the most evidence for improvements in processes of care, 8 such as preventive service provision 26 and appropriate responses to uncontrolled blood pressure. 11

5.1. Limitations

Our study has several limitations. First, the incentives were only $50 per patient per drug class, were all or nothing based on the 80 percent PDC cutoff, and were paid at the level of the physician group. We do not know how physicians chose to apportion the funds (e.g., equally to all physicians or selectively to the physician who had primary responsibility for a patient). This leaves open the possibility that larger or more individually targeted incentives might have been more effective. We note, however, that the size of Medicare incentive payments to the health plan may not allow for substantially larger incentives to physicians.
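
To make the payment rule concrete, the following Python sketch computes a practice's payout under an all‐or‐nothing rule of $50 per patient per drug class at the 80 percent PDC cutoff. It is illustrative only: the data layout, the names `PatientClassOutcome` and `practice_payment`, and the assumption that a PDC of exactly 80 percent qualifies are hypothetical and do not describe the insurer's actual payment system.

```python
from dataclasses import dataclass
from typing import Iterable

# Hypothetical sketch of the incentive rule; not the study's payment code.
PAYMENT_PER_CLASS = 50.0  # dollars per patient per drug class
PDC_THRESHOLD = 0.80      # all-or-nothing cutoff (assumed inclusive)


@dataclass(frozen=True)
class PatientClassOutcome:
    patient_id: str
    drug_class: str  # e.g. "diabetes", "antihypertensive", "statin"
    pdc: float       # proportion of days covered, between 0.0 and 1.0


def practice_payment(outcomes: Iterable[PatientClassOutcome]) -> float:
    """Pay the full amount for each patient-drug class pair at or above the
    cutoff and nothing otherwise; a patient can qualify in several classes."""
    return sum(PAYMENT_PER_CLASS for o in outcomes if o.pdc >= PDC_THRESHOLD)


example = [
    PatientClassOutcome("p1", "diabetes", 0.79),         # just below: $0
    PatientClassOutcome("p1", "statin", 0.80),           # at cutoff: $50
    PatientClassOutcome("p2", "antihypertensive", 0.95), # above: $50
]
print(practice_payment(example))  # 100.0
```

Under this rule, a patient at 79 percent PDC earns the practice nothing while one at 80 percent earns the full $50, and a patient eligible in all three drug classes can generate at most $150, the broad‐incentive maximum.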

Second, we measure adherence using prescription drug claims. Claims data provide upper bounds on adherence (patients who do not fill their prescriptions can be assumed not to be taking their medication), but they do not capture differences in day‐to‐day adherence conditional on filling a prescription, nor do they capture prescriptions that are written by a physician but never filled. Our measured effects may also have been attenuated by the fact that about a third of the prescriptions in our sample are for a 90‐day supply, while our post‐treatment period is only six months long, so many patients had few opportunities to refill within that period. A longer measurement period may have given physicians more time to improve adherence. On the other hand, claims‐based measures of adherence are policy‐relevant: they are simple to implement and are actually used to compute Medicare star ratings. They are also less prone to gaming, as they do not allow patients to register greater adherence by simply opening pill bottles without taking the pills. 1
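
The attenuation argument can be illustrated with a simple PDC calculation. The Python sketch below is a minimal version under stated assumptions, not the study's or Medicare's exact algorithm: each fill is assumed to cover `days_supply` consecutive days starting on the fill date, overlapping days are counted once (no carry‐forward of early refills), coverage outside the window is ignored, and the denominator is every day in the window (some definitions instead start the period at the first fill). The function name `pdc` and the example dates are hypothetical.

```python
from datetime import date, timedelta
from typing import Iterable, Tuple


def pdc(fills: Iterable[Tuple[date, int]], start: date, end: date) -> float:
    """Proportion of days covered in the inclusive window [start, end].

    Hypothetical sketch. Assumptions: each fill covers `days_supply`
    consecutive days beginning on its fill date; overlapping days are
    counted once (no carry-forward of early refills); days outside the
    window are ignored; the denominator is every day in the window.
    """
    period_days = (end - start).days + 1
    covered = set()  # distinct calendar days with medication on hand
    for fill_date, days_supply in fills:
        for offset in range(days_supply):
            day = fill_date + timedelta(days=offset)
            if start <= day <= end:  # truncate supply at the window edges
                covered.add(day)
    return len(covered) / period_days


# Illustrative six-month window (184 days) with two 90-day fills each.
window = (date(2012, 7, 1), date(2012, 12, 31))
late_refill = [(date(2012, 7, 1), 90), (date(2012, 10, 29), 90)]
on_time = [(date(2012, 7, 1), 90), (date(2012, 9, 29), 90)]
print(round(pdc(late_refill, *window), 3))  # 0.837
print(round(pdc(on_time, *window), 3))      # 0.978
```

Here a refill that is 30 days late still yields a PDC of 154/184 (about 0.84, above the 80 percent cutoff), and refilling on time raises it only to 180/184. With 90‐day supplies, a six‐month window leaves room for only one or two refill decisions per patient.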

Third, average PDC among study patients in the first half of 2012 was relatively high. For 47.0 percent of patient‐drug class pairs, the PDC shown to physicians in the patient report (computed over 2012Q1) was at least 80 percent. Physicians may have regarded patients with higher reported PDC values as needing no intervention despite their nonadherence in 2011, which would attenuate the impact of the incentives. In addition, the high rate at which study patients “self‐cured” their low adherence may have limited the scope for the incentives to work.

Fourth, for clinical goals that partially depend on patient behavior, the effectiveness of physician incentives depends on both the degree to which they motivate physicians to act and the ability of physicians to change patient behavior. We are unable to identify which of these necessary conditions were unsatisfied in our trial.

Lastly, our study focuses on a particular environment: practices with no more than five physicians, and insurance with average copays of $12 per drug class. Our results may generalize to other settings to the extent that the responsiveness of physician effort to incentives and the feasibility of effective physician‐patient communication are similar across practice types and copay magnitudes.

6. CONCLUSIONS

We present evidence from a randomized controlled trial that paying physicians incentives of $50 per patient per drug class to increase patient medication adherence is not effective. We rule out increases in the proportion of days covered by medication larger than 4.2 percentage points. Although the incentives did not increase PDC, the number of diabetes prescriptions filled may respond to incentives, and substantially different intervention designs might elicit different responses.

Our results are in accordance with suggestions in the literature that physician incentives may be more effective when physicians more directly control the desired outcome. 11 , 26 Future studies on physician incentives should include intermediate outcomes that separately identify physician effort and patient responsiveness.

CONFLICT OF INTEREST

None.

Supporting information

Author matrix

Supplementary materials

ACKNOWLEDGMENT

Joint Acknowledgment/Disclosure Statement: We thank Jay McKnight and William Fleming at Humana for invaluable assistance in implementing the interventions. Andrew Chong, Christopher Clayton, Luca Maini, Michael Puempel, and Jung Sakong provided excellent research assistance. All communications with physicians were approved by Humana. This work was funded by the National Institute on Aging (NIA P30AG034532). The Eric M. Mindich Research Fund for the Foundations of Human Behavior and Humana also provided funding for this work. The views expressed in this article are those of the authors and not those of their respective institutions or of Humana.

Below we report any additional affiliations and payments, erring on the side of over‐disclosure.

JB has received funding from Google. JC, DL, and BM have received funding from the Social Security Administration. DL has also received funding from the American Council of Life Insurers and the Russell Sage Foundation. JK has received compensation from OODA Health. GL has received compensation from NPD Corporation. KV has received research funding from Horizon Blue Cross Blue Shield, CVS, Oscar Insurance, Vitality Institute, and Weight Watchers. He has also received consulting income from VAL Health and the National University of Singapore, and speaking fees from the Center for Corporate Innovation. He is a part‐owner of the consulting firm VAL Health.

Kong E, Beshears J, Laibson D, et al. Do physician incentives increase patient medication adherence? Health Serv Res. 2020;55:503–511. 10.1111/1475-6773.13322

REFERENCES

- 1. Osterberg L, Blaschke T. Adherence to medication. N Engl J Med. 2005;353(5):487‐497.

- 2. Cutler DM, Everett W. Thinking outside the pillbox — Medication adherence as a priority for health care reform. N Engl J Med. 2010;362(17):1553‐1555.

- 3. James J. Pay‐for‐Performance. Health Affairs Health Policy Brief; 2012. https://www.healthaffairs.org/do/10.1377/hpb20121011.90233/full/

- 4. Kuhmerker K, Hartman T; IPRO. Pay‐For‐Performance in State Medicaid Programs: A Survey of State Medicaid Directors and Programs. The Commonwealth Fund; 2007. https://www.commonwealthfund.org/publications/fund‐reports/2007/apr/pay‐performance‐state‐medicaid‐programs‐survey‐state‐medicaid. Accessed July 2, 2018.

- 5. Cotton P, Newhouse JP, Volpp KG, et al. Medicare advantage: issues, insights, and implications for the future. Popul Health Manag. 2016;19(S3):S‐1‐S‐8.

- 6. Houle SKD, McAlister FA, Jackevicius CA, Chuck AW, Tsuyuki RT. Does performance‐based remuneration for individual health care practitioners affect patient care? Ann Intern Med. 2012;157(12):889.

- 7. Mendelson A, Kondo K, Damberg C, et al. The effects of pay‐for‐performance programs on health, health care use, and processes of care. Ann Intern Med. 2017;166(5):341.

- 8. Flodgren G, Eccles MP, Shepperd S, Scott A, Parmelli E, Beyer FR. An overview of reviews evaluating the effectiveness of financial incentives in changing healthcare professional behaviours and patient outcomes. Cochrane Database Syst Rev. 2011;(7):CD009255.

- 9. Doran T, Maurer KA, Ryan AM. Impact of provider incentives on quality and value of health care. Annu Rev Public Health. 2017;38(1):449‐465.

- 10. Bardach NS, Wang JJ, De Leon SF, et al. Effect of pay‐for‐performance incentives on quality of care in small practices with electronic health records. JAMA. 2013;310(10):1051.

- 11. Petersen LA, Simpson K, Pietz K, et al. Effects of individual physician‐level and practice‐level financial incentives on hypertension care. JAMA. 2013;310(10):1042.

- 12. Roter DL, Hall JA, Merisca R, Nordstrom B, Cretin D, Svarstad B. Effectiveness of interventions to improve patient compliance: a meta‐analysis. Med Care. 1998;36(8):1138‐1161.

- 13. Zolnierek KBH, Dimatteo MR. Physician communication and patient adherence to treatment: a meta‐analysis. Med Care. 2009;47(8):826‐834.

- 14. Ho PM, Rumsfeld JS, Masoudi FA, et al. Effect of medication nonadherence on hospitalization and mortality among patients with diabetes mellitus. Arch Intern Med. 2006;166(17):1836.

- 15. Burnier M. Medication adherence and persistence as the cornerstone of effective antihypertensive therapy. Am J Hypertens. 2006;19(11):1190‐1196.

- 16. Ho PM, Bryson CL, Rumsfeld JS. Medication adherence: its importance in cardiovascular outcomes. Circulation. 2009;119(23):3028‐3035.

- 17. Schwartz L, Rhoads J. Adherence’s role in Medicare Part D star ratings. Am Pharm. 2014;28‐31. http://www.ncpa.co/pdf/the‐big‐picture‐adherence‐roles‐in‐medicare‐part‐d‐star‐ratings.pdf

- 18. Centers for Medicare & Medicaid Services. Medicare 2018 Part C & D Star Ratings Technical Notes; 2017.

- 19. L&M Policy Research LLC. Evaluation of the Medicare Quality Bonus Payment Demonstration. Washington, D.C.; 2016. https://innovation.cms.gov/Files/reports/maqbpdemonstration‐finalevalrpt.pdf. Accessed June 14, 2018.

- 20. Martin LR, Williams SL, Haskard KB, Dimatteo MR. The challenge of patient adherence. Ther Clin Risk Manag. 2005;1(3):189‐199.

- 21. Pladevall M, Williams LK, Potts LA, Divine G, Xi H, Lafata JE. Clinical outcomes and adherence to medications measured by claims data in patients with diabetes. Diabetes Care. 2004;27(12):2800‐2805.

- 22. Sacarny A, Yokum D, Finkelstein A, Agrawal S. Medicare letters to curb overprescribing of controlled substances had no detectable effect on providers. Health Aff. 2016;35(3):471‐479.

- 23. Athey S, Imbens G. The econometrics of randomized experiments. In: Handbook of Economic Field Experiments. Vol 1. North‐Holland; 2016:73‐140. 10.1016/bs.hefe.2016.10.003

- 24. Asch DA, Troxel AB, Stewart WF, et al. Effect of financial incentives to physicians, patients, or both on lipid levels. JAMA. 2015;314(18):1926.

- 25. Lesser LI, McCormack JP. Financial incentives and cholesterol levels. JAMA. 2016;315(15):1657.

- 26. Li J, Hurley J, DeCicca P, Buckley G. Physician response to pay‐for‐performance: evidence from a natural experiment. Health Econ. 2014;23(8):962‐978.
