Introduction

Thinking back to your basic research class, you may remember the instructor drumming into your head the basics of research: randomly assign subjects to intervention and control groups, and keep your experiment tightly controlled to lessen the chance of other factors affecting your results. This is the classic efficacy study, in which you want to make sure that any changes can be attributed to what you have modified in your experiment. This is what research is… Or is it?

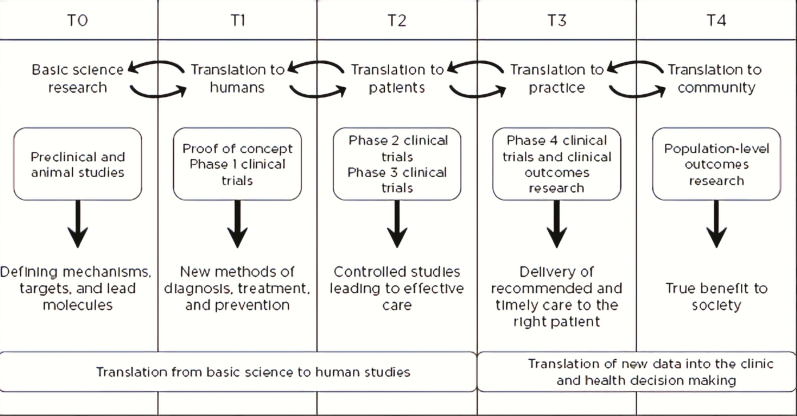

Perhaps you are now in your professional career and you notice that such experiments often translate poorly to the ‘real world’ of clinical practice. You may notice that your patients have so many different conditions that you can’t just focus on one, or that various contextual factors that you cannot control interact to make the intervention less effective in practice than in an experimental situation. You have now entered a different realm of research: pragmatic research. It is still research because you are still making hypotheses, gathering data, analysing that data and getting answers, but how it is conducted and the questions you are answering are different. In fact, roughly speaking, research lies on a continuum from highly explanatory (like an efficacy study) to highly pragmatic; most research isn’t all one or the other. Many, including the National Institutes of Health (NIH), refer to this as the continuum of translational research, from T0 to T4. Figure 1 outlines this continuum with key points for different types of research. Typically, pragmatic research is considered T3 or T4 research, which includes both effectiveness and implementation research. A related term that many consider to be pragmatic research is Dissemination and Implementation (D&I) research, which outside the United States is often called Knowledge Translation (KT). D&I or KT does include pragmatism and therefore shares much of the space with pragmatic research; however, it focuses more specifically on the effective adoption, implementation and maintenance of evidence-based interventions in practice (1). It is important to note, however, that although Figure 1 presents research as a linear progression for educational purposes, it rarely proceeds that way. For those interested, we refer to additional reading that highlights the complex, multidimensional nature of translational research (2,3).

Figure 1.

Translational research continuum: operational phases of translational research.

Source: National Academies Press. The CTSA Program at NIH: Opportunities for Advancing Clinical and Translational Research, 2013. https://www.nap.edu/read/18323/chapter/3#20. Figure was adapted with permission from Macmillan Publishers Ltd: Nature Medicine (Blumberg et al., 2012), copyright 2012.

Pragmatic research is not ‘less than’ research; it is simply ‘different than’ efficacy research. It is equally rigorous but broader and has a different purpose: it answers important questions about how an intervention can be used in actual clinical care or community settings. This is extremely important in family medicine. For example, it is useful that highly trained clinical psychologists paid by a study can demonstrate improvements in depression by delivering lengthy, controlled behavioral treatments to patients who don’t have any other medical or psychological conditions. However, it is also useful to know whether behavioral health providers in actual community family medicine clinics, working with complex patients under time and resource constraints, can deliver those same clinical improvements. The latter is pragmatic research.

Efficacy Research—answers ‘does it work in ideal circumstances?’

Pragmatic Research—answers ‘does it work in typical clinical care settings?’

How does all this square with other related terms in clinical practice such as quality improvement, evaluation and patient-centered care? First, patient-centered care (meaning the study of issues that patients find important) should be a core tenet of all research, but especially of pragmatic research. Stakeholder engagement at all levels, but in particular with patients, is a critical feature of pragmatic research because the goal is, after all, relevant results delivered in a way that is useful. We encourage readers to consider the five Rs: rapid, relevant, rigorous, resource reporting and replicable (4). Quality improvement and evaluation have a lot in common with pragmatic research. The differences lie largely in the end goal of the work, such as evaluating a program versus testing a hypothesis, and in the degree to which the results are intended to generalize beyond the specific organization studied and contribute to knowledge for the field (5–7).

Useful frameworks for pragmatic research in family medicine

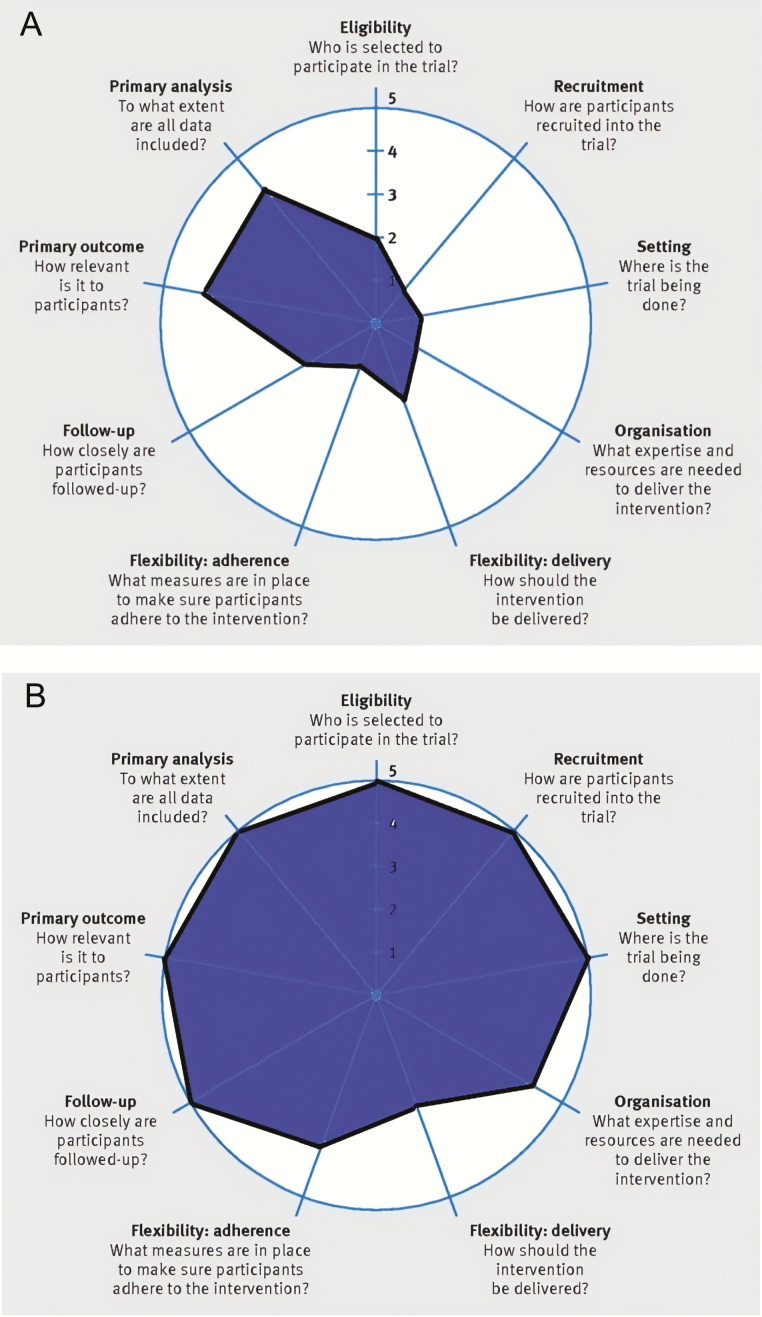

For those interested in learning more about the extent to which research is explanatory (i.e. efficacy) or pragmatic, a useful tool has been developed called the Pragmatic Explanatory Continuum Indicator Summary, or PRECIS-2 (www.precis-2.org). PRECIS-2 is a guide to nine key domains that together summarize the ways in which a study is pragmatic versus explanatory (8,9). Figure 2 depicts two examples of the PRECIS-2 ‘wheel’, where each point on the wheel represents where the study falls, from explanatory to pragmatic, on that domain. How is this helpful? Mostly, it helps to align your question, the goals of the study and the stage of the research with the methods and procedures you will use. It was designed to help clinical trialists think more carefully about the impact their design decisions would have on applicability and on what can be concluded (10). For example, if you are interested in learning whether a new screening tool effectively identifies patients with a particular condition and it has never been studied for this purpose, you might want to start with a more explanatory (efficacy) study. You would start with patients with and without the condition, as determined by a gold standard, and test the tool against this. This type of study will help you to answer to what extent the tool works for its intended health outcome for patients under ideal conditions. However, if that same tool has been studied already and has been found to be effective, but now you want to know how that tool can be used in practice, then you might consider a more pragmatic study.

Figure 2.

The PRECIS-2 ‘wheel’ completed for examples. Example 1: Explanatory study of assessment tool. Example 2: Pragmatic study of assessment tool.

How would the example studies noted directly above be helped by the PRECIS-2 tool? You would begin by considering each domain on the wheel and rating each from 1 to 5. Generally, the more pragmatic the study, the higher the score. For the screening tool example, if you wanted to study the efficacy of the tool for its ability to identify a certain group of patients (Example 1 in Figure 2), you might design a study where a research assistant identifies and recruits (recruitment) certain types of participants without any other problems or conditions than the one being studied (eligibility) and then conducts an experiment where those participants take the screening tool following a well-defined protocol (flexibility-delivery) in a highly controlled setting such as a lab (setting) with repeated, intensive follow-ups by the research assistant (follow-up). In contrast, Example 2 in Figure 2 shows a pragmatic study where medical assistants use the tool with all patients (recruitment and eligibility) in a typical clinic (setting), as the medical assistants are trained to deliver it within clinical circumstances and in the context of competing demands (flexibility-delivery).

The more pragmatic a study, the larger the resulting wheel, whereas a more explanatory study produces a smaller wheel. It is, however, common for some design decisions to make the wheel a bit ‘lopsided’, as can be seen in the two examples. Sometimes this indicates that you purposefully decided that some domains should be more or less pragmatic. The goal is not to make all dimensions completely pragmatic (or explanatory), but to make thoughtful decisions for a given project. In summary, PRECIS-2 is a useful guide to understanding how a pragmatic study and an explanatory study differ when operationalized in practice.
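The scoring described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the domain names follow the nine PRECIS-2 domains, but the function `summarize_wheel`, the `lopsided_gap` threshold and the example scores are hypothetical and are not taken from Figure 2 or from the PRECIS-2 toolkit. PRECIS-2 itself is a visual aid for design discussions, so the mean score here is just a rough stand-in for the ‘size’ of the wheel.

```python
from statistics import mean

# The nine PRECIS-2 domains; each is rated 1 (very explanatory) to 5 (very pragmatic).
PRECIS2_DOMAINS = (
    "eligibility", "recruitment", "setting", "organisation",
    "flexibility_delivery", "flexibility_adherence",
    "follow_up", "primary_outcome", "primary_analysis",
)


def summarize_wheel(scores, lopsided_gap=2):
    """Summarize a set of PRECIS-2 ratings.

    `lopsided_gap` is a hypothetical threshold: a domain is flagged when its
    rating differs from the mean rating by at least this much.
    """
    missing = set(PRECIS2_DOMAINS) - set(scores)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    for domain in PRECIS2_DOMAINS:
        if not 1 <= scores[domain] <= 5:
            raise ValueError(f"{domain} must be rated from 1 to 5")

    values = [scores[d] for d in PRECIS2_DOMAINS]
    average = mean(values)
    lopsided = [d for d in PRECIS2_DOMAINS if abs(scores[d] - average) >= lopsided_gap]
    return {"mean": average, "min": min(values), "max": max(values),
            "lopsided_domains": lopsided}


# Made-up ratings for a mostly explanatory study with one deliberately
# pragmatic domain (a patient-relevant primary outcome).
explanatory_scores = {domain: 1 for domain in PRECIS2_DOMAINS}
explanatory_scores["primary_outcome"] = 4
wheel = summarize_wheel(explanatory_scores)
print(wheel)
```

A flagged domain is not an error; it is a prompt to check that the departure from the rest of the design was a deliberate choice.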

Another framework useful in pragmatic research is RE-AIM (re-aim.org) (11–13). RE-AIM is an acronym for reach, efficacy (or effectiveness), adoption, implementation and maintenance. It is a planning and evaluation framework that helps researchers, program planners and evaluators to consider the types of outcomes important in producing population impact under real world conditions. A typical outcomes research study is most concerned with effectiveness, and sometimes reach. These are both at the patient level, and effectiveness measures are the patient-level health-related outcomes. In contrast, adoption, implementation and maintenance are implementation outcomes that refer to important issues at the setting or staff level. The reality is that even if an intervention is highly effective in producing health outcomes for patients, its benefit will not be realized if clinicians and their teams in typical care settings cannot deliver it or patients cannot receive it. Zero (adoption or reach) times something (moderate effectiveness) is still zero; thus, to produce overall impact, it is important to consider results on multiple RE-AIM elements, not just one or two (14). For those interested in a further extension of RE-AIM, the Practical, Robust Implementation and Sustainability Model (PRISM) includes the RE-AIM elements as well as the contextual factors that influence them, such as the intervention, the recipients of the intervention, the implementation and sustainability infrastructure and the external environment, and how these factors interact at the individual, organizational, system or community level (15).

Reach—The absolute number, proportion and representativeness of individuals who are willing to participate in a given initiative.

Efficacy or Effectiveness—The impact of an intervention on important outcomes, including potential negative effects, quality of life and economic outcomes.

Adoption—The absolute number, proportion and representativeness of settings and intervention agents who are willing to initiate a program.

Implementation—At the setting level, implementation refers to the intervention agents’ fidelity to the various elements of an intervention’s protocol. This includes consistency of delivery as intended and the time and cost of the intervention.

Maintenance—The extent to which a program or policy becomes institutionalized or part of the routine organizational practices and policies. Maintenance in the RE-AIM framework also has referents at the individual level. At the individual level, maintenance has been defined as the long-term effects of a program on outcomes after 6 or more months after the most recent intervention contact.
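The point that ‘zero times something is still zero’ can be made concrete with a little arithmetic. The sketch below assumes, purely for illustration, that each RE-AIM element has been expressed as a proportion between 0 and 1 (for example, the share of eligible patients reached, or the share of participants who improve) and that the elements combine multiplicatively. The function `illustrative_impact` and all of the numbers are hypothetical; this is not an official RE-AIM metric.

```python
import math

RE_AIM_ELEMENTS = ("reach", "effectiveness", "adoption", "implementation", "maintenance")


def illustrative_impact(proportions):
    """Multiply whichever RE-AIM proportions are supplied (each between 0 and 1)."""
    for name, value in proportions.items():
        if name not in RE_AIM_ELEMENTS:
            raise KeyError(f"unknown RE-AIM element: {name}")
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be a proportion between 0 and 1")
    return math.prod(proportions.values())


# A highly effective intervention that reaches no one has no impact.
effective_but_unreached = illustrative_impact({"reach": 0.0, "effectiveness": 0.9})

# A moderately effective intervention that half of settings adopt and
# half of patients receive does better at the population level.
modest_but_delivered = illustrative_impact(
    {"reach": 0.5, "effectiveness": 0.4, "adoption": 0.5}
)

print(effective_but_unreached, modest_but_delivered)
```

Under these assumptions the second scenario yields an impact of about 0.1, compared with zero for the first, even though its effectiveness is much lower.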

Methods for pragmatic research

Many clinicians are confused about how pragmatic research works. If you are not randomly assigning research participants and tightly controlling the conditions in which the experiment is happening, how do you know that the intervention is working? First, if no research has been done at all on the intervention in question, then many would advise starting with an efficacy study. There is controversy around this advice, because it is also important to design for dissemination from the start to enhance the eventual applicability and use of the results (16). The designing for dissemination approach also appreciates that innovation often starts with actual practice. Often, however, a lot of research has already been done on a new intervention, approach, guideline or treatment, and it is then appropriate to study the intervention more pragmatically. There are several ways to understand what is happening, and what effect the intervention has, in a pragmatic study. Table 1 lists considerations for pragmatic research and how pragmatic research contrasts with traditional clinical efficacy research. As can be seen, these types of research differ in their purpose, design, outcomes and analyses. A thorough explanation of the methods used in pragmatic research is beyond the scope of this paper; however, key features include careful use of mixed methods (qualitative and quantitative methods, integrated together), with data collected over time and from stakeholders at multiple levels. This way, you know what happened and why, as well as the result at the end. Overall, it is wise to appreciate that innovation, evaluation and implementation should go hand in hand.

Table 1.

Differences between pragmatic and traditional clinical efficacy trials. Used with permission from Dr. Krist.

| | Pragmatic study | Traditional clinical efficacy |
|---|---|---|
| Stakeholder involvement | Engaged in all study phases including study design, conducting the study, collecting data, interpreting results, disseminating findings | Limited engagement, often in response to investigator ideas or study subjects |
| Research design | Includes internal and external validity, design fidelity and local adaptation, real life settings and populations, contextual assessments | Focus on limiting threats to internal validity, typically uses randomized controlled trial, participants and settings typically homogenous |
| Outcomes | Reach, effectiveness, adoption, implementation, comparative effectiveness, sustainability | Efficacy, mechanism identification, component analysis |
| Measures | Brief, valid, actionable with rapid clinical utility, feasible in real world and low-resource settings | Validated measures that minimize bias, focus on internal consistency and theory rather than clinical relevance |
| Costs | Assessments include intervention costs and replication costs in relation to outcomes | Often not collected or reported |
| Data source | May include existing data (electronic health records, administrative data) and brief patient reports | Data generation and collection part of clinical trial |
| Analyses | Process and outcome analyses relevant to stakeholders and from different perspectives | Specified a priori and typically restricted to investigator hypotheses |
| Availability of findings | Rapid learning and implementation | Delay between trial completion and analytic availability |

Source: Krist et al. Designing a valid randomized pragmatic primary care implementation trial: the my own health report (MOHR) project. Implement Sci 2013; 8: 73.

Measures for pragmatic research

Pragmatic research is, in essence, practical. This means that it is conducted not only with typical and diverse types of patients, but also in usual care settings (such as a typical, resource-constrained family medicine clinic) and under usual circumstances in terms of funding, personnel and the competing demands of typical practice, such as how workflows operate and who has the knowledge and skills to do what within existing time constraints (17,18). Key considerations include the need to appeal to and address issues relevant to multiple stakeholders: patients, clinicians and teams, health systems and communities (19). Therefore, survey instruments and study procedures need to be clinically relevant (i.e. used in the practice of medicine), brief, inexpensive (i.e. not proprietary, so that users do not have to pay for them) and able to be administered and interpreted by clinical staff. Likewise, interventions need to be feasible to use in practice, or potentially usable in practice if circumstances change. For example, many patients have chronic pain, and family practices are tasked with assessing and intervening on this issue. A pragmatic study would consider a brief assessment tool that provides clinically actionable information to guide care (such as pain interference) and evaluate an intervention that could be delivered in most primary care clinics (such as coordinating referrals with physical therapy and mental health and having an opioid prescribing policy). Follow-ups with patients would take place during regular clinical visits, and data would be gleaned from a query of the electronic medical record. Results could be evaluated by assessing the percent and types of patients who participated (reach), who benefited and who did not (effectiveness), and how consistently the intervention could be delivered as intended and how it needed to be adapted (implementation).
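As a rough illustration of the reach calculation in the chronic pain example, the sketch below computes the percent and types of patients who participated from a small set of records. Everything in it is an assumption made for illustration: the records are made up, and the field names `participated` and `age_group` and the function `reach_summary` are hypothetical stand-ins for whatever an actual electronic medical record query would return.

```python
from collections import Counter


def reach_summary(records, group_key="age_group"):
    """Return overall reach and reach within each patient subgroup.

    Each record describes one eligible patient and is a dict with a boolean
    "participated" flag and a subgroup label stored under `group_key`.
    """
    if not records:
        raise ValueError("no eligible patients in the query result")
    eligible = Counter(r[group_key] for r in records)
    participants = Counter(r[group_key] for r in records if r["participated"])
    return {
        "overall": sum(participants.values()) / len(records),
        "by_group": {group: participants[group] / n for group, n in eligible.items()},
    }


# Made-up records standing in for an electronic medical record query.
records = [
    {"age_group": "18-64", "participated": True},
    {"age_group": "18-64", "participated": True},
    {"age_group": "18-64", "participated": False},
    {"age_group": "65+", "participated": False},
]
summary = reach_summary(records)
print(summary)
```

Comparing reach across subgroups, rather than reporting only the overall proportion, is one simple way to look at representativeness: here the older group is not being reached at all, which the overall figure of one half would hide.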

Resources to learn more about pragmatic research

Beyond the references included at the end of this article, there is a growing number of places to learn about pragmatic research and how it can be used to add value to family medicine. Here is a short list of places to begin. This is not an exhaustive list, especially for international audiences.

National Cancer Institute. Implementation Science at a Glance: A guide for cancer control practitioners. NIH 2019. NIH Publication Number 19-CA-8055.

RE-AIM website: www.re-aim.org

PRECIS-2 website: www.PRECIS-2.org

Brownson, RC, Colditz, G, Proctor, EK (editors). Dissemination and implementation research in health (2nd edition). Oxford Univ. Press, 2018.

University of Colorado Pragmatic Trials e-book: http://www.crispebooks.org/workbook-18OF-1845R.html

NIH Pragmatic Research Collaboratory Living Textbook: https://rethinkingclinicaltrials.org/

Conclusions and the future of pragmatic research

As more evidence-based interventions become available, it becomes increasingly important not just to study innovations, but also to examine and become skilled at implementing and disseminating these interventions. This requires a different mindset and different methods to determine what will improve adoption and successful use, especially in low-resource settings and with the populations most in need. There is considerable opportunity for growth in this area, including development of clinically meaningful, pragmatic interventions and relevant tools for assessment, as well as means to gather and analyse the data from these interventions. Progress in these areas using pragmatic research approaches will result in more rapid and relevant findings that are more directly applicable to practice.

Acknowledgements

The authors wish to thank the CASFM methods group for the opportunity to participate in the creation and publication of this article.

Declaration

Funding: There was no funding for this work.

Ethics approval: Ethics approval was not needed as there were no human subjects.

Conflicts of interest: Dr Glasgow has no declarations or conflicts. Dr Holtrop is a Senior Scientific Advisor for the Agency for Healthcare Research and Quality. The findings and conclusions in this article are those of the authors, who are responsible for its content, and do not necessarily represent the views of the Agency for Healthcare Research and Quality. No statement in this article should be construed as an official position of the Agency for Healthcare Research and Quality or of the US Department of Health and Human Services. Dr Holtrop is also a consultant with Telligen.

References

- 1. Brownson RC, Colditz G, Proctor EK. Dissemination and Implementation Research in Health: Translating Science to Practice. 2nd edn. New York: Oxford University Press, 2018.

- 2. Braithwaite J, Churruca K, Long JC, Ellis LA, Herkes J. When complexity science meets implementation science: a theoretical and empirical analysis of systems change. BMC Med 2018; 16: 63.

- 3. Laan A, Boenink L. Beyond bench and bedside: disentangling the concept of translational research. Health Care Anal 2015; 23 (1): 32–49.

- 4. Peek CJ, Glasgow RE, Stange KC, Klesges LM, Purcell EP, Kessler RS. The 5 R’s: an emerging bold standard for conducting relevant research in a changing world. Ann Fam Med 2014; 12: 447–55.

- 5. Kao L. Implementation science and quality improvement. In: Dimick JB, Greenberg CC (eds). Success in Academic Surgery: Health Services Research. London, UK: Springer, 2014, pp. 85–100.

- 6. Batalden PB, Davidoff F. What is “quality improvement” and how can it transform healthcare? Qual Saf Health Care 2007; 16: 2–3.

- 7. Health Resources and Services Administration. Quality Improvement. 2017. https://www.hrsa.gov/quality/toolbox/methodology/qualityimprovement/index.html (accessed on 2 July 2017).

- 8. Loudon K, Treweek S, Sullivan F, Donnan P, Thorpe KE, Zwarenstein M. The PRECIS-2 tool: designing trials that are fit for purpose. BMJ 2015; 350: h2147.

- 9. Gaglio B, Phillips SM, Heurtin-Roberts S, Sanchez MA, Glasgow RE. How pragmatic is it? Lessons learned using PRECIS and RE-AIM for determining pragmatic characteristics of research. Implement Sci 2014; 9: 96.

- 10. Thorpe KE, Zwarenstein M, Oxman AD et al. A pragmatic-explanatory continuum indicator summary (PRECIS): a tool to help trial designers. J Clin Epidemiol 2009; 62: 464–75.

- 11. Glasgow RE. RE-AIMing research for application: ways to improve evidence for family medicine. J Am Board Fam Med 2006; 19 (1): 11–9.

- 12. Glasgow RE, Estabrooks PE. Pragmatic applications of RE-AIM for health care initiatives in community and clinical settings. Prev Chronic Dis 2018; 15: E02.

- 13. Holtrop JS, Rabin BA, Glasgow RE. Qualitative approaches to use of the RE-AIM framework: rationale and methods. BMC Health Serv Res 2018; 18: 177.

- 14. Kessler RS, Purcell EP, Glasgow RE, Klesges LM, Benkeser RM, Peek CJ. What does it mean to “employ” the RE-AIM model? Eval Health Prof 2013; 36: 44–66.

- 15. Feldstein AC, Glasgow RE. A practical, robust implementation and sustainability model (PRISM) for integrating research findings into practice. Jt Comm J Qual Patient Saf 2008; 34: 228–43.

- 16. Chambers DA. Sharpening our focus on designing for dissemination: lessons from the SPRINT program and potential next steps for the field. Transl Behav Med 2019. July 17; 1–3. doi:10.1093/tbm/ibz102

- 17. Stange KC, Woolf SH, Gjeltema K. One minute for prevention: the power of leveraging to fulfill the promise of health behavior counseling. Am J Prev Med 2002; 22: 320–3.

- 18. Jaén CR, Stange KC, Tumiel LM, Nutting P. Missed opportunities for prevention: smoking cessation counseling and the competing demands of practice. J Fam Pract 1997; 45: 348–54.

- 19. Frank L, Basch E, Selby JV; Patient-Centered Outcomes Research Institute. The PCORI perspective on patient-centered outcomes research. JAMA 2014; 312: 1513–4.