Abstract

In recognition memory paradigms, emotional details are often recognized better than neutral ones, but at the cost of memory for peripheral details. We previously provided evidence that, when peripheral details must be recalled using central details as cues, peripheral details from emotional scenes are at least as likely to be recalled as those from neutral scenes. Here we replicated and explicated this result by implementing a mathematical modeling approach to disambiguate the influence of target type, scene emotionality, scene valence, and their interactions. After incidentally encoding scenes that included neutral backgrounds with positive, negative, or neutral foreground objects, participants showed equal or better cued recall of components from emotional scenes compared to neutral scenes. There was no evidence of emotion-based impairment in cued recall in either of two experiments, including one in which we replicated the emotion-induced memory trade-off in recognition. Mathematical model fits indicated that the emotionality of the encoded scene was the primary driver of improved cued-recall performance. Thus, even when emotion impairs recognition of peripheral components of scenes, it can preserve the ability to recall which scene components were studied together.

Keywords: emotion, memory, cued recall, association-memory, scenes

Emotional items often are remembered at the expense of surrounding contextual or background information (see Levine & Edelstein, 2009), an effect that we and others have referred to as an emotion-induced memory trade-off. This memory trade-off was initially attributed to narrowed attention at encoding (e.g., Cahill & McGaugh, 1998; Hamann, 2001), drawing on evidence that arousing stimuli can restrict resources (Dolcos et al., 2017; Easterbrook, 1959; Mather, 2007). However, accumulating evidence suggests that attentional biases are insufficient to explain memory narrowing (e.g., Christianson et al., 1991; Kim, Vossel, & Gamer, 2013; Mickley Steinmetz & Kensinger, 2013), raising the possibility that retrieval methods play a role.

We provided suggestive evidence for a role of retrieval methods (Mickley Steinmetz et al., 2016), utilizing a paradigm in which participants view scenes that include emotional or neutral objects placed on neutral backgrounds (e.g., Chipchase & Chapman, 2013; Mickley Steinmetz & Kensinger, 2013; Waring et al., 2010). Participants then were given either an object or background as a memory cue and asked to recall the other scene component. In contrast to a large literature that has revealed an emotion-induced memory trade-off when testing recognition memory, emotional object cues led to better recall of backgrounds than did neutral object cues.

We suggested that the interplay between emotion-induced processes at encoding and retrieval may help to explain this pattern. Specifically, the emotion induced by the retrieval cue itself may facilitate what is remembered, as past studies have shown (Daselaar et al., 2008; Siddiqui & Unsworth, 2011); this may intensify the difference in recognition memory between emotional and neutral cues. However, this hypothesis could not be tested in the prior study. Using a mathematical modeling approach, the present study sought to disambiguate effects of the retrieval cue from effects stemming from the emotionality of the scenes using a within-subjects design. This allowed us to examine the influences of different factors on recall: type of retrieval cue (object vs. background), emotionality of the scene (emotional vs. neutral), valence of the scene if emotional (positive vs. negative), and the interactions of these factors.

Importantly, cued recall is influenced by both item- and association-memory (Hockley & Cristi, 1996; Madan et al., 2010, 2012, 2019). If a cue is not recognized (a failure of item memory), cued recall will fail. Similarly, the target must be accessible in memory (a form of item memory) in order for the association between the cue and target to be retrieved. These item- and association-memory effects cannot be separated using behavior alone, but mathematical modeling approaches can be used to obtain estimates related to these component processes (Madan et al., 2010, 2012, 2019; Madan, 2014). Thus, in Experiment 1, we adopted a modeling approach to explicate the effects of emotion on memory for object-background associations. In Experiment 2, we further examined the effect of emotion on cued recall in relation to retrieval cue recognition. Although this modified design prevented the use of mathematical modeling, it enabled us to examine the effects of emotion on memory for object-background associations after removing the contribution of item memory failures for the cue. Experiment 2 also provided an opportunity to replicate, within a single experiment, the emotion-induced memory trade-off in recognition and the preservation of cued recall for emotional components of scenes.

To preview the results, we replicate Mickley Steinmetz et al. (2016), with better cued recall for components of emotional scenes than neutral scenes. Model fits (Experiment 1) suggest that this emotional enhancement was predominantly explained by emotionality of the scene and that facilitated processing of the retrieval cue may have played a lesser role. Experiment 2 confirmed that a preservation of cued recall for components of emotional scenes can co-occur with poorer recognition memory for backgrounds that had been presented with emotional compared to neutral objects.

Experiment 1

Method

Participants

A target sample of 30 participants was set. A power analysis indicated that 30 participants would provide 75% power to detect a moderate effect (Cohen’s d=0.50). A total of 31 participants (24 female) were tested, recruited online via social media or through paper advertisements at Boston College, and remunerated $10/hour. One male participant was excluded for not providing recall responses. Participants were native English speakers, reported no history of psychiatric or neuropsychological illness, and had normal or corrected-to-normal vision. The Boston College Institutional Review Board approved the study.

Materials

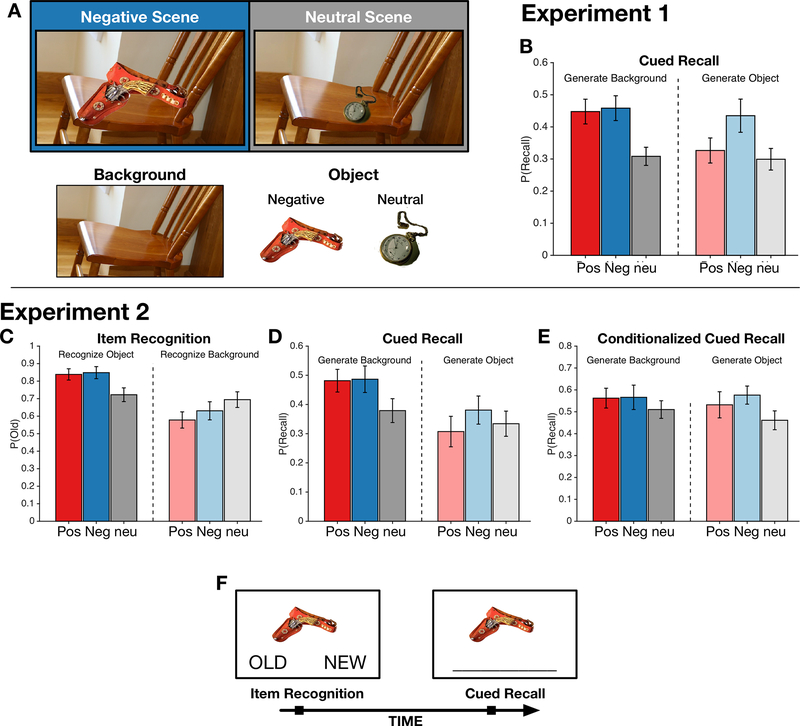

Constructed study scenes, adapted from previous studies (Mickley Steinmetz & Kensinger, 2013; Waring et al., 2010), included background pictures (e.g., lawn) overlaid with neutral (e.g., toy sled), positive (e.g., man walking a dog), or negative (e.g., crying child) objects (see Figure 1A). Neutral and emotional objects were placed in approximately the same location for each background picture and were composited to be as realistic as possible. Neutral and emotional objects were of similar proportions, and included a similar mixture of objects, animals, and people. Each background was used to create two scenes: one included a neutral object and one an emotional (positive or negative) object. Each participant saw only one version of each scene; which version (neutral or emotional object) accompanied a given background was varied across participants.

Figure 1. Task design and behavioral results.

(A) Negative and neutral scenes, constructed from a background picture with a negative or neutral object, respectively. (B,D) Cued recall performance for Experiments 1 and 2. (C) Item recognition performance and (E) cued recall performance conditionalized on item recognition for Experiment 2. (F) Illustration of retrieval procedure for Experiment 2. Error bars represent 95% confidence intervals corrected for inter-individual differences (Loftus & Masson, 1994).

Based on previous normative studies, positive and negative objects were rated as equally arousing [p>.15], and more arousing than neutral objects [p<.001]. Background pictures were rated as neutral by naïve raters, with backgrounds receiving an average score of 5.0 to 5.5 on a Likert scale (1 = extremely negative, 5 = neutral, 10 = extremely positive; Mickley Steinmetz & Kensinger, 2013).

The study was presented online. Participants were instructed to complete the study at full screen on a computer and to complete the study in one sitting, without visual or auditory distraction or outside aid. Participants reported good adherence to instructions on a compliance survey. (One individual stopped briefly to take a phone call.)

Procedure

During study, participants viewed 88 scenes (44 neutral, 44 emotional [22 positive, 22 negative]) for five seconds each and indicated whether they would Approach, Back Away, or Stay the same distance from the scene.

Following study, participants were given a surprise, self-paced cued-recall test. Participants were shown previously studied backgrounds and objects in random order; for each, they were asked to type in a short description of the item that it was paired with during study. For half the scenes, the object alone was shown; for the other half, only the background was shown. As is standard for cued-recall tests, all pictures had been previously studied; no new items were presented.

Data Analysis

Two raters scored recall responses, indicating 0 (for incorrect or absent responses), 0.5 (for vague or partially correct responses), or 1 (for correct responses). For example, if the correct item was “ballerina,” any non-vague response that could be uniquely linked to the correct item, e.g., “ballerina”, “dancer”, or “girl in tutu”, would receive a 1; “girl” would receive 0.5; a blank or unrelated answer would be scored 0. Raters’ scores were averaged for analyses, so averaged scores fell between 0 and 1, at intervals of 0.25. Scores demonstrated high inter-rater reliability [r >.8]. Across all participants and conditions, 33.1% of responses were scored as correct, 5.8% as partially correct, 48.6% as incorrect, and 12.5% contained no response.

Modeling cued recall

Mathematical modeling was used to disentangle effects of emotionality (whether or not there was any emotional content) and valence (whether the emotional content was positive or negative) on the component memory processes that contribute to cued-recall performance (based on the approach proposed in Madan et al., 2010). A constant or ‘tuning’ parameter (c) is first set to scale model fits to the mean accuracy across conditions and participants. Model variants then additionally include parameters that correspond to relative enhancements or impairments of cued-recall performance between conditions (e.g., effects of emotionality, valence, or target type). For instance, the ‘Emotionality’ parameter can be included to estimate the relative enhancement or impairment for scenes that were studied with emotional objects, either positive or negative. A parameter greater than one indicates better recall for scenes with an emotional object than those with a neutral object; a parameter below one indicates worse recall for emotional scenes.

Here we implemented the modeling based on multiplicative and nested effects (valence nested within emotionality). This modeling approach was based upon three distinct considerations: (1) The current study included positively and negatively valenced associations, as well as emotionally neutral associations. As such, the modeling was implemented to include nested effects, where valence differences (i.e., differences in memory for positive vs. negative scenes) could only be included in a model if it already allowed for influences of emotionality (i.e., differences in memory for emotional [both positive and negative] vs. neutral scenes). (2) Stimuli in the current study were scenes with foreground objects that were either positive, negative, or neutral, along with a neutral background, such that the foreground object likely received more attention than the background object, regardless of its valence (and see Chipchase & Chapman, 2013, and Mickley Steinmetz & Kensinger, 2013). For this reason, it is likely that the object and background items were not afforded the same amount of attention and possible imbalances between generating a background from an object vs. an object given the background were estimated using the target type (T) parameter, and additional parameters quantified the interaction between target type and emotionality or valence (described in more detail below). (3) Parameters were estimated in relation to mean cued recall performance across participants. These three considerations result in the set of equations listed in Table 1.

Table 1. Model equations for each recall condition.

Each row represents a recall condition, not a model variant (which are listed in Table 2). * and / symbols represent multiplication and division, respectively. Fitted parameters were as follows: c, constant; T, Target; E, Emotionality; V, Valence; Ei, Target×Emotionality; Vi, Target×Valence; also see Figure 2.

| Generate | Valence | Equation |
|---|---|---|
| Background | Neutral | c * T / E / Ei |
| Background | Positive | c * T * E * V * Ei * Vi |
| Background | Negative | c * T * E / V * Ei / Vi |
| Object | Neutral | c / T / E * Ei |
| Object | Positive | c / T * E * V / Ei / Vi |
| Object | Negative | c / T * E / V / Ei * Vi |
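The multiplicative structure of these equations can be illustrated with a minimal Python sketch. This is not the authors' code: the function name `predict`, the exponent encoding, and the numeric values in the usage line are hypothetical. The sketch assumes that T multiplies for every Background row and divides for every Object row, and that any parameter left out of a model variant is fixed at 1.

```python
# Exponent of each parameter in each recall condition:
# +1 = multiply, -1 = divide, 0 = parameter does not appear.
PARAMS = ("c", "T", "E", "V", "Ei", "Vi")
EXPONENTS = {
    ("background", "neutral"):  (1,  1, -1,  0, -1,  0),
    ("background", "positive"): (1,  1,  1,  1,  1,  1),
    ("background", "negative"): (1,  1,  1, -1,  1, -1),
    ("object", "neutral"):      (1, -1, -1,  0,  1,  0),
    ("object", "positive"):     (1, -1,  1,  1, -1, -1),
    ("object", "negative"):     (1, -1,  1, -1, -1,  1),
}


def predict(params):
    """Predicted cued-recall accuracy for each condition.

    `params` maps parameter names to values; any parameter that is
    missing is treated as 1, i.e. excluded from the model variant.
    """
    predictions = {}
    for condition, exponents in EXPONENTS.items():
        value = 1.0
        for name, exponent in zip(PARAMS, exponents):
            value *= params.get(name, 1.0) ** exponent
        predictions[condition] = value
    return predictions


# Hypothetical values for a cE-style variant (constant plus Emotionality).
example = predict({"c": 0.4, "E": 1.2})
```

With only c and E supplied, both emotional conditions receive the same prediction (c * E) and the neutral condition receives c / E, regardless of target type.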

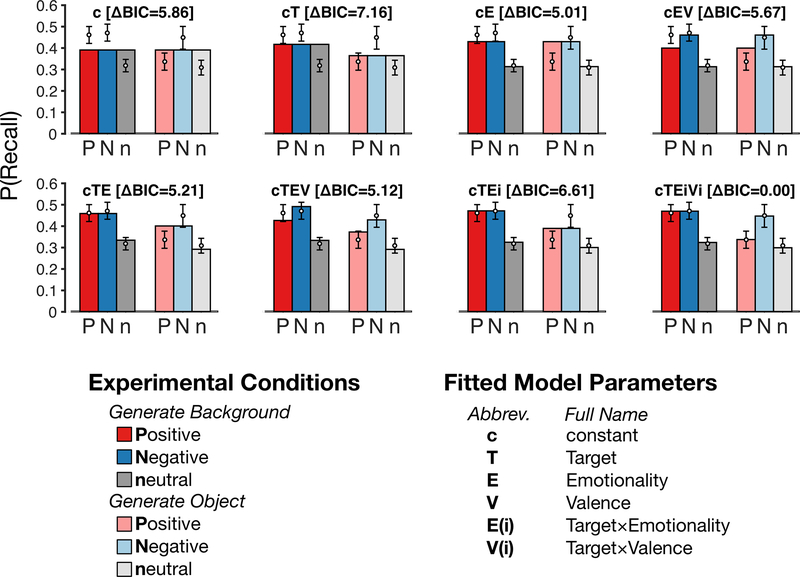

Model variants were formally assessed via Bayesian Information Criterion (BIC), which includes a penalty based on the number of free parameters. Smaller BIC values correspond to better model fits. As absolute BIC values are unitless and intended to compare the relative fit between different models, here we report ΔBIC values based on comparisons between each model and the best fitting model (Burnham & Anderson, 2002, 2004; Farrell & Lewandowsky, 2018). By convention, two models are considered equivalent if ΔBIC < 2 (Burnham & Anderson, 2002, 2004). To complement this relative measure, we also report R², which provides an absolute measure of the amount of variance explained in the behavioural data.
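As a rough illustration of how such fit statistics can be computed, the sketch below uses one common least-squares form of BIC, n·ln(RSS/n) + k·ln(n). The text does not state which form of BIC was used, so this formula, the function names, and the small floor applied to the residual sum of squares are all assumptions; the sketch is not expected to reproduce the reported values.

```python
import math


def residual_sum_of_squares(observed, predicted):
    """Sum of squared differences between observed and predicted values."""
    return sum((o - p) ** 2 for o, p in zip(observed, predicted))


def bic_least_squares(observed, predicted, n_params, floor=1e-12):
    """BIC under a Gaussian least-squares assumption.

    A perfect fit gives RSS = 0, for which the log is undefined, so the
    RSS is floored at a small positive value (an arbitrary choice here).
    """
    n = len(observed)
    rss = max(residual_sum_of_squares(observed, predicted), floor)
    return n * math.log(rss / n) + n_params * math.log(n)


def delta_bic(bic_by_model):
    """Difference between each model's BIC and the smallest BIC."""
    best = min(bic_by_model.values())
    return {name: value - best for name, value in bic_by_model.items()}


def r_squared(observed, predicted):
    """Proportion of variance in the observed values explained by the fit."""
    mean = sum(observed) / len(observed)
    total = sum((o - mean) ** 2 for o in observed)
    return 1.0 - residual_sum_of_squares(observed, predicted) / total
```

Under this form, each additional free parameter adds ln(n) to the BIC, so a richer variant is preferred only if it reduces the residual error enough to offset that penalty.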

Eight model variants were used to compare the relative contributions of main effects of emotionality (model parameter: E), valence (V), and target type (T), as well as their interactions (Ei, Vi), to cued-recall performance. Interaction terms were only considered when the relevant main effects were also included.

(1) Model c only included the constant parameter and thus had only one free parameter. This model was constrained to have the same recall performance across the six experimental conditions (see Figure 2) and would be expected to fit the data poorly, but serves as a baseline for the subsequent model variants. All subsequent models included the constant parameter as well as at least one model parameter.

(2) Model cT included a parameter to account for differences in recall related to the cued recall target being either a background or object, but would not account for any differences related to emotionality, as shown in Figure 2.

(3) Model cE included a parameter related to the presence of an emotional object (i.e., emotionality), either positive or negative, relative to scenes that were wholly neutral; however, this model variant ignores any effects of the target type or valence.

(4) Model cEV adds to the previous model by additionally including a parameter related to an influence of scene valence (i.e., differences in recall for scenes that had positive vs. negative objects), though did not account for effects of target type. Both cE and cEV correspond to effects of emotionality on the associations themselves, and would not be influenced by the possibility of emotional objects potentially being better memory cues or targets.

(5) Model cTE includes parameters for both the cued recall target and emotionality, but does not include their interaction or effects of valence.

(6, 7) Models cTEV and cTEi include either the effect of valence or the interaction of Target and Emotionality, respectively, but not both.

(8) Model cTEiVi includes all considered effects: effects of Target, Emotionality, and Valence, as well as the interactions of Target×Emotionality and Target×Valence. However, in including all of these model parameters, this variant now incorporates six free parameters to explain six experimental conditions and is thus a fully saturated model. This model variant will achieve a perfect fit to the behavioral data, though it is also penalized in the model fitness (BIC) for containing more free parameters than other model variants. Nonetheless, the confidence intervals for the fitted parameters can yield useful information.

Figure 2. Modeling of cued recall performance from Experiment 1, with each of the model variants.

White circles and error bars represent the actual behavioral data (see Figure 1B). Titles for each panel denote the model variant displayed; the number of letters in the model variant name indicates the number of free parameters (see Table 2). Bars show the predicted cued recall performance for the best-fitting model parameters. ‘P’, ‘N’, and ‘n’ correspond to scenes with a positive, negative, or neutral object, respectively. The left side of each panel displays performance when the cued recall target was the background; the right side displays performance when the cued recall target was the object (as in Figure 1B).

For the cued recall modeling, fitted model parameters were solved using the system of equations shown in Table 1. For a given model variant, parameters not fit were set to 1. Model fits are reported in Table 2. In addition, 95% confidence intervals for parameters were calculated by obtaining the mean performance for each condition across participants via boot-strapping across 10,000 iterations and are reported in Table 2.
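One way to picture this procedure is sketched below in Python with NumPy. It assumes that the six multiplicative equations are solved exactly by taking logarithms (turning products into sums), that T divides in all Object conditions, and that participants are resampled with replacement for a percentile bootstrap. The names `DESIGN`, `solve_parameters`, and `bootstrap_ci`, and the layout of the `scores` array, are hypothetical; the source does not describe the implementation at this level of detail.

```python
import numpy as np

# Rows: Background-Neutral, Background-Positive, Background-Negative,
#       Object-Neutral, Object-Positive, Object-Negative.
# Columns: exponents of c, T, E, V, Ei, Vi (+1 multiply, -1 divide, 0 absent).
DESIGN = np.array([
    [1,  1, -1,  0, -1,  0],
    [1,  1,  1,  1,  1,  1],
    [1,  1,  1, -1,  1, -1],
    [1, -1, -1,  0,  1,  0],
    [1, -1,  1,  1, -1, -1],
    [1, -1,  1, -1, -1,  1],
], dtype=float)


def solve_parameters(condition_means):
    """Solve the six equations for (c, T, E, V, Ei, Vi).

    Taking logs makes the system linear: DESIGN @ log(params) = log(means).
    All condition means must be strictly positive.
    """
    log_params = np.linalg.solve(DESIGN, np.log(condition_means))
    return np.exp(log_params)


def bootstrap_ci(scores, n_iter=10_000, seed=0):
    """Percentile 95% CIs for each parameter.

    `scores` has shape (participants, 6), one column per condition in the
    row order of DESIGN. Participants are resampled with replacement.
    """
    rng = np.random.default_rng(seed)
    n_participants = scores.shape[0]
    draws = np.empty((n_iter, DESIGN.shape[1]))
    for i in range(n_iter):
        resampled = scores[rng.integers(0, n_participants, size=n_participants)]
        draws[i] = solve_parameters(resampled.mean(axis=0))
    return np.percentile(draws, [2.5, 97.5], axis=0)
```

A parameter whose interval excludes 1 would then be read as a reliable multiplicative effect, while an interval spanning 1 is compatible with no effect.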

Table 2. Model variant fitness and best-fitting parameters.

Model variants were named as an abbreviation of the parameters included; the number of letters in the model name corresponds to the number of parameters included in the model variant. Model variant names are abbreviated as follows: ‘c’ denotes the inclusion of the constant parameter to calibrate the model parameters to the mean behavioral performance (included in all model variants); ‘T’ denotes the inclusion of a parameter related to the type of ‘Target’ item being generated (either object or background); ‘E’ denotes the inclusion of an ‘Emotion’ parameter that influenced associations including either positive or negative objects; ‘V’ denotes the inclusion of a ‘Valence’ parameter that corresponded to the influence of positive as compared to negative objects; ‘i’ denotes the inclusion of an interaction term between the preceding letter and Target, where the effect of the other parameter is not constrained to be equivalent across the two levels of Target. * denotes that the 95% CI significantly differs from 1. ΔBIC values shown in bold denote that model variants do not explain the data sufficiently better than the model with ΔBIC=0 (i.e., the best fitting model). Due to the multiplicative nature of the modeling, fitted model parameters are the same for all model variants; when a parameter is not included in a model, it is set to 1. R² is additionally included as a measure of overall fitness, i.e., amount of variability explained.

| Model Variant | ΔBIC | ΔBIC without saturated model | No. Parameters | R² |
|---|---|---|---|---|
| c | 5.86 | 0.85 | 1 | .000 |
| cT | 7.16 | 2.15 | 2 | .140 |
| cE | 5.01 | 0.00 | 2 | .603 |
| cEV | 5.67 | 0.65 | 3 | .729 |
| cTE | 5.21 | 0.20 | 3 | .763 |
| cTEV | 5.12 | 0.11 | 4 | .874 |
| cTEi | 6.61 | 1.59 | 4 | .786 |
| cTEiVi (saturated) | 0.00 | -- | 6 | .994 |

| Fitted Model Parameter (Abbrev.) | Full Name | 95% Confidence Interval |
|---|---|---|
| T | Target | [1.01, 1.13] * |
| E | Emotion | [1.11, 1.23] * |
| V | Valence | [0.89, 0.97] * |
| (E)i | Target×Emotion | [0.99, 1.07] |
| (V)i | Target×Valence | [1.01, 1.15] * |

The modeling approach described here is generally consistent with our prior mathematical modeling of cued recall (i.e., Madan et al., 2010, 2012, 2019; Madan, 2014); however, here we extended this modeling to accommodate (1) the nesting of factors (i.e., for modeling both emotionality and nested valence effects) and (2) non-equivalent types of items (i.e., central and peripheral items). Additionally, here we (3) re-parameterised the ratios such that they more directly reflect relative influences of item properties. For instance, here modeling of accuracy involves multiplying by parameter E for emotional scenes, but dividing by E for neutral scenes (see Table 1). In our previous modeling, we would multiply by parameter E for emotional scenes, but accuracy for neutral scenes would not depend on the parameter (as in the ratios listed in Madan et al., 2012, p. 702).

Results & Discussion

A Target (object, background) by Scene Valence (Positive, Negative, neutral) ANOVA was conducted on cued-recall performance (see Figure 1B). There was a significant effect of Target, F(1,29)=6.38, p=.017, ηp²=.180: backgrounds [M±SD=0.405±0.066] were more easily generated than objects [M=0.354±0.072; t(29)=2.53, p=.017]. In other words, objects served as better cues than backgrounds. There was also a significant effect of Valence, F(2,58)=24.17, p<.001, ηp²=.455: Components from negative [M=0.447±0.191] scenes were more likely to prompt memory than components from neutral scenes [M=0.304±0.151; t(29)=6.23, p<.001] or positive scenes [M=0.387±0.173; t(29)=3.03, p<.001]; components from positive scenes were also more likely to prompt memory than components from neutral scenes [t(29)=4.34, p<.001].

These effects were qualified by a Target×Valence interaction, F(2,58)=4.14, p=.021, ηp²=.125. When generating backgrounds, participants were more likely to generate backgrounds given a positive or negative cue as compared to a neutral cue [Positive: t(29)=5.82, p<.001; Negative: t(29)=5.64, p<.001]. Participants also were more likely to be able to generate a negative object as compared to a positive or neutral object [Positive: t(29)=3.31, p=.003; neutral: t(29)=3.75, p<.001]. In examining cued-recall differences related to generating backgrounds vs. objects, participants were more easily able to generate backgrounds than objects for positive scenes [t(29)=3.61, p<.001, d=0.66]; cued recall did not differ in relation to target type for the negative [t(29)=0.60, p=.55, d=0.11] or neutral scenes [t(29)=0.47, p=.65, d=0.09].

Modeling cued recall

When considering all model variants, the best-fitting model was cTEiVi, which included all factors and interactions, reflecting the significant influence of nearly all fitted model parameters (see Table 2, lower portion). Though this model is saturated (i.e., as many fitted parameters as conditions), it provides useful information in the confidence intervals for the parameters. These intervals indicate that all effects were relevant to recall and that the influence of these effects was relatively similar in magnitude.

Comparisons excluding the saturated model indicated that the remaining models performed similarly (see Table 2, upper portion). However, the main effect of Emotionality had the most pronounced effect, and the presence of Emotionality and Valence explained performance well. The inclusion of Target (i.e., difference in recall related to generation of object vs. background) contributed the least to overall model fit, indicating that recalling an object vs. background had a small effect. This pattern suggests that facilitated processing of emotional retrieval cues was unlikely to be the dominant factor (as this would have led to a large Emotionality×Target interaction); instead, emotionality of the scene was the primary influence on cued recall. However, the Valence×Target parameter was present, indicating that valence influences cued recall performance directionally. In other words, the valence of the foreground objects influenced participants’ ability to generate the backgrounds to a different (greater) extent than the backgrounds cued memory for those valenced objects.

Experiment 2

Although Experiment 1 could rule out a hypothesis put forth in a prior paper—that preserved cued recall stemmed from emotional cues facilitating recall—it could not isolate why cues from emotional scenes were better at evoking associative recall. Cues from emotional scenes could lead to higher recall rates because (a) emotional scenes forged a stronger bond between the object and background, or (b) cues from emotional scenes were more likely to be remembered than neutral cues.

Experiment 1 and its accompanying modeling demonstrated that emotionality, valence, and target type all were relevant to cued recall performance. Because this finding is an outcome of the modeling approach, it rests partially on inferences inherent to that approach. To obtain a complementary source of evidence and validate the model, we conducted a second experiment using a more complex behavioural task. The retrieval task in this experiment used a modified cued-recall test in which participants first provided explicit recognition decisions for retrieval cues, marking each item as “old” or “new.” Participants then recalled associated targets only if cues were recognized. This provided us with overall cued-recall performance, as before, and also the cued-recall success given that the cue was recognized. Obtaining both measures, we were able to test for the emotion-induced memory trade-off in recognition and to directly observe the correspondence between item recognition and cued recall. In this way, we were able to get multiple sources of memory information from the same trial, allowing us to examine whether the preservation of cued recall for emotional scenes existed even when the responses were conditionalized for item memory.

Method

Participants

Data were collected for 27 participants (22 female), with recruitment and consent procedures identical to Experiment 1. Due to a computer error, data from 3 more participants were not collected.

Materials and Procedure

The materials and procedure were the same as in Experiment 1, with the exception that participants were given a modified cued-recall test in which they first indicated “old” if they recognized the cue and “new” if they did not. Only if the item was recognized as “old” were they asked to describe the associated item (see Fig. 1F). As in Experiment 1, all cues had been studied; thus, all “new” responses were misses.

Data Analysis

Cued-recall accuracy was computed in the same manner as in Experiment 1. Scores demonstrated high inter-rater reliability [r >.8]. Cued recall accuracy was computed both for all items (i.e., cues rated as ‘new’ were scored as 0) and conditionalized for successful recognition (i.e., trials on which cues were rated as ‘new’ were excluded).
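The two accuracy measures differ only in how trials with unrecognized cues are handled, which a small Python sketch can make concrete. The function name, the trial representation (a pair of recognition response and averaged recall score), and the example trials are hypothetical and chosen purely for illustration.

```python
def cued_recall_measures(trials):
    """Return (overall, conditionalized) cued-recall accuracy.

    `trials` is a list of (cue_called_old, recall_score) pairs, where
    recall_score is the averaged rater score for that trial.
    - Overall: cues called "new" contribute a score of 0.
    - Conditionalized: cues called "new" are excluded entirely.
    """
    overall_scores = [score if called_old else 0.0 for called_old, score in trials]
    overall = sum(overall_scores) / len(trials)

    recognized = [score for called_old, score in trials if called_old]
    # With no recognized cues the conditionalized measure is undefined.
    conditionalized = sum(recognized) / len(recognized) if recognized else float("nan")
    return overall, conditionalized


# Hypothetical participant: three cues recognized, one missed.
example_trials = [(True, 1.0), (True, 0.5), (False, 0.0), (True, 0.0)]
overall, conditionalized = cued_recall_measures(example_trials)
```

Because missed cues lower the overall measure but are dropped from the conditionalized one, the conditionalized accuracy can never be lower than the overall accuracy for the same set of trials.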

Results & Discussion

An Item Type (object, background) by Scene Valence (Positive, Negative, neutral) ANOVA was conducted on recognition performance (Figure 1C). There was a significant effect of Item Type, F(1,26)=48.30, p<.001, ηp²=.650, such that objects [M±SD=0.803±0.120] were more easily recognized than backgrounds [M=0.634±0.143; t(26)=6.87, p<.001]. The effect of Valence was not significant, F(2,52)=1.16, p=.32, ηp²=.043, but the interaction was significant, F(2,52)=20.6, p<.001, ηp²=.442. Post-hoc t-tests indicated better recognition for emotional than neutral objects [Positive: t(26)=4.40, p<.001; Negative: t(26)=5.47, p<.001]. In contrast, memory was better for backgrounds from neutral than emotional scenes [Positive: t(26)=3.15, p=.004; Negative: t(26)=1.75, p=.092]. Thus, these findings replicated the emotion-induced memory trade-off in recognition.

A Target (object, background) by Scene Valence (Positive, Negative, neutral) ANOVA was conducted on cued-recall performance (see Figure 1D). As in Experiment 1, there was a significant effect of Target, F(1,26)=21.20, p<.001, ηp²=.449: backgrounds [M=0.449±0.162] were more easily generated than objects [M=0.341±0.154; t(26)=4.60, p<.001]. In other words, objects served as better cues than backgrounds. There was also a significant effect of Valence, F(2,52)=4.66, p=.014, ηp²=.152, where components from negative [M=0.434±0.176] scenes were more likely to prompt memory than components from neutral scenes [M=0.357±0.158; t(26)=3.15, p<.001]. These main effects were qualified by a Target×Valence interaction, F(2,52)=3.95, p=.025, ηp²=.132, again replicating Experiment 1. When generating backgrounds, participants were more likely to generate backgrounds given a positive or negative cue as compared to a neutral cue [Positive: t(26)=3.65, p<.001; Negative: t(26)=3.22, p<.001]. When generating objects, there were no differences in performance related to valence [all p’s>.05]. Thus, emotionality affected cued recall performance directionally, with emotional foreground objects leading to better generation of backgrounds than vice versa.

Cued recall conditionalized for successful recognition is shown in Figure 1E. A Target by Scene Valence ANOVA found a significant effect of Valence, F(2,52)=6.50, p=.003, ηp²=.200, with better performance for positive [M=0.547±0.176; t(26)=2.51, p=.045] and negative [M=0.571±0.162; t(26)=3.50, p=.003] than neutral scenes [M=0.486±0.171]. Neither the main effect of Target nor the interaction was significant [p’s>.05]. Thus, when only considering cues that were successfully recognized, emotionality led to better recall regardless of target type. One goal was to clarify whether increased recall from emotional scenes was due to strengthened association-memory or simply to participants being more likely to remember cues from emotional scenes. These results rule out the second proposition. Even when cue recognition was controlled, cues from emotional scenes were more likely to evoke memory for their targets than those from neutral scenes. If anything, the effect of emotion was strengthened as there was no interaction with target, suggesting that both object and background cues from emotional scenes were better at evoking recall targets than cues from neutral scenes.

In sum, participants simultaneously demonstrated the emotion-induced memory trade-off, while performing better at generating backgrounds for emotional scenes—a directional effect of emotion. This was further corroborated by the conditionalized cued-recall analysis, which directly accounted for contingencies between item recognition and cued recall.

General Discussion

After viewing scenes that included emotional objects placed on neutral backgrounds, item-recognition and cued-recall tests produced opposite results. Recognition tests revealed an emotion-induced memory trade-off: enhanced memory for emotional objects, and decreased memory for their backgrounds. However, cued-recall tests showed that backgrounds served as better cues for emotional objects than neutral objects, especially for negative objects, and that backgrounds were more likely to be recalled when cued with emotional objects compared to neutral objects. These results generally replicated those of Mickley Steinmetz et al. (2016), but shed new light on the influence of emotion on associative memory.

When the results of our prior experiment (Mickley Steinmetz et al., 2016) revealed that emotional cues enhanced memory for backgrounds, we suggested that this might be because the emotional valence of the cue may enhance retrieval processes. This speculation was based on past studies indicating that emotion can facilitate retrieval (Daselaar et al., 2008; Siddiqui & Unsworth, 2011) and would have been revealed in the present modeling analysis as an interactive effect of Target and Emotion. However, the modeling suggests that this speculation was not correct. In the model, the Emotion parameter had the strongest effect. One can think of this Emotion parameter as being related to the emotionality of the entire studied scene (object and background), rather than being related to either of these individual components (which would have instead manifested as the aforementioned interaction).

The results suggest that the associative nature of the cued-recall task is important. When a participant sees each object and background element separately in a recognition test, they do not have to recall the association. The cued-recall test, on the other hand, requires the association to be made. Under these associative conditions, emotion can facilitate memory. In both experiments presented here, all memory cues were old items. While this is common for cued recall studies, this was also true for the multi-step procedure of Experiment 2 which first asked participants to make an item-recognition judgment. As such, it is possible that emotionality may have shifted the response criterion here. Nonetheless, the intention of this procedural change for Experiment 2 was to distinguish item-recognition failure from a failure to recall the associate. To investigate the influence of including only “old” items on associative memory, future studies could examine the specificity of memory by adding in related new items or an alternate multi-step associative recognition procedure (e.g., see Madan et al., 2017) that probes associative memory performance even after a recall failure.

The finding that emotion enhanced association-memory stands in contrast to prior studies using paired-associates tasks, which have found that the presence of a negative item impairs cued recall (e.g., Caplan et al., 2019; Madan et al., 2012, 2017; Mao et al., 2017; Rimmele et al., 2011; Touryan et al., 2007). The current study instead found that negative items led to enhanced memory for the associated target. However, a key difference may be the relation between the paired stimuli. In prior studies, arbitrary items were presented as a pair; in the current study, the objects were congruent or meaningfully related with the background (i.e., it made sense for the object to appear in the scene based on prior semantic knowledge) and were presented as a unified scene. There has been little work on the effects of emotion on associative memory for meaningful vs. arbitrary associations, and the present results suggest the intriguing possibility that the way emotion affects associative memory may differ depending on this factor (broadly consistent with the object-based framework of Mather, 2007; also see Chiu et al., 2013). There is evidence that meaningful associations are better remembered than arbitrary associations for neutral information (e.g., Amer et al., 2018, in press; Atienza et al., 2011; Castel, 2005; Ngo & Lloyd, 2016), but it is unclear if this effect would interact with emotion. Related to this, prior studies often present the to-be-associated items as distinct items, whereas our scenes were integrated composites of the two items. As such, it is possible that the associations in our scenes were easier to unitize than those in others’ paradigms (see Ahmad & Hockley, 2014; Madan et al., 2017; Murray & Kensinger, 2013). Future research will be needed to investigate these possibilities.

In addition, because Experiment 2 included only “old” items in the recognition memory test, participants’ response criterion may have shifted. Differences in response criterion may relate to the ability to retrieve associative detail, which would be an interesting question to examine in future studies.

The current study reveals an important boundary condition on emotion-induced memory trade-offs. When remembering the context in which an object appeared, emotional memory may particularly suffer when recognition assessments are used. Emotion appears to simultaneously impair the ability to recognize peripheral scene components while preserving the ability to recall the verbal labels for these components when cued with the emotional object. Indeed, when cued recall assessments are used, individuals can be even more likely to recall one component of a scene when cued with another when that scene is emotional rather than neutral.

References

- Ahmad FN, & Hockley WE (2014). The role of familiarity in associative recognition of unitized compound word pairs. Quarterly Journal of Experimental Psychology, 67, 2301–2324.

- Amer T, Giovanello KS, Grady CL, & Hasher L (2018). Age differences in memory for meaningful and arbitrary associations: A memory retrieval account. Psychology and Aging, 33, 74–81.

- Amer T, Giovanello KS, Nichol DR, Hasher L, & Grady CL (in press). Neural correlates of enhanced memory for meaningful associations with age. Cerebral Cortex.

- Atienza M, Crespo-Garcia M, & Cantero JL (2011). Semantic congruence enhances memory of episodic associations: Role of theta oscillations. Journal of Cognitive Neuroscience, 23, 75–90.

- Burnham KP, & Anderson DR (2002). Model selection and multimodel inference (2nd ed.). New York: Springer-Verlag.

- Burnham KP, & Anderson DR (2004). Multimodel inference: Understanding AIC and BIC in model selection. Sociological Methods & Research, 33, 261–304.

- Cahill L, & McGaugh JL (1998). Mechanisms of emotional arousal and lasting declarative memory. Trends in Neurosciences, 21, 294–299.

- Caplan JB, Sommer T, Madan CR, & Fujiwara E (2019). Reduced association-memory for negative information: Impact of confidence and interactive imagery during study. Cognition and Emotion, 33, 1745–1753.

- Castel AD (2005). Memory for grocery prices in younger and older adults: The role of schematic support. Psychology and Aging, 20, 718–721.

- Chipchase SY, & Chapman P (2013). Trade-offs in visual attention and the enhancement of memory specificity for positive and negative emotional stimuli. Quarterly Journal of Experimental Psychology, 66, 277–298.

- Chiu Y-C, Dolcos F, Gonsalves BD, & Cohen NJ (2013). On opposing effects of emotion on contextual or relational memory. Frontiers in Psychology, 4, 103.

- Christianson SÅ, Loftus EF, Hoffman H, & Loftus GR (1991). Eye fixations and memory for emotional events. Journal of Experimental Psychology: Learning, Memory, and Cognition, 17, 693–701.

- Daselaar SM, Rice HJ, Greenberg DL, Cabeza R, LaBar KS, & Rubin DC (2008). The spatiotemporal dynamics of autobiographical memory: Neural correlates of recall, emotional intensity, and reliving. Cerebral Cortex, 18, 217–229.

- Dolcos F, Katsumi Y, Weymer M, Moore M, Tsukiura T, & Dolcos S (2017). Emerging directions in emotional episodic memory. Frontiers in Psychology, 8, 1867.

- Easterbrook JA (1959). The effect of emotion on cue utilization and the organization of behavior. Psychological Review, 66, 183–201.

- Farrell S, & Lewandowsky S (2018). Computational modeling of cognition and behavior. Cambridge: Cambridge University Press.

- Hamann S (2001). Cognitive and neural mechanisms of emotional memory. Trends in Cognitive Sciences, 5, 394–400.

- Hockley WE, & Cristi C (1996). Tests of encoding tradeoffs between item and associative information. Memory & Cognition, 24, 202–216.

- Kim JSC, Vossel G, & Gamer M (2013). Effects of emotional context on memory for details: The role of attention. PLOS ONE, 8, e77405.

- Levine LJ, & Edelstein RS (2009). Emotion and memory narrowing: A review and goal relevance approach. Cognition and Emotion, 23, 833–875.

- Loftus GR, & Masson MEJ (1994). Using confidence intervals in within-subject designs. Psychonomic Bulletin & Review, 1, 476–490.

- Madan CR (2014). Manipulability impairs association-memory: Revisiting effects of incidental motor processing on verbal paired-associates. Acta Psychologica, 149, 45–51.

- Madan CR, Caplan JB, Lau CSM, & Fujiwara E (2012). Emotional arousal does not enhance association-memory. Journal of Memory and Language, 66, 695–716.

- Madan CR, Fujiwara E, Caplan JB, & Sommer T (2017). Emotional arousal impairs association-memory: Roles of amygdala and hippocampus. NeuroImage, 156, 14–28.

- Madan CR, Glaholt MG, & Caplan JB (2010). The influence of item properties on association-memory. Journal of Memory and Language, 63, 46–63.

- Madan CR, Scott SME, & Kensinger EA (2019). Positive emotion enhances association-memory. Emotion, 19, 733–740.

- Mao WB, An S, & Yang XF (2017). The effects of goal relevance and perceptual features on emotional items and associative memory. Frontiers in Psychology, 8, 1223.

- Mather M (2007). Emotional arousal and memory binding: An object-based framework. Perspectives on Psychological Science, 2, 33–52.

- Mickley Steinmetz KR, & Kensinger EA (2013). The emotion-induced memory trade-off: More than an effect of overt attention? Memory & Cognition, 41, 69–81.

- Mickley Steinmetz KR, Knight AG, & Kensinger EA (2016). Neutral details associated with emotional events are encoded: Evidence from a cued recall paradigm. Cognition and Emotion, 30, 1352–1360.

- Murray BD, & Kensinger EA (2013). A review of the neural and behavioral consequences for unitizing emotional and neutral information. Frontiers in Behavioral Neuroscience, 7, 42.

- Ngo CT, & Lloyd ME (2016). Familiarity influences on direct and indirect associative memory for objects in scenes. Quarterly Journal of Experimental Psychology, 71, 471–482.

- Rimmele U, Davachi L, Petrov R, Dougal S, & Phelps EA (2011). Emotion enhances the subjective feeling of remembering, despite lower accuracy for contextual details. Emotion, 11, 553–562.

- Siddiqui AP, & Unsworth N (2011). Investigating the role of emotion during the search process in free recall. Memory & Cognition, 39, 1387–1400.

- Touryan SR, Marian DE, & Shimamura AP (2007). Effect of negative emotional pictures on associative memory for peripheral information. Memory, 15, 154–166.

- Waring JD, Payne JD, Schacter DL, & Kensinger EA (2010). Impact of individual differences upon emotion-induced memory trade-offs. Cognition and Emotion, 24, 150–167.