Abstract

We present a model for redesigning the laboratory curriculum in Introductory Organismal Biology to increase opportunities for meaningful inquiry and to increase student recognition of their scientific skill development. We created scaffolded modules and assignments to allow students to build and practice key skills in experimental design, data analysis, and scientific writing. Using the Tool for Interrelated Experimental Design, we showed significantly higher gains in experimental design scores in the redesigned course and a more consistent pattern of gains across a range of initial student scores compared with the original format. Students who completed the redesigned course rated themselves significantly higher in experimental design, data collection, and data analysis skills compared with students in the original format. Scores on the Laboratory Course Assessment Survey were high for both formats and did not significantly differ. However, on written course evaluations, students in the redesigned course were more likely to report that they engaged in “real science” and their “own experiments.” They also showed increased recognition of their specific analytical and writing skill development. Our results demonstrate that intentional, scaffolded instruction using inquiry modules can increase experimental design skills and sense of scientific ability in an introductory biology course.

INTRODUCTION

As undergraduate biology educators, we are called on to cultivate students’ scientific literacy by engaging them in the process of science and training them in competencies such as quantitative reasoning and science communication (1). Involving students in scientific research supports the development of students’ identity as scientists [reviewed in (2)], which has been linked to increased persistence of undergraduates in STEM (3). Development of science identity involves working with others in a scientific context, being socialized into the norms of scientific practices, and internalizing a desire to become a “science person” (4). Students’ sense of self-efficacy in science, a belief in one’s ability to complete tasks within the field, is a critical mediator between involvement in research and effective development of their scientist identity (2).

Introductory laboratory courses serve as a key curricular opportunity to cultivate self-efficacy and science identities. Undergraduates enter introductory science courses with a range of research experiences and quantitative skills. The experience and feedback gained during an introductory biology course is a more important determinant of sense of self-efficacy in undergraduate STEM than high school biology and chemistry preparation (5). Instructional approaches that reduce cognitive load by providing scaffolded guidance to students throughout the learning process are efficient and effective, particularly at the introductory level, when most undergraduates do not have substantial prior knowledge of the processes of science (6). Students who receive guided instruction in science process skills early in their undergraduate careers demonstrate better content acquisition and interdisciplinary ways of knowing (7, 8). When skill development was scaffolded and reinforced through progressive course assignments in an introductory course, students showed significant gains in experimental design and graphing ability and they had higher scores in subsequent required biology courses compared with peers who did not receive scaffolded instruction (9). Integration of interactive online science process skills tutorials into a course led to significant gains among introductory students with limited biology experience (10). These findings suggest that scaffolded teaching of scientific skills in the context of a course has positive impacts on immediate learning and long-term success among biology majors and can promote equity in STEM.

Course-based undergraduate research experiences (CUREs) that include elements of collaboration, broad relevance, discovery, and iteration are proposed to enhance student self-efficacy, understanding of the nature of science, and scientific aspiration (11). Undergraduate participation in semester-long introductory biology CUREs has led to gains in scientific thinking and skills in data analysis and data interpretation (12), and improved persistence of science majors (13). Additionally, participation in an introductory chemistry CURE had a lasting influence on students’ understanding of the research process, feelings of self-efficacy, and sense of accomplishment from doing laboratory work (14). While semester-long introductory CUREs are one way to increase research opportunities for undergraduates, they are not always feasible to implement. Alternatively, overlaying inquiry-based opportunities within traditional undergraduate laboratory course structures can also have significant positive impacts on scientific learning gains and attitudes toward the process of science (8, 15–18).

We present a model for redesigning the laboratory curriculum of an introductory Organismal Biology laboratory course. Our goals for course redesign were two-fold: 1) to increase opportunities for students to engage in meaningful inquiry and 2) to intentionally scaffold skill-building to increase student recognition that they were developing concrete, transferable scientific skills. We used several measures to compare the outcomes between the original and redesigned laboratory courses to examine how the redesign influenced 1) student gains in experimental design skills, 2) students’ awareness of their own skill-building, and 3) students’ perceptions of the relevance of the course research experience. We hypothesized that redesigning the Organismal Biology laboratory course would foster student learning gains in experimental design, increase students’ recognition of skills they had developed by the end of the course, and lead to more positive student perceptions of the authenticity of their research experience.

METHODS

Institutional and course context

Introductory Organismal Biology is one of two introductory biology lab courses at Wellesley College, a small, private, selective, women’s liberal arts college. The course is taken to fulfill Biology and other STEM major requirements or by nonmajors to fulfill a general education requirement. Because it is an introductory course, many students take it before declaring a major. Students may take the two-course introductory biology sequence in either order; thus, some students in Introductory Organismal Biology have already taken Introductory Cell Biology, while for others this course is their first college biology lab experience. Six lab sections, capped at 16 students each and taught by full-time instructors, are offered each semester.

Course redesign process

The original course format introduced students to scientific practices in the context of instructor-prescribed experiments. Students completed weekly assignments for each lab that included graphing, statistical analysis, and/or writing exercises. While opportunities for skill development were offered, they did not emphasize student-driven questioning and hypothesizing, did not situate activities in novel, broadly relevant research, and were not tied to scaffolded learning objectives. Therefore, the original course format was not aligned with evidence-based practices for effective development of students’ scientific skills and science identity.

In the redesigned course, we sought to scaffold scientific skill development and provide increasing opportunities for student-driven inquiry and broadly relevant research throughout the semester. We articulated broad goals for the course and aligned measurable learning objectives that would allow students to engage in authentic research practices and move from peripheral to more central roles in the research community as they acquired skills throughout the semester (18, 19) (Appendix 1). A team of laboratory instructors and undergraduate research assistants developed two new course-based research modules (Introductory and Capstone) and modified the existing middle modules during the summer of 2016 (see Appendix 2 for a description of the modules in the redesigned course). A summary depiction of the scaffolded skill-building is shown in Table 1, including when each skill was introduced (I) and subsequently practiced (P).

TABLE 1.

Overview of scientific skills scaffolding in redesigned lab course.<sup>a</sup>

| Skill | Subskill | Introductory Module (week) | Middle Module (week) | Capstone Module (week) | |||||

|---|---|---|---|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | 5 | 10 | 11 | 12 | ||

| 1. Collaboratively observe, measure organismal form and function | I | P | P | P | P | P | P | P | |

| 2. Design inquiry-driven scientific experiments | Questions & hypotheses: instructor-designed | I | P | P | P | P | |||

| Groups & variables: instructor-designed | I | P | P | P | P | ||||

| Questions & hypotheses: student-designed | I | P | P | ||||||

| Groups & variables: student-designed | I | P | P | ||||||

| 3. Summarize, present, and analyze data using graphing and statistical software | Graphs of means and standard deviations | I | P | P | P | P | P | P | P |

| Two-sample t-tests | I | P | P | P | P | P | P | ||

| ANOVA and Tukey’s post hoc tests | I | P | P | P | P | P | |||

| Figure and statistics selection | I | ||||||||

| 4. Effectively communicate scientific findings | Descriptive figure captions | I | P | P | P | P | P | P | P |

| Results text with statistics and figure references | I | P | P | P | P | P | P | ||

| Discussions connecting results to literature | I | P | P | P | |||||

| Proper citations in text and reference section | I | P | P | P | |||||

| Descriptive methods | I | P | |||||||

| Poster preparation and presentation | I | ||||||||

<sup>a</sup> The redesigned lab course focused on students’ learning of four main skills (with associated subskills). The week in which each skill was introduced (I) and practiced (P) is shown. Weeks 6 to 9 are not shown because the activities were not part of the course redesign.

Study sample

We collected data from five of six lab sections in spring 2016 (original format) and in fall 2016 (redesigned format). The five sections selected each semester were taught by a common set of three instructors; the one section taught by a unique instructor each semester was excluded. Students in the redesigned semester were told by instructors that the course had been revised but did not receive a detailed description of the changes made. In both semesters, the majority of students were first- and second-year students and the most common major was “undeclared.” Data on first-generation college status, race, or ethnicity were not collected per our IRB protocol. There was no significant difference in distribution of majors (Pearson chi-square, p = 0.196) or class year (Pearson chi-square, p = 0.074) between semesters (Appendix 3). In spring 2016, 45% of students had already taken Introductory Cell Biology before they enrolled in Introductory Organismal Biology; in fall 2016, 47% of students had already taken Introductory Cell Biology. We assessed the redesign goals with a variety of tools, including surveys, mastery rubrics, and course evaluations (20, 21) (Table 2). The statistical software program JMP 13 was used to analyze all data. Our research protocols were reviewed and deemed exempt by the Wellesley College IRB under §46.101b Exemption 1 and Exemption 2.

TABLE 2.

Alignment of redesign goals, redesign changes, and assessment tools.

| Goal | Course Changes to Address Redesign Goal | Tools Used to Assess Impacts of Course Changes |
|---|---|---|
| 1. To increase opportunities for students to engage in meaningful inquiry | | TIED (22) |
| 2. To increase student recognition that they are developing concrete, transferable skills of scientists | | Personal Skills Rating on Mastery Rubric |

Tool for Interrelated Experimental Design

Students’ experimental design skills were assessed using the Tool for Interrelated Experimental Design (TIED) (22). A high score on TIED requires students to demonstrate an understanding of five components of experimental design (hypothesis, biological rationale, experimental groups, data collection, and supporting observations), and design an experiment where these components are aligned (22). The highest possible total score on the TIED is 20 points. The TIED was administered in Lab 2 (Pre) and Lab 12 (Post). Pre- and post-responses for each student were paired and de-identified prior to scoring and analysis. Students with only one response were omitted. Three biology faculty members scored students’ responses with high interrater reliability [intraclass correlation coefficient (ICC) = 0.945; 95% CI = 0.933–0.955] and student scores were calculated using the average of the three scorers. To compare development of experimental design skills in the original lab format (n = 56; 90% response rate) and redesigned lab (n = 52; 84% response rate), we calculated normalized change, the ratio of gain to the maximum possible gain, or the loss to the maximum possible loss (23). Additionally, we examined the distribution of normalized change in TIED scores in each lab format based on student pre-semester TIED score quartile.
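The normalized change calculation described above can be sketched in a few lines. This is a minimal illustration of the gain-to-maximum-gain and loss-to-maximum-loss ratios, not the authors' analysis script (the study used JMP 13). The function name, the default maximum of 20 points (the TIED maximum stated above), and the choice to return `None` for students who score at the floor or ceiling on both tests are assumptions made for illustration.

```python
from typing import Optional


def normalized_change(pre: float, post: float, max_score: float = 20.0) -> Optional[float]:
    """Normalized change for one student's paired pre/post scores.

    Gains are scaled by the maximum possible gain (max_score - pre);
    losses are scaled by the maximum possible loss (pre).
    """
    if not (0.0 <= pre <= max_score and 0.0 <= post <= max_score):
        raise ValueError("scores must lie between 0 and max_score")
    if post > pre:
        # Gain: fraction of the available headroom that was achieved.
        return (post - pre) / (max_score - pre)
    if post < pre:
        # Loss: fraction of the pre-test score that was lost (negative value).
        return (post - pre) / pre
    # No change. Assumption: a student at the floor or ceiling on both tests
    # carries no information about change, so signal exclusion with None.
    if pre in (0.0, max_score):
        return None
    return 0.0


# Hypothetical scores on the 20-point scale, not data from the study.
print(normalized_change(10.0, 15.0))   # gain of 5 out of a possible 10
print(normalized_change(16.0, 12.0))   # loss of 4 out of a possible 16
```

Because the denominator depends on the starting score, a student who begins near the top of the scale can reach a large normalized change with a small raw gain, which is why the distribution across pre-semester quartiles is examined separately.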

Personal skills rating

Students from two sections per semester (n = 27 in original course, n = 26 in redesigned course) completed a “Personal Skills rating.” We adapted science and biology self-efficacy scales (2, 24) to align with four main skill categories, and associated subskills, emphasized in the course objectives: Experimental Design, Collaborative Data Collection, Data Analysis and Presentation, and Scientific Communication (Appendix 4). Because we were interested in students’ perceptions of their skill level, we used a modified mastery rubric rather than the “confidence” scale rating system. Our modified mastery rubric (Appendix 4) articulates a developmental trajectory with explicit criteria describing each stage (25, 26). Students completed the Personal Skills rating in Lab 1 (Pre) and Lab 12 (Post). Pre- and post-responses for each student in the original lab format (n = 25; 93% response rate) and redesigned lab format (n = 24; 92% response rate) were paired and de-identified, and rubric levels were converted to numerical data prior to scoring and analysis. Students with only one response were omitted.
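The pairing and conversion step can be illustrated as follows. This is a hedged sketch: the numeric mapping follows the rubric key reported with Table 3 (1 = Beginning through 4 = Masterful), while the function name, the dictionary layout, and the student identifiers are hypothetical and stand in for whatever de-identified format was actually used.

```python
from typing import Dict

# Rubric levels and their numeric values, as given in the Table 3 footnote.
RUBRIC_LEVELS: Dict[str, int] = {
    "Beginning": 1,
    "Developing": 2,
    "Proficient": 3,
    "Masterful": 4,
}


def growth_scores(pre: Dict[str, str], post: Dict[str, str]) -> Dict[str, int]:
    """Return Post - Pre growth for students who have both ratings.

    `pre` and `post` map a (hypothetical) de-identified student code to a
    rubric level; students with only one response are omitted.
    """
    paired_ids = sorted(pre.keys() & post.keys())
    return {
        sid: RUBRIC_LEVELS[post[sid]] - RUBRIC_LEVELS[pre[sid]]
        for sid in paired_ids
    }


# Hypothetical responses, not data from the study.
pre_ratings = {"s1": "Developing", "s2": "Beginning", "s3": "Proficient"}
post_ratings = {"s1": "Proficient", "s2": "Proficient"}
print(growth_scores(pre_ratings, post_ratings))  # s3 is dropped (no post rating)
```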

Laboratory Course Assessment Survey

The Laboratory Course Assessment Survey (LCAS) (27) was developed to distinguish CUREs, which contain a research component with the potential to produce results relevant to a broad scientific community, from “traditional laboratory courses” which contain a series of discrete “labs” or “exercises” that focus on content or technique mastery. To examine student perceptions of their research experience, we administered the LCAS in Lab 12 following completion of the TIED. Data were de-identified prior to analysis. The Collaboration subscale contained six items related to actions that students were encouraged to perform in lab, the Discovery and Relevance subscale contained five items related to expectations of the student research experience in lab, and the Iteration subscale contained six items related to how time was allocated to various activities in lab. All LCAS items were administered, though the Discovery and Relevance items were delivered in slightly different order than in the published instrument. Additionally, the Likert scale for Discovery and Relevance and Iteration items was reduced from a 6-point scale to a 4-point scale (Strongly Disagree, Disagree, Agree, Strongly Agree; we omitted Somewhat Agree and Somewhat Disagree). The scale responses were converted to numerical scores, and the average scores for each subscale were calculated for students from the original (n = 58; 94% response rate) and redesigned (n = 60; 97% response rate) lab formats. Responses of “I don’t know/I prefer not to answer” were not included in analyses.
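A minimal sketch of the subscale scoring follows. The numeric mappings are the ones listed with Table 4; everything else (the function name, the exact wording of the opt-out label, and averaging within one student's subscale) is an assumption for illustration and may differ from the authors' JMP workflow.

```python
from statistics import mean
from typing import Dict, Optional, Sequence

# Response-to-score mappings taken from the Table 4 footnote.
FREQUENCY_SCALE: Dict[str, int] = {      # Collaboration items
    "never": 1, "one or two times": 2, "monthly": 3, "weekly": 4,
}
AGREEMENT_SCALE: Dict[str, int] = {      # Discovery & Relevance, Iteration items
    "strongly disagree": 1, "disagree": 2, "agree": 3, "strongly agree": 4,
}
# Assumed spelling of the opt-out response, which is left out of the average.
OPT_OUT = "i don't know/i prefer not to answer"


def subscale_score(responses: Sequence[str], scale: Dict[str, int]) -> Optional[float]:
    """Average one student's item responses for a single subscale.

    Opt-out responses are skipped; an unrecognized label raises KeyError so
    that typos are not silently dropped. Returns None if nothing is scorable.
    """
    cleaned = [r.strip().lower() for r in responses]
    scored = [scale[r] for r in cleaned if r != OPT_OUT]
    return mean(scored) if scored else None


# Hypothetical responses to the five Discovery & Relevance items.
answers = ["Agree", "Strongly Agree", "Agree",
           "I don't know/I prefer not to answer", "Disagree"]
print(subscale_score(answers, AGREEMENT_SCALE))
```

Skipping opt-out responses shrinks the denominator for that student rather than counting the item as zero, which keeps the subscale mean on the same 1 to 4 scale.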

Qualitative analysis of student evaluation questionnaires

To supplement data gleaned from the Personal Skills ratings and LCAS, we analyzed students’ written responses from anonymous end-of-course Student Evaluation Questionnaires (SEQs) [original format (n = 56, 90% response rate) and redesigned format (n = 58, 94% response rate)]. SEQs are administered by the college at the end of every lab and lecture course section and include open-ended questions that capture students’ thoughts and attitudes about their course experience. We compared SEQ responses between the original and redesigned course with respect to students’ reports of specific skills learned, of feelings of confidence or self-efficacy in their skills, of doing “real” science or biology research, and that the labs supported learning that was relevant or transferable to their future courses or career goals.

To analyze SEQs, we brainstormed possible keywords and phrases that would provide evidence for our research questions and we sorted them as either “skills” or “attitudes.” The unit of coding was the sentence because of the importance of context in understanding students’ statements, and only the first instance of a keyword in a sentence was coded. Sentences could contain multiple codes, such as references to course skills (e.g., “writing”) and attitudes towards those skills (e.g., “confident”). The qualitative analysis identified 18 different codes, with 7 codes representing various Course Skills and 11 codes representing Course Attitudes. The coding system was used by two authors (TLK and SMF) and intercoder reliability was confirmed to have “substantial agreement” [Cohen’s kappa: 0.75 (28)]. The relative frequency for each code was calculated by dividing the frequency of each code by the total number of student responses in that semester, allowing us to compare how often students mentioned each code between the two semesters.
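The two quantities in this paragraph, Cohen's kappa for intercoder agreement and the relative frequency of each code, can be computed as sketched below. The formula for kappa is the standard one (observed agreement corrected for chance agreement); how the coded units were laid out for the comparison is not described in the text, so the one-label-per-unit input format, the function names, and all counts shown are assumptions for illustration.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(coder_a: Sequence[Hashable], coder_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two coders who each labeled the same units once."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same non-empty set of units")
    n = len(coder_a)
    # Observed agreement: share of units given the same label by both coders.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: product of each coder's marginal label proportions.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1.0 - expected)


def relative_frequency(code_count: int, n_responses: int) -> float:
    """Percentage of student responses in a semester that received a code."""
    return 100.0 * code_count / n_responses


# Hypothetical codings of ten sentences (1 = code present, 0 = absent).
coder_1 = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
coder_2 = [1, 1, 1, 1, 0, 0, 0, 0, 0, 1]
print(round(cohens_kappa(coder_1, coder_2), 2))

# Illustrative count only: 24 coded responses out of 56 submitted.
print(round(relative_frequency(24, 56), 1))
```

Dividing by the number of responses rather than the number of coded sentences keeps the two semesters comparable even when students write evaluations of different lengths.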

RESULTS

TIED scores

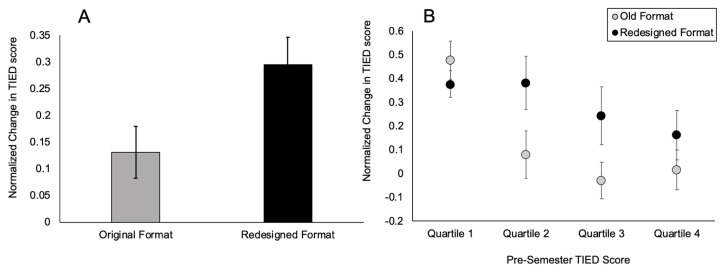

To assess the effect of the redesign on students’ experimental design skills, we compared TIED scores for the original and redesigned lab formats. Pre-semester TIED scores in the original format ranged from 13% to 92% (median score 77%) and in the redesigned format from 33% to 93% (median score 67%). Post-semester TIED scores in the original format ranged from 33% to 97% (median score 73%) and in the redesigned format from 43% to 98% (median score 79%). The redesigned format yielded significantly higher normalized change in TIED scores compared with the original format (unpaired t-test, p = 0.020) (Fig. 1A).

FIGURE 1.

A) Normalized change in TIED scores (mean ± SEM) and B) distribution of normalized change scores (mean ± SEM) based on pre-semester TIED score quartile in the Original and Redesigned lab formats. TIED = Tool for Interrelated Experimental Design; SEM = standard error of the mean.

In the original lab format group, normalized change in TIED score was high for students who were in the lowest quartile for pre-semester TIED scores yet was low for students in the other quartiles of pre-semester TIED scores [Welch’s analysis of variance (ANOVA), p < 0.001]. In contrast, in the redesigned lab format, there was no significant difference across the four quartiles of pre-semester TIED scores (Welch’s ANOVA, p = 0.405), showing a more consistent pattern of normalized change across the range of student pre-semester TIED scores (Fig. 1B).

Student personal skills rating

Pre-semester Personal Skills ratings were similar in the original and redesigned format (Table 3), except that scores for Data Analysis and Presentation started significantly lower in the redesigned format than in the original format (unpaired t-tests, p = 0.005). Post-semester skills ratings differed across lab formats, with students in the redesigned course rating themselves significantly higher in Experimental Design, Data Collection, and Data Analysis and Presentation compared with students in the original format. Growth in personal skills ratings (Post – Pre) was examined, and significant growth was observed in all four areas (Experimental Design, Data Collection, Data Analysis and Presentation, Science Communication) in both the original and redesigned formats (paired t-tests, p < 0.01). Growth scores were larger in magnitude in the redesigned format than in the original format.

TABLE 3.

Pre- and post-semester personal skills ratings* (mean ± SD) in the original and redesigned laboratory course formats.

| Personal Skills Category | Pre-Semester Rating: Original format | Pre-Semester Rating: Redesign format | Post-Semester Rating: Original format | Post-Semester Rating: Redesign format | Growth (Post – Pre Rating): Original format | Growth (Post – Pre Rating): Redesign format |
|---|---|---|---|---|---|---|
| Experimental Design | 2.6±0.7 | 2.8±0.9 | 3.0±0.6 | 3.5±0.5<sup>b</sup> | 0.48±0.85<sup>c</sup> | 0.67±0.76<sup>c</sup> |
| Data Collection | 2.6±0.7 | 2.7±0.7 | 3.4±0.5 | 3.7±0.4<sup>b</sup> | 0.74±0.65<sup>c</sup> | 1.02±0.62<sup>c</sup> |
| Data Analysis and Presentation | 2.4±0.7 | 1.7±0.9<sup>a</sup> | 3.2±0.6 | 3.6±0.5<sup>b</sup> | 0.78±0.88<sup>c</sup> | 1.89±1.0<sup>c</sup> |
| Science Communication | 2.3±0.6 | 2.0±0.7 | 3.0±0.6 | 3.3±0.6 | 0.68±0.76<sup>c</sup> | 1.23±0.64<sup>c</sup> |

<sup>*</sup> Ratings based on Mastery Rubric: 1 = Beginning, 2 = Developing, 3 = Proficient, 4 = Masterful.

<sup>a</sup> Indicates that mean pre-assessment score differed significantly between Original and Redesigned format (t-test p < 0.01, Cohen’s d = 0.80).

<sup>b</sup> Indicates that mean post-assessment score differed significantly between Original and Redesigned format (t-test p < 0.01, Cohen’s d > 0.70).

<sup>c</sup> Indicates that within-semester Post-assessment was significantly higher than Pre-assessment (paired t-test p < 0.009).

LCAS scores

There were no significant differences in LCAS scores between the original and redesigned formats for any of the three categories: Collaboration, Discovery & Relevance, or Iteration (Table 4). Overall LCAS scores were high for all categories, with students generally reporting that they “Agree” or “Strongly Agree” that the elements of Collaboration, Discovery & Relevance, and Iteration were present in both the original and redesigned format.

TABLE 4.

Average LCAS category scores* (mean ± SD) in the original and redesigned laboratory course formats.

| LCAS category | Original format | Redesign format<sup>a</sup> |
|---|---|---|
| Collaboration (Average of 6 items) | 3.7±0.3 | 3.6±0.4 |
| Discovery & Relevance (Average of 5 items) | 3.6±0.4 | 3.4±0.5 |
| Iteration (Average of 6 items) | 3.1±0.5 | 3.0±0.5 |

<sup>*</sup> Collaboration items: 1 = “never,” 2 = “one or two times,” 3 = “monthly,” 4 = “weekly”; Discovery & Relevance and Iteration items: 1 = “strongly disagree,” 2 = “disagree,” 3 = “agree,” 4 = “strongly agree.”

<sup>a</sup> Mean LCAS scores from the Redesigned lab format were not significantly different from the Original format for any of the three features (p > 0.1, Cohen’s d = 0.20–0.30).

LCAS = Laboratory Course Assessment Survey.

SEQ qualitative analysis

SEQ data were analyzed to explore students’ self-awareness of their scientific skill-building and their perceptions of the relevance of the laboratory research experience (Table 5). Students in both the original and redesigned formats mentioned developing skills related to graphing and data presentation in general, yet students in the redesigned format were more likely to mention specific statistical tests and data analysis techniques (42.9% in original, 72.4% in redesigned). Additionally, while students in the original format were more likely to comment on science writing in general (91.1% in original, 58.6% in redesigned), students in the redesigned format were more likely to name specific aspects of science writing such as results texts, figure captions, and discussion sections (5.4% in original, 31.0% in redesigned). Students in the redesigned format were more likely to comment that they designed or conducted their own experiment (34.5%) compared with students in the original format (14.3%), and were also more likely to mention that they engaged in “real science” or “actual science” (15.5%) than students in the original format (3.6%). Students in both formats commented that the labs were practical, valuable, or relevant, and that the labs would be useful for them in other courses, in their major, or for their career goals related to science or medicine. Students in both formats discussed learning and being challenged in the lab course (58.9% in original, 77.6% in redesigned), and commented on their academic growth and improvement (50.0% in original, 31.0% in redesigned). Students in the original format were more likely to comment on their level of interest or enjoyment in the lab, with more frequent reports of both positive (26.8% in original, 8.6% in redesigned) and negative comments (19.6% in original, 6.9% in redesigned) about interest, enjoyment, or excitement. Students rarely referred to their feelings of confidence in the SEQs, but these types of comments were more likely for students in the original format (8.9% in original, 1.7% in redesigned).

TABLE 5.

Qualitative analysis of written Student Evaluation Questionnaire (SEQ) data from students in the original format and redesigned format.

| Attitudes Codes | Original Format: Relative Frequency (/# responses) | Redesign Format: Relative Frequency (/# responses) |
|---|---|---|
| Learn/learned, challenging/challenged [references to personal learning, “helped me learn”] | 58.9% | 77.6% |
| Improve, grow, helped me understand/develop, enhanced my skill/ability | 50.0% | 31.0% |
| Real science, actual science, real life, real world | 3.6% | 15.5% |
| Future/practical/useful/valuable/relevant | 37.5% | 31.0% |
| Science/biology/STEM major, upper level labs, other biology courses | 44.6% | 44.8% |
| Scientist/doctor/pre-med/medical school/dental/grad school | 39.3% | 31.0% |
| Positive interest/enjoy/excite/fun/engaging (general: for course, professor, “experiments”) | 62.5% | 51.7% |
| Positive interest/enjoy/excite/fun/engaging (specific skill, lab, assignment) | 26.8% | 8.6% |
| Negative interest/enjoy/excite/engaging (general and specific) | 19.6% | 6.9% |
| Practice/practicing | 12.5% | 15.5% |
| Confidence/confident | 8.9% | 1.7% |
| Skills Codes | Original Format | Redesign Format |
| Skills, techniques (general) (e.g., “scientific skills,” “laboratory skills”) | 23.2% | 19.0% |
| Graphs/graphing, figures, Excel, presenting data, visualizing data | 33.9% | 25.9% |
| Statistics, ANOVA, Tukey, t-test, JMP, analyze or interpret data, analysis | 42.9% | 72.4% |
| Writing (general) (e.g., “scientific writing,” “writing lab reports”) | 91.1% | 58.6% |
| Writing results text, captions, legends, discussion sections | 5.4% | 31.0% |
| Variables, hypothesis/hypotheses | 1.8% | 1.7% |
| Design/conduct own/new experiment | 14.3% | 34.5% |

DISCUSSION

We present a model for laboratory course redesign to increase inquiry and add explicit scaffolding of key scientific skills. We used several forms of assessment to evaluate whether these changes had effects on students’ learning and their perceptions of their skills and experiences in the lab.

Did the course redesign impact students’ development of experimental design skills?

Students in the redesigned lab format made significantly greater and more consistent gains in experimental design skills than students who experienced the original lab format, suggesting that opportunities for scaffolded skill-building, practice, and feedback were effective in supporting development of experimental design skills among a range of students in the redesigned course. These findings support prior research indicating that scaffolding and opportunities for inquiry-based practice may help increase equity for introductory students (9), which is an important goal for introductory courses that aim to attract and retain STEM majors from diverse backgrounds. These findings also suggest that full conversion to a semester-long CURE, which can be logistically, financially, or time-prohibitive for biology departments (16, 29), may not be required to significantly increase experimental design skills among introductory students. Rather, deliberately scaffolding skills and providing opportunities for students to practice these skills in the context of modular inquiry lab activities can effectively train students in these critical practices.

Did the course redesign impact students’ sense of their own scientific skill-building and mastery?

While growth in students’ perceptions of their skill levels occurred in both lab formats, students in the redesigned format rated themselves significantly higher at the end of the semester. Increased scaffolding in the redesigned course made the scientific skill-building more explicit on a weekly basis. This scaffolding may have helped students to recognize that they were gaining skills through their laboratory research experiences (rather than simply completing isolated tasks), which can contribute positively to students’ self-efficacy and development of science identity (2).

Student evaluation questionnaire analyses supported this finding. Students in the redesigned format were more likely to mention that they learned specific skills related to elements of data analysis and components of written scientific reports. Students in the redesigned format were also more likely to use the words “learned” or “learning” and discuss being challenged in the labs. These trends suggest that increased skill-scaffolding and inquiry opportunities in the course design helped students identify and appreciate the skills that they had learned. This supports previous findings that introductory students can acquire awareness of their skills and sophisticated views of the process of science when they engage in an inquiry-based introductory lab experience (15). Science self-efficacy is an important mediator to explain how research experiences improve academic outcomes for students (2). When students perceive that they are capable of doing science, they are more likely to develop a sense that they are becoming scientists (30). Therefore, our findings that students in the scaffolded, redesigned course were able to identify the specific learned skills and had positive perceptions of their ability to use the skills are particularly meaningful.

As part of the SEQ analysis, we observed that students in the redesigned group were less likely than those in the original group to mention their confidence, interest, or enjoyment in the labs. It is important to remember that the SEQs were open-ended and reflect students’ own decisions about what to share in their evaluations of the course. It may be that students in the redesigned course felt less confident or less interested in the course, but it may also be that these students were compelled to discuss specific aspects of their learning (e.g., their learning of statistics, scientific writing, designing experiments) instead of their personal feelings or attitudes. As we did not have direct measures of students’ motivation or attitudes in this study, we cannot disentangle the reasons for these differences between the original and redesigned groups. Because student engagement and self-efficacy can be important mediators of persistence in the sciences, future research should use more direct instruments to further understand how courses redesigned with this model impact these student attitudes.

Did the course redesign impact students’ views of the relevance of the laboratory course research experience?

The LCAS was created to distinguish “authentic” CUREs from “traditional” lab courses, particularly in the areas of Collaboration, Iteration, and Discovery and Relevance. LCAS scores were high in both the original format and redesigned format, although neither course was a CURE. A recent study also showed that students perceived high “authenticity” in both research and traditional introductory laboratory course formats (31). The fact that LCAS scores were high in both formats may indicate that introductory students perceive any experimental process as a legitimate research experience. As novice researchers, introductory students may not understand the nuanced ways in which the course research experience differs in authenticity from a real research experience, though the instructors do recognize this distinction (32). This reinforces the notion that educational experiences can have significant authenticity for the students, even when there is no purposeful design for authentic practice (31). The high LCAS scores in both of our course formats may also reflect that the LCAS is written using language that is meaningful to educators and education researchers, but perhaps less meaningful to or less well understood by students.

Because of the possible limitations of the LCAS language and because “authenticity” as a concept has varied definitions in biology education and biology education research, we should solicit and listen to candid student voices to understand the impact of course laboratory experiences (31). The data from open-ended SEQs showed that students in both semesters thought that the labs were practical and valuable. However, there were interesting differences in student comments about the course experience. More students in the redesigned course format mentioned designing their own experiment and doing “real” or “actual” science. Additionally, more students in the redesigned course wrote that the lab course contributed to their learning. These assertions from students in the redesigned course, combined with the increased number of statements that named the specific scientific skills that were gained (e.g., ANOVA, Tukey, writing figure captions and results texts), are particularly valuable because they are from students’ spontaneous responses about their overall lab experience, rather than responses to targeted prompts. These SEQ data may therefore offer a more authentic perspective of students’ perceptions of the course, and of which aspects of the course stood out for them as particularly impactful.

CONCLUSION

We found positive outcomes for introductory organismal biology students as a result of increasing scaffolding and student-centered inquiry in a redesigned laboratory course. Students in the redesigned course demonstrated significant growth in experimental design skills over the course of the semester, were more likely to mention specific scientific skills they had gained, and perceived that they were better able to do science. These positive gains in skills, combined with self-awareness of their skill development, can help students to develop their science identity during research experiences (2, 4) and reinforce the utility of this approach for curriculum redesign.

There are a number of limitations to our study. Our student population is from a single, highly selective, all-women’s college and is therefore not broadly representative of the undergraduate population. The data collection was not replicated in multiple semesters, and, because of the attributes of our sample, we were unable to perform meaningful analyses based on demographics (e.g., disaggregating by major versus nonmajor, or by race/ethnicity or first-generation status). Finally, students were not tracked longitudinally following their introductory biology experience. Additional data about the impact of scaffolding and enhanced inquiry should be collected from more course sections and from a variety of institutions to determine the broad significance of the model for curricular redesign presented here. Future studies should also examine how scaffolded inquiry course experiences at the introductory level influence student success and retention in STEM majors throughout their undergraduate careers, and how this curricular redesign approach could be expanded strategically across all course levels within undergraduate programs. Follow-up studies could also examine whether curricular redesign to scaffold skill-building and enhance inquiry in lab courses can equitably develop research skills among introductory students with varied high school preparation.

From a logistical standpoint for practitioners, our study demonstrates that it is not necessary to go “full-CURE” in order for students to learn key facets of experimental design and to practice and work toward mastery of important scientific skills. We show that instructors can foster positive student outcomes by redesigning their courses to systematically and explicitly teach skills in the context of inquiry modules and scaffolded assignments. Additionally, this study demonstrates the value of gathering student voices to assess the impact of a course research experience (31). By analyzing written responses to open-ended course evaluation prompts, we gleaned important insights into student take-aways from their course that allow us to see what skills and experiences they internalized and valued, which is not always possible from Likert-type survey questions.

SUPPLEMENTARY MATERIALS

ACKNOWLEDGMENTS

Protocols were deemed exempt by the Wellesley College IRB under §46.101(b), Exemptions 1 and 2. We received support from the Mellon Foundation and the Wellesley College Laboratory Science Curricular Innovation Program. We thank Emily Buchholtz, Leah Okumura, Janet McDonough, Jeff Hughes, and anonymous reviewers for their valuable feedback on previous versions of the manuscript. The authors have no conflicts of interest to declare.

Footnotes

Supplemental materials available at http://asmscience.org/jmbe

REFERENCES

- 1. American Association for the Advancement of Science. Vision and change in undergraduate biology education: a call to action: a summary of recommendations made at a national conference organized by the American Association for the Advancement of Science; July 15–17, 2009; Washington, DC. 2011.

- 2. Robnett RD, Chemers MM, Zurbriggen EL. Longitudinal associations among undergraduates’ research experience, self-efficacy, and identity. J Res Sci Teach. 2015;52:847–867. doi: 10.1002/tea.21221.

- 3. Graham MJ, Frederick J, Byars-Winston A, Hunter AB, Handelsman J. Science education. Increasing persistence of college students in STEM. Science. 2013;341:1455–1456. doi: 10.1126/science.1240487.

- 4. Vincent-Ruz P, Schunn CD. The nature of science identity and its role as the driver of student choices. Int J STEM Educ. 2018;5:48. doi: 10.1186/s40594-018-0140-5.

- 5. Ainscough L, Foulis E, Colthorpe K, Zimbardi K, Robertson-Dean M, Chunduri P, Lluka L. Changes in biology self-efficacy during a first-year university course. CBE Life Sci Educ. 2016;15:ar19. doi: 10.1187/cbe.15-04-0092.

- 6. Kirschner PA, Sweller J, Clark RE. Why minimal guidance during instruction does not work: an analysis of the failure of constructivist, discovery, problem-based, experiential, and inquiry-based teaching. Educ Psychol. 2006;41:75–86. doi: 10.1207/s15326985ep4102_1.

- 7. Coil D, Wenderoth MP, Cunningham M, Dirks C. Teaching the process of science: faculty perceptions and an effective methodology. CBE Life Sci Educ. 2010;9:524–535. doi: 10.1187/cbe.10-01-0005.

- 8. Chaplin SB. Guided development of independent inquiry in an anatomy/physiology laboratory. Adv Physiol Educ. 2003;27:230–240. doi: 10.1152/advan.00002.2003.

- 9. Dirks C, Cunningham M. Enhancing diversity in science: is teaching science process skills the answer? CBE Life Sci Educ. 2006;5:218–226. doi: 10.1187/cbe.05-10-0121.

- 10. Kramer M, Olson D, Walker JD. Design and assessment of online, interactive tutorials that teach science process skills. CBE Life Sci Educ. 2018;17:ar19. doi: 10.1187/cbe.17-06-0109.

- 11. Auchincloss LC, Laursen SL, Branchaw JL, Eagan K, Graham M, Hanauer DI, Lawrie G, McLinn CM, Pelaez N, Rowland S, Towns M, Trautmann NM, Varma-Nelson P, Weston TJ, Dolan EL. Assessment of course-based undergraduate research experiences: a meeting report. CBE Life Sci Educ. 2014;13:29–40. doi: 10.1187/cbe.14-01-0004.

- 12. Brownell SE, Hekmat-Scafe DS, Singla V, Chandler Seawell P, Conklin Imam JF, Eddy SL, Stearns T, Cyert MS. A high-enrollment course-based undergraduate research experience improves student conceptions of scientific thinking and ability to interpret data. CBE Life Sci Educ. 2015;14:ar21. doi: 10.1187/cbe.14-05-0092.

- 13. Rodenbusch SE, Hernandez PR, Simmons SL, Dolan EL. Early engagement in course-based research increases graduation rates and completion of science, engineering, and mathematics degrees. CBE Life Sci Educ. 2016;15:ar20. doi: 10.1187/cbe.16-03-0117.

- 14. Szteinberg GA, Weaver GC. Participants’ reflections two and three years after an introductory chemistry course-embedded research experience. Chem Educ Res Pract. 2013;14:23–35. doi: 10.1039/C2RP20115A.

- 15. Jeffery E, Nomme K, Deane T, Pollock C, Birol G. Investigating the role of an inquiry-based biology lab course on student attitudes and views toward science. CBE Life Sci Educ. 2016;15:ar61. doi: 10.1187/cbe.14-11-0203.

- 16. Shanks RA, Robertson CL, Haygood CS, Herdliksa AM, Herdliska HR, Lloyd SA. Measuring and advancing experimental design ability in an introductory course without altering existing lab curriculum. J Microbiol Biol Educ. 2017;18(1):18.1.2. doi: 10.1128/jmbe.v18i1.1194.

- 17. Myers MJ, Burgess AB. Inquiry-based laboratory course improves students’ ability to design experiments and interpret data. Adv Physiol Educ. 2003;27:26–33. doi: 10.1152/advan.00028.2002.

- 18. Hanauer DI, Nicholes J, Liao FY, Beasley A, Henter H. Short-term research experience (SRE) in the traditional lab: qualitative and quantitative data on outcomes. CBE Life Sci Educ. 2018;17:ar64. doi: 10.1187/cbe.18-03-0046.

- 19. Lave J, Wenger E. Situated learning: legitimate peripheral participation. Cambridge University Press; Cambridge: 1991.

- 20. Cooper KM, Soneral PAG, Brownell SE. Define your goals before you design a CURE: a call to use backward design in planning course-based undergraduate research experiences. J Microbiol Biol Educ. 2017;18(2):18.2.30. doi: 10.1128/jmbe.v18i2.1287.

- 21. Shortlidge E. How to assess your CURE: a practical guide for instructors of course-based undergraduate research experiences. J Microbiol Biol Educ. 2016;17:399–408. doi: 10.1128/jmbe.v17i3.1103.

- 22. Killpack TL, Fulmer SM. Development of a tool to assess interrelated experimental design in introductory biology. J Microbiol Biol Educ. 2018;19(3):19.3.98. doi: 10.1128/jmbe.v19i3.1627.

- 23. Marx JD, Cummings K. Normalized change. Am J Phys. 2007;75:87–91. doi: 10.1119/1.2372468.

- 24. Baldwin JA, Ebert-May D, Burns DJ. The development of a college biology self-efficacy instrument for nonmajors. Sci Educ. 1999;83:397–408. doi: 10.1002/(SICI)1098-237X(199907)83:4<397::AID-SCE1>3.0.CO;2-#.

- 25. Tractenberg RE, Umans JG, McCarter RJ. A mastery rubric: guiding curriculum design, admissions and development of course objectives. Assess Eval High Educ. 2010;35:15–32. doi: 10.1080/02602930802474169.

- 26. Tractenberg R. How the mastery rubric for statistical literacy can generate actionable evidence about statistical and quantitative learning outcomes. Educ Sci. 2016;7:3. doi: 10.3390/educsci7010003.

- 27. Corwin LA, Runyon C, Robinson A, Dolan EL. The laboratory course assessment survey: a tool to measure three dimensions of research-course design. CBE Life Sci Educ. 2015;14:ar37. doi: 10.1187/cbe.15-03-0073.

- 28. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977;33:159. doi: 10.2307/2529310.

- 29. Shortlidge EE, Bangera G, Brownell SE. Faculty perspectives on developing and teaching course-based undergraduate research experiences. BioScience. 2016;66:54–62. doi: 10.1093/biosci/biv167.

- 30. Aschbacher PR, Li E, Roth EJ. Is science me? High school students’ identities, participation and aspirations in science, engineering, and medicine. J Res Sci Teach. 2010;47:564–582.

- 31. Rowland S, Pedwell R, Lawrie G, Lovie-Toon J, Hung Y. Do we need to design course-based undergraduate research experiences for authenticity? CBE Life Sci Educ. 2016;15:ar79. doi: 10.1187/cbe.16-02-0102.

- 32. Beck CW, Blumer LS. Alternative realities: faculty and student perceptions of instructional practices in laboratory courses. CBE Life Sci Educ. 2016;15:ar52. doi: 10.1187/cbe.16-03-0139.
