Abstract

Background

An overwhelming body of evidence indicating that the completeness of reporting of randomised controlled trials (RCTs) is not optimal has accrued over time. In the mid‐1990s, in response to these concerns, an international group of clinical trialists, statisticians, epidemiologists, and biomedical journal editors developed the CONsolidated Standards Of Reporting Trials (CONSORT) Statement. The CONSORT Statement, most recently updated in March 2010, is an evidence‐based minimum set of recommendations including a checklist and flow diagram for reporting RCTs and is intended to facilitate the complete and transparent reporting of trials and aid their critical appraisal and interpretation. In 2006, a systematic review of eight studies evaluating the "effectiveness of CONSORT in improving reporting quality in journals" was published.

Objectives

To update the earlier systematic review assessing whether journal endorsement of the 1996 and 2001 CONSORT checklists influences the completeness of reporting of RCTs published in medical journals.

Search methods

We conducted electronic searches, known item searching, and reference list scans to identify reports of evaluations assessing the completeness of reporting of RCTs. The electronic search strategy was developed in MEDLINE and tailored to EMBASE. We searched the Cochrane Methodology Register and the Cochrane Database of Systematic Reviews using the Wiley interface. We searched the Science Citation Index, Social Science Citation Index, and Arts and Humanities Citation Index through the ISI Web of Knowledge interface. We conducted all searches to identify reports published between January 2005 and March 2010, inclusive.

Selection criteria

In addition to studies identified in the original systematic review on this topic, comparative studies evaluating the completeness of reporting of RCTs in any of the following comparison groups were eligible for inclusion in this review: 1) Completeness of reporting of RCTs published in journals that have and have not endorsed the CONSORT Statement; 2) Completeness of reporting of RCTs published in CONSORT‐endorsing journals before and after endorsement; or 3) Completeness of reporting of RCTs before and after the publication of the CONSORT Statement (1996 or 2001). We used a broad definition of CONSORT endorsement that includes any of the following: (a) requirement or recommendation in the journal's 'Instructions to Authors' to follow CONSORT guidelines; (b) journal editorial statement endorsing the CONSORT Statement; or (c) editorial requirement for authors to submit a CONSORT checklist and/or flow diagram with their manuscript. We contacted authors of evaluations that reported data which could be included in any comparison group(s) but were not presented as such in the published report, and asked them to provide additional data in order to determine the eligibility of their evaluation. Evaluations were not excluded on the basis of language of publication or validity assessment.

Data collection and analysis

We completed screening and data extraction using standardised electronic forms, where conflicts, reasons for exclusion, and level of agreement were all automatically and centrally managed in web‐based management software, DistillerSR®. One of two authors extracted general characteristics of included evaluations and all data were verified by a second author. Data describing completeness of reporting were extracted by one author using a pre‐specified form; a 10% random sample of evaluations was verified by a second author. Any discrepancies were discussed by both authors; we made no modifications to the extracted data. Validity assessments of included evaluations were conducted by one author and independently verified by one of three authors. We resolved all conflicts by consensus.

For each comparison we collected data on 27 outcomes: 22 items of the CONSORT 2001 checklist, plus four items relating to the reporting of blinding, and one item of aggregate CONSORT scores. Where reported, we extracted and qualitatively synthesised data on the methodological quality of RCTs, by scale or score.

Main results

Fifty‐three publications reporting 50 evaluations were included. The total number of RCTs assessed within evaluations was 16,604 (median per evaluation 123; interquartile range (IQR) 77 to 226), published in a median of six (IQR 3 to 26) journals. Characteristics of the included RCT populations were variable, resulting in heterogeneity between included evaluations. Validity assessments of included studies resulted in largely unclear judgements. The included evaluations are not RCTs, and fewer than 8% (4/53) of the evaluations reported adjusting for potential confounding factors.

Twenty‐five of 27 outcomes assessing completeness of reporting in RCTs appeared to favour CONSORT‐endorsing journals over non‐endorsers, of which five were statistically significant. 'Allocation concealment' resulted in the largest effect, with risk ratio (RR) 1.81 (99% confidence interval (CI) 1.25 to 2.61), suggesting that 81% more RCTs published in CONSORT‐endorsing journals adequately describe allocation concealment compared to those published in non‐endorsing journals. Allocation concealment was reported adequately in 45% (393/876) of RCTs in CONSORT‐endorsing journals and in 22% (329/1520) of RCTs in non‐endorsing journals. Other outcomes with results that were significant include: scientific rationale and background in the 'Introduction' (RR 1.07, 99% CI 1.01 to 1.14); 'sample size' (RR 1.61, 99% CI 1.13 to 2.29); method used for 'sequence generation' (RR 1.59, 99% CI 1.38 to 1.84); and an aggregate score over reported CONSORT items, 'total sum score' (standardised mean difference (SMD) 0.68 (99% CI 0.38 to 0.98)).
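As a rough illustration of the arithmetic behind the allocation concealment result, the sketch below computes a crude (unpooled) risk ratio and an approximate 99% confidence interval directly from the aggregate counts quoted above. It is not the review's meta‐analytic method. The pooled RR of 1.81 combines evaluation‐level estimates, whereas collapsing all counts into a single 2×2 table gives a crude ratio of roughly 2.07. The log‐scale (Katz) standard error used here is an assumption made for illustration.

```python
import math
from statistics import NormalDist


def risk_ratio_ci(events_a: int, total_a: int, events_b: int, total_b: int, level: float = 0.99):
    """Crude risk ratio of group A versus group B with a Wald interval on the log scale.

    This collapses everything into one 2x2 table; it is NOT a pooled meta-analytic estimate.
    """
    rr = (events_a / total_a) / (events_b / total_b)
    # Standard error of log(RR) from the usual large-sample (Katz) formula.
    se = math.sqrt(1 / events_a - 1 / total_a + 1 / events_b - 1 / total_b)
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)  # about 2.576 for a 99% interval
    lower = math.exp(math.log(rr) - z * se)
    upper = math.exp(math.log(rr) + z * se)
    return rr, lower, upper


# Adequate reporting of allocation concealment: endorsing vs non-endorsing journals.
rr, lower, upper = risk_ratio_ci(393, 876, 329, 1520)
print(f"crude RR {rr:.2f} (99% CI {lower:.2f} to {upper:.2f})")
```

The gap between the crude ratio and the pooled estimate illustrates why pooling by evaluation matters: evaluations differ in size and baseline reporting rates, so aggregate counts alone can overstate or understate the effect.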

Authors' conclusions

Evidence has accumulated to suggest that the reporting of RCTs remains sub‐optimal. This review updates a previous systematic review of eight evaluations. Its findings are similar to those of the original review and indicate that, despite the general inadequacies of reporting of RCTs, journal endorsement of the CONSORT Statement may beneficially influence the completeness of reporting of trials published in medical journals. Future prospective studies are needed to explore how the influence of the CONSORT Statement depends on the extent of the editorial policies used to ensure adherence to CONSORT guidance.

Keywords: Checklist, Checklist/standards, Periodicals as Topic, Periodicals as Topic/standards, Publishing, Publishing/standards, Randomized Controlled Trials as Topic, Randomized Controlled Trials as Topic/standards, Reference Standards

Plain language summary

CONsolidated Standards Of Reporting Trials (CONSORT) and the completeness of reporting of randomised controlled trials published in medical journals

A group of experts has developed a checklist and flow diagram called the CONSORT Statement. The checklist is designed to help authors report randomised controlled trials (RCTs). This systematic review aims to determine whether the CONSORT Statement has made a difference to the completeness of reporting of RCTs. We compared the reporting of RCTs published in journals that encourage authors to use the CONSORT Statement with the reporting of RCTs published in journals that do not. We found that some items in the CONSORT Statement were fully reported more often when journals encouraged the use of CONSORT. While the majority of items were reported more often when journals endorsed CONSORT, the data showed a statistically significant improvement in reporting for only five of 27 items. No item suggested that CONSORT decreases the completeness of reporting of RCTs published in medical journals.

None of the evaluations included in this review used experimental designs, and their methodological approaches were mostly poorly described and, where described, variable. Furthermore, evaluations assessed the completeness of reporting of RCTs within a wide range of medical fields and in journals with wide variation in the enforcement of CONSORT endorsement. Our results have some limitations, but given the number of included evaluations and the number of assessed RCTs, we conclude that while most RCTs are incompletely reported, the CONSORT Statement beneficially influences their reporting quality.

Background

An overwhelming body of evidence demonstrating that the completeness of reporting of randomised controlled trials (RCTs) is sub‐optimal has accrued over time (Chan 2005; Glasziou 2008; Hopewell 2008; Moher 2010). In the mid‐1990s, in response to concerns about this issue, an international group of clinical trialists, statisticians, epidemiologists, and biomedical editors developed the CONsolidated Standards Of Reporting Trials (CONSORT) Statement (Begg 1996), which has been twice revised and updated (Moher 2001a; Schulz 2010). The CONSORT Statement is an evidence‐based set of recommendations for reporting two‐arm, parallel‐group RCTs, including a minimum set of items to be reported pertaining to the rationale, design, analysis, and interpretation of the trial (i.e. CONSORT checklist) and a diagram describing flow of participants through a trial (i.e. flow diagram). It is intended to facilitate the complete and transparent reporting of RCTs and in turn aid in their critical appraisal and interpretation.

The CONSORT Statement was first published in 1996 (Begg 1996). It included 21 checklist items pertaining to the rationale, design, analysis, and interpretation of a trial (i.e. CONSORT checklist) and a flow diagram outlining the progress of participants through a trial. In 2001, the checklist (expanded to 22 items) and the flow diagram were revised to reflect emerging evidence that the absence or poor reporting of particular elements of RCTs is associated with biased estimates of treatment effect (Moher 2001a). Some new items were also added because reporting them was found to improve the ability to judge the validity or relevance of trial findings (Moher 2001a). Evidence and examples for each checklist item are provided in an accompanying Explanation and Elaboration (E&E) document (Altman 2001). The second revision, and current version, of the CONSORT Statement (CONSORT 2010) was published in March 2010 (Schulz 2010). It contains an updated 25‐item checklist and flow diagram, also accompanied by an E&E document (Moher 2010). All CONSORT materials are available on the CONSORT website (www.consort‐statement.org; CONSORT Group 2009). For ease, henceforth, 'CONSORT' will refer to this collective body of literature, unless otherwise stated.

The CONSORT Statement has received positive attention, in part through endorsement by biomedical journals; to date, over 600 journals have endorsed it. Such endorsement is typically evidenced by a statement in a journal's 'Instructions to Authors' regarding the use (suggested or required) of CONSORT when preparing trial reports for publication. Some journals publish editorials indicating their support, while others require submission of a CONSORT checklist and/or flow diagram along with the manuscript. As such, while the CONSORT Statement is widely endorsed, there is considerable variation in how CONSORT policies are implemented.

Description of the problem or issue

Concurrent with the publication of the 2001 CONSORT Statement, Moher and colleagues reported the first evaluation of journal endorsement of the CONSORT checklist. They reported that the completeness of reporting of RCTs was higher in CONSORT‐endorsing journals than in one non‐endorsing journal (Moher 2001). Since then, other evaluations have been published that assess, directly or indirectly, the influence of CONSORT on the completeness of reporting of RCTs. In 2006, Plint and colleagues (Plint 2006) published a systematic review synthesising data from all such evaluations to gauge their combined findings about the influence of CONSORT endorsement on the completeness of reporting of RCTs. Despite methodological weaknesses of the eight included evaluations, the review found that endorsement of CONSORT may influence the completeness of reporting of some checklist items. For example, reporting of the method of sequence generation, allocation concealment, and overall number of CONSORT items (i.e. 'total sum score') was more common in RCTs published in CONSORT‐endorsing than in non‐endorsing journals, but CONSORT endorsement seemed to have less effect on the reporting of participant flow and blinding (Plint 2006).

In the six years since this systematic review was published, a number of additional evaluations of the effects of CONSORT on the completeness of reporting have been published. Some of these evaluations directly assess the effect of CONSORT on complete reporting (e.g. Hopewell 2010); others assess complete reporting against CONSORT criteria in a specific medical field or research area, for example RCTs investigating weight loss (e.g. Thabane 2007), glaucoma (Llorca 2005), and surgery (Agha 2007). For these latter evaluations, the effect of CONSORT can be assessed through a post hoc comparison of the completeness of reporting of RCTs published in CONSORT‐endorsing versus non‐endorsing journals.

This systematic review updates Plint et al's review to include and synthesise results that have been published in the time since the first review was conducted.

Why it is important to do this review

The Plint et al systematic review included evaluations published between January 1996 and July 2005 (Plint 2006). Over six years have passed since the search for literature in that review was carried out and a considerable number of additional evaluations have been published that are relevant to include in this update. For readers looking to know whether CONSORT endorsement influences the completeness of reporting, it is necessary to update Plint et al's review and to incorporate the most comprehensive corpus of literature on this topic. This updated review provides a more complete perspective regarding the possible influence of CONSORT on the completeness of reporting of RCTs and, subsequently, will allow journal editors, methodologists, and trialists to understand the potential benefits of using CONSORT when reporting the design, analysis, and interpretation of RCTs.

Objectives

To assess whether journal endorsement of CONSORT is associated with more complete reporting of RCTs, by examining the following comparisons:

comparison 1: completeness of reporting of RCTs published in journals that have and have not endorsed the CONSORT Statement; and/or

comparison 2: completeness of reporting of RCTs published in CONSORT‐endorsing journals before and after endorsement; or

comparison 3: completeness of reporting of RCTs before and after the publication of CONSORT (i.e. 1996 and 2001).

During the review process, two additional comparisons were identified in already included evaluations: completeness of reporting of RCTs published before endorsement in endorsing and non‐endorsing journals, and completeness of reporting of RCTs published in non‐endorsing journals before and after endorsement (where 'after endorsement' was determined by the endorsement dates of their endorsing counterparts). Evaluations formed these comparisons to assess potential confounding by proxy. We collected data for these comparisons as encountered, since they provided information on potential confounders (i.e. the effect of non‐endorsement over time and the effect of potential pre‐existing differences in completeness of reporting between endorsing and non‐endorsing journals). Data for these comparisons were sparse and we carried out no meta‐analyses; these data are available upon request.

Methods

Criteria for considering studies for this review

Types of studies

Any report evaluating the completeness of reporting of RCTs, potentially eligible for any of the three main comparisons, was included; such studies are termed 'evaluations' for the remainder of this report.

We identified evaluations for potential inclusion using the following pre‐specified screening questions:

Does the evaluation involve a relevant comparison (e.g. pre CONSORT publication versus post CONSORT publication or otherwise)?

Does the evaluation examine the influence of the CONSORT checklist on the completeness of reporting of RCTs?

Does the evaluation report any of the following: a) any of the 22 items on the CONSORT checklist; b) any type of overall quality indicator or score; or c) adherence to the CONSORT checklist?

We approached authors of evaluations that were not comparative, or that did not report data in a format suited to our needs, for supplementary information. Any additional evaluations for which a comparison could subsequently be drawn were included (e.g. Dias 2006).

Types of data

We included studies published in biomedical journals, pertaining to any general or medical subspecialty that enabled comparison of the completeness of reporting of RCTs in any of our three main comparison groups.

In addition, this review only includes evaluations of the 1996 and 2001 CONSORT Statements, since publication of the CONSORT 2010 statement coincides with the search dates for this review and so no evaluations could have been conducted and reported in time for inclusion.

Types of methods

Evaluations using any method to identify and evaluate the reporting of RCTs were included in this review. Evaluations may or may not have considered endorsement of CONSORT as the primary 'exposure' of interest. For instance, evaluations that did not specifically assess CONSORT checklist items, but evaluated the reporting of items relating to existing CONSORT items, were included.

Types of outcome measures

Primary outcomes

The primary outcome is the completeness of reporting of RCTs, as measured by adequate or inadequate reporting of any of the following 27 outcomes: 22 items on the 2001 CONSORT checklist, four additional items relating to the reporting of blinding (i.e. blinding of participants, data analyst, outcome assessor, or intervention), or a sum score across aggregate checklist items, as reported in evaluations. The 2001 CONSORT checklist is reproduced in Table 1. We considered the 22 checklist items in the 2001 Statement as the 'core' items and the four additional items on blinding are simply referred to en masse as pertaining to the CONSORT item on 'blinding'. All analyses presented are ordered in line with the CONSORT checklist (i.e. allocation concealment is checklist item number 9 and, hence, results are presented as 1.9, 2.9, and 3.9 for the three comparison groups).
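The 27 outcomes and the 'comparison.item' labelling convention described above can be sketched as follows. The helper names are hypothetical, and the sketch labels only the 22 core items; how the blinding sub‐items and the sum score are numbered in the analyses is not stated here, so they are deliberately left unlabelled.

```python
# Hypothetical sketch of the outcome set and the 'comparison.item' analysis labels.
CORE_ITEMS = 22  # items 1-22 of the 2001 CONSORT checklist (Table 1)
BLINDING_SUBITEMS = ["participants", "data analyst", "outcome assessor", "intervention"]
COMPARISONS = (1, 2, 3)  # the three main comparison groups


def outcome_list() -> list[str]:
    """Return the 27 pre-specified outcomes: 22 core items, 4 blinding sub-items, 1 sum score."""
    outcomes = [f"item {i}" for i in range(1, CORE_ITEMS + 1)]
    outcomes += [f"blinding: {who}" for who in BLINDING_SUBITEMS]
    outcomes.append("total sum score")
    return outcomes


def analysis_label(comparison: int, item: int) -> str:
    """Label a core-item analysis, e.g. allocation concealment (item 9) in comparison 1 -> '1.9'."""
    if comparison not in COMPARISONS:
        raise ValueError(f"unknown comparison: {comparison}")
    if not 1 <= item <= CORE_ITEMS:
        raise ValueError("only core checklist items 1-22 are labelled here")
    return f"{comparison}.{item}"


print(len(outcome_list()))                          # 27
print([analysis_label(c, 9) for c in COMPARISONS])  # ['1.9', '2.9', '3.9']
```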

Table 1. 2001 CONSORT checklist of items to include when reporting a randomised controlled trial.

| PAPER SECTION and topic | Item | Descriptor |
|---|---|---|
| TITLE and ABSTRACT | 1 | How participants were allocated to interventions (e.g. 'random allocation', 'randomised', or 'randomly assigned') |
| INTRODUCTION Background | 2 | Scientific background and explanation of rationale |
| METHODS Participants | 3 | Eligibility criteria for participants and the settings and locations where the data were collected |
| Interventions | 4 | Precise details of the interventions intended for each group and how and when they were actually administered |
| Objectives | 5 | Specific objectives and hypotheses |
| Outcomes | 6 | Clearly defined primary and secondary outcome measures and, when applicable, any methods used to enhance the quality of measurements (e.g. multiple observations, training of assessors) |
| Sample size | 7 | How sample size was determined and, when applicable, explanation of any interim analyses and stopping rules |
| Randomisation Sequence generation | 8 | Method used to generate the random allocation sequence, including details of any restriction (e.g. blocking, stratification) |
| Randomisation Allocation concealment | 9 | Method used to implement the random allocation sequence (e.g. numbered containers or central telephone), clarifying whether the sequence was concealed until interventions were assigned |
| Randomisation Implementation | 10 | Who generated the allocation sequence, who enrolled participants, and who assigned participants to their groups |
| Blinding (masking) | 11 | Whether or not participants, those administering the interventions, and those assessing the outcomes were blinded to group assignment. When relevant, how the success of blinding was evaluated. |
| Statistical methods | 12 | Statistical methods used to compare groups for primary outcome(s); methods for additional analyses, such as subgroup analyses and adjusted analyses |
| RESULTS Participant flow | 13 | Flow of participants through each stage (a diagram is strongly recommended). Specifically, for each group report the numbers of participants randomly assigned, receiving intended treatment, completing the study protocol, and analysed for the primary outcome. Describe protocol deviations from study as planned, together with reasons. |
| Recruitment | 14 | Dates defining the periods of recruitment and follow‐up |
| Baseline data | 15 | Baseline demographic and clinical characteristics of each group |
| Numbers analysed | 16 | Number of participants (denominator) in each group included in each analysis and whether the analysis was by 'intention‐to‐treat'. State the results in absolute numbers when feasible (e.g. 10/20, not 50%). |
| Outcomes and estimation | 17 | For each primary and secondary outcome, a summary of results for each group, and the estimated effect size and its precision (e.g. 95% confidence interval) |
| Ancillary analyses | 18 | Address multiplicity by reporting any other analyses performed, including subgroup analyses and adjusted analyses, indicating those pre‐specified and those exploratory |
| Adverse events | 19 | All important adverse events or side effects in each intervention group |
| DISCUSSION Interpretation | 20 | Interpretation of the results, taking into account study hypotheses, sources of potential bias or imprecision, and the dangers associated with multiplicity of analyses and outcomes |
| Generalisability | 21 | Generalisability (external validity) of the trial findings |
| Overall evidence | 22 | General interpretation of the results in the context of current evidence |

Secondary outcomes

Methodological quality of RCTs included in evaluations, as reported

In addition to primary and secondary outcomes, we have included and described evaluations which met the inclusion criteria, but were not eligible for inclusion in meta‐analyses.

Search methods for identification of studies

We conducted electronic searches of bibliographic databases, known item searching, and reference list scans to identify records published from January 2005 to March 2010, to capture studies reported in the period after the search of the original systematic review (Plint 2006).

The search was purposefully limited to exclude records published after the publication of the CONSORT 2010 Statement (on 25 March 2010), as there was insufficient time for evaluations of CONSORT 2010 to have been carried out. A future update of this systematic review will include evaluations of the 2010 Statement.

Electronic searches

To ensure all possibly relevant evaluations were obtained, we designed the main search strategy to retrieve reports published since the date of the last search of the original review, carried out in July 2005. Specifically, the dates of the search for this review cover publications from January 2005 in order to ensure that articles which may have been published in the first half of 2005, but not indexed at the time of searching during the original review, were identified.

We conducted literature searches in Ovid MEDLINE (January 2005 to 19 March 2010); Ovid EMBASE (January 2005 to 2010 Week 10); ISI Web of Knowledge (including citing reference searches; 2005 to 19 March 2010); the Cochrane Methodology Register; and the Cochrane Database of Systematic Reviews (The Cochrane Library 2010, Issue 1). We searched the Cochrane Methodology Register and the Cochrane Database of Systematic Reviews using the Wiley interface. We searched the Science Citation Index, Social Science Citation Index, and Arts and Humanities Citation Index through the ISI Web of Knowledge interface. Please see Appendix 1 for the full search strategy, which was developed in MEDLINE and tailored to EMBASE.

Searching other resources

Evaluations were also identified by members of the research team when attending conferences, or from discussions with experts in the field.

Data collection and analysis

Selection of evaluations

We conducted all screening using online data management software, DistillerSR®, a program capable of tracking and managing the progress of records (i.e. abstracts and full‐text reports) through a review. Title and abstract screening was completed independently, in duplicate, by two of three authors (LS, LT, LW) using broad screening criteria. All records judged possibly relevant, and all records with conflicting assessments, were carried forward for further review.
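The title and abstract rule above is deliberately liberal: a record is dropped only when both screeners exclude it. A minimal sketch of that rule follows, assuming a simple two‐valued vote per screener; the names are hypothetical and do not reflect DistillerSR's own configuration or interface.

```python
from enum import Enum


class Vote(Enum):
    INCLUDE = "possibly relevant"
    EXCLUDE = "not relevant"


def advances_to_full_text(vote_1: Vote, vote_2: Vote) -> bool:
    """Liberal screening rule: only a record that both screeners exclude is dropped.

    Agreement on inclusion advances the record, and so does any conflict.
    """
    return not (vote_1 is Vote.EXCLUDE and vote_2 is Vote.EXCLUDE)


for pair in [(Vote.INCLUDE, Vote.INCLUDE), (Vote.INCLUDE, Vote.EXCLUDE), (Vote.EXCLUDE, Vote.EXCLUDE)]:
    print(pair[0].name, pair[1].name, advances_to_full_text(*pair))
```

Resolving conflicts in favour of inclusion at this stage trades extra full‐text reading for a lower risk of wrongly discarding an eligible evaluation.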

The full texts of all records identified as potentially eligible were retrieved and independently reviewed for eligibility by two authors (LS and LT) using standardised inclusion criteria developed a priori. Full‐text screening disagreements were resolved by consensus or by an independent third author (DM). Six non‐English language articles were assessed by colleagues fluent in the relevant language, who completed the same standardised inclusion forms as the other assessors.

Potentially eligible studies were either categorised into one of the three main comparisons of this review or required further information from authors to determine eligibility, such as whether included trials were published in endorsing or non‐endorsing journals or, if that information was unavailable, a list of included journals so that review authors could determine each journal's endorsement status and date. We contacted authors for this information during data extraction so that both eligibility information and any necessary data could be obtained in one effort.

For the purpose of this review, endorsement is defined as any of the following situations, implying that, in principle, the CONSORT Statement is incorporated into the editorial process for a particular journal: (a) requirement or recommendation in the journal's 'Instructions to Authors' to follow CONSORT when preparing a manuscript; (b) journal editorial statement endorsing the CONSORT Statement (the flow diagram, the checklist, or both); or (c) editorial requirement for authors to submit a CONSORT checklist and/or flow diagram with their manuscript. We determined endorsement status by first cross‐checking the CONSORT Group's endorser database. If the journal was not listed, we reviewed the journal's 'Instructions to Authors' for related text and, if none was available, searched previous journal issues for an editorial statement. Finally, journals found not to have endorsed CONSORT at the time we checked were assumed never to have been endorsers.
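The order in which endorsement status was checked can be expressed as a short decision cascade. The sketch below is illustrative only. The function and argument names are hypothetical, the in‐memory set stands in for the CONSORT Group's endorser database, and the keyword match on the 'Instructions to Authors' text is a crude stand‐in for a reviewer actually reading it.

```python
from typing import Optional


def is_consort_endorser(journal: str,
                        endorser_db: set[str],
                        instructions_text: Optional[str],
                        editorial_statement_found: bool) -> bool:
    """Check sources in the order described in the text; the first positive source wins."""
    # 1. CONSORT Group endorser database (here just a set of journal names).
    if journal in endorser_db:
        return True
    # 2. 'Instructions to Authors' (None when they could not be obtained).
    #    A keyword match is a crude proxy for a reviewer reading the text.
    if instructions_text is not None and "consort" in instructions_text.lower():
        return True
    # 3. Editorial statement located by searching previous journal issues.
    if editorial_statement_found:
        return True
    # 4. Otherwise the journal is treated as never having endorsed CONSORT.
    return False


db = {"Journal A"}
print(is_consort_endorser("Journal A", db, None, False))                               # True
print(is_consort_endorser("Journal B", db, "Authors should follow CONSORT.", False))   # True
print(is_consort_endorser("Journal C", db, None, False))                               # False
```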

For journals identified as CONSORT endorsers at the time of checking, we sought dates of endorsement by contacting their managing editors or other editorial staff. This information was collected to determine whether RCTs were published a reasonable amount of time after endorsement, such that its effect had sufficient time to be realised in a journal's output. For this review, we considered six months an adequate amount of time. Determining dates of endorsement was resource‐intensive; for evaluations assessing large numbers of RCTs or large numbers of journals it was not feasible to collect this information. Where endorsement status has not been verified for an evaluation, this is noted in the Characteristics of included studies. Evaluations were not excluded on this basis; we used this information to conduct sensitivity analyses, as described below.
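The six‐month lag can be applied mechanically once an endorsement date is known. The sketch below assumes that publication and endorsement dates are available as calendar dates. How RCTs published within the six‐month window were handled is not described in this section, so the 'lag period' category is a hypothetical placeholder rather than the review's rule.

```python
import calendar
from datetime import date
from typing import Optional

LAG_MONTHS = 6  # time allowed for endorsement to influence a journal's output


def add_months(d: date, months: int) -> date:
    """Shift a date forward by whole calendar months, clamping to the month's last day."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def classify_rct(published: date, endorsed: Optional[date]) -> str:
    """Place an RCT relative to its journal's endorsement date."""
    if endorsed is None:
        return "endorsement date unknown"
    if published < endorsed:
        return "before endorsement"
    if published >= add_months(endorsed, LAG_MONTHS):
        return "after endorsement"
    return "lag period"  # hypothetical category: within six months of endorsement


print(classify_rct(date(2008, 3, 1), date(2007, 6, 15)))  # after endorsement
print(classify_rct(date(2007, 9, 1), date(2007, 6, 15)))  # lag period
```

RCTs whose journal endorsement date could not be verified keep a separate label rather than being dropped, in keeping with the text's use of this information for sensitivity analyses instead of exclusion.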

We did not exclude evaluations based on publication status, language of publication, or validity assessment. When multiple reports of a single evaluation were identified and outcomes were overlapping, only outcome data from the main publication were included. Data on additional outcomes presented in secondary publications were included under their corresponding secondary publications.

Data extraction and management

We completed data extraction using standardised electronic forms, where conflicts, reasons for exclusion, and level of agreement were all automatically and centrally managed in web‐based management software, DistillerSR®. One of two authors extracted general characteristics of included evaluations and all data were verified by a second author. Data describing completeness of reporting were extracted by one author using a pre‐specified form; a 10% random sample of evaluations was verified by a second author. Any discrepancies were discussed by both authors.
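The 10% verification sample can be drawn reproducibly with a seeded pseudo‐random generator. In this sketch the seed value and the choice to round the sample size up are assumptions; the text does not state how the sample was drawn. Integer arithmetic is used for the rounding so that floating‐point error cannot inflate the sample size.

```python
import random


def verification_sample(evaluation_ids, percent: int = 10, seed: int = 2010) -> list:
    """Draw a simple random sample of evaluations for checking by a second author.

    The sample size is percent% of the evaluations, rounded up, and never less than one.
    """
    ids = list(evaluation_ids)
    k = max(1, (len(ids) * percent + 99) // 100)  # integer ceiling of n * percent / 100
    rng = random.Random(seed)  # fixed seed makes the draw reproducible and auditable
    return sorted(rng.sample(ids, k))


print(verification_sample(range(1, 51)))  # five of 50 hypothetical evaluation IDs
```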

We extracted the following data from included evaluations:

We extracted general characteristics of evaluations, including their journal of publication, number of included RCTs, number of journals, country of publication, source of funding, and CONSORT checklist version used, as well as any information included in the evaluation pertaining to journal 'quality' (i.e. enforcement of the checklist, editorial policy, size of editorial team, volume of publications, impact factor, and other potential determinants).

We collected completeness of reporting of RCTs in included evaluations across 27 a priori outcome measures (Primary outcomes). These included adequacy of reporting of any of the 22 core 2001 CONSORT checklist items, four additional items pertaining to the 2001 CONSORT checklist item on blinding, and/or a 'sum score' of aggregate checklist items.

For simplicity, we used the items of the 2001 CONSORT checklist as data extraction items, since they encompass both checklist versions: they include all items contained within the 1996 checklist (some reworded for improved reporting) as well as some additional items. When completeness of reporting using the 1996 CONSORT checklist was reported in an evaluation, we included items from that checklist that were the same as those in the 2001 checklist. For those items of the 2001 version which differed from the 1996 version, we conducted subgroup analyses as described below (Subgroup analysis and investigation of heterogeneity).

Again, for simplicity, we refer to core checklist items with abbreviated descriptions according to their 'Paper section and topic' as found on the CONSORT 2001 checklist. For example, when we refer to reporting of 'title and abstract' and/or 'item one', we are addressing whether reports of RCTs in evaluations contained "randomised" in the title or abstract. For full details of associated recommendations for these items (or more appropriately, methodological guidance) please see Table 1.

The four items on blinding stem from the 2001 CONSORT checklist item recommending that adequate reporting of blinding should detail "whether or not participants, those administering the interventions, and those assessing the outcomes were blinded to group assignment". Reflecting the original systematic review (Plint 2006), the included evaluations, and subsequent changes made to the CONSORT 2010 checklist, we collected reporting of blinding in four distinct items, in addition to the 'composite' item (i.e. blinding by any description) contained in the 2001 CONSORT checklist. These were: blinding of participants, blinding of the intervention, blinding of outcome assessment, and blinding of the data analyst. We sub‐categorised analyses for this item, as described in the subgroup analysis section below.

While calculation of a total sum score based on several CONSORT items is potentially misleading as items are of unequal importance, we collected this information if reported in included evaluations.
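To make this caveat concrete, the sketch below computes an unweighted total sum score (every adequately reported item counts as one point, regardless of its importance) and compares two groups of such scores with a standardised mean difference. The abstract reports the sum‐score result as an SMD, but the estimator is not named in this section. Hedges' g with its small‐sample correction is used here as a common choice, and the scores are hypothetical.

```python
import math
from statistics import mean, stdev


def total_sum_score(item_ratings: list[bool]) -> int:
    """Number of checklist items rated as adequately reported in one RCT (all items weighted equally)."""
    return sum(1 for adequate in item_ratings if adequate)


def hedges_g(group_a: list[float], group_b: list[float]) -> float:
    """Standardised mean difference (Hedges' g) between two groups of scores."""
    n1, n2 = len(group_a), len(group_b)
    pooled_var = ((n1 - 1) * stdev(group_a) ** 2 + (n2 - 1) * stdev(group_b) ** 2) / (n1 + n2 - 2)
    d = (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)
    correction = 1 - 3 / (4 * (n1 + n2) - 9)  # small-sample bias correction
    return d * correction


print(total_sum_score([True, False, True, True]))  # 3
print(round(hedges_g([3, 4, 5], [1, 2, 3]), 2))    # 1.6
```

Because each item contributes one point, an RCT that omits allocation concealment but reports its recruitment dates scores the same as one that does the reverse, which is exactly why the sum score can mislead.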

We abstracted data on assessment of methodological quality of RCTs included within evaluations, if reported. Since a recent study (Dechartres 2011) identified 74 different items and 26 different scales used for assessing the quality of RCTs, we accepted measurement of methodological quality by any of these means (e.g. Jadad score, Olivo 2008, Schulz allocation concealment, MINCIR, MINCIR Score); no specific measure was pre‐specified for this review.

Validity assessment in included evaluations

The validity of included evaluations was assessed by one author (LS) and all assessments were independently verified by one of three authors (LT, AP, LW); we resolved all conflicts by consensus. We assessed validity using an a priori checklist developed by the research team for the purpose of this review. As no formal checklist for assessing validity of quasi‐experimental evaluations of RCTs currently exists, our research team developed a checklist based on principles of internal and external validity (Campbell 1966). We used the Data Collection Checklist developed by the Cochrane Effective Practice and Organisation of Care Review Group and the 'Risk of bias' tool as guides (Cochrane EPOC 2009; Higgins 2008). The resulting criteria used to gauge validity of evaluations in this review were as follows:

1. The RCTs included in the study represented a large cohort (i.e. at least an entire year), or were randomly chosen from a large cohort.
2. The reviewer(s) who assessed CONSORT criteria were blinded to study authors, institutions, sponsorship, and/or journal name.
3. Consideration of potential clustering by journal was reported (if potential for clustering did not exist, the study was deemed 'low risk').
4. There was no evidence of selective outcome reporting.
5. More than one reviewer assessed adherence to CONSORT criteria.
6. If more than one reviewer assessed CONSORT criteria, inter‐reviewer agreement was at least 90% or the kappa statistic was at least 0.8.
7. If quality of included RCTs was assessed, the reviewer(s) conducted a blinded assessment.
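Criterion 6 combines two alternative thresholds. The minimal Python sketch below shows how they could be checked for two reviewers' judgements. It assumes Cohen's kappa (the review does not state which kappa statistic evaluations reported), and the function names and example judgements are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two reviewers' categorical judgements of the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("reviewers must judge the same non-empty set of items")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # chance agreement from each reviewer's marginal category frequencies
    categories = counts_a.keys() | counts_b.keys()
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / n ** 2
    if expected == 1.0:
        return 1.0  # both reviewers used one identical category for every item
    return (observed - expected) / (1.0 - expected)


def meets_criterion_6(rater_a, rater_b, min_agreement=0.90, min_kappa=0.80):
    """True if percent agreement is at least 90% or kappa is at least 0.8."""
    agreement = sum(a == b for a, b in zip(rater_a, rater_b)) / len(rater_a)
    return agreement >= min_agreement or cohens_kappa(rater_a, rater_b) >= min_kappa


# hypothetical adequacy judgements by two reviewers for ten RCTs
reviewer_1 = ["yes"] * 9 + ["no"]
reviewer_2 = ["yes"] * 8 + ["no", "no"]
print(round(cohens_kappa(reviewer_1, reviewer_2), 3), meets_criterion_6(reviewer_1, reviewer_2))
# prints: 0.615 True
```

In this example agreement is exactly 90%, so the criterion is met even though kappa is only about 0.62; the two thresholds act as alternatives rather than joint requirements.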

We assigned each criterion a judgement of yes (i.e. low risk of bias/high validity), no (i.e. high risk of bias/low validity), or can't tell (unclear risk of bias). For some criteria, we allowed an additional rating of 'not applicable' if the criterion was irrelevant to a given comparison, or depended on the rating of a previous criterion. For instance, there was no potential for clustering by journal (criterion 3) in comparisons 2 and 3. Criterion 6 depended on the rating for criterion 5 being 'yes' and was therefore not applicable when that rating was 'no'. Likewise, criterion 7 depended on whether assessment of methodological quality was carried out. For these three criteria (3, 6, and 7) we chose neither to penalise evaluations with 'not applicable' ratings nor to rate them as 'unclear', since 'unclear' is taken to mean 'not reported', which is also incorrect. As such, the only remaining option that would not connote any negative judgement was a rating of 'yes' (i.e. low risk of bias/high validity).
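The rating rules described above can be expressed as a small decision function. This is an illustrative sketch only; the function names and the use of `None` for "no information in the evaluation report" are assumptions, not part of the review's tooling.

```python
from typing import Optional

# criteria whose 'not applicable' ratings were recorded as 'yes' in this review
NA_AS_YES = {3, 6, 7}


def rate_criterion(criterion: int, judgement: Optional[str], applicable: bool = True) -> str:
    """Return 'yes', 'no' or 'unclear' for one validity criterion of one evaluation.

    judgement is 'yes', 'no', or None when the evaluation report gave no information.
    """
    if not applicable:
        if criterion not in NA_AS_YES:
            raise ValueError(f"criterion {criterion} has no 'not applicable' option")
        # not penalised, and not 'unclear' (which would wrongly imply 'not reported')
        return "yes"
    if judgement is None:
        return "unclear"
    if judgement not in ("yes", "no"):
        raise ValueError(f"unexpected judgement {judgement!r}")
    return judgement


def criterion_6_applicable(criterion_5_rating: str) -> bool:
    # criterion 6 (agreement) is not applicable only when criterion 5 was rated 'no'
    return criterion_5_rating != "no"


# comparison 2: no potential for clustering by journal, so criterion 3 is 'not applicable'
print(rate_criterion(3, None, applicable=False))  # prints: yes
```

The sketch makes explicit why 'not applicable' ratings can inflate apparent validity: they are indistinguishable from a genuine 'yes' in the final rating.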

Note that, for criterion 3 above, we report the terminology used when the validity assessment was conducted. From here on we avoid the term 'clustering', since this potential bias is more aptly described as confounding by journal.

Measures of the effect of the methods

Comparison 1 examines the completeness of reporting of RCTs published in endorsing and non‐endorsing journals, comparison 2 examines the completeness of reporting of RCTs published in journals before and after endorsement, and comparison 3 examines completeness of reporting of RCTs before and after publication of CONSORT. Where data from a single evaluation were applicable to more than one comparison, the evaluation was included for each comparison. For instance, where data from an evaluation comparing endorsing and non‐endorsing journals were available, it was sometimes possible to use data from only the endorsing journals to also compare the reporting before and after endorsement.

For the primary outcome, where completeness of reporting was represented by one or more of the 22 CONSORT 2001 checklist items or the four additional blinding items, we collected dichotomised adherence to each item. Where evaluations used more than two categories to judge adherence to a given checklist item, we collapsed these into a dichotomy between 'adequately' and 'inadequately' reported RCTs; for instance, an item judged as 'partially' reported was considered 'inadequate'. Within each comparison, for each dichotomous outcome, we then calculated the proportion of RCTs in each comparison group of each evaluation that adequately reported the item. Using these proportions, we compared completeness of reporting between comparison groups (i.e. endorsers versus non‐endorsers, before versus after endorsement, pre versus post publication) in each evaluation using a risk ratio (RR) with a 99% confidence interval for each outcome. An RR greater than 1 was taken to indicate relatively increased reporting of a CONSORT item following CONSORT endorsement. Where completeness of reporting of RCTs was represented by a sum score of aggregated checklist items, we collected the mean sum score for each comparison group within an evaluation. We then calculated the standardised mean difference (SMD) with 99% confidence interval to estimate the difference in completeness of reporting between comparison groups in each evaluation. An SMD greater than 0 indicates better overall reporting of items following CONSORT endorsement.
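The evaluation-level calculation can be sketched in Python as follows. This is a minimal sketch, assuming a standard log-scale (Wald) interval for the risk ratio; the judgement labels and counts are hypothetical, and the review's software may differ in detail (for example, in continuity corrections for zero cells).

```python
import math
from statistics import NormalDist


def is_adequate(judgement: str) -> bool:
    """Collapse multi-level adherence judgements to a dichotomy.

    Only an explicit 'adequate'/'yes' counts as adequate; 'partial' and all other
    categories count as inadequate. The label strings are hypothetical.
    """
    return judgement.strip().lower() in {"adequate", "yes"}


def risk_ratio(adequate_1, n_1, adequate_2, n_2, level=0.99):
    """Risk ratio of adequate reporting, group 1 vs group 2, with a log-scale Wald CI."""
    if adequate_1 == 0 or adequate_2 == 0:
        raise ValueError("zero count: a continuity correction would be needed")
    rr = (adequate_1 / n_1) / (adequate_2 / n_2)
    se_log = math.sqrt(1 / adequate_1 - 1 / n_1 + 1 / adequate_2 - 1 / n_2)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 2.576 for a 99% interval
    return rr, rr * math.exp(-z * se_log), rr * math.exp(z * se_log)


def percent_more(rr: float) -> float:
    """Express an RR (or a CI bound) as a percentage increase, e.g. 1.25 -> 25%."""
    return (rr - 1) * 100


judgements = ["adequate", "partial", "inadequate", "adequate"]
print(sum(is_adequate(j) for j in judgements))  # prints: 2

# hypothetical evaluation: 30/60 RCTs adequate in endorsing vs 15/60 in non-endorsing journals
print(risk_ratio(30, 60, 15, 60))
```

`percent_more` reflects how the Results translate a 99% CI such as 1.25 to 2.61 into "between 25% and 161% more" adequate reporting.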

Due to the design of included evaluations and the poor availability of data needed to adjust estimates of effect at the evaluation level, we were unable to adjust for potential confounders (i.e. improvements in completeness of reporting over time and/or differences in journal editorial 'quality'). We therefore introduced 99% confidence intervals post hoc to ensure that conservative estimates of effect are presented throughout this review.

Data collected on the methodological quality of RCTs within evaluations were reported as collected in evaluations. As these were expected to be variable and inconsistently reported across evaluations, we planned no measures of effect to estimate whether groups within each comparison differed on methodological quality.

Issues of potential confounding

There are two potential factors that could confound the estimates of effect obtained for each evaluation. The first is a possible uneven distribution of journal quality (defined in Data extraction and management) between endorsing and non‐endorsing journals in comparison 1. The second is time, since completeness of reporting may have changed naturally over time, with or without endorsement or publication of CONSORT. Time potentially affects effect estimates in all three comparisons of this review; however, it is not considered a true confounder for comparison 1, since it can only play a role where comparison groups were sampled at different times. Please see Assessment of risk of bias in included studies.

Dealing with missing data

We experienced two types of missing data: endorsement status of journals included in evaluations and date of endorsement of journals determined to be endorsers by either authors of the evaluation or review authors.

Endorsement status of journals publishing RCTs included in each evaluation was needed to determine whether evaluations were eligible for inclusion in comparison 1, comparison 2, or neither. As described in the Selection of studies and Data extraction and management sections, we contacted corresponding authors by email a maximum of three times over an eight‐month period to request these data. If additional data were needed to complete the comparative analysis (i.e. adequacy of reporting data for each checklist item for each included RCT), these were requested at the same time.

Where the date of CONSORT endorsement by a journal was not explicit, data for RCTs that consequently could not be identified as published in either an endorsing or non‐endorsing journal were excluded from the analyses to prevent misclassification. Where this would have excluded a high proportion of the data for a given evaluation, we instead categorised these reports as published in an endorsing journal, a conservative classification that would underestimate the effect of CONSORT endorsement. Similarly, for before and after comparisons, RCTs published in 2001 (or, less frequently, 1996) were classified as pre‐CONSORT to ensure that any estimate of the effect of CONSORT would be conservative.
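The classification rules above can be sketched as follows, using the endorser definition stated under Sensitivity analysis (endorsement at least six months before RCT publication). The function names and return labels are hypothetical, and treating RCTs published less than six months after endorsement as 'not endorsing' is an assumption of this sketch rather than a rule stated in the review.

```python
from datetime import date
from typing import Optional


def months_between(earlier: date, later: date) -> int:
    """Whole calendar months from `earlier` to `later` (day of month ignored)."""
    return (later.year - earlier.year) * 12 + (later.month - earlier.month)


def classify_rct(published: date, is_endorser: Optional[bool], endorsed: Optional[date],
                 fall_back_to_endorsing: bool = False) -> Optional[str]:
    """Return 'endorsing', 'not endorsing', or None (RCT excluded from the analysis)."""
    if is_endorser is False:
        return "not endorsing"
    if is_endorser and endorsed is not None:
        # endorser: endorsement at least six months before the RCT was published
        # (assumption: RCTs inside that six-month window count as 'not endorsing')
        return "endorsing" if months_between(endorsed, published) >= 6 else "not endorsing"
    # status or endorsement date unclear: exclude, unless too much data would be lost,
    # in which case classify as endorsing (conservative; biases the RR towards 1)
    return "endorsing" if fall_back_to_endorsing else None


def consort_period(published_year: int, consort_year: int = 2001) -> str:
    """RCTs from the CONSORT publication year itself are conservatively 'pre'."""
    return "pre" if published_year <= consort_year else "post"


print(classify_rct(date(2005, 7, 1), True, date(2005, 1, 15)))  # prints: endorsing
```

Both fallbacks push misclassified RCTs into the group that dilutes any difference, which is why the resulting estimates are described as conservative.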

Assessment of heterogeneity

We explored consistency across the included evaluations quantitatively, using the I² statistic, and by visual inspection (Deeks 2008). Variation in journal policy regarding how CONSORT is implemented, for example whether submission of a completed checklist is 'required' versus 'recommended', will likely contribute to methodological heterogeneity of results across included evaluations. However, ongoing research by the CONSORT group suggests that how CONSORT is implemented in the editorial process is difficult to determine without speaking to journal editorial staff directly. As our experience with this review has shown, even when contact is made, this information is vague and generally no standardised processes are in place. As this was beyond the scope and feasibility of the current review, we were unable to explore this factor meaningfully.
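For reference, I² is derived from Cochran's Q as I² = max(0, (Q − df)/Q) × 100%, where df is the number of evaluations minus one. A minimal Python sketch, assuming inverse-variance weights on evaluation-level log risk ratios (the function name and inputs are hypothetical):

```python
def heterogeneity(log_rr, variances):
    """Cochran's Q and I² (as a percentage) for evaluation-level log risk ratios."""
    weights = [1.0 / v for v in variances]  # inverse-variance weights
    pooled = sum(w * y for w, y in zip(weights, log_rr)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, log_rr))
    df = len(log_rr) - 1
    # I² is truncated at 0 when Q is smaller than its degrees of freedom
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, i2


# two hypothetical evaluations with very different log RRs and equal variances
print(heterogeneity([0.0, 1.0], [0.1, 0.1]))
```

An I² of 75%, as reported for 'allocation concealment', means roughly three quarters of the observed variation in effect estimates is attributed to between‐evaluation differences rather than chance.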

Assessment of reporting biases

Selective reporting of outcomes was assessed for each included evaluation as a component of validity assessment (Appendix 2). We searched for a review protocol and, in the absence of a protocol, compared the methods and results sections of included evaluations. An advantage of this review's design is that unpublished data provided by evaluation authors were included, which helps to mitigate the potential issue of selective reporting of CONSORT items.

Although funnel plots could be generated to assess potential publication bias for each meta‐analysis in this review, the suitability of this method for evaluations of RCTs is unexplored. We know of no alternative methods for assessing publication bias in this review of evaluations of RCTs. Moreover, the number of included evaluations per meta‐analysis would frequently be too small for such an assessment; as such, we are unable to determine any failure to report within the literature.

Data synthesis

We used a pooled RR with 99% confidence intervals to estimate the overall difference between groups within each comparison. We used a random‐effects model for all analyses. All available data contributed to our main analyses.
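The review does not name its random‐effects estimator. The sketch below assumes the DerSimonian and Laird method, a common default in meta‐analysis software, pooling evaluation‐level log risk ratios and returning a 99% confidence interval; the function name and example inputs are hypothetical.

```python
import math
from statistics import NormalDist


def pool_random_effects(log_rr, variances, level=0.99):
    """DerSimonian-Laird random-effects pooled RR with a `level` confidence interval.

    log_rr: evaluation-level log risk ratios; variances: their within-evaluation variances.
    Returns (pooled RR, lower, upper, tau²).
    """
    k = len(log_rr)
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, log_rr)) / sum_w
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, log_rr))
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (k - 1)) / c) if c > 0 else 0.0  # between-evaluation variance
    w_re = [1.0 / (v + tau2) for v in variances]  # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_re, log_rr)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return math.exp(pooled), math.exp(pooled - z * se), math.exp(pooled + z * se), tau2


# hypothetical log RRs and variances from three evaluations
print(pool_random_effects([0.2, 0.6, 0.4], [0.02, 0.05, 0.03]))
```

Adding tau² to every evaluation's variance widens the interval when evaluations disagree, which, together with the 99% level, makes the pooled estimates more conservative than a fixed‐effect 95% analysis.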

Some evaluations totalled adherence to all or a subset of CONSORT checklist items and reported averages across the assessed RCTs. Because these continuous data are on differing scales, we calculated SMDs with 99% confidence intervals for this outcome. When medians and ranges were reported instead of means and standard deviations, we used suitable approximations (Higgins 2008). When necessary, we imputed standard deviations.
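A minimal Python sketch of the SMD calculation follows. It assumes Hedges' bias‐corrected g with a commonly used large‐sample variance; the review does not state which SMD variant or median/range approximation was applied, so the crude approximation below (median as mean, range/4 as SD) is likewise an assumption, and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def hedges_g(mean_1, sd_1, n_1, mean_2, sd_2, n_2, level=0.99):
    """Bias-corrected SMD (Hedges' g) of group 1 vs group 2 with a `level` CI."""
    df = n_1 + n_2 - 2
    sd_pooled = math.sqrt(((n_1 - 1) * sd_1 ** 2 + (n_2 - 1) * sd_2 ** 2) / df)
    d = (mean_1 - mean_2) / sd_pooled
    j = 1 - 3 / (4 * df - 1)  # small-sample correction factor
    var_d = (n_1 + n_2) / (n_1 * n_2) + d ** 2 / (2 * (n_1 + n_2))
    g, se = j * d, j * math.sqrt(var_d)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return g, g - z * se, g + z * se


def mean_sd_from_median_range(median, minimum, maximum):
    """Crude approximation (assumption): median ~ mean and range / 4 ~ SD."""
    return median, (maximum - minimum) / 4


# hypothetical total sum scores: endorsing vs non-endorsing journals
print(hedges_g(12.0, 2.0, 20, 10.0, 2.0, 20))
```

Because each evaluation's sum score is divided by its own pooled SD, scores built from different subsets of checklist items become comparable on a common, unitless scale.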

Data from evaluations that compared the methodological quality of RCTs in CONSORT‐endorsing journals using measures not otherwise evaluated in this review were described qualitatively and not included in meta‐analysis.

In addition to our main analyses, we conducted a descriptive analysis of the included evaluations based on general characteristics of the evaluations. For example, we documented the number of RCTs and journals assessed in those evaluations and the validity of those evaluations.

Subgroup analysis and investigation of heterogeneity

We did not pre‐specify any subgroups for analysis. However, post hoc, we decided that for five items of the 1996 checklist that underwent substantial modifications (i.e. rearrangement and rewording) in the 2001 checklist, analyses would be subgrouped by CONSORT checklist version (i.e. 1996 or 2001). These items are 'title and abstract', 'outcomes', 'sample size', 'participant flow', and 'numbers analysed'.
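Differences between the 1996 and 2001 subgroups are reported in the Results as P values from a test for subgroup differences. The sketch below assumes the usual chi‐square test on the two subgroup pooled log risk ratios (1 degree of freedom), recovering standard errors from 99% confidence intervals; the function names are hypothetical and the review's software may compute the test slightly differently.

```python
import math


def se_from_ci(lower, upper, z=2.5758):
    """Standard error of a log RR recovered from its 99% CI (z for 99% is about 2.5758)."""
    return (math.log(upper) - math.log(lower)) / (2 * z)


def subgroup_difference(log_rr_a, se_a, log_rr_b, se_b):
    """Test for a difference between two subgroup pooled log RRs (chi-square, 1 df).

    Returns (Q_between, P). For 1 df, P(chi-square > Q) = erfc(sqrt(Q / 2)).
    """
    w_a, w_b = 1 / se_a ** 2, 1 / se_b ** 2
    overall = (w_a * log_rr_a + w_b * log_rr_b) / (w_a + w_b)
    q_between = w_a * (log_rr_a - overall) ** 2 + w_b * (log_rr_b - overall) ** 2
    return q_between, math.erfc(math.sqrt(q_between / 2))


# hypothetical subgroups: 1996 RR 1.05 (0.90 to 1.22) vs 2001 RR 1.40 (1.10 to 1.78)
print(subgroup_difference(math.log(1.05), se_from_ci(0.90, 1.22),
                          math.log(1.40), se_from_ci(1.10, 1.78)))
```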

In addition, because data on adequacy of reporting of blinding were collected as five different outcomes in this review (as described in Data extraction and management), we sub‐categorised meta‐analyses for this item (blinding) by each of these five outcomes and calculated pooled estimates of effect within each subcategory.

Sensitivity analysis

As previously stated, when CONSORT endorsement status for a subset of journals in an evaluation was not available, we conducted a sensitivity analysis comparing pooled risk ratios with and without evaluations whose journals were not strictly compliant with our definition of a CONSORT endorser (i.e. endorsement occurred at least six months prior to publication of the RCT). We also conducted sensitivity analyses excluding evaluations that we considered to produce outlying effect estimates on inspection of the forest plots.

Other methodological considerations

Review updating

Given the substantial number of new evaluations included in this update, we treated this update as if it were an original review following the original protocol. We conducted a full literature search from six months before the end search date of the original review (Plint 2006) to as recent a date as possible. We then screened all retrieved evaluations, at which point inclusion of the original eight evaluations was confirmed. We extracted general characteristics and full data, and assessed validity, for all included evaluations in the same manner. As a means of validation, we then compared data extracted for the original eight evaluations with the original published results. Data provided by authors and modified for inclusion in the original review were not sought again.

Results

Description of studies

Results of the search

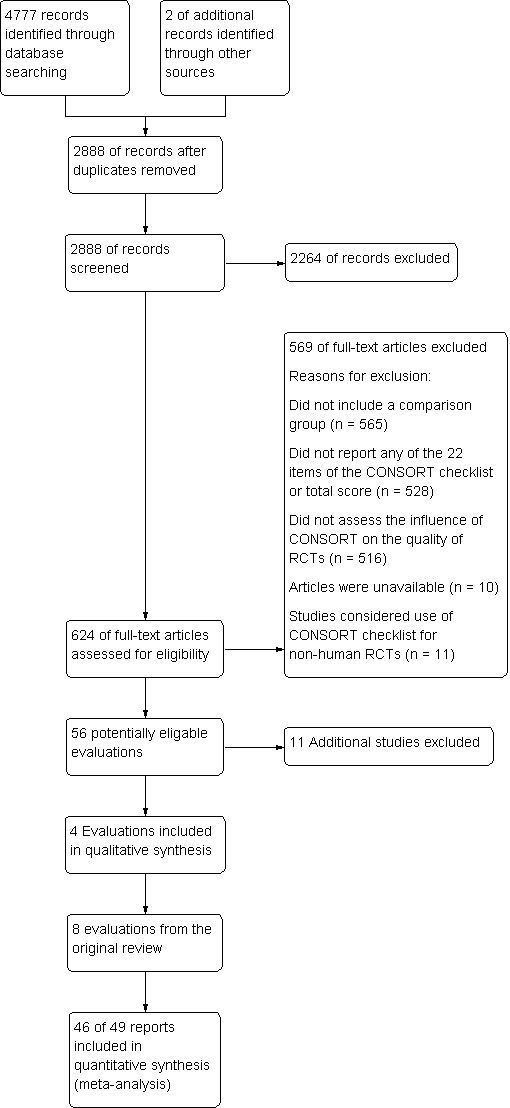

Our electronic search strategy identified a total of 4777 records. Two additional evaluations (Dickinson 2002; Kidwell 2001), presented as posters at Cochrane Colloquia, were identified by members of the research team. After removing duplicates, 2888 records remained as potentially relevant articles. Details about the flow of evaluation records through this review are provided in the Preferred Reporting Items for Systematic Reviews and Meta‐Analyses (PRISMA) flow diagram (Moher 2009; Figure 1).

1.

Flow of evaluations through this review

Content experts identified four evaluations before the search was conducted (Agha 2007; Peckitt 2007; Smith 2008; Wang 2007), all of which were also identified through the electronic search. No additional evaluations were identified by screening reference lists of eligible evaluations.

Included studies

After title and abstract screening, we retrieved and reviewed 624 full‐text articles. Fifty evaluations, reported in 53 publications, were deemed eligible for inclusion (Figure 1).

We considered three pairs of evaluations to be potential multiple reports of each other as they reported outcomes from the same data set (Hopewell 2010 and Yu 2010; Balasubramanian 2006 and Tiruvoipati 2005; Spring 2007 and Thoma 2006). For 35 evaluations, additional data were needed to determine eligibility or to define the comparative analysis. Of 21 authors who responded, 20 were able to provide additional information to supplement the published data. Some of the information received from authors was not in the necessary format to allow inclusion in meta‐analyses. In these cases, only data provided in the evaluation report were included in meta‐analyses. One included evaluation was an abstract (Peckitt 2007), for which all necessary data were fully reported; a full‐text article for this evaluation was not available at the time of data extraction. One evaluation (Dickinson 2002) was presented as a poster and was not published as a full article. For this evaluation, supplementary details were supplied by the evaluation author. All other included evaluations were journal publications. An additional author (Ellis 2005) provided data that confirmed that their evaluation was ineligible for inclusion.

The total number of included RCTs was 16,604 (median per evaluation (interquartile range, IQR) 123 (77 to 226)). Included evaluations reviewed RCTs published in a median of six (IQR 3 to 26) journals. Two evaluations reported on especially large numbers of RCTs (Hopewell 2010; Wang 2007), with 1135 and 7496 RCTs respectively.

Thirty‐five included evaluations used CONSORT checklist items to assess completeness of reporting of RCTs within a given medical area, from which we could obtain information to form suitable comparisons. Seven evaluations did not list the influence of CONSORT or RCT adherence to the CONSORT checklist as primary or secondary outcomes, but assessed reporting on the basis of self‐determined methodological outcomes consistent with the CONSORT checklist, which in turn allowed a suitable comparison for our review.

All included evaluations were published in English. Seventeen evaluations considered the influence of the 1996 CONSORT checklist, 25 reported data for the 2001 checklist, and the remaining eight considered outcomes from some form of modified CONSORT checklist. For example, Bian 2006 modified the CONSORT checklist to suit their field of study or objectives. Forty‐one evaluations addressed reporting quality by focusing on trials published within a specific medical field; these fields were broad and diverse, including, for example, behavioural health, urology, drug abuse, and anaesthesiology.

Some evaluations were eligible for more than one of our three comparisons. Across these comparisons, 29 evaluations were included in comparison 1 (CONSORT endorsers versus CONSORT non‐endorsers), 11 evaluations were included in comparison 2 (CONSORT‐endorsing journals, before and after endorsement), and 21 evaluations were included in comparison 3 (before and after CONSORT publication). Overall, 69 outcomes were quantitatively reported across the three comparison groups (mean of eight outcomes reported per evaluation).

Evaluations used varying definitions for endorsement. Of the total number of included RCTs, 84% (13,955/16,604) across 85% (45/53) of evaluations were published in journals which endorsed CONSORT at least six months prior to RCT publication (as defined in Selection of studies).

Eight evaluations also assessed RCT quality by proxy, using measures of methodological quality: eight assessed quality using the Jadad Score (Jadad 1996); three assessed the completeness of reporting of allocation concealment; two used Schulz allocation concealment (Schulz 1995); and four used other scores or means of quality assessment (Effect of methods).

Excluded studies

We screened 2888 records by title and abstract and excluded 2264 because they did not assess completeness of reporting of RCTs. Of the remaining 56 potentially eligible evaluations, we excluded a further 11 from the review at the data extraction phase due to unavailability of data (Excluded studies).

Risk of bias in included studies

Validity assessment of included studies

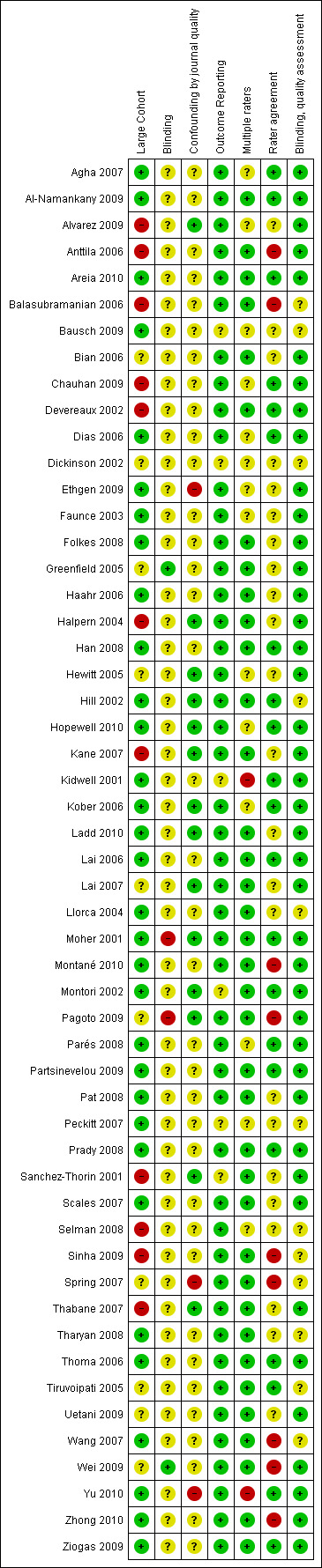

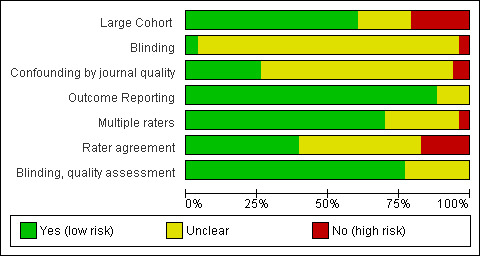

Overall, the rated validity of included evaluations was high or unclear (Figure 2; Figure 3). The majority of included evaluations had a large cohort, did not demonstrate selective reporting of outcomes, had more than one rater assessing CONSORT criteria and, if methodological quality was assessed using another tool, performed blinded assessments. We note, however, that for this latter domain, as well as those pertaining to criteria 3 and 6 (as described in Assessment of risk of bias in included studies), a rating of high validity may overestimate the validity of a given evaluation, because 'not applicable' ratings were recorded as 'yes'.

2.

'Risk of bias' summary: review authors' judgements about each risk of bias item for each included study.

3.

'Risk of bias' graph: review authors' judgements about each risk of bias item presented as percentages across all included studies.

Across domains, poor reporting of included evaluations frequently prevented us from assessing validity, contributing to the large number of 'unclear' ratings. This 'unclear' rating also reflects the need for improvement in the validity assessment tool used in this review. For instance, whether or not confounding by journal occurred was difficult to assess since, for some evaluations, we used data provided by authors to create our own comparisons, thereby nullifying any adjustments for confounding that authors may have carried out. Moreover, as frequently discussed with regard to assessing the quality of RCTs, the reporting of included evaluations may not reflect their actual conduct; however, information on many of our items could not be obtained from the text, and we therefore rated these items 'unclear'.

It is important to note that these evaluations were not randomised trials; fewer than 8% (4/53) of the evaluations reported adjusting for potential confounding factors. For evaluations that did not adjust for confounding (criterion 3), estimates of effect may be confounded by the natural improvement in completeness of reporting of RCTs over time, or by journal 'quality', as discussed above ('Issues of potential confounding').

Effect of methods

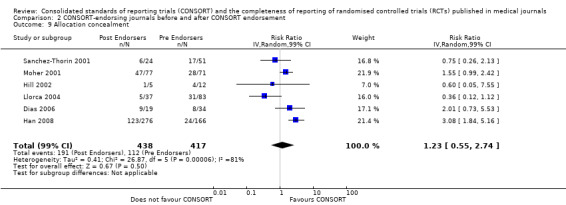

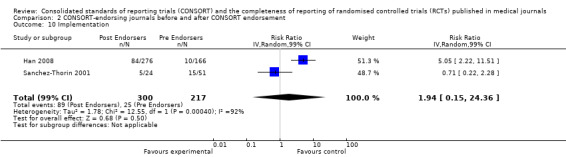

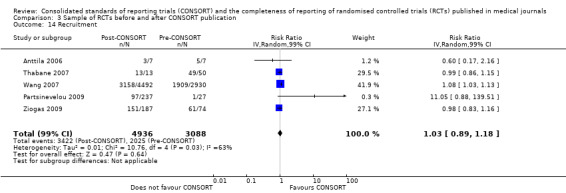

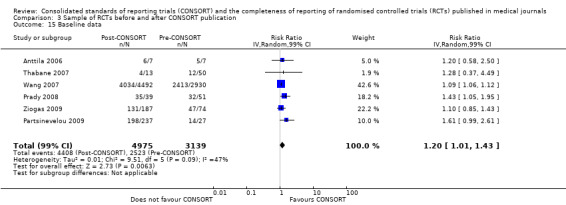

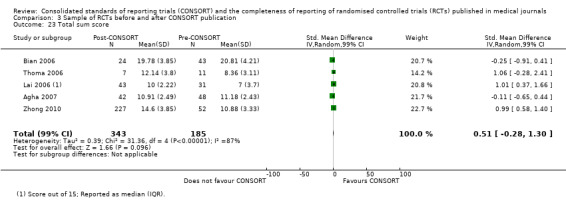

Comparison 1: Completeness of reporting of randomised controlled trials (RCTs) in CONSORT‐endorsing journals versus non‐endorsing journals

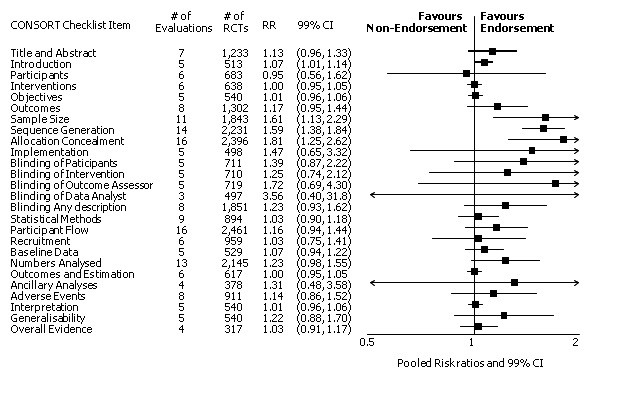

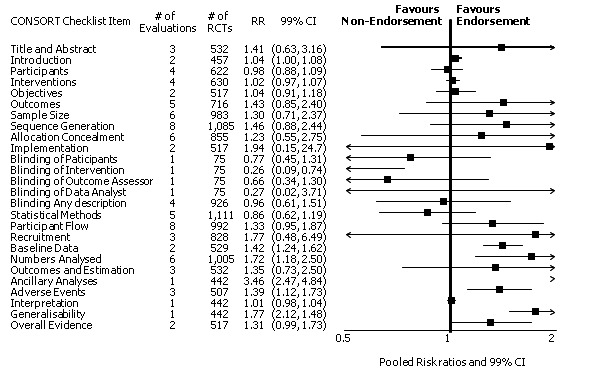

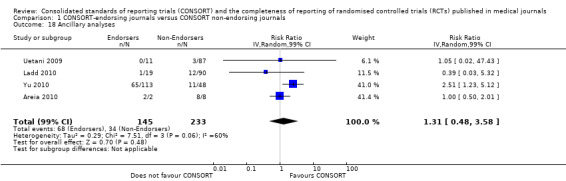

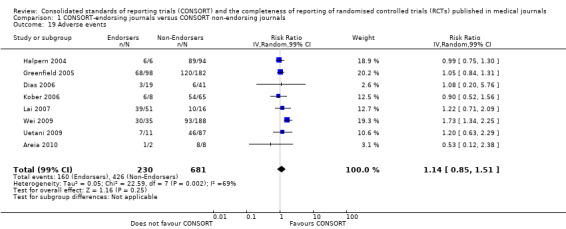

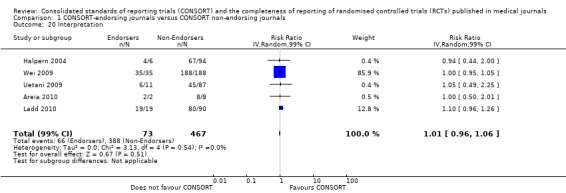

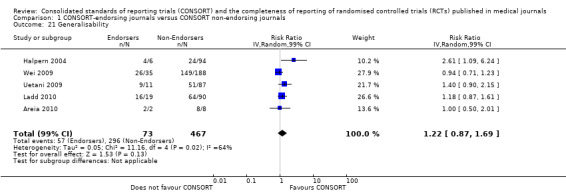

Twenty‐nine evaluations were included in this comparison, with RCT‐level data for at least one of the 2001 CONSORT checklist items, blinding subcategories, or total sum score. Across 27 potential outcomes, the number of evaluations per meta‐analysis varied (median (interquartile range, IQR) 6 (5 to 8)). 'Allocation concealment' and 'participant flow' were reported in the largest number of included evaluations: 16 each, with 2396 and 2140 assessed RCTs respectively. 'Ancillary analysis' and 'overall evidence' were reported in the fewest evaluations included in meta‐analyses, four evaluations each, assessing 378 and 317 RCTs respectively. Results for all outcomes in this comparison are presented in Figure 4.

4.

Pooled risk ratios across assessed 2001 CONSORT checklist items with 99% confidence intervals for primary comparison, adherence of RCTs published in CONSORT‐endorsing journals versus RCTs published in CONSORT non‐endorsing journals

Plot generated in Comprehensive Meta‐analysis Version 2.0 (CMA).

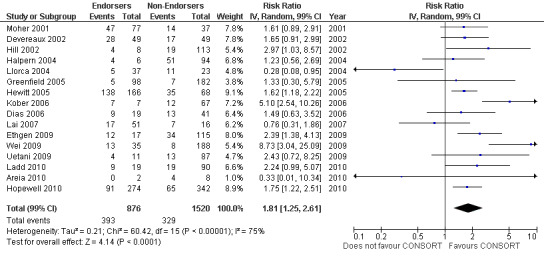

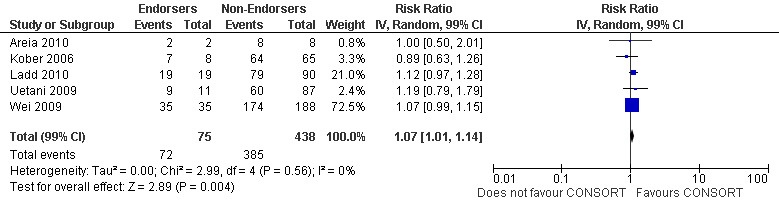

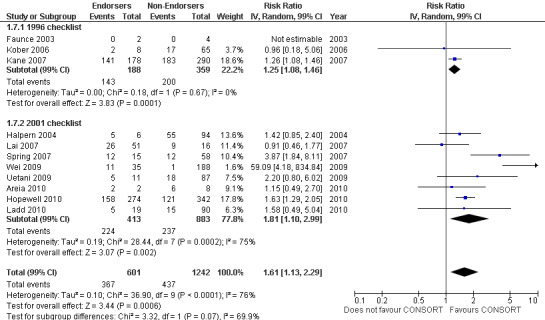

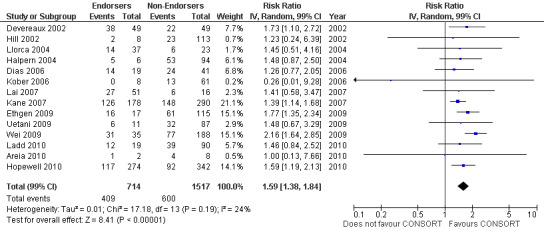

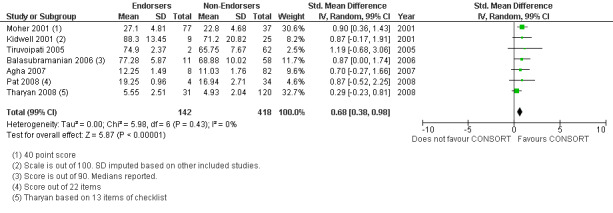

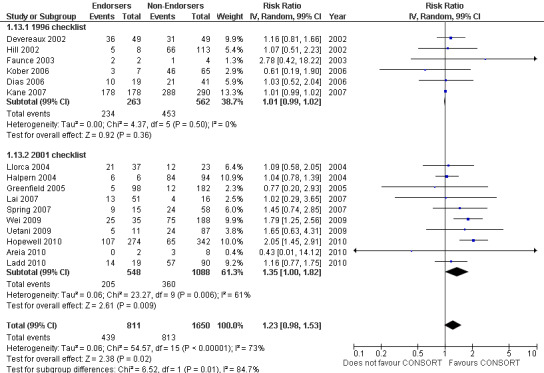

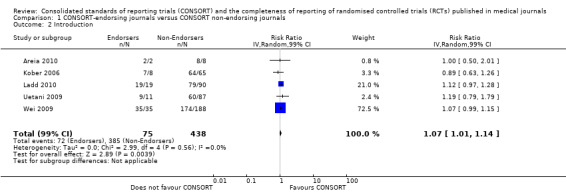

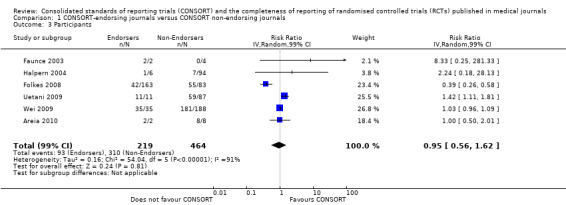

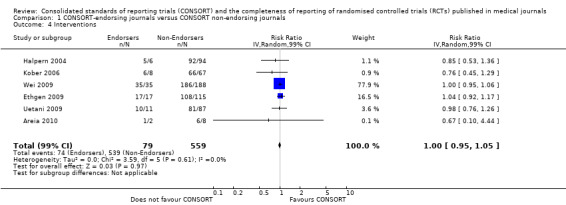

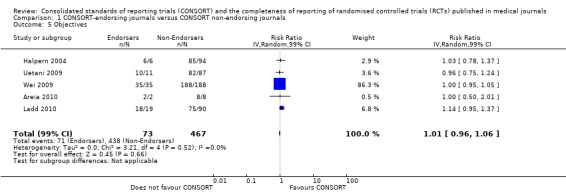

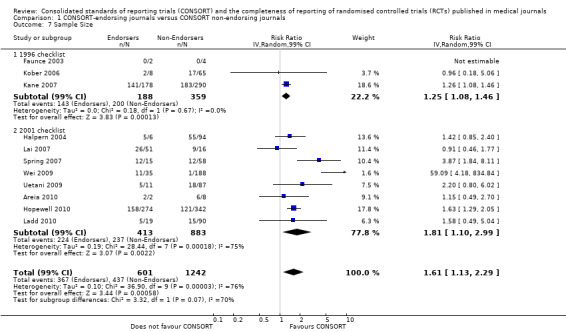

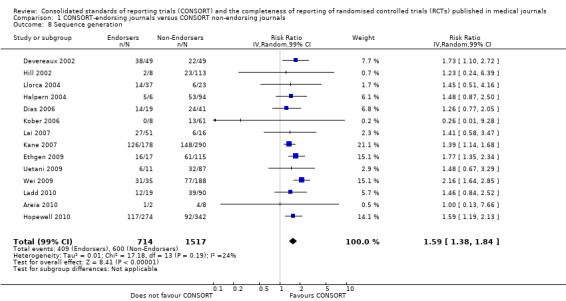

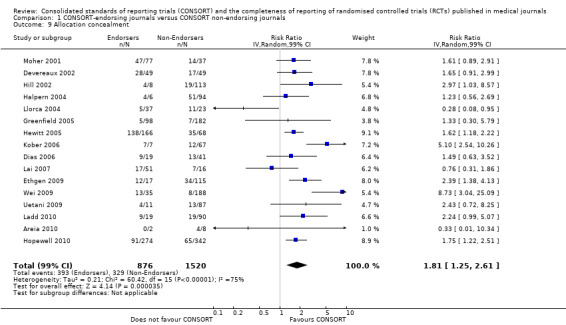

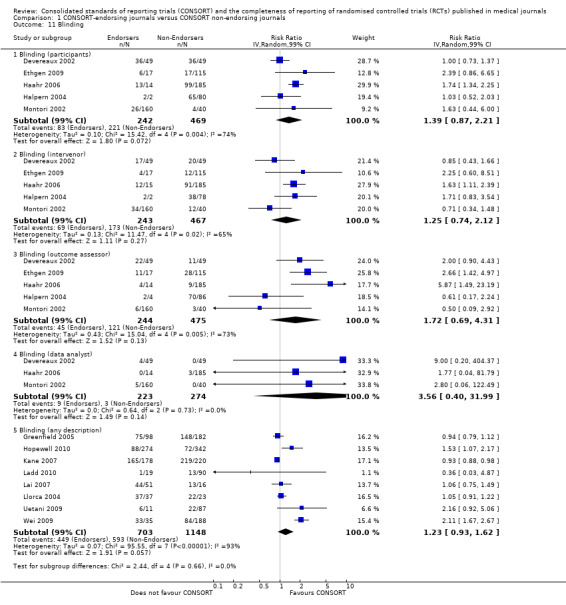

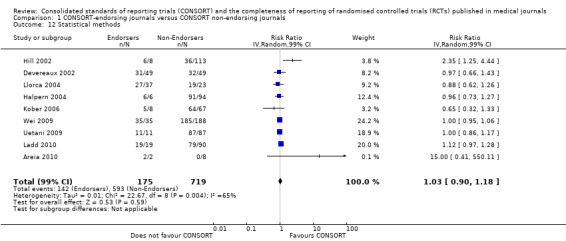

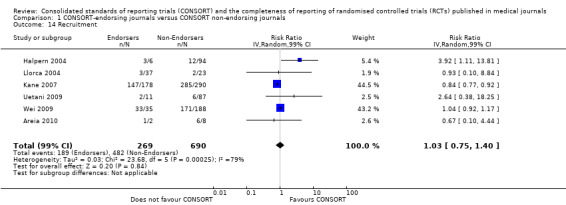

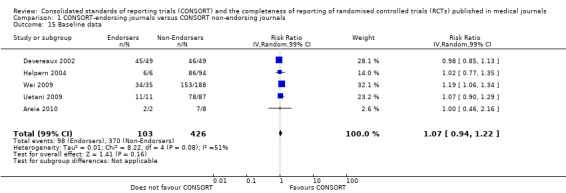

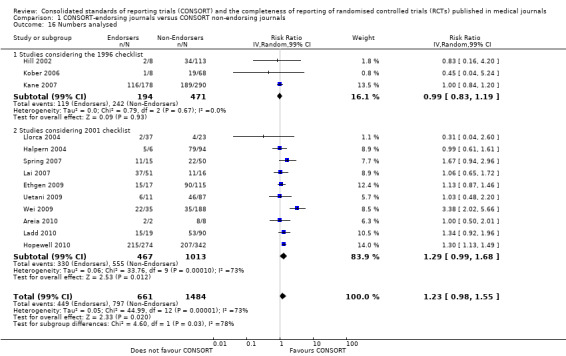

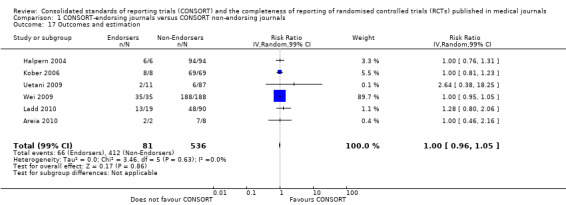

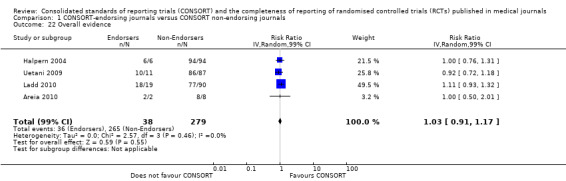

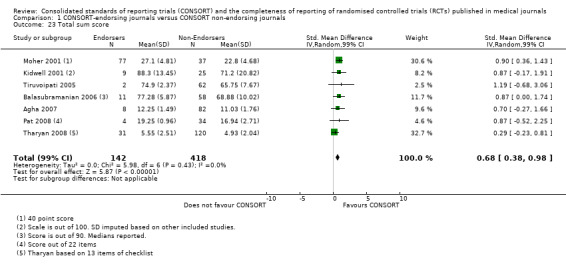

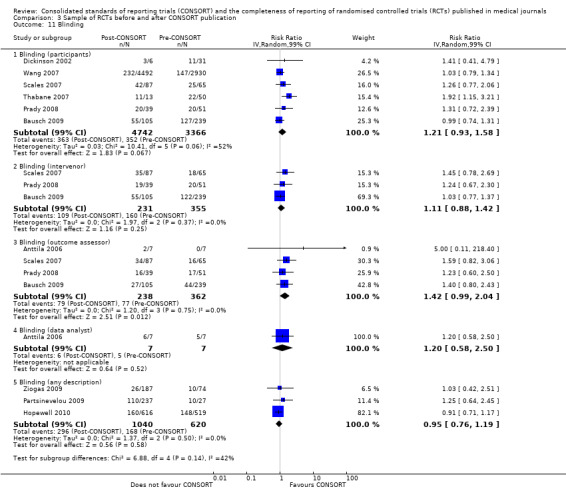

For the 27 outcomes evaluated, five items were reported statistically significantly more completely in CONSORT‐endorsing journals than in non‐endorsing journals: 'allocation concealment', description of scientific explanation and rationale in the 'Introduction', how 'sample size' was determined, the method used for 'sequence generation', and the total sum score. Reporting details of adequate 'allocation concealment' had the largest estimate of effect (risk ratio (RR) 1.81, 99% confidence interval (CI) 1.25 to 2.61) (16 evaluations, 2396 RCTs, I² = 75%, Figure 5). For interpretation, this suggests an increase in adequate reporting of allocation concealment of between 25% and 161% in RCTs published in CONSORT‐endorsing journals. Allocation concealment was reported adequately in 45% (393/876) of RCTs in CONSORT‐endorsing journals and in 22% (329/1520) of RCTs in non‐endorsing journals.

The other significant outcomes can be interpreted in the same manner. Description of scientific explanation and rationale in the 'Introduction' was reported an estimated 7% more often in CONSORT‐endorsing journals than in non‐endorsing journals (RR 1.07, 99% CI 1.01 to 1.14) (five evaluations, 513 RCTs, I² = 0%, Figure 6). How 'sample size' was determined was reported between 13% and 129% more often in RCTs in CONSORT‐endorsing journals (RR 1.61, 99% CI 1.13 to 2.29) (11 evaluations, 1843 RCTs, I² = 76%, Figure 7). Description of the method used for 'sequence generation' was reported between 38% and 84% more often in RCTs in CONSORT‐endorsing journals (RR 1.59, 99% CI 1.38 to 1.84) (14 evaluations, 2231 RCTs, I² = 24%, Figure 8). The 'total sum score' differed significantly between endorsers and non‐endorsers (standardised mean difference (SMD) 0.68, 99% CI 0.38 to 0.98) (seven evaluations, 560 RCTs, I² = 0%, Figure 9), suggesting that, on average, RCTs in CONSORT‐endorsing journals reported CONSORT items more completely than RCTs in CONSORT non‐endorsing journals.
For one evaluation (Kidwell 2001), standard deviations were not reported and were imputed from the values reported in other evaluations, using a weighted average.
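The imputation can be sketched as a weighted average of the standard deviations reported by other evaluations. The review states only that a weighted average was used; weighting by the number of RCTs in each evaluation is an assumption of this sketch, and the function name and values are hypothetical.

```python
def impute_sd(other_evaluations):
    """Sample-size-weighted average SD from (sd, n) pairs reported by other evaluations.

    Weighting by n is an assumption; the review states only that a weighted average was used.
    """
    total_n = sum(n for _, n in other_evaluations)
    if total_n <= 0:
        raise ValueError("need at least one evaluation with a positive sample size")
    return sum(sd * n for sd, n in other_evaluations) / total_n


# hypothetical (sd, number of RCTs) pairs for the total sum score
print(impute_sd([(2.0, 10), (4.0, 30)]))  # prints: 3.5
```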

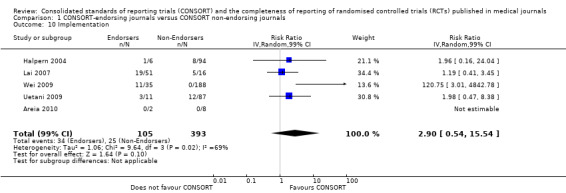

5.

Forest plot of comparison: 1 CONSORT‐endorsing journals versus CONSORT non‐endorsing journals, outcome: 1.9 Allocation concealment.

6.

Forest plot of comparison: 1 CONSORT‐endorsing journals versus CONSORT non‐endorsing journals, outcome: 1.2 Introduction.

7.

Forest plot of comparison: 1 CONSORT‐endorsing journals versus CONSORT non‐endorsing journals, outcome: 1.7 Sample size.

8.

Forest plot of comparison: 1 CONSORT‐endorsing journals versus CONSORT non‐endorsing journals, outcome: 1.8 Sequence generation.

9.

Forest plot of comparison: 1 CONSORT‐endorsing journals versus CONSORT non‐endorsing journals, outcome: 1.23 Total sum score.

For 20 of the 22 remaining outcomes, pooled estimates of effect showed reporting was more complete in a higher proportion of RCT reports for CONSORT‐endorsing journals compared with non‐endorsing journals (RR > 1.0), but these differences were not statistically significant. Precise details of 'interventions', item four, were equally well reported in endorsing and non‐endorsing journals (RR 1.0, 99% CI 0.95 to 1.05) (six evaluations, 638 RCTs, I² = 0%), and eligibility criteria for 'participants', item three, produced a non‐significant negative effect (RR 0.95, 99% CI 0.56 to 1.62) (six evaluations, 683 RCTs, I² = 91%).

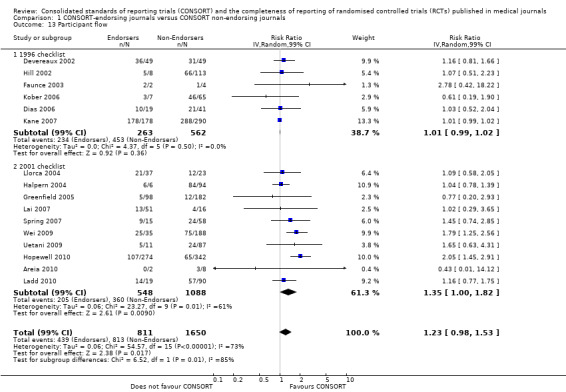

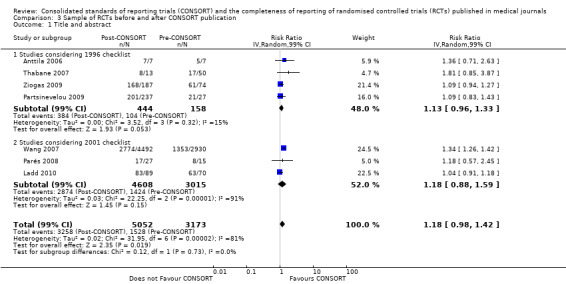

Subgroups for CONSORT 1996 and 2001 checklists

For all five items, estimates of effect were larger in evaluations assessing reporting against the 2001 checklist than in those using the 1996 checklist. Complete reporting of how 'sample size' was determined was significantly more frequent in CONSORT‐endorsing journals in evaluations assessing either checklist version. This effect was greater in magnitude across evaluations assessing the 2001 checklist version, with RR 1.25 (99% CI 1.08 to 1.46) and RR 1.81 (99% CI 1.25 to 2.61) for 1996 evaluations and 2001 evaluations respectively, but these subgroups did not differ significantly (P = 0.07) (Figure 7). For 'title and abstract', 'outcomes', and 'numbers analysed', comparisons between endorsing and non‐endorsing journals were non‐significant in both the 1996 and 2001 subgroups. The completeness of reporting of 'participant flow' differed between checklist versions, with RR 1.01 (99% CI 0.99 to 1.02) for evaluations assessing the 1996 version and RR 1.35 (99% CI 1.00 to 1.82) for those assessing the 2001 version. Six evaluations were included in the 1996 subgroup and 10 evaluations in the 2001 subgroup; the latter considered inclusion of a flow diagram or other description of participant flow in 548 RCTs in CONSORT‐endorsing journals and 1088 RCTs in CONSORT non‐endorsing journals. The test for subgroup differences demonstrated a statistically significant difference between the 1996 and 2001 checklist versions (P = 0.01).

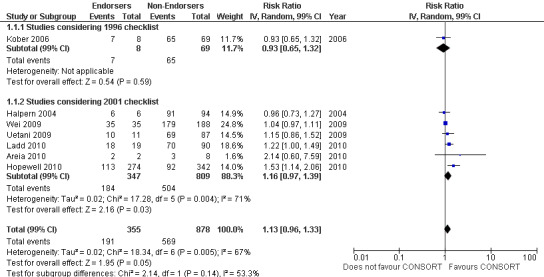

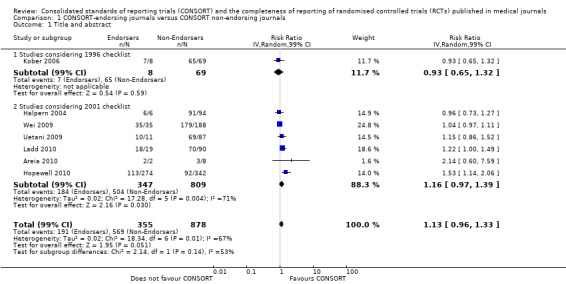

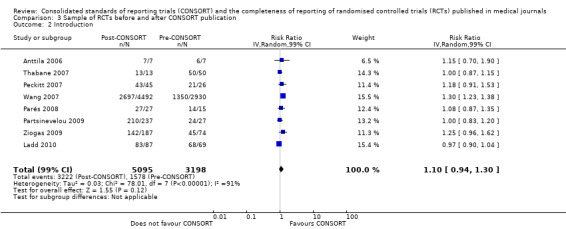

Complete reporting of randomisation in the 'title and abstract' was assessed in one evaluation using the 1996 checklist and six evaluations using the 2001 checklist. Across all evaluations for this outcome, the pooled effect suggests a non‐significant 13% increase in reporting (RR 1.13, 99% CI 0.96 to 1.33). Estimates of effect did not differ significantly between checklist versions (P = 0.14), with RR 0.93 (99% CI 0.65 to 1.32) and RR 1.16 (99% CI 0.97 to 1.39) for the 1996 and 2001 checklist versions respectively.

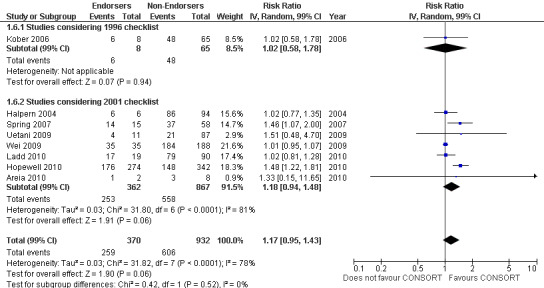

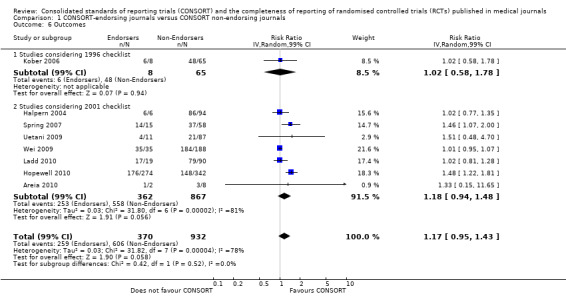

Overall, complete reporting of 'outcomes' was not significantly different in CONSORT‐endorsing journals compared with non‐endorsing journals (RR 1.17, 99% CI 0.95 to 1.43). The test for subgroup differences did not indicate a difference between checklist versions (P = 0.52): the single 1996 evaluation showed RR 1.02 (99% CI 0.58 to 1.78) and the seven 2001 evaluations showed RR 1.18 (99% CI 0.94 to 1.48).

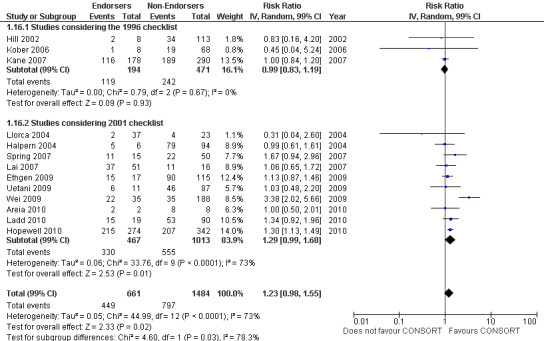

Complete reporting of 'numbers analysed' was not significantly more frequent in endorsing journals under either checklist version. Across all 13 evaluations for this outcome, assessing 2145 RCTs, the estimate of effect was not significant (RR 1.23, 99% CI 0.98 to 1.55). Evaluations using the 1996 version did not show more complete reporting in endorsers when pooled (RR 0.99, 99% CI 0.83 to 1.19). The effect was larger under the 2001 checklist definition (RR 1.23, 99% CI 0.98 to 1.55), and the test for subgroup differences suggests that assessments using the two versions differ (P = 0.03) (Figure 10; Figure 11; Figure 12; Figure 13).

10.

Forest plot of comparison: 1 CONSORT‐endorsing journals versus CONSORT non‐endorsing journals, outcome: 1.13 Participant flow.

11.

Forest plot of comparison: 1 CONSORT‐endorsing journals versus CONSORT non‐endorsing journals, outcome: 1.1 Title and abstract.

12.

Forest plot of comparison: 1 CONSORT‐endorsing journals versus CONSORT non‐endorsing journals, outcome: 1.6 Outcomes.

13.

Forest plot of comparison: 1 CONSORT‐endorsing journals versus CONSORT non‐endorsing journals, outcome: 1.16 Numbers analysed.

Sensitivity analysis

Eight included evaluations (Ethgen 2009; Hopewell 2010; Kidwell 2001; Tharyan 2008; Tiruvoipati 2005; Uetani 2009; Wei 2009; Yu 2010) were not strictly compliant with our definition of a CONSORT‐endorsing journal (Objectives). Of these eight evaluations, three did not report how a CONSORT endorser was defined, one categorised endorsing journals as those listed on the CONSORT website, and the remaining four referred to the online journal 'Instructions to authors' to determine whether the journal publishing a given RCT endorsed the CONSORT checklist. Although this met our definition of how journal endorsement information is obtained, it does not confirm that each assessed RCT was published at least six months after the publishing journal endorsed CONSORT; therefore, it has not been confirmed that the journal was endorsing CONSORT at the time the manuscript was written. It is important to note that, for all known definitions, such misclassification would lead to underestimates of the relative effect of adherence to the CONSORT items by RCTs in journals that endorse the CONSORT Statement.

We conducted sensitivity analyses across outcomes, excluding the above‐mentioned evaluations that did not strictly meet our definition of CONSORT endorsement. Only one of 27 outcomes changed in statistical significance when these evaluations were excluded, and its point estimate changed only minimally: completeness of reporting of the 'Introduction' changed from RR 1.07 (99% CI 1.01 to 1.14) to RR 1.05 (99% CI 0.87 to 1.27). This suggests that relaxing our criteria for CONSORT endorsement did not substantially alter the estimates for reporting of RCTs published in non‐endorsing journals versus those published in journals endorsing CONSORT.
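The shift in significance for the 'Introduction' item can be checked roughly from the reported intervals alone. The Python sketch below is a back‐of‐the‐envelope approximation, not the review's own computation: it assumes each 99% CI is symmetric on the log scale, so that the standard error of log(RR) is (ln U − ln L)/(2 × 2.576), and it applies a normal (Wald) test. Because the published limits are rounded to two decimals, the recovered P‐values are approximate.

```python
from math import erfc, log, sqrt
from statistics import NormalDist

# Two-sided critical value for a 99% confidence interval (about 2.576).
Z99 = NormalDist().inv_cdf(0.995)


def p_from_ci(rr, lower, upper, z_crit=Z99):
    """Approximate two-sided Wald P-value for a ratio estimate and its CI.

    Assumption: the CI was built symmetrically on the log scale, so
    SE(log RR) = (ln upper - ln lower) / (2 * z_crit).
    """
    se = (log(upper) - log(lower)) / (2 * z_crit)
    z = log(rr) / se
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)), the two-sided normal tail area.
    return erfc(abs(z) / sqrt(2))


# 'Introduction' item, comparison 1, using the estimates quoted in the text.
p_all = p_from_ci(1.07, 1.01, 1.14)     # all evaluations included
p_strict = p_from_ci(1.05, 0.87, 1.27)  # non-compliant evaluations excluded

print(f"all evaluations:         P ~ {p_all:.3f}")
print(f"strictly compliant only: P ~ {p_strict:.3f}")
```

Under these assumptions, the estimate based on all evaluations falls below the 1% threshold implied by the 99% CIs, whereas the estimate restricted to strictly compliant evaluations does not, which is consistent with the change in significance described above.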

In addition, we considered several point estimates to be large outliers and examined these in sensitivity analyses. These were: 'statistical methods' (item 12) in the Areia 2010 evaluation; 'blinding of data analyst' in Devereaux 2002; 'participants' (item 3) in Faunce 2003; 'blinding of outcome assessor' in Haahr 2006; and 'sample size' and 'allocation concealment' in Wei 2009. Excluding these evaluations did not change the statistical significance of any comparison of completeness of reporting between RCTs in CONSORT‐endorsing journals and RCTs in CONSORT non‐endorsing journals.

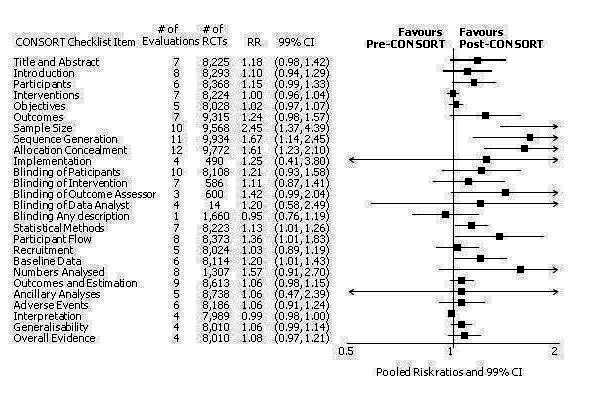

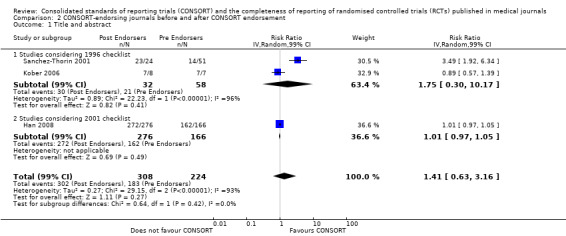

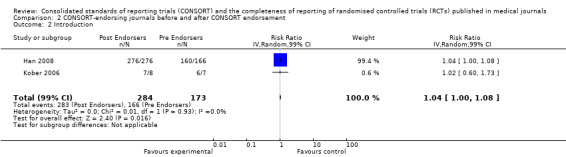

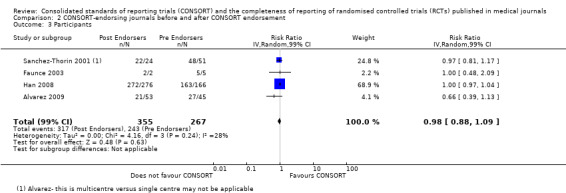

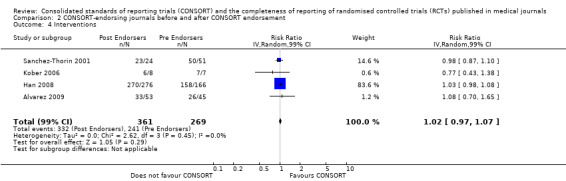

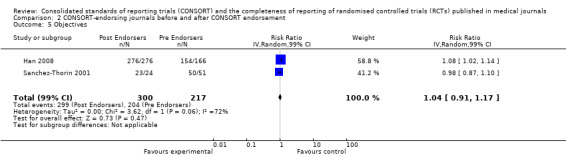

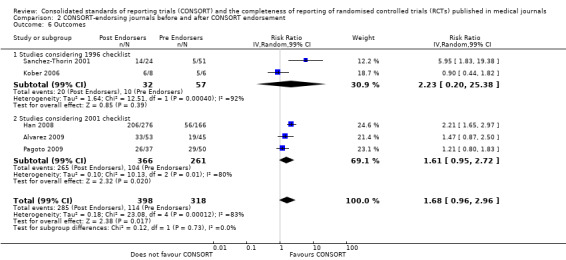

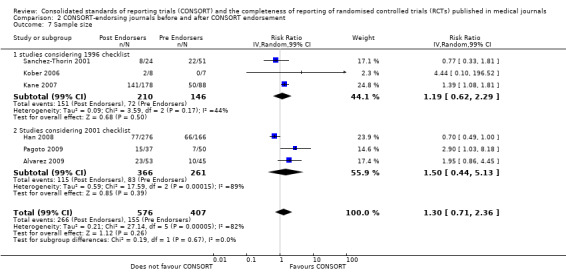

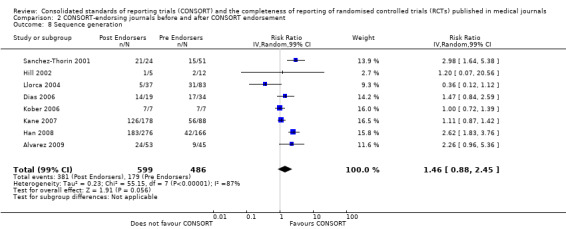

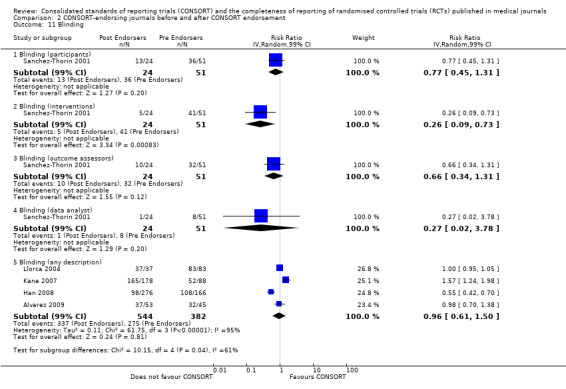

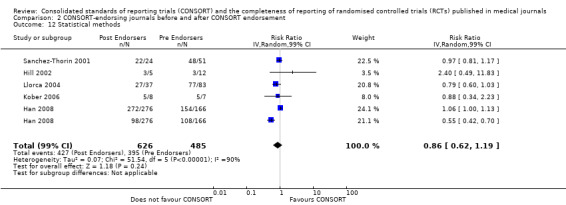

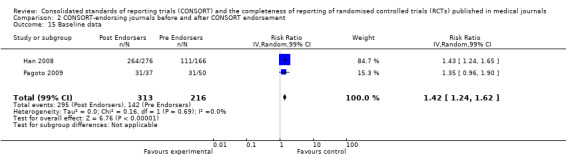

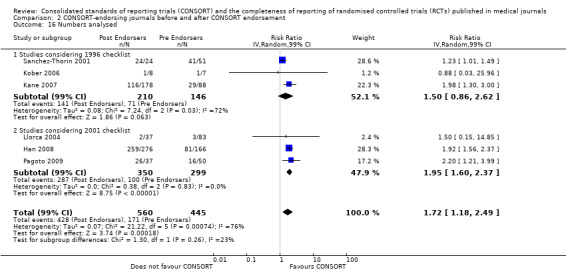

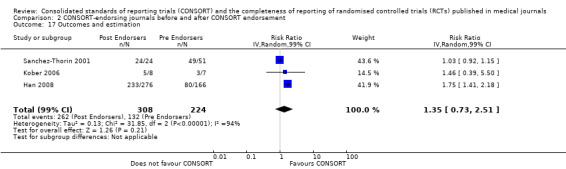

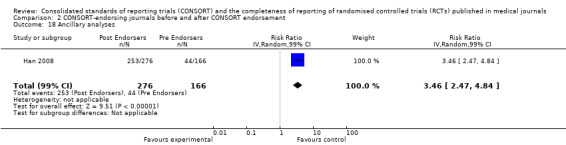

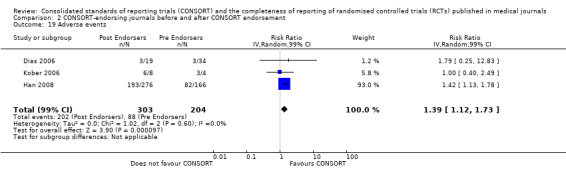

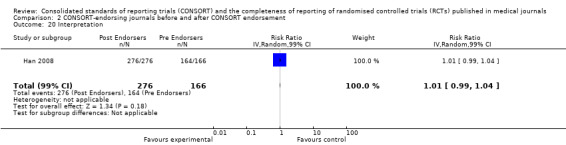

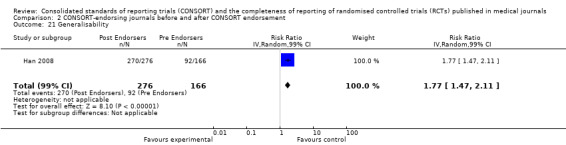

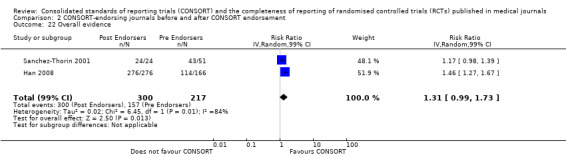

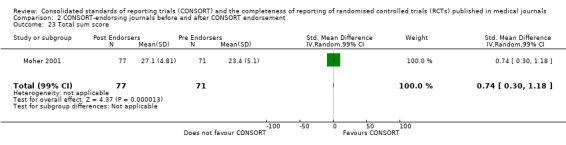

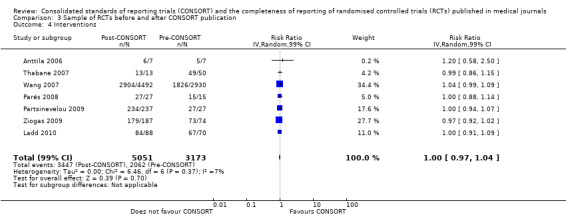

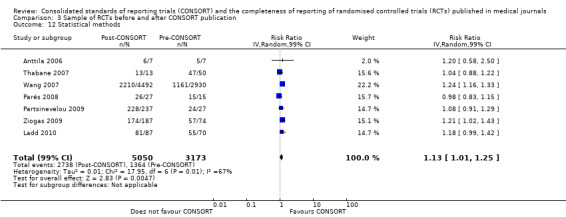

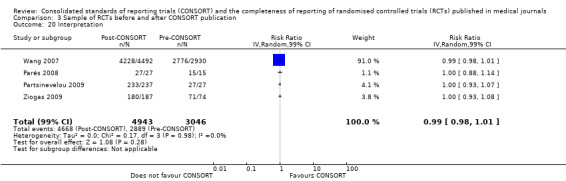

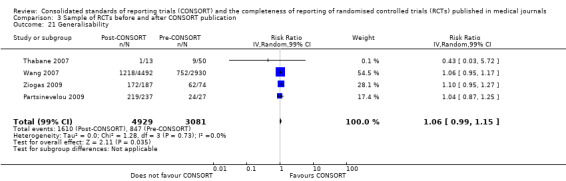

Comparison 2: Completeness of reporting of RCTs in CONSORT‐endorsing journals before and after endorsement

Eleven evaluations assessed only journals that endorse the CONSORT Statement, but presented the completeness of RCT reporting of at least one CONSORT item before and after the journal's date of endorsement of CONSORT. The median number of RCTs assessed per outcome was 532 (IQR 512 to 919). The number of reported CONSORT checklist items varied across evaluations, with a median of 3 (IQR 2 to 5). 'Sequence generation' and 'participant flow' were each reported in eight evaluations. For 15 of 27 outcomes, data were reported in fewer than five evaluations. The results across all outcomes in this comparison are presented in Figure 14.

14.

Pooled risk ratios across assessed 2001 CONSORT checklist items with 99% confidence intervals for comparison 2, adherence of RCTs published in CONSORT‐endorsing journals before and after endorsement.

Plot generated in Comprehensive Meta‐analysis Version 2.0 (CMA).

Seven outcomes were reported statistically significantly more completely in journals after CONSORT endorsement. These include: complete reporting of the scientific rationale and background in the 'Introduction' (RR 1.04, 99% CI 1.00 to 1.08) (two evaluations, 457 RCTs, I2 = 0%); 'baseline data' (RR 1.42, 99% CI 1.24 to 1.62) (two evaluations, 529 RCTs, I2 = 0%); 'numbers analysed' (RR 1.72, 99% CI 1.18 to 2.49) (six evaluations, 1005 RCTs, I2 = 76%); 'ancillary analyses' (RR 3.46, 99% CI 2.47 to 4.84) (one evaluation, 442 RCTs); 'adverse events' (RR 1.39, 99% CI 1.12 to 1.73) (three evaluations, 507 RCTs, I2 = 0%); and 'generalisability' (RR 1.77, 99% CI 1.47 to 2.11) (one evaluation, 442 RCTs). Aggregate scores of items were also significant for this comparison: the total sum score was higher after endorsement (SMD 0.74, 99% CI 0.30 to 1.18) (one evaluation, 148 RCTs).

Of the remaining 20 outcomes, 13 had pooled estimates of effect showing that a higher proportion of trial reports were complete after CONSORT endorsement than before (RR > 1.0), but these differences were not statistically significant. Overall, completeness of reporting was not optimal either before or after endorsement, even for items where endorsement was associated with a difference. For example, only 76% (428/560) of RCTs published after journal endorsement of CONSORT, and 38% (171/445) of RCTs published before, completely reported 'numbers analysed' as per the CONSORT guidance.
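The percentages above follow directly from the summed counts, and those counts (560 + 445 = 1005 RCTs) match the six evaluations contributing to the pooled 'numbers analysed' estimate. A crude ratio of the two summed proportions is, however, not the same quantity as the meta‐analytic RR of 1.72, which combines evaluation‐level estimates rather than treating all RCTs as one sample. The short Python sketch below only illustrates that distinction; it does not reproduce the review's pooling method.

```python
# Summed counts quoted in the text for 'numbers analysed' (comparison 2).
after_complete, after_total = 428, 560
before_complete, before_total = 171, 445

p_after = after_complete / after_total
p_before = before_complete / before_total

# Ratio of the two summed proportions: treats all RCTs as a single sample,
# unlike the meta-analytic pooled RR, which combines evaluation-level estimates.
crude_rr = p_after / p_before

print(f"after endorsement:  {p_after:.0%} of {after_total} RCTs")
print(f"before endorsement: {p_before:.0%} of {before_total} RCTs")
print(f"total RCTs: {after_total + before_total}")
print(f"crude ratio of proportions: {crude_rr:.2f} (pooled RR reported in the text: 1.72)")
```

The crude ratio (about 1.99) is larger than the pooled RR, a reminder that summing counts across evaluations ignores differences between them, which the high heterogeneity for this item (I2 = 76%) suggests are substantial.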

For seven items, estimates of effect showed less complete reporting in RCTs published in journals after endorsement of CONSORT. These outcomes include complete reporting of eligibility criteria for participants (RR 0.98, 99% CI 0.88 to 1.09) (four evaluations, 622 RCTs, I2 = 28%) and of the statistical methods used (RR 0.86, 99% CI 0.62 to 1.19) (five evaluations, 1111 RCTs, I2 = 90%), neither of which differed significantly. Across all blinding subgroups, reporting of blinding decreased in RCTs in CONSORT‐endorsing journals after endorsement. Blinding of interventions, assessed in one evaluation of 75 RCTs, was reported significantly less often after endorsement (RR 0.26, 99% CI 0.09 to 0.73). All blinding subgroups were assessed by this single evaluation of 75 RCTs, and all showed larger reductions in reporting than the blinding (any description) item, which is considered most consistent with the 2001 checklist (RR 0.96, 99% CI 0.61 to 1.50) (four evaluations, 926 RCTs, I2 = 95%). For all blinding outcomes, RCTs in CONSORT‐endorsing journals reported blinding less completely after endorsement than before.

Subgroup analyses for 1996 and 2001 checklist versions

None of the tests for subgroup differences was statistically significant for the five identified outcomes. Three items showed effects of greater magnitude in the 2001 checklist version subgroup, and two showed greater effects in the 1996 subgroup. Three items, 'title and abstract', 'sample size', and 'numbers analysed', were completely reported significantly more often in CONSORT‐endorsing journals than in non‐endorsing journals in both subgroups. Despite an increase in effect estimates from the 1996 to the 2001 checklist version, the 'title and abstract' subgroups did not differ significantly (P = 0.42). Similarly, the 'sample size' subgroups did not differ significantly (P = 0.67), although the effect estimate was larger for the 2001 checklist. Nor was there a difference between subgroups for 'numbers analysed' (P = 0.26). Two items, 'participant flow' and 'outcomes', had larger effect estimates across evaluations assessing the 1996 checklist version, but neither differed significantly between subgroups.

Sensitivity analysis

Two evaluations, contributing to three outcomes, were considered for sensitivity analyses because of relatively large effects. The Sanchez‐Thorin 2001 evaluation reported relatively large effects in favour of CONSORT endorsement for the CONSORT items 'outcomes' and 'participant flow'; however, these comparisons remained non‐significant at the 1% level when this evaluation was excluded. The Han 2008 evaluation is one of two evaluations reporting on who generated the allocation sequence and assigned participants, namely 'implementation'. This evaluation reported a relatively large effect; excluding it did not change the overall significance of the effect for this item.

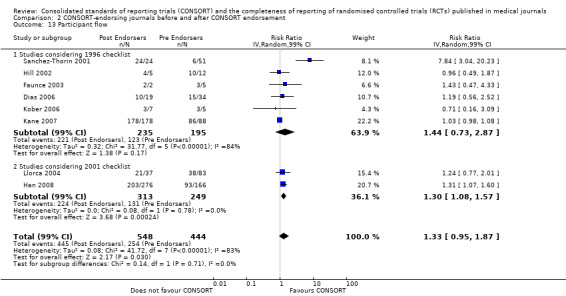

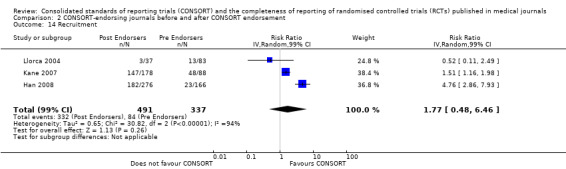

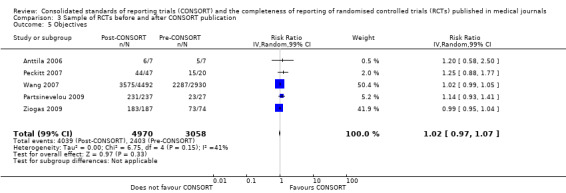

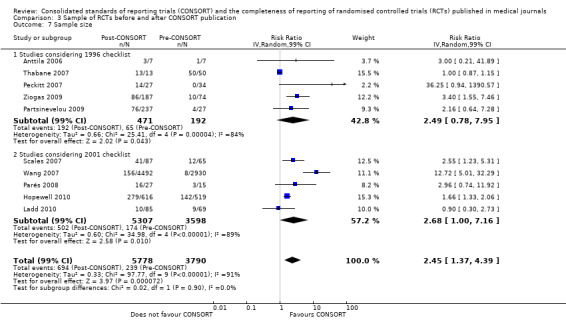

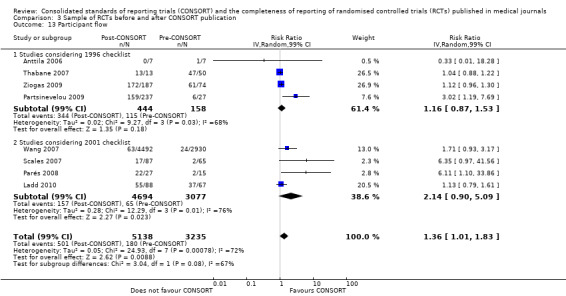

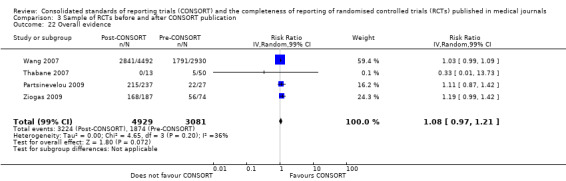

Comparison 3: Completeness of reporting of RCTs before and after CONSORT publication

This comparison was developed because of the large body of evidence that did not comply fully with our definition of endorsement. Although these data were abundant and consistent with the findings of the other comparisons, evaluations in this comparison did not comply with our prespecified definition of within‐journal endorsement (see Objectives). As such, the findings may be less robust and should be interpreted cautiously. The results across all outcomes for this comparison are presented in Figure 15.

15.

Cross‐sectional sample of RCTs before and after the publication of CONSORT.

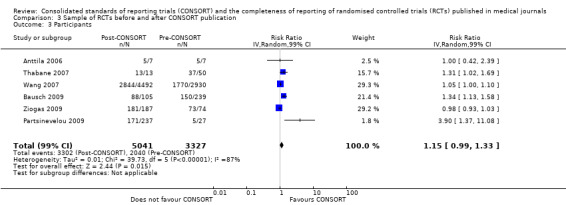

Twenty‐one evaluations compared the completeness of reporting of CONSORT checklist items before and after publication of either the 1996 or the 2001 CONSORT Statement. Methods for assessing the pre‐post intervention were inconsistent across evaluations. Across outcomes, the median number of evaluations per checklist item was 7 (IQR 5 to 8), and the median number of RCTs per outcome (CONSORT item) was 8224 (IQR 8017 to 8676). 'Allocation concealment' was reported in the largest number of included evaluations: 12 evaluations assessed reporting adherence in 9772 trials.

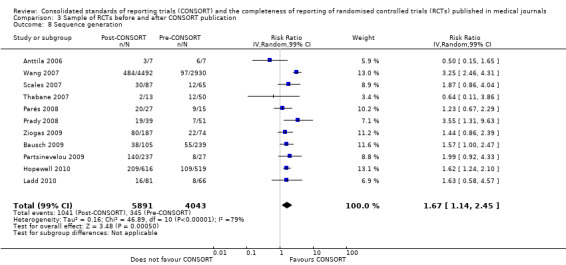

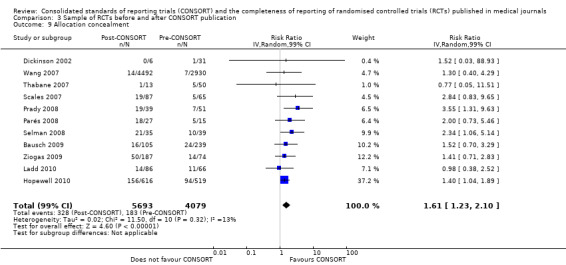

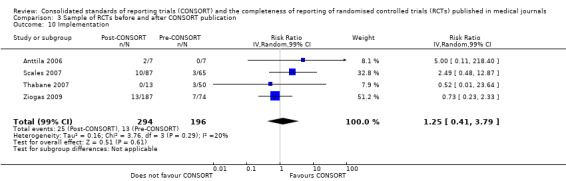

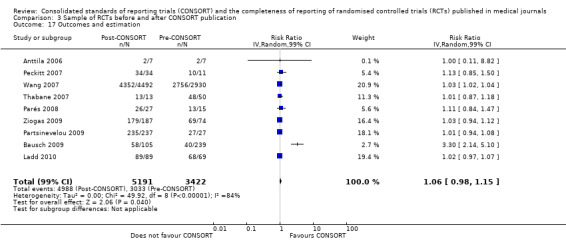

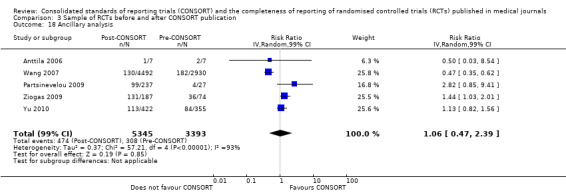

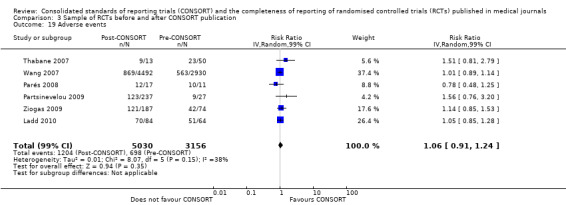

Six outcomes showed statistically significant results, indicating that these items were more completely reported after the publication of the CONSORT Statement. These include complete reporting of 'sample size' (RR 2.45, 99% CI 1.37 to 4.39) (10 evaluations, 9568 RCTs, I2 = 91%), 'sequence generation' (RR 1.67, 99% CI 1.14 to 2.45) (11 evaluations, 9934 RCTs, I2 = 79%), 'allocation concealment' (RR 1.61, 99% CI 1.23 to 2.10) (11 evaluations, 9772 RCTs, I2 = 13%), 'statistical methods' (RR 1.13, 99% CI 1.01 to 1.25) (seven evaluations, 8223 RCTs, I2 = 67%), 'participant flow' (RR 1.36, 99% CI 1.01 to 1.83) (eight evaluations, 8373 RCTs, I2 = 72%), and 'baseline data' (RR 1.20, 99% CI 1.01 to 1.43) (six evaluations, 8114 RCTs, I2 = 47%).

Of the 21 remaining outcomes, 18 showed higher completeness of reporting in RCTs published after CONSORT, but the differences were not statistically significant. Complete reporting of the 'intervention' showed a neutral effect (RR 1.00, 99% CI 0.97 to 1.04) (seven evaluations, 8224 RCTs, I2 = 7%), and 'interpretation of the results' had a pooled effect that did not favour an impact of CONSORT on the completeness of reporting (RR 0.99, 99% CI 0.98 to 1.01) (four evaluations, 7989 RCTs, I2 = 0%).

For all subcategories of blinding description, higher proportions of RCTs reported completely after the publication of CONSORT, but the differences before and after publication were not significant. In contrast, evaluations analysing any description of blinding showed that fewer RCTs reported a complete description of blinding after the publication of CONSORT (RR 0.95, 99% CI 0.76 to 1.19) (three evaluations, 1660 RCTs, I2 = 0%). Complete reporting was infrequent in both groups; for example, fewer than 18% (1041/5891) of post‐CONSORT RCTs and fewer than 9% (345/4043) of pre‐CONSORT RCTs completely reported their method of 'sequence generation' as per the CONSORT guidance.

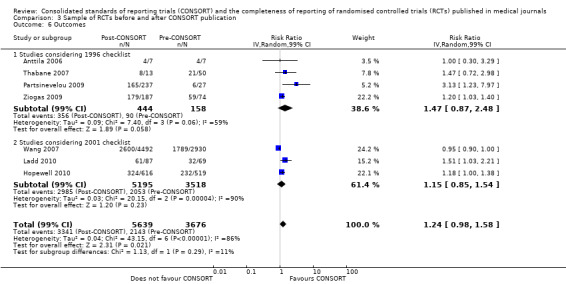

Subgroup analyses for 1996 and 2001 checklist versions

There were no statistically significant differences between subgroups for the five outcomes specified. Subgroup effect estimates for complete reporting of randomisation in the 'title and abstract' were consistent: the 1996 version showed a relative increase in adequate reporting of 13% (RR 1.13, 99% CI 0.96 to 1.33), while the 2001 version showed a relative increase of 18% (RR 1.18, 99% CI 0.88 to 1.59); the difference between these two subgroups was not significant (P = 0.73). The derivation of 'sample size' was completely reported more frequently in RCTs assessed after CONSORT publication, with significant results for both checklist versions assessed. Evaluations considering the 2001 version of the checklist produced a larger pooled effect, suggesting that a greater percentage of RCTs published after the 2001 CONSORT Statement reported 'sample size' than of those published before 2001 (RR 2.68, 99% CI 1.00 to 7.16). There was no statistically significant difference between these subgroups (P = 0.90). Adequate reporting of 'participant flow' improved more in evaluations considering the 2001 version of the checklist as the intervention, with 2.14 times as many RCTs adequately reporting the flow of participants through the trial (RR 2.14, 99% CI 0.90 to 5.09), than in those considering the 1996 version, where 1.16 times as many RCTs adequately reported 'participant flow' (RR 1.16, 99% CI 0.87 to 1.53); these differences were not statistically significant (P = 0.08).

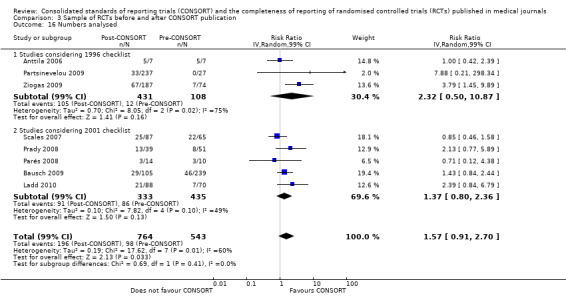

Reporting of primary and secondary 'outcomes' showed a greater magnitude of effect across evaluations assessing the 1996 version (RR 1.47, 99% CI 0.87 to 2.48 and RR 1.15, 99% CI 0.85 to 1.54 for the 1996 and 2001 versions, respectively); this difference between subgroups was not significant (P = 0.29). Adequate description of the 'numbers analysed' was more frequent, though not significantly, in RCTs published after the CONSORT Statement, both in the subgroup of evaluations considering the 2001 version and in that considering the 1996 version (RR 1.37, 99% CI 0.80 to 2.36 and RR 2.32, 99% CI 0.50 to 10.87, respectively). Over all evaluations, there was a non‐significant 57% increase in adequate reporting of denominators for the number of participants analysed in RCTs published after, compared with before, the publication of the CONSORT Statement (RR 1.57, 99% CI 0.91 to 2.70); subgroup differences between checklist versions were not significant (P = 0.41).
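The P‐values for these subgroup comparisons can be approximated from the reported subgroup estimates. The Python sketch below rests on two assumptions of ours that the text does not state: that the test for subgroup differences compares the two pooled log risk ratios with a z‐test (equivalent to a between‐subgroup Q statistic with one degree of freedom, Q = z²), and that each 99% CI is symmetric on the log scale. Under these assumptions the recovered values are close to those reported (P = 0.73, 0.08, 0.29 and 0.41), with small discrepancies attributable to rounding of the published limits.

```python
from math import erfc, log, sqrt
from statistics import NormalDist

# Two-sided critical value for a 99% confidence interval (about 2.576).
Z99 = NormalDist().inv_cdf(0.995)


def log_rr_and_se(rr, lower, upper, z_crit=Z99):
    """Return log(RR) and its SE, assuming the CI is symmetric on the log scale."""
    return log(rr), (log(upper) - log(lower)) / (2 * z_crit)


def subgroup_difference_p(sub_a, sub_b):
    """Two-sided P for H0: both subgroups share the same pooled log RR.

    z = (y_a - y_b) / sqrt(se_a**2 + se_b**2); z**2 is Q_between with 1 df.
    """
    (ya, sa), (yb, sb) = log_rr_and_se(*sub_a), log_rr_and_se(*sub_b)
    z = (ya - yb) / sqrt(sa**2 + sb**2)
    return erfc(abs(z) / sqrt(2))


# (RR, lower 99% limit, upper 99% limit) quoted in the text,
# given as (1996 subgroup, 2001 subgroup) for each item.
reported = {
    "title and abstract": ((1.13, 0.96, 1.33), (1.18, 0.88, 1.59)),
    "participant flow": ((1.16, 0.87, 1.53), (2.14, 0.90, 5.09)),
    "outcomes": ((1.47, 0.87, 2.48), (1.15, 0.85, 1.54)),
    "numbers analysed": ((2.32, 0.50, 10.87), (1.37, 0.80, 2.36)),
}

results = {item: subgroup_difference_p(a, b) for item, (a, b) in reported.items()}
for item, p in results.items():
    print(f"{item:20s} P ~ {p:.2f}")
```

Working on the log scale keeps the comparison symmetric, so swapping the order of the two subgroups leaves each P‐value unchanged.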

Sensitivity analysis

The third comparison group was developed to synthesise results of cross‐sectional samples of RCTs before and after CONSORT publication, as well as evaluations for which the timing of CONSORT endorsement within journals could not be confirmed as the intervention. As a result, all evaluations included in this comparison were confirmed to include RCTs published both before and after publication of the CONSORT Statement. No sensitivity analysis relating to confirmation of endorsement could be conducted.

Five evaluations (Parés 2008; Partsinevelou 2009; Peckitt 2007; Scales 2007; Wang 2007) reported relatively large effects. We therefore performed sensitivity analyses to assess how the pooled effects changed when these evaluations were excluded.

Excluding evaluations with large effects from the pooled estimates changed some results. Simultaneously excluding Peckitt 2007 and Wang 2007 from the 'sample size' outcome reduced the effect from RR 2.45 (99% CI 1.37 to 4.39) to RR 1.80 (99% CI 1.10 to 2.93). Simultaneously removing Parés 2008 and Scales 2007 from the 'participant flow' outcome reduced the effect from RR 1.36 (99% CI 1.01 to 1.83) to RR 1.20 (99% CI 0.95 to 1.50), which is no longer statistically significant. When the Partsinevelou 2009 results were removed, the effect for reporting of dates of 'recruitment' remained non‐significant, as did the effect for adequate reporting of 'numbers analysed' (from RR 1.57, 99% CI 0.91 to 2.70 to RR 1.52, 99% CI 0.88 to 2.61).

Qualitative reports on the influence of reporting

Four evaluations that met the inclusion criteria were not included in the three quantitative comparisons for this review (Al‐Namankany 2009; Chauhan 2009; Montané 2010; Sinha 2009). Relatively few trials were assessed in these reports (n = 305 RCTs). Each provided a qualitative description of the influence of CONSORT endorsement on the completeness of reporting, as detailed below. Three of the four evaluations reported no difference in reporting related to CONSORT endorsement.