Abstract

Motor simulation has emerged as a mechanism for both predictive action perception and language comprehension. By deriving a motor command, individuals can predictively represent the outcome of an unfolding action as a forward model. Evidence of simulation can be seen via improved participant performance for stimuli that conform to the participant’s individual characteristics (an egocentric bias). There is little evidence, however, from individuals for whom action and language take place in the same modality: sign language users. The present study asked signers and nonsigners to shadow (perform actions in tandem with various models), and the delay between the model and participant (“lag time”) served as an indicator of the strength of the predictive model (shorter lag time = more robust model). This design allowed us to examine the role of (a) motor simulation during action prediction, (b) linguistic status in predictive representations (i.e., pseudosigns vs. grooming gestures), and (c) language experience in generating predictions (i.e., signers vs. nonsigners). An egocentric bias was only observed under limited circumstances: when nonsigners began shadowing grooming gestures. The data do not support strong motor simulation proposals, and instead highlight the role of (a) production fluency and (b) manual rhythm for signer productions. Signers showed significantly faster lag times for the highly skilled pseudosign model and increased temporal regularity (i.e., lower standard deviations) compared to nonsigners. We conclude that sign language experience may (a) reduce reliance on motor simulation during action observation, (b) attune users to prosodic cues, and (c) induce temporal regularities during action production.

Keywords: sign language, forward models, motor simulation, grooming gesture, rhythm

Introduction

Pickering and Garrod (2013) and others (e.g., Kutas, DeLong, & Smith, 2011; Wilson & Knoblich, 2005) propose that action and language comprehension are predictive in nature. Pickering and Garrod (2013) (henceforth PG) specify that, by deriving a motor command for an observed stimulus and engaging in covert imitation, individuals can predictively represent the outcome of an unfolding action as a forward model. Because these predictive representations are drawn from the observer’s own motor simulation processes, it follows from PG’s proposal that (a) forward models will be most efficient when the stimulus aligns with how the observer would produce the same action, (b) errors are more likely to occur when there is a greater difference between the stimulus and observer, and (c) errors that do occur will tend to reflect the observer’s articulatory patterns. The sum of these effects will be referred to as egocentric bias. PG describe language as a highly systematized form of action and predict that both action and language will exhibit egocentric bias.

Evidence in favor of PG’s simulation-based proposal for language comprehension can be seen in both action and language research. For example, Knoblich and Flach (2001) asked participants to predict the landing location of a dart thrown in a video in which either the participant themselves or another person threw the dart. Videos varied in how much of the thrower was visible, including trials in which only the arm could be seen. Even when the identity of the thrower was hidden, participants showed improved accuracy for videos featuring themselves, a form of egocentric bias (see also Knoblich, Seigerschmidt, Flach, & Prinz, 2002). Further evidence for motor simulation can be seen in magnetoencephalography (MEG) data showing primary motor area activation in pianists listening to piano pieces on which they were highly trained (Haueisen & Knösche, 2001). With sufficient training, motor areas of the brain can be recruited for perception without any overt motor response. Some studies suggest, however, that motor recruitment is an intermediate effect, whereby some exposure to an action sequence will result in motor recruitment during perception, but further expertise will reduce the effect (e.g., Gardner, Aglinskas, & Cross, 2017). This inverted-U-shaped recruitment curve suggests that motor activation is an intermediate step in developing expertise.

Within the domain of language research, a behavioral investigation of forward modeling could make PG’s proposed covert imitations overt. Speech shadowing, as a methodology, does just that: participants are asked to imitate a spoken stimulus as closely in time as possible, and must rely on predictive processing to do so with a minimal gap between stimulus onset and shadowing response (e.g., Fowler, Brown, Sabadini, & Weihing, 2003; Marslen-Wilson, 1985; Miller, Sanchez, & Rosenblum, 2013; Mitterer & Ernestus, 2008). For example, Nye and Fowler (2003) asked participants to shadow phonetic samples that varied in their approximation to a language familiar to the participants, English. Shadowing performance, and therefore predictive ability, was significantly better when the stimulus more closely resembled the familiar language. In the context of PG’s proposal, this is an indication that forward models are more effective when the predicted stimulus (the motor output) is more consistent with the listener’s own language production processes. However, the results of Nye and Fowler (2003) could also be interpreted as a familiarity effect: participants were better able to predict a more familiar stimulus.

Despite PG’s claim that their proposal applies to both manual gestures and language, they rely strictly on spoken language evidence. The few available studies of sign language users are somewhat inconsistent with respect to effects of egocentric bias. Corina and Gutierrez (2016) conducted a lexical-decision task with right-handed signers viewing either right-handed or mirror-reversed left-handed signed stimuli. If signers exhibit an egocentric bias for motor simulations, then these right-handed signers should perform better with right-handed than left-handed signs. In accordance with this prediction, participants responded more quickly to handedness-aligned items, but this effect was limited to non-native signers, suggesting that native signers may not engage in motor simulation. Another possibility is that simulation effects are limited to cases of uncertainty or significant cognitive load, which would be greater for non-native than native signers when making lexical decisions. In a similar study by Watkins and Thompson (2017), right- and left-handed signers performed a picture-sign matching task (i.e., does this sign match the previous picture?) in which the sign model was either right- or left-handed. Watkins and Thompson (2017) reported a main effect of model handedness whereby both right- and left-handed participants showed faster response times to right-handed stimuli for one-handed and two-handed symmetrical signs. Because most people are right-handed, this finding suggests a visual familiarity effect. However, some evidence of an egocentric bias was found for phonologically complex signs: two-handed asymmetrical signs in which the hand configuration differs for the left and right hands. In this condition, Watkins and Thompson (2017) found that left-handed participants performed better with the left-handed sign model and vice versa for right-handed participants.
Results from both Corina and Gutierrez (2016) and Watkins and Thompson (2017) are consistent with the proposal that motor simulation is only engaged under difficult circumstances.

Hickok, Houde, and Rong (2011) make such a proposal in their review of the speech-based forward modeling literature and conclude that motoric simulation may only be a supportive mechanism under unfamiliar, difficult or uncertain conditions. Hickok et al. (2011) highlight stimulus ambiguity and temporal constraints as playing major roles in this regard; if the stimulus is sufficiently noisy or a response must be generated quickly, motor simulation may support existing processes. It may be the case that sign comprehension in the behavioral experiments presented above (Corina & Gutierrez, 2016; Watkins & Thompson, 2017) did not tax comprehension mechanisms enough to activate motor simulation, and therefore these studies failed to find strong evidence for an egocentric bias.

However, EEG data from Kubicek and Quandt (2019) suggest that both deaf signers and hearing nonsigners may engage motor cortex when passively viewing signs. For both groups, viewing two-handed signs resulted in greater alpha and beta event-related desynchronization at electrode sites over sensorimotor cortex (a pattern associated with action simulation), which the authors attribute to greater demands on sensorimotor processing for producing two-handed compared to one-handed signs. This effect was smaller for signers than nonsigners, suggesting reduced motor simulation for individuals with greater sign language expertise. These results are consistent with evidence that familiarity and expertise can sometimes reduce engagement of motor simulation processes during action perception (e.g., Liew, Sheng, Margetis, & Aziz-Zadeh, 2013; see Gardner et al., 2017, for discussion).

While PG treat spoken language and action modalities as functionally equivalent, there are modality differences between spoken and signed language that are worth considering with respect to the present paradigm. Spoken language perception is source invariant under normal circumstances: the acoustic signal for speech processing does not drastically differ for self-perception compared to perception of a nearby interlocutor. In contrast, the perception of one’s own signing is drastically different from the perception of another person’s signing, and self-perceived signing is very difficult to comprehend (unlike hearing one’s own voice) because (a) the hands often fall outside the field of view (e.g., signs that are produced on the head), (b) the hands are viewed from the back, and (c) signers cannot see their own linguistic facial expressions (Emmorey, Bosworth, & Kraljic, 2009; Emmorey, Korpics, & Petronio, 2009). Further, signers are more familiar with watching a friend’s signing than with watching the same view of their own signing (e.g., when viewing a video of themselves or signing into a mirror). Therefore, sign motor simulation might be more dependent on the perception of others and less prone to egocentric bias.

Although most models primarily focus on the role of motor simulation in generating representations about the immediate future, predictive mechanisms may also involve other abilities, such as memory capacity. Recent evidence from Huettig and Janse (2016) suggests that working memory may be a mediating factor in predictive language abilities. Specifically, Huettig and Janse (2016) presented participants with (a) a spatial memory task (Corsi block test; Corsi, 1972) and (b) an array of four common objects in an eye-tracking Visual World Paradigm. During eye-tracking, participants could view the images for four seconds before they were instructed to “click on the [target]” in their native Dutch, where the definite article conveys gender information. Spatial memory ability significantly predicted anticipatory eye gazes to the target object prior to hearing the noun. In other words, participants with better memory of the set of objects showed evidence of more rapid predictive processing of the target object based on the gender of the determiner. Thus, visuospatial memory may play a mediating role in forward modeling abilities.

With respect to memory for motor movements, Wu and Coulson (2014) created a working memory test for body movements in which participants watch and repeat meaningless movements, primarily articulated with the arms. Movements were presented with increasing span length until a participant could not successfully produce at least half of the items. This measure was correlated with an ability to judge the congruence between speech and co-speech gesture. However, Wu and Coulson (2014) found no correlation between their movement working memory task and scores on either verbal working memory (sentence span) or spatial working memory tasks (Corsi block test; Corsi, 1972). Thus, motor memory appears to be relatively independent of verbal and spatial working memory abilities. In the present study, we used an adapted version of this task to examine whether motor memory ability contributes to predictive abilities and whether sign language experience might enhance motor memory.

Present study

We provide a test case for (a) the proposal that action and language processing both rely on motor simulation, (b) the notion that predictive performance will exhibit an egocentric bias and/or familiarity effects, and (c) the possible role of motor memory in predictive processing. This study employed manual shadowing as a tool for examining both (non-communicative) gesture and sign language processing. As participants watched a video, they copied the stimulus with as much accuracy and as little delay as possible. A precise measurement of the delay between an action in a stimulus string and a participant’s shadowed action (the lag time) served as the dependent measure of participants’ ability to predict an upcoming stimulus.

Although PG proposed that forward language models facilitate predictions on many linguistic levels, the present study focuses on the form level, allowing us to compare across signer and nonsigner groups. Isolating form-level representations in the absence of semantics requires using pseudosigns as stimuli (i.e., possible but non-existent signs). Many spoken language studies have asked participants to shadow spoken nonsense syllables to examine phonological predictions (e.g., Honorof, Weihing, & Fowler, 2011; Miller et al., 2013). The present study employed the manual equivalent of pseudowords to focus specifically on how signers’ phonological knowledge may facilitate predictions in the absence of semantic or syntactic information. Nonsigners provide a control group for whom the phonological regularities of pseudosigns should not facilitate predictive representations. We also used grooming gestures (e.g., scratching one’s nose, adjusting one’s hair) as a nonlinguistic manual action comparison because they are meaningless and make use of the same articulators and body contact, but do not conform to sign-based phonological principles (i.e., they are not possible signs). We hypothesized that life-long sign language experience creates robust internal representations of ASL phonological structure and that signers can capitalize on these representations to generate predictions based on transitional information between pseudosign stimuli (e.g., Hosemann, Herrmann, Steinbach, Bornkessel-Schlesewsky, & Schlesewsky, 2013). We therefore predicted that signers would show better shadowing ability than nonsigners for pseudosigns. Given the similarities of PG’s proposals for linguistic and non-linguistic forward modeling, it may be the case that experience with linguistic predictive representations strengthens the same processes used for action predictions.
If signers’ predictive abilities generalize to non-linguistic actions, signers may also outperform nonsigners when shadowing grooming gestures.

In addition to effects on immediate lag time durations, we hypothesized that sign language experience might also impact sensitivity to larger prosodic structures within manual production. The shadowing task requires participants to reproduce a series of stimuli as quickly as possible, but signers may also be influenced by the natural prosodic structures of their language, such as manual rhythm. Both spoken and signed languages exhibit rhythmic structure through syllable stress patterns (e.g., Allen, Wilbur, & Schick, 1991). Nespor and Sandler (1999) have documented the use of stress in Israeli Sign Language to mark intonation boundaries and highlight potential parallels in American Sign Language. The list-like nature of stimuli in the present experiment may even encourage rhythmic list prosody, as described by Erickson (1992) for spoken language, where regular stress patterns imply a listed structure to information (see also Selting, 2007). We hypothesized that native adult signers would exhibit similar temporal regularities in their pseudosign productions, and that experience with manual list prosody may even transfer to grooming gestures. Specifically, signers may show greater rhythmic consistency in their productions and produce both pseudosigns and grooming gestures at more regular intervals (i.e., less variation in lag time durations) than their nonsigner counterparts.

To investigate whether an egocentric bias exists for manual shadowing, the present study involved filming each participant and a friend producing strings of pseudosigns and grooming gestures in order to examine predictive abilities when participants shadowed themselves compared to their friend. If an individual’s predictive system uses motor information, they should generate more efficient predictions to their own actions than those of a friend. That is, the idiosyncrasies of a participant’s own motoric system should facilitate shadowing, even in comparison to someone the participant had seen gesture and/or sign regularly.

Alternatively, a predictive system might rely on visual, rather than motoric, familiarity. We therefore added a third unknown model to which the visually familiar friend could be compared. The novel third stimulus model performed the same pseudosigns and grooming gestures but was unfamiliar to all participants. While the friend serves as a baseline for the motoric facilitation of oneself (egocentric bias), the unknown model serves as a baseline for effects of perceptual memory (visual familiarity). The third model also allows all participants to be compared using the same model, in contrast to the other models, which are participant-specific (the participant and his/her friend). In accordance with Pickering and Garrod (2013), motor simulation was hypothesized to regulate all predictive representations, both linguistic and nonlinguistic. In accordance with the right-hand familiarity effects from Watkins and Thompson (2017), visual familiarity was hypothesized to facilitate predictions as well, but perhaps to a lesser degree. Therefore, we predicted participants would demonstrate the shortest lag times for themselves, followed by their friend, with the longest lag times for the unknown model.

To understand manual shadowing in the context of potential memory effects, we administered a novel short-term Motor Memory (MM) test based on Wu and Coulson’s (2014) body movement working memory test. After completing the shadowing task, participants watched and repeated a series of the same grooming gestures and pseudosigns. Signers have more experience in the rehearsal, storage, and reproduction of novel signs (e.g., when learning a new word), and thus we expected this experience to give signers more efficient storage abilities for novel pseudosigns. Signers were therefore predicted to exhibit better memory performance for pseudosigns compared to grooming gestures. Following Wu and Coulson’s (2014) proposal that motor memory is separate from other forms of working memory, it was hypothesized that sign language experience would train motor memory abilities across domains. We predicted signers would outperform nonsigners on this task. In the context of predictive representations, motor memory abilities may be related to participants’ ability to retain an ad hoc lexicon of the possible items, and select individual items from that possible set. We further predicted that better shadowing abilities would be associated with higher motor memory scores.

Methods

Participants

Forty right-handed participants were recruited from the community in San Diego via fliers, online postings, and word of mouth. Participants were recruited as pairs of friends who spend time together regularly. Twenty Deaf signers (12 female, M age = 37.0, range: 24 to 59, SD = 10.6), with either early or native sign language exposure (age of acquisition < 6 years), and 20 sign-naïve English speakers (15 female, M age = 38.8, range: 19 to 64, SD = 17.5) participated. Nonsigners had no sign experience or minimal sign knowledge (e.g., a few signs or the fingerspelled alphabet). One additional pair of nonsigners participated in session one but was excluded from the study due to unusual difficulty in imitating the stimuli, and one additional pair of signing participants failed to return for the second visit. After data collection, one nonsigner was excluded for failure to correctly complete the task.1 Technical issues prevented the inclusion of one signer in the temporal regularity analysis.

Materials

Shadowing

Participants saw six conditions in a 3 × 2 design: model (Self vs. Friend vs. Other) by stimulus type (grooming gestures vs. pseudosigns). Self stimuli were videos of the participant producing the stimuli set. The Friend model was the other member of the participant pair, an individual with whom the participant was familiar. The Other model was a hearing signer confederate unknown to all participants. Grooming gestures, such as scratching one’s nose, rubbing one’s eye, etc., were nonlinguistic actions to which both signers and nonsigners have regular exposure. Twelve common grooming gestures were used in this experiment. Each involved the dominant hand touching some part of the body, including the non-dominant hand, the nose or elsewhere on the head or face. The initial moment of contact is hereafter discussed as the contact point. See Figure 1 for a list of grooming gestures.

Figure 1.

Experimental items for Shadowing and Working Memory tasks, with screen captures highlighting handshape and location. Grooming Gestures (GG) and Pseudosigns (PS). Full videos have been posted to OSF: https://osf.io/y8eqh/?view_only=899078fb3c994814889e9af68d6eda7b.

The pseudosign stimuli were manual productions designed to match grooming gestures in both place of articulation and the number of hands involved (half were one-handed and half were two-handed), while conforming to the phonotactic and phonological constraints of ASL. Pseudosigns were generated by manipulating one or more parameters (handshape, location, or movement) of an existing sign to create a possible, but non-existing ASL sign. The full item inventory is listed in Figure 1.

Randomized eight-item subsets of the twelve-item inventory were generated. Twelve such subsets were selected to avoid repeating any individual item within a subset and to repeat each item eight times across all subsets. A hearing signer (who was different from the Other model) served as the demonstration model. She produced all twelve subsets as separate sentence-like strings for each stimulus type. The demonstration model also produced two practice strings for each stimulus type (pseudosigns and grooming gestures). The demonstration model’s productions employed fluid transitions from one item to the next, as if each string were a sentence. During each participant’s first session, they shadowed only the demonstration model. During the experimental session, each participant shadowed two practice strings (as performed by the demonstration model) per stimulus type (pseudosign, gesture), as well as twelve experimental strings for each model condition (self, friend, other) across the two stimulus types.
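The balancing constraint above (no item repeated within a string, each item appearing eight times across the twelve strings) can be satisfied in many ways. The Python sketch below shows one possible construction, a cyclic design, and is not necessarily the procedure the authors used. It assumes items are numbered 1 to 12 as in Figure 1; the function name `make_subsets` is hypothetical.

```python
import random

def make_subsets(items=tuple(range(1, 13)), size=8, seed=None):
    """Build len(items) strings of `size` distinct items in which every item
    occurs exactly `size` times overall (hypothetical helper, cyclic design)."""
    if size > len(items):
        raise ValueError("string length cannot exceed inventory size")
    rng = random.Random(seed)
    pool = list(items)
    rng.shuffle(pool)  # random assignment of items to positions on the cycle
    n = len(pool)
    subsets = []
    for start in range(n):  # one string per starting position
        # `size` consecutive positions, wrapping around: no item repeats
        # within a string, and each position falls in exactly `size` windows.
        window = [pool[(start + offset) % n] for offset in range(size)]
        rng.shuffle(window)  # randomize item order within the string
        subsets.append(window)
    rng.shuffle(subsets)  # randomize string order
    return subsets

strings = make_subsets(seed=2019)
print(len(strings), [len(s) for s in strings])
```

Because each of the twelve windows covers eight consecutive cycle positions, every position (and therefore every item) is covered by exactly eight windows, which reproduces the 12 × 8 = 96 item tokens per stimulus type.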

Motor Memory (MM)

Wu and Coulson (2014) designed their kinesthetic working memory test to include complex multi-part arbitrary movements. The present MM test replaces these movements with the pseudosign and grooming gesture items used in this study (see Figure 1). After each item was presented, the final frame was held for a one-second inter-stimulus interval, in accordance with short-term memory span paradigms for words and signs (Hall & Bavelier, 2011). Lists were balanced to include equal numbers of each grooming gesture or pseudosign (see Figure 1 for full list). This task adopted the coding scheme and span test design features from Wu and Coulson’s (2014) test (see below), but it extends the maximum span from five to seven and includes a subtest for each stimulus type. For each trial, participants were presented with one or more items (pseudosigns or grooming gestures). After each video, participants were instructed to produce the body movements shown in the video in the order in which they were presented. Three trials at a given span length were presented before the span was increased by one, up to a maximum of seven. If a participant received less than half of the possible credit for a given span length, that subtest (pseudosign or grooming gesture) was concluded. Subtest order was counterbalanced across participants.
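The span progression and stopping rule can be sketched as a simple loop. In this Python illustration (all names hypothetical), `score_trial` stands in for the experimenter's credit for one trial under the scoring rules in the next paragraph. The text does not say whether points earned at the final, failed span count toward the cumulative score; the sketch assumes they do.

```python
def run_span_test(score_trial, trials_per_span=3, min_span=1, max_span=7):
    """Simulate one MM subtest (hypothetical helper).

    score_trial(span, trial) -> credit earned on that trial (0 to `span` points).
    Returns (highest span passed, cumulative score).
    """
    highest_passed = 0
    cumulative = 0.0
    for span in range(min_span, max_span + 1):
        earned = sum(score_trial(span, trial) for trial in range(trials_per_span))
        cumulative += earned  # assumption: points from a failed span still count
        if earned < (span * trials_per_span) / 2:
            break  # less than half of the possible credit: subtest ends
        highest_passed = span
    return highest_passed, cumulative

# A hypothetical participant who reproduces every item correctly up to span 4
# and nothing beyond it: passes span 4 and scores 3 * (1 + 2 + 3 + 4) = 30.
print(run_span_test(lambda span, trial: span if span <= 4 else 0))  # → (4, 30.0)
```

Exactly half of the possible credit counts as a pass, matching the rule that participants advanced if they received at least half of all possible points.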

The cumulative score for the motor memory test was measured by assigning one point for each correctly reproduced item and calculating the sum. For any given stimulus, a participant received one point for a correct production and zero points for an incorrect production. Half credit was awarded if the production differed by only one form-based parameter (i.e., handshape, movement or location), which demonstrated some memory of an item but with a flawed production (e.g., correct movement and location but an incorrect handshape). No credit was awarded for a given item if (a) the participant displayed no recollection of the item and/or produced a different item, or (b) the participant demonstrated recollection of only one parameter (e.g., the location is correct, but the handshape and movement are incorrect). A participant was permitted to move to the next span length of the test if they received at least half of all possible points. The pseudo-randomized order of item presentation was preserved across stimulus type subtests: for example, if a given trial for the pseudosigns presented participants with item numbers 1, 7, 4 and 10 (see Figure 1), this was paralleled by a trial with the same numbered location-matched grooming gestures.
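The item-level credit rule can be expressed as a small function. This Python sketch assumes each item is coded as a handshape, location and movement value, and that the coder separately flags intrusions (productions of a different inventory item, such as GG04 for GG03), which earn no credit even if only one parameter differs. Function names and parameter labels are hypothetical.

```python
PARAMETERS = ("handshape", "location", "movement")

def score_item(target, produced, intrusion=False):
    """Credit for one reproduced item: 1, 0.5, or 0 points (hypothetical helper).

    target / produced: dicts mapping each form parameter to a coded value;
    produced=None means no recollection of the item. intrusion=True marks a
    production of a different inventory item, which earns no credit.
    """
    if produced is None or intrusion:
        return 0.0
    mismatches = sum(target[p] != produced[p] for p in PARAMETERS)
    if mismatches == 0:
        return 1.0  # correct production
    if mismatches == 1:
        return 0.5  # exactly one form-based parameter wrong
    return 0.0      # at most one parameter recalled

def trial_score(pairs):
    """Sum item credits for one trial given (target, produced) pairs."""
    return sum(score_item(target, produced) for target, produced in pairs)

# Hypothetical parameter codes for one item.
target = {"handshape": "bent-V", "location": "chin", "movement": "tap"}
print(score_item(target, {**target, "handshape": "5"}))  # → 0.5
```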

Procedure

This study took place over the course of two sessions for each participant. The first session was dedicated to filming productions for use in the shadowing task. Grooming gestures were always filmed first, and the procedure was the same for both stimulus types. First, an experimenter showed a sample video of each item of a given inventory (pseudosign or grooming gesture) to the participant and asked the participant to repeat each stimulus. Second, the experimenter provided feedback on the correct production of these items. Third, the experimenter showed the participant prerecorded videos of experimental strings, as performed by the demonstration model. The participant was asked to shadow each of these twenty-four demonstration videos three times. The experimenter offered feedback on both the production of individual items and the fluidity of the production. Participants were encouraged not to return to a rest position between individual items, but instead to transition fluidly from one item to the next in the context of a string. This procedure was repeated for both grooming gestures and pseudosign strings. Production of grooming gesture strings and pseudosign strings was blocked (i.e., the stimulus types were not mixed). The same demonstration model who produced the grooming gesture and pseudosign strings in the training videos was used for all participants’ filming (i.e., first elicitation session) as well as practice items at the next session. This filming session was limited to 75 minutes to avoid participant fatigue; this constraint also served as a limit on the amount of feedback that could be provided to participants. All videos were captured and presented to participants at 1920 × 1080 resolution and 60 frames per second.

Between sessions, the most fluent of each participant’s productions were selected. Productions were chosen to maximize similarity to the demonstration model’s production, both in articulation of individual items and smooth transitions between items. Stimulus videos were selected to avoid hesitations and articulatory mistakes. These individual strings were concatenated into experimental videos with pauses between strings as well as cues to indicate a change in conditions or an opportunity to take a break. After every sixth string, the screen displayed the following text: “If you would like to take a break, please do so now.” Breaks were offered twice for each of the six conditions: at the halfway point and at the end. Before each new model condition, the screen displayed the following text: “You will now shadow [yourself / your friend / someone new].” Order of presentation of conditions (pseudosign vs. gesture, Self vs. Friend vs. Other) was counterbalanced across participants. Half of participants shadowed Pseudosigns before Grooming Gestures and half shadowed Grooming Gestures before Pseudosigns. For each participant, model order was consistent across the two stimulus types. Half shadowed themselves before their friend and half shadowed their friend prior to themselves. Given this Self vs. Friend order, 30% saw the novel Other model first, 30% saw her in the middle and 40% saw her last. Model order was nested within stimulus type to avoid switch costs.
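The counterbalancing constraints above (stimulus-type order crossed with a single model order that is repeated for both stimulus types) yield twelve possible block sequences. The Python sketch below enumerates them under our reading of the text; it is not the authors' assignment script, the function name `session_orders` is hypothetical, and it does not reproduce how participants were distributed across sequences (e.g., the 30% / 30% / 40% split for the position of the Other model).

```python
from itertools import permutations

STIMULUS_TYPES = ("Pseudosigns", "Grooming Gestures")
MODELS = ("Self", "Friend", "Other")

def session_orders():
    """All block sequences in which model order is nested within stimulus type
    and repeated identically for both stimulus types (hypothetical helper)."""
    orders = []
    for stim_order in permutations(STIMULUS_TYPES):  # 2 stimulus-type orders
        for model_order in permutations(MODELS):     # 6 model orders
            orders.append([(stim, model) for stim in stim_order for model in model_order])
    return orders

for order in session_orders()[:2]:
    print(order)
```

Nesting model order inside each stimulus-type block means a participant switches stimulus type only once per session, which is the switch-cost concern noted above.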

The second session was dedicated to data collection. Participants stood in front of an iMac running OS X 10.8 and QuickTime 10.4. Participants were filmed using the iMac’s forward-facing integrated camera. This video was synchronized with a screen-capture video of the presented stimuli via Silverback® software2. An example is shown in Figure 2. Participants were first asked to watch all the items from a given inventory individually as performed by the same model they had seen in the first session. This served as a reminder of the possible items. Participants then shadowed two practice strings before completing the stimulus type condition. Participants saw a total of twelve strings of eight items (96 trials) for each of the six conditions, for a total of 576 data points per participant. After a brief break, participants then completed the motor memory task standing in front of the same computer.
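The per-participant trial count follows directly from the 3 × 2 design:

```latex
\begin{aligned}
12\ \text{strings} \times 8\ \text{items} &= 96\ \text{trials per condition},\\
96\ \text{trials} \times (3\ \text{models} \times 2\ \text{stimulus types}) &= 576\ \text{data points per participant}.
\end{aligned}
```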

Figure 2.

Two screenshots from videos used for measuring lag time, as combined using Silverback® software. A) A signer, bottom left, shadows himself. B) The same signer shadows a friend.

Coding

Shadowing Lag

The amount of lag, or delay, between the video recording of the participant and the stimulus presented on the computer screen was coded for each manual production. More precisely, two coders placed labels on an ELAN® timeline spanning from the onset of the stimulus action to the onset of the participant’s action. For body-anchored signs (i.e., signs which contact the body), Caselli, Sehyr, Cohen-Goldberg and Emmorey (2017) define the onset of a sign as the moment the dominant hand contacts another part of the body, also called the contact point. We have extended this onset definition to both pseudosigns and grooming gestures, which were all body-anchored. Ideally, coders would label the single frame in which a hand touches the target location. However, this moment of contact may fall between two frames or extend over two frames. In such cases, coders inferred the trajectory of the articulators by examining adjacent frames and marked the contact point as the frame closest to when the actual contact likely happened. In the absence of any actual contact (i.e., the participant approached but never touched the target location), coders marked the apex of the movement: the first frame in which the participant was no longer approaching the location before changing direction. Two coders were trained on the same sample data set such that ratings across both coders could be directly compared. Coders discussed any disagreements of two or more frames in the sample data to refine coding practices before coding the experimental data. Inter-rater reliability was calculated from 500 experimental data points. Seventy-five percent of annotations were within a one-frame margin of error across both coders, and 97% of annotations were within a two-frame margin of error.
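Because stimulus and participant share one synchronized video timeline at 60 frames per second, a lag annotated in frames converts directly to milliseconds (one frame is about 16.7 ms). The Python sketch below shows that conversion and one way to compute the agreement proportions reported above. It assumes onsets are stored as frame indices on the combined timeline and reads "within a one-frame margin" as an absolute coder difference of at most one frame; the function names are hypothetical.

```python
FPS = 60  # capture and presentation rate reported in the Procedure

def lag_ms(stimulus_onset_frame, participant_onset_frame, fps=FPS):
    """Shadowing lag in ms: participant contact-point frame minus stimulus contact-point frame."""
    return (participant_onset_frame - stimulus_onset_frame) * 1000 / fps

def agreement_within(coder_a, coder_b, tolerance_frames):
    """Proportion of annotations on which two coders differ by at most `tolerance_frames`."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two equal-length, non-empty annotation lists")
    hits = sum(abs(a - b) <= tolerance_frames for a, b in zip(coder_a, coder_b))
    return hits / len(coder_a)

print(lag_ms(100, 163))  # 63 frames at 60 fps → 1050.0
```

Applied to the 500 doubly coded data points, `agreement_within(..., 1)` and `agreement_within(..., 2)` would correspond to the 75% and 97% figures under this reading of the margin.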

Coders also labelled three kinds of productions: those that contained articulatory errors, those in which the participant was distracted, and those for which the contact point was not clearly visible. Of more than 24,000 data points, only 335 were rejected due to error. Nonsigners produced a larger proportion of errors than signers (65% vs. 35%). Sixty-three errors were due to a stimulus error or technical difficulty. Sixty-five were due to the participants’ failure to shadow, whether through a failure to follow instructions or through distraction. In 44 cases, the participant produced a non-target item. For example, rather than producing GG03 (a scratch on the face), the participant produced GG04 (a scratch on the head). One hundred forty-one errors were form-based, that is, errors in handshape, location, or movement. Most form-based errors were in location (n = 85), followed by handshape (n = 49) and movement (n = 7). These errors were excluded from the lag time analyses. They were, however, included in the temporal regularity analysis, because such incorrect productions were still considered reflective of potential rhythmic structure.

Temporal Regularity

Temporal regularity refers to a participant’s consistency in the delay between successive productions. For each string of eight items, seven delays between contact points were measured. The average onset-to-onset delay (the time between items) in participants’ shadowing productions was 1346 ms (SD = 287) for signers and 1374 ms (SD = 327) for nonsigners. For participant stimuli (i.e., the recorded Self and Friend productions), the average delay was 1391 ms (SD = 268) for signers and 1414 ms (SD = 302) for nonsigners. For the Other model stimuli, the average delay was 1275 ms (SD = 289). The standard deviation of the seven delays was calculated for each list and served as our dependent variable. A smaller standard deviation indicates greater regularity of productions within a string.
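The regularity score for one eight-item string can be sketched as follows. The function names are ours. The text does not state whether the sample or the population standard deviation was used, so the choice of the sample SD (n − 1 denominator) here is an assumption.

```python
import statistics
from typing import List, Sequence


def onset_delays(onsets_ms: Sequence[float]) -> List[float]:
    """Onset-to-onset delays between consecutive contact points in one string."""
    return [later - earlier for earlier, later in zip(onsets_ms, onsets_ms[1:])]


def temporal_regularity(onsets_ms: Sequence[float]) -> float:
    """SD of the delays within one string; smaller values mean greater regularity.

    Uses the sample SD (n - 1 denominator), which is an assumption.
    """
    delays = onset_delays(onsets_ms)
    if len(delays) < 2:
        raise ValueError("need at least three onsets to compute an SD of delays")
    return statistics.stdev(delays)


# An eight-item string yields seven delays and one regularity score.
regular = [i * 1300 for i in range(8)]                      # perfectly even spacing
irregular = [0, 1000, 2400, 3400, 4800, 5800, 7200, 8200]   # alternating 1000/1400 ms gaps
print(temporal_regularity(regular), temporal_regularity(irregular))
```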

Analyses

The shadowing data were analyzed to address two sets of hypotheses. The first analysis examined whether model identity (Self, Friend, or Other) influenced lag time, reflecting either egocentric bias or visual familiarity. The second analysis examined production regularity across groups to investigate the potential impact of sign language experience on production rhythm. Both analyses were conducted in Stata 14.2 (StataCorp, 2015) using linear mixed effects models, followed by post-estimation contrasts of marginal linear predictions.

For the motor memory cumulative scores, we conducted a 2 × 2 (group × stimulus type) mixed repeated measures ANOVA. Group was a between-subjects factor and stimulus type was a within-subjects factor. Post-hoc paired t-tests were used in follow-up analyses. Bivariate Pearson correlations were computed between mean shadowing lag times and cumulative motor memory scores.
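The follow-up tests named above use standard formulas. The following is a hedged, standard-library-only sketch. The function names are ours, and the reported analyses were not necessarily computed this way. It shows the paired t statistic and Pearson's r, along with the t statistic used to test r against zero. Obtaining p-values would additionally require the Student t distribution, which the Python standard library does not provide.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t(x: Sequence[float], y: Sequence[float]) -> Tuple[float, int]:
    """Paired-samples t statistic and degrees of freedom (n - 1)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    # Raises ZeroDivisionError if every difference is identical (SD of 0).
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Bivariate Pearson correlation coefficient."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least two points")
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def pearson_t(r: float, n: int) -> Tuple[float, int]:
    """t statistic for testing r against zero, with n - 2 degrees of freedom."""
    df = n - 2
    return r * math.sqrt(df / (1.0 - r * r)), df


# Made-up scores for illustration (e.g., pseudosign vs. grooming gesture per participant).
print(paired_t([2, 4, 6], [1, 2, 3]))
print(pearson_r([1, 2, 3, 4], [2, 4, 6, 8]))
```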

Results

To examine the role of model identity in predicting lag time, we included model, group, stimulus type, and all of their interactions as fixed factors. Condition order (an ordinal variable ranging from one to six), item identity, and subject were included as random intercepts. Finally, by-subject random slopes were included for both stimulus type and model. In essence, this model tested a full factorial design of stimulus type, model, and group. It also partialled out variation due to order effects and differences in difficulty between items. The by-subject random slopes allow for between-subject variation in the conditions of interest (model and stimulus type). For example, a single subject showing an unusually large contrast between grooming gestures and pseudosigns will not unduly sway the overall analysis. The omnibus test of this model is shown in Table 1, and parameter estimates for each condition are shown in Figure 3.
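The model described above can be written schematically as follows. The notation is ours and is a sketch under stated assumptions rather than the exact Stata specification. Observation $n$ belongs to subject $s = s[n]$, item $j = j[n]$, and condition order $o = o[n]$. $G_s$ is a group indicator and $T_n$ is a stimulus type indicator. $\mathbf{M}_n$ is a two-element dummy vector for the Self vs. Friend and Self vs. Other contrasts, with Self assumed to be the reference level, consistent with the rows of Table 1. All random effects and $\varepsilon_n$ are assumed to be normally distributed with mean zero.

```latex
\begin{aligned}
\mathrm{Lag}_n ={}& \beta_0 + \beta_G G_s + \boldsymbol{\beta}_M^{\top}\mathbf{M}_n + \beta_T T_n
  + \boldsymbol{\beta}_{GM}^{\top} G_s \mathbf{M}_n + \beta_{GT}\, G_s T_n \\
&+ \boldsymbol{\beta}_{MT}^{\top} \mathbf{M}_n T_n + \boldsymbol{\beta}_{GMT}^{\top} G_s \mathbf{M}_n T_n \\
&+ \underbrace{u_{0s} + u_{Ts} T_n + \mathbf{u}_{Ms}^{\top}\mathbf{M}_n}_{\text{subject intercept and slopes}}
  + \underbrace{w_j}_{\text{item}} + \underbrace{c_o}_{\text{condition order}} + \varepsilon_n
\end{aligned}
```

Each coefficient with a subscript $M$ has two components. These correspond to the paired Self vs. Friend and Self vs. Other rows reported for each term in Table 1.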

Table 1.

LME effect of Model. Summary of regression effects on lag times across the fixed factors (model, stimulus type, and group) and their interactions.

| Term | Coefficient | SE | z | p > \|z\| |
|---|---|---|---|---|
| Fixed Effects | | | | |
| Intercept | 448.101 | 20.173 | 22.21 | < 0.001 |
| Group | 15.784 | 28.171 | 0.56 | 0.575 |
| Model | | | | |
| Self vs. Friend | 20.158 | 7.299 | 2.76 | 0.006 |
| Self vs. Other | 24.685 | 11.151 | 2.21 | 0.027 |
| Group * Model | | | | |
| Self vs. Friend | −10.71 | 10.204 | −1.05 | 0.294 |
| Self vs. Other | −10.33 | 15.538 | −0.66 | 0.506 |
| Type | 30.055 | 15.819 | 1.9 | 0.057 |
| Group * Type | −44.825 | 22.094 | −2.03 | 0.042 |
| Model * Type | | | | |
| Self vs. Friend | −17.179 | 7.778 | −2.21 | 0.027 |
| Self vs. Other | −22.385 | 7.842 | −2.85 | 0.004 |
| Group * Model * Type | | | | |
| Self vs. Friend | 20.575 | 10.87 | 1.89 | 0.058 |
| Self vs. Other | −7.85 | 10.916 | −0.72 | 0.472 |
| Random Effects | | | | |
| Subject | | | | |
| SD (Intercept) | 7360.171 | 1766.465 | | |
| SD (Type) | 4006.918 | 1018.044 | | |
| SD (Model) | 441.42 | 120.056 | | |
| SD (Item) | 1042.484 | 114.318 | | |
| SD (CondOrder) | NA | | | |

Note. SD = Standard Deviation; SE = Standard Error; p = p-value significance; CondOrder = Order of Condition; NA = Not available: calculation suppressed due to computational limitations.

Figure 3.

Egocentric Bias by Model and Stimulus Type. Parameter estimates for a linear mixed effects model examining the effect of model and stimulus type (Gest = grooming gesture; PSign = pseudosign) on lag times for each group. Error bars indicate Standard Error. * p < .05

Counter to our predictions, there was no main effect of group. There were, however, main effects of model, as well as a marginal main effect of stimulus type. There was also a significant two-way interaction between group and stimulus type, p = 0.042. Parameter estimates indicated a trend whereby nonsigners showed shorter lag times for grooming gestures than for pseudosigns (M = 463 ms, SE = 20.5 ms vs. M = 480 ms, SE = 25.1 ms). Signers, in contrast, showed shorter lag times for pseudosigns than for grooming gestures (M = 448 ms, SE = 24.5 ms vs. M = 472 ms, SE = 20.0 ms). Contrasts of marginal linear predictions showed no simple effects: neither group showed a significant difference between stimulus types. Simple effects emerged only when stimulus type was tested at each level of model within each group. Nonsigners showed a marginal stimulus type difference for Self stimuli, χ2(1) = 3.61, p = 0.057, and signers showed a significant stimulus type difference for Other stimuli, χ2(1) = 8.52, p < 0.01. This two-way interaction is better understood in the context of the three-way interaction of group, stimulus type, and model, described below.
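The contrasts above are reported as χ2 statistics with one degree of freedom, which is typical of Wald tests after a mixed model. A χ2(1) variable is the square of a standard normal variable. Its p-value can therefore be recovered from the complementary error function. The same function reproduces the two-sided p > |z| column of the regression tables. A minimal Python sketch follows; the function names are hypothetical.

```python
import math


def chi2_df1_pvalue(chi2: float) -> float:
    """Upper-tail p-value for a chi-square statistic with 1 degree of freedom.

    A chi-square(1) variable is the square of a standard normal Z, so
    P(X > chi2) = P(|Z| > sqrt(chi2)) = erfc(sqrt(chi2 / 2)).
    """
    if chi2 < 0:
        raise ValueError("chi-square statistic must be non-negative")
    return math.erfc(math.sqrt(chi2 / 2.0))


def two_sided_z_pvalue(z: float) -> float:
    """Two-sided p-value P(|Z| > |z|) for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2.0))


for stat in (3.61, 8.52):
    print(f"chi2(1) = {stat}: p = {chi2_df1_pvalue(stat):.4f}")
print(f"z = 2.76: p = {two_sided_z_pvalue(2.76):.4f}")
```

For example, χ2(1) = 3.61 gives p ≈ .057 and z = 2.76 gives p ≈ .006. These match the values reported in this paragraph and in Table 1.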

Assessing egocentric bias

In the omnibus test, there was a marginal three-way interaction between the first two levels of model (Self and Friend), stimulus type, and group, p = 0.058. Contrasts of marginal linear predictions were performed to test hypotheses regarding egocentric bias (Self vs. Friend) and visual familiarity (Friend vs. Other). Nonsigners exhibited an interaction between stimulus type and the Self vs. Friend model contrast, χ2(1) = 4.88, p = 0.03, whereas signers showed no such interaction, χ2(1) = 0.20, p = 0.65. A follow-up contrast to parse this two-way interaction showed that nonsigners exhibited an egocentric bias for grooming gestures, χ2(1) = 7.63, p = 0.01, but not for pseudosigns, χ2(1) = 0.17, p = 0.68. For grooming gestures, nonsigners were significantly faster when shadowing themselves (M = 448 ms, SE = 20.2 ms) than when shadowing their friend (M = 468 ms, SE = 20.7 ms).

Assessing visual familiarity

Regarding visual familiarity, there was a three-way interaction between model (Friend vs. Other), stimulus type, and group, χ2(1) = 6.80, p = 0.01. Examining this as a two-way interaction within each group revealed a visual familiarity effect for signers, χ2(1) = 19.62, p < 0.01, but not for nonsigners, χ2(1) = 0.44, p = 0.50. Further investigation revealed that this effect was present for the pseudosign stimuli, χ2(1) = 16.24, p < 0.01, but not for the grooming gestures, χ2(1) = 0.47, p = 0.49. Contrary to our predictions, signers were significantly slower when shadowing their friend (M = 462 ms, SE = 24.7 ms) than when shadowing the unknown Other model (M = 433 ms, SE = 26.0 ms).

Assessing model habituation

Subjectively, participants seemed to get better at shadowing over time. We initially attributed the visual familiarity effect to long-term familiarity with a friend. However, participants might also have developed familiarity with each model over the course of a condition. We therefore conducted a post-hoc follow-up model that additionally included a fixed factor for split-half condition progress (first vs. second half of trials within a condition). The omnibus test of this model is shown in Table 2, and parameter estimates for each condition are shown in Figure 4. This model allows us to examine the presence or absence of egocentric bias across groups, stimulus types, and condition halves. Because these eight tests were post hoc, contrasts of marginal linear predictions are evaluated against a Bonferroni-corrected alpha level of .006.
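The corrected threshold follows from dividing the conventional α of .05 by the eight post-hoc contrasts (2 groups × 2 stimulus types × 2 condition halves). This gives .05 / 8 = .00625, reported as .006. Equivalently, each p-value can be multiplied by the number of tests and compared with the uncorrected criteria. The short Python sketch below shows both forms; the helper names are ours.

```python
def bonferroni_alpha(family_alpha: float, n_tests: int) -> float:
    """Per-test alpha that keeps the family-wise error rate at family_alpha."""
    if n_tests < 1:
        raise ValueError("n_tests must be at least 1")
    return family_alpha / n_tests


def bonferroni_adjusted_p(p: float, n_tests: int) -> float:
    """Bonferroni-adjusted p-value, capped at 1."""
    if n_tests < 1:
        raise ValueError("n_tests must be at least 1")
    return min(1.0, p * n_tests)


print(bonferroni_alpha(0.05, 8))          # per-test threshold for eight contrasts
print(bonferroni_adjusted_p(0.0005, 8))   # compare with .05
print(bonferroni_adjusted_p(0.009, 8))    # compare with .05 (and .1 for a trend)
```

With the p-values reported in the results that follow, p = .0005 survives correction (adjusted p = .004). In contrast, p = .009 falls short of the .006 threshold (adjusted p = .072), which is why it is treated as marginal.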

Table 2.

LME Split-half Egocentricity. Summary of regression effects on lag times across the fixed factors (model, stimulus type, and group) and their interactions, with post-hoc inclusion of split-half condition progress.

| Term | Coefficient | SE | z | p > \|z\| |
|---|---|---|---|---|
| Fixed Effects | | | | |
| Intercept | 444.438 | 21.726 | 20.46 | < 0.001 |
| Group | 15.751 | 30.313 | 0.52 | 0.603 |
| Model | | | | |
| Self vs. Friend | 29.602 | 8.448 | 3.5 | < 0.001 |
| Self vs. Other | 37.115 | 12.038 | 3.08 | 0.002 |
| Group * Model | | | | |
| Self vs. Friend | −20.003 | 11.733 | −1.7 | 0.088 |
| Self vs. Other | −25.647 | 16.7 | −1.54 | 0.125 |
| Type | 22.839 | 16.359 | 1.4 | 0.163 |
| Group * Type | −36.369 | 22.806 | −1.59 | 0.111 |
| Model * Type | | | | |
| Self vs. Friend | −18.57 | 9.703 | −1.91 | 0.056 |
| Self vs. Other | −1.592 | 9.828 | −0.16 | 0.871 |
| Group * Model * Type | | | | |
| Self vs. Friend | 30.37 | 13.485 | 2.25 | 0.024 |
| Self vs. Other | −1.864 | 13.55 | −0.14 | 0.891 |
| CondHalf | 6.477 | 7.117 | 0.91 | 0.363 |
| Group * CondHalf | 0.895 | 9.872 | 0.09 | 0.928 |
| Model * CondHalf | | | | |
| Self vs. Friend | −17.79 | 7.908 | −2.25 | 0.024 |
| Self vs. Other | −23.484 | 8.04 | −2.92 | 0.003 |
| Group * Model * CondHalf | | | | |
| Self vs. Friend | 17.546 | 11.005 | 1.59 | 0.111 |
| Self vs. Other | 28.967 | 11.112 | 2.61 | 0.009 |
| Type * CondHalf | 14.789 | 7.955 | 1.86 | 0.063 |
| Group * Type * CondHalf | −17.042 | 11.052 | −1.54 | 0.123 |
| Model * Type * CondHalf | | | | |
| Self vs. Friend | 2.196 | 11.177 | 0.2 | 0.844 |
| Self vs. Other | −41.655 | 11.322 | −3.68 | < 0.001 |
| Group * Model * Type * CondHalf | | | | |
| Self vs. Friend | −19.04 | 15.569 | −1.22 | 0.221 |
| Self vs. Other | −13.147 | 15.71 | −0.84 | 0.403 |
| Random Effects | | | | |
| Subject | | | | |
| SD (Intercept) | 8061.822 | 1975.213 | | |
| SD (Type) | 3998.862 | 1016.509 | | |
| SD (Model) | 452.037 | 122.655 | | |
| SD (CondHalf) | 352.495 | 108.108 | | |
| SD (Item) | 1047.715 | 114.694 | | |
| SD (CondOrder) | NA | | | |

Note. SD = Standard Deviation; SE = Standard Error; p = p-value significance; CondHalf = First or second half of trials within a Condition; NA = Not available: calculation suppressed due to computational limitations.

Figure 4.

Split-half Egocentric Bias. Parameter estimates for a linear mixed effects model examining the effect of condition half and stimulus type (Gest = grooming gesture; PSign = pseudosign) on lag times for each group. Error bars indicate Standard Error. † p < .1, * p < .05 after Bonferroni correction.

Across all eight tests, nonsigners demonstrated an egocentric bias only within the first half of the grooming gesture conditions, χ2(1) = 12.28, p = 0.0005. From the first to the second half, nonsigners’ average lag times increased when shadowing themselves (M = 444 ms vs. 451 ms) and decreased when shadowing their friend (M = 474 ms vs. 462 ms). Signers demonstrated a marginal effect (p < 0.01, but above the corrected alpha of .006) within the first half of the pseudosign condition, χ2(1) = 6.87, p = .009. Their means were as follows: Self, first vs. second half, M = 447 ms vs. 451 ms; Friend, M = 468 ms vs. 456 ms. All other tests were nonsignificant, p > 0.15. In summary, the egocentric bias among nonsigners shadowing grooming gestures disappeared over the course of the experiment. Likewise, the duration of the experiment may have obscured an egocentric bias among signers shadowing pseudosigns.
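The condition-half means reported in this paragraph can be used to express the size of the egocentric bias as Friend minus Self lag. Positive values indicate faster shadowing of oneself. These are descriptive differences only; significance comes from the contrasts above. A short Python sketch of this arithmetic follows. The dictionary layout and names are ours.

```python
from typing import Dict, Tuple

# Condition-half means (ms) as reported in the paragraph above: (first half, second half).
MEANS: Dict[Tuple[str, str], Dict[str, Tuple[int, int]]] = {
    ("nonsigner", "grooming gesture"): {"Self": (444, 451), "Friend": (474, 462)},
    ("signer", "pseudosign"): {"Self": (447, 451), "Friend": (468, 456)},
}


def egocentric_bias(self_ms: float, friend_ms: float) -> float:
    """Friend minus Self lag; positive values mean faster shadowing of oneself."""
    return friend_ms - self_ms


def bias_by_half(cell: Dict[str, Tuple[int, int]]) -> Tuple[float, ...]:
    """Egocentric bias in the first and second half of a condition."""
    return tuple(egocentric_bias(s, f) for s, f in zip(cell["Self"], cell["Friend"]))


for (group, stim_type), cell in MEANS.items():
    print(group, stim_type, bias_by_half(cell))
```

By this measure, the bias shrinks from 30 ms to 11 ms for nonsigners shadowing grooming gestures. It shrinks from 21 ms to 5 ms for signers shadowing pseudosigns.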

Assessing group differences in temporal regularity

A model closely resembling the one described above was used to examine production regularity, with two changes. First, the standard deviations of participants’ production delays served as the dependent measure. Second, the standard deviation of stimulus production delays was included as a continuous random effect. Fixed factors included model, stimulus type, group, and all interactions. Random intercepts included list identity, condition order, and subject, with by-subject random slopes for type and model.

The omnibus test of this model is shown in Table 3, and parameter estimates for each condition are shown in Figure 5. There was a main effect of group, p < 0.001. Signers showed reduced standard deviations, that is, greater regularity (M = 286 ms, SE = 2.9 ms), compared to nonsigners (M = 324 ms, SE = 2.8 ms). There was also a main effect of type, p < 0.001, with all participants showing greater regularity for pseudosigns (M = 270 ms, SE = 3.0 ms) than for grooming gestures (M = 341 ms, SE = 2.9 ms). Finally, there was a group by type interaction, p = .018. Relative to nonsigners, signers showed a larger regularity advantage for pseudosigns (χ2(1) = 114.23, p < .001; M = 244 ms, SE = 3.9 ms vs. M = 295 ms, SE = 3.7 ms) than for grooming gestures (χ2(1) = 27.24, p < .001; M = 328 ms, SE = 3.7 ms vs. M = 353 ms, SE = 3.7 ms).
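The group by type interaction can be read as a difference of differences in the marginal means reported above. In this Python sketch (names ours), signers are treated as the reference group and grooming gestures as the reference type. These reference levels are inferred from the signs of the Table 3 coefficients rather than stated in the text. Because the marginal means are rounded and average over model levels, the result only approximates the Group * Type coefficient of 26.882 and need not equal it.

```python
# Marginal mean SDs of production delays (ms), as reported above.
SIGNER = {"pseudosign": 244, "grooming": 328}
NONSIGNER = {"pseudosign": 295, "grooming": 353}


def type_effect(cell: dict) -> int:
    """Pseudosign minus grooming gesture SD; negative = more regular for pseudosigns."""
    return cell["pseudosign"] - cell["grooming"]


def interaction_contrast(reference_group: dict, other_group: dict) -> int:
    """Type effect in other_group minus type effect in reference_group."""
    return type_effect(other_group) - type_effect(reference_group)


print(type_effect(SIGNER), type_effect(NONSIGNER))   # per-group type effects
print(interaction_contrast(SIGNER, NONSIGNER))       # compare with the Group * Type coefficient
```

The pseudosign advantage is 84 ms for signers but only 58 ms for nonsigners, giving a difference of 26 ms.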

Table 3.

LME Regularity. Summary of regression effects on production consistency across the fixed factors (group, type, and model) and their interactions.

| Term | Coefficient | SE | z | p > \|z\| |
|---|---|---|---|---|
| Fixed Effects | | | | |
| Intercept | 329.86 | 5.914 | 55.78 | < 0.001 |
| Group | 36.051 | 8.099 | 4.45 | < 0.001 |
| Type | −94.715 | 8.17 | −11.59 | < 0.001 |
| Group * Type | 26.882 | 11.338 | 2.37 | 0.018 |
| Model | | | | |
| Self vs. Friend | −4.089 | 7.92 | −0.52 | 0.606 |
| Self vs. Other | −1.261 | 8.124 | −0.16 | 0.877 |
| Group * Model | | | | |
| Self vs. Friend | −6.559 | 11.46 | −0.57 | 0.567 |
| Self vs. Other | −27.496 | 11.588 | −2.37 | 0.018 |
| Type * Model | | | | |
| Self vs. Friend | 5.064 | 11.154 | 0.45 | 0.65 |
| Self vs. Other | 28.522 | 11.432 | 2.49 | 0.013 |
| Group * Type * Model | | | | |
| Self vs. Friend | 5.16 | 16.041 | 0.32 | 0.748 |
| Self vs. Other | −9.149 | 16.247 | −0.56 | 0.573 |
| Random Effects | | | | |
| Subject | | | | |
| SD (Type) | 89.977 | 143.755 | | |
| SD (Model) | < 0.001 | < 0.001 | | |
| SD (Intercept) | 522.25 | 827.649 | | |
| SD (StimReg) | 4897.377 | 427.297 | | |
| SD (List) | NA | | | |

Note. SD = Standard Deviation; SE = Standard Error; p = p-value significance; StimReg = Stimulus regularity, SD of delays between stimuli; NA = Not available: calculation suppressed due to computational limitations.

Figure 5.

Regularity Analysis. Parameter estimates for a linear mixed effects model examining the effect of stimulus type (Gest = grooming gesture; PSign = pseudosign) on standard deviations (SD) of production delays for each group. Error bars indicate Standard Error. * p < .05

While there was no three-way interaction, there were group by model, p = .017, and type by model, p = .013, interactions. These interactions seem to stem from differences in the impact of visual familiarity (Friend vs. Other). Nonsigners showed increased regularity for the Other model during grooming gesture production (χ2(1) = 4.77, p = .03; Other: M = 337 ms, SD = 6.1 ms vs. Friend: M = 355 ms, SD = 6.0 ms). Signers showed the opposite effect during pseudosign production, namely increased regularity for their Friend relative to the Other model (χ2(1) = 10.88, p = .001; Other: M = 262 ms, SD = 6.0 ms vs. Friend: M = 236 ms, SD = 6.0 ms). See Figure 5.

Motor Memory

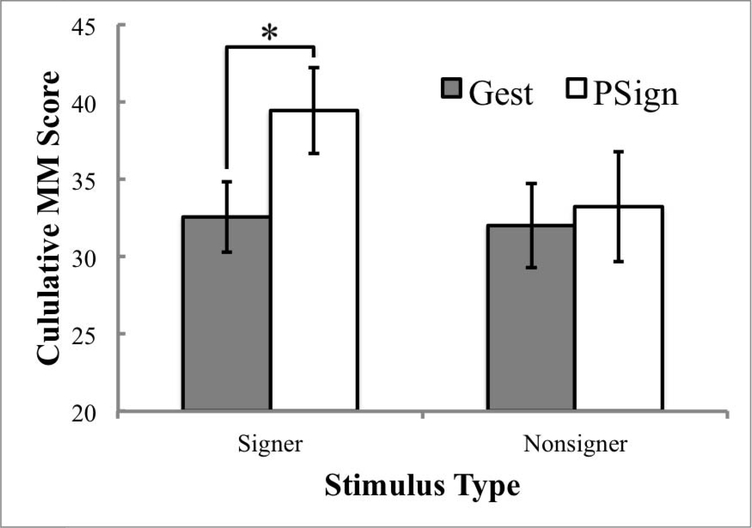

The mixed ANOVA of the motor memory cumulative scores showed a marginal effect of stimulus type, F(1, 37) = 3.48, p = .070. On average, participants showed higher cumulative scores for pseudosigns (M = 36, SD = 16) than for grooming gestures (M = 32, SD = 11). There was neither a main effect of group, F(1, 37) = 1.00, p = .324, nor an interaction, F(1, 37) = 1.76, p = .198. However, post-hoc paired t-tests revealed that signers performed significantly better on pseudosigns than on grooming gestures, t(19) = −3.456, p = .003, whereas nonsigners showed no such difference, t(18) = −.308, p = .762. Mean cumulative scores for each group and stimulus type are shown in Figure 6.

Figure 6.

Motor Memory (MM) Cumulative Scores. Cumulative scores and standard errors on the motor memory test, by group and stimulus type, grooming gesture (Gest) or pseudosign (PSign). Error bars indicate Standard Error. * p < .05

Correlations between mean shadowing lag times and motor memory cumulative scores were computed separately for each group. Signers showed no relationship between these measures, r(20) = −.065, p = 0.786. In contrast, nonsigners with better motor memory scores had shorter lag times, r(18) = −.541, p = 0.020. Thus, nonsigners seemed to rely on motor memory capacity to reproduce items quickly in the shadowing task. Temporal regularity scores did not correlate with motor memory scores in either group.

Discussion

The present study was designed to address a number of related questions about predictive processing in the manual modality: (a) Do we find evidence for motor simulation in the form of egocentric biases? (b) Does visual familiarity facilitate predictive processing? (c) Does evidence of motor simulation hold for signers across linguistic and nonlinguistic domains? (d) Does prosodic rhythm affect signers’ shadowing productions, increasing temporal regularity? (e) Does motor memory facilitate manual shadowing? We address each of these questions below and also discuss similarities and differences between vocal and manual shadowing.

Egocentric Bias

Under some proposals of forward modeling (e.g., Pickering & Garrod, 2013), covert motor imitation guides predictive representations and results in egocentric bias. Previous studies have found such a bias for commonplace actions: individuals predict their own actions better than those of others (e.g., Knoblich et al., 2001; 2002). In the present study, we found evidence for egocentric bias when nonsigner participants shadowed common actions, namely grooming gestures. However, a post-hoc analysis contrasting the first and second halves of each condition showed that this effect was not stable. The egocentric bias found for nonsigners disappeared with continued exposure and practice. Over the course of the experiment, predictive processing may have become attuned to the specifics of each model, reducing the initial egocentric effect. The absence of an egocentric effect among nonsigners shadowing pseudosigns may reflect a familiarity bias. That is, predicted actions may need to be sufficiently familiar in order to generate a motor command from perception alone. Nonsigners had not been exposed to pseudosigns prior to this study. This lack of exposure may have rendered them less capable of accessing the motor commands required for fast covert imitation. However, the results of Corina and Gutierrez (2016), Watkins and Thompson (2017), and Hickok et al. (2011) indicate that covert imitation facilitates predictions for difficult stimuli. Overall lag time means indicate that pseudosign stimuli were more difficult than grooming gestures for the nonsigners. Even so, there was no egocentric bias for the more difficult pseudosigns. Instead, the present evidence supports the proposal that only difficult but familiar contexts engage motor simulation.

Two proposals are relevant for examining signer shadowing performance. Hickok (2011) proposes that only particularly difficult conditions engage motor simulation. Gardner and colleagues (2017) propose that only moderate expertise results in motor simulation. In the present study, egocentric bias among signers was limited to a marginal post-hoc effect during the first half of the pseudosign shadowing condition. In general, signers have much more experience than nonsigners in mapping an observed individual’s body onto their own in order to understand how to produce a manual movement (Shield & Meier, 2018). Extensive sign language experience may therefore render shadowing a less cognitively demanding task for signers than for nonsigners.³

Additionally, sign language experience may improve users’ ability to rapidly accommodate the idiosyncrasies of various interlocutors, just as spoken language users can rapidly attune to accents (e.g., White & Aslin, 2011). Signers have everyday experience with many non-native signers and with sociolinguistic variation in ASL (see Lucas, 2001, for a review). Through this experience, they may become adept at overcoming egocentric bias more rapidly than spoken language users. Future studies might investigate differences in the rate of phonological attunement across spoken and signed languages.

Familiarity

Within the shadowing analyses, Friend versus Other model contrasts were performed on lag time data to examine the effect of visual familiarity on shadowing ability: can visual exposure to a friend’s gestures and/or signs improve predictive processing? We found no facilitative effect. Instead, when shadowing pseudosigns, signers were significantly faster for the unknown model than for their friend. This finding may bear more on the quality of the to-be-shadowed stimuli than on the nature of shadowing familiar versus unfamiliar models. The quality of the Friend stimuli was limited. These stimuli reflected the best productions that participants (both signers and nonsigners) could produce within an hour and fifteen minutes. In contrast, the Other model was a dedicated hearing signer confederate who was more highly trained over the course of several hours. The average participant developed no memory for the randomized order of each string in their limited viewings. The Other model, however, memorized these lists to some extent. She may therefore have provided higher quality, and thus more predictable, productions. Future studies investigating the impact of visual familiarity on shadowing performance should avoid such variation in stimulus quality. They might do so by limiting the training of any confederate models or by using Friend models from other participant pairs.

Production quality could refer to either (a) the features of a good production that were explicitly solicited from participants (i.e., minimal hesitations, target forms) or (b) phonological or prosodic regularities of the sort seen in natural sign language production. High quality productions were experimentally defined by two criteria. They had few hesitations and disfluencies (i.e., pauses or the manual equivalent of ‘umm’), and their forms were close to the target handshape, location, and movement. Suppose better performance for the Other model were simply an effect of greater fluency and consistency yielding more predictable stimuli. In that case, one would expect shorter lag times for the Other model across groups and stimulus types. Instead, this benefit was observed only for signers shadowing pseudosigns. We therefore suggest that the Other model’s pseudosign productions may have carried prosodic features that were more predictable only to those with experience interpreting such cues. In other words, the Other model (a highly proficient signer) knew she was producing pseudosigns. She may therefore have applied prosodic cues to these stimuli but not to the grooming gestures. These cues may have been interpretable only to signers familiar with such prosodic information.

It is difficult, however, to specify exactly what these prosodic cues may be. Subtleties in the location of the hands for a given item can convey information about upcoming locations via coarticulation (Grosvald & Corina, 2012; Mauk, 2003). Similarly, signers can glean important information from handshape transitions during fingerspelling (Schwarz, 2000, as cited in Geer & Keane, 2017), although the same information may be distracting to nonsigners (Geer & Keane, 2014). As a result of more extensive training, the Other model often knew what the next pseudosign would be. She may therefore have provided subtle coarticulatory cues to location, handshape, and movement. For example, she may have produced pseudosigns at the face slightly lower if the subsequent item was produced in neutral space. Regarding stimulus differences, the Other model may have produced robust coarticulatory cues only in her pseudosign strings and not in her grooming gesture strings. Regarding group differences, these coarticulatory cues may be predictively useful only for signers. Even if the same cues were present in the grooming gesture videos, signers may have treated coarticulatory information as relevant only in linguistic contexts. Sign language comprehension, in general, makes use of manual coarticulation to facilitate phonological forward models (Grosvald & Corina, 2012; Hosemann et al., 2013). However, group differences in this domain, while intuitive, have yet to be demonstrated. Nor can the present data distinguish whether this information is present only in phonological contexts or is perceptually useful only in these contexts.

Temporal Regularity

One major aspect of prosody we were able to examine was rhythm, in the form of temporal regularity. We controlled for the regularity of the model by partialling out stimulus temporal regularity as a random effect in the mixed effects model. Even so, signers’ manual productions had more consistent onset-to-onset delays than those of nonsigners. Group differences were greater for pseudosign stimuli than for grooming gestures. This result suggests that broader structures governing prosodic rhythm (see Allen et al., 1991) might be used during pseudosign shadowing. These structures may also transfer to nonlinguistic gesture production in the same setting. Iversen, Patel, Nicodemus, and Emmorey (2015) demonstrated a similar form of nonlinguistic transfer when signers and nonsigners were asked to tap in synchrony with a bouncing ball. In contrast to hearing nonsigners, deaf signers organized the visual rhythm metrically, coupling pairs of taps together. Ten of the twelve pseudosign stimuli exhibited a dual-motion structure (i.e., the movement is repeated, similar to ASL nouns like CHAIR). Thus, the pseudosign strings may lend themselves to this same natural rhythm.

Interestingly, of the three models, the unfamiliar expert (Other) model yielded the shortest lag for signers but also the least temporal regularity. The coarticulatory cues discussed above may enhance predictability and permit very close shadowing while reducing the detection of temporal regularity. The lesser degree of coarticulation in the other conditions may not permit close in-tandem shadowing for signers. This may instead encourage a greater degree of rhythmic list prosody (see Erickson, 1992).

Motor Memory

The motor memory task was included to examine whether memory is a potential mediating variable in predictive processing. Overall, the two groups showed equivalent motor memory, suggesting that sign language experience does not confer general improvements in motor memory. Instead, the benefits of sign language experience seem to be limited to linguistic stimuli, with signers performing better on the pseudosign subtest than on the grooming gesture subtest. Nonsigners, on the other hand, performed equivalently across the two stimulus types. The dissociation between signers’ pseudosign and grooming gesture scores reinforces the notion that signers maintain such information in a phonological loop. This is akin to the way spoken language users rely on subvocal articulation to maintain verbal information (Hall & Bavelier, 2010; Wilson & Emmorey, 1997). The equivalent grooming gesture scores for signers and nonsigners indicate that the signers’ phonological loop does not naturally adapt to nonlinguistic gestures.

Correlations between shadowing lag times and motor memory scores indicated that nonsigners, but not signers, relied on memory abilities to complete the shadowing task. Nonsigners have limited experience producing manual actions in such rapid succession. Shadowing may therefore be a more cognitively demanding task for these participants. As a result, nonsigners may need to recruit additional memory resources in order to rapidly retrieve items from the ad hoc lexicon formed by the items in this study. Signers, on the other hand, have experience learning novel signs and integrating them into discourse. They can therefore draw on an ad hoc lexicon more readily and without significant cognitive load.

Manual compared to vocal shadowing

This is the first study to examine shadowing in the manual modality. Early research with this method by Marslen-Wilson (1985) highlighted individual differences across participants shadowing English prose. Close shadowers achieved lag times within 200 ms. In more recent studies, participants shadowing words in nonsense sentences showed average lag times of around 610 ms (Nye & Fowler, 2003). When participants closely shadowed nonwords, average lag times ranged from 380 to 510 ms (Mitterer & Ernestus, 2008). When the shadowed stimulus was one of only three possible syllables, the average lag time was 183 ms (Fowler et al., 2003). These disparities reflect differing degrees of stimulus predictability across studies. In general, without sentential context, larger inventories of possible shadowed items are associated with longer average lag times. The present study used a limited inventory of twelve actions in each condition. With this inventory, signers shadowing pseudosigns showed a grand mean lag time of 448 ms, measured from the contact point (see Methods). Individual average lag times ranged from 253 ms to 614 ms. A direct comparison with studies using different inventory sizes is difficult. Nevertheless, our average lag time falls at the upper end of the range of previous findings for vocal shadowing. The wide range of shadowing times across our participants indicates that it may be possible to identify close shadowers in future studies, as has been done for spoken languages (Marslen-Wilson, 1985). Compared with similar studies of vocal shadowing of nonwords (e.g., Fowler et al., 2003), our mean pseudosign lag time for signers suggests that manual shadowing takes slightly longer. This result is unsurprising. Manual shadowing employs larger and slower articulators than vocal shadowing, in which the articulators travel much shorter distances within the vocal tract.

A direct test of modality differences in this paradigm for individual nonwords would need to address the differing realizations of coarticulatory information. For spoken languages, coarticulatory information is often hidden (e.g., the movement of the tongue to the alveolar ridge and the lowering of the velum are not directly observable). In contrast, the articulators of sign languages are directly and consistently observable. In a cross-linguistic shadowing study, it may therefore be difficult to determine what degree of vocal coarticulation is equivalent to the transitional information available prior to (pseudo)sign onset. Instead, future studies could examine prose shadowing to look for possible modality differences in top-down facilitation effects. Such studies could also establish manual shadowing norms similar to those from Marslen-Wilson’s (1973; 1985) early work with this methodology.

Finally, the present study was limited by the capabilities of the recording hardware, which could not capture video above 23.98 fps with Silverback® software. Future research in this domain could use equipment with higher frame rates, such as motion capture systems. Recording shadowing at a higher frame rate would reduce noise and increase the precision of lag-time coding.
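The effect of frame rate on lag-time precision can be made concrete with a back-of-the-envelope calculation. The Python sketch below is illustrative only and rests on assumptions not stated in the study: each event (model contact, shadower contact) is assumed to be timestamped to the frame in which it appears, with the true time falling uniformly and independently within that frame. Under that assumption, a single timestamp carries an error with standard deviation d/√12 for a frame duration d, and because a lag time is the difference of two timestamps, the two variances add. The 60 fps and 23.98 fps values come from the text and footnotes; the 120 fps setting is a hypothetical higher-speed setup, and the function names are invented for this example.

```python
import math


def frame_duration_ms(fps: float) -> float:
    """Duration of one video frame in milliseconds."""
    return 1000.0 / fps


def lag_quantization_sd_ms(model_fps: float, shadower_fps: float) -> float:
    """Approximate SD of the error that frame-based coding adds to a lag time.

    Assumption (hypothetical, for illustration): each event's true time is
    uniformly and independently distributed within its frame, so each
    timestamp error has SD d / sqrt(12). The lag is the difference of two
    such errors, so their variances add.
    """
    d_model = frame_duration_ms(model_fps)
    d_shadow = frame_duration_ms(shadower_fps)
    return math.sqrt((d_model ** 2 + d_shadow ** 2) / 12.0)


if __name__ == "__main__":
    # 23.98 and 60 fps appear in the text; 120 fps is a hypothetical comparison.
    settings = [(23.98, 23.98), (60.0, 23.98), (60.0, 120.0)]
    for model_fps, shadower_fps in settings:
        sd = lag_quantization_sd_ms(model_fps, shadower_fps)
        print(
            f"model {model_fps:6.2f} fps, shadower {shadower_fps:6.2f} fps: "
            f"lag-time coding SD ~ {sd:4.1f} ms"
        )
```

Under these assumptions, coding both events from 23.98 fps video adds roughly 17 ms of standard deviation to each lag time, against a frame duration of about 42 ms. This is small relative to the 448 ms grand mean but not negligible when comparing standard deviations across groups.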

Conclusions

Overall, the present experiment provides little support for the Pickering and Garrod (2013) model of persistent and robust motor simulation across linguistic and nonlinguistic domains. The limited evidence of egocentric bias presented here instead aligns more closely with proposals that predictive processes are related to familiarity biases (Watkins & Thompson, 2017) and/or cognitive load (Hickok et al., 2011). In contrast to Pickering and Garrod, a recent theoretical paper by Dogge, Custers, and Aarts (2019) proposes that the primary predictive mechanism may be non-motoric rather than covert motoric imitation. In addition, Gardner et al. (2017) propose that motoric simulation may be more of an intermediate step in the development of expertise. In line with these proposals, the present findings suggest that motor simulation is a supplementary rather than a primary mechanism for predictive representations, and that its use may vary across levels of expertise. Pseudosigns were insufficiently familiar for the nonsigners to engage in egocentric motor simulation, and the disappearance of egocentric effects in the second half of the grooming gesture trials indicates rapid attunement to the models in the shadowing task.

Finally, we found that signers demonstrated longer-term rhythmic effects, especially during pseudosign production, reminiscent of prosodic rhythm effects in natural language production (Allen et al., 1991; Nespor & Sandler, 1999; Selting, 2007) or even of metric rhythm in nonlinguistic tasks (Iversen et al., 2015). Overall, these findings highlight the need for future research on the coarticulatory cues that facilitate comprehension and on phonological attunement processes among ASL signers.

Highlights

Signers and nonsigners shadowed pseudosigns and grooming gestures.

Participants shadowed themselves, a friend, or an unknown model.

Limited evidence for covert imitation during shadowing (little egocentric bias).

Signers shadowed with greater temporal regularity than nonsigners.

Signers had better motor memory for pseudosigns than grooming gestures.

Acknowledgments

This work was supported by a grant from the National Institutes of Health (R01 DC010997). The authors would like to express their gratitude to our participants; to Emily Kubicek and Lucinda O'Grady Farnady; to Jörg Matt for statistical consultation; and to our research assistants Cami Miner, Israel Montano, Francynne Pomperada, and Karina Maralit.

Footnotes

This participant’s mean lag time was an outlier relative to other participants. Rather than shadowing, this participant appears to have held items in memory briefly for one third of trials.

Although stimuli were presented at 60 frames per second, hardware limitations prevented Silverback from recording above 23.98 fps, even at its highest setting.

Additional correlation evidence with the motor memory task supports this notion of a difference in task difficulty across groups (see below).


References

- Allen GD, Wilbur RB, & Schick BB (1991). Aspects of rhythm in ASL. Sign Language Studies, 72(1), 297–320. doi: 10.1353/sls.1991.0020

- Caselli NK, Sehyr ZS, Cohen-Goldberg AM, & Emmorey K (2017). ASL-LEX: A lexical database of American Sign Language. Behavior Research Methods, 49(2), 784–801.

- Corina DP, & Gutierrez E (2016). Embodiment and American Sign Language. Gesture, 15(3), 291–305. doi: 10.1075/gest.15.3.01cor

- Corsi P (1972). Memory and the medial temporal region of the brain (Unpublished doctoral dissertation). McGill University, Montreal, QC.

- Dogge M, Custers R, & Aarts H (2019). Moving forward: On the limits of motor-based forward models. Trends in Cognitive Sciences, 23(9), 743–753. doi: 10.1016/j.tics.2019.06.008

- Emmorey K, Bosworth R, & Kraljic T (2009). Visual feedback and self-monitoring of sign language. Journal of Memory and Language, 61(3), 398–411.

- Emmorey K, Korpics F, & Petronio K (2009). The use of visual feedback during signing: Evidence from signers with impaired vision. Journal of Deaf Studies and Deaf Education, 14(1), 99–104.

- Erickson F (1992). They know all the lines: Rhythmic organization and contextualization in a conversational listing routine. In The contextualization of language (pp. 365–397).

- Fowler CA, Brown JM, Sabadini L, & Weihing J (2003). Rapid access to speech gestures in perception: Evidence from choice and simple response time tasks. Journal of Memory and Language, 49(3), 396–413. doi: 10.1016/S0749-596X(03)00072-X

- Gardner T, Aglinskas A, & Cross ES (2017). Using guitar learning to probe the Action Observation Network's response to visuomotor familiarity. NeuroImage, 156, 174–189.

- Geer LC, & Keane J (2014). Exploring factors that contribute to successful fingerspelling comprehension. In Calzolari N, Choukri K, Declerck T, Loftsson H, Maegaard B, Mariani J, Moreno A, Odijk J, & Piperidis S (Eds.), Proceedings of the Ninth International Conference on Language Resources and Evaluation (LREC'14) (pp. 1905–1910). Reykjavik: European Language Resources Association (ELRA).

- Geer L, & Keane J (2017). Improving ASL fingerspelling comprehension in L2 learners with explicit phonetic instruction. Language Teaching Research, 1–19. doi: 10.1177/1362168816686988

- Grosvald M, & Corina DP (2012). The perceptibility of long-distance coarticulation in speech and sign: A study of English and American Sign Language. Sign Language & Linguistics, 15(1), 73–103. doi: 10.1075/sll.15.1.04gro

- Hall M, & Bavelier D (2010). Working memory, deafness and sign language. In Marschark M & Spencer PE (Eds.), The handbook of deaf studies, language and education (pp. 458–472). Oxford, UK: Oxford University Press.

- Hall ML, & Bavelier D (2011). Short-term memory stages in sign vs. speech: The source of the serial span discrepancy. Cognition, 120(1), 54–66. doi: 10.1016/j.cognition.2011.02.014

- Haueisen J, & Knösche TR (2001). Involuntary motor activity in pianists evoked by music perception. Journal of Cognitive Neuroscience, 13(6), 786–792. doi: 10.1162/08989290152541449

- Hickok G, Houde J, & Rong F (2011). Sensorimotor integration in speech processing: Computational basis and neural organization. Neuron, 69(3), 407–422. doi: 10.1016/j.neuron.2011.01.019

- Honorof DN, Weihing J, & Fowler CA (2011). Articulatory events are imitated under rapid shadowing. Journal of Phonetics, 39(1), 18–38. doi: 10.1016/j.wocn.2010.10.007

- Hosemann J, Herrmann A, Steinbach M, Bornkessel-Schlesewsky I, & Schlesewsky M (2013). Lexical prediction via forward models: N400 evidence from German Sign Language. Neuropsychologia, 51(11), 2224–2237.

- Iversen J, Patel A, Nicodemus B, & Emmorey K (2015). Synchronization to auditory and visual rhythms in hearing and deaf individuals. Cognition, 134, 232–244. doi: 10.1016/j.cognition.2014.10.018

- Knoblich G, & Flach R (2001). Predicting the effects of actions: Interactions of perception and action. Psychological Science, 12(6), 467–472. doi: 10.1111/1467-9280.00387

- Knoblich G, Seigerschmidt E, Flach R, & Prinz W (2002). Authorship effects in the prediction of handwriting strokes: Evidence for action simulation during action perception. The Quarterly Journal of Experimental Psychology Section A, 55(3), 1027–1046. doi: 10.1080/02724980143000631

- Kubicek E, & Quandt LC (2019). Sensorimotor system engagement during ASL sign perception: An EEG study in deaf signers and hearing non-signers. Cortex, 119, 457–469.

- Kutas M, DeLong KA, & Smith NJ (2011). A look around at what lies ahead: Prediction and predictability in language processing. In Bar M (Ed.), Predictions in the brain: Using our past to generate a future (pp. 190–207). doi: 10.1093/acprof:oso/9780195395518.003.0065

- Liew SL, Sheng T, Margetis JL, & Aziz-Zadeh L (2013). Both novelty and expertise increase action observation network activity. Frontiers in Human Neuroscience, 7, 541.

- Lucas C (Ed.). (2001). The sociolinguistics of sign languages. Cambridge: Cambridge University Press.

- Marslen-Wilson WD (1973). Linguistic structure and speech shadowing at very short latencies. Nature, 244, 522–523. doi: 10.1038/244522a0

- Marslen-Wilson WD (1985). Speech shadowing and speech comprehension. Speech Communication, 4(1–3), 55–73. doi: 10.1016/0167-6393(85)90036-6

- Mauk CE (2003). Undershoot in two modalities: Evidence from fast speech and fast signing (Unpublished doctoral dissertation). The University of Texas at Austin.

- Miller RM, Sanchez K, & Rosenblum LD (2013). Is speech alignment to talkers or tasks? Attention, Perception, & Psychophysics, 75(8), 1817–1826. doi: 10.3758/s13414-013-0517-y

- Mitterer H, & Ernestus M (2008). The link between speech perception and production is phonological and abstract: Evidence from the shadowing task. Cognition, 109(1), 168–173. doi: 10.1016/j.cognition.2008.08.002

- Nespor M, & Sandler W (1999). Prosody in Israeli Sign Language. Language and Speech, 42(2–3), 143–176. doi: 10.1177/00238309990420020201

- Nye PW, & Fowler CA (2003). Shadowing latency and imitation: The effect of familiarity with the phonetic patterning of English. Journal of Phonetics, 31(1), 63–79. doi: 10.1016/S0095-4470(02)00072-4

- Pickering MJ, & Garrod S (2013). An integrated theory of language production and comprehension. Behavioral and Brain Sciences, 36(4), 329–347. doi: 10.1017/S0140525X12001495

- Schwarz AL (2000). The perceptual relevance of transitions between segments in the fingerspelled signal (Unpublished doctoral dissertation). University of Texas at Austin.

- Selting M (2007). Lists as embedded structures and the prosody of list construction as an interactional resource. Journal of Pragmatics, 39(3), 483–526. doi: 10.1016/j.pragma.2006.07.008

- Shield A, & Meier RP (2018). Learning an embodied visual language: Four imitation strategies available to sign learners. Frontiers in Psychology, 9, 811. doi: 10.3389/fpsyg.2018.00811

- StataCorp. (2015). Stata statistical software: Release 14. College Station, TX: StataCorp LP.

- Watkins F, & Thompson RL (2017). The relationship between sign production and sign comprehension: What handedness reveals. Cognition, 164, 144–149. doi: 10.1016/j.cognition.2017.03.019

- White KS, & Aslin RN (2011). Adaptation to novel accents by toddlers. Developmental Science, 14(2), 372–384. doi: 10.1111/j.1467-7687.2010.00986.x

- Wilson M, & Emmorey K (1997). A visuospatial "phonological loop" in working memory: Evidence from American Sign Language. Memory & Cognition, 25(3), 313–320. doi: 10.3758/BF03211287

- Wilson M, & Knoblich G (2005). The case for motor involvement in perceiving conspecifics. Psychological Bulletin, 131(3), 460–473. doi: 10.1037/0033-2909.131.3.460

- Wu YC, & Coulson S (2014). A psychometric measure of working memory capacity for configured body movement. PLoS ONE, 9(1), e84834. doi: 10.1371/journal.pone.0084834