Abstract

With the wide adoption of functional magnetic resonance imaging (fMRI) by cognitive neuroscience researchers, large volumes of brain imaging data have been accumulated in recent years. Aggregating these data to derive scientific insights often faces the challenge that fMRI data are high-dimensional, heterogeneous across people, and noisy. These challenges demand the development of computational tools that are tailored both for the neuroscience questions and for the properties of the data. We review a few recently developed algorithms in various domains of fMRI research: fMRI in naturalistic tasks, analyzing full-brain functional connectivity, pattern classification, inferring representational similarity and modeling structured residuals. These algorithms all tackle the challenges in fMRI similarly: they start by making clear statements of assumptions about neural data and existing domain knowledge, incorporate those assumptions and domain knowledge into probabilistic graphical models, and use those models to estimate properties of interest or latent structures in the data. Such approaches can avoid erroneous findings, reduce the impact of noise, better utilize known properties of the data, and better aggregate data across groups of subjects. With these successful cases, we advocate wider adoption of explicit model construction in cognitive neuroscience. Although we focus on fMRI, the principle illustrated here is generally applicable to brain data of other modalities.

Keywords: probabilistic graphical model, Bayesian, fMRI, cognitive neuroscience, big data, factor model, matrix normal

1. Introduction

Functional magnetic resonance imaging (fMRI) [1, 2] is a powerful tool to study the brain’s activity and functions. The fluctuation of the fMRI signal is related to the fluctuation of the concentrations of the oxygenated and deoxygenated hemoglobin in the blood, which follows the increase or decrease of local neuronal activity with a delay [3, 4]. This relation to the neural activity, together with its non-invasive nature, full brain coverage and reasonable balance between spatial and temporal resolution, makes fMRI a widely used brain imaging technique for studying the neural correlates of perceptual and cognitive processes in humans.

However, deriving insights about neural information processing from fMRI data can be challenging in many situations, because (1) fMRI signals only indirectly relate to neural activity [3, 5]; (2) the data typically contain noise and unknown physiological signals with complex spatial and temporal correlation, and various artifacts [6–8]; (3) the number of brain volumes scanned in each experiment is much smaller than the number of voxels (high dimensionality of data in contrast to small sample size); and (4) there is large variation in detailed brain anatomical structures and functional organization across people [9, 10], making it harder to aggregate data across people. Analysis tools need to take these factors into account in order to obtain fruitful insight from data. One promising approach to address these challenges is that of probabilistic graphical models. Probabilistic graphical models (PGM) are frameworks used to create probabilistic models of complex data distributions and represent them in compact graphical representation [11]. PGMs have been widely used in many different fields [12–14]. In behavioral studies of perception and cognition, PGM is not only a framework for describing the computational processes in the brain [15–17], but also a framework for testing different models against behavior [18–20]. However, except in few domains, its value for neural imaging analysis has not been fully appreciated. With PGMs, we can explicitly assume the relations and dependencies between quantities of interest and the data, construct hierarchical generative models that posit how fMRI data is generated from mental processes, incorporate structural assumptions about the data and domain knowledge into the models, and take into account the uncertainty/noise in fMRI data explicitly. These are benefits that are hard to achieve in conventional fMRI analysis tools, which provide a universal set of analyses whose baseline assumptions may or may not apply to specific datasets. Fig. 1 describes the scheme of building PGMs for fMRI study. In this paper, we illustrate how to build PGMs to solve neural imaging analysis problems following this scheme.

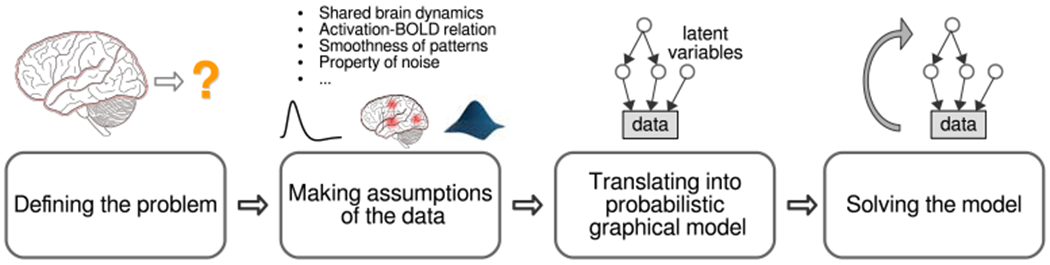

Figure 1:

The PGM-based approach to analyze neural data. In general, this involves four steps: (1) clearly defining the problem to solve or the question being asked; (2) making assumptions about the property of the data, including domain knowledge about data and causal relation between latent variables and measured data; (3) translating these assumptions to a probabilistic graphical model which expresses how latent variables together generate measured data. The model uses conditional probability distributions between variables to capture their causal relations; (4) solving the model to infer latent variables or to draw conclusion for the question being asked in the first step.

As illustrated in Fig. 1, an approach which relies on explicit PGM typically involves four major steps. The first step is defining the problem: deciding what question is asked or what problem needs to be solved, and deciding what quantity allows one to answer the question or to characterize certain aspect of the brain. This is essentially the hypothesis generation step of hypothesis-driven science, but we emphasize it as a distinct step because the hypothesis needs to be precise enough to translate into a model in subsequenct steps.

After the question is clearly defined, the second step is to make explicit assumptions of how the quantity of interest and experimental manipulations directly or indirectly contribute to the data to be analyzed, and to make assumptions of how variables of no interest (nuisance factors) may jointly impact the data. If there is domain knowledge of the properties of fMRI data that can help construct models of the data, it should be clearly stated at this step as well.

The third step is to translate these assumptions and domain knowledge into a computational model. Such computational models can often be described by probabilistic graphical models (PGM) [11] composed of nodes and directed edges between nodes. The nodes and edges together form a graph. When building PGMs, the data, experimental manipulation, quantity of interest and nuisance factors that are considered in the assumptions of the previous step all become variables (either known or unknown) and are each represented by a node in the graph. The hypothesized relations between variables in the models are expressed as conditional probability of one variable given one or more other variables, and are represented by directed edges. Each edge is directed from one variable to another variable that is conditioned on it (i.e., the distribution of the variable at the head of an edge (arrow) depends on the variable at the tail of the edge). The domain knowledge is either captured by the prior distribution of certain variables in the graph, or in the form of the conditional dependencies. The probabilistic nature of such models makes them a natural choice for capturing the noise properties in the system and the potential uncertainty in the estimates of parameters from the researchers’ perspective.

Once the PGM is built, the fourth step is to deploy computational techniques to estimate the unknown variables of interest in the model. This step essentially inverts the model by inferring variables of interest at the source of the directed edges in the graphical model. In some cases, inferring these variables serves to answer the original question by providing characterization of some aspect of brain activity. In other cases, when the scientific question is to test competing hypotheses, the competing hypotheses should be translated into PGMs that differ in either the range of values of some key variables or in the structures of the models. The selection of the winning model can be either based on classical statistical tests of the inferred values of the key variables (when each value is inferred independently), or based on the likelihood that each model can give rise to the data (model evidence), marginalizing unknown variables [21, 22]. To approximate posterior distributions of latent variables (variables that are directly or indirectly causal to the nodes representing observable data) in the probabilistic graphical models given the observed data, techniques such as Markov Chain Monte Carlo (MCMC) [23, 24] or variational Bayes [25] are often employed [26]. In certain cases, when the posterior distribution of these latent variables can be analytically derived, exact inference of the posterior distribution or the maximum a posteriori values of the variables can often be achieved. A full discussion of the inference methods is out of the scope of this paper. Interested readers may refer to tutorials such as Chapter 8 of [27] or part II of [11].

Because a PGM is explicitly built, it is easy to evaluate whether the inference procedure can reliably recover the variables of interest in the model, by simulating data according to the model and comparing the recovered values of those variables with the values used in the simulation. In contrast, traditional approaches without building an explicit model of the data generating process lack the ability to simulate data in accordance with its (implicit) assumptions. Without simulating data, it becomes impossible to verify that an analysis can yield correct results, because researchers are only left with real neural data of which the generative process and the ground truth of the variables of interest are not known. Thus, there is no guarantee that the quantity extracted by analysis methods without explicit assumptions of data generating process bears direct relation to what the researchers are interested in.

In addition to transparency and verifiability, PGMs offer the flexibility to combine the advantage of various pieces of domain knowledge of the brain (for example, brain activation patterns tend to be spatially smooth). This is because domain knowledge can be translated into a prior distribution of certain form over some latent variables in the PGM. With the PGM as a backbone, different prior distributions may act as add-on parts that can be plugged in at different places of the model, depending on what domain knowledge is proper for the purpose of analysis. For example, in 2.2, we show that the smoothness assumption of the brain activity and the similarity of brain networks across people are incorporated as the 3D Gaussian shape of the spatial basis for brain patterns and the Gaussian distribution of node location across subjects, respectively. In 2.3, we show that two types of prior knowledge about fMRI decoding weights, smoothness and sparsity, can also be incorporated together by assuming a Gaussian process prior on the joint distribution of the fMRI decoding weights of all voxels.

In the following, we select example analysis methods developed in different research domains to illustrate how the PGM approach to neural imaging data can be applied to discovering shared neural dynamics across participants doing the same task, modeling the functional connectivity among brain regions, improving the performance of decoding mental contents and obtaining more biologically informed decoding weights, reducing the bias in estimating similarity among activation patterns, and providing more comprehensive model of the noise in fMRI data. These methods together illustrate how the PGM [11] can accommodate domain knowledge and known properties of the data and facilitate aggregating information over larger datasets. These features allow us to mitigate the limitations in fMRI data: high dimensionality (many voxels), low sample size in single participant, heterogeneity across participants and high noise. Although introducing a PGM is not the only way to overcome these limitations, it is one of the simplest ways to achieve transparency, verifiability and interpretability, due to explicit modeling.

Because the focus is on illustrating the principle of PGMs, this paper can by no means provide a thorough review of all PGM-based methods of fMRI analysis. For example, the use of dynamic causal modeling [28, 29] to infer the interactive relations among brain regions, the construction of encoding models [30–33] to understand the features encoded by a brain region or to reconstruct perceived sensory inputs, and the development of a probabilistic event segmentation model [34] to discover distinctive and sustained brain states, are all good illustrations of the four-step procedure above. Readers are encouraged to also refer to several other reviews (e.g., [35, 36]) on Bayesian approaches to fMRI for a more comprehensive understanding of other existing PGM tools that share the advantages illustrated here.

While we advocate for explicit construction of probabilistic models in fMRI analysis, PGM-based methods do still have limitations. For example, performing efficient inference on PGMs can still scale poorly in both computation and memory, limiting their use on large-scale data without speciallytuned algorithms and approximations. Furthermore, deriving the inference algorithm for any specific model has historically required extensive knowledge in computer science or statistics, and it is not always easy to establish how robust any specific model is to mismatches between the assumptions made in the models and the true data properties. But the overall outlook is encouraging: algorithmic improvements have enabled scaling up models previously considered impossible (e.g. [37]), general tools for probabilistic inference have blurred the lines between practitioner and methods developer (e.g. [38–40]), and advances in these aspects of PGM are an area of active research that will continue within and outside the neuroscientific domain.

2. Examples of PGM-based analysis methods for fMRI data

2.1. Discovering latent neural dynamics for naturalistic task

Defining the problem: aggregating multi-subject fMRI data

fMRI datasets with naturalistic stimuli, such as movies or audiobooks, usually have limited number of samples per subject. In general, fMRI datasets not only have a large number of voxels, but also tend to have a small number of time points due to the limitation of samples per experiment session as a result of the slowness of the haemodynamic response and limited sample rate of the scanner. In fMRI datasets with naturalistic stimuli, it is also infeasible to collect many samples from a single subject when the experiments require the natural stimulus to be fresh to the subjects, so each subject could only be exposed to the same stimulus once. Therefore, to improve analysis sensitivity, we need to aggregate data from multiple subjects with the same stimulus effectively. The idea is similar to repeated-measures designs in neuroscience where the same variable is measured multiple times, but here the repetition is over different subjects. In our fMRI analysis application, we want to find what is common across subjects. The challenge is that the anatomical and functional structures between subjects are not aligned [41]. For example, when listening to the same music, a musician and a person without any music training will probably have different responses. Some early attempts applied pipelines such as averaging the fMRI data from all subjects after anatomical alignment, which assumes voxels of different brains have one-to-one correspondence [41, 42]. In contrast, the Shared Response Model (SRM) [43] is a Bayesian factor analysis model that finds the shared latent neural dynamics across subjects in a multi-subject fMRI dataset after anatomical alignment, without assuming one-to-one voxel correspondence.

Making assumptions: temporally-aligned stimulus

SRM assumes that the stimulus in a naturalistic task dataset is temporally-aligned. That is, all the subjects receive the same stimulus at the same time point in the task. Therefore, we assume that all the subjects share the same low-dimensional latent representation within a dataset, called “shared response.” On the other hand, to account for the differences between subjects, SRM assumes that each subject has a subject-specific spatial basis for generating the observed fMRI data from the shared response.

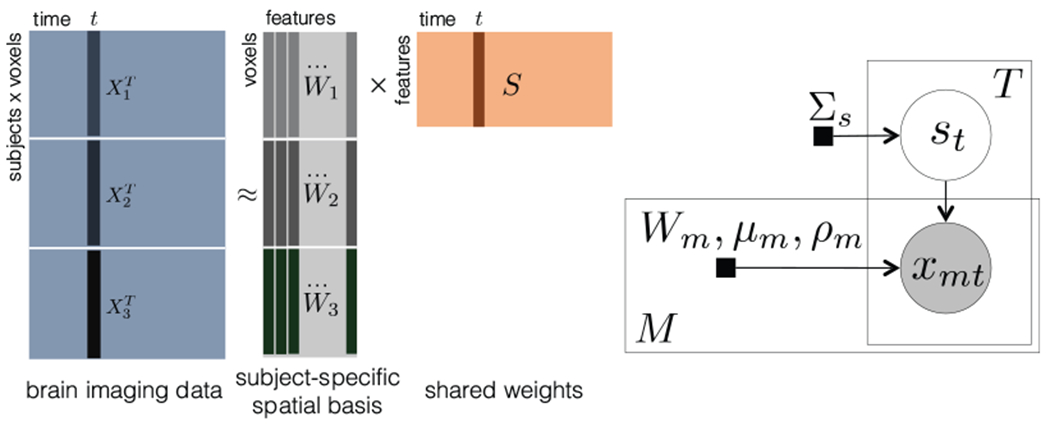

Translating assumptions to a graphical model: shared response as a latent variable

To translate the assumptions above into a computational model, let us look at the deterministic SRM first and then the probabilistic version. The deterministic SRM factorizes the transpose of each subject’s brain image data into a subject-specific spatial basis Wm and the shared response S with the orthogonal constraint (Fig. 2), where is the brain image data of subject m, is the subject-specific spatial basis of subject m, is the shared response across subjects, Vm is the number of voxels of subject m, T is the number of time points, and K is the number of features (the dimensionality of the shared response). K is a tunable hyper-parameter which is usually much smaller than T. More formally, deterministic SRM minimizes the Frob’ aus norm of reconstruction error

| (1) |

under the constraint . This simple model is then extended to a probabilistic setting, as shown in Fig. 2. Here denotes the observed brain image data of subject m at time t, denotes a shared latent random vector with

| (2) |

The distribution of xmt conditioned on st is then

| (3) |

where the subject-specific average μm accounts for non-zero mean and is the subject dependent isotropic noise covariance (for non-isotropic noise covariance in SRM, see 2.5). In the probabilistic version, the orthogonal constraint still holds. A constr ained expectation-maximization (EM) algorithm is used to solve this model.

Figure 2:

Left: Illustration of deterministic SRM for three subjects. Right: Graphical model for SRM with M subjects and T time points. (adapted from [43]) Brain image data (Vm voxels) is observed from subject m at time t, t = 1 : T, m = 1 : M. Each observation xmt is a linear combination of subject-specific orthogonal basis (columns of Wm) using the weights specified by st. The two plates are repeated T and M times, respectively. Shaded nodes: observations, unshaded nodes: latent variables, and black squares: parameters.

Applications: identified shared responses and extensions

The SRM identifies the shared and individual responses in a multi-subject fMRI dataset with naturalistic tasks. The explicit structure of SRM makes the fMRI data it is applied to more interpretable: the extracted shared responses allow us to aggregate information from multiple subjects, and the individual responses could be used to identify what is unique for each subject. SRM shows improved performance in various tasks, such as image-viewing fMRI data classification, using shared and individual responses, as described in [43], and movie scene classification [44].

Compared with hyperalignment (HA) [45], SRM also has a built-in dimensionality reduction mechanism with a tunable number of features, where HA is an earlier multi-subject alignment algorithm with the objective to minimize

| (4) |

under the constraint , . Note that Wm is a square matrix here because HA aims to rotate each subject’s Xm to match a global template S. More importantly, if Wm in HA is set to as in SRM, then it sometimes learns uninformative S. As illustrated in [43], when performing an image stimulus classification experiment, HA with shows much lower testing accuracy than SRM.

Furthermore, SRM already has several extensions which make it more useful. For example, searchlight SRM [46] combines SRM with searchlight analysis, which enables the localization of shared responses. Multi-dataset multi-subject analysis (MDMS) [47] extends SRM to the multi-dataset setting where the model can aggregate information across subjects and datasets with different stimuli. Semi-supervised SRM [48] combines SRM with an additional multinomial logistic regression objective, such that the model can leverage partially labeled data. Matrix-normal SRM [49], discussed below, makes different choices in modeling the residuals and the constraints on Wm. All of these recent development of SRM illustrate the expandability of PGM-based method and the flexibility of adapting one PGM to different experimental settings and research purposes. In all these developments, the effectiveness of inverting a PGM can be verified by simulation, which is difficult for algorithms that do not explicitly build PGMs.

2.2. Discovering full-brain functional connectivity from fMRI

Defining the problem: discovering full-brain functional connectivity

Recent research suggests that the functional connectivity (networks) in human brain, commonly represented by the spatial covariance structure of fMRI data, can change during different cognitive states [50]. To estimate functional connectivity during a particular cognitive state (or an experimental condition) from fMRI data, one approach is to compute the correlation between the time series of pairs of voxels [51]. Because of the computational time and memory demanded by this voxel-based approach, most researchers focus their analysis on pre-selected regions of interest (ROIs). But this requires anatomically predefined ROIs which may not correctly capture the voxel-wise correlation. Voxel-based methods such as independent component analysis [52, 53] generate statistically independent spatial maps and they are useful for applications that assume statistical independence between different neural sources. But each component discovered in this approach, or their combination, cannot be easily used to analyze spatially overlapping but functionally distinct activity patterns. Topographic Factor Analysis (TFA) [54] and Hierarchical Topographic Factor Analysis (HTFA) are Bayesian factor analysis models that can be used to efficiently analyze full-brain functional connectivity in large multi-subject neuroimaging datasets [55]. Further, one of properties of HTFA is to generate spatially compact factors that partially overlap, and this property can help analyze and detangle the contributions of activity patterns that are functionally distant but spatially overlapping.

Making assumptions: spatial function-based latent factors

Both TFA [54] and HTFA [55] cast each subject’s brain images as a linear combination of latent factors, where each latent factor is modeled as a parameterizable spatial function. Each latent factor can be interpreted as a node in a simplified representation of the brain’s network. A subject’s matrix of the changing weights on the nodes over time may be viewed as a low-dimensional embedding (or representation) of the original brain data. The pairwise correlations between each factor’s weights over time further reflect the signs and strengths of the node-to-node connections (i.e. the functional connectivity). Both TFA and HTFA approximate each subject’s functional connectivity by firstly representing each brain image in terms of the activities of a set of localized network nodes, and then computing the covariance of the activity. Furthermore, HTFA [55] is a multi-subject extension of TFA [54], and attempts to discover the network nodes that are common across a group of subjects. HTFA estimates a global template as well as each individual’s subject-specific template. The global template describes where each common network node is placed, how wide it is and how active it tends to be. Each subject-specific template is a particular instantiation of the common network nodes and the subject’s node activities.

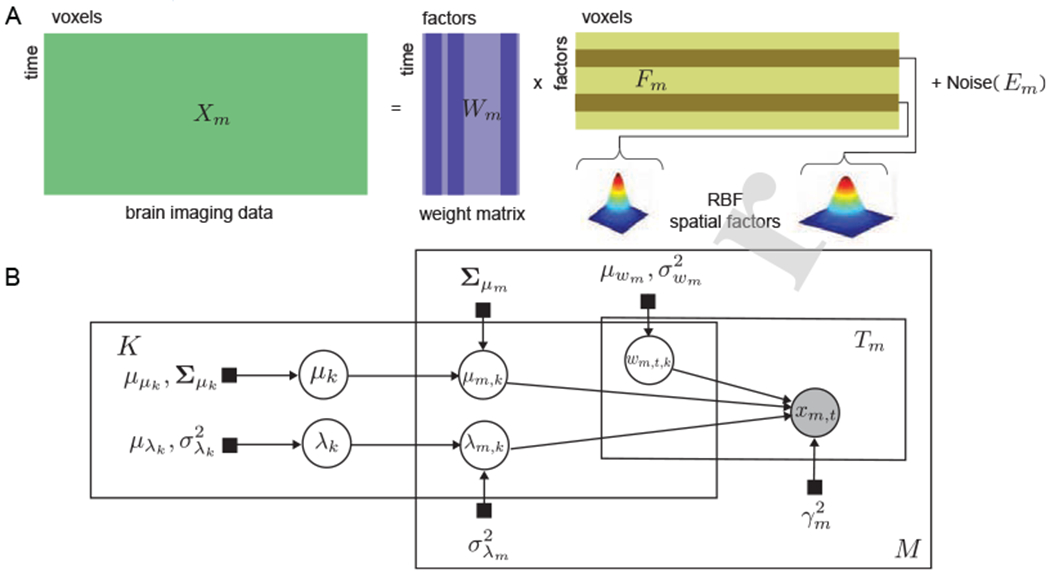

Translating assumptions to a graphical model: global and subject specific template

HTFA is formulated as a probabilistic latent variable model. Let represent subject m’s data as a matrix with Tm fMRI samples of the activity of Vm voxels, each sample being vectorized as one row in Xm. Then, each subject is approximated with a factor analysis model

| (5) |

where are the weights of , the latent factors. Em is the noise term. Each latent factor (row of Fm) is a radial basis function (RBF) with center at μm,k and width λm,k

| (6) |

in positions for all the voxels ir the three-dimensional voxel space of the brain. HTFA defines the local factors in Fm as perturbations of the factors of a global template in F. Therefore, the factor centers μm,k for all subjects are obtained from a multivariate normal distribution with mean μk and covariance Σμm. The mean μk represents the center of the global kth factor, while Σμm determines the distribution of the possible distance between the global and the Gcal center of the factor. Similarly, the widths λm,k for all subjects are drawn from a normal distribution with mean λk, the width of the global kth factor, and variance . The model defines multivariate Gaussian prior for the global parameters μk and Gaussian prior for λk, respectively. In addition, the columns of the weight matrices Wm are modeled with a distribution and the elements in the noise term Em are assumed to be independent with a distribution (for one approach to non-independent noise, see 2.5). The associated graphical model is shown in Fig. 3.

Figure 3:

A)(H)TFA factor model. fMRI data Xm is decomposed into weight maxtrix Wm and factor matrix Fm. Each factor is a RBF function. B) Graphical model for HTFA. Brain image data (Vm voxels) is observed from subject m at time t, t = 1 : Tm, m = 1 : M. Each observation xm,t is a linear combination of K subject-specific latent factors, using the weights specified by wm,t,k. Each latent factor (row of Fm) is a spatial function of and μm,k). The three plates are repeated K, Tm and M times, respectively. Shaded nodes: observations, unshaded nodes: latent variables, and black squares: parameters (adapted from [55]).

Solving the model

The maximum a posteriori (MAP) probability estimation procedure is used to solve the HTFA model. The method consists of a global and local step that iteratively update the parameters [56]. The global step updates the param Rers of the K distributions in the global template. The local step updates for each subject m the weight matrices Wm, the local centers μm,k and the widths λm,k of each latent factor. To update the parameters of the factors in Fm, the local step solves the following problem, where φm is a subsampling coefficient. Optimized implementations of TFA and HTFA [56] can be found in BrainlAK [57].

| (7) |

Eq. (7) consists of the reconstruction error, the Mahalanobis distance between global and local centers, and the Euclidean distance between global and local widths. Due to its non-linearity, the latent factors of each subject are computed using a non-linear least squares solver [57], and implemented with a trust-region reflective method [58]. The weight matrix is solved with a closed-form solution of the form of ridge regression. The hyper-parameters of the global template are updated given the local estimates and under the assumption that the posterior has a conjugate prior with multivariate normal and normal distribution for centers and width, respectively.

Advantages

Because the number of network nodes is typically substantially smaller than the number of fMRI voxels, one obvious advantage of HTFA is that it can be orders of magnitude more efficient than traditional voxel-based functional connectivity approaches. Compared to other dimensionality reduction methods, HTFA provides additional advantages: (a) it provides estimation of both global and subject-specific templates, and builds connections between them; (b) modeling the latent factors as spatially smooth allows them to be overlapping rather than distinct, as would be the case of functional connectivity based on anatomically defined brain region segmentation; (c) it provides a natural means of determining how many network nodes (latent factors) should be used for a given dataset (further details about determining K can be acquired from [55]); and (d) because HTFA decomposes brain images into sums of spatial functions, it supports seamless mapping between images of different resolutions and potentially different imaging modalities.

Applications

HTFA can be applied to different tasks with multi-subject fMRI datasets, for example, inferring dynamic full-brain inter-subject functional connectivity when participants are listening to a story or watching a television show [55]. The functional connectivity of each subject can be estimated by the correlation between the column of Wm. Since the global template of HTFA makes sure the columns of the W1…M correspond to the same network nodes across the different subjects, the ISFC can be computed by the correlation between the columns of W1…M across subjects. A recent study showed both HTFA-derived activities and HTFA-derived ISFC can be used to reliably decode which moments in the story or show the participants were experiencing. A decoder with the combination of these two types of patterns outperformed decoders with either activity or connectivity patterns alone [55].

2.3. Obtaining biologically informed decoding weights on fMRI patterns

Defining the problem: fMRI decoding with sparse weights

A primary research problem neuroscientists have been studying with fMRI is brain decoding or inverse inference [59–61]. The goal of a decoding task is to understand how brain activity represents task-related variables, e.g. the orientation of a grating [62] or the category of an object [63]. Researchers often use linear classification and regression methods to identify the brain regions or voxels that are most closely related to these task-related variables by inspecting the decoding weights.

A piece of domain knowledge in fMRI decoding is that different regions of the brain are specialized for different functions, implying that only few small regions of the brain are specifically activated during an individual task. In the linear regression methods that are common in the field, this assumption is equivalent to assuming that the weights mapping fMRI to task-related variables are mostly zeros with a few non-zero values, which is referred to as “sparsity”. This model assumption is also reasonable from a statistical standpoint, since the task variable is linked to fMRI data with usually tens of thousands of voxels, but the number of fMRI volumes with valid task labels is far smaller, e.g. a few hundred. We need to estimate tens of thousands of coefficients to map a full brain pattern down to a single task variable given only a few hundred observations. This is referred to as a high-dimensional and small-sample issue, where the linear regression model would fit seemingly predictive information from noise instead of the underlying brain signal, and thus would not generalize well to new data. To address this issue, one can reduce the number of coefficients. With the sparsity assumption, we effectively regularize the linear decoding model by restricting the weight parameter space to a much smaller one, thus mitigating the issue.

Making assumptions: region sparsity

Sparse decoding has already been exploited in the previous literature [64–66]. However, the non-zero coefficients are not randomly distributed throughout the brain, but tend to arise in clusters, and are therefore not independent a priori. Sets of voxels allowing to discriminate between different brain states are expected to form small localized and connected areas. If one voxel encodes information related to the task, its neighboring voxels should carry similar information, given that contiguous brain regions of shared functions extend over multiple adjacent voxels. This type of sparsity is referred to as “region sparsity” [67]. By considering such region sparsity, one can impose a structured sparsity regularization over the decoding weights which further constrains the parameter space to search and thus eases the decoding weights optimization task. Wu et al. [67] developed a Bayesian framework that incorporated such region sparsity into brain decoding for fMRI analysis and showed superior decoding performance and more biologically informed decoding weights for three brain imaging datasets.

Translating assumptions to a graphical model: building a region sparsity prior over the brain weights

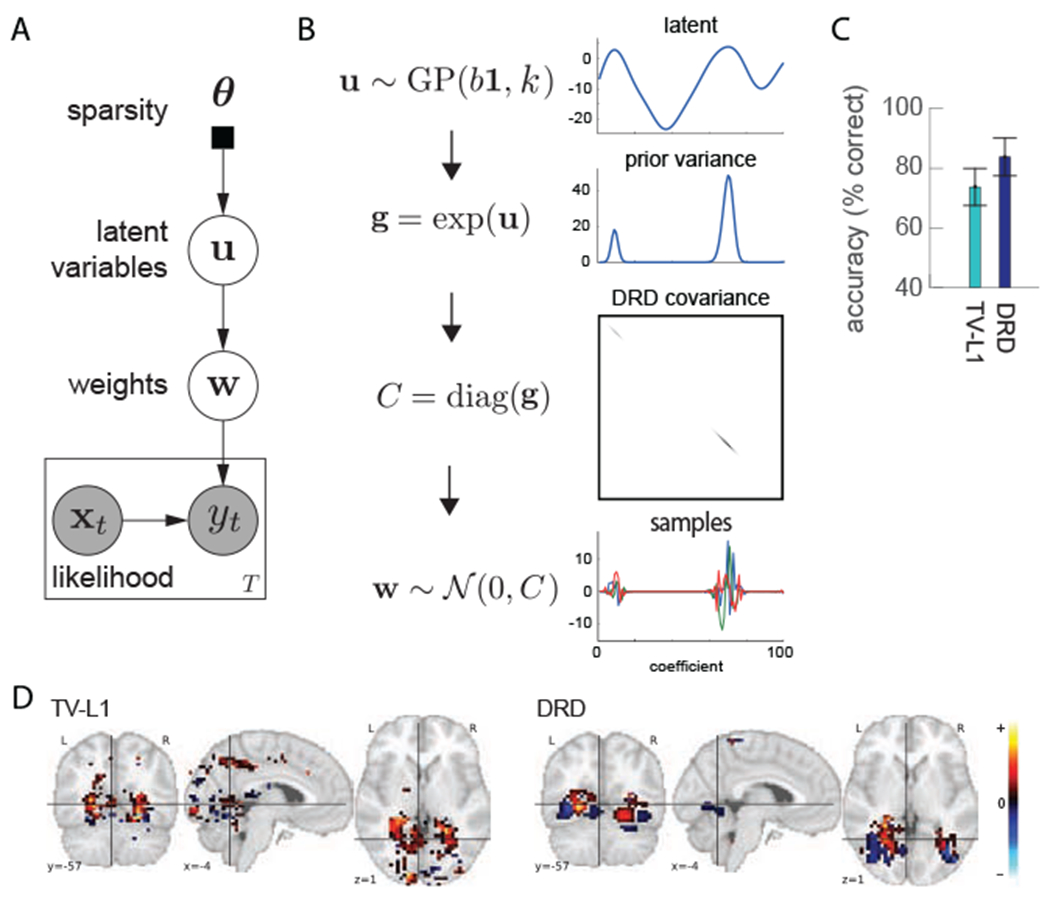

The model proposed in [67] is referred to as “Dependent Relevance Determination” (DRD). It builds a Bayesian hierarchical model that imposes a sparsity prior over the decoding weights. Unlike previous work with sparsity assumptions, DRD also assumes that nearby sparse voxel-activations should be correlated to each other based on their spatial locations.

Formally, the fMRI decoding problem can be formulated in a linear regression setting: at time t, consider a scalar response linked to an input vector via the linear model:

| (8) |

with observation noise , where T is the number of time points and V is the number of voxels. The regression (linear weight) vector is the quantity of interest. We can denote the fMRI data matrix by , where each row of X is the tth input vector xtT and T ≪ V, and the observation vector by . Since the noise is Gaussian, it can be written as

| (9) |

DRD imposes a zero-mean multivariate normal prior on w:

| (10) |

where the prior covariance matrix C(θ) is a function of hyperparameters θ. One can specify C(θ) based on prior knowledge on the regression vector, e.g. sparsity [68–70], smoothness [71, 72], or both [73]. Ridge regression assumes C(θ) = θ−1I where θ is a scalar for precision and I is the identity matrix. Automatic relevance determination (ARD) [74] uses a diagonal prior covariance matrix with a distinct hyperparameter θi for each element of the diagonal, thus . DRD an extension of ARD by imposing dependency between θi.

Given the general Bayesian linear regression setting, DRD aims to construct a covariance C(θ) which generates the region-sparse w. This is achieved by introducing a latent variable . u is from a Gaussian process (GP) prior i.e

| (11) |

A Gaussian process [75] is a stochastic process whose realizations are draws from a multivariate normal distribution, but whose mean b and covariance k can be functions of another input (e.g. spatial locations). For example, by defining a Gaussian process with covariance (kernel) that is a function of spatial distances, we can constrain the samples drawn from the Gaussian process distribution to exhibit spatial correlation based on the kernel. Most commonly in GPs, the squared exponential kernel is used, which constrains the draws from the multivariate normal to be smooth over space, i.e. where χ and χ′ are the spatial location of any two voxel. Functions sampled from such a GP are smooth functions. The smoothness is determined by the length scale and the magnitude of the functions is determined by . These three hyperparameters in the DRD prior are jointly denoted by θ = {b, ρ, l}.

By imposing a GP prior over the latent u, DRD effectively captures dependencies in u. Given such latent, Wu et al. formulate the covariance of w with

| (12) |

The exponential function here ensures the non-negativity of values on the diagonal of C, which makes it a valid covariance. When the mean b is very negative, exp(u) has many close-to-zero values that resuH in soft-sparsity (since their prior mean is zero and the variance is nearly zero as well). Note that the spatial smoothness of u induces dependencies between the variances of nearby voxels, that is, the prior variance changes slowly between neighboring coefficients. If the ith coefficient of u has a large prior variance, then probably the coefficients of its adjacent voxels are large as well.

Fig. 4A and B show the probabilistic graphical model of DRD and the process to generate region-sparse samples for w.

Figure 4:

A) Probabilistic graphical model for DRD. The rectangular box indicates a graph for each time point. Each fMRI volume xt at time t is mapped to the experimental response yt together with a global variable w (eq. 8). The decoding weight vector w is conditioned on a latent variable u (eq. 10 and 12). The latent variable u is generated from some hyperparameters in θ (eq. 11). B) The generating process for region-sparse decoding weight w. C) Accuracy comparison between TV-L1 and DRD. The accuracy value is averaged over all pairs of objects. D) Decoding weight map for the house vs bottle pair using TV-L1 (left) and DRD (right). Yellow indicates very positive values and light blue indicates very negative values. Black means small values. Adapted from [67].

Solving the model

In the paragraphs above, we show how to build a generative model for DRD to generate region-sparse decoding weights. When using DRD, one can apply it to fMRI decoding problems where we have the imaging data X and prediction targets y, and we aim to infer the decoding weight vector w. To solve this problem, we need to reverse the generating process using some inference methods. Exact Bayesian inference is infeasible with a DRD prior. However, approximate inference can be carried out efficiently using both Laplace approximation and Markov Chain Monte Carlo (MCMC) sampling. Further details regarding inference can be acquired from [67].

Application: classification on a visual recognition task

The visual recognition dataset [76] is from a study on object representation in human ventral temporal cortex. In the object recognition experiment, 6 subjects were asked to recognize 8 different types of objects (bottles, houses, cats, scissors, chairs, faces, shoes and scrambled control images). Wu et al. [67] examined this dataset to learn the weights mapping the fMRI brain activity to object categories for each subject. They cast the multi-category classification problems into multiple binary classification problems for each pair of categories. Wu et al. employed the same linear regression model as in eq. 8 for training the model. When making predictions, they took the sign of the output y as the discrete binary labels (+1/−1).

They showed that DRD achieved the highest accuracies for most of the binary classifications compared with other state-of-art sparse decoding methods [65, 66]. Fig. 4C shows a comparison of accuracies between DRD and a baseline model, total variation L1 (TV-L1) [77]. More specifically, DRD is able to find more biologically informed decoding weight maps for many pairs compared with TV-L1. By saying biologically informed, we mean that only small regions of voxels are correlated to a specific task and nearby voxels are more likely to be activated together compared with LASSO. Fig. 4D presents the brain map estimation for the house-vs-bottle pair for TV-L1 and DRD. DRD weights have significant positive regions in the parahippocampal place area (PPA) (responding more strongly to scenes depicting places) [78] and clustered negative weights in the lateral occipital comr Ox (LOC) (responding to objects in human occipito-temporal cortex) [79]. By comparison, TV-L1 weights in LOC are not very clustered and don’t show negative activations.

We describe the DRD model here in a generative way. The brain decoding weights are generated from a DRD prior, but the application is a discriminative model, i.e. mapping fMRI data to experimental variables. Because the DRD prior was proposed to learn region-sparse brain weights regardless of whether a model is discriminative or generative, it can also be inserted to generative models such as the factor analysis models in SRM in essentially the same way.

2.4. Inferring representational similarity between neural patterns

Defining the problem: neural pattern similarity

As sensory inputs get processed in the brain, each neural population of one brain region performs nonlinear computation of the input from neurons of other regions. The representation of the same external object thus changes from one region to another. One fundamental question in neuroscience is how these representations are transformed, in service for deciding the right actions to take [80, 81]. One way to describe representation is in terms of what stimuli are encoded closer and what are encoded farther apart. Beyond studying representation of external stimuli, the same question can also be asked about different cognitive states: which states are represented closer in a brain region?

Early behavioral studies investigated representations of objects by asking people to judge how similar a pair of stimuli are to each other [82]. The structure of the similarity matrix, composed of the judged degrees of similarity between all pairs of tested stimuli, reflects the geometry of the internal representational space being used to encode stimuli. Such approach is limited to representations accessible for conscious report [83]. To overcome this and to compare computational models against multiple types of neural data, Kriegeskorte et al. [84] proposed Representational Similarity Analysis (RSA), which utilizes neural recordings to understand the structure of representations. This analysis assumes that the similarity between the neural patterns elicited by each pair of stimuli in a brain region reflects the similarity between the representations of these stimuli in that region. Because it does not rely on subjective judgment, RSA can be applied to studying representation in any stage of sensory processing [85, 86]. Measuring similarity between neural activity patterns evoked by sensory stimuli or cognitive states is its central goal.

Making assumptions: relations of representational structure, neural patterns and fMRI data

In order to infer the similarity between neural activity patterns, one needs to first make assumptions about the relations between the neural patterns and the recorded neural data, and between the similarity structure and the patterns.

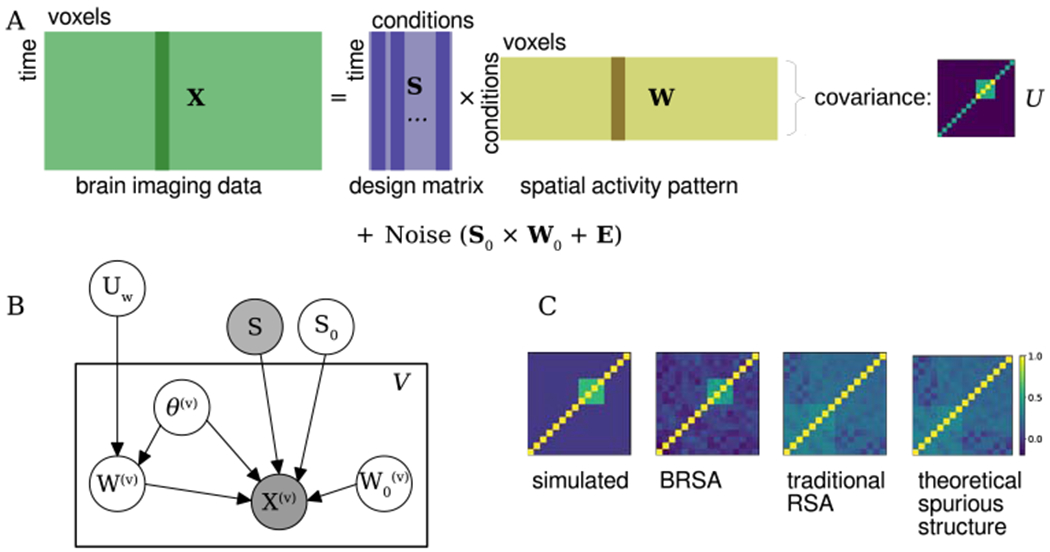

The neural activity of a region1 during a task can be considered as being generated by the sum of various spatial patterns, each being modulated by different time courses. In this sense, the basic assumption of fMRI data underlying RSA is also a factor model, as in SRM and (H)TFA (Fig. 5A). The difference here is that at least a subset of the modulation time courses are explicitly tied to when and how much the brain is engaged in each task condition, which are pre-defined by the researchers. The spatial pattern being modulated by each time course is the relative degree by which different voxels are activated by the task condition. In addition to the activity explained by the temporal modulation of these patterns, the data also contain unexplained fluctuation with both spatial and temporal correlation. Therefore, the similarity matrix one seeks to estimate is only indirectly related to the noisy fMRI data through unknown neural activity patterns and their modulation time courses predicted by the task.

Figure 5:

A) BRSA assumes a similar factor model as SRM and (H)TFA. To capture both spatial and temporal correlation in residual noise, the noise is further modeled by a factor decomposition of spatially correlated noise plus spatially independent noise. Additionally, each column of the weight matrix W (activation patterns) are assumed to share the same covariance structure, which underlies the similarity between patterns. B) Probabilistic graphical model for BRSA. The rectangular plate is repeated for each voxel. Variables within the plate are voxel-specific and those outside the plate are shared by all voxels. UW is the target to estimate but is indirectly related to X through unknown patterns W. To infer UW, other unknown variables are either marginalized or (in the case of S0) determined through an iterative fitting procedure (see [87]) C) The simulated similarity structure, the similarity structures recovered by BRSA, by correlation of point estimates of W (data-mining approach) and the theoretical spurious structure expected to be introduced by the design matrix S when estimating . B) and C) are adapted from [87]

There are many ways to define similarity. One way is based on the cosine of the angle between the vectors corresponding to activity patterns in the space spanned by the voxel activation levels, which is adopted by the algorithm of Bayesian RSA (BRSA) [87, 88]. Other common ways include correlation between demeaned patterns, and Euclidean distance or Mahalanobis distance between patterns (as measures of dissimilarity) [84, 89–91]. Here we focus on cosine of angle between patterns, which can be alternatively considered as correlation without demeaning patterns.

Translating assumptions to a graphical model: two-stage model of fMRI data with representational structure as latent variable

Since the time course of a task is known, the modulation time course (so-called design matrix) can be constructed based on the timing of the task conditions and the shape of the smooth delayed response (the haemodynamic response function, HRF) in fMRI signals following neuronal activity. We denote the design matrix as , where T is the total time points and K is the number of task conditions in an experiment. Then, the factor model of fMRI data can be expressed as

| (13) |

Here, is the time by voxel matrix of the fMRI time series in a region of interest, where V is the total number of voxels in that region. is the unknown activation patterns associated with all the task conditions. S0W0 captures spatially correlated fluctuation unrelated to the task. E denotes the residual spatially independent noise, but it can have temporal autocorrelation, which may be modeled with an auto-regressive (AR) process such as AR(1) (for an alternate approach to the residual noise in RSA, see 2.5). Generally, researchers do not have full knowledge of S0 or W0, but may have regressors (such as the head motion time course) which accounts for some variance in S0. Assuming that E is random variable drawn from the noise distribution, Eq. 13 implicitly defines the conditional probability of the data in each voxel given S, W, S0, W0 and the parameters θ of the AR process, i.e. for voxel v.

When cosine angle αi,j is used as a measure of similarity between patterns wi and wj (row vectors of W), . If the activation prohle of each voxel w(v) is a sample from a multivariate distribution, then is the covariance between the dimensions i and j of this distribution [92]. By estimating the covariance structure UW of W, one can obtain the cosine angle between patterns as a similarity measure. Therefore, the relation between unknown neural patterns and their similarity is modeled by assuming that each column of W is a sample drawn from a multivariate distribution with its covariance matrix being UW:

| (14) |

This specifies the form of conditional probability of w(v) given UW: p(w(v)|UW). The two-stage generative model from covariance structure through activity patterns to fMRI data is depicted in Fig. 5B.

Solving the model: inferring covariance structure of unknown neural patterns directly from data

After the probabilistic graphical model is built and the conditional probability distribution corresponding to each edge in the graphical model is specified, one can derive the likelihood p(X|UW). This can be achieved by marginalizing the intermediate variables such as W and other unknown quantities that X’s distribution is conditioned on (S0 is determined through an iterative fitting procedure as in [87]). Marginalization in probability refers to removing a variable in the expression of probability density by integrating the joint or conditional distribution over the variable, an important procedure in applying Bayesian models that allows the analyst to remain agnostic about the value of ‘nuisance’ variables unimportant to the main analysis. For example, for any two variables A and B, p(A) = ∫P(A, B)dB = ∫P(A|B)p(B)dB. For any three variables A, B and C, p(A|C) = ∫p(A|B, C)p(B|C)dB. In our case, A, B and C can be replaced by X, W and UW: we are agnostic about the specific mapping of the design matrix to the measurements, we are only interested in its implied covariance. In practice, the integration over several unknown variables have closed-form solution due to the assumption of Gaussian distributions in both and p(w(v)|UW), which makes the computation simple. Other unknown variables can be marginalized by numerical approximation. After obtaining the formula of the marginal likelihood p(X|UW), maximizing its logarithm with respect to UW yields the maximum likelihood estimation of UW. Finally, the consine angles between W can be obtained as the correlation matrix corresponding to the covariance matrix .

Application: reducing spurious similarity structure

Maximizing likelihood p(X|UW) while marginalizing unknown intermediate variables and uninteresting variables is a principled approach to infer the latent variable UW based on PGM. An alternative non-PGM approach is to instead first calculate as estimates of the unknown patterns W from the data by regressing X against S, and then calculate the similarity among rows of . This approach, however, has been shown [87, 88, 93, 94] to introduce spurious similarity structure unrelated to the neural activity corresponding to the task of interest. The reason is that although the regression provides unbiased estimates of the neural patterns, the covariance of is not the same as the covariance of is contaminated by noise with specific covariance structure introduced by the regression procedure. The noise itself originates from the task-unrelated fluctuation in fMRI data. The regression procedure, at the same time of disentangling W from X, also “entangles” the noise into each row of in a way that depends on the correlational structure between different columns of S. The covariance structure of the noise in can dominate the estimated similarity structure when signal-to-noise ratio is low [87, 88] (Fig. 5C). BRSA takes into account both the property of noise and uncertainty of intermediate variables W, thus avoiding analyzing with structured noise.

Instead of directly inferring UW from X, one can alternatively assume that UW is composed of the sum of a few theoretically-motivated candidate covariance structures, and estimate the mixture coefficient of each component covariance structure. This method is called Pattern Component Modeling (PCM) [95, 96]. One can even impose a hyperprior on the the mixing coefficients, and use variational Bayesian technique to infer them [97]. The introduction of a hyperprior can incorporate additional prior assumptions or knowledge of the data. Although not directly aimed at reducing statistical bias, these methods are both developed based on clear PGMs. It is worth pointing out that in using these methods, in order to overcome the spurious similarity structure introduced by the design matrix S, one still needs to either directly model the data X as in BRSA, or to model while explicitly taking into account the structure of the non-independent noise it carries.

Even if one takes an approach without explicitly relying on a PGM, a correct understanding of the confounding effect of noise by analyzing a PGM is helpful for developing a better non-Bayesian algorithm. For example, one can still approximate the covariance or distance structure based on the noisy patterns estimated from separate runs of experiment [89, 93]. This is because the noises in the patterns estimated separately from different fMRI runs come from independent sources and have zero correlation. Therefore, the covariance between estimated patterns from separate runs is an unbiased estimation of the covariance of the true unknown patterns. However, it is worth pointing out that the correlation derived from such cross-run covariance is still biased, because to calculate correlation we have to divide the covariance by the estimated standard deviations of each pattern across voxels, which is inflated by the existence of noise. To see these, one needs to understand how data X is generated from W (13) and how the noise in this data generating process impacts . Therefore, regardless of whether a researcher directly uses an analysis tool based on PGM, analyzing the data generating process and the interactions between the analysis procedures and noise is always important for realizing and avoiding any unintended consequence introduced by the chosen analysis methods.

2.5. Modeling structured residuals

Defining the problem: modeling spatiotemporal residuals in fMRI data

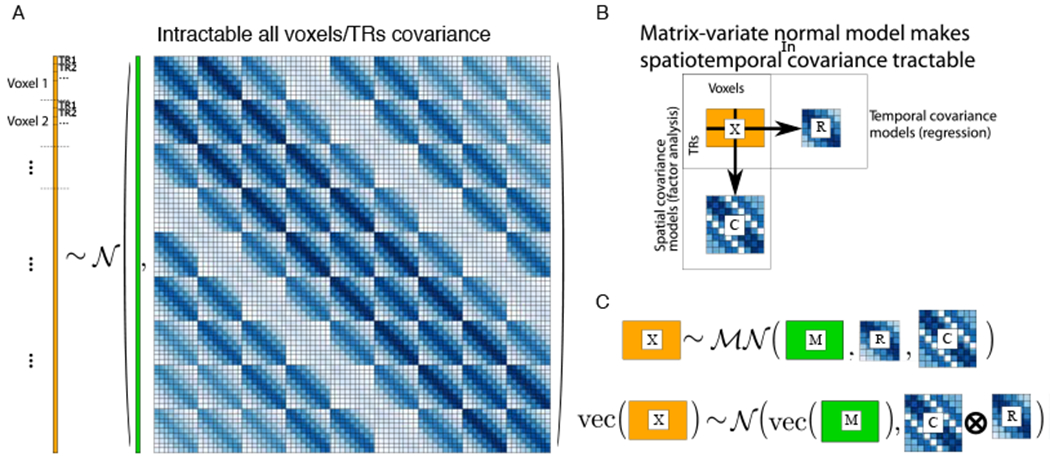

fMRI data has structure in both the spatial and temporal dimension, and this spatiotemporal consistency needs to be exploited (or at least, managed) in order to contend with this high-dimensional and noisy data. This spatiotemporal structure exists both in the neural components corresponding to the effects of interest, and in the residual components corresponding to everything else going on. In the context of supervised regression models for fMRI, practitioners tend to worry about temporal structure in both signal (by convolving the predictors with a synthetic haemodynamic response function) and residual (by performing generalized least squares, or GLS, estimation wherein the temporal structure of the residuals is modeled, [e.g. 98]). More recent factor-analytic unsupervised approaches likewise assume the signal of interest itself is spatially or temporally structured due to their low-rank structure, for example the case of TFA (above) modeling brain networks as a linear combination (in time) of spatially contiguous factors. Most of these methods leave handling the residual to preprocessing stages, but this is not the only possible choice – another is to model the spatial or temporal structure in the residuals explicity. One example of this is modeling the residual temporal autocorrelation per-voxel, as in the case of BRSA. Another, and the one we discuss here, is modeling spatiotemporable separable residuals.

Making assumptions: structured, separable residuals

As noted above, both the fMRI signal and residual are autocorrelated in both space and time; thus, modeling the residual structure in both dimensions is needed. This is not tractable in the general case, as it effectively means modeling the covariance between every voxel at every timepoint with every other voxel at every other timepoint. A simplifying assumption that permits modeling residuals in both space and time is that the spatial residuals of all time points have the same distribution, and the temporal residuals of all voxels likewise have the same distribution (for an illustration, see Fig. 6). This separable residuals assumption has been made in a GLS framework by Katanoda et al. [99] and factor-analytic framework by Shvartsman et al. [49]. A similar approach has been taken to modeling the entire dataset (rather than residuals only) in both neuroimaging [e.g. 100, 101] and elsewhere in the multitask learning community [e.g 102–106]. Once separability is assumed, theoretically motivated structure could be placed on the individual spatial and temporal residual covariances, for example autoregressive in time (as in BRSA, above) and smooth in space (as in DRD, above).

Figure 6: Matrix normal models simultaneously model spatial and temporal residuals.

[A]: a schematic view of a vectorized data matrix, where each voxel’s time series is vertically concatenated (in orange), and the covariance of every voxel at every timepoint with every other voxel at every other timepoint is modeled. Modeling all of these elements independently is intractable, and some structure needs to be imposed – in this case, kronecker-separable structure. [B]: the un-vectorized data matrix (orange rectangle), and its spatial and temporal covariances on the right and bottom. [C]: A matrix-normal distribution with the mean M and row/column covariances R, C is equivalent to the large structure in [A], but can be much more tractable to estimate.

Translating assumptions to a graphical model: matrix-normal

The informal claim of separability above is denoted by defining Σall to be equal to the kronecker product of a spatial and temporal residual covariance, Σall := Σt ⊗ Σv. The kronecker product is a generalization of the vector outer product to matrices, and precisely performs the weighted tiling illustrated in Fig. 6. Using this notation, we define the matrix-variate normal distribution, a distribution over matrices parameterized by a mean matrix and (separable) row and column covariances. We denote matrices drawn from this distribution as , with mean , row ccovariance and colmn covariance . It has the following log-likelihood:

| (15) |

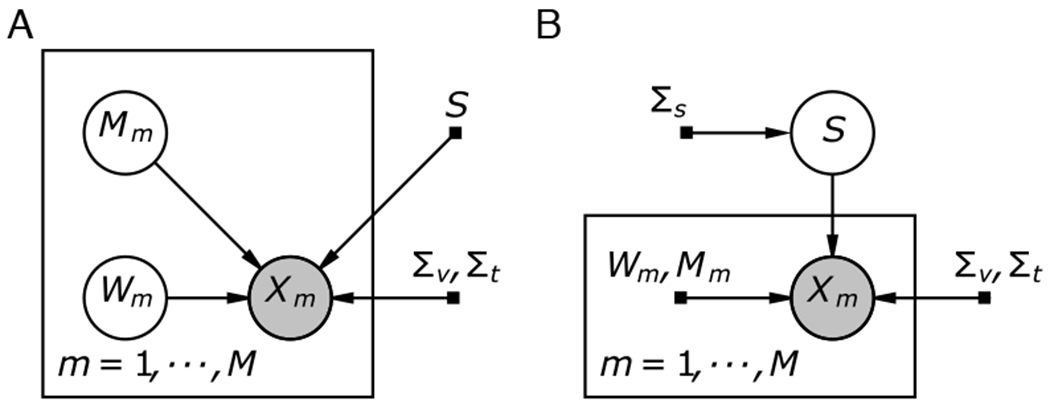

The above notation is equivalent to denoting , where ⊗ is the kronecker product and vec is the vectorization operator. If the column covariance C is the identity matrix (i.e. the columns are independent), the expression reduces to the log-likelihood of the multivariate normal distribution summed over the columns. We can use this notation to write, for example, a separable-residual model SRM model:

| (16) |

| (17) |

where Σv and Σt, are spatial and temporal residual covariances and the remaining par ameters are as defined above. In contrasting the diagram in Fig. 7 one can see the disappearance of the plate iterating over timepoints, since now temporal residuals are modeled. In this view, we can also see a similar model in which the prior on Wm is modeled instead:

| (18) |

| (19) |

In this view, which Shvartsman et al. labeled dual probabilistic SRM (DP-SRM) by analogy to dual probabilistic PCA [107], Wm can no longer be modeled as orthonormal but can now be integrated over with a gaussian prior, estimating substantially fewer parameters. Similar modeling of residual covariance can be performed on other factor models [49], including all of the generative models in this paper, or a generative variant of the structured sparsity (DRD) model, and others such as ISFC [108]. It is not obviously applicable to discriminative models, whose residuals are in the space of predictors and not the space of voxels.

Figure 7: Plate diagrams for matrix-normal shared response model.

In the matrix-normal notation one can see that there are two possible formulations for an SRM-type model: one which integrates over the shared timecourse (as SRM does), and one which integrates over the subject-specific weightings while removing the orthonormality assumption on Wm (this is termed ‘dual probabilistic SRM’ or DP-SRM by analogy to dual probabilistic PCA, which makes the same extension to PCA [107]). In both cases, the brain image data is observed from subject m, m = 1 : M. As in conventional SRM, each observation (now represented as the full data matrix) is a linear combination of subject-specific latent factors. In regular MN-SRM (A, left), the time-course S is treated as a latent variable that is integrated over and the mean Mm and weight vector Wm are treated as (hyper)parameters that need to be estimated. In DP-SRM (B, right), the weight vector and mean matrix are treated as latent variables and integrated over whereas the shared timecourse is treated as a (hyper)parameter to estimate. Note how in contrast to Fig. 2, there is no plate denoting independence between timepoints, since their covariance is now modeled. Shaded nodes: observations, unshaded nodes: latent variables, and black squares: parameters.

Solving the model

While simply estimating all parameters by gradient descent is theoretically possible, a more practical approach is to marginalize over nuisance parameters, and estimate only the parameters of interest. Marginalization in the multivariate normal setting with gaussian priors is well-known [109], but the separable covariance formulation introduces some new inference challenges: marginaliation yields a non-separable marginal likelihood, naive computation of which would require inverting a matrix of dimension vt × vt for v voxels and t time points, which is intractable for fMRI data. However, Rakitsch et al. [105] provided an efficient method for computing this likelihood by exploiting the compatibility between diagonalization and the kronecker product. If the spatial residual matrix itself needs to be separable (e.g. for efficiently modeling whole-brain spatial residuals by separating them in the x, y, and z dimensions), Shvartsman et al. [49] show that particular assumptions about prior covariances can likewise render the marginal separable (and thus tractable). Once the marginal likelihood can be computed efficiently, standard gradient-based techniques can be used for estimation. For even greater speed, Shvartsman et al. [49] derived an expectation-conditional-maximization algorithm for maximizing the marginal likelihood by coordinate ascent (though they only did so for matrix-normal SRM; matrix-normal RSA was estimated by gradient ascent).

Applications and benefits

Similarly to the other models in the paper, we are not advocating any specific spatiotemporal covariance model here, nor its specific application to any specific method. Rather, we highlight the explicit modeling approach and its ability to incorporate a class of structure assumptions into other models, as long as they are linear Gaussian regression or factor models (which includes many models in the literature). That said, specific empirical benefits of introducing a separable residual covariance to other models have been realized. In the case of the GLM for fMRI, Katanoda al. [99] validated the separable-residuals model on synthetic data, as well as on a finger-tapping experiment. There, they demonstrated that the separable model recovers larger activations more closely focused around the expected motor regions. Additionally, the separable model provided a higher goodness of fit to experimental data than models that included temporal residual structure only, or no residual structure at all. In the case of factor models, Shvartsman et al. [49] show that the separable model can be substantially faster to estimate than a model that includes voxel-specific temporal residuals (as in the case of BRSA vs MN-RSA) and can achieve lower error while retaining BRSA’s conservative behavior under the null. A separable variant of SRM achieves lower out-of-sample reconstruction error for new subjects than conventional SRM, though this reduced error does not seem to translate to improved feature extraction for brain decoding. The matrix-normal modeling toolkit (under review for inclusion in the BrainIAK analysis package [57]) makes it possible to prototype inclusion of separable covariances into other models.

3. Discussion

In this paper, we use five computational tools developed for different goals in fMRI research to illustrate how to build probabilistic graphical models (PGMs) to address important questions arising in neuroimaging studies. These methods also illustrate how the PGM [11], which is central to the methods reviewed, can accommodate domain knowledge and known properties of the data and facilitate aggregating information over larger datasets. These features allow us to mitigate the limitations in fMRI data: high dimensionality (many voxels), low sample size in single subject, heterogeneity across subjects and complex noise with high magnitude. The PGM-based approach helps ensure the faithfulness of an algorithm to its original purpose and provides flexibility in model building.

To tackle the limits of high dimensionality and low sample size, SRM [43] uses the existence of a shared latent response as its core assumption, which allows aggregating data from multiple subjects; HTFA [55] uses a hierarchical model across subjects to discover common nodes in many brains. By utilizing big data across many subjects, both methods essentially increase the sample size to discover common structure in the data. In addition, low-rank factor model underlying both methods reduces the model complexity, thus mitigating overfitting.

In aggregating data, both SRM and (H)TFA tolerate the heterogeneity of data across subjects, but in slightly different ways: SRM assumes different spatial weight matrices across subjects while HTFA allows the spatial location of the same node in different subjects to vary. Similarly, an extension of BRSA, the Group BRSA [87] allows spatial patterns to differ across subjects while assuming the same similarity matrix is shared by subjects.

An alternative way to mitigate high dimensionality and low sample size is to introduce domain knowledge which trades off between bias and variance in parameter estimation. The three-dimensional Gaussian kernel in (H)TFA [54, 55] can be considered as adopting the belief that fMRI activations are smooth and local. DRD [67] introduces similar domain knowledge (region sparsity) to tackle the problem by using a Gaussian Process prior on the logarithm of decoding weight variance. This prior allows the weights to have more flexible spatial patterns than Gaussian blobs. Although not reviewed in this article, the method of estimating population receptive field [33] and more generally, the encoding model approach [32] essentially also bring in domain knowledge of neural tuning properties in modeling fMRI data.

Aggregating more data and introducing domain knowledge both essentially reduce the impact of high noise in fMRI data. BRSA and kronecker-separable factor model variants [49] go one step further by explicitly modeling the spatial and temporal correlation structure in noise. BRSA separates the spatially correlated and independent noise components and models the former with a factor model, allowing for a more complex correlation structure. The matrix-normal formalism assumes separability of the residual covariance structure into one corresponding to spatial covariance and one corresponding to temporal covariance, largely reducing the number of free parameters while still being able to capture the major structure in noise. Explicitly modeling the noise structure helps reduce bias in estimation arising from the mismatch between an overly simplified noise assumption and the complex property of noise in the data.

In addition to tackling the limitation in fMRI to increase the power for discovering meaningful information in the data, one advantage of the PGM-based approach is its faithfulness to the original goal of a research. This is illustrated in the case of BRSA, where an approach without explicitly examining the data generating and analyzing process may overlook the difference between the output of an early-stage analysis procedure and the true quantity of data that the procedure attempts to estimate, and may introduce spurious results. PGMs allow for simulation of data according to the model and verification of the inference algorithm. This is an advantage not easily achieved by analysis procedures developed without an explicit model. In fact, during sequential applications of analysis or filtering steps, later steps may reintroduce artifacts intended to be removed by early steps [110], and variation in complex pipelines may vary the results [111]. In functional connectivity analysis, various denoising procedures can introduce spurious brain network correlational structures [112–116]. These are often due to the interaction between the preprocessing procedures and later-stage analysis, which is hard to foresee without building and analyzing explicit generative models.

The PGM-based approach to neuroimaging analysis also offers the flexibility of combining advantages of different models and tailoring models for new application domains. This has been illustrated by the extensions of SRM to several variants that utilize p artial labels of data [48] or datasets with partially overlapping subjects [47]. Likewise, it is illustrated in the development of separable-covariance variants of existing models [49]. It is an interesting future research direction to develop new tools that combine the advantage of the existing PGM-based methods, including the models reviewed here. Understanding the commonality among models is the first step towards integrating them. This is the reason we intentionally use the same notation and matrix orientation of the data matrices in this paper to help readers see the commonality among these methods. Furthermore, several of the tools in this article are available in the same open source package Brain Imaging Analysis Kit (BrainIAK) [57], which makes it easier for tool developers to understand how the computational models and inference algorithms ultimately turn into functioning code and to draw inspiration from these tools (examples for the usage of most algorithms can be found at https://github.com/brainiak/brainiak/tree/master/examples).

Although PGM-based methods come with the aforementioned advantages, they are not without limitations. The first limitation is the speed of computation. Because such models need to consider uncertainty of unknown variables, marginalization of unknown variables is involved, which often requires inverting relatively large matrices, a time- and memory-consuming computation. However, with the advance of parallel computing techniques and code optimization [118, 119], these limitations are gradually being resolved. Second, although the integrative approach of PGM-based methods reduces the chance of obtaining spurious outcomes due to interaction between different stages of data processing, it does reduce the flexibility provided by traditional approach which concatenates many modular analysis tools as a pipeline [120]. Traditional pipeline approaches allow for fast reanalysis when more data (e.g., a new subject) are added, while some of the PGM-based methods may need to redo the analysis on all the data in such situation, or at least require deriving new model update equations. The third limitation is the potential sensitivity of these methods to the correctness of the prior assumptions and generative models used in such methods. For example, noise are often assumed to follow variants of multivariate Gaussian distributions for the easiness of inverting models. But more investigations are needed on the impact of such assumptions when the data distributions in fact violate the assumptions, for example, by having heavier tails than Gaussian distributions. If a model is not a good description of the true generative process of data, then the analysis result may not reflect the ground truth underlying the data. Some assumptions may still be overly simplified compared to the complex nature of true fMRI noise (here, noise may include intrinsic neural activity). This is a challenge but also an opportunity of the PGM-based approach, because making all assumptions explicit makes it easier to examine the impact of the assumptions being made. One may ask how to check whether an assumption of the noise property is correct. Although it is hard to know the true model for data, PGMs at least offer the ability to calculate the likelihood of data given any assumption of noise property. By comparing which assumptions about noise gives higher likelihood for the data, one can decide what assumptions are most appropriate for the data acquired. Finally, although PGM-based approaches aim to consider as much of the noise property as possible, they are still not end-to-end, in the sense that various preprocessing procedures, such as slice-timing correction, motion correction, and spatial distortion correction, are still performed separately prior to employing these methods. The generative process of motion-induced artifacts is typically not modeled in these PGM-based methods. Building a PGM which directly models the raw data straight out of fMRI scanners is still too complex a process. One needs to find a balance between the advantage from the explicit and probabilistic nature of PGM and the complexity introduced by modeling every detail of the data.

Beyond the properties of fMRI data that have been discussed in this paper, there are many more complexities in the properties of fMRI data. These complexities may all influence the conclusions one can draw from the data, depending on how much they are taken into account. For example, the temporal profile of haemodynamic response can differ not only across regions, but also across brains. PGM-based methods in fact played an important role in modeling and quantifying this variation [121]. Future work that incorporates this variation in methods such as BRSA may improve the power of the algorithm and help users evaluate how sensitive their analyses are to such variations. Behavioral contingencies such as reaction time can also influence the fMRI response. Currently their impact is usually modeled deterministically when building design matrices in traditional regression analysis and in BRSA [87]. Future work may incorporate the probabilistic impact of such behavioral contingency on fMRI responses, or treat these behavioral measures as additional observations that can be probabilistically predicted by a PGM. The signal-to-noise ratio of data can be influenced by the speed of fMRI acquisition, together with other factors. So there is a trade-off between data quality and the spatial and temporal resolution of the data. Therefore, there is a need for developing new PGM which simultaneously incorporates multiple types of noise and artifacts, and estimate their relative magnitudes due to the choice of data acquisition protocol. Such work holds promise to improve over existing methods [122] that estimate different noise separately for evaluating the power of an fMRI study. However, as stated above, there is a tradeoff between the amount of details a model can capture and the difficulty of making inference based on a complex model.

In the new era of big data for neuroscience [123–125], facilitating data sharing is obviously one of the most important effort for making big data analysis possible [126–129]. One step further, developing computational models that derive insights from big data is another key for the field of neuroscience to benefit from increasing data size, which should also be in synergy with developing theories of the essence of the neural computation [130–132]. We suggest that future method development places model building at the center of its focus.

Highlights.

Probabilistic graphical models are well-suited for the challenges in fMRI analysis

They are transparent, verifiable, flexible and with better estimation accuracy

We illustrate how to build and use them with five examples from the recent literature

4. Acknowledgement

MBC is supported by National Institute of Drug Abuse award R01DA042065, USA and World Premier International Research Center Initiative (WPI), MEXT, Japan.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

RSA typically focuses on single brain region instead of the whole brain.

References

- [1].Ogawa S, Lee T-M, Kay AR, Tank DW, Brain magnetic resonance imaging with contrast dependent on blood oxygenation, Proceedings of the National Academy of Sciences 87 (1990) 9868–9872. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [2].Belliveau J, Kennedy D, McKinstry R, Buchbinder B, Weisskoff R, Cohen M, Vevea J, Brady T, Rosen B, Functional mapping of the human visual cortex by magnetic resonance imaging, Science 254 (1991) 716–719. [DOI] [PubMed] [Google Scholar]

- [3].Buxton RB, The physics of functional magnetic resonance imaging (fMRI), Reports on Progress in Physics 76 (2013) 096601. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [4].Heeger DJ, Ress D, What does fMRI tell us about neuronal activity?, Nature Reviews Neuroscience 3 (2002) 142. [DOI] [PubMed] [Google Scholar]

- [5].Friston KJ, Frith CD, Tur. ner R, Frackowiak RS, Characterizing evoked hemodynamics with fMRI, NeuroImage 2 (1995) 157–165. [DOI] [PubMed] [Google Scholar]

- [6].Triantafyllou C, Hoge RD, Krueger G, Wiggins CJ, Potthast A, Wiggins GC, Wald LL, Comparison of physiological noise at 1.5 t, 3 t and 7 t and optimization of fMRI acquisition parameters, NeuroImage 26 (2005) 243–250. [DOI] [PubMed] [Google Scholar]

- [7].Bright MG, Murphy TK, Is fMRI noise really noise? resting state nuisance regressors remove variance with network structure, NeuroImage 114 (2015) 158–169. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [8].Zarakn G E. Aguirre K, D’Esposito M, Empirical analyses of bold fMRI statistics, NeuroImage 5 (1997) 179–197. [DOI] [PubMed] [Google Scholar]

- [9].Finn ES, Constable RT, Individual variation in functional brain connectivity: implications for personalized approaches to psychiatric disease, Dialogues in clinical neuroscience 18 (2016) 277. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [10].Suárez LE, Markello RD, Betzel RF, Misic B, Linking structure and function in macroscale brain networks, Trends in Cognitive Sciences (2020). [DOI] [PubMed] [Google Scholar]

- [11].Koller D, Friedman N, Probabilistic graphical models: principles and techniques, MIT press, 2009. [Google Scholar]

- [12].Friedman N, Inferring cellular networks using probabilistic graphical models, Science 303 (2004) 799–805. [DOI] [PubMed] [Google Scholar]

- [13].Ahelegbey DF, The econometrics of bayesian graphical models: a review with financial application, University Ca’Foscari of Venice, Dept. of Economics Research Paper Series No 13 (2016). [Google Scholar]

- [14].Ji Q, Probabilistic Graphical Models for Computer Vision, Academic Press, 2019. [Google Scholar]

- [15].Ma WJ, Organizing probabilistic model; of perception, Trends in cognitive sciences 16 (2012) 511–518.22981359 [Google Scholar]

- [16].L Griffiths T, Kemp C, B Tenenbaum J, Bayesian models of cognition (2008). [Google Scholar]

- [17].Geisler WS, Contributions of ideal observer theory to vision research, Vision research 51 (2011) 771–781. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [18].Kruschke JK, What to believe: Bayesian methods for data analysis, Trends in cognitive sciences 14 (2010) 293–300. [DOI] [PubMed] [Google Scholar]

- [19].Shiffrin RM, Lee MD, Kim W, Wagenmakers E-J, A survey of model evaluation approaches with a tutorial on hierarchical bayesian methods, Cognitive Science 32 (2008) 1248–1284. [DOI] [PubMed] [Google Scholar]

- [20].Etz A, Vandekerckhove J, Introduction to bayesian inference for psychology, Psychonomic Bulletin & Review 25 (2018) 5–34. [DOI] [PubMed] [Google Scholar]