Abstract

The COVID-19 pandemic has challenged science worldwide. The international community is trying to find, apply, or design novel methods for the diagnosis and treatment of COVID-19 patients as quickly as possible. Currently, the reliable method for diagnosing infected patients is the reverse transcription-polymerase chain reaction (RT-PCR), which is expensive and time-consuming. Designing novel diagnostic methods is therefore important. In this paper, we used three deep learning-based methods for the detection and diagnosis of COVID-19 patients from X-ray images of the lungs. For diagnosis, we presented two algorithms: a deep neural network (DNN) applied to fractal features of the images, and a convolutional neural network (CNN) applied directly to the lung images. The classification results show that the presented CNN architecture, with an accuracy of 93.2% and a sensitivity of 96.1%, outperforms the DNN method, which has an accuracy of 83.4% and a sensitivity of 86%. For segmentation, we presented a CNN architecture that finds infected tissue in lung images. The results show that the presented method detects infected regions with an accuracy of 83.84%. This finding can also be used to monitor the growth of infected regions in patients.

Keywords: COVID-19, CNN, DNN, Artificial intelligence, Classification, Segmentation

1. Introduction

The novel coronavirus disease (COVID-19) emerged in Wuhan, China, in December 2019 and soon had a significant impact on the world. So far, it has resulted in millions of confirmed cases and thousands of deaths globally [1]. Early and accurate diagnosis of COVID-19 is therefore of great importance for controlling the spread of the disease and reducing its mortality [2,3]. Currently, the gold standard in COVID-19 diagnosis is the Reverse Transcription Polymerase Chain Reaction (RT-PCR) test, which detects viral nucleic acid in sputum or a nasopharyngeal swab. This testing mechanism has several problems. First, the test needs specific materials, which are not available everywhere. Second, it is time-consuming and has a relatively low sensitivity (true positive rate). Third, it is complex and uncomfortable for patients. There is therefore a great need for alternative diagnostic methods that address these problems. Deep neural networks (DNNs) [4] have shown great success in many applications such as computer vision, speech recognition, and robotic control. Thanks to the availability of large datasets and powerful graphics processing units (GPUs), DNNs can extract intelligence from data, which leads to superhuman performance in various applications. Furthermore, efficient DNN architecture synthesis has been explored recently; the resulting architectures are not only very accurate in prediction but also computationally efficient [5,6]. As a result, researchers have opted to use DNNs as AI diagnosis algorithms for COVID-19. The benefits of this approach are efficiency and easy access for everyone. There are several applications of AI in the current fight against COVID-19 [7].

Since radiographic patterns on computed tomography (CT) chest scans have been shown to have higher sensitivity and specificity than the RT-PCR testing method, several works in the literature use CT and X-ray image datasets to train automatic classification AI algorithms. Furthermore, it has been shown in the literature that CT features and RT-PCR features are complementary in detecting COVID-19: CT features can be used for immediate diagnosis and RT-PCR as a confirmation tool [8]. Also, by leveraging the predictive power of an AI tool, the characteristics of COVID-19 in CT chest scans can be distinguished from those of other types of pneumonia. Specifically, deep learning algorithms make it possible to accurately distinguish COVID-19 cases from other viral types of pneumonia. Hence, many of these works combine pre-trained convolutional neural network (CNN) architectures with transfer learning on a chest X-ray dataset to build such a classifier [9]. As a result, researchers started to collect CT and chest X-ray image datasets and make them publicly available. Wang and Wong [10] collected a dataset called COVID-x, consisting of four classes: normal, bacterial pneumonia, viral non-COVID-19 pneumonia, and COVID-19 cases. However, the main problem in such works is the lack of large datasets. Therefore, approaches such as transfer learning with CNN architectures pre-trained on large X-ray datasets, combined with a small COVID-19 dataset, have been shown to be promising [11]. Other novel architectures such as Capsule networks [12] have also been used in the literature to address this problem. Using features extracted from CNN architectures alongside traditional machine learning classifiers such as support vector machines (SVM) has also been investigated by Sethy and Behera [13]. Although such works showed great results, the lack of clinical studies is a downside of these works.

In this paper, we present deep learning-based approaches for the diagnosis and detection of COVID-19 in affected patients. To solve the diagnosis problem, we present two AI-based methods that classify lung MRI images of patients and normal people. The first technique consists of feature extraction and classification based on ANN and fractal methods. In the second, we use classical CNN methods and then analyze the accuracy and sensitivity of the approach. Finally, to solve the detection problem, we present a CNN-based segmentation method that separates infected tissue in lung MRI images. The remainder of the paper introduces the underlying science of the presented methods. In the results and discussion section, the findings are illustrated and analyzed. Finally, the conclusion section summarizes the results and practical findings.

2. Related work

In this section, we cover some of the related work on using AI algorithms in the fight against COVID-19. First, we discuss several publicly available datasets. Then, we cover the work related to using CT chest scans and X-ray images to build an AI-based classifier. We also cover some of the other novel use cases of AI to address this problem. There have been several studies on detecting COVID-19 based on CT images, and CNN architectures are the most common approach used in this regard. The authors of [14] reviewed two CNN architectures, the first based on the ResNet-23 architecture and the second with an added location attention mechanism in the fully connected layer. This architecture achieved an overall accuracy of 86.7% in classifying images into three groups: COVID-19, Influenza-A-viral-pneumonia, and irrelevant-to-infection. Zhang et al. [15] used a ResNet-18 CNN architecture as the backbone network, with two added heads: a classification head and an anomaly detection head, which generates scores for detecting anomalous images (COVID-19). They achieved an accuracy of 96% in detecting COVID-19 cases and an accuracy of 70.65% in detecting non-COVID-19 cases. The authors of [16] used an Inception v3 CNN architecture to classify COVID-19 chest X-rays versus normal chest X-rays and showed an accuracy of 100%. However, they removed all other non-COVID diseases, such as SARS and MERS, from their dataset. The work in [17] used an architecture based on DenseNet-121 and achieved an accuracy of 80.12% in classifying images into COVID-19 and other types of viral pneumonia. The authors of [18] used a lung segmentation module to extract the lung regions of interest in the slices of the CT images and used ResNet-50 for the classification. In the binary classification of COVID-19 vs normal cases, they achieved an area under the curve (AUC) of 0.994 with 94% sensitivity and 98% specificity.
However, other types of viral pneumonia are not considered in the dataset in this work. The authors of [19] used a Bayesian CNN architecture to provide uncertainty values alongside their predictions, which can be helpful in clinical settings. Barstugan et al. [20] used various feature extraction methods such as the Grey Level Co-occurrence Matrix (GLCM) and Local Directional Pattern (LDP), and then used support vector machines (SVM) as the classification algorithm. They extracted various patches from the images and labeled them as COVID-19 or normal patches. Their best result showed an accuracy of 98.71% in classifying these patches. Narin et al. [11] also performed binary classification of COVID-19 and normal X-rays using three pre-trained CNN architectures: ResNet-50, Inception V3, and Inception-ResNet V2. ResNet-50 achieved the best results, with an accuracy of 98%. COVIDX-Net [21] performed binary classification using several well-known CNN architectures and showed an accuracy of 90%. However, the dataset used in that work is very small, consisting of only 25 COVID-19 cases and 25 normal X-ray images. The authors of [22] developed a model for 3-class classification of X-ray images into normal, COVID-19, and SARS cases. They achieved an accuracy of 95% using a modified version of the ResNet-18 architecture.

The authors of [23] fine-tuned a ResNet-50 architecture to perform binary classification between COVID-19 and other pneumonia cases, achieving an accuracy of 94.4%. Sethy et al. [13] used features extracted with ResNet-50 together with an SVM classifier and achieved an accuracy of 95.38% on binary classification between normal and COVID-19 X-ray images. Another traditional approach is presented in [21], where the authors identified three clinical features, lactic dehydrogenase (LDH), lymphocyte, and high-sensitivity C-reactive protein (hs-CRP), and built a prediction model based on the XGBoost machine learning algorithm to predict survival or death [24]. COVNet [25] is based on the ResNet-50 architecture and distinguishes between COVID-19, community-acquired pneumonia, and other non-pneumonia CT scans. This method achieved an accuracy of 90% in detecting COVID-19 cases. The authors of [26] also used transfer learning on several recent well-known CNN architectures, such as VGG19 and MobileNetV2, to perform 3-class classification of X-ray images into COVID-19, bacterial pneumonia, and normal classes. The highest accuracy achieved on the 3-class classification is 93.48%. The authors of [27] used a UNet architecture for lung region segmentation in CT scans and a custom CNN architecture for the classification. In addition to these works, the authors of [28] tried to identify COVID-19 cases from cough samples recorded by a smartphone application. However, since cough is a common symptom of several other non-COVID-19 diseases, it is difficult to distinguish between them. Nevertheless, the authors reported some promising results.

3. Methods and material

3.1. Feature extraction using the fractal technique

Feature extraction reduces the amount of resources required to accurately describe a large set of data. When performing complex data analysis, one of the major problems is the number of variables involved. Analysis with a large number of variables generally requires a large amount of memory and computational power, and it may cause a classification algorithm to fit the training sample but generalize poorly to new instances. Feature extraction is a general term for methods that construct combinations of variables to get around these problems while still describing the data with sufficient precision. Image analysis aims to find a distinctive way to represent the basic features of images. In the fractal method, a gray-level matrix was formulated to obtain statistical features. In statistical analysis, image features are calculated from the probability distribution of the observed combinations of light intensities at specific positions relative to each other in the image. The statistics vary depending on the number of intensity points (pixels) in each combination [29].

In this study, the fractal model is used to extract the features. To deal with the high number of input features, feature selection is used to reduce the dimensions and identify the most relevant features, those that separate the various classes sufficiently [30]. The fractal algorithm is applied with the help of covariance analysis to produce eigenvalues from the images and reduce the dimension. In the fractal algorithm, the input images must all be the same size, and each image is treated both as a two-dimensional matrix and as a single vector. Images must be grayscale with a specific resolution. Each image is converted to a column vector by reshaping its matrix, and the images are loaded into a matrix of size M × N, where N is the number of pixels in each image and M is the number of images. The average image is calculated so that the deviation of each original image from it can be found. Then the covariance matrix is calculated, and its eigenvalues and eigenvectors are obtained. In the notation of the fractal algorithm, M is the number of training images, Fi is the average of the images, and li is each image of the Ti vector. Initially, there are M images, each of dimension N × N. Each image can be represented in an N-dimensional space calculated as in Eq. (1), and the averaging operation is given in Eq. (2) [31].

| (1) |

| (2) |

Finding the standard deviation is an important step in the fractal algorithm; it is calculated through Eq. (3), and the covariance matrix is calculated from Eq. (4).

| (3) |

| (4) |

where and and . Therefore, Cov equals by a large value. Now the eigenvalues of Cov are obtained using Eq. (5).

| (5) |
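As a sketch of the computation described in this subsection, the usual eigen-decomposition steps can be written as follows. The notation is assumed for illustration and may differ from the authors' Eqs. (1) to (5): $\Gamma_i \in \mathbb{R}^N$ is the $i$-th vectorized image, $\Psi$ the average image, $\Phi_i$ the deviation of image $i$ from the average, and $u_k$, $\lambda_k$ an eigenvector and eigenvalue of the covariance matrix.

```latex
\begin{align*}
\Psi   &= \frac{1}{M}\sum_{i=1}^{M}\Gamma_i, \\
\Phi_i &= \Gamma_i - \Psi, \qquad i = 1,\dots,M, \\
\mathrm{Cov} &= \frac{1}{M}\sum_{i=1}^{M}\Phi_i\Phi_i^{\top}
       = \frac{1}{M}AA^{\top}, \qquad A = [\Phi_1,\dots,\Phi_M], \\
\mathrm{Cov}\,u_k &= \lambda_k u_k .
\end{align*}
```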

The last step is to select an eigenvector. A set of properties of an inherent state function in the form of samples in a d-dimensional space that and belongs to . The class is available from . The purpose of the fractal algorithm is to search for linear transmission to map the d-dimensional space of the principal dimension to f-dimension that f < d. The new feature vector is located at . Scattered matrices in the class are given as total scatter or covariance matrices, which are calculated as Eq. (6) and Eq. (7) [31].

| (6) |

| (7) |

where μ is the mean of all samples and is a set of eigenvector of f-dimension of ST that is associated with the largest eigenvalue f. Samples in the new space are , which is WFractal ∈ Rfxd(170xd).
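The steps described in this subsection (vectorize the images, subtract the average image, form the covariance matrix, extract an eigenvector, project) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: all function names are hypothetical, the "images" are tiny toy vectors, and power iteration is swapped in for a full eigen-decomposition, so only the leading eigenvector is recovered.

```python
import math
import random

def center(images):
    """Subtract the average image from every flattened image."""
    m, n = len(images), len(images[0])
    mean = [sum(img[j] for img in images) / m for j in range(n)]
    centered = [[img[j] - mean[j] for j in range(n)] for img in images]
    return mean, centered

def covariance(centered):
    """Return the n x n matrix (1/M) * sum_i phi_i phi_i^T."""
    m, n = len(centered), len(centered[0])
    return [[sum(row[a] * row[b] for row in centered) / m for b in range(n)]
            for a in range(n)]

def leading_eigenpair(matrix, iterations=500, seed=0):
    """Power iteration: approximate the largest eigenvalue and its eigenvector."""
    rng = random.Random(seed)
    n = len(matrix)
    v = [rng.random() + 0.1 for _ in range(n)]  # arbitrary positive start vector
    for _ in range(iterations):
        w = [sum(matrix[a][b] * v[b] for b in range(n)) for a in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break
        v = [x / norm for x in w]
    mv = [sum(matrix[a][b] * v[b] for b in range(n)) for a in range(n)]
    eigenvalue = sum(x * y for x, y in zip(v, mv))  # Rayleigh quotient, |v| = 1
    return eigenvalue, v

def project(centered, direction):
    """Project each centered image onto one eigenvector (one feature per image)."""
    return [sum(p * d for p, d in zip(row, direction)) for row in centered]

# Toy data: four 2-pixel "images" that vary along the direction (1, 1).
images = [[1.0, 1.0], [2.0, 2.0], [3.0, 3.0], [4.0, 4.0]]
mean, centered = center(images)
eigenvalue, direction = leading_eigenpair(covariance(centered))
features = project(centered, direction)
```

For real images the covariance matrix is N × N and quickly becomes large, which is why library eigen-solvers are normally used in place of this hand-written loop.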

3.2. Deep convolutional neural network (DCNN)

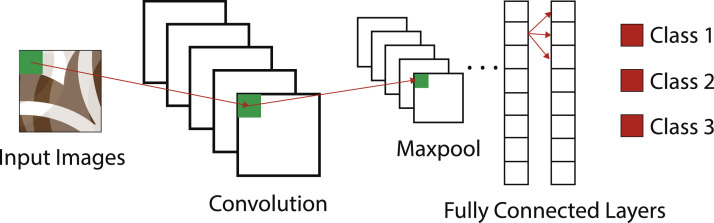

One of the most important methods of deep learning is the convolutional neural network (CNN), in which several layers are trained effectively. A CNN is a variation of the multilayer perceptron designed to minimize preprocessing. In general, a CNN consists of three main types of layers, the convolutional layer, the pooling layer, and the fully connected layer, each performing a different function.

Each architecture is trained in two stages, forward propagation and backpropagation [32]. In the forward step, information only moves forward: the input layer sends the input values to the hidden layers, and the output is then generated at the output layer. In backpropagation, the input image is first fed to the network; once the output is reached, the network error is calculated, and this value is propagated back through the network along with the gradient of the cost function to update the network weights (as seen in Fig. 1). A CNN consists of several types of hidden sublayers, explained as follows [32]:

Fig. 1.

An overview of the architecture of a convolutional neural network.

Convolutional layer: The convolutional layer is the core of the convolutional network, and its output can be interpreted as a three-dimensional volume of neurons. In these layers, the CNN uses various kernels to convolve the input image as well as the intermediate feature maps. The convolution operation has three main benefits:

- The weight sharing mechanism in each feature map results in a sharp reduction in the number of parameters.
- The local connectivity learns the relationships between neighboring pixels.
- It provides stability under changes in the location of the object.
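To make the weight sharing idea concrete, the following is a minimal sketch of a single-channel convolution in plain Python. The function name, the toy image, and the kernel are hypothetical; as in most deep learning frameworks, the operation shown is technically a cross-correlation (the kernel is not flipped), with no padding and a stride of 1.

```python
def conv2d_valid(image, kernel):
    """Slide one shared kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0.0
            # The same kh * kw weights are reused at every position.
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        output.append(row)
    return output

# Toy 3 x 4 image: dark left half, bright right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
edge_kernel = [[-1, 1], [-1, 1]]  # responds to a dark-to-bright vertical edge
feature_map = conv2d_valid(image, edge_kernel)
# feature_map == [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The kernel has only four weights regardless of the image size, which is the parameter reduction mentioned in the first benefit, and the response peaks wherever the edge appears, which illustrates the third.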

Activation functions: In a neural network, activation functions are used to obtain the desired output from the inputs. Different activation functions can be used; the most important are the sigmoid and hyperbolic tangent functions. The sigmoid function accepts any input between -∞ and +∞ and produces an output in the interval between 0 and 1. The hyperbolic tangent function produces an output between -1 and 1. This bounded output range makes these two functions less commonly used in CNNs: because image matrices contain a wide range of values, squashing them results in a loss of image data, and in most cases the system becomes unusable after applying these functions. An activation function that has been widely adopted in recent years is the rectified linear unit (ReLU). ReLU is an activation function g applied to all elements; it takes every pixel in the image and sets all negative values to zero. Its purpose is to introduce nonlinear behavior into the convolutional neural network and its training, since convolution itself is a linear process obtained by multiplying and summing elements [32].
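The three activation functions mentioned above can be compared with a short sketch in plain Python. The function names are illustrative; the sample inputs are arbitrary.

```python
import math

def sigmoid(x):
    """Squash any real input into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def tanh(x):
    """Squash any real input into the open interval (-1, 1)."""
    return math.tanh(x)

def relu(x):
    """Keep positive values unchanged and set negative values to zero."""
    return max(0.0, x)

# Compare the three functions on a negative, a zero, and a positive input.
for x in (-3.0, 0.0, 3.0):
    print(x, round(sigmoid(x), 4), round(tanh(x), 4), relu(x))
```

Unlike the two bounded functions, ReLU leaves positive inputs unchanged, so large pixel or feature values are not compressed into a narrow range.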

Max pooling: The use of max pooling in neural networks has several effects. First, it lets the network identify an object despite minor changes to the image. Second, it lets the network cover more of the image when identifying features. Pooling in a CNN summarizes the features during subsampling, so that we can go into deeper layers of the network. At the end of each stage, when the sampling is reduced, the capacity to store spatial information decreases; to keep this information, pooling is used to collect what has been learned. The two most common types of pooling are max and average pooling [32].
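A minimal sketch of non-overlapping max pooling in plain Python follows. The function name and the 2 × 2 window size are assumptions for illustration; the text does not state the window size used in the presented architectures.

```python
def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    pooled = []
    for r in range(0, len(feature_map) - size + 1, size):
        row = []
        for c in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[r + i][c + j]
                      for i in range(size) for j in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled

fm = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 8],
]
pooled = max_pool2d(fm)
# pooled == [[4, 2], [2, 8]]
```

Each 2 × 2 window is summarized by its largest value, so the 4 × 4 map shrinks to 2 × 2 while the strongest responses are kept.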

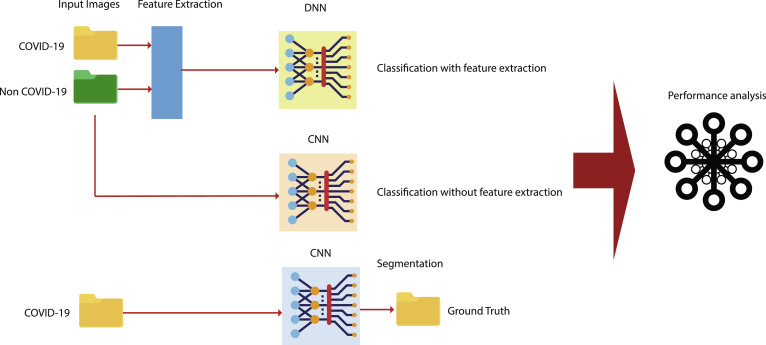

3.3. Conceptualization of presented methods

A variety of image processing techniques have been proposed for the detection and diagnosis of disease. Our main objective is to design a method for identifying COVID-19 patients based on CT scan images of patients' lungs. The conceptual diagram of the proposed methods is illustrated in Fig. 2. Following this diagram, we perform three analyses for the diagnosis and segmentation of COVID-19 images. In the first method, we extract image features using the fractal method and then use CNN techniques to classify the patients' images. The second method is an architectural design of a convolutional neural network (CNN) that classifies patients based on CT scan images. Finally, we train a network for the segmentation of infected tissue in lung MRI images. The architecture of each part is presented in the results and discussion section.

Fig. 2.

The conceptual diagram of the model.

3.4. Performance analysis

The following parameters are used to evaluate the efficiency of classification in data mining science:

- True Positive (TP): The patient is correctly detected.
- False Positive (FP): The healthy image is misdiagnosed.
- True Negative (TN): The healthy image is correctly detected.
- False Negative (FN): The patient is misdiagnosed.

In this study, seven criteria are used to evaluate the classification results and the overall performance of the system: accuracy (ACC), specificity (S), recall or sensitivity (R), S-dice, miss rate, fall-out, and Jaccard similarity. The definitions of these criteria are given below.

| (8) |

| (9) |

| (10) |

| (11) |

| (12) |

| (13) |

| (14) |
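For reference, the standard definitions of the seven criteria in terms of TP, FP, TN, and FN are sketched below. These are the textbook forms; their order and notation are assumptions and may differ from the authors' Eqs. (8) to (14).

```latex
\begin{align*}
\text{Accuracy}    &= \frac{TP + TN}{TP + TN + FP + FN}, &
\text{Specificity} &= \frac{TN}{TN + FP}, \\
\text{Sensitivity} &= \frac{TP}{TP + FN}, &
\text{S-dice}      &= \frac{2\,TP}{2\,TP + FP + FN}, \\
\text{Miss rate}   &= \frac{FN}{FN + TP}, &
\text{Fall-out}    &= \frac{FP}{FP + TN}, \\
\text{Jaccard}     &= \frac{TP}{TP + FP + FN}. &&
\end{align*}
```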

A confusion matrix is a table that describes the performance of a classification model (or "classifier") on a set of test data for which the true values are known; the evaluation criteria are compared within this matrix.
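The criteria can be computed directly from the four confusion-matrix counts. The sketch below uses the standard textbook formulas; the function name and the example counts are hypothetical and are not taken from the experiments in this paper.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute the evaluation criteria from confusion-matrix counts.

    No guard against empty classes is included, so a denominator of zero
    raises ZeroDivisionError.
    """
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn),        # recall, true positive rate
        "specificity": tn / (tn + fp),        # true negative rate
        "miss_rate": fn / (fn + tp),          # equals 1 - sensitivity
        "fall_out": fp / (fp + tn),           # equals 1 - specificity
        "dice": 2 * tp / (2 * tp + fp + fn),  # identical to the F1 score
        "jaccard": tp / (tp + fp + fn),
    }

# Hypothetical counts, for illustration only.
metrics = classification_metrics(tp=90, fp=10, tn=85, fn=15)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Two identities are useful as sanity checks on any reported table: the miss rate and the sensitivity sum to one, and so do the fall-out and the specificity.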

4. Results and discussion

In this section, we evaluate the performance of the three methodologies explained in the previous section. We first describe the datasets used. The evaluation results are then divided into three parts. In the first, we demonstrate the results of classification using deep neural network architectures on the fractal features extracted from the images. In the second, we present the results of the binary classification task using CNN architectures directly on the images. In the last part, we cover the experiments on image segmentation to identify the infected areas of the lung in the chest X-ray images, alongside using a CNN architecture to classify such areas of interest. In these experiments, we use various metrics to evaluate our models; these metrics are defined in Eqs. (8) to (13). Furthermore, the Jaccard index is a similarity metric between two sets, defined as the size of the intersection divided by the size of the union of the sets. In the context of the segmentation task, it is defined as the area of overlap between the predicted segmentation and the ground truth divided by the area of union between the predicted segmentation and the ground truth.
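For segmentation masks, the Jaccard index described above reduces to counting pixels. A minimal sketch in plain Python follows; the function name and the toy masks are hypothetical, any non-zero pixel is treated as foreground, and returning 1.0 when both masks are empty is an assumed convention.

```python
def jaccard_index(predicted, truth):
    """Intersection over union of two equally sized masks (non-zero = foreground)."""
    intersection = 0
    union = 0
    for pred_row, truth_row in zip(predicted, truth):
        for p, t in zip(pred_row, truth_row):
            p_on, t_on = p > 0, t > 0
            intersection += p_on and t_on
            union += p_on or t_on
    # Assumed convention: two empty masks count as a perfect match.
    return intersection / union if union else 1.0

predicted = [[0, 255, 255],
             [0, 255, 0]]
truth = [[0, 255, 0],
         [0, 255, 255]]
score = jaccard_index(predicted, truth)
# Two pixels overlap and four pixels are covered by either mask, so score == 0.5
```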

4.1. Datasets

There are several publicly available datasets of medical images and metadata of COVID-19 cases. In this paper, we used a database of computed tomography images. The MRI image is captured in the superior position of the patient. The data were gathered by [33]. The images were categorized into two classes: images of COVID-19 patients, and non-COVID-19 images of other patients and normal people. The data consist of 682 MRI images. This dataset is used for the classification or diagnosis of COVID-19. The second dataset is the COVID-SemiSeg dataset, used for the detection of infected tissue [34]. It includes two parts: the input data and the ground truth images in which the infected tissue is labeled. In this paper, we used 100 CT scan images with 100 ground truth images for segmentation using the CNN technique.

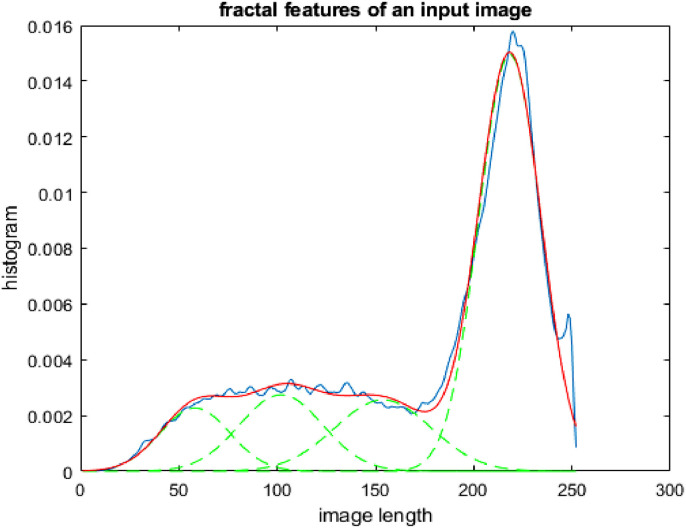

4.2. Diagnosis of COVID-19 patients using DNN and fractal features

In this section, we evaluate the DNN architecture on the fractal features extracted from the chest X-ray images. In the fractal method, the histogram of each image is extracted, as illustrated in Fig. 3.

Fig. 3.

The output of fractal feature extraction for a CT scan image of COVID-19 patient lung.

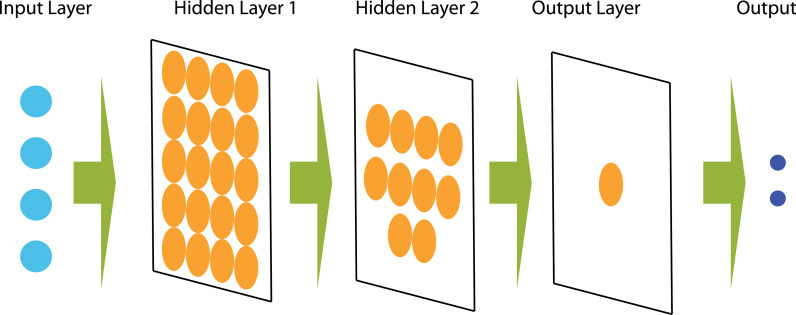

In Fig. 3, the blue line shows the histogram of the image. The second step is to model the histogram with Gaussian functions, that is, to find normal distribution functions whose sum reproduces the histogram. For the images used in modeling, we chose four functions, which gave high accuracy with a small number of functions. The sum of the functions is shown by the red line. The features of the image are the parameters of the Gaussian functions. This process is performed for all images in the dataset, so the classification dataset is transformed into a matrix with four features. The next step is to train a DNN architecture with the features as the input layer and the labels as the output layer. Fig. 4 shows the architecture of the DNN model used in this experiment. It consists of two hidden layers with 20 and 10 neurons, respectively. The input layer takes the four features of each data instance, and the output layer generates a single output. After each hidden layer, we apply the sigmoid activation function as the non-linearity.
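The forward pass of a network with this layout (four inputs, hidden layers of 20 and 10 neurons with sigmoid activations, one output) can be sketched in plain Python. This is an illustration under stated assumptions, not the trained model: the weights are random, the feature values are made up, the output layer is assumed to be linear, and the 0.5 decision threshold is hypothetical.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def init_layer(n_in, n_out, rng):
    """Random weights and zero biases; a trained model would learn these."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def dense(inputs, weights, biases):
    """One fully connected layer: weighted sum plus bias for each neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def forward(features, layers):
    activation = features
    for index, (weights, biases) in enumerate(layers):
        activation = dense(activation, weights, biases)
        if index < len(layers) - 1:  # sigmoid after hidden layers only
            activation = [sigmoid(v) for v in activation]
    return activation[0]

rng = random.Random(0)
layers = [init_layer(4, 20, rng), init_layer(20, 10, rng), init_layer(10, 1, rng)]

# Total trainable parameters: (4*20 + 20) + (20*10 + 10) + (10*1 + 1) = 321
n_params = sum(len(w) * len(w[0]) + len(b) for w, b in layers)

score = forward([0.2, 0.5, 0.1, 0.9], layers)  # made-up feature vector
label = 1 if score >= 0.5 else 0               # assumed decision threshold
```

With only 321 weights and biases, a network of this shape is very small compared with typical image CNNs.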

Fig. 4.

The DNN architecture used on the fractal features.

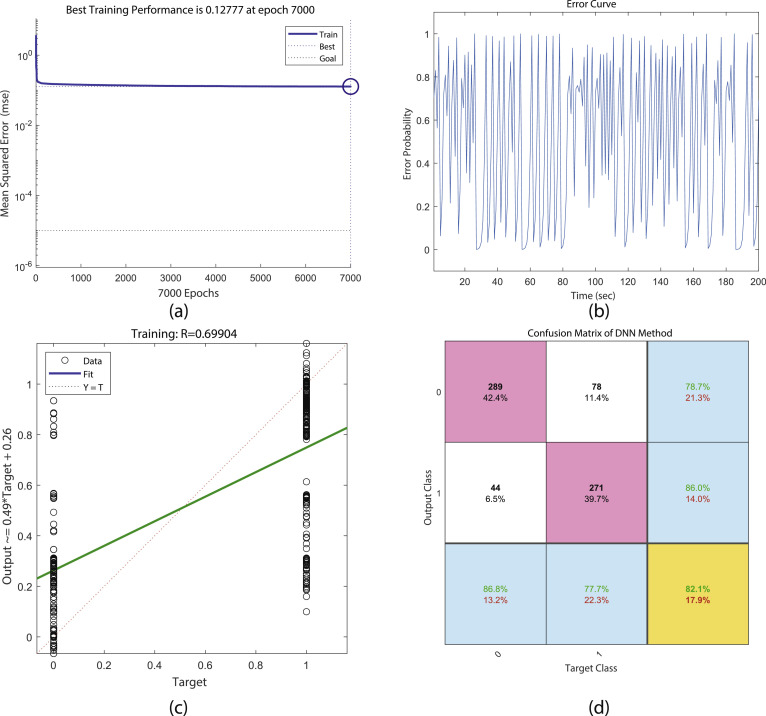

Fig. 5 shows the results of the classification using the DNN method. Output classes are labeled 1 for COVID-19 images and 0 for non-COVID-19 images. The mean square error, error probability, and scatter plot of the model are illustrated in Figs. 5 (a), (b), and (c). The output R² for the classification is 70%. The main criteria for the performance analysis of classification methods are given in Eqs. (8) to (13). Table 1 presents the evaluation metrics of the binary classification using the DNN on fractal features. Results show that this model is efficient and has significantly fewer parameters and floating-point operations (FLOPs).

Fig. 5.

a) Mean square error of DNN method for 7000 epochs, b) Error probability of DNN modeling, c) Scatter plot of modeling and output labels, d) Confusion matrix of DNN method.

Table 1.

Performance evaluation of binary classification with DNN architecture on fractal features.

| Metric | Value |
|---|---|
| Miss rate | 0.1761 |
| Fallout | 0.1761 |
| Sensitivity | 0.8601 |
| Accuracy | 0.8239 |
| Specificity | 0.8239 |
| Similarity | 0.6896 |
| S-dice | 0.8163 |

The results of the performance analysis are shown in Table 1 and Fig. 5 (d). According to the confusion matrix of the DNN method in Fig. 5 (d), of the 315 COVID-19 images, 271 (86%) are detected correctly and 44 (14%) are diagnosed as non-COVID-19. Of the 367 non-COVID-19 images, 78.7% are diagnosed correctly and 21.3% are misdiagnosed. Overall, the sensitivity of the classification method is 86%, with a classification accuracy of 82.39%. The similarity between COVID-19 and other images is 68.96%. The other metrics are given in Table 1. These results are compared with the CNN method in the next section.

4.3. Diagnosis of COVID-19 patients using CNN method

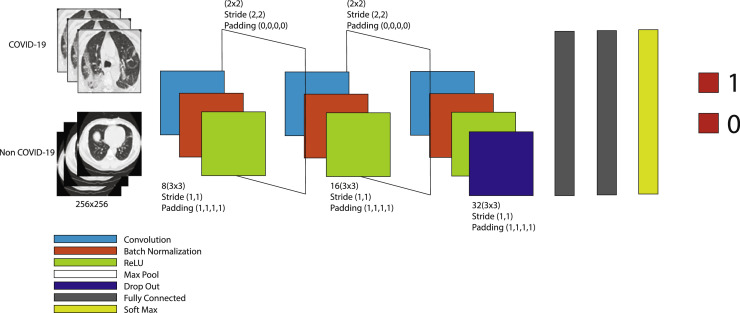

In this section, we present the results of using CNN architectures on the lung images from chest X-rays. In this classification task, there are two classes: COVID-19 positive cases and normal X-rays. We train the CNN architecture proposed in the methods and materials section on the dataset of [33], and we compute different evaluation metrics to analyze the model.

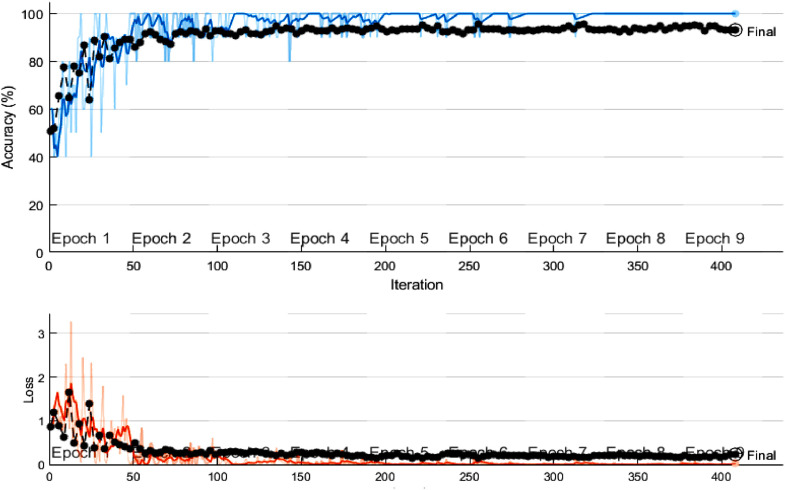

Fig. 6 shows the architecture of the presented CNN method for the diagnosis of COVID-19 patients. The network consists of 16 layers, including three convolutional layers. Images are labeled 1 for COVID-19 and 0 for non-COVID-19 patients. Moreover, 70% of the image dataset is used for training the network and 30% for testing the model. The results are reported below. Fig. 7 shows the loss value and accuracy as a function of training iterations. These plots were recorded while training the network, and the process was terminated after 400 iterations, once the best results, with higher accuracy and lower network loss, had been obtained.
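A 70/30 split of this kind can be sketched as follows. The function name, the shuffling, and the seed are assumptions for illustration; the text does not state whether the split was random or stratified by class.

```python
import random

def split_dataset(items, train_fraction=0.7, seed=42):
    """Shuffle a copy of the items and cut it into training and test parts."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(train_fraction * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]

image_ids = list(range(682))  # one identifier per image in the classification dataset
train_ids, test_ids = split_dataset(image_ids)
print(len(train_ids), len(test_ids))  # 477 205
```

For the 682 images of the classification dataset, this gives 477 training and 205 test images.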

Fig. 6.

The architecture of the CNN method for the classification or diagnosis of COVID-19 patients from X-ray images of patients' lungs.

Fig. 7.

Accuracy and loss values vs the training iterations.

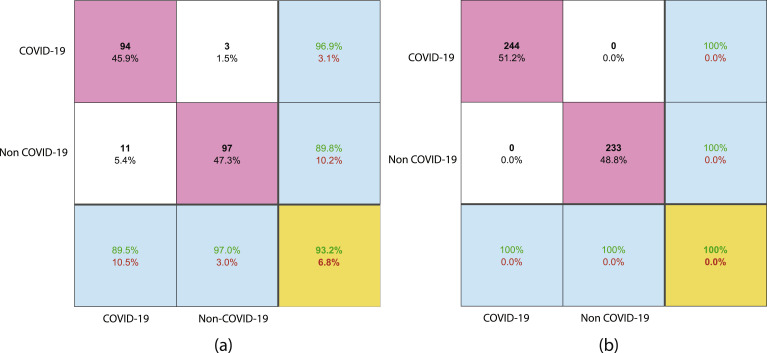

We evaluate the final trained model on both the train and test sets. Fig. 8 shows the confusion matrices of these predictions. As can be seen in this figure, the model accurately predicts 191 cases out of 205 instances in the test set. As a result, the accuracy of the test set is 93.2%. For the COVID-19 positive instances, the model achieves a false negative rate of 3%, indicating its effectiveness in raising alarms for COVID-19 cases.
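As a quick check, the reported test accuracy follows directly from the counts given above:

```latex
\[
\text{Accuracy}_{\text{test}} = \frac{191}{205} \approx 0.932 = 93.2\%
\]
```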

Fig. 8.

Confusion matrices for binary classification on the training set (left) and on the test set (right).

Other evaluation metrics for this classification task are shown in Table 2. Note that these values do not appear consistent with the test-set confusion matrix, from which, for example, the miss rate is 3% and the fallout is 10.4%. The model has a specificity of 99%, which shows that it correctly identifies the cases without COVID-19. Furthermore, a low fallout value indicates that the model rarely predicts normal cases as COVID-19 positive. In addition, the CNN architecture achieves an F1 score of 0.9797 for the binary classification task.

Table 2.

Performance evaluation of binary classification with CNN architecture in comparison with DNN methods.

| Metric | CNN | DNN |
|---|---|---|
| Miss rate | 0.0015 | 0.1761 |
| Fallout | 0.0015 | 0.1761 |
| Sensitivity | 0.9609 | 0.8601 |
| Accuracy | 0.9320 | 0.8239 |
| Specificity | 0.9971 | 0.8239 |
| Similarity | 0.9602 | 0.6896 |
| S-dice | 0.9797 | 0.8163 |

Based on the performance analysis of COVID-19 diagnosis with artificial intelligence, we can conclude that the presented CNN method, which uses the images directly, has higher accuracy and sensitivity for the classification of patients. The sensitivity of the DNN method for the diagnosis of COVID-19 is 86%, whereas for the CNN it is 96.1%, which is significantly higher. The CNN also leads in classification accuracy, and the other metrics are better for the CNN method as well. For instance, the fall-out measures the proportion of cases misdiagnosed by a method. For the CNN this value is almost negligible, whereas for the DNN it cannot be ignored. Based on the findings of this paper, we conclude that the presented CNN algorithm has high potential for the diagnosis of COVID-19 patients from X-ray CT scan images of their lungs. In the next section, we present a segmentation method for the detection of infected tissue in lung CT scan images.

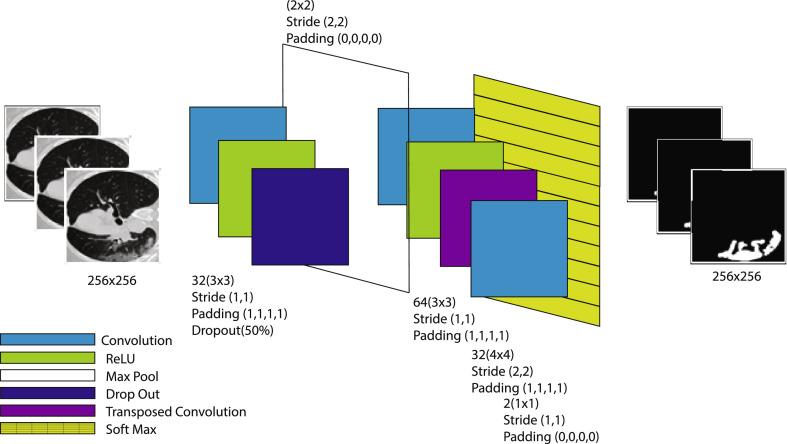

4.4. Detection of infected tissue from lung CT scan image for COVID-19

In this section, we present the results of the CNN segmentation algorithm. The architecture of the detection method is illustrated in Fig. 9. It consists of 11 layers, including three convolutional layers. The input images are 256×256 grayscale CT scan images of the lungs of COVID-19 patients, and the output layer consists of the ground truth of the input images, taken from the COVID-SemiSeg dataset of [33]. In these images, infected lung tissue is labeled 255 and all other pixels are 0. The input images show three main regions. The black region shows the bulk of the lung. This is the region in which the patient breathes air freely; here the capillaries of the lung absorb O2 and other gases into the blood. Any damage in this region disrupts the respiratory system.

Fig. 9.

The architecture of CNN method for segmentation of COVID-19 images.

In the input image in Fig. 9, the dark gray region shows the bronchi and bronchioles, which connect to the trachea of the respiratory system. Within the black region, in the lower part of the lung image, a light gray region can be seen. This part of the lung is infected by the COVID-19 virus. Recognizing this region by computer is challenging, because deep learning methods segment regions with clearly different gray levels more readily than regions with almost similar ones. In this paper, the infected region is first detected and labeled by a physician or an automatic algorithm. The ground truth images containing the infected region are then placed at the output layer of the presented architecture.

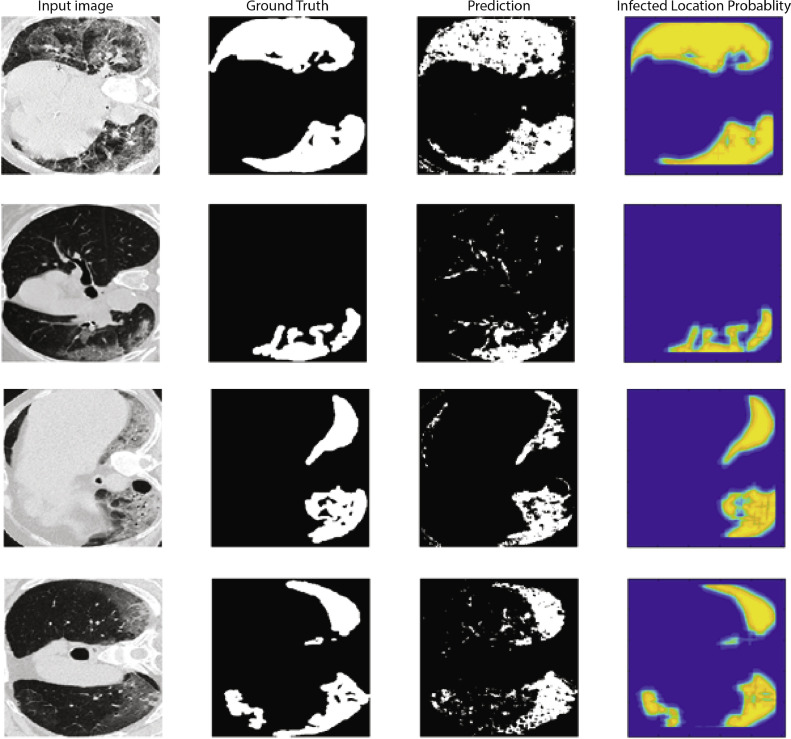

The results of the segmentation are illustrated in Fig. 10. The first column shows the input image of the infected lung, and the second column shows the ground truth images of the output layer. For training, 70% of the images are used, and the remaining 30% are used for testing. The detected infected regions are shown in the third column of Fig. 10. The resulting images should be similar to the ground truth images, and visual inspection shows that they are indeed close. To enhance the output, we omitted small spots in the results and show the outcome as a probability contour (fourth column). The results show that the presented architecture can detect the infected region with fairly high precision.
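The text does not specify how the small spots were removed. One common approach, assumed here purely for illustration, is to delete connected foreground regions below a size threshold. The function name, the 4-connectivity, and the `min_size` value are all hypothetical.

```python
from collections import deque

def remove_small_regions(mask, min_size):
    """Zero out 4-connected foreground regions with fewer than min_size pixels."""
    height, width = len(mask), len(mask[0])
    cleaned = [row[:] for row in mask]
    seen = [[False] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            if mask[r][c] == 0 or seen[r][c]:
                continue
            # Breadth-first search collects one connected region.
            region = []
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and mask[ny][nx] != 0 and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(region) < min_size:
                for y, x in region:
                    cleaned[y][x] = 0
    return cleaned

mask = [
    [255, 255, 0, 0],
    [255, 255, 0, 255],  # the isolated pixel on the right is a "small spot"
    [0, 0, 0, 0],
]
cleaned = remove_small_regions(mask, min_size=2)
# cleaned == [[255, 255, 0, 0], [255, 255, 0, 0], [0, 0, 0, 0]]
```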

Fig. 10.

Examples of segmentation of lung chest X-ray images of COVID-19 patients.

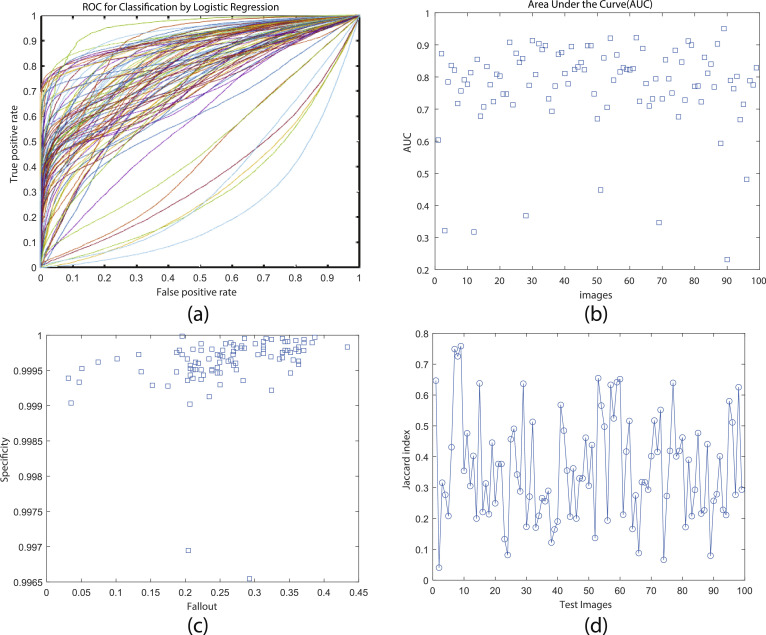

The segmentation results are evaluated quantitatively with performance criteria. These criteria are presented somewhat differently from those of the classification methods in the previous section. The receiver operating characteristic (ROC) curve plots the true positive rate against the false positive rate, and in the segmentation algorithm each image has its own curve. A curve with a high true positive rate at a low false positive rate indicates that the image was processed correctly and with high performance; the ROC curves of most images show this behavior (see Fig. 11(a)). To summarize each ROC curve with a concrete value, we report the area under the curve (AUC), for which values close to one indicate high performance. According to Fig. 11(b), 92% of the images fall in the high-performance region. The same conclusion follows from Fig. 11(c): more than 90% of the images are segmented with an accuracy above 70%. The average accuracy over all images is 83.84%. The final criterion is the Jaccard value, which measures the overlap between the predicted region and the ground truth. For many images this value fluctuates around 0.4. This low value stems from black spots in the predicted images compared with the ground truth data.
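The per-image criteria discussed above can be computed as in the following sketch. It is an illustration under stated assumptions rather than the authors' evaluation code: predictions are taken to be per-pixel probabilities, the binary prediction uses an assumed threshold of 0.5, and the AUC is obtained from the rank-based (Mann-Whitney) formula, which equals the area under the ROC curve. All function names are hypothetical.

```python
import numpy as np

def pixel_accuracy(truth: np.ndarray, pred: np.ndarray) -> float:
    """Fraction of pixels whose predicted label matches the ground truth."""
    return float(np.mean(truth.astype(bool) == pred.astype(bool)))

def jaccard_index(truth: np.ndarray, pred: np.ndarray) -> float:
    """Intersection over union of the predicted and true infected regions."""
    truth = truth.astype(bool)
    pred = pred.astype(bool)
    union = np.logical_or(truth, pred).sum()
    if union == 0:
        return 1.0  # both masks empty: treated as perfect overlap
    return float(np.logical_and(truth, pred).sum() / union)

def auc_score(truth: np.ndarray, scores: np.ndarray) -> float:
    """Area under the ROC curve via average ranks, so ties are handled."""
    y = truth.ravel().astype(bool)
    s = scores.ravel()
    n_pos = int(y.sum())
    n_neg = y.size - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("AUC needs both infected and non-infected pixels")
    _, inverse, counts = np.unique(s, return_inverse=True, return_counts=True)
    # Average 1-based rank of each distinct score value.
    avg_rank = np.cumsum(counts) - (counts - 1) / 2.0
    ranks = avg_rank[inverse]
    return float((ranks[y].sum() - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg))

# Toy 4x4 example: 4 infected pixels, one missed and one false alarm.
truth = np.zeros((4, 4), dtype=np.uint8)
truth[1:3, 1:3] = 1
prob = np.full((4, 4), 0.1)
prob[1:3, 1:3] = 0.9
prob[1, 1] = 0.2  # missed infected pixel
prob[0, 0] = 0.7  # false positive
pred = prob > 0.5  # assumed threshold

acc = pixel_accuracy(truth, pred)
jac = jaccard_index(truth, pred)
auc = auc_score(truth, prob)
```

In this toy case the accuracy is 0.875 while the Jaccard value is only 0.6. Accuracy is dominated by the many correctly classified background pixels, whereas the Jaccard value counts only the infected region, which is consistent with a high average accuracy appearing alongside Jaccard values near 0.4.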

Fig. 11.

Results of segmentation: a) ROC curve, b) AUC criterion, c) accuracy of segmentation, d) Jaccard criterion.

5. Conclusion

In this paper, we presented three deep learning methods for the classification and segmentation of X-Ray images of lungs infected by the COVID-19 virus. For the diagnosis of patients, we provided two methods: a deep neural network (DNN) applied to the fractal features of the input images, and a convolutional neural network (CNN) applied directly to the CT scan images. The classification results show that the presented CNN architecture, with an accuracy of 93.2% and a sensitivity of 96.1%, outperforms the DNN method, which has an accuracy of 83.4% and a sensitivity of 86%. The presented CNN architecture can be used for the diagnosis of COVID-19 patients with high performance. Currently, the best method for diagnosing patients is Reverse Transcription Polymerase Chain Reaction (RT-PCR). This method is unavailable to many patients, and it is also expensive and time-consuming. Therefore, presenting an artificial intelligence method for better diagnosis of COVID-19 patients is crucial. The resulting method can serve as a substitute for the RT-PCR method.

In the next step of the paper, we presented a segmentation method for the detection of infected areas in the human lung. To achieve this goal, we designed a CNN architecture for pixel classification of lung CT scan images of COVID-19 patients. We used pre-processed ground truth images for the output layer. Of the images, 70% were used for training and the rest for validation of the presented architecture. The results show that the presented method can detect the infected region of lung images with an accuracy of 83.84%. The network can also be used to separate the infected region from patients' lung images for better treatment, and to monitor patients for growth of the infected region. There are many works on segmentation and classification of patients with artificial intelligence, for example for diagnosing brain tumors, breast cancer, and lung cancer. However, these approaches have rarely been implemented in practice. Where they are used, it is mostly in wearable monitoring systems for diagnosing disease, monitoring patients, and transferring data to physicians. The COVID-19 pandemic shows that science needs a long-term perspective. It is time to implement artificial intelligence methods in medicine to help doctors diagnose better and as fast as possible.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

The authors are grateful to the Editor-in-Chief, Dr. Stefano Boccaletti, for the fast review of the paper. The funding sources had no involvement in the study design; the collection, analysis, or interpretation of data; or the writing of the paper.

References

- 1. Mahase E. Coronavirus COVID-19 has killed more people than SARS and MERS combined, despite lower case fatality rate. BMJ. Feb. 2020;368:m641. doi: 10.1136/bmj.m641.

- 2. WHO, “Coronavirus disease (COVID-19) Situation Report-111,” 2020.

- 3. Ahmadi M., Sharifi A., Dorosti S., Jafarzadeh Ghoushchi S., Ghanbari N. Investigation of effective climatology parameters on COVID-19 outbreak in Iran. Sci Total Environ. Apr. 2020;729 doi: 10.1016/j.scitotenv.2020.138705.

- 4. LeCun Y., Bengio Y., Hinton G. Deep learning. Nature. May 2015;521(7553):436–444. doi: 10.1038/nature14539.

- 5. S. Hassantabar, Z. Wang, and N. K. Jha, “SCANN: synthesis of compact and accurate neural networks,” Apr. 2019.

- 6. S. Hassantabar, X. Dai, and N. K. Jha, “STEERAGE: Synthesis of neural networks using architecture search and grow-and-prune methods,” Dec. 2019.

- 7. J. Bullock, A. Luccioni, K. H. Pham, C. S. N. Lam, and M. Luengo-Oroz, “Mapping the landscape of artificial intelligence applications against COVID-19,” Mar. 2020.

- 8. Li D. False-negative results of real-time reverse-transcriptase polymerase chain reaction for severe acute respiratory syndrome coronavirus 2: role of deep-learning-based CT diagnosis and insights from two cases. Korean J Radiol. 2020;21(4):505–508. doi: 10.3348/kjr.2020.0146.

- 9. M. Farooq and A. Hafeez, “COVID-ResNet: a deep learning framework for screening of COVID19 from radiographs,” Mar. 2020.

- 10. L. Wang and A. Wong, “COVID-Net: a tailored deep convolutional neural network design for detection of COVID-19 cases from chest X-Ray images,” Mar. 2020.

- 11. A. Narin, C. Kaya, and Z. Pamuk, “Automatic detection of coronavirus disease (COVID-19) using x-ray images and deep convolutional neural networks,” Mar. 2020.

- 12. S. Sabour, N. Frosst, and G. E. Hinton, “Dynamic routing between capsules,” 2017.

- 13. Sethy P.K., Behera S.K., Ratha P.K., Biswas P. Detection of coronavirus disease (COVID-19) based on deep features. Int J Math Eng Manag Sci. 2020;5(4 (Mar.)):643–651.

- 14. Butt C., Gill J., Chun D., Babu B.A. Deep learning system to screen coronavirus disease 2019 pneumonia. Appl Intell. 2020;(Apr) doi: 10.1007/s10489-020-01714-3.

- 15. J. Zhang, Y. Xie, Y. Li, C. Shen, and Y. Xia, “COVID-19 screening on chest x-ray images using deep learning based anomaly detection,” Mar. 2020.

- 16. Salman F.M., Abu-Naser S.S., Alajrami E., Abu-Nasser B.S., Ashqar B.A.M. COVID-19 Detection using artificial intelligence. Int J Acad Eng Res. 2020;4(3):18–25.

- 17. Wang S. A fully automatic deep learning system for COVID-19 diagnostic and prognostic analysis. Eur Respir J. 2020;(May) doi: 10.1183/13993003.00775-2020.

- 18. O. Gozes et al., “Rapid AI development cycle for the coronavirus (COVID-19) pandemic: initial results for automated detection & patient monitoring using deep learning CT image analysis,” Mar. 2020.

- 19. Ghoshal B., Tucker A., Sanghera B., Wong W.L. Proceedings - IEEE Symposium on Computer-Based Medical Systems. Vol. 2019. 2019. Estimating uncertainty in deep learning for reporting confidence to clinicians when segmenting nuclei image data; pp. 318–324. June.

- 20. M. Barstugan, U. Ozkaya, and S. Ozturk, “Coronavirus (COVID-19) classification using CT images by machine learning methods,” Mar. 2020.

- 21. E. E.-D. Hemdan, M. A. Shouman, and M. E. Karar, “COVIDX-Net: a framework of deep learning classifiers to diagnose COVID-19 in x-ray images,” Mar. 2020.

- 22. A. Abbas, M. M. Abdelsamea, and M. M. Gaber, “Classification of COVID-19 in chest x-ray images using DeTraC deep convolutional neural network,” Apr. 2020.

- 23. L. O. Hall, R. Paul, D. B. Goldgof, and G. M. Goldgof, “Finding Covid-19 from chest x-rays using deep learning on a small dataset,” Apr. 2020.

- 24. L. Yan et al., “Prediction of criticality in patients with severe Covid-19 infection using three clinical features: a machine learning-based prognostic model with clinical data in Wuhan,” medRxiv, p. 2020.02.27.20028027, Mar. 2020.

- 25. Li L. Artificial Intelligence Distinguishes COVID-19 from Community Acquired Pneumonia on Chest CT. Radiology. 2020 doi: 10.1148/radiol.2020200905. Mar.

- 26. Apostolopoulos I.D., Mpesiana T.A. Covid-19: automatic detection from X-ray images utilizing transfer learning with convolutional neural networks. Phys Eng Sci Med. 2020;43(2 (Apr)):635–640. doi: 10.1007/s13246-020-00865-4.

- 27. C. Zheng et al., “Deep learning-based detection for COVID-19 from chest CT using weak label,” medRxiv, p. 2020.03.12.20027185, Mar

- 28. Imran A. AI4COVID-19: AI enabled preliminary diagnosis for COVID-19 from cough samples via an app. Inf Med Unlocked. 2020;(Jun) doi: 10.1016/j.imu.2020.100378.

- 29. Han D., Zhao N., Shi P. Gear fault feature extraction and diagnosis method under different load excitation based on EMD, PSO-SVM and fractal box dimension. J Mech Sci Technol. 2019;33(2):487–494.

- 30. Srinivasan A., Battacharjee P., Prasad A.I., Sanyal G. Proceedings of the 2nd international conference on electronics, communication and aerospace technology, ICECA 2018. 2018. Brain MR image analysis using discrete wavelet transform with fractal feature analysis; pp. 1660–1664.

- 31. Chaurasia V., Chaurasia V. Statistical feature extraction based technique for fast fractal image compression. J Vis Commun Image Represent. 2016;41:87–95.

- 32. McCann M.T., Jin K.H., Unser M. Convolutional neural networks for inverse problems in imaging: a review. IEEE Signal Process Mag. 2017;34(6):85–95.

- 33. Zhao J., He X., Yang X., Zhang Y., Zhang S., Xie P. COVID-CT-Dataset: a CT scan dataset about COVID-19. arXiv Prepr arXiv2003.13865. 2020

- 34. “COVID-19 - Medical segmentation.” [Online]. Available: https://medicalsegmentation.com/covid19/. [Accessed: 04-Jul-2020].