Abstract

Background

A systematic and extensive search for as many eligible studies as possible is essential in any systematic review. When searching for diagnostic test accuracy (DTA) studies in bibliographic databases, it is recommended that terms for disease (target condition) are combined with terms for the diagnostic test (index test). Researchers have developed methodological filters to try to increase the precision of these searches. These consist of text words and database indexing terms and would be added to the target condition and index test searches.

Efficiently identifying reports of DTA studies presents challenges because the methods are often not well reported in their titles and abstracts, suitable indexing terms may not be available and relevant indexing terms do not seem to be consistently assigned. A consequence of using search filters to identify records for diagnostic reviews is that relevant studies might be missed, while the number of irrelevant studies that need to be assessed may not be reduced. The current guidance for Cochrane DTA reviews recommends against the addition of a methodological search filter to target condition and index test search, as the only search approach.

Objectives

To systematically review empirical studies that report the development or evaluation, or both, of methodological search filters designed to retrieve DTA studies in MEDLINE and EMBASE.

Search methods

We searched MEDLINE (1950 to week 1 November 2012); EMBASE (1980 to 2012 Week 48); the Cochrane Methodology Register (Issue 3, 2012); ISI Web of Science (11 January 2013); PsycINFO (13 March 2013); Library and Information Science Abstracts (LISA) (31 May 2010); and Library, Information Science & Technology Abstracts (LISTA) (13 March 2013). We undertook citation searches on Web of Science, checked the reference lists of relevant studies, and searched the Search Filters Resource website of the InterTASC Information Specialists' Sub‐Group (ISSG).

Selection criteria

Studies reporting the development or evaluation, or both, of a MEDLINE or EMBASE search filter aimed at retrieving DTA studies, which reported a measure of the filter’s performance were eligible.

Data collection and analysis

The main outcome was a measure of filter performance, such as sensitivity or precision. We extracted data on the identification of the reference set (including the gold standard and, if used, the non‐gold standard records), how the reference set was used and any limitations, the identification and combination of the search terms in the filters, internal and external validity testing, the number of filters evaluated, the date the study was conducted, the date the searches were completed, and the databases and search interfaces used. Where 2 x 2 data were available on filter performance, we used these to calculate sensitivity, specificity, precision and Number Needed to Read (NNR), and 95% confidence intervals (CIs). We compared the performance of a filter as reported by the original development study and any subsequent studies that evaluated the same filter.

Main results

Ninteen studies were included, reporting on 57 MEDLINE filters and 13 EMBASE filters. Thirty MEDLINE and four EMBASE filters were tested in an evaluation study where the performance of one or more filters was tested against one or more gold standards. The reported outcome measures varied. Some studies reported specificity as well as sensitivity if a reference set containing non‐gold standard records in addition to gold standard records was used. In some cases, the original development study did not report any performance data on the filters. Original performance from the development study was not available for 17 filters that were subsequently tested in evaluation studies. All 19 studies reported the sensitivity of the filters that they developed or evaluated, nine studies reported the specificities and 14 studies reported the precision.

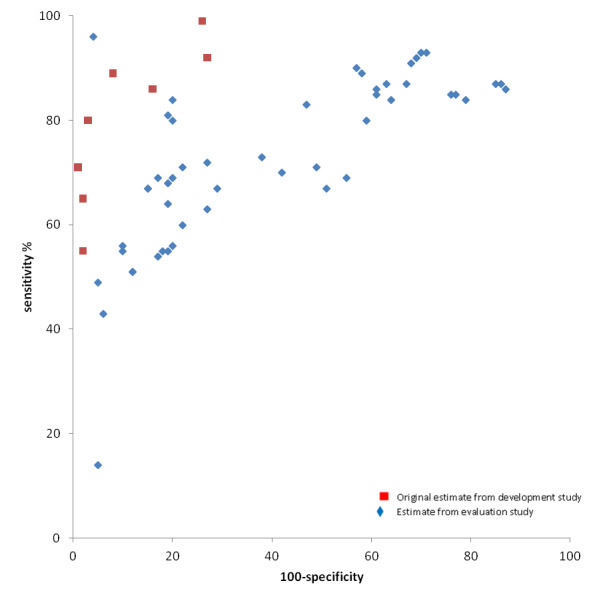

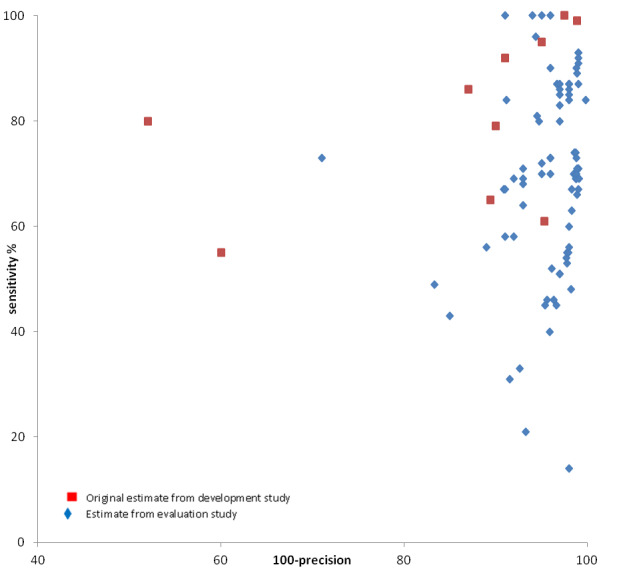

No filter which had original performance data from its development study, and was subsequently tested in an evaluation study, had what we defined a priori as acceptable sensitivity (> 90%) and precision (> 10%). In studies that developed MEDLINE filters that were evaluated in another study (n = 13), the sensitivity ranged from 55% to 100% (median 86%) and specificity from 73% to 98% (median 95%). Estimates of performance were lower in eight studies that evaluated the same 13 MEDLINE filters, with sensitivities ranging from 14% to 100% (median 73%) and specificities ranging from 15% to 96% (median 81%). Precision ranged from 1.1% to 40% (median 9.5%) in studies that developed MEDLINE filters and from 0.2% to 16.7% (median 4%) in studies that evaluated these filters. A similar range of specificities and precision were reported amongst the evaluation studies for MEDLINE filters without an original performance measure. Sensitivities ranged from 31% to 100% (median 71%), specificity ranged from 13% to 90% (median 55.5%) and precision from 1.0% to 11.0% (median 3.35%).

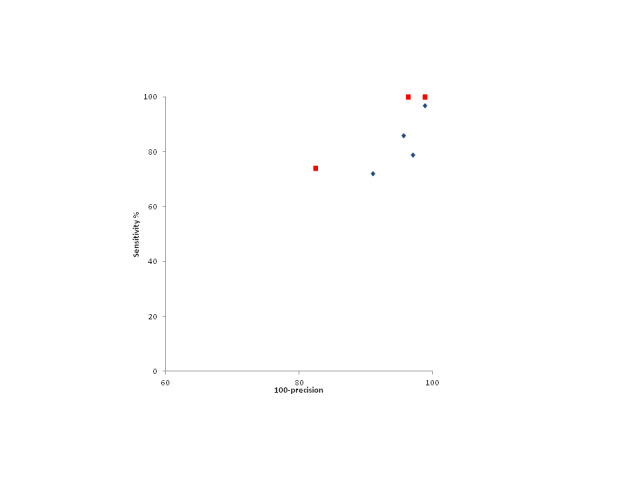

For the EMBASE filters, the original sensitivities reported in two development studies ranged from 74% to 100% (median 90%) for three filters, and precision ranged from 1.2% to 17.6% (median 3.7%). Evaluation studies of these filters had sensitivities from 72% to 97% (median 86%) and precision from 1.2% to 9% (median 3.7%). The performance of EMBASE search filters in development and evaluation studies were more alike than the performance of MEDLINE filters in development and evaluation studies. None of the EMBASE filters in either type of study had a sensitivity above 90% and precision above 10%.

Authors' conclusions

None of the current methodological filters designed to identify reports of primary DTA studies in MEDLINE or EMBASE combine sufficiently high sensitivity, required for systematic reviews, with a reasonable degree of precision. This finding supports the current recommendation in the Cochrane Handbook for Systematic Reviews of Diagnostic Test Accuracy that the combination of methodological filter search terms with terms for the index test and target condition should not be used as the only approach when conducting formal searches to inform systematic reviews of DTA.

Plain language summary

Search strategies to identify diagnostic accuracy studies in MEDLINE and EMBASE

A diagnostic test is any kind of medical test performed to help with the diagnosis or detection of a disease. A systematic review of a particular diagnostic test for a disease aims to bring together and assess all the available research evidence. Bibliographic databases are usually searched by combining terms for the disease with terms for the diagnostic test. However, depending on the topic area, the number of articles retrieved by such searches may be very large. Methodological filters consisting of text words and database indexing terms have been developed in the hope of improving the searches by increasing their precision when these filters are added to the search terms for the disease and diagnostic test. On the other hand, using filters to identify records for diagnostic reviews may miss relevant studies while at the same time not making a big difference to the number of studies that have to be assessed for inclusion. This review assessed the performance of 70 filters (reported in 19 studies) for identifying diagnostic studies in the two main bibliographic databases in health, MEDLINE and EMBASE. The results showed that search filters do not perform consistently, and should not be used as the only approach in formal searches to inform systematic reviews of diagnostic studies. None of the filters reached our minimum criteria of a sensitivity greater than 90% and a precision above 10%.

Background

As with Cochrane reviews of interventions, Cochrane diagnostic test accuracy (DTA) reviews should aim to identify and evaluate as much available evidence about a specific topic as possible within the available resources (DeVet 2008). Thus, a systematic and extensive search for eligible studies is an essential step in any review. Recommendations for searching for DTA studies are that electronic bibliographic databases, such as MEDLINE and EMBASE, should be searched by combining search terms for disease indicators (target condition) with terms for the diagnostic test (index test) (DeVet 2008). Depending on the topic area, the number of articles retrieved by such searches may be too large to be processed with the available resources. A number of methodological filters consisting of text words and database specific indexing terms (such as MEDLINE Medical Subject Headings (MeSH)) have been developed in an attempt to increase the precision of searches and reduce the resources required to process results. These search filters are typically added to a search strategy consisting of the target condition and index test(s).

Methodological search filters have been developed for retrieving articles relating to many types of clinical question, including those about aetiology, diagnosis, prognosis and therapy. These filters are typically combinations of database indexing terms or text words, or both, that reflect the study design and statistical methods reported by the articles’ authors. For example, Haynes and co‐workers have developed a series of filters to assist searchers to retrieve articles according to aetiology, diagnosis, prognosis or therapy (Haynes 1994; Haynes 2005; Haynes 2005a; Wilczynski 2003; Wilczynski 2004). They are available as ‘Clinical Queries’ limits in both PubMed and via the OvidSP interfaces for MEDLINE and EMBASE (NLM 2005; OvidSP 2013; OvidSP 2013a).

Methodological search filters have proved to be particularly effective in identifying intervention (therapy) studies. Within The Cochrane Collaboration, a highly sensitive search strategy is widely used for identifying reports of randomised trials in MEDLINE (Lefebvre 2011).

For DTA studies, however, the relevant methodology is often not well reported by authors in their titles and abstracts. In addition, MEDLINE lacks a suitable publication type indexing term to apply to DTA studies. EMBASE has recently introduced a check tag for DTA studies (diagnostic test accuracy) but this is only being prospectively applied. Some relevant indexing terms do exist in both EMBASE and MEDLINE, for example sensitivity and specificity, however they are inconsistently assigned by indexers to DTA studies (Fielding 2002; Wilczynski 1995; Wilczynski 2005a; Wilczynski 2007). A consequence of adding filters to subject and index term strategies to identify records for DTA reviews is that relevant studies might be missed without, at the same time, significantly reducing the number of studies that have to be assessed for inclusion (Doust 2005; Leeflang 2006; Whiting 2008; Whiting 2011).

We conducted a methodology review of empirical studies that reported the development and evaluation of methodological search filters to retrieve reports of DTA studies in MEDLINE and EMBASE to assess the value of adding methodological search filters to search strategies to identify records for inclusion in DTA reviews. Until now, a comprehensive and systematic review of studies that develop or evaluate diagnostic search filters has not been published. The findings of this review will help to elucidate the performance of these filters to find studies relevant to diagnostic systematic reviews and to allow a recommendation for their use (or not) when conducting literature searches.

Objectives

To systematically review empirical studies that report the development or evaluation, or both, of methodological search filters designed to retrieve diagnostic test accuracy (DTA) studies in MEDLINE and EMBASE.

Methods

Criteria for considering studies for this review

Types of studies

Primary studies of any design were included. Studies in which the main objective was the development or evaluation, or both, of a methodological filter for the purpose of searching for DTA studies in MEDLINE and EMBASE were eligible. We defined a development study as one in which a new filter was conceived, tested in a reference set of diagnostic studies, and the performance reported. An evaluation study was one in which a filter from a development study publication was tested in a new reference set and the performance reported. A study could be both a development and an evaluation study if it reported on the development and performance of a newly designed filter and evaluated a filter which had previously been published by a different development study. We also included filters assessed in evaluation studies for which there was no corresponding development study publication. We excluded studies that developed or evaluated filters designed to retrieve clinical prediction studies or prognostic studies.

Types of data

Eligible studies must have reported the performance of search filters using a recognised measure, such as sensitivity or precision.

Types of methods

Assessments of the performance of search strategies for identifying reports of DTA in MEDLINE and EMBASE.

Types of outcome measures

Eligible outcome measures were those that assessed the accuracy of the search.

Primary outcomes

Measures of search performance, including:

sensitivity (proportion of relevant reports correctly retrieved by the filter);

specificity (proportion of irrelevant reports correctly not retrieved by the filter);

accuracy (the highest possible sensitivity in combination with the highest possible specificity);

precision (the number of relevant reports retrieved divided by the total number of records retrieved by the filter).

We defined a priori the levels of sensitivity (> 90%) and precision (> 10%) from the external validation of evaluation studies as the acceptable threshold for use when searching for DTA studies.

Secondary outcomes

Number Needed to Read (NNR) (also called Number Needed to Screen), which is the inverse of the precision (Bachmann 2002).

Search methods for identification of studies

Electronic searches

The following databases were searched to identify relevant studies: MEDLINE (1950 to week 1 November 2012); EMBASE (1980 to 2012 Week 48); the Cochrane Methodology Register (Issue 3, 2012); ISI Web of Science (11 January 2013); PsycINFO (13 March 2013); Library and Information Science Abstracts (LISA) (31 May 2010); and Library, Information Science & Technology Abstracts (LISTA) (13 March 2013). Three information specialists developed and conducted the searches. The search strategies are listed in the appendices (Appendix 1; Appendix 2; Appendix 3; Appendix 4; Appendix 5; Appendix 6; Appendix 7). No language restrictions were applied.

Searching other resources

We also undertook citation searches of the included studies on Web of Science. Furthermore, reference lists of all relevant studies were assessed (Horsley 2011) and the Search Filters Resource website of the InterTASC Information Specialists' Sub‐Group (ISSG) was screened (InterTASC 2011). InterTASC is a collaboration of six academic units in the UK who conduct and critique systematic reviews for the National Institute for Health and Care Excellence.

Data collection and analysis

Selection of studies

Two authors independently screened the titles and abstracts of all retrieved records. Inclusion assessment of full papers was conducted by one author and checked by a second. Any disagreements were resolved through discussion or referral to a third author.

Data extraction and management

Data extraction was performed by one author and checked by a second; disagreements were resolved through discussion. The ISSG Search Filter Appraisal Checklist (Glanville 2008) was used to structure the data extraction and assessment of methodological quality. This checklist was developed using consensus methods and tested on several filters. It assesses the scope of the filter (limitations, generalisability and obsolescence), and the methods used to develop the filter, including the generation of the reference set.

Data were extracted on the characteristics of the reference set (inclusion of gold and non‐gold standard records, years of publication of the records, journals covered, inclusion criteria, size); how search terms were identified; presence of internal and external validity testing; and any limitations or comparisons between studies. In the context of filter development, the reference set is the same as the reference standard or gold standard in DTA studies. In contrast, the gold standard in the context of filter development is equivalent to diseased individuals in diagnostic accuracy studies (that is the 'relevant' studies) and the non‐gold standard is equivalent to non‐diseased individuals (that is the non‐relevant studies).

Data were also extracted on the date the study was conducted; the date the searches were completed; the database(s) and search interface(s) used; the outcome measures of performance (sensitivity, specificity, precision) and their definitions; and whether the search strategy was developed for specific clinical areas or to identify diagnostic studies over a broad range of topics. We assessed whether the search strategies were described in sufficient detail to be reproducible (that is were the search terms and their combination reported, were the dates of the search reported, and was the interface and database reported?).

Where studies reported data on multiple filters, results were extracted for each filter. However, for filter development studies, if data were also presented on the sensitivity and precision of all tested individual terms, only single term filters that the original authors selected as reporting best performance were extracted, as well as all multiple term filters.

Assessment of risk of bias in included studies

Bias occurs if systematic flaws or limitations in the design or conduct of a study distort the results. Applicability refers to the generalisability of results: can the results of the filter development or evaluation study be applied to other settings with different populations, index tests, reference standards or target conditions?

We identified three areas that we considered to have the potential to introduce bias or affect the applicability of the included studies.

1. Absence of DTA search strategy in reference set development: bias may be introduced when either a development or an evaluation study used a systematic review (or reviews) to provide studies for the reference set, and this systematic review used a search strategy containing diagnostic terms to find primary studies. This could introduce bias because the performance of a filter tested in this reference set will naturally be higher when the difficult to retrieve studies have been missed by the reference set search.

2. Choice of gold standard: concerns about applicability may be introduced in both development and evaluation studies in the generalisability of the filter to all diagnostic studies. Some filters have been developed or evaluated using a reference set that is composed of topic specific studies (such as studies on the diagnosis of deep vein thrombosis), whereas other reference sets will be generic (studies covering a wide range of diagnostic tests and conditions). Ideally, a filter will perform equally well across different topic areas but if it is only evaluated in one specific topic area its performance in other areas will be unclear.

3. Validation of filters in development studies: the process of validation can be split into two parts; the method of internal validation can have bias issues, while the method of external validation (if done) can have both applicability and bias issues. Internal validity is the ability of the filter to find studies from the reference set from which it was developed. A study could be at risk of bias if the internal validation set contained the references from which the filter terms were derived. External validity is the ability of the filter to find studies in a real‐world setting (that is using a reference set composed of topic specific studies). This relates to how generalisable the results are to searching for diagnostic studies for different systematic review topics and most closely relates to how the filters would be used in practice by systematic reviewers. This issue only applies to development studies. A study which has used external validation in a real‐world setting will be judged to have low levels of concern about applicability. However, a study that includes external validity testing could still be at risk of bias if the validity testing occurred in a validation set containing the references used to derive the terms.

Data synthesis

We synthesised performance measures of the filters separately for MEDLINE and EMBASE. We tabulated the performance measures reported by development and evaluation studies grouped by individual filters, so that a comparison could be made between the original reported performance of a filter and its performance in subsequent evaluation studies. If sensitivity, specificity or precision together with 95% confidence intervals (CIs) were not reported in the original reports, these were calculated from the 2 x 2 data, where possible.

Each of the performance measures can be calculated as shown by the formulae below (a further description of performance measures is available in Appendix 8).

| Reference set | |||

| Gold standard records | Non‐gold standard records | ||

| Searches incorporating methodological filter | Detected | a (true positive) | b (false positive) |

| Not detected | c (false negative) | d (true negative) | |

Sensitivity = a/(a + c)

Precision = a/(a + b)

Specificity = d/(b + d)

Accuracy = (a + d)/(a + b + c + d)

Number needed to read = 1/(a/(a + b))

Reference set = gold standard + non‐gold standard records = (a + b + c + d)

Gold standard = relevant DTA studies = a + c

NB. This is different to the gold (reference) standard in DTA studies, which is equivalent to the reference set in filter evaluations. The gold standard in DTA studies is able to correctly identify the true positives and as well as the true negatives, unlike the gold standard in a filter evaluation study which is limited to the true positives.

Paired results of either sensitivity and specificity or sensitivity and precision for each filter were displayed in receiver operating characteristic (ROC) plots. The original individual filter performance estimates from the development studies were plotted in the same ROC space as the individual filter performance estimates from the evaluation studies, to allow for visual inspection of disparities and similarities. We did not pool data due to heterogeneity across studies.

Results

Description of studies

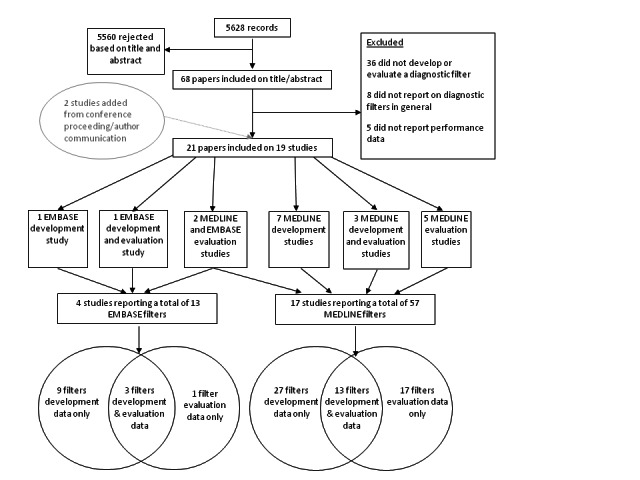

The searches retrieved 5628 records, of which 19 studies reported in 21 papers met the inclusion criteria (Figure 1). These assessed 57 MEDLINE filters and 13 EMBASE filters.

1.

Study selection process.

MEDLINE search filters

Description of development studies

Ten studies reported on the development of 40 MEDLINE filters (range 1 to 12 filters per study). Key features of each study are summarized in the Characteristics of included studies table and Table 1. Thirty‐one filters were composed of multiple terms and nine filters were single term strategies. Nine filters consisted of MeSH terms only, six filters had text words only, and 25 filters combined MeSH with text words. Full details of methods used in each study and the size of the reference set are given in Table 2. A description of each filter and its performance are listed in Table 3.

1. Summary of study designs of MEDLINE filter development studies.

| Author (year) | ||||||||||

| Astin 2008 | Berg 2005 | van der Weijden 1997 | Deville 2002 | Deville 2000 | Haynes 2004 | Haynes 1994 | Bachmann 2002 | Vincent 2003 | Noel‐Storr 2011 | |

| Method of identification of reference set records (one from list below selected for each study) | ||||||||||

| Hand‐searching for primary studies | ✓ | ✓ | ‐ | ‐ | ✓ | ✓ | ✓ | ✓ | ‐ | ‐ |

| DTA systematic reviews | ‐ | ‐ | ‐ | ✓ | ‐ | ‐ | ‐ | ‐ | ✓ | ✓ |

| Personal literature database | ‐ | ‐ | ✓ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ |

| If systematic reviews used in reference set development, did they include DTA search terms in search strategy? | ||||||||||

| ‐ | ‐ | ‐ | Unclear | ‐ | ‐ | ‐ | ‐ | ✓ | X | |

| Reference set also contained non‐gold standard records | ||||||||||

| ✓ | ✓ | X | NR | ✓ | ✓ | ✓ | ✓ | X | ✓ | |

| Description of non‐gold standard records if used in reference set | NR | ‐ | ‐ | ‐ | ‐ | NR | NR | NR | ‐ | ‐ |

| All studies retrieved by search not classified as gold standard records | ✓ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ✓ | |

| False positive papers selected by a previously published search strategy, exclusion of some publication types e.g. reviews and meta‐analyses. | ‐ | ‐ | ‐ | ✓ | ‐ | ‐ | ‐ | ‐ | ‐ | |

| Generic gold standard records i.e. not topic specific | ||||||||||

| X | X | X | X | X | ✓ | ✓ | ✓ | X | X | |

| Method of deriving filter terms (a combination of methods could be used) | ||||||||||

| Analysis of reference set | ✓ | ✓ | ‐ | ‐ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓* |

| Expert knowledge | ‐ | ‐ | ‐ | ‐ | ‐ | ✓ | ✓ | ‐ | ‐ | ‐ |

| Adaption of existing filter | ‐ | ✓ | ‐ | ✓ | ‐ | ‐ | ‐ | ‐ | ✓ | ‐ |

| Checking key publications for terms and language used | ‐ | ‐ | ✓ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ |

| Internal validation in reference set independent from records used to derive filter terms | ||||||||||

| ✓ | X | N/A** | N/A** | X | X | X | ✓ | X | X | |

| External validation in reference set independent from records used to derive filter terms and internal validation set | ||||||||||

| X | X | ✓ | ✓ | ✓ | X | X | ✓ | X | X | |

*Noel‐Storr derived filter terms by running published search filters in MEDLINE combined with a subject search, locating 10 papers that all filters missed and choosing a term from the title/abstract or keywords of each.

** Only external validation was carried out (no internal validation) in real‐world topics.

Abbreviations used: NR= not reported; N/A= not applicable

2. Study characteristics and methods of MEDLINE development studies.

| Author (Year) Study ID | Identification of reference set | How was reference set used | How were search terms identified for filter | Ref set years | # gold standard records | # non‐gold standard records | # journals ref set |

| Astin 2008 | Hand search. Articles reporting on imaging as a diagnostic test in imaging journals. 6 high impact journals used to find studies for development set and 6 lower impact journals used to find studies for validation set. Journals indexed in MEDLINE and were also selected to cover general radiology, specific modalities and specific systems. | Two independent sets of records developed. Test set used to derive terms and test strategies. Validation set used to test external validity | Performed statistical analysis of terms in test set | development set 1985 Clin Radiol, 1988 Am J Neuroradiol; validation set 2000 | 333 in development set; 186 in validation set | 2222 in development set; 1070 in validation set | 12 (6 in development set; 6 in validation set) |

| Berg 2005 | Manual review of a certain set of articles found using a search (via PubMed) combining sensitive terms for nursing literature plus cancer‐related fatigue diagnosis terms. Manual review of these articles carried out to find diagnostic studies. | To derive terms and test strategies. Did not validate in a separate set of references | Existing PubMed Clinical Queries filter with extra terms from filters for CINAHL, medical publications, published recommendations & diagnosis definitions. Inductively collected terms derived from indexing of included citations: MeSH terms and frequently used text words in titles/abstracts. | NR | NR | 238 | NR |

| van der Weijden 1997 | Personal literature database compiled over 10 years 'by every means of literature searching' of studies reporting on erythrocyte sedimentation rate as a diagnostic test. | To test strategies. | Checking key publications for definitions & terms used. | 1985‐1994 | 221 | 0 | NR |

| Deville 2002 | Studies included in two systematic reviews (relative recall). | To test strategies | Adapted three published search strategies | NR | NR | NR | NR |

| Deville 2000 | Reference set of publications found through handsearch of 9 highest rank family medicine journals available on MEDLINE for years 1992‐95. A ‘control’ set of publications for testing validity of strategies was found by adapting Haynes 1991 most sensitive and most specific searches by adding terms, then run in MEDLINE to retrieve all diagnostic primary studies, then limited to the 9 journals. | To derive terms from reference set; to test strategies in control set; to test external validity the best performing filters were compared against Haynes filters in a systematic review (SR) of meniscal lesions in the knee. | Performed statistical analysis of terms in reference set. Univariate analysis to calculate sensitivity, specificity & diagnostic odds ratio (DOR) of all relevant MeSH terms & text words. Models developed by forward stepwise logistic regression analysis. | 1992‐1995 | 75; 33 in meniscal lesions set | 2392; NR meniscal lesions set | 9 |

| Haynes 2004 | Manual review of 161 journals indexed on MEDLINE for year 2000. Journal titles regularly reviewed for appraisal for Evidence Based Medicine, Evidence Based Nursing, Evidence Based Mental Health and ACP Journal Club. | Test strategies and validate. The reference standard could not be divided into a test set and validation set. | MeSH terms and text words listed using expert knowledge of the field. | 2000 | 147 | 48881 | 161 |

| Haynes 1994 | Manual review of 10 high impact journals for the years 1986 and 1991. The 10 journals searched were American Journal of Medicine, Annals of Internal Medicine, Archives of Internal Medicine, BMJ, Circulation, Diabetes Care, Journal of Internal Medicine, JAMA, Lancet and NEJM | To test strategies and validate. | MeSH terms and text words listed using expert knowledge of the field. | 1986 and 1991 | 92 in 1986 set; 111 in 1991 set | 426 in 1986 set; 301 in 1991 set. | 10 |

| Bachmann 2002 | Hand search European Journal of Paediatrics, Gastroenterology, American Journal of Obstetrics and Gynecology, and Thorax for years 1989 and 1994. Four different journals searched in 1999: NEJM, JAMA, BMJ and Lancet. | 1989 set search used to derive terms and test strategies, 1994 and 1999 sets used to validate | Word frequency analysis on titles, abstracts and subject indexes of all references in 1989 set. | 1989, 1994 and 1999 | 83 in 1989 test set; 53 in 1994 validation set; 61 in 1999 validation set. | 1646 in 1989 test set; 1744 in 1994 validation set; 7875 in 1999 validation set | 8 |

| Vincent 2003 | SRs retrieved from MEDLINE and EMBASE on OVID reporting on diagnostic tests for DVT. 16 SRs selected and all articles included that were indexed on MEDLINE became the reference set. Only English language articles included | To test strategies | Adapted from 5 published strategies: CASP, PubMed, Rochester, Deville, and North Thames | 1969‐2000 | 126 | 0 | NR |

| Noel‐Storr 2011 | SR on the volume of evidence in biomarker studies in those with mild cognitive impairment, conducted by the authors. | To derive terms; to test strategies | Published search filters applied in MEDLINE combined with a subject search (Southampton A, Van der Weijden, and Southampton E), 10 papers were missed by all filters. One term from the title/abstract or keywords of each of 10 papers combined in the new filter. | 2000‐2011 | 128 in Sept 2010 set; additional 16 found in update search therefore 144 in August 2011 | 17266 in Sept 2010 set; additional 1654 found in update search therefore 18920 in August 2011 | NR |

Abbreviations used: NR=Not reported; ref set= reference set

3. Performance of diagnostic filters from MEDLINE development studies.

| Author | Filter Description | Interface | Reference set |

Sensitivity % (95% CI) |

Specificity % (95% CI) |

Accuracy (95% CI) |

Precision% (95% CI) |

NNR (95% CI) |

| Astin 2008 | 1. Exp "sensitivity and specificity"/ 2. False positive reactions/ 3. False negative reactions/ 4. du.fs 5. sensitivity.tw 6. (predictive adj4 value$).tw 7. distinguish$.tw 8. differentiat$.tw 9. enhancement.tw 10. identif$.tw 11. detect$.tw 12. diagnos$.tw 13. accura$.tw 14. comparison.tw 15. or/1‐14 | Ovid | Derivation set | 95.8 (93.1, 97.5) |

52.3 (50.2, 54.3) |

23.1 (21.0‐25.4) |

0.04* | |

| Validation set | 96.8 (93.1, 98.5) |

43.9 (41.0, 46.9) |

23.1 (20.3‐26.2) |

0.04* | ||||

| Berg 2005 | Some search terms were combined using "OR" thus increasing sensitivity and reducing specificity (e.g. nursing assessment [MeSH: noexp] AND questionnaire [Text Word])

Exemplary MeSH terms ‐ Diagnosis, Differential; psychological tests; Likelihood functions; Area Under Curve; diagnostic tests; routine; diagnosis [MeSH subheading]; Diagnostic Techniques and Procedures; nursing assessment. Exemplary text words: sensitivity; specificity; predictive value; validity; reliability; likelihood ratio; questionnaire. |

PubMed | 87 | 73 | Positive likelihood ratio (PLR)=3.2 | 2.3 | ||

| Some search terms were combined using "AND" thus increasing specificity and reducing sensitivity (e.g. nursing assessment [MeSH: noexp] AND questionnaire [Text Word])

Exemplary MeSH terms ‐ Diagnosis, Differential; psychological tests; Likelihood functions; Area Under Curve; diagnostic tests; routine; diagnosis [MeSH subheading]; Diagnostic Techniques and Procedures; nursing assessment. Exemplary text words: sensitivity; specificity; predictive value; validity; reliability; likelihood ratio; questionnaire. |

PubMed | 76 | 83 | PLR= 6.3 | 1.7 | |||

| Haynes 2004 | sensitiv:.mp OR diagnos:.mp OR di.fs | Ovid | 98.6 (96.8‐100) |

74.3 (73.9‐74.7) |

74.3 (74.0‐74.7) |

1.1 (1.0‐1.3) |

0.9* | |

|

High specificity: specificity.tw |

Ovid | 64.6 (56.9‐72.4) |

98.4 (98.2‐98.5) |

98.3 (98.1‐98.4) |

10.6 (8.6‐12.6) |

0.09* | ||

|

High Sensitivity: di.xs. |

Ovid | 91.8 (87.4‐96.3) |

68.3 (67.9‐68.7) |

68.4 (68.0‐68.8) |

0.9 (0.7‐1.0) |

1.11* | ||

| sensitiv:.mp OR predictive value:.mp OR accurac:.tw | Ovid | 92.5 (88.3‐96.8) |

92.1 (91.8‐92.3) |

92.1 (91.8‐92.3) |

3.4 (2.8‐3.9) |

0.29* | ||

|

Optimising sensitivitiy and specificity: exp "diagnostic techniques and procedures" |

Ovid | 66.7 (59.1‐74.3) |

74.6 (74.2‐75.0) |

74.5 (74.2‐74.9) |

0.8 (0.6‐0.9) |

1.25* | ||

| Sensitive:.mp. OR diagnos:.mp. OR accuracy.tw. | Ovid | 98.0 (95.7‐100.0) |

82.7 (82.4‐83.1) |

82.8 (82.5‐83.1) |

1.7 (1.4‐2.0) |

0.59* | ||

| Sensitive:.mp. OR diagnos:mp. OR test:.tw. | Ovid | 98.0 (95.7‐100.0) |

75.1 (74.8‐75.5) |

75.2 (74.8‐75.6) |

1.2 (1.0‐1.4) |

0.83* | ||

| Specificity.tw. OR predictive value:.tw. | Ovid | 72.8 (65.6‐80.0) |

97.9 (97.8‐98.1) |

97.9 (97.7‐98.0) |

9.6 (7.9‐11.3) |

0.10* | ||

| Accuracy:.tw. OR predictive value:tw. | Ovid | 52.4 (44.3‐60.5) |

97.9 (97.8‐98.1) |

97.8 (97.7‐97.9) |

7.1 (5.6‐8.6) |

0.14* | ||

| Sensitive:.mp. OR diagnostic.mp. OR predictive value:.tw. | Ovid | 92.5 (88.3‐96.8) |

91.8 (91.6‐92.1) |

91.8 (91.6‐92.1) |

3.3 (2.8‐3.8) |

0.30* | ||

| Exp sensitivity and specificity OR predicitive value:.tw. | Ovid | 79.6 | 94.9 | 94.8 | 4.5 | 0.22* | ||

| Haynes 1994 |

Best sensitivity: diagnosis (subheading pre‐explosion) OR specificity (tw) |

NR | 86 | 73 | 73 | 7 | 0.14* | |

|

Best accuracy: Exp sensitivity and specificity OR diagnosis (subheading) OR diagnostic use (subheading) OR specificity (tw) OR predicitive (tw) AND value (tw)) |

NR | 86 | 84 | 84 | 13 | 0.08* | ||

|

Best specificity: specificity (tw) OR (predictive (tw) AND value (tw)) OR (false (tw) and positive (tw)) |

NR | 49 | 98 | 36 | 0.03* | |||

|

Best specificity: Exp sensitivity and specificity OR predictive (tw) AND value (tw) |

NR | 55 | 98 | 40 | 0.03* | |||

| Diagnosis (subheading pre‐explosion) OR Specificity (tw) | NR | 86 | 73 | 7 | 0.14* | |||

|

Best sensitivity: Exp sensitivity and specificity OR diagnosis (subheading pre‐explosion) OR diagnostic use (subheading) OR sensitivity (tw) OR specificity (tw) |

NR | 92 | 73 | 9 | 0.11* | |||

| Haynes (2004) 2000 ref set | 96.6 | 65 | 0.008 | 65.7 | 0.02* | |||

| Diagnostic use (sh) | NR | 1986 set | 16 | 96 | 10 | 0.10* | ||

| 1991 set | 26 | 96 | 18 | 0.06* | ||||

| Diagnosis (sh) | NR | 1986 set | 62 | 89 | 9 | 0.11* | ||

| 1991 set | 59 | 88 | 13 | 0.08* | ||||

| Diagnosis& (px) | NR | 1986 set | 79 | 74 | 60 | 0.02* | ||

| 1991 set | 80 | 77 | 90 | 0.01* | ||||

| Exp Sensitivity and Specificity | NR | 1991 set | 50 | 98 | 3 | 0.33* | ||

| Specificity (tw) | NR | 1991 set | 54 | 96 | ||||

| Sensitivity (tw) | NR | 1991 set | 57 | 97 | ||||

| 1986 set | 43 | 98 | 3 | 0.33* | ||||

| van der Weijden 1997 |

MeSH short strategy (terms OR'd together) explode DIAGNOSIS/diagnosis DIAGNOSIS‐DIFFERENTIAL/all subheadings. explode SENSITIVITY‐AND‐SPECIFICITY REFERENCE‐VALUES/all subheadings . FALSE‐NEGATIVE‐REACTIONS/ all subheadings . FALSE‐POSITIVE‐REACTIONS/ all subheadings . explode MASS‐SCREENING/ all subheadings . |

OVID | 31 | 34 | 0.03* | |||

|

MeSH extended strategy (terms OR'd together) explode DIAGNOSIS/ all subheadings . explode SENSITIVITY‐AND‐SPECIFICITY REFERENCE‐VALUES/all subheadings . FALSE‐NEGATIVE‐REACTIONS/ all subheadings . FALSE‐POSITIVE‐REACTIONS/ all subheadings . Explode MASS‐SCREENING/ all subheadings . |

OVID | 69 | 11 | 0.09* | ||||

|

MeSH extended and free text strategy explode DIAGNOSIS/ all subheadings . explode SENSITIVITY‐AND‐SPECIFICITY REFERENCE‐VALUES/all subheadings . FALSE‐NEGATIVE‐REACTIONS/ all subheadings . FALSE‐POSITIVE‐REACTIONS/ all subheadings . Explode MASS‐SCREENING/ all subheadings . diagnos* OR sensitivity or specificity OR predictive value* OR reference value* OR ROC* OR likelihood ratio* OR monitoring |

OVID | 91 | 10 | 0.1* | ||||

| Deville 2002 | Sensitivity and specificity [Mesh; exploded] OR mass screening [Mesh; exploded] OR reference values [Mesh] OR false positive reactions [Mesh] OR false negative reactions [Mesh] OR specificit$.tw OR screening.tw OR false positive$.tw OR false negative$.tw | NR | Knee lesions SR | 70 | ||||

| Urine dipstick SR | 92 | |||||||

| Bachmann 2002 | "SENSITIVITY AND SPECIFICITY"# OR predict* OR diagnos* OR sensitiv* | Datastar | 1989 test set | 92.8 (84.9‐97.3) |

15.6 | 6.4 (5.2‐8.0) |

||

| 1994 validation set | 98.1 | 10.9 | 9.2 | |||||

| 1999 validation set | 91.8 | 4.7 | 21.3 | |||||

| "SENSITIVITY AND SPECIFICITY"# OR predict* OR diagnos* OR accura* | Datastar | 1989 test set | 95.2 (88.1‐98.7) |

16.9 | 5.9 (4.8‐7.3) |

|||

| 1994 validation set | 98.1 (89.9‐99.9) |

12 (9.1‐1.4) |

8.3 (6.7‐11.3) |

|||||

| 1999 validation set | 95.1 | 5 | 20.0 | |||||

| Vincent 2003 |

Strategy A 1. exp 'sensitivity and specificity'/; 2. (sensitivity or specificity or accuracy).tw.; 3. ((predictive adj3 value$) or (roc adj curve$)).tw.; 4. ((false adj positiv$) or (false negativ$)).tw.; 5. (observer adj variation$) or (likelihood adj3 ratio$)).tw.; 6. likelihood function/; 7. exp mass screening/; 8. diagnosis, differential/ or exp Diagnostic errors/; 9. di.xs or du.fs; 10. or/1‐9 |

Ovid | 100 | 3* | 0.33* | |||

|

Strategy B 1. exp 'sensitivity and specificity'/; 2. (sensitivity or specificity or accuracy).tw.; 3. (predictive adj3 value$); 4. exp Diagnostic errors/; 5. ((false adj positiv$) or (false adj negativ$)).tw; 6. (observer adj variation$).tw; 7. (roc adj curve$).tw; 8. (likelihood adj3 ratio$).tw.; 9. likelihood function/; 10. exp *venous thrombosis/di, ra, ri, us; 11. exp *thrombophlebitis/di, ra, ri, us; 12. or/1‐11 |

Ovid | 98.4 | 5* | 0.2* | ||||

|

Strategy C 1. exp 'sensitivity and specificity'/; 2. (sensitivity or specificity or accuracy).tw.; 3. ((predictive adj3 value$) or (roc adj curve$)).tw.; 4. ((false adj positiv$) or (false negativ$)).tw.; 5. (observer adj variation$); 6. likelihood function/ or; 7. exp Diagnostic errors/; 8. (likelihood adj3 ratio$).tw.; 9. or /1‐8 |

Ovid | 79.4 | 10* | 0.1* | ||||

| Deville 2000 |

Strategy 4 SENSITIVITY AND SPECIFICITY (exp) OR specificity (tw) OR false negative (tw) OR accuracy (tw) OR screening (tw) |

NR | 89.3 (82.3‐96.3) |

91.9 (90.8‐93) |

DOR 95 | |||

| Meniscal lesion | 61 (42.1‐77.1) |

4.7 | 0.22* | |||||

|

Strategy 3 SENSITIVITY AND SPECIFICITY (exp) OR specificity (tw) OR false negative (tw) OR accuracy (tw) |

NR | 80.0 (71.0‐89.1) |

97.3 (96.6‐97.9) |

48 (40‐56) |

DOR 149 | |||

|

Strategy 2 SENSITIVITY AND SPECIFICITY (exp) OR specificity (tw) OR false negative (tw) |

NR | 73.3 (63.3‐83.3) |

98.4 (97.9‐98.9) |

DOR 170 | ||||

|

Strategy 1 SENSITIVITY AND SPECIFICITY (exp) OR specificity (tw) |

NR | 70.7 (60.4‐81.0) |

98.5 (98.0‐98.9) |

DOR 158 | ||||

| Noel‐Storr 2011 | 1. Disease progression/ 2. di.fs. 3. logitudinal*.ab. 4. Follow‐up studies/ 5. conversion.ab. 6. transition.ab. 7. converters.ab. 8. progressive.ab. 9. “increased risk”.ab. 10. “follow‐up”.ab. |

Ovid | 2000‐Sept 2010 | 97 (92‐99) |

38 (37‐39) |

1.1 (0.95‐1.4) |

||

| 2000‐Aug 2011 | 98 (94‐100) |

38 (37‐39) |

1.2 (1.0‐1.4) |

NR=Not reported

Method of identification of reference set records

Different methods were used to compile the reference sets. Six studies handsearched journals to obtain a database of ‘gold standard’ references reporting relevant DTA studies (Astin 2008; Berg 2005; Deville 2000; Haynes 1994; Haynes 2004).

Three studies used a relative recall reference standard, that is the reference set was based on studies included in systematic reviews. Deville 2002 used references from two published systematic reviews (on diagnosing knee lesions and the accuracy of urine dipstick testing) that had formed part of the first author's thesis. Noel‐Storr 2011 used the references from a systematic review on the volume of evidence in biomarker studies in people with mild cognitive impairment. Another study (van der Weijden 1997) developed a reference set based on a personal literature database on erythrocyte sedimentation rate as a diagnostic test, compiled over 10 years ‘by every means of literature searching’. Finally, one study used a validated filter to locate systematic reviews indexed in MEDLINE and EMBASE reporting on diagnostic tests for deep vein thrombosis, and used the studies included in these reviews as the reference set (Vincent 2003).

Two of the 10 studies described above included all articles that were retrieved by the search for gold standard records but which were subsequently rejected from the gold standard as the non‐gold standard records in the reference set (Berg 2005; Noel‐Storr 2011). A third study used the false positive articles selected by a search using a previously published diagnostic search strategy as the non‐gold standard records in the reference set (Deville 2000). This study further restricted the non‐gold standard studies by excluding reviews, meta‐analyses, comments, editorials and animal studies. The remaining studies that included non‐gold standard records in their reference set did not provide details on how these were identified.

Composition of reference set

Seven studies included both gold and non‐gold standard references in their reference sets (Astin 2008; Bachmann 2002; Berg 2005; Deville 2000; Haynes 1994; Haynes 2004; Noel‐Storr 2011) and two studies used only gold standard studies (van der Weijden 1997; Vincent 2003). One study did not give any details on the composition of the reference set (Deville 2002). It was possible to calculate sensitivity, specificity, precision and NNR from the studies that had a reference set compiled of both included DTA studies (gold standard references) and studies that did not meet the criteria of a DTA study (non‐gold standard references) if 2 x 2 data were available. However, it was not possible to calculate specificity or precision from a reference set composed of only included DTA references. This was because the percentage of correctly non‐identified studies cannot be calculated since data for only half of a 2 x 2 table were available.

Of the six studies that used handsearching to develop the reference set, two studies concentrated on specific topic areas. Astin 2008 included records on imaging as a diagnostic test and Berg 2005 included articles from the nursing literature on cancer‐related fatigue diagnosis. The remaining studies that had a handsearched reference set were not topic specific. The two studies that used published systematic reviews to compile the reference set, and the study which used a personal literature database, were all topic specific.

Where reported, the mean number of gold standard studies in the reference set was 128 (range 33 to 333) from a mean of 35 journals (range 9 to 161). Of the studies that used reference sets which included non‐gold standard as well as gold standard records, the mean number of overall references included was 8582 (range 238 to 48,881).

Method of identification of search terms

Three studies used the reference set to derive search terms by performing statistical analysis on terms found in titles, abstracts and subject headings (Astin 2008; Bachmann 2002; Deville 2000). Three studies adapted existing search strategies (Berg 2005; Deville 2002; Vincent 2003), one of which expanded the existing filters by adding frequently occurring MeSH terms and text words found in titles and abstracts of the reference set (Berg 2005). Vincent 2003 also combined the use of existing filters with the results of reference set analysis. Of the remaining four studies, one used expert knowledge of the field to generate a list of terms (Haynes 1994), one used expert knowledge and analysis of the reference set (Haynes 2004), one checked key publications for the definitions and terms used (van der Weijden 1997), and one analysed terms in 10 studies missed by the three most sensitive published filters (Noel‐Storr 2011).

Description of studies that evaluated published MEDLINE filters

Ten evaluation studies that assessed 30 MEDLINE filters were included (Table 4; Table 5). Of these, three were development studies that also evaluated published filters and were therefore classed as both development and evaluation studies (Deville 2000; Noel‐Storr 2011; Vincent 2003). Most filters (n = 23) were evaluated by at least two studies. The median number of filters evaluated in a each study was 6, but ranged from 1 (Deville 2000; Kastner 2009) to 22 (Noel‐Storr 2011; Ritchie 2007; Whiting 2010).

4. Summary of study design characteristics of MEDLINE filter evaluation studies.

| Evaluation study: Author (year) | ||||||||||

| Kastner 2009 | Ritchie 2007 | Leeflang 2006 | Kassai 2006 | Doust 2005 | Whiting 2010 | Vincent 2003 | Deville 2000 | Mitchell 2005 | Noel‐Storr 2011 | |

| Method of identification of reference set records(one from list below selected for each study) | ||||||||||

|

‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ✓ | ✓ | ‐ |

|

✓ | ‐ | ✓ | ‐ | ‐ | ‐ | ✓ | ‐ | ‐ | ‐ |

|

‐ | ✓ | ‐ | ‐ | ✓ | ✓ | ‐ | ‐ | ‐ | ✓ |

|

✓ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | |||

| If systematic reviews used in reference set development, did they include DTA search terms in search strategy? | ✓ | X | Unclear | ‐ | ✓ | X | ✓ | ‐ | ‐ | X |

| Reference set also contained non‐gold standard records | X | ✓ | X | ✓ | X | ✓ | X | ✓ | ✓ | ✓ |

| Description of non‐gold standard records if contained in reference set | ‐ | ‐ | ‐ | ‐ | ‐ | NR | ‐ | ‐ | NR | ‐ |

|

‐ | ✓ | ‐ | ✓ | ‐ | ‐ | ‐ | ‐ | ‐ | ✓ |

|

‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ‐ | ✓ | ‐ | ‐ |

| Generic gold standard records I.e. not topic specific | ✓ | X | ✓ | X | X | ✓ | ✓ | X | X | X |

5. Study characteristics and methods of included MEDLINE filter evaluation studies.

| Study | Identification of reference set | Reference set selection criteria | Ref set years | # gold standard records | # non‐gold standard records | # journals ref set if handsearched | Definition of DTA study if handsearched gold standard identified | Description of filter allows reproducibility | Definitions of Se & Sp | Number of filters evaluated |

| Kastner 2009 | Included studies from 12 published SRs on the ACP Journal Club website and indexed on MEDLINE or EMBASE. | Eligibility criteria for including SR were: published in 2006; incorporated a MEDLINE and EMBASE search as a data source; and available and downloadable in electronic format. In addition the review cannot have used the Clinical Queries filter, but other search filters were permissible. | 2006 (date publication SRs | 441 | 0 | Not given. The 12 SRs were from 9 journals. | The study compared at least two diagnostic test procedures with one another. | yes | no | 1 |

| Ritchie 2007 | SR of DTA studies for UTI in young children carried out by the authors | Included studies that could be identified in Ovid MEDLINE | 1966‐2003 | 160 | 27804 | NA | NA | no | no | 22 |

| Leeflang 2006 | Included studies from 27 published SR. Reviews selected after an electronic search for SRs of DTA studies published between January 1999 and April 2002 in MEDLINE, EMBASE, DARE and Medion | Criteria for inclusion SRs: assessment of DTA, the inclusion of >10 original studies with inclusion not based on design characteristics, and sufficient data to reproduce the contingency table. Exclusion of reviews that reported the application of a diagnostic search filter. | 1999‐2002 | 820 | 0 | NA | NA | yes | no | 12 |

| Kassai 2006 | Used PubMed interface to search MEDLINE, Science Citation Index, EMBASE and Pascal Biomed for relevant articles using search strategies with terms (MeSH and free text for MEDLINE) related to venous thrombosis, venography and ultrasonography in all databases. | Any relevant article retrieved through topic search on MEDLINE, Science Citation Index, EMBASE and Pascal Biomed | 1966‐2002 | 237 | 1236 | NR | NR | yes | 3 | |

| Doust 2005 | Included studies from two SRs: tympanometry (TR) for the diagnosis of otitis media with effusion in children, and natriuretic peptides (NPR). Initial list of citations was generated from MEDLINE using the search strategy used by the sensitivity option of the Clinical Queries filter for DTA in PubMed. Reference lists of potentially relevant papers and review articles were checked for further possible papers. | Included in two SRs conducted by the authors | TR 1966‐2001; NPR 1994‐2002 | TR n=33; NPR n=20 | TR n=0; NPR n=0 | TR n=22; NPR n=16 |

NR | yes | yes | 5 |

| Whiting 2010 | Test accuracy studies indexed on MEDLINE from 7 SRs carried out by authors. Relative recall reference set. | All included studies indexed on MEDLINE from 7 SRs of DTA. SRs that conducted extensive searches that were not limited using methodological filters or search terms relating to measures of test accuracy | NR | 506 | 25880** | NR | Studies in which cross‐tabulation data comparing the results of the index test with the reference standard were available. | yes | yes | 22 |

| Vincent 2003 | SRs retrieved from MEDLINE and EMBASE on OVID using validated SR filter on diagnostic tests for DVT. 16 SR selected and all articles included that were indexed on MEDLINE became the reference set. Only English language articles included | Studies included in 16 SRs that compared one of the specified diagnostic tests for DVT against a venogram. | 1969‐2000 | 126 | 0 | NR | Compared specified diagnostic test to reference standard | yes | yes | 5 |

| Deville 2000 | Adapted Haynes 1991 most sensitive and specific filter by adding terms. Ran search in MEDLINE to retrieve all primary DTA studies. Second set of references selected on diagnosis of meniscal lesions of the knee for external validity testing. No further details on how this set was selected are provided. | Primary DTA studies indexed on MEDLINE; studies included on physical tests for the diagnosis of meniscal lesions of the knee. | 1992‐1995 | 75; 33 in meniscal lesions set | 2392; NR in meniscal lesions set | Diagnostic test was compared with a reference standard | yes | yes | 1 | |

| Mitchell 2005 | Handsearch of the 3 top ranking renal journals for the years 1990‐1991 and 2002‐2003. | Primary DTA studies that could be identified in MEDLINE on the diagnosis of kidney disease | 1991‐1992 2002‐2003 |

99 | 4409 | 3 | A test or tests being compared to a reference standard in a human population | yes* | NR | 6 |

| Noel‐Storr 2011 | SR on the volume of evidence in biomarker studies in those with mild cognitive impairment, conducted by the authors. | Primary DTA longitudinal studies indexed on MEDLINE with at least one follow‐up period; at least one of biomarkers of interest used as test of interest; included subjects with objective cognitive impairment at baseline, no dementia. | 2000‐Sept 2010; 2000‐Aug 2011 | 128 Sept 2010; 144 Aug 2011 | 17266 Sept 2010; 18920 Aug 2011 | NR | NA | yes* | no | 22 |

Abbreviations used: TR= Tympanometry review; NPR= Natriuretic peptides review; SR= systematic review; NA= not applicable; NR= not reported; ref set= reference set; Se= sensitivity; Sp= specificity.

* Full strategy obtained from authors

** Number of gold‐standard records obtained from authors

Method of identification of reference set records

Seven studies used a relative recall reference set consisting of studies included in DTA systematic reviews (Doust 2005; Kastner 2009; Leeflang 2006; Noel‐Storr 2011; Ritchie 2007; Vincent 2003; Whiting 2010). Of these, three studies located systematic reviews through electronic searches (Kastner 2009; Leeflang 2006; Vincent 2003) and four studies used a convenience sample of systematic reviews that either the authors or colleagues had undertaken themselves (Doust 2005; Noel‐Storr 2011; Ritchie 2007; Whiting 2010). One study used references located through handsearching of the nine highest ranking journals available on MEDLINE (Deville 2000); one study handsearched three high ranking renal journals (as identified by the authors) for primary studies on the diagnosis of renal disease (Mitchell 2005); and one study used an electronic search for primary DTA studies related to venous thrombosis, venography and ultrasonography (Kassai 2006).

Three of the studies that used a relative recall reference set included reviews which used a methodological filter to find diagnostic studies in addition to terms for test and condition (Doust 2005; Kastner 2009; Vincent 2003). One of these studies supplemented the search, which had first used the Clinical Queries diagnostic filter in PubMed, by searching the reference lists of included studies (Doust 2005).

Two studies included all articles that were retrieved by the search for gold standard records but which were subsequently rejected from the gold standard as the non‐gold standard records in the reference set (Kassai 2006; Ritchie 2007). A third study used the false positive articles selected by a search using a previously published diagnostic search strategy as the non‐gold standard records in the reference set (Deville 2000). This study further restricted the non‐gold standard studies by excluding reviews, meta‐analyses, comments, editorials and animal studies. The remaining studies that included non‐gold standard records in their reference set did not provide details on how these were identified.

Composition of reference set

Three of the seven studies derived their reference set from a systematic review that used gold standard and non‐gold standard studies (Noel‐Storr 2011; Ritchie 2007; Whiting 2010); the remaining four studies used a reference set comprised of only gold standard studies (Doust 2005; Kastner 2009; Leeflang 2006; Vincent 2003). The three studies which used an electronic search or a handsearch to find primary studies also included non‐gold standard studies in their reference sets (Deville 2000; Kassai 2006; Mitchell 2005).

The number of gold standard studies included in the reference standard ranged from 53 from two systematic reviews (Doust 2005) to 820 from 27 reviews (Leeflang 2006). In all studies that also included non‐gold standard studies, the number of irrelevant studies ranged from 1236 to 27,804.

Description of evaluated filters

All but one of the search strategies combined MeSH terms and text words; one used the single term strategy “specificity.tw” (Whiting 2010). Two of the evaluated filters that were displayed were based on the same original strategy by Haynes 1994. Falck‐Ytter 2004 presented an alternative interpretation of the original filter in a PubMed format.

EMBASE search filters

Description of development studies

Two studies reported the development of 12 search filters for finding DTA studies indexed in EMBASE (Table 6; Table 7) (Bachmann 2003; Wilczynski 2005). Eleven of the filters were composed of multiple terms. Table 6 gives a summary of the study design characteristics of the included studies.

6. Summary of study design characteristics of EMBASE filter development studies.

| Author | ||

| Bachmann 2003 | Wilczynski 2005 | |

| Method of identification of reference set records (one from list below selected for each study) | ||

|

✓ | ✓ |

|

‐ | ‐ |

|

‐ | ‐ |

| Reference set also contained non‐gold standard records | ✓ | ✓ |

| Description of non‐gold standard records if contained in reference set | ‐ | NR |

|

✓ | |

| Generic gold standard records i.e. not topic specific | ✓ | ✓ |

| Method of deriving filter terms (a combination of methods could be used) | ||

|

✓ | ✓ |

|

‐ | ✓ |

|

‐ | ‐ |

|

‐ | ‐ |

| Internal validation in reference set independent from records used to derive filter terms | x | X |

| External validation in reference set independent from records used to derive filter terms and internal validation set | x | x |

Abbreviations used: NR= not reported

7. Study characteristics and methods of EMBASE filter development studies.

| Study | Identification of reference set | How was reference set used | How were search terms identified for filter | Ref set years | # gold standard records | # non‐gold standard records | # journals ref set |

| Bachmann 2003 | Handsearching of all issues of NEJM, Lancet, JAMA and BMJ published in 1999. | To derive terms; to test strategies | Word frequency analysis on title, abstract and subject indexing of handsearched records | 1999 | 61 | 6082 | 4 |

| Wilczynski 2005 | Handsearching each issue of 55 journals in 2000. | To test strategies | Initial list of MeSH terms and text words compiled using knowledge of the field and input from librarians and clinicians. Stepwise logistic regression used to improve performance of filters. | 2000 | 97 | 27,672 | 55 |

Abbreviations used: ref set= reference set

Method of identification of reference set records

In both studies the reference set was generated by handsearching journals, and included both gold standard and non‐gold standard records. One study reported that the non‐gold standard records were identified as all articles retrieved by the search that were not classified as gold‐standard records (Bachmann 2003). The other study was not clear about how non‐gold standard records were selected (Wilczynski 2005).

Composition of reference set

Both studies included both gold standard and non‐gold standard records in the reference set.

Method of identification of search terms

One study used the reference set to derive filter terms using word frequency analysis (Bachmann 2003). The other study initially identified terms for the filter by consulting experts and then entered the terms into a logistic regression model to find the most frequently occurring terms (Wilczynski 2005).

Description of studies that evaluated published EMBASE filters

Three studies evaluated four filters designed to find DTA studies in EMBASE (Table 8; Table 9) (Kastner 2009; Mitchell 2005; Wilczynski 2005). One filter was evaluated by two studies, and three filters were evaluated by only one study. A summary of the study design characteristics of included studies is in Table 8.

8. Summary of study design characteristics of EMBASE filter evaluation studies.

| Author | |||

| Kastner 2009 | Wilczynski 2005 | Mitchell 2005 | |

| Method of identification of reference set records(one from list below selected for each study) | |||

|

‐ | ✓ | ✓ |

|

✓ | ‐ | ‐ |

|

‐ | ‐ | ‐ |

|

‐ | ‐ | |

| If systematic reviews used in reference set development, did they include DTA search terms in search strategy? | ✓ | ‐ | ‐ |

| Reference set also contained non‐gold standard records | x | ✓ | ✓ |

| Description of non‐gold standard records if contained in reference set | NR | NR | NR |

| Generic gold standard records i.e. not topic specific | ✓ | ✓ | x |

Abbreviations used: NR= not reported

9. Study characteristics and methods of studies evaluating EMBASE filters.

| Study | Identification of gold standard | Reference set selection criteria | Ref set years | # gold standard studies ref set | # non‐gold standard studies in ref set | # journals ref set for handsearched gold standard | Definition of DTA study | Description of filter allows reproducibility | Definitions of Se & Sp | Number of filters evaluated |

| Kastner 2009 | Included studies from 12 published SRs on the ACP Journal Club website and indexed in MEDLINE or EMBASE. | Eligibility criteria for including SR were: published in 2006; incorporated a MEDLINE and EMBASE search as a data source; and available and downloadable in electronic format. In addition the review cannot have used the Clinical Queries filter. | 2006 (date SRs published) | 441 | 441 | NA | The study compared at least two diagnostic test procedures with one another. | yes | no | 1 |

| Wilczynski 2005 | Handsearch of each issue of 55 journals in 2000. | Studies indexed in EMBASE found through handsearching which met the methodological criteria for a diagnostic study: | 2000 | 97 | 27575 | 55 | Inclusion of spectrum of participants; reference standard; participants received both the new test of reference standard; interpretation of index test without knowledge of reference standard and vice versa; analysis consistent with study design. | yes | yes | 2 |

| Mitchell 2005 | Handsearch of the 3 top ranking renal journals for the years 1990‐1991 and 2002‐2003 | Primary DTA studies that could be identified in EMBASE reporting on the accuracy of tests for kidney disease diagnosis | 1991‐1992 2002‐2003 |

96 | 3984 | 3 | A test or tests being compared to a reference standard in a human population | yes* | no | 4 |

Abbreviations used: ref set= reference set; Se= sensitivity; Sp= specificity

Method of identification of reference set records

One study used studies from 12 published systematic reviews to construct the reference standard (Kastner 2009). The other two EMBASE filter studies identified primary DTA studies through handsearching (Mitchell 2005; Wilczynski 2005). Neither study which had included non‐gold standard records described how those articles were identified.

Composition of reference set

Two studies included both gold standard and non‐gold standard records in the reference set (Mitchell 2005; Wilczynski 2005). The number of gold standard records ranged from 96 to 441. The number of non‐gold standard records ranged from 3984 to 27,575.

Description of evaluated filters

One evaluated filter consisted of MeSH terms and text words, the other three filters consisted of text words only. Every filter combined multiple terms.

Risk of bias in included studies

The methodological quality of the identified studies was not formally assessed using a validated tool, but we identified three areas that could affect the methodological quality of the studies in terms of the risk of bias and applicability as described above (see Assessment of risk of bias in included studies).

1. Use of systematic reviews to compile reference set search strategy

MEDLINE development and evaluation studies

Of the eight studies which used systematic reviews to compile their reference sets, three used reviews which did not include diagnostic terms in their search strategies and were at low risk of bias; one development and evaluation study and two evaluation studies specified that they only included systematic reviews which had not used a diagnostic search filter (Noel‐Storr 2011; Ritchie 2007; Whiting 2010). The systematic reviews used by Whiting and Noel‐Storr were conducted by the authors, therefore the reviewers could be sure that no such filter was applied. Ritchie also used a systematic review carried out by Whiting, which did not use a diagnostic filter.

Three studies used reviews with diagnostic terms in their search strategies and were therefore at high risk of bias. One was a development and evaluation study which contained the references from 16 systematic reviews and, of these, at least one used a diagnostic filter (Vincent 2003). Some of the other systematic reviews did not report whether they used a diagnostic filter or not, while the remaining studies were not available. Two evaluation studies also used reviews with diagnostic filter terms. Kastner's reference set contained the studies from 12 systematic reviews and, of these, just over half used diagnostic terms in their search strategies (Kastner 2009). Doust 2005 conducted two systematic reviews which were used in reference set development, and the search strategy for these applied the PubMed Clinical Queries filter for diagnostic studies.

For one development and one evaluation study, it was not clear whether the systematic reviews used a diagnostic filter in their searches (Deville 2002; Leeflang 2006). The risk of bias for these studies was unclear. The original source of the review used by Deville was not available (from the author's thesis), but a meta‐analysis published by the same author on the same topic did describe the use of diagnostic terms in the search strategy. Leeflang stated in their discussion that while they attempted to exclude any review which used a diagnostic filter in their literature search, they found that of the 27 reviews where the studies were included, seven did not describe their search in detail.

EMBASE development and evaluation studies

Only one evaluation study, reporting an EMBASE filter, used the studies from systematic reviews to compile the reference set, and just over half of the 12 systematic reviews used diagnostic terms in their search strategies (Kastner 2009). This study was, therefore, judged to be at high risk of bias.

2. Choice of gold standard records

MEDLINE development and evaluation studies

Of 17 studies, three development and three evaluation studies used generic gold standard records and caused a low level of concern regarding applicability (Bachmann 2002; Haynes 1994; Haynes 2004; Kastner 2009; Leeflang 2006; Whiting 2010). Of these, the development studies handsearched a broad range of general medical journals while the evaluation studies used the included studies from systematic reviews covering a range of diagnostic tests and conditions.

Four development studies used topic specific gold standard records to develop their filters (Astin 2008; Berg 2005; Deville 2002; van der Weijden 1997). In addition, the three studies which both developed and evaluated filters also used topic specific records (Deville 2000; Noel‐Storr 2011; Vincent 2003). Four evaluation studies used topic specific gold standard records to test the performance of published filters (Doust 2005; Kassai 2006; Mitchell 2005; Ritchie 2007). These studies caused high levels of concern regarding applicability as they were only likely to be applicable to the particular topic area in which they were developed or evaluated. The topics included in these studies varied in their breadth, for example a very narrow topic was used by Kassai 2006 (limited to studies comparing ultrasound to venography for the diagnosis of deep vein thrombosis), whereas Deville 2000 included studies on diagnostic tests from nine family medicine journals. Other topics included diagnostic tests in radiology and biomarkers for mild cognitive impairment. Noel‐Storr 2011 designed their filter to specifically retrieve longitudinal DTA studies and evaluated published filters for their ability to retrieve delayed cross‐sectional DTA studies.

EMBASE development and evaluation studies

All but one of the four studies that developed or evaluated a diagnostic EMBASE filter used a set of gold standard records derived from on a broad range of topics and tests. One evaluation study handsearched the three top ranking renal journals for studies on the diagnosis of kidney disease (Mitchell 2005).

3. Validation of filters

MEDLINE development studies

Of the 10 studies reporting the development of a MEDLINE filter, two studies used discrete derivation and validation sets of references to test internal validity and were considered to be at low risk of bias (Astin 2008; Bachmann 2002). Astin handsearched six high ranking radiology journals to find studies for the derivation set and used a different set of six journals to compile studies for the validation set. Bachmann handsearched journals in different years; the studies found in 1989 comprised the set of references used to derive terms, while the studies from 1994 comprised the validation set.

Six of the remaining studies used an internal validation set which contained the references used to derive the terms for the filter and the studies were therefore judged to be at high risk of bias (Berg 2005; Deville 2000; Haynes 1994; Haynes 2004; Noel‐Storr 2011; Vincent 2003). Of these studies, three independently selected terms to use as part of their filters, but the final strategies (made up of those terms) were derived from testing in the same set of references (Haynes 1994; Haynes 2004; Vincent 2003). Also of note, Noel‐Storr 2011 derived filter terms by running published search filters in MEDLINE combined with a subject search, locating 10 papers that all filters missed and choosing a term from the title, abstract or keywords of each. These 10 papers were included in the reference set of 144 studies.

Two studies did not perform internal validity testing of the two filters that had been developed, rather specific diagnostic topics (reviews) were used only to externally validate (Deville 2002; van der Weijden 1997). These studies reported sensitivities > 90% for their most sensitive filters.

Four studies carried out external validation of their filters in a validation set that represented real‐world settings, and the filters were judged to cause low levels of concern about applicability (Bachmann 2002; Deville 2000; Deville 2002; van der Weijden 1997). The remaining studies did not validate their filters in real‐world settings and were considered to cause high levels of concern regarding applicability (Astin 2008; Berg 2005; Haynes 1994; Haynes 2004; Noel‐Storr 2011; Vincent 2003).

EMBASE development studies

Both EMBASE development studies were at high risk of bias in this domain because neither study used a set of records independent from those used to derive the terms to internally validate their strategies (Bachmann 2003; Wilczynski 2005). Bachmann used word frequency analysis of all the titles and abstracts of studies included in the reference set to find and combine the 10 terms with the highest sensitivity and precision. Wilczynski first derived a list of potential diagnostic terms from clinical studies and then from clinicians and librarians. The individual search terms with sensitivity > 25% and specificity > 75%, when tested in the reference set, were then combined into the search strategies.

Neither study externally validated their newly developed filters and were therefore judged to have high concerns regarding applicability in this domain.

Effect of methods

1. Performance of MEDLINE filters as reported in development studies

Sensitivity ranged from 16% to 100% (median 86%; 39 filters, 10 studies), specificity ranged from 38% to 99% (median 88.5%; 30 filters, 6 studies) and precision ranged from 0.8% to 90% (median 9.3%; 32 filters, 8 studies) (Table 3).

2. Performance of evaluated MEDLINE filters

Performance data on each evaluated filter can be found in Table 10 and full search strategies can be found in Appendix 9. Thirteen of the 30 MEDLINE filters assessed by the evaluation studies had original performance data available from development studies. The other 17 filters were reported without any details on how they were developed or their performance.

10. MEDLINE filters evaluated by two or more studies (values given in percentages).

| SENSITIVITY | SPECIFICITY | PRECISION | ||||||||||||||||||||||||||||||||||||||||||||

| ORIGINAL DEVELOPMENT STUDY | RITCHIE | WHITING | LEEFLANG | KASTNER | *DOUST TR | *DOUST NPR | VINCENT | DEVILLE | DEVILLE ML | KASSAI | MITCHELL | NOEL‐STORR | ORIGINAL DEVELOPMENT STUDY | WHITING | MITCHELL | NOEL‐STORR | ORIGINAL DEVELOPMENT STUDY | RITCHIE | WHITING | *DOUST TR | *DOUST NPR | DEVILLE ALL | DEVILLE ML | MITCHELL | NOEL‐STORR | |||||||||||||||||||||

| Original development study did report performance data | ||||||||||||||||||||||||||||||||||||||||||||||

| Bachmann 2002 Sensitive | 95 | 74 | 87 | 88 | 70 | 90 | 84 | 84 | NR | 37 | 80 | 36 | 5.0 | 1.4 | 3.0 | 5.0 | 4.0 | 8.8 | 0.2 | |||||||||||||||||||||||||||

| Haynes 2004 Sensitive | 99 | 69 | 80 | 87 | 88 | 70 | 100 | 67 | 69 | 74 | 41 | 85 | 45 | 1.1 | 1.3 | 3.0 | 4.0 | 5.0 | 9.1 | 0.9 | ||||||||||||||||||||||||||

| Haynes 2004 Specific | 65 | 21 | 43 | 28 | 14 | 98 | 94 | 95 | 10.6 | 6.7 | 15.0 | 2.0 | ||||||||||||||||||||||||||||||||||

| Deville 2000 Strategy 4 | ALL=89 | 46 | 68 | 46 | 58 | 100 | 75 | 49 | 55 | 92 | 81 | 95 | 82 | NR | 4.4 | 7.0 | 9.0 | 9.0 | 16.7 | 2.2 | ||||||||||||||||||||||||||

| ML=61 | 4.7 | |||||||||||||||||||||||||||||||||||||||||||||

| Haynes 1994 Specific | 55 | 33 | 55 | 29 | 51 | 98 | 90 | 88 | 40.0 | 7.4 | 3.0 | |||||||||||||||||||||||||||||||||||

| Haynes 1994 Sensitive** | 92 | 70 | 85 | 81 | 96 | 73 | 45 | 95 | 80 | 91 | 73 | 23 | 80 | 32 | 9.0 | 1.5 | 29 | 3.4 | 5.3 | 1.0 | ||||||||||||||||||||||||||

| Vincent 2003 Strategy C | 79 | 87 | 67 | 44 | 54 | NR | 85 | 83 | 10.0 | 3.3 | 9.0 | 2.3 | ||||||||||||||||||||||||||||||||||

| van der Weijden 1997 Sensitive | 91 | 87 | 92 | 73 | 100 | 96 | 93 | NR | 15 | 96 | 30 | NR | 2.0 | 4.0 | 4.0 | 5.6 | 1.0 | |||||||||||||||||||||||||||||

| Deville 2002 Accurate |

KSR=70 USR=92 |

51 | ||||||||||||||||||||||||||||||||||||||||||||

| Haynes 1994 Accurate | 86 | 81 | 84 | 13.0 | ||||||||||||||||||||||||||||||||||||||||||

| Deville 2000 Strategy 3 | 80 | 41 | 97 | 48.0 | ||||||||||||||||||||||||||||||||||||||||||

| Deville 2000 Strategy 1 | 71 | 76 | 99 | |||||||||||||||||||||||||||||||||||||||||||

|

Vincent 2003 Strategy A |

100 | 87 | 81 | NR | 81 | 2.5 | 3.3 | 5.5 | ||||||||||||||||||||||||||||||||||||||

| Original development study did NOT report performance data | ||||||||||||||||||||||||||||||||||||||||||||||

| Falck‐Ytter 2004** | NR | 74 | 85 | 71 | NR | 39 | 51 | NR | 1.3 | 3.0 | 1.1 | |||||||||||||||||||||||||||||||||||

| CASP 2002$ | NR | 73 | 83 | 100 | 95 | 67 | NR | 53 | 49 | NR | 1.2 | 3.0 | 1.0 | |||||||||||||||||||||||||||||||||

| Deville 2002a Extended | NR | 52 | 71 | 58 | 100 | 60 | NR | 78 | 78 | NR | 3.9 | 7.0 | 8.0 | 6.0 | 2.0 | |||||||||||||||||||||||||||||||

| Aberdeen InterTASC 2011$ |

NR | 69 | 86 | 87 | NR | 39 | 33 | NR | 1.2 | 3.0 | 1.0 | |||||||||||||||||||||||||||||||||||

| Southampton A InterTASC 2011$ |

NR | 71 | 86 | 93 | NR | 13 | 29 | NR | 1.0 | 2.0 | 1.0 | |||||||||||||||||||||||||||||||||||

| Southampton B InterTASC 2011$ |

NR | 45 | 69 | 55 | NR | 80 | 81 | NR | 4.6 | 7.0 | 2.1 | |||||||||||||||||||||||||||||||||||

| Southampton C InterTASC 2011 |

NR | 31 | 56 | 51 | NR | 90 | 88 | NR | 8.5 | 11.0 | 3.0 | |||||||||||||||||||||||||||||||||||

| Southampton D InterTASC 2011 |

NR | 66 | 84 | 89 | NR | 21 | 42 | NR | 1.1 | 2.0 | 1.1 | |||||||||||||||||||||||||||||||||||

| Southampton E InterTASC 2011$ |

NR | 71 | 87 | 92 | NR | 14 | 31 | NR | 1.0 | 2.0 | 1.0 | |||||||||||||||||||||||||||||||||||

| CRD A InterTASC 2011 |

NR | 53 | 73 | 70 | NR | 62 | 58 | NR | 2.2 | 4.0 | 1.2 | |||||||||||||||||||||||||||||||||||

| CRD B InterTASC 2011 |

NR | 40 | 64 | 67 | NR | 81 | 71 | NR | 4.1 | 7.0 | 1.7 | |||||||||||||||||||||||||||||||||||

| CRD C InterTASC 2011 |

NR | 69 | 85 | 90 | NR | 24 | 43 | NR | 1.2 | 2.0 | 1.2 | |||||||||||||||||||||||||||||||||||

| HTBS InterTASC 2011 |

NR | 46 | 69 | 56 | NR | 83 | 80 | NR | 3.7 | 8.0 | 2.0 | |||||||||||||||||||||||||||||||||||

| Shipley Miner 2002 | NR | 48 | 72 | 63 | NR | 73 | 73 | NR | 1.8 | 5.0 | 1.7 | |||||||||||||||||||||||||||||||||||

| Deville 2002a Accurate | NR | 88 | NR | NR | ||||||||||||||||||||||||||||||||||||||||||

| University of Rochester 2002$ | NR | 79 | NR | NR | ||||||||||||||||||||||||||||||||||||||||||

| North Thames 2002$ | NR | 53 | NR | NR | ||||||||||||||||||||||||||||||||||||||||||