Abstract

Background

Publication of complete trial results is essential if people are to be able to make well‐informed decisions about health care. Selective reporting of randomised controlled trials (RCTs) is a common problem.

Objectives

To systematically review studies of cohorts of RCTs to compare the content of trial reports with the information contained in their protocols, or entries in a trial registry.

Search methods

We conducted electronic searches in Ovid MEDLINE (1950 to August 2010); Ovid EMBASE (1980 to August 2010); ISI Web of Science (1900 to August 2010) and the Cochrane Methodology Register (Issue 3, 2010), checked reference lists, and asked authors of eligible studies to identify further studies. Studies were not excluded based on language of publication or our assessment of their quality.

Selection criteria

Published or unpublished cohort studies comparing the content of protocols or trial registry entries with published trial reports.

Data collection and analysis

Data were extracted by two authors independently. Risk of bias in the cohort studies was assessed in relation to follow up and selective reporting of outcomes. Results are presented separately for the comparison of published reports to protocols and trial registry entries.

Main results

We included 16 studies assessing a median of 54 RCTs (range: 2 to 362). Twelve studies compared protocols to published reports and four compared trial registry entries to published reports. In two studies, eligibility criteria differed between the protocol and publication in 19% and 100% of RCTs. In one study, 16% (9/58) of the reports included the same sample size calculation as the protocol. In one study, 6% (4/63) of protocol‐report pairs gave conflicting information regarding the method of allocation concealment, and 67% (49/73) of blinded studies reported discrepant information on who was blinded. In one study, unacknowledged discrepancies were found for methods of handling protocol deviations (44%; 19/43), missing data (80%; 39/49), primary outcome analyses (60%; 25/42) and adjusted analyses (82%; 23/28). One study found that, of 13 trials whose protocols specified subgroup analyses, 12 reported only some, or none, of these. Two studies found that statistically significant outcomes had higher odds of being fully reported than nonsignificant outcomes (range of odds ratios: 2.4 to 4.7). Across the studies, at least one primary outcome was changed, introduced, or omitted in 4% to 50% of trial reports.

Authors' conclusions

Discrepancies between protocols or trial registry entries and trial reports were common, although reasons for these were not discussed in the reports. Full transparency will be possible only when protocols are made publicly available or the quality and extent of information included in trial registries is improved, and trialists explain substantial changes in their reports.

Keywords: Publication Bias, Clinical Protocols, Clinical Protocols/standards, Cohort Studies, Double-Blind Method, Random Allocation, Randomized Controlled Trials as Topic, Randomized Controlled Trials as Topic/methods, Randomized Controlled Trials as Topic/standards, Registries, Registries/standards

Plain language summary

Comparison of protocols and registry entries to published reports for randomised controlled trials

The non‐reporting of a piece of research and the selective reporting of only some of its findings have been identified as problems for research studies such as randomised trials and systematic reviews of these. If the decision about what to report and what to keep unpublished is based on the results obtained in the trial, this will lead to bias and potentially misleading conclusions by users of the research. One way to see if there might be discrepancies between what was planned or done in a trial and what is eventually reported is to compare the protocol or entry in a trial registry for the trial with the content of its published report. This might reveal that changes were made between the registration and planning of the trial and its eventual analysis. Any such changes should be described in the published report, to reassure readers and others who will use the trial's results that the risk of bias has been kept low.

This Cochrane methodology review examines the reporting of randomised trials by reviewing research done by others in which the information in protocols or trial registry entries was compared to that in the published reports for groups of trials, to see if this detected any inconsistencies for any aspects of the trials. We included 16 studies in this review and the results indicate that there are often discrepancies between the information provided in protocols and trial registry entries and that contained in the published reports for randomised trials. These discrepancies cover many aspects of the trials and are not explained or stated in the published reports.

Background

Full publication of complete trial results is essential if clinicians, patients, policy makers and others are to make well‐informed decisions about health care. The phenomenon whereby reports of studies are not submitted or published because of the strength and direction of the trial results has been termed ‘publication bias’ (Dickersin 1987; Hopewell 2009). An additional and potentially more serious threat to the validity of evidence‐based healthcare is selective reporting of results. If the decisions about which results to publish are based on the strength or direction of those results, it will result in bias. The selective reporting of outcomes, termed ‘outcome reporting bias (ORB)’, has been defined as the selection for publication of a subset of the original recorded variables from a trial based on the results (Hutton 2000; Williamson 2005a). Therefore, data available in published reports may be subject to bias (Tannock 1996; Hahn 2000; Chan 2008a). This type of bias will not only impact upon the interpretation of the individual randomised controlled trial (RCT) but also the results of any systematic review for which the trial is eligible.

Details of how an RCT will be conducted, including the outcomes to be measured and reported, should be included in its protocol. Because of the varying quality of protocols and the need for transparency, the SPIRIT initiative (Standard Protocol Items for Randomized Trials) has been established to produce a set of guidelines for the preparation of protocols (Chan 2008b). This should lead to improvements in the quality of protocols, which will make it easier to carry out a critical evaluation of a trial's results and to compare what was done with what was originally planned.

The case for clinical trial registration has been advocated for several decades (Simes 1986) and, in 2004, the International Committee of Medical Journal Editors (ICMJE) announced that its member journals would not consider a trial for publication unless it had been registered in a trial registry (De Angelis 2004). The ICMJE will accept registration of clinical trials in any of the primary registers that participate in the World Health Organisation's (WHO) International Clinical Trials Registry Platform (ICTRP) (Ghersi 2009). To enhance transparency of research, the International Clinical Trials Registry Platform, based at WHO, produced a minimum trial registration dataset of 20 items (see http://www.who.int/ictrp/network/trds/en/).

An earlier review (Dwan 2008) focused on the selective reporting of outcomes from among the complete set that were originally measured within a study. This helped to highlight the recent attention in the scientific literature on the problems associated with incomplete outcome reporting, and there is little doubt that non‐reporting of pre‐specified outcomes has the potential for bias (Chan 2004a; Williamson 2005b; Dwan 2008; Kirkham 2010).

Description of the problem or issue

However, selective reporting is not restricted to selective reporting of outcomes. Different measures of the same outcome may be selectively reported based on the results or an endpoint score might be reported instead of the change from baseline or vice versa. There may also be selective reporting of multiple analyses of the same data; for example, per protocol analyses may be reported rather than intention to treat analyses; or only first period results might be reported in cross over trials. Furthermore, a continuous outcome may be converted to binary data, with the choice of cut‐off selectively chosen from several different cut‐offs examined. Analyses may also be selectively reported from multiple time points (Williamson 2005b). Subgroup analyses are often undertaken in trials, although often not pre‐specified (Wang 2007) and the complete data are not always reported, with subgroup analyses with statistically significant results being more likely to be reported (Hahn 2000; Chan 2008c).

More broadly, discrepancies in any aspect of a trial (such as changes to the trial methodology) can occur between the preparation of the protocol or trial registry entry and publication of the trial's findings. Adherence to the trial protocol is important and any substantial changes to the protocol should be submitted to an ethics committee and described in the trial report.

The validity of a trial can more easily be judged with full disclosure of protocols (Chan 2008a) and by consulting the information in trial registries. Several journals now require submission of reports of trials to be accompanied by the trial protocol, and some publish this along with the manuscript. When conducting a systematic review, it is important to assess any discrepancy between the protocol and trial report, and to examine its potential to introduce bias.

Description of the methods being investigated

Adherence to what was described in trial protocols and entries in trial registries is investigated in this review for RCTs in humans (individuals or groups of people). Comparing what was planned in the original trial protocol or on a trial registry with what was actually reported in the subsequent publications provides information on adherence to the protocol or trial registry. However, if the trialists did intend to do something that was stated in the trial registry or protocol but this proved not to be possible, this would not be seen as non‐adherence to their original plan if a legitimate reason was declared in the trial report or when that report was submitted for publication.

Why it is important to do this review

To date, no systematic review has summarised the evidence from cohort studies that have compared protocols or trial registries to published articles for RCTs. A previous review (Dwan 2008) considered only cohort studies that looked at differences in outcome measures between the protocol and published report. This Cochrane methodology review considers all differences identified between protocols or trial registries and published reports, to provide evidence of non‐adherence to the intentions in the protocol or registry entry. It includes descriptive data relating in particular to outcome reporting bias, within study selective reporting bias, and other discrepancies. We highlight priority areas for establishing guidelines for improving reporting standards.

Objectives

To assess the reporting of RCTs, by reviewing research that used cohorts of RCTs to compare the content of the published reports of these trials with the information stated in

their protocols, or

their entries in a trial registry.

To assess whether these differences are related to trial characteristics, such as sample size, source of funding or the statistical significance of results.

Methods

Criteria for considering studies for this review

Types of studies

We sought any published or unpublished cohort study comparing protocols or trial registry entries to published reports of RCTs for any aspect of trial design or analysis. Published reports include any report in a peer reviewed journal resulting from the RCT, although the definition of a 'published report' may vary across cohorts. All published reports that a cohort study considered in its comparison were considered in this review, i.e. any publication of the included trials, not just the report including the primary outcome. Cohort studies that only compared conference abstracts to a protocol or trial registry entry were not included, because an abstract does not have enough space for the level of reporting needed to assess adherence.

Cohorts containing exclusively RCTs are eligible. If studies included a mixture of RCTs and non‐RCTs but reported data separately for the two types of study, we used the findings for the RCTs. If studies included a mixture of RCTs and non‐RCTs but did not report these study designs separately, we contacted the authors for data on the RCTs alone.

Types of data

We included data regarding differences between the protocol (as defined in the cohort study) or the trial registry entry and the published report. Trial characteristics (including sample size and source of funding), and any assessment of the quality of the included RCTs (however measured in the cohort study) were extracted and reported. We recorded the definition of the "protocol" used for each cohort study, in particular whether they examined the original protocol or an amended version.

Types of methods

We recognise that eligible studies might not compare all aspects of the protocol or trial registry to the trial report. Therefore, we include any study that examines any difference between protocol or trial registry and the published report.

Types of outcome measures

Differences between the protocol or trial registry entry and the published report for any aspect of the included trials. These include:

a. All specified outcomes, and whether designated as primary or secondary, and whether reflecting efficacy or harm

b. Methodological features, including but not limited to randomisation, blinding, allocation concealment

c. Statistical analysis

d. Sample size and sample size calculations

e. Funding

f. Any other aspect.

Search methods for identification of studies

We conducted electronic searches and checked reference lists to identify studies. Studies were not excluded based on language of publication or our assessment of their quality.

Electronic searches

Literature searches were conducted in Ovid MEDLINE (1950 to August 2010); Ovid EMBASE (1980 to August 2010); ISI Web of Science (1900 to August 2010) and the Cochrane Methodology Register (Issue 3, 2010). See Appendix 1 for more details.

Searching other resources

Articles were sought through known item searching (i.e. studies that were already known to the authors of this review through previous work and familiarity with the research area), with articles citing those references being retrieved for screening. Authors of studies that were deemed eligible for inclusion were contacted to ask if they knew of any other relevant published or unpublished studies.

Data collection and analysis

Selection of studies

The titles and abstracts of all reports identified using the search strategy detailed above were independently screened by two authors (KD and MB). The full‐text for all records identified as potentially eligible was retrieved and reviewed for eligibility by the same two authors, using the inclusion criteria listed in the protocol for this review. There were no disagreements between the authors. If there had been, these would have been resolved through discussion or by a third author (PRW).

Data extraction and management

One author (KD) extracted all relevant data from the eligible studies and recorded this on a specifically designed form, and a second author (LC) assessed the accuracy of data extraction. There were no discrepancies in data extraction. If there had been, these would have been resolved through discussion or by a third author (PRW). Data extraction included:

Study characteristics: author names, institutional affiliation, country, contact address, language of publication, type of document, and whether the study is a comparison of protocols or trial registry entries to published reports.

Population: journals in which the assessed RCTs were published, trial registry, definition of protocol, medical specialty area, number of RCT reports included in the comparison.

Reporting quality: comparisons made between protocol or trial registry and published report.

Discrepancies, similarities, completeness of reporting, non‐reporting, and factors of particular interest (i.e. sample size and source of funding).

Information on the statistical significance (i.e. p‐value above or below 0.05), and perceived importance (as decided by the authors of the cohort study) or direction of results.

RCT quality: score on any quality assessment scale, and name of quality assessment scale used. This will depend on how each cohort study assessed the quality of trials reviewed.

Assessment of risk of bias in included studies

An assessment of the risk of bias for each included cohort study was made independently by two authors (KD and LC) using the following criteria:

1. Was there complete or near complete follow up (after data analysis) of all of the RCTs in the cohort?

Yes, percentage of follow up to be recorded, including number of unpublished studies.

No

Unclear

2. Were cohort studies free of selective reporting?

Yes (i.e. all comparisons stated in the methods section were fully reported)

No (i.e. not all of the comparisons that were stated in the methods section were fully reported)

Unclear

Each criterion was assigned an answer of yes, no or unclear, corresponding to a low, high or unclear risk of bias within the cohort study, respectively. There were no disagreements between the authors on this assessment. If there had been, these would have been resolved through discussion or by a third author (PRW).

Measures of the effect of the methods

Discrepancies between protocols or trial registries and trial reports were sought and reported using the following framework.

Discrepancies regarding outcomes were considered, when possible, as follows:

Primary outcome stated in the protocol or trial registry is the same as in the published report;

Primary outcome stated in the protocol or trial registry is downgraded to secondary in the published report;

Primary outcome stated in the protocol or trial registry is omitted from the published report;

A non primary outcome in the protocol or trial registry is changed to primary in the published report;

A new primary outcome that was not stated in the protocol or trial registry (as primary or secondary) is included in the published report;

The definition of the primary outcome was different (although the same variable) in the protocol or trial registry compared to the published report.
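The outcome categories above can be read as a small decision rule. The following Python sketch is illustrative only: the function name, the way outcomes are represented (sets of outcome names and dictionaries of definitions) and the category labels are assumptions made for this example and are not taken from this review or from any included cohort study.

```python
from typing import Dict, Set


def classify_primary_outcome(
    outcome: str,
    protocol_primary: Set[str],
    protocol_secondary: Set[str],
    report_primary: Set[str],
    report_secondary: Set[str],
    protocol_definitions: Dict[str, str],
    report_definitions: Dict[str, str],
) -> str:
    """Assign one outcome to a category of the framework (hypothetical labels)."""
    if outcome in protocol_primary:
        if outcome in report_primary:
            # Same variable in both documents: check whether its definition changed.
            if protocol_definitions.get(outcome) != report_definitions.get(outcome):
                return "definition changed"
            return "same"
        if outcome in report_secondary:
            return "downgraded to secondary"
        return "omitted from report"

    if outcome in report_primary:
        if outcome in protocol_secondary:
            return "changed from non-primary to primary"
        return "new primary outcome"

    return "no primary outcome discrepancy"
```

For example, an outcome listed as primary in the protocol but only as secondary in the report would be labelled "downgraded to secondary" under these assumptions.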

Discrepancies regarding trial methodology were considered, when possible, as follows:

The method of randomisation, blinding and allocation concealment stated in the published report was different in the protocol or trial registry;

The method of randomisation, blinding and allocation concealment was stated in the protocol or trial registry but not stated in the published report.

Discrepancies regarding statistical analysis were considered, when possible, as follows:

Per protocol analyses reported rather than intention to treat analyses, with the analysis used in the published report being different to what was stated in the protocol or trial registry;

First period results in cross over trials only reported instead of the appropriate results, with the analysis used in the published report being different to what was stated in the protocol or trial registry;

Endpoint score reported instead of change from baseline or vice versa, with the analysis used in the published report being different to what was stated in the protocol or trial registry;

Continuous outcome converted to binary, with the cut off used in the published report being different to what was stated in the protocol or trial registry;

Analyses at multiple time points stated in the protocol or trial registry differ to those included in the published report;

Subgroup analyses in the published report are different from those stated in the protocol or trial registry.

Discrepancies regarding sample size and sample size calculations were considered, when possible, as follows:

Sample size and sample size calculation stated in the published report was different to that in the protocol or trial registry;

Sample size and sample size calculation was stated in the protocol or trial registry but not stated in the published report.

Discrepancies regarding funding were considered, when possible, as follows:

Source of funding in the published report was different in the protocol or trial registry;

Source of funding was stated in the protocol or trial registry but not stated in the published report.

Unit of analysis issues

The unit of analysis in all the cohort studies was the RCT for which a paired protocol or trial registry entry and a published report were compared.

Dealing with missing data

If any data were perceived to be missing, whether this was information on characteristics of the cohort study or results regarding the included RCTs, the corresponding author of the cohort study was contacted for further information. If we did not receive a reply, we contacted their co‐authors.

Assessment of heterogeneity

Heterogeneity of included cohort studies is discussed narratively.

Assessment of reporting biases

We assessed the selective reporting of results in the cohort studies by comparing the methods section of the included cohort study to its results section, and completing a table of all comparisons reported by each cohort study. Authors of included studies were contacted to ask if they reported all comparisons that they looked at and if they knew of any other cohort studies that may be eligible to be included in this review, to limit publication bias.

Data synthesis

This review provides a descriptive summary of the findings of the included cohort studies because they were too diverse to combine in a meta‐analysis, due to the population of included RCTs in each cohort study and the different aspects of RCTs that they investigated. The cohort studies that have compared protocols to published reports are considered separately from the cohort studies that compared trial registry entries to published reports.

Subgroup analysis and investigation of heterogeneity

We planned to explore the following factors in subgroup analyses, assuming enough studies were identified, as we believed that these were plausible explanations for heterogeneity: small sample size versus large sample size (as defined in the included cohort study); industry funding versus public funding of the RCTs; and significant results versus non‐significant results.

Sensitivity analysis

We did not plan or undertake any sensitivity analyses.

Results

Description of studies

Results of the search

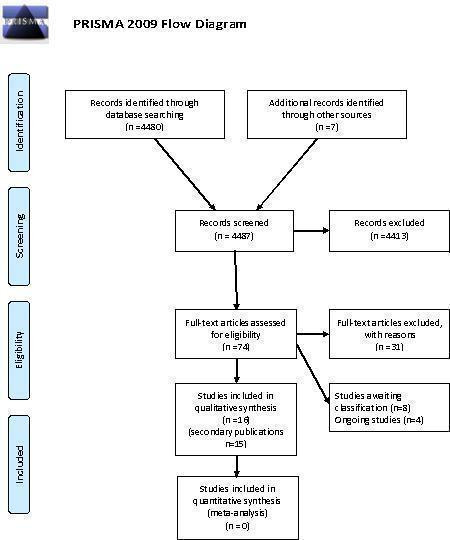

The electronic search strategies identified 4480 citations (Cochrane Methodology Register 479; EMBASE 1579; MEDLINE 1140 and Web of Science 1282). One of the studies that we already knew about was not found in this search (Hahn 2002). Our searching of conference proceedings identified one further study (You 2010) and contact with authors located a further study (Djulbegovic 2010). We did not find any additional studies by screening the reference lists of eligible studies. When we screened the titles and abstracts, 4413 citations were excluded as they were not relevant. This is shown in the PRISMA flow diagram (Figure 1).

Figure 1. PRISMA 2009 flow diagram.

We know of four ongoing studies (McKenzie 2010; Rasmussen 2010; Chan 2010; Urrutia 2010).

Included studies

After screening titles and abstracts, we obtained and assessed the full text for 70 citations. Sixteen studies were deemed eligible for inclusion, with 31 associated publications. The median sample size was 54 RCTs (range: 2 to 362).
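The counts reported for the search can be cross-checked with simple arithmetic. The short Python sketch below uses only figures stated in this section; the variable names are illustrative, and the way the three studies found outside the electronic search are added to the full-text total is an assumption made here to reconcile the numbers, not a statement from the flow diagram.

```python
# Citations retrieved per database, as reported in the results of the search.
database_hits = {
    "Cochrane Methodology Register": 479,
    "EMBASE": 1579,
    "MEDLINE": 1140,
    "Web of Science": 1282,
}
total_citations = sum(database_hits.values())

# Citations excluded as not relevant when titles and abstracts were screened.
excluded_at_screening = 4413

# Assumption for this sketch: the three studies identified by other routes
# (Hahn 2002, You 2010, Djulbegovic 2010) count towards the full texts assessed.
found_by_other_routes = 3

full_text_assessed = total_citations - excluded_at_screening + found_by_other_routes

print(total_citations)     # 4480
print(full_text_assessed)  # 70
```

Under this assumption the two totals agree with the 4480 citations and 70 full-text assessments reported in the text.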

There are eight studies awaiting classification (Chappell 2005; Djulbegovic 2009; Djulbegovic 2010; Ghersi 2006; Jureidini 2008; Mhaskar 2009; Smyth 2010; You 2010) as we were only able to find abstracts for these. They are likely to be eligible and we have contacted the authors for more information, so that they can be considered for inclusion in future updates of this review. Information on these studies is included in the table of Characteristics of studies awaiting classification.

Of the 16 included studies, 12 compared protocols to published reports (Al‐Marzouki 2008; Blumle 2008; Chan 2004a; Chan 2004b; Gandhi 2005; Hahn 2002; Pich 2003; Scharf 2006; Shapiro 2000; Soares 2004; Vedula 2009; von Elm 2008) and four compared registry entries to published reports (Bourgeois 2010; Charles 2009; Ewart 2009; Mathieu 2009).

Comparison of protocols to published reports

Ten of the 12 included cohort studies only considered RCTs and two considered a mixture of RCTs and other studies (Hahn 2002; Vedula 2009). Data were available for the RCTs included in the Hahn 2002 cohort study for outcomes. In the Vedula 2009 cohort, 19 of the 21 included studies were RCTs and, for the data on comparisons, 11 (92%) of the 12 included studies were RCTs and the other was an uncontrolled open label trial.

One cohort study followed up protocols that had been peer reviewed for publication by the Lancet (Al‐Marzouki 2008). Five cohort studies followed up a cohort of protocols approved by an ethics committee (Blumle 2008; Chan 2004a; Hahn 2002; Pich 2003; von Elm 2008). Chan 2004b considered protocols funded by the Canadian Institutes of Health Research (CIHR), Gandhi 2005 looked at RCTs for people with HIV funded by the National Institutes of Health (NIH), and Vedula 2009 examined trials of gabapentin funded by Pfizer and Warner‐Lambert’s subsidiary, Parke‐Davis. One cohort study (Scharf 2006) looked at studies that used the Common Toxicity Criteria version 2.0 on the National Cancer Institute (NCI) Clinical Data Update System (CDUS). One cohort study investigated trials that served as the subject of a single study Clinical Alert (an advisory issued by the National Institutes of Health in the USA) for which the journal article was published (Shapiro 2000) and another followed up trials conducted by the Radiation Therapy Oncology Group (RTOG) since its establishment (Soares 2004). The definitions of the protocol version used for the comparison with the published report are given in the Characteristics of included studies table and comprised: a summary on the Lancet's website (Al‐Marzouki 2008); the original protocol obtained from the ethics committee (Hahn 2002; Pich 2003) or the authors (Shapiro 2000); and protocols, amendments and correspondence (Blumle 2008; Chan 2004a; Chan 2004b; Gandhi 2005; Soares 2004; Vedula 2009; von Elm 2008). The version of the protocol used was not stated in one cohort study (Scharf 2006).

Comparison of trial registry entries to published reports

All four included studies that compared the content of a trial registry entry with the subsequent report of the research considered RCTs only.

One cohort study followed up drug trials listed on ClinicalTrials.gov (Bourgeois 2010). One cohort study searched MEDLINE for superiority RCTs published in six high impact factor general medical journals and then looked for registration details of these trials (Charles 2009). One cohort study looked for registration information on RCTs published in consecutive issues of five major medical journals (Ewart 2009). One cohort study searched MEDLINE via PubMed for reports of RCTs in three medical areas (cardiology, rheumatology, and gastroenterology) indexed in 2008 in the ten general medical journals and specialty journals with the highest impact factors (Mathieu 2009).

Excluded studies

Thirty one studies were excluded, the majority of which did not compare protocols or trial registry entries to publications (Characteristics of excluded studies).

Risk of bias in included studies

Risk of bias was assessed by considering the follow up of RCTs in the included cohort studies and selective reporting by the authors of the cohort studies.

Incomplete outcome data

Comparison of protocols to published reports

Nine cohort studies followed up all protocols, or had less than 10% loss to follow up in their cohort, and were deemed at low risk of bias (Blumle 2008; Chan 2004a; Chan 2004b; Gandhi 2005; Pich 2003; Scharf 2006; Shapiro 2000; Soares 2004; von Elm 2008). Three studies, with loss to follow up either greater than 10% or not reported, were deemed at high risk of bias (Al‐Marzouki 2008; Hahn 2002; Vedula 2009). Details are included in the risk of bias table for each study.

Comparison of trial registry entries to published reports

Three cohort studies did not follow up all trials in their cohort (with loss to follow up either greater than 10% or not reported) and were therefore deemed at high risk of bias (Charles 2009; Ewart 2009; Mathieu 2009). Details are included in the risk of bias table for each study. For one cohort study, follow up was unclear and authors have been contacted for more information (Bourgeois 2010).
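The follow-up judgements described above amount to a threshold rule combined with the mapping from answers (yes, no, unclear) to risk of bias (low, high, unclear). The Python sketch below is a minimal illustration under stated assumptions: the function names and the representation of loss to follow up as a proportion are hypothetical, and the handling of a loss of exactly 10% is an assumption because the text only describes losses of less than or greater than 10%.

```python
from typing import Optional

# Answers of yes, no or unclear correspond to a low, high or unclear risk of bias.
ANSWER_TO_RISK = {"yes": "low", "no": "high", "unclear": "unclear"}


def follow_up_answer(loss_fraction: Optional[float], unclear: bool = False) -> str:
    """Answer the 'complete or near complete follow up' question for one cohort.

    loss_fraction is the proportion of RCTs lost to follow up (0.0 to 1.0),
    or None when the cohort study did not report it.
    """
    if unclear:
        # Follow up could not be judged from the available information.
        return "unclear"
    if loss_fraction is None:
        # Loss to follow up not reported: grouped with losses above 10%.
        return "no"
    # Assumption: a loss of exactly 10% is treated as not near complete.
    return "yes" if loss_fraction < 0.10 else "no"


def follow_up_risk(loss_fraction: Optional[float], unclear: bool = False) -> str:
    """Translate the answer into a risk of bias judgement."""
    return ANSWER_TO_RISK[follow_up_answer(loss_fraction, unclear)]
```

For instance, `follow_up_risk(0.05)` gives "low", while `follow_up_risk(None)` gives "high" because unreported follow up is grouped with losses above 10%.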

Selective reporting

Comparison of protocols to published reports

Ten cohort studies reported all outcomes stated in their methods section and were therefore deemed at low risk of bias (Al‐Marzouki 2008; Chan 2004a; Chan 2004b; Gandhi 2005; Hahn 2002; Scharf 2006; Shapiro 2000; Soares 2004; Vedula 2009; von Elm 2008). One study did not report all outcomes stated in its methods section and was therefore deemed at high risk of bias (Blumle 2008). In one cohort study, it was unclear whether any other comparisons had been made between protocols and published reports (Pich 2003). Details are included in the risk of bias table for each study and more information on outcomes is included in the results tables.

Comparison of trial registry entries to published reports

Three cohort studies reported all outcomes stated in their methods section and were therefore deemed at low risk of bias (Bourgeois 2010; Charles 2009; Mathieu 2009). One study did not report all outcomes stated in its methods section and was therefore deemed at high risk of bias (Ewart 2009). Details are included in the risk of bias table for each study and more information on outcomes is included in the results tables.

Other potential sources of bias

No other potential sources of bias were identified.

Effect of methods

Comparison of protocols to published reports

Eligibility criteria

Three studies compared eligibility criteria (Blumle 2008; Gandhi 2005; Shapiro 2000) and found that between 0% and 63% of RCTs reported all eligibility criteria in the published reports that were stated in the protocol. Two of these studies (Blumle 2008; Gandhi 2005) found that there were differences between the protocol and published report (100% (52/52) and 19% (6/32), respectively) and one study (Blumle 2008) found that in 86% of RCTs, new eligibility criteria were included in the published report which were not stated in the protocol (Table 1).

Table 1. Differences between protocol and published reports: eligibility criteria.

| Studies that compared protocols to published reports | Findings |
| --- | --- |
| Blumle 2008 | Eligibility criteria (EC) identical: 0/52. Differences in EC reporting: 100% (52/52); missing (96%) or modified (88%) in the publication; 86% were added in the publications. |
| Gandhi 2005 | Subjective clinical criteria identical: 31% (10/32). Enrolment criteria: 34% (11/32) reported all; 31% (10/32) listed fewer than 50% of the eligibility criteria; 19% (6/32) disclosed less than a quarter of the actual enrolment criteria; no information was available for 5 trials. |
| Shapiro 2000 | 82% of protocol eligibility criteria were reported in methods papers, 63% in journal articles and 19% in clinical alerts. Definition of disease (criteria that define clinical parameters of the disease being studied): 100%; Precision (criteria that render the study population more homogeneous for the purposes of the trial): 66%; Safety (criteria that exclude persons thought to be unduly vulnerable to harm from the study therapy): 57%; Legal and ethical (criteria needed to ensure that research satisfies legal and ethical norms of human experimentation): 52%; Administrative (criteria that ensure the smooth functioning of the trial): 17%. |

Methodological information

Two studies compared methodological information (Chan 2004a; Soares 2004). Chan 2004a considered blinding, allocation concealment and sequence generation; six of 102 trials had adequate allocation concealment according to the trial publication and 96 of 102 trials had unclear allocation concealment. According to the protocols, 15 (16%) of these 96 trials had adequate allocation concealment, 80 (83%) had unclear concealment, and one of the 96 trials had inadequate concealment. In 6% (4/63) of trials that specified the method of allocation concealment, the protocol and the publication gave conflicting information on which method was used. In 79% (81/102) of trials, the publication gave no information on how the allocation sequence was generated; 20% of these (16/81) described adequate sequence generation in the protocol (Pildal 2005). Blinding was mentioned in the protocol for 72% (73/102) of trials and no publication reported a protocol change relevant to blinding. There was an exact match between the global terms used to describe blinding in 75% of the trials with blinding (55/73) and 32% (23/73) had an exact match of the key trial personnel who were described as blinded. Discrepant (but not necessarily contradictory) global terms were used to describe blinding in 22% (16/73) of trials with blinding, and, in 67% (49/73), there was discrepant information on who was blinded (Hrobjartsson 2009). Soares 2004 found that although all trials had adequate allocation concealment according to the protocol, this was reported in only 41% (24/59) of the papers (Table 2).

Table 2. Differences between protocol and published reports: methods of randomisation, allocation, concealment or blinding.

| Studies that compared protocols to published reports | |

| Chan 2004a | 94% (96/102) trials had unclear allocation concealment according to the trial publication. According to the protocols, 15 of these 96 trials had adequate allocation concealment (16%, 95% CI 9% to 24%), 80 had unclear concealment (83%, 74% to 90%), and one had inadequate concealment. One was inadequate in both protocol and publication. Both were adequate in four. Unclear in protocol and adequate in publication in four. Eighty one of the 102 trial publications (79%) gave no information on how the allocation sequence was generated; 16 of these 81 trials (20%, 12% to 30%) described adequate sequence generation in the protocol. No protocols or trial publications reported inadequate methods of sequence generation. Numbered coded vehicles was the most frequently applied method according to the protocols (26 of 102) but had the lowest rate of appearance in the trial publications (three of 26). In 39 of the 102 trials (38%) neither the protocols nor the publications provided any information on attempts to conceal the allocation. In four trials, the protocol and the publication gave conflicting information on which method was used. In 42 of the 55 double blind studies (76%), a security system for emergency code breaking was described in the protocol but mentioned in only one publication. Table in the paper includes differences in methods of allocation concealment. Blinding was mentioned for 73 of the 102 trials (72%; 95% CI: 62% to 80%) in the protocols alone (5), in the publications alone (9), or in both (59). No publication reported a protocol change relevant to blinding. 55/73 (75%) exact match between the global terms used to describe blinding. 23/73 (32%) exact match of the key trial persons described as blinded. 2/73 (3%) used overtly contradictory global terms to describe blinding. 1/73 (1%) provided overtly contradictory information on who was blinded. 16/73 (22%) used discrepant (but not necessarily contradictory) global terms to describe blinding. 49/73 (67%) had discrepant information on who was blinded |

| Soares 2004 | All trials had adequate allocation concealment (through central randomisation), this was reported in only 24 (41%) of the papers. |
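The proportions in Table 2 are reported with 95% confidence intervals, for example 15 of 96 trials (16%, 95% CI 9% to 24%). The source does not state which interval method was used, so the following is only a minimal sketch of how such an interval can be obtained, assuming the Wilson score method; the function name `wilson_interval` is illustrative and the resulting limits may differ slightly from those quoted in the table.

```python
import math


def wilson_interval(events, total, z=1.959964):
    """Wilson score interval for a binomial proportion.

    The default z corresponds to a two-sided 95% interval. Using the
    Wilson method here is an assumption, not the method of the source.
    """
    if total <= 0 or not 0 <= events <= total:
        raise ValueError("need total > 0 and 0 <= events <= total")
    p = events / total
    denom = 1 + z ** 2 / total
    centre = (p + z ** 2 / (2 * total)) / denom
    half_width = z * math.sqrt(p * (1 - p) / total + z ** 2 / (4 * total ** 2)) / denom
    return centre - half_width, centre + half_width


# Counts taken from Table 2: 15 of 96 trials with adequate concealment per protocol.
low, high = wilson_interval(15, 96)
print(f"15/96 = {15 / 96:.0%}, Wilson 95% CI {low:.1%} to {high:.1%}")
```

With small denominators, as in several of the included cohorts, the choice of interval method can shift the limits by a percentage point or so, which is one reason to report the method alongside the interval.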

Authors

One study compared authors included in the protocol to the published report (Chan 2004a) and concluded that ghost authorship in industry‐initiated trials is very common, with the company statistician listed only in the protocol in 23% (10/44) of trials (Table 3). Only five protocols explicitly identified the author of the protocol, but none of these individuals, all of whom were company employees, were listed as authors of the publications or were thanked in the acknowledgments, although one protocol had noted that the 'author of this protocol will be included in the list of authors' (Gøtzsche 2007).

Table 3. Differences between protocol and published reports: Authors (post hoc).

| Studies that compared protocols to published reports | |

| Chan 2004a | Company statistician listed only in the protocol: 10/44 (23%). Only five protocols explicitly identified the author of the protocol, but none of these individuals, all of whom were company employees, were listed as authors of the publications or were thanked in the acknowledgments, although one protocol had noted that the 'author of this protocol will be included in the list of authors'. |

Funding

One study compared protocols and reports for information about funding. Chan 2004a found that 50% (22/44) of protocols stated that the sponsor either owned the data or needed to approve the manuscript, but such conditions for publication were not stated in any of the trial reports (Gøtzsche 2006) (Table 4).

Table 4. Differences between protocol and published reports: source of funding.

| Studies that compared protocols to published reports | |

| Chan 2004a | 50% (22/44) protocols stated that the sponsor either owned the data or needed to approve the manuscript, but such conditions for publication were not stated in any of the trial reports. According to the protocols, the sponsor had access to accumulating data during 16 trials, eg, through interim analyses and participation in data and safety monitoring committees. Such access was disclosed in only 1 corresponding trial article. An additional 16 protocols noted that the sponsor had the right to stop the trial at any time, for any reason; this was not noted in any of the trial publications. Constraints on the publication rights were described in 40 (91%) of the protocols, and 22 (50%) noted that the sponsor either owned the data, needed to approve the manuscript, or both. None of the constraints were stated in any of the trial publications. |

Sample size

Four studies compared sample size (Chan 2004a; Chan 2004b; Pich 2003; Soares 2004). In summarising these results, the denominators differ because they depend on whether the particular component was mentioned in the publication. In the Chan 2004a study, 18% (11/62) of trials described sample size calculations fully and consistently in both the protocol and the publication, whilst six presented a power calculation in the publication but not in the protocol. In 13% (4/31) the power calculation was based on an outcome other than the one used in the protocol; the value of delta was different in 18% (6/33); the estimated standard deviation was different in 21% (3/14); and there were discrepancies in the power in 21% (7/34) and sample size in 27% (8/30). Publications for 24% (8/34) of trials reported components (delta, outcome measure, estimated event rates, estimated standard deviation, alpha, power) that had not been pre‐specified in the protocol. None of the publications mentioned any amendments to the original sample size calculation. Chan 2004b noted that 36 studies reported a power calculation; two trials used a different outcome from the protocol and one trial introduced a power calculation that had not been in the protocol. A priori sample size calculations were performed in 76% (44/58) of the trials in the Soares 2004 study, but this information was given in only 16% (9/58) of the published reports. End points were clearly defined, and α and β errors were prespecified, in 76% (44/58) and 74% (43/58) of trials, respectively, but were reported in only 10% (6/58) of the papers.

In the Pich 2003 study, the recruitment rate was lower than expected in 45% (64/143) of RCTs, as expected in 27% (39/143), and higher than expected in 24% (34/143). In one of 143 trials, the recruitment period was not closed, and no information was available for five (Table 5).

Table 5. Differences between protocol/registry entry and published reports: sample size and sample size calculation.

| Studies that compared protocols to published reports | |

| Chan 2004a | 11/62 trials (18%) described sample size calculations fully and consistently in both the protocol and the publication 4/38 (11%) power calculation based on an outcome other than the one used in the protocol; 6/33 (18%) delta different; 3/14 (21%) estimated SD different; 7/34 (21%) power; 8/30 (27%) sample size; 16/34 (47%) discrepancies in any component of sample size 6 presented power calculation in the publication but not in the protocol 18/34 (53%) unacknowledged discrepancies between protocols and publications were found for sample size calculations Publications for eight trials reported components that had not been pre‐specified in the protocol 30 subsequently recruited a sample size within 10% of the calculated figure from the protocol; 22 trials randomised at least 10% fewer participants than planned as a result of early stopping (n=3), poor recruitment (2), and unspecified reasons (17); and 10 trials randomised at least 10% more participants than planned as a result of lower than anticipated average age (1), a higher than expected recruitment rate (1), and unspecified reasons (8). A calculated sample size was as likely to be reported accurately in the publication if there was a discrepancy with the actual sample size compared with no discrepancy (11/32 v 14/30). None of the publications mentioned any amendments to the original sample size calculation. |

| Chan 2004b | 36 studies reported a power calculation 2/36 (6%) used a different outcome from the protocol 1/36 (3%) introduced a power calculation that had not been in protocol. |

| Pich 2003 | 45% (64/143) recruitment rate was lower than expected; 27% (39/143) was as expected, and in 24% (34/143) was higher than expected. In 1 out of 143 clinical trials (1%) the recruitment period was not closed, and no information was available for 5 out of 143 trials (3%) |

| Soares 2004 | A priori sample size calculations were performed in 44 (76%) trials, but this information was given in only nine of the 58 published papers (16%). End points were clearly defined, and α and β errors were prespecified, in 44 (76%) and 43 (74%) trials, respectively, but were reported in only six (10%) of the papers. |

| Studies that compared registry entries to published reports | |

| Charles 2009 | 5% (10/215) did not report any sample size calculation 89% (31/35), the data for sample size calculation were given. For 52% (16/35) articles the reporting of the assumptions differed from the design article. (not clear if this is a comparison from trial registry or just other articles) For 96/113 registered articles (85%), an expected sample size was given in the online database and was equal to the target sample size reported in the article in 46/96 of these articles (48%). The relative difference between the registered and reported sample size was greater than 10% in 18 articles (19%) and greater than 20% in five articles (5%). The parameters for the sample size calculation were not stated in the online registration databases for any of the trials. |

Statistical analyses

Four studies compared the statistical analysis plan stated in the protocol with the published report (Chan 2004a; Scharf 2006; Soares 2004; Vedula 2009). In the Chan 2004a study, 99% (69/70) of parallel trials were designed and reported as superiority trials and one trial was stated to be an equivalence trial in the protocol but reported as a superiority trial in the publication, with no explanation given for the change. Unacknowledged discrepancies between protocols and publications were found for methods of handling protocol deviations (44%; 19/43) and missing data (80%; 39/49), primary outcome analyses (60%; 25/42) and adjusted analyses (82%; 23/28). Interim analyses were described in 13 protocols but mentioned in only five (38%) corresponding publications. A further two trials reported interim analyses in the publications, despite the protocol explicitly stating that there would be none. Scharf 2006 found that 27% (6/22) of studies did not identify any criteria adverse effect system and 33.3% (4/12) did not specify an adverse effect evaluation schedule. An intention to treat analysis was used in 83% (48/58) of studies in the Soares 2004 cohort but we need to clarify with the authors if a comparison was made between protocols and published reports. A statistical analysis plan was included in the internal company research report for 60% (12/20) of trials in the Vedula 2009 cohort, but they could not determine the relationship between the date of the statistical analysis plan, the protocol and the research report for 60% (3/5) published trials that had such a plan. Therefore, they could not assess the timing of the observed changes from the protocol‐defined outcomes (Table 6).

Table 6. Differences between protocol and published reports: analysis plan.

| Studies that compared protocols to published reports | |

| Chan 2004a | One trial was stated to be an equivalence trial in the protocol but was reported as a superiority trial in the publication; no explanation was given for the change. 39/49 protocols and 42/43 publications reported the statistical test used to analyse primary outcome measures. The method of handling protocol deviations was described in 37 protocols and 43 publications. The method of handling missing data was described in 16 protocols and 49 publications. Unacknowledged discrepancies between protocols and publications were found for methods of handling protocol deviations (19/43) and missing data (39/49), primary outcome analyses (25/42), subgroup analyses (25/25), and adjusted analyses (23/28). Interim analyses were described in 13 protocols but mentioned in only five corresponding publications. An additional two trials reported interim analyses in the publications, despite the protocol explicitly stating that there would be none. A data monitoring board was described in 12 protocols but in only five of the corresponding publications. |

| Scharf 2006 | 6/22 (27%) did not identify any criteria adverse effects (AE) system 4/12 (33%) not specify AE evaluation schedule |

| Soares 2004 | 40/58 (69%) of these trials used an intention to treat analysis. This number was increased to 48/58 (83%) after verification by the Radiation Therapy Oncology Group. |

| Vedula 2009 | A statistical analysis plan was included in the internal company research report for 5/12 (42%) published trials and for 7/8 (88%) unpublished trials. They were unable to determine the date of the statistical‐analysis plan relative to the protocol and research report for 3/5 (60%) published trials that had such a plan, so they cannot assess the timing of the changes from the protocol‐defined outcomes that we observed. |

Subgroup analyses

Two studies compared subgroup analyses specified in protocols and those included in published reports (Al‐Marzouki 2008; Chan 2004a). Al‐Marzouki 2008 found that only 49% (18/37) of trials mentioned subgroup analysis in the protocols, but 76% (28/37) reported such an analysis in the report of the trial. Among the 51% (19/37) of trials with no prespecified subgroup analyses in the protocol, subgroup analyses were undertaken in 58% (11/19). None gave the reason for these analyses. In the 18 trials in which subgroup analyses were prespecified in the protocol, 61% (11/18) had at least one unreported subgroup analysis or at least one new subgroup analysis. Chan 2004a found that of 13 protocols specifying subgroup analyses, 12 of these 13 trials reported only some, or none, of these in the publication. Nineteen of the trials with published subgroup analyses reported at least one that was not pre‐specified in the protocol and four trials claimed that the subgroup analyses were pre‐specified, even though they did not appear in the protocol (Table 7).

Table 7. Differences between protocol and published reports: subgroup analyses.

| Studies that compared protocols to published reports | |

| Al‐Marzouki 2008 | Only 18/37 trials (49%) mentioned subgroup analysis in the protocols, but 28/37 (76%) reported it. Only one protocol gave the reason for subgroup selection. None specified the total number of subgroups. Among the 19 trials with no prespecified subgroup analyses in the protocol, subgroup analyses were done in 11 (58%). None gave the reason for these analyses. In the 18 trials in which subgroup analyses were prespecified in the protocol, 11 (61%) had at least one unreported subgroup analysis or at least one new subgroup analysis. |

| Chan 2004a | Overall, 25 trials described subgroup analyses in the protocol (n=13) or publication (20). All had discrepancies between the two documents. Twelve of the trials with protocol specified analyses reported only some (n=7) or none (5) in the publication. Nineteen of the trials with published subgroup analyses reported at least one that was not pre‐specified in the protocol. Protocols for 12 of these trials specified no subgroup analyses, whereas seven specified some but not all of the published analyses. Only seven publications explicitly stated whether the analyses were defined a priori; four of these trials claimed that the subgroup analyses were pre‐specified even though they did not appear in the protocol. |

Outcomes

Table 8 includes results for differences in outcomes for six studies (Al‐Marzouki 2008; Chan 2004a; Chan 2004b; Hahn 2002; Vedula 2009; von Elm 2008). Three studies (Chan 2004a; Chan 2004b; Vedula 2009) found that the primary outcome was the same in the protocol as in the publication for 33% (4/12) to 67% (32/48) of RCTs and one study found that it was the same for secondary outcomes in one of 12 trials (Vedula 2009). Four studies (Al‐Marzouki 2008; Chan 2004a; Chan 2004b; Vedula 2009) considered the downgrading of a primary outcome from the protocol to a secondary outcome in the published report, and found that this happened in 5% (2/37) to 34% (26/76) of RCTs. All six studies considered primary outcomes that were included in protocols and omitted from published reports and found that this occurred in between 13% (6/48) and 42% (5/12) of RCTs. One study (Al‐Marzouki 2008) found that secondary outcomes were omitted in 86% (32/37) of the published reports for the RCTs. The studies found that outcomes that had not been included in the protocol were included in the published reports for between 11% (11/101) and 50% (6/12) of RCTs and two studies (Al‐Marzouki 2008; Vedula 2009) found that this occurred in 86% (32/37) and 33% (4/12) of RCTs, respectively, for secondary outcomes. Three studies considered outcomes that were upgraded from secondary in the protocol to primary in the published report and found that this occurred in between 9% (4/45) and 19% (12/63) of RCTs in two studies (Chan 2004a; Chan 2004b). The third study reported that this occurred for 18% (5/28) of outcomes but did not report this as a proportion of the RCTs (Vedula 2009).

Table 8. Differences between protocol/registry entry and published report: outcomes.

| Study | Outcome stated in the protocol or trial registry is the same as in the published report | Primary outcome stated in the protocol or trial registry is downgraded to secondary in the published report | Outcome stated in the protocol or trial registry is omitted from the published report | A non primary outcome in the protocol or trial registry is changed to primary in the published report | A new outcome that was not stated in the protocol or trial registry (as primary or secondary) is included in the published report | Other information on outcomes |

| Studies that compared protocols to published reports | ||||||

| Al‐Marzouki 2008 | 5% (2/37) | primary: 14% (5/37) secondary: 86% (32/37) | primary: 22% (8/37) secondary: 86% (32/37) |||

| Blumle 2008 | 128/299 No primary outcomes stated in publications | |||||

| Chan 2004a | primary: 47% (36/76) | 34% (26/76) | primary: 26% (20/76) | 19% (12/63) | primary: 17% (11/63) | 71% (70/99) and 60% (43/72) had at least 1 unreported efficacy or harm outcome, respectively 62% (51/82) of trials had major discrepancies in primary outcomes |

| Chan 2004b | primary: 67% (32/48) | 23% (11/48) | primary:13% (6/48) | 9% (4/45) | primary:18% (8/45) | 42/48 (88%): at least 1 unreported efficacy outcome; 16/26 (62%) at least 1 unreported harm outcome; 40% (19/48) of the trials contained major discrepancies in the specification of primary outcomes |

| Hahn 2002 | all outcomes in RCTs: 100% (2/2) (4 outcomes) | all outcomes in RCTs: 100% (2/2) (10 outcomes) | 40% (6/15) stated which outcome variables were of primary interest | |||

| Vedula 2009 | primary: 33% (4/12) (11/21 outcomes) secondary: 8% (1/12) (55/180 outcomes) | 17% (2/12) (4/21 outcomes) | primary: 42% (5/12) (6/21 primary outcomes and 122/180 secondary outcomes) | (5/28 outcomes) | primary: 50% (6/12) (12/28 outcomes) secondary: 33% (4/12) | For 67% (8/12) reported trials, the primary outcome defined in the published report differed from that described in the protocol. 17% (2/12) failed to distinguish between primary and secondary |

| von Elm 2008 | primary: 26% (24/92) (preliminary results) | primary: 11% (11/101) (preliminary results) | ||||

| Studies that compared registry entries to published reports | ||||||

| Bourgeois 2010 | primary: 82% (70/85) | |||||

| Charles 2009 | Only compared report to design article | |||||

| Ewart 2009 | Primary: 69% (76/110) Secondary: 30% (33/110) | 5% (5/110) | Primary: 18% (20/110) Secondary: 44% (48/110) | 3% (3/110) | Primary: 9% (10/110) Secondary: 49% (54/110) | In 31% (34/110), a primary outcome had been changed. In 70% (77/110), a secondary outcome had been changed. 42% (20/48) of excluded studies did not record a primary outcome, or the outcome recorded was too vague to use in the registry |

| Mathieu 2009 | primary: 69% (101/147) | 4% (6/147) | primary: 10% (15/147) | primary: 15% (22/147) | 18% (42/234): registered with no or an unclear description of the primary outcome. 31% (46/147): some evidence of discrepancies between the outcomes registered and the outcomes published. 3% (4/147): different timing of assessment | |

Two studies (Chan 2004a; Chan 2004b) found that statistically significant outcomes had higher odds of being fully reported compared to nonsignificant outcomes (range of odds ratios: 2.4 to 4.7).
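To make the odds ratios above concrete, the following is a minimal sketch of how an odds ratio for full reporting and its 95% confidence interval can be computed from a single 2×2 table, using the standard log odds ratio (Woolf) approximation. The counts are hypothetical and chosen only for illustration, the function name `odds_ratio_ci` is not from the source, and the included studies pooled odds ratios across trials, which this sketch does not attempt.

```python
import math


def odds_ratio_ci(sig_full, sig_incomplete, nonsig_full, nonsig_incomplete, z=1.959964):
    """Odds ratio for full reporting (significant vs nonsignificant outcomes).

    Returns (odds_ratio, lower, upper) using the Woolf (log) method.
    All four cells must be positive; no continuity correction is applied.
    """
    cells = (sig_full, sig_incomplete, nonsig_full, nonsig_incomplete)
    if min(cells) <= 0:
        raise ValueError("all four cell counts must be positive")
    odds_ratio = (sig_full * nonsig_incomplete) / (sig_incomplete * nonsig_full)
    se_log_or = math.sqrt(sum(1 / c for c in cells))
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Hypothetical counts of outcomes, NOT data from any included study.
or_value, lower, upper = odds_ratio_ci(60, 40, 30, 50)
print(f"OR {or_value:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

An interval that excludes 1 indicates that, in such a table, statistically significant outcomes had higher odds of being fully reported than nonsignificant ones.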

Factors associated with discrepancies

Table 9 includes the results for factors associated with differences between protocols and published reports. One study suggested that statistical significance of the results could be associated with differences in the primary outcome between protocols and published reports (Vedula 2009). Three studies found that statistical significance was associated with complete reporting (Chan 2004a; Chan 2004b; von Elm 2008).

Table 9. Factors associated with differences between protocol/registry entry and published reports.

| Study | Statistical significance | Funding | Sample size | Other |

| Studies that compared protocols to published reports | ||||

| Blumle 2008 | No correlation between funding and selective reporting of eligibility criteria could be determined | No correlation between sample size and selective reporting of eligibility criteria could be determined | Study design, multicentre, number of treatment groups | |

| Chan 2004a | Statistically significant outcomes had a higher odds of being fully reported compared with nonsignificant outcomes for both efficacy (pooled odds ratio, 2.4; 95% confidence interval [CI], 1.4‐4.0) and harm (pooled odds ratio, 4.7; 95% CI, 1.8‐12.0) data | Regression coefficient 0.34 SE 0.29, p=0.23 | Regression coefficient ‐0.17 SE 0.11, p=0.11 | Number of study centres (p=0.03) |

| Chan 2004b | Fully versus incompletely reported Efficacy outcomes: OR 2.7 (95% CI 1.5–5.0) Harm outcomes: OR 7.7 (95% CI 0.5–111) | Prevalence of major discrepancies: Jointly funded 35% (7/20) CIHR funded 43% (12/28) | Published in a general medical journal; speciality journal; Investigators responded to follow‐up survey | |

| Vedula 2009 | Trials that presented findings that were not significant (P≥0.05) for the protocol‐defined primary outcome in the internal documents either were not reported in full or were reported with a changed primary outcome. The primary outcome was changed in the case of 5/8 published trials for which statistically significant differences favoring gabapentin were reported. For 3/4 studies in which the primary outcome was unchanged, statistically significant results were reported. For the remaining study, with nonsignificant findings, the results were published as part of a pooled analysis. For five of the eight studies with a changed primary outcome, statistically significant findings were reported, and four of the five were published as full‐length articles | |||

| von Elm 2008 | OR 4.1 (95% CI 1.8 to 9.7) for complete reporting (preliminary results) | OR 1.0 (95% CI 0.3 to 4.0) for complete reporting (preliminary results) | This was considered for full publication | Time to event versus other, primary versus secondary, efficacy versus harm |

| Studies that compared registry entries to published reports | ||||

| Bourgeois 2010 | Industry‐funded trials reported positive outcomes in 85.4% of publications, compared with 50.0% for government‐funded trials and 71.9% for nonprofit or nonfederal organization‐funded trials (P<0.001). Trials funded by nonprofit or nonfederal sources with industry contributions were also more likely to report positive outcomes than those without industry funding (85.0% vs. 61.2%; P=0.013). Differences in primary outcome reporting were associated with funding source: industry 8.7% (4/46), government 40.0% (4/10), nonprofit/nonfederal 24.1% (7/29) (p=0.03). | |||

| Charles 2009 | differences between the assumptions and the results were large and small in roughly even proportions, whether the results were significant or not. | The size of the trial and the differences between the assumptions for the control group and the results did not seem to be substantially related (rho=0.03, 95% CI −0.05 to 0.15). | ||

| Ewart 2009 | Although not part of our research question, we noted that there were almost no differences in outcomes when comparing trials funded by pharmaceutical companies with those that had noncommercial sponsorship | |||

| Mathieu 2009 | For the 46 articles with a discrepancy between the registry and the published article, the influence of this discrepancy could be assessed only in half (23/46). Among them, 19 of 23 (82.6%) had a discrepancy that favored statistically significant results (ie, a new, statistically significant primary outcome was introduced in the published article or a nonsignificant primary outcome was omitted or not defined as the primary outcome in the published article). | General medical and speciality journals | ||

In one study, no correlation between funding or sample size and selective reporting of eligibility criteria could be determined (Blumle 2008). Chan 2004a found that a change in the primary outcome was not associated with funding or sample size. Chan 2004b found major discrepancies in 35% (7/20) of jointly funded (industry and the Canadian Institutes of Health Research (CIHR)) and 43% (12/28) of CIHR‐funded RCTs. von Elm 2008 found that funding was not associated with complete reporting.

Comparison of trial registry entries to published reports

Eligibility criteria, methodological information, authors, funding, statistical analyses and subgroup analyses

None of the cohort studies that compared trial registry entries to published reports considered differences in eligibility criteria, methodological information, authors, funding, statistical analyses or subgroup analyses.

Sample size

One study (Charles 2009) compared sample size from trial registry to published report (Table 5) and found that, of 96 trials where an expected sample size was given in the online database, the sample size was the same in 48% (46/96) of RCTs. Ten of 215 trials (5%) did not report any sample size calculation. They also found that the parameters for the sample size calculation were not included in trial registries.

Outcomes

Table 8 includes results for the three studies that compared differences in outcomes between trial registry entry and published reports (Bourgeois 2010; Ewart 2009; Mathieu 2009). These studies found that the primary outcome was the same in the trial registry as in the publication for 69% (76/110 and 101/147) to 82% (70/85) of RCTs, and one study found it was the same for secondary outcomes in 30% (33/110) of RCTs (Ewart 2009). Two studies (Ewart 2009; Mathieu 2009) considered the downgrading of an outcome that was a primary in the trial registry but which was included as a secondary outcome in the published report, and found that this happened in 4% (6/147) and 5% (5/110) of RCTs. These studies also considered primary outcomes that were included in trial registries and omitted from published reports and found that this occurred in 10% (15/147) and 18% (20/110) of RCTs. One study (Ewart 2009) found that secondary outcomes were omitted in 44% (48/110) of published reports. Both studies also found that outcomes that had not been included in the trial registry were included in the published reports for 9% (10/110) and 15% (22/147) of RCTs, and one study (Ewart 2009) found that this occurred in 49% (54/110) of RCTs for secondary outcomes. Ewart 2009 considered outcomes that were upgraded from secondary in the trial registry to primary in the published report, and found that this occurred in 3% (3/110) of RCTs.
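Because results in this section are given as a percentage followed by its fraction, for example 4% (6/147), the two can be checked against each other mechanically. The following is a minimal sketch of such a consistency check; the function name `check_percentages`, the regular expression and the one percentage point tolerance (to allow for rounding) are assumptions made for illustration, and the third entry in the sample string is deliberately inconsistent.

```python
import re

# Matches strings such as "18% (20/110)" or "33.3% (4/12)".
PERCENT_FRACTION = re.compile(r"(\d+(?:\.\d+)?)%\s*\((\d+)/(\d+)\)")


def check_percentages(text, tolerance=1.0):
    """Return entries whose stated percentage disagrees with the fraction.

    Each returned item is (reported_pct, numerator, denominator, recomputed_pct).
    The tolerance is in percentage points and is an assumed allowance for rounding.
    """
    problems = []
    for pct, num, den in PERCENT_FRACTION.findall(text):
        reported, n, d = float(pct), int(num), int(den)
        if d == 0:
            problems.append((reported, n, d, None))
            continue
        recomputed = 100 * n / d
        if abs(recomputed - reported) > tolerance:
            problems.append((reported, n, d, recomputed))
    return problems


# The first two entries come from the paragraph above; the last is an
# intentionally inconsistent example (numerator larger than denominator).
sample = "4% (6/147) and 5% (5/110); 86% (32/27)"
for reported, n, d, recomputed in check_percentages(sample):
    print(f"{reported:g}% ({n}/{d}) recomputes to {recomputed:.1f}%")
```

A check of this kind only flags arithmetic inconsistencies; it cannot tell whether the percentage or the fraction is the one in error, so flagged entries still need to be compared with the source study.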

Factors associated with discrepancies

Table 9 includes the results for factors associated with differences between registry entries and published reports. Two studies considered statistical significance; one found that differences between the assumptions and the results were large and small in roughly even proportions, whether the results were significant or not (Charles 2009). Another study found that, of the 23 articles in which the influence of the discrepancy could be assessed, 83% (19/23) had a discrepancy that favoured statistically significant results (ie, a new, statistically significant primary outcome was introduced in the published article or a nonsignificant primary outcome was omitted or not defined as the primary outcome in the published article) (Mathieu 2009). Two studies investigated funding; one study found that industry funding was associated with reporting of positive outcomes for the new drug (Bourgeois 2010) and another study found that there was no difference in outcomes in industry and non‐industry funded trials (Ewart 2009). Charles 2009 found that the size of the trial and the differences between the assumptions for the control group and the results did not seem to be substantially related (rho=0.03, 95% confidence interval: −0.05 to 0.15).

Explanation of discrepancies

Twelve studies did not comment on the reasons for discrepancies (Shapiro 2000; Scharf 2006; Pich 2003; Hahn 2002; Gandhi 2005; Ewart 2009; Charles 2009; von Elm 2008; Vedula 2009; Mathieu 2009; Soares 2004; Bourgeois 2010). Two studies stated that no reasons for discrepancies were given in any of the trial reports within the cohort (Al‐Marzouki 2008; Blumle 2008). Two studies sent questionnaires to trialists to determine reasons for discrepancies. Chan 2004b found that among 78 trials with any unreported outcomes (efficacy or harm or both) they received 24 survey responses (31%) that provided reasons for not reporting outcomes for efficacy (23 trials) or harm (ten trials) in their published articles. The most common reasons for not reporting efficacy outcomes were lack of statistical significance (7/23 trials), journal space restriction (7/23) and lack of clinical importance (7/23). Similar reasons were provided for harm data. Chan 2004a found that the most common reasons given by 29 investigators for not reporting efficacy outcomes were a lack of clinical importance (18 trials) and a lack of statistical significance (13 trials). These two reasons were also provided by five of 11 survey respondents for harm outcomes. Investigators for three of six studies with unreported primary outcomes provided reasons for omission: to be submitted for future publication (two trials) and not relevant for published article (one trial).

Discussion

Summary of main results

The results for the comparisons of published reports with both trial registries and protocols indicate that there are often discrepancies between the plans for a trial and what is eventually published, for many aspects of RCTs. Explanations for these are not stated in the published reports. The majority of research has focused on discrepancies in outcomes and their association with statistical significance.

Sixteen studies were included in this Cochrane methodology review, with 12 comparing protocols to published reports and four comparing trial registry entries to published reports. Three studies focused on discrepancies in eligibility criteria; two focused on methods of randomisation, allocation concealment and blinding; one focused on authors; two focused on funding; six focused on sample size and sample size calculation; five focused on the analysis plan and nine focused on outcomes.

This review shows that there are many different discrepancies between protocols and trial registry entries and the subsequent published reports. However, we have not identified any study that has reported a comparison of all three sources (protocols, trial registries and published reports) in the same cohort of RCTs, although we know of one ongoing study that is investigating this (Chan 2010). This is important, in part because it will identify whether information in trial registries is updated when protocol amendments are made, and whether reasons are included to justify these changes.

The full statistical analysis plan is often not included in the protocol and unless this information is obtained from the trialist it would be difficult to tell if any changes had been made to it. Several studies found that there were discrepancies between what was written about the statistical analyses in the protocol or trial registry entry and what was in the published report.

The SPIRIT initiative (Chan 2008b) will produce guidelines to standardise protocols, which could have an impact on the information to be included in trial registries. Trial registration should be enforced, and should include all 20 recommended items from the WHO minimum data set (WHO 2006) and allow changes to be documented with reasons and dates for these changes. However, Moja 2009 found that compliance of information in trial registries is unsatisfactory and largely incomplete even though many agree that transparency is paramount (see Implications for systematic reviews and evaluations of healthcare for further information on this study). The studies that have compared trial registries to published reports are more recent and have been facilitated by the ICMJE requirements in 2004 that trials would have to be registered before they commenced if researchers wanted to publish in their journals (De Angelis 2004).

The updated CONSORT statement now advises (in item 3b) that important changes to methods after trial commencement (such as eligibility criteria) should be included in the published report along with the reasons for these changes. Furthermore, item 6b in the CONSORT statement advises that any changes to trial outcomes after the trial commenced, and the reasons for these changes, should be included. No other items state that changes to other aspects, for example statistical analysis, should be reported. However, CONSORT urges completeness, clarity, and transparency of reporting, which simply reflects the actual trial design and conduct (Schulz 2010). CONSORT 2010 also now requires authors to include details of trial registration in the abstract of a randomized trial (Schulz 2010).

Overall completeness and applicability of evidence

Although not every included cohort study investigated all aspects of RCTs, across the 16 included studies all aspects listed in the protocol of this review have been considered. These studies have been conducted in different countries and cover a wide variety of RCTs. Despite being heterogeneous, the included studies reach broadly similar conclusions: there are often discrepancies between protocols or trial registry entries and published reports.

Quality of the evidence

The majority of included cohort studies had a low risk of bias for follow up. However, the authors of some cohort studies were not given permission by the trialists of some RCTs to access their protocols, which raises the issue of whether discrepancies may differ in these RCTs. Some cohort studies excluded RCTs that were not registered or those where a primary outcome was not explicitly identified or registered. Again, in such instances, it would be impossible to know if there were any changes made between protocol or trial registry and the published report and discrepancies may be more or less prevalent in these cases.

Although many of the included cohort studies were deemed at low risk of bias for selective reporting as the outcomes stated in the methods section were fully reported, there are many outcomes that could have been measured and were not, which is a missed opportunity. For example, only one included study addressed authorship and only two addressed methods such as allocation concealment. Authors have been contacted to check that all comparisons have been reported.

Limitations

There are limitations to this review. For example, eight studies are still awaiting assessment and should contribute more information to the body of evidence when this review is updated. There were also problems in combining studies to provide overall summary estimates and so the results of the studies had to be discussed narratively.

Potential biases in the review process

No potential biases have been identified during the review process.

Agreements and disagreements with other studies or reviews

In a previous review (Dwan 2008), publication bias and outcome reporting bias were considered and it was found that statistically significant outcomes were more likely to be fully reported. That review also identified discrepancies in the primary outcome between the protocol and published report for five included cohort studies. This Cochrane methodology review has updated that information and shows that discrepancies in outcomes occur frequently, with no explanation of the changes in the published reports.

Other studies, which were not eligible for this review, have compared information submitted to the Food and Drug Administration and regulatory agencies to published reports and have also identified discrepancies (Bardy 1998; Melander 2003; Rising 2008; Turner 2008). These studies were excluded because they did not compare protocols or trial registry entries to published reports. Information submitted to the Food and Drug Administration and regulatory agencies may also differ from protocols and trial registry entries, although we know of no study that has considered this.

Authors' conclusions

Implication for methodological research

It would be of interest to see what effect the differences between protocol and registry entries and subsequent reports might have on the conclusions presented in trial reports and the impact this has on the decisions people make after reading those reports. Few of the authors of the cohort studies in this review asked the original trialists for reasons for discrepancies (Chan 2004a; Chan 2004b). One study, which is awaiting classification, found that trialists seemed generally unaware of the implications for the evidence base of not reporting all outcomes and protocol changes (Smyth 2010). Future work might also involve looking at a cohort of systematic reviews, and contacting the authors of included RCTs to obtain the protocols for these studies, to see if there are any discrepancies and to examine how this impacts on the conclusions of the reviews.

Acknowledgements

The authors would like to thank Sarah Chapman and Natalie Yates for their help with the search strategy, Mike Clarke for his valuable input and the referees for their helpful comments which improved the review.

Appendices

Appendix 1. Search Strategy

OvidSP MEDLINE (1950 to August 2010)

1 Clinical Protocols/

2 protocol$.ti,ab.

3 regist$.ti,ab.

4 Registries/

5 or/1‐4

6 Randomized Controlled Trials as Topic/

7 clinical trials as topic/

8 rct.ti,ab.

9 rcts.ti,ab.

10 (randomized or randomised).ti,ab.

11 trial$.ti,ab.

12 or/6‐11

13 "Bias (Epidemiology)"/

14 publication bias/

15 (unreported or "incompletely reported" or "partially reported" or "fully reported" or "not reported" or "non‐report$" or missing or omission or omit$ or "not publish$").ti,ab.

16 ((selectiv$ or suppress$ or non$ or bias$) adj5 (report$ or publish$ or publication$)).ti,ab.

17 or/13‐16

18 (discrepan$ adj5 (protocol$ or regist$)).ti,ab.

19 (compar$ adj8 publication$ adj8 protocol$).ti,ab.

20 (compar$ adj8 protocol$ adj8 publication$).ti,ab.

21 (publication$ adj8 protocol$ adj8 compar$).ti,ab.

22 (publication$ adj8 compar$ adj8 protocol$).ti,ab.

23 (protocol$ adj8 publication$ adj8 compar$).ti,ab.

24 (protocol$ adj8 compar$ adj8 publication$).ti,ab.

25 (compar$ adj8 publication$ adj8 regist$).ti,ab.

26 (compar$ adj8 regist$ adj8 publication$).ti,ab.

27 (publication$ adj8 regist$ adj8 compar$).ti,ab.

28 (publication$ adj8 compar$ adj8 regist$).ti,ab.

29 (regist$ adj8 publication$ adj8 compar$).ti,ab.

30 (regist$ adj8 compar$ adj8 publication$).ti,ab.

31 or/18‐30

32 5 and 12 and 17

33 12 and 31

34 32 or 33

35 cochrane database of systematic reviews.jn.

36 34 not 35
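The way the numbered lines of the MEDLINE strategy combine can be illustrated with ordinary set operations. This is a toy sketch only: the record identifiers are invented for illustration and are not search results, and the variable names simply mirror the line numbers above.

```python
# Hypothetical record IDs retrieved by each summary line (invented values).
line_5 = {1, 2, 3, 4, 9}      # or/1-4: protocol or registry terms
line_12 = {2, 3, 4, 5, 6, 9}  # or/6-11: randomised trial terms
line_17 = {3, 4, 7, 9}        # or/13-16: bias and non-reporting terms
line_31 = {5, 8}              # or/18-30: discrepancy and comparison phrases
line_35 = {9}                 # Cochrane Database of Systematic Reviews records

line_32 = line_5 & line_12 & line_17  # 32: 5 and 12 and 17
line_33 = line_12 & line_31           # 33: 12 and 31
line_34 = line_32 | line_33           # 34: 32 or 33
line_36 = line_34 - line_35           # 36: 34 not 35

print(sorted(line_36))
```

In this toy example a record is retained if it matches all three concept blocks, or if it matches the trial block together with one of the specific comparison phrases, provided that it is not from the Cochrane Database of Systematic Reviews.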

OvidSP EMBASE (1980 to August 2010)

1 Clinical Protocols/

2 protocol$.ti,ab.

3 regist$.ti,ab.

4 Register/

5 or/1‐4

6 randomized controlled trial/

7 clinical trial/

8 rct.ti,ab.

9 rcts.ti,ab.

10 (randomized or randomised).ti,ab.

11 trial$.ti,ab.

12 or/6‐11

13 publishing/

14 (unreported or "incompletely reported" or "partially reported" or "fully reported" or "not reported" or "non‐report$" or missing or omission or omit$ or "not publish$").ti,ab.

15 ((selectiv$ or suppress$ or non$ or bias$) adj5 (report$ or publish$ or publication$)).ti,ab.

16 or/13‐15

17 (discrepan$ adj5 (protocol$ or regist$)).ti,ab.

18 (compar$ adj8 publication$ adj8 protocol$).ti,ab.

19 (compar$ adj8 protocol$ adj8 publication$).ti,ab.

20 (publication$ adj8 protocol$ adj8 compar$).ti,ab.

21 (publication$ adj8 compar$ adj8 protocol$).ti,ab.

22 (protocol$ adj8 publication$ adj8 compar$).ti,ab.

23 (protocol$ adj8 compar$ adj8 publication$).ti,ab.

24 (compar$ adj8 publication$ adj8 regist$).ti,ab.

25 (compar$ adj8 regist$ adj8 publication$).ti,ab.

26 (publication$ adj8 regist$ adj8 compar$).ti,ab.

27 (publication$ adj8 compar$ adj8 regist$).ti,ab.

28 (regist$ adj8 publication$ adj8 compar$).ti,ab.

29 (regist$ adj8 compar$ adj8 publication$).ti,ab.

30 or/17‐29

31 5 and 12 and 16

32 12 and 30

33 31 or 32

34 "cochrane database of systematic reviews".jn.

35 "Cochrane database of systematic reviews (online)".jn.

36 34 or 35

37 33 not 36
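Lines 18 to 29 of the EMBASE strategy list every ordering of three terms joined by the adj8 proximity operator. As a hedged illustration, lines of this form could be generated as follows; the function name `proximity_lines` and its parameters are hypothetical and are not part of Ovid or of the original strategy, and the lines may come out in a different order from that shown above.

```python
from itertools import permutations

def proximity_lines(terms, distance=8, fields="ti,ab"):
    """Return one Ovid-style proximity line for each ordering of the terms."""
    joiner = f" adj{distance} "
    return [f"({joiner.join(order)}).{fields}." for order in permutations(terms)]

# Six orderings for the protocol comparison and six for the registry comparison.
protocol_lines = proximity_lines(["compar$", "publication$", "protocol$"])
registry_lines = proximity_lines(["compar$", "publication$", "regist$"])

for line in protocol_lines + registry_lines:
    print(line)
```

Generating the orderings in this way might help to avoid typing errors, such as a missing bracket or full stop, when a strategy is adapted for another database.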

Cochrane Methodology Register Issue 3 2010 (Wiley InterScience (Online))

#1 (protocol* OR regist*):ti in Methods Studies

#2 (protocol* OR regist*):ab in Methods Studies

#3 #1 OR #2

#4 (randomised OR randomized OR rct OR rcts OR trial*):ti in Methods Studies

#5 (randomised OR randomized OR rct OR rcts OR trial*):ab in Methods Studies

#6 #4 OR #5

#7 "bias in trials":kw in Methods Studies

#8 ("study identification" next "publication bias"):kw in Methods Studies

#9 (unreported OR "incompletely reported" OR "partially reported" OR "fully reported" OR "not reported" OR "non reported" OR "non‐reported" OR "non reporting" OR "nonreporting" OR missing OR omission OR "not published" OR "not publishing"):ti in Methods Studies

#10 (unreported OR "incompletely reported" OR "partially reported" OR "fully reported" OR "not reported" OR "non reported" OR "non‐reported" OR "non reporting" OR "nonreporting" OR missing OR omission OR "not published" OR "not publishing"):ab in Methods Studies

#11 omit*:ti in Methods Studies

#12 omit*:ab in Methods Studies

#13 ((selectiv* OR suppress* OR non* OR bias*) NEAR/5 (report* OR publish* OR publication*)):ti in Methods Studies

#14 ((selectiv* OR suppress* OR non* OR bias*) NEAR/5 (report* OR publish* OR publication*)):ab in Methods Studies

#15 #7 OR #8 OR #9 OR #10 OR #11 OR #12 OR #13 OR #14

#16 (discrepan* NEAR/5 (protocol* OR regist*)):ti in Methods Studies

#17 (discrepan* NEAR/5 (protocol* OR regist*)):ab in Methods Studies

#18 (compar* NEAR/8 publication* NEAR/8 protocol*):ti in Methods Studies

#19 (compar* NEAR/8 publication* NEAR/8 protocol*):ab in Methods Studies

#20 (compar* NEAR/8 publication* NEAR/8 regist*):ti in Methods Studies