Abstract

The two catecholamines, noradrenaline and dopamine, have been shown to play comparable roles in behavior. Both noradrenergic and dopaminergic neurons respond to cues predicting reward availability and novelty. However, even though both are thought to be involved in motivating actions, their roles in motivation have seldom been directly compared. We therefore examined the activity of putative noradrenergic neurons in the locus coeruleus and putative midbrain dopaminergic neurons in monkeys cued to perform effortful actions for rewards. The activity in both regions correlated with engagement with a presented option. By contrast, only noradrenaline neurons were also (i) predictive of engagement in a subsequent trial following a failure to engage and (ii) more strongly activated in nonrepeated trials, when cues indicated a new task condition. This suggests that while both catecholaminergic neurons are involved in promoting action, noradrenergic neurons are sensitive to task state changes, and their influence on behavior extends beyond the immediately rewarded action.

Keywords: dopamine, error, motivation, noradrenaline, state change

Introduction

Catecholaminergic neuromodulation is thought to be critical for numerous aspects of behavior, including motivation, learning, decision-making, and behavioral flexibility (Robbins and Roberts 2007; Doya 2008; Sara 2009; Robbins and Arnsten 2009; Sara and Bouret 2012). Both noradrenaline and dopamine neurons respond to novel and salient stimuli and signal predictions of future reward (Schultz et al. 1997; Bouret and Sara 2004; Ravel and Richmond 2006; Berridge 2007; Ventura et al. 2007; Matsumoto and Hikosaka 2009; Bromberg-Martin et al. 2010), and both systems have been implicated in motivating action (Robbins and Everitt 2007; Nicola 2010; Bouret et al. 2012; Varazzani et al. 2015; Jahn et al. 2018; Walton and Bouret 2019). Nonetheless, the specific contributions of dopamine and noradrenaline to these functions remain unclear, in part as their roles have seldom been compared in the same task (but see Bouret et al. 2012 and Varazzani et al. 2015).

Locus coeruleus (LC) noradrenaline-containing neurons have a long-stated role in signaling changes in the state of the world, specifically a change in predictability of the environment (Swick et al. 1994; Vankov et al. 1995; Dalley et al. 2001; Aston-Jones and Cohen 2005; Bouret and Sara 2005; Yu and Dayan 2005). LC neurons are particularly sensitive to unexpected and/or novel stimuli (Foote et al. 1980; Aston-Jones and Bloom 1981; Grant et al. 1988; Sara and Segal 1991; Vankov et al. 1995; Bouret and Sara 2004; Bouret et al. 2012), and the transient activation of LC neurons in response to unexpected stimuli is often thought to facilitate adaptation through an increase in behavioral flexibility (Kety 1972; Bouret and Sara 2005; Dayan and Yu 2006; Einhauser et al. 2008; Nassar et al. 2012; Urai et al. 2017; Muller et al. 2019). In that frame, the magnitude of LC responses to sensory stimuli increases when these stimuli are unexpected and therefore require an updating of the behavior to face the new state of the world. By contrast, perfectly expected stimuli do not indicate a change in the state of the world, and so their presentation should not require the updating of behavior. Hence, the activation of LC neurons would promote the adaptation of behavior in response to a change in the state of the world (Aston-Jones and Cohen 2005; Bouret and Sara 2005; Yu and Dayan 2005). Such a role for noradrenaline in behavioral flexibility has received strong support from pharmacological studies (Devauges and Sara 1990; Tait et al. 2007; McGaughy et al. 2008; Jahn et al. 2018; Jepma et al. 2018).

More recently, noradrenaline function has been extended to include the promotion of effortful actions (Ventura et al. 2008; Bouret and Richmond 2009; Zénon et al. 2014; Varazzani et al. 2015). Indeed, LC neurons are reliably activated when animals initiate an action (Bouret and Sara 2004; Rajkowski et al. 2004; Kalwani et al. 2014). Critically, the magnitude of this activation seems to be related to the amount of effort necessary to trigger the action (Bouret and Richmond 2015; Varazzani et al. 2015). In line with this interpretation, we recently used a pharmacological manipulation to demonstrate directly that, on top of its role in behavioral flexibility, noradrenaline was also causally involved in motivation (Jahn et al. 2018). But the tripartite relationship among LC activity, processing of expected versus unexpected stimuli, and motivation remains unexplored.

To address this issue, we reanalyzed a data set of putative noradrenergic neurons in the LC recorded in monkeys presented with cues signaling how much effort they would need to expend to gain rewards of various sizes (Varazzani et al. 2015). The task was designed such that rejecting an offer meant that the same offer would be presented again on the subsequent trial. Repeated trials constituted a sequence of actions toward the same goal (the reward). A task state change occurs after the goal has been reached and a new offer is presented. The analyses reported by Varazzani et al. (2015) deliberately excluded the repeated trials. Here, by including those trials, we could investigate separately the sensitivity to (i) task state changes by comparing activity in nonrepeated (task state change) versus repeated (no task state change) trials and (ii) motivational processes, by examining the modulation of LC activity by willingness to perform the presented option (engagement) in the current or in the future trials.

To gain further insight on the specific role of noradrenaline as compared with dopamine neurons, we compared the activity of putative noradrenergic neurons in the LC with that of putative dopaminergic neurons from the midbrain (substantia nigra pars compacta and ventral tegmental area, SNc/VTA) in the same paradigm. Indeed, dopamine is also implicated in novelty and information seeking (Horvitz et al. 1997; Schultz et al. 1997; Costa et al. 2014; Bromberg-Martin and Hikosaka 2009; Naudé et al. 2016), as well as playing a prominent role in motivation and action initiation (Walton and Bouret 2019). As for LC noradrenergic neurons, we could examine separately the relation between dopaminergic neurons and sensitivity to task state changes and willingness to perform the presented option.

We found that although the magnitude of the neuronal response at the cue predicted the engagement in effortful actions similarly in the two catecholaminergic systems, only noradrenaline neurons were sensitive to changes in task state, i.e., to the difference between repeated and nonrepeated stimuli. Moreover, while dopamine neurons only responded at the cue onset, noradrenaline cells were also activated after erroneous fixation breaks, in a manner that predicted engagement in the subsequent trial. Taken together, our analyses demonstrate distinct roles for noradrenaline and dopamine in signaling a change in task state and in motivating current or future engagement with effortful actions.

Materials and Methods

Monkeys

Three male rhesus monkeys (Monkey D, 11 kg, 5 years old; Monkey E, 7.5 kg, 4 years old; Monkey A, 10 kg, 4 years old) were used as subjects for the experiments. On testing days (Monday to Friday), they received all their water as reward, and they received water ad libitum on nontesting days. All experimental procedures were designed in association with the Institut du Cerveau et de la Moelle Epiniere (ICM) veterinarians, approved by the Regional Ethical Committee for Animal Experiment (CREEA IDF no. 3) and performed in compliance with the European Community Council Directives (86/609/EEC).

Task

The behavioral paradigm has previously been described in detail in Varazzani et al. (2015). In brief, each monkey sat in a primate chair positioned in front of a monitor on which visual stimuli were displayed. A pneumatic grip (M2E Unimecanique, Paris, France) was mounted on the chair at the level of the monkey’s hands. Water rewards were delivered from a tube positioned between the monkey’s lips. The behavioral paradigm was controlled using the REX system (NIH, MD, USA) and Presentation software (Neurobehavioral Systems, Inc, CA, USA). Eye position was recorded using an eye-tracking system (ISCAN).

The task consisted of squeezing the grip to a minimum imposed force threshold to obtain rewards. Rewards were delivered at the end of each successful trial (Fig. 1A and B). At the beginning of each trial, the subject had to fixate a red dot at the center of the screen before a cue appeared. The cue indicated the minimum amount of force to produce to obtain the reward (3 force levels) and the amount of reward at stake (3 reward levels: 1, 2 and 4 drops of water). After a variable delay (1500 ± 500 ms from cue display), the red dot at the center of the cue turned green (Go signal) and the subject had 1000 ms to initiate the action, that is, to squeeze the clamp at least slightly (the threshold was set to detect any attempt to perform the action). If the monkey reached the minimum force threshold indicated by the cue, the dot turned from green to blue. It remained blue if the effort was maintained. If at least the minimum required effort had been sustained for 500 ± 100 ms, the water reward was delivered.

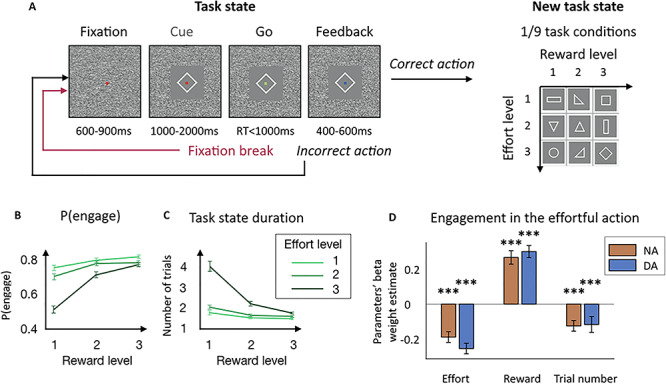

Figure 1.

Task and behavior. (A) Task structure. Monkeys had to squeeze a clamp with a certain minimum intensity to obtain reward of a certain magnitude. During the whole trial, monkeys had to maintain fixation on a dot at the center of the screen. If they broke the fixation, the same trial was repeated from the start after an intertrial interval delay. A trial started with monkeys fixating the red dot and then a cue appeared, indicating the effort and reward levels for the current trial. The dot turned green (Go signal) and monkeys had to squeeze the clamp to the minimum force threshold indicated by the cue. Upon reaching this threshold, the dot turned blue (Feedback) and remained blue as long as monkeys had to keep on squeezing. If monkeys maintained the effort long enough, they received the amount of reward indicated by the cue. If monkeys failed to produce the correct action, the same trial was repeated from the start after an intertrial interval delay. After a correct action, a new task condition was selected among the nine experimental conditions, defined by three levels of effort and three levels of reward, which defined a new task state. (B) Probability to engage with the action as a function of effort and reward levels. Points and error bars represent means for NA and DA computed for each session ± SEM. (C) Mean task state duration. Mean number of trials to perform the correct action and receive the reward. Points and error bars represent means for NA and DA computed for each session ± SEM. (D) Beta weights of the task parameters in the decision to engage with the effortful action. Multilevel logistic regression of the decision to initiate the action by the three experimental task parameters: effort level, reward level and trial number. Bars and error bars represent means of the beta weights ± SEM.
Significant negative effect of effort level (P < 0.001) and trial number (P < 0.001) and significant positive effect of reward level (P < 0.001) in both NA and DA sessions (no difference between NA and DA sessions for all three parameters (P > 0.05)). ***P ≤ 0.001.

Fixation of the central dot had to be maintained through the different phases of the task. A trial was incorrect if: (i) the monkey broke fixation before the reward delivery, (ii) he squeezed the clamp before the go signal, (iii) he failed to squeeze the clamp at all or (iv) at the minimum force threshold, or (v) did not maintain the effort long enough. After an error the same trial was repeated until it was successfully completed. Within a session, the nine combinations of effort and reward conditions were selected with equal probability and presented in a random order. As erroneous trials were repeated, the policy with the highest reward rate was to always engage until satiety.

Electrophysiological Recordings

Single unit recording using vertically movable single electrodes was carried out using conventional techniques. The electrophysiological signals were acquired, amplified (×10 000), digitized, and band-pass filtered (100 Hz–2 kHz) using the OmniPlex system (Plexon). Noradrenergic neuron recordings were performed on monkey A (29 neurons in 15 sessions) and monkey D (63 neurons in 38 sessions) (92 neurons in total). Midbrain dopaminergic neuron recordings were performed on monkey D (56 neurons in 38 sessions, sometimes simultaneously with noradrenergic neuron recordings) and monkey E (28 neurons in 19 sessions) (84 neurons in total).

A precise description of the recording procedures and cell identification methods can be found in the article where the LC and SNc/VTA data used here were originally reported (Varazzani et al. 2015). In brief, LC cells were identified based on anatomical and physiological criteria (low spontaneous rate of activity (<4 Hz) and broad waveform (>2.5 ms)). Based on our reconstruction, a large proportion of the neurons were recorded in the SNc and some were probably recorded in the VTA. Therefore, we chose to label dopaminergic neurons SNc/VTA rather than SNc alone. SNc/VTA cells were identified based on anatomical (MRI scan) and physiological criteria (low spontaneous rate of activity (<8 Hz), broad (>2 ms), and characteristic shape of the waveform) and their response to apomorphine injection (tested on 5/84 neurons). As we used an indirect method for the identification of neurons, we can only state that they are putative noradrenergic and dopaminergic neurons; however, for simplicity, we refer to them as noradrenergic and dopaminergic neurons.

Data Analysis

Data were analyzed with Matlab software (MathWorks). Figures represent data ± standard error of the mean (SEM).

In our analyses, we only considered correct and incorrect trials in which monkeys did not break the fixation before the onset of the cue (noradrenergic neurons recording sessions (NA): 324 trials on average for monkey A and 281 for monkey D, and dopaminergic neurons recording sessions (DA): 314 trials on average for monkey D and 274 for monkey E). Because of the task structure, nonrepeated trials (trials following a task state change) corresponded to the first time monkeys actually saw the cue. We took all those trials and computed the probability that for a given effort and reward level (or a given task condition), subjects would engage with the trial. We considered that monkeys engaged if they maintained fixation throughout the trial and initiated the action, even if it occurred before the Go signal (5% of trials in both NA and DA sessions), with less than the minimum required effort (0% and 0.1% of trials in NA and DA sessions, respectively) or without maintaining the minimum required effort for long enough (8% and 10% of trials in NA and DA sessions, respectively). Although it was possible to fail to engage with a trial by maintaining fixation but not squeezing the clamp, this type of mistake was rare (2% and 1% of trials in NA and DA sessions respectively), and monkeys mostly rejected a trial by breaking fixation (20% of all trials in both NA and DA sessions). Erroneous trials were therefore mainly of two types: (i) monkeys broke the fixation and failed to engage with the trial (no engagement and trial is repeated as the same trial type is presented again: 20% of all trials in both NA and DA sessions) and (ii) monkeys engaged (tried to squeeze the clamp) but did not complete the correct action (engagement but trial is repeated: 17% and 20% of engaged trials, which corresponds to 13% and 15% of all trials in NA and DA sessions respectively). 
Note that this way of computing the overall engagement is different than in Figure 1B, where we compute the probability to engage in each task condition for each individual session before computing the mean. For correct trials, we also measured reaction times as a complementary measure of our assessment of the subject’s motivation to perform the task. RT measures should be taken carefully due to potential interference from factors independent from the subjects’ willingness to engage the task such as which effector was used (left or right arm) or the task design imposing a delay between cue and action. We examined the effects of effort, reward, and trial number on the engagement in the action using a multilevel logistic regression for each session. The three variables were z-scored so that we could compare their standardized beta weights across sessions.
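As an illustration of the engagement measure described above, the following is a minimal Python sketch (the original analyses were run in Matlab, and no code is given in the source). It computes the probability of engaging for each effort and reward combination from a list of trials. The function name, the tuple layout, and the toy data are all hypothetical.

```python
from collections import defaultdict

def engagement_probability(trials):
    """Return P(engage) for each (effort, reward) task condition.

    `trials` is an iterable of (effort, reward, engaged) tuples, where
    `engaged` is True when the action was initiated on that trial.
    This layout is an assumption made for the sketch.
    """
    counts = defaultdict(lambda: [0, 0])  # condition -> [n_engaged, n_total]
    for effort, reward, engaged in trials:
        counts[(effort, reward)][0] += int(engaged)
        counts[(effort, reward)][1] += 1
    return {cond: n_eng / n_tot for cond, (n_eng, n_tot) in counts.items()}

# Hypothetical toy data: (effort level, reward level, engaged?)
trials = [(1, 4, True), (1, 4, True), (3, 1, False), (3, 1, True)]
probs = engagement_probability(trials)
```

In this toy example the low-effort, high-reward condition has a higher engagement probability than the high-effort, low-reward condition, which is the direction of the behavioral effect reported in the text.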

A similar approach was used to examine how task conditions influenced neuronal activity in different task epochs. To assess the effect of task conditions on neurons’ activity at the time of cue onset, we used a standard window from 0 to 500 ms from cue onset (Bouret and Richmond 2009; Varazzani et al. 2015). When we looked at these effects in the precue period, we used a window from −500 to 0 ms from cue onset. Neurons’ activity was measured in firing rates (spikes per second) and was z-scored for each session to compare the activity across neurons. First, the effects of the task factors: effort, reward and trial number in a session on neurons’ activity were estimated using a multilevel linear regression for each neuron. Second, we looked at the sensitivity to the z-scored probability to engage given the task condition on neurons’ firing rates using a linear regression. Finally, we assessed the relationship between neurons’ firing rates and engagement on a trial-by-trial basis by running a logistic regression of neurons’ firing rates on engagement (1 = engage, 0 = does not engage).
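The z-scoring and regression steps can be sketched as follows, under simplifying assumptions. This Python example uses a single regressor (reward level) and ordinary least squares, whereas the analyses described above included several task factors in a multilevel regression. The helper names, the use of the population standard deviation, and the toy spike counts are assumptions made for illustration.

```python
from statistics import mean, pstdev

def zscore(values):
    """Center values on their mean and scale by the population SD (assumed choice)."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]

def ols_slope(x, y):
    """Slope of the least-squares line of y on a single regressor x."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

# Hypothetical spike counts in the 0-500 ms window after cue onset
spike_counts = [2, 3, 5, 4, 6, 7]
window_s = 0.5
rates = [c / window_s for c in spike_counts]   # spikes per second
reward = [1, 1, 2, 2, 4, 4]                    # drops of water

# Standardized beta weight: both variables are z-scored before regression
beta_reward = ols_slope(zscore(reward), zscore(rates))
```

Because both variables are standardized, the slope is unitless, which is what allows beta weights to be compared across regressors and across neurons.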

When we looked at the effect of the trial repetition (here referred to as “task state change”) on neuronal activity, we first looked at whether the fact that the trial was repeated (trial repetition = 1) or not (trial repetition = 0) affected the sensitivity of neurons to the task factors (effort, reward and trial number) at the time of the cue. To do so, we ran a linear regression with the three task factors, the trial repetition and the three interactions between the task factors and the trial repetition (trial repetition = 0 or 1) onto the trial-by-trial neurons’ activity. A significant interaction would mean that the task state change (in nonrepeated trials) would increase or decrease the sensitivity to the task factor. We then wanted to assess the conjoint effect of engagement and task state change on neurons’ firing rates over and above the effect of effort and reward levels. To do so, we ran a multilevel linear regression taking into account the task condition variability. In other words, we removed from neurons’ firing rates the effect of the task condition using a mixed model:

$$\text{firing rate} = \beta_0 + \beta_{\text{task condition}} + \sum_i \beta_i x_i$$

where β0 is a constant, βtask condition is a constant fitted for each combination of effort and reward level (9 possibilities), xi is the experimental factor and βi is its weight in the linear regression (e.g., engagement, task state change, interaction). When looking at the effect of engagement and task state change at cue onset, we tested the following experimental factors: engagement, task state change and interaction between effects. We then added to the regression the following confounds: trial number, interaction between trial number and engagement and interaction between trial number and task state change. All results hold when adding the confounds.

We then moved on to assess whether noradrenergic and dopaminergic neurons were activated before the fixation break. We only considered fixation breaks that occurred after the display of the cue. We compared firing rates from 600 ms before the fixation break to 300 ms after (in 300 ms windows). For all analyses at fixation break, we only included sessions during which there were more than 20 fixation break events after the onset of the cue (91% of NA sessions and 89% of DA sessions) to ensure that we had enough statistical power to perform the linear regressions (Bouret et al. 2012). Delays between the onset of the cues and fixation break events followed a skewed distribution with a median of 845 ms for NA sessions and 713 ms for DA sessions (statistically different, t-test on the mean of the log-transformed distributions: P < 0.001). To ensure that the activity at the fixation break was not contaminated by the cue response, we also looked only at fixation break events that occurred at least 500 ms after the cue onset (83% of NA sessions and 75% of DA sessions). However, all main results were similar both with and without exclusion of the early fixation break events. To assess whether neurons were activated at the fixation break, we compared the difference in firing rate before and after the fixation break and the % of change in firing rate (by dividing by the firing rate before the fixation break). We ran a similar analysis to assess whether neurons were activated before the fixation break. When looking at the modulation of the evoked activity at fixation break, we used the same methodological approach as for the analysis of activity at cue onset. When looking at the effect of engagement in the next trial at fixation break, we only tested the experimental factor: engagement in the next trial. 
We then added to the regression the following confounds: task state change (in the current trial), trial number, interaction between the effect of engagement in the next trial and task state change and interaction between the effect of engagement in the next trial and trial number. All results hold when adding the confounds. To assess the size of the effect of engagement in the next trial, we ran the linear regression of the effect of engaging in the next trial while taking into account the task condition on the non-z-scored firing rate of neurons at fixation break and divided the regression weight (difference between engage and nonengage conditions) by the fixed intercept (mean firing rate across both conditions).
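The two normalized quantities described in this paragraph, the percentage change in firing rate around the fixation break and the effect size of engagement in the next trial, reduce to simple ratios. The Python sketch below shows one way to write them; the function names and the example numbers are hypothetical and are not taken from the data.

```python
def percent_change(rate_before, rate_after):
    """Percentage change in firing rate relative to the pre-event rate."""
    if rate_before == 0:
        raise ValueError("baseline firing rate is zero; percent change is undefined")
    return 100.0 * (rate_after - rate_before) / rate_before

def relative_effect(beta_engage_next, intercept):
    """Regression weight expressed as a fraction of the fixed intercept.

    The intercept stands for the mean firing rate across both conditions,
    so the ratio gives the engage vs. nonengage difference in relative terms.
    """
    return beta_engage_next / intercept

# Hypothetical values in spikes per second
change = percent_change(rate_before=2.0, rate_after=3.0)
effect = relative_effect(beta_engage_next=0.5, intercept=2.5)
```

Dividing by the baseline or by the intercept makes the measures comparable across neurons with different mean firing rates, at the cost of being unstable when the denominator is close to zero.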

For sliding window analyses, we counted spikes in a 200 ms test window that was moved in 25 ms increments around the onset of the cue (from −400 to 1000 ms) and around the fixation break (from −400 to 500 ms). Note that the x-axis of the figures corresponds to the center of the test window at each time point. At each point, we performed the linear regression of the z-scored regressors of interest on the z-scored firing rate for every unit recorded. Sliding window analyses are shown for illustrative purpose only, and statistics were performed on standard windows around cue and fixation break.
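A minimal Python sketch of the sliding window spike count is given below. It assumes that the stated range (−400 to 1000 ms) refers to window centers and that each window is half-open; neither detail is specified in the text. The function name and the toy spike times are hypothetical.

```python
def sliding_window_counts(spike_times_ms, start_ms, stop_ms,
                          width_ms=200, step_ms=25):
    """Count spikes in windows whose centers run from start_ms to stop_ms.

    Each window covers [center - width/2, center + width/2).
    Returns the list of window centers and the matching spike counts.
    """
    centers, counts = [], []
    center = start_ms
    while center <= stop_ms:
        lo, hi = center - width_ms / 2, center + width_ms / 2
        counts.append(sum(lo <= t < hi for t in spike_times_ms))
        centers.append(center)
        center += step_ms
    return centers, counts

# Hypothetical spike times (ms) relative to cue onset for one trial
spikes = [-350, -20, 30, 80, 120, 400]
centers, counts = sliding_window_counts(spikes, -400, 1000)
```

Because consecutive windows overlap by 175 ms, neighboring points are not independent, which is consistent with the sliding window plots being used for illustration rather than for statistics.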

Second-level analyses were performed by comparing the distributions of the standardized beta weights against zero or other distributions (paired t-test and unpaired t-test, respectively, or ANOVA). Statistical reports include means of the distribution ± standard error of the mean, t-values or F-values, degrees of freedom, and P-values.
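The comparison of a distribution of beta weights against zero can be sketched as a one-sample t statistic. The Python example below returns the mean, the SEM, the t-value, and the degrees of freedom; it omits the P-value, which would require the t distribution from a statistics library. The function name and the beta weights are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev

def t_against_zero(betas):
    """Mean, SEM, t-value and degrees of freedom for a test against zero."""
    n = len(betas)
    m = mean(betas)
    sem = stdev(betas) / sqrt(n)   # sample SD divided by sqrt(n)
    return m, sem, m / sem, n - 1

# Hypothetical standardized beta weights, one per neuron
betas = [0.10, -0.02, 0.07, 0.03, 0.12]
m, sem, t, df = t_against_zero(betas)
```

With one beta weight per neuron, the degrees of freedom equal the number of neurons minus one, which matches the t(91) and t(83) reported for the 92 noradrenergic and 84 dopaminergic neurons.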

Results

Behavior

Three monkeys were trained to perform a task in which visual cues indicated the amount of effort (3 effort levels) that was required to obtain a reward (3 reward levels) (Fig. 1A). Effort and reward levels were manipulated independently across the nine task conditions. On a given trial, monkeys had to fixate the center of the screen where the cue was displayed. After seeing this cue, they could either engage in the effortful action (whether or not the action was correctly executed) or not engage and break fixation (the proportion of trials where monkeys maintained fixation and omitted the response was negligible). Importantly, unsuccessful trials were repeated, whether they resulted from a fixation break or from a failure to produce the correct response (see Materials and Methods and Fig. 1A). After a correct response, a new task condition was selected among the nine possibilities.

The monkeys’ willingness to engage in the task, measured as the attempt to squeeze the clamp after seeing the cue (Fig. 1B), as well as the average number of trials it took them to produce the correct action (Fig. 1C) were clearly affected by the effort and reward levels (task condition) of the trial. In sessions in which either noradrenergic or dopaminergic neurons were recorded, the likelihood of engagement in the effortful action was negatively affected by the effort level (NA: β = −0.19 ± 0.03, t(91) = −6.19, P < 0.001; DA: β = −0.26 ± 0.03, t(83) = −8.43, P < 0.001) and positively modulated by the reward level (NA: β = 0.27 ± 0.04, t(91) = 6.93, P < 0.001; DA: β = 0.31 ± 0.04, t(83) = 8.78, P < 0.001). Moreover, monkeys’ engagement was negatively modulated by the trial number (NA: β = −0.13 ± 0.03, t(91) = −4.11, P < 0.001; DA: β = −0.12 ± 0.05, t(83) = −2.58, P < 0.001) (Fig. 1D). Note that since the regressors were z-scored when performing the linear analysis, we can compare their beta weights’ values. There was no significant difference between effort level, reward level, and trial number beta weights in engagement across NA and DA recording sessions (P = 0.13, P = 0.52 and P = 0.88, respectively). This was confirmed by a 2-way ANOVA measuring the effect of task factor (effort and reward) and recording type (NA or DA) onto –β(effort) and β(reward): no main effect of recording session type (F(1,348) = 2.14, P = 0.15) and no interaction (F(1,348) = 0.23, P = 0.63), meaning that there was no difference in the way that engagement was affected by the task factors in NA and DA recording sessions. Moreover, we found no reliable main effect of task factor (F(1,348) = 3.35, P = 0.07), meaning there was no difference in the way that the effort and reward levels affected engagement in both types of recordings.

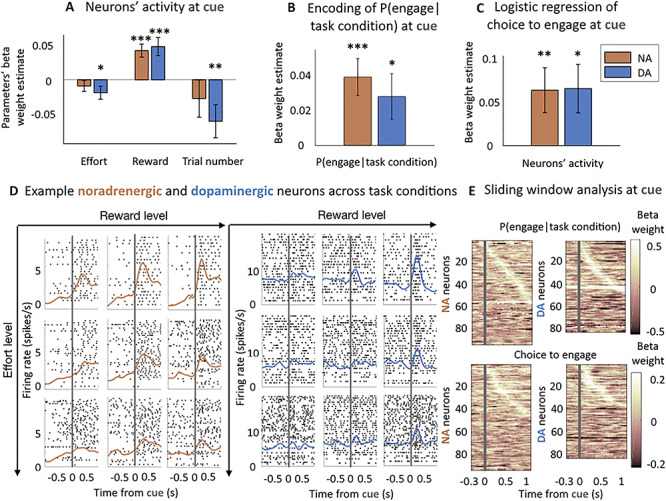

Noradrenergic and Dopaminergic Neurons’ Activity Reflects Monkeys’ Engagement in the Task

We have seen previously that the task factors (i.e., effort level, reward level, and trial number) influenced the probability of monkeys to engage with the effortful action. Therefore, we first measured the influence of these task factors on neurons’ activity at the time of cue, again using GLMs. As all regressors were z-scored in our linear analysis, we can assess (and compare) the sensitivity to a task factor by the absolute (and relative) value of its beta weight. Dopaminergic neurons’ activity was significantly positively modulated by reward level (β = 0.05 ± 0.01, t(83) = 3.67, P < 0.001, significant modulation for 29% of neurons (23% positive/6% negative) (post hoc two-tailed T-test, P < 0.05)), meaning that the greater the reward, the more dopaminergic neurons were active, and negatively modulated by the effort level (β = −0.02 ± 0.001, t(83) = −2.01, P = 0.05, significant modulation for 18% of neurons (14% negative/4% positive)), as well as by trial number (β = −0.06 ± 0.03, t(83) = −2.53, P = 0.01, significant modulation for 59% of neurons (42% negative/17% positive)) (Fig. 2A and D). Noradrenergic neurons’ activity was only significantly modulated by the reward size (β = 0.04 ± 0.001, t(91) = 4.05, P < 0.001, significant modulation for 20% of neurons (16% positive/4% negative)) but not reliably modulated by either the effort level (β = −0.01 ± 0.01, t(91) = −1.15, P = 0.25, significant modulation for 14% of neurons (10% negative/4% positive)) or trial number (β = −0.03 ± 0.03, t(91) = −1.02, P = 0.31, significant modulation for 54% of neurons (30% negative/24% positive)) (Fig. 2A and D and Supplemental Fig. 1A). However, we found no significant difference between the sensitivity to the effort level between dopaminergic and noradrenergic neurons (post hoc T-test on the distribution of β(effort) in NA and DA: P = 0.42), or between the sensitivity to trial number (post hoc T-test on the distribution of β(trial number) in NA and DA: P = 0.37). 
Critically, there was a significant difference between the beta weights of effort and reward in the firing rates of both noradrenergic and dopaminergic neurons (2-way ANOVA measuring the effect of task factor (effort and reward) and recording type (NA or DA) onto –β(effort) and β(reward): main effect of task factor F(1,348) = 9.71, P = 0.02) but no main effect of recording type (F(1,348) = 0.61, P = 0.4) and no interaction (F(1,348) = 0.04, P = 0.80). This means that the relative sensitivity of noradrenergic and dopaminergic neurons to the task factors was similar, with a greater sensitivity for reward than effort in both noradrenergic and dopaminergic neurons (post hoc T-test on the distribution of –β(effort) and β(reward): t(350) = −3.13, P = 0.002), which was not the case in the behavior.

Figure 2.

Noradrenergic and dopaminergic neurons’ sensitivity to the task parameters and engagement at the time of cue. (A) Sensitivity to task parameters at the time of cue (0–500 ms from cue onset). Bars and error bars represent means of the beta weights ± SEM. Dopaminergic neurons were sensitive to all three task parameters (effort level: P = 0.05; reward level: P < 0.001; trial number: P = 0.01). Noradrenergic neurons were only significantly sensitive to the reward level (P < 0.001). No significant difference between the sensitivity to effort level and trial number in noradrenergic and dopaminergic neurons (P > 0.05). * P < 0.05; ** P ≤ 0.01; *** P ≤ 0.001. (B) Noradrenergic and dopaminergic neurons reflect the engagement in a task condition. Linear regression of the probability to engage in a given task condition (effort and reward levels) for each session. Bars and error bars represent means of the beta weights ± SEM. Both populations are significantly sensitive to the probability to engage (P < 0.05), no difference between sensitivities across populations (P > 0.05). * P < 0.05; ** P ≤ 0.01; *** P ≤ 0.001. (C) Noradrenergic and dopaminergic neurons’ activity reflects the engagement on a trial-by-trial basis throughout the session. Logistic regression of noradrenergic and dopaminergic neurons’ activity on engagement in the action. Bars and error bars represent means of the beta weights ± SEM. Both populations predict the engagement in the action (P < 0.05). * P < 0.05; ** P ≤ 0.01; *** P ≤ 0.001. (D) Example noradrenergic and dopaminergic neurons. Neuronal activity of two representative neurons around the cue onset (gray vertical line). Left: spike activity (raster and spike density function) of a noradrenergic neuron at cue. The activation is stronger when the reward increases (left to right) and the effort decreases (bottom to top). Right: same but for a dopaminergic neuron showing an intermediate activation at cue onset. 
Each panel corresponds to a different number of trials (each trial is a line in the raster plot). (E) Sliding window analysis of the sensitivity of the firing rate to engagement in the task condition and logistic regression onto the choice to engage. Top: linear regression of neurons’ activity by the probability to engage in the task condition. Bottom: logistic regression of neurons’ activity onto the choice to engage. Both are measured in 200 ms windows with an increment of 25 ms. X-axis represents the center of the window. Y-axis corresponds to each neuron. Color code represents the value of the beta weight in the regression. Neurons are ordered (top to bottom) by latency of their peak if it was significant (P < 0.05).

After having considered the relation between neuronal activity and task factors, we looked at the relationship between neuronal activity and the engagement in the effortful action. First, we did it across the nine task conditions (defined by a combination of effort and reward levels) by using an aggregate measure of the engagement for each condition (the probability to engage given the task condition). This tested whether neuronal activity directly reflected the probability for the monkeys to engage in a particular task condition. For each recording, we regressed this z-scored probability of engagement on neurons’ activity and found a significant positive effect at the population level, for both noradrenergic and dopaminergic neurons (NA: β = 0.04 ± 0.01, t(91) = 3.70, P < 0.001, significant modulation for 20% of neurons (18% positive/2% negative) (post hoc two-tailed T-test, P < 0.05), DA: β = 0.03 ± 0.01, t(83) = 2.16, P = 0.03, significant modulation for 24% of neurons (18% positive/6% negative)) (Fig. 2B and E). Again, there was no difference in sensitivity between populations (post hoc T-test on the distribution of β (probability of engagement in task condition) in NA and DA: P = 0.50). Moreover, this activity was specific to the onset of the cue as neurons were not sensitive to this probability before the cue onset (precue period) even in repeated trials, in which monkeys already knew which cue was coming (500 ms window before cue onset: NA: P = 0.17, DA: P = 0.71). We also examined the relation between neuronal activity and engagement on a trial by trial basis. We found that both noradrenergic and dopaminergic responses were predictive of engagement on a trial by trial basis (NA: β = 0.06 ± 0.03, t(91) = 2.47, P = 0.01, significant modulation for 17% of neurons (14% positive/3% negative) (post hoc two-tailed T-test, P < 0.05); DA: β = 0.06 ± 0.03, t(83) = 2.36, P = 0.02, significant modulation for 21% of neurons (14% positive/7% negative)) (Fig. 2C and E). 
Here again, there was no difference in sensitivity between dopaminergic and noradrenergic neurons (post hoc T-test on the distribution of the beta weights of the logistic regression in NA and DA: P = 0.96). Moreover, the activity was specific to the onset of the cue, with no sensitivity to the engagement in the precue period (NA: P = 0.08, DA: P = 0.88). We found no significant relationship between the cue-evoked activity of either noradrenergic or dopaminergic neurons and response time (NA: P = 0.83, DA: P = 0.49), even when we removed the effect of task condition (NA: P = 0.83, DA: P = 0.47).

Overall, the firing of noradrenergic and dopaminergic neurons reflected the engagement in the effortful action in a similar fashion at the time of the cue, even if, contrary to behavior, their activity was more sensitive to reward compared with effort.
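The population-level statistics reported above can be illustrated with a minimal two-stage sketch: a regression slope is estimated for each neuron, and the distribution of slopes is then tested against zero. This is a simplification of the multilevel regression, not the analysis code used in the study. It assumes firing rate as the dependent variable, and the synthetic data, the per-condition engagement probabilities, and all function names are hypothetical.

```python
import math
import random
import statistics


def zscore(values):
    """Standardize values to zero mean and unit sample standard deviation."""
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)
    return [(v - mean) / sd for v in values]


def ols_slope(x, y):
    """Least-squares slope of y on x for a model with one predictor and an intercept."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def one_sample_t(betas):
    """t statistic and degrees of freedom for the null hypothesis mean(beta) = 0."""
    n = len(betas)
    sem = statistics.stdev(betas) / math.sqrt(n)
    return statistics.fmean(betas) / sem, n - 1


def simulate_neuron(rng, true_beta, n_trials=200):
    """Synthetic trials for one neuron (illustrative values only, not real data)."""
    # Hypothetical engagement probabilities for the nine task conditions.
    cond_prob = [0.55, 0.6, 0.65, 0.7, 0.75, 0.8, 0.85, 0.9, 0.95]
    p_engage = [rng.choice(cond_prob) for _ in range(n_trials)]
    z_p = zscore(p_engage)
    rate = [true_beta * z + rng.gauss(0.0, 1.0) for z in z_p]
    return z_p, zscore(rate)


rng = random.Random(0)
betas = []
for _ in range(92):  # 92 synthetic neurons give 91 degrees of freedom
    z_p, z_rate = simulate_neuron(rng, true_beta=0.3)
    betas.append(ols_slope(z_p, z_rate))

t_stat, df = one_sample_t(betas)
print(f"mean beta = {statistics.fmean(betas):.3f}, t({df}) = {t_stat:.2f}")
```

Because both variables are z-scored within a neuron, each slope is a standardized effect, which makes the slopes comparable across neurons with different baseline firing rates.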

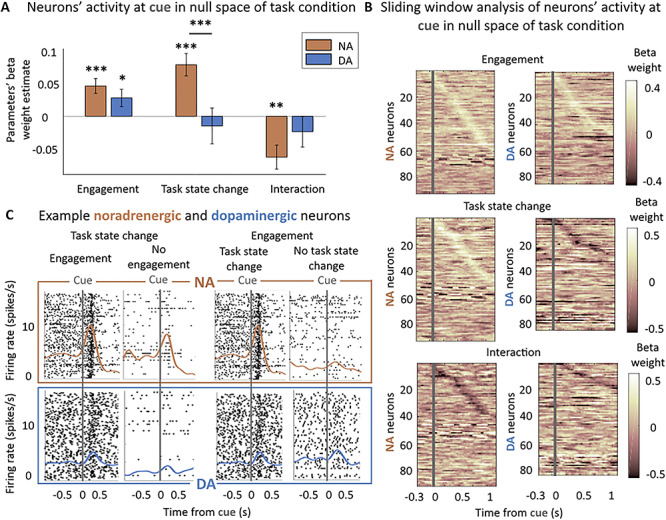

Both Noradrenergic and Dopaminergic Neurons Are Sensitive to Monkeys’ Engagement, but Only Noradrenergic Neurons Are Sensitive to Changes in Task State

To understand if catecholaminergic neurons are also sensitive to changes in task states and to determine the relationship between this factor and motivation (engagement), we compared the sensitivity to these two variables at the time of cue. To examine the effect of changes in task states, we compared cue-evoked activity in repeated (no task state change) versus nonrepeated (task state change) trials. Since erroneous trials were repeated, monkeys were presented with the same task condition (with the same visual cue) until they reached the goal and obtained the associated reward. It is only after the reward has been obtained that a new task condition is presented. A nonrepeated trial—that immediately follows a correct trial—represents the discovery of the new task condition and the beginning of a new goal-oriented behavior. In that frame, we measured the influence of trial repetition on the activity of noradrenergic and dopaminergic neurons to evaluate their sensitivity to changes in task state. Erroneous trials were mainly of two types: (i) monkeys broke the fixation (no engagement) and (ii) monkeys engaged (tried to squeeze the clamp) but did not execute the action correctly. Therefore, as not all trials in which monkeys engaged were successful, we could dissociate the effect of engagement from the effect of trial repetition on neuronal activity.

First, we found that the beta weights of the interactions between task factors (effort and reward levels, as well as trial number) and trial repetition (repeated vs. nonrepeated trials) were not significantly different from 0 in either noradrenergic neurons or dopaminergic neurons (see Materials and Methods, NA: P(effort x trial repetition) = 0.15, P(reward x trial repetition) = 0.23 and P(trial number x trial repetition) = 0.46; DA: P(effort x trial repetition) = 0.24, P(reward x trial repetition) = 0.21 and P(trial number x trial repetition) = 0.13). This means that neurons were sensitive to the task condition in a similar fashion whether the trial was repeated or not.

To examine the effect of engagement and trial repetition over and above the effect of a particular task condition (effort and reward levels), we regressed out the effect of the task condition on the firing rate of neurons and looked at the effect of engagement and trial repetition on the remaining neuronal activity (see Material and Methods). Here, we found an important dissociation between the activity of noradrenergic and dopaminergic neurons (Fig. 3). For a given trial condition, noradrenergic neurons were more active either when the action was initiated (vs. not) or in nonrepeated trials (vs. repeated trials), when the cue signaled a change in task state (β(engagement) = 0.05 ± 0.01, t(91) = 4.10, P < 0.001, significant modulation for 13% of neurons (10% positive/3% negative) (post hoc two-tailed T-test, P < 0.05); β(task state change) = −β(trial repetition) = 0.08 ± 0.02, t(91) = 4.60, P < 0.001, significant modulation for 13% of neurons (11% positive/2% negative)). We also found a significant negative interaction (β(interaction) = −0.06 ± 0.02, t(91) = −3.37, P = 0.001, significant modulation for 7% of neurons (5% negative/2% negative)), which indicates that engagement and trial repetition effects were not perfectly additive: when both factors were combined, the firing rate increased less than by the sum of the two separate effects. On the other hand, while dopaminergic neurons were on average more active when monkeys engaged in a given condition (β = 0.03 ± 0.01, t(83) = 2.13, P = 0.04, significant modulation for 13% of neurons (8% positive/5% negative)), they were not sensitive to trial repetition (P = 0.59). There was also no significant interaction between the two effects (P = 0.32), and the main effects were similar when we removed the interaction. 
A direct comparison of these effects between noradrenergic and dopaminergic neurons confirmed that, while there was no difference in the sensitivity to engagement in the task (post hoc T-test on the distribution of β(engagement) in NA and DA: P = 0.29), noradrenergic neurons were significantly more sensitive to trial repetition than dopaminergic neurons (post hoc T-test on the distribution of β(trial repetition) in NA and DA: t(175) = −2.95, P = 0.003, same effect when comparing the absolute values of the beta weights: t(173) = 2.01, P = 0.04).
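The logic of this analysis, and the meaning of a negative interaction, can be sketched for a single neuron as follows. The sketch makes two simplifying assumptions that are not taken from the Methods: the effect of task condition is removed by subtracting each condition's mean firing rate, and engagement and task state change are coded as 0/1 dummies. With that coding, the ordinary least-squares coefficients of the model with both main effects and their interaction are exact differences of the four cell means. All names and values are hypothetical.

```python
import statistics
from collections import defaultdict


def residualize_by_condition(rates, conditions):
    """Subtract each task condition's mean rate (a simple stand-in for regressing it out)."""
    by_cond = defaultdict(list)
    for r, c in zip(rates, conditions):
        by_cond[c].append(r)
    cond_mean = {c: statistics.fmean(v) for c, v in by_cond.items()}
    return [r - cond_mean[c] for r, c in zip(rates, conditions)]


def two_by_two_betas(resid, engaged, state_change):
    """Main effects and interaction for 0/1-coded engagement and task state change.

    For a saturated 2 x 2 design, these differences of cell means equal the
    least-squares coefficients of: resid ~ engaged + state_change + engaged:state_change.
    All four cells must contain at least one trial.
    """
    cells = defaultdict(list)
    for r, e, s in zip(resid, engaged, state_change):
        cells[(e, s)].append(r)
    m = {k: statistics.fmean(v) for k, v in cells.items()}
    b_eng = m[(1, 0)] - m[(0, 0)]
    b_change = m[(0, 1)] - m[(0, 0)]
    b_int = m[(1, 1)] - m[(1, 0)] - m[(0, 1)] + m[(0, 0)]
    return b_eng, b_change, b_int


# Toy residual activity (arbitrary units), two trials per cell.
resid = [0.0, 0.0, 0.5, 0.5, 0.75, 0.75, 1.0, 1.0]
engaged = [0, 0, 1, 1, 0, 0, 1, 1]
state_change = [0, 0, 0, 0, 1, 1, 1, 1]

b_eng, b_change, b_int = two_by_two_betas(resid, engaged, state_change)
print(b_eng, b_change, b_int)
```

In this toy example, activity rises by 0.5 with engagement alone and by 0.75 with a task state change alone, but only by 1.0 when both are present. The interaction term is therefore negative, which is the sub-additive pattern described for noradrenergic neurons.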

Figure 3.

Noradrenergic but not dopaminergic neurons are sensitive to the task state change. (A) Sensitivity to engagement and task state change in null space of task condition at cue (0–500 ms from cue onset). Multilevel linear regression of neurons’ activity by: engagement, trial repetition (task state change), and their interaction. Bars and error bars represent means of the beta weights ± SEM. Noradrenergic neurons were sensitive to the task state change, the engagement and their interaction (all P < 0.01). Dopaminergic neurons were only significantly sensitive to the engagement (P < 0.05). * P < 0.05; ** P ≤ 0.01; *** P ≤ 0.001. (B) Sliding window analysis of the sensitivity of the firing rate to engagement, task state change, and their interaction in null space of task condition at cue. Multilevel linear regression of neurons’ activity by: engagement, trial repetition (task state change), and their interaction in 200 ms windows with an increment of 25 ms. X-axis represents the center of the window. Y-axis corresponds to each neuron. Color code represents the value of the beta weight in the regression. Neurons are ordered (top to bottom) by latency of their peak if it was significant (P < 0.05). (C) Example noradrenergic and dopaminergic neurons. Neuronal activity of two representative neurons around the cue onset (gray vertical line). Each panel corresponds to a different number of trials (each trial is a line in the raster plot). Top: spike activity (raster and spike density function) of a noradrenergic neuron showing a strong activation at cue. The activation is slightly stronger in engaged versus nonengaged trials (β = 0.04 in regression shown in Fig. 3A) (all task conditions pooled together in nonrepeated trials (task state change) only: 156 and 25 trials) and much stronger (β = 0.36) for nonrepeated (task state change) versus repeated trials (no task state change) (engage trials only: 156 and 63 trials). 
Bottom: same but for a dopaminergic neuron showing an intermediate activation at cue onset. Its activity was greater for engaged than nonengaged trials (β = 0.23, 153 and 27 trials) but was not modulated by the task state change of the cue (153 and 57 trials). Note that even though the baseline firing of this example dopaminergic neuron appears modulated by the engagement, it is not the case (P = 0.14) and actually reflects the sensitivity of this particular neuron to trial number (β = −0.16, P = 0.01), which is not reliable at the population level (P = 0.08).

Here again, this effect was specific to the onset of the cue: when we examined the 500 ms precue period, there was no effect of engagement in either noradrenergic or dopaminergic neurons (NA: P = 0.12, DA: P = 0.73). Although we did not find an effect of trial repetition on noradrenergic neurons’ activity (NA: P = 0.22), we found a negative effect of task state change (corresponding to lower activity in nonrepeated trials) on dopaminergic neurons’ precue activity (β = −0.07 ± 0.03, t(83) = −2.51, P = 0.01). However, as nonrepeated trials follow the delivery of the reward in the previous trial, we examined whether this effect could be linked to a carry-over of the response to the reward delivery. Indeed, we found a negative effect of the size of the reward obtained on the previous trial (P = 0.005), and the repetition effect did not reach significance when we added the size of the reward obtained on the previous trial as a coregressor (P = 0.10). Moreover, in nonrepeated trials, the precue activity of only 3% of noradrenergic neurons and 4% of dopaminergic neurons differed significantly between the engage and does-not-engage conditions (T-test on the distribution of baseline firing rates). There was also no effect of engagement in the precue period if we only examined repeated trials, where monkeys already knew the task condition (NA: P = 0.08, DA: P = 0.36), even in the null space of task condition (NA: P = 0.10; DA: P = 0.24), whereas the effect of engagement was still significant after the cue onset (NA: P < 0.001; DA: P = 0.02).

In short, when comparing the sensitivity to engagement and trial repetition over and above task variables (in the null space of task condition), both noradrenergic and dopaminergic neurons were sensitive to the engagement in the task, but only noradrenergic neurons were sensitive to the task state change (in nonrepeated vs. repeated trials). We then assessed whether the effect of trial repetition in noradrenergic neurons was related to a change in task state (the fact that a new reward could be obtained for a given action) or to a simpler change in visual stimulus. Indeed, even if the same condition and therefore the same visual cue was presented in all repeated trials (after an error), the same visual cue had a 1/9 chance of being repeated even in nonrepeated trials, since in those trials the condition was selected in a pseudorandom fashion. To do so, when possible, we isolated the small subset of trials in which the same cue was presented after a successful trial (i.e., the 1/9 of trials after a correct response where the same cue type was presented again). We examined whether the sensitivity to task state change was affected by the fact that the same cue was repeated. We found that the interaction term between the task state change and the cue repetition was nonsignificant (t(73) = 0.61, P = 0.54) and that adding it did not affect the previously described effects of engagement, task state change and interaction on LC neurons’ activity at cue (all P < 0.001). Therefore, we could not find evidence that the LC response to the task state change was affected by the repetition of the same visual stimulus.

In addition, these effects were relatively independent of trial number, which captures the influence of fatigue and satiety. Indeed, the main effects of engagement and task state change were not affected when we added trial number as a covariate in the model (main effect of trial number: NA: P = 0.31, DA: P = 0.02; the interactions of engagement and trial repetition with trial number did not reach significance in either noradrenergic or dopaminergic neurons, NA: P(engagement) = 0.85, P(trial repetition) = 0.82, DA: P(engagement) = 0.85, P(trial repetition) = 0.16) (see Supplemental Fig. 2A). Thus, engagement and task state change, as indexed by trial repetition, had specific effects on neurons’ firing rates, which in turn were independent of the progression in the session.

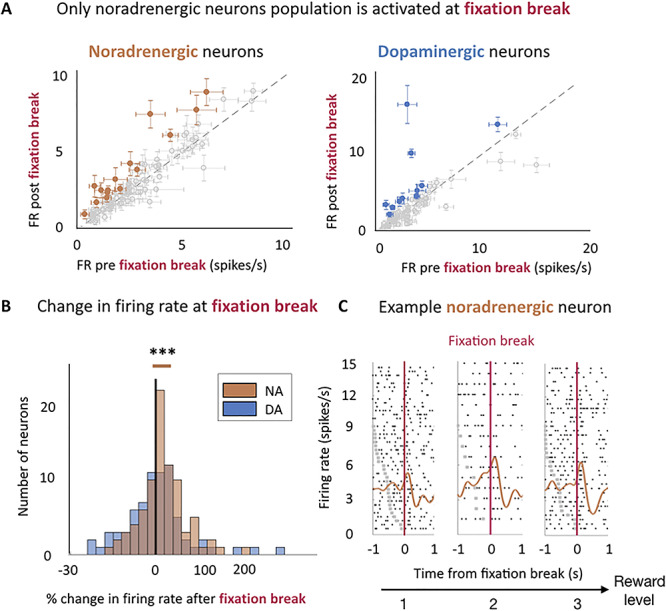

Only Noradrenergic Neurons Were Activated After a Failure to Engage and Were Sensitive to the Task Condition

We next examined the activity of dopaminergic and noradrenergic neurons time-locked to fixation break, which resulted in trial abortion. We focused our analysis on three epochs: a baseline epoch from −600 to −300 ms prior to fixation break, a prefixation break epoch corresponding to the 300 ms prior to fixation break, and a post fixation break epoch corresponding to the 300 ms following fixation break. Dopaminergic neurons were not significantly activated either before (P = 0.62) or after (P = 0.49) the fixation break. In contrast, noradrenergic neurons were significantly activated after (mean difference = 0.30 ± 0.09 spikes/s, t(83) = 3.31, P = 0.001), but not before (P = 0.81), the fixation break. This activation corresponds to an average change of 16.5 ± 4.5% of activity between before (average firing rate = 2.83 spikes/s) and after (average firing rate = 3.12 spikes/s) the fixation break (Fig. 4A). At the single neuron level, 18% of noradrenergic neurons and 15% of dopaminergic neurons were activated at the fixation break (one-tailed T-test, P < 0.05) with an average change in firing rate of 78.1 ± 14.2% for noradrenergic neurons and 127.0 ± 42.4% for dopaminergic neurons. Note that all results hold true if we removed fixation break events that occurred less than 500 ms after the cue onset.
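As an illustration of the pre- versus post-fixation break comparison, the following minimal sketch computes firing rates in the two 300 ms windows and a paired t statistic across neurons. It assumes spike times expressed in seconds relative to the fixation break. The toy spike trains and all function names are hypothetical, and the sketch does not reproduce the multilevel statistics reported here.

```python
import math
import statistics


def window_rate(spike_times, start, end):
    """Firing rate (spikes/s) for spikes with start <= t < end, times in seconds."""
    n_spikes = sum(1 for t in spike_times if start <= t < end)
    return n_spikes / (end - start)


def pre_post_rates(trials, width=0.3):
    """Trial-averaged rates before and after the break (break at t = 0)."""
    pre = statistics.fmean([window_rate(tr, -width, 0.0) for tr in trials])
    post = statistics.fmean([window_rate(tr, 0.0, width) for tr in trials])
    return pre, post


def paired_t(pre_rates, post_rates):
    """Paired t statistic and degrees of freedom for post minus pre across neurons."""
    diffs = [b - a for a, b in zip(pre_rates, post_rates)]
    n = len(diffs)
    sem = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.fmean(diffs) / sem, n - 1


# Toy data: one list of trials per neuron, each trial a list of spike times.
neurons = [
    [[-0.2, 0.1, 0.2]],
    [[-0.25, -0.1, 0.05, 0.15, 0.25]],
    [[0.1, 0.2]],
]
rates = [pre_post_rates(trials) for trials in neurons]
pre_rates = [r[0] for r in rates]
post_rates = [r[1] for r in rates]

t_stat, df = paired_t(pre_rates, post_rates)
print(f"t({df}) = {t_stat:.2f}")
```

A paired test is used because each neuron contributes both a pre- and a post-break rate, so between-neuron differences in baseline firing do not inflate the variance of the comparison.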

Figure 4.

Noradrenergic but not dopaminergic neurons were activated after the fixation break. (A) Only the noradrenergic neuron population is activated at fixation break. Firing rate pre- (−300–0 ms) and post- (0–300 ms) fixation break for both noradrenergic (left) and dopaminergic neurons (right). Points and error bars are means ± SEM. Gray points indicate nonsignificant activation and colored points indicate a significant activation (One-tailed T-test, P < 0.05). For illustration purposes only, we have removed two dopaminergic neurons (with a nonsignificant activation at fixation break), whose firing rates were above 20 spikes/s from the display. (B) Noradrenergic and Dopaminergic neurons’ change in firing rate evoked by activity after fixation break (0–300 ms from fixation break). The distributions are represented on a log-scale. Negative % correspond to neurons which were more active before than after the fixation break and conversely for positive %. The noradrenergic neuron population was significantly activated after the fixation break (P = 0.001), but the dopaminergic neuron population was not (P = 0.49). *** P ≤ 0.001. (C) Example noradrenergic neurons at fixation break for each reward level. Neuronal activity representative of noradrenergic neuron around fixation break (pink vertical line). Trials are sorted by decreasing latency between cue onset (gray dots) and fixation break. Cue onset is only visible for bottom trials, with latencies shorter than the displayed 1 sec. Spike activity (raster and spike density function) of a noradrenergic neuron showing an increase after the fixation break. In addition, its activity is modulated by the reward level (β = 0.15).

We then looked at the modulation of fixation-break related activity across task conditions. Dopaminergic neurons’ activity was not sensitive to the probability to engage with a task condition (P = 0.98) nor the engagement in the next trial (P = 0.43), and it will not be described further. By contrast, noradrenergic neurons’ evoked activity was positively modulated by the reward size (β = 0.06 ± 0.02, t(83) = 3.64, P < 0.001, significant modulation for 8% of neurons (7% positive/1% negative) (post hoc two-tailed T-test, P < 0.05)) but neither by the effort level nor by the trial number (β(effort level) = −0.01 ± 0.02, t(83) = −0.91, P = 0.37; β(trial number) = −0.04 ± 0.03, t(83) = −1.31, P = 0.20) (Fig. 4B). Note however that the difference between the sensitivity to effort and reward did not reach significance (post-hoc T-test on –β(effort) and β(reward): t(166) = 1.88, P = 0.06). This activity was specific to the onset of the fixation break as there was no modulation of the activity by these task factors in the 300 ms window before the fixation break (effort level: P = 0.50; reward level: P = 0.15; trial number: P = 0.9).

Noradrenergic Neuron Activity Predicted the Engagement on the Current and the Next Trial

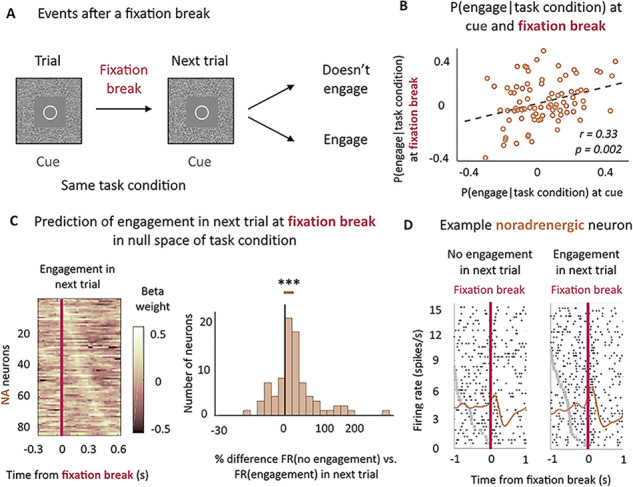

Finally, we examined the relationship between fixation-break evoked activity and the probability, across sessions, that the monkeys engaged on the next trial. Here again, we only looked at fixation break events that occurred after cue onset, meaning that the monkeys always knew the task condition at the time of the fixation break.

We found a significant positive effect of the probability to engage given the task condition on LC activity at the time of the fixation break (β = 0.05 ± 0.02, t(83) = 2.79, P = 0.007, significant modulation for 11% of neurons (11% positive/0% negative) (post-hoc two-tailed T-test, P < 0.05)). In other words, the more monkeys tended to engage in a specific task condition, the more noradrenergic neurons would be active if a fixation break occurred in this task condition. This effect was also present in the prefixation break activity (−300–0 ms to fixation break) (β = 0.05 ± 0.02, t(83) = 2.49, P = 0.01, significant modulation for 19% of neurons (12% positive (30% of which show also a significant positive effect after fixation break)/7% negative)), suggesting that it appeared after cue onset, in line with the fact that noradrenergic neurons also displayed a positive relation with task engagement at the time of the cue onset (Fig. 2B). Indeed, we found a significant positive correlation (r = 0.33, P = 0.002) between the sensitivity to the probability to engage at the time of cue and at the time of the post-fixation break (Fig. 5B). In short, noradrenergic neurons were activated both at cue onset and at the fixation break when it occurred. They tended to be more active in conditions associated with a greater probability of engagement, both at the cue onset and at the time of fixation break, and these two responses were correlated across the population of LC neurons.

Figure 5.

Noradrenergic neurons’ activity predicts the engagement on the next trial. (A) Task structure after a fixation break. (B) Correlation between noradrenergic neurons’ sensitivity to the probability to engage for each task condition at the cue onset and the fixation break across sessions. Significant correlation (r = 0.33, P < 0.01). (C) Noradrenergic neurons’ activity at fixation break is predictive of engagement in the next trial above and beyond the task condition. Left: sliding window analysis of the sensitivity of the firing rate to engagement in the next trial in null space of task condition at cue. Linear regression in 200 ms windows with an increment of 25 ms. X-axis represents the center of the window. Y-axis corresponds to each neuron. Color code represents the value of the beta weight in the regression. Neurons are ordered (top to bottom) by latency of their peak. Right: % difference between the firing rate distribution for no engagement in next trial and engagement in next trial in the null space of task conditions (mixed effect linear regressions on non-z-scored distributions). The distribution is represented on a log-scale. Significant difference (P < 0.001). *** P ≤ 0.001. (D) Example noradrenergic neurons at fixation break for no engagement (left) and engagement (right) in the next trial. Neuronal activity (raster and spike density function) is displayed around fixation break (t = 0, pink vertical line). Trials are sorted by decreasing latency between cue onset (gray dots) and fixation break. Cue onset is only visible for bottom trials, with latencies shorter than the displayed 1 sec. As a majority of LC neurons, this one shows a stronger activation when monkeys engaged on the next trial (β = 0.17).

Given this strong relation between LC activity and probability of engagement in the current trial when monkeys erroneously break fixation, we were interested to examine whether this activity could also predict monkeys’ engagement in the following trial. After a fixation break, two things could happen on the next trial (and therefore in the same task condition): monkeys could now choose to engage with the same task condition or could again reject the offer (Fig. 5A). We therefore examined if LC activity at the time of fixation break could provide information about engagement in the next trial, over and above task condition.

In fact, the magnitude of the fixation-break activation of noradrenergic neurons (controlled for task condition) was predictive of subsequent engagement in the next trial (β = 0.06 ± 0.01, t(83) = 3.88, P < 0.001, significant modulation for 7% of neurons (6% positive/1% negative) (post-hoc two-tailed T-test, P < 0.05); effect calculated on the z-scored distributions of firing rates and translating to an average difference of 25.1% ± 0.1 of activity between nonengage and engage on the next trial conditions) (Fig. 5C and Supplemental Fig. 1B). Hence, although the effects seen at the fixation break are relatively weak at the single neuron level, they are very consistent across the population, such that the effect clearly reached significance at the population level. In fact, 73% of neurons showed small but consistently greater activation in trials in which monkeys engaged on the next trial, which is comparable with the proportion of neurons displaying a positive relation with reward at the fixation break (69%) or at the cue (66%). We controlled for potential interactions with confounding factors such as trial repetition (whether the erroneous trial was itself repeated or not), trial number and their interactions with the effect of the engagement in the next trial, but none of them were significant (main effects: P(trial repetition) = 0.14, P(trial number) = 0.18; interactions with engagement in the next trial: P(trial repetition) = 0.29, P(trial number) = 0.99). As previously mentioned, this activity was specific to noradrenergic neurons, as dopaminergic neurons were not activated at fixation break and did not signal the engagement in the next trial (P = 0.43) (see Supplemental Fig. 2B).

Finally, we examined whether the effect of the engagement in the next trial could be found before the cue of the next trial. In other words, we tested whether we could predict the engagement from activity before the cue (−500–0 ms) for trials that followed a fixation break. We found that it was not the case (P = 0.20), even in the null space of task condition (P = 0.19), whereas we could predict the engagement after the cue onset (P = 0.004). Therefore, the modulation of LC firing as a function of engagement in the next trial was restricted to the period after the fixation break in the current trial and did not persist into the intertrial interval.

In summary, we found that noradrenergic but not dopaminergic neurons’ activity at fixation break reflected the probability to engage both in the current and in the subsequent trial, over and above cost-benefit task conditions.

Discussion

In this task, monkeys were presented with cues instructing them to produce actions of different intensities to gain rewards of different magnitudes. The probability that monkeys would try to produce the action (engagement) depended on the task condition (effort and reward levels) even if failing to engage was clearly not instrumental (it would only lead to the repetition of the same task condition). This indicates that the information provided by the cues induced a change in motivation. In both LC and midbrain dopamine neurons, cue-evoked activity was significantly related to motivation, as indexed by engagement, over and above task conditions. But since cues also indicated the beginning of a trial, an opportunity to get the reward, their meaning differed between repeated and nonrepeated trials. After obtaining the reward, the cue signaled the start of a new opportunity to get a reward and provided information about the new task condition: the amount of reward to be gained and the force level required. By contrast, in repeated trials, the cue provided no information since the task condition (reward and effort level) is repeated and the goal remains the same as in the previous trial. From that perspective, there is a clear transition in task state at cue onset in nonrepeated trials, when animals get started on another goal directed behavior, but not in repeated trials, which are only a continuation of the task state initiated at the previous nonrepeated trial (see also Bouret and Richmond 2009 and Bouret et al. 2012).

We used this task structure to reveal the precise roles of noradrenergic and dopaminergic neurons in signaling motivation and task state changes. As our cell identification methods were indirect, we can only consider cells as putative dopaminergic and noradrenergic neurons. However, they were reliable enough to consider that we were able to identify dopaminergic and noradrenergic neurons’ populations (Eddine et al. 2015; Xiang et al. 2019). Our general approach was to consider noradrenergic and dopaminergic neurons as two homogeneous populations and look at the population’s sensitivity to certain variables. We therefore do not try to isolate subpopulations of neurons. Even though we do not deny the functional heterogeneity of these populations, as demonstrated by studies in rodents, our goal here was to identify the common and distinctive features of noradrenergic and dopaminergic neurons as a whole. We found that both noradrenergic and dopaminergic neurons’ activities were predictive of the engagement in the task, a reliable measure of motivation (Bowman et al. 1996; Berridge 2004; Minamimoto et al. 2009). Their activities were not only correlated with the session-average probability to engage in a particular task condition, but also with the trial-by-trial engagement. Furthermore, their activities were correlated with engagement over and above the specific task condition. This strengthens the role of both catecholaminergic systems in motivating effortful, reward-directed actions. By contrast, the activity of noradrenergic and dopaminergic neurons differed significantly when it came to signaling task state changes at the cue onset. First, only noradrenergic neurons’ activity was sensitive to trial repetition factor, with greater activation when cues signaled a change in task state, over and above its relation with upcoming reward and effort levels. 
Moreover, noradrenergic, but not dopaminergic, neurons displayed activity after a fixation break, which ended the trial and represented a failure to engage. This activity scaled not only with the probability of engagement in the current trial type, but also with the engagement in the next trial. Hence, noradrenaline, contrary to dopamine, plays a role both in signaling a task state change and in promoting current and future effortful actions.

Similarities and Dissimilarities of the Role of the Catecholaminergic Systems in Motivation

This study builds on experiments presented in Varazzani et al. (2015), but here includes both repeated and nonrepeated, and correct and incorrect trials, rather than just the nonrepeated correct trials reported in Varazzani et al. (2015). This allowed us to examine the influence of task state changes and motivation to engage, and not just the cost-benefit parameters of the presented cues, on neural activity. The inclusion of these additional trials did lead to slight differences in sensitivity to the task parameters (Fig. 2A) to those reported previously (Fig. 4 in Varazzani et al. 2015). However, the overall pattern of effects was comparable, and any difference was negligible (and nonsignificant) compared with the difference in terms of sensitivity in noradrenaline and dopamine neurons to changes in task state.

Both noradrenergic and dopaminergic neurons’ activity was related to the engagement in the effortful actions. Dopaminergic neurons’ activity was tightly linked with the engagement in the rewarded course of action independently of whether the trial was repeated or not. Dopaminergic neurons were also activated at the time of producing the action, but contrary to noradrenergic neurons, they did not correlate with the actual force produced and pupil size (Varazzani et al. 2015). The causal role of dopamine in incentive processes has been shown in different species, with an emphasis on its role in controlling reward sensitivity (Denk et al. 2005; Hosking et al. 2015; Le Bouc et al. 2016; Yohn et al. 2016; Zénon et al. 2016; Noritake et al. 2018). Moreover, our results are in line with studies demonstrating that dopamine release is strongly driven by the initiation of a purposeful action for reward (Phillips et al. 2003; Roitman et al. 2004; Syed et al. 2016). Our data are broadly compatible with the well-known role of dopamine in signaling the current, subjective cached values associated with stimuli (Schultz et al. 1997; Stauffer et al. 2016). Notably, however, a significant fraction of dopamine neurons was also modulated by the prospective willingness to engage with the response required to gain the reward. This is in line with recent evidence indicating that dopamine does not simply signal potential future incentive value but also promotes the initiation of reward seeking actions (Guitart-Masip et al. 2012; Syed et al. 2016; Walton and Bouret 2019).

Our previous study (Varazzani et al. 2015) demonstrated that only noradrenergic neurons’ activity correlated with arousal, as indexed by pupil diameter (see also Foote et al. 1980). Crucially, it also correlated with the force produced, even when pupil size and the required effort level were added as coregressors (Varazzani et al. 2015). This strong relationship with the behavioral response, as well as engagement, shows that noradrenergic neuron activity is linked to the engagement in the effortful course of action as well as to the actual production of the action. This is in line with previous demonstrations that LC neurons respond to stimuli predicting future rewards and action initiation responses (Bouret and Sara 2004; Bouret and Richmond 2009, 2015; Kalwani et al. 2014). Contrary to dopamine, causal manipulation of the noradrenergic system does not seem to affect incentive processes (Hosking et al. 2015; Jahn et al. 2018). Indeed, our recent study showed that the noradrenergic system controls the amount of force produced during the action, but not the selection nor the initiation of the action (Jahn et al. 2018). Hence, the noradrenergic system might be critical to ensure that the effortful action is appropriately performed once a decision to engage has been taken (Bouret and Richmond 2015; Varazzani et al. 2015), whereas dopamine is instead key for signaling the subjective future reward to be gained by performing an action and promoting that response (Ishiwari et al. 2004; Gan et al. 2010; Pasquereau and Turner 2013; Varazzani et al. 2015; Papageorgiou et al. 2016; Salamone et al. 2016).

Why Are Dopaminergic Neurons Not Sensitive to the Task State Change in Our Task?

Dopamine neurons have long been reported to respond to salient novel stimuli (Strecker and Jacobs 1985; Ljungberg et al. 1992; Horvitz et al. 1997; Menegas et al. 2017) and to be implicated in novelty seeking (Costa et al. 2014). Therefore, it may initially seem surprising that in our task, dopaminergic neurons were not sensitive to the novelty of the presented task condition. However, there are a number of important differences between these experiments and the current one. For instance, in previous experiments examining novelty seeking, it is unclear whether dopaminergic neurons were sensitive to new information because of the change in uncertainty about the world, independent of choice, or because information was a variable driving the behavior. While Bromberg-Martin and Hikosaka showed that dopaminergic neurons were sensitive to advance information about the size of the reward, the monkeys in their study importantly showed a preference for obtaining this information, implying that it was relevant for guiding the behavior (Bromberg-Martin and Hikosaka 2009; Charpentier et al. 2018). In another experiment, Naudé and colleagues showed that mice preferred a probabilistic outcome to a deterministic outcome, and that this preference was controlled by the dopaminergic system (Naudé et al. 2016). These two studies show that dopaminergic neurons are sensitive to information as a variable that can influence choices through preferences, since it acted as a reward (Charpentier et al. 2018). In our task, as the cost-benefit cues were all well known, information (as provided by the cues in nonrepeated, but not in repeated trials) would neither cause sensory surprise (as the cues themselves were not novel) nor be relevant for modulating future choices. Therefore, although we cannot rule out that some individual dopamine neurons do code for this factor, it seems that dopamine neurons as a population are not sensitive to information about task state changes when this information is not relevant for guiding the behavior.

Noradrenergic Neurons’ Activity Could Reflect the Role of Noradrenaline in Information Processing

The crucial difference between dopaminergic and noradrenergic neurons was that noradrenergic neurons were sensitive to changes in task state, as measured by the contrast between repeated and nonrepeated trials at the cue. Because task state changes only occur after a successful trial, the lower activation of LC neurons at the cue on repeated trials could reflect the fact that an error had just occurred. However, we found no significant effect of an error on the previous trial on baseline activity before the cue. Therefore, it is unlikely that there is a carry-over effect of the error on the next trial. This lower activation in repeated trials could also simply be due to the repetition of a visual cue. However, we found no evidence that cue repetition affected the sensitivity to the task state change. Moreover, there was no significant difference in the sensitivity to the task factors (effort and reward levels) between repeated and nonrepeated trials. Hence, there is no evidence in our data for a simple stimulus repetition suppression effect. From a goal-directed behavior perspective, a state transition is much more likely after a sequence that ended with a reward, which would argue against a simple cue repetition response. Therefore, we attribute this lower activation to the fact that the monkeys already knew the task condition in repeated trials.

Noradrenaline has a long-stated role in signaling important events in the environment (Kety 1972; Foote et al. 1980; Aston-Jones and Bloom 1981; Abercrombie and Jacobs 1987; Berridge and Waterhouse 2003; Vazey et al. 2018). In particular, activation of the noradrenergic system by novelty would facilitate sensory processing (Aston-Jones and Bloom 1981; Hasselmo et al. 1997; Devilbiss and Waterhouse 2004; Yu and Dayan 2005; Devilbiss et al. 2006; Rodenkirch et al. 2019). In our task, after the reward has been obtained, monkeys expect that a new task condition will be presented. When the cue is displayed, they need to process this sensory information and retrieve its meaning about the task condition from memory. Consistent with the role of noradrenaline in facilitating sensory processing and retrieval (Devauges and Sara 1991; Bouret et al. 2012), the greater LC activation at the cue when the task state changes could be linked to information processing. Noradrenaline release in target structures would facilitate the integration of information. Along these lines, noradrenaline has been implicated in signaling a need to provoke or facilitate a cognitive shift to adapt to the environment (Bouret and Sara 2005; Yu and Dayan 2005; Glennon et al. 2019), and pharmacological enhancement of the activity of the noradrenergic system facilitates the acquisition of new contingencies (Devauges and Sara 1990; Tait et al. 2007; McGaughy et al. 2008). This interpretation is also in line with a recent study demonstrating a stronger increase in pupil dilation (a correlate of LC activation) when subjects must update their internal representation of the world compared with situations in which changes in stimuli predict no change in task progression (Filipowicz et al. 2019).

Noradrenergic Neurons’ Activity Reflects the Engagement at the Cue and After a Failure

Both the display of the cue and the fixation break activated LC neurons. These events are characterized by the fact that they trigger a variable behavioral response (engagement or not in the current trial and engagement or not in the next trial) and that the response of noradrenergic neurons to these events is linked to the engagement. A break of fixation was an important event not only because it signaled the end of the trial, but also because it signaled the re-occurrence of the same task condition in the next one. This post-fixation break activity was tightly linked to firing rates at the time of the cue, which in turn reflected the probability of engagement in the effortful action. A potential scenario is that if the activity at the cue was too small to enable maintenance of the fixation and engagement in the trial, then activity at the fixation break reflects an update to enable performance of the action on the subsequent trial. Indeed, we found that when we controlled for task condition, noradrenaline neurons were more active after a fixation break when monkeys then engaged in the subsequent trial. Finally, as we were never able to predict the engagement in the trial from the baseline activity at the cue, even for repeated trials and even for trials following a fixation break, we can only conclude that noradrenergic neurons predict the engagement on a trial-by-trial basis; we have no evidence that they do so through a slow fluctuation of activity that lasts beyond the range of a trial.

Based on the latencies reported in our previous study (Varazzani et al. 2015), the fast responses of LC neurons to these salient events are likely to be driven by bottom-up influences, notably from the nucleus paragigantocellularis (Ennis and Aston-Jones 1988). The modulation of LC activity by the task factors represented by the visual cues is late enough to permit a top-down influence by frontal structures, such as orbito-frontal cortex and amygdala (Sugase-Miyamoto and Richmond 2005; Bouret and Richmond 2009). In the same way, we have shown that the ventromedial-prefrontal cortex represents monkeys’ engagement with a much longer time-scale, beyond the individual trial (San-Galli et al. 2018). Hence it is likely that LC activity modulation is the result of a top-down influence from structures in the frontal cortex. Similarly, a top-down influence of the medial prefrontal cortex on dopaminergic neurons has also been shown (Noritake et al. 2018). Crucially, in our experiment, only noradrenergic neurons were activated following a break in fixation, which represents a failure to engage in the effortful action. Similar patterns of activity at the break of fixation have also been observed in midcingulate cortex (MCC), where they were modulated by how close to reward delivery the error occurred or how much effort was already invested in the task (Amiez et al. 2005). Given the connections between LC and MCC, this suggests that MCC and LC might well interact when required to signal salient events, potentially to facilitate behavioral adaptation.

To conclude, our data show the specific and complementary roles of dopamine and noradrenaline in motivation and behavioral flexibility. The former would promote actions directed toward currently available rewards, while the latter would signal and potentially facilitate the need to engage resources to undertake and complete effortful actions when important events occur and require adaptation (Bouret et al. 2012; Walton and Bouret 2019).

Supplementary Material

Funding

This research was funded by the ERC BIOMOTIV, the Paris Descartes University doctoral and mobility grants and the Wellcome Trust fellowships (MEW: 202831/Z/16/Z, JS: 105651/Z/14/Z). The Wellcome Centre for Integrative Neuroimaging is supported by core funding from the Wellcome Trust (203139/Z/16/Z).

Notes

The authors declare no competing financial interest. Correspondence should be addressed to Sebastien Bouret: sebastien.bouret@icm-institute.org. Team Motivation Brain and Behavior, Institut du Cerveau et de la Moelle Épinière, Hôpital Pitié-Salpêtrière, 47, boulevard de l’Hôpital, 75013 Paris, France.

References

- Abercrombie ED, Jacobs BL. 1987. Single-unit response of noradrenergic neurons in the locus coeruleus of freely moving cats. I. Acutely presented stressful and nonstressful stimuli. J Neurosci. 7:2837–2843.

- Amiez C, Joseph J-P, Procyk E. 2005. Anterior cingulate error-related activity is modulated by predicted reward. Eur J Neurosci. 21:3447–3452.

- Aston-Jones G, Bloom FE. 1981. Norepinephrine-containing locus coeruleus neurons in behaving rats exhibit pronounced responses to non-noxious environmental stimuli. J Neurosci. 1:887–900.

- Aston-Jones G, Cohen JD. 2005. An integrative theory of locus coeruleus-norepinephrine function: adaptive gain and optimal performance. Annu Rev Neurosci. 28:403–450.

- Berridge CW, Waterhouse BD. 2003. The locus coeruleus-noradrenergic system: modulation of behavioral state and state-dependent cognitive processes. Brain Res Brain Res Rev. 42:33–84.

- Berridge KC. 2004. Motivation concepts in behavioral neuroscience. Physiol Behav. 81:179–209.

- Berridge KC. 2007. The debate over dopamine’s role in reward: the case for incentive salience. Psychopharmacology. 191:391–431.

- Bouret S, Richmond BJ. 2009. Relation of locus coeruleus neurons in monkeys to Pavlovian and operant behaviors. J Neurophysiol. 101:898–911.

- Bouret S, Richmond BJ. 2015. Sensitivity of locus ceruleus neurons to reward value for goal-directed actions. J Neurosci. 35:4005–4014.

- Bouret S, Richmond BJ, Ravel S. 2012. Complementary neural correlates of motivation in dopaminergic and noradrenergic neurons of monkeys. Front Behav Neurosci. 6.

- Bouret S, Sara SJ. 2004. Reward expectation, orientation of attention and locus coeruleus-medial frontal cortex interplay during learning. Eur J Neurosci. 20:791–802.

- Bouret S, Sara SJ. 2005. Network reset: a simplified overarching theory of locus coeruleus noradrenaline function. Trends Neurosci. 28:574–582.

- Bowman EM, et al. 1996. Neural signals in the monkey ventral striatum related to motivation for juice and cocaine rewards. J Neurophysiol. 75:1061–1073.

- Bromberg-Martin ES, Hikosaka O. 2009. Midbrain dopamine neurons signal preference for advance information about upcoming rewards. Neuron. 63:119–126.

- Bromberg-Martin ES, Matsumoto M, Hikosaka O. 2010. Dopamine in motivational control: rewarding, aversive, and alerting. Neuron. 68:815–834.

- Charpentier CJ, Bromberg-Martin ES, Sharot T. 2018. Valuation of knowledge and ignorance in mesolimbic reward circuitry. PNAS. 115:E7255–E7264.

- Costa VD, Tran VL, Turchi J, Averbeck BB. 2014. Dopamine modulates novelty seeking behavior during decision making. Behav Neurosci. 128:556–566.

- Dalley JW, McGaughy J, O’Connell MT, Cardinal RN, Levita L, Robbins TW. 2001. Distinct changes in cortical acetylcholine and noradrenaline efflux during contingent and noncontingent performance of a visual attentional task. J Neurosci. 21:4908–4914.

- Dayan P, Yu AJ. 2006. Phasic norepinephrine: a neural interrupt signal for unexpected events. Network. 17:335–350.

- Denk F, Walton ME, Jennings KA, Sharp T, Rushworth MFS, Bannerman DM. 2005. Differential involvement of serotonin and dopamine systems in cost-benefit decisions about delay or effort. Psychopharmacology (Berl). 179:587–596.

- Devauges V, Sara SJ. 1990. Activation of the noradrenergic system facilitates an attentional shift in the rat. Behav Brain Res. 39:19–28.

- Devauges V, Sara SJ. 1991. Memory retrieval enhancement by locus coeruleus stimulation: evidence for mediation by beta-receptors. Behav Brain Res. 43:93–97.

- Devilbiss DM, Page ME, Waterhouse BD. 2006. Locus ceruleus regulates sensory encoding by neurons and networks in waking animals. J Neurosci. 26:9860–9872.

- Devilbiss DM, Waterhouse BD. 2004. The effects of tonic locus ceruleus output on sensory-evoked responses of ventral posterior medial thalamic and barrel field cortical neurons in the awake rat. J Neurosci. 24:10773–10785.

- Doya K. 2008. Modulators of decision making. Nat Neurosci. 11:410–416.

- Eddine R, Valverde S, Tolu S, Dautan D, Hay A, Morel C, Cui Y, Lambolez B, Venance L, Marti F, et al. 2015. A concurrent excitation and inhibition of dopaminergic subpopulations in response to nicotine. Sci Rep. 5:8184.