Abstract

The novel coronavirus disease 2019 (COVID-19) began as an outbreak from epicentre Wuhan, People’s Republic of China in late December 2019, and till June 27, 2020 it caused 9,904,906 infections and 496,866 deaths worldwide. The world health organization (WHO) already declared this disease a pandemic. Researchers from various domains are putting their efforts to curb the spread of coronavirus via means of medical treatment and data analytics. In recent years, several research articles have been published in the field of coronavirus caused diseases like severe acute respiratory syndrome (SARS), middle east respiratory syndrome (MERS) and COVID-19. In the presence of numerous research articles, extracting best-suited articles is time-consuming and manually impractical. The objective of this paper is to extract the activity and trends of coronavirus related research articles using machine learning approaches to help the research community for future exploration concerning COVID-19 prevention and treatment techniques. The COVID-19 open research dataset (CORD-19) is used for experiments, whereas several target-tasks along with explanations are defined for classification, based on domain knowledge. Clustering techniques are used to create the different clusters of available articles, and later the task assignment is performed using parallel one-class support vector machines (OCSVMs). These defined tasks describes the behavior of clusters to accomplish target-class guided mining. Experiments with original and reduced features validate the performance of the approach. It is evident that the k-means clustering algorithm, followed by parallel OCSVMs, outperforms other methods for both original and reduced feature space.

Keywords: COVID-2019, One-class classification, Clustering, CORD-19, One-class support vector machine

1. Introduction

Coronaviruses are a large family of deadly viruses that may cause critical respiratory diseases to the human being. Severe acute respiratory syndrome (SARS) is the first known life-threatening epidemic, occurred in 2003, whereas the second outbreak reported in 2012 in Saudi Arabia with the middle east respiratory syndrome (MERS). The current outbreak is reported in Wuhan, China during late December 2019 [41]. On January 30, 2020, the world health organization (WHO) declared it a public health emergency of international concern (PHEIC) as it had spread to more than 18 countries [38] and on Feb 11, 2020, WHO named this “COVID-19”. On March 11, 2020, as the number of COVID-19 cases reaches to 118,000 in 114 countries and over 4,000 deaths, WHO declared this a pandemic.

Several research articles have been published on coronavirus caused diseases after 2003 till date. These articles belong to diverse domains like medicine and healthcare, data mining, pattern recognition, machine learning, etc. Manual extraction of the research papers of an individuals interest is a time consuming and impractical task in the presence of enormous research articles. More specifically, a researcher looks for a target class guided solution, i.e., the researcher seeks for a cluster of research articles meeting his/her area of interest. To accomplish this task, this paper proposes a cluster-based parallel OCSVM [40] approach. The cluster techniques are used to group the articles properly so that the articles in the same group are more similar to each other than to those in other clusters, whereas multiple OCSVMs are trained using individual cluster information. For experiments, a set of target-tasks is defined from the domain knowledge to generalize the nature of all articles, and these tasks will become the cluster representatives. To assign the target-tasks to the clusters, OCSVM plays a decisive role.

The clustering and classification problems are essential and admired topics of research in the area of pattern recognition and data mining. The conventional binary and multi-class classifiers are surely not suitable for this target-task mining task because, in this unsupervised learning mode, it is always possible that some of the clusters may not be assigned with any target-class, whereas an increase in the number of target-tasks to solve this problem leads to generation of duplicate information. These conventional classifiers work fine in the presence of at least two well-defined classes but may become biased, if the dataset suffers from data irregularity problems (imbalanced classes, small disjunct, skewed class distribution, missing values, etc.). Specially, when a class is ill-defined, the classifier may give biased outcome. Firstly, Minter [33] observed this problem and termed it “single-class classification”, and later, Koch et al. [22] named this fact “one-class classification” (OCC). Subsequently, researchers used different terms based on the application domain to which one-class classification was applied, like “outlier detection” [36], “novelty detection” [5] and “concept learning” [16]. In OCC problems, the target class samples are well-defined and the outliers are poorly defined which make decision boundary detection a difficult problem.

For OCC, many machine-learning algorithms have been proposed like: one-class random forest (OCRF), one-class deep neural network (OCDNN), one-class nearest neighbours (OCNN), one-class support vector classifiers (OCSVCs), etc. [1], [2], [7], [19], [29], [35]. The benifit of OCSVCs over other state-of-the-art OCC techniques is its work-ability with only positive class samples whereas the other methods need negative class samples too for smooth operation, hence the OCSVCs are found more suitable for this research. Based on extensive literature review, it is evident that OCSVMs (a type of OCSVC) are mostly used for novelty/anomaly detection in various application domains such as intrusion detection [20], [27], fraud detection [10], [15] disease diagnosis [8], [43] novelty detection [46] and document classification [28]. These assorted applicability make OCSVMs very interesting and important. Though many research articles have been published concerning OCSVM during the last two decades, still, it is not applied and tested for research article mining task. In this paper, OCSVM is used along with clustering techniques for article categorization, and results show that this approach gives promising performance.

The rest of the paper is organized as follows: Section 2 discusses the related work in this field and details of the dataset used. The proposed model is discussed in Sections 3 and 4 briefs the experimental setup and results. Finally, the conclusion is discussed in Section 5.

2. Related work

Document classification is an admired area of research in pattern recognition and data mining. In the present era, the presence of massive online research repositories makes the search of research articles of a user’s interest, a time-consuming process. Several web search engines [39] are available to search keywords related documents on the web. It is observed that, still, the target class guided information retrieval from the internet is a challenging task. Specifically, for a researcher, it is a very difficult task to extract only the related articles satisfying or meeting his/her objectives. This paper proposes a novel cluster-based parallel one-class classification model to assign the most promising tasks to a group of relevant articles in available repository. The experiments have been performed with original and reduced features to justify the computational benefit of low-dimensional features. This section briefs the relevant background of data-preprocessing, clustering, one-class classification, and dimensionality reduction techniques, along with the published papers related to COVID-19 for research article mining techniques using CORD-19 dataset.

2.1. Data-preprocessing for text mining

Document embeddings help to extract richer semantic content for document classification in text mining applications [9], [13]. Numeric representation of text documents is a challenging task in various applications such as document retrieval, web search, spam filtering, topic modeling, etc. In 1972, Jones [37] proposed a technique known as term frequency-inverse document frequency (Tf-idf). Tf-idf is a technique of information retrieval and text mining that uses a weight, which is a statistical measure to calculate the importance of a word within a document corpus. It is a frequency-based measure, and its significance increases proportionally as the frequency of the word in the corpus increases. Later, Bengio et al. [3] proposed a feed-forward neural network language model for word embedding. Later, a simpler and more effective neural architecture was developed to learn word vectors, word2vec by Mikolov et al. [31], where the objective functions produce high-quality vectors. Recently, doc2vec as an extension of word2vec has been provided by Mikolov et al. [32] to implement document-level embeddings. This technique is designed to work with the different granularity of the word sequences such as word n-gram, sentence, paragraph or document level. Avinash et al. [17] performed intensive experiments on multiple datasets and observed that doc2vec method performs better than TF-idf. In this paper, doc2vec is used to generate features of the research documents and defined tasks. The generated features are further used in other components of the proposed model for clustering and classification.

2.2. Clustering techniques

Clustering is an unsupervised learning approach used iteratively to create groups of relatively similar samples from the population. In this research, following three clustering techniques have been used to create the clusters of the available samples (research articles):

-

•

k-means

-

•

Density-based spatial clustering of applications with noise (DBSCAN)

-

•

Hierarchical agglomerative clustering (HAC)

The k-means algorithm is a popular clustering technique that aims to divide the data samples into k pre-defined distinct non-overlapping subgroups, where each data point belongs to only one group. It keeps inter-cluster data points similar to each other and also tries to maximize the distance between two clusters [6]. DBSCAN is a data clustering algorithm proposed by Martin et al. [12]. It is a non-parametric algorithm based on the concept of the nearest neighbour. It considers a set of points in some space and identifies groups of close data points as nearest neighbours and also identifies outliers to the points which are away from their neighbors. HAC [34] is a “bottom-up” approach in which each data point is initially considered as a single-element cluster. Later, in the next steps, the two similar clusters are merged to form a bigger cluster, and subsequently, converges to a single cluster. Experiments have been performed on CORD-19 dataset using above mentioned clustering algorithms, and the objective is to find the best cluster representation of the whole sample space.

2.3. One-class classification

One-class classification (OCC) algorithms are suitable when the negative class is either absent, poorly sampled or ill-defined. The objective of OCC is to maximize the learning ability using only the target class samples. The conventional classifiers need at least two well-defined classes for healthy operation but give biased results, if the test sample is an outlier. As a solution to this problem, the one-class classification techniques are used, majorly applicable for outliers/novelty detection and concept learning [18]. In this research, parallel one-class support vector machines are used for target-tasks assignment.

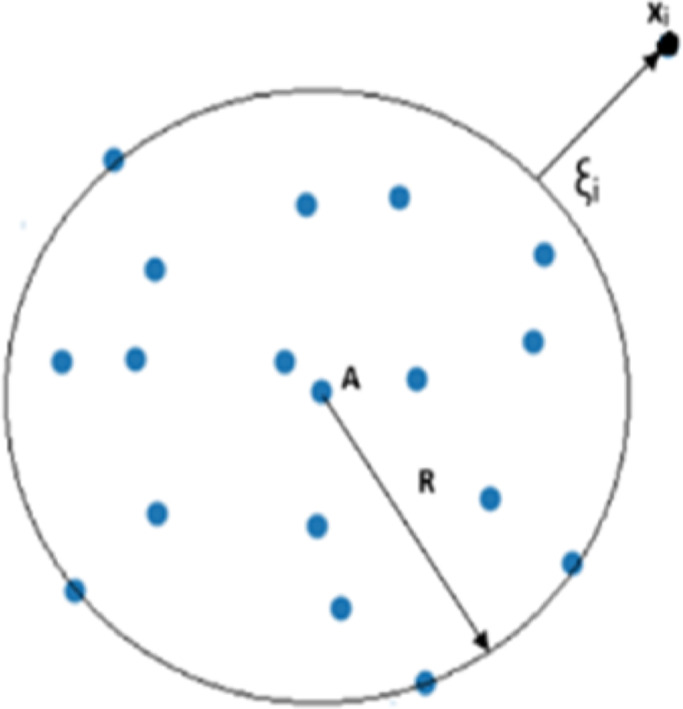

Tax et al. [42] solved the OCC problem by separating the target class objects from other samples in sample space, and proposed a model called support vector data description (SVDD), where target class samples are enclosed by a hypersphere, where the data points at decision boundary are treated as support vectors. The SVDD rejects a test sample as an outlier, if it falls outside of the hypersphere; otherwise accepts it as a target class sample, as shown in Fig. 1 . The objective function of SVDD is defined as:

| (1) |

subject to: i

Fig. 1.

Support Vector Data Description (SVDD).

where R is the radius of the hypersphere (objective is to minimize the radius), data point xi is an outlier, a is center of hypersphere, samples at decision boundary are support vectors, the parameter C controls the trade-off between the volume and the errors, and ξ is the slack variable to penalize the outlier. With the Lagrange multipliers αi ≥ 0, γi ≥ 0 and the purpose is to minimize the hyperspheres volume by minimizing R to cover all target class samples with the penalty of slack variables for outliers. By setting partial derivatives to zero and substituting those constraints into Eq. (1), following is obtained:

| (2) |

A test sample x is classified as an outlier if the description value is not smaller than C. SVDD can also be expanded using kernels. Thus, the problem can be formulated as follows:

| (3) |

The output of the SVDD can be calculated as follows:

| (4) |

In Eq. (4), the output is positive if the sample is inside the boundary, whereas for an outlier the output becomes negative.

Schlökopf et al. [40] proposed an alternative approach (named one-class support vector machine (OCSVM)) to SVDD by separating the target class samples from outliers using a hyperplane instead of creating hypersphere (Fig. 2 ). In this approach, the target class samples are separated by a hyperplane with the maximal margin from the origin and all negative class samples are assumed to fall on the subspace of the origin. This algorithm returns value +1 for target class region and -1 elsewhere. Following quadratic Eq. (5) must be solved to separate the target class samples from the origin:

| (5) |

subject to: and for all i = 1, 2, 3 n

Fig. 2.

One-Class Support Vector Machine (OCSVM).

where ϕ represents a point xi in feature space and ξi is the slack variable to penalize the outlier. The objective is to find a hyperplane characterized by ω and ρ to separate the target data points from the origin with maximum margin. Lower bound on the number of support vectors and upper bound on the fraction of outliers are set by ϵ (0,1]. Experimental results of this research ensures that for OCSVM, the Gaussian kernel outperforms other kernels.

The dual optimization problem of Eq. (5) is defined as follows:

| (6) |

subject to: i = 1, 2, 3..., n.

where and αi is the Lagrange multiplier, whereas the weight-vector w can be expressed as:

| (7) |

ρ is the margin parameter and computed by any xi whose corresponding Lagrange multiplier satisfies

| (8) |

With kernel expansion the decision function can be defined as follows:

| (9) |

Finally, the test instance x can be labelled as follows:

| (10) |

where sign(.) is sign function.

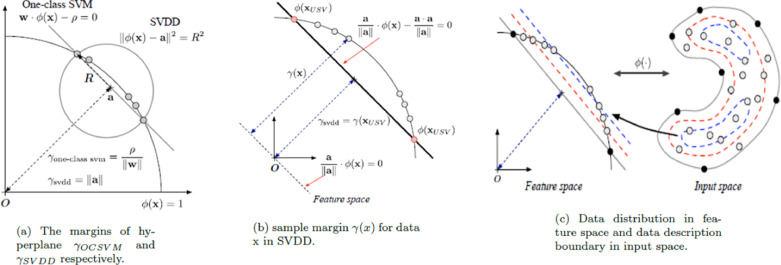

Both these breakthrough approaches for OCC (SVDD and OCSVM) perform equally with Gaussian kernel and origin plays a decisive role where all the negative class data points are pre-assumed to lie on the origin. In unit norm feature space, the margin of a hyperplane of OCSVM is equal to the norm of the centre of SVDD is [21] as shown in Fig. 3 (a). SVDD can be reformulated by a hyperplane equation as follows:

| (11) |

where a is the centre of SVDD hypersphere. The normal vector wsvdd and the bias ρsvdd of SVDD hyperplane can be defined as below:

| (12) |

Fig. 3.

Geometric representation of SVDD and one-class SVM [35].

In feature space, the virtual hyperplane passes through the origin and the sample margin is defined by its distance from the image of the data as shown in Fig. 3(b). The sample margin in SVDD can be defined as below:

| (13) |

where a is the centre of SVDDs hypersphere and y() is the image of data in feature space. In OCSVM, the sample margin is defined as follows:

| (14) |

Because data examples exist on the surface of a unit hypersphere, the sample margin has “0” as the minimum value and “1” as the maximum, i.e.

| (15) |

Also, sample margins of unbounded support vectors xUSV (0 < <) are the same as the margin of hyperplane.

Hence:

| (16) |

| (17) |

Sample margin represents the distribution of samples in feature space. Fig. 3(c) shows the distribution of sample margin of training data and hyperplane of OCSVM in feature space. It is also evident that the SVDD and OCSVM perform equally with RBF kernel. In the present research work, OCSVM is used for experiments.

2.4. Dimensionality reduction

The research articles are associated with massive features and all are not equally important that leads to the curse of dimensionality. In this paper, doc2vec technique is used to generate features for experiments. Reduced features obtained by applying the following dimensionality reduction (DR) techniques are also used for experiments aiming to reduce the computation cost without affecting the performance:

-

•

Principal component analysis (PCA)

-

•

Isometric Mapping (ISOMAP)

-

•

t-distributed stochastic neighbor (t-SNE)

-

•

Uniform manifold approximation and projection (UMAP)

Principal component analysis [45] is a linear DR technique that extracts the dominant features from the data. PCA generates a lower-dimensional representation of the data and describes the maximum variance in the data as possible. This is done by finding a linear basis of reduced dimensionality for the data, in which the amount of variance in the data is maximal. ISOMAP is a nonlinear DR technique for manifold learning that uses geodesic distances to generate features by using transformation from a larger to smaller metric space [4]. t-SNE works as an unsupervised, non-linear technique mainly supports data exploration and visualization in a high-dimensional space. It calculates a similarity count between pairs of instances in the high dimensional and low dimensional space and then optimizes these two similarity counts using a cost function [26]. Whereas, UMAP supports effective data visualization based on Riemannian geometry and algebraic topology with excellent run time performance as compared to other techniques [30].

2.5. Recent researches on CORD-19 dataset

The COVID-19 open research dataset (CORD-19) [23] is prepared by White House in partnership with leading research groups characterizing the wide range of literature related to coronavirus. It consists of 45,000 scholarly articles, within 33,000 articles are full text about various categories of coronavirus. The dataset is a collection of commercial, noncommercial, custom licensed and bioRxiv/medRxiv subsets of documents from multiple repositories contributed by various research groups all over the world. The CORD-19 dataset is hosted by kaggle, consists of a set of useful questions about disease spread in order to find out information regarding its origin, causes, transmission, diagnostic, etc. These questions can motivate researchers to explore preceding epidemiological studies to have better planning of preventive measures under present circumstances of COVID-19 disease outbreak. This dataset is aimed to cater the global research community, an opportunity to perform machine learning, data mining and natural language processing tasks to explore the hidden insights within it and utilize the knowledge to tackle this pandemic worldwide. Thus, there is an utmost need to explore the literature with minimum time and effort so that all possible solutions related to the worldwide pandemic could be achieved.

In a research initiative, Wang et al. [44] generated a named entity recognition (NER) based dataset from CORD-19 corpus (2020-03-13). This derived CORD-19-NER dataset consists of 75 categories of entities extracted from 4 different data sources. The data generation method is a weakly supervised method and useful for text mining in both biomedical as well as societal applications. Later, Han et al. [14] in their research work, focused on the impact of outdoor air pollution on the immune system. The research work highlighted that increased air pollution causes respiratory virus infection, but during the lock-down, along with social distancing and home isolation measures, air pollution gets reduced. This research work utilizes daily confirmed COVID-19 cases in selected cities of China, air pollution, meteorology data, intra/inter city level movements, etc. They proposed a regression model to establish the relationship between the infection rate and other surrounding factors.

Since the entire world is suffering from the severity of the novel coronavirus outbreak, researchers are keen to learn more and more about coronavirus. In a research work, Dong et al. [11] proposed a latent Dirichlet allocation (LDA) based topic modeling approach using CORD-19 dataset. This article highlights the areas with limited research; therefore, future research activities can be planned accordingly. The corona outbreak pandemic situation demands extensive research on the corona vaccine so that we can control this outbreak. Manual methods of exploring the available literature are time-consuming; therefore, Joshi et al. [17], proposed deep learning-based automatic literature mining for summarization of research articles for faster access.

It is observed that mining scholarly articles is a promising area of research when enormous papers are available concerning COVID-19. Search engines may give unrelated articles, and therefore the overall search process becomes ineffective and time-consuming. As the overall analysis is supposed to perform in unlabeled data, the clustering technique helps to group the related articles in an optimized way. It is obvious that the search query is always task specific, hence the target-tasks are defined with domain knowledge. Conventional classifiers (binary or multi-class) may give biased results and never ensure task-specific classification, thus parallel one-class SVMs are used for target-task assignment to clusters. This method helps the researchers to instantly find the desired research articles more precisely. For experiments, the CORD-19 dataset is used to validate the performance of the proposed approach.

3. Proposed methodology

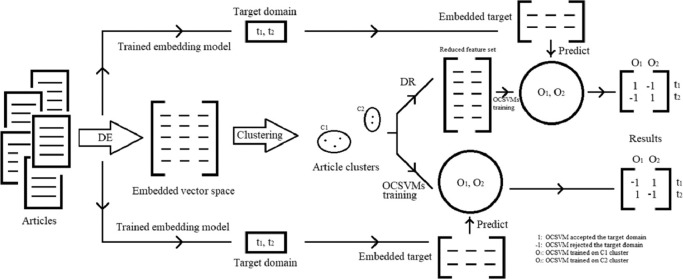

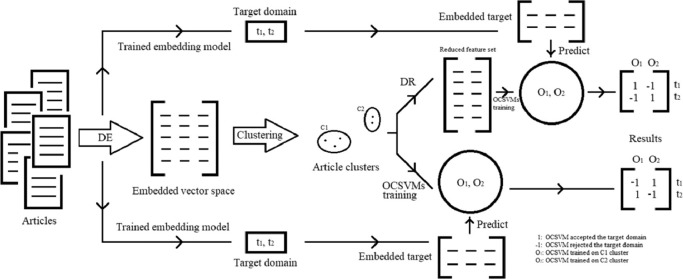

This research work offers a bottom-up approach to mine the COVID-19 articles concerning the target-tasks. These tasks are defined and prepared by domain knowledge to answer all possible queries of coronavirus related research articles. In this paper, instead of associating the articles to address the target-tasks, the intention is to map the defined tasks to the cluster of similar articles. The overall approach is divided into several components, such as document embedding (DE), articles clustering (AC), dimensionality reduction (DR) and visualization, and one-class classifier (OCC) as shown in Fig. 4

Fig. 4.

Schematic representation of the proposed approach.

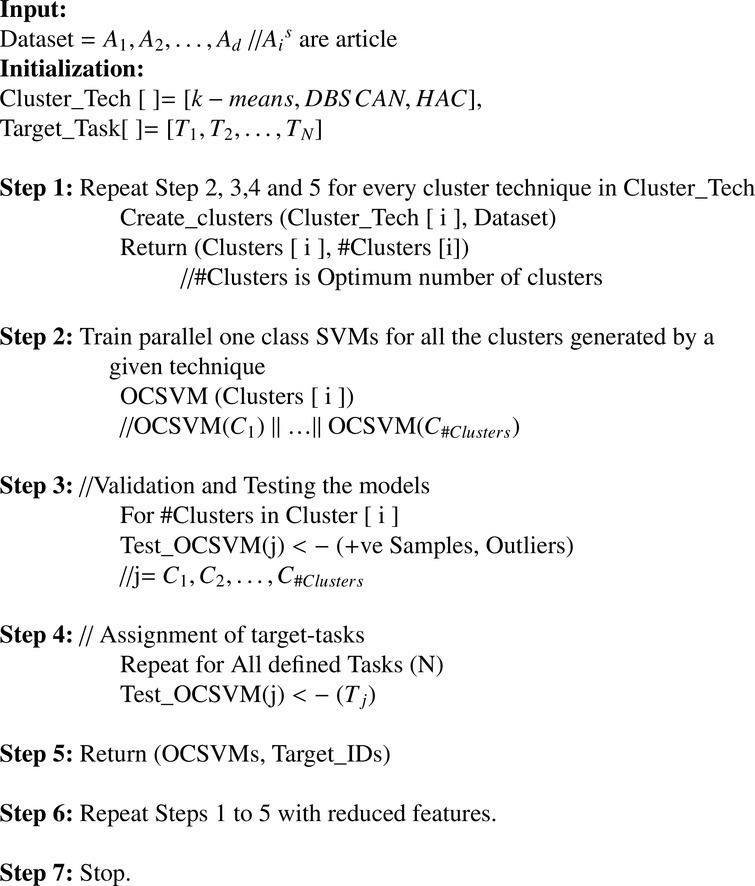

Since the abstract of a scholarly article represents the semantic meaning of the whole article, this along with the target-tasks are mapped onto the numeric data represented in multi-dimensional vector space using the state-of-the-art natural language processing (NLP) document embedding techniques such as doc2vec [25] based on distributed memory version of paragraph vector (PV-DM) [24]. The generated vector spaces of the article’s abstracts are utilized to generate segregated clusters of related articles. The extensive trials are conducted with varying clustering approaches like k-means [6], DBSCAN [12] and HAC [34], to generate an appropriate number of segregated clusters. The generated clusters are also analyzed with the two-dimensional visual representation via dimensionality reduction techniques such as PCA [45], ISO- MAP [4], t-SNE [26], and UMAP [30]. Each of the generated clusters are parallely trained on one-class support vector machines (OCSVM) separately. The trained models are then utilized to associate the most appropriate articles for the concerned required information (target-task). Furthermore, these generated results are statistically compared in contrast to the computational effective approach (CEA), where in CEA, the OCSVM models are trained on reduced clusters feature set generated using above discussed dimensionality reduction techniques. Algorithm 1 presents the complete process of the proposed approach.

Algorithm 1.

Cluster creation and target-task assignment.

4. Experimentation

The proposed methodology is trained and evaluated on CORD-19 [23] dataset, retrieved on April 4, 2020. After extensive study, the articles and the target domain comprising nine target-tasks as shown in Table 1 [23]. The details of these tasks (not included in this article because of length) are later explored to generate features for further processing. These task descriptions are embedded in a high dimensional vector space using doc2vec approach. After extensive trials it is evident that 150 features are sufficient for optimized performance.

Table 1.

Target description from CORD-19 [23].

| Target ID | Target Domain |

|---|---|

| T-1 | What is known about transmission incubation and environmental stability? |

| T-2 | What do we know about COVID-19 risk factors? What have we learned from epidemiological studies? |

| T-3 | What do we know about virus genetics origin and evolution?” |

| T-4 | What do we know about vaccines and therapeutics? What has been published concerning research and development and evaluation efforts of vaccines and therapeutics? |

| T-5 | What do we know about the effectiveness of non-pharmaceutical interventions? |

| T-6 | What do we know about diagnostics and surveillance? |

| T-7 | What has been published about medical care? |

| T-8 | What has been published concerning ethical considerations for research? |

| T-9 | What has been published about information sharing and inter-sectoral collaboration? |

The doc2vec implementation is based on the PV-DM model that is analogous to the continuous bag of words (CBOW) approach of word2vec [24] with just an additional feature vector representing the full article. The PV-DM model obtains the document vector by training a neural network to predict the words from the context of vocabulary or word vector and full doc-vector, represented as W and D [24]. Following this, the word model is trained on words w 1, w 2, ..., wT, with the objective to maximize the log likelihood as given in Eq. (18). The word is predicted with probability distribution obtained using softmax activation function provided in Eq. (19). The Eq. (20) indicates the hypothesis (yi) associated with each output word (i).

| (18) |

where indicates the window size to consider the words context.

| (19) |

| (20) |

where and are trainable parameters and h is obtained by concatenation of word vectors (W) and document vector (D).

This approach is utilized to embed the target-tasks and corpus of COVID-19 articles into high dimensional vector space. Due to the small corpus of targets, instead of directly generating the target vector space, each target query is elaborated to emphasize on the expected type of articles by adding the appropriate semantic meaning.

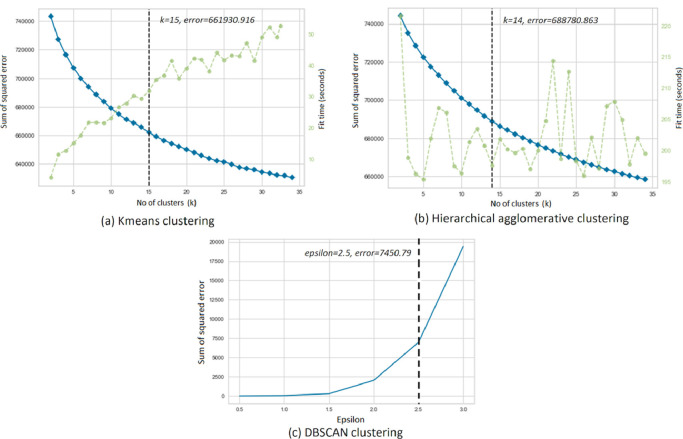

The generated document vector space of the articles is utilized by clustering approaches; k-means, DBSCAN, and HAC. In k-means and HAC, the appropri- ate number of clusters are set by computing the sum of squared error for the number of clusters varying from 2 to 35. As shown in Fig. 5 (a) and 5(b), the elbow point indicates that there are 14 and 15 favourable numbers of clusters that can represent the most related articles. Whereas DBSCAN approach considers groups of close data points (minimum sample points) in a defined space (epsilon) as nearest neighbours without specifying the number of clusters. Fig. 5(c) illustrates the sum of squared error for the generated clusters with varying epsilon value. The epsilon is kept as 2.5 due to the sudden increase in the sum of squared error for higher values which resulted in skewed 16 clusters of articles.

Fig. 5.

Computing most suitable number of clusters.

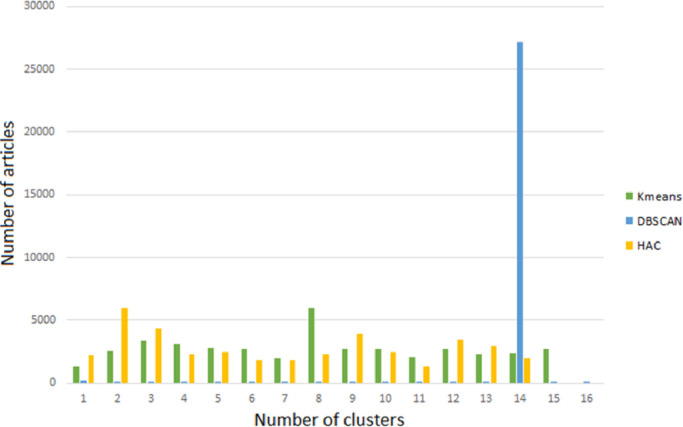

Fig. 6 presents the number of articles constituting each cluster generated from the above discussed clustering algorithm out of which DBSCAN performs the worst due to the sparse mapping of the articles in the cluster.

Fig. 6.

Distribution of samples in each cluster formed by using k-means, DBSCAN and HAC.

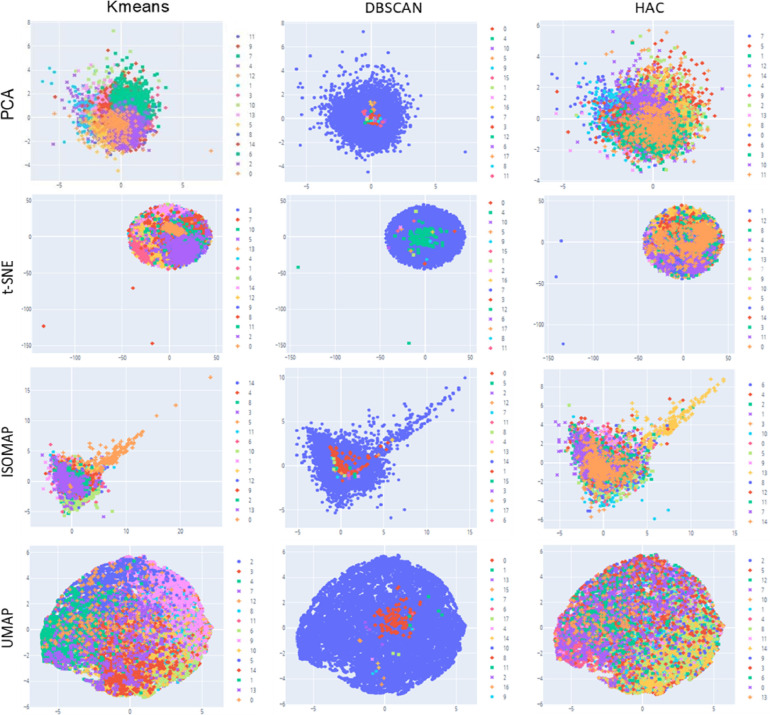

Since these clusters are featured in high dimensional space, visualizing them in two dimensional space requires dimensionality reduction techniques like PCA, ISOMAP, etc. Later, these reduced feature-sets are also utilized to train the OCSVMs in order to compare the results in presence of original feature space. The clusters are visualized with the principal components displaying highest variance. Fig. 7 illustrates the two dimensional cluster representation from the multi-dimensional feature vector of subsample of articles for each possible combination of the discussed clustering and dimensionality reduction approaches. It is evident that from the visualization shown in Fig. 7, among the utilized clustering approaches, k-means is able to generate the meaningful clusters with better segregation and aggregation of COVID-19 articles.

Fig. 7.

Clustering visualization of corpus of COVID-19 articles.

There are 14, 15 and 16 numbers of clusters generated from HAC, k-means, and DBSCAN respectively. For each of the generated clusters, dedicated OCSVMs are trained parallely where each OCSVM learns to confine a cluster on the positive side of the hyperplane with a maximum margin from the origin. The trained models are utilized to predict the most related target queries concerning a cluster as per Eq. (21).

| (21) |

| (22) |

where P and T indicate any vector space. where Oi indicates the ith OCSVM trained on Ci cluster, and Tt indicates the target-task for which to identify the related articles. Each predicted target domain is verified using the cosine similarity metric as given in Eq. (22) in contrast to the assigned clusters of articles. The metric value ranges between 0 and 1, with the meaning of articles being totally different and same respectively. Finally, the articles are sorted in the order of most relevance based on the highest cosine score. The Table 2 presents the top five related articles and the corresponding similarity score along with the total number of articles found with the cosine score greater than 0.1, using the OCSVMs trained on the clusters generated via k-means approach. It is also observed that the inter-cluster similarity is always less that 0.1 that plays a significant role in target class classification of COVID-19 based research articles.

Table 2.

Prediction analysis of the OCSVMs trained on k-means clusters corresponding to each target from CORD-19 dataset.

| Target ID | Most relevant article | Similarity score | Associated cluster | Total articles |

|---|---|---|---|---|

| T-1 | Persistence of Antibodies against Middle East Respiratory Syndrome coronavirus | 0.4 | 1 | 7953 |

| Aerodynamic Characteristics and RNA Concentration of SARS-CoV-2 Aerosol in Wuhan Hospitals during COVID-19 Outbreak | 0.4 | 12 | ||

| Evaluation of SARS-CoV-2 RNA shedding in clinical specimens and clinical characteristics of 10 patients with COVID-19 in Macau | 0.39 | 8 | ||

| Effects of temperature on COVID-19 transmission | 0.37 | 12 | ||

| Human coronavirus 229E Remains Infectious on Common Touch Surface Materials | 0.37 | 11 | ||

| T-2 | Clinical and Epidemiologic Characteristics of Spreaders of Middle East Respiratory Syndrome coronavirus during the 2015 Outbreak in Korea | 0.51 | 12 | 20630 |

| Comparative Pathogenesis of Covid-19, Mers And Sars In A Non-Human Primate Model | 0.51 | 12 | ||

| A comparison study of SARS-CoV-2 IgG antibody between male and female COVID-19 patients: a possible reason underlying different outcome between gender | 0.49 | 5 | ||

| Severe acute respiratory syndrome (SARS) in intensive care units (ICUs): limiting the risk to healthcare workers | 0.48 | 5 | ||

| Comparison of viral infection in healthcare- associated pneumonia (HCAP) and community-acquired pneumonia (CAP) | 0.48 | 5 | ||

| T-3 | The impact of within-herd genetic variation upon inferred transmission trees for foot-and-mouth disease virus | 0.43 | 3 | 9412 |

| Epidemiologic data and pathogen genome sequences: a powerful synergy for public health | 0.42 | 2 | ||

| Transmission Parameters of the 2001 Foot and Mouth Epidemic in Great Britain | 0.39 | 3 | ||

| Middle East respiratory syndrome coronavirus neutralising serum antibodies in dromedary camels: a comparative serological study | 0.39 | 11 | ||

| FastViromeExplorer: a pipeline for virus and phage identification and abundance profiling in metagenomics data | 0.39 | 13 | ||

| T-4 | Development of a recombinant truncated nucleocapsid protein based immunoassay for detection of antibodies against human coronavirus OC43 | 0.42 | 10 | 13499 |

| The Effectiveness of Convalescent Plasma and Hyperimmune Immunoglobulin for the Treatment of Severe Acute Respiratory Infections of Viral Etiology: A Systematic Review and Exploratory Meta-analysis | 0.4 | 3 | ||

| Journal Pre-proof Discovery and development of safe-in-man broad-spectrum antiviral agents Discovery and Development of Safe-in-man Broad-Spectrum Antiviral Agents | 0.4 | 10 | ||

| Evaluation of Group Testing for SARS-CoV-2 RNA | 0.4 | 3 | ||

| Antiviral Drugs Specific for coronaviruses in Preclinical Development | 0.39 | 10 | ||

| T-5 | Communication of bed allocation decisions in a critical care unit and accountability for reasonableness | 0.45 | 2 | 12170 |

| Collaborative accountability for sustainable public health: A Korean perspective on the effective use of ICT-based health risk communication | 0.44 | 7 | ||

| Pandemic influenza control in Europe and the constraints resulting from incoherent public health laws | 0.44 | 11 | ||

| Street-level diplomacy and local enforcement for meat safety in northern Tanzania: knowledge, pragmatism and trust | 0.44 | 2 | ||

| Staff perception and institutional reporting: two views of infection control compliance in British Columbia and Ontario three years after an outbreak of severe acute respiratory syndrome | 0.43 | 2 | ||

| T-6 | Planning and preparing for public health threats at airports | 0.42 | 10 | 8777 |

| Can free open access resources strengthen knowledge-based emerging public health priorities, policies and programs in Africa? | 0.4 | 10 | ||

| Implications of the One Health Paradigm for Clinical Microbiology | 0.39 | 2 | ||

| Annals of Clinical Microbiology and Antimicrobials Predicting the sensitivity and specificity of published real-time PCR assays | 0.38 | 3 | ||

| A field-deployable insulated isothermal RT-PCR assay for identification of influenza A (H7N9) shows good performance in the laboratory | 0.38 | 7 | ||

| T-7 | Strategy and technology to prevent hospital-acquired infections: Lessons from SARS, Ebola, and MERS in Asia and West Africa | 0.41 | 15 | 9912 |

| Rapid Review The psychological impact of quarantine and how to reduce it: rapid review of the evidence | 0.41 | 4 | ||

| Factors Informing Outcomes for Older Cats and Dogs in Animal Shelters | 0.4 | 4 | ||

| A Critical Care and Transplantation-Based Approach to Acute Respiratory Failure after Hematopoietic Stem Cell Transplantation in Children | 0.39 | 8 | ||

| Adaptive multiresolution method for MAP reconstruction in electron tomography | 0.39 | 14 | ||

| T-8 | Twentieth anniversary of the European Union health mandate: taking stock of perceived achievements, failures and missed opportunities - a qualitative study | 0.47 | 12 | 15191 |

| Towards evidence-based, GIS-driven national spatial health information infrastructure and surveillance services in the United Kingdom | 0.46 | 7 | ||

| H1N1 influenza pandemic in Italy revisited: has the willingness to get vaccinated suffered in the long run? | 0.44 | 7 | ||

| What makes health systems resilient against infectious disease outbreaks and natural hazards? Results from a scoping review | 0.44 | 14 | ||

| A qualitative study of zoonotic risk factors among rural communities in southern China | 0.44 | 14 | ||

| T-9 | A cross-sectional study of pandemic influenza health literacy and the effect of a public health campaign | 0.5 | 1 | 12332 |

| Public Response to Community Mitigation Measures for Pandemic Influenza | 0.48 | 1 | ||

| Casualties of war: the infection control assessment of civilians transferred from conflict zones to specialist units overseas for treatment | 0.47 | 8 | ||

| Communications in Public Health Emergency Preparedness: A Systematic Review of the Literature | 0.47 | 4 | ||

| Ethics-sensitivity of the Ghana national integrated strategic response plan for pandemic influenza | 0.46 | 4 |

5. Results and discussion

The Table 3 illustrates the detailed results of the OCSVMs to map the target domain to the group of articles trained on each cluster generated using k-means, DBSCAN, and HAC. The percentage score indicates the quality of the article clusters to accommodate at most one target-task, which is computed as the ratio of total number of negative targets to the total number of targets (-1 and +1) as given in Eq. (23). A higher value indicates the results provided by the concerned cluster are concise and most relevant.

| (23) |

where N indicates the total number of target-tasks, and indicates the number of targets accepted and not accepted by OCSVM.

Table 3.

Target-task mapping using OCSVMs end-to-end trained on article clusters.

| CA | Tasks | OCSVMs trained on clusters |

|||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | ||

| k-means | T1 | 1 | -1 | -1 | 1 | -1 | -1 | -1 | 1 | -1 | -1 | 1 | 1 | -1 | -1 | -1 | - |

| T2 | 1 | -1 | -1 | -1 | 1 | 1 | 1 | 1 | -1 | 1 | 1 | 1 | -1 | 1 | 1 | - | |

| T3 | -1 | 1 | 1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | 1 | 1 | -1 | -1 | -1 | - | |

| T4 | -1 | -1 | 1 | -1 | -1 | -1 | -1 | -1 | -1 | 1 | -1 | -1 | 1 | -1 | -1 | - | |

| T5 | -1 | -1 | 1 | -1 | -1 | -1 | 1 | -1 | -1 | -1 | 1 | 1 | -1 | -1 | -1 | - | |

| T6 | -1 | 1 | 1 | -1 | -1 | -1 | 1 | -1 | -1 | 1 | -1 | -1 | 1 | -1 | 1 | - | |

| T7 | 1 | -1 | -1 | 1 | -1 | -1 | -1 | -1 | -1 | 1 | -1 | -1 | 1 | -1 | 1 | - | |

| T8 | 1 | 1 | -1 | -1 | -1 | 1 | 1 | 1 | 1 | -1 | 1 | 1 | -1 | 1 | 1 | - | |

| T9 | 1 | -1 | -1 | 1 | -1 | -1 | -1 | 1 | -1 | -1 | -1 | -1 | 1 | -1 | -1 | - | |

| % score | 0.44 | 0.56 | 0.56 | 0.67 | 0.89 | 0.78 | 0.56 | 0.44 | 0.89 | 0.56 | 0.44 | 0.44 | 0.56 | 0.78 | 0.56 | - | |

| DBSCAN | T1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | 1 | -1 | -1 |

| T2 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | 1 | -1 | -1 | |

| T3 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | 1 | -1 | -1 | |

| T4 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | 1 | -1 | -1 | |

| T5 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | 1 | -1 | -1 | |

| T6 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | 1 | -1 | -1 | |

| T7 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | 1 | -1 | -1 | |

| T8 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | 1 | -1 | -1 | |

| T9 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | -1 | 1 | -1 | -1 | |

| % score | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| HAC | T1 | 1 | -1 | -1 | 1 | -1 | -1 | -1 | 1 | -1 | -1 | 1 | 1 | -1 | -1 | - | - |

| T2 | 1 | -1 | -1 | -1 | 1 | 1 | 1 | 1 | -1 | 1 | 1 | 1 | -1 | 1 | - | - | |

| T3 | -1 | 1 | 1 | -1 | -1 | -1 | -1 | -1 | 1 | -1 | 1 | 1 | -1 | -1 | - | - | |

| T4 | -1 | -1 | 1 | -1 | 1 | -1 | -1 | -1 | -1 | 1 | -1 | -1 | 1 | -1 | - | - | |

| T5 | -1 | 1 | 1 | -1 | -1 | -1 | 1 | -1 | -1 | -1 | 1 | 1 | -1 | -1 | - | - | |

| T6 | -1 | 1 | 1 | -1 | -1 | -1 | 1 | -1 | 1 | 1 | -1 | -1 | 1 | -1 | - | - | |

| T7 | -1 | -1 | -1 | 1 | -1 | 1 | -1 | 1 | -1 | 1 | -1 | -1 | 1 | -1 | - | - | |

| T8 | 1 | 1 | -1 | -1 | -1 | 1 | 1 | 1 | 1 | -1 | 1 | 1 | -1 | 1 | - | - | |

| T9 | 1 | -1 | 1 | 1 | -1 | -1 | -1 | 1 | -1 | 1 | -1 | -1 | 1 | -1 | - | - | |

| % score | 0.56 | 0.56 | 0.44 | 0.67 | 0.78 | 0.67 | 0.56 | 0.44 | 0.67 | 0.44 | 0.44 | 0.44 | 0.56 | 0.78 | - | - | |

Based on the extensive trials, it is observed that k-means and HAC outperf- ormed the DBSCAN with a significant margin in generating good quality of clusters, whereas k-means performed better than HAC as observed from the statistics in Table 3, which is utilized for training the OCSVMs with the generated clusters for mapping the target domain appropriately.

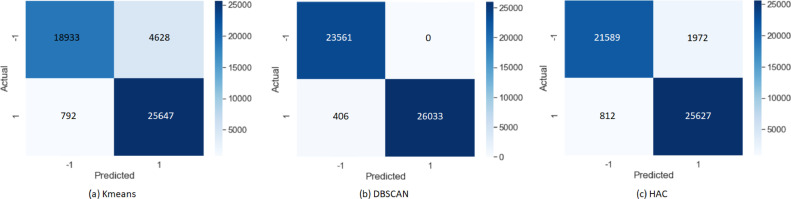

The confusion matrix shown in Fig. 8 represents the average performance of the trained OCSVMs by using the clusters generated from k-means, DBSCAN, and HAC in original feature space. It shows that the false negative rate is almost negligible, and ensures the robustness of the approach. Results show that among all three clustering techniques DBSCAN sounds most promising, however due to its heavily skewed distribution of articles in the clusters it is not a robust technique for this problem, hence k-means is observed most suitable clustering technique to work along with parallel OCSVMs.

Fig. 8.

Normalized confusion matrix for average performance of the parallel OCSVMs.

Furthermore, the complete procedure was repeated with the dimensionally reduced embedding of the articles with the concern to achieve similar or better results with less computational cost. Table 4 describes the target mapping results on the articles vector space whose embedded feature set is reduced from 150 to 2 dimensions. The performance of the proposed approach is analysed in the presence of reduced features as shown in Table 5 . The results exhibit that in presence of reduced features obtained by ISOMAP, the k-means along with OCSVMs method outperforms others and needs less computation power.

Table 4.

OCSVM target-task mapping quality score per cluster on the reduced feature set.

| CA | DR | Target mapping score of OCSVMs trained on reduced feature clusters |

|||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | ||

| k-means | PCA | 0 | 0.78 | 0.78 | 0.44 | 0.44 | 0.44 | 0.56 | 0.67 | 0.22 | 0.33 | 0.56 | 0.56 | 0.56 | 0.56 | 0 | - |

| t-SNE | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | - | |

| ISOMAP | 0.44 | 0.56 | 0.44 | 0.67 | 0.44 | 0.44 | 0.44 | 0.56 | 0.44 | 0.11 | 0.56 | 0.44 | 0.67 | 0.44 | 0.44 | - | |

| UMAP | 0 | 0.78 | 0.67 | 0.56 | 0.56 | 0.44 | 0.67 | 0.56 | 0.33 | 0.22 | 0.56 | 0 | 0.56 | 0.11 | 0 | - | |

| DBSCAN | PCA | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.89 | 0 | 0 | 0 | 0.22 | 0 | 0 |

| t-SNE | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | |

| ISOMAP | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.67 | 0 | 0 | 0 | 0.69 | 0 | 0 | |

| UMAP | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.89 | 0 | 0 | 0.22 | 0.89 | 0 | 0 | |

| HAC | PCA | 0.22 | 0.56 | 0.11 | 0.78 | 0.22 | 0.67 | 0.67 | 0.11 | 0.56 | 0.44 | 0.56 | 0.89 | 0.56 | 0.22 | - | - |

| t-SNE | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | - | - | |

| ISOMAP | 0.44 | 0.56 | 0.11 | 0.44 | 0.44 | 0.44 | 0.56 | 0.44 | 0.44 | 0.44 | 0.44 | 0.44 | 0.44 | 0 | - | - | |

| UMAP | 0.56 | 0.67 | 0.22 | 0.89 | 0.22 | 0.78 | 0.67 | 0.22 | 0.67 | 0.56 | 0.56 | 0 | 0.56 | 0.22 | - | - | |

Table 5.

Overall behavior of all models.

| Clustering scheme | DR scheme | TP | TN | FP | FN | Precision | Accuracy | Recall | Specificity | F1-Score |

|---|---|---|---|---|---|---|---|---|---|---|

| Kmeans | PCA | 19464 | 25748 | 4097 | 691 | 0.826 | 0.904 | 0.966 | 0.863 | 0.890 |

| t-SNE | 19640 | 26028 | 4101 | 411 | 0.827 | 0.910 | 0.980 | 0.864 | 0.897 | |

| ISOMAP | 18485 | 25699 | 5076 | 740 | 0.785 | 0.884 | 0.962 | 0.835 | 0.864 | |

| UMAP | 18907 | 26118 | 4654 | 321 | 0.802 | 0.901 | 0.983 | 0.849 | 0.884 | |

| DBSCAN | PCA | 23519 | 25862 | 42 | 577 | 0.998 | 0.988 | 0.976 | 0.998 | 0.987 |

| t-SNE | 19460 | 26028 | 0 | 411 | 1.000 | 0.991 | 0.979 | 1.000 | 0.990 | |

| ISOMAP | 23538 | 26071 | 23 | 368 | 0.999 | 0.992 | 0.985 | 0.999 | 0.992 | |

| UMAP | 23499 | 26128 | 62 | 311 | 0.997 | 0.993 | 0.987 | 0.998 | 0.992 | |

| HAC | PCA | 19334 | 25315 | 4227 | 1124 | 0.821 | 0.893 | 0.945 | 0.857 | 0.878 |

| t-SNE | 18722 | 26016 | 4839 | 423 | 0.795 | 0.895 | 0.978 | 0.843 | 0.877 | |

| ISOMAP | 19356 | 25723 | 4205 | 716 | 0.822 | 0.902 | 0.964 | 0.859 | 0.887 | |

| UMAP | 17653 | 26121 | 5908 | 318 | 0.749 | 0.875 | 0.982 | 0.816 | 0.850 |

6. Conclusion

The pandemic environment of COVID-19 has brought attention to the research community. Many research articles have been published concerning the novel coronavirus. The CORD initiative has developed a repository to record the published COVID-19 articles with the update frequency of a day. This article proposed a novel bottom-up target guided approach for mining of COVID-19 articles using parallel one-class support vector machines which are trained on the clusters of related articles generated with the help of clustering approaches such as k-means, DBSCAN, and HAC from the embedded vector space of COVID-19 articles generated by doc2vec approach. With extensive trials, it was observed that the proposed approach with reduced features produced significant results by providing quality of relevant articles for each discussed target-task, which is verified by the cosine similarity score. The domain of this article is not limited to mining the scholarly articles, where it can further be extended to other applications concerned with the data mining problems.

CRediT authorship contribution statement

Sanjay Kumar Sonbhadra: Methodology, Formal analysis, Resources, Writing - original draft, Visualization, Validation. Sonali Agarwal: Methodology, Formal analysis, Resources, Writing - original draft, Visualization, Validation, Supervision. P. Nagabhushan: Supervision, Formal analysis.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

We are very grateful to our institute, Indian Institute of Information Technology Allahabad (IIITA), India and Big Data Analytics (BDA) lab for providing the necessary infrastructure and resources. We would like to thank our supervisors and colleagues for their valuable guidance and suggestions. We are also very thankful for providers of CORD-19 dataset.

References

- 1.Alam S., Sonbhadra S.K., Agarwal S., Nagabhushan P. One-class support vector classifiers: A survey. Knowl-Base Syst. 2020:105754. [Google Scholar]

- 2.Alam S., Sonbhadra S.K., Agarwal S., Nagabhushan P., Tanveer M. Sample reduction using farthest boundary point estimation (fbpe) for support vector data description (svdd) Patt Recogn Lett. 2020 [Google Scholar]

- 3.Bengio Y., Ducharme R., Vincent P., Jauvin C. A neural probabilistic language model. J Mach Learn Res. 2003;3(Feb):1137–1155. [Google Scholar]

- 4.Bengio Y., Paiement J.-f., Vincent P., Delalleau O., Roux N.L., Ouimet M. Advances in neural information processing systems. 2004. Out-of-sample extensions for lle, isomap, mds, eigenmaps, and spectral clustering; pp. 177–184. [Google Scholar]

- 5.Bishop C.M. Novelty detection and neural network validation. IEE Proceed-Vis Image Signal Process. 1994;141(4):217–222. [Google Scholar]

- 6.Bradley P.S., Fayyad U.M. ICML. Vol. 98. Citeseer; 1998. Refining initial points for k-means clustering. pp. 91–99. [Google Scholar]

- 7.Chalapathy R. Chawla S. 2019. Deep learning for anomaly detection: A survey. arXiv preprint arXiv:1901.03407.

- 8.Cohen G., Sax H., Geissbuhler A. Mie. 2008. Novelty detection using one-class parzen density estimator. an application to surveillance of nosocomial infections. pp. 21–26. [PubMed] [Google Scholar]

- 9.Dai AM, Olah C, Le QV. 2015. Document embedding with paragraph vectors. arXiv preprint arXiv:1507.07998.

- 10.Dong L., Shulin L., Zhang H. A method of anomaly detection and fault diagnosis with online adaptive learning under small training samples. Patt Recog. 2017;64:374–385. [Google Scholar]

- 11.Dong M., Cao X., Liang M., Li L., Liang H., Liu G. Understand research hotspots surrounding covid-19 and other coronavirus infections using topic modeling. medRxiv. 2020 [Google Scholar]

- 12.Ester M., Kriegel H.-P., Sander J., Xu X. Int. Conf. Knowledge Discovery and Data Mining. Vol. 240. 1996. Density-based spatial clustering of applications with noise; p. 6. [Google Scholar]

- 13.Feldman R., Sanger J. Cambridge university press; 2007. The text mining handbook: advanced approaches in analyzing unstructured data. [Google Scholar]

- 14.Han Y., Lam J., Li V., Guo P., Zhang Q., Wang A. 2020. The effects of outdoor air pollution concentrations and lockdowns on covid-19 infections in Wuhan and other provincial capitals in China. [Google Scholar]

- 15.Hejazi M., Singh Y.P. One-class support vector machines approach to anomaly detection. Appl Artif Intell. 2013;27(5):351–366. [Google Scholar]

- 16.Japkowicz N. 1999. Concept-learning in the absence of counter-examples: an autoassociation-based approach to classification. [Google Scholar]

- 17.Joshi B.P., Bakrola V.D., Shah P., Krishnamurthy R. deepmine-natural language processing based automatic literature mining and research summarization for early stage comprehension in pandemic situations specifically for covid-19. bioRxiv. 2020 [Google Scholar]

- 18.Khan S.S., Madden M.G. Irish conference on artificial intelligence and cognitive science. Springer; 2009. A survey of recent trends in one class classification; pp. 188–197. [Google Scholar]

- 19.Khan S.S., Madden M.G. One-class classification: taxonomy of study and review of techniques. Knowl Eng Rev. 2014;29(3):345–374. [Google Scholar]

- 20.Khreich W., Khosravifar B., Hamou-Lhadj A., Talhi C. An anomaly detection system based on variable n-gram features and one-class svm. Inform Softw Technol. 2017;91:186–197. [Google Scholar]

- 21.Kim P.J., Chang H.J., Choi J.Y. 2008 19th International Conference on Pattern Recognition. IEEE; 2008. Fast incremental learning for one-class support vector classifier using sample margin information; pp. 1–4. [Google Scholar]

- 22.Koch M.W., Moya M.M., Hostetler L.D., Fogler R.J. Cueing, feature discovery, and one-class learning for synthetic aperture radar automatic target recognition. Neur Netw. 1995;8(7–8):1081–1102. [Google Scholar]

- 23.Kohlmeier S., Lo K., Wang L.L., Yang J. Covid-19 open research dataset (CORD-19) J Bio-Base Market. 2020;1 [Google Scholar]

- 24.Le Q., Mikolov T. International conference on machine learning. 2014. Distributed representations of sentences and documents; pp. 1188–1196. [Google Scholar]

- 25.Lau J.H., Baldwin T. 2016. An empirical evaluation of doc2vec with practical insights into document embedding generation. [Google Scholar]; arXiv preprint arXiv:1607.05368

- 26.Maaten L.v.d., Hinton G. Visualizing data using t-sne. J Mach Learn Res. 2008;9(Nov):2579–2605. [Google Scholar]

- 27.Maglaras L.A., Jiang J., Cruz T.J. Combining ensemble methods and social network metrics for improving accuracy of ocsvm on intrusion detection in scada systems. J Inform Secur Applica. 2016;30:15–26. [Google Scholar]

- 28.Manevitz L.M., Yousef M. One-class svms for document classification. J Mach Learn Res. 2001;2(Dec):139–154. [Google Scholar]

- 29.Mazhelis O. One-class classifiers: a review and analysis of suitability in the context of mobile-masquerader detection. S Afri Comput J. 2006;2006(36):29–48. [Google Scholar]

- 30.McInnes L, Healy J, Melville J. 2018. Umap: Uniform manifold approximation and projection for dimension reduction. arXiv preprint arXiv:1802.03426.

- 31.Mikolov T., Sutskever I., Chen K., Corrado G.S., Dean J. Advances in neural information processing systems. 2013. Distributed representations of words and phrases and their compositionality; pp. 3111–3119. [Google Scholar]

- 32.Mikolov T, Chen K, Corrado G, Dean J. 2013. Efficient estimation of word representations in vector space. arXiv preprint arXiv:1301.3781.

- 33.Minter T. LARS Symposia. 1975. Single-class classification; p. 54. [Google Scholar]

- 34.Müllner D. 2011. Modern hierarchical, agglomerative clustering algorithms. arXiv preprint arXiv:1109.2378.

- 35.Pimentel M.A., Clifton D.A., Clifton L., Tarassenko L. A review of novelty detection. Signal Process. 2014;99:215–249. doi: 10.1007/s11265-013-0835-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Ritter G., Gallegos M.T. Outliers in statistical pattern recognition and an application to automatic chromosome classification. Patt Recogn Lett. 1997;18(6):525–539. [Google Scholar]

- 37.Robertson S. Understanding inverse document frequency: on theoretical arguments for idf. J Document. 2004 [Google Scholar]

- 38.WHO. Who corona-viruses (covid-19). https://www.who.int/emergencies/diseases/novel-corona-virus-2019,2020; 2020. [Online; accessed May 02, 2020].

- 39.Sánchez D., Martínez-Sanahuja L., Batet M. Survey and evaluation of web search engine hit counts as research tools in computational linguistics. Inform Syst. 2018;73:50–60. [Google Scholar]

- 40.Schölkopf B., Platt J.C., Shawe-Taylor J., Smola A.J., Williamson R.C. Estimating the support of a high-dimensional distribution. Neur Comput. 2001;13(7):1443–1471. doi: 10.1162/089976601750264965. [DOI] [PubMed] [Google Scholar]

- 41.Stoecklin S.B., Rolland P., Silue Y., Mailles A., Campese C., Simondon A. First cases of coronavirus disease 2019 (covid-19) in france: surveillance, investigations and control measures, january 2020. Eurosurveillance. 2020;25(6):2000094. doi: 10.2807/1560-7917.ES.2020.25.6.2000094. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Tax D.M., Duin R.P. Support vector domain description. Patt Recogn Lett. 1999;20(11–13):1191–1199. [Google Scholar]

- 43.Uttreshwar G.S., Ghatol A. 2008. Hepatitis b diagnosis using logical inference and self-organizing map 1. [Google Scholar]

- 44.McInnes L, Healy J, Melville J. 2020. Comprehensive named entity recognition on cord-19 with distant or weak supervision. arXiv preprint arXiv:2003.12218.

- 45.Wold S., Esbensen K., Geladi P. Principal component analysis. Chemomet Intell Lab Syst. 1987;2(1–3):37–52. [Google Scholar]

- 46.Yin L., Wang H., Fan W. Active learning based support vector data description method for robust novelty detection. Knowl-Base Syst. 2018;153:40–52. [Google Scholar]