Abstract

Artificial intelligence (AI) has taken radiology by storm, particularly mammogram interpretation, and there has been a recent surge in publications on potential uses of AI in breast radiology. Breast cancer places a heavy burden on the National Health Service (NHS) and, as of 2018, was the second most common cancer in the UK. New cases of breast cancer have risen over the past decade, while survival has improved, in part because of the NHS breast cancer screening program. The expansion of the screening program has produced more mammograms, increasing radiologists' workload, and double reading adds further to this burden. Computer-aided detection (CAD) systems, introduced to help radiologists, did not achieve the expected improvement in reader performance. The shortcomings of CAD systems have fuelled an explosion of studies and applications with potential use in breast imaging. The reported success of machine learning in breast radiology has led some to postulate that AI will replace breast radiologists. AI certainly has many applications and potential uses in radiology, but will it replace radiologists? We reviewed articles on the use of AI in breast radiology to give radiologists and future radiologists a comprehensive overview of this topic. This article explains the basic principles and terminology of AI in radiology and discusses its potential uses and limitations. We also analyse the literature to answer the question of whether AI will replace radiologists.

Keywords: artificial intelligence, radiology, breast cancer, mammogram

Introduction and background

Women in the UK have a one in eight chance of developing breast cancer during their lifetime [1]. According to official statistics for 2018, breast cancer was the second most common cancer diagnosed in the UK, with 47,476 recorded cases, and it has been the most frequently diagnosed cancer in England since 1996 [2,3]. Between 2008 and 2018, the incidence rate increased while the mortality rate decreased, as shown in Table 1.

Table 1. Age-standardized mortality and incidence rates per 100,000 for breast cancer in females, England, 2008 and 2018.

Statistics credit: Cancer registration statistics, England [3].

| Year | Mortality rate (per 100,000) | Incidence rate (per 100,000) |
|---|---|---|
| 2008 | 39.9 | 165.5 |
| 2018 | 34.1 | 167.7 |
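The relative changes behind Table 1 can be checked with simple arithmetic. The short Python sketch below is an illustration added here, not part of the cited statistics; it computes the percentage change in each age-standardized rate between 2008 and 2018 using only the values in the table.

```python
# Age-standardized rates per 100,000 females, England (values copied from Table 1)
RATES = {
    2008: {"mortality": 39.9, "incidence": 165.5},
    2018: {"mortality": 34.1, "incidence": 167.7},
}


def percent_change(old: float, new: float) -> float:
    """Relative change from old to new, expressed as a percentage."""
    return 100.0 * (new - old) / old


for measure in ("mortality", "incidence"):
    change = percent_change(RATES[2008][measure], RATES[2018][measure])
    print(f"{measure}: {change:+.1f}%")
# mortality: -14.5%
# incidence: +1.3%
```

The output shows mortality falling by roughly 14.5% while incidence rose by about 1.3% over the same decade.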

The decreasing mortality shown in Table 1, set against the increase in new cases, indicates that survival from breast cancer has improved [3]. This improvement has been attributed to early detection of breast cancer through expanded mammography screening [3]. Mammography is the best available method for detecting breast cancer before lesions become clinically apparent, and screening can reduce mortality by as much as 30% [4]. However, mammograms are complex to interpret, and the high number of examinations per reader (1.79 million mammograms were performed in 2017-18 under the National Health Service [NHS] Breast Screening Program) can result in inaccurate diagnoses [1,5]. Concern about such errors led to the adoption of double reading of mammograms in the UK and Europe [6]. Compared with single reading, double reading increases the sensitivity of mammography by 5%-15% [6]. Computer-aided detection (CAD) systems were introduced because radiologists missed about 25% of visible cancers on mammograms due to interpretation errors [7], and their purpose was to improve human detection performance [8]. However, recent studies show that CAD has not had the expected impact: there was no significant improvement in the diagnostic accuracy of mammography and no reduction in recall rates [9]. Advances in AI promise to address the flaws of CAD systems [10]. Present-day breast radiologists, and those aspiring to become radiologists, should be aware of the fundamental principles of AI, its uses and limitations, and what the future may hold. Radiologists do not need to master the technical details of AI, but they must know the terminology used by application developers so that they can communicate with them and prepare efficiently for the future.
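Why a second reader raises sensitivity can be illustrated with a deliberately simplified probability model. The sketch below assumes, purely for illustration, that the two readers miss cancers independently and that a case counts as detected if either reader flags it; the 0.80 single-reader sensitivity is a hypothetical value, not a figure from the cited studies.

```python
def double_reading_sensitivity(s1: float, s2: float) -> float:
    """Sensitivity when a cancer counts as detected if EITHER reader flags it.

    ASSUMPTION: the two readers miss cancers independently. Real readers tend
    to miss the same subtle lesions, so this is an optimistic estimate.
    """
    miss_both = (1.0 - s1) * (1.0 - s2)  # probability that both readers miss
    return 1.0 - miss_both


single = 0.80  # hypothetical single-reader sensitivity, for illustration only
print(f"single reading: {single:.2f}")
print(f"double reading (independent misses): {double_reading_sensitivity(single, single):.2f}")
# single reading: 0.80
# double reading (independent misses): 0.96
```

When readers' misses are positively correlated, the probability that both miss is higher than the product used here, so the true combined sensitivity is lower than this idealized figure, which may help explain why the reported gains of 5%-15% are more modest.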

Review

Artificial intelligence

Artificial intelligence (AI) technology has existed for more than half a century and has become increasingly sophisticated [11]. The first reports on AI use in radiology date back to 1963, but the early enthusiasm quickly faded [12]. The recent growth in electronic medical data, together with technological improvements, has brought new vigour to AI applications [11-13]. AI is defined as a technology that can broadly mimic human intelligence [11-13]. AI has two broad categories, namely general AI and narrow AI [11-13]. General AI is a concept in which machines can function and exhibit all the intellectual capabilities of humans, such as reasoning, seeing and even hearing, whereas narrow AI refers to technologies that can only perform specific tasks [11-13]. Narrow AI is what is achievable at present, and general AI is still a pipe dream.

Machine Learning

The idea of introducing technology into medicine began with tools known as expert systems, whose goal was to create algorithms that would make decisions like physicians [13-15]. However, it was not possible to write an algorithm for every disease, given the many different presentations and scenarios encountered in day-to-day medicine [13-15]. Machine learning (ML) then took over from expert systems in the 1990s [13-15]. ML is a subfield of AI that attempts to replicate the learning aspect of the human brain [13-15]. In ML, an algorithm learns from exposure to large, new datasets and improves with continued exposure to data [13]. ML does not require explicit programming, and this distinguishes it from standard programming, which requires clear step-by-step instructions for the program to follow [14].
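The contrast between explicit programming and ML can be made concrete with a toy example. In the sketch below, which is a hypothetical illustration and not taken from the cited sources, a hand-written rule fixes a lesion-size threshold in advance, whereas `learn_threshold` chooses the threshold that makes the fewest errors on labelled examples (a one-feature "decision stump"). The lesion sizes and labels are invented.

```python
def hand_written_rule(size_mm: float) -> int:
    """Explicit programming: a person fixes the rule (the 10 mm threshold is hypothetical)."""
    return 1 if size_mm > 10.0 else 0


def learn_threshold(sizes, labels):
    """Machine learning in miniature: choose the threshold that makes the
    fewest errors on labelled training examples (a one-feature decision stump)."""
    best_t, best_errors = None, len(sizes) + 1
    for t in sorted(set(sizes)):
        errors = sum((s > t) != bool(y) for s, y in zip(sizes, labels))
        if errors < best_errors:
            best_t, best_errors = t, errors
    return best_t


# Toy labelled data (hypothetical): lesion size in mm, 1 = malignant, 0 = benign
sizes = [4, 6, 7, 9, 12, 15, 18, 20]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
t = learn_threshold(sizes, labels)
print(t)  # 9
print([int(s > t) for s in sizes] == labels)  # True
print(hand_written_rule(12))  # 1
```

If more labelled examples were added, `learn_threshold` would simply be rerun on the larger dataset, whereas the hand-written rule would need a person to revise it.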

Categories of ML

ML can be divided into supervised, unsupervised and reinforcement learning [13-15]. In unsupervised learning, the input data are not labelled [13-15]. The algorithm is expected to analyse the data, group them, and produce an output, and no feedback is supplied [13-15]. In reinforcement learning, the algorithm analyses data and is either rewarded or penalised depending on the accuracy of the output it produces (reinforcements) [13-15]; the machine learns how to act in a particular environment to maximize rewards [15]. In supervised learning, the algorithm is given labelled data to learn from, a stage known as the training phase [15]. The algorithm is expected to find recurring patterns in the training data and learn to map inputs to results. In breast radiology, for example, the labels are often tissue diagnoses, so the diagnosis that AI suggests for a mammogram must match the tissue diagnosis [13].
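The difference between supervised and unsupervised learning is easiest to see on the same data. The Python sketch below uses invented one-dimensional feature values: the supervised part learns one centroid per labelled class (a nearest-centroid classifier), while the unsupervised part runs a basic k-means clustering that groups the values without any labels. Reinforcement learning is not shown, because it needs an environment that supplies rewards.

```python
from statistics import mean

# Toy one-dimensional feature values (hypothetical, for illustration only)
data = [1.0, 1.2, 0.8, 5.0, 5.3, 4.9]

# Supervised: each example comes with a label, and we learn one centroid per class
labels = ["benign", "benign", "benign", "malignant", "malignant", "malignant"]


def fit_centroids(xs, ys):
    """Mean feature value of each labelled class."""
    return {c: mean(x for x, y in zip(xs, ys) if y == c) for c in set(ys)}


def predict(centroids, x):
    """Assign x to the class whose centroid is nearest."""
    return min(centroids, key=lambda c: abs(x - centroids[c]))


# Unsupervised: no labels; k-means groups the data without knowing what the groups mean
def kmeans_1d(xs, k=2, iterations=10):
    centres = sorted(xs)[:: max(1, len(xs) // k)][:k]  # simple deterministic initialisation
    for _ in range(iterations):
        clusters = [[] for _ in centres]
        for x in xs:
            nearest = min(range(k), key=lambda i: abs(x - centres[i]))
            clusters[nearest].append(x)
        centres = [mean(c) if c else centres[i] for i, c in enumerate(clusters)]
    return centres


centroids = fit_centroids(data, labels)
print(predict(centroids, 4.0))  # malignant
print(kmeans_1d(data))  # two unnamed cluster centres, near 1.0 and 5.07
```

k-means returns two group centres but cannot say which group is benign; attaching that meaning requires labels, as in the supervised case.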

Artificial Neural Networks

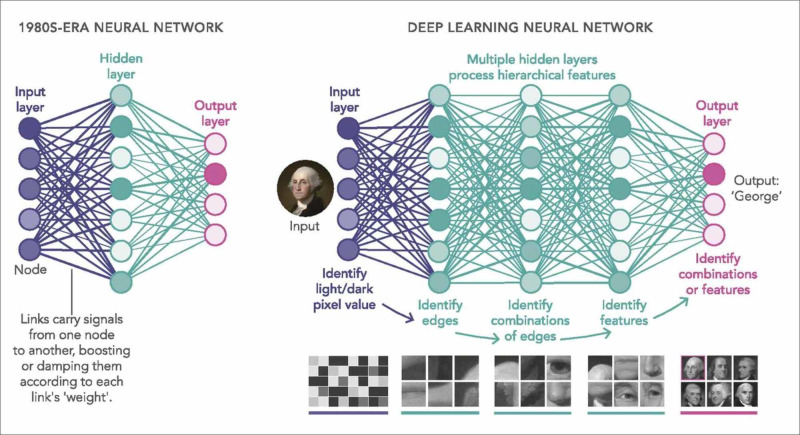

Artificial neural networks (ANNs) are a class of ML models that use mathematical and statistical principles to analyse data [16]. They were inspired by the way biological nervous systems process information through a large number of highly interconnected neurons [16]. An ANN has one input layer and one output layer of neurons [13-16]. Between the input and output layers are one or more "hidden layers." A hidden layer consists of a set of neurons, each connected to all neurons in the previous and next layers [13-16].
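The layered structure described above can be sketched as a single forward pass through a tiny fully connected network. In this sketch, the layer sizes (three inputs, two hidden neurons, one output), the sigmoid activation and all weights are hypothetical values chosen for illustration; a real ANN would learn its weights from training data.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases):
    """Fully connected layer: each neuron takes a weighted sum of ALL inputs plus a bias."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


# Hypothetical hand-picked weights: 3 inputs -> 2 hidden neurons -> 1 output neuron
W_hidden = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]
b_hidden = [0.0, 0.1]
W_out = [[1.2, -0.7]]
b_out = [0.05]

x = [0.9, 0.1, 0.4]                          # input layer: three feature values
hidden = dense_layer(x, W_hidden, b_hidden)  # hidden layer activations
output = dense_layer(hidden, W_out, b_out)   # output layer: a value between 0 and 1
print(len(hidden), output)
```

Each hidden neuron computes a weighted sum of all its inputs plus a bias and passes it through the activation function, which is what "connected to all neurons in the previous layer" means in practice.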

Deep Neural Network (Deep Learning)

Deep learning (DL) is the AI approach most widely used in image interpretation. A deep neural network (DNN) is an ANN with many layers (five or more), connected and organised so that successive layers capture increasingly meaningful representations of the data, which enables improved predictions [13-16]. These layers transform the inputs into an output, and that output changes in an orderly way as the AI system learns new features from the data [13-16]. DNNs continue to improve as the size of the training data increases, and they work not only as classifiers but also as feature extractors [13-17]. The differences between a DNN and a simple neural network are illustrated in Figure 1.

Figure 1. Simple neural network compared with a deep neural network.

Photo credit: Waldrop [17].
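A deep network repeats the same layer computation many times. The sketch below stacks several randomly initialised, untrained layers (the widths are hypothetical) and keeps every layer's output, to show the sense in which a DNN also acts as a feature extractor: the activations of the last hidden layer form a feature vector that could be passed to another classifier. Training by backpropagation is omitted, so the numbers themselves carry no meaning.

```python
import random


def relu(z: float) -> float:
    return max(0.0, z)


def make_layer(n_in: int, n_out: int, rng: random.Random):
    """Randomly initialised fully connected layer: (weights, biases)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(layers, x):
    """Pass x through every layer and return the activations of each layer.
    Hidden layers use ReLU; the final layer is left linear (raw class scores)."""
    activations = [x]
    for index, (weights, biases) in enumerate(layers):
        z = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
        x = z if index == len(layers) - 1 else [relu(v) for v in z]
        activations.append(x)
    return activations


rng = random.Random(0)
widths = [8, 16, 16, 16, 8, 2]  # hypothetical: input, four hidden layers, output
layers = [make_layer(a, b, rng) for a, b in zip(widths, widths[1:])]
acts = forward(layers, [rng.random() for _ in range(8)])

features = acts[-2]  # last hidden layer: a feature vector computed by the network
scores = acts[-1]    # output layer: one score per class
print(len(acts), len(features), len(scores))  # 6 8 2
```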

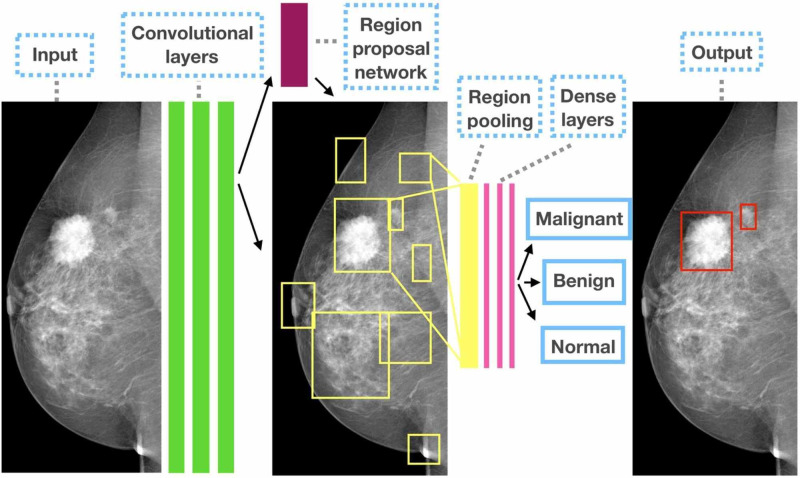

Convolutional Neural Networks

A typical fully connected deep network takes one-dimensional (vector) inputs, whereas convolutional neural networks (CNNs) take two- or three-dimensional data such as images [15,18]. Convolution is a mathematical operation used to find local features that recur across an image [15,18]. CNNs consist of an input layer, an output layer and many hidden layers that extract useful information by convolving (filtering) the data. CNNs are the most commonly used AI tool in breast imaging [15,18]. Figure 2 shows how a CNN extracts data from an image and processes the data to give a possible diagnosis.

Figure 2. Schematic illustration of how a convolutional neural network extracts data and processes it to give an output.

Photo credit: Ribli et al. [18].
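Convolution can be illustrated on a tiny image. The sketch below slides a 3 × 3 kernel over a 5 × 5 image containing a bright vertical stripe; the image and the vertical-edge kernel are hypothetical, hand-chosen values, whereas in a CNN the kernel weights are learned during training. Like most deep learning libraries, it actually computes cross-correlation (the kernel is not flipped), which is the operation CNN layers conventionally call "convolution".

```python
def convolve2d(image, kernel):
    """'Valid' 2-D convolution (strictly cross-correlation, as in CNN layers):
    slide the kernel over the image and take a weighted sum at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A tiny 5x5 "image" with a bright vertical stripe in column 2 (hypothetical values)
image = [[0, 0, 9, 0, 0] for _ in range(5)]

# Vertical-edge kernel: responds where intensity changes from left to right
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

for row in convolve2d(image, kernel):
    print(row)
# [27, 0, -27]
# [27, 0, -27]
# [27, 0, -27]
```

The large positive and negative responses mark the left and right edges of the stripe, and the zero in the middle column shows that this kernel gives no response where the intensities on either side of the window's centre are equal.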

Transfer Learning

Transfer learning applies knowledge obtained from one task to a separate but related task; for example, an algorithm trained for non-medical visual recognition can be used for feature extraction on mammograms [15]. Knowledge gained from analysing ordinary photographs is transferable to mammogram analysis [15].
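A common way to apply transfer learning is to keep a pretrained feature extractor frozen and train only a small new classifier on top of it. In the sketch below, `pretrained_features` is a hypothetical stand-in for the frozen layers of a network pretrained on ordinary photographs (here it simply returns mean intensity and contrast), and the 2 × 2 patches and labels are invented; only the logistic-regression "head" is trained.

```python
import math


def pretrained_features(image):
    """HYPOTHETICAL stand-in for the frozen layers of a network pretrained on
    ordinary photographs; here it just returns mean intensity and contrast.
    It is never changed during training below (it is 'frozen')."""
    pixels = [p for row in image for p in row]
    return [sum(pixels) / len(pixels), max(pixels) - min(pixels)]


def score(w, b, features):
    return sum(wi * fi for wi, fi in zip(w, features)) + b


def train_head(images, labels, epochs=1000, lr=0.5):
    """Train only a new logistic-regression head on top of the frozen features."""
    feats = [pretrained_features(img) for img in images]
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = 1.0 / (1.0 + math.exp(-score(w, b, f)))
            grad = p - y  # gradient of the log-loss with respect to the score
            w = [wi - lr * grad * fi for wi, fi in zip(w, f)]
            b -= lr * grad
    return w, b


def predict(w, b, image):
    return 1 if score(w, b, pretrained_features(image)) > 0 else 0


# Toy 2x2 patches (hypothetical); label 1 = high-contrast finding
images = [
    [[0.2, 0.2], [0.2, 0.2]],
    [[0.5, 0.5], [0.5, 0.6]],
    [[0.0, 0.9], [0.1, 0.1]],
    [[0.3, 1.0], [0.3, 0.3]],
]
labels = [0, 0, 1, 1]
w, b = train_head(images, labels)
print([predict(w, b, img) for img in images])
```

Because the feature extractor is reused unchanged, only three parameters (two weights and a bias) are learned here, which is why transfer learning can work with far fewer labelled medical images than training a full network from scratch.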

Use of AI in breast imaging

The role of AI in breast imaging is not to determine whether machines are cleverer than humans. Instead, AI is meant to expand, sharpen and relax the mind of the radiologist so that radiologists can do the same for their patients [19]. The current and possible future uses of AI in breast radiology are outlined below.

Image Interpretation

Advances in AI and imaging technology have led to an increase in the proposed applications of AI in breast radiology, and a number of studies have explored the use of DL in mammogram interpretation. DL has been applied to diagnosing breast pathology in several tasks, such as differentiating benign from malignant breast masses, separating masses from microcalcifications, distinguishing between tumor and healthy tissue, discriminating between benign, malignant and healthy tissue, and detecting masses in mammogram images [20-24]. AI also has potential uses in density segmentation and risk calculation; classifying breast tissue into density categories, such as scattered and uniformly dense; image segmentation, that is, mapping the edges of a lesion; lesion identification, measurement and labeling; comparison with previous images; comparison of the left and right breasts, and of the craniocaudal and mediolateral-oblique views of each breast; and classification of breast anatomy in mammograms [25-28].
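The idea of comparing the left and right breasts can be sketched with a simple asymmetry map: mirror one side so both share the same orientation, then take pixel-wise differences. This is a hypothetical illustration that assumes the two images are already the same size and aligned (registered); real systems must handle positioning differences and typically use learned models rather than raw subtraction.

```python
def mirror(image):
    """Flip an image left-right so both breasts share the same orientation."""
    return [list(reversed(row)) for row in image]


def asymmetry_map(left, right):
    """Absolute pixel-wise difference between the left image and the mirrored right image.
    ASSUMES both images are the same size and already aligned (registered)."""
    right_mirrored = mirror(right)
    return [[abs(a - b) for a, b in zip(row_l, row_r)] for row_l, row_r in zip(left, right_mirrored)]


def strongest_asymmetry(left, right):
    """Return (difference value, (row, col)) of the largest left-right difference."""
    diff = asymmetry_map(left, right)
    value, row, col = max((v, i, j) for i, r in enumerate(diff) for j, v in enumerate(r))
    return value, (row, col)


# Toy images (hypothetical intensities): the right side mirrors a normal left side,
# but the left image has one bright spot at row 1, column 1.
left = [[1, 2, 3], [1, 9, 3], [1, 2, 3]]
right = [[3, 2, 1], [3, 2, 1], [3, 2, 1]]
print(strongest_asymmetry(left, right))  # (7, (1, 1))
```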

Radiomics

Radiomics is the extraction of large numbers of features from diagnostic images using algorithms [29,30]; these are known as radiomic features [29,30]. On a mammogram, radiomics can extract many features that the human eye cannot see and link these features with each other and with other data [29,30]. The resulting data provide valuable information to radiologists and can help predict prognosis and treatment response, among many other potential uses such as differentiating benign from malignant breast tumors [30].
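A few of the simplest radiomic features, first-order statistics of pixel intensities within a region of interest (ROI), can be computed as below. This is an illustrative sketch: the ROI is invented and assumed to be already segmented and discretized into grey levels, and practical radiomics pipelines compute many more features, including shape and texture descriptors.

```python
import math
from collections import Counter


def first_order_features(roi):
    """Simple first-order (histogram-based) features of a region of interest.

    ASSUMES `roi` is a 2-D list of already discretized grey levels.
    """
    pixels = [p for row in roi for p in row]
    n = len(pixels)
    mean = sum(pixels) / n
    variance = sum((p - mean) ** 2 for p in pixels) / n
    # Shannon entropy (bits) of the grey-level histogram: higher means more heterogeneous
    entropy = -sum((c / n) * math.log2(c / n) for c in Counter(pixels).values())
    return {"mean": mean, "variance": variance, "entropy": entropy}


print(first_order_features([[1, 1], [2, 2]]))
# {'mean': 1.5, 'variance': 0.25, 'entropy': 1.0}
```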

Imaging Banks

Vast amounts of data are now stored, owing to the continually increasing memory capacity of computers [29]. Overloaded picture archiving and communication systems (PACS) are now common because raw images and large amounts of data generated from imaging are saved [29]. AI can be used to store quantitative images, and these stored images could be a valuable source of training datasets for new algorithms [29].

Structured Reporting

AI can help standardize reporting by assisting with reporting templates and the choice of vocabulary and by recommending the most probable diagnosis [31].

Deciding Exam Priority Level

AI tools can also help radiologists decide exam priority using appropriateness criteria [15]. A clinical decision support (CDS) system helps referring clinicians choose the most relevant imaging procedure [32,33]. Combining AI with CDS could improve this process and ultimately increase efficiency in the radiology department [29,33].
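Once each exam has a priority score, ordering the reading worklist is a standard priority-queue problem. The sketch below assumes a hypothetical AI suspicion score between 0 and 1 for each exam and reads the highest score first, breaking ties by arrival order; it does not model appropriateness criteria or CDS, which would supply or adjust such scores in practice.

```python
import heapq


def build_worklist(exams):
    """Return exam IDs ordered by descending (hypothetical) AI suspicion score.

    exams: list of (exam_id, score) pairs in arrival order.
    Ties are broken by arrival order, so equally suspicious exams stay first-come, first-served.
    """
    # heapq is a min-heap, so negate the score to pop the most suspicious exam first
    heap = [(-score, arrival, exam_id) for arrival, (exam_id, score) in enumerate(exams)]
    heapq.heapify(heap)
    return [heapq.heappop(heap)[2] for _ in range(len(heap))]


exams = [("A", 0.12), ("B", 0.91), ("C", 0.45), ("D", 0.91)]
print(build_worklist(exams))  # ['B', 'D', 'C', 'A']
```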

Quick Identification of Negative Studies

AI can be used to identify studies that are normal and leave the rest for human readers, which may reduce workload and improve sensitivity by letting readers concentrate on the remaining studies [29,34]. This approach would be useful in high-volume sites or where double reading is practised [29,34]. Alternatively, AI could classify exams as normal or abnormal, with those labelled normal read by one radiologist and the abnormal ones sent for double reading [29,34].
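The second triage approach can be sketched as a simple threshold rule. In the example below, the AI scores and the 0.2 threshold are hypothetical; exams below the threshold are routed to single reading and the rest to double reading, and the function counts how many human reads result. In practice, the threshold would have to be validated so that very few cancers fall below it.

```python
def route_exams(scores, threshold):
    """Split exams by a hypothetical AI suspicion score.

    Exams scoring below `threshold` go to single reading; the rest go to double reading.
    Returns (routes, number_of_human_reads).
    """
    routes = ["single" if s < threshold else "double" for s in scores]
    reads = sum(1 if r == "single" else 2 for r in routes)
    return routes, reads


scores = [0.02, 0.05, 0.10, 0.40, 0.75, 0.03, 0.90, 0.08]
routes, reads = route_exams(scores, threshold=0.2)
print(routes.count("single"), routes.count("double"), reads)  # 5 3 11
print("double reading of every exam:", 2 * len(scores), "reads")  # 16 reads
```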

Clerical Work and Clinical Data Management

Physicians are increasingly loaded with administrative work, which is among the leading causes of burnout, and AI applications could help in this area [34-37]. The ultimate goal of such ML-based systems is to help healthcare workers cut documentation time and improve report quality [34-37].

AI challenges

Acceptance of AI

In the early days of autopilot, pilots were reluctant to accept the technology, and a similar situation exists today, with radiologists fearing for their jobs [34-38]. However, technological advancement is not new to radiology; it has always been part of the specialty, and technology is what drives radiology [34-38]. Bertalan Meskó has dubbed AI "the stethoscope of the 21st century" [36]; it was not easy for early physicians to incorporate and trust the stethoscope, which became one of their best tools [36]. There is a need to raise awareness of AI through different channels to make its adoption smooth [37]. Acceptance by patients matters as well, so patients also need to be informed about AI. Consent from patients is also needed to use AI in image interpretation, and patients should be able to choose between AI and humans.

Training Data

Vast numbers of images are now available from PACS, but the challenge lies in labeling the data for AI training [28-38]. Image labeling is time-consuming and labour-intensive, and the process must also be very robust [29-38]. Rare conditions pose another problem: it is difficult to find enough images to train the algorithm to identify them on its own in future [29-38]. Random variations in images may sometimes be interpreted by the program as pathological lesions [29-38]. If the training data come from a particular ethnic group, age group or sex, the algorithm may give different results when applied to data from other groups of people [15].
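One widely used partial remedy for rare conditions is to weight each class inversely to its frequency in the training loss, so that a handful of rare examples are not swamped by common ones. The sketch below is an illustration, not a method from the cited sources, and computes such weights for an invented label distribution. Reweighting cannot create information that the data lack, so it does not remove the need for enough representative examples.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by n_samples / (n_classes * n_in_class), so every class
    contributes the same total weight to the training loss."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}


# Invented label distribution: one rare lesion among 100 training images
labels = ["normal"] * 90 + ["benign"] * 9 + ["rare_lesion"] * 1
weights = inverse_frequency_weights(labels)
for cls, w in weights.items():
    print(f"{cls}: weight {w:.2f}")
# normal: weight 0.37
# benign: weight 3.70
# rare_lesion: weight 33.33
```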

Medicolegal Issues

The question is: if an AI system makes an autonomous decision and makes a mistake, who is responsible: the radiologist, the machine or the builder of the device [38-43]? Physicians have always taken responsibility for the medical decisions made for their patients. If something goes wrong, programmers may not accept responsibility, given that the machines are continuously learning in ways not known to the developers. Recommendations provided by the tool may therefore need to be ratified by a radiologist, who may agree or disagree with the software [38-43].

Is it the end for breast radiologists?

Geoffrey Hinton, a cognitive psychologist and computer scientist from Canada, was quoted as saying, "if you work as a radiologist, you're like the coyote that's already over the edge of the cliff, but hasn't yet looked down so doesn't realize there's no ground underneath him. People should stop training radiologists now. It's just self-evident that within five years, deep learning is going to do better than radiologists; we've got plenty of radiologists already" [29]. In terms of image analysis and computing, AI does have the potential to be more efficient than radiologists [29-45]. Radiologists cannot process millions of mammograms in any reasonable time, and this has led to suggestions that radiologists will be displaced by AI [29-45]. In recent years, algorithms have performed comparably to, or even better than, humans in various radiological tasks, especially in breast imaging, which is the main focus of this article [29-45]. Because of this success of DL in breast imaging, people have started discussing the possibility of automating image interpretation and making radiologists obsolete. These expectations are far-fetched, because AI systems have the limitations discussed above. It is also important to recognise that AI is good at solving highly specific, isolated problems [15-29]. In contrast, humans can understand different concepts, reason, and integrate vast amounts of information from various sources to reach an overall decision [29-45]. It is difficult to predict the future of AI in radiology. Still, many authors believe that AI will become part and parcel of radiologists' daily work, making them more efficient [29-45]. AI will be valuable in performing routine tasks, allowing radiologists to concentrate on more valuable work [38-45]. Radiologists will spend more time in discussions with clinicians and in multidisciplinary team meetings, contributing to the long-term care of patients.
The time freed up will also enable them to communicate abnormal results in person or by telephone, and to take on quality assurance, education, policy formulation and interventional procedures [29-42]. In general, when automation arrives, jobs are not lost; instead, humans move on to tasks that need a human touch [43].

Future roles of a radiologist

AI may be the biggest development to come into radiology since Roentgen. Radiologists have a vital role to play in its development, for the good of both AI and radiologists [29-44]. They are an essential piece of the puzzle for several challenges AI now faces, such as the production of training images [29-47]: many labelled images provided by experienced radiologists are needed, and creating these datasets is difficult and time-consuming [29-45]. However, necessary as it is, image labeling is not the radiologist's only role. Radiologists should help direct programmers to the areas where AI methods are most needed, because they are the end-users and know where they need help [40-47]. Partnership with AI developers in building applications for breast radiology also needs to increase [46]. These developers should become part and parcel of radiology departments, creating a "multidisciplinary AI team" that ensures patient safety standards are adequate; in this way, radiologists can take responsibility for legal liability [45,46]. Rigorous evaluation criteria are also needed for applications before they are licensed for use, and radiologists should take on this responsibility [29-47].
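Any evaluation criteria for licensing would build on standard diagnostic accuracy measures. As a minimal sketch, the function below computes sensitivity and specificity from binary ground-truth labels and algorithm outputs; the ten example cases are invented for illustration. A rigorous evaluation would also report these measures on independent data and across patient subgroups, in line with the training-data concerns discussed above.

```python
def sensitivity_specificity(y_true, y_pred):
    """Binary labels: 1 = cancer present (ground truth) or flagged (prediction)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    sensitivity = tp / (tp + fn)  # share of cancers that were flagged
    specificity = tn / (tn + fp)  # share of normal cases correctly left unflagged
    return sensitivity, specificity


# Invented example: 4 cancers and 6 normal cases
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
sens, spec = sensitivity_specificity(y_true, y_pred)
print(f"sensitivity {sens:.2f}, specificity {spec:.2f}")  # sensitivity 0.75, specificity 0.83
```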

Conclusions

Indeed, AI will change radiology, and it is not too early to incorporate it into your workplace. Although many grey areas still need clarification, our opinion is that AI will not replace radiologists. Still, those who incorporate AI into their daily work will probably be better off than those who do not. Every radiologist must prepare for a future of working more closely with machines. We predict that over the next 10 years, radiologists will continue to make strides and draw many benefits from increasingly sophisticated AI systems. Anyone interested in breast radiology, from medical students to practising radiologists, can anticipate a more satisfying and rewarding career, especially with programming skills. AI and data science must be part of the curriculum for medical students and radiology trainees. Finally, our patients, not AI, should be central to every decision that we take as professionals.

The content published in Cureus is the result of clinical experience and/or research by independent individuals or organizations. Cureus is not responsible for the scientific accuracy or reliability of data or conclusions published herein. All content published within Cureus is intended only for educational, research and reference purposes. Additionally, articles published within Cureus should not be deemed a suitable substitute for the advice of a qualified health care professional. Do not disregard or avoid professional medical advice due to content published within Cureus.

Footnotes

The authors have declared that no competing interests exist.

References

- 1. Breast cancer screening. 2018. https://www.nhs.uk/conditions/breast-cancer-screening/ (accessed February 2020).

- 2. Breast cancer statistics. 2018. https://www.cancerresearchuk.org/health-professional/cancer-statistics/statistics-by-cancer-type/breast-cancer (accessed February 2020).

- 3. Cancer registration statistics, England: first release, 2018. https://www.gov.uk/government/publications/cancer-registration-statistics-england-2018/cancer-registration-statistics-england-first-release-2018 (accessed February 2020).

- 4. Computer-aided detection of breast cancer on mammograms: a swarm intelligence optimized wavelet neural network approach. Dheeba J, Singh AN, Selvi TS. J Biomed Inform. 2014;49:45–52. doi: 10.1016/j.jbi.2014.01.010.

- 5. Quantifying the benefits and harms of screening mammography. Welch HG, Passow HJ. JAMA Intern Med. 2014;174:448–454. doi: 10.1001/jamainternmed.2013.13635.

- 6. Improved cancer detection using artificial intelligence: a retrospective evaluation of missed cancers on mammography. Watanabe AT, Lim V, Vu HX, et al. J Digit Imaging. 2019;32:625–637. doi: 10.1007/s10278-019-00192-5.

- 7. Addition of tomosynthesis to conventional digital mammography: effect on image interpretation time of screening. Dang PA, Freer PE, Humphrey KL, Halpern EF, Rafferty EA. Radiology. 2014;270:49–56. doi: 10.1148/radiol.13130765.

- 8. A survey of computer-aided detection of breast cancer with mammography. Li Y, Chen H, Cao L, Ma J. J Health Med Inform. 2016;7:238.

- 9. Diagnostic accuracy of digital screening mammography with and without computer-aided detection. Lehman CD, Wellman RD, Buist DSM, Kerlikowske K, Tosteson ANA, Miglioretti DL. JAMA Intern Med. 2015;175:1828–1837. doi: 10.1001/jamainternmed.2015.5231.

- 10. Deep learning in medical imaging: general overview. Lee JG, Jun S, Cho YW, Lee H, Kim GB, Seo JB, Kim N. Korean J Radiol. 2017;18:570–584. doi: 10.3348/kjr.2017.18.4.570.

- 11. Artificial intelligence and radiology: have rumors of the radiologist's demise been greatly exaggerated? Nawrocki T, Maldjian PD, Slasky SE, Contractor SG. Acad Radiol. 2018;25:967–972.

- 12. The coding of roentgen images for computer analysis as applied to lung cancer. Lodwick GS, Keats TE, Dorst JP. Radiology. 1963;81:185–200. doi: 10.1148/81.2.185.

- 13. What’s the difference between artificial intelligence, machine learning, and deep learning? Copeland M. 2016. https://blogs.nvidia.com/blog/2016/07/29/whats-difference-artificial-intelligence-machine-learning-deep-learning-ai/ (accessed March 2020).

- 14. Implementing machine learning in radiology practice and research. Kohli M, Prevedello LM, Filice RW, Geis JR. AJR Am J Roentgenol. 2017;208:754–760. doi: 10.2214/AJR.16.17224.

- 15. Current applications and future impact of machine learning in radiology. Choy G, Khalilzadeh O, Michalski M, et al. Radiology. 2018;288:318–328. doi: 10.1148/radiol.2018171820.

- 16. Artificial neural networks: opening the black box. Dayhoff JE, DeLeo JM. Cancer. 2001;91:1615–1635. https://www.ncbi.nlm.nih.gov/pubmed/11309760. doi: 10.1002/1097-0142(20010415)91:8+<1615::aid-cncr1175>3.0.co;2-l.

- 17. News feature: what are the limits of deep learning? Waldrop MM. Proc Natl Acad Sci U S A. 2019;116:1074–1077. doi: 10.1073/pnas.1821594116.

- 18. Detecting and classifying lesions in mammograms with deep learning. Ribli D, Horváth A, Unger Z, Pollner P, Csabai I. Sci Rep. 2018;8:4165. doi: 10.1038/s41598-018-22437-z.

- 19. Your future doctor may not be human. This is the rise of AI in medicine. Norman A. 2018. https://futurism.com/ai-medicine-doctor (accessed March 2020).

- 20. Computer-aided diagnosis with deep learning architecture: applications to breast lesions in US images and pulmonary nodules in CT scans. Cheng J, Ni D, Chou Y, et al. Sci Rep. 2016;6:24454. doi: 10.1038/srep24454.

- 21. Discrimination of breast cancer with microcalcifications on mammography by deep learning. Wang J, Yang X, Cai H, Tan W, Jin C, Li L. Sci Rep. 2016;6:27327. doi: 10.1038/srep27327.

- 22. Artificial intelligence in digital breast pathology: techniques and applications. Ibrahim A, Gamble P, Jaroensri R, Abdelsamea MM, Mermel CH, Chen CP, Rakha EA. Breast. 2020;49:267–273. doi: 10.1016/j.breast.2019.12.007.

- 23. Breast cancer detection using deep convolutional neural networks and support vector machines. Ragab DA, Sharkas M, Marshall S, Ren J. PeerJ. 2019;7:e6201. doi: 10.7717/peerj.6201.

- 24. Automatic mass detection in mammograms using deep convolutional neural networks. Agarwal R, Diaz O, Lladó X, Yap MH, Martí R. J Med Imaging (Bellingham). 2019;6:031409. doi: 10.1117/1.JMI.6.3.031409.

- 25. Unsupervised deep learning applied to breast density segmentation and mammographic risk scoring. Kallenberg M, Petersen K, Nielsen M, et al. IEEE Trans Med Imaging. 2016;35:1322–1331. doi: 10.1109/TMI.2016.2532122.

- 26. The future of radiology augmented with artificial intelligence: a strategy for success. Liew C. Eur J Radiol. 2018;102:152–156. doi: 10.1016/j.ejrad.2018.03.019.

- 27. Automatic detection of abnormal tissue in bilateral mammograms using neural networks. Christoyianni I, Constantinou E, Dermatas E. In: Methods and Applications of Artificial Intelligence. SETN 2004. Lecture Notes in Computer Science, Vol. 3025. Berlin, Heidelberg: Springer; 2004:265–275.

- 28. Automatic breast density classification using a convolutional neural network architecture search procedure. Fonseca P, Mendoza J, Wainer J, Ferrer J, Pinto J, Guerrero J, Castaneda B. J Med Imaging. 2015;9414:941428.

- 29. What the radiologist should know about artificial intelligence - an ESR white paper. Neri E, de Souza N, Brady A, Bayarri AA, Becker CD, Coppola F, Visser J. Insights Imaging. 2019;10:44. doi: 10.1186/s13244-019-0738-2.

- 30. Radiomics: images are more than pictures, they are data. Gillies RJ, Kinahan PE, Hricak H. Radiology. 2016;278:563–577. doi: 10.1148/radiol.2015151169.

- 31. Content analysis of reporting templates and free-text radiology reports. Hong Y, Kahn CE Jr. J Digit Imaging. 2013;26:843–849. doi: 10.1007/s10278-013-9597-4.

- 32. Collaboration, campaigns and champions for appropriate imaging: feedback from the Zagreb workshop. Remedios D, Brkljacic B, Ebdon-Jackson S, Hierath M, Sinitsyn V, Vassileva J. Insights Imaging. 2018;9:211–214. doi: 10.1007/s13244-018-0602-9.

- 33. Imaging study protocol selection in the electronic medical record. Sachs PB, Gassert G, Cain M, Rubinstein D, Davey M, Decoteau D. J Am Coll Radiol. 2013;10:220–222. doi: 10.1016/j.jacr.2012.11.004.

- 34. Stand-alone artificial intelligence - the future of breast cancer screening? Sechopoulos I, Mann RM. Breast. 2020;49:254–260. doi: 10.1016/j.breast.2019.12.014.

- 35. Will doctors fear being replaced by AI in the hospital settling? Sennaar K. 2018. https://emerj.com/ai-sector-overviews/will-doctors-fear-being-replaced-by-ai-in-the-hospital-settling/ (accessed March 2020).

- 36. Artificial intelligence is the stethoscope of the 21st century. Meskó B. 2017. https://medicalfuturist.com/ibm-watson-is-the-stethoscope-of-the-21st-century/ (accessed March 2020).

- 37. The impact of artificial intelligence in medicine on the future role of the physician. Ahuja AS. PeerJ. 2019;7:e7702. doi: 10.7717/peerj.7702.

- 38. Artificial intelligence in radiology. Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts HJWL. Nat Rev Cancer. 2018;18:500–510. doi: 10.1038/s41568-018-0016-5.

- 39. Artificial intelligence as a medical device in radiology: ethical and regulatory issues in Europe and the United States. Pesapane F, Volonté C, Codari M, Sardanelli F. Insights Imaging. 2018;9:745–753. doi: 10.1007/s13244-018-0645-y.

- 40. Artificial intelligence (AI) systems for interpreting complex medical datasets. Altman RB. Clin Pharmacol Ther. 2017;101:585–586. doi: 10.1002/cpt.650.

- 41. Artificial intelligence: threat or boon to radiologists? Recht M, Bryan RN. J Am Coll Radiol. 2017;14:1476–1480. doi: 10.1016/j.jacr.2017.07.007.

- 42. Fears of an AI pioneer. Russell S, Bohannon J. Science. 2015;349:252. doi: 10.1126/science.349.6245.252.

- 43. Adapting to artificial intelligence: radiologists and pathologists as information specialists. Jha S, Topol EJ. JAMA. 2016;316:2353–2354. doi: 10.1001/jama.2016.17438.

- 44. Deep learning for health informatics. Ravi D, Wong C, Deligianni F, Berthelot M, Andreu-Perez J, Lo B, Yang G. IEEE J Biomed Health Inform. 2017;21:4–21. doi: 10.1109/JBHI.2016.2636665.

- 45. Deep learning: a primer for radiologists. Chartrand G, Cheng PM, Vorontsov E, et al. Radiographics. 2017;37:2113–2131. doi: 10.1148/rg.2017170077.

- 46. Trends in radiology and experimental research. Sardanelli F. Eur Radiol Exp. 2017;1:1. doi: 10.1186/s41747-017-0006-5.

- 47. Radiomics: the bridge between medical imaging and personalized medicine. Lambin P, Leijenaar RTH, Deist TM, et al. Nat Rev Clin Oncol. 2017;14:749–762. doi: 10.1038/nrclinonc.2017.141.