Abstract

The current study was aimed at evaluating the effects of age on the contributions of head and eye movements to scanning behavior at intersections. When approaching intersections, a wide area has to be scanned requiring large lateral head rotations as well as eye movements. Prior research suggests older drivers scan less extensively. However, due to the wide-ranging differences in methodologies and measures used in prior research, the extent to which age-related changes in eye or head movements contribute to these deficits is unclear. Eleven older (mean 67 years) and 18 younger (mean 27 years) current drivers drove in a simulator while their head and eye movements were tracked. Scans, analyzed for 15 four-way intersections in city drives, were split into two categories: eye-only (consisting only of eye movements) and head+eye (containing both head and eye movements). Older drivers made smaller head+eye scans than younger drivers (46.6° vs. 53°), as well as smaller eye-only scans (9.2° vs. 10.1°), resulting in overall smaller all-gaze scans. For head+eye scans, older drivers had both a smaller head and a smaller eye movement component. Older drivers made more eye-only scans than younger drivers (7 vs. 6) but fewer head+eye scans (2.1 vs. 2.7). This resulted in no age effects when considering all-gaze scans. Our results clarify the contributions of eye and head movements to age-related deficits in scanning at intersections, highlight the importance of analyzing both eye and head movements, and suggest the need for older driver training programs that emphasize the importance of making large scans before entering intersections.

Keywords: Aging, Driving, Scanning, Head and Eye Movements, Driving Simulation

1. Introduction

Before entering an intersection drivers must visually scan a wide field of view. For example, stop controlled T-intersections typically have a clear sight triangle subtending about 170° of visual angle (AASHTO 2011). This means that drivers need to scan approximately 85° to their left and right in order to fully inspect the intersection before pulling out. Humans have a maximal oculomotor extent of around 55° (Guitton 1992, Haggerty et al. 2005); however, eye movements of such magnitudes are extremely infrequent as they are uncomfortable and most naturally occurring eye saccades rarely exceed 15° (Bahill et al. 1975). Scans of about 85° require large lateral head rotations as well as eye saccades. Therefore in order to fully quantify scanning behavior at intersections it is important to track a driver’s head-in-world and eye-in-head movements as well as their gaze-in-world, the combination of the head-in-world and eye-in-head movements (referred to as gaze, head and eye, respectively, throughout the rest of the paper). The goal of the current study was to evaluate the effects of age and following a Lead Car on the relative contributions of eye and head movements to scanning at intersections.

Although prior studies reported that older drivers scan less extensively at intersections than younger drivers (Bao and Boyle 2009, Romoser and Fisher 2009, Romoser et al., 2013, Bowers et al. 2019), several questions remain unanswered. Firstly, it is unclear whether age-related deficits in scanning occur primarily in the head movement component of scans, or in the eye movement component. Secondly, older drivers might attempt to compensate for deficits in head movements by using increased eye movements but this has not been systematically investigated. Thirdly, following a Lead Car might have contributed to a lack of scanning or exacerbated age-related deficits in scanning at intersections in some driving simulator studies (e.g., Romoser et al., 2013). Following a Lead Car requires the driver to remain vigilant of the Lead Car’s distance, speed, and brake lights, which may cause the driver to spend more time looking straight ahead and reduce the number of scans on approach to intersections. Addressing each of these questions is relevant to providing a better understanding of the scanning deficits of older drivers as well as to the design of training programs aimed at improving scanning.

The methods and metrics with which scanning behavior at intersections has previously been quantified vary greatly across studies, making it difficult to determine the relative contributions of eye and head movements to scanning deficits of older drivers. Some studies tracked only head movements (Bao and Boyle 2009, Bowers et al. 2014, Bowers et al. 2019), some tracked both eye and head movements and reported them separately (Rahimi et al. 1990) or combined (Kito et al. 1989), while others tracked only the point of gaze within the scene (Romoser et al. 2013). Studies that tracked only head movements would have failed to detect scans where the driver moved their eyes without moving their head (eye-only scans). For scans where subjects moved both their head and eyes (head+eye scans), the full extent of the gaze movement would not have been measured. Thus, it is likely that both the amount and the magnitude of scanning at intersections would have been underestimated. By comparison, in studies that tracked the point of gaze in the virtual world, but did not track head position separately, it is unknown whether reported age effects were primarily a result of age-related differences in head or eye movement behaviors. Therefore, the aim of the current study was to investigate the effects of age on the relative contributions of eye and head movements to gaze scanning behavior. We focus on scans made before entering the intersection, sometimes called primary scans, as distinct from scans made after entering the intersection, sometimes called secondary scans (Romoser and Fisher, 2009).

We hypothesize that age effects will be more apparent in the head than the eye movement components of scanning because older drivers may have age-related reductions in neck flexibility, which could reduce maximum head rotation extent (Isler et al. 1997, Dukic and Broberg 2012, Chen et al. 2015). In support of this, Bowers et al. (2019) previously found that older drivers made fewer head scans and had smaller maximum head scan magnitudes close to the intersection than middle-aged drivers, and Bao and Boyle (2009) reported that older drivers head scanned over a narrower area than middle-aged drivers. One way to compensate for a reduction in head scan magnitudes and numbers might be to make more and larger eye-only movements and fewer head+eye scans. However, older subjects were reported to make smaller eye saccades than younger subjects in a real-world walking task (Dowiasch et al. 2015). In order to better understand age-related differences in gaze scanning behavior at intersections, in the current study scans were classified into two categories: scans which were predominately eye-only (eye-only), and scans which had a substantial head component as well as an eye component (head+eye; see Table 2). We analyzed both the number and the horizontal size of eye-only, head+eye and all-gaze (combined eye-only and head+eye) scans. Our main hypotheses were:

Table 2.

Classification of gaze scans based on the size of the head movement component and the subject’s distance to the intersection.

| Distance to Intersection [m] | Size of Head movement [°] | Classification of Scan |
|---|---|---|
| 100 – 50 | ≥ 4 | head+eye |
| 100 – 50 | < 4 | eye-only |
| 50 – 20 | ≥ 6 | head+eye |
| 50 – 20 | < 6 | eye-only |
| 20 – 0 | ≥ 10 | head+eye |
| 20 – 0 | < 10 | eye-only |

Older subjects would have a smaller head movement component and a smaller eye movement component to head+eye scans than younger subjects;

Older subjects would make fewer head+eye scans than younger subjects but might make more eye-only scans in an attempt to compensate;

Following a Lead Car would reduce the numbers of scans (eye-only and head+eye), compared to driving with very simple GPS navigation instructions, but would not affect scan magnitudes. Simple auditory GPS instructions were used as the comparison for the Lead Car condition because they had been used to guide participants in prior simulator studies investigating scanning at intersections (Bowers et al. 2014, Bowers et al. 2019), and auditory navigation systems are used by both older and younger drivers in real-world driving (Jenness et al. 2008).

2. Methods

2.1. Design

This study implemented a 2 (Age: younger vs. older, between subjects) × 2 (Guidance type: GPS vs. Lead Car, within subjects) mixed design. The dependent variables were the numbers and horizontal magnitudes of scans (see Section 2.5, Quantifying scanning at intersections).

2.2. Subjects

We recruited a total of 51 subjects in two age groups: 28 older (11 female and 17 male; 61–81 years) and 23 younger (10 female and 13 male; 22–41 years) with visual acuity that met the requirements for a driver’s license in MA (at least 20/40 corrected or uncorrected). The age ranges were selected to provide good separation between the older and younger groups, and were based on age ranges used in prior studies investigating the effects of age on driving performance (Wood et al. 2009, Bao and Boyle 2009). Subjects were required to be current drivers (have a valid driving license and drive on at least one day per week), have at least two years of driving experience and have no adverse ocular history (self-reported) of eye disease that might affect visual acuity or visual fields.

Sixteen subjects did not complete the study either because of simulator discomfort (N= 10; 2 younger and 8 older) or because of eye tracking software issues (N= 6; 2 younger and 4 older) and were excluded. The remaining subjects completed the study; however, 6 subjects (1 younger and 5 older) were discarded prior to running our analyses due to excessive noise in their gaze data. Therefore a total of 11 older and 18 younger subjects (Table 1) were included in analyses. Both groups had good visual acuity and contrast sensitivity (Table 1) and there was no statistical difference in the number of miles driven per year between older and younger subjects (W= 76.5; p= .12). The study followed the tenets of the Declaration of Helsinki and was approved by the institutional review board at the Schepens Eye Research Institute. Informed consent was obtained from each subject prior to data collection.

Table 1.

Characteristics of study subjects

| Factor | Older (N=11) | Younger (N=18) |
|---|---|---|
| Age [years], mean (SD) | 67.5 (6.7) | 26.5 (5.9) |
| Male, N (%) | 7 (64) | 9 (50) |
| Visual acuity [logMAR¹], mean (SD) | 0.00 (0.07) | −0.07 (0.05) |
| Visual acuity, Snellen equivalent | 20/20 | 20/17 |
| Contrast sensitivity [log], mean (SD) | 1.70 (0.09) | 1.78 (0.07) |
| Annual mileage [miles], median | 2860 | 1170 |
| Driving experience [years], median | 49 | 6 |

¹ logMAR: Logarithm of the minimum angle of resolution

2.3. Materials

2.3.1. Driving simulator

The driving simulator (LE-1500, FAAC Corp, Ann Arbor, MI) consisted of five 42-inch LCD monitors yielding approximately 225° horizontal field of view. The central screen (~64° horizontally) provided the view through the windshield, while the screens to the left and right of the central screen provided the view through the side windows. Rear- and side-view mirrors were inset on the LCD monitors simulating their real positions in a car. The dashboard was displayed at the bottom of the central monitor and contained the speedometer and a clock. The controls and dashboard resembled those of a Ford Crown-Victoria with a fully automatic transmission, and the seat was mounted on a motion base with 3 degrees of freedom (Figure 1). Driving simulator data, including the position of the subject’s vehicle, its speed and heading, as well as information about other scripted vehicles, were collected at 30 Hz.

Figure 1.

FAAC® driving simulator with SmartEye® 6-camera head and eye tracking system (yellow circles).

Head and eye movement data were recorded using a remote, digital 6-camera tracking system at 60 Hz (SmartEye Pro Version 6.1, Goteborg, Sweden, 2015 - Figure 1), which allowed for natural eye and head movements. The system tracked head and eye movements across approximately 180° (90° to the left and right), enabling us to capture the large scans that drivers make before entering an intersection. Data from the head and eye tracker and driving simulator were synchronized into a single text file using custom software in MATLAB®.

2.3.2. Driving scenarios

All driving scenarios were scripted with Scenario Toolbox software (version 3.9.4. 25873, FAAC Incorporated). The scenarios were set in a light industrial virtual world with the roads primarily laid out on a grid system. The world included high rise office buildings, business parks, small shopping precincts, and residential houses such as bungalows and town houses. In the current study, subjects drove along one route with 42 intersections, which took about 12 to 15 minutes to complete (depending on the subject’s driving speed). Data were analyzed for 15 intersections along the route which were all four-way intersections with cross streets perpendicular to the street along which the subject’s car was approaching the intersection. The remaining 27 intersections were not included in analyses either because there was a motorcycle hazard at the intersection (data will be reported in a future paper), the intersection configuration was asymmetric (incoming road on only one side), or the cross street was not perpendicular.

As summarized in Table A1 (appendix), the 15 intersections for which data were analyzed included a variety of maneuvers (turn left, turn right, go straight), signage (stop, yield, traffic lights, no signage) and non-hazardous cross traffic. Since the presence of other traffic may affect scanning behaviors at intersections (Rahimi et al. 1990, Keskinen et al. 1998), cross traffic was programmed to behave in the same manner for all subjects. Cross traffic was triggered to start moving when the subject’s vehicle was a predetermined distance from the intersection, and the number of vehicles on the cross street was the same for every subject (see Table A1). If the cross-traffic vehicle was scripted to cross the intersection, then it did so well before the subject’s vehicle reached the intersection (to avoid unnecessary crashes). Otherwise, if the cross-traffic was scripted to stop at a traffic light or stop sign, it did not move again until the participant drove past the intersection. There were many buildings along the route such that the view of the cross street was obscured on either one or both sides at 11 of the 15 intersections (see Table A1).

2.4. Procedure

The driving simulator session began with two acclimation drives. The first took place on a rural highway without other vehicles. This allowed subjects to get used to the controls and handling of the simulated vehicle and the simulated environment. The second acclimation drive took place in the same simulated city as the experimental drives and allowed subjects to practice controlling the vehicle within the city, making 90° turns and stopping at the appropriate signage. For these two acclimation drives, subjects were given as much time as they needed in order to become comfortable maneuvering the simulated vehicle. After the acclimation drives, we adjusted the six cameras of the SmartEye tracking system and calibrated the subject’s head position. Next, subjects completed a practice drive which included all the elements of the experimental drives. During the practice drive the SmartEye system automatically built a profile of the subject and tracked their facial features. Eye position was calibrated after the final practice drive by means of a five-point calibration procedure on the center screen.

After calibration, subjects completed two experimental drives. Eye and head data were collected and analyzed for these two experimental drives. Subjects drove the same route twice in counterbalanced order, once with GPS instructions and once while following a Lead Car (Figure 2). Between the two experimental drives, subjects drove an unrelated scenario in a different virtual world and were offered an opportunity to take a break. If the subject stepped out of the simulator for a break, eye position was re-calibrated before the second experimental drive.

Figure 2.

Examples of the scene on the center screen for each of the guidance methods within the light industrial city, taken from the point of view of the subject’s vehicle. The left panel shows the view when driving in the GPS condition. The right panel shows the view when following a Lead Car in the Lead Car condition.

In the GPS drive, simple pre-recorded auditory navigation instructions were used to guide the subject along the route. Navigation instructions were only given when the participant needed to make a turn (“Turn left/right at next intersection”), with the instruction delivered when the subject’s vehicle was approximately 70 meters from the intersection. In the Lead Car drive, subjects followed a car that was scripted to drive at 35 mph. The Lead Car made periodic stops to ensure that subjects did not lose sight of it and would have to monitor the Lead Car’s speed and braking behavior. Subjects were instructed to drive as they normally would and follow all normal traffic rules. They were not, however, given any specific instructions about how to scan. In the Lead Car drive they were instructed to follow the Lead Car at a safe distance, as they would when following a friend’s car in an unfamiliar city.

2.5. Quantifying scanning at intersections

In order to quantify the breadth of scanning when approaching an intersection, our analysis focused on lateral scanning (yaw movements). Vertical head and eye movements are needed when checking the rear view mirror and speedometer, but contribute little when scanning a wide horizontal field of view at intersections. We therefore chose to focus only on lateral scanning.

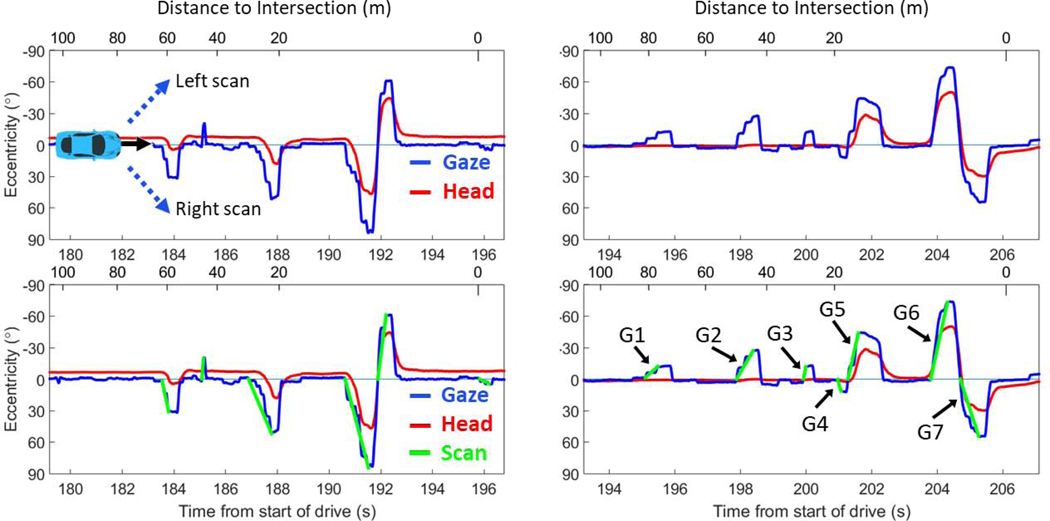

On approaching an intersection, subjects made multiple lateral gaze movements that took the gaze point away from the travel direction toward the left or right side with a subsequent movement that brought gaze back to the travel direction (Figure 3). These gaze movements could be composed of a single saccadic eye movement or a series of sequential saccadic movements headed in the same direction (i.e. towards the left or right) either with or without an associated lateral head rotation (Figure 3). A gaze scan was thus defined as the whole series of lateral eye and head movements that took gaze away from the travel direction (0°) to the maximum eccentricity on the left or right (Figure 3 bottom row) so that the full lateral extent of the gaze scan was quantified. In analyses, only gaze scans larger than 5° (four times the size of the SmartEye manufacturer’s accuracy under ideal conditions) headed away from the subject’s direction of travel were used.

Figure 3.

Examples of lateral gaze (blue) and head (red) movements on approach to an intersection. The top left figure serves as a reference, with the car’s travel path being left to right (i.e. increasing in time). Movements towards the left have a negative eccentricity and movements towards the right have a positive eccentricity. The top row provides two examples of the head and gaze data; each plot is data for one subject at one intersection, selected to demonstrate representative scanning behaviors. The bottom row shows the same data with the gaze scans (green) detected using the gaze scan algorithm superimposed. In the plot on bottom right, gaze scan G1 is an eye-only scan comprising 2 saccades, G2 is eye-only with 3 saccades, G3 and G4 are eye-only with one saccade, G5, G6 and G7 are head+eye scans each comprising a large lateral head rotation with 2 or more saccades.

A custom algorithm (the ‘gaze scan algorithm’; Swan et al., in press) was used to automatically detect and quantify the magnitude of gaze scans from 100 – 0 m before the intersection (Bowers et al., 2014). In brief, the algorithm first detected saccades and then merged sequential saccades into gaze scans. Saccades were defined as movements with a velocity greater than 30 °/s (Hamel et al. 2013, Bahnemann et al. 2015), a magnitude greater than 1° and a duration longer than 30 ms (Beintema et al. 2005). In order to be merged, the saccades had to be headed in the same direction, on the same side of the travel direction, and close in time (separated by no more than approximately 400 ms). Full details of the algorithm are given elsewhere (Swan et al., in press). Each marked gaze scan had a start and end point (in eccentricity and time), which were used to link the gaze scan to the eccentricity of the head scan (see below) and to characteristics of the driver’s vehicle (e.g. speed of the car and distance of the car to the intersection).
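The detect-then-merge logic described above can be illustrated with a minimal Python sketch. It is not the published gaze scan algorithm: the sample-to-sample velocity estimate, the `Movement` class, and all function names are assumptions made for illustration, and only the thresholds (30 °/s, 1°, 30 ms, 400 ms, and the 5° minimum scan size) are taken from the text.

```python
from dataclasses import dataclass

SAMPLE_HZ = 60.0     # tracker sampling rate reported in the text
VEL_THRESH = 30.0    # deg/s, minimum saccade velocity
MIN_AMP = 1.0        # deg, minimum saccade magnitude
MIN_DUR = 0.030      # s, minimum saccade duration
MAX_GAP = 0.400      # s, maximum gap between saccades that are merged


@dataclass
class Movement:
    t_start: float    # s
    t_end: float      # s
    ecc_start: float  # deg, negative = left of travel direction
    ecc_end: float    # deg

    @property
    def direction(self) -> int:
        return 1 if self.ecc_end > self.ecc_start else -1

    @property
    def magnitude(self) -> float:
        return abs(self.ecc_end - self.ecc_start)


def detect_saccades(gaze, hz=SAMPLE_HZ):
    """Find runs of samples moving in one direction faster than VEL_THRESH."""
    dt = 1.0 / hz
    saccades, i, n = [], 0, len(gaze)
    while i < n - 1:
        vel = (gaze[i + 1] - gaze[i]) / dt
        if abs(vel) > VEL_THRESH:
            sign = 1 if vel > 0 else -1
            j = i + 1
            # extend the run while velocity stays above threshold in the same direction
            while j < n - 1 and sign * (gaze[j + 1] - gaze[j]) / dt > VEL_THRESH:
                j += 1
            m = Movement(i * dt, j * dt, gaze[i], gaze[j])
            if m.magnitude > MIN_AMP and (m.t_end - m.t_start) > MIN_DUR:
                saccades.append(m)
            i = j
        else:
            i += 1
    return saccades


def merge_into_scans(saccades):
    """Merge saccades with the same direction, same side of 0 deg, and a short gap."""
    scans = []
    for s in saccades:
        prev = scans[-1] if scans else None
        if (prev is not None
                and s.direction == prev.direction
                and prev.ecc_end * s.ecc_end > 0          # same side of travel direction
                and s.t_start - prev.t_end <= MAX_GAP):
            scans[-1] = Movement(prev.t_start, s.t_end, prev.ecc_start, s.ecc_end)
        else:
            scans.append(s)
    return scans


def outward_scans(scans, min_size=5.0):
    """Keep scans that head away from the travel direction and exceed min_size."""
    return [s for s in scans if s.direction * s.ecc_end > 0 and s.magnitude > min_size]
```

In this sketch, two leftward saccades separated by a 100 ms fixation would be merged into a single gaze scan, whereas the return movement toward 0° would be dropped by `outward_scans`.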

Head scans were defined with respect to gaze scans, which meant every gaze scan had a corresponding head scan component. The start of the head movement was set to coincide with the start of the gaze scan. The end of the head movement was defined as the local maximum of head eccentricity around the end of the marked gaze scan. Head magnitude was the absolute difference between the start and end eccentricities for the head movement, and represented the head component of the gaze scan. The eye movement component of each gaze scan was computed as the difference between the end eccentricity of the gaze scan and the end eccentricity of the corresponding head scan (Figure 4).
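As a hedged illustration of how the head and eye movement components defined above might be computed, the Python sketch below takes a head eccentricity trace and the sample indices of a marked gaze scan. The function name, the argument layout, and in particular the search window (`window`, in samples) used to find the local head maximum "around" the gaze scan end are assumptions; the text does not specify the window size.

```python
def head_eye_components(head, start_idx, end_idx, gaze_end_ecc, window=12):
    """Return (head_magnitude, eye_magnitude) in degrees for one gaze scan.

    head         : list of head eccentricities (deg), sampled like the gaze trace
    start_idx    : sample index at which the gaze scan starts
    end_idx      : sample index at which the gaze scan ends
    gaze_end_ecc : gaze eccentricity (deg) at the end of the scan
    window       : hypothetical search half-width (samples) around end_idx
    """
    lo = max(start_idx, end_idx - window)
    hi = min(len(head), end_idx + window + 1)
    # Leftward scans have negative eccentricity, so search for the extreme
    # head position in the direction of the scan.
    direction = 1 if gaze_end_ecc >= 0 else -1
    head_end = max(head[lo:hi], key=lambda h: direction * h)
    head_mag = abs(head_end - head[start_idx])   # head component
    eye_mag = abs(gaze_end_ecc - head_end)       # eye component
    return head_mag, eye_mag
```

For example, a leftward scan ending at a gaze eccentricity of −50° with the head peaking at −32° would yield a 32° head component (from a 0° start) and an 18° eye component.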

Figure 4.

Example of lateral gaze (blue line) and head (red line) movements for one subject on approach to an intersection with the head and eye movement components marked for one of the large head+eye scans. The vertical green dashed line demarks the start of a large leftward gaze scan, the horizontal red dashed line demarks the maximum eccentricity of the head and the horizontal blue dashed line demarks the maximum eccentricity of gaze. The plot is data for one subject at one intersection, selected to demonstrate representative large gaze scans with eye and head movement components.

Gaze scans were classified into two major categories: 1) scans which comprised predominately eye movement only (eye-only scans); and 2) scans which contained both a substantial head and eye movement component (head+eye scans). The classification was based on the magnitude of the head scan component of each gaze scan. A three-tier head magnitude threshold (4°, 6° and 10°) was used depending on the distance of the subject’s car to the intersection (Table 2). These threshold magnitudes were the same as those used to define a head scan in prior driving simulator studies (Bowers et al. 2014, Bowers et al. 2019). They were based on the minimum size of head movement required to turn the head to the center of the incoming traffic lane on the same side of the intersection as the subject was driving, given their distance to the intersection, when the cross street had two lanes in each direction. If the head movement component of the scan was equal to or greater than the minimum threshold, the scan was classified as head+eye, otherwise it was classified as eye-only (Table 2).
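The three-tier rule in Table 2 can be written compactly, as in the Python sketch below. The function names are illustrative, and the assignment of the exact boundary distances (20 m and 50 m) to a tier is an assumption, since the text does not state which tier they belong to.

```python
def head_threshold(distance_m):
    """Minimum head magnitude (deg) for a head+eye scan at a given distance."""
    if not 0 <= distance_m <= 100:
        raise ValueError("scans were analyzed from 100 m to 0 m before the intersection")
    if distance_m > 50:      # 100 - 50 m (boundary handling is an assumption)
        return 4.0
    if distance_m > 20:      # 50 - 20 m
        return 6.0
    return 10.0              # 20 - 0 m


def classify_scan(head_mag_deg, distance_m):
    """Classify a gaze scan from the size of its head movement component."""
    if head_mag_deg >= head_threshold(distance_m):
        return "head+eye"
    return "eye-only"
```

With these thresholds, a 5° head movement counts as a head+eye scan at 75 m but as an eye-only scan at 35 m, which reflects the larger head rotation needed to reach the cross street as the driver approaches the intersection.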

2.6. Statistical Analyses

Prior to conducting statistical analyses we first excluded gaze scans which were artifacts resulting from noise in the gaze data and were physically impossible (removed 2.4% of scans). We then excluded single intersections on a per-subject basis where there was excessive noise in the gaze data for that intersection (removed 5.4% of all intersections and 14.3% of scans). Ultimately 823 intersections and 7486 scans were included in analyses.
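The reported totals can be cross-checked with simple arithmetic. The short Python sketch below assumes that each of the 29 included subjects contributed all 15 analyzed intersections in both experimental drives; that assumption is ours, but under it the 5.4% exclusion rate and the 823 included intersections agree, and the per-bin scan counts in Table 3 sum to the reported 7486 scans.

```python
# Counts reported in the text
n_subjects = 11 + 18        # older + younger subjects included in analyses
n_drives = 2                # GPS and Lead Car drives
n_intersections = 15        # analyzed intersections per drive

# Assumption: every included subject contributed all 15 intersections in both drives
possible = n_subjects * n_drives * n_intersections
included = 823
excluded = possible - included
pct_excluded = 100.0 * excluded / possible

scans_by_bin = {"close": 2438, "medium": 2506, "far": 2542}  # from Table 3
total_scans = sum(scans_by_bin.values())

print(possible, excluded, round(pct_excluded, 1), total_scans)
# prints: 870 47 5.4 7486
```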

Our main analyses evaluated the effects of age (older vs. younger) and guidance type (GPS vs. Lead Car) on the numbers and magnitudes of eye-only scans, head+eye scans and all-gaze scans (eye-only and head+eye combined) and, for head+eye scans only, the magnitude of the head movement component and the eye movement component. In addition, in a second set of analyses we included distance to the intersection as a factor because scanning behaviors may change as the driver comes closer to the intersection (e.g., scans become larger; Figure 3). Thus three distance bins were created (close, medium and far; Table 3), which contained roughly equal numbers of observations and were the same as the distance bins used in the three-tier minimum head magnitude threshold for categorizing scans as eye-only or head+eye (Table 2).

Table 3.

Number of scans for each distance bin used in analyses

| Distance Bin | Range [m] | Mean [m]* | Number of scans |
|---|---|---|---|
| Close | 0–20 | 9.6 | 2438 |
| Medium | 20–50 | 35.0 | 2506 |
| Far | 50–100 | 75.5 | 2542 |

* Mean distance to the intersection at which scans within each bin were made

For the analyses of magnitudes (continuous numerical data), Linear Mixed Models (LMMs) were constructed in the R statistical programming environment (Version 3.5; R Development Core Team 2019). LMMs are particularly well suited to datasets such as those collected in this study because they can combine continuous and categorical factors within the same model and they can be used to account for differences in effects between subjects and items simultaneously (Kliegl et al. 2012). Prior to analyzing the data, numerical continuous outcome variables were checked for normality (histograms, boxplots and normal quantile-quantile plots). We opted for visual inspection rather than statistical tests of normality, such as the Shapiro-Wilk test, because our linear mixed models were complex and run on a large number of data points, a situation in which traditional tests of normality are not as useful as when assessing the distributions of means per condition (or subject) as would be done for an ANOVA or t-test (Loy et al. 2017). The data for magnitudes of eye-only scans, head+eye scans and all-gaze scans were not normally distributed. The data for these three variables were normalized with a log transform prior to entering them into our models. Next, we removed outliers for numerical continuous data by removing all values that were more than 3 standard deviations from the mean (in transformed units). When reporting the data for normalized variables, we transformed them back to their raw units for ease of understanding.
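The preprocessing steps described above (log transform, removal of values more than 3 standard deviations from the mean in transformed units, and back-transformation for reporting) can be sketched in Python as follows. The analyses themselves were run in R; this sketch only illustrates the logic, the function names are hypothetical, and the use of the natural logarithm is an assumption because the text does not state the base.

```python
import math
import statistics


def log_transform_and_trim(magnitudes_deg, n_sd=3.0):
    """Log-transform scan magnitudes and drop values beyond n_sd SDs of the mean.

    Returns the retained values in log units (natural log, an assumption).
    """
    logs = [math.log(m) for m in magnitudes_deg]
    mean = statistics.mean(logs)
    sd = statistics.stdev(logs)
    return [x for x in logs if abs(x - mean) <= n_sd * sd]


def back_transform(log_value):
    """Convert a value in log units back to degrees for reporting."""
    return math.exp(log_value)


# Illustrative (made-up) magnitudes: twenty 10 deg scans and one implausible value
example = [10.0] * 20 + [10000.0]
kept = log_transform_and_trim(example)
reported = back_transform(statistics.mean(kept))
```

Note that back-transforming a mean computed in log units gives a geometric rather than an arithmetic mean of the raw magnitudes.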

In order to assess the overall effect of age and guidance type we created a model in which we entered age (younger vs. older) and guidance type (GPS vs. Lead Car) as fixed factors. Next, in order to evaluate the effects of the subject’s distance to the intersection, we entered age, guidance type, and distance bin (close, medium, far) as fixed factors. As described in Section 2.3.2 (Driving scenarios), the view of the cross street was obstructed by buildings at some of the intersections which might have affected scanning behaviors. Therefore obstruction on the side of the scan (yes/no) was included as a fixed factor in all analyses of scan magnitudes. In addition, in all of our LMMs we entered the event number as a random factor to account for any variance contributed by the individual intersections as well as a random effects structure for subject to account for the variability contributed by individual differences.

P-values for main effects were estimated by means of the lmerTest package (Kuznetsova et al. 2017). P-values for any interactions between age, guidance type and distance bin were calculated by means of model comparisons. We compared the simplest form of each model (with all interactions removed) with the same model plus the interaction of interest. The interaction model and baseline model were then compared using an analysis of variance (ANOVA), with the resulting p-value derived from our χ2 statistic representing the significance of the interactions of interest.
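The model-comparison step can be illustrated with a small Python sketch of a likelihood-ratio test, under the assumption that the ANOVA comparison of nested mixed models is a likelihood-ratio test whose statistic is referred to a χ2 distribution with degrees of freedom equal to the number of added interaction terms. The function names are hypothetical, and closed-form tail probabilities are used only for 1 and 2 degrees of freedom, which covers the interactions tested here.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square statistic for df = 1 or 2."""
    if x < 0:
        raise ValueError("chi-square statistic must be non-negative")
    if df == 1:
        return math.erfc(math.sqrt(x / 2.0))
    if df == 2:
        return math.exp(-x / 2.0)
    raise ValueError("this sketch only covers df = 1 or 2")


def likelihood_ratio_test(loglik_baseline, loglik_interaction, df):
    """Compare a baseline model with the same model plus an interaction term."""
    stat = 2.0 * (loglik_interaction - loglik_baseline)
    return stat, chi2_sf(stat, df)


# A statistic of 8.15 on 2 degrees of freedom gives p of about .017
print(round(chi2_sf(8.15, 2), 3))
```

As a usage check, a statistic of 5.56 with 1 degree of freedom gives p ≈ .018 and a statistic of 8.15 with 2 degrees of freedom gives p ≈ .02, matching the values reported for the age by guidance type and age by distance bin interactions in the Results.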

For the analyses of scan numbers we ran a series of ANOVAs on the aggregated scan number datasets. First, a 2 (age) × 2 (guidance type) mixed design ANOVA was run to test the overall effects of age and guidance type on the number of scans. Next, in order to assess the effects of the distance of the subject’s car to the intersection, we ran a 2 (age) × 2 (guidance type) × 3 (distance bin: close, medium and far) mixed design ANOVA.

In order to assess the effects and interactions of age (a binary categorical variable) and the distance to the intersection (a three-level categorical variable) on the counts of scans classified as eye-only and head+eye, we fit a series of log-linear models to multidimensional contingency tables by iterative proportional fitting using the loglm() function from the MASS package (Venables and Ripley 2002).

3. Results

3.1. Speed on approach to intersections

Average speed on approach to an intersection did not differ between GPS (17.1 mph) and Lead Car drives (17.5 mph; p = .4). However, younger subjects approached the intersection at a higher speed, on average (18.1 mph), than older subjects (15.9 mph; p = .006).

3.2. Composition of eye-only and head+eye scans

Eye-only scans comprised, on average, 2.25 saccades per scan with no significant difference between older and younger drivers (means 2.4 and 2.09, respectively, F(1, 25)= 2.86; p= .1). Head+eye scans comprised a single lateral head rotation with, on average, 4.2 saccades per scan. Again, the number of saccades per scan did not differ between older and younger drivers (means 4.33 and 4.07, respectively, F(1, 25)= 1.9; p= .18).

3.3. Scan magnitudes

Average magnitudes for eye-only, head+eye and all-gaze scans are plotted in Figure 5. Older subjects made smaller eye-only scans, β= −.07, SE= .03; t= −2.57; p= .016, smaller head+eye scans, β= −.24, SE= .06; t= −3.5; p= .002, and smaller all-gaze scans, β= −.18, SE= .06; t= −2.93; p= .007, than younger subjects. A follow-up analysis on eye-only scans confirmed that each eye saccade within eye-only scans was smaller, on average, for older than younger subjects (5.4° and 6.4°, respectively, β = .07, SE= .03; t= 2.09; p= .04). By comparison, guidance type had no significant effects on the magnitudes of eye-only, β= .01, SE= .02; t= .07; p= .48, head+eye, β= .06, SE= .04; t= 1.54; p= .13, or all-gaze scans, β= .03, SE= .03; t= 1.05; p= .3. A significant interaction between age and guidance type was found only for eye-only scans, χ2(1, 8)= 5.56; p=.018. The difference between older and younger subjects’ eye-only scan magnitudes was slightly greater in the Lead Car than in the GPS condition.

Figure 5.

Average magnitudes for eye-only, head+eye and all-gaze scans plotted separately for GPS and Lead Car drives and split by older and younger subjects. Error bars represent the SEM.

3.3.1. Changes in scan magnitudes with distance to the intersection

Eye-only and head+eye scan magnitudes are plotted separately for older and younger subjects at each of the three distance bins in Figure 6 (left: eye-only; right: head+eye). Both eye-only and head+eye scans became larger on approach to the intersection. Eye-only scans were larger in the medium as compared to the far distance bin, β= .15, SE= .03; t= 5.58; p< .001, and larger in the close as compared to the medium distance bin, β= .24, SE= .03; t= 8.01; p< .001. Similarly, magnitudes of head+eye scans were larger in the medium than in the far distance bin, β= .7, SE= .05; t= 13.06; p< .001, and larger in the close than in the medium distance bin, β= .35, SE= .04; t= 7.87; p< .001. For eye-only scans we found no significant interaction between guidance type and distance bin, χ2(2, 11)= 1.41; p= .49, or age and distance bin, χ2(2, 11)= 2.27; p= .32. This indicated that the rate of change in the size of eye-only scans on approach to the intersection differed neither between younger and older drivers nor between GPS and Lead Car conditions. Similarly, for head+eye scans we did not find an interaction between distance bin and age, χ2(2, 11)= 4.17; p= .12, or distance bin and guidance type, χ2(2, 11)= .18; p= .91.

Figure 6.

Eye-only (left panel) and head+eye (right panel) scan magnitudes on approach to the intersection plotted separately for older and younger subjects at each of the three distance bins to the intersection. Data collapsed across GPS and Lead Car conditions. Note the different scales on the y-axes: head+eye scans were much larger than eye-only scans. Error bars represent the SEM.

All-gaze scan magnitudes for younger and older subjects are plotted for each of the three distance bins in Figure 7. All-gaze scan magnitudes were larger in the medium as compared to the far distance bin, β = .52, SE= .04; t= 12.15; p< .001, and larger in the close as compared to the medium distance bin, β = .52, SE= .04; t= 12.8; p< .001. There was a significant interaction between age and distance bin, χ2(2, 11)= 8.15; p= .02, but no interaction between guidance type and distance bin, χ2(2, 11)= 4; p= .13. The age by distance interaction arose because younger subjects’ all-gaze scan magnitudes increased at a faster rate on approach to the intersection than those of older subjects.

Figure 7.

Average all-gaze scan magnitudes on approach to the intersection plotted separately for older and younger subjects. Error bars represent the SEM.
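As a reading aid, the interaction p-values in this section can be recomputed from the reported χ2 statistics. The sketch below assumes that the first number in parentheses is the degrees of freedom of the test and that the p-value is the upper tail of a χ2 distribution, as in a likelihood-ratio comparison of nested models; neither assumption is stated in this section. The function name `chi2_sf` is hypothetical, and the closed forms it uses hold only for 1 and 2 degrees of freedom.

```python
import math


def chi2_sf(statistic, df):
    """Upper-tail probability of a chi-square statistic (closed forms for df = 1 or 2)."""
    if df == 1:
        # For df = 1 the statistic is a squared standard normal deviate.
        return math.erfc(math.sqrt(statistic / 2.0))
    if df == 2:
        # For df = 2 the chi-square distribution is exponential with mean 2.
        return math.exp(-statistic / 2.0)
    raise ValueError("this sketch only handles df = 1 or df = 2")


# (label, chi-square statistic, assumed df, p-value as reported in the text)
reported = [
    ("age x guidance type, eye-only magnitude", 5.56, 1, 0.018),
    ("age x distance bin, eye-only magnitude", 2.27, 2, 0.32),
    ("age x distance bin, head+eye magnitude", 4.17, 2, 0.12),
    ("age x distance bin, all-gaze magnitude", 8.15, 2, 0.02),
]

for label, statistic, df, p_reported in reported:
    p = chi2_sf(statistic, df)
    print(f"{label}: chi2({df}) = {statistic}, p = {p:.3f} (reported {p_reported})")
```

Because the published statistics are rounded, the recomputed values can only be expected to match the reported p-values approximately.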

3.4. Magnitudes of Head and Eye Movement Components within Head+Eye Scans

The magnitudes of the head and eye movement components for head+eye scans for younger and older subjects are plotted in Figure 8. Older subjects had a significantly smaller head movement component, β= −.11, SE= .05; t= −2.11; p= .036, and also a significantly smaller eye movement component than younger subjects, β= −3.24, SE= .78; t= −4.16; p< .001. We found no evidence that guidance type affected the size of the head movement component, β= .04, SE= .03; t= 1.2; p= .24, or the eye movement component, β= .45, SE= .34; t= 1.33; p= .19. We also found no interaction between age and guidance type for either the head, χ2(1, 8)= .005; p= .94, or the eye movement component, χ2(1, 8)= .18; p= .67. Interestingly, the age-related difference was greater for the eye component (difference = 7.15°; 18.65% of total eye component) than for the head component (difference = 4.75°; 10.62% of total head component).

Figure 8.

Stacked bar graph of average magnitudes of the head and eye movement components for older and younger subjects’ head+eye scans. The total size of the stack is the overall average head+eye scan magnitude.

3.4.1. Change in the Head and Eye Movement Components with Distance to the Intersection

The contributions of the head movement (left panel) and the eye movement (right panel) components to the magnitudes of head+eye scans for each of the three distance bins can be seen in Figure 9. The magnitude of both the head and the eye components increased on approach to the intersection. The head component was larger in the medium compared to the far distance bin, β= .56, SE= .04; t= 13.93; p< .001, and larger in the close compared to the medium distance bin, β= .41, SE= .03; t= 11.9; p< .001. Similarly, the eye component was larger in the medium compared to the far, β= 5.13, SE= .73; t= 7.03; p< .001, and also larger in the close compared to the medium distance bin, β= .41, SE= .03; t= 11.9; p< .001. We found no interactions for the head movement component between age and distance, χ2(2, 13)= 1.69; p= .43, or guidance type and distance, χ2(2, 13)= .61; p= .74. We also found no interactions for the eye-movement component between age and distance, χ2(2, 11)= .13; p= .94, or guidance type and distance, χ2(2, 11)= 2.06; p= .36.

Figure 9.

Average magnitudes of head (left panel) and eye (right panel) movement components of head+eye scans on approach to the intersection plotted for older and younger subjects. Error bars represent the SEM.

3.5. Scan Numbers

The average numbers of eye-only, head+eye and all-gaze scans per intersection are plotted for older and younger subjects as well as for Lead Car and GPS conditions in Figure 10. Older subjects made more eye-only scans, F(1, 27)= 11.96; p< .001, and fewer head+eye scans, F(1, 27)= 20.54; p< .001, than younger subjects. This resulted in no effect of age on the total number of all-gaze scans, F(1, 27)= 1.07; p= .31. Guidance type had no effect on the number of eye-only scans, F(1, 27)= .18; p= .67, the number of head+eye scans, F(1, 27)= .17; p= .68, or the total number of all-gaze scans, F(1, 27)= .32; p= .5. Furthermore, we found no interaction between age and guidance type for eye-only, F(2, 27)= .6; p= .54, head+eye, F(2, 27)= .03; p= .86, or all-gaze scans, F(2, 27)= .45; p=.51.

Figure 10.

Average number of eye-only, head+eye and all-gaze scans per intersection plotted separately for older and younger subjects, split by GPS and Lead Car conditions. Error bars represent the SEM.

3.5.1. Changes in scan numbers with distance to the intersection

Average numbers of eye-only (left panel) scans and head+eye (right panel) scans for older and younger subjects are plotted in Figure 11 for each of the three distance bins. Eye-only scans became less frequent on approach to the intersection, F(1, 27)= 6.81; p= .001, whereas head+eye scans became more frequent on approach to the intersection, F(2, 54)= 56.68; p< .001. For eye-only scans we found no interaction between distance bin and age, F(2, 54)= 1.44; p= .25, or distance bin and guidance type, F(2, 54)= .24; p= .78, indicating that the decrease of eye-only scans on approach to the intersection differed neither between older and younger subjects nor between GPS and Lead Car drives. For head+eye scans we found no significant interaction between distance bin and age, F(2, 54)= .89; p= .46, or between distance bin and guidance type, F(2, 54)= .26; p= .77 (Figure 12, right).

Figure 11.

Number of eye-only (left panel) and head+eye (right panel) scans made on approach to the intersection split by older and younger subjects. Error bars represent the SEM.

Figure 12.

Average number of all-gaze scans on approach to the intersection plotted separately for older and younger subjects (left panel) and for the Lead Car and GPS conditions (right panel). Error bars represent the SEM.

All-gaze scan numbers for each distance bin are summarized for older and younger subjects as well as Lead Car and GPS conditions in Figure 12. There was no effect of distance bin on all-gaze scan numbers because the distance bins were created to have an approximately equal number of all-gaze scans in each bin. Age did not interact with distance to the intersection, F(2, 54)= .24; p= .79. However, we did find a significant interaction between distance bin and guidance type, F(2, 54)= 4.56; p= .015, because the number of all-gaze scans increased slightly when approaching the intersection in the GPS condition whereas in the Lead Car condition the number of all-gaze scans decreased slightly on approach to the intersection.
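Distance bins that each hold roughly the same number of scans can be formed by cutting the distances at their terciles. The sketch below illustrates that general idea only; it is not the procedure used in this study, and the function name, bin labels and distance values are all hypothetical.

```python
from bisect import bisect_right
from collections import Counter
from statistics import quantiles

# Hypothetical labels, ordered from the smallest to the largest distance.
BIN_LABELS = ["close", "medium", "far"]


def assign_distance_bins(distances):
    """Label each scan by distance tercile so the bins hold roughly equal counts."""
    # Two cut points split the sorted distances into three equal-count groups.
    cuts = quantiles(distances, n=3, method="inclusive")
    # bisect_right returns 0, 1 or 2: the number of cut points at or below the distance.
    return [BIN_LABELS[bisect_right(cuts, d)] for d in distances]


# Hypothetical distances (in meters) from the intersection at which nine scans started.
scan_distances = [5, 12, 20, 33, 41, 58, 66, 79, 90]
labels = assign_distance_bins(scan_distances)
print(Counter(labels))
```

With bins built this way, the number of all-gaze scans per bin is equal by construction, which is why a main effect of distance bin on all-gaze scan numbers is not informative.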

4. Discussion

The current study was aimed at assessing the effects of age and following a Lead Car on the relative contributions of eye and head movements to gaze movements while scanning on approach to intersections. Specifically, we were interested in determining whether older subjects made overall less frequent and smaller scans and, if so, whether the reduction in the number and size of scans was due to older subjects having smaller head and/or eye contributions to their scans. We were also interested in whether following a Lead Car resulted in fewer scans, especially for older subjects.

4.1. Effect of age on scanning behavior

4.1.2. Scan Magnitudes

Overall we found that older subjects made smaller all-gaze scans (combined eye-only and head+eye scans) than younger subjects. This was a consequence of older subjects making smaller eye-only scans as well as smaller head+eye scans. For scans that contained a meaningful head movement (head+eye scans) we were especially interested in determining the effects of age on the relative contribution of both the head and the eye movement components. As predicted, older subjects had a smaller head movement component, similar to the finding of smaller maximum head scan magnitudes in the prior study by Bowers et al. (2019). These smaller head movements are consistent with older subjects having less neck rotation flexibility than younger subjects (Isler et al. 1997, Dukic and Broberg 2012, Chen et al. 2015), making it more difficult to execute very large head movements. In addition, we found that older subjects had a smaller eye movement component to their head+eye scans and did not compensate for smaller head movements with a larger eye movement component.

The finding that older subjects had a smaller eye component in head+eye scans is consistent with the finding that they also had smaller eye-only scans. As the number of eye saccades per head+eye scan and also per eye-only scan did not differ significantly between older and younger subjects, smaller eye movement components must have resulted from smaller magnitude eye saccades. This is consistent with findings from a real world walking study by Dowiasch et al. (2015) in which the authors found that older subjects had significantly smaller eye saccade amplitudes than younger subjects. In contrast, Hamel et al. (2013) reported that age did not affect the magnitude of gaze scanning when performing a hazard detection task while driving in a simulator. One reason for this difference may be that we assessed gaze movements on approach to intersections in a simulator with 225° horizontal field of view whereas Hamel et al. (2013) analyzed scanning across the whole drive (not specifically at intersections) on a single screen with a narrower, 58° horizontal field of view.

Eye-only as well as head+eye (both head and eye components) and all-gaze scans became larger in magnitude as a wider field of view had to be scanned on approach to the intersection, as previously reported for head scans (Bowers et al. 2014). There was no significant interaction between age and distance bin for eye-only scans suggesting that although older subjects made smaller eye-only scans, the rate at which eye-only scan magnitudes increased on approach to the intersection was similar for older and younger drivers. Similarly, we found no significant interaction between distance bin and age for head+eye scan magnitudes. However, all-gaze scan magnitudes of younger subjects increased slightly more rapidly on approach to the intersection than those of older subjects. Taken together, our findings suggest that older drivers scan a narrower area than younger drivers when approaching an intersection, which is consistent with previous research (Bao and Boyle 2009, Romoser and Fisher 2009, Pollatsek et al. 2012, Romoser et al. 2013).

4.1.3. Number of Scans

Overall, subjects made more eye-only scans than head+eye scans, typically about 6.7 vs 2.4 per intersection. We found that, in line with our hypothesis, older subjects made fewer head+eye scans but more eye-only scans such that we found no effect of age on the total number of all-gaze scans. Previous studies have quantified scanning at intersections by examining head (e.g., Bowers et al. 2014, Bowers et al. 2019), eye (Kosaka et al. 2005) or head and eye (Kito et al. 1989, Rahimi et al. 1990) movements. However, none of these previous studies looked at the relative contribution of both the eye and the head to overall gaze scanning behavior. Whereas some prior studies (Romoser and Fisher 2009, Pollatsek et al. 2012, Romoser et al. 2013) reported that older drivers did not scan as often as younger drivers, to date the underlying cause of the reduction in visual exploration was unclear because eye-only and head+eye scans were not considered separately. In the current study, we found that older subjects made fewer scans with a significant head component, which is consistent with previous work by Bowers et al. (2019), who reported that older drivers made fewer head movements than younger drivers. Importantly, however, we found evidence that older subjects made more eye-only scans than younger subjects. This suggests that older subjects may be trying to compensate for their lack of neck flexibility by making more eye-only as compared to head+eye scans. Thus, splitting all-gaze scans into eye-only and head+eye scans reveals a more nuanced picture of the effects of age on scanning behavior while driving.
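The way the two scan types offset each other can be made concrete with the rounded group means given in the abstract (older: 7 eye-only and 2.1 head+eye scans per intersection; younger: 6 and 2.7). The short sketch below is illustrative arithmetic only: the variable and function names are hypothetical, and because the inputs are rounded means, the totals and shares it prints are approximations rather than reported results.

```python
# Rounded per-intersection means taken from the abstract.
scan_counts = {
    "older": {"eye_only": 7.0, "head_eye": 2.1},
    "younger": {"eye_only": 6.0, "head_eye": 2.7},
}


def summarize(counts):
    """Return the approximate all-gaze total and the share of head+eye scans."""
    total = counts["eye_only"] + counts["head_eye"]
    return total, counts["head_eye"] / total


for group, counts in scan_counts.items():
    total, head_eye_share = summarize(counts)
    print(f"{group}: about {total:.1f} all-gaze scans, "
          f"{head_eye_share:.0%} of them head+eye")
```

The totals are similar across groups while the head+eye share differs, which is the pattern that remains hidden when only all-gaze scan numbers are compared.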

For eye-only scans, our analyses revealed a significant decrease in the number of scans on approach to the intersection. This decrease was independent of age in that the rate of eye-only scans dropped in equal measure in younger and older drivers on approach to the intersection. By contrast, the number of head+eye scans increased as distance to the intersection decreased. As a driver approaches an intersection, the area that needs to be scanned becomes greater, requiring larger gaze scans. This explains why we see both a reduction in the number of eye-only scans and an increase in the number of head+eye scans when coming closer to the intersection.

4.2. Effect of guidance type on scanning behavior

4.2.1. Scan Magnitudes

Consistent with our expectations, we found no main effect of guidance type on scan magnitudes (all-gaze scans, eye-only scans, head+eye scans), indicating that following a Lead Car did not significantly alter the overall extent of scanning as compared to following GPS instructions.

4.2.2. Number of Scans

We found no main effects of guidance type on scan numbers (eye-only, head+eye and all-gaze scans), and no interaction between age and guidance type for eye-only and head+eye scans. However, we did find a weak distance bin by guidance type interaction for all-gaze scans. Consistent with our hypothesis, the number of all-gaze scans gradually decreased on approach to the intersection in the Lead Car condition but gradually increased in the GPS condition. It should be noted, however, that the magnitude of the effect was quite small.

Our results suggest that the Lead Car paradigm we used did not substantially impair scanning behaviors and did not exacerbate scanning deficits of older drivers. The effect of the Lead Car on the number of scans was less than we had initially anticipated, possibly because we instructed subjects to follow the Lead Car at a safe distance, as if following a friend’s car, and told them that the Lead Car would wait for them. We did not require subjects to maintain a specific following distance or to keep as close to the Lead Car as possible, which might have resulted in subjects spending more time looking at the Lead Car and making fewer scans on approach to intersections. Our findings suggest that using a Lead Car paradigm similar in nature to the one we implemented is likely to have only small effects, if any, on scanning behaviors.

4.3. Study Limitations

A number of study limitations need to be considered. First, a relatively high proportion of older subjects (29%) compared to younger subjects (9%) experienced simulator discomfort and were unable to complete the study. Higher rates of simulator discomfort are expected in older populations (Brooks et al. 2010) and may have been exacerbated by the left and right turns along the driving route. Simulator discomfort is an unfortunate limitation of all driving simulator studies. Gaze data from five older subjects had to be excluded as it was excessively noisy and could not be analyzed. This again is an unfortunate limitation of driving simulator studies in which eye or gaze movements are tracked across a wide field of view. The final data set included eleven older subjects. While this was fewer older subjects than initially planned, large numbers of gaze scans, about 250 per subject, were included in analyses. The older subjects in the final data set were predominantly in the young-old, rather than the old-old, age range, thus the extent of age-related scanning deficits may have been underestimated.

As with any driving simulator study, there are limitations regarding the generalizability of the findings to real-world driving. Simulated driving does not capture all aspects of on-road driving, but does provide a safe, repeatable environment in which to evaluate driving performance and gaze scanning behaviors. We used a wide-field driving simulator with a horizontal field of view of 225° and head and eye tracking across 180°. As such, we were able to display realistic four-way intersection scenarios without restrictions in the field of view (which can be a limitation in other simulators with a smaller field of view). Although we have not directly compared scanning in the driving simulator and on-road driving, we expect that drivers who scan less extensively in the driving simulator will likely also scan less extensively in real-world driving. In a study by Romoser and Fisher (2009), older drivers with higher rates of failing to make secondary scans in a driving simulator also had higher rates of failing to make secondary scans at intersections when driving their own vehicle along a 30-minute, self-chosen route without a researcher in the vehicle.

4.4. Conclusions

In the current study we evaluated the effects of age and guidance type (Lead Car or GPS instructions) on gaze scanning on approach to intersections. Following a Lead Car slightly reduced the number of scans close to the intersection, but the magnitude of the effect was small. In contrast, age effects were much greater, especially for the large head+eye scans (comprising a substantial head movement as well as eye movements). Older drivers made fewer head+eye scans and the average scan magnitude was smaller than was the case for younger drivers. Furthermore, older drivers did not compensate for deficits in the head movement component of head+eye scans by making larger eye movements.

Overall, our results suggest that older drivers may be at increased risk for intersection collisions because they do not adequately scan the full width of the field of view at intersections. Romoser and Fisher (2009) demonstrated the efficacy of a training program for older drivers which focused on the importance of making secondary scans to check for hazards after entering an intersection. Our findings suggest that training programs also need to raise awareness of the importance of scanning the full width of an intersection before entering it. Training should encourage older drivers to make large lateral scans before entering intersections, which may be helped by the use of body and shoulder movements, as well as head and eye movements.

Our findings not only provide strong evidence that older subjects scan less extensively on approach to intersections but also underline the importance of tracking both head and eye movements to determine the relative contributions of each to scanning deficits. The next step will be to determine the extent to which scanning predicts the safe detection of hazards at intersections and to evaluate the relative importance of eye-only and head+eye scans to timely detection of hazards by older and younger drivers.

Highlights.

Prior work on the effects of age on visual scanning at intersections is conflicting

The contributions of head and eye movement components to gaze scanning were examined

Older drivers made smaller head and smaller eye movements than younger drivers

Older drivers made fewer scans with a significant head movement component

Older drivers made more scans consisting only of an eye movement component

Acknowledgements

The authors would like to acknowledge Sarah Sheldon for assistance in driving simulator scenario development, and Sarah Sheldon and Dora Pepo for their help with recruiting and testing subjects.

Funding information:

Funded in part by NIH grants R01-EY025677, T35-EY007149, S10-RR028122, and P30-EY003790.

6. Appendix I

Table A1.

Inventory of 4-Way Intersections

| INT # | Obstruction (Left / Right) | Signage | Cross Traffic (Left / Right) | Intersection maneuver |
|---|---|---|---|---|
| 1 | Open / Open | None | None / U-Haul | Turn right |
| 2 | Open / Obstructed | None | None / Red Van | Straight |
| 3 | Open / Open | Stop | None / Police Car | Turn right |
| 4 | Obstructed / Open | None | None / Car | Straight |
| 5 | Obstructed / Obstructed | None | None / None | Turn left |
| 6 | Obstructed / Obstructed | None | Car / School Bus | Straight |
| 7 | Open / Open | Traffic Light | Ambulance / Car | Straight |
| 8 | Open / Open | Yield | None / None | Straight |
| 9 | Obstructed / Open | None | None / Motorcycle | Straight |
| 10 | Open / Obstructed | None | None / None | Turn right |
| 11 | Obstructed / Obstructed | Yield | None / Car | Straight |
| 12 | Obstructed / Open | Traffic Light | U-Haul / None | Turn left |
| 13 | Obstructed / Open | None | Car / None | Straight |
| 14 | Obstructed / Obstructed | None | None / Car | Straight |
| 15 | Open / Obstructed | None | None / Car | Turn left |

Footnotes

Disclosures: none


5. References

- AASHTO. (2011). A policy on Geometric Design of Highways and Streets. In American Association of State Highway and Transportation Officials, Washington, DC, 6th Edition.

- Bahill AT, Adler D, & Stark L. (1975). Most naturally occurring human saccades have magnitudes of 15 degrees or less. Investigative Ophthalmology & Visual Science, 14(6), 468–469.

- Bahnemann M, Hamel J, De Beukelaer S, Ohl S, Kehrer S, Audebert H, & Brandt SA (2015). Compensatory eye and head movements of patients with homonymous hemianopia in the naturalistic setting of a driving simulation. Journal of Neurology, 262(2), 316–325.

- Bao S, & Boyle LN (2009). Age-related differences in visual scanning at median-divided highway intersections in rural areas. Accident Analysis & Prevention, 41(1), 146–152.

- Beintema JA, van Loon EM, & van den Berg AV (2005). Manipulating saccadic decision-rate distributions in visual search. Journal of Vision, 5(3), 150–164.

- Bowers AR, Ananyev E, Mandel AJ, Goldstein RB, & Peli E. (2014). Driving with hemianopia: IV. Head scanning and detection at intersections in a simulator. Investigative Ophthalmology & Visual Science, 55(3), 1540–1548.

- Bowers AR, Bronstad PM, Spano LP, Goldstein RB, & Peli E. (2019). The Effects of Age and Central Field Loss on Head Scanning and Detection at Intersections. Translational Vision Science & Technology, 8(5), 1–17.

- Brooks JO, Goodenough RR, Crisler MC, Klein ND, Alley RL, Koon BL, Logan WC, Ogle JH, Tyrrell RA, & Wills RF (2010). Simulator sickness during driving simulation studies. Accident Analysis & Prevention, 42(3), 788–796.

- Chen KB, Xu X, Lin J-H, & Radwin RG (2015). Evaluation of older driver head functional range of motion using portable immersive virtual reality. Experimental Gerontology, 70, 150–156.

- Dowiasch S, Marx S, Einhäuser W, & Bremmer F. (2015). Effects of aging on eye movements in the real world. Frontiers In Human Neuroscience, 9, 46.

- Dukic T, & Broberg T. (2012). Older drivers’ visual search behaviour at intersections. Transportation Research Part F: Traffic Psychology and Behaviour, 15(4), 462–470.

- Guitton D. (1992). Control of eye–head coordination during orienting gaze shifts. Trends In Neurosciences, 15(5), 174–179.

- Haggerty H, Richardson S, Mitchell KW, & Dickinson AJ (2005). A modified method for measuring uniocular fields of fixation: reliability in healthy subjects and in patients with Graves orbitopathy. Archives of Ophthalmology, 123(3), 356–362.

- Hamel J, DeBeukelear S, Kraft A, Ohl S, Audebert HJ, & Brandt SA (2013). Age-related changes in visual exploratory behavior in a natural scene setting. Frontiers In Psychology, 4(339), 1–12.

- Isler RB, Parsonson BS, & Hansson GJ (1997). Age related effects of restricted head movements on the useful field of view of drivers. Accident Analysis & Prevention, 29(6), 793–801.

- Jenness JW, Lerner ND, Mazor S, Osberg JS, & Tefft BC (2008). Use of Advanced In-Vehicle Technology by Young and Older Early Adopters. Selected Results from Five Technology Surveys. Report No. DOT HS 811 004. U.S. Department of Transportation, National Highway Traffic Safety Administration. Retrieved from: https://www.nhtsa.gov/sites/nhtsa.dot.gov/files/documents/811_004.pdf accessed 6/9/2020

- Keskinen E, Ota H, & Katila A. (1998). Older drivers fail in intersections: Speed discrepancies between older and younger male drivers. Accident Analysis & Prevention, 30(3), 323–330.

- Kito T, Haraguchi M, Funatsu T, Sato M, & Kondo M. (1989). Measurements of gaze movements while driving. Perceptual And Motor Skills, 68(1), 19–25.

- Kliegl R, Dambacher M, Dimigen O, Jacobs AM, & Sommer W. (2012). Eye movements and brain electric potentials during reading. Psychological Research, 76(2), 145–158.

- Kosaka H, Koyama H, & Nishitani H. (2005). Analysis of driver’s behavior from heart rate and eye movement when he/she causes an accident between cars at an intersection. SAE Technical Paper, No. 2005-01-0432.

- Kuznetsova A, Brockhoff PB, & Christensen RHB (2017). lmerTest Package: Tests in Linear Mixed Effects Models. Journal Of Statistical Software, 82(13), 1–26.

- Loy A, Hofmann H, & Cook D. (2017). Model choice and diagnostics for linear mixed-effects models using statistics on street corners. Journal of Computational and Graphical Statistics, 26(3), 478–492.

- Pollatsek A, Romoser MR, & Fisher DL (2012). Identifying and remediating failures of selective attention in older drivers. Current Directions In Psychological Science, 21(1), 3–7.

- R Development Core Team. (2019). R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing. Retrieved from https://www.R-project.org/

- Rahimi M, Briggs R, & Thom D. (1990). A field evaluation of driver eye and head movement strategies toward environmental targets and distractors. Applied Ergonomics, 21(4), 267–274.

- Romoser MR, & Fisher DL (2009). The effect of active versus passive training strategies on improving older drivers’ scanning in intersections. Human Factors, 51(5), 652–668.

- Romoser MR, Pollatsek A, Fisher DL, & Williams CC (2013). Comparing the glance patterns of older versus younger experienced drivers: Scanning for hazards while approaching and entering the intersection. Transportation Research Part F: Traffic Psychology And Behaviour, 16, 104–116.

- Swan G, Goldstein RB, Savage SW, Zhang L, Ahmadi A, & Bowers AR (in press). Automatic processing of gaze movements to quantify gaze scanning behaviors in a driving simulator. Behavior Research Methods.

- Venables W, & Ripley B. (2002). Random and mixed effects. In: Modern applied statistics with S, Springer, New York, NY, 271–300.

- Wood J, Chaparro A, & Hickson L. (2009). Interaction between visual status, driver age and distracters on daytime driving performance. Vision Research, 49(17), 2225–2231.