Abstract

Recently, speed of presentation of facially expressive stimuli was found to influence the processing of compound threat cues (e.g., anger/fear/gaze). For instance, greater amygdala responses were found to clear (e.g., direct gaze anger/averted gaze fear) versus ambiguous (averted gaze anger/direct gaze fear) combinations of threat cues when rapidly presented (33 and 300ms), but greater to ambiguous versus clear threat cues when presented for more sustained durations (1, 1.5, and 2s). A working hypothesis was put forth (Adams et al., 2012) that these effects were due to differential magnocellular versus parvocellular pathway contributions to the rapid versus sustained processing of threat, respectively. To test this possibility directly, we restricted visual stream processing in the fMRI environment using facially expressive stimuli specifically designed to bias visual input exclusively to the magnocellular versus parvocellular pathways. We found that for magnocellular-biased stimuli, activations were predominantly greater to clear versus ambiguous threat-gaze pairs (on par with that previously found for rapid presentations of threat cues), whereas activations to ambiguous versus clear threat-gaze pairs were greater for parvocellular-biased stimuli (on par with that previously found for sustained presentations). We couch these findings in an adaptive dual process account of threat perception and highlight implications for other dual process models within psychology.

Keywords: Magnocellular, Parvocellular, Threat, Fear expression, Eye gaze, Facial expression, Threat perception, Shared signal hypothesis, Threat-related ambiguity

Prior studies have reported opposing results for the involvement of the amygdala in the processing of clear versus ambiguous threat. In our own work, we have found that the speed with which threat-related expressions are presented has a dramatic influence on the pattern of these effects. As a vehicle for testing potential differential attunements of the magnocellular versus parvocellular pathways to compound threat cues, which we hypothesize underlie these different patterns of effects, we sought here to replicate and then extend our previous findings for the combinatorial processing of threat-gaze cues in the face (Adams et al., 2012). Specifically, we predicted that the magnocellular pathway would be preferentially tuned to clear threat cues (e.g., averted-gaze fear expressions), whereas the parvocellular pathway would be preferentially tuned to ambiguous threat cues (e.g., direct-gaze fear expressions). Below, we review the evolution of work on gaze by threat expression integration, and its relevance to the current question of interest.

1. Resolving an initial puzzle

Early fMRI studies revealed a more robust and consistent amygdala response to direct-gaze fearful faces compared to direct-gaze anger faces. This finding was puzzling given that the amygdala was long believed to be critical for the detection of threat, and fear expressions are arguably a less direct and immediate signal of threat compared to anger. Based on this premise, previous researchers posited that the amygdala may not only be involved in detecting clear threat, but may also be critical to deciphering ambiguity surrounding the source of threat (Whalen et al., 2001). Based on early fMRI findings in humans, and related effects reported in the animal literature, Whalen (1998) hypothesized that the amygdala response to threat may be inversely related to the amount of information available regarding the nature and source of a threat.

Around this same time, behavioral evidence was accruing revealing that gaze direction exerts a meaningful influence on the clarity/ambiguity of threat expressions. Adams and Kleck introduced the “shared signal hypothesis” (Adams and Kleck, 2003, 2005). This hypothesis predicts that when paired, cues relevant to threat that share a congruent underlying signal value of approach versus avoidance should facilitate the processing efficiency of that threat. Anger is a threat-related emotion associated with an approach orientation; its behavioral expression is aggression (note: etymologically “aggress” literally means “to move toward”). Direct eye gaze likewise signals a heightened likelihood of approach. Fear, on the other hand, is associated with a greater likelihood to flee/avoid. Likewise, averted gaze is a signal, and at times itself a form, of withdrawal/avoidance. Thus, because anger and direct gaze both signal approach, and fear and averted gaze both signal avoidance, Adams and Kleck predicted that they would mutually facilitate the processing of one another. That is what they found. Speed of processing, accuracy, and perceived intensity of anger and fear expressions were found to be enhanced for congruent threat-gaze pairs (i.e., direct-gaze anger/both approach signals and averted-gaze fear/both avoidance signals) compared to incongruent threat-gaze pairs (i.e., averted-gaze anger and direct-gaze fear, both being combinations of approach and avoidance signals; see Adams and Kleck, 2003, 2005). This work revealed that gaze direction can influence the perceived clarity/ambiguity of threat expressions, thereby offering a vehicle for directly testing the threat-ambiguity hypothesis neuroscientifically.

Bringing the gaze and emotion paradigm into the fMRI environment, Adams et al. (2003) sought to test the threat-ambiguity hypothesis at the neural level. In this first study, Whalen et al.’s (2001) initial findings were replicated. Greater amygdala activation was found for direct gaze fear compared to direct gaze anger. Critically, and in line with the threat-ambiguity hypothesis, this study also found an opposite pattern for averted gaze faces, such that there was more amygdala activation to averted gaze anger than averted gaze fear. This finding offered the first direct evidence for a role of the amygdala in processing threat-related ambiguity. At the time, this finding also helped resolve the long-standing puzzle as to why amygdala activation was consistently and robustly found in response to fear displays, but not to anger displays, when anger (at least coupled with direct gaze) is arguably a clearer signal of threat (it signals the presence of threat, its source, and its target).

2. Facing a new puzzle

Although subsequent studies replicated these initial findings for greater amygdala response to threat-related ambiguity (e.g., Ewbank et al., 2010; George et al., 2001; Straube et al., 2009; Ziaei et al., 2016), other studies emerged that reported the exact opposite pattern, namely a greater amygdala response to congruent versus ambiguous threat-gaze pairs (Hadjikhani et al., 2008; N’Diaye et al., 2009; Sato et al., 2004). Helping address these disparate findings, a new factor was tested that systematically moderated these effects, namely stimulus presentation speed (Adams et al., 2012). In reviewing the existing literature, it had become clear that findings yielding relatively more amygdala responsivity to clear threat-gaze combinations all utilized presentation parameters favoring magnocellular visual input (e.g., rapid/peripheral/dynamic presentations), a pathway known to be implicated in the initial, rapid orienting response to threat (Vuilleumier et al., 2003). Findings for greater amygdala responsivity to threat-related ambiguity, however, implemented presentation parameters favoring parvocellular visual input (e.g., focally presented, sustained, and static threat expressions).

Drawing on these insights, Adams et al. (2012) tested the effects of presentation speed on responses to compound threat cues, finding that the pattern of amygdala responses to otherwise identical direct versus averted gaze fear expressions systematically flipped depending only on how long they were presented, with 300ms presentations favoring reflexive responses to clear threat, and 1s presentations favoring sustained, reflective processing to ambiguous threat. Specifically, when presented briefly, the amygdala reliably responded more to averted-gaze fear expressions, thereby replicating previous studies finding clear-threat sensitivity. When presented for 1 s, the amygdala responded more to direct-gaze fear expressions, thereby replicating previous studies finding more sensitivity to threat-related ambiguity. These findings were then replicated in a within-subjects comparison using an ultra-high-field 7T scanner (see van der Zwaag et al., 2012).

3. The current work

By using the gaze and emotion paradigm as a vehicle in the current study, we aimed to directly examine whether magnocellular and parvocellular visual systems are preferentially tuned to different combinations of threat cues, clear versus ambiguous, respectively. If so, it would help explain why some studies have found evidence for more amygdala activation to clear threat cues, while others have found more to threat-related ambiguity. This approach also meaningfully extends prior findings by focusing on different visual pathway contributions, thus examining different neural networks underlying threat processing, rather than focusing primarily on just the amygdala.

3.1. Method

3.1.1. Participants

Forty-one participants (25 females) from the Massachusetts General Hospital (MGH) and surrounding communities, aged 18 to 29 years (mean=23.0, SD=2.01), participated in exchange for $50. This study was part of a larger battery of unrelated tasks and a larger (N=108) sample of subjects that included older (30–70 years old) participants. The data from the full set of participants were recently published in two studies focusing on individual differences in anxiety (Im et al., 2017) and sex differences in brain function and structure (Im et al., 2018) affecting threat cue perception in the magnocellular and parvocellular pathways. For all participants, this was the first task they completed during scanning. In the present study we only included participants who were 30 years or younger to match previous studies using this paradigm for direct comparison (e.g., Adams et al., 2003; van der Zwaag et al., 2012), and specifically to address the hypothesis put forth in our previous related work, which also used a young adult sample (Adams et al., 2012). All had normal or corrected-to-normal visual acuity, normal luminance contrast sensitivity, and normal color vision, as established by pretests using the Snellen acuity chart (Snellen, 1862), the Mars Letter Contrast Sensitivity Test (Mars Perceptrix, Chappaqua, NY, USA), and the Ishihara Color Plates. Informed written consent was obtained according to the protocol approved by the Institutional Review Board of MGH.

3.1.2. Apparatus and stimuli

We utilized a total of 24 face images (12 females) selected from the Pictures of Facial Affect (Ekman and Friesen, 1976), from the FACE database (Ebner et al., 2010), and from the NimStim Emotional Face Stimuli database (Tottenham et al., 2009). The face images displayed either a neutral or fearful expression with either a direct gaze or averted gaze. In averted-gaze faces, the gaze pointed either leftward or rightward. Permutations of visually biased stimuli were generated using MATLAB® (Mathworks Inc., Natick, MA), together with the Psychophysics Toolbox extensions (Brainard, 1997; Pelli, 1997). Two-tone images of faces were presented in the center of a gray screen, subtending a visual angle of 5.79° × 6.78°.

Each face image was first converted to a two-tone image (black-white; unbiased stimuli) by a custom MATLAB® thresholding script. From the two-tone image, low-luminance contrast and achromatic stimuli (magno-biased stimuli) and chromatically defined and isoluminant stimuli (red-green; parvo-biased stimuli) were generated. The foreground-background luminance contrast for achromatic M-biased stimuli and the iso-luminance values for chromatic P-biased stimuli typically vary across individual observers. Therefore, these values were established for each participant in separate pretests, with the participant positioned in the scanner, immediately before commencing functional scanning. This ensured that the exact viewing conditions were subsequently used for functional scanning in the main experiment.

3.1.3. Procedure

Participants provided consent, were screened for metal, and completed tests of visual acuity (Snellen chart; Snellen, 1862), luminance contrast sensitivity (Mars Letter Contrast Sensitivity Test, Mars Perceptrix, Chappaqua, NY, USA), and color perception (Ishihara color plates). Then, once positioned in the fMRI scanner, participants completed the two pretests (luminance contrast and isoluminance point determination), followed by the main experiment. For all these tasks, facial expressions were rear-projected onto a mirror attached to a 32-channel head coil in the fMRI scanner, located in a dark room. Determining the appropriate thresholds for magnocellular- and parvocellular-biased stimuli for each participant required two pretests to establish the appropriate luminance contrast and isoluminance point. These are commonly employed, standard techniques that have been successfully used in studies exploring the M- and P-pathway contributions to object recognition, visual search, schizophrenia, and dyslexia (Cheng et al., 2004; Kveraga et al., 2007; Schechter et al., 2003; Steinman et al., 1997; Thomas et al., 2012).

Unbiased stimuli.

Black-and-white thresholded stimuli were produced from the photographic images. These served as a baseline control and as the base images for generating the magnocellular- and parvocellular-biased stimuli.

Magnocellular-biased stimuli.

To establish the foreground-background luminance contrast needed to bias the two-tone stimuli described above toward processing along the magnocellular pathway, we employed an algorithm that computed the mean of the turnaround points above and below the medium-gray background, which reliably converged around the true background value (RGB value of [127 127 127]). From this threshold, the appropriate luminance value (~3.5% Weber contrast) was computed for the face images to be used in the low-luminance-contrast (M-biased) condition.
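The relation between the threshold estimate and the ~3.5% Weber contrast can be illustrated with a minimal sketch. It is not the authors' MATLAB routine: the function names and the turnaround values are hypothetical, and the values are treated as linear luminance units, ignoring display gamma, which a real calibration would need to handle.

```python
def weber_contrast(foreground: float, background: float) -> float:
    """Weber contrast: (L_fg - L_bg) / L_bg, with luminance in linear units."""
    return (foreground - background) / background


def mean_of_turnarounds(turnaround_values: list[float]) -> float:
    """Average the values at which the participant's responses reversed."""
    return sum(turnaround_values) / len(turnaround_values)


def foreground_for_contrast(background: float, contrast: float) -> float:
    """Foreground luminance that yields the requested Weber contrast."""
    return background * (1.0 + contrast)


# Hypothetical turnaround points bracketing the mid-gray background.
threshold = mean_of_turnarounds([124.0, 130.0, 125.0, 129.0])

# Assumed here: the foreground is brighter than the background by 3.5%.
foreground = foreground_for_contrast(threshold, 0.035)
print(threshold, round(weber_contrast(foreground, threshold), 3))
```

Because Weber contrast is defined relative to the background, the same 3.5% target gives a different absolute foreground value for each participant's threshold.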

Parvocellular-biased stimuli.

For the chromatically defined, isoluminant (P-biased) stimuli, each participant’s isoluminance point was found using heterochromatic flicker photometry with two-tone face images displayed in rapidly alternating colors, between red and green. The alternation frequency was ~14Hz, because we previously obtained the best estimates for the isoluminance point (e.g., narrow range within-subjects and low variability between-subjects; Kveraga et al., 2007; Thomas et al., 2012) at this frequency. The isoluminance point was defined as the color values at which the flicker caused by luminance differences between red and green colors disappeared and the two alternating colors fused, making the image look steady. On each trial, participants were required to report via a key press whether the stimulus appeared flickering or steady. Depending on the participant’s response, the value of the red gun in [r g b] was adjusted up or down in a pseudorandom manner for the next cycle. The average of the values in the narrow range when a participant reported a steady stimulus became the isoluminance value for the subject used in the experiment. Thus, isoluminant stimuli were defined only by chromatic contrast between foreground and background, which appeared equally bright to the observer.
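The final averaging step of this pretest can be sketched as follows. This is an illustrative assumption about the data layout (one red-gun value and one steady/flicker report per trial), not the authors' code, and the session values are invented for the example.

```python
def isoluminance_point(trials: list[tuple[int, bool]]) -> float:
    """Mean red-gun value over the trials reported as steady (no flicker)."""
    steady = [red for red, reported_steady in trials if reported_steady]
    if not steady:
        raise ValueError("no steady reports; cannot estimate isoluminance")
    return sum(steady) / len(steady)


# Hypothetical session: (red-gun value, participant reported "steady").
session = [(140, False), (150, True), (152, True), (154, True), (165, False)]
print(isoluminance_point(session))
```

Trials reported as flickering contribute nothing to the estimate; they only bound the narrow range within which the steady reports fall.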

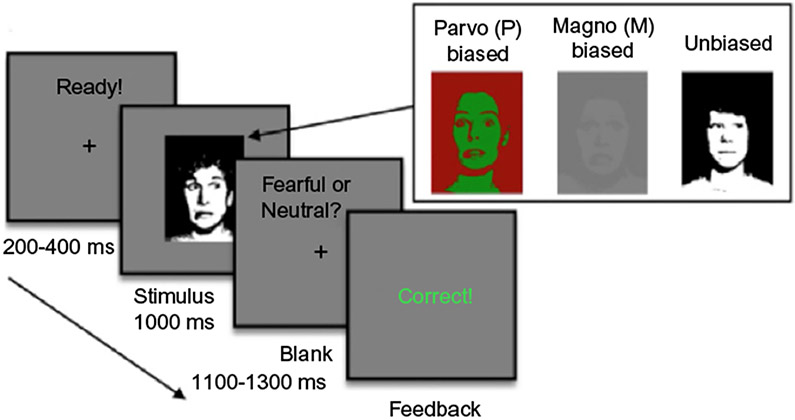

For the experimental task, participants were presented with fear and neutral facial displays in a 3 (stimulus modality: unbiased, M-biased, P-biased) × 2 (emotion: neutral, fear) × 2 (gaze: direct, averted) factorial design. Each stimulus was presented for 500ms, after which participants were instructed to make a key press to indicate the expression they just saw as quickly and accurately as possible: (1) neutral, (2) angry, (3) fearful, or (4) did not recognize the expression. Only neutral and fear expressions were shown. Anger was included as a response option to be sure neutral faces were seen as neutral and not anger. One-fourth of the trials were catch trials in which a stimulus face did not appear (see Fig. 1). Overall accuracy was high (90.4%). Accuracy for neutral and fear expressions was comparable across all conditions: unbiased (neutral=91.3%, fear=94.5%), M-biased (neutral=89.5%, fear=91.3%), and P-biased (neutral=87.5%, fear=87.5%).
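The factorial structure can be enumerated in a short sketch. The total of 384 trials comes from the Fig. 1 caption; the equal allocation of face trials across cells is an assumption made here for illustration, not something the text states.

```python
from itertools import product

modalities = ["unbiased", "M-biased", "P-biased"]
emotions = ["neutral", "fear"]
gazes = ["direct", "averted"]

# Every cell of the 3 x 2 x 2 design.
conditions = list(product(modalities, emotions, gazes))

total_trials = 384                      # reported in the Fig. 1 caption
catch_trials = total_trials // 4        # one-fourth of trials had no face
face_trials = total_trials - catch_trials
per_condition = face_trials / len(conditions)  # assumes equal allocation

print(len(conditions), catch_trials, face_trials, per_condition)
```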

FIG. 1.

A sample trial of the main experiment. After a jittered ISI (200–400ms), a face stimulus was presented for 1000ms, followed by a blank screen (1100–1300ms). Participants were required to indicate whether a face image presented on the screen looked fearful or neutral. Key-target mapping was counterbalanced across participants such that half of the participants pressed the left key for neutral and the right key for fearful, and the other half pressed the left key for fearful and the right key for neutral. The participants were presented with 384 trials in total, and feedback was provided on every trial.

3.1.4. fMRI data acquisition and analysis

fMRI images of brain activity were acquired using a 1.5T scanner (Siemens Avanto 32-channel “TIM” system) located at the Athinoula A. Martinos Center for Biomedical Imaging, Massachusetts General Hospital (Charlestown, MA, USA). High-resolution anatomical MRI data were acquired using T1-weighted images for the reconstruction of each subject’s cortical surface (TR=2300ms, TE=2.28ms, flip angle=8°, FoV=256 × 256 mm², slice thickness=1mm, sagittal orientation). The functional scans were acquired using simultaneous multislice, gradient-echo echoplanar imaging with a TR of 2500ms, three echoes with TEs of 15, 33.83, and 52.66ms, flip angle of 90°, and 58 interleaved slices (3 × 3 × 2mm resolution). Scanning parameters were optimized by manual shimming of the gradients to fit the brain anatomy of each subject, and tilting the slice prescription anteriorly 15–25° up from the AC-PC line as described in the previous studies (Deichmann et al., 2003; Kveraga et al., 2007; Wall et al., 2009), to improve signal and minimize susceptibility artifacts in the brain regions including orbitofrontal cortex (OFC) and amygdala (Kringelbach and Rolls, 2004). We acquired 384 functional volumes per subject in four functional runs, each lasting 4min.
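As a quick consistency check on the reported acquisition numbers, the run length follows from the volume count and the TR. The sketch assumes the 384 volumes were split evenly across the four runs, which the text implies but does not state outright.

```python
total_volumes = 384
n_runs = 4
tr_seconds = 2.5                        # TR of 2500 ms

volumes_per_run = total_volumes // n_runs           # assumes an even split
run_minutes = volumes_per_run * tr_seconds / 60

print(volumes_per_run, run_minutes)
```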

The acquired fMRI images were pre-processed using SPM8 (Wellcome Department of Cognitive Neurology, http://www.fil.ion.ucl.ac.uk/spm/software/spm8/). The functional images were corrected for differences in slice timing, realigned, corrected for movement-related artifacts, coregistered with each participant’s anatomical data, normalized to the Montreal Neurological Institute (MNI) template, and spatially smoothed using an isotropic 8-mm full width half-maximum (FWHM) Gaussian kernel. Outliers due to movement or signal from preprocessed files, using thresholds of 3 SD from the mean, 0.75mm for translation and 0.02 rad rotation, were removed from the data sets, using the ArtRepair software (Mazaika et al., 2009).
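The outlier criteria can be illustrated with a simplified sketch. It is not the ArtRepair algorithm: the function name is hypothetical, and it assumes one global signal value, one translation value (mm), and one rotation value (rad) per volume, each compared against the thresholds quoted above.

```python
from statistics import mean, stdev


def flag_outlier_volumes(global_signal, translation_mm, rotation_rad,
                         sd_limit=3.0, trans_limit=0.75, rot_limit=0.02):
    """Return indices of volumes that exceed any of the three thresholds."""
    mu = mean(global_signal)
    sd = stdev(global_signal)
    flagged = []
    for i, (s, t, r) in enumerate(
            zip(global_signal, translation_mm, rotation_rad)):
        signal_outlier = abs(s - mu) > sd_limit * sd
        motion_outlier = abs(t) > trans_limit or abs(r) > rot_limit
        if signal_outlier or motion_outlier:
            flagged.append(i)
    return flagged


# Hypothetical run of 20 volumes with three problem volumes.
signal = [100.0] * 20
signal[12] = 200.0                      # signal spike
translation = [0.1] * 20
translation[3] = 1.0                    # exceeds 0.75 mm
rotation = [0.001] * 20
rotation[7] = 0.03                      # exceeds 0.02 rad
print(flag_outlier_volumes(signal, translation, rotation))
```

Note that the mean and SD here are computed over the whole run, including any outliers, so a single extreme volume in a very short series may not reach 3 SD.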

Subject-specific contrasts were estimated using a fixed-effects model. These contrast images were used to obtain subject-specific estimates for each effect. For group analysis, these estimates were then entered into a second-level analysis treating participants as a random effect, using one-sample t-tests at each voxel. The resulting contrasts were thresholded at P<0.005 and k=10, then family-wise error (FWE) corrected (P<0.05). Coordinates are reported in MNI space. For visualization and anatomical labeling purposes, all group contrast images were overlaid onto the inflated group average brain, by using 2D surface alignment techniques implemented in FreeSurfer (Fischl et al., 2002).
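The second-level step amounts to a one-sample t-test across participants at each voxel. The sketch below shows that computation in plain Python under simplifying assumptions: the function names are hypothetical, each participant's contrast image is flattened into a list of voxel values, and no thresholding or FWE correction is applied. It stands in for, and does not reproduce, the SPM8 implementation.

```python
from math import sqrt
from statistics import mean, stdev


def one_sample_t(values):
    """t statistic testing whether the mean of `values` differs from zero."""
    n = len(values)
    return mean(values) / (stdev(values) / sqrt(n))


def group_t_map(contrast_maps):
    """contrast_maps: one list of voxel values per participant."""
    return [one_sample_t(list(voxel)) for voxel in zip(*contrast_maps)]


# Hypothetical contrast estimates: 3 participants, 2 voxels each.
maps = [[1.0, 2.0], [2.0, 2.5], [3.0, 4.5]]
print(group_t_map(maps))
```

Treating participants as the unit of observation is what makes this a random-effects analysis: the variance in the denominator is between-participant variance in the contrast estimates.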

3.2. Results

Comparisons of direct versus averted fear expressions for our two-tone unbiased facial stimuli yielded patterns of activation similar to those previously found using visually rich photographic depictions of threat faces. This finding replicates, in particular, greater left amygdala activation to threat-related ambiguity (see Table 1). Next, we directly tested the differential contribution of M and P pathways to threat cue processing. To do this, we focused on brain activity evoked when contrasting clear threat cues (fearful faces with averted eye gaze) and ambiguous threat cues (fearful faces with direct eye gaze), for M-biased and P-biased images separately. As shown in Fig. 2B (right image), we observed that M-biased clear versus ambiguous threat cues preferentially activated the parietal and prefrontal regions along the dorsal visual stream (including the right amygdala; see Fig. 2A). M-biased ambiguous threat cues (versus clear threat cues) did not evoke any brain activation above our threshold of FWE P<0.05 (see Table 2). For P-biased images (see Fig. 2B, right image), all activation was evoked by ambiguous versus clear threat cues, particularly in the occipital and inferior temporal regions along the ventral visual stream (including the left amygdala; see also Fig. 2A). P-biased clear threat cues (versus ambiguous threat cues) did not evoke any brain activation above our threshold of FWE P<0.05 (see Table 3).

Table 1.

Regions of increased activation to clear (averted gaze fear) versus ambiguous threat cue (direct gaze fear) presented in unbiased images.

| Anatomical location (unbiased stimuli) | t-Value | Extent | MNI x | MNI y | MNI z |
|---|---|---|---|---|---|
| Clear minus ambiguous | | | | | |
| L Orbitofrontal cortex | 3.616 | 12 | −24 | 50 | −8 |
| R Inferior frontal gyrus | 3.585 | 34 | 30 | 17 | 22 |
| R Cerebellum | 3.505 | 26 | 18 | −73 | −16 |
| L Middle occipital gyrus | 3.467 | 16 | −39 | −88 | 16 |
| L Superior temporal gyrus | 3.444 | 17 | −42 | 5 | −30 |
| L Cerebellum | 3.202 | 10 | −33 | −55 | −42 |
| R Orbitofrontal cortex | 3.153 | 10 | 27 | 23 | −10 |
| L Visual cortex (BA 18) | 3.110 | 15 | 0 | −79 | 14 |
| Ambiguous minus clear | | | | | |
| L Amygdala/parahippocampal gyrus | 2.738 | 14 | −18 | −8 | −26 |
| R Superior frontal gyrus | 3.554 | 56 | 27 | −10 | 66 |
| R Precentral gyrus | 3.506 | 25 | 21 | −34 | 68 |
| R Inferior temporal gyrus | 3.481 | 16 | 57 | 2 | −36 |
| L Insula | 3.303 | 16 | −45 | −22 | 14 |
| L Superior temporal gyrus | 3.289 | 11 | −48 | −25 | 0 |
| R Supplementary motor area | 3.241 | 16 | 15 | −4 | 62 |

P < 0.05, FWE-corrected.

FIG. 2.

(A) ROI analysis depicting differential activation in the right and left amygdala in response to M- versus P-biased stimuli. (B) Activations evoked by M-biased clear minus ambiguous threat stimuli (shown in orange), and by P-biased ambiguous minus clear threat stimuli (shown in green).

Table 2.

Regions of increased activation to clear (averted gaze fear) versus ambiguous threat cue (direct gaze fear) presented in M-biased images.

| Anatomical location (M-biased stimuli) | t-Value | Extent | MNI x | MNI y | MNI z |
|---|---|---|---|---|---|
| Clear minus ambiguous | | | | | |
| R Prefrontal cortex | 5.181 | 710 | 18 | 65 | 8 |
| L Medial prefrontal cortex | 3.754 | – | −3 | 62 | 10 |
| R Orbitofrontal cortex | 5.115 | 1226 | 36 | 26 | −8 |
| R Orbitofrontal cortex | 5.061 | – | 54 | 32 | 6 |
| R Inferior frontal cortex | 4.303 | – | 48 | 20 | 26 |
| R Middle temporal gyrus | 4.497 | 204 | 72 | −43 | 0 |
| R Superior temporal sulcus | 3.418 | – | 72 | −19 | 0 |
| L Inferior frontal gyrus | 4.357 | 361 | −54 | 20 | 28 |
| L Inferior frontal gyrus | 3.605 | – | −45 | −1 | 30 |
| L Middle frontal gyrus | 3.056 | – | −45 | 47 | 22 |
| R Medial orbitofrontal cortex | 4.289 | 208 | 12 | 44 | −14 |
| L Visual cortex (BA 18) | 4.047 | 228 | −21 | −106 | −10 |
| L Visual cortex (BA 19) | 3.247 | – | −45 | −91 | −10 |
| R Amygdala* | 3.823 | 29 | 18 | −4 | −12 |
| Ambiguous minus clear | | | | | |
| None | | | | | |

*indicates activation at P<0.005 and k=10, uncorrected.

–indicates that this cluster is part of a larger cluster immediately above.

P<0.05, FWE-corrected.

Table 3.

Regions of increased activation to clear (averted gaze fear) versus ambiguous threat cue (direct gaze fear) presented in P-biased images.

| Anatomical location (P-biased stimuli) | t-Value | Extent | MNI x | MNI y | MNI z |
|---|---|---|---|---|---|
| Clear minus ambiguous | | | | | |
| None | | | | | |
| Ambiguous minus clear | | | | | |
| R Visual cortex (BA 18) | 4.878 | 1824 | 15 | −103 | 20 |
| R Visual cortex (BA 19) | 4.835 | – | 33 | −79 | 10 |
| L Visual cortex (BA 17) | 4.795 | – | −6 | −85 | 8 |
| R Fusiform cortex | 3.886 | 347 | 36 | −55 | −22 |
| | 3.504 | – | 30 | −70 | −10 |
| R Parahippocampal cortex | 3.705 | – | 24 | −28 | −18 |
| L Amygdala* | 2.767 | 12 | −24 | −1 | −20 |

*indicates activation at P<0.005 and k=10, uncorrected.

–indicates that this cluster is part of a larger cluster immediately above.

P<0.05, FWE-corrected.

4. General discussion

Adams et al. (2012) offered evidence that reflective responses previously found to ambiguous threat-gaze cues (e.g., Adams et al., 2003) appear to be functionally and neurally differentiated from an earlier, presumably reflexive attunement to clear threat cues. This work revealed different neural responses to rapid versus sustained presentations of threat-gaze pairs, with greater amygdala responses to clear threat-gaze combinations when rapidly presented, and greater amygdala responses to ambiguous threat-gaze combinations when presented for sustained durations. The current work examined whether these effects might be due to magnocellular and parvocellular visual pathways being differentially implicated in compound threat-cue perception. Such differential attunement to compound threat cues is consistent with there being both an early-warning, action-based response system, and a parallel analysis-based response system that acts to either confirm and refine the response already set in motion, or to disconfirm and inhibit inappropriate behavioral responses already in progress.

As noted earlier, prior behavioral studies employing speeded reaction-time tasks and self-reported perception of emotional intensity have found that direct gaze, which signals approach, facilitated the processing efficiency and accuracy, and increased the perceived intensity, of facially communicated approach-oriented emotions (e.g., anger), whereas averted gaze, which signals avoidance, facilitated avoidance-oriented emotions in the same ways (e.g., fear; Adams and Kleck, 2003, 2005; Adams et al., 2003; see also Cristinzio et al., 2010; Sander et al., 2007). Consistent with this, congruent threat cue combinations have likewise been found to evoke stronger amygdala responsivity (Adams et al., 2012; Hadjikhani et al., 2008; N’Diaye et al., 2009).

The amygdala, however, is not simply a reflexive threat detector. Ambiguous combinations of facial threat cues, which mix approach and avoidance signals, have also been found to evoke heightened amygdala activity in humans (Adams et al., 2003; Boll et al., 2011; van der Zwaag et al., 2012). This finding is consistent with evidence from high-resolution neuroimaging in both humans (Boll et al., 2011) and monkeys (Hoffman et al., 2007) revealing that ambiguity, induced by context (Boll et al., 2011), or by averted gaze combined with a direct threat expression (Hoffman et al., 2007), activates different nuclei in the amygdala compared to the same facial expression making eye contact. A neurophysiological examination has likewise found larger neuronal firing rates to threatening faces than appeasing or neutral faces in the monkey amygdala, but different populations of neurons were selective for facial identity, facial expression cues, and for both identity and expression cues together (Gothard et al., 2007).

As reviewed in our previous research, we have also shown that clear threat cue combinations (e.g., fear with averted gaze) engage reflexive processes and activate the amygdala when displayed briefly (33 and 300ms). However, ambiguous cues (e.g., fear coupled with direct gaze) at a longer stimulus exposure (1000, 1500, and 2000ms) also engage the amygdala, which, in conjunction with other face-selective regions, participates in this reflection and ambiguity resolution process (see Adams and Kveraga, 2015). These seemingly opposing findings appear to be explained by a dual process role of the amygdala in reflexive versus reflective processing of threat cues (more on this below).

Some classic face processing models (see Bruce and Young, 1986) and emotion theories (e.g., Ekman and Friesen, 1971) have long championed the view that functionally distinct sources of information conveyed by the face are processed along functionally distinct and doubly dissociable neurological routes. This understanding of face and expression processing has led to subfields of research examining various facial cues (e.g., gaze, emotion, gender) in isolation from one another, treating other cues as noise to be controlled. Motivating such research has been the assumption that each type of facial cue is processed in parallel, in a noninteractive manner, even when the physical cues themselves are visually confounded, as is the case between gender and emotion (e.g., Becker et al., 2007; Hess et al., 2009; Le Gal and Bruce, 2002; see Adams et al., 2015 for review). Given the wealth and variety of information simultaneously conveyed by the human face, parallel processing of this kind can be argued to make adaptive sense, avoiding perceptual interference.

There is good reason, however, to argue that perceptual integration of compound emotion cues is itself highly adaptive. Given that social cues communicated by the face convey information about a person’s internal states such as wishes, desires, feelings, and intentions (see Baron-Cohen, 1995), it is arguably only in combination that we should expect such cues to gain ecological significance to the perceiver. There are now numerous accounts of functional interactivity among a wide range of social cues (race, gender, emotion, gaze direction, etc.) in both the social and vision sciences that support this view (see Adams and Kveraga, 2015 for review). It has become clear that social visual cues combine in perception to form unified representations that guide our impressions of and responses to others. To date, however, no discipline has adequately addressed the underlying cognitive and neural mechanisms driving such combinatorial processes in social perception.

From a social functional perspective (see Adams et al., 2017), it stands to reason that social and emotional information conveyed visually only becomes adaptive to the observer when integrated into a holistic percept from which meaningful social affordances relevant to our survival can be extracted. In order to respond in a timely and efficient manner, we seek information about others’ intentions toward us, whether they are likely to approach or avoid us, to be a friend or foe, competitor or cooperator. Such information is necessarily combinatorial in nature, derived from many cues as well as the context within which they are observed. Here we extend this understanding by finding different combinatorial effects depending on magno- versus parvocellular pathway contributions to threat perception, which is consistent with dual-process responses to threat.

Dual process models of social and emotion perception abound. There exist scholarly volumes (e.g., Sherman et al., 2014) and popular books (e.g., Gladwell, 2005; Kahneman, 2011) highlighting the utility of applying dual process models to a wide range of psychological phenomena (e.g., cognition, memory, emotion, social perception, stereotyping, self-regulation, person perception, and threat perception). A central tenet of these theories is that our perceptions, decisions, and behaviors are the product of an interplay between two primary modes of thought: one that is relatively reflexive, automatic, associative, affective, and impulsive, and the other that is thought to be reflective, effortful, rule based, cognitive, and controlled. The reflexive system implicates relatively primitive neural systems that can operate outside of conscious awareness, rapidly computing the relative appetitive or aversive value of situations or stimuli, whereas the reflective system yields a more deliberate, calculated response to threat (see also Lieberman, 2003).

In the area of threat perception, dual-process models have likewise been widely examined. Like other dual-process models, these highlight a relatively automatic, affectively laden “hot” system that triggers rapid responses, and a controlled, analytic “cold” system involved in deeper level analysis. Based on studies examining audition in rats, LeDoux (1998) proposed two distinct pathways for the processing of threatening stimuli, both involving the amygdala. He referred to a “low road” (i.e., fast processing route), which implicates a direct, subcortical pathway from the thalamus to the amygdala. This route bypasses the primary sensory cortices and therefore is thought to be sensitive only to coarse representations of highly salient threat cues that afford automatic responding to clear, imminent danger. He contrasted this with a “high road” (i.e., slower processing route), which involves subcortical and cortical networks that together allow for conscious evaluation of a stimulus and its contextual cues. This route helps organize a more deliberate cognitive and behavioral response.

Current models of face perception have put forth a similar dual pathway for threat perception via the human visual system (see Palermo and Rhodes, 2007). In humans, the magnocellular-based “low” road is thought to produce only a crude image of threat that is prone to false alarms (see also Vuilleumier et al., 2003) but allows for immediate responding. The parvocellular-based “high” road analysis stream, however, constructs a richer representation of threat, allowing time to disengage threat responses to false alarms. Our current findings offer good reason to believe that neural responses along these two visual streams are also differentially calibrated to processing clear versus ambiguous threat cues. This is consistent with previous findings. For instance, the amygdala is known to be involved in the early detection of highly salient threat cues involving presumably bottom-up processing (Adolphs and Tranel, 2000; LeDoux, 1998). It is also thought to be involved in the top-down modulated processes necessary for deciphering ambiguous cues, such as in (1) high-level decision making (Hsu et al., 2005), (2) responses to visual stimuli such as complex clips from surrealist films (Hamann et al., 2002), and (3) responses to ambiguity related to threat displays (Adams et al., 2003; see also Davis and Whalen, 2001).

Although the existence, and even usefulness, of the dual pathway approach has been challenged (Pessoa and Adolphs, 2010), recent evidence from intracranial recordings supports the presence of a subcortical “low” road in humans (Méndez-Bértolo et al., 2016). Notably, the current work does not necessitate assuming a purely subcortical processing route. The magnocellular pathway subserves rapid responses to threat through projections from early visual cortices to the OFC, which may involve connections through the thalamus as well, that then guide rapid responses even to nonsocial objects (Kveraga et al., 2007). Our current design addresses the presence of both cortical and subcortical contributions. With that said, the primary focus of the current work was on isolating magno- versus parvocellular visual pathway contributions to different combinations of threat-gaze cues signaled in the face.

Further, in contrast to dual-process models of threat detection that highlight a fast and a slow threat-response system responding to the same threat cues (e.g., LeDoux, 1998), our findings suggest a dual process involving visual streams that are differentially tuned to different aspects of the same threat stimuli. Here the magnocellular pathway responded more to clear threat, presumably to trigger immediate adaptive action, and the parvocellular system responded more to ambiguous threat cues, presumably for additional analysis when threat cues are ambiguous. These differences may implicate not simply speed of processing but also more coordinated differential processing, in which the two pathways work in tandem to provide timely and accurate responses to threat. The current findings expose important differences in how these two visual pathways function that likely have as yet unexplored implications for other research domains underscoring dual modes of processing as well (e.g., attention, memory, appraisal, categorization).

Acknowledgments

Funding for this research was provided by R01 MH101194 to K.K. and R.B.A. The authors declare no conflict of interest.

References

- Adams RB Jr., Kleck RE, 2003. Perceived gaze direction and the processing of facial displays of emotion. Psychol. Sci 14, 644–647.

- Adams RB Jr., Kleck RE, 2005. Effects of direct and averted gaze on the perception of facially communicated emotion. Emotion 5, 3–11.

- Adams RB Jr., Kveraga K, 2015. Social vision: functional forecasting and the integration of compound social cues. Rev. Philos. Psychol 6 (4), 591–610.

- Adams RB Jr., Gordon HL, Baird AA, Ambady N, Kleck RE, 2003. Effects of gaze on amygdala sensitivity to anger and fear faces. Science 300, 1536.

- Adams RB Jr., Franklin RG Jr., Kveraga K, Ambady N, Kleck RE, Whalen PJ, Hadjikhani N, Nelson AJ, 2012. Amygdala responses to averted versus direct gaze fear vary as a function of presentation speed. Soc. Cogn. Affect. Neurosci 7, 568–577.

- Adams RB Jr., Hess U, Kleck RE, 2015. The intersection of gender-related facial appearance and facial displays of emotion. Emot. Rev 7 (1), 5–13.

- Adams RB Jr., Albohn DN, Kveraga K, 2017. Social vision: applying a social-functional approach to face and expression perception. Curr. Dir. Psychol. Sci 26 (3), 243–248.

- Adolphs R, Tranel D, 2000. Emotion recognition and the human amygdala. In: Aggleton JP (Ed.), The Amygdala: A Functional Analysis. Oxford University Press, USA, pp. 587–630.

- Baron-Cohen S, 1995. Theory of Mind and Face-Processing: How Do They Interact in Development and Psychopathology? John Wiley & Sons, New York, NY.

- Becker DV, Kenrick DT, Neuberg SL, Blackwell KC, Smith DM, 2007. The confounded nature of angry men and happy women. J. Pers. Soc. Psychol 92, 179–190.

- Boll S, Gamer M, Kalisch R, Buchel C, 2011. Processing of facial expressions and their significance for the observer in subregions of the human amygdala. Neuroimage 56, 299–306.

- Brainard DH, 1997. The psychophysics toolbox. Spat. Vis 10, 433–436.

- Bruce V, Young A, 1986. Understanding face recognition. Br. J. Psychol 77, 305–327.

- Cheng A, Eysel UT, Vidyasagar TR, 2004. The role of the magnocellular pathway in serial deployment of visual attention. Eur. J. Neurosci 20, 2188–2192.

- Cristinzio C, N’Diaye K, Seeck M, Vuilleumier P, Sander D, 2010. Integration of gaze direction and facial expression in patients with unilateral amygdala damage. Brain 133, 248–261.

- Davis M, Whalen PJ, 2001. The amygdala: vigilance and emotion. Mol. Psychiatry 6, 13–34.

- Deichmann R, Gottfried JA, Hutton C, Turner R, 2003. Optimized EPI for fMRI studies of the orbitofrontal cortex. Neuroimage 19, 430–441.

- Ebner NC, Riediger M, Lindenberger U, 2010. FACES—a database of facial expressions in young, middle-aged, and older women and men: development and validation. Behav. Res. Methods 42, 351–362.

- Ekman P, Friesen WV, 1971. Constants across cultures in the face and emotion. J. Pers. Soc. Psychol 17 (2), 124.

- Ekman P, Friesen WV, 1976. Pictures of Facial Affect. Consulting Psychologists Press, Palo Alto, CA.

- Ewbank MP, Fox E, Calder AJ, 2010. The interaction between gaze and facial expression in the amygdala and extended amygdala is modulated by anxiety. Front. Hum. Neurosci 4, 56.

- Fischl B, Salat DH, Busa E, Albert M, Dieterich M, Haselgrove C, et al., 2002. Whole brain segmentation. Neuron 33, 341–355.

- George N, Driver J, Dolan RJ, 2001. Seen gaze-direction modulates fusiform activity and its coupling with other brain areas during face processing. Neuroimage 13 (6), 1102–1112.

- Gladwell M, 2005. Blink: The Power of Thinking Without Thinking. Little, Brown and Co, New York.

- Gothard KM, Battaglia FP, Erickson CA, Spitler KM, Amaral DG, 2007. Neural responses to facial expression and face identity in the monkey amygdala. J. Neurophysiol 97, 1671–1683.

- Hadjikhani N, Hoge R, Snyder J, de Gelder B, 2008. Pointing with the eyes: the role of gaze in communicating danger. Brain Cogn. 68, 1–8.

- Hamann SB, Ely TD, Hoffman JM, Kilts CD, 2002. Ecstasy and agony: activation of the human amygdala in positive and negative emotion. Psychol. Sci 13, 135–141.

- Hess U, Adams RB Jr., Grammer K, Kleck RE, 2009. Face gender and emotion expression: are angry women more like men? J. Vis 9, 1–8.

- Hoffman KL, Gothard KM, Schmid MC, Logothetis NK, 2007. Facial-expression and gaze-selective responses in the monkey amygdala. Curr. Biol 17, 766–772.

- Hsu M, Bhatt M, Adolphs R, Tranel D, Camerer CF, 2005. Neural systems responding to degrees of uncertainty in human decision-making. Science 310, 1680–1683.

- Im HY, Ward N, Cushing CA, Boshyan J, Adams RB Jr., Kveraga K, 2017. Observer’s anxiety facilitates magnocellular processing of clear facial threat cues, but impairs parvocellular processing of ambiguous facial threat cues. Sci. Reports 7, 15151. doi:10.1038/s41598-017-15495-2.

- Im HY, Adams RB Jr., Cushing C, Boshyan J, Ward N, Kveraga K, 2018. Sex-related differences in behavioral and amygdalar responses to compound facial threat cues. Hum. Brain Mapp 39, 2725–2741.

- Kahneman D, 2011. Thinking, Fast and Slow. Farrar, Straus and Giroux, New York.

- Kringelbach ML, Rolls ET, 2004. The functional neuroanatomy of the human orbitofrontal cortex: evidence from neuroimaging and neuropsychology. Prog. Neurobiol 72 (5), 341–372.

- Kveraga K, Boshyan J, Bar M, 2007. Magnocellular projections as the trigger of top-down facilitation in recognition. J. Neurosci. 27, 13232–13240.

- LeDoux JE, 1998. The Emotional Brain. Touchstone, New York.

- Le Gal PM, Bruce V, 2002. Evaluating the independence of sex and expression in judgments of faces. Percept. Psychophys 64 (2), 230–243.

- Lieberman MD, 2003. Reflective and reflexive judgment processes: a social cognitive neuroscience approach. In: Forgas JP, Williams KR, von Hippel W (Eds.), Social Judgments: Implicit and Explicit Processes. Cambridge University Press, New York, pp. 44–67.

- Mazaika PK, Hoeft F, Glover GH, Reiss AL, 2009. Methods and software for fMRI analysis of clinical subjects. Neuroimage 47, 77309. doi:10.1016/S1053-8119(09)70238-1.

- Méndez-Bértolo C, Moratti S, Toledano R, Lopez-Sosa F, Martínez-Alvarez R, Mah YH, et al., Strange BA, 2016. A fast pathway for fear in human amygdala. Nat. Neurosci 19, 1041–1049.

- N’Diaye K, Sander D, Vuilleumier P, 2009. Self-relevance processing in the human amygdala: gaze direction, facial expression, and emotion intensity. Emotion 9, 798–806.

- Palermo R, Rhodes G, 2007. Are you always on my mind? A review of how face perception and attention interact. Neuropsychologia 45, 75–92.

- Pelli DG, 1997. The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat. Vis 10, 437–442.

- Pessoa L, Adolphs R, 2010. Emotion processing and the amygdala: from a “low road” to “many roads” of evaluating biological significance. Nat. Rev. Neurosci 11, 773–783.

- Sander D, Grandjean D, Kaiser S, Wehrle T, Scherer KR, 2007. Interaction effects of perceived gaze direction and dynamic facial expression: evidence for appraisal theories of emotion. Eur. J. Cogn. Psychol 19, 470–480.

- Sato W, Yoshikawa S, Kochiyama T, Matsumura M, 2004. The amygdala processes the emotional significance of facial expressions: an fMRI investigation using the interaction between expression and face direction. Neuroimage 22, 1006–1013.

- Schechter I, Butler PD, Silipo G, Zemon V, Javitt DC, 2003. Magnocellular and parvocellular contributions to backward masking dysfunction in schizophrenia. Schizophr. Res 64, 91–101.

- Sherman JW, Gawronski B, Trope Y (Eds.), 2014. Dual-Process Theories of the Social Mind. The Guilford Press, New York.

- Snellen H, 1862. Probebuchstaben zur Bestimmung der Sehschärfe. Utrecht.

- Steinman BA, Steinman SB, Lehmkuhle S, 1997. Research note transient visual attention is dominated by the magnocellular stream. Vision Res. 37, 17–23.

- Straube T, Langohr B, Schmidt S, Mentzel H, Miltner WHR, 2009. Increased amygdala activation to averted versus direct gaze in humans is independent of valence of facial expression. Neuroimage 49, 2680–2686.

- Thomas C, Kveraga K, Huberle E, Karnath H-O, Bar M, 2012. Enabling global processing in simultanagnosia by psychophysical biasing of visual pathways. Brain J. Neurol 135 (5), 1578–1585.

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, et al., 2009. The NimStim set of facial expressions: judgments from untrained research participants. Psychiatry Res. 168, 242–249.

- van der Zwaag W, Da Costa S, Adams RB Jr., Hadjikhani N, 2012. Effect of gaze and stimulus duration on amygdala activation to fearful faces—a 7T study. Brain Topogr. 25, 125–128.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ, 2003. Distinct spatial frequency sensitivities for processing faces and emotional expressions. Nat. Neurosci. 6, 624–631.

- Wall MB, Walker R, Smith AT, 2009. Functional imaging of the human superior colliculus: an optimised approach. Neuroimage 47, 1620–1627.

- Whalen PJ, 1998. Fear, vigilance and ambiguity: initial neuroimaging studies of the human amygdala. Curr. Dir. Psychol. Sci 7, 177–188.

- Whalen PJ, Shin LM, McInerney SC, Fischer H, Wright CI, Rauch SL, 2001. A functional MRI study of human amygdala responses to facial expressions of fear versus anger. Emotion 1, 70–83.

- Ziaei M, Ebner NC, Burianová H, 2016. Functional brain networks involved in gaze and emotional processing. Eur. J. Neurosci 45, 312–320.